Abstract

We present the first IceCube search for a signal of dark matter annihilations in the Milky Way using all-flavour neutrino-induced particle cascades. The analysis focuses on the DeepCore sub-detector of IceCube, and uses the surrounding IceCube strings as a veto region in order to select starting events in the DeepCore volume. We use 329 live-days of data from IceCube operating in its 86-string configuration during 2011–2012. No neutrino excess is found, the final result being compatible with the background-only hypothesis. From this null result, we derive upper limits on the velocity-averaged self-annihilation cross-section, \(\langle \sigma _A \mathrm{v} \rangle \), for dark matter candidate masses ranging from 30 GeV up to 10 TeV, assuming both a cuspy and a flat-cored dark matter halo profile. For dark matter masses between 200 GeV and 10 TeV, the results improve on all previous IceCube results on \(\langle \sigma _A \mathrm{v} \rangle \), reaching a level of 10\(^{-23}\) cm\(^3\) s\(^{-1}\), depending on the annihilation channel assumed, for a cusped NFW profile. The analysis demonstrates that all-flavour searches are competitive with muon channel searches despite the intrinsically worse angular resolution of cascades compared to muon tracks in IceCube.


1 Introduction

There is strong evidence for extended halos of dark matter surrounding the visible component of galaxies. Independent indications of the existence of dark matter arise from gravitational effects at both galactic and galaxy-cluster scales, as well as from the growth of primordial density fluctuations which have left their imprint on the cosmic microwave background [1]. The nature of the dark matter is, however, still unknown. The most common assumption is that dark matter is composed of stable relic particles, whose present-day density is determined by freeze-out from thermal equilibrium as the universe expands and cools [2–4]. We focus here on a frequently considered candidate – a cosmologically stable massive particle having only weak interactions with baryonic matter, namely a Weakly Interacting Massive Particle (WIMP).

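Within this particle dark matter paradigm, the Milky Way is expected to be embedded in a halo of WIMPs, which can annihilate and produce a flux of neutrinos detectable at Earth. The differential flux depends on the annihilation cross section of the WIMPs as

\[\frac{\mathrm{d}\phi _\nu }{\mathrm{d}E} = \frac{\langle \sigma _\mathrm{A} \mathrm{v} \rangle }{2}\, \frac{J_\mathrm{a}(\psi )}{4\pi \, m_\chi ^2}\, \frac{\mathrm{d}N_\nu }{\mathrm{d}E}, \qquad (1)\]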

where \(\langle \sigma _\mathrm{A} \mathrm{v} \rangle \) is the product of the self-annihilation cross section, \(\sigma _\mathrm{A}\), and the WIMP velocity, \(\mathrm{v}\), averaged over the velocity distribution of WIMPs in the halo, which we assume to be spherical, \(m_\chi \) is the WIMP mass, \(\mathrm{d}N_\nu /\mathrm{d}E\) is the neutrino energy spectrum per annihilation and \(J_\mathrm{a}(\psi )\) is the integral of the square of the dark matter density along the line of sight. Therefore, searches for the dark matter annihilation signal in the Galactic halo can probe the WIMP self-annihilation cross-section, given the spatial distribution of the WIMPs. The expected signal is particularly sensitive to the adopted density profile of the dark matter halo, which determines the term \(J_\mathrm{a}(\psi )\) in Eq. (1), \(\psi \) being the angle between the direction to the Galactic Centre and the direction of observation [5, 6]. The density profile of dark matter halos determined by numerical simulations of structure formation is still under debate [7–12]. To explicitly quantify the effect of the choice of the halo profile on the results of our analysis, we adopt two commonly used models: the Navarro–Frenk–White (NFW) cusped profile [9], and the Burkert cored profile [8, 13]. We use the values for the parameters that characterize each profile from the Milky Way model presented in [14]. The difference between the two profiles is relevant only within the Solar circle, i.e., at radii less than 10 kpc.
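To illustrate how the halo profile enters through \(J_\mathrm{a}(\psi )\), the following is a minimal numerical sketch of the line-of-sight integral of the squared density for an NFW-like and a Burkert-like profile. The functional forms are the standard ones; the scale radii, the unit normalisation, the Sun to Galactic Centre distance and the integration range are illustrative placeholders, not the parameter values of [14] used in the analysis, and the function names are hypothetical.

```python
import numpy as np

R_SUN_KPC = 8.5  # assumed Sun to Galactic Centre distance (illustrative)


def rho_nfw(r_kpc, rho_s=1.0, r_s=20.0):
    """Cusped NFW form rho_s / [x (1 + x)^2], x = r / r_s (placeholder parameters)."""
    x = np.asarray(r_kpc, dtype=float) / r_s
    return rho_s / (x * (1.0 + x) ** 2)


def rho_burkert(r_kpc, rho_s=1.0, r_s=9.0):
    """Cored Burkert form rho_s / [(1 + x)(1 + x^2)], x = r / r_s (placeholder parameters)."""
    x = np.asarray(r_kpc, dtype=float) / r_s
    return rho_s / ((1.0 + x) * (1.0 + x ** 2))


def los_integral(psi_deg, rho, l_max_kpc=100.0, n_steps=20001):
    """Trapezoidal integral of rho^2 along the line of sight at angle psi."""
    psi = np.radians(psi_deg)
    l = np.linspace(0.0, l_max_kpc, n_steps)
    # Galactocentric radius of a point at distance l along the line of sight
    r = np.sqrt(R_SUN_KPC ** 2 + l ** 2 - 2.0 * R_SUN_KPC * l * np.cos(psi))
    r = np.maximum(r, 1e-3)  # guard against r = 0 when psi = 0
    y = rho(r) ** 2
    return float(np.sum(0.5 * (y[1:] + y[:-1]) * np.diff(l)))


def contrast(rho, psi_in=10.0, psi_out=90.0):
    """Ratio of the integral near the Galactic Centre to that at 90 degrees."""
    return los_integral(psi_in, rho) / los_integral(psi_out, rho)


if __name__ == "__main__":
    print("NFW contrast    :", contrast(rho_nfw))
    print("Burkert contrast:", contrast(rho_burkert))
```

The ratio is independent of the density normalisation, so it isolates the shape difference: the cusped profile concentrates the expected signal towards small \(\psi \) more strongly than the cored one.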

In this paper we use data from the IceCube neutrino telescope to search for high energy neutrinos from the Galactic Centre and halo that may originate from dark matter annihilations. There have been several studies triggered by the observation of an electron and positron excess in the cosmic ray spectrum [15–17] which favour models in which WIMPs annihilate preferentially to leptons [18–24]. We nevertheless keep the analysis agnostic with respect to the specific underlying particle physics model that could give rise to WIMP dark matter. In this sense it is a generic approach, and our results can be interpreted within any model that predicts a WIMP.

We use data collected in 329.1 live-days of detector operation between May 2011 and March 2012. The analysis focuses on identifying particle cascades produced by neutral or charged current neutrino interactions occurring inside the DeepCore sub-array of IceCube, being thus sensitive to all flavours. The analysis does not explicitly try to remove muon tracks from charged current \(\nu _{\mu }\) interactions, but the event selection has been optimized to identify and select the more spherical light pattern produced in the detector by particle showers.

2 The IceCube neutrino observatory

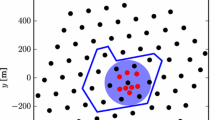

The IceCube Neutrino Observatory [25] is a neutrino telescope located about one kilometer from the geographical South Pole and consisting of an in-ice array and a surface air shower array, IceTop [26]. The in-ice array utilizes one cubic kilometer of deep ultra-clear glacial ice as its detector medium. This volume is instrumented with 5160 Digital Optical Modules (DOMs) that register the Cherenkov photons emitted by the particles produced in neutrino interactions in the ice. The DOMs are distributed on 86 strings and are deployed between 1.5 km and 2.5 km below the surface. Out of the 86 strings, 78 are placed in a triangular grid of 125 m side, evenly spaced over the volume, and are referred to as IceCube strings. The remaining 8 strings are referred to as DeepCore strings. They are placed in between the central IceCube strings with a typical inter-string separation of 55 m. They have a denser DOM spacing and photomultiplier tubes with higher quantum efficiency. These strings, along with some of the surrounding IceCube strings, form the DeepCore low-energy sub-array [27]. In the analysis described below, an extended definition of DeepCore was used, which includes one more layer of the surrounding IceCube strings, leaving a 3-string wide veto volume surrounding the fiducial volume used, see Fig. 1. While the original IceCube array has a neutrino energy threshold of about 100 GeV, the addition of the denser infill lowers the energy threshold to about 10 GeV.

The analysis presented in this paper uses a specific DeepCore trigger, which requires that at least three hits are registered within 2.5 \(\upmu \)s of each other in the nearest or next-to-nearest neighboring DOMs in the DeepCore sub-array. When this condition is fulfilled, the trigger opens a ±6 \(\upmu \)s readout window centered around the trigger time, where the full in-ice detector is read out. The average rate of this trigger is about 260 s\(^{-1}\).
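The trigger condition can be sketched as a sliding-window multiplicity test. This is a simplified illustration that considers hit times only: the nearest or next-to-nearest neighbour requirement on the DOMs is not modelled, and taking the first hit of the satisfying window as the trigger time is an assumption. The function names are hypothetical.

```python
def find_trigger_time(hit_times_us, multiplicity=3, window_us=2.5):
    """Return the time of the first hit that starts a group of at least
    `multiplicity` hits within `window_us`, or None if no group exists.
    Spatial neighbour conditions are deliberately ignored in this sketch."""
    times = sorted(hit_times_us)
    for i in range(len(times) - multiplicity + 1):
        if times[i + multiplicity - 1] - times[i] <= window_us:
            return times[i]
    return None


def readout_window(trigger_time_us, half_width_us=6.0):
    """Readout interval of +/- half_width_us around the trigger time."""
    return (trigger_time_us - half_width_us, trigger_time_us + half_width_us)


if __name__ == "__main__":
    t = find_trigger_time([0.0, 5.0, 5.5, 6.9, 20.0])
    if t is not None:
        print("trigger at", t, "us, readout", readout_window(t))
```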

Fig. 1 Schematic overview of the IceCube string layout seen from above. Gray-filled markers indicate IceCube strings and black markers indicate the DeepCore strings with denser DOM spacing. All IceCube strings marked with a black border are included in the definition of the extended DeepCore volume used in the analysis

3 Signal and background simulations

In order to keep the analysis general we will assume that WIMPs annihilate with 100 % branching ratio into a few benchmark channels (\(b\bar{b}\), \(W^+W^-\), \(\nu \bar{\nu }\), \(\mu ^+\mu ^-\) and \(\tau ^+\tau ^-\)) and present results for these cases. Those channels effectively bracket the final particle spectra of realistic models with several final states. The neutrino spectra were calculated using PYTHIA [28] by producing a resonance at twice the mass under consideration and forcing it to decay to the desired channel. The program then takes care of the further hadronization and/or decays in the standard way. We ignore the possible WIMP spin in this approach, which can affect the final neutrino spectrum, mainly when considering annihilations through the \(W^+W^-\) channel [29]. We assume that the detected neutrinos have undergone full flavour mixing given the very long oscillation baseline from the source, so there are equal numbers of the three flavours. The expected angular distribution of signal events in the sky is obtained by reweighting the originally simulated isotropic distribution by \(J_\mathrm{a}(\psi )\).

There are two backgrounds to any search for neutrinos from the Galaxy: atmospheric neutrinos and atmospheric muons, both produced in cosmic-ray interactions in the atmosphere. To estimate the effect of these backgrounds on the analysis, a sample of atmospheric muons was generated with the CORSIKA package [30] and a sample of atmospheric neutrinos was simulated with GENIE [31] between 10 GeV and 200 GeV, and with NUGEN [32] from 200 GeV up to \(10^9\) GeV, adopting the spectrum in [33]. However, the analysis does not use background simulations to define the cuts, but instead relies on azimuth-scrambled data. This reduces the systematic uncertainties and automatically accounts for any unsimulated detector behavior. The background simulations were used to verify the overall validity of the analysis and the performance of the different cut levels. Since the majority of triggers in IceCube are due to atmospheric muons, the distributions of the variables used in the analysis must agree between data and the CORSIKA simulation at early selection levels, while at higher selection levels the data should show a significant fraction of atmospheric neutrinos. Atmospheric muons and particles resulting from neutrino interactions in or near the detector are propagated through the detector volume and their Cherenkov light emission simulated. Cherenkov photons are then propagated through the ice using the PPC package [34], and the response of the detector calculated. From this point onwards, simulations and data are treated identically through further filtering and data cleaning.
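As a rough illustration of what azimuth scrambling does, the sketch below keeps the zenith angle of each event and redraws its azimuth uniformly. This removes any clustering around a fixed direction on the sky while preserving the zenith distribution of the data. It is a generic sketch under these assumptions, not the collaboration's implementation, and the function name is hypothetical.

```python
import numpy as np


def scramble_azimuth(zenith_rad, azimuth_rad, rng):
    """Return (zenith, new_azimuth): zenith is copied unchanged, azimuth is
    redrawn uniformly in [0, 2*pi). `rng` is a numpy random Generator."""
    zenith = np.asarray(zenith_rad, dtype=float).copy()
    new_azimuth = rng.uniform(0.0, 2.0 * np.pi, size=len(azimuth_rad))
    return zenith, new_azimuth


if __name__ == "__main__":
    rng = np.random.default_rng(42)
    zen = np.array([0.3, 1.2, 2.5])
    az = np.array([1.0, 1.0, 1.0])  # artificially clustered in azimuth
    print(scramble_azimuth(zen, az, rng))
```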

Fig. 2 The score distribution for the BDT trained on the \(b\bar{b}\) 100 GeV signal channel (left) and for the BDT trained on the \(W^+W^-\) 300 GeV signal channel (right). The plot shows the passing event rate (in Hz) for simulated atmospheric muons (blue line) and atmospheric neutrinos (green lines), as well as for the sum of these two components (total MC, purple line), compared with the data passing rate. The passing rate for each signal channel the BDT was trained for is also shown (grey lines), normalized to the experimental data rate. The final cuts on the score are marked with vertical lines. Events were kept if any of the scores were above the cut values. The lower panel in each plot shows the ratio of the data passing rate to the total expected background

4 Data selection

The triggered data are first cleaned of potential noise hits that could affect the performance of the track and cascade reconstruction algorithms. Hits that lie outside a predetermined time window around the trigger time, or which do not have another causally connected hit within a predefined radius, are removed. The data are then filtered by a fast algorithm that selects events starting in the DeepCore fiducial volume, in order to remove events triggered by through-going atmospheric muons. The IceCube strings surrounding DeepCore are used as a veto for incoming tracks. The algorithm selects events with the “amplitude-weighted” centre of gravity of all hits inside the DeepCore volume (see footnote 1 in the Notes) and no more than one hit in the surrounding IceCube strings causally connected with that point. This filter reduces the passing event rate by nearly a factor of 10.

The event sample is further reduced by requiring a minimum number of eight hits in the event distributed in at least four strings. This ensures that the remaining events can be well reconstructed. The events are then processed through a series of reconstructions aimed at determining their type (cascade or track), arrival direction and energy. In a first stage, two first-guess reconstructions are applied: fits for a track hypothesis and for a cascade hypothesis are performed in order to obtain a quick characterization of the events and perform a first event selection. These fits are based on the position and time of the hits in the detector, but do not include information about the optical properties of the ice, in order to speed up the computation. The track hypothesis performs a \(\chi ^2\) fit of a straight line to the hit pattern of the event, returning a vertex and a velocity [35]. The cascade hypothesis is based on determining the amplitude-weighted centre of gravity of the hits in the event and its associated time. The algorithm calculates the three principal axes of the ellipsoid spanned by the spatial distribution of hits, and the longest principal axis is selected to determine the generic direction of the event. Since the specific incoming direction along the selected axis is ambiguous, the hit times are projected onto this axis, from latest to earliest, to characterize the time-development of the track so that it points towards where the incident particle originated. The tensor of inertia reconstruction is generally only suitable as a first guess of the direction for track-like events, since for cascade-like events the three principal axes of the ellipsoid will be close to equal in size. This property, however, can be used to discriminate between tracks and cascades. Additionally, a series of cuts based on variables derived from the geometrical distribution of hits, as well as from information from the first guess reconstructions, are applied. These cuts bring the experimental data rate down by a factor of about 3000 with respect to trigger level, while keeping about 50 % of the signal, depending on the WIMP mass and annihilation channel considered.
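The first-guess cascade quantities described above can be sketched in a few lines. The centre of gravity follows the definition \(\varvec{r}_{\texttt {COG}}=\sum a_i \varvec{r}_i / \sum a_i\) given in the Notes. For the principal axes, this sketch diagonalises the amplitude-weighted second-moment tensor of the hits about the centre of gravity, which has the same eigenvectors as the tensor of inertia; the `elongation` measure and all function names are illustrative assumptions rather than the variables actually used in the selection.

```python
import numpy as np


def centre_of_gravity(positions, amplitudes):
    """Amplitude-weighted centre of gravity: sum(a_i r_i) / sum(a_i)."""
    r = np.asarray(positions, dtype=float)
    a = np.asarray(amplitudes, dtype=float)
    return (a[:, None] * r).sum(axis=0) / a.sum()


def principal_axes(positions, amplitudes):
    """Eigenvalues (ascending) and eigenvectors (columns) of the
    amplitude-weighted second-moment tensor about the centre of gravity."""
    r = np.asarray(positions, dtype=float)
    a = np.asarray(amplitudes, dtype=float)
    d = r - centre_of_gravity(r, a)
    moment = (a[:, None, None] * d[:, :, None] * d[:, None, :]).sum(axis=0) / a.sum()
    return np.linalg.eigh(moment)


def elongation(positions, amplitudes):
    """Fraction of the total spread carried by the longest axis:
    close to 1/3 for a spherical hit pattern, close to 1 for a line."""
    evals, _ = principal_axes(positions, amplitudes)
    return float(evals[-1] / evals.sum())


if __name__ == "__main__":
    track = [[0.0, 0.0, z] for z in range(6)]
    blob = [[1, 0, 0], [-1, 0, 0], [0, 1, 0], [0, -1, 0], [0, 0, 1], [0, 0, -1]]
    print("track-like:", elongation(track, np.ones(6)))
    print("cascade-like:", elongation(blob, np.ones(6)))
```

A cut on such a shape variable separates elongated, track-like hit patterns from the more spherical patterns left by cascades.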

Fig. 3 Left: all-flavour neutrino effective area as a function of energy for the two event selections of the analysis, the low-energy (LE) and high-energy (HE) selections. Right: cumulative angular resolution (based on the space angle between the reconstructed and true direction of incoming neutrinos) at final analysis level

Fig. 4 Example of the space angle PDF for one of the signal channels considered (\(\chi \chi \rightarrow b\bar{b}\)) and two halo profiles, the Burkert profile (left) and the NFW profile (right). In each plot the signal PDF, \(f_\mathrm{S}(\psi )\), is shown as a thick black line, and the two components of the background PDF, the scrambled data, \(f_\mathrm{sd}(\psi )\), and the scrambled signal, \(f_\mathrm{ss}(\psi )\), are shown as the gray shaded area and the thin black line, respectively. The angle \(\psi \) represents the angular distance between the direction of reconstructed tracks and the location of the Galactic Center

At this point three sophisticated likelihood-based reconstructions are applied to all the remaining events. The likelihood reconstructions aim at determining a set of parameters \(\mathbf{a}=(\mathbf{x}_0, t_0, \boldsymbol{\xi }, E_0)\) given a set of measured data points \(\{d_i\}\) (e.g. time and spatial coordinates of every hit in an event). Here \(\mathbf{x}_0\) is an arbitrary point along the track, \(t_0\) is the event time at position \(\mathbf{x}_0\), \(\boldsymbol{\xi }\) is the direction of the incoming particle and \(E_0\) is the deposited energy of the event. The reconstructions attempt to find the value of \(\mathbf{a}\) that maximizes the likelihood function, which is based on the Probability Density Function (PDF) of measuring the data point \(d_i\) given the set of parameters \(\mathbf{a}\). For a cascade reconstruction there are seven degrees of freedom, while an infinite track reconstruction has only six since the point \(\mathbf{x}_0\) can be chosen arbitrarily along the track. The first reconstruction is based on an infinite track hypothesis, fitting only direction, not energy. The second reconstruction uses a cascade hypothesis, and it fits for the vertex position, direction and energy of the cascade. These two reconstructions use an analytic approximation for the expected hit times in the DOMs given a track or cascade hypothesis [36], rather than a full description of the optical properties of the ice. Since the focus of this analysis is to identify cascades, an additional, more advanced, cascade reconstruction is also performed, using the previous one as a seed. This second cascade reconstruction uses the full description of the optical properties of the Antarctic ice, as well as information on the position of non-hit DOMs through a term added to the energy likelihood. The three likelihood reconstructions return the best fit values of the variables of the vector \(\mathbf{a}\) they fit for, as well as a likelihood value of their respective hypothesis, which is used in a further selection of events using linear cuts.

The final selection of events uses Boosted Decision Trees (BDT) [37] to classify events as signal-like or background-like. Two BDTs were trained using data as background and a different benchmark reference signal each. One of the BDTs (BDT\(_\text {LE}\)) was trained using the neutrino spectrum from a 100 GeV WIMP annihilating into \(b\bar{b}\), while the other, BDT\(_\text {HE}\), was trained on the neutrino spectrum of a 300 GeV WIMP annihilating into \(W^+W^-\). These two spectra were chosen to represent a soft and hard neutrino spectrum respectively, so the sensitivity of the analysis to other WIMP masses and/or annihilation channels with similar spectra can be evaluated with the same cuts on the BDT output scores. This removes the need to train a different BDT specifically for each mass and annihilation channel. Since no variables depending on the arrival direction of the events are used in the BDT training, the event sample is kept blind with respect to the position of the Galactic Centre in the sky.

Seven variables that showed a good separation power between signal and background, selected among an initial larger set of variables that were tried, were used to train the BDTs. The variables are based on the different geometrical patterns that tracks and cascades leave in the detector, as well as on their different time development. The whole data set was classified by the two BDTs so each event was assigned two BDT scores. In order to decide on the best cut value on each BDT output, the range of BDT score values was scanned and the sensitivity of the analysis was calculated for each of them. The scores producing the best sensitivity for each of the two signal channels for which the BDTs were trained were selected. Events with a BDT\(_\text {LE}\) score above the optimal value are referred to as the “low-energy” (LE) sample, and events with a BDT\(_\text {HE}\) score above the corresponding cut value are referred to as the “high-energy” (HE) sample. The remaining number of events in each sample is 5892 events in the LE sample and 2178 events in the HE sample. The overlap between the two samples (events which have both BDT scores above the respective cut values) is 664 events. The final BDT score distributions for the 100 GeV \(b\bar{b}\) and the 300 GeV \(W^+W^-\) channels are presented in Fig. 2, with the vertical lines marking the optimal cut values used to select the final event sample.

Fig. 5 Distributions of the \(\psi \) angles of the final event samples. The bin contents are directly proportional to the number of observed events, to which we choose not to assign any statistical uncertainty. Left: the low-energy (LE) sample, which contains 5892 observed events. Right: the high-energy (HE) sample, which contains 2178 observed events

After the BDT classification, the data have been reduced by a factor of about \(1(3)\times 10^{6}\) for the LE (HE) sample, but still contain about 20 % atmospheric muon contamination. The remaining signal in the two benchmark scenarios considered amounts to about 6 % (8 %), respectively. A summary of the event selection rates, as well as signal efficiency, is given in Table 1. The effective area for the two event selections, a measure of how efficient the detector is for the present analysis, is shown in the left plot of Fig. 3. The right plot in the same figure shows the cumulative angular resolution (space angle between the reconstructed and true direction of the incoming neutrino) for the two benchmark channels used in training the BDTs.

5 Systematic uncertainties

In order to estimate the effect of experimental systematic uncertainties on the final sensitivity, Monte Carlo simulation studies were done, where the parameters defining a given input were varied within their estimated uncertainty. The main source of systematic uncertainties is the limited knowledge of the optical properties of the ice, both the bulk ice between 1450 m and 2500 m and the “hole ice”, i.e. the ice that forms as the water in the hole drilled for the string deployment refreezes. The scattering and absorption coefficients of the ice as a function of depth have been determined by in-situ flash measurements, and a standard “ice model” for IceCube has been derived [38]. The effect of the uncertainty on the estimated absorption and scattering lengths was investigated by varying the baseline settings by ±10 % individually. Their contribution to the uncertainty on the sensitivity lies in the range 8 %–12 %. Furthermore, there are indications that the hole ice contains residual air bubbles that result in a shorter scattering length in this ice compared to the ancient glacial bulk ice surrounding it. In the baseline simulation data sets the scattering length of the hole ice is set to 50 cm. Varying this parameter between 30 cm and 100 cm yields a 10 %–24 % change in the sensitivity. Recently, a more detailed modeling of the bulk ice has been developed [39]. It includes anisotropic scattering and accounts for the tilt of the different ice layers across the IceCube volume. Preliminary studies indicate that the effect of this model on the sensitivity is negligible for high-energy events, but it can be sizable for the lowest-energy events, reducing the sensitivity for low WIMP masses by up to 25 %. These effects have not been included in this analysis.

The overall efficiency of the process of converting the Cherenkov light into a detectable electrical signal by the DOM is another source of uncertainty. This effect was investigated by changing the DOM efficiency in the signal simulation by ±10 %, according to measurements of the performance of the DOMs in laboratory tests before deployment, as well as in in-situ calibration measurements after deployment. This uncertainty translates into an uncertainty on the final sensitivity of 10 %–35 %, depending on event selection. The effect is stronger for low-energy events that can fall under the detector threshold if less light is being captured. Additional, but minor, effects arise from the implementation of the photomultiplier dark noise in the simulation, the timing and geometry calibration of the detector and from the intrinsic randomness of several steps of the analysis, like time-scrambling of the data or the many pseudo-experiments performed to calculate the sensitivity.

All systematic uncertainties considered are summarized in Table 2 together with the total (quadratic sum) for the low and high-energy selections for both halo profiles. In order to be conservative, the limits presented in Sect. 7 for each WIMP mass and annihilation channel were rescaled by the corresponding total systematic uncertainty shown in Table 2.
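The quadratic sum mentioned above can be written out as a short sketch. The input fractions below are placeholders chosen for illustration, not the entries of Table 2, and the way the limit is rescaled (multiplying by one plus the total fractional uncertainty) is an assumption about what "rescaled" means here.

```python
import math


def total_systematic(fractional_uncertainties):
    """Quadratic sum of independent fractional uncertainties."""
    return math.sqrt(sum(f * f for f in fractional_uncertainties))


def rescale_limit(limit, total_fraction):
    """Assumed conservative rescaling: weaken the limit by (1 + total)."""
    return limit * (1.0 + total_fraction)


if __name__ == "__main__":
    placeholders = [0.10, 0.20, 0.15]  # hypothetical, not Table 2 values
    total = total_systematic(placeholders)
    print("total fractional uncertainty:", total)
    print("rescaled limit:", rescale_limit(1.0e-23, total))
```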

Fig. 6 Upper limits (90 % C.L., solid black line) on the velocity-averaged WIMP self-annihilation cross section, \(\langle \sigma _{\mathrm{A}} \mathrm{v}\rangle \), for the NFW halo model together with the corresponding sensitivities (dashed black line) and their 1\(\sigma \) (green) and 2\(\sigma \) (yellow) statistical uncertainties. The black dots represent the masses probed, while the black line in between is drawn to guide the eye. Each plot corresponds to a different annihilation channel as indicated in the legend. The local dark matter density used was \(\rho _{\mathrm{local}}\) \(=\) 0.47 GeV/cm\(^3\) [14]

Fig. 7 Upper limits (90 % C.L., solid black line) on the velocity-averaged WIMP self-annihilation cross section, \(\langle \sigma _{\mathrm{A}} \mathrm{v}\rangle \), for the Burkert halo model together with the corresponding sensitivities (dashed black line) and their 1\(\sigma \) (green) and 2\(\sigma \) (yellow) statistical uncertainties. The black dots represent the masses probed, while the black line in between is drawn to guide the eye. Each plot corresponds to a different annihilation channel as indicated in the legend. The local dark matter density used was \(\rho _{\mathrm{local}}\) \(=\) 0.49 GeV/cm\(^3\) [14]

6 Analysis method

We use the distribution of the space angle \(\psi \) between event directions and the Galactic Centre to construct a likelihood function and test the signal hypothesis (excess of events at small \(\psi \) values) against the background-only hypothesis (an event distribution isotropic in the sky). The signal and background hypotheses are represented by probability density functions of the \(\psi \) distributions,

\[f(\psi \mid \mu ) = \frac{\mu }{n_\mathrm{obs}}\, f_\mathrm{S}(\psi ) + \left( 1 - \frac{\mu }{n_\mathrm{obs}}\right) f_\mathrm{B}(\psi \mid \mu ), \qquad (2)\]

where the subscripts S and B denote signal and background respectively and \(\mu \) is the number of signal events present among the total number of observed events, \(n_\mathrm{obs}\). The angle \(\psi \) is allowed to be in the full range [0\(^\circ \), 180\(^\circ \)], therefore covering the full sky, as shown in Fig. 4. This allows the analysis to be sensitive to the whole halo instead of just to the Galactic Centre. However, if the signal is allowed to come from anywhere in the halo, the background distribution, taken from data, is necessarily contaminated by a potential signal: hence the dependence of \(f_\mathrm{B}(\psi \mid \mu )\) on \(\mu \) and not only on \(\psi \). In particular the background distribution is constructed as

\[f_\mathrm{B}(\psi \mid \mu ) = \frac{1}{1 - \frac{\mu }{n_\mathrm{obs}}} \left[ f_\mathrm{sd}(\psi ) - \frac{\mu }{n_\mathrm{obs}}\, f_\mathrm{ss}(\psi )\right] , \qquad (3)\]

where \(f_\mathrm{ss}\) and \(f_\mathrm{sd}\) are the PDFs of the scrambled arrival directions of signal simulation and data events, respectively.

The likelihood that the data sample contains \(\mu \) signal events is defined as

\[\mathcal {L}(\mu ) = \prod _{i=1}^{n_\mathrm{obs}} f(\psi _i \mid \mu ), \qquad (4)\]

where \(n_\mathrm{obs}\) is the number of observed events and \(f(\psi _i \mid \mu )\) is given in Eq. (2). We follow the method described in [40] to calculate a 90 % confidence level upper limit on \(\mu \), \(\mu _{\mathrm{90}}\), which gives an upper limit on the flux of neutrinos from the halo as defined in Eq. (1). This limit can, in turn, be translated into a limit on \(\langle \sigma _{\mathrm{A}} \mathrm{v} \rangle \) for any given WIMP mass, annihilation channel and halo profile. The final limits are shown in the next section, for the event selection that showed the best sensitivity in each case.
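A minimal binned sketch of this shape likelihood is given below. It assumes that the event PDF is the mixture \(f = (\mu /n_\mathrm{obs}) f_\mathrm{S} + (1-\mu /n_\mathrm{obs}) f_\mathrm{B}\), and that the scrambled data PDF is the same mixture of scrambled signal and true background, so that \(f_\mathrm{B} = [f_\mathrm{sd} - (\mu /n_\mathrm{obs}) f_\mathrm{ss}]/(1-\mu /n_\mathrm{obs})\). The PDFs are represented as per-bin probabilities in \(\psi \), the toy inputs are invented for illustration, and the function names are hypothetical. The confidence-interval construction of [40] is not implemented; only a grid scan for the best-fit \(\mu \) is shown.

```python
import numpy as np


def mixture_pdf(mu, n_obs, f_s, f_sd, f_ss):
    """Per-bin f(psi | mu) with a signal-subtracted background (needs mu < n_obs)."""
    w = mu / n_obs
    f_b = (f_sd - w * f_ss) / (1.0 - w)  # background corrected for signal contamination
    return w * f_s + (1.0 - w) * f_b


def log_likelihood(mu, event_bins, f_s, f_sd, f_ss):
    """Sum over events of log f(psi_i | mu); -inf if any probability is non-positive."""
    event_bins = np.asarray(event_bins, dtype=int)
    f = mixture_pdf(mu, len(event_bins), f_s, f_sd, f_ss)
    values = f[event_bins]
    if np.any(values <= 0.0):
        return -np.inf
    return float(np.sum(np.log(values)))


def best_fit_mu(event_bins, f_s, f_sd, f_ss, mu_grid):
    """Grid value of mu that maximises the log-likelihood."""
    scores = [log_likelihood(m, event_bins, f_s, f_sd, f_ss) for m in mu_grid]
    return mu_grid[int(np.argmax(scores))]


if __name__ == "__main__":
    # Toy three-bin PDFs (hypothetical): signal peaks in bin 0, scrambled PDFs are flat
    f_s = np.array([0.7, 0.2, 0.1])
    flat = np.full(3, 1.0 / 3.0)
    events = [0, 1, 2] * 10  # 30 events, uniformly spread over the bins
    print(best_fit_mu(events, f_s, flat, flat, np.arange(0, 30)))
```

Substituting the background expression into the mixture gives \(f = f_\mathrm{sd} + (\mu /n_\mathrm{obs})(f_\mathrm{S} - f_\mathrm{ss})\), which makes explicit that the fit is sensitive only to the difference in shape between the signal and its scrambled counterpart.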

7 Results and conclusion

At final selection level, a total of 5892 (2178) events were observed in the full sky for the low-energy (high-energy) samples respectively. Figure 5 shows the angular distribution of the two event samples at final cut level. The distributions are compatible with 0 signal events for all WIMP masses and annihilation channels tested. Tables 3, 4, 5 and 6 show the results for the best fit on the number of signal events, \(\hat{\mu }\), together with the 90 % upper limits on the number of signal events, \(\mu _{90}\), and the corresponding limit on the velocity-averaged WIMP annihilation cross section, \(\langle \sigma _{\mathrm{A}} \mathrm{v} \rangle _{90}\). Corresponding quantities with a tilde denote median upper limits (i.e., sensitivities). Each table corresponds to a given benchmark annihilation channel and it shows different WIMP masses for the two halo models considered. The available statistics at final level in the case of direct annihilation of 700 GeV WIMPs to neutrinos using the Burkert profile were not sufficient to define an angular distribution which was smooth enough to perform the shape analysis, so we choose not to quote results for this mass and channel in Table 6. Figures 6 and 7 show the results graphically for the NFW and Burkert dark matter profiles respectively. The plots show the 90 % C.L. upper limits (solid black line) on the velocity-averaged WIMP self-annihilation cross section, \(\langle \sigma _{\mathrm{A}} \mathrm{v}\rangle \), together with the corresponding sensitivities (dashed black line) and the 1\(\sigma \) (green) and 2\(\sigma \) (yellow) statistical uncertainties.

In order to put the results of this analysis in perspective, Fig. 8 shows a comparison with results from previous IceCube analyses and other experiments, for the \(\tau \tau \) annihilation channel and the NFW profile. Also shown is the allowed area in the \((\langle \sigma _{\mathrm{A}} \mathrm{v}\rangle \), \(m_{\chi })\) parameter space if the \(e^++e^-\) flux excess seen by Fermi-LAT and H.E.S.S. and the positron excess seen by PAMELA are interpreted as originating from dark matter annihilations [41]. There exist, however, conventional explanations based on local astrophysical sources [42, 43] that, along with current limits on \(\langle \sigma _{\mathrm{A}} \mathrm{v}\rangle \), disfavour such an explanation. The figure shows that the analysis presented in this paper improves on previous IceCube analyses [44–47] for WIMP masses above about 200 GeV, as well as on the ANTARES [48] result for WIMP masses below \(\sim \)1 TeV. This demonstrates that particle cascades can be reconstructed with a good enough angular resolution in IceCube to make this channel competitive in searches for dark matter signals with neutrinos from the Galactic Centre and halo. Even if Cherenkov telescopes and gamma-ray satellites can reach stricter bounds on \(\langle \sigma _\mathrm{A} \mathrm{v} \rangle \) due to their better angular resolution and, depending on the source under consideration, low background, there is a much-needed complementarity in the field of dark matter searches, where neutrino telescopes can play a valuable role.

Fig. 8 Comparison of upper limits on \(\langle \sigma _\mathrm{A} \mathrm{v} \rangle \) versus WIMP mass, for the annihilation channel \(\chi \chi \rightarrow \tau ^+\tau ^-\). This work (IC86 Halo Casc.) is compared to ANTARES [48] and previous IceCube searches with different detector configurations [44–47]. Also shown are the latest upper limits from gamma-ray searches obtained from the combination of Fermi-LAT and MAGIC results [49]. The three shaded areas indicate allowed regions if the \(e^++e^-\) flux excess seen by Fermi-LAT, H.E.S.S. and the positron excess seen by PAMELA (\(3\sigma \) in dark green, \(5\sigma \) in light green and gray area, respectively) would be interpreted as originating from dark-matter annihilations. The data for the shaded regions are taken from [41]. The natural scale denotes the required value of \(\langle \sigma _\mathrm{A} \mathrm{v} \rangle \) for a thermal relic to constitute the dark matter [50]

Notes

The amplitude-weighted centre of gravity of an event is defined as \(\varvec{r}_{\texttt {COG}}=\sum a_i \varvec{r}_i / \sum a_i\), where \(a_i\) and \(\varvec{r}_i\) are the amplitude and position of the ith hit. The sum runs over all the hits in the event (after hit cleaning).

References

V. Lukovic, P. Cabella, N. Vittorio, Int. J. Mod. Phys. A 29, 1443001 (2014)

G. Jungman, M. Kamionkowski, K. Griest, Phys. Rep. 267, 195 (1996)

J.L. Feng, Ann. Rev. Astron. Astrophys. 48, 495 (2010)

L. Bergström, Ann. Phys. 524, 479 (2012)

L. Bergström, P. Ullio, J.H. Buckley, Astropart. Phys. 9, 137 (1998)

H. Yuksel, S. Horiuchi, J.F. Beacom, S. Ando, Phys. Rev. D 76, 123506 (2007)

A.V. Kravtsov, A.A. Klypin, J.S. Bullock, J.R. Primack, Astrophys. J. 502, 48 (1998)

A. Burkert, Astrophys. J. 171, 175 (1996)

J.F. Navarro, C.S. Frenk, S.D. White, Astrophys. J. 462, 563 (1996)

J. Diemand, B. Moore, Adv. Sci. Lett. 4, 297 (2011)

R. Ruffini, C.R. Argüelles, J.A. Rueda, Mon. Not. R. Astron. Soc. 451(1), 622 (2015)

W.J.G. de Blok, Adv. Astron. 2010, 789293 (2010)

P. Salucci, A. Burkert, Astrophys. J. 537, L9 (2000)

F. Nesti, P. Salucci, JCAP 1307, 016 (2013)

O. Adriani et al., Nature 458, 607 (2009)

A.A. Abdo et al., Phys. Rev. Lett. 102, 181101 (2009)

J. Chang et al., Nature 456, 362 (2008)

M. Cirelli, M. Kadastik, M. Raidal, A. Strumia, Nucl. Phys. B 813, 1 (2009)

M. Cirelli, M. Kadastik, M. Raidal, A. Strumia, Addendum. Nucl. Phys. B 873, 530 (2013)

F. Donato, D. Maurin, P. Brun, T. Delahaye, P. Salati, Phys. Rev. Lett. 102, 071301 (2009)

V. Barger, W.Y. Keung, D. Marfatia, G. Shaughnessy, Phys. Lett. B 672, 141 (2009)

L. Bergström, J. Edsjö, G. Zaharijas, Phys. Rev. Lett. 103, 031103 (2009)

I. Cholis, L. Goodenough, D. Hooper, M. Simet, N. Weiner, Phys. Rev. D 80, 123511 (2009)

S.K. Mandal, M.R. Buckley, K. Freese, D. Spolyar, H. Murayama, Phys. Rev. D 81, 043508 (2010)

F. Halzen, S.R. Klein, Rev. Sci. Instrum. 81, 081101 (2010)

R. Abbasi et al., Nucl. Instrum. Methods A700, 188 (2013)

R. Abbasi et al., Astropart. Phys. 35, 615 (2012)

T. Sjöstrand, S. Mrenna, P.Z. Skands, Comput. Phys. Commun. 178, 852 (2008)

V. Barger, W.-Y. Keung, G. Shaughnessy, A. Tregre, Phys. Rev. D 76, 095008 (2007)

D. Heck, G. Schatz, T. Thouw, J. Knapp, J.N. Capdevielle, FZKA Technical Report (1998)

C. Andreopoulos et al., Nucl. Instrum. Methods A614, 87 (2010)

A. Gazizov, M.P. Kowalski, Comput. Phys. Commun. 172, 203 (2005)

M. Honda, T. Kajita, K. Kasahara, S. Midorikawa, T. Sanuki, Phys. Rev. D 75, 043006 (2007)

D. Chirkin, Nucl. Instrum. Methods A725, 141 (2013)

M.G. Aartsen et al., Nucl. Instrum. Methods A736, 143 (2014)

J. Ahrens et al., Nucl. Instrum. Methods A524, 169 (2004)

A. Höcker et al., PoS ACAT, 040 (2007)

M.G. Aartsen et al., Nucl. Instrum. Methods A711, 73 (2013)

M.G. Aartsen et al., in Proceedings of the 33rd Cosmic Ray Conference, ICRC2013, Rio de Janeiro, p. 0580 (2013)

G.J. Feldman, R.D. Cousins, Phys. Rev. D 57, 3873 (1998)

P. Meade, M. Papucci, A. Strumia, T. Volansky, Nucl. Phys. B 831, 178 (2010)

P. Blasi, Phys. Rev. Lett. 103, 051104 (2009)

D. Hooper, P. Blasi, P.D. Serpico, JCAP 0901, 025 (2009)

R. Abbasi et al., Phys. Rev. D 84, 022004 (2011)

M.G. Aartsen et al., Eur. Phys. J. C 75(1), 20 (2015)

M.G. Aartsen et al., Phys. Rev. D 88, 122001 (2013)

M.G. Aartsen et al., Eur. Phys. J. C 75(10), 492 (2015)

S. Adrian-Martinez et al., JCAP 1510(10), 068 (2015)

M.L. Ahnen et al., JCAP 1602(02), 039 (2016)

G. Steigman, B. Dasgupta, J.F. Beacom, Phys. Rev. D 86, 023506 (2012)

Acknowledgments

We acknowledge the support of the following institutions: U.S. National Science Foundation-Office of Polar Programs, U.S. National Science Foundation-Physics Division, University of Wisconsin Alumni Research Foundation, the Grid Laboratory Of Wisconsin (GLOW) grid infrastructure at the University of Wisconsin – Madison, the Open Science Grid (OSG) grid infrastructure; U.S. Department of Energy, and National Energy Research Scientific Computing Centre, the Louisiana Optical Network Initiative (LONI) grid computing resources; Natural Sciences and Engineering Research Council of Canada, WestGrid and Compute/Calcul Canada; Swedish Research Council, Swedish Polar Research Secretariat, Swedish National Infrastructure for Computing (SNIC), and Knut and Alice Wallenberg Foundation, Sweden; German Ministry for Education and Research (BMBF), Deutsche Forschungsgemeinschaft (DFG), Helmholtz Alliance for Astroparticle Physics (HAP), Research Department of Plasmas with Complex Interactions (Bochum), Germany; Fund for Scientific Research (FNRS-FWO), FWO Odysseus programme, Flanders Institute to encourage scientific and technological research in industry (IWT), Belgian Federal Science Policy Office (Belspo); University of Oxford, United Kingdom; Marsden Fund, New Zealand; Australian Research Council; Japan Society for Promotion of Science (JSPS); the Swiss National Science Foundation (SNSF), Switzerland; National Research Foundation of Korea (NRF); Villum Fonden, Danish National Research Foundation (DNRF), Denmark. H.T. acknowledges support from the K. G. och Elisabeth Lennanders Stiftelse.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

Funded by SCOAP3

About this article

Cite this article

Aartsen, M.G., Abraham, K., Ackermann, M. et al. All-flavour search for neutrinos from dark matter annihilations in the Milky Way with IceCube/DeepCore. Eur. Phys. J. C 76, 531 (2016). https://doi.org/10.1140/epjc/s10052-016-4375-3

DOI: https://doi.org/10.1140/epjc/s10052-016-4375-3