Abstract

Brain-inspired computing is a growing and interdisciplinary area of research that investigates how the computational principles of the biological brain can be translated into hardware design to achieve improved energy efficiency. Brain-inspired computing encompasses various subfields, including neuromorphic and in-memory computing, that have been shown to outperform traditional digital hardware in executing specific tasks. With the rising demand for more powerful yet energy-efficient hardware for large-scale artificial neural networks, brain-inspired computing is emerging as a promising solution for enabling energy-efficient computing and expanding AI to the edge. However, the vast scope of the field has made it challenging to compare and assess the effectiveness of the solutions compared to state-of-the-art digital counterparts. This systematic literature review provides a comprehensive overview of the latest advances in brain-inspired computing hardware. To ensure accessibility for researchers from diverse backgrounds, we begin by introducing key concepts and pointing out respective in-depth topical reviews. We continue with categorizing the dominant hardware platforms. We highlight various studies and potential applications that could greatly benefit from brain-inspired computing systems and compare their reported computational accuracy. Finally, to have a fair comparison of the performance of different approaches, we employ a standardized normalization approach for energy efficiency reports in the literature.

Graphical abstract

Unconventional computing, including its four major, partly overlapping, brain-inspired computating frameworks: In-memory, neuromorphic, reservoir, and hyperdimensional computing

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The miniaturization of electronics has enabled local sensing, processing, and actuation systems for edge computing [1, 2]. Local data processing provides shorter response times [3] and better privacy protection owing to reduced network dependence [4]. However, enabling edge computing has various challenges, given the power and energy budget limitations [5], which stem from the immense amount of data needed to be processed and the inherent complexity of the computational models. Therefore, low-power edge computing requires a collaborative effort to jointly co-innovate computational models and hardware [6].

When looking at the computational models for data analysis, deep neural networks (DNNs) are currently the most widely used and successful approach [7]. DNNs, however, are computationally expensive models, i.e., demanding numerous calculations to be computed in a reasonably short amount of time to meet the response time requirements [8]. In terms of computational model development, various approaches, such as efficient deep learning [9], neural architecture search [10], and benefiting from sparsity in matrix multiplications [11], have been studied. Central to these techniques, nonetheless, is to understand how hardware accomplishes these operations.

Currently, let us focus on the fact that the majority of operations performed in DNNs involve matrix–vector multiplications (MVM), which are further broken down into multiply-accumulate (MAC) operations [12]. Knowing the structure of a DNN, one can determine the memory access pattern for these MAC operations. Therefore, by exploiting parallel processing it is relatively straightforward to gain massive execution speedup using, e.g., spatial and temporal dataflow hardware architectures [13]. The success of DNNs can be attributed in large part to the breakthroughs in parallel processing achieved by graphics processing units (GPUs) and, more recently by specialized hardware accelerators, such as wafer-scale chips [14], application-specific integrated circuit (ASIC) hardware accelerators [12, 13], and tensor processing units [15,16,17].

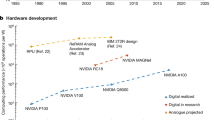

Presently, most DNN workloads rely on modern metal–oxide–semiconductor field-effect transistor (MOSFET)-based digital processors. Leveraging the scalability of complementary metal–oxide–semiconductor (CMOS) technology, each new processor generation comes with additional smaller transistors, resulting in higher performance per joule or equivalently better energy efficiency. Transistors in arithmetic logic units and memory circuits have both gone through this miniaturization, yet at different rates [18] (purple vs. blue curve in Fig. 1). Although this trend has remained valid since the 1960s, known as Moore’s law, its continuation is a heavily debated subject [19, 20]. Breakdowns have happened to other semiconductor industry laws that once were harmonious with Moore’s law, e.g., Dennard’s scaling law of power density [21] breaking down around 2006 [22]. Since then, however, innovations, such as multicore-processor design, evolved processors to become more powerful, although their speed (clock frequency) has experienced a negligible increase (depicted by the red curve in Fig. 1) [23].

DNN parameter size growth as an indicator of computational requirement (grey line) compared with hardware performance indicators (left axis) and fabrication costs. As a notable trend mismatch, the computational requirements of DNN models are growing exceedingly faster than the hardware specifications. Furthermore, the cost of hardware per generation is growing exponentially, which limits the use of high-end processors for commercial edge devices, thus, restricting the DNN development at the edge. Data from Refs. [23, 27, 28]

Regardless of the discussion on whether physical limitations in sub-nanometer scaling can be coped with or not, what is alarming is a trend mismatch, as illustrated in Fig. 1. Specifically, DNN model sizes, which are proportional to their computational demands (grey curve in Fig. 1), have increased much faster than the corresponding improvements in modern computer hardware [24]. Furthermore, the price per transistor in the CMOS technology has historically declined, largely due to Moore’s law [25]. However, the unsustainable increase in the costs of fabricating smaller transistors, i.e., higher-performance chips (yellow curve Fig. 1) has slowed down this trend. Overall, these observations suggest that following traditional hardware development practices alone will not suffice to meet the demands for efficient computing power in artificial intelligence (AI) systems [26], whether they are deployed in data centers or at the edge.

Therefore, there is a rising interest in energy-efficient hardware primarily optimized for artificial intelligence [29]. Given that the human brain can solve certain tasks almost instantaneously and with minimal effort, it is logical to draw inspiration from its functionality and incorporate those insights into hardware design [30, 31] or into the design of intelligent materials. Brain-inspired computing can be considered a subgroup of the unconventional computing (UC) paradigm (Fig. 2) [32]. The common feature of UC is that it often involves directly operating on physical systems to perform computational tasks rather than manipulating symbols, such as binary digits (bits) in digital computing [33]. Next to brain-inspired computing, it involves a wide range of approaches rather than the CMOS-based digital computing framework, such as molecular computing [34], quantum computing [35], optical computing [36], and thermodynamic computing [37].

Unconventional computing, including four major, partly overlapping, brain-inspired computational frameworks. Neuromorphic computing systems are designed to employ temporal spikes, as in spiking neural networks (SNNs), which are models of biological neurons that communicate with each other through time-encoded electrical impulses or spikes. Hyperdimensional computing is a technique that uses high-dimensional vectors to represent information, similar to how the brain represents information in distributed patterns across many neurons. Reservoir computing is a computational framework derived from recurrent neural networks designed to process temporal data, such as speech or video, using a fixed, randomly connected network of neurons called a “reservoir.” In-memory computing is a growing paradigm that uses memory devices to store and perform computations, which is inspired by the brain’s ability to perform computation and storage in the same physical structure, such as in the synapses between neurons

The goal of brain-inspired computing is to create computing systems that are more efficient, adaptable, robust, and capable of complex cognitive tasks compared to the existing computing systems by drawing inspiration from the structure and function of the brain [38]. Neuromorphic computing, hyperdimensional computing, reservoir computing, and in-memory computing are all computing paradigms that can be considered subfields of brain-inspired computing, and in some cases, they overlap. For example, several works develop concepts where a hyperdimensional-computing idea is realized within an in-memory computing framework. To consistently identify computational frameworks, in this work, we consider the framework idea that each article has reviewed rather than the realization approach. Specifically, we use ‘neuromorphic computing’ for designs that incorporate time-dependent encoding of information learning and communicating.

This work provides a review of brain-inspired computing publications between 2016 and 2023 to answer the following key questions:

-

1.

What different approaches for brain-inspired computing are being pursued?

-

2.

What are the applications that brain-inspired computing is designed for?

-

3.

What is the trade-off between different approaches regarding energy efficiency?

We have chosen a systematic approach to collect, assess and synthesize state-of-the-art research articles based on the quality-evaluating rules defined in “Systematic approaches to a successful literature review” [39]. There it is pointed out that a systematic approach addresses present research deficiencies by emphasizing on clarity of each review step and preventing potential selection or topic biases.

Neuromorphic, in-memory, reservoir, and hyperdimensional computing have gained increasing attention from academia and industry as promising UC candidates. Therefore, we introduce the main concepts of these UC frameworks and report recent topical review articles of each framework. We aim to enable readers from different backgrounds to quickly grasp the concepts and pick up a recent review work to continue a more in-depth analysis of the topic. Finally, as the nature of different UC computing approaches widely varies, providing a quantitative comparison of their efficiency is challenging. We, therefore, adopt a normalization approach for the reported figures for the performance per watt (often provided in terms of operations per second per watt, or OPS/W) so that we can fairly compare different approaches.

The rest of the review is organized as follows. Section 2 provides the tutorial background and an overview of the recent topical reviews. Section 3 contains three subsections, each answering one of the above key questions. Finally, conclusions and the outlook for the field are discussed in Sect. 4.

2 Background and other reviews

A literature search is one of the first steps of a systematic literature review, as well as identifying the research questions [39]. The systematic literature search entails defining the search scope by choosing keywords and relevant libraries. As described in Appendix 1, we specified the keywords to be searched within several online libraries. Among the collected articles, we separated literature reviews from research articles. \(\sim\)17% (91 out of 547) of the collected papers that passed the relevance-based study selection (Appendix 1.2) filter were literature reviews. Figure 3 depicts the percentage share of each topic reviewed by prior literature reviews. Neuromorphic and in-memory computing are the most studied brain-inspired approaches, which share over 50% percent of previous literature reviews.

Overview of brain-inspired computing topics that previous literature reviews have studied. The percentages are based on the number of published reviews that passed the relevance-based study selection criterion (Appendix 1.2). Spintronics, molecular, in-sensor, and quantum neuromorphic computing are grouped in the “others” slice

In this section, we provide an essential introduction to neuromorphic, in-memory, reservoir, and hyperdimensional computing. This tutorial section is intended for readers from different backgrounds to get familiar with the main ideas in each approach. Furthermore, several literature reviews and surveys have already reviewed different perspectives of brain-inspired computing, where each focused on one or more aspects to examine in depth. Here, we mention these existing works to help guide the readers to find topically focused reviews for a more in-depth discussion of the respective field.

2.1 In(near)-memory computing

The von Neumann bottleneck is a fundamental limitation to higher performance and energy efficiency in modern computers [40]. In digital computers, different hierarchies of memory units, which are mainly physically separated from the processing units, store both data and instructions. Considering limited memory response time and bandwidth, data transfer—from memory to processing units and vice versa—imposes substantial energy and latency (response delay) costs [41]. Furthermore, as shown in Fig. 1, memory size and bandwidth are facing scaling challenges. These limitations have motivated hardware designers to migrate from processor-centric to data-centric designs [42], where memory systems enable simultaneous data storage and processing, an approach referred to as in-memory computing. In-memory computing is inspired by the synaptic operations in the brain to consolidate memory and processing units to tackle the modern computing systems’ data movement bottleneck.

Classical arithmetic operations, such as MAC, constitute the bulk of the computational requirements for models used in artificial intelligence [13]. Executing these operations in the von Neumann architecture requires—at least—three memory accesses for fetching the operands and storing the results. DNNs rely on immense MAC operations, and consequently, digital hardware executing these models requires significant energy for data movement, which holds up more than 70% of the total energy consumption in the chip [12, 43]. Therefore, there has been extensive research to design alternative circuitries to accomplish MAC operations more efficiently, preferably exploiting massive parallelism. Computing in non-volatile memory addresses this problem by minimizing memory access and harnessing the laws of physics to perform arithmetic tasks with high energy efficiency.

Figure 4 depicts an example of an in-memory computing approach, from system-level architectural design (left) down to device structure (right). The in-memory computing core relies on memristive devices such as phase-change memory (PCM) [44] and ferroelectric [45] memory cells, which are programmed to maintain a specific conductance over an extended period. This eliminates the need for memory accesses to retrieve weights and improves overall efficiency. When a voltage value is applied to the inputs, according to Ohm’s law, the current output is equivalent to a multiplication operation, sensed by an analog-to-digital converter (ADC), shown as the equation in Fig. 4. Then, following Kirchhoff’s current law (KCL), the multiplication results are accumulated on the word line [46].

In-memory computing, from architectural design to device materials science. a A crossbar array of memory cells to parallelize MAC operations. Each row shares the same (analog) input voltage (\({V}_{in}^{(n)}\)) multiplied by different conductance \({G}_{i, j}\) of memristive cells. b Based on Kirchhoff’s law, the accumulated output current of column ‘c’ is shown in the equation. c Two examples of memristive devices, namely phase-change [44] and ferroelectric [45] memory cells. In a PCM device, the non-volatile resistance is tuned by the amorphous/crystalline phase of the programmable region by heat generated by the applied voltage to the electrodes (left). In a ferroelectric device, the non-volatile resistance is written by changing the orientation of the ferroelectric material (right). The massive parallelism, non-volatile multiplication’s operand, and efficient accumulation of current have motivated researchers to develop AI hardware accelerators based on non-volatile memory cell crossbar arrays [47, 48]

This non-von Neumann approach significantly reduces the number of memory accesses compared to digital solutions, thus providing a massively parallel and more energy-efficient solution. However, it is worth mentioning that driving in-memory computing cores requires peripheral circuitry, such as analog-to-digital and digital-to-analog converters (ADC/DAC), global memory, and controller logic, which have a significant contribution to the total power consumption of the chip [49]. Related challenges are achieving higher computational precision [50, 51], inherent stochastic switching of devices and their impacts on reliability [52, 53], and levels of parallelism [54]. In the following paragraph, we report some of the existing literature reviews discussing in(near)-memory computing.

2.2 In-memory computing literature reviews

2.2.1 General reviews

In Ref. [42], Siegl et al. provide a general overview of in-memory computing, where they consider near/in-memory an urgent requirement for modern computing systems. Moreover, Ref. [42] provides a practical explanation for bottlenecks of modern computing systems, i.e., memory, power, and bandwidth walls, and explains the issues of scaling down CMOS and its effect on energy efficiency.

References [55] and [56] classify computing platforms based on the memory access types, whether processing units are far, near, or in the memory. In these reviews, Singh et al. focus on near-memory computing and discuss data movement costs in modern computing systems. They propose that moving towards near-memory computing can be an immediate solution for data-intensive tasks.

Sun et al. [57] review the memristive devices from an evolutionary perspective, where they cover materials, nanostructures, and the (physics of) mechanisms enabling them to be used as artificial synapses and hardware for neural networks. With a focus on biomedical AI, Sun et al. propose a guiding principle and a promising outlook for developing memristive devices-based brain-like chips.

2.2.2 Architecture and circuit design

References [58,59,60] provide a detailed system-level design tutorial for analog computing based on non-volatile memory devices. By reviewing numerous types of emerging memory devices, Haensch et al. in Ref. [58] focus on hardware accelerator designs for deep neural networks based on those memory devices. In Ref. [59], realizing in-memory learning for deep learning is reviewed. In Ref. [60], Staudigl et al. further discuss the security aspects of in-memory computing for future computers.

Processing in major digital memory types, i.e., main memory realized with dynamic random-access memory (DRAM) and static random-access memory (SRAM), has gained significant attention as an in(near)-memory computing paradigm. Memory size, bandwidth, and latency scaling challenges as well as high power consumption attributed to data movement from main memory are primary concerns for efficient data-intensive computing tasks [61]. Below we report some in-digital-memory computing reviews where recent attempts to enable in-memory computing with minimal structural changes to the fabrication process have been studied.

2.2.2.1 In-DRAM

Mutlu et al. [41] provide motivation for in-memory computing by discussing the main challenges of the processor-centric paradigm. They discuss low-cost in-memory computing solutions, which can practically enable digital computers to perform in-DRAM bulk operations or add logic layers into 3D memory systems. Moreover, they argue that these solutions are promising approaches requiring minimal adaptations to be integrated into CMOS-based processor design and fabrication.

2.2.2.2 In-SRAM

References [62] and [63] discuss the recent advances in in-SRAM computing systems. In Ref. [62], Kang et al. provide a comprehensive overview of deep in-memory architectures (DIMAs) based on SRAM, where various topics, ranging from mixed-signal processing in-memory, energy/delay trade-off, signal-to-noise considerations (analog approximate-computing), data flow, and DNN algorithm in-memory, and a case study of a complete DIMA (named PROMIS) are analyzed. In Ref. [63], Jhang et al. highlight the compatibility of SRAM memory and logic circuits, i.e., processing elements, and the opportunities of co-designing integrated storage and computing. In addition to providing a thorough introduction, Jhang et al. review the recent advances in SRAM-based MAC operation acceleration optimized for edge AI.

2.2.3 Emerging non-volatile memory devices

Processing in-digital memory relies on binary bits [64] or, to a limited extent, multi-bit operations [65]. Achieving high computational accuracy, specifically for more complex models and datasets, requires higher precision operations. Non-volatile memory devices can mitigate the data movement bottleneck, providing higher precision operations when compared to their digital counterparts by enabling storage of multiple conductance levels. Here we report an overview of the most studied materials for these emerging devices.

Sebastian et al. [49] broadly classify memory devices suitable for non-von Neumann computing, ranging from charge-based to resistance-based memory elements. They provide an overview of the current applications that may benefit from efficiency improvements brought by the in-memory computing paradigm. Furthermore, they review state-of-the-art chip design demonstrations and compare the reported results of each design in terms of technology node size, weight and activation precision, and inference accuracy.

2.2.3.1 Resistive random-access memory (ReRAM)

Resistive random-access memory (ReRAM) has been intensively reviewed in the literature. References [66,67,68,69,70] study the recent advances in developing non-volatile memory devices from device and circuit level up to architectural designs.

2.2.3.2 Phase-change materials

References [71,72,73] provide a detailed review of phase-change materials for in-memory computing. While Sebastian et al. [71] introduce a detailed analysis of PCM-based crossbar arrays for deep learning hardware acceleration, Refs. [72] and [73] discuss the physics of the phase-change mechanism and experimental characterization of this property.

2.2.3.3 Ferroelectric materials

Ferroelectric materials are another group of promising candidates for developing multi-stable states nonvolatile memories, as they can permanently hold electrical polarization. Li et al. [74] review hafnium oxide films for in-memory computing applications focusing on materials studies and the memory device structure.

2.2.4 Other reviews

Xu et al. [75] review the recent developments in the in-memory implementation of stateful logic gates. They argue that logic operations can be encoded into the resistance of a memory cell, i.e., high, and low resistance levels as a logical 0 and 1,respectively. They explain the parallelizing of logic gates’allocation on crossbar arrays of memristive devices. Furthermore,they discuss the possibility and advantages of developing practical applications of stateful logic in memory in a complete computing system.

2.3 Neuromorphic computing

Modern graphic processing units (GPUs) currently deliver \(\sim\)10s of tera floating-point operations per second (TFLOPS) for mathematical operations [76], such as MACs. On the other hand,the human brain is highly efficient in solving complex cognitive tasks, such as face recognition,driving, and logical decision-making. It is estimated that the brain consumes \(\sim\)20 W—significantly lower than modern supercomputers executing similar tasks—performing \(\sim\)1015 “operations” per second [77]. Therefore,emulating brain cells’ functionality directly in hardware has gained significant attention in the past couple of decades, a concept referred to as neuromorphic computing. The goal is to develop adaptable,learnable, and energy-efficient computing systems inspired by the brain [78].

Figure 5a represents a simple visualization of a biological neuron cell and its main parts. The membrane potential of a neuron in the rest state is kept constant (\(\sim\)\(-\)70 mV) by the ion channels of the membrane. While a neuron integrates input temporal information with varying strengths (Fig. 5b), it fires a voltage spike (\(\sim\)30 mV amplitude) when the membrane potential surpasses a threshold in response to incoming stimuli. This spike, then, travels through the axon towards the synapse, where two neurons connect. Research suggests that our memory formation may occur based on the strength of interconnection between two neurons at synapses (for controversiality see, e.g., Ref.[79]).

a A biological neuron cell schematic and the electrical characteristic of a spike. The information transferred between neurons is encoded in time rather than amplitude. A spike can be generated (red) by a neuron if the membrane potential surpasses the threshold because of the input stimuli (blue). In the rest condition, membrane potential (Vrest) is \(\sim\) − 70 to − 80 mV relative to the extracellular environment. b A simple model for an artificial spiking neuron. The input stimuli to the neuron from previous layers have different effects on output generation, e.g., wi represents the strength of each connection. This can be interpreted as the strength memorized in a synapse c. An electronic circuitry example for a leaky-integrate-and-fire (LIF) neuron model. Input stimuli (I(t)) can build up charge on the capacitor, and when the threshold voltage is met, the voltage-control (VC) switch activates and discharges the capacitor. As the input current level increases, the output firing rate increases linearly (blue) or nonlinearly (purple), depending on the resistance behavior

The computational power of the brain stems from the large number of these synaptic connections. The estimated number of neurons in the brain is \(\sim\)1011, where each neuron connects to \(\sim\)1000 other neurons [80]. The massive interconnectivity for communicating information and the time dynamics between neurons make the brain a unique and powerful biological information processing system [81]. Furthermore, neurons communicate with each other through synapses, which are the junctions between neurons where spike-based electrical signals are transmitted [82]. Sparse and asynchronous spike-based signal transmission and memory formation by strengthening or weakening synaptic connections make the brain highly energy-efficient [83]. This motivates neuromorphic researchers to imitate the mechanisms observed in biological neurons in hardware or computational models such as spiking neural networks.

Figure 5c shows a simple electronic circuit for the hardware realization of a neuron model (integrate-and-fire). Inspired by the electrical characteristic of neurons, information can be encoded into the time dynamics of spike events. Specifically, depending on the temporal relations between the input spike events, the integrated potential can meet the threshold to generate an action potential. In this case, the voltage control switch can be considered as a comparator, which generates an output (action potential) when the membrane potential reaches the threshold.

Spiking neural networks (SNNs) are a subclass of artificial neural networks (ANNs) that use asynchronous spikes for learning and communication among neurons [81, 84]. In contrast to conventional DNNs, where the real-value signals (e.g., a floating-point number) are stored as weights and input activations, SNNs encode data into the time dynamics of spikes. Specifically, spikes are discrete events in time, and often information is encoded based on the temporal order of these events rather than their magnitudes. One of the central concepts of the SNN approach is the neuron model, which represents a hardware (or computational) model for neurons and their interconnections.

Leaky integrate-and-fire (LIF), for instance, is one of the widely studied neuron models, effectively modeling the firing patterns of neurons [81, 85, 86]. However, the typical hardware realization of LIF models using conventional electronics (Fig. 5c), often exceeds the design budget in terms of area and power consumption, especially when the network expands. More recently, memristive devices have also been shown to be promising realizations [87]. Therefore, neuromorphic engineering benefits from materials science to develop innovative devices for more efficient solutions [88].

2.4 Neuromorphic computing literature reviews

2.4.1 System-level architecture design and algorithmic developments

References [89] and [90] provide a historical review of neurobiologically inspired computing systems and report recent achievements. In Ref. [89], Furber provides an extensive overview and comparison between biological brains and recent large-scale neuromorphic computers, viz., Neurogrid, BrainScaleS, Truenorth, and SpiNNaker. In Ref. [90], James et al. focus on the interdisciplinary research field of hardware and algorithms for neuromorphic computing and possible co-developments.

Reference [91] clusters neuromorphic system designs into two categories: approaches inspired by biology to realize neuron behavior in hardware (bottom–up) and hardware/software developments to tackle current bottlenecks (top–down). Further, Frenkel et al. compare the tradeoffs of these two approaches and provide analogue, digital, and mixed-signal designs for neuromorphic systems.

2.4.2 Neuromorphic computing with non-volatile memory devices

Non-volatile memory devices play an important role in neuromorphic computing systems. References [83, 92,93,94,95,96,97,98] review the solutions to current challenges in non-volatile memory device developments, such as conductance dynamic range, non-ideal (non-)linearity, endurance time, switching power consumption, and device-to-device variability.

The following reviews focus on specific materials to develop non-volatile memory for neuromorphic computing:

2.4.2.1 Resistive switching materials

Developing hardware that mimics synaptic operations of the brain using non-volatile resistive switching materials has been reviewed in several works [99,100,101] focusing on event-driving data movement and spike-timing-dependent plasticity.

2.4.2.2 Phase-change materials

In addition to using PCM devices in developing analog in-memory computing systems, PCM has been also reviewed as a candidate to model synaptic behavior. In Ref. [102], Zhang et al. review crystallization dynamics in PCM and PCM-based synapse and neuron models.

2.4.2.3 Ferroelectric materials

The fundamental working concept of ferroelectric devices in terms of storing weights and modelling neuron activities has been reviewed by Oh et al. [103]. In this work, hafnium oxide is also discussed as an emerging ferroelectric material, and the methods to improve the scaling up procedure and scaling down of device sizes are reported.

2.4.2.4 Spintronic nanodevices

Recent advances in developing synaptic behavior using the physics of electron spins have been reviewed in Refs. [104,105,106,107]. In Ref. [105], Grollier et al. provide background on synapses and neuron models based on magnetic tunnel junctions and review the application tasks developed on neuromorphic systems based on ferroelectric devices.

2.4.2.5 Nanowire networks

By discussing the physics behind the memristive junctions in a nanowire network, Kuncic et al. motivate utilizing the memristive dynamics within a nanowire network topology for neuromorphic information processing [108]. Furthermore, Kuncic et al. provide future research directions, such as organic nanowires and neuromorphic quantum processing.

2.4.2.6 2D material systems

Inspired by extremely low energy consumption due to the low dimensional structures of van der Waals materials, Cao et al. review the physical properties of 2D materials for developing synaptic devices [109].

With growing interest in using novel materials for neuromorphic computing, the search for suitable materials and characterizing their properties has gained attention in materials science. Lu et al. [110] have studied the use of machine learning algorithms instead of traditional computational strategies to facilitate this search for 2D materials.

2.4.3 Flexible electronics and organic materials

References [111] and [112] report the recent advances in flexible electronics (or systems on plastic) and organic materials for neuromorphic computing, respectively. Lee et al. discuss the potential benefit and research fields for developing intelligent, flexible sensors and processors for medical applications, such as always-on wearable on/under skin monitors. Van de Burgt et al. review the organic resistive switches and their promises in biocompatibility and inexpensive scaling-up. Moreover, Ref. [112] discusses the switching mechanisms in organic devices, approaches to improving the resistance-to-conductance ratio, and improving the speed and size of those devices.

2.4.4 Superconductive electronics

Realizing primitive neuromorphic operations, such as charge integration and firing in spiking neurons, naturally in a device is an assuring approach toward energy-efficient neuromorphic computing. One example of these devices is Josephson junctions that can convey information in the form of voltage spikes without the resistive-capacitive time constants typically used in spiking neuromorphic hardware. Schneider et al. [113] review superconductive devices, circuits, and architecture demonstrations that are biologically inspired for neuromorphic computing.

2.5 Reservoir computing

Temporal dynamics found in the recurrent connections of biological neural networks is another source of brain inspiration underlying the field of reservoir computing [114]. Conventionally, recurrent neural networks (RNNs) are employed to exhibit temporal dynamics by adding cyclic (recursive) connections, where the output of a neuron is fed to the input of the same or another neuron [115]. Despite their capabilities in time-series-analysis tasks [116], RNNs are challenging to be trained because of the vanishing gradient problem [117] and are costly to run in terms of time and power consumption [118]. Furthermore, more recurrent units and training data are required to achieve higher performance in complex tasks. To overcome these challenges, reservoir computing (RC)—as an RNN computational model—has turned out to be an effective tool showing promising results as well as resolving RNN’s computing problems. RC is a broad term for similar approaches encompassing echo state networks (ESNs) [119] and liquid state machines (LSMs) [120], which use different types of input encoding.

Figure 6 represents an example of a high-level projection and a generalized schematic for an RC system, including input, reservoir, and linear (readout) layers. A high-level projection for linearly inseparable tasks, such as exclusive OR (XOR), makes the classification of the projected data more straightforward and feasible with a linear plane (Fig. 6a). The reservoir applies nonlinear filtering and maps inputs into a high-dimensional space by exploiting the time dynamics that emanate from delayed nodes and recurrent connections. Figure 6b gives an example of RC-based motion recognition, which requires spatial and temporal feature extraction using the DVS-128 gesture dataset [121].

a An example of high-level projection for the exclusive OR (XOR) gate [128]. In 2D, a linear decision boundary cannot separate two classes; however, in a 3D projection, a linear plane can separate the classes. b A conceptual schematic for reservoir computing (RC). An input layer feeds inputs to the reservoir layer. The reservoir layer contains recurrent and/or time-delayed units performing complex high-dimensional feature extraction. The linear (readout) layer contains learnable parameters similar to a fully connected layer of a NN. The reservoir layer can be realized in various substrates, from mixed-signal to in materia

The reservoir layer can be realized in different material systems, such as memristors [122], spin-torque nano-oscillators [123], and organic electrochemical devices [124]. The reservoir layer requires no training; it exploits the inherent time dynamics of the underlying materials system for high-dimensional projection. Furthermore, short-term memory is a common feature of different reservoirs, which fades within a specific period. To compare and evaluate reservoirs, often four criteria are used in the literature, echo state property (how well they “forget” previous states), memory capacity (how well they “recall” previous inputs), degree of nonlinearity, and separation property (how well they generate separable states from inputs) [125].

The functioning of the reservoir layer is not easy to explain as it is a complex dynamical system. However, it is assumed that the recurrent connections as well as the (variable) time delay between different nodes causes high-dimensional input mapping [126]. The high-dimensional features, then, become linearly separable, and the readout (linear) layer associates those features with the desired outputs. Compared with RNNs, an RC model has significantly fewer learnable parameters, only in the readout (linear) layer. The RC approach, therefore, solves the problem of the expensive training phase of RNNs, for temporal tasks, such as gesture recognition [122, 127].

2.6 Reservoir computing literature reviews

2.6.1 System architecture design

The reservoir layer can be realized using existing conventional devices, such as analog circuitry (resistor and capacitor), digital hardware (e.g., field-programmable gate array (FPGA), and very-large-scale integration (VLSI) designs), and in-materia approaches (e.g., using memristors) [129]. In-materia reservoir computing benefits from exploiting the intrinsic time dynamics of physical systems, hence, leading to potential improvement in the implementation efficiency [130]. Tanaka et al. have classified reservoir layer realization approaches into electronic, photonic, spintronic, mechanical, and biological groups [125].

Bala et al. [131] address a noticeable research gap in the RC paradigm, which is optimizing the RC model architecture and parameters for various applications by reviewing the usage of metaheuristics in RC, focusing on ESNs as early RC approaches. They introduce metaheuristic optimization techniques and review the works focusing on optimizing echo-state networks.

2.6.2 Non-volatile memory devices

Cao et al. [132] review RC realization with a focus on dynamic memristors. They introduce memristors as promising candidates for realizing a reservoir layer and, by reviewing the recent advances, compare different memristive approaches in terms of time constant (related to time dynamics of the network), task (image recognition or natural language processing), and their performance (accuracy). Cao et al. provide a perspective by discussing the recent works that have focused on either input, reservoir, or output layer and recommend future research directions.

2.7 Hyperdimensional computing (vector symbolic architectures)

The energy efficiency of the human brain in accomplishing complex cognitive tasks can be attributed to the fact that the brain processes patterns rather than performing exact mathematical operations [133]. Per each synaptic operation, where neurons communicate and form/strengthen connections with each other, a small amount of energy is consumed, whereas realizing a neuron model, such as LIF, in hardware with traditional electronic circuits demands orders of magnitudes higher amounts of energy [134]. Therefore, a computational framework, which takes inspiration from attributes of neural circuits rather than individual modeling of a neuron [135], has recently emerged as hyperdimensional computing.

The core of hyperdimensional computing (HD, also known as vector symbolic architectures) is hypervectors with a dimensionality of order \(\sim\)1000 s, which are (pseudo)random with independent and identical distributed (i.i.d) components; i.e., approximately orthogonal vectors [136]. For example, in classical computers, images are represented by matrices of pixel intensities, where higher magnitude means brighter pixels. However, an image can also be stored as a hyperdimensional vector (with a dimensionality of, e.g., 10,000), which is a fully holistic representation, and every unit of data (binary digit) in the hypervector contains an equal amount of information compared to the other idem. This ultra-wide information representation allows a faster yet more reliable approach to machine learning [137].

After encoding information into hypervectors, a set of operations can be defined to combine, manipulate, and compare hypervectors [136]. Specifically, similarity check between classes of hypervectors is a major step in data classification in HD computing [137]. Furthermore, three operations, namely bundling, binding, and permutation are used in hyperdimensional computing to accumulate, combine while preserving similarity, and generate new hypervectors, respectively [138].

Hardware implementation of vector symbolic architectures to operate on large hypervectors can be notably challenging. Comparing and permuting HD vectors can quickly become bottlenecked by slow memory access [139]. Therefore, in-memory computing is widely studied as an energy-efficient approach for hyperdimensional computing [135, 140,141,142]. Furthermore, several literature reviews have studied the recent advances in this research field from a theoretical and practical perspective [133, 137, 143].

2.8 Other literature reviews

Li et al. [144] introduce the dynamical behavior of magnetic skyrmion nanoscale devices as a potentially promising emerging approach for unconventional computing. They review the principal features of skyrmion devices and report works that develop neuromorphic, reservoir, and stochastic computing systems based on them.

References [145] and [146] are perspective articles that discuss quantum approaches for developing neuromorphic and reservoir computing systems, respectively. Marković et al. [145] discuss different approaches to quantum neuromorphic circuitry realization using digital or analog circuits and review the recent advances in this field. Mujal et al. [146] introduce the realization of a reservoir with quantum substrates to exploit potential benefits. They also review the recent proposals that aim to develop quantum reservoir computing systems and compare them with their conventional counterparts. Csaba et al. [147] review oscillator-based computing as a brain-inspired computing approach focusing on physics of oscillatory systems. Further, they discuss the characteristics, promises, and limitations of these next-generation computing systems in order to help enabling more effective computing solutions.

3 Synthesis and analysis of collected research articles

The synthesis section integrates the collected information and presents the findings using quantitative and qualitative methods [39]. We extracted the results based on pattern recognition among the studies, where the similarities (analogy) and direct relationships (homology) between different brain-inspired computing research topics are examined [148]. Thus, we combined descriptive, narrative, and quantitative approaches to report our findings and answer the research questions.

Figure 7 depicts the number of published articles for two fields, machine learning and brain-inspired hardware for machine learning, where since 2015, both numbers have sharply risen. This trend is more noticeable for neuromorphic, in-memory, and reservoir computing fields. This correlation is interesting for two reasons. First, it suggests that with the advances in AI, and with more and more applications using intelligent data processing [149], the demand for more efficient hardware capable of executing large-scale neural network models has increased. Novel solutions are sought after to help AI to become more versatile and applicable in different applications. Second, as Fig. 1 and the inset of Fig. 7 illustrate, both the computational requirements and the research interests in AI are likely to continue rising.

Number of published articles (using IEEE Xplore, Nature, and ACM digital libraries) for mainstream brain-inspired computing paradigms. Inset: Number of published papers at the Conference on Neural Information Processing Systems (NeurIPS). The number of brain-inspired computing publications and accepted papers at NeurIPS has sharply increased since 2015

3.1 Hardware perspectives

Table 1 categorizes the hardware approaches of each brain-inspired computing framework. The table is based on articles that (a) introduce a brain-inspired approach, (b) design and realize dedicated hardware, (c) solve a specific task, and (d) report an efficiency metric. We have classified the underlying hardware into three major groups: analog/mixed-signal circuitry, non-volatile memory devices, and photonic hardware.

3.1.1 Analog/mixed-signal circuitry

The integration of analog electronic circuits with CMOS-based digital designs has the potential to combine the benefits of two compatible computational approaches. Although various works study novel material systems beyond CMOS technology (see, e.g., Sect. 2.1), transistors, particularly FETs, benefit from several characteristics. The mature fabrication process, standard design toolchains, and the compatibility of integration with CPUs and GPUs are advantages. SRAM and DRAM, for example, are commercially applied in CPUs for storage in multiple hierarchy levels, from the cache to the main memory [150]; thus, as Table 1 indicates, processing in-static/dynamic RAM has recently been studied as an in-memory computing paradigm to tackle the data movement (von Neumann) bottleneck in modern computing systems.

However, conventional CMOS-based analog/mixed-signal circuits are less studied for the neuromorphic computing paradigm. A possible reason could be that developing a hardware platform with a standard electronic circuit for temporal time-encoded spikes processing is not considered an efficient approach [151], which motivates neuromorphic and materials scientists to explore novel approaches and materials to mimic brain functionality in hardware.

Furthermore, emerging nanoscale devices inherently operating with analog signals are another candidate for analog/mixed-signal integration with CMOS-based digital circuits. For instance, dopant network processing units (DNPUs) are nanoscale, highly nonlinear and tunable devices fabricated in silicon, conceivably, CMOS compatible [152, 153]. Exploiting their nonlinear hopping transport mechanism [154], DNPUs can be reconfigured to solve various linearly inseparable classification tasks, such as X(N)OR and kernel feature extraction [155, 156]. However, to achieve a complete, energy-efficient hardware architecture to solve complex tasks, such emerging devices are required to be integrated at the back-end-of-line (BEOL) of CMOS very-large-scale integration (VLSI). There, a large number of these devices can be integrated as computationally high-capacity neurons [157] while the communication between memory and other peripheral circuits can be optimized.

3.1.2 Resistive non-volatile memory devices

Memristive devices are the central hardware realization choice for in-memory and neuromorphic computing. Profiting from storage and computing integration, they are studied to mimic synaptic behavior (Sect. 2.3) and to develop crossbar arrays for accelerating DNNs’ inference (Sect. 2.1). In this regard, particular characteristics, such as area efficiency (density), storage capacity, switching energy/speed, and stability (data retention time) are of significance and have been compared in the literature [66, 158].

These characteristics change among various device technologies, depending on their switching mechanisms, e.g., phase-change transition, redox reaction, ferroelectricity, filamentary, and magnetoresistive, where each can offer specific properties. For example, while PCMs exhibit relatively high programming variability [66, 159], magnetoresistive memory devices offer lower stochasticity [160]. One should note that each computing application, such as analog matrix–vector multiplication or acceleration of digital Boolean operations, requires different properties. Thus, while materials scientists aim to improve device characteristics, each non-volatile memory technology may be more suitable for particular applications. Finally, it is worth mentioning that conventional electronic circuitry plays a crucial role in designing peripheral electronics [161] and, consequently, affects the resultant efficiency of the computing system. Incorporating memristive devices with novel tunable non-linear devices [128] could improve the efficiency of in-memory computing systems.

3.1.3 Photonic processing

There are two substantial arguments supporting the potential of light-based information processing: an inherent high degree of parallelism [162] and processing in the (optical) input signal’s domain [163]. Photonic vast parallelism can be realized with wavelength division multiplexing [164]. Simply put, encoding data into multiple wavelengths allows inherent parallel processing and data transmission [165]. Therefore, it is potentially an efficient solution for data-intensive tasks, such as computer vision. However, the high power consumption of optical modules, the difficulty of scaling these modules into a small chip, and the challenge of integrating photonic computing with electronics are examples of ongoing challenges for improving the efficiency of photonic-based computing systems.

For example, Ref. [166] argues that photonic ANN accelerators are not efficient solutions in the training phase and are only effective at the inference stage of ANNs. According to Ref. [166] and Ref. [167], one of the reasons is that phase shifters utilized in photonic ANNs are slow because of their slow heat dissipation [168]. Therefore, Nakajima et al. [166] argue that the RC framework fits well for photonic computing since only a (linear) readout layer requires training.

Table 1 summarizes realization approaches of light-based information processing under reservoir and neuromorphic computing solutions. Other approaches, such as photonic tensor processing cores studied in Refs. [36, 164, 169,170,171] are not categorized in this table.

3.2 Application perspectives

Table 2 represents the applications and reported classification accuracy of the works that passed quality assessment rules based on their approaches: in-memory computing (orange), neuromorphic computing (yellow), photonic (gray), and reservoir computing (blue). It is worth mentioning that the reported accuracies are acquired from experimental measurements, i.e., simulation results are not included. Image classification is a complex task that most of the works have addressed. The modified National Institute of Standards and Technology (MNIST) dataset is a collection of handwritten digits, which has turned into a canonical benchmark test to demonstrate the functionality of a proposed solution.

The application synthesis results suggest that the brain-inspired computing framework is currently limited to bringing more efficient visual/auditory sensing and processing to machines. The computing approaches follow two main strategies: (a) designing and implementing a novel hardware realization to accelerate existing ANN computational models (such as the approach in photonic tensor core processors [164]) and (b) proposing both a new computational model [157] and a hardware realization that differs from ANN models (such as hyperdimensional computing [135] and dopant network processing units [128]). We can conclude that for each of these strategies, simultaneously considering higher energy efficiency and targeting existing computational bottlenecks can be the most promising use case of brain-inspired computing systems. For example, reservoir computers and in-memory long short-term memory (LSTM)-based RNNs are notable instances of powerful frameworks for temporal data processing [208, 225].

Additionally, because edge applications interact closely with users and monitor their activities, using cloud computing to process the gathered personal data may raise privacy and security issues. Therefore, brain-inspired computing approaches potentially can not only improve the energy efficiency, but also privacy in the processing of sensitive data [226].

To summarize this section, it is inspiring to look at startups developing commercial UC solutions, indicating growing technological maturity. For instance, as Table 1 denotes, the in-memory computing approach has the highest number of publications, we expect it to be the most popular option for near-future commercial products. Consistent with this observation, various startups and companies involved in in-memory computing can be named, such as Axelera, Mythic, Rain Neuromorphic, NeuroBlade, and Areanna. For more examples of in-memory computing chips taped out from academia, please refer to Ref. [227].

3.3 Efficiency perspectives

Energy efficiency is one of the main benchmarking metrics, besides computing density (performance per area) and computing accuracy, which were proposed in Ref. [234]. Here, we evaluate and compare the efficiency of various brain-inspired approaches using the energy efficiency metric (or performance per watt), which compares the number of operations performed in the unit of time divided by power consumption (operations/second/watt = OPS/W). However, as an emerging field, not many UC publications report the efficiency metrics uniquely. For instance, a neuromorphic system may not directly compute a MAC operation, though it solves a task, which requires the same number of MAC operations. In those cases, we use energy consumption per each equivalent MAC operation, as explained in Ref. [178].

Furthermore, naively comparing the reports from the literature will not lead to a fair comparison, as the term “operation” is not uniquely defined among different fields. To clarify, consider the multiply-and-accumulate (MAC) operation in digital processors, where the operation energy consumption is significantly dependent on the bit width of the input operands [13, 235] or equivalently to the signal-to-noise ratio (SNR) in analog computing [236]. Furthermore, not every article reports the full chip power consumption, which includes the power consumption of peripheral components, such as ADCs and DACs [36].

Therefore, we suggest employing the 8-bit integer MAC operation as a standard calculation method for comparing efficiency metrics in studies that detail the power consumption of their computing systems. We chose this standard because 8-bit integer operations, or even fewer bits [219, 221], offer sufficient precision for training and deploying neural networks across diverse applications. When comparing reports, we first considered the bit width of the input operands (activation and weight bit widths) of each reported metric to normalize it to an 8-bit MAC operation. For example, accomplishing an 8-bit MAC operation with an 1-bit or 4-bit MAC unit requires 64 or 16 separate operations, respectively [235]. Therefore, for normalizing to 8-bit operations, we adjusted the energy efficiency specifications accordingly. Figure 8 summarizes the thus normalized energy efficiency reports of the synthesized research articles.

In-memory computing delivers the highest energy efficiency regardless of the hardware type used for implementation, i.e., either using CMOS-based SRAM cells or non-volatile memristive devices. There are two possible explanations for this observation. First, in-memory computing, particularly crossbar arrays, can massively parallelize MAC operations. As described in Sect. 2.1, weight-stationary architectures store one operand of a MAC operation as the conductance of a memory cell. Thus, they significantly reduce data movement. Furthermore, the accumulation operation is performed as current accumulation based on Kirchhoff’s law, which helps to reduce the overall operation costs as the number of parallel operations increases.

Secondly, in-SRAM computing benefits from the mature CMOS technology. This makes the peripheral circuitry and processor integrations easier and more efficient. Additionally, various materials science studies report non-volatile memory devices, in which a higher dynamic range of resistance levels, and thus, more computational precision, could be realized. These results imply that with better architecture, materials, and hardware-software co-design [161], in-memory computing can set new standards for parallel processing.

What further stands out in Fig. 8 is that photonic tensor cores, which accelerate matrix-by-vector multiplication (MVM), report modest energy efficiency despite their admirable throughput [36, 164, 237]. Although photonic integration with in-memory computing has gained noticeable attention, optical power supply, signal conversions, and modulators are the dominant power consumers [164]. As the field evolves, we may envision two future scenarios for integrated photonics. First, exploiting their significant throughput, photonic computing can offer more benefits in situations where energy consumption is less constrained than in edge computing, such as critical low-latency online video processing [238]. Second, sensor integration for eliminating signal conversion steps and processing in-physical domains, which potentially can provide promising solutions for machine-vision applications.

Reducing throughput, i.e., the number of operations in a unit of time can lead to higher energy efficiency for photonic tensor cores. This approach has been demonstrated in Ref. [233], which in Fig. 8, shows the highest energy efficiency for photonic tensor cores. However, as the size of the matrix-by-vector accelerator (equivalently, throughput) grows, the power consumption significantly increases. For example, in Ref. [233], for a 256 by 256 photonic tensor core system, \(\sim\)1 kW power consumption is reported, which is prohibitively high for most edge devices.

The reservoir computing realization in Fig. 8 represents a photonic computing approach [166]. This work has been separated from photonic tensor cores as they represent a different computational concept. Consistent with the results from photonic tensor cores, optical reservoir realizations are also limited in energy efficiency based on the same arguments provided previously. One possible approach to improve the efficiency metric is to realize all reservoir layers, viz., input, reservoir, and readout layers, in one signal domain, e.g., optical, as discussed in Ref. [166]. Potentially, this approach will eliminate some necessary signal conversions and reduce overall energy consumption. Thus, we may conclude that future studies should endeavor to solve the mentioned challenges with innovative solutions to introduce photonic computers that are not only fast but also efficient.

4 Discussion and conclusion

Throughout this systematic review, we emphasized the necessity of moving from conventional computing hardware systems to novel, unconventional approaches. Digital computers are facing challenges in incorporating AI with efficient computational power and low latency, especially on the edge. Thus, in this review, we discussed common aspects of upcoming alternative solutions and their promises. Numerous brain-inspired computing papers are published each year, which makes an objective comparison essential to evaluate different approaches. To make such a comparison possible, our review specifically aimed at brain-inspired approaches that reported efficiency metrics and applications. Since brain-inspired computing involves many fields of research, we also provided a brief introduction to neuromorphic, in-memory, reservoir, and hyperdimensional computing. We reviewed recent literature reviews and surveys that discuss each brain-inspired approach in detail and report their recent advances, where the readers can find an in-depth review of a brain-inspired computing subfield.

Conventional hardware has both benefits and drawbacks. The advantages lie with the mature design and fabrication processes, and the shortcomings involve limitations to traditional computer architectures, such as data movement bottleneck [239, 240], rather than, e.g., synaptic time-dependent neuromorphic operations. Brain-inspired concepts should be based on algorithmic requirements. Besides improving the functionality of existing devices, devices are needed that perform operations impossible or that are computationally expensive to execute by conventional hardware, such as non-linear and synaptic operations. In addition, a new general formal theory of “computing is needed for computing machines made out of whatever physics offers” [33].

To compare the efficiency of different brain-inspired approaches, we used a method to normalize the reported energy efficiency metrics. Although impressive energy efficiency metrics have been reported, we showed that in a fair comparison—where a unique operation is considered—the UC paradigm is still far away from human brain efficiency, which is approximated as \(\sim\)100 TOPS/W or equivalently 10 fJ/Op [77]. We provided possible reasons why the reported metrics in literature and our normalized values may differ, such as the varying definitions of an “operation”.

We see substantial potential for brain-inspired computing, especially at the edge, where it can integrate with sensors and memory to reduce signal domain conversion and data movement. For example, in-memory computing lowers data movement costs. Nevertheless, as a complete computing system, it requires energy-efficient peripheral circuitry, which is normally implemented in standard, digital CMOS. Moreover, photonic computing can merge with vision sensors to avoid signal conversion. Therefore, researchers should consider the costs of incorporating a brain-inspired computing approach into a complete computing system, which may inspire co-design and integration innovations.

With this review, we hope to contribute to the fascinating and rapidly expanding field of brain-inspired computing hardware, which shows great potential for revolutionizing the field of computing targeting the efficiency and adaptability of the human brain, or maybe even beyond. Brain-inspired computing has resulted in significant advancements in various fields, including image recognition, speech recognition, and natural language processing. However, there is still much work to be done in order to fully realize its full potential, especially on the hardware level. Nonetheless, the rapid progress made in this area of research suggests that we may soon be able to build computing systems that are not only more powerful, but also more energy-efficient, flexible, and adaptable, paving the way for a new era of intelligent computing.

Data availability

For the data we present in this study we refer to the corresponding authors of the respective articles.

References

W. Shi, J. Cao, Q. Zhang, Y. Li, L. Xu, IEEE Internet Things J. 3(5), 637 (2016)

S.F. Wamba, S. Akter, A. Edwards, G. Chopin, D. Gnanzou, Int. J. Prod. Econ. 165, 234 (2015)

C.-J. Wu, D. Brooks, K. Chen, D. Chen, S. Choudhury, M. Dukhan et al., Machine learning at Facebook: understanding inference at the edge. In: 2019 IEEE International Symposium on High Performance Computer Architecture (HPCA), 2019 (pp. 331): IEEE

R. Dahiya, N. Yogeswaran, F. Liu, L. Manjakkal, E. Burdet, V. Hayward et al., Proc. IEEE 107(10), 2016 (2019)

A. Morán, V. Canals, F. Galan-Prado, C.F. Frasser, D. Radhakrishnan, S. Safavi et al., Cogn. Comput. 1, 1–9 (2021)

J. Chen, X. Ran, Proc. IEEE 107(8), 1655 (2019)

S. Grigorescu, B. Trasnea, T. Cocias, G. Macesanu, J. Field Robot. 37(3), 362 (2020)

D. Chen, S. Liu, P. Kingsbury, S. Sohn, C.B. Storlie, E.B. Habermann et al., NPJ Dig. Med. 2(1), 43 (2019)

R. David, J. Duke, A. Jain, V. Janapa Reddi, N. Jeffries, J. Li et al., Proc. Mach. Learn. Syst. 3, 800 (2021)

B. Zoph, Q. V. Le, arXiv preprint https://arXiv.org/1611.01578 (2016)

Z.-G. Liu, P. N. Whatmough, Y. Zhu, M. Mattina, S2ta: exploiting structured sparsity for energy-efficient mobile CNN acceleration. In: 2022 IEEE International Symposium on High-Performance Computer Architecture (HPCA), (pp. 573), IEEE (2022)

Y.-H. Chen, T. Krishna, J.S. Emer, V. Sze, IEEE J. Solid-State Circ. 52(1), 127 (2016)

V. Sze, Y.-H. Chen, T.-J. Yang, J.S. Emer, Proc. IEEE 105(12), 2295 (2017)

G. Lauterbach, IEEE Micro 41(6), 52 (2021)

J.D. Owens, M. Houston, D. Luebke, S. Green, J.E. Stone, J.C. Phillips, Proc. IEEE 96(5), 879 (2008)

N. P. Jouppi, C. Young, N. Patil, D. Patterson, G. Agrawal, R. Bajwa et al., In-datacenter performance analysis of a tensor processing unit. In: Proceedings of the 44th annual international symposium on computer architecture, p 1 (2017)

K. Rocki, D. Van Essendelft, I. Sharapov, R. Schreiber, M. Morrison, V. Kibardin et al., Fast stencil-code computation on a wafer-scale processor. In: SC20: International Conference for High Performance Computing, Networking, Storage and Analysis, p 1 IEEE (2020)

O. Mutlu, Memory scaling: A systems architecture perspective. In: 2013 5th IEEE International Memory Workshop, IEEE, p 21 (2013)

J. Shalf, Phil. Trans. R. Soc. A 378(2166), 20190061 (2020)

W. Cao, H. Bu, M. Vinet, M. Cao, S. Takagi, S. Hwang et al., Nature 620(7974), 501 (2023)

R.H. Dennard, F.H. Gaensslen, H.-N. Yu, V.L. Rideout, E. Bassous, A.R. LeBlanc, IEEE J. Solid-State Circ. 9(5), 256 (1974)

H. Esmaeilzadeh, E. Blem, R.S. Amant, K. Sankaralingam, D. Burger, IEEE Micro 32(3), 122 (2012)

A. Gholami, Y. Zhewei, Kim, Sehoon and Mahoney, W. Michael, K. Keutzer, RiseLab Medium Post, (2021)

A.A. Conklin, S. Kumar, Nat. Electron. 6(7), 464 (2023). https://doi.org/10.1038/s41928-023-00985-1

J. Feng, K. Yu, Econ. Innov. New Technol. 29(4), 349 (2020)

L.H. Kaack, P.L. Donti, E. Strubell, G. Kamiya, F. Creutzig, D. Rolnick, Nat. Clim. Change 12(6), 518 (2022). https://doi.org/10.1038/s41558-022-01377-7

C.A. Thraskias, E.N. Lallas, N. Neumann, L. Schares, B.J. Offrein, R. Henker et al., IEEE Commun. Surv. Tutor. 20(4), 2758 (2018)

S.M. Khan, A. Mann, AI chips: what they are and why they matter. Center for Security and Emerging Technology (2020)

C. Kaspar, B.J. Ravoo, W.G. van der Wiel, S.V. Wegner, W.H.P. Pernice, Nature 594(7863), 345 (2021). https://doi.org/10.1038/s41586-021-03453-y

G. Cauwenberghs, Proc. Natl. Acad. Sci. 110(39), 15512 (2013)

Ziegler, M., Mussenbrock, T., & Kohlstedt, H.: Springer Nature, 2024.

A. Adamatzky, vol 22, Springer (2016)

H. Jaeger, B. Noheda, W.G. van Der Wiel, Nat. Commun. 14(1), 4911 (2023)

Y. Han, C. Nickle, Z. Zhang, H.P. Astier, T.J. Duffin, D. Qi et al., Nat. Mater. 19(8), 843 (2020)

L.S. Madsen, F. Laudenbach, M.F. Askarani, F. Rortais, T. Vincent, J.F. Bulmer et al., Nature 606(7912), 75 (2022)

F. Ashtiani, A.J. Geers, F. Aflatouni, Nature (2022). https://doi.org/10.1038/s41586-022-04714-0

D. Melanson, M. A. Khater, M. Aifer, K. Donatella, M. H. Gordon, T. Ahle et al., arXiv preprint https://arXiv.org/2312.04836 (2023)

D.V. Christensen, R. Dittmann, B. Linares-Barranco, A. Sebastian, M. Le Gallo, A. Redaelli et al., Neuromorph. Comput. Eng. 2(2), 022501 (2022)

A. Booth, A. Sutton, M. Clowes, M. Martyn-St James (2021)

O. Mutlu, S. Ghose, J. Gómez-Luna, R. Ausavarungnirun, A modern primer on processing in memory, in Emerging computing: from devices to systems: looking beyond Moore and Von Neumann. (Springer, 2022), p.171

O. Mutlu, Processing data where it makes sense in modern computing systems: Enabling in-memory computation. In: 2018 7th Mediterranean Conference on Embedded Computing (MECO), p 8 IEEE (2018)

P. Siegl, R. Buchty, M. Berekovic, Data-centric computing frontiers: a survey on processing-in-memory. In: Proceedings of the Second International Symposium on Memory Systems, pp 295 (2016)

Y.-H. Chen, T.-J. Yang, J. Emer, V. Sze, IEEE J. Emerg. Select. Top. Circ. Syst. 9(2), 292 (2019)

M. Le Gallo, Phase-change memory: device physics and application to non-von Neumann computing (ETH Zurich, 2017)

J. Woo, Device engineering strategy of Zr-doped HfO x ferroelectric memory for unconventional computing applications. In: 2021 IEEE International Symposium on Circuits and Systems (ISCAS), p 1 IEEE (2021)

D. Ielmini, Z. Wang, Y. Liu, APL Mater. 9(5), 050702 (2021)

S. Jung, H. Lee, S. Myung, H. Kim, S.K. Yoon, S.-W. Kwon et al., Nature 601(7892), 211 (2022)

P. Yao, H. Wu, B. Gao, J. Tang, Q. Zhang, W. Zhang et al., Nature 577(7792), 641 (2020)

A. Sebastian, M. Le Gallo, R. Khaddam-Aljameh, E. Eleftheriou, Nat. Nanotechnol. 15(7), 529 (2020)

G. Pedretti, E. Ambrosi, D. Ielmini, Conductance variations and their impact on the precision of in-memory computing with resistive switching memory (RRAM). In: 2021 IEEE International Reliability Physics Symposium (IRPS), p 1 IEEE (2021)

J. Büchel, A. Vasilopoulos, B. Kersting, F. Odermatt, K. Brew, I. Ok et al., Gradient descent-based programming of analog in-memory computing cores. In: IEEE International Electron Devices Meeting (2022)

S. Thomann, C. Li, C. Zhuo, O. Prakash, X. Yin, X. S. Hu et al., On the reliability of in-memory computing: Impact of temperature on ferroelectric TCAM. In: 2021 IEEE 39th VLSI Test Symposium (VTS), p 1 IEEE (2021)

M. Zhao, B. Gao, J. Tang, H. Qian, H. Wu, Appl. Phys. Rev. 7(1), 011301 (2020)

J.J. Yang, D.B. Strukov, D.R. Stewart, Nat. Nanotechnol. 8(1), 13 (2013)

G. Singh, L. Chelini, S. Corda, A. J. Awan, S. Stuijk, R. Jordans et al., A review of near-memory computing architectures: opportunities and challenges. In: 2018 21st Euromicro Conference on Digital System Design (DSD), p 608 IEEE (2018)

G. Singh, L. Chelini, S. Corda, A.J. Awan, S. Stuijk, R. Jordans et al., Microprocess. Microsyst. 71, 102868 (2019)

B. Sun, Y. Chen, G. Zhou, Z. Cao, C. Yang, J. Du et al., ACS Nano 18(1), 14 (2023)

W. Haensch, T. Gokmen, R. Puri, Proc. IEEE 107(1), 108 (2018)

Y. Xi, B. Gao, J. Tang, A. Chen, M.-F. Chang, X.S. Hu et al., Proc. IEEE 109(1), 14 (2020)

F. Staudigl, F. Merchant, R. Leupers, IEEE Des. Test 39(2), 90 (2021)

M. Jung, V. Gaddam, S. Jeon, Nano Convergence 9(1), 1 (2022)

M. Kang, S.K. Gonugondla, N.R. Shanbhag, Proc. IEEE 108(12), 2251 (2020)

C.-J. Jhang, C.-X. Xue, J.-M. Hung, F.-C. Chang, M.-F. Chang, IEEE Trans. Circ. Syst. I Regul. Pap. 68(5), 1773 (2021)

D. Kim, C. Yu, S. Xie, Y. Chen, J.-Y. Kim, B. Kim et al., IEEE J. Emerg. Select. Top. Circ. Syst. 12, 338–353 (2022)

E.J. Choi, I. Choi, C. Jeon, G. Yun, D. Yi, S. Ha et al., IEEE Solid-State Circ. Lett. 5, 234 (2022)

Z. Wang, H. Wu, G.W. Burr, C.S. Hwang, K.L. Wang, Q. Xia et al., Nat. Rev. Mater. 5(3), 173 (2020)

G. Pedretti, D. Ielmini, Electronics 10(9), 1063 (2021)

J. Del Valle, J.G. Ramírez, M.J. Rozenberg, I.K. Schuller, J. Appl. Phys. 124(21), 211101 (2018)

X. Hong, D.J. Loy, P.A. Dananjaya, F. Tan, C. Ng, W. Lew, J. Mater. Sci. 53(12), 8720 (2018)

Y. Li, Z. Wang, R. Midya, Q. Xia, J.J. Yang, J. Phys. D Appl. Phys. 51(50), 503002 (2018)

A. Sebastian, M. Le Gallo, G.W. Burr, S. Kim, M. BrightSky, E. Eleftheriou, J. Appl. Phys. 124(11), 111101 (2018)

A. Sebastian, M. Le Gallo, E. Eleftheriou, J. Phys. D Appl. Phys. 52(44), 443002 (2019)

M. Le Gallo, A. Sebastian, J. Phys. D Appl. Phys. 53(21), 213002 (2020)

Z. Li, T. Wang, J. Yu, J. Meng, Y. Liu, H. Zhu et al., Adv. Electron. Mater. 8, 2200951 (2022)

N. Xu, T. Park, K.J. Yoon, C.S. Hwang, Phys. Status Solidi (RRL) Rapid Res. Lett. 15(9), 2100208 (2021)

NVIDIA A100 TENSOR CORE GPU [White paper]. 3 (2021)

G. Van der Sande, D. Brunner, M.C. Soriano, Nanophotonics 6(3), 561 (2017)

M. Davies, A. Wild, G. Orchard, Y. Sandamirskaya, G.A.F. Guerra, P. Joshi et al., Proc. IEEE 109(5), 911 (2021)

W.C. Abraham, O.D. Jones, D.L. Glanzman, NPJ Sci. Learn. 4(1), 9 (2019)

F.A. Azevedo, L.R. Carvalho, L.T. Grinberg, J.M. Farfel, R.E. Ferretti, R.E. Leite et al., J. Comp. Neurol. 513(5), 532 (2009)

K. Roy, A. Jaiswal, P. Panda, Nature 575(7784), 607 (2019)

E. Bullmore, O. Sporns, Nat. Rev. Neurosci. 13(5), 336 (2012)

G.W. Burr, R.M. Shelby, A. Sebastian, S. Kim, S. Kim, S. Sidler et al., Adv. Phys. X 2(1), 89 (2017)

F. Ottati, C. Gao, Q. Chen, G. Brignone, M. R. Casu, J. K. Eshraghian et al. arXiv preprint https://arXiv.org/2306.15749 (2023)

M. Bouvier, A. Valentian, T. Mesquida, F. Rummens, M. Reyboz, E. Vianello et al., ACM J. Emerg. Technol. Comput. Syst. (JETC) 15(2), 1 (2019)

L. Wang, X. Fang, D. Liu, S. Duan, Front. Neurosci. 374, 853010 (2022)

J.-Q. Yang, R. Wang, Z.-P. Wang, Q.-Y. Ma, J.-Y. Mao, Y. Ren et al., Nano Energy 74, 104828 (2020)

S.R. Bauers, M.B. Tellekamp, D.M. Roberts, B. Hammett, S. Lany, A.J. Ferguson et al., Nanotechnology 32(37), 372001 (2021)

S. Furber, J. Neural Eng. 13(5), 051001 (2016)

C.D. James, J.B. Aimone, N.E. Miner, C.M. Vineyard, F.H. Rothganger, K.D. Carlson et al., Biol. Inspired Cogn. Architect. 19, 49 (2017)

C. Frenkel, D. Bol, G. Indiveri, Proc. IEEE (2023). https://doi.org/10.1109/JPROC.2023.3273520

S. Yu, Proc. IEEE 106(2), 260 (2018)

D.S. Jeong, C.S. Hwang, Adv. Mater. 30(42), 1704729 (2018)

C.-H. Kim, S. Lim, S.Y. Woo, W.-M. Kang, Y.-T. Seo, S.-T. Lee et al., Nanotechnology 30(3), 032001 (2018)

Y. Shimeng, Proc. IEEE 106, 260 (2018)

I. Chakraborty, A. Jaiswal, A. Saha, S. Gupta, K. Roy, Appl. Phys. Rev. 7(2), 021308 (2020)

J. Park, Electronics 9(9), 1414 (2020)

Y. Zhang, Z. Wang, J. Zhu, Y. Yang, M. Rao, W. Song et al., Appl. Phys. Rev. 7(1), 011308 (2020)

D. Ielmini, Microelectron. Eng. 190, 44 (2018)

X. Zhang, A. Huang, Q. Hu, Z. Xiao, P. Chu, K. Physica Status Solidi (a) 215(13), 1700875 (2018)

S. Kumar, X. Wang, J.P. Strachan, Y. Yang, W.D. Lu, Nat. Rev. Mater. 1, 575–591 (2022)

W. Zhang, R. Mazzarello, M. Wuttig, E. Ma, Nat. Rev. Mater. 4(3), 150 (2019)

S. Oh, H. Hwang, I. Yoo, APL Mater. 7(9), 091109 (2019)

A. Sengupta, K. Roy, Appl. Phys. Express 11(3), 030101 (2018)

J. Grollier, D. Querlioz, K. Camsari, K. Everschor-Sitte, S. Fukami, M.D. Stiles, Nat. Electron. 3(7), 360 (2020)

J. Grollier, D. Querlioz, M.D. Stiles, Proc. IEEE 104(10), 2024 (2016)

J. Cai, L. Zhang, B. Fang, W. Lv, B. Zhang, G. Finocchio et al., Appl. Phys. Lett. 114(19), 192402 (2019)

Z. Kuncic, T. Nakayama, Adv. Phys.: X 6(1), 1894234 (2021)

G. Cao, P. Meng, J. Chen, H. Liu, R. Bian, C. Zhu et al., Adv. Funct. Mater. 31(4), 2005443 (2021)

B. Lu, Y. Xia, Y. Ren, M. Xie, L. Zhou, G. Vinai et al., Adv. Sci. 11, 2305277 (2024)

H.E. Lee, J.H. Park, T.J. Kim, D. Im, J.H. Shin, D.H. Kim et al., Adv. Func. Mater. 28(32), 1801690 (2018)

Y. van De Burgt, A. Melianas, S.T. Keene, G. Malliaras, A. Salleo, Nat. Electron. 1(7), 386 (2018)

M. Schneider, E. Toomey, G. Rowlands, J. Shainline, P. Tschirhart, K. Segall, Supercond. Sci. Technol. 35(5), 053001 (2022)

D.J. Gauthier, E. Bollt, A. Griffith, W.A. Barbosa, Nat. Commun. 12(1), 1 (2021)

M. Lukoševičius, H. Jaeger, Comput. Sci. Rev. 3(3), 127 (2009)

J.T. Connor, R.D. Martin, L.E. Atlas, IEEE Trans. Neural Netw. 5(2), 240 (1994)

S. Hochreiter, Int. J. Uncertain. Fuzziness Knowl.-Based Syst. 6(02), 107 (1998)

R. Pascanu, T. Mikolov, Y. Bengio, On the difficulty of training recurrent neural networks. In: International conference on machine learning, p 1310 PMLR (2013)

Jaeger, H. Advances in neural information processing systems, 15 (2002)

W. Maass, T. Natschläger, H. Markram, Neural Comput. 14(11), 2531 (2002)

A. Amir, B. Taba, D. Berg, T. Melano, J. McKinstry, C. Di Nolfo et al., A low power, fully event-based gesture recognition system. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 7243 (2017)

Y. Zhong, J. Tang, X. Li, X. Liang, Z. Liu, Y. Li et al., Nat. Electron. 5(10), 672 (2022)

M. Romera, P. Talatchian, S. Tsunegi, F. Abreu Araujo, V. Cros, P. Bortolotti et al., Nature 563(7730), 230 (2018)

Y. Usami, B. van de Ven, D.G. Mathew, T. Chen, T. Kotooka, Y. Kawashima et al., Adv. Mater. 33(48), 2102688 (2021)

G. Tanaka, T. Yamane, J.B. Héroux, R. Nakane, N. Kanazawa, S. Takeda et al., Neural Netw. 115, 100 (2019)

K. Nakajima, Jpn. J. Appl. Phys. 59(6), 060501 (2020)

A. Jalalvand, G. Van Wallendael, R. Van de Walle, Real-time reservoir computing network-based systems for detection tasks on visual contents. In: 2015 7th International Conference on Computational Intelligence, Communication Systems and Networks, p 146 IEEE (2015)

T. Chen et al., Nature 577(7790), 341 (2020)

Y. Yi, Y. Liao, B. Wang, X. Fu, F. Shen, H. Hou et al., Microprocess. Microsyst. 46, 175 (2016)

S. Nichele, J. H. Jensen, D. Laketic, O. R. Lykkebø, G. Tufte, Reservoir computing in-materio: emergence and control in unstructured and structured materials, 87 (2021)

A. Bala, I. Ismail, R. Ibrahim, S.M. Sait, IEEE Access 6, 58012 (2018)

J. Cao, X. Zhang, H. Cheng, J. Qiu, X. Liu, M. Wang et al., Nanoscale 14(2), 289 (2022)

D. Kleyko, D. Rachkovskij, E. Osipov, A. Rahimi, ACM Comput. Surv. 55(9), 1 (2023)

H. Amrouch, P. R. Genssler, M. Imani, M. Issa, X. Jiao, W. Mohammad et al., Beyond von Neumann era: brain-inspired hyperdimensional computing to the rescue. In: Proceedings of the 28th Asia and South Pacific Design Automation Conference, p 553 (2023)

G. Karunaratne, M. Le Gallo, G. Cherubini, L. Benini, A. Rahimi, A. Sebastian, Nat. Electron. 3(6), 327 (2020)