Abstract

Measurements of normalized differential cross sections as functions of the multiplicity and kinematic variables of charged-particle tracks from the underlying event in top quark and antiquark pair production are presented. The measurements are performed in proton-proton collisions at a center-of-mass energy of 13\(~\text {Te}\text {V}\), and are based on data collected by the CMS experiment at the LHC in 2016 corresponding to an integrated luminosity of 35.9\(~\text {fb}^{-1}\). Events containing one electron, one muon, and two jets from the hadronization and fragmentation of \(\mathrm {b}\) quarks are used. These measurements characterize, for the first time, properties of the underlying event in top quark pair production and show no deviation from the universality hypothesis at energy scales typically above twice the top quark mass.


1 Introduction

At the LHC, top quark and antiquark pairs (\({\mathrm {t}\overline{\mathrm {t}}}\)) are dominantly produced in the scattering of the proton constituents via quantum chromodynamics (QCD) at an energy scale (Q) of about two times the \(\mathrm {t}\) quark mass (\(m_\mathrm {t}\)). The properties of the \(\mathrm {t}\) quark can be studied directly from its decay products, as it decays before hadronizing. Mediated by the electroweak interaction, the \(\mathrm {t}\) quark decay yields a \(\mathrm {W}\) boson and a quark, the latter carrying the QCD color charge of the mother particle. Given the large branching fraction for the decay into a bottom quark, \(\mathcal {B}(\mathrm {t}\rightarrow \mathrm {W}\mathrm {b})=0.957\pm 0.034\) [1], in this analysis we assume that each \(\mathrm {t}\) or \(\overline{\mathrm {t}}\) quark yields a corresponding bottom (\(\mathrm {b}\)) or antibottom (\(\overline{\mathrm {b}}\)) quark in its decay. Other quarks may also be produced, in a color-singlet state, if a \(\mathrm {W}\rightarrow \mathrm {q} \overline{\mathrm {q}} ^\prime \) decay occurs. Being colored, these quarks will fragment and hadronize giving rise to an experimental signature with jets. Thus, when performing precision measurements of \(\mathrm {t}\) quark properties at hadron colliders, an accurate description of the fragmentation and hadronization of the quarks from the hard scatter process as well as of the “underlying event” (UE), defined below, is essential. First studies of the fragmentation and hadronization of the \(\mathrm {b}\) quarks in \({\mathrm {t}\overline{\mathrm {t}}}\) events have been reported in Refs. [2, 3]. In this paper, we present the first measurement of the properties of the UE in \({\mathrm {t}\overline{\mathrm {t}}}\) events at a scale \(Q\ge 2m_\mathrm {t}\).

The UE is defined as any hadronic activity that cannot be attributed to the particles stemming from the hard scatter, in this case from the \({\mathrm {t}\overline{\mathrm {t}}}\) decays. Because of energy-momentum conservation, the UE constitutes the recoil against the \({\mathrm {t}\overline{\mathrm {t}}}\) system. In this study, the hadronization products of initial- and final-state radiation (ISR and FSR) that cannot be associated with the particles from the \({\mathrm {t}\overline{\mathrm {t}}}\) decays are probed as part of the UE, even if they can be partially modeled by perturbative QCD. The main contribution to the UE comes from the color exchanges between the beam particles and is modeled in terms of multiparton interactions (MPI), color reconnection (CR), and beam-beam remnants (BBR), whose model parameters can be tuned to minimum bias and Drell–Yan (DY) data.

The study of the UE in \({\mathrm {t}\overline{\mathrm {t}}}\) events provides a direct test of its universality at higher energy scales than those probed in minimum bias or DY events. This is relevant as a direct probe of CR, which is needed to confine the initial QCD color charge of the \(\mathrm {t}\) quark into color-neutral states. CR mainly occurs between one of the products of the fragmentation of the \(\mathrm {b}\) quark from the \(\mathrm {t}\) quark decay and the proton remnants. This is expected to introduce an ambiguity in the origin of some of the final-state particles in a \(\mathrm {b}\) quark jet [4,5,6]. The impact of these ambiguities on the measurement of \(\mathrm {t}\) quark properties is evaluated through phenomenological models that need to be tuned to the data. Recent examples of the impact of different model parameters on \(m_\mathrm {t}\) can be found in Refs. [7, 8].

The analysis is performed using final states where both of the \(\mathrm {W}\) bosons decay to leptons, yielding one electron and one muon with opposite charge sign, and the corresponding neutrinos. In addition, two \(\mathrm {b}\) jets are required in the selection, as expected from the \({\mathrm {t}\overline{\mathrm {t}}} \rightarrow (\mathrm {e}\nu \mathrm {b})(\mu \nu \mathrm {b})\) decay. This final state is chosen because of its expected high purity and because the products of the hard process can be distinguished with high efficiency and small contamination from objects not associated with \(\mathrm {t}\) quark decays, e.g., jets from ISR.

After discussing the experimental setup in Sect. 2, and the signal and background modeling in Sect. 3, we present the strategy employed to select the events in Sect. 4 and to measure the UE contribution in each selected event in Sect. 5. The measurements are corrected to a particle-level definition using the method described in Sect. 6 and the associated systematic uncertainties are discussed in Sect. 7. Finally, in Sect. 8, the results are discussed and compared to predictions from different Monte Carlo (MC) simulations. The measurements are summarized in Sect. 9.

2 The CMS detector

The central feature of the CMS apparatus is a superconducting solenoid of 6\(~\text {m}\) internal diameter, providing a magnetic field of 3.8\(~\text {T}\) parallel to the beam direction.

Within the solenoid volume are a silicon pixel and strip tracker, a lead tungstate crystal electromagnetic calorimeter (ECAL), and a brass and scintillator hadron calorimeter (HCAL), each composed of a barrel and two endcap sections. A preshower detector, consisting of two planes of silicon sensors interleaved with about three radiation lengths of lead, is located in front of the endcap regions of the ECAL. Hadron forward calorimeters, using steel as an absorber and quartz fibers as the sensitive material, extend the pseudorapidity coverage provided by the barrel and endcap detectors from \(|\eta | = 3.0\) to 5.2. Muons are detected in the window \(|\eta |<2.4\) in gas-ionization detectors embedded in the steel flux-return yoke outside the solenoid.

Charged-particle trajectories with \(|\eta |<2.5\) are measured by the tracker system. The particle-flow algorithm [9] is used to reconstruct and identify individual particles in an event, with an optimized combination of information from the various elements of the CMS detector. The energy of the photons is directly obtained from the ECAL measurement, corrected for zero-suppression effects. The energy of the electrons is determined from a combination of the electron momentum at the primary interaction vertex as determined by the tracker, the energy of the corresponding ECAL cluster, and the energy sum of all bremsstrahlung photons spatially compatible with originating from the electron track. The energy of the muons is obtained from the curvature of the corresponding track. The energy of charged hadrons is determined from a combination of their momentum measured in the tracker and the matching ECAL and HCAL energy deposits, corrected for zero-suppression effects and for the response function of the calorimeters to hadronic showers. Finally, the energy of neutral hadrons is obtained from the corresponding corrected ECAL and HCAL energies.

Events of interest are selected using a two-tiered trigger system [10]. The first level, composed of custom hardware processors, uses information from the calorimeters and muon detectors to select events at a rate of around 100\(~\text {kHz}\) within a time interval of less than 4\(~\upmu \text {s}\). The second level, known as the high-level trigger, consists of a farm of processors running a version of the full event reconstruction software optimized for fast processing, and reduces the event rate to around 1\(~\text {kHz}\) before data storage.

A more detailed description of the CMS detector, together with a definition of the coordinate system used and the relevant kinematic variables, can be found in Ref. [11].

3 Signal and background modeling

This analysis is based on proton-proton (\(\mathrm {p}\mathrm {p}\)) collision data at a center-of-mass energy \(\sqrt{s}=13~\text {Te}\text {V} \), collected by the CMS detector in 2016 and corresponding to an integrated luminosity of \(35.9{~\text {fb}^{-1}} \) [12].

The \({\mathrm {t}\overline{\mathrm {t}}}\) process is simulated with the powheg (v2) generator in the heavy quark production (hvq) mode [13,14,15]. The NNPDF3.0 next-to-leading-order (NLO) parton distribution functions (PDFs) with the strong coupling parameter \(\alpha _S =0.118\) at the \(\mathrm {Z}\) boson mass scale (\(M_\mathrm {Z}\)) [16] are used in the matrix-element (ME) calculation. The renormalization and factorization scales, \(\mu _\mathrm{R}\) and \(\mu _\mathrm{F}\), are set to \(m_{\mathrm{T}} =\sqrt{\smash [b]{m_\mathrm {t}^2+p_{\mathrm{T}} ^2}}\), where \(m_\mathrm {t}=172.5~\text {Ge}\text {V} \) and \(p_{\mathrm{T}}\) is the transverse momentum in the \({\mathrm {t}\overline{\mathrm {t}}}\) rest frame. Parton showering is simulated using pythia8 (v8.219) [17] and the CUETP8M2T4 UE tune [18]. The CUETP8M2T4 tune is based on the CUETP8M1 tune [19] but uses a lower value of \(\alpha _S ^{\mathrm{ISR}}(M_\mathrm {Z})=0.1108\) in the parton shower (PS). This value leads to a better description of jet multiplicities in \({\mathrm {t}\overline{\mathrm {t}}}\) events at \(\sqrt{s}=8~\text {Te}\text {V} \) [20]. The leading-order (LO) version of the same NNPDF3.0 PDF set is used in the PS and MPI simulation in the CUETP8M2T4 tune. The cross section used for the \({\mathrm {t}\overline{\mathrm {t}}}\) simulation is \(832^{+20}_{-29}~(\text {scale})\pm 35(\text {PDF}+\alpha _S)~\text {pb} \), computed at next-to-next-to-leading-order (NNLO) plus next-to-next-to-leading-logarithmic accuracy [21].

Throughout this paper, data are compared to the predictions of different generator settings for the \({\mathrm {t}\overline{\mathrm {t}}}\) process. Table 1 summarizes the main characteristics of the setups and abbreviations used in the paper. Among other UE properties, CR and MPI are modeled differently in the alternative setups considered, hence the interest in comparing them to the data. Three different signal ME generators are used: powheg, MadGraph 5_amc@nlo (v2.2.2) with the FxFx merging scheme [22, 23] for jets from the ME calculations and PS, and sherpa (v2.2.4) [24]. The latter is used in combination with OpenLoops (v1.3.1) [25], and with the CS parton shower based on the Catani–Seymour dipole subtraction scheme [26]. In addition, two different herwig PS versions are used and interfaced with powheg: herwig++ [27] with the EE5C UE tune [28] and the CTEQ6 (L1) [29] PDF set, and herwig 7 [27, 30] with its default tune and the MMHT2014 (LO) [31] PDF set.

Additional variations of the Pw+Py8 sample are used to illustrate the sensitivity of the measurements to different parameters of the UE model. A supplementary table, presented in the appendix, details the parameters that have been changed with respect to the CUETP8M2T4 tune in these additional variations. The variations include extreme models that highlight separately the contributions of MPI and CR to the UE, fine-grained variations of different CR models [5, 32], an alternative MPI model based on the “Rope hadronization” framework describing Lund color strings overlapping in the same area [33, 34], variations of the choice of \(\alpha _S (M_\mathrm {Z})\) in the parton shower, and a variation of the values that constitute the CUETP8M2T4 tune, according to their uncertainties.

Background processes are simulated with several generators. The \(\mathrm {W}\mathrm {Z}\), \(\mathrm {W}\)+jets, and \(\mathrm {Z}\mathrm {Z}\rightarrow 2\ell 2q\) (where \(\ell \) denotes any of the charged leptons e/\(\mu \)/\(\tau \)) processes are simulated at NLO using MadGraph 5_amc@nlo with the FxFx merging. Drell–Yan production with dilepton invariant mass, \(m(\ell \ell )\), greater than 50\(~\text {Ge}\text {V}\) is simulated at LO with MadGraph 5_amc@nlo, using the so-called MLM matching scheme [35] for jet merging. The powheg (v2) program is also used to simulate the \(\mathrm {W}\mathrm {W}\) and \(\mathrm {Z}\mathrm {Z}\rightarrow 2\ell 2\nu \) processes [36, 37], while powheg (v1) is used to simulate the \(\mathrm {t}\mathrm {W}\) process [38]. The single top quark t-channel background is simulated at NLO using powheg (v2) and MadSpin contained in MadGraph 5_amc@nlo (v2.2.2) [39, 40]. The residual \({\mathrm {t}\overline{\mathrm {t}}}\)+V backgrounds, where \(\mathrm {V}=\mathrm {W}\) or \(\mathrm {Z}\), are generated at NLO using MadGraph 5_amc@nlo. The cross sections of the DY and \(\mathrm {W}\)+jets processes are normalized to the NNLO predictions computed using fewz (v3.1.b2) [41], and the single top quark processes are normalized to the approximate NNLO prediction [42]. Processes containing two vector bosons (hereafter referred to as dibosons) are normalized to the NLO predictions computed with MadGraph 5_amc@nlo. The exception is the \(\mathrm {W}\mathrm {W}\) process, for which the NNLO prediction [43] is used.

All generated events are processed through the Geant4-based [44,45,46] CMS detector simulation and the standard CMS event reconstruction. Additional simultaneous \(\mathrm {p}\mathrm {p}\) collisions (pileup) are included in the simulations. Their multiplicity distribution matches the one observed in data, i.e., about 23 interactions per bunch crossing, on average.

4 Event reconstruction and selection

The selection targets events in which each \(\mathrm {W}\) boson decays to a charged lepton and a neutrino. Data are selected online with single-lepton and dilepton triggers. The particle flow (PF) algorithm [9] is used for the reconstruction of final-state objects. The offline event selection is similar to the one described in Ref. [47]. At least one PF charged lepton candidate with \(p_{\mathrm{T}} >25~\text {Ge}\text {V} \) and another one with \(p_{\mathrm{T}} >20~\text {Ge}\text {V} \), both having \(|\eta |<2.5\), are required. The two leptons must have opposite charges and an invariant mass \(m(\ell ^\pm \ell ^\mp )>12~\text {Ge}\text {V} \). When extra leptons are present in the event, the dilepton candidate is built from the highest \(p_{\mathrm{T}}\) leptons in the event. Events with \(\mathrm {e}^\pm \mu ^\mp \) in the final state are used for the main analysis, while \(\mathrm {e}^\pm \mathrm {e}^\mp \) and \(\mu ^\pm \mu ^\mp \) events are used to derive the normalization of the DY background. The simulated events are corrected for the differences between data and simulation in the efficiencies of the trigger, lepton identification, and lepton isolation criteria. The corrections are derived with \(\mathrm {Z}\rightarrow \mathrm {e}^\pm \mathrm {e}^\mp \) and \(\mathrm {Z}\rightarrow \mu ^\pm \mu ^\mp \) events using the “tag-and-probe” method [48] and are parameterized as functions of the \(p_{\mathrm{T}}\) and \(\eta \) of the leptons.
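The dilepton requirements above can be summarized as a short selection function. This is a hedged sketch, not CMS software. The `Lepton` record, its field names, and the helpers `pair_mass` and `select_dilepton` are hypothetical. Leptons are treated as massless when computing \(m(\ell \ell )\), and the trigger, identification, and isolation requirements are omitted.

```python
import math
from dataclasses import dataclass


@dataclass
class Lepton:
    """Hypothetical reconstructed-lepton record (not a CMS data format)."""
    flavor: str   # "e" or "mu"
    charge: int   # +1 or -1
    pt: float     # transverse momentum in GeV
    eta: float
    phi: float


def pair_mass(a, b):
    """Invariant mass of two leptons, neglecting the lepton masses."""
    m2 = 2.0 * a.pt * b.pt * (math.cosh(a.eta - b.eta) - math.cos(a.phi - b.phi))
    return math.sqrt(max(m2, 0.0))


def select_dilepton(leptons):
    """Return (channel, leading, subleading), or None if the event fails the cuts."""
    # Leptons within |eta| < 2.5, ordered by decreasing pT.
    cands = sorted((l for l in leptons if abs(l.eta) < 2.5),
                   key=lambda l: l.pt, reverse=True)
    if len(cands) < 2:
        return None
    lead, sub = cands[0], cands[1]        # the two highest-pT leptons form the pair
    if lead.pt <= 25.0 or sub.pt <= 20.0:
        return None
    if lead.charge * sub.charge >= 0:     # opposite charges required
        return None
    if pair_mass(lead, sub) <= 12.0:      # m(ll) > 12 GeV
        return None
    # "emu" feeds the main analysis; "ee" and "mumu" are used for the DY normalization.
    channel = "".join(sorted((lead.flavor, sub.flavor)))
    return channel, lead, sub
```

Taking the two highest-\(p_{\mathrm{T}}\) leptons before applying the 25 and 20\(~\text {Ge}\text {V}\) thresholds is equivalent to requiring "at least one lepton above 25\(~\text {Ge}\text {V}\) and another above 20\(~\text {Ge}\text {V}\)". If the second-highest lepton fails its threshold, no lower-ranked lepton can pass it.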

Jets are clustered using the anti-\(k_{\mathrm{T}}\) jet finding algorithm [49, 50] with a distance parameter of 0.4 and all the reconstructed PF candidates in the event. The charged hadron subtraction algorithm is used to mitigate the contribution from pileup to the jets [51]. At least two jets with \(p_{\mathrm{T}} >30~\text {Ge}\text {V} \), \(|\eta |<2.5\) and identified by a \(\mathrm {b}\)-tagging algorithm are required. The \(\mathrm {b}\)-tagging is based on a “combined secondary vertex” algorithm [52] characterized by an efficiency of about 66%, corresponding to misidentification probabilities for light quark and \(\mathrm {c}\) quark jets of 1.5 and 18%, respectively. A \(p_{\mathrm{T}}\)-dependent scale factor is applied to the simulations in order to reproduce the efficiency of this algorithm, as measured in data.

The reconstructed vertex with the largest value of summed physics-object \(p_{\mathrm{T}} ^2\) is taken to be the primary \(\mathrm {p}\mathrm {p}\) interaction vertex. Here the physics objects are of two kinds. The first are jets, clustered using the jet finding algorithm [49, 50] with the tracks assigned to the vertex as inputs. The second is the associated missing transverse momentum, taken as the negative vector sum of the \(p_{\mathrm{T}}\) of those jets. Elsewhere in this paper, the missing transverse momentum, \(p_{\mathrm{T}} ^{\mathrm{miss}}\), denotes the magnitude of the negative vector sum of the momenta of all reconstructed PF candidates in an event, projected onto the plane perpendicular to the direction of the proton beams.

All backgrounds are estimated from simulation, with the exception of the DY background normalization. The latter is estimated using the so-called \(R_{\mathrm{out/in}}\) method [53], in which events with same-flavor leptons are used to normalize the yield of \(\mathrm {e}\mu \) pairs from DY production of \(\tau \) lepton pairs. The normalization of the simulation is estimated from the number of events in the data within a 15\(~\text {Ge}\text {V}\) window around the \(\mathrm {Z}\) boson mass [53]. For \(\mathrm {e}\mu \) events, we use the geometric mean of the scale factors determined for \(\mathrm {e}\mathrm {e}\) and \(\mu \mu \) events. This method yields a scale factor of \(1.3\pm 0.4\) relative to the simulated prediction, with statistical and systematic uncertainties added in quadrature. The systematic uncertainty is estimated from the differences in the scale factor between events with 0 and 1 \(\mathrm {b}\)-tagged jets in the same-flavor channels.
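The combination of the same-flavor scale factors into one for \(\mathrm {e}\mu \) events is simple arithmetic and can be sketched as follows. The uncertainty propagation assumes that the \(\mathrm {e}\mathrm {e}\) and \(\mu \mu \) inputs are uncorrelated. That is an assumption made only for this illustration; the quoted \(1.3\pm 0.4\) also includes the systematic component described above. The function name is hypothetical, and the numbers in the example are illustrative rather than the measured ones.

```python
import math


def emu_dy_scale_factor(sf_ee, err_ee, sf_mumu, err_mumu):
    """Geometric mean of the ee and mumu DY scale factors, with its uncertainty.

    Assumes uncorrelated inputs: the relative uncertainties add in quadrature
    and are halved by the square root of the product.
    """
    sf = math.sqrt(sf_ee * sf_mumu)
    rel = 0.5 * math.hypot(err_ee / sf_ee, err_mumu / sf_mumu)
    return sf, sf * rel
```

For example, illustrative inputs of 1.21 and 1.44 combine to \(\sqrt{1.21\times 1.44}=1.32\).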

We select a total of 52 645 \(\mathrm {e}\mu \) events with an expected purity of 96%. The data agree with the expected yields within 2.2%, a value smaller than the uncertainty in the integrated luminosity alone, 2.5% [12]. The \(\mathrm {t}\mathrm {W}\) events are expected to constitute 90% of the total background.

In the simulation, the selection is mimicked at the particle level with the techniques described in Ref. [54]. Jets and leptons are defined at the particle level with the same conventions as adopted by the rivet framework [55]. The procedure ensures that the selections and definitions of the objects at particle level are consistent with those used in the rivet routines. A brief description of the particle-level definitions follows:

-

prompt charged leptons (i.e., not produced as a result of hadron decays) are reconstructed as “dressed” leptons with nearby photon recombination using the anti-\(k_{\mathrm{T}}\) algorithm with a distance parameter of 0.1;

-

jets are clustered with the anti-\(k_{\mathrm{T}}\) algorithm with a distance parameter of 0.4 using all particles remaining after removing both the leptons from the hard process and the neutrinos;

-

the flavor of a jet is identified by including \({\mathrm {B}}\) hadrons in the clustering.

Using these definitions, the fiducial region of this analysis is specified by the same requirements on leptons and jets that are applied offline (at reconstruction level). Simulated events are categorized as fulfilling only the reconstruction-level requirements, only the particle-level requirements, or both. A simulated event that passes only the reconstruction-level selection is assigned to the "misidentified signal" category. Such an event does not contribute to the fiducial region defined in the analysis and is therefore treated as a background process. In most bins of each analyzed distribution, the fraction of signal events passing both the reconstruction- and particle-level selections is estimated to be about 80%, while the fraction of misidentified signal events is estimated to be less than 10%.
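The categorization of simulated events can be sketched as a mapping from the two selection flags to a category. The category labels and function names are hypothetical. Computing the fractions relative to all events that pass at least one of the two selections is an assumption of this sketch, since the text does not specify the denominator.

```python
from collections import Counter


def categorize(passes_reco, passes_particle):
    """Map the selection flags of a simulated ttbar event to a category."""
    if passes_reco and passes_particle:
        return "both"               # fills the migration matrix
    if passes_reco:
        return "misidentified"      # outside the fiducial region: treated as background
    if passes_particle:
        return "not_reconstructed"  # fiducial, but lost at reconstruction level
    return "rejected"


def category_fractions(events):
    """Fraction of each category among events passing at least one selection.

    `events` is an iterable of (passes_reco, passes_particle) boolean pairs.
    """
    counts = Counter(categorize(r, p) for r, p in events)
    counts.pop("rejected", None)
    total = sum(counts.values())
    return {cat: n / total for cat, n in counts.items()} if total else {}
```

The "not_reconstructed" events correspond to those recorded in the extra row of the migration matrix discussed in Sect. 6.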

5 Characterization of the underlying event

In order to isolate the UE activity in data, the contribution from both pileup and the hard process itself must be identified and excluded from the analysis. The contamination from pileup events is expected to yield soft particles in time with the hard process, as well as tails in the energy deposits from out-of-time interactions. The contamination from the hard process is expected to be associated with the two charged leptons and two \(\mathrm {b}\) jets originating from the \({\mathrm {t}\overline{\mathrm {t}}}\) decay chain.

To minimize the contribution from these sources, we use the properties of the reconstructed PF candidates in each event. The track associated with each charged PF candidate is required to be compatible with originating from the primary vertex. This condition reduces the pileup contamination of the charged-particle collection to a negligible level. A simple association by proximity in z to the primary vertex of the event is expected to yield a pileup-robust, high-purity selection. For the purpose of this analysis, all charged PF candidates are required to satisfy the following requirements:

-

\(p_{\mathrm{T}} >900~\text {Me}\text {V} \) and \(|\eta |<2.1\);

-

the associated track must either have been used in the fit of the primary vertex or be closer in z to the primary vertex than to any other reconstructed vertex in the event.

After selecting the charged PF candidates, we identify two subsets. The first contains those used in the clustering of the two \(\mathrm {b}\)-tagged jets. The second contains those matching the two charged lepton candidates within a cone of radius \(\varDelta R=\sqrt{\smash [b]{(\varDelta \eta )^2+(\varDelta \phi )^2}}=0.05\), where \(\phi \) is the azimuthal angle in radians. Three kinds of PF candidates are removed from the analysis: those failing the kinematic requirements, those associated with a vertex other than the primary one, and those matched to the charged leptons or \(\mathrm {b}\)-tagged jets. The UE analysis proceeds using the remaining charged PF candidates. Figure 1 shows, for a simulated \({\mathrm {t}\overline{\mathrm {t}}}\) event, the contributions from charged and neutral PF candidates, from the charged component of the pileup, and from the hard process. The charged PF candidates used in the study of the UE are shown after applying the selection described above.

Fig. 1: Distribution of all PF candidates reconstructed in a Pw+Py8 simulated \({\mathrm {t}\overline{\mathrm {t}}}\) event in the \(\eta \)–\(\phi \) plane. Only particles with \(p_{\mathrm{T}} >900~\text {Me}\text {V} \) are shown, with a marker whose area is proportional to the particle \(p_{\mathrm{T}}\). The fiducial region in \(\eta \) is represented by the dashed lines.
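The selection of the charged particles attributed to the UE can be sketched as a filter over PF candidates. This is a hedged illustration under stated assumptions. The `PFCand` record and its fields, in particular the precomputed vertex distances and the b-jet membership flag, are hypothetical stand-ins for information that the real reconstruction provides in other forms. Momenta are in GeV, so 900\(~\text {Me}\text {V}\) becomes 0.9.

```python
import math
from dataclasses import dataclass


@dataclass
class PFCand:
    """Hypothetical PF-candidate record; field names are illustrative only."""
    pt: float            # GeV
    eta: float
    phi: float
    charge: int
    in_pv_fit: bool      # track used in the primary-vertex fit
    dz_pv: float         # |z distance| to the primary vertex
    dz_other: float      # smallest |z distance| to any other vertex (math.inf if none)
    in_bjet: bool        # clustered into one of the two selected b-tagged jets


def delta_r(eta1, phi1, eta2, phi2):
    """Angular distance, with the azimuthal difference wrapped into [-pi, pi)."""
    dphi = (phi1 - phi2 + math.pi) % (2.0 * math.pi) - math.pi
    return math.hypot(eta1 - eta2, dphi)


def select_ue_charged(cands, leptons):
    """Keep the charged candidates attributed to the UE.

    `leptons` holds the (eta, phi) of the two selected charged leptons.
    """
    selected = []
    for c in cands:
        if c.charge == 0:
            continue
        if c.pt <= 0.9 or abs(c.eta) >= 2.1:              # kinematic requirements
            continue
        if not (c.in_pv_fit or c.dz_pv < c.dz_other):     # vertex association
            continue
        if c.in_bjet:                                     # hard process: b jets
            continue
        if any(delta_r(c.eta, c.phi, le, lp) < 0.05 for le, lp in leptons):
            continue                                      # hard process: leptons
        selected.append(c)
    return selected
```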

Various characteristics, such as the multiplicity of the selected charged particles, the flux of momentum, and the topology or shape of the event, have different sensitivities to the modeling of the recoil, to the contributions from MPI and CR, and to other parameters.

The first set of observables chosen in this analysis is related to the multiplicity and momentum flux in the event:

-

charged-particle multiplicity: \(N_{\mathrm{ch}}\);

-

magnitude of the \(p_{\mathrm{T}}\) of the charged particle recoil system: \(|{\vec {p}}_{\mathrm{T}} |=|{\sum _{i=1}^{N_{\mathrm{ch}}}} \vec {p}_{\mathrm {T},i} |\);

-

scalar sum of the \(p_{\mathrm{T}}\) (or \(p_z\)) of charged particles: \(\sum p_\mathrm{k}={\sum _{i=1}^{N_{\mathrm{ch}}}} |\vec {p}_{\mathrm {k},i} |\), where \(\mathrm {k=T}\) or z;

-

average \(p_{\mathrm{T}}\) (or \(p_z\)) per charged particle: computed from the ratio between the scalar sum and the charged multiplicity: \(\overline{p_{\mathrm{T}}}\) (or \(\overline{p_z}\)).
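The first set of observables follows directly from the momenta of the selected particles. A minimal sketch, assuming the particles are given as hypothetical (px, py, pz) tuples in GeV, could read as follows. Setting the averages to zero when no particle is selected is a convention chosen only for this illustration.

```python
import math


def flux_observables(particles):
    """Multiplicity and momentum-flux observables of the selected UE charged particles.

    `particles` is a list of (px, py, pz) tuples in GeV.
    """
    n_ch = len(particles)
    sum_px = sum(px for px, _, _ in particles)
    sum_py = sum(py for _, py, _ in particles)
    sum_pt = sum(math.hypot(px, py) for px, py, _ in particles)   # scalar sum of pT
    sum_pz = sum(abs(pz) for _, _, pz in particles)              # scalar sum of |pz|
    return {
        "n_ch": n_ch,
        "recoil_pt": math.hypot(sum_px, sum_py),   # |vector sum of pT|
        "sum_pt": sum_pt,
        "sum_pz": sum_pz,
        # Averages are set to 0 for events with no selected particle (convention chosen here).
        "avg_pt": sum_pt / n_ch if n_ch else 0.0,
        "avg_pz": sum_pz / n_ch if n_ch else 0.0,
    }
```

Two back-to-back particles illustrate the difference between the recoil and the scalar sum. For (3, 4, 1) and (−3, −4, −2)\(~\text {Ge}\text {V}\), the recoil \(|{\vec {p}}_{\mathrm{T}} |\) vanishes, while \(\sum p_{\mathrm{T}} = 10~\text {Ge}\text {V}\).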

The second set of observables characterizes the UE shape and is computed from the so-called linearized sphericity tensor [56, 57]:

\[S^{\mu \nu }=\frac{\sum _i p_i^\mu p_i^\nu /|\vec {p}_i|}{\sum _i |\vec {p}_i|},\]

where the index i runs over the particles associated with the UE, as for the previous variables, and the \(\mu \) and \(\nu \) indices refer to the (x, y, z) components of the particle momenta. The eigenvalues (\(\lambda _i\)) of \(S^{\mu \nu }\) sum to unity and are sorted in decreasing order, i.e., \(\lambda _1\) is the largest. They are used to compute the following observables [58]:

-

Aplanarity: \(A=\frac{3}{2}\lambda _3\) measures the \(p_{\mathrm{T}} \) component out of the event plane, defined by the two leading eigenvectors. Isotropic (planar) events are expected to have \(A=1/2\,(0)\).

-

Sphericity: \(S=\frac{3}{2}(\lambda _2+\lambda _3)\) measures the \(p_{\mathrm{T}} ^2\) with respect to the axis of the event. An isotropic (dijet) event is expected to have \(S=1\,(0)\).

-

\({C}=3(\lambda _1\lambda _2+\lambda _1\lambda _3+\lambda _2\lambda _3)\) identifies three-jet events (it tends to 0 for dijet events).

-

\({D}=27\lambda _1\lambda _2\lambda _3\) identifies four-jet events (it tends to 0 otherwise).
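The shape observables can be computed by diagonalizing the linearized sphericity tensor. The sketch below uses NumPy's symmetric eigensolver, `numpy.linalg.eigvalsh`. The function name and the (N, 3) input layout are assumptions of the illustration. Particles with zero momentum would cause a division by zero, but they cannot occur after the \(p_{\mathrm{T}} >900~\text {Me}\text {V}\) requirement.

```python
import numpy as np


def shape_observables(momenta):
    """Aplanarity, sphericity, C, and D from the linearized sphericity tensor.

    `momenta` has shape (N, 3) with the (px, py, pz) of the UE charged particles.
    """
    p = np.asarray(momenta, dtype=float)
    mag = np.linalg.norm(p, axis=1)                        # |p_i|
    # S^{mu nu} = sum_i p_i^mu p_i^nu / |p_i|, normalized by sum_i |p_i| (unit trace).
    s = (p.T / mag) @ p / mag.sum()
    l1, l2, l3 = np.sort(np.linalg.eigvalsh(s))[::-1]      # lambda_1 >= lambda_2 >= lambda_3
    return {
        "aplanarity": 1.5 * l3,
        "sphericity": 1.5 * (l2 + l3),
        "C": 3.0 * (l1 * l2 + l1 * l3 + l2 * l3),
        "D": 27.0 * l1 * l2 * l3,
    }
```

Three limiting cases serve as checks on the formulas above:

- Six unit vectors along \(\pm x\), \(\pm y\), and \(\pm z\) give \(\lambda _i=1/3\), hence \(A=1/2\) and \(S=C=D=1\).
- A back-to-back pair gives \(\lambda =(1,0,0)\) and all four observables vanish.
- Four vectors in a plane give \(A=0\).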

Further insight can be gained by studying the evolution of the two sets of observables in different categories of the \({\mathrm {t}\overline{\mathrm {t}}}\) system kinematic quantities. The categories chosen below are sensitive to the recoil or to the energy scale of the hard process, and the variables defining them are expected to be reconstructed with very good resolution. Additionally, these variables minimize the migration of events between categories due to resolution effects.

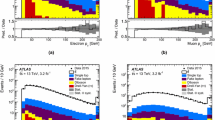

The dependence of the UE on the recoil system is studied in categories that are defined according to the multiplicity of additional jets with \(p_{\mathrm{T}} >30~\text {Ge}\text {V} \) and \(|\eta |<2.5\), excluding the two selected \(\mathrm {b}\)-tagged jets. The categories with 0, 1, or more than 1 additional jet are used for this purpose. The additional jet multiplicity is partially correlated with the charged-particle multiplicity and helps to factorize the contribution from ISR. The distribution of the number of additional jets is shown in Fig. 2 (upper left).

In addition to these categories, the transverse momentum of the dilepton system, \({\vec {p}}_{\mathrm{T}} (\ell \ell )\), is used, as it preserves some correlation with the transverse momentum of the \({\mathrm {t}\overline{\mathrm {t}}}\) system and, consequently, with the recoil of the system. The \({\vec {p}}_{\mathrm{T}} (\ell \ell )\) direction is used to define three regions in the transverse plane of each event. The regions are denoted as "transverse" (\(60^\circ<|\varDelta \phi |<120^\circ \)), "away" (\(|\varDelta \phi |>120^\circ \)), and "toward" (\(|\varDelta \phi |<60^\circ \)). Each reconstructed particle in an event is assigned to one of these regions according to the difference \(\varDelta \phi \) between its azimuthal angle and that of the \({\vec {p}}_{\mathrm{T}} (\ell \ell )\) vector. Figure 3 illustrates this classification for a typical event. The classification is expected to enhance the sensitivity of the measurements to the contributions from ISR, MPI, and CR in different regions. In addition, the magnitude \(p_{\mathrm{T}} (\ell \ell )\) is used to categorize the events, and its distribution is shown in Fig. 2 (upper right). The \(p_{\mathrm{T}} (\ell \ell )\) variable is reconstructed with a resolution better than 3%.
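The region assignment can be sketched in a few lines. The helper names are hypothetical. The handling of particles lying exactly on the 60° or 120° boundaries is an arbitrary choice of this sketch, since the text defines the regions with strict inequalities.

```python
import math


def dilepton_phi(pt1, phi1, pt2, phi2):
    """Azimuthal angle of the vector sum of the two lepton transverse momenta."""
    px = pt1 * math.cos(phi1) + pt2 * math.cos(phi2)
    py = pt1 * math.sin(phi1) + pt2 * math.sin(phi2)
    return math.atan2(py, px)


def delta_phi_deg(phi1, phi2):
    """|Delta phi| in degrees, folded into [0, 180]."""
    d = abs(phi1 - phi2) % (2.0 * math.pi)
    if d > math.pi:
        d = 2.0 * math.pi - d
    return math.degrees(d)


def ue_region(phi_particle, phi_ll):
    """Assign a particle to the toward, transverse, or away region.

    Exactly 60 degrees goes to "transverse" and exactly 120 degrees to "away"
    (an arbitrary boundary convention chosen here).
    """
    dphi = delta_phi_deg(phi_particle, phi_ll)
    if dphi < 60.0:
        return "toward"
    if dphi < 120.0:
        return "transverse"
    return "away"
```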

Fig. 2: Distributions of the variables used to categorize the study of the UE. Upper left: multiplicity of additional jets (\(p_{\mathrm{T}} >30~\text {Ge}\text {V} \)). Upper right: \(p_{\mathrm{T}} (\ell \ell )\). Lower: \(m(\ell \ell )\). The distributions in data are compared to the sum of the expectations for the signal and backgrounds. The shaded band represents the uncertainty associated with the integrated luminosity and the theoretical value of the \({\mathrm {t}\overline{\mathrm {t}}}\) cross section.

Lastly, the dependence of the UE on the energy scale of the hard process is characterized by measuring it in different categories of the \(m(\ell \ell )\) variable. This variable is correlated with the invariant mass of the \({\mathrm {t}\overline{\mathrm {t}}}\) system, but not with its \(p_{\mathrm{T}}\). The \(m(\ell \ell )\) distribution is shown in Fig. 2 (lower). A resolution better than 2% is expected in the measurement of \(m(\ell \ell )\).

Although both \(p_{\mathrm{T}} (\ell \ell )\) and \(m(\ell \ell )\) are only partially correlated with the \({\mathrm {t}\overline{\mathrm {t}}}\) kinematic quantities, they are expected to be reconstructed with very good resolution. Because of the two escaping neutrinos, the kinematics of the \({\mathrm {t}\overline{\mathrm {t}}}\) pair can only be reconstructed using the \(p_{\mathrm{T}} ^{\mathrm{miss}}\) measurement, whose experimental resolution is poorer than that of the leptons. In addition, \(p_{\mathrm{T}} ^{\mathrm{miss}}\) is correlated with the UE activity, since it stems from the balance of all PF candidates in the transverse plane. It could therefore bias the definition of the categories and of the observables used to characterize the UE. This motivates the choice to use only dilepton-related variables.

Fig. 3: Display of the transverse momentum of the selected charged particles, the two leptons, and the dilepton pair in the transverse plane, for the same event as in Fig. 1. The length of each arrow is proportional to the particle \(p_{\mathrm{T}}\), and the dashed lines represent the regions defined relative to the \({\vec {p}}_{\mathrm{T}} (\ell \ell )\) direction. For clarity, the \(p_{\mathrm{T}}\) of the leptons has been rescaled by a factor of 0.5.

6 Corrections to the particle level

Inefficiencies of the track reconstruction due to the residual contamination from pileup, nuclear interactions in the tracker material, and accidental splittings of the primary vertex [59] are expected to cause a slight bias in the observables described above. The correction for these biases is estimated from simulation and applied to the data by means of an unfolding procedure.

At particle (generator) level, the distributions of the observables of interest are binned according to the resolutions expected from simulation, with the additional requirement that each bin contain at least 2% of the total number of events. The migration matrix (K) maps the reconstruction-level distributions to the particle-level ones. It is constructed with twice as many bins at reconstruction level as at particle level. This choice yields nearly diagonal matrices, for which the inversion is numerically stable. The matrix is extended with an additional row that counts the events failing the reconstruction-level requirements but found in the fiducial region of the analysis, i.e., passing the particle-level requirements. The inversion of the migration matrix is performed using a Tikhonov regularization procedure [60], as implemented in the TUnfoldDensity package [61]. The unfolded distribution is found by minimizing the \(\chi ^2\) function

\[\chi ^2=(y-K\lambda )^{T}V_{yy}^{-1}(y-K\lambda )+\tau ^2\Vert L(\lambda -\lambda _0)\Vert ^2,\]

where y are the observations, \(V_{yy}\) is an estimate of the covariance of y (calculated using the simulated signal sample), \(\lambda \) is the particle-level expectation, \(\Vert L(\lambda -\lambda _0)\Vert ^2\) is a penalty function (with \(\lambda _0\) being estimated from the simulated samples), and \(\tau >0\) is the so-called regularization parameter. The latter regulates how strongly the penalty term should contribute to the minimization of \(\chi ^2\). In our setup we choose the function L to be the curvature, i.e., the second derivative, of the output distribution. The chosen value of the \(\tau \) parameter is optimized for each distribution by minimizing its average global correlation coefficient [61]. Small values, i.e., \(\tau <10^{-3}\), are found for all the distributions; the global correlation coefficients are around 50%. After unfolding, the distributions are normalized to unity.
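Setting the derivative of the \(\chi ^2\) to zero gives a linear system, \((K^TV_{yy}^{-1}K+\tau ^2L^TL)\lambda =K^TV_{yy}^{-1}y+\tau ^2L^TL\lambda _0\). The plain-NumPy sketch below solves it and picks \(\tau \) by minimizing the average global correlation coefficient, \(\rho _j=\sqrt{1-1/(C_{jj}(C^{-1})_{jj})}\). It is only an illustration of the technique, not the TUnfoldDensity implementation. The extra row for non-reconstructed events, bin-width handling, and the final normalization are all left out, and every function name is hypothetical. Here K has one row per reconstruction-level bin and one column per particle-level bin.

```python
import numpy as np


def curvature_matrix(n):
    """Discrete second-derivative operator: each row applies (1, -2, 1) to adjacent bins."""
    L = np.zeros((n - 2, n))
    for i in range(n - 2):
        L[i, i:i + 3] = [1.0, -2.0, 1.0]
    return L


def unfold(y, K, Vyy, tau, lam0):
    """Closed-form minimum of (y - K lam)^T Vyy^-1 (y - K lam) + tau^2 |L (lam - lam0)|^2.

    Returns the unfolded lam and its covariance.
    """
    Vinv = np.linalg.inv(Vyy)
    L = curvature_matrix(K.shape[1])
    LtL = L.T @ L
    A = K.T @ Vinv @ K + tau**2 * LtL
    b = K.T @ Vinv @ y + tau**2 * LtL @ lam0
    lam = np.linalg.solve(A, b)
    Ainv = np.linalg.inv(A)
    cov = Ainv @ K.T @ Vinv @ K @ Ainv    # Vyy propagated through the linear solution
    return lam, cov


def avg_global_correlation(cov):
    """Mean over bins of rho_j = sqrt(1 - 1 / (C_jj * (C^-1)_jj))."""
    prod = np.diag(cov) * np.diag(np.linalg.inv(cov))
    return float(np.mean(np.sqrt(np.clip(1.0 - 1.0 / prod, 0.0, None))))


def scan_tau(y, K, Vyy, lam0, taus):
    """Return the tau in `taus` with the smallest average global correlation, and its result."""
    best = min(taus, key=lambda t: avg_global_correlation(unfold(y, K, Vyy, t, lam0)[1]))
    return best, unfold(y, K, Vyy, best, lam0)[0]
```

In this sketch the normalized result would be obtained afterwards as `lam / lam.sum()`, mirroring the normalization to unity applied after unfolding.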

The statistical coverage of the unfolding procedure is checked by means of pseudo-experiments based on independent Pw+Py8 samples. The pull of each bin in each distribution is found to be consistent with that of a standard normal distribution. The effect of the regularization term in the unfolding is checked in the data by folding the measured distributions and comparing the outcome to the originally reconstructed data. In general the folded and the original distributions agree within 1–5% in each bin, with the largest differences observed in bins with low yield.
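The coverage test can be illustrated with a simplified NumPy sketch. Gaussian pseudo-experiments are drawn around the expected reconstruction-level yields, each pseudo-experiment is unfolded, and the per-bin pulls \((\hat{\lambda }-\lambda _{\text {true}})/\sigma \) are collected. For brevity, the sketch assumes \(\tau =0\), so the unfolding reduces to a generalized least-squares fit, and a fixed, known covariance. The migration matrix and yields are hypothetical and are not taken from the Pw+Py8 samples.

```python
import numpy as np


def coverage_pulls(K, truth, n_toys=2000, seed=7):
    """Per-bin pull mean and width over Gaussian pseudo-experiments (tau = 0)."""
    rng = np.random.default_rng(seed)
    expected = K @ truth
    V_yy = np.diag(expected)               # Poisson-like covariance, fixed for all toys
    Vinv = np.linalg.inv(V_yy)
    cov = np.linalg.inv(K.T @ Vinv @ K)    # covariance of the unfolded vector
    D = cov @ K.T @ Vinv                   # linear unfolding map y -> lambda
    toys = rng.normal(expected, np.sqrt(expected), size=(n_toys, expected.size))
    unfolded = toys @ D.T                  # one unfolded vector per pseudo-experiment
    pulls = (unfolded - truth) / np.sqrt(np.diag(cov))
    return pulls.mean(axis=0), pulls.std(axis=0)


# Hypothetical 4 x 3 migration matrix (reco bins x particle-level bins)
K = np.array([[0.80, 0.10, 0.00],
              [0.10, 0.70, 0.10],
              [0.00, 0.10, 0.80],
              [0.05, 0.05, 0.05]])
truth = np.array([500.0, 300.0, 150.0])
mean, std = coverage_pulls(K, truth)
print("pull means:", np.round(mean, 3), "pull widths:", np.round(std, 3))
```

If the procedure is unbiased and its uncertainties are correctly estimated, the pull means should be close to 0 and the widths close to 1 in every bin.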

7 Systematic uncertainties

The impact of the different sources of uncertainty is evaluated by unfolding the data with alternative migration matrices, which are obtained after changing the settings in the simulations as explained below. The effect of each source of uncertainty on non-fiducial \({\mathrm {t}\overline{\mathrm {t}}}\) events is included in this estimate by updating the background prediction. The observed bin-by-bin differences are used as estimates of the uncertainty. The impact of the uncertainty in the background normalization is the only exception to this procedure, as detailed below. The covariance matrices associated with each source of uncertainty are built using the procedure described in detail in Ref. [62]. If several sub-contributions are used to estimate a source of uncertainty, the corresponding differences in each bin are treated independently, symmetrized, and used to compute individual covariance matrices, which preserve the normalization. Variations in the event yields are fully absorbed by normalizing the measured cross sections. Thus, only the sources of uncertainty that change the shapes of the distributions have a non-negligible impact.
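The following is a minimal sketch of one way to build such matrices. It assumes that each (sub-)source is fully correlated across bins, so its covariance is the outer product of the symmetrized shift vector; the detailed procedure of Ref. [62] may differ. Because the nominal and varied results are all normalized to unity, every shift vector sums to zero. Each row of the resulting matrix therefore also sums to zero, which is the sense in which the matrices preserve the normalization, and it is also why they are singular. The helper names and yields are hypothetical.

```python
import numpy as np


def normalize(h):
    """Scale a binned distribution so that its bins sum to unity."""
    h = np.asarray(h, dtype=float)
    return h / h.sum()


def source_covariance(nominal, variations):
    """Covariance matrix of one (sub-)source from its bin-by-bin shifts.

    variations: either (up, down), symmetrized as (up - down) / 2,
    or a single alternative result, whose full shift from nominal is used.
    """
    if len(variations) == 2:
        delta = 0.5 * (normalize(variations[0]) - normalize(variations[1]))
    else:
        delta = normalize(variations[0]) - normalize(nominal)
    # Fully correlated across bins; each row sums to zero for normalized shapes
    return np.outer(delta, delta)


nominal = [50.0, 30.0, 20.0]
cov_a = source_covariance(nominal, ([52.0, 29.0, 19.0], [48.0, 31.0, 21.0]))
cov_b = source_covariance(nominal, ([55.0, 28.0, 17.0],))
cov_total = cov_a + cov_b  # independent sub-contributions add
```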

7.1 Experimental uncertainties

The following experimental sources of uncertainty are considered:

- Pileup: Although pileup is included in the simulation, there is an intrinsic uncertainty in modeling its multiplicity. An uncertainty of \(\pm 4.6\%\) in the inelastic \(\mathrm {p}\mathrm {p}\) cross section is used and propagated to the event weights [63].

- Trigger and selection efficiency: The scale factors used to correct the simulation for the differences between the trigger and lepton selection efficiencies in data and simulation are varied up and down according to their uncertainties. The uncertainties in the muon track and electron reconstruction efficiencies are included in this category and added in quadrature.

- Lepton energy scale: The corrections applied to the electron energy and muon momentum scales are varied separately, according to their uncertainties. The corrections and uncertainties are obtained using methods similar to those described in Refs. [64, 65]. These variations lead to a small migration of events between the different \(p_{\mathrm{T}} (\ell \ell )\) or \(m(\ell \ell )\) categories used in the analysis.

- Jet energy scale: A \(p_{\mathrm{T}}\)- and \(\eta \)-dependent parameterization of the jet energy scale is used to vary the calibration of the jets in the simulation. The corrections and uncertainties are obtained using methods similar to those described in Ref. [51]. The effect of these variations is similar to that described for the lepton energy scale uncertainty; in this case the migration of events occurs between different jet multiplicity categories.

- Jet energy resolution: The energy of each jet is further smeared up or down, depending on its \(p_{\mathrm{T}}\) and \(\eta \), relative to the central value of the resolution measured in data. The data-to-simulation difference in resolution is measured using methods similar to those described in Ref. [51]. The main effect of altering the jet energy resolution is similar to that described for the jet energy scale uncertainty.

- \(\mathrm {b}\) tagging and misidentification efficiencies: The scale factors used to correct for the difference in performance between data and simulation are varied according to their uncertainties and depending on the flavor of the jet [52]. The main effect of this variation is to move jets into or out of the candidate \(\mathrm {b}\) jet sample.

- Background normalization: The impact of the uncertainty in the normalization of the backgrounds is estimated by computing the difference with respect to the nominal result when these contributions are not subtracted from data. This difference is expected to cover the uncertainty in the normalization of the main backgrounds, i.e., DY and the \(\mathrm {t}\mathrm {W}\) process, and the uncertainty in the normalization of the \({\mathrm {t}\overline{\mathrm {t}}}\) events that are expected to pass the reconstruction-level requirements but fail the generator-level ones. The total expected background contribution is at the level of 8–10%, depending on the bin. The impact of this uncertainty is estimated to be \(<5\%\).

- Track reconstruction efficiency: The track reconstruction efficiency is found to be above 90%. It is monitored using muon candidates from \(\mathrm {Z}\rightarrow \mu ^+ \mu ^- \) decays and the ratio of the four-body \(\mathrm {D}^0 \rightarrow \mathrm {K}^-\pi ^+ \pi ^- \pi ^+ \) decay to the two-body \(\mathrm {D}^0 \rightarrow \mathrm {K}^-\pi ^+ \) decay. The latter ratio is used to determine a data-to-simulation scale factor (\(SF_{\mathrm{trk}}\)) as a function of the pseudorapidity of the tracks, separately for the different data-taking periods used in this analysis. The envelope of the \(SF_{\mathrm{trk}}\) values, with uncertainties included, ranges from 0.89 to 1.17 [66], and it adequately covers the residual variations observed in the charged-particle multiplicity between different data-taking periods. The impact of varying \(SF_{\mathrm{trk}}\) by its uncertainty is estimated by using \(|1-SF_{\mathrm{trk}} |\) as the probability to remove a reconstructed track from the event or to promote an unmatched generator-level charged particle to a reconstructed track, depending on whether \(SF_{\mathrm{trk}}<1\) or \(>1\), respectively. Different migration matrices, reflecting the different tracking efficiencies obtained by varying \(SF_{\mathrm{trk}}\) within its uncertainty, are obtained by this method and used to unfold the data. Although the impact is nonnegligible for variables such as \(N_{\mathrm{ch}}\) or \(\sum p_{\mathrm{T}}\), it is very small (\(<1\%\)) for variables such as \(\overline{p_{\mathrm{T}}}\) and \(\overline{p_z}\).
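The track-removal and track-promotion procedure described in the last item can be sketched per event as follows. This is a minimal Python illustration that assumes tracks and unmatched generator-level particles are plain lists. The function name and inputs are hypothetical. In the analysis, the modified events are used to fill the alternative migration matrices.

```python
import random


def vary_tracks(reco_tracks, unmatched_gen, sf_trk, rng):
    """Emulate a tracking-efficiency scale factor sf_trk in one simulated event.

    sf_trk < 1: drop each reconstructed track with probability 1 - sf_trk.
    sf_trk > 1: promote each unmatched generator-level charged particle to a
                reconstructed track with probability sf_trk - 1.
    """
    p = abs(1.0 - sf_trk)
    if sf_trk < 1.0:
        return [t for t in reco_tracks if rng.random() >= p]
    promoted = [g for g in unmatched_gen if rng.random() < p]
    return list(reco_tracks) + promoted


rng = random.Random(2016)
tracks = [f"trk{i}" for i in range(1000)]
unmatched = [f"gen{i}" for i in range(1000)]
down = vary_tracks(tracks, unmatched, 0.90, rng)     # roughly 900 tracks kept
up = vary_tracks(tracks, unmatched, 1.10, rng)       # roughly 100 tracks added
nominal = vary_tracks(tracks, unmatched, 1.00, rng)  # unchanged
```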

7.2 Theoretical uncertainties

The following theoretical uncertainties are considered:

- Scale choices: The renormalization and factorization scales, \(\mu _\mathrm{R}\) and \(\mu _\mathrm{F}\), are varied independently in the ME by factors of 0.5 and 2, excluding the extreme combinations in which \((\mu _\mathrm{R},\mu _\mathrm{F})\) are scaled by (2, 0.5) or (0.5, 2), according to the prescription described in Refs. [67, 68].

- Resummation scale and \(\alpha _S\) used in the parton shower: In powheg, the real emission cross section is scaled by a damping function, parameterized by the so-called \(h_{\mathrm{damp}}\) variable [13,14,15]. This parameter controls the ME-PS matching and regulates the high-\(p_{\mathrm{T}}\) radiation by suppressing the real emissions generated by powheg by a factor of \(h_{\mathrm{damp}}^2/(p_{\mathrm{T}} ^2+h_{\mathrm{damp}}^2)\). In the simulation used to derive the migration matrices, \(h_{\mathrm{damp}}=1.58~m_\mathrm {t}\), and the uncertainty in this value is evaluated by changing it by +42% or -37%, a range that is determined from the jet multiplicity measurements in \({\mathrm {t}\overline{\mathrm {t}}}\) events at \(\sqrt{s}=8~\text {Te}\text {V} \) [20]. Likewise, the uncertainty associated with the choice of \(\alpha _S ^{\mathrm{ISR}}(M_\mathrm {Z})=0.1108\) for space-like and \(\alpha _S ^{\mathrm{FSR}}(M_\mathrm {Z})=0.1365\) for time-like showers in the CUETP8M2T4 tune is evaluated by varying the scale at which it is computed, \(M_\mathrm {Z}\), by a factor of 2 or 1/2.

- UE model: The dependence of the migration matrix on the UE model assumed in the simulation is tested by varying the parameters that model the MPI and CR within the uncertainty envelope associated with the CUETP8M2T4 tune. The uncertainty envelope has been determined using the same methods as described in Ref. [19]. In the following, these will be referred to as UE up/down variations. The dependence on the CR model is further tested using models other than the nominal one, which is the MPI-based CR model where the \({\mathrm {t}\overline{\mathrm {t}}}\) decay products are excluded from reconnections to the UE. A dedicated sample in which reconnections to the resonant decay products are enabled (hereafter designated as ERDon) is used to evaluate possible differences in the unfolded results. In addition, alternative CR models are tested: one sample uses the “gluon move” model [5], in which gluons can be moved to another string, and another uses the “QCD-based” model with string formation beyond LO [32]. In both samples, reconnections involving the resonant decay products are enabled. The envelope of the differences is considered as a systematic uncertainty.

- \(\mathrm {t}\) quark \(p_{\mathrm{T}}\): The effect of reweighting the simulated \(\mathrm {t}\) quark \(p_{\mathrm{T}}\) (\(p_{\mathrm{T}} (\mathrm {t})\)) distribution to match the one reconstructed from data [69, 70] is added as an additional uncertainty. This has the most noticeable effect on the fraction of events that do not pass the reconstruction-level requirements and migrate out of the fiducial phase space.

- \(\mathrm {t}\) quark mass: An additional uncertainty related to the value of \(m_\mathrm {t}=172.5~\text {Ge}\text {V} \) used in the simulations is evaluated by varying this parameter by \(\pm 0.5~\text {Ge}\text {V} \) [71].
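Two of the numerical prescriptions in the list above can be made concrete with a short Python sketch. The first is the powheg damping factor \(h_{\mathrm{damp}}^2/(p_{\mathrm{T}} ^2+h_{\mathrm{damp}}^2)\) for the nominal and varied \(h_{\mathrm{damp}}\) values. The second is the enumeration of the \((\mu _\mathrm{R},\mu _\mathrm{F})\) scale factors with the two extreme combinations excluded. The constants come from the text; the function and variable names are illustrative.

```python
M_TOP = 172.5  # GeV, top quark mass used in the simulation


def damping_factor(pt, hdamp):
    """Suppression of powheg real emissions: hdamp^2 / (pT^2 + hdamp^2)."""
    return hdamp**2 / (pt**2 + hdamp**2)


hdamp = {
    "nominal": 1.58 * M_TOP,
    "up": 1.58 * M_TOP * 1.42,     # +42%
    "down": 1.58 * M_TOP * 0.63,   # -37%
}
for name, h in hdamp.items():
    print(f"{name:8s} hdamp = {h:6.1f} GeV, "
          f"damping at pT = 100 GeV: {damping_factor(100.0, h):.3f}")

# (muR, muF) multipliers: factors 0.5, 1, 2, excluding (2, 0.5) and (0.5, 2)
SCALE_POINTS = [(r, f) for r in (0.5, 1.0, 2.0) for f in (0.5, 1.0, 2.0)
                if {r, f} != {0.5, 2.0}]
```

The damping factor equals 1/2 when \(p_{\mathrm{T}} = h_{\mathrm{damp}}\). Increasing \(h_{\mathrm{damp}}\) therefore shifts the suppression of real emissions to higher \(p_{\mathrm{T}}\).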

Any possible uncertainty from the choice of the hadronization model is expected to be significantly smaller than the theory uncertainties described above. This has been explicitly tested by comparing the results at reconstruction level and after unfolding the data with the Pw+Py8 and Pw+Hw++ migration matrices. The latter relies on a different hadronization model, but it also folds in other modeling differences, such as the UE tune and the parton shower. Thus it can only be used as a test setup to validate the measurement.

7.3 Summary of systematic uncertainties

The uncertainties in the normalized differential cross sections are dominated by the systematic uncertainties, although in some bins of the distributions the statistical uncertainties are a large component. The experimental uncertainties generally have a small impact; the most relevant is the track reconstruction efficiency for the \(N_{\mathrm{ch}}\), \(\sum p_{\mathrm{T}}\), \(\sum p_{z}\), and \(|{\vec {p}}_{\mathrm{T}} |\) observables. Other observables are affected at a sub-percent level by this uncertainty. Theory uncertainties affect the measurements more significantly, a fact that underlines the need for better tuning of the model parameters.

Event shape observables are found to be the most robust against this uncertainty, while \(\sum p_{\mathrm{T}}\), \(\sum p_{z}\), and \(|{\vec {p}}_{\mathrm{T}} |\) are the ones that suffer more from it. Other sources of theoretical uncertainty typically have a smaller effect.

To further illustrate the impact of the different sources on the observables considered, we list in Table 2 the uncertainties in the average of each observable. Only systematic uncertainties that change the average of at least one of the observables by 0.5% or more are included in the table. The total uncertainty in the average of a given quantity ranges from 1 to 8%, which allows a discriminating comparison with the model predictions.

8 Results

8.1 Inclusive distributions

The normalized differential cross sections measured as functions of \(N_{\mathrm{ch}}\), \(\sum p_{\mathrm{T}} \), \(\overline{p_{\mathrm{T}}}\), \(|{\vec {p}}_{\mathrm{T}} |\), \(\sum p_{z}\), \(\overline{p_z}\), sphericity, aplanarity, C, and D are shown in Figs. 4, 5, 6, 7, 8, 9, 10, 11, 12 and 13, respectively. The distributions are obtained after unfolding the background-subtracted data and normalizing the result to unity. The result is compared to the simulations, whose settings are summarized in Table 1 and in the appendix. For the predictions, the statistical uncertainty is represented as an error bar. In the specific case of the Pw+Py8 setup, the error bar represents the envelope obtained by varying the main parameters of the CUETP8M2T4 tune, according to their uncertainties. The envelope includes the variation of the CR model, \(\alpha _S ^{\mathrm{ISR}}(M_\mathrm {Z})\), \(\alpha _S ^{\mathrm{FSR}}(M_\mathrm {Z})\), the \(h_{\mathrm{damp}}\) parameter, and the \(\mu _\mathrm{R}/\mu _\mathrm{F}\) scales at the ME level. Thus, the uncertainty band represented for the Pw+Py8 setup should be interpreted as the theory uncertainty in that prediction. For each distribution we give, in addition, the ratio between different predictions and the data.

Fig. 4. Normalized differential cross section as a function of \(N_{\mathrm{ch}}\). In the upper panel, the data (colored boxes) are compared to the nominal Pw+Py8 predictions and to the expectations obtained from Pw+Py8 setups with varied \(\alpha _S ^{\mathrm{ISR}}(M_\mathrm {Z})\) or \(\alpha _S ^{\mathrm{FSR}}(M_\mathrm {Z})\) (markers). The lower panels show the ratio between each model tested (see text) and the data. In both cases the shaded (hatched) band represents the total (statistical) uncertainty of the data, while the error bars represent either the total uncertainty of the Pw+Py8 setup, computed as described in the text, or the statistical uncertainty of the other MC simulation setups.

Fig. 5. Normalized differential cross section as a function of \(\sum p_{\mathrm{T}}\), compared to the predictions of different models. The conventions of Fig. 4 are used.

Fig. 6. Normalized differential cross section as a function of \(\overline{p_{\mathrm{T}}}\), compared to the predictions of different models. The conventions of Fig. 4 are used.

Fig. 7. Normalized differential cross section as a function of \(|{\vec {p}}_{\mathrm{T}} |\), compared to the predictions of different models. The conventions of Fig. 4 are used.

Fig. 8. Normalized differential cross section as a function of \(\sum p_{z}\), compared to the predictions of different models. The conventions of Fig. 4 are used.

Fig. 9. Normalized differential cross section as a function of \(\overline{p_z}\), compared to the predictions of different models. The conventions of Fig. 4 are used.

Fig. 10. Normalized differential cross section as a function of the sphericity variable, compared to the predictions of different models. The conventions of Fig. 4 are used.

Fig. 11. Normalized differential cross section as a function of the aplanarity variable, compared to the predictions of different models. The conventions of Fig. 4 are used.

Fig. 12. Normalized differential cross section as a function of the C variable, compared to the predictions of different models. The conventions of Fig. 4 are used.

Fig. 13. Normalized differential cross section as a function of the D variable, compared to the predictions of different models. The conventions of Fig. 4 are used.

In \({\mathrm {t}\overline{\mathrm {t}}}\) events the UE contribution is determined to have typically \(\mathcal {O}(20)\) charged particles with \(\overline{p_{\mathrm{T}}} \sim \overline{p_z} \approx 2~\text {Ge}\text {V} \), vectorially summing to a recoil of about 10\(~\text {Ge}\text {V}\). The distribution of the UE activity is anisotropic (as the sphericity is \(<1\)), close to planar (as the aplanarity peaks at low values of \(\approx \)0.1), and peaks at around 0.75 in the C variable, which identifies three-jet topologies. The D variable, which identifies the four-jet topology, is instead found to have values closer to 0. The three-prong configuration in the energy flux of the UE described by the C variable can be identified with two of the eigenvectors of the linearized sphericity tensor being correlated with the direction of the \(\mathrm {b}\)-tagged jets, and the third one being determined by energy conservation. When an extra jet with \(p_{\mathrm{T}} >30~\text {Ge}\text {V} \) is selected, we measure a change in the profile of the event shape variables, with average values lower by 20–40% with respect to the distributions in which no extra jet is found. Thus when an extra jet is present, the event has a dijet-like topology instead of an isotropic shape.
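To make the event shape variables concrete, the sketch below computes them from the eigenvalues \(\lambda _1\ge \lambda _2\ge \lambda _3\) of the linearized sphericity tensor \(S^{ij}=\left(\sum _k p_k^i p_k^j/|\vec {p}_k|\right)/\sum _k|\vec {p}_k|\). It uses the common definitions \(S=\frac{3}{2}(\lambda _2+\lambda _3)\), \(A=\frac{3}{2}\lambda _3\), \(C=3(\lambda _1\lambda _2+\lambda _1\lambda _3+\lambda _2\lambda _3)\), and \(D=27\lambda _1\lambda _2\lambda _3\). We assume here that all four variables are built from the linearized tensor; the precise conventions of the analysis may differ in detail. Three idealized configurations illustrate the ranges:

- an isotropic event gives S = C = D = 1;
- a symmetric planar three-prong event gives C = 0.75 and D = 0, matching the three-jet-like peak discussed above;
- a back-to-back pair gives zero for all four variables.

```python
import numpy as np


def event_shapes(momenta):
    """Sphericity, aplanarity, C and D from the linearized sphericity tensor.

    momenta: array-like of shape (n, 3) holding (px, py, pz) of each particle.
    """
    p = np.asarray(momenta, dtype=float)
    mag = np.linalg.norm(p, axis=1)
    # S^{ij} = sum_k p_k^i p_k^j / |p_k|, divided by sum_k |p_k| (trace = 1)
    tensor = (p.T / mag) @ p / mag.sum()
    # Clip tiny negative eigenvalues from rounding, then sort in decreasing order
    eig = np.clip(np.linalg.eigvalsh(tensor), 0.0, None)
    l1, l2, l3 = np.sort(eig)[::-1]
    return {
        "sphericity": 1.5 * (l2 + l3),
        "aplanarity": 1.5 * l3,
        "C": 3.0 * (l1 * l2 + l1 * l3 + l2 * l3),
        "D": 27.0 * l1 * l2 * l3,
    }


isotropic = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
s3 = np.sqrt(3.0) / 2.0
three_prong = [(1, 0, 0), (-0.5, s3, 0), (-0.5, -s3, 0)]   # planar, 120 degrees apart
back_to_back = [(0, 0, 5), (0, 0, -5)]

for name, event in [("isotropic", isotropic), ("three-prong", three_prong),
                    ("back-to-back", back_to_back)]:
    print(name, {k: round(float(v), 3) for k, v in event_shapes(event).items()})
```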

The results obtained with pythia8 for the parton shower simulation show negligible dependence on the ME generator with which it is interfaced, i.e., Pw+Py8 and MG5_aMC yield similar results. In all distributions the contribution from MPI is strong: switching off this component in the simulation has a drastic effect on the predictions for all the variables analyzed. Color reconnection effects are more subtle to identify in the data. In the inclusive distributions, CR effects are needed to improve the theory accuracy for \(\overline{p_{\mathrm{T}}} <3~\text {Ge}\text {V} \) or \(\overline{p_z} <5~\text {Ge}\text {V} \). The differences between the CR models tested (discussed in detail in Sect. 3) are nevertheless small and almost indistinguishable in the inclusive distributions. In general the Pw+Py8 setup is found to be in agreement with the data when the total theory uncertainty is taken into account. In most of the distributions, the variation of \(\alpha _S ^{\mathrm{FSR}}(M_\mathrm {Z})\) dominates the theory uncertainty, as it leads to the most visible changes in the UE. The other parton shower setups tested do not describe the data as accurately, but they were not tuned to the same level of detail as Pw+Py8. The Pw+Hw++ and Pw+Hw7 setups show trends with respect to the data that differ from those observed in any of the pythia8-based setups. While describing the UE event shape variables fairly well, herwig++ and herwig 7 disagree with the \(N_{\mathrm{ch}}\), \(\overline{p_{\mathrm{T}}}\), and \(\overline{p_z}\) measurements. The sherpa predictions disagree with data in most of the observables.

For each distribution the level of agreement between theory predictions and data is quantified by means of a \(\chi ^2\) variable defined as:

\[ \chi ^2=\sum _{i,j=2}^{n}\delta y_i \left( \text {Cov}^{-1}\right) _{ij}\delta y_j, \tag{3} \]

where \(\delta y_i\) (\(\delta y_j\)) are the differences between the data and the model in the i-th (j-th) bin, n represents the total number of bins, and \(\text {Cov}^{-1}\) is the inverse of the covariance matrix. Because the distributions are normalized to unity, their covariance matrix is singular (each of its rows sums to zero); it becomes invertible after removing its first row and column. In the calculation of Eq. 3 we assume that the theory uncertainties are uncorrelated with those assigned to the measurements. Table 3 summarizes the values obtained for the main models with respect to each of the distributions shown in Figs. 4, 5, 6, 7, 8, 9, 10, 11, 12 and 13, quantifying the level of agreement of each model with the measurements. Low values of \(\chi ^2\) per number of degrees of freedom (dof) are obtained for the Pw+Py8 setup when all the theory uncertainties of the model are taken into account, in particular for the event shape variables. This indicates that the theory uncertainty envelope is conservative.
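The following is a minimal NumPy sketch of this \(\chi ^2\). It assumes that the data and model are both normalized to unity and that the total covariance is supplied. The number of degrees of freedom is taken as \(n-1\), which is an assumption of this sketch. The toy covariance is multinomial-like, so its rows sum to zero, as described above. For such a covariance, the result does not depend on which bin is dropped.

```python
import numpy as np


def chi2_normalized(data, model, cov):
    """chi^2 between two distributions normalized to unity.

    The first bin is dropped because the covariance of a normalized
    distribution is singular (its rows sum to zero).
    Returns (chi2, ndof), with ndof = n - 1 assumed.
    """
    delta = np.asarray(data, dtype=float) - np.asarray(model, dtype=float)
    d = delta[1:]
    c = np.asarray(cov, dtype=float)[1:, 1:]
    return float(d @ np.linalg.solve(c, d)), d.size


p = np.array([0.4, 0.3, 0.2, 0.1])                # "measured" normalized distribution
cov = (np.diag(p) - np.outer(p, p)) / 1000.0      # multinomial-like, rows sum to zero
model = np.array([0.38, 0.32, 0.19, 0.11])
chi2, ndof = chi2_normalized(p, model, cov)
```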

8.2 Profile of the UE in different categories

The differential cross sections as functions of different observables are also measured in the event categories introduced in Sect. 5. We report the profile, i.e., the average of the measured differential cross section in each event category, and compare it to the expectations from the different simulation setups. Figures 14, 15, 16, 17, 18, 19, 20, 21, 22 and 23 summarize the results obtained. Additional results for \(\overline{p_{\mathrm{T}}}\), profiled in categories of \(p_{\mathrm{T}} (\ell \ell )\) and of jet multiplicity, are shown in Figs. 24 and 25, respectively. In all figures, the pull of the simulation with respect to the data, defined as the difference between the model and the data divided by the total uncertainty, is used to quantify the level of agreement.
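As an illustration of the profile and pull defined above, the sketch below computes the average of an observable from a normalized binned distribution, propagates its covariance linearly, and forms the pull. Using bin centers as representative values is a simplifying assumption of this sketch, as is taking the total uncertainty to be that of the data alone. The binning and numbers are hypothetical.

```python
import numpy as np


def profile_mean(bin_centers, fractions, cov):
    """Average of an observable and its uncertainty from a normalized histogram."""
    c = np.asarray(bin_centers, dtype=float)
    f = np.asarray(fractions, dtype=float)
    mean = float(c @ f)
    sigma = float(np.sqrt(c @ np.asarray(cov, dtype=float) @ c))
    return mean, sigma


def pull(model_mean, data_mean, total_unc):
    """(model - data) / total uncertainty."""
    return (model_mean - data_mean) / total_unc


centers = [5.0, 15.0, 25.0, 35.0]                  # hypothetical N_ch bin centers
data = np.array([0.1, 0.4, 0.3, 0.2])
cov = (np.diag(data) - np.outer(data, data)) / 100.0
mean_data, sigma_data = profile_mean(centers, data, cov)
model = np.array([0.1, 0.35, 0.35, 0.2])
mean_model, _ = profile_mean(centers, model, cov)
print(f"pull = {pull(mean_model, mean_data, sigma_data):+.2f}")
```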

The average charged-particle multiplicity and the averages of the momentum flux observables vary significantly when extra jets are found in the event or for higher \(p_{\mathrm{T}} (\ell \ell )\) values. The same set of variables varies very slowly as a function of \(m(\ell \ell )\). Event shape variables are mostly affected by the presence of extra jets in the event, while varying slowly as a function of \(p_{\mathrm{T}} (\ell \ell )\) or \(m(\ell \ell )\). The average sphericity increases significantly when no extra jets are present in the event, showing that the UE is slightly more isotropic in these events. A noticeable change is also observed for the other event shape variables in the same categories.

For all observables, the MPI contribution is crucial: most of the pulls are larger than 5 when MPI is switched off in the simulation. Color reconnection effects, on the other hand, are more subtle and are more relevant for \(\overline{p_{\mathrm{T}}}\), specifically when no additional jet is present in the event. This is illustrated by the larger pulls of the setup without CR in these categories. Event shape variables also show sensitivity to CR. All other variations of the UE and CR models tested yield smaller changes in the pulls than the ones discussed.

Although a high pull value of the Pw+Py8 simulation is obtained in several categories, the envelope encompasses the data when the different theory variations are taken into account. The variations of \(\alpha _S ^{\mathrm{FSR}}(M_\mathrm {Z})\) and \(\alpha _S ^{\mathrm{ISR}}(M_\mathrm {Z})\) account for the largest contribution to this envelope. As already noted in the previous section, the Pw+Hw++, Pw+Hw7, and sherpa models tend to be in worse agreement with data than Pw+Py8, indicating that further tuning of the Pw+Hw++ and Pw+Hw7 setups is needed.

Fig. 14. Average \(N_{\mathrm{ch}}\) in different event categories. The mean observed in data (boxes) is compared to the predictions from different models (markers), which are superimposed in the upper figure. The total (statistical) uncertainty of the data is represented by a shaded (hatched) area and the statistical uncertainty of the models by error bars; for the Pw+Py8 model, the error bars represent the total uncertainty (see text). The lower figure displays the pull between the different models and the data, with separate panels for different sets of models. The bands represent the interval where \(|\text {pull} |<1\). The error bar for the Pw+Py8 model represents the range of variation of the pull for the different configurations described in the text.

Fig. 15. Average \(\sum p_{\mathrm{T}}\) in different event categories. The conventions of Fig. 14 are used.

Fig. 16. Average \(\sum p_{z}\) in different event categories. The conventions of Fig. 14 are used.

Fig. 17. Average \(\overline{p_{\mathrm{T}}}\) in different event categories. The conventions of Fig. 14 are used.

Fig. 18. Average \(\overline{p_z}\) in different event categories. The conventions of Fig. 14 are used.

Fig. 19. Average \(|{\vec {p}}_{\mathrm{T}} |\) in different event categories. The conventions of Fig. 14 are used.

Fig. 20. Average sphericity in different event categories. The conventions of Fig. 14 are used.

Fig. 21. Average aplanarity in different event categories. The conventions of Fig. 14 are used.

Fig. 22. Average C in different event categories. The conventions of Fig. 14 are used.

Fig. 23. Average D in different event categories. The conventions of Fig. 14 are used.

Fig. 24. Average \(\overline{p_{\mathrm{T}}}\) in different \(p_{\mathrm{T}} (\ell \ell )\) categories. The conventions of Fig. 14 are used.

Fig. 25. Average \(\overline{p_{\mathrm{T}}}\) in different jet multiplicity categories. The conventions of Fig. 14 are used.

8.3 Sensitivity to the choice of \(\alpha _S \) in the parton shower

The sensitivity of these results to the choice of \(\alpha _S (M_\mathrm {Z})\) in the parton shower is tested by performing a scan of the \(\chi ^2\) value defined by Eq. (3) as a function of \(\alpha _S ^{\mathrm{ISR}}(M_\mathrm {Z})\) or \(\alpha _S ^{\mathrm{FSR}}(M_\mathrm {Z})\). The \(\chi ^2\) is scanned with all the other parameters of the generator fixed. A more complete treatment could only be achieved with a fully tuned UE, which lies beyond the scope of this paper. While no sensitivity is found to \(\alpha _S ^{\mathrm{ISR}}(M_\mathrm {Z})\), most observables are influenced by the choice of \(\alpha _S ^{\mathrm{FSR}}(M_\mathrm {Z})\). The most sensitive variable is found to be \(\overline{p_{\mathrm{T}}}\), and the corresponding variation of the \(\chi ^2\) function is reported in Fig. 26. A polynomial interpolation is used to determine the minimum of the scan (best fit), and the points at which the \(\chi ^2\) function increases by one unit are used to derive the 68% confidence interval (CI). The degree of the polynomial is selected by a stepwise regression based on an F-test statistic [72]. A value of \(\alpha _S ^{\mathrm{FSR}}(M_\mathrm {Z})=0.120\pm 0.006\) is obtained, which is lower than the value assumed in the Monash tune [73] and used in the CUETP8M2T4 tune. This value is compatible with those obtained from the differential cross sections measured as a function of \(\overline{p_{\mathrm{T}}}\) in different \(p_{\mathrm{T}} (\ell \ell )\) regions or in events with different additional jet multiplicities. Table 4 summarizes the results obtained. From the inclusive results, we conclude that the range of energy scales corresponding to the 5% uncertainty attained in the determination of \(\alpha _S ^{\mathrm{FSR}}(M_\mathrm {Z})\) can be approximated by a \([\sqrt{2},1/\sqrt{2}]\) variation, a considerable improvement over the canonical [2, 0.5] scale variations.
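The interpolation step can be sketched with NumPy as follows:

1. Fit a polynomial to the \(\chi ^2\) values at the simulated \(\alpha _S ^{\mathrm{FSR}}(M_\mathrm {Z})\) points.
2. Locate the minimum of the fitted curve on a fine grid.
3. Take the points where \(\chi ^2\) rises by one unit above the minimum as the bounds of the 68% CI.

The sketch makes several simplifying assumptions. The degree is fixed to four, the order quoted for the curves of Fig. 26, rather than chosen by the F-test. The interval is assumed to be a single connected region around the minimum. The scan values are synthetic.

```python
import numpy as np


def scan_interval(alphas, chi2, degree=4, n_grid=20001):
    """Best-fit value and Delta chi^2 = 1 interval from a polynomial interpolation."""
    a = np.asarray(alphas, dtype=float)
    x0, s = a.mean(), a.std()
    coeffs = np.polyfit((a - x0) / s, chi2, degree)   # centered and scaled for stability
    grid = np.linspace(a.min(), a.max(), n_grid)
    curve = np.polyval(coeffs, (grid - x0) / s)
    i_min = int(np.argmin(curve))
    inside = grid[curve <= curve[i_min] + 1.0]        # Delta chi^2 <= 1
    return grid[i_min], inside.min(), inside.max()


# Synthetic scan: a parabola with minimum 10 at 0.120 and width 0.006
alphas = np.linspace(0.100, 0.140, 9)
chi2 = ((alphas - 0.120) / 0.006) ** 2 + 10.0
best, lo, hi = scan_interval(alphas, chi2)
print(f"alpha_S^FSR(M_Z) = {best:.3f} (+{hi - best:.3f} / -{best - lo:.3f})")
```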

Fig. 26. Scan of the \(\chi ^2\) as a function of the value of \(\alpha _S ^{\mathrm{FSR}}(M_\mathrm {Z})\) employed in the Pw+Py8 simulation, when the inclusive \(\overline{p_{\mathrm{T}}}\) distribution or the \(\overline{p_{\mathrm{T}}}\) distributions measured in different regions are used. The curves result from a fourth-order polynomial interpolation between the simulated \(\alpha _S ^{\mathrm{FSR}}(M_\mathrm {Z})\) points.
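The correspondence between the roughly 5% uncertainty in \(\alpha _S ^{\mathrm{FSR}}(M_\mathrm {Z})\) and a factor-\(\sqrt{2}\) scale variation can be checked approximately with one-loop running, \(\alpha _S(kM_\mathrm {Z})=\alpha _S(M_\mathrm {Z})/[1+b_0\,\alpha _S(M_\mathrm {Z})\ln k^2]\) with \(b_0=(33-2n_f)/(12\pi )\). The one-loop approximation and \(n_f=5\) are assumptions of this sketch and are not necessarily the running used in pythia8. Applying the same function with k = 2 and 1/2 to the CUETP8M2T4 value 0.1365 shows the size of the canonical variation for comparison.

```python
import math


def alpha_s_one_loop(alpha_mz, k, n_f=5):
    """alpha_S at the scale k * M_Z, evolved from alpha_S(M_Z) at one loop."""
    b0 = (33.0 - 2.0 * n_f) / (12.0 * math.pi)
    return alpha_mz / (1.0 + b0 * alpha_mz * math.log(k * k))


for alpha_mz, k in [(0.120, math.sqrt(2.0)), (0.1365, 2.0)]:
    down = alpha_s_one_loop(alpha_mz, k)        # scale raised by k
    up = alpha_s_one_loop(alpha_mz, 1.0 / k)    # scale lowered by k
    print(f"alpha_S(M_Z) = {alpha_mz}: k = {k:.3f} -> [{down:.4f}, {up:.4f}], "
          f"relative shifts {down / alpha_mz - 1:+.1%} / {up / alpha_mz - 1:+.1%}")
```

For 0.120, the factor-\(\sqrt{2}\) variation shifts the coupling by about -5% and +5%, in line with the \(\pm 0.006\) interval obtained from the scan.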

9 Summary

The first measurement of the underlying event (UE) activity in \({\mathrm {t}\overline{\mathrm {t}}}\) dilepton events produced at hadron colliders has been reported. The measurement uses \(\sqrt{s}=13~\text {Te}\text {V} \) proton-proton collision data collected by the CMS experiment in 2016, corresponding to an integrated luminosity of 35.9\(~\text {fb}^{-1}\). Using particle-flow reconstruction, the contribution from the UE has been isolated by removing, from the set of reconstructed charged particles in each event, those associated with the decay products of the \({\mathrm {t}\overline{\mathrm {t}}}\) event candidates as well as those from pileup interactions. The measurements performed are expected to be valid for other \({\mathrm {t}\overline{\mathrm {t}}}\) final states, and can be used as a reference for complementary studies, e.g., of how different color reconnection (CR) models compare to data in the description of the jets from \(\mathrm {W}\rightarrow \mathrm {q} \overline{\mathrm {q}} '\) decays. The chosen observables and categories enhance the sensitivity to the modeling of multiparton interactions (MPI), CR, and the choice of the strong coupling at the \(\mathrm {Z}\) boson mass (\(\alpha _S ^{\mathrm{FSR}}(M_\mathrm {Z})\)) in the pythia8 parton shower Monte Carlo simulation. These parameters have a significant impact on the modeling of \({\mathrm {t}\overline{\mathrm {t}}}\) production at the LHC. In particular, the compatibility of the data with different choices of the \(\alpha _S ^{\mathrm{FSR}}(M_\mathrm {Z})\) parameter in pythia8 has been quantified, resulting in a lower value than the one considered in Ref. [73].

The majority of the distributions analyzed indicate a fair agreement between the data and the powheg +pythia8 setup with the CUETP8M2T4 tune [18], but disfavor the setups in which MPI and CR are switched off, or in which \(\alpha _S ^{\mathrm{FSR}}(M_\mathrm {Z})\) is increased. The data also disfavor the default configurations in powheg +herwig++, powheg +herwig7, and sherpa. By comparing predictions from powheg and MadGraph 5_amc@nlo, both interfaced with pythia8, it has furthermore been verified that, as expected, the choice of the next-to-leading-order matrix-element generator does not significantly affect the expected characteristics of the UE.

The present results test the hypothesis of universality in UE at an energy scale typically higher than the ones at which models have been studied. The UE model is tested up to a scale of two times the top quark mass, and the measurements in categories of dilepton invariant mass indicate that it should be valid at even higher scales. In addition, they can be used to improve the assessment of systematic uncertainties in future top quark analyses. The results obtained in this study show that a value of \(\alpha _S ^{\mathrm{FSR}}(M_\mathrm {Z})=0.120\pm 0.006\) is consistent with the data. The corresponding uncertainties translate to a variation of the renormalization scale by a factor of \(\sqrt{2}\).

Data Availability Statement

This manuscript has no associated data or the data will not be deposited. [Authors’ comment: Release and preservation of data used by the CMS Collaboration as the basis for publications is guided by the CMS policy as written in its document "CMS data preservation, re-use and open access policy" (https://cms-docdb.cern.ch/cgibin/PublicDocDB/RetrieveFile?docid=6032&filename=CMSDataPolicyV1.2.pdf&version=2).].

References

Particle Data Group, Review of particle physics. Chin. Phys. C 40, 100001 (2016). https://doi.org/10.1088/1674-1137/40/10/100001

CMS Collaboration, Measurement of the top quark mass using charged particles in pp collisions at \(\sqrt{s} = 8~\text{TeV}\). Phys. Rev. D 93, 092006 (2016). https://doi.org/10.1103/PhysRevD.93.092006. arXiv:1603.06536

ATLAS Collaboration, Measurement of jet shapes in top-quark pair events at \(\sqrt{s} = 7~\text{ TeV }\) using the ATLAS detector. Eur. Phys. J. C 73, 2676 (2013). https://doi.org/10.1140/epjc/s10052-013-2676-3. arXiv:1307.5749

T. Sjöstrand, Colour reconnection and its effects on precise measurements at the LHC, in Proceedings, 43rd International Symposium on Multiparticle Dynamics (ISMD 13). (2013). arXiv:1310.8073

S. Argyropoulos, T. Sjöstrand, Effects of color reconnection on \(\text{ t }\overline{\text{ t }}\) final states at the LHC. JHEP 11, 043 (2014). https://doi.org/10.1007/JHEP11(2014)043. arXiv:1407.6653

G. Corcella, Interpretation of the top-quark mass measurements: a theory overview, in Proceedings, 8th International Workshop on Top Quark Physics (TOP2015), Ischia, Italy, September, 2016, vol. TOP2015, p. 037. arXiv:1511.08429

G. Corcella, R. Franceschini, D. Kim, Fragmentation uncertainties in hadronic observables for top-quark mass measurements. Nucl. Phys. B 929, 485 (2018). https://doi.org/10.1016/j.nuclphysb.2018.02.012. arXiv:1712.05801

S. Ferrario Ravasio, T. Ježo, P. Nason, C. Oleari, A theoretical study of top-mass measurements at the LHC using NLO+PS generators of increasing accuracy. Eur. Phys. J. C 78, 458 (2018). https://doi.org/10.1140/epjc/s10052-018-5909-7. arXiv:1801.03944

CMS Collaboration, Particle-flow reconstruction and global event description with the CMS detector. JINST 12, P10003 (2017). https://doi.org/10.1088/1748-0221/12/10/P10003. arXiv:1706.04965

CMS Collaboration, The CMS trigger system. JINST 12, P01020 (2017). https://doi.org/10.1088/1748-0221/12/01/P01020. arXiv:1609.02366

CMS Collaboration, The CMS experiment at the CERN LHC. JINST 3, S08004 (2008). https://doi.org/10.1088/1748-0221/3/08/S08004

CMS Collaboration, CMS luminosity measurements for the 2016 data taking period. CMS Physics Analysis Summary CMS-PAS-LUM-17-001 (2017)

P. Nason, A new method for combining NLO QCD with shower Monte Carlo algorithms. JHEP 11, 040 (2004). https://doi.org/10.1088/1126-6708/2004/11/040. arXiv:hep-ph/0409146

S. Frixione, P. Nason, C. Oleari, Matching NLO QCD computations with parton shower simulations: the POWHEG method. JHEP 11, 070 (2007). https://doi.org/10.1088/1126-6708/2007/11/070. arXiv:0709.2092

S. Alioli, P. Nason, C. Oleari, E. Re, A general framework for implementing NLO calculations in shower Monte Carlo programs: the POWHEG BOX. JHEP 06, 043 (2010). https://doi.org/10.1007/JHEP06(2010)043. arXiv:1002.2581

NNPDF Collaboration, Parton distributions for the LHC Run II. JHEP 04, 040 (2015). https://doi.org/10.1007/JHEP04(2015)040. arXiv:1410.8849

T. Sjöstrand et al., An introduction to PYTHIA 8.2. Comput. Phys. Commun. 191, 159 (2015). https://doi.org/10.1016/j.cpc.2015.01.024. arXiv:1410.3012

CMS Collaboration, Investigations of the impact of the parton shower tuning in PYTHIA 8 in the modelling of \(\mathrm{t}\overline{\mathrm{t}}\) at \(\sqrt{s}=8\) and 13 TeV. CMS Physics Analysis Summary CMS-PAS-TOP-16-021 (2016)

CMS Collaboration, Event generator tunes obtained from underlying event and multiparton scattering measurements. Eur. Phys. J. C 76, 155 (2016). https://doi.org/10.1140/epjc/s10052-016-3988-x. arXiv:1512.00815

CMS Collaboration, Measurement of \(\mathrm{t}\overline{\mathrm{t}}\) production with additional jet activity, including \(\mathrm{b}\) quark jets, in the dilepton decay channel using pp collisions at \(\sqrt{s}=8~\text{TeV}\). Eur. Phys. J. C 76, 379 (2016). https://doi.org/10.1140/epjc/s10052-016-4105-x. arXiv:1510.03072

M. Czakon, A. Mitov, Top++: a program for the calculation of the top-pair cross-section at hadron colliders. Comput. Phys. Commun. 185, 2930 (2014). https://doi.org/10.1016/j.cpc.2014.06.021. arXiv:1112.5675

J. Alwall et al., The automated computation of tree-level and next-to-leading order differential cross sections, and their matching to parton shower simulations. JHEP 07, 079 (2014). https://doi.org/10.1007/JHEP07(2014)079. arXiv:1405.0301

R. Frederix, S. Frixione, Merging meets matching in MC@NLO. JHEP 12, 061 (2012). https://doi.org/10.1007/JHEP12(2012)061. arXiv:1209.6215

T. Gleisberg et al., Event generation with SHERPA 1.1. JHEP 02, 007 (2009). https://doi.org/10.1088/1126-6708/2009/02/007. arXiv:0811.4622

F. Cascioli, P. Maierhöfer, S. Pozzorini, Scattering amplitudes with open loops. Phys. Rev. Lett. 108, 111601 (2012). https://doi.org/10.1103/PhysRevLett.108.111601. arXiv:1111.5206

S. Schumann, F. Krauss, A parton shower algorithm based on Catani–Seymour dipole factorisation. JHEP 03, 038 (2008). https://doi.org/10.1088/1126-6708/2008/03/038. arXiv:0709.1027

M. Bähr et al., Herwig++ physics and manual. Eur. Phys. J. C 58, 639 (2008). https://doi.org/10.1140/epjc/s10052-008-0798-9. arXiv:0803.0883

M.H. Seymour, A. Siodmok, Constraining MPI models using \(\sigma_\text{eff}\) and recent Tevatron and LHC underlying event data. JHEP 10, 113 (2013). https://doi.org/10.1007/JHEP10(2013)113. arXiv:1307.5015

J. Pumplin et al., New generation of parton distributions with uncertainties from global QCD analysis. JHEP 07, 012 (2002). https://doi.org/10.1088/1126-6708/2002/07/012. arXiv:hep-ph/0201195

J. Bellm et al., Herwig 7.0/Herwig++ 3.0 release note. Eur. Phys. J. C 76, 196 (2016). https://doi.org/10.1140/epjc/s10052-016-4018-8. arXiv:1512.01178

L.A. Harland-Lang, A.D. Martin, P. Motylinski, R.S. Thorne, Parton distributions in the LHC era: MMHT 2014 PDFs. Eur. Phys. J. C 75, 204 (2015). https://doi.org/10.1140/epjc/s10052-015-3397-6. arXiv:1412.3989

J.R. Christiansen, P.Z. Skands, String formation beyond leading colour. JHEP 08, 003 (2015). https://doi.org/10.1007/JHEP08(2015)003. arXiv:1505.01681

C. Bierlich, G. Gustafson, L. Lönnblad, A. Tarasov, Effects of overlapping strings in pp collisions. JHEP 03, 148 (2015). https://doi.org/10.1007/JHEP03(2015)148. arXiv:1412.6259

C. Bierlich, J.R. Christiansen, Effects of color reconnection on hadron flavor observables. Phys. Rev. D 92, 094010 (2015). https://doi.org/10.1103/PhysRevD.92.094010. arXiv:1507.02091

J. Alwall et al., Comparative study of various algorithms for the merging of parton showers and matrix elements in hadronic collisions. Eur. Phys. J. C 53, 473 (2008). https://doi.org/10.1140/epjc/s10052-007-0490-5. arXiv:0706.2569

T. Melia, P. Nason, R. Rontsch, G. Zanderighi, \(\mathrm{W}^+\mathrm{W}^-\), WZ and ZZ production in the POWHEG BOX. JHEP 11, 078 (2011). https://doi.org/10.1007/JHEP11(2011)078. arXiv:1107.5051

P. Nason, G. Zanderighi, \(\mathrm{W}^+\mathrm{W}^-\), WZ and ZZ production in the POWHEG-BOX-V2. Eur. Phys. J. C 74, 2702 (2014). https://doi.org/10.1140/epjc/s10052-013-2702-5. arXiv:1311.1365

E. Re, Single-top Wt-channel production matched with parton showers using the POWHEG method. Eur. Phys. J. C 71, 1547 (2011). https://doi.org/10.1140/epjc/s10052-011-1547-z. arXiv:1009.2450

S. Alioli, P. Nason, C. Oleari, E. Re, NLO single-top production matched with shower in POWHEG: \(s\)- and \(t\)-channel contributions. JHEP 09, 111 (2009). https://doi.org/10.1088/1126-6708/2009/09/111. arXiv:0907.4076 [Erratum: JHEP 02, 011 (2010). https://doi.org/10.1007/JHEP02(2010)011]

P. Artoisenet, R. Frederix, O. Mattelaer, R. Rietkerk, Automatic spin-entangled decays of heavy resonances in Monte Carlo simulations. JHEP 03, 015 (2013). https://doi.org/10.1007/JHEP03(2013)015. arXiv:1212.3460

K. Melnikov, F. Petriello, Electroweak gauge boson production at hadron colliders through \(O(\alpha _s^2)\). Phys. Rev. D 74, 114017 (2006). https://doi.org/10.1103/PhysRevD.74.114017. arXiv:hep-ph/0609070

N. Kidonakis, Top quark production, in Proceedings, Helmholtz International Summer School on Physics of Heavy Quarks and Hadrons (HQ 2013): JINR, Dubna, Russia, July 15–28, 2013, p. 139 (2014). https://doi.org/10.3204/DESY-PROC-2013-03/Kidonakis. arXiv:1311.0283

T. Gehrmann et al., \(\mathrm{W}^+\mathrm{W}^-\) production at hadron colliders in next to next to leading order QCD. Phys. Rev. Lett. 113, 212001 (2014). https://doi.org/10.1103/PhysRevLett.113.212001. arXiv:1408.5243

GEANT4 Collaboration, Geant4—a simulation toolkit. Nucl. Instrum. Methods A 506, 250 (2003). https://doi.org/10.1016/S0168-9002(03)01368-8

GEANT4 Collaboration, Geant4 developments and applications. IEEE Trans. Nucl. Sci. 53, 270 (2006). https://doi.org/10.1109/TNS.2006.869826

GEANT4 Collaboration, Recent developments in Geant4. Nucl. Instrum. Methods A 835, 186 (2016). https://doi.org/10.1016/j.nima.2016.06.125

CMS Collaboration, Measurement of the \(\mathrm{t}\overline{\mathrm{t}}\) production cross section using events in the \(\mathrm{e}\mu\) final state in pp collisions at \(\sqrt{s} = 13~\text{TeV}\). Eur. Phys. J. C 77, 172 (2017). https://doi.org/10.1140/epjc/s10052-017-4718-8. arXiv:1611.04040

CMS Collaboration, Measurements of inclusive W and Z cross sections in pp collisions at \(\sqrt{s}=7~\text{TeV}\). JHEP 01, 080 (2011). https://doi.org/10.1007/JHEP01(2011)080. arXiv:1012.2466

M. Cacciari, G.P. Salam, G. Soyez, The anti-\(k_\mathrm{t}\) jet clustering algorithm. JHEP 04, 063 (2008). https://doi.org/10.1088/1126-6708/2008/04/063. arXiv:0802.1189

M. Cacciari, G.P. Salam, G. Soyez, FastJet user manual. Eur. Phys. J. C 72, 1896 (2012). https://doi.org/10.1140/epjc/s10052-012-1896-2. arXiv:1111.6097

CMS Collaboration, Jet energy scale and resolution in the CMS experiment in pp collisions at 8 TeV. JINST 12, P02014 (2017). https://doi.org/10.1088/1748-0221/12/02/P02014. arXiv:1607.03663

CMS Collaboration, Identification of heavy-flavour jets with the CMS detector in pp collisions at 13 TeV. JINST 13, P05011 (2018). https://doi.org/10.1088/1748-0221/13/05/P05011. arXiv:1712.07158

CMS Collaboration, First measurement of the cross section for top-quark pair production in proton–proton collisions at \(\sqrt{s}=7~\text{TeV}\). Phys. Lett. B 695, 424 (2011). https://doi.org/10.1016/j.physletb.2010.11.058. arXiv:1010.5994

CMS Collaboration, Object definitions for top quark analyses at the particle level. CMS Note 2017-004 (2017)

A. Buckley et al., Rivet user manual. Comput. Phys. Commun. 184, 2803 (2013). https://doi.org/10.1016/j.cpc.2013.05.021. arXiv:1003.0694

G. Parisi, Superinclusive cross sections. Phys. Lett. B 74, 65 (1978). https://doi.org/10.1016/0370-2693(78)90061-8

J.F. Donoghue, F.E. Low, S.-Y. Pi, Tensor analysis of hadronic jets in quantum chromodynamics. Phys. Rev. D 20, 2759 (1979). https://doi.org/10.1103/PhysRevD.20.2759

R.K. Ellis, D.A. Ross, A.E. Terrano, The perturbative calculation of jet structure in \(\text{ e }^+\text{ e }^-\) annihilation. Nucl. Phys. B 178, 421 (1981). https://doi.org/10.1016/0550-3213(81)90165-6

CMS Collaboration, Description and performance of track and primary-vertex reconstruction with the CMS tracker. JINST 9, P10009 (2014). https://doi.org/10.1088/1748-0221/9/10/P10009. arXiv:1405.6569

A.N. Tikhonov, Solution of incorrectly formulated problems and the regularization method. Sov. Math. Dokl. 4, 1035 (1963)

S. Schmitt, TUnfold: an algorithm for correcting migration effects in high energy physics. JINST 7, T10003 (2012). https://doi.org/10.1088/1748-0221/7/10/T10003. arXiv:1205.6201

ATLAS and CMS Collaborations, Combination of inclusive and differential \(\mathrm{t}\overline{\mathrm{t}}\) charge asymmetry measurements using ATLAS and CMS data at \(\sqrt{s}=7\) and 8 TeV. JHEP 04, 033 (2018). https://doi.org/10.1007/JHEP04(2018)033. arXiv:1709.05327

CMS Collaboration, Measurement of the inelastic proton–proton cross section at \(\sqrt{s}=13~\text{TeV}\). JHEP 07, 161 (2018). https://doi.org/10.1007/JHEP07(2018)161. arXiv:1802.02613

CMS Collaboration, Performance of electron reconstruction and selection with the CMS detector in proton-proton collisions at \(\sqrt{s} = 8~\text{TeV}\). JINST 10, P06005 (2015). https://doi.org/10.1088/1748-0221/10/06/P06005. arXiv:1502.02701

CMS Collaboration, Performance of CMS muon reconstruction in pp collision events at \(\sqrt{s}=7~\text{TeV}\). JINST 7, P10002 (2012). https://doi.org/10.1088/1748-0221/7/10/P10002. arXiv:1206.4071

CMS Collaboration, Tracking POG plot results on 2015 data. CMS Detector Performance Summary CMS-DP-2016-2012 (2016)

M. Cacciari et al., The \(\mathrm{t}\overline{\mathrm{t}}\) cross-section at 1.8 TeV and 1.96 TeV: a study of the systematics due to parton densities and scale dependence. JHEP 04, 068 (2004). https://doi.org/10.1088/1126-6708/2004/04/068. arXiv:hep-ph/0303085

S. Catani, D. de Florian, M. Grazzini, P. Nason, Soft gluon resummation for Higgs boson production at hadron colliders. JHEP 07, 028 (2003). https://doi.org/10.1088/1126-6708/2003/07/028. arXiv:hep-ph/0306211

CMS Collaboration, Measurement of differential cross sections for top quark pair production using the lepton+jets final state in proton-proton collisions at 13 TeV. Phys. Rev. D 95, 092001 (2017). https://doi.org/10.1103/PhysRevD.95.092001. arXiv:1610.04191

CMS Collaboration, Measurement of normalized differential \(\mathrm{t}\overline{\mathrm{t}}\) cross sections in the dilepton channel from pp collisions at \(\sqrt{s}=13~\text{TeV}\). JHEP 04, 060 (2018). https://doi.org/10.1007/JHEP04(2018)060. arXiv:1708.07638

CMS Collaboration, Measurement of the top quark mass using proton-proton data at \(\sqrt{s} = 7\) and 8 TeV. Phys. Rev. D 93, 072004 (2016). https://doi.org/10.1103/PhysRevD.93.072004. arXiv:1509.04044

F. James, Statistical Methods in Experimental Physics, 2nd edn. (World Scientific, Hackensack, 2006). https://doi.org/10.1142/6096. ISBN:9789812567956

P. Skands, S. Carrazza, J. Rojo, Tuning PYTHIA 8.1: the Monash 2013 tune. Eur. Phys. J. C 74, 3024 (2014). https://doi.org/10.1140/epjc/s10052-014-3024-y. arXiv:1404.5630

Acknowledgements