Abstract

Normalized double-differential cross sections for top quark pair (\(\mathrm{t}\overline{\mathrm{t}}\)) production are measured in pp collisions at a centre-of-mass energy of 8\(\,\text {TeV}\) with the CMS experiment at the LHC. The analyzed data correspond to an integrated luminosity of 19.7\(\,\text {fb}^{-1}\). The measurement is performed in the dilepton \(\mathrm {e}^{\pm }\mu ^{\mp }\) final state. The \(\mathrm{t}\overline{\mathrm{t}}\) cross section is determined as a function of various pairs of observables characterizing the kinematics of the top quark and \(\mathrm{t}\overline{\mathrm{t}}\) system. The data are compared to calculations using perturbative quantum chromodynamics at next-to-leading and approximate next-to-next-to-leading orders. They are also compared to predictions of Monte Carlo event generators that complement fixed-order computations with parton showers, hadronization, and multiple-parton interactions. Overall agreement is observed with the predictions, which is improved when the latest global sets of proton parton distribution functions are used. The inclusion of the measured \(\mathrm{t}\overline{\mathrm{t}}\) cross sections in a fit of parametrized parton distribution functions is shown to have significant impact on the gluon distribution.

1 Introduction

Understanding the production and properties of the top quark, discovered in 1995 at the Fermilab Tevatron [1, 2], is fundamental in testing the standard model and searching for new phenomena. A large sample of proton–proton (pp) collision events containing a top quark pair (\(\mathrm{t}\overline{\mathrm{t}}\)) has been recorded at the CERN LHC, facilitating precise top quark measurements. In particular, precise measurements of the \(\mathrm{t}\overline{\mathrm{t}}\) production cross section as a function of \(\mathrm{t}\overline{\mathrm{t}}\) kinematic observables have become possible, which allow for the validation of the most-recent predictions of perturbative quantum chromodynamics (QCD). At the LHC, top quarks are predominantly produced via gluon–gluon fusion. Thus, using measurements of the production cross section in a global fit of the parton distribution functions (PDFs) can help to better determine the gluon distribution at large values of x, where x is the fraction of the proton momentum carried by a parton [3,4,5]. In this context, \(\mathrm{t}\overline{\mathrm{t}}\) measurements are complementary to studies [6,7,8] that exploit inclusive jet production cross sections at the LHC.

Normalized differential cross sections for \(\mathrm{t}\overline{\mathrm{t}}\) production have been measured previously in proton–antiproton collisions at the Tevatron at a centre-of-mass energy of 1.96\(\,\text {TeV}\) [9, 10] and in pp collisions at the LHC at \(\sqrt{s} = 7\,\text {TeV}\) [11,12,13,14], 8\(\,\text {TeV}\) [14,15,16], and 13\(\,\text {TeV}\) [17]. This paper presents the measurement of the normalized double-differential \(\mathrm{t}\overline{\mathrm{t}} + \mathrm {X}\) production cross section, where X is inclusive in the number of extra jets in the event but excludes \(\mathrm{t}\overline{\mathrm{t}} +\mathrm{Z}/\mathrm {W}/\gamma \) production. The cross section is measured as a function of observables describing the kinematics of the top quark and \(\mathrm{t}\overline{\mathrm{t}}\): the transverse momentum of the top quark, \(p_{\mathrm {T}} (\mathrm{t})\), the rapidity of the top quark, \(y(\mathrm{t})\), the transverse momentum, \(p_{\mathrm {T}} (\mathrm{t}\overline{\mathrm{t}})\), the rapidity, \(y(\mathrm{t}\overline{\mathrm{t}})\), and the invariant mass, \(M(\mathrm{t}\overline{\mathrm{t}})\), of the \(\mathrm{t}\overline{\mathrm{t}}\) system, the difference in pseudorapidity between the top quark and antiquark, \(\varDelta \eta (\mathrm{t},\overline{\mathrm{t}})\), and the angle between the top quark and antiquark in the transverse plane, \(\varDelta \phi (\mathrm{t},\overline{\mathrm{t}})\). In total, the double-differential \(\mathrm{t}\overline{\mathrm{t}}\) cross section is measured as a function of six different pairs of kinematic variables.

These measurements provide a sensitive test of the standard model by probing the details of the \(\mathrm{t}\overline{\mathrm{t}}\) production dynamics. The double-differential measurement is expected to impose stronger constraints on the gluon distribution than single-differential measurements owing to the improved resolution of the momentum fractions carried by the two incoming partons.
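The link between the \(\mathrm{t}\overline{\mathrm{t}}\) kinematics and the parton momentum fractions can be illustrated with a minimal sketch. It assumes the standard leading-order relation \(x_{1,2} = (M(\mathrm{t}\overline{\mathrm{t}})/\sqrt{s})\,e^{\pm y(\mathrm{t}\overline{\mathrm{t}})}\), which is not stated in the text and ignores any additional radiation; the function name and the example values are hypothetical.

```python
import math

SQRT_S_GEV = 8000.0  # centre-of-mass energy of the analysed pp data


def parton_momentum_fractions(m_ttbar_gev, y_ttbar, sqrt_s_gev=SQRT_S_GEV):
    """Leading-order estimate of (x1, x2) for a ttbar system.

    Assumes 2 -> 2 kinematics without extra radiation, so that
    x1 * x2 * s = M^2 and y = 0.5 * ln(x1 / x2).
    """
    tau = m_ttbar_gev / sqrt_s_gev
    return tau * math.exp(y_ttbar), tau * math.exp(-y_ttbar)


# A central pair with M = 400 GeV probes equal momentum fractions.
x1_central, x2_central = parton_momentum_fractions(400.0, 0.0)

# A forward, heavy pair probes one large and one small momentum fraction.
x1_forward, x2_forward = parton_momentum_fractions(1000.0, 1.5)
```

Under this approximation, measuring \(M(\mathrm{t}\overline{\mathrm{t}})\) and \(y(\mathrm{t}\overline{\mathrm{t}})\) together fixes both momentum fractions, whereas a single-differential measurement integrates over one combination of them.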

The analysis uses the data recorded at \(\sqrt{s}=8\) \(\,\text {TeV}\) by the CMS experiment in 2012, corresponding to an integrated luminosity of \(19.7 \pm 0.5{\,\text {fb}^{-1}} \). The measurement is performed using the \(\mathrm {e}^{\pm }\mu ^{\mp }\) decay mode (\(\mathrm {e}\mu \)) of \(\mathrm{t}\overline{\mathrm{t}}\), requiring two oppositely charged leptons and at least two jets. The analysis largely follows the procedures of the single-differential \(\mathrm{t}\overline{\mathrm{t}}\) cross section measurement [15]. The restriction to the \(\mathrm {e}\mu \) channel provides a pure \(\mathrm{t}\overline{\mathrm{t}}\) event sample because of the negligible contamination from \(\mathrm{Z}/\gamma ^{*}\) processes with same-flavour leptons in the final state.

The measurements are defined at parton level and thus are corrected for the effects of hadronization and detector resolutions and inefficiencies. A regularized unfolding process is performed simultaneously in bins of the two variables in which the cross sections are measured. The normalized differential \(\mathrm{t}\overline{\mathrm{t}}\) cross section is determined by dividing by the measured total inclusive \(\mathrm{t}\overline{\mathrm{t}}\) production cross section, where the latter is evaluated by integrating over all bins in the two observables. The parton level results are compared to different theoretical predictions from leading-order (LO) and next-to-leading-order (NLO) Monte Carlo (MC) event generators, as well as with fixed-order NLO [18] and approximate next-to-next-to-leading-order (NNLO) [19] calculations using several different PDF sets. Parametrized PDFs are fitted to the data in a procedure that is referred to as the PDF fit.

The structure of the paper is as follows: in Sect. 2 a brief description of the CMS detector is given. Details of the event simulation are provided in Sect. 3. The event selection, kinematic reconstruction, and comparisons between data and simulation are provided in Sect. 4. The two-dimensional unfolding procedure is detailed in Sect. 5; the method to determine the double-differential cross sections is presented in Sect. 6, and the assessment of the systematic uncertainties is described in Sect. 7. The results of the measurement are discussed and compared to theoretical predictions in Sect. 8. Section 9 presents the PDF fit. Finally, Sect. 10 provides a summary.

2 The CMS detector

The central feature of the CMS apparatus is a superconducting solenoid of 13\(\text { m}\) length and 6\(\text { m}\) inner diameter, which provides an axial magnetic field of 3.8\(\text { T}\). Within the field volume are a silicon pixel and strip tracker, a lead tungstate crystal electromagnetic calorimeter (ECAL), and a brass and scintillator hadron calorimeter (HCAL), each composed of a barrel and two endcap sections. Extensive forward calorimetry complements the coverage provided by the barrel and endcap sections up to \(|\eta |<5.2\). Charged particle trajectories are measured by the inner tracking system, covering a range of \(|\eta |<2.5\). The ECAL and HCAL surround the tracking volume, providing high-resolution energy and direction measurements of electrons, photons, and hadronic jets up to \(|\eta |<3\). Muons are measured in gas-ionization detectors embedded in the steel flux-return yoke outside the solenoid covering the region \(|\eta |<2.4\). The detector is nearly hermetic, allowing momentum balance measurements in the plane transverse to the beam directions. A more detailed description of the CMS detector, together with a definition of the coordinate system and the relevant kinematic variables, can be found in Ref. [20].

3 Signal and background modelling

The \(\mathrm{t}\overline{\mathrm{t}} \) signal process is simulated using the matrix element event generator MadGraph (version 5.1.5.11) [21], together with the MadSpin [22] package for the modelling of spin correlations. The pythia 6 program (version 6.426) [23] is used to model parton showering and hadronization. In the signal simulation, the mass of the top quark, \(m_{\mathrm{t}}\), is fixed to 172.5\(\,\text {GeV}\). The proton structure is described by the CTEQ6L1 PDF set [24]. The same programs are used to model dependencies on the renormalization and factorization scales, \(\mu _\mathrm {r}\) and \(\mu _\mathrm {f}\), respectively, the matching threshold between jets produced at the matrix-element level and via parton showering, and \(m_{\mathrm{t}}\).

The cross sections obtained in this paper are also compared to theoretical calculations obtained with the NLO event generators powheg (version 1.0 r1380) [25,26,27], interfaced with pythia 6 or herwig 6 (version 6.520) [28] for the subsequent parton showering and hadronization, and mc@nlo (version 3.41) [29], interfaced with herwig 6. Both pythia 6 and herwig 6 include a modelling of multiple-parton interactions and the underlying event. The pythia 6 Z2* tune [30] is used to characterize the underlying event in both the \(\mathrm{t}\overline{\mathrm{t}}\) and the background simulations. The herwig 6 AUET2 tune [31] is used to model the underlying event in the powheg +herwig 6 simulation, while the default tune is used in the mc@nlo +herwig 6 simulation. The PDF sets CT10 [32] and CTEQ6M [24] are used for powheg and mc@nlo, respectively. Additional simulated event samples generated with powheg and interfaced with pythia 6 or herwig 6 are used to assess the systematic uncertainties related to the modelling of the hard-scattering process and hadronization, respectively, as described in Sect. 7.

The production of W and Z/\(\gamma ^{*}\) bosons with additional jets, respectively referred to as W+jets and Z/\(\gamma ^{*}\)+jets in the following, and \(\mathrm{t}\overline{\mathrm{t}} +\mathrm{Z}/\mathrm {W}/\gamma \) backgrounds are simulated using MadGraph, while \(\mathrm {W}\) boson plus associated single top quark production (\(\mathrm{t} \mathrm {W}\)) is simulated using powheg. The showering and hadronization are modelled with pythia 6 for these processes. Diboson (WW, WZ, and ZZ) samples, as well as QCD multijet backgrounds, are produced with pythia 6. All of the background simulations are normalized to the fixed-order theoretical predictions as described in Ref. [15]. The CMS detector response is simulated using Geant4 (version 9.4) [33].

4 Event selection

The event selection follows closely the one reported in Ref. [15]. The top quark decays almost exclusively into a W boson and a bottom quark, and only events in which the two W bosons decay into exactly one electron and one muon and corresponding neutrinos are considered. Events are triggered by requiring one electron and one muon of opposite charge, one of which is required to have \(p_{\mathrm {T}} > 17\) \(\,\text {GeV}\) and the other \(p_{\mathrm {T}} > 8\) \(\,\text {GeV}\).

Events are reconstructed using a particle-flow (PF) technique [34, 35], which combines signals from all subdetectors to enhance the reconstruction and identification of the individual particles observed in pp collisions. An interaction vertex [36] is required within 24\(\text { cm}\) of the detector centre along the beam line direction, and within 2\(\text { cm}\) of the beam line in the transverse plane. Among all such vertices, the primary vertex of an event is identified as the one with the largest value of the sum of the \(p_{\mathrm {T}} ^2\) of the associated tracks. Charged hadrons from pileup events, i.e. those originating from additional pp interactions within the same or nearby bunch crossing, are subtracted on an event-by-event basis. Subsequently, the remaining neutral-hadron component from pileup is accounted for through jet energy corrections [37].
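The primary-vertex choice described above can be sketched as follows. The dictionary layout (`z`, `rho`, `track_pts`) and the function name are hypothetical and do not correspond to any CMS software interface; only the 24 cm and 2 cm requirements and the \(\sum p_{\mathrm {T}}^2\) criterion are taken from the text.

```python
def select_primary_vertex(vertices, max_abs_z_cm=24.0, max_rho_cm=2.0):
    """Return the accepted vertex with the largest sum of track pT^2.

    Each vertex is a dict with the longitudinal position 'z' (cm), the
    transverse distance from the beam line 'rho' (cm), and the list of
    associated track transverse momenta 'track_pts' (GeV).
    Returns None if no vertex satisfies the position requirements.
    """
    accepted = [
        v for v in vertices
        if abs(v["z"]) < max_abs_z_cm and v["rho"] < max_rho_cm
    ]
    if not accepted:
        return None
    return max(accepted, key=lambda v: sum(pt * pt for pt in v["track_pts"]))


example_vertices = [
    {"z": 1.0, "rho": 0.10, "track_pts": [10.0, 5.0]},    # sum pT^2 = 125
    {"z": -3.0, "rho": 0.05, "track_pts": [30.0, 20.0]},  # sum pT^2 = 1300
    {"z": 30.0, "rho": 0.10, "track_pts": [100.0]},       # outside |z| < 24 cm
]
primary = select_primary_vertex(example_vertices)
```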

Electron candidates are reconstructed from a combination of the track momentum at the primary vertex, the corresponding energy deposition in the ECAL, and the energy sum of all bremsstrahlung photons associated with the track [38]. Muon candidates are reconstructed using the track information from the silicon tracker and the muon system. An event is required to contain at least two oppositely charged leptons, one electron and one muon, each with \(p_{\mathrm {T}} > 20\,\text {GeV} \) and \(|\eta | < 2.4\). Only the electron and the muon with the highest \(p_{\mathrm {T}}\) are considered for the analysis. The invariant mass of the selected electron and muon must be larger than 20\(\,\text {GeV}\) to suppress events from decays of heavy-flavour resonances. The leptons are required to be isolated with \(I_\text {rel}\le 0.15\) inside a cone in \(\eta \)-\(\phi \) space of \(\varDelta R = \sqrt{(\varDelta \eta )^{2} + (\varDelta \phi )^{2}} = 0.3\) around the lepton track, where \(\varDelta \eta \) and \(\varDelta \phi \) are the differences in pseudorapidity and azimuthal angle (in radians), respectively, between the directions of the lepton and any other particle. The parameter \(I_\text {rel}\) is the relative isolation parameter defined as the sum of transverse energy deposits inside the cone from charged and neutral hadrons, and photons, relative to the lepton \(p_{\mathrm {T}} \), corrected for pileup effects. The efficiencies of the lepton isolation were determined in Z boson data samples using the “tag-and-probe” method of Ref. [39], and are found to be well described by the simulation for both electrons and muons. The overall difference between data and simulation is estimated to be \({<} 2\%\) for electrons, and \({<}1\%\) for muons. The simulation is adjusted for this by using correction factors parametrized as a function of the lepton \(p_{\mathrm {T}}\) and \(\eta \) and applied to simulated events, separately for electrons and muons.
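As an illustration of the isolation requirement, the sketch below computes \(\varDelta R\) with the azimuthal difference wrapped into \([-\pi , \pi )\) and sums the transverse energy inside the cone. It is a simplified example: the pileup correction mentioned above is not specified in the text and is therefore omitted, and the particle representation and function names are hypothetical.

```python
import math


def delta_phi(phi1, phi2):
    """Azimuthal difference wrapped into the interval [-pi, pi)."""
    return (phi1 - phi2 + math.pi) % (2.0 * math.pi) - math.pi


def delta_r(eta1, phi1, eta2, phi2):
    """Angular distance sqrt(d_eta^2 + d_phi^2) in eta-phi space."""
    return math.hypot(eta1 - eta2, delta_phi(phi1, phi2))


def relative_isolation(lepton, particles, cone=0.3):
    """Sum of particle E_T inside the cone, divided by the lepton pT.

    No pileup correction is applied in this sketch.
    """
    et_sum = sum(
        p["et"] for p in particles
        if delta_r(lepton["eta"], lepton["phi"], p["eta"], p["phi"]) < cone
    )
    return et_sum / lepton["pt"]


lepton = {"pt": 40.0, "eta": 0.5, "phi": 3.1}
nearby = [
    {"et": 2.0, "eta": 0.6, "phi": 3.1},    # inside the cone
    {"et": 2.0, "eta": 0.5, "phi": -3.1},   # inside the cone across the phi wrap
    {"et": 50.0, "eta": -1.5, "phi": 0.0},  # far away, not counted
]
i_rel = relative_isolation(lepton, nearby)
is_isolated = i_rel <= 0.15
```

Wrapping the azimuthal difference matters for leptons close to \(\phi = \pm \pi \), where a naive subtraction would place a neighbouring particle on the opposite side of the detector.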

Jets are reconstructed by clustering the PF candidates using the anti-\(k_{\mathrm {T}}\) clustering algorithm [40, 41] with a distance parameter \(R = 0.5\). Electrons and muons passing less-stringent selections on lepton kinematic quantities and isolation, relative to those specified above, are identified but excluded from clustering. A jet is selected if it has \(p_{\mathrm {T}} > 30\,\text {GeV} \) and \(|\eta | < 2.4\). Jets originating from the hadronization of b quarks (b jets) are identified using an algorithm [42] that provides a b tagging discriminant by combining secondary vertices and track-based lifetime information. This provides a b tagging efficiency of \({\approx }80\)–85% for b jets and a mistagging efficiency of \({\approx }10\%\) for jets originating from gluons, as well as u, d, or s quarks, and \({\approx }30\)–40% for jets originating from c quarks [42]. Events are selected if they contain at least two jets, and at least one of these jets is b-tagged. These requirements are chosen to reduce the background contribution while keeping a large fraction of the \(\mathrm{t}\overline{\mathrm{t}}\) signal. The \(\mathrm{b}\) tagging efficiency is adjusted in the simulation with the correction factors parametrized as a function of the jet \(p_{\mathrm {T}}\) and \(\eta \).
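The lepton and jet requirements can be collected into a single selection function, sketched below. The thresholds are those quoted in the text; the data layout, the function names, the massless-lepton approximation for the dilepton mass, and the order in which isolation and the highest-\(p_{\mathrm {T}}\) choice are applied are assumptions made for illustration.

```python
import math


def dilepton_mass(l1, l2):
    """Invariant mass of two leptons, treating both as massless."""
    m2 = 2.0 * l1["pt"] * l2["pt"] * (
        math.cosh(l1["eta"] - l2["eta"]) - math.cos(l1["phi"] - l2["phi"])
    )
    return math.sqrt(max(m2, 0.0))


def good_lepton(lep):
    return lep["pt"] > 20.0 and abs(lep["eta"]) < 2.4 and lep["iso"] <= 0.15


def passes_selection(electrons, muons, jets):
    """Apply the e-mu, jet, and b tagging requirements to one event."""
    good_e = [e for e in electrons if good_lepton(e)]
    good_mu = [m for m in muons if good_lepton(m)]
    if not good_e or not good_mu:
        return False
    # Only the highest-pT electron and muon are considered.
    ele = max(good_e, key=lambda l: l["pt"])
    mu = max(good_mu, key=lambda l: l["pt"])
    if ele["charge"] * mu["charge"] >= 0:
        return False
    if dilepton_mass(ele, mu) <= 20.0:
        return False
    good_jets = [j for j in jets if j["pt"] > 30.0 and abs(j["eta"]) < 2.4]
    return len(good_jets) >= 2 and any(j["btag"] for j in good_jets)


electrons = [{"pt": 35.0, "eta": 0.5, "phi": 0.1, "charge": -1, "iso": 0.05}]
muons = [{"pt": 28.0, "eta": -1.0, "phi": 2.5, "charge": 1, "iso": 0.02}]
jets = [
    {"pt": 60.0, "eta": 1.1, "btag": True},
    {"pt": 45.0, "eta": -0.3, "btag": False},
    {"pt": 25.0, "eta": 0.2, "btag": True},  # below the pT threshold
]
selected = passes_selection(electrons, muons, jets)
```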

The missing transverse momentum vector is defined as the projection on the plane perpendicular to the beams of the negative vector sum of the momenta of all PF particles in an event [43]. Its magnitude is referred to as \(p_{\mathrm {T}} ^\text {miss}\). To mitigate the pileup effects on the \(p_{\mathrm {T}} ^\text {miss}\) resolution, a multivariate correction is used where the measured momentum is separated into components that originate from the primary and from other interaction vertices [44]. No selection requirement on \(p_{\mathrm {T}} ^\text {miss}\) is applied.

The \(\mathrm{t}\overline{\mathrm{t}}\) kinematic properties are determined from the four-momenta of the decay products using the same kinematic reconstruction method [45, 46] as that of the single-differential \(\mathrm{t}\overline{\mathrm{t}}\) measurement [15]. The six unknown quantities are the three-momenta of the two neutrinos, which are reconstructed by imposing the following six kinematic constraints: \(p_{\mathrm {T}}\) conservation in the event and the masses of the W bosons, top quark, and top antiquark. The top quark and antiquark are required to have a mass of 172.5\(\,\text {GeV}\). It is assumed that the \(p_{\mathrm {T}} ^\text {miss}\) in the event results from the two neutrinos in the top quark and antiquark decay chains. To resolve the ambiguity due to multiple algebraic solutions of the equations for the neutrino momenta, the solution with the smallest invariant mass of the \(\mathrm{t}\overline{\mathrm{t}}\) system is taken. The reconstruction is performed 100 times, each time randomly smearing the measured energies and directions of the reconstructed leptons and jets within their resolution. This smearing recovers events that yielded no solution because of measurement fluctuations. The three-momenta of the two neutrinos are determined as a weighted average over all the smeared solutions. For each solution, the weight is calculated based on the expected invariant mass spectrum of a lepton and a bottom jet as the product of two weights for the top quark and antiquark decay chains. All possible lepton–jet combinations in the event are considered. Combinations are ranked based on the presence of b-tagged jets in the assignments, i.e. a combination with both leptons assigned to b-tagged jets is preferred over those with one or no b-tagged jet. Among assignments with equal number of b-tagged jets, the one with the highest average weight is chosen. Events with no solution after smearing are discarded. 
The method yields an average reconstruction efficiency of \({\approx }95\%\), which is determined in simulation as the fraction of selected signal events (which include only direct \(\mathrm{t}\overline{\mathrm{t}}\) decays via the \(\mathrm {e}^{\pm }\mu ^{\mp }\) channel, i.e. excluding cascade decays via \(\tau \) leptons) passing the kinematic reconstruction. The overall difference in this efficiency between data and simulation is estimated to be \({\approx }1\%\), and a corresponding correction factor is applied to the simulation [47]. A more detailed description of the kinematic reconstruction procedure can be found in Ref. [47].

In total, 38,569 events are selected in the data. The signal contribution to the event sample is 79.2%, as estimated from the simulation. The remaining fraction of events is dominated by \(\mathrm{t}\overline{\mathrm{t}}\) decays other than via the \(\mathrm {e}^{\pm }\mu ^{\mp }\) channel (14.2%). Other sources of background are single top quark production (3.6%), Z/\(\gamma ^{*}\)+jets events (1.4%), associated \(\mathrm{t}\overline{\mathrm{t}} +\mathrm{Z}/\mathrm {W}/\gamma \) production (1.1%), and a negligible (\({<} 0.5\%\)) fraction of diboson, W+jets, and QCD multijet events.

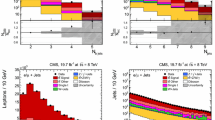

Figure 1 shows the distributions of the reconstructed top quark and \(\mathrm{t}\overline{\mathrm{t}}\) kinematic variables. In general, the data are reasonably well described by the simulation, although some trends are visible. In particular, the simulation shows a harder \(p_{\mathrm {T}} (\mathrm{t})\) spectrum than the data, as observed in previous measurements [12,13,14,15,16,17]. The \(y(\mathrm{t}\overline{\mathrm{t}})\) distribution is found to be less central in the simulation than in the data, while the opposite behaviour is observed in the \(y(\mathrm{t})\) distribution. The \(M(\mathrm{t}\overline{\mathrm{t}})\) and \(p_{\mathrm {T}} (\mathrm{t}\overline{\mathrm{t}})\) distributions are overall well described by the simulation.

Fig. 1 Distributions of \(p_{\mathrm {T}} (\mathrm{t})\) (upper left), \(y(\mathrm{t})\) (upper right), \(p_{\mathrm {T}} (\mathrm{t}\overline{\mathrm{t}})\) (middle left), \(y(\mathrm{t}\overline{\mathrm{t}})\) (middle right), and \(M(\mathrm{t}\overline{\mathrm{t}})\) (lower) in selected events after the kinematic reconstruction. The experimental data with the vertical bars corresponding to their statistical uncertainties are plotted together with distributions of simulated signal and different background processes. The hatched regions correspond to the shape uncertainties in the signal and backgrounds (cf. Sect. 7). The lower panel in each plot shows the ratio of the observed data event yields to those expected in the simulation.

5 Signal extraction and unfolding

The number of signal events, \(N^\mathrm{{sig}}_i\), is extracted from the data in the ith bin of the reconstructed observables using

\[ N^\mathrm{{sig}}_i = N^\mathrm{{sel}}_i - N^\mathrm{{bkg}}_i, \quad 1 \le i \le n, \]

where n denotes the total number of bins, \(N^\mathrm{{sel}}_i\) is the number of selected events in the ith bin, and \(N^\mathrm{{bkg}}_i\) corresponds to the expected number of background events in this bin, except for \(\mathrm{t}\overline{\mathrm{t}} \) final states other than the signal. The latter are dominated by events in which one or both of the intermediate W bosons decay into \(\tau \) leptons with subsequent decay into an electron or muon. Since these events arise from the same \(\mathrm{t}\overline{\mathrm{t}}\) production process as the signal, the normalization of this background is fixed to that of the signal. The expected signal fraction is defined as the ratio of the number of selected \(\mathrm{t}\overline{\mathrm{t}}\) signal events to the total number of selected \(\mathrm{t}\overline{\mathrm{t}}\) events (i.e. the signal and all other \(\mathrm{t}\overline{\mathrm{t}}\) events) in simulation. This procedure avoids the dependence on the total inclusive \(\mathrm{t}\overline{\mathrm{t}}\) cross section used in the normalization of the simulated signal sample.
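A minimal sketch of this extraction is given below. The text does not spell out how the signal fraction enters; the sketch assumes that it multiplies the background-subtracted yield in each bin. The function names and all event counts are hypothetical.

```python
def signal_fraction(sim_signal, sim_ttbar_other):
    """Per-bin ratio of simulated signal to all simulated ttbar events."""
    return [s / (s + o) for s, o in zip(sim_signal, sim_ttbar_other)]


def extract_signal(selected, background, sim_signal, sim_ttbar_other):
    """Subtract the non-ttbar background, then keep the signal share.

    Assumption: the other-ttbar contribution is removed by scaling the
    background-subtracted yield with the simulated signal fraction.
    """
    fractions = signal_fraction(sim_signal, sim_ttbar_other)
    return [
        (n_sel - n_bkg) * f
        for n_sel, n_bkg, f in zip(selected, background, fractions)
    ]


selected = [1000.0, 500.0]       # events observed in two reconstructed bins
background = [60.0, 40.0]        # expected non-ttbar background
sim_signal = [800.0, 400.0]      # simulated e-mu signal
sim_other = [140.0, 60.0]        # simulated ttbar decays via other channels

n_sig = extract_signal(selected, background, sim_signal, sim_other)

# Rescaling both simulated ttbar samples by a common factor, as a different
# assumed inclusive cross section would do, leaves the result unchanged.
n_sig_rescaled = extract_signal(
    selected, background,
    [1.1 * s for s in sim_signal], [1.1 * o for o in sim_other],
)
```

Because the signal fraction is a ratio of two simulated \(\mathrm{t}\overline{\mathrm{t}}\) yields, the assumed inclusive cross section cancels, which is the property stated at the end of the paragraph above.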

The signal yields \(N^\mathrm{{sig}}_i\), determined in each ith bin of the reconstructed kinematic variables, may contain entries that were originally produced in other bins and have migrated because of the imperfect resolutions. This effect can be described as

\[ {M}^\mathrm{{sig}}_i = \sum _{j=1}^{m} A_{ij}\,{M}_{j}^\mathrm{{unf}}, \quad 1 \le i \le n, \]

where m denotes the total number of bins in the true distribution, and \({M}_{j}^\mathrm{{unf}}\) is the number of events in the jth bin of the true distribution from data. The quantity \({M}^\mathrm{{sig}}_i\) is the expected number of events at detector level in the ith bin, and \(A_{ij}\) is a matrix of probabilities describing the migrations from the jth bin of the true distribution to the ith bin of the detector-level distribution, including acceptance and detector efficiencies. In this analysis, the migration matrix \(A_{ij}\) is defined such that the true level corresponds to the full phase space (with no kinematic restrictions) for \(\mathrm{t}\overline{\mathrm{t}}\) production at parton level. At the detector level a binning is chosen in the same kinematic ranges as at the true level, but with the total number of bins typically a few times larger. The kinematic ranges of all variables are chosen such that the fraction of events that migrate into the regions outside the measured range is very small. It was checked that the inclusion of overflow bins outside the kinematic ranges does not significantly alter the unfolded results. The migration matrix \(A_{ij}\) is taken from the signal simulation. The observed event counts \(N^\mathrm{{sig}}_i\) may be different from \({M}_i^\mathrm{{sig}}\) owing to statistical fluctuations.
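The folding relation can be made concrete with a small numerical sketch. The migration matrix and the true yields below are invented for illustration (four detector-level bins, two true bins) and are not taken from the analysis; the function names are hypothetical.

```python
def fold(migration, truth):
    """Expected detector-level yields: M_sig[i] = sum_j A[i][j] * M_unf[j]."""
    return [
        sum(a_ij * m_j for a_ij, m_j in zip(row, truth))
        for row in migration
    ]


def efficiencies(migration):
    """Column sums of A: probability that a true-bin event is selected
    and reconstructed in any detector-level bin."""
    n_true = len(migration[0])
    return [sum(row[j] for row in migration) for j in range(n_true)]


# Rows: detector-level bins i; columns: true bins j.
A = [
    [0.35, 0.05],
    [0.30, 0.10],
    [0.10, 0.30],
    [0.05, 0.35],
]
true_yields = [1000.0, 500.0]

expected = fold(A, true_yields)
eff = efficiencies(A)
```

Since \(A_{ij}\) includes acceptance and detector efficiencies, each column sums to less than one; the off-diagonal structure encodes the bin-to-bin migrations.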

The estimated value of \({M}_{j}^\mathrm{{unf}}\), designated as \(\hat{M_{j}}^\mathrm{{unf}}\), is found using the TUnfold algorithm [48]. The unfolding of multidimensional distributions is performed by mapping the multidimensional arrays to one-dimensional arrays internally [48]. The unfolding is realized by a \(\chi ^2\) minimization and includes an additional \(\chi ^2\) term representing the Tikhonov regularization [49]. The regularization reduces the effect of the statistical fluctuations present in \(N^\mathrm{{sig}}_i\) on the high-frequency content of \(\hat{M_{j}}^\mathrm{{unf}}\). The regularization strength is chosen such that the global correlation coefficient is minimal [50]. For the measurements presented here, this choice results in a small contribution from the regularization term to the total \(\chi ^2\), on the order of 1%. A more detailed description of the unfolding procedure can be found in Ref. [47].
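The idea of a regularized \(\chi ^2\) minimization can be sketched in a few lines of plain Python. This is not the TUnfold implementation: it assumes uncorrelated Gaussian uncertainties, uses the simplest Tikhonov term \(\tau ^2 \sum _j M_j^2\) without a bias distribution, and fixes \(\tau \) by hand instead of minimizing the global correlation coefficient. All names and numbers are hypothetical.

```python
def solve_linear(a, b):
    """Solve a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    aug = [list(a[r]) + [b[r]] for r in range(n)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(n):
            if r != col:
                factor = aug[r][col] / aug[col][col]
                aug[r] = [u - factor * v for u, v in zip(aug[r], aug[col])]
    return [aug[r][n] / aug[r][r] for r in range(n)]


def tikhonov_unfold(migration, observed, sigma, tau):
    """Minimize sum_i ((N_i - sum_j A_ij M_j) / sigma_i)^2 + tau^2 sum_j M_j^2.

    The minimum satisfies (A^T W A + tau^2 I) M = A^T W N with
    W = diag(1 / sigma_i^2).
    """
    n_det, n_true = len(migration), len(migration[0])
    lhs = [[0.0] * n_true for _ in range(n_true)]
    rhs = [0.0] * n_true
    for i in range(n_det):
        weight = 1.0 / sigma[i] ** 2
        for j in range(n_true):
            rhs[j] += weight * migration[i][j] * observed[i]
            for k in range(n_true):
                lhs[j][k] += weight * migration[i][j] * migration[i][k]
    for j in range(n_true):
        lhs[j][j] += tau ** 2
    return solve_linear(lhs, rhs)


A = [[0.35, 0.05], [0.30, 0.10], [0.10, 0.30], [0.05, 0.35]]
observed = [375.0, 350.0, 250.0, 225.0]   # here exactly A applied to [1000, 500]
sigma = [n ** 0.5 for n in observed]      # Poisson-like uncertainties

unregularized = tikhonov_unfold(A, observed, sigma, tau=0.0)
regularized = tikhonov_unfold(A, observed, sigma, tau=0.01)
```

With \(\tau = 0\) and fluctuation-free input the true yields are recovered, while a nonzero \(\tau \) pulls the solution towards smaller values. In a real unfolding the regularization term would act on the deviation from a reference distribution or on its curvature, so that it damps statistical fluctuations without biasing the overall scale.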

6 Cross section determination

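The normalized double-differential cross sections of \(\mathrm{t}\overline{\mathrm{t}}\) production are measured in the full \(\mathrm{t}\overline{\mathrm{t}}\) kinematic phase space at parton level. The number of unfolded signal events \(\hat{M}^\mathrm{{unf}}_{ij}\) in bin i of variable x and bin j of variable y is used to define the normalized double-differential cross sections of the \(\mathrm{t}\overline{\mathrm{t}}\) production process,

\[ \frac{1}{\sigma }\left( \frac{\mathrm{d}^{2}\sigma }{\mathrm{d}x\,\mathrm{d}y}\right) _{ij} = \frac{1}{\sigma }\,\frac{1}{\varDelta x_{i}\,\varDelta y_{j}}\,\frac{\hat{M}^\mathrm{{unf}}_{ij}}{\mathcal {B}\,\mathcal {L}}, \]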

where \(\sigma \) is the total cross section, which is evaluated by integrating \((\mathrm{d}^{2}\sigma /\mathrm{d}x\,\mathrm{d}y)_{ij}\) over all bins. The branching fraction of \(\mathrm{t}\overline{\mathrm{t}}\) into the \(\mathrm {e}\mu \) final state is taken to be \(\mathcal {B} = 2.3\%\) [51], and \(\mathcal {L}\) is the integrated luminosity of the data sample. The bin widths of the x and y variables are denoted by \(\varDelta x_{i}\) and \(\varDelta y_{j}\), respectively. The bin widths are chosen based on the resolution, such that the purity and the stability of each bin are generally above 30%. For a given bin, the purity is defined as the fraction of events in the \(\mathrm{t}\overline{\mathrm{t}}\) signal simulation that are generated and reconstructed in the same bin with respect to the total number of events reconstructed in that bin. The stability is defined as the number of events in the \(\mathrm{t}\overline{\mathrm{t}}\) signal simulation that are generated and reconstructed in a given bin, divided by the total number of reconstructed events generated in that bin.
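The following sketch illustrates the normalization and the purity and stability definitions. Since \(\sigma \) is obtained by summing the same unfolded yields divided by \(\mathcal {B}\,\mathcal {L}\), the factor \(\mathcal {B}\,\mathcal {L}\) cancels in the normalized cross section and does not appear in the code. The function names, bin edges, and event counts are hypothetical.

```python
def normalized_cross_section(unfolded, x_edges, y_edges):
    """(1/sigma) d^2 sigma / dx dy in each bin (i, j) of (x, y).

    unfolded[i][j] is the unfolded yield in bin i of x and bin j of y.
    """
    total = sum(sum(row) for row in unfolded)
    result = []
    for i, row in enumerate(unfolded):
        dx = x_edges[i + 1] - x_edges[i]
        result.append([
            m / (total * dx * (y_edges[j + 1] - y_edges[j]))
            for j, m in enumerate(row)
        ])
    return result


def purity_and_stability(counts):
    """counts[r][g]: simulated signal events generated in bin g and
    reconstructed in bin r (events failing reconstruction are excluded)."""
    n = len(counts)
    purity, stability = [], []
    for b in range(n):
        reconstructed_in_b = sum(counts[b])
        generated_in_b = sum(counts[r][b] for r in range(n))
        purity.append(counts[b][b] / reconstructed_in_b)
        stability.append(counts[b][b] / generated_in_b)
    return purity, stability


unfolded = [[100.0, 300.0], [200.0, 400.0]]
x_edges = [0.0, 1.0, 3.0]
y_edges = [0.0, 2.0, 3.0]
xsec = normalized_cross_section(unfolded, x_edges, y_edges)

purity, stability = purity_and_stability([[80.0, 30.0], [20.0, 70.0]])
```

By construction, the normalized cross section integrates to unity over all bins, so uncertainties that affect only the overall rate, such as the one in the integrated luminosity, largely cancel.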

7 Systematic uncertainties

The measurement is affected by systematic uncertainties that originate from detector effects and from the modelling of the processes. Each source of systematic uncertainty is assessed individually by changing in the simulation the corresponding efficiency, resolution, or scale by its uncertainty, using a prescription similar to the one followed in Ref. [15]. For each change made, the cross section determination is repeated, and the difference with respect to the nominal result in each bin is taken as the systematic uncertainty.

To account for the pileup uncertainty, the value of the total \(\mathrm {p}\mathrm {p}\) inelastic cross section, which is used to estimate the mean number of additional pp interactions, is varied by \({\pm }5\%\) [52]. The data-to-simulation correction factors for \(\mathrm{b} \) tagging and mistagging efficiencies are varied within their uncertainties [42] as a function of the \(p_{\mathrm {T}} \) and \(|\eta |\) of the jet, following the procedure described in Ref. [15]. The data-to-simulation correction factors for the trigger efficiency, determined relative to the triggers based on \(p_{\mathrm {T}} ^\text {miss}\), are varied within their uncertainty of 1%. The systematic uncertainty related to the kinematic reconstruction of top quarks is assessed by varying the MC correction factor by its estimated uncertainty of \({\pm }1\)% [47]. For the uncertainties related to the jet energy scale, the jet energy is varied in the simulation within its uncertainty [53]. The uncertainty owing to the limited knowledge of the jet energy resolution is determined by changing the latter in the simulation by \({\pm }1\) standard deviation in different \(\eta \) regions [54]. The normalizations of the background processes are varied by 30% to account for the corresponding uncertainty. The uncertainty in the integrated luminosity of 2.6% [55] is propagated to the measured cross sections.

The impact of theoretical assumptions on the measurement is determined by repeating the analysis replacing the standard MadGraph \(\mathrm{t}\overline{\mathrm{t}}\) simulation with simulated samples in which specific parameters or assumptions are altered. The PDF systematic uncertainty is estimated by reweighting the MadGraph \(\mathrm{t}\overline{\mathrm{t}}\) signal sample according to the uncertainties in the CT10 PDF set, evaluated at 90% confidence level (CL) [32], and then rescaled to 68% CL. To estimate the uncertainty related to the choice of the tree-level multijet scattering model used in MadGraph, the results are recalculated using an alternative prescription for interfacing NLO calculations with parton showering as implemented in powheg. For \(\mu _\mathrm {r}\) and \(\mu _\mathrm {f}\), two samples are used with the scales being simultaneously increased or decreased by a factor of two relative to their common nominal value \(\mu _\mathrm {r} = \mu _\mathrm {f} = \sqrt{\smash [b]{m^2_{\mathrm{t}} + \Sigma p_{\mathrm {T}} ^2}}\), where the sum is over all additional final-state partons in the matrix element. The effect of additional jet production is studied by varying in MadGraph the matching threshold between jets produced at the matrix-element level and via parton showering. The uncertainty in the effect of the initial- and final-state radiation on the signal efficiency is covered by the uncertainty in \(\mu _\mathrm {r}\) and \(\mu _\mathrm {f}\), as well as in the matching threshold. The samples generated with powheg +herwig 6 and powheg +pythia 6 are used to estimate the uncertainty related to the choice of the showering and hadronization model. The effect due to uncertainties in \(m_{\mathrm{t}}\) is estimated using simulations with altered top quark masses. 
The cross section difference observed for an \(m_{\mathrm{t}}\) variation of 1\(\,\text {GeV}\) around the central value of 172.5\(\,\text {GeV}\) used in the simulation is quoted as the uncertainty.

The total systematic uncertainty is estimated by adding all the contributions described above in quadrature, separately for positive and negative cross section variations. If a systematic uncertainty results in two cross section variations of the same sign, the largest one is taken, while the opposite variation is set to zero.
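The combination rule can be sketched as follows. Each source is represented by the pair of cross section shifts obtained from its two variations, relative to the nominal result in a given bin; the function name and the numerical values are hypothetical.

```python
import math


def combine_systematics(shifts):
    """Combine per-source shifts into (positive, negative) totals.

    shifts is a list of (shift_a, shift_b) pairs, one pair per source.
    If both shifts of a source have the same sign, only the larger one
    (in magnitude) contributes and the opposite direction receives zero.
    """
    up_squared = 0.0
    down_squared = 0.0
    for shift_a, shift_b in shifts:
        positive = max(shift_a, shift_b, 0.0)
        negative = min(shift_a, shift_b, 0.0)
        up_squared += positive ** 2
        down_squared += negative ** 2
    return math.sqrt(up_squared), math.sqrt(down_squared)


example_shifts = [
    (0.03, -0.04),   # two-sided source
    (0.02, 0.01),    # both variations positive: 0.02 is kept, 0 for negative
    (-0.01, -0.03),  # both variations negative: -0.03 is kept, 0 for positive
]
total_up, total_down = combine_systematics(example_shifts)
```

In this example the positive total is built from 0.03 and 0.02, and the negative total from 0.04 and 0.03, each summed in quadrature.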

8 Results

Normalized differential \(\mathrm{t}\overline{\mathrm{t}}\) cross sections are measured as a function of pairs of variables representing the kinematics of the top quark (only the top quark is taken and not the top antiquark, thus avoiding any double counting of events), and \(\mathrm{t}\overline{\mathrm{t}}\) system, defined in Sect. 1: \([p_{\mathrm {T}} (\mathrm{t}), y(\mathrm{t}) ]\), \([y(\mathrm{t}), M(\mathrm{t}\overline{\mathrm{t}}) ]\), \([y(\mathrm{t}\overline{\mathrm{t}}), M(\mathrm{t}\overline{\mathrm{t}}) ]\), \([\varDelta \eta (\mathrm{t},\overline{\mathrm{t}}), M(\mathrm{t}\overline{\mathrm{t}}) ]\), \([p_{\mathrm {T}} (\mathrm{t}\overline{\mathrm{t}}), M(\mathrm{t}\overline{\mathrm{t}}) ]\), and \([\varDelta \phi (\mathrm{t},\overline{\mathrm{t}}), M(\mathrm{t}\overline{\mathrm{t}}) ]\). These pairs are chosen in order to obtain representative combinations that are sensitive to different aspects of the \(\mathrm{t}\overline{\mathrm{t}}\) production dynamics, as will be discussed in the following.

In general, the systematic uncertainties are of similar size to the statistical uncertainties. The dominant systematic uncertainties are those in the signal modelling, which are also affected by the statistical uncertainties in the simulated samples that are used for the evaluation of these uncertainties. The largest experimental systematic uncertainty is the jet energy scale. The measured double-differential normalized \(\mathrm{t}\overline{\mathrm{t}}\) cross sections are compared in Figs. 2–13 to theoretical predictions obtained using different MC generators and fixed-order QCD calculations. The numerical values of the measured cross sections and their uncertainties are provided in Appendix A.

8.1 Comparison to MC models

In Fig. 2, the \(p_{\mathrm {T}} (\mathrm{t})\) distribution is compared in different ranges of \(|y(\mathrm{t}) |\) to predictions from MadGraph +pythia 6, powheg +pythia 6, powheg +herwig 6, and mc@nlo +herwig 6. The data distribution is softer than that of the MC expectation over almost the entire \(y(\mathrm{t}) \) range, except at high \(|y(\mathrm{t}) |\) values. The disagreement is strongest for MadGraph +pythia 6, while powheg +herwig 6 describes the data best.

Comparison of the measured normalized \(\mathrm{t}\overline{\mathrm{t}}\) double-differential cross section as a function of \(p_{\mathrm {T}} (\mathrm{t})\) in different \(|y(\mathrm{t}) |\) ranges to MC predictions calculated using MadGraph +pythia 6, powheg +pythia 6, powheg +herwig 6, and mc@nlo +herwig 6. The inner vertical bars on the data points represent the statistical uncertainties and the full bars include also the systematic uncertainties added in quadrature. In the bottom panel, the ratios of the data and other simulations to the MadGraph +pythia 6 (MG+P) predictions are shown

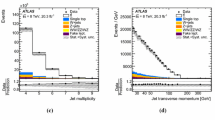

Figures 3 and 4 illustrate the distributions of \(|y(\mathrm{t}) |\) and \(|y(\mathrm{t}\overline{\mathrm{t}}) |\) in different \(M(\mathrm{t}\overline{\mathrm{t}})\) ranges compared to the same set of MC models. While the agreement between the data and MC predictions is good in the lower ranges of \(M(\mathrm{t}\overline{\mathrm{t}})\), the simulation starts to deviate from the data at higher \(M(\mathrm{t}\overline{\mathrm{t}})\), where the predictions are more central than the data for \(y(\mathrm{t})\) and less central for \(y(\mathrm{t}\overline{\mathrm{t}})\).

Comparison of the measured normalized \(\mathrm{t}\overline{\mathrm{t}}\) double-differential cross section as a function of \(|y(\mathrm{t}) |\) in different \(M(\mathrm{t}\overline{\mathrm{t}})\) ranges to MC predictions. Details can be found in the caption of Fig. 2

Comparison of the measured normalized \(\mathrm{t}\overline{\mathrm{t}}\) double-differential cross section as a function of \(|y(\mathrm{t}\overline{\mathrm{t}}) |\) in different \(M(\mathrm{t}\overline{\mathrm{t}})\) ranges to MC predictions. Details can be found in the caption of Fig. 2

In Fig. 5, the \(\varDelta \eta (\mathrm{t},\overline{\mathrm{t}})\) distribution is compared in the same \(M(\mathrm{t}\overline{\mathrm{t}})\) ranges to the MC predictions. For all generators there is a discrepancy between the data and simulation for the medium \(M(\mathrm{t}\overline{\mathrm{t}})\) bins, where the predicted \(\varDelta \eta (\mathrm{t},\overline{\mathrm{t}})\) values are too low. The disagreement is the strongest for MadGraph +pythia 6.

Comparison of the measured normalized \(\mathrm{t}\overline{\mathrm{t}}\) double-differential cross section as a function of \(\varDelta \eta (\mathrm{t},\overline{\mathrm{t}})\) in different \(M(\mathrm{t}\overline{\mathrm{t}})\) ranges to MC predictions. Details can be found in the caption of Fig. 2

Figures 6 and 7 illustrate the comparison of the distributions of \(p_{\mathrm {T}} (\mathrm{t}\overline{\mathrm{t}})\) and \(\varDelta \phi (\mathrm{t},\overline{\mathrm{t}})\) in the same \(M(\mathrm{t}\overline{\mathrm{t}})\) ranges to the MC models. For the \(p_{\mathrm {T}} (\mathrm{t}\overline{\mathrm{t}})\) distribution (Fig. 6), which is sensitive to radiation, none of the MC generators provides a good description. The largest differences are observed between the data and powheg +pythia 6 for the highest values of \(p_{\mathrm {T}} (\mathrm{t}\overline{\mathrm{t}})\), where the predictions lie above the data. For the \(\varDelta \phi (\mathrm{t},\overline{\mathrm{t}})\) distribution (Fig. 7), all MC models describe the data reasonably well.

Comparison of the measured normalized \(\mathrm{t}\overline{\mathrm{t}}\) double-differential cross section as a function of \(p_{\mathrm {T}} (\mathrm{t}\overline{\mathrm{t}})\) in different \(M(\mathrm{t}\overline{\mathrm{t}})\) ranges to MC predictions. Details can be found in the caption of Fig. 2

Comparison of the measured normalized \(\mathrm{t}\overline{\mathrm{t}}\) double-differential cross section as a function of \(\varDelta \phi (\mathrm{t},\overline{\mathrm{t}})\) in different \(M(\mathrm{t}\overline{\mathrm{t}})\) ranges to MC predictions. Details can be found in the caption of Fig. 2

To perform a quantitative comparison of the measured cross sections to all considered MC generators, \(\chi ^2\) values are calculated as follows:

\[\chi ^2 = \mathbf {R}^{T}_{N-1}\, \mathbf {Cov}^{-1}_{N-1}\, \mathbf {R}_{N-1}, \tag{4}\]

where \(\mathbf {R}_{N-1}\) is the column vector of the residuals calculated as the difference of the measured cross sections and the corresponding predictions obtained by discarding one of the N bins, and \(\mathbf {Cov}_{N-1}\) is the \((N-1)\times (N-1)\) submatrix obtained from the full covariance matrix by discarding the corresponding row and column. The matrix \(\mathbf {Cov}_{N-1}\) obtained in this way is invertible, while the original covariance matrix \(\mathbf {Cov}\) is singular. This is because for normalized cross sections one loses one degree of freedom, as can be deduced from Eq. (3). The covariance matrix \(\mathbf {Cov}\) is calculated as:

\[\mathbf {Cov} = \mathbf {Cov}^\text {unf} + \mathbf {Cov}^\mathrm{{syst}}, \tag{5}\]

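where \(\mathbf {Cov}^\text {unf}\) and \(\mathbf {Cov}^\mathrm{{syst}}\) are the covariance matrices accounting for the statistical uncertainties from the unfolding, and the systematic uncertainties, respectively. The systematic covariance matrix \(\mathbf {Cov}^\mathrm{{syst}}\) is calculated as:

\[\mathbf {Cov}^\mathrm{{syst}}_{ij} = \sum _{k} C_{i,k}\, C_{j,k} + \frac{1}{2} \sum _{k'} \left( C^{+}_{i,k'}\, C^{+}_{j,k'} + C^{-}_{i,k'}\, C^{-}_{j,k'} \right), \tag{6}\]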

where \(C_{i,k}\) stands for the systematic uncertainty from source k in the ith bin, which consists of one variation only, and \(C^{+}_{i,k'}\) and \(C^{-}_{i,k'}\) stand for the positive and negative variations, respectively, of the systematic uncertainty due to source \(k'\) in the ith bin. The sums run over all sources of the corresponding systematic uncertainties. All systematic uncertainties are treated as additive, i.e. the relative uncertainties are used to scale the corresponding measured value in the construction of \(\mathbf {Cov}^\mathrm{{syst}}\). This treatment is consistent with the cross section normalization. The cross section measurements for different pairs of observables are statistically and systematically correlated. No attempt is made to quantify the correlations between bins from different double-differential distributions. Thus, quantitative comparisons between theoretical predictions and the data can only be made for individual distributions.
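The construction of the \(\chi ^2\) for a normalized distribution can be illustrated with a short, dependency-free sketch. All function names are hypothetical, and the treatment of two-sided sources as the average of the outer products of the positive and negative variations is an assumption of this sketch. The one-bin removal follows the description above; by default the last bin is dropped.

```python
def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x

def syst_covariance(n, one_sided, two_sided):
    """one_sided: list of shift vectors; two_sided: list of (up, down) vectors."""
    cov = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            for c in one_sided:
                cov[i][j] += c[i] * c[j]
            for up, down in two_sided:
                # Assumption: average of the up and down outer products.
                cov[i][j] += 0.5 * (up[i] * up[j] + down[i] * down[j])
    return cov

def chi2_normalized(data, pred, cov, drop=None):
    """chi2 = R^T Cov^-1 R after discarding one bin (default: the last)."""
    n = len(data)
    drop = n - 1 if drop is None else drop
    keep = [i for i in range(n) if i != drop]
    r = [data[i] - pred[i] for i in keep]
    sub = [[cov[i][j] for j in keep] for i in keep]
    y = solve(sub, r)  # y = Cov_{N-1}^{-1} R_{N-1}
    return sum(ri * yi for ri, yi in zip(r, y))
```

In practice a linear-algebra library would replace the hand-written solver; it is spelled out here only to keep the sketch self-contained.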

The obtained \(\chi ^2\) values, together with the corresponding numbers of degrees of freedom (dof), are listed in Table 1. From these values one can conclude that none of the considered MC generators is able to correctly describe all distributions. In particular, for \([\varDelta \eta (\mathrm{t},\overline{\mathrm{t}}), M(\mathrm{t}\overline{\mathrm{t}}) ]\) and \([p_{\mathrm {T}} (\mathrm{t}\overline{\mathrm{t}}), M(\mathrm{t}\overline{\mathrm{t}}) ]\), the \(\chi ^2\) values are relatively large for all MC generators. The best agreement with the data is provided by powheg +herwig 6.

8.2 Comparison to fixed-order calculations

Fixed-order theoretical calculations for fully differential cross sections in inclusive \(\mathrm{t}\overline{\mathrm{t}}\) production are publicly available at NLO \(O(\alpha _s^3)\) in the fixed-flavour number scheme [18], where \(\alpha _s\) is the strong coupling strength. The exact fully differential NNLO \(O(\alpha _s^4)\) calculations for \(\mathrm{t}\overline{\mathrm{t}}\) production have recently appeared in the literature [56, 57], but are not yet publicly available. For higher orders, the cross sections as functions of single-particle kinematic variables have been calculated at approximate NNLO \(O(\alpha _s^4)\) [19] and next-to-next-to-next-to-leading-order \(O(\alpha _s^5)\) [58], using methods of threshold resummation beyond the leading-logarithmic accuracy.

The measured cross sections are compared with NLO QCD predictions based on several PDF sets. The predictions are calculated using the mcfm program (version 6.8) [59] and a number of the latest PDF sets, namely: ABM11 [60], CJ15 [61], CT14 [62], HERAPDF2.0 [63], JR14 [64], MMHT2014 [65], and NNPDF3.0 [66], available via the lhapdf interface (version 6.1.5) [67]. The number of active flavours is set to \(n_f = 5\) and the top quark pole mass \(m_{\mathrm{t}} = 172.5\) \(\,\text {GeV}\) is used. The effect of using \(n_f = 6\) in the PDF evolution, i.e. treating the top quark as a massless parton above threshold (as was done, e.g. in HERAPDF2.0 [63]), has been checked and the differences were found to be \({<}0.1\%\) (also see the corresponding discussion in Ref. [66]). The renormalization and factorization scales are chosen to be \(\mu _\mathrm {r} = \mu _\mathrm {f} = \sqrt{\smash [b]{m_{\mathrm{t}}^2+[p_{\mathrm {T}} ^2({\mathrm{t}})+p_{\mathrm {T}} ^2(\overline{\mathrm{t}})]/2}}\), whereas \(\alpha _s\) is set to the value used for the corresponding PDF extraction. The theoretical uncertainty is estimated by varying \(\mu _\mathrm {r}\) and \(\mu _\mathrm {f}\) independently up and down by a factor of 2, subject to the additional restriction that the ratio \(\mu _\mathrm {r} / \mu _\mathrm {f}\) be between 0.5 and 2 [68] (referred to hereafter as scale uncertainties). These uncertainties are supposed to estimate the missing higher-order corrections. The PDF uncertainties are taken into account in the theoretical predictions for each PDF set. The PDF uncertainties of CJ15 [61] and CT14 [62], evaluated at 90% CL, are rescaled to the 68% CL. The uncertainties in the normalized \(\mathrm{t}\overline{\mathrm{t}}\) cross sections originating from \(\alpha _s\) and \(m_{\mathrm{t}}\) are found to be negligible (\({<}1\%\)) compared to the current data precision and thus are not considered.
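The scale choice and the restricted scale variations described above can be sketched as follows. The helper names are hypothetical, `predict` stands for any user-supplied function returning a cross section for given \(\mu _\mathrm {r}\) and \(\mu _\mathrm {f}\), and taking the envelope of the variations as the uncertainty is an assumption of this sketch rather than a statement about the mcfm setup.

```python
import math
from itertools import product

def dynamic_scale(pt_top, pt_antitop, m_top=172.5):
    """mu = sqrt(m_t^2 + [pT^2(t) + pT^2(tbar)] / 2), all in GeV."""
    return math.sqrt(m_top ** 2 + (pt_top ** 2 + pt_antitop ** 2) / 2.0)

def scale_variations(factors=(0.5, 1.0, 2.0)):
    """Multipliers (k_r, k_f) with 0.5 <= k_r / k_f <= 2 (nominal included)."""
    return [(kr, kf) for kr, kf in product(factors, repeat=2)
            if 0.5 <= kr / kf <= 2.0]

def scale_envelope(predict, mu0):
    """Upward and downward envelope of predict(mu_r, mu_f) around mu0."""
    nominal = predict(mu0, mu0)
    values = [predict(kr * mu0, kf * mu0) for kr, kf in scale_variations()]
    return max(values) - nominal, min(values) - nominal
```

The ratio restriction removes the two combinations in which the scales are shifted in opposite directions, leaving seven points including the nominal one.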

For the double-differential cross section as a function of \(p_{\mathrm {T}} (\mathrm{t})\) and \(y(\mathrm{t})\), approximate NNLO predictions [19] are obtained using the DiffTop program [4, 69, 70]. In this calculation, the scales are set to \(\mu _\mathrm {r} = \mu _\mathrm {f} = \sqrt{\smash [b]{m_{\mathrm{t}}^2+p_{\mathrm {T}} ^2({\mathrm{t}})}}\) and NNLO variants of the PDF sets are used. For the ABM PDFs, the recent version ABM12 [71] is used, which is available only at NNLO. Predictions using DiffTop are not available for the rest of the measured cross sections that involve \(\mathrm{t}\overline{\mathrm{t}}\) kinematic variables.

A quantitative comparison of the measured double-differential cross sections to the theoretical predictions is performed by evaluating the \(\chi ^2\) values, as described in Sect. 8.1. The results are listed in Tables 2 and 3 for the NLO and approximate NNLO calculations, respectively. For the NLO predictions, additional \(\chi ^2\) values are reported including the corresponding PDF uncertainties, i.e. Eq. (5) becomes \(\mathbf {Cov} = \mathbf {Cov}^\text {unf} + \mathbf {Cov}^\text {syst} + \mathbf {Cov}^\mathrm {PDF}\), where \(\mathbf {Cov}^\mathrm {PDF}\) is a covariance matrix that accounts for the PDF uncertainties. Theoretical uncertainties from scale variations are not included in this \(\chi ^2\) calculation. The NLO predictions with recent global PDFs using LHC data, namely MMHT2014, CT14, and NNPDF3.0, are found to describe the \(p_{\mathrm {T}} (\mathrm{t})\), \(y(\mathrm{t})\), and \(y(\mathrm{t}\overline{\mathrm{t}})\) cross sections reasonably, as illustrated by the \(\chi ^2\) values. The CJ15 PDF set also provides a good description of these cross sections, although it does not include LHC data [61]. The ABM11, JR14, and HERAPDF2.0 sets yield a poorer description of the data. Large differences between the data and the nominal NLO calculations are observed for the \(\varDelta \eta (\mathrm{t},\overline{\mathrm{t}})\), \(p_{\mathrm {T}} (\mathrm{t}\overline{\mathrm{t}})\), and \(\varDelta \phi (\mathrm{t},\overline{\mathrm{t}})\) cross sections. It is noteworthy that the scale uncertainties in the predictions, which are of comparable size or exceed the experimental uncertainties, are not taken into account in the \(\chi ^2\) calculations. The \(p_{\mathrm {T}} (\mathrm{t}\overline{\mathrm{t}})\) and \(\varDelta \phi (\mathrm{t},\overline{\mathrm{t}})\) normalized cross sections are represented at LO \(O(\alpha _s^2)\) by delta functions, and nontrivial shapes appear at \(O(\alpha _s^3)\), thus resulting in large NLO scale uncertainties [18]. 
Compared to the NLO predictions, the approximate NNLO predictions using NNLO PDF sets (where available) provide an improved description of the \(p_{\mathrm {T}} (\mathrm{t})\) cross sections in different \(|y(\mathrm{t}) |\) ranges.

To visualize the comparison of the measurements to the theoretical predictions, the results obtained using the NLO and approximate NNLO calculations with the CT14 PDF set are compared to the measured \(p_{\mathrm {T}} (\mathrm{t})\) cross sections in different \(|y(\mathrm{t}) |\) ranges in Fig. 8. To further illustrate the sensitivity to PDFs, the nominal values of the NLO predictions using HERAPDF2.0 are shown as well. Similar comparisons, in regions of \(M(\mathrm{t}\overline{\mathrm{t}})\), for the \(|y(\mathrm{t}) |\), \(|y(\mathrm{t}\overline{\mathrm{t}}) |\), \(\varDelta \eta (\mathrm{t},\overline{\mathrm{t}})\), \(p_{\mathrm {T}} (\mathrm{t}\overline{\mathrm{t}})\), and \(\varDelta \phi (\mathrm{t},\overline{\mathrm{t}})\) cross sections are presented in Figs. 9–13. Considering the scale uncertainties in the predictions, the agreement between the measurement and predictions is reasonable for all distributions. For the \(p_{\mathrm {T}} (\mathrm{t})\), \(y(\mathrm{t})\), and \(y(\mathrm{t}\overline{\mathrm{t}})\) cross sections, the scale uncertainties in the predictions reach at most 4%. They increase to 8% for the \(\varDelta \eta (\mathrm{t},\overline{\mathrm{t}})\) cross section, and vary within \(20\text {--}50\%\) for the \(p_{\mathrm {T}} (\mathrm{t}\overline{\mathrm{t}})\) and \(\varDelta \phi (\mathrm{t},\overline{\mathrm{t}})\) cross sections, where larger differences between data and predictions are observed. For the \(p_{\mathrm {T}} (\mathrm{t})\), \(y(\mathrm{t})\), and \(y(\mathrm{t}\overline{\mathrm{t}})\) cross sections, the PDF uncertainties as estimated from the CT14 PDF set are of the same size as or larger than the scale uncertainties. The HERAPDF2.0 predictions are mostly outside the total CT14 uncertainty band, showing also some visible shape differences with respect to CT14. The approximate NNLO predictions provide an improved description of the \(p_{\mathrm {T}} (\mathrm{t})\) shape.

Comparison of the measured normalized \(\mathrm{t}\overline{\mathrm{t}}\) double-differential cross section as a function of \(p_{\mathrm {T}} (\mathrm{t})\) in different \(|y(\mathrm{t}) |\) ranges to NLO \(O(\alpha _s^3)\) (MNR) predictions calculated with CT14 and HERAPDF2.0, and approximate NNLO \(O(\alpha _s^4)\) (DiffTop) prediction calculated with CT14. The inner vertical bars on the data points represent the statistical uncertainties and the full bars include also the systematic uncertainties added in quadrature. The light band shows the scale uncertainties (\(\mu \)) for the NLO predictions using CT14, while the dark band includes also the PDF uncertainties added in quadrature (\(\mu + \mathrm {PDF}\)). The dotted line shows the NLO predictions calculated with HERAPDF2.0. The dashed line shows the approximate NNLO predictions calculated with CT14. In the bottom panel, the ratios of the data and other calculations to the NLO prediction using CT14 are shown

Comparison of the measured normalized \(\mathrm{t}\overline{\mathrm{t}}\) double-differential cross section as a function of \(|y(\mathrm{t}) |\) in different \(M(\mathrm{t}\overline{\mathrm{t}})\) ranges to NLO \(O(\alpha _s^3)\) predictions. Details can be found in the caption of Fig. 8. Approximate NNLO \(O(\alpha _s^4)\) predictions are not available for this cross section

Comparison of the measured normalized \(\mathrm{t}\overline{\mathrm{t}}\) double-differential cross section as a function of \(|y(\mathrm{t}\overline{\mathrm{t}}) |\) in different \(M(\mathrm{t}\overline{\mathrm{t}})\) ranges to NLO \(O(\alpha _s^3)\) predictions. Details can be found in the caption of Fig. 8. Approximate NNLO \(O(\alpha _s^4)\) predictions are not available for this cross section

Comparison of the measured normalized \(\mathrm{t}\overline{\mathrm{t}}\) double-differential cross section as a function of \(\varDelta \eta (\mathrm{t},\overline{\mathrm{t}})\) in different \(M(\mathrm{t}\overline{\mathrm{t}})\) ranges to NLO \(O(\alpha _s^3)\) predictions. Details can be found in the caption of Fig. 8. Approximate NNLO \(O(\alpha _s^4)\) predictions are not available for this cross section

Comparison of the measured normalized \(\mathrm{t}\overline{\mathrm{t}}\) double-differential cross section as a function of \(p_{\mathrm {T}} (\mathrm{t}\overline{\mathrm{t}})\) in different \(M(\mathrm{t}\overline{\mathrm{t}})\) ranges to NLO \(O(\alpha _s^3)\) predictions. Details can be found in the caption of Fig. 8. Approximate NNLO \(O(\alpha _s^4)\) predictions are not available for this cross section

Comparison of the measured normalized \(\mathrm{t}\overline{\mathrm{t}}\) double-differential cross section as a function of \(\varDelta \phi (\mathrm{t},\overline{\mathrm{t}})\) in different \(M(\mathrm{t}\overline{\mathrm{t}})\) ranges to NLO \(O(\alpha _s^3)\) predictions. Details can be found in the caption of Fig. 8. Approximate NNLO \(O(\alpha _s^4)\) predictions are not available for this cross section

The data-to-theory comparisons illustrate the power of the measured normalized cross sections as a function of \([p_{\mathrm {T}} (\mathrm{t}), y(\mathrm{t}) ]\), \([y(\mathrm{t}), M(\mathrm{t}\overline{\mathrm{t}}) ]\), and \([y(\mathrm{t}\overline{\mathrm{t}}), M(\mathrm{t}\overline{\mathrm{t}}) ]\) to eventually distinguish between modern PDF sets. Such a study is performed on these data and described in the next section. The remaining measured normalized cross sections as a function of \([\varDelta \eta (\mathrm{t},\overline{\mathrm{t}}), M(\mathrm{t}\overline{\mathrm{t}}) ]\), \([p_{\mathrm {T}} (\mathrm{t}\overline{\mathrm{t}}), M(\mathrm{t}\overline{\mathrm{t}}) ]\), and \([\varDelta \phi (\mathrm{t},\overline{\mathrm{t}}), M(\mathrm{t}\overline{\mathrm{t}}) ]\) could be used for this purpose as well, once higher-order QCD calculations become publicly available to match the data precision. Moreover, since the latter distributions are more sensitive to QCD radiation, they will provide additional input in testing improvements to the perturbative calculations.

9 The PDF fit

The double-differential normalized \(\mathrm{t}\overline{\mathrm{t}}\) cross sections are used in a PDF fit at NLO, together with the combined HERA inclusive deep inelastic scattering (DIS) data [63] and the CMS measurement of the \(\mathrm {W}^\pm \) boson charge asymmetry at \(\sqrt{s} = 8\) \(\,\text {TeV}\) [72]. The fitted PDFs are also compared to the ones obtained in the recently published CMS measurement of inclusive jet production at 8\(\,\text {TeV}\) [8]. The xFitter program (formerly known as HERAFitter) [73] (version 1.2.0), an open-source QCD fit framework for PDF determination, is used. The precise HERA DIS data, obtained from the combination of individual H1 and ZEUS results, are directly sensitive to the valence and sea quark distributions and probe the gluon distribution through scaling violations. Therefore, these data form the core of all PDF fits. The CMS \(\mathrm {W}^\pm \) boson charge asymmetry data provide further constraints on the valence quark distributions, as discussed in Ref. [72]. The measured double-differential normalized \(\mathrm{t}\overline{\mathrm{t}}\) cross sections are included in the fit to constrain the gluon distribution at high x values. The typical probed x values can be estimated using the LO kinematic relation \(x = (M(\mathrm{t}\overline{\mathrm{t}})/\sqrt{s})\exp {[\pm y(\mathrm{t}\overline{\mathrm{t}})]}\). Therefore, the present measurement is expected to be sensitive to x values in the region \(0.01 \lesssim x \lesssim 0.25\), as estimated using the highest or lowest \(|y(\mathrm{t}\overline{\mathrm{t}}) |\) or \(M(\mathrm{t}\overline{\mathrm{t}})\) bins and taking the low or high bin edge where the cross section is largest (see Table 11).
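The LO kinematic relation quoted above is easily evaluated numerically. The sketch below uses a hypothetical helper name and illustrative input values; the inputs are not the bin edges of Table 11.

```python
import math

def probed_x(m_ttbar, y_ttbar, sqrt_s=8000.0):
    """LO parton momentum fractions x = (M / sqrt(s)) * exp(+-y).

    m_ttbar and sqrt_s are in GeV; returns the pair (x_plus, x_minus).
    """
    tau = m_ttbar / sqrt_s
    return tau * math.exp(y_ttbar), tau * math.exp(-y_ttbar)

# Illustrative values only: a ttbar system of mass 400 GeV at rapidity 1.
x_plus, x_minus = probed_x(400.0, 1.0)
```

At central rapidity both fractions equal \(M(\mathrm{t}\overline{\mathrm{t}})/\sqrt{s}\), whereas a boosted system probes one larger and one smaller x value simultaneously.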

9.1 Details of the PDF fit

The scale evolution of partons is calculated through DGLAP equations [74,75,76,77,78,79,80] at NLO, as implemented in the qcdnum program [81] (version 17.01.11). The Thorne–Roberts [82,83,84] variable-flavour number scheme at NLO is used for the treatment of the heavy-quark contributions. The number of flavours is set to 5, with c and b quark mass parameters \(M_{\mathrm{c}}= 1.47\) \(\,\text {GeV}\) and \(M_{\mathrm{b}} = 4.5\) \(\,\text {GeV}\) [63]. The theoretical predictions for the \(\mathrm {W}^\pm \) boson charge asymmetry data are calculated at NLO [85] using the mcfm program, which is interfaced with ApplGrid (version 1.4.70) [86], as described in Ref. [72]. For the DIS and \(\mathrm {W}^\pm \) boson charge asymmetry data \(\mu _\mathrm {r}\) and \(\mu _\mathrm {f}\) are set to Q, which denotes the four-momentum transfer in the case of the DIS data, and the mass of the \(\mathrm {W}^{\pm }\) boson in the case of the \(\mathrm {W}^\pm \) boson charge asymmetry. The theoretical predictions for the \(\mathrm{t}\overline{\mathrm{t}}\) cross sections are calculated as described in Sect. 8.2 and included in the fit using the mcfm and ApplGrid programs. The strong coupling strength is set to \(\alpha _s(m_{\mathrm{Z}}) = 0.118\). The \(Q^2\) range of the HERA data is restricted to \(Q^2 > Q^2_\text {min} = 3.5\,\text {GeV} ^2\) [63].

The procedure for the determination of the PDFs follows the approach of HERAPDF2.0 [63]. The parametrized PDFs are the gluon distribution \(x\mathrm{g} (x)\), the valence quark distributions \(x\mathrm{u}_v(x)\) and \(x\mathrm{d}_v(x)\), and the \(\mathrm{u}\)- and \(\mathrm{d}\)-type antiquark distributions \(x\overline{U}(x)\) and \(x\overline{D}(x)\). At the initial QCD evolution scale \(\mu _\mathrm {f0}^2 = 1.9\,\text {GeV} ^2\), the PDFs are parametrized as:

assuming the relations \(x\overline{U}(x) = x\overline{\mathrm{u}}(x)\) and \(x\overline{D}(x) = x\overline{\mathrm{d}}(x) + x\overline{\mathrm{s}}(x)\). Here, \(x\overline{\mathrm{u}}(x)\), \(x\overline{\mathrm{d}}(x)\), and \(x\overline{\mathrm{s}}(x)\) are the up, down, and strange antiquark distributions, respectively. The sea quark distribution is defined as \(x\varSigma (x)=x\overline{\mathrm{u}}(x)+x\overline{\mathrm{d}}(x)+x \overline{\mathrm{s}}(x)\). The normalization parameters \(A_{\mathrm{u}_{{v}}}\), \(A_{\mathrm{d}_{v}}\), and \(A_{\mathrm{g}}\) are determined by the QCD sum rules. The B and \(B'\) parameters determine the PDFs at small x, and the C parameters describe the shape of the distributions as \(x\,{\rightarrow }\,1\). The parameter \(C'_{\mathrm{g}}\) is fixed to 25 [87]. Additional constraints \(B_{\overline{{U}}} = B_{\overline{{D}}}\) and \(A_{\overline{{U}}} = A_{\overline{{D}}}(1 - f_{\mathrm{s}})\) are imposed to ensure the same normalization for the \(x\overline{\mathrm{u}}\) and \(x\overline{\mathrm{d}}\) distributions as \(x \rightarrow 0\). The strangeness fraction \(f_{\mathrm{s}} = x\overline{\mathrm{s}}/( x\overline{\mathrm{d}}+ x\overline{\mathrm{s}})\) is fixed to \(f_{\mathrm{s}}=0.4\) as in the HERAPDF2.0 analysis [63]. This value is consistent with the determination of the strangeness fraction when using the CMS measurements of \(\mathrm {W}+{\mathrm{c}}\) production [88].
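The roles of the parameters described above can be illustrated with a generic functional form of the HERAPDF type. This is a sketch under assumptions: the polynomial factor \(1 + Dx + Ex^2 + Fx^3\) and the subtracted gluon term are taken as the generic form, the function names are hypothetical, and no statement is made about which D, E, and F parameters are free for each distribution in the actual fit.

```python
def pdf_shape(x, A, B, C, D=0.0, E=0.0, F=0.0):
    """Generic form x f(x) = A x^B (1 - x)^C (1 + D x + E x^2 + F x^3).

    B controls the small-x behaviour and C the shape as x -> 1.
    """
    return A * x ** B * (1.0 - x) ** C * (1.0 + D * x + E * x ** 2 + F * x ** 3)

def gluon_shape(x, A, B, C, A_prime, B_prime, C_prime=25.0, **poly):
    """Assumed gluon form: generic term minus A' x^B' (1 - x)^C'.

    C_prime defaults to 25, the fixed value quoted in the text.
    """
    return pdf_shape(x, A, B, C, **poly) - A_prime * x ** B_prime * (1.0 - x) ** C_prime

def ubar_normalization(A_Dbar, f_s=0.4):
    """Constraint A_Ubar = A_Dbar (1 - f_s) imposed in the fit."""
    return A_Dbar * (1.0 - f_s)
```

With the large fixed exponent \(C'_{\mathrm{g}} = 25\), the subtracted term is strongly suppressed at high x, so it affects only the small-x gluon.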

The parameters in Eq. (7) are selected by first fitting with all D, E, and F parameters set to zero, and then including them independently one at a time in the fit. The improvement in the \(\chi ^2\) of the fit is monitored and the procedure is stopped when no further improvement is observed. This leads to an 18-parameter fit. The \(\chi ^2\) definition used for the HERA DIS data follows that of Eq. (32) in Ref. [63]. It includes an additional logarithmic term that is relevant when the estimated statistical and uncorrelated systematic uncertainties in the data are rescaled during the fit [89]. For the CMS \(\mathrm {W}^\pm \) boson charge asymmetry and \(\mathrm{t}\overline{\mathrm{t}}\) data presented here a \(\chi ^2\) definition without such a logarithmic term is employed. The full covariance matrix representing the statistical and uncorrelated systematic uncertainties of the data is used in the fit. The correlated systematic uncertainties are treated through nuisance parameters. For each nuisance parameter a penalty term is added to the \(\chi ^2\), representing the prior knowledge of the parameter. The treatment of the experimental uncertainties for the HERA DIS and CMS \(\mathrm {W}^\pm \) boson charge asymmetry data follows the prescription given in Refs. [63] and [72], respectively. The treatment of the experimental uncertainties in the \(\mathrm{t}\overline{\mathrm{t}}\) double-differential cross section measurements follows the prescription given in Sect. 8.1. The experimental systematic uncertainties owing to the PDFs are omitted in the PDF fit.
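The structure of a \(\chi ^2\) with nuisance parameters and penalty terms can be sketched as follows. The function name, the additive form of the correlated shifts, and their sign convention are assumptions made for illustration; the sketch takes the inverse of the covariance matrix of the statistical and uncorrelated systematic uncertainties as a precomputed input and omits the logarithmic term used for the HERA DIS data.

```python
def chi2_with_nuisance(data, theory, inv_cov, gammas, b):
    """chi2(b) = r^T C^-1 r + sum_k b_k^2, with r_i = d_i - t_i - sum_k G_ki b_k.

    inv_cov: inverse covariance of statistical + uncorrelated uncertainties.
    gammas[k][i]: shift of bin i for a one-sigma pull of correlated source k.
    b[k]: value of nuisance parameter k, in units of its prior uncertainty.
    """
    n = len(data)
    r = [data[i] - theory[i] - sum(g[i] * bk for g, bk in zip(gammas, b))
         for i in range(n)]
    core = sum(r[i] * inv_cov[i][j] * r[j] for i in range(n) for j in range(n))
    penalty = sum(bk ** 2 for bk in b)  # prior knowledge of each parameter
    return core + penalty
```

In a fit, the nuisance parameters are varied together with the PDF parameters, so a correlated source can absorb a coherent data-theory difference at the cost of its penalty term.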

The PDF uncertainties are estimated according to the general approach of HERAPDF2.0 [63] in which the fit, model, and parametrization uncertainties are taken into account. Fit uncertainties are determined using the tolerance criterion of \(\varDelta \chi ^2 =1\). Model uncertainties arise from the variations in the values assumed for the b and c quark mass parameters of \(4.25\le M_{\mathrm{b}}\le 4.75\,\text {GeV} \) and \(1.41\le M_{\mathrm{c}}\le 1.53\,\text {GeV} \), the strangeness fraction \(0.3 \le f_{\mathrm{s}} \le 0.4\), and the value of \(Q^2_{\text {min}}\) imposed on the HERA data. The latter is varied within \(2.5 \le Q^2_{\text {min}}\le 5.0\,\text {GeV} ^2\), following Ref. [63]. The parametrization uncertainty is estimated by extending the functional form in Eq. (7) of all parton distributions with additional parameters D, E, and F added one at a time. Furthermore, \(\mu _\mathrm {f0}^2\) is changed to 1.6 and \(2.2\,\text {GeV} ^2\). The parametrization uncertainty is constructed as an envelope at each x value, built from the maximal differences between the PDFs resulting from the central fit and all parametrization variations. This uncertainty is valid in the x range covered by the PDF fit to the data. The total PDF uncertainty is obtained by adding the fit, model, and parametrization uncertainties in quadrature. In the following, the quoted uncertainties correspond to 68% CL.
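The combination of the three uncertainty components at a given x value can be sketched as below. The function name and input layout are hypothetical, and adding the individual model variations in quadrature separately for each sign is an assumption of this sketch; the envelope for the parametrization variations and the final quadrature sum follow the description above.

```python
import math

def total_pdf_uncertainty(central, fit_unc, model_variants, param_variants):
    """Return (up, down) total uncertainties of a PDF at one x value.

    central: PDF value of the central fit.
    fit_unc: symmetric fit uncertainty (Delta chi2 = 1).
    model_variants, param_variants: PDF values from the varied fits.
    """
    model_diffs = [v - central for v in model_variants]
    # Assumption: model variations are added in quadrature, sign by sign.
    model_up = math.sqrt(sum(max(d, 0.0) ** 2 for d in model_diffs))
    model_dn = math.sqrt(sum(min(d, 0.0) ** 2 for d in model_diffs))
    # Parametrization: envelope of the maximal differences.
    param_diffs = [v - central for v in param_variants] + [0.0]
    param_up = max(param_diffs)
    param_dn = -min(param_diffs)
    up = math.sqrt(fit_unc ** 2 + model_up ** 2 + param_up ** 2)
    dn = math.sqrt(fit_unc ** 2 + model_dn ** 2 + param_dn ** 2)
    return up, dn
```

Evaluating this function on a grid of x values yields the asymmetric total uncertainty band of the fitted distribution.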

9.2 Impact of the double-differential \(\mathrm{t}\overline{\mathrm{t}}\) cross section measurements

The PDF fit is first performed using only the HERA DIS and CMS \(\mathrm {W}^\pm \) boson charge asymmetry data. To demonstrate the added value of the double-differential normalized \(\mathrm{t}\overline{\mathrm{t}}\) cross sections, \([p_{\mathrm {T}} (\mathrm{t}), y(\mathrm{t}) ]\), \([y(\mathrm{t}), M(\mathrm{t}\overline{\mathrm{t}}) ]\), and \([y(\mathrm{t}\overline{\mathrm{t}}), M(\mathrm{t}\overline{\mathrm{t}}) ]\) measurements are added to the fit one at a time. The global and partial \(\chi ^2\) values for all variants of the fit are listed in Table 4, illustrating the consistency among the input data. The DIS data show \(\chi ^2\)/dof values slightly larger than unity. This is similar to what is observed and investigated in Ref. [63]. Fit results consistent with those from Ref. [72] are obtained using the \(\mathrm {W}^\pm \) boson charge asymmetry measurements.

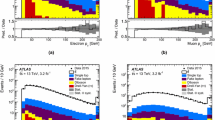

The resulting gluon, valence quark, and sea quark distributions are shown in Fig. 14 at the scale \(\mu _\mathrm{{f}}^2=30{,} 000\,\text {GeV} ^2 \simeq m_{\mathrm{t}}^2\) relevant for \(\mathrm{t}\overline{\mathrm{t}}\) production. For a direct comparison, the distributions for all variants of the fit are normalized to the results from the fit using only the DIS and \(\mathrm {W}^\pm \) boson charge asymmetry data. The reduction of the uncertainties is further illustrated in Fig. 15. The uncertainties in the gluon distribution at \(x>0.01\) are significantly reduced once the \(\mathrm{t}\overline{\mathrm{t}}\) data are included in the fit. The largest improvement comes from the \([y(\mathrm{t}\overline{\mathrm{t}}), M(\mathrm{t}\overline{\mathrm{t}}) ]\) cross section by which the total gluon PDF uncertainty is reduced by more than a factor of two at \(x \simeq 0.3\). This value of x is at the edge of kinematic reach of the current \(\mathrm{t}\overline{\mathrm{t}}\) measurement. At higher values \(x \gtrsim 0.3\), the gluon distribution is not directly constrained by the data and should be considered as an extrapolation that relies on the PDF parametrization assumptions. No substantial effects on the valence quark and sea quark distributions are observed. The variation of \(\mu _\mathrm {r}\) and \(\mu _\mathrm {f}\) in the prediction of the normalized \(\mathrm{t}\overline{\mathrm{t}}\) cross sections has been performed and the effect on the fitted PDFs is found to be well within the total uncertainty.

The gluon (upper left), sea quark (upper right), u valence quark (lower left), and d valence quark (lower right) PDFs at \(\mu _\mathrm{{f}}^2=30{,} 000\,\text {GeV} ^2\), as obtained in all variants of the PDF fit, normalized to the results from the fit using the HERA DIS and CMS \(\mathrm {W}^\pm \) boson charge asymmetry measurements only. The shaded, hatched, and dotted areas represent the total uncertainty in each of the fits

The gluon distribution obtained from fitting the measured \([y(\mathrm{t}\overline{\mathrm{t}}), M(\mathrm{t}\overline{\mathrm{t}}) ]\) cross section is compared in Fig. 16 to the one obtained in a similar study using the CMS measurement of inclusive jet production at 8\(\,\text {TeV}\) [8]. The two results are in agreement in the probed x range. The constraints provided by the double-differential \(\mathrm{t}\overline{\mathrm{t}}\) measurement are competitive with those from the inclusive jet data.

The gluon distribution at \(\mu _\mathrm{{f}}^2=30{,} 000\,\text {GeV} ^2\), as obtained from the PDF fit to the HERA DIS data and CMS \(\mathrm {W}^\pm \) boson charge asymmetry measurements (shaded area), the CMS inclusive jet production cross sections (hatched area), and the \(\mathrm {W}^\pm \) boson charge asymmetry plus the double-differential \(\mathrm{t}\overline{\mathrm{t}}\) cross section (dotted area). All presented PDFs are normalized to the results from the fit using the DIS and \(\mathrm {W}^\pm \) boson charge asymmetry measurements. The shaded, hatched, and dotted areas represent the total uncertainty in each of the fits

9.3 Comparison to the impact of single-differential \(\mathrm{t}\overline{\mathrm{t}}\) cross section measurements

The power of the double-differential \(\mathrm{t}\overline{\mathrm{t}}\) measurement in fitting PDFs is compared with that of the single-differential analysis, where the \(\mathrm{t}\overline{\mathrm{t}}\) cross section is measured as a function of \(p_{\mathrm {T}} (\mathrm{t})\), \(y(\mathrm{t})\), \(y(\mathrm{t}\overline{\mathrm{t}})\), and \(M(\mathrm{t}\overline{\mathrm{t}})\), employing in one dimension the same procedure described in this paper. The measurements are added, one at a time, to the HERA DIS and CMS \(\mathrm {W}^\pm \) boson charge asymmetry data in the PDF fit. The reduction of the uncertainties for the resulting PDFs is illustrated in Fig. 17. Similar effects are observed from all measurements, with the largest impact coming from \(y(\mathrm{t})\) and \(y(\mathrm{t}\overline{\mathrm{t}})\). For the single-differential \(\mathrm{t}\overline{\mathrm{t}}\) data one can extend the studies using the approximate NNLO calculations [4, 19, 69, 70]. An example, using the \(y(\mathrm{t})\) distribution, is presented in Appendix B.

Fig. 17. The same as in Fig. 15 for the variants of the PDF fit using the single-differential \(\mathrm{t}\overline{\mathrm{t}}\) cross sections.

A comparison of the PDF uncertainties from the double-differential cross section as a function of \([y(\mathrm{t}\overline{\mathrm{t}}), M(\mathrm{t}\overline{\mathrm{t}}) ]\), and single-differential cross section as a function of \(y(\mathrm{t}\overline{\mathrm{t}})\) is presented in Fig. 18. Only the gluon distribution is shown, since no substantial impact on the other distributions is observed (see Figs. 14, 15, 17). The total gluon PDF uncertainty becomes noticeably smaller once the double-differential cross sections are included. The observed improvement motivates future PDF fits at NNLO using the fully differential calculations [56, 57], once they become available.

Fig. 18. Relative total uncertainties of the gluon distribution at \(\mu _\mathrm{{f}}^2=30{,} 000\,\text {GeV} ^2\), shown by shaded (or hatched) bands, as obtained in the PDF fit using the DIS and \(\mathrm {W}^\pm \) boson charge asymmetry data only, as well as single- and double-differential \(\mathrm{t}\overline{\mathrm{t}}\) cross sections.
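The quantity compared in this figure, the relative total uncertainty \(\delta g/g\), can be sketched as follows. This is a minimal illustration that assumes symmetric uncertainties. The helper `relative_uncertainty`, the fit labels, and all numbers are hypothetical toy inputs chosen only to show the arithmetic, not values from the fits.

```python
def relative_uncertainty(central, uncertainty):
    """Return the point-by-point relative uncertainty dg/g (symmetric case)."""
    return [dg / g for g, dg in zip(central, uncertainty)]


# Hypothetical toy inputs: (central values, total uncertainties) of the gluon
# distribution at three x points for three fit variants. Illustrative only.
fits = {
    "DIS + W asymmetry": ([4.0, 2.0, 0.5], [0.4, 0.3, 0.2]),
    "+ single-differential": ([4.0, 2.0, 0.5], [0.36, 0.24, 0.15]),
    "+ double-differential": ([4.0, 2.0, 0.5], [0.32, 0.2, 0.1]),
}

rel = {name: relative_uncertainty(g, dg) for name, (g, dg) in fits.items()}
for name, values in rel.items():
    print(name, [round(v, 3) for v in values])
```

Plotting these lists against x for each fit variant would give bands of the kind shown in the figure, with narrower bands indicating tighter constraints.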

10 Summary

A measurement of normalized double-differential \(\mathrm{t}\overline{\mathrm{t}}\) production cross sections in pp collisions at \(\sqrt{s}=8\,\text {TeV} \) has been presented. The measurement is performed in the \(\mathrm {e}^{\pm }\mu ^{\mp }\) final state, using data collected with the CMS detector at the LHC, corresponding to an integrated luminosity of 19.7\(\,\text {fb}^{-1}\). The normalized \(\mathrm{t}\overline{\mathrm{t}}\) cross section is measured in the full phase space as a function of different pairs of kinematic variables describing the top quark or \(\mathrm{t}\overline{\mathrm{t}}\) system. None of the tested MC models is able to correctly describe all the double-differential distributions. The data exhibit a softer transverse momentum \(p_{\mathrm {T}} (\mathrm{t})\) distribution, compared to the Monte Carlo predictions, as was reported in previous single-differential \(\mathrm{t}\overline{\mathrm{t}}\) cross section measurements. The double-differential studies reveal a broader distribution of rapidity \(y(\mathrm{t})\) at high \(\mathrm{t}\overline{\mathrm{t}}\) invariant mass \(M(\mathrm{t}\overline{\mathrm{t}})\) and a larger pseudorapidity separation \(\varDelta \eta (\mathrm{t},\overline{\mathrm{t}})\) at moderate \(M(\mathrm{t}\overline{\mathrm{t}})\) in data compared to simulation. The data are in reasonable agreement with next-to-leading-order predictions of quantum chromodynamics using recent sets of parton distribution functions (PDFs).

The measured double-differential cross sections have been incorporated into a PDF fit, together with other data from HERA and the LHC. Including the \(\mathrm{t}\overline{\mathrm{t}}\) data, one observes a significant reduction in the uncertainties in the gluon distribution at large values of parton momentum fraction x, in particular when using the double-differential \(\mathrm{t}\overline{\mathrm{t}}\) cross section as a function of \(y(\mathrm{t}\overline{\mathrm{t}})\) and \(M(\mathrm{t}\overline{\mathrm{t}})\). The constraints provided by these data are competitive with those from inclusive jet data. This improvement exceeds that from using single-differential \(\mathrm{t}\overline{\mathrm{t}}\) cross section data, thus strongly suggesting the use of the double-differential \(\mathrm{t}\overline{\mathrm{t}}\) measurements in PDF fits.

References

D0 Collaboration, Observation of the top quark. Phys. Rev. Lett. 74, 2632 (1995). doi:10.1103/PhysRevLett.74.2632. arXiv:hep-ex/9503003

CDF Collaboration, Observation of top quark production in \({\bar{p}p}\) collisions. Phys. Rev. Lett. 74, 2626 (1995). doi:10.1103/PhysRevLett.74.2626. arXiv:hep-ex/9503002

M. Czakon, M.L. Mangano, A. Mitov, J. Rojo, Constraints on the gluon PDF from top quark pair production at hadron colliders. JHEP 07, 167 (2013). doi:10.1007/JHEP07(2013)167. arXiv:1303.7215

M. Guzzi, K. Lipka, S. Moch, Top-quark pair production at hadron colliders: differential cross section and phenomenological applications with DiffTop. JHEP 01, 082 (2015). doi:10.1007/JHEP01(2015)082. arXiv:1406.0386

M. Czakon et al., Pinning down the large-x gluon with NNLO top-quark pair differential distributions (2016). arXiv:1611.08609

CMS Collaboration, Measurements of differential jet cross sections in proton–proton collisions at \(\sqrt{s}=7\) TeV with the CMS detector. Phys. Rev. D 87, 112002 (2013). doi:10.1103/PhysRevD.87.112002. arXiv:1212.6660. [Erratum: doi:10.1103/PhysRevD.87.119902]

CMS Collaboration, Constraints on parton distribution functions and extraction of the strong coupling constant from the inclusive jet cross section in pp collisions at \(\sqrt{s} = 7\) TeV. Eur. Phys. J. C 75, 288 (2015). doi:10.1140/epjc/s10052-015-3499-1. arXiv:1410.6765

CMS Collaboration, Measurement and QCD analysis of double-differential inclusive jet cross-sections in pp collisions at \(\sqrt{s} = \) 8 TeV and ratios to 2.76 and 7 TeV (2016). arXiv:1609.05331 (Submitted to JHEP)

CDF Collaboration, First measurement of the \({t{\bar{t}}}\) differential cross section \(d\sigma /dM_{{t{\bar{t}}}}\) in \({p{\bar{p}}}\) collisions at \(\sqrt{s} = 1.96\) TeV. Phys. Rev. Lett. 102, 222003 (2009). doi:10.1103/PhysRevLett.102.222003. arXiv:0903.2850

D0 Collaboration, Measurement of differential \({{\rm {t}}\bar{\rm {t}}}\) production cross sections in \({\rm p}{\bar{\rm p}}\) collisions. Phys. Rev. D 90, 092006 (2014). doi:10.1103/PhysRevD.90.092006. arXiv:1401.5785

ATLAS Collaboration, Measurements of top quark pair relative differential cross-sections with ATLAS in pp collisions at \(\sqrt{s}\) = 7 TeV. Eur. Phys. J. C 73, 2261 (2013). doi:10.1140/epjc/s10052-012-2261-1. arXiv:1207.5644

CMS Collaboration, Measurement of differential top-quark pair production cross sections in pp collisions at \(\sqrt{s} = 7\,\text{TeV}\). Eur. Phys. J. C 73, 2339 (2013). doi:10.1140/epjc/s10052-013-2339-4. arXiv:1211.2220

ATLAS Collaboration, Measurements of normalized differential cross-sections for \({t{\bar{t}}}\) production in pp collisions at \(\sqrt{s}\) = 7 TeV using the ATLAS detector. Phys. Rev. D 90, 072004 (2014). doi:10.1103/PhysRevD.90.072004. arXiv:1407.0371

ATLAS Collaboration, Measurement of top quark pair differential cross-sections in the dilepton channel in pp collisions at \(\sqrt{s}\) = 7 and 8 TeV with ATLAS. Phys. Rev. D 94, 092003 (2016). doi:10.1103/PhysRevD.94.092003. arXiv:1607.07281

CMS Collaboration, Measurement of the differential cross section for top quark pair production in pp collisions at \(\sqrt{s} = 8\,\text{TeV}\). Eur. Phys. J. C 75, 542 (2015). doi:10.1140/epjc/s10052-015-3709-x. arXiv:1505.04480

ATLAS Collaboration, Measurements of top-quark pair differential cross-sections in the lepton+jets channel in pp collisions at \(\sqrt{s}=8\) TeV using the ATLAS detector. Eur. Phys. J. C 76, 538 (2016). doi:10.1140/epjc/s10052-016-4366-4. arXiv:1511.04716

CMS Collaboration, Measurement of differential cross sections for top quark pair production using the lepton+jets final state in proton–proton collisions at 13 TeV (2016). arXiv:1610.04191 (Submitted to Phys. Rev. D)

M.L. Mangano, P. Nason, G. Ridolfi, Heavy quark correlations in hadron collisions at next-to-leading order. Nucl. Phys. B 373, 295 (1992). doi:10.1016/0550-3213(92)90435-E

N. Kidonakis, E. Laenen, S. Moch, R. Vogt, Sudakov resummation and finite order expansions of heavy quark hadroproduction cross-sections. Phys. Rev. D 64, 114001 (2001). doi:10.1103/PhysRevD.64.114001. arXiv:hep-ph/0105041

CMS Collaboration, The CMS experiment at the CERN LHC. JINST 3, S08004 (2008). doi:10.1088/1748-0221/3/08/S08004

J. Alwall et al., The automated computation of tree-level and next-to-leading order differential cross sections, and their matching to parton shower simulations. JHEP 07, 079 (2014). doi:10.1007/JHEP07(2014)079. arXiv:1405.0301

P. Artoisenet, R. Frederix, O. Mattelaer, R. Rietkerk, Automatic spin-entangled decays of heavy resonances in Monte Carlo simulations. JHEP 03, 015 (2013). doi:10.1007/JHEP03(2013)015. arXiv:1212.3460

T. Sjöstrand, S. Mrenna, P.Z. Skands, PYTHIA 6.4 physics and manual. JHEP 05, 026 (2006). doi:10.1088/1126-6708/2006/05/026. arXiv:hep-ph/0603175

J. Pumplin et al., New generation of parton distributions with uncertainties from global QCD analysis. JHEP 07, 012 (2002). doi:10.1088/1126-6708/2002/07/012. arXiv:hep-ph/0201195

P. Nason, A new method for combining NLO QCD with shower Monte Carlo algorithms. JHEP 11, 040 (2004). doi:10.1088/1126-6708/2004/11/040. arXiv:hep-ph/0409146

S. Frixione, P. Nason, C. Oleari, Matching NLO QCD computations with parton shower simulations: the POWHEG method. JHEP 11, 070 (2007). doi:10.1088/1126-6708/2007/11/070. arXiv:0709.2092

S. Alioli, P. Nason, C. Oleari, E. Re, A general framework for implementing NLO calculations in shower Monte Carlo programs: the POWHEG BOX. JHEP 06, 043 (2010). doi:10.1007/JHEP06(2010)043. arXiv:1002.2581

G. Corcella et al., HERWIG 6: an event generator for hadron emission reactions with interfering gluons (including supersymmetric processes). JHEP 01, 010 (2001). doi:10.1088/1126-6708/2001/01/010. arXiv:hep-ph/0011363

S. Frixione, B.R. Webber, Matching NLO QCD computations and parton shower simulations. JHEP 06, 029 (2002). doi:10.1088/1126-6708/2002/06/029. arXiv:hep-ph/0204244

CMS Collaboration, Measurement of the underlying event activity at the LHC with \(\sqrt{s}\) = 7 TeV and comparison with \(\sqrt{s}\) = 0.9 TeV. JHEP 09, 109 (2011). doi:10.1007/JHEP09(2011)109. arXiv:1107.0330

ATLAS Collaboration, ATLAS tunes of PYTHIA 6 and Pythia 8 for MC11. ATLAS PUB note ATL-PHYS-PUB-2011-009 (2011)

H.-L. Lai et al., New parton distributions for collider physics. Phys. Rev. D 82, 074024 (2010). doi:10.1103/PhysRevD.82.074024. arXiv:1007.2241

GEANT4 Collaboration, GEANT4—a simulation toolkit. Nucl. Instrum. Methods A 506, 250 (2003). doi:10.1016/S0168-9002(03)01368-8

CMS Collaboration, Particle-flow event reconstruction in CMS and performance for jets, taus, and \({E_{\rm T}^{\text{miss}}}\). CMS Physics Analysis Summary CMS-PAS-PFT-09-001 (2009)

CMS Collaboration, Commissioning of the particle-flow event reconstruction with the first LHC collisions recorded in the CMS detector. CMS Physics Analysis Summary CMS-PAS-PFT-10-001 (2010)

CMS Collaboration, CMS tracking performance results from early LHC operation. Eur. Phys. J. C 70, 1165 (2010). doi:10.1140/epjc/s10052-010-1491-3. arXiv:1007.1988

M. Cacciari, G.P. Salam, G. Soyez, The catchment area of jets. JHEP 04, 005 (2008). doi:10.1088/1126-6708/2008/04/005. arXiv:0802.1188

CMS Collaboration, Performance of electron reconstruction and selection with the CMS detector in proton–proton collisions at \(\sqrt{s} = 8\) TeV. JINST 10, P06005 (2015). doi:10.1088/1748-0221/10/06/P06005. arXiv:1502.02701

CMS Collaboration, Measurement of the Drell–Yan cross section in pp collisions at \(\sqrt{s}=7\) TeV. JHEP 10, 007 (2011). doi:10.1007/JHEP10(2011)007. arXiv:1108.0566

M. Cacciari, G.P. Salam, G. Soyez, The anti-\(k_t\) jet clustering algorithm. JHEP 04, 063 (2008). doi:10.1088/1126-6708/2008/04/063. arXiv:0802.1189

M. Cacciari, G.P. Salam, G. Soyez, FastJet user manual. Eur. Phys. J. C 72, 1896 (2012). doi:10.1140/epjc/s10052-012-1896-2. arXiv:1111.6097

CMS Collaboration, Identification of b-quark jets with the CMS experiment. JINST 8, P04013 (2013). doi:10.1088/1748-0221/8/04/P04013. arXiv:1211.4462

CMS Collaboration, Missing transverse energy performance of the CMS detector. JINST 6, P09001 (2011). doi:10.1088/1748-0221/6/09/P09001. arXiv:1106.5048

CMS Collaboration, Performance of missing transverse energy reconstruction by the CMS experiment in \(\sqrt{s}=\) 8 TeV pp data. JINST 10, P02006 (2015). doi:10.1088/1748-0221/10/02/P02006. arXiv:1411.0511

D0 Collaboration, Measurement of the top quark mass using dilepton events. Phys. Rev. Lett. 80, 2063 (1998). doi:10.1103/PhysRevLett.80.2063. arXiv:hep-ex/9706014

L. Sonnenschein, Analytical solution of \({\rm t}\bar{{\rm t}}\) dilepton equations. Phys. Rev. D 73, 054015 (2006). doi:10.1103/PhysRevD.73.054015. arXiv:hep-ph/0603011. [Erratum: doi:10.1103/PhysRevD.78.079902]

I. Korol, Measurement of double differential \({\rm t}{\bar{\rm t}}\) production cross sections with the CMS detector. PhD thesis, Universität Hamburg, 2016. DESY-THESIS-2016-011 (2016). doi:10.3204/DESY-THESIS-2016-011

S. Schmitt, TUnfold: an algorithm for correcting migration effects in high energy physics. JINST 7, T10003 (2012). doi:10.1088/1748-0221/7/10/T10003. arXiv:1205.6201

A.N. Tikhonov, Solution of incorrectly formulated problems and the regularization method. Sov. Math. Dokl. 4, 1035 (1963)

S. Schmitt, Data unfolding methods in high energy physics (2016). arXiv:1611.01927

Particle Data Group Collaboration, Review of particle physics. Chin. Phys. C 40, 100001 (2016). doi:10.1088/1674-1137/40/10/100001

TOTEM Collaboration, First measurement of the total proton–proton cross section at the LHC energy of \(\sqrt{s}\) = 7 TeV. Europhys. Lett. 96, 21002 (2011). doi:10.1209/0295-5075/96/21002. arXiv:1110.1395

CMS Collaboration, Jet energy scale and resolution in the CMS experiment in pp collisions at 8 TeV (2016). arXiv:1607.03663 (Submitted to JINST)

CMS Collaboration, Determination of jet energy calibration and transverse momentum resolution in CMS. JINST 6, P11002 (2011). doi:10.1088/1748-0221/6/11/P11002. arXiv:1107.4277

CMS Collaboration, CMS luminosity based on pixel cluster counting—summer 2013 update. CMS Physics Analysis Summary CMS-PAS-LUM-13-001 (2013)

M. Czakon, D. Heymes, A. Mitov, High-precision differential predictions for top-quark pairs at the LHC. Phys. Rev. Lett. 116, 082003 (2016). doi:10.1103/PhysRevLett.116.082003. arXiv:1511.00549

M. Czakon, D. Heymes, A. Mitov, Dynamical scales for multi-TeV top-pair production at the LHC (2016). arXiv:1606.03350

N. Kidonakis, NNNLO soft-gluon corrections for the top-quark \({p_{\rm T}}\) and rapidity distributions. Phys. Rev. D 91, 031501 (2015). doi:10.1103/PhysRevD.91.031501. arXiv:1411.2633

J.M. Campbell, R.K. Ellis, MCFM for the Tevatron and the LHC. Nucl. Phys. Proc. Suppl. 205–206, 10 (2010). doi:10.1016/j.nuclphysbps.2010.08.011. arXiv:1007.3492

S. Alekhin, J. Blümlein, S. Moch, Parton distribution functions and benchmark cross sections at NNLO. Phys. Rev. D 86, 054009 (2012). doi:10.1103/PhysRevD.86.054009. arXiv:1202.2281

A. Accardi et al., Constraints on large-\(x\) parton distributions from new weak boson production and deep-inelastic scattering data. Phys. Rev. D 93, 114017 (2016). doi:10.1103/PhysRevD.93.114017. arXiv:1602.03154