Abstract

The DIRECT method solves Lipschitz global optimization problems on a hyperinterval while considering an unlimited range of possible Lipschitz constants. We propose an extension of the DIRECT method principles to problems with multiextremal constraints, in which the functions are evaluated at both ends of each chosen main diagonal at once. We present computational illustrations, including the solution of a problem with discontinuities, and analyze the convergence of the method.
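To make the idea of evaluating the functions "at both ends of the main diagonal" concrete, here is a minimal Python sketch under stated assumptions. A hyperinterval is stored by its lower corner a and upper corner b, its main diagonal is taken to be the segment from a to b, and a box is split into three equal parts along its longest edge. The class `DiagonalBox`, the helpers `cached` and `trisect`, and the test function `f` are hypothetical names for illustration only. The paper's own scheme may orient diagonals differently (for example, so that neighboring boxes share more vertices); this sketch does not attempt that.

```python
import math
from typing import Callable, Dict, List, Tuple

Point = Tuple[float, ...]


class DiagonalBox:
    """Hyperinterval [a, b] with f stored at both ends of its main diagonal."""

    def __init__(self, a: Point, b: Point, fa: float, fb: float) -> None:
        self.a, self.b, self.fa, self.fb = a, b, fa, fb

    def diameter(self) -> float:
        # For a box, the main diagonal is its longest segment (its diameter).
        return math.dist(self.a, self.b)


def cached(f: Callable[[Point], float]) -> Tuple[Callable[[Point], float], Dict[Point, float]]:
    """Wrap f so that every distinct point is evaluated only once."""
    cache: Dict[Point, float] = {}

    def ev(x: Point) -> float:
        if x not in cache:
            cache[x] = f(x)
        return cache[x]

    return ev, cache


def trisect(box: DiagonalBox, ev: Callable[[Point], float]) -> List[DiagonalBox]:
    """Cut box into three equal parts along its longest edge (first one on ties)
    and evaluate both diagonal ends of every child (naive lower-to-upper diagonals)."""
    i = max(range(len(box.a)), key=lambda k: box.b[k] - box.a[k])
    h = (box.b[i] - box.a[i]) / 3.0
    cuts = [box.a[i], box.a[i] + h, box.a[i] + 2.0 * h, box.b[i]]
    children = []
    for j in range(3):
        lo, hi = list(box.a), list(box.b)
        lo[i], hi[i] = cuts[j], cuts[j + 1]
        a, b = tuple(lo), tuple(hi)
        children.append(DiagonalBox(a, b, ev(a), ev(b)))
    return children


def f(x: Point) -> float:
    # Hypothetical test function.
    return (x[0] - 0.3) ** 2 + (x[1] - 0.7) ** 2


ev, cache = cached(f)
a0, b0 = (0.0, 0.0), (1.0, 1.0)
root = DiagonalBox(a0, b0, ev(a0), ev(b0))
kids = trisect(root, ev)
print(len(cache), [round(k.diameter(), 4) for k in kids])
```

With this naive orientation, trisecting the unit square reuses the two parent evaluations (the outer children each share one end with the parent) and adds four new points, six distinct evaluations in total.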

References

[1] Gorodetsky, S. Yu. Several Approaches to Generalization of the DIRECT Method to Problems with Functional Constraints. Vestn. Nizhegorodsk. Gos. Univ., Ser. Mat. Model. Optimal. Upravlen. no. 6(1), 189–215 (2013).

[2] Egupov, N. E. (Ed.) Metody klassicheskoi i sovremennoi teorii avtomaticheskogo upravleniya, tom 2: Sintez regulyatorov i teoriya optimizatsii sistem avtomaticheskogo upravleniya (Methods of Classical and Modern Automatic Control Theory, vol. 2: Controller Synthesis and Optimization Theory for Automatic Control Systems). (MGTU im. N.E. Baumana, Moscow, 2000).

[3] Balandin, D. V. & Kogan, M. M. Sintez zakonov upravleniya na osnove lineinykh matrichnykh neravenstv (Synthesis of Control Laws Based on Linear Matrix Inequalities). (Fizmatlit, Moscow, 2007).

[4] Aleksandrov, A. G. Metody postroeniya sistem avtomaticheskogo upravleniya (Methods for Constructing Automatic Control Systems). (Fizmatkniga, Moscow, 2008).

[5] Gershon, E., Shaked, U. & Yaesh, I. H∞-Control and Estimation of State-Multiplicative Linear Systems. (Springer, London, 2005).

[6] Balandin, D. V. & Kogan, M. M. Pareto Optimal Generalized H2-Control and Vibroprotection Problems. Autom. Remote Control 78(no. 8), 1417–1429 (2017).

[7] Gorodetsky, S. Yu. & Sorokin, A. S. Constructing Optimal Controllers Using Nonlinear Performance Criteria on the Example of One Dynamical System. Vestn. Nizhegorodsk. Gos. Univ., Ser. Mat. Model. Optimal. Upravlen. no. 2(1), 165–176 (2012).

[8] Sergeev, Ya. D. & Kvasov, D. E. Diagonal’nye metody global’noi optimizatsii (Diagonal Global Optimization Methods). (Fizmatlit, Moscow, 2008).

[9] Strongin, R. G. & Sergeyev, Ya. D. Global Optimization with Non-Convex Constraints: Sequential and Parallel Algorithms. (Kluwer, Dordrecht, 2000).

[10] Evtushenko, Yu. G., Malkova, V. U. & Stanevichyus, A. A. Parallel Search for Global Extremum of Functions of Multiple Variables. Zh. Vychisl. Mat. Mat. Fiz. 49(no. 2), 255–269 (2009).

[11] Strongin, R. G., Gergel’, V. P., Grishagin, V. A. & Barkalov, K. A. Parallel’nye vychisleniya v zadachakh global’noi optimizatsii (Parallel Computations in Global Optimization Problems), preface by V. A. Sadovnichii. (Mosk. Gos. Univ., Moscow, 2013).

[12] Gorodetsky, S. Yu. Paraboloid Triangulation Methods in Solving Multiextremal Optimization Problems with Constraints for the Class of Functions with Lipschitz Directional Derivatives. Vestn. Nizhegorodsk. Gos. Univ., Ser. Mat. Model. Optimal. Upravlen. no. 1(1), 144–155 (2012).

[13] Gorodetsky, S. Yu. A Study of Global Optimization Procedures with Adaptive Stochastic Models. Cand. Sci. Dissertation (GGU, Gorky, 1984).

[14] Neimark, Yu. I. Dinamicheskie sistemy i upravlyaemye protsessy (Dynamical Systems and Controllable Processes). (Nauka, Moscow, 1978).

[15] Gorodetsky, S. Yu. & Neimark, Yu. I. On Search Characteristics of the Global Optimization Algorithm with Adaptive Stochastic Model. In Probl. Sluch. Poiska, pp. 83–105 (Zinatne, Riga, 1981).

[16] Jones, D. R., Perttunen, C. D. & Stuckman, B. E. Lipschitzian Optimization without the Lipschitz Constant. J. Optim. Theory Appl. 79(no. 1), 157–181 (1993).

[17] Preparata, F. P. & Shamos, M. I. Computational Geometry: An Introduction. (Springer, New York, 1985). Translated under the title Vychislitel’naya geometriya: vvedenie (Mir, Moscow, 1989).

[18] Jones, D. R. The DIRECT Global Optimization Algorithm. In Encyclopedia of Optimization, 2nd ed., in 7 vols (eds Floudas, C. A. & Pardalos, P. M.), pp. 725–735 (Springer, 2009).

[19] Evtushenko, Yu. G. & Rat’kin, V. A. Method of Dichotomy Partitions for Global Optimization of Functions of Multiple Variables. Izv. Akad. Nauk SSSR, Tekh. Kibern. no. 1, 119–127 (1987).

[20] Gablonsky, J. M. & Kelley, C. T. A Locally-Biased Form of the DIRECT Algorithm. J. Global Optim. 21(no. 1), 27–37 (2001).

[21] Sergeyev, Ya. D. & Kvasov, D. E. Global Search Based on Efficient Diagonal Partitions and a Set of Lipschitz Constants. SIAM J. Optim. 16(no. 3), 910–937 (2006).

[22] Sergeyev, Ya. D. An Efficient Strategy for Adaptive Partition of N-Dimensional Intervals in the Framework of Diagonal Algorithms. J. Optim. Theory Appl. 107(no. 1), 145–168 (2000).

[23] Sergeyev, Ya. D. & Kvasov, D. E. A Univariate Global Search Working with a Set of Lipschitz Constants for the First Derivative. Optim. Lett. no. 3, 303–318 (2009).

[24] Kvasov, D. E. & Sergeyev, Ya. D. Lipschitz Gradients for Global Optimization in a One-Point-Based Partitioning Scheme. J. Comput. Appl. Math. 236, 4042–4054 (2012).

[25] Sergeyev, Ya. D., Mukhametzhanov, M. S. & Kvasov, D. E. On the Efficiency of Nature-Inspired Metaheuristics in Expensive Global Optimization with Limited Budget. Sci. Reports 8, 453 (2018).

[26] Gorodetsky, S. Yu. About the Model of Objective Function Behavior for Diagonal Implementation of DIRECT-Like Methods. CETERIS PARIBUS no. 1, 4–16 (RITS EFIR, Moscow, 2016).

[27] Karmanov, V. G. Matematicheskoe programmirovanie (Mathematical Programming), textbook. (Fizmatlit, Moscow, 2008).

Appendix

Proof of Statement. If conditions (24) are satisfied for some ΔL from the specified interval, then (21) and (22) obviously hold with the same ΔL and with \(\Delta {L}^{g}=\alpha \Delta L\). Conversely, suppose that (21) and (22) are satisfied, so that for \(D_t\) there exists a corresponding pair \(\widetilde{\Delta L}\), \({\widetilde{\Delta L}}^{g}\). Since \(g^{-}(\Delta {L}^{g}, D_i)\) decreases monotonically as \(\Delta {L}^{g}\) increases, (21) and (22) also hold for the values \(\widetilde{\Delta L}\), \(\Delta {L}^{g}=\alpha \widetilde{\Delta L}\), as required. This completes the proof of the statement.

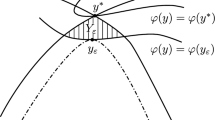

Proof of Lemma. At the first stage of processing the search information at iteration k, as described in Section 3, at least one modified non-dominated hyperinterval \({\widehat{D}}_{s}^{d}\) is selected in each d-layer. The last selected hyperinterval is associated with the range \([\Delta {L}_{s}^{d},\Delta {L}_{s+1}^{d})\) having the largest number \(s={S}_{k}(d)\), where \(\Delta {L}_{{S}_{k}(d)+1}^{d}=+\infty \). In particular, this holds for the d-layer of hyperintervals with the largest diameter \(d={d}_{k}^{\max }\) at the current kth iteration.

Further, in the partition (25) of the axis of increments ΔL, the last interval \([\Delta {L}_{j},\Delta {L}_{j+1})\), with number \(j={m}_{k}\), has \(\Delta {L}_{{m}_{k}+1}=+\infty \). This interval corresponds to the set of hyperintervals \({\widetilde{{\mathcal{O}}}}_{k}^{{m}_{k}}\), which by construction necessarily includes at least one hyperinterval \({\widehat{D}}_{s}^{d}\) from the d-layer with \(d={d}_{k}^{\max }\) (i.e., of maximum diameter), corresponding to the range \([\Delta {L}_{s}^{d},\Delta {L}_{s+1}^{d})\) of ΔL values with number \(s={S}_{k}({d}_{k}^{\max })\). On the comparison plane (d/2, F), when the hyperintervals from \({\widetilde{{\mathcal{O}}}}_{k}^{{m}_{k}}\) are compared, this hyperinterval corresponds to the rightmost point of the resulting point set \({P}_{k}^{{m}_{k}}\). Since the comparison is performed over the interval \([\Delta {L}_{{m}_{k}},+\infty )\), for sufficiently large ΔL the point with the largest diameter \(d={d}_{k}^{\max }\) necessarily dominates the others, and condition (17) is also satisfied at this point. Therefore, the hyperinterval of the largest diameter is included in the set \({\widehat{{\mathcal{D}}}}_{k}\) of hyperintervals partitioned at iteration k. This completes the proof of the lemma.
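The domination argument on the plane (d/2, F) can be illustrated with the classic, unconstrained DIRECT rule of Jones et al. [16]. The sketch below assumes that each hyperinterval is a point (d/2, F) and that a slope ΔL ≥ 0 yields the lower estimate F − ΔL·d/2; the paper's modified values, its d-layer ranges, and condition (17) are not modeled. The function names `delta_l_range` and `non_dominated` are hypothetical. For the lowest point among those with the largest d/2, the upper end of its ΔL range is always +∞, which mirrors the role of the last interval \([\Delta {L}_{{m}_{k}},+\infty )\) in the lemma.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]  # (d/2, F) on the comparison plane


def delta_l_range(points: List[Point], j: int) -> Tuple[float, float]:
    """Return (lo, hi): the slopes dL in [0, +inf] for which point j minimizes
    F_i - dL * (d_i / 2) over all points i. The range is empty when lo > hi."""
    xj, fj = points[j]
    lo, hi = 0.0, math.inf
    for i, (xi, fi) in enumerate(points):
        if i == j:
            continue
        if xi < xj:    # smaller box: need dL >= (F_j - F_i) / (x_j - x_i)
            lo = max(lo, (fj - fi) / (xj - xi))
        elif xi > xj:  # larger box: need dL <= (F_i - F_j) / (x_i - x_j)
            hi = min(hi, (fi - fj) / (xi - xj))
        elif fi < fj:  # same diameter, strictly smaller F: j is never selected
            return math.inf, -math.inf
    return lo, hi


def non_dominated(points: List[Point]) -> List[int]:
    """Indices of points that give the smallest estimate for at least one dL >= 0."""
    selected = []
    for j in range(len(points)):
        lo, hi = delta_l_range(points, j)
        if lo <= hi:
            selected.append(j)
    return selected


# Hypothetical data: (d/2, F) for three hyperintervals.
pts = [(0.25, 1.0), (0.5, 0.5), (1.0, 2.0)]
selected = non_dominated(pts)
for j in selected:
    print(j, delta_l_range(pts, j))
```

Here the point with the largest d/2 is selected on [3, +∞) even though its F is the worst, which is exactly the behavior the lemma relies on.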

Proof of Theorem. By the assumption of global stability of the solution set X* (condition C), together with condition B on the structure of the set of global minima X* and on the problem functions, it suffices to prove that the placement of test points becomes everywhere dense in the limit. Indeed, the search set D is compact. If conditions A and B are met, a test point placement that is everywhere dense in the limit yields subsequences of test points converging to each solution x*. Each x* belongs to the closure of some open subset χ of X if \(X\ne \varnothing \), or of D otherwise. Since the placement is everywhere dense in the limit, there are points arbitrarily close to x* that are centers of open balls contained in χ, and the method eventually places a test point in each of these balls. Therefore, as the number of iterations grows without bound, there are subsequences of admissible points from the subsets χ that converge to x*. The functions Q and g (or, when \(X=\varnothing \), the function g alone) are continuous on the closures \(\overline{\chi }\). Thus, the sequence \({x}_{k}^{* }\) of points with the best function values is minimizing and, by condition C, its limit points are solutions to the problem.
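The last step of this argument, that a dense placement of test points turns the best recorded point \({x}_{k}^{* }\) into a minimizing sequence, can be seen on a one-dimensional toy problem. This sketch assumes a best-point rule that the text suggests but does not spell out: among admissible points (taken here to mean g(x) ≤ 0) choose the smallest Q, and if there are none, choose the smallest g. The toy functions Q(x) = x and g(x) = 0.5 − x on [0, 1], the helper `best_point`, and the uniform grids with step \(3^{-k}\) are hypothetical stand-ins for the method's actual test points.

```python
from typing import List, Tuple

Trial = Tuple[float, float, float]  # (x, Q(x), g(x)) for a scalar x, for brevity


def best_point(trials: List[Trial]) -> Tuple[float, bool]:
    """Return (x_best, admissible). Assumed rule (hypothetical): among admissible
    trials (g <= 0) take the smallest Q; if there are none, take the smallest g."""
    admissible = [t for t in trials if t[2] <= 0.0]
    if admissible:
        return min(admissible, key=lambda t: t[1])[0], True
    return min(trials, key=lambda t: t[2])[0], False


def Q(x: float) -> float:
    return x  # toy objective


def g(x: float) -> float:
    return 0.5 - x  # toy constraint g(x) <= 0, i.e. x >= 0.5; solution x* = 0.5


for k in range(1, 6):
    n = 3 ** k  # grid step 1/3**k never lands exactly on x* = 0.5
    trials = [(i / n, Q(i / n), g(i / n)) for i in range(n + 1)]
    x_best, ok = best_point(trials)
    print(k, x_best, ok)
```

As the grid becomes denser, the best admissible point approaches x* = 0.5 from above, with error at most half a grid step.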

It remains to prove that the distribution of function evaluation points is everywhere dense in the limit; we give only a sketch of the proof. The desired behavior follows from the lemma, which establishes that the constructed method partitions at least one hyperinterval of the largest diameter at every iteration. Since hyperintervals are partitioned into three equal parts along the longest edge, the diameters decrease strictly, by a factor bounded away from unity. These two facts suffice. A more detailed argument can be constructed, taking the lemma into account, along the lines of the proof of Theorem 5.6 in [8]. This completes the proof of the theorem.
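The diameter part of this sketch can be checked numerically. The code below, with hypothetical helper names, follows one chain of nested boxes, each obtained by cutting the longest edge of the previous box into thirds, starting from the unit cube in three dimensions. It only illustrates the geometric fact used above; it does not reproduce the method's selection of which boxes to partition.

```python
import math
from typing import List


def diameter(sides: List[float]) -> float:
    """Euclidean diameter of a box with the given edge lengths."""
    return math.sqrt(sum(s * s for s in sides))


def trisect_longest(sides: List[float]) -> List[float]:
    """Edge lengths shared by the three equal parts obtained by cutting the
    longest edge (first one on ties) into thirds."""
    i = max(range(len(sides)), key=lambda k: sides[k])
    child = list(sides)
    child[i] = sides[i] / 3.0
    return child


def diameters_along_chain(sides: List[float], steps: int) -> List[float]:
    """Diameters of a chain of nested boxes, each a third of the previous one."""
    ds = [diameter(sides)]
    for _ in range(steps):
        sides = trisect_longest(sides)
        ds.append(diameter(sides))
    return ds


ds = diameters_along_chain([1.0, 1.0, 1.0], 6)
ratios = [b / a for a, b in zip(ds, ds[1:])]
print([round(r, 4) for r in ratios])
print(ds[3] / ds[0])  # one cut along each of the N = 3 edges: 1/3 up to rounding
```

Every single cut strictly shrinks the diameter, and after one cut along each of the N edges the diameter is one third of the original, so repeated partitioning of the largest boxes drives all diameters to zero.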

Cite this article

Gorodetsky, S. Diagonal Generalization of the DIRECT Method for Problems with Constraints. Autom Remote Control 81, 1431–1449 (2020). https://doi.org/10.1134/S0005117920080068

DOI: https://doi.org/10.1134/S0005117920080068