Abstract

Text difficulty refers to the ease with which a text can be read and understood, and the difficulty of research article abstracts has long been a topic of interest. Previous studies have found that research article abstracts are generally difficult to read and that they have become increasingly difficult over time. However, widely used measurements such as FRE and SMOG have long been criticized for using only simplistic, surface-level indicators as proxies for the complex cognitive processes of reading, while sophisticated methods based on cognitive theory or Natural Language Processing/machine learning are not easy to use or interpret. A theoretically sound and methodologically neat measurement of text difficulty is therefore called for. Moreover, diachronic changes in abstract difficulty across disciplines have been under-researched. To address these issues, this study adopted a cognitive information-theoretic approach to investigate the diachronic change in the text difficulty of research article abstracts across the natural sciences, social sciences, and humanities. A total of 1890 abstracts were sampled over a period of 21 years, and two indexes, i.e. entropy from information theory and mean dependency distance from cognitive science, were employed to calculate cognitive encoding/decoding difficulty. The results show that, in general, the cognitive encoding difficulty of abstracts has been increasing over the past two decades, while the cognitive decoding difficulty of abstracts has been decreasing. Regarding disciplinary variations, the humanities show no significant diachronic change in encoding difficulty, and the social sciences show no significant diachronic change in decoding difficulty. These phenomena can be attributed to the traits of abstracts, the nature of academic knowledge, the cognitive mechanisms of human language, and the features of different disciplines.
This study has implications for the innovations in theories and methods of measurement of text difficulty, as well as an in-depth understanding of the disciplinary variations in academic writing and the essence of research article abstracts for research article writers, readers, the scientific community, and academic publishers.


Introduction

As the first and a frequently read part of a research article (Nicholas et al., 2003; Jin et al., 2021), the abstract plays a pivotal role in knowledge construction and dissemination. For one thing, an abstract should explicitly reflect the full article by foregrounding the main points and packaging the core information (Jiang and Hyland, 2017). For another, it should be concise enough to reduce the cognitive burden on readers and thus encourage them to read further (Gazni, 2011). Therefore, an abstract can be regarded as having both informational and promotional traits, which writers in turn pursue as goals in their writing.

To make abstracts informational and promotional, writers need to moderate text difficulty. Text difficulty, also known as reading difficulty (Filighera et al., 2019) or text readability (Hartley et al., 2003), refers to the ease with which a text can be read and understood (Klare, 1963). The measurement of text difficulty was traditionally based on computational formulas, of which several have been widely used: the Flesch Reading Ease (FRE), counting the numbers of syllables, words, and sentences in a text (Flesch, 1948); the Simple Measure of Gobbledygook (SMOG), counting the number of words with three or more syllables (McLaughlin, 1969); the Flesch-Kincaid Grade Level, considering average sentence length and the average number of syllables per word (Kincaid et al., 1975); and the New Dale-Chall (NDC), calculating average sentence length and the percentage of difficult words that fall outside a predefined list of common words (Dale and Chall, 1948). Later on, some researchers began to analyze text difficulty based on cognitive theories (Benjamin, 2012). For example, Kintsch and van Dijk (1978) considered propositions and inferences in the analysis of discourse processing in their cognitive theoretical framework. Landauer et al. (1998) introduced latent semantic analysis (LSA), a method that analyzes the semantic relatedness either between texts or among segments of text in a more expanded way than simple measures of word overlap (Benjamin, 2012), to assess reading comprehension. Graesser et al. (2004) developed Coh-Metrix based on a five-level cognitive model of perception, which is able to instantly gauge the difficulty of written text for the target audience with dozens of variables. Recently, some scholars have attempted to use natural language processing (NLP) enabled features and machine learning techniques to improve the measurement of text difficulty. 
For example, Peterson and Ostendorf (2009) combined features from n-gram models, parsers, and traditional reading level measures into a support vector machine model to assess reading difficulty. Smeuninx et al. (2020) measured the readability of sustainability reports by combining fine-grained NLP measures (e.g., lexical density, subordination, parse tree depth, and passivization) with traditional formulas. Crossley et al. (2019a) used linguistic features like text cohesion, lexical sophistication, sentiment analysis, and syntactic complexity features extracted from NLP tools to develop models explaining human judgments of text comprehension and reading speed. Text difficulty is essential for knowledge development and information communication (Hartley et al., 2002), especially in research article abstracts. If an abstract is too difficult, it may deter readers from reading further. If an abstract is too easy to read, it may succeed in attracting readers' attention while running the risk of conveying too little information. Well-written abstracts often feature an appropriate level of difficulty, keeping a balance between the two ends.

To date, numerous studies have examined the difficulty of research article abstracts. Most have found that abstracts are difficult in general (Ante, 2022; Gazni, 2011; Lei and Yan, 2016; Vergoulis et al., 2019; Wang et al., 2022; Yeung et al., 2018). For example, Gazni (2011) used FRE to examine 260,000 abstracts of high-impact articles from diverse disciplines published during 2000–2009 and found that these abstracts were very difficult to read. Lei and Yan (2016) used FRE and SMOG to explore the readability of research article abstracts in information science and found that these texts were difficult to comprehend. Ante (2022) used three indexes (the Flesch-Kincaid Grade Level, SMOG, and the Automated Readability Index) to examine 135,502 abstracts from 12 emerging technologies, and found that the abstracts were rather complex, requiring at least a university education for understanding. Besides, the difficulty of abstracts was found to be negatively correlated with their impact (Ante, 2022; Gazni, 2011; Sienkiewicz and Altmann, 2016; Wang et al., 2022; Yeung et al., 2018), although several studies also reported zero correlation (Gazni, 2011; Didegah and Thelwall, 2013; Lei and Yan, 2016; Vergoulis et al., 2019) or positive correlation (Dowling et al., 2018; Marino Fages, 2020).

Researchers are also interested in the diachronic change of abstract difficulty. A vast majority of studies have found that abstracts have gradually become more difficult (Ante, 2022; Lei and Yan, 2016; Plavén-Sigray et al., 2017; Vergoulis et al., 2019; Wang et al., 2022; Wen and Lei, 2022). For example, Plavén-Sigray et al. (2017) examined the readability of 709,577 abstracts published between 1881 and 2015 from 123 scientific journals and found that the readability of scientific articles, as indexed by FRE and NDC, was steadily decreasing. Wen and Lei (2022) analyzed 775,456 abstracts of scientific articles published between 1969 and 2019 and found a similar trend. Focusing on humanities rather than natural sciences, Wang et al. (2022) examined 71,628 abstracts published in SSCI journals in language and linguistics from 1991 to 2020, and found that the readability in language and linguistics abstracts was also decreasing. Meanwhile, some scholars (e.g. Gazni, 2011) found no significant diachronic change in difficulty, possibly due to the bottoming-out effect (Bottle et al., 1983), i.e. the sampled texts have already been at the very difficult level.

Since the very nature of academic texts is shaped by disciplinary features (Hyland, 2000), disciplinary writing is discipline-specific in terms of structural, meta-discursive and linguistic features, and writers may adopt a variety of strategies that are popular in their academic community so as to promote their research (Hyland, 2015). Although variations have been found across disciplines, be they structural (e.g. Suntara and Usaha, 2013), meta-discoursal (e.g. Hyland and Tse, 2005; Saeeaw and Tangkiengsirisin, 2014), or lexico-grammatical (e.g. Omidian et al., 2018; Xiao and Sun, 2020; Xiao et al., 2022, 2023), little is known about the disciplinary variations of text difficulty. Most previous studies have analyzed only one particular discipline, such as management (Graf-Vlachy, 2022), tourism (Dolnicar and Chapple, 2015), information science (Lei and Yan, 2016), linguistics (Wang et al., 2022), economics (Marino Fages, 2020), psychology (Hartley et al., 2002; Yeung et al., 2018), and marketing (Sawyer et al., 2008; Warren et al., 2021). These mono-disciplinary studies cannot address the issue of disciplinary variations. Some studies reported descriptive statistics for a collection of disciplines, yet without disciplinary comparisons or inferential statistics (e.g. Ante, 2022; Gazni, 2011; Jin et al., 2021; Tohalino et al., 2021; Vergoulis et al., 2019). For example, Gazni (2011) ranked the 22 investigated disciplines by average FRE score but did not conduct inferential statistics such as ANOVA. Ante (2022) carried out descriptive analysis, t-tests, correlation analyses, and logistic regressions between uncited and highly-cited articles in 12 emerging technologies, but did not make comparisons among the technologies. Vergoulis et al. (2019) collected multidisciplinary scientific texts but analyzed them only as a whole. The disciplinary variations of text difficulty therefore call for further exploration.

Abundant as the previous studies are, two issues remain to be addressed. The first issue concerns the measurement methods. The widely used traditional formulas, such as FRE and SMOG, have long been criticized for using only simplistic and surface-level indicators as proxies for the complex cognitive processes of reading (Benjamin, 2012; Hartley, 2016; Crossley et al., 2019b). They calculate text difficulty from a computational perspective but do not consider, from a cognitive perspective, the difficulty a reader may encounter when reading an abstract. Besides, they may omit the task situation of reading (Goldman and Lee, 2014; Valencia et al., 2014). Since reading comprehension involves dynamic interactions among readers, texts, and tasks, which are situated within a specific sociocultural context (NGACBP and CCSSO, 2010), text difficulty can be assumed to lie at the intersection of texts, readers, and tasks (Mesmer et al., 2012). As to the cognitive model-based measures, they capture the cognitive processes of reading at the expense of an excessive number of variables, which are difficult to interpret and use in reading pedagogy. The sophisticated NLP and machine learning-based methods are in a similar situation in that they need to incorporate too many features. Although these methods do perform better than the “classic” formulas, the “non-classic” features were only slightly more informative than the “classic” features, suggesting that the sophisticated techniques did not substantially improve the explanatory power of text difficulty models (François and Miltsakaki, 2012). Therefore, a theoretically sound and methodologically neat measurement of text difficulty, which considers the interactions among readers, texts, and tasks and is easy to use and interpret, is called for. 
Since the present study aims to investigate the research article abstracts, the informational and promotional traits of abstracts should also be taken into account.

The second issue lies in the scarcity of longitudinal studies on the disciplinary variations of abstract difficulty. Since the abstracts have been found to show disciplinary variations in a variety of aspects, their text difficulty should not be an exception. However, little is known about the disciplinary variations of abstract difficulty, and even less is known about the diachronic changes across disciplines. Although this issue could be partially addressed via a synthesis of the mono-disciplinary studies, a direct comparison of multiple disciplines would be more straightforward. Besides, most studies have focused on specific disciplines, like economics and psychology, while no study has been implemented from a macro scope on the discipline areas of natural sciences, social sciences, and humanities. Such a systematic and comprehensive study will without doubt deepen our understanding of the disciplinary variations in academic writing and the essence of research article abstracts.

In this study, we aim to solve the above-mentioned two issues. Regarding the measurement problem, we proposed a novel measurement that links writers and readers together and takes into consideration the informational and promotional goals and traits of abstract reading, inspired by a cognitive information-theoretic approach. Instead of regarding difficulty as an indivisible whole, we deconstructed it into two aspects, i.e. the encoding difficulty and the decoding difficulty. The encoding difficulty refers to the difficulty encountered by the writers during the processes of text production and information encoding. At this stage, the writers should provide detailed information to the readers so as to meet the informational goal of abstract writing. The encoding difficulty can be measured by the classic index “entropy” that originated in information theory (Shannon, 1948). A line of studies has shown that the higher the entropy value, the more cognitive encoding difficulty the text features. For example, Gullifer and Titone (2020) used entropy to estimate the social diversity of language use and found that language entropy is able to characterize individual differences in bilingual/multilingual experience related to the social diversity of language use. The higher the entropy, the more diversified their language use. Karimi (2022) examined the effect of entropy on the production of referential forms and found that greater entropy leads to more explicit referential forms during language production, suggesting that the greater the entropy the more complex the semantic processes. Venhuizen et al. (2019) studied entropy in language comprehension and found that entropy reduction is derived from the cognitive process of word-by-word updating of the unfolding interpretation, and it reflects the end-state confirmation of this process. 
In the same vein, the decoding difficulty refers to the difficulty encountered by the readers during the processes of text comprehension and information decoding. At this stage, the writers should help the readers maximize successful understanding and minimize their reading difficulty, in an attempt to promote and popularize their studies. The decoding difficulty can be measured by “mean dependency distance (MDD)”, an indicator of cognitive load (Liu, 2008; Liu et al., 2022). The higher the MDD value, the more decoding difficulty the text features. For example, Hiranuma (1999) applied dependency distance as a text difficulty metric to investigate the difficulty of spoken text in English and Japanese and found that Japanese texts are syntactically no more difficult than English ones. Alghamdi et al. (2022) computed features related to lexical frequency and MDD to investigate what makes video-recorded lectures difficult for language learners, and found that MDD, as one of the linguistic complexity features, significantly correlated with the participants’ ratings of video difficulty. Ouyang et al. (2022) combined traditional syntactic complexity measures with dependency distance measures to assess second language syntactic complexity development and found that MDD can better differentiate the writing proficiency of beginning, intermediate, and advanced learners than the traditional measures. Inspired by both the cognitive sciences and information theory, our novel measurement is able to reflect the authentic situation in abstract reading tasks and is thought to overcome the criticized weaknesses of the traditional ones.

As to the disciplinary variations, we systematically and comprehensively investigated the diachronic change in text difficulty of research article abstracts across disciplines. To be specific, we sampled abstracts from the three disciplinary areas of natural sciences, social sciences and humanities, and tracked the changes in the past 21 years. In this way, we hope to overcome the weaknesses of mono-disciplinary research and contribute to the systematic understanding of the disciplinary variations of abstract difficulty.

Our research questions are then as follows:

(1) Are there any diachronic changes in the cognitive encoding difficulty of abstracts? Is there any disciplinary variation across natural sciences, social sciences, and humanities?

(2) Are there any diachronic changes in the cognitive decoding difficulty of abstracts? Is there any disciplinary variation across natural sciences, social sciences, and humanities?

Methods

Corpora

To guarantee representativeness, authority, and comparability across disciplines, we used the systematic stratified random sampling method to build our corpora. We chose three top journals (according to the JCR impact factor in 2021) from each of the fields of physics, chemistry, biology (to represent the natural sciences), economics, education, psychology (to represent the social sciences), philosophy, history and linguistics (to represent the humanities). We then randomly selected 10 research articles in each field per year, from 2000 to 2020. In the end, 1890 articles were selected. Their abstracts, with a volume of 316,319 words, were kept for analysis.
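The sampling design can be checked with a short sketch. It assumes a hypothetical `pool` dictionary that maps each (field, year) stratum to the candidate article IDs gathered from the three journals of that field; the pool contents, the `sample_corpus` name, and the seed are illustrative only and are not taken from the study.

```python
import random

# Field-to-area mapping as described in the text; all other names are illustrative.
FIELDS = {
    "natural sciences": ["physics", "chemistry", "biology"],
    "social sciences": ["economics", "education", "psychology"],
    "humanities": ["philosophy", "history", "linguistics"],
}
YEARS = range(2000, 2021)  # 2000 to 2020 inclusive, i.e. 21 years
PER_STRATUM = 10           # articles per field per year


def sample_corpus(pool, seed=0):
    """Draw PER_STRATUM articles at random from every (field, year) stratum."""
    rng = random.Random(seed)
    sample = {}
    for fields in FIELDS.values():
        for field in fields:
            for year in YEARS:
                sample[(field, year)] = rng.sample(pool[(field, year)], PER_STRATUM)
    return sample


# Toy pool of placeholder IDs, used only to exercise the function.
toy_pool = {
    (field, year): [f"{field}-{year}-{k}" for k in range(50)]
    for fields in FIELDS.values()
    for field in fields
    for year in YEARS
}
corpus = sample_corpus(toy_pool)
total = sum(len(ids) for ids in corpus.values())
print(total)  # 9 fields x 21 years x 10 articles = 1890
```

The total of 1890 follows directly from the design: 9 fields, 21 years, and 10 articles per field per year.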

Measurement

The cognitive encoding difficulty is measured by entropy, a concept in information theory (Shannon, 1948). A higher entropy value indicates more information content, hence more effort in cognitive encoding (Sayood, 2018). In language studies, the entropy of a given text, represented by $H(T)$, can be calculated as in Formula 1:

$$H(T) = -\sum_{i=1}^{V} p(w_i)\,\log_2 p(w_i) \tag{1}$$

where $p(w_i)$ is the probability of the occurrence of the $i$th word type in a text $T$, $V$ is the total number of word types in the text, and $H(T)$ can be seen as the average information content of word types (Bentz et al., 2017).

It should be noted that the Shannon entropy calculated according to Formula 1 tends to be underestimated for short texts, which could compromise the precision of entropy calculation in abstracts. To solve the problem, we employed an advanced algorithm, i.e., the Miller-Madow (MM) entropy (Hausser and Strimmer, 2014), which counterbalances the underestimation bias for small text sizes by adding a correction to the Shannon entropy (Bentz et al., 2017; Hausser and Strimmer, 2009). The MM entropy is calculated as in Formula 2:

$$H_{MM}(T) = H(T) + \frac{V - 1}{2N} \tag{2}$$

where $V$ is the number of word types and $N$ is the number of word tokens in the text.
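As a minimal sketch of the two entropy measures, the following Python code computes the Shannon entropy over word types and then adds the Miller-Madow correction (V − 1)/(2N). Several points are assumptions rather than details given in the text: the logarithm is taken to base 2, the correction term is added without rescaling, and the regular-expression tokenizer is a simplistic stand-in for whatever preprocessing was actually used.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and keep alphabetic tokens (an assumed, simplistic tokenizer)."""
    return re.findall(r"[a-z]+(?:'[a-z]+)?", text.lower())


def shannon_entropy(tokens):
    """Shannon entropy of the word-type distribution, in bits (base 2 is assumed)."""
    if not tokens:
        raise ValueError("cannot compute entropy of an empty text")
    counts = Counter(tokens)
    n = len(tokens)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())


def miller_madow_entropy(tokens):
    """Shannon entropy plus the Miller-Madow correction (V - 1) / (2N)."""
    v = len(set(tokens))  # V: number of word types
    n = len(tokens)       # N: number of word tokens
    return shannon_entropy(tokens) + (v - 1) / (2 * n)


toy_tokens = ["a", "b", "a", "b"]        # two types, each with probability 0.5
print(shannon_entropy(toy_tokens))       # 1.0
print(miller_madow_entropy(toy_tokens))  # 1.0 + (2 - 1) / (2 * 4) = 1.125
```

Because the correction grows as N shrinks, it matters most for short texts such as abstracts.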

The cognitive decoding difficulty is measured by MDD, which is an average of dependency distances. Dependency distance (DD) refers to the linear distance between two words within a syntactic dependency relation (Tesnière, 1959; Liu et al., 2017). It is regarded as an indicator of syntactic complexity and cognitive load (Liu, 2008; Liu et al., 2022). Higher DD indicates higher syntactic complexity and heavier cognitive load (Gibson, 1998), hence more effort in cognitive decoding.

The DD between two dependency-related words (known as the governor and the dependent) is defined as the linear positional distance between the two paired words (Hudson, 2010; Tesnière, 1959). It can be calculated as in Formula 3:

$$DD = |P_G - P_D| \tag{3}$$

where $P_G$ refers to the position of the governor and $P_D$ to the position of the dependent.

The MDD of a sentence can then be calculated as in Formula 4:

$$MDD = \frac{1}{N}\sum_{i=1}^{N} DD_i \tag{4}$$

where $DD_i$ is the (absolute) dependency distance of the $i$th dependency pair in the sentence, and $N$ is the total number of dependency relations in the sentence.
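The calculation can be illustrated with a small sketch. It assumes each sentence is represented as a list of governor positions, in the style of a CoNLL head column: `heads[i]` is the 1-based position of the governor of word i + 1, and 0 marks the root, which has no governor and is therefore skipped. This representation, the function names, and the example parse are illustrative assumptions; how punctuation was treated is not stated in the text.

```python
def dependency_distances(heads):
    """Absolute governor-dependent distances for one sentence.

    heads[i] is the 1-based position of the governor of word i + 1;
    0 marks the root, which contributes no dependency relation.
    """
    return [abs(head - pos) for pos, head in enumerate(heads, start=1) if head != 0]


def mean_dependency_distance(heads):
    """Average of the dependency distances over all relations in the sentence."""
    distances = dependency_distances(heads)
    if not distances:
        raise ValueError("sentence has no dependency relations")
    return sum(distances) / len(distances)


# "The student read a book": The -> student, student -> read, read = root,
# a -> book, book -> read (an illustrative hand-made parse).
toy_heads = [2, 3, 0, 5, 3]
print(dependency_distances(toy_heads))      # [1, 1, 1, 2]
print(mean_dependency_distance(toy_heads))  # 5 / 4 = 1.25
```

In practice the head positions would come from a dependency parser rather than being written by hand.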

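In order to verify the reliability of our metrics, we calculated the Flesch Reading Ease (FRE) scores of our texts (see Table 1) and conducted Pearson correlation analyses between FRE and entropy/MDD. The FRE scores can be calculated as in Formula 5:

$$FRE = 206.835 - 1.015\left(\frac{\text{total words}}{\text{total sentences}}\right) - 84.6\left(\frac{\text{total syllables}}{\text{total words}}\right) \tag{5}$$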

Data processing and statistical analysis

We used the R programming language (Version 4.0.3) to calculate entropy values. First, we calculated the frequencies of word occurrence in an abstract. Second, we calculated the probability of occurrence of each word. Finally, we calculated the entropy of the abstract according to Formulas 1 and 2.

As for the calculation of MDD, we used Stanford CoreNLP (Version 3.9.2) parser (Manning et al., 2014), an open-source probabilistic natural language parsing software in the Python programming environment (Version 3.10). First, we annotated the syntactic dependencies of the sentences in each abstract. Second, we calculated the DD of each dependency pair according to Formula 3. Finally, we calculated the MDD of each sentence according to Formula 4.

The FRE scores were also calculated in the Python programming environment (Version 3.10). First, we calculated the total number of sentences in an abstract. Second, we calculated the total number of syllables in an abstract. Third, we calculated the total number of words in an abstract. Finally, we calculated the FRE of each abstract according to Formula 5.
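The steps above can be sketched as follows, using the standard Flesch (1948) formula: 206.835 minus 1.015 times the average sentence length in words, minus 84.6 times the average number of syllables per word. The vowel-group syllable counter is a rough heuristic introduced here for illustration; the text does not say which syllable counter was used.

```python
import re


def count_syllables(word):
    """Rough heuristic: count groups of consecutive vowels, with a minimum of one."""
    return max(1, len(re.findall(r"[aeiouy]+", word.lower())))


def flesch_reading_ease(n_words, n_sentences, n_syllables):
    """Flesch Reading Ease from the three counts described in the text."""
    if n_words <= 0 or n_sentences <= 0:
        raise ValueError("word and sentence counts must be positive")
    words_per_sentence = n_words / n_sentences
    syllables_per_word = n_syllables / n_words
    return 206.835 - 1.015 * words_per_sentence - 84.6 * syllables_per_word


# Toy counts: 100 words, 5 sentences, 150 syllables.
# 206.835 - 1.015 * 20 - 84.6 * 1.5 = 59.635 (up to floating-point rounding)
score = flesch_reading_ease(100, 5, 150)
print(round(score, 3))  # 59.635
```

Higher FRE scores indicate easier text, so a downward trend in FRE corresponds to increasing difficulty.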

After obtaining the values of entropy and MDD, we wrote an R script to calculate the mean values for each year and each discipline and plotted them together with a fitted curve. We then conducted simple linear regressions to test whether there are significant diachronic changes in entropy or MDD. The significance level was set at 0.05.
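A simple linear regression of yearly means on year can be sketched in plain Python as below. With 21 yearly means, the F statistic has (1, 19) degrees of freedom. The toy values are synthetic and serve only to exercise the function; they are not the study's data. The p-value would be obtained from the F distribution, for example with a statistics library such as SciPy, which this sketch deliberately leaves out.

```python
def simple_linear_regression(x, y):
    """Ordinary least squares for y = intercept + slope * x, with the model F statistic."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need at least three paired observations")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    syy = sum((yi - mean_y) ** 2 for yi in y)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    ss_regression = slope * sxy          # variation explained by the fitted line
    ss_residual = syy - ss_regression    # variation left unexplained
    df = (1, n - 2)
    f_stat = ss_regression / (ss_residual / df[1])
    return {"slope": slope, "intercept": intercept, "F": f_stat, "df": df}


# Synthetic yearly means: a slope of 0.01 per year plus alternating noise.
years = list(range(2000, 2021))
toy_means = [7.0 + 0.01 * k + 0.02 * (-1) ** k for k in range(21)]
fit = simple_linear_regression(years, toy_means)
print(fit["df"])  # (1, 19)
```

A positive slope with a significant F test would indicate an upward diachronic trend, and a negative slope a downward one.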

Results

The descriptive statistics of FRE scores are reported in Table 1 and a summary of linear regression models is reported in Table 2. The results show a downward trend of FRE scores from 2000 to 2020 (F(1, 19) = 51.07, Beta = −0.271, p = 8.589e−07). The results of Pearson correlation analyses show that the FRE scores are significantly correlated with entropy (r = −0.754, p < 0.001) and MDD (r = 0.488, p = 0.025), indicating that our metrics are reliable.

The group means of entropy values are plotted in Fig. 1 in general and Fig. 2 by discipline, with the descriptive statistics in Table 3 and a summary of linear regression models in Table 4. From Fig. 1 and Tables 3 and 4 we can see an upward trend of entropy values from 2000 to 2020 (F(1, 19) = 84.744, Beta = 0.009, p < 0.001). As to disciplinary variations, we can see from Fig. 2 and Tables 3 and 4 that the entropy values of natural sciences and social sciences follow the upward trend (NS: F(1, 19) = 21.389, Beta = 0.010, p = 0.006; SS: F(1, 19) = 114.000, Beta = 0.014, p < 0.001), while the humanities show no sign of significant change (F(1, 19) = 1.585, Beta = 0.003, p = 0.891). The results show that the cognitive encoding difficulty of abstracts has been increasing in the past two decades; yet the abstracts in humanities are an exception, showing no significant diachronic change in encoding difficulty.

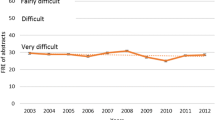

The group means of MDD values are plotted in Fig. 3 in general and Fig. 4 by discipline, with the descriptive statistics in Table 5 and a summary of linear regression models in Table 6. From Fig. 3, Tables 5 and 6 we can see a downward trend of MDD values from 2000 to 2020 (F(1, 19) = 7.163, Beta = −0.005, p = 0.003). As to disciplinary variations, we can see from Fig. 4, Tables 5 and 6 that the MDD values of natural sciences and humanities resemble the downward trend (NS: F(1, 19) = 12.852, Beta = −0.012, p = 0.001; HM: F(1, 19) = 7.526, Beta = −0.006, p = 0.003), while the social sciences show no sign of significant change (F(1, 19) = 1.536, Beta = 0.003, p = 0.521). The results show that the cognitive decoding difficulty of abstracts has been decreasing in the past two decades; yet the abstracts in social sciences are an exception, showing no significant diachronic change in decoding difficulty.

Discussion

Inspired by cognitive science and information theory, the present study used entropy and MDD to investigate the diachronic change in text difficulty of research article abstracts across disciplines. To the best of our knowledge, this is the first study of its kind. The results show that in general, the cognitive encoding difficulty of abstracts has been increasing in the past two decades, while the cognitive decoding difficulty of abstracts has been decreasing. Regarding the disciplinary variations, the abstracts in humanities differ from those in natural and social sciences in that the humanities show no significant diachronic change in encoding difficulty, and the abstracts in social sciences differ from those in the other two disciplinary areas in that the social sciences show no significant diachronic change in decoding difficulty. In the rest of this section, we will discuss the major findings.

The cognitive encoding difficulty of abstracts

In the past two decades, the entropy values of abstracts showed an upward trend, suggesting an increase in information content and hence cognitive encoding difficulty. The increase could be attributed to the increasing importance of the informative trait of abstracts. The abstracts, as the first and a frequently read part of a research article (Nicholas et al., 2003; Jin et al., 2021), bear the task of attracting readers. They should epitomize the full text and present the core information in a limited textual space that is often as short as a few hundred words. This highly condensed feature makes abstracts a rather informative genre. If an abstract is not informative, it is likely that readers would not proceed with reading the remaining sections (Lambert and Lambert, 2011). Nowadays, the “publish or perish” issue has become more and more severe in the academic community. In order to attract readers (especially the first readers: the journal editors and anonymous reviewers) from the very beginning of their articles and to increase the scientific and social impact of their research, researchers may have been writing more and more informative abstracts. Besides, the accumulative nature of academic knowledge may be another possible reason. Academic knowledge does not come out of nowhere. It has to be explored and unraveled by innovative research. A piece of research can be regarded as innovative only if it reports novel findings and makes substantial contributions to the expansion of human knowledge. That is, it must build on and go beyond previous studies, adding new knowledge to the existing body. With the progress of academic research, more and more knowledge, in terms of concepts, topics, theories, paradigms, and methods, has been accumulated (Dolnicar and Chapple, 2015). Owing to this accumulative nature, recent studies may carry more information content than earlier ones, giving rise to an increase in encoding difficulty.

This finding is consistent with the results of previous studies (e.g. Plavén-Sigray et al., 2017; Wen and Lei, 2022; Ante, 2022), which also found that abstracts are becoming more and more difficult to read, as indicated by traditional measurements such as FRE, SMOG, and NDC. The measurements used in these studies only capture surface-level linguistic features such as the number of syllables, the number of polysyllabic words, and the average sentence length. Instead of measuring these linguistic features, we measured the informativeness of abstracts from the perspective of information theory, and have thus complemented and extended the previous findings.

Regarding the disciplinary variations, the abstracts in humanities diverge from those in natural and social sciences in that the humanities show no significant increase in encoding difficulty. The divergence may be attributed to the disciplinary features of humanities. Owing to their multi-dimensional and interpretive nature, the humanities lack a unified scientific research paradigm (Becher and Trowler, 2001; Hyland, 2000), as well as a steady accumulation of theories and practices. This is in sharp contrast to the scientific paradigms with a unique selling point epitomized as objectivity (Omidian et al., 2018), which emphasize the accumulation of quantitative evidence (Becher, 1994). Another reason may be that our study only tracked changes over two decades, a span in which the change may be too subtle to be detected. Although human spirits and cultures, as the research objects of the humanities, were found to be accumulative as time goes by (Zhu and Lei, 2018), they could not soar within such a short period. This assumption is echoed by the results of Juola (2013), Liu (2016), and Zhu and Lei (2018), which show that cultural complexity has increased by only about five percent in 100 years. Bearing this in mind, it is possible that a significant increase would have been detected in our study if a longer period had been investigated.

The cognitive decoding difficulty of abstracts

In the past two decades, the MDD values of abstracts showed a downward trend, suggesting a decrease in dependency distance and hence in cognitive decoding difficulty. The decrease could be attributed to the promotional trait of abstracts. To promote a piece of research, the abstract should be concise enough for the readers to understand (Fulcher, 1997). A full understanding entails a heavy cognitive load. Owing to the constraint of cognitive resources, a heavy load in cognitive decoding would hinder readers from understanding. If the abstract is too difficult for the readers to understand, it cannot serve the goal of promotion well. Therefore, in order to enable readers to fully grasp the meaning of the abstract, writers would try to lower the cognitive burden on readers, resulting in a decrease in decoding difficulty. Besides, the decrease in decoding difficulty can also be accounted for by the popularization of academic knowledge. Nowadays academic research is no longer regarded as a mysterious activity hidden inside the ivory tower. Instead, it is believed that science is an attempt to understand our complicated world and to help people lead more satisfactory lives, and scientists have the professional duty to do so (Hartley et al., 2002). To popularize their knowledge, researchers would resort to clear writing as a way of narrowing the gaps between researchers, practitioners, and the public (Hartley et al., 2002). Considering that academic writing in recent decades has been found to become more informal (Hyland and Jiang, 2017) and that the use of English as a lingua franca is expected to lead to syntactic simplification (Trudgill, 2011), the decrease in decoding difficulty could be regarded as another reflection of this popularization. What is more, the decrease in decoding difficulty could also be accounted for by the mechanism of dependency distance minimization in human languages. In the field of quantitative linguistics, the tendency for dependency distance to be minimized has been found in a variety of languages (e.g. Futrell et al., 2015; Lei and Wen, 2019; Liu, 2008). Dependency distance minimization is driven by the fact that human language is a complex adaptive system following the principle of least effort (Zipf, 1949) and is shaped by efficiency in communication (Gibson et al., 2019). Research article abstracts, which seek to efficiently transmit academic knowledge while reducing readers’ effort in text decoding, also follow this universal trend. In the reading of research article abstracts, the minimization of dependency distance benefits readers by reducing the cognitive processing difficulty. Given that cognitive resources remain constant, spending fewer resources on text decoding means that readers can devote more cognitive resources to the content of the text rather than the sheer form of language, giving rise to a better understanding of the content.
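To make the measure concrete, the following minimal Python sketch illustrates how mean dependency distance might be computed. It assumes the commonly used definition (Liu, 2008), under which the dependency distance of a word is the absolute difference between its linear position and that of its head, and the root of each sentence has no governor. The function name `mean_dependency_distance`, the head-index input format, and the two toy parses are hypothetical illustrations rather than the pipeline used in this study; in practice the head indices would be produced by a dependency parser.

```python
from typing import List


def mean_dependency_distance(sentences: List[List[int]]) -> float:
    """Return the mean absolute dependency distance of a text.

    Each sentence is a list of head positions: the i-th entry (1-based)
    is the position of the head of word i, and 0 marks the root.
    Root words are skipped because they have no governor.
    """
    total_distance = 0
    link_count = 0
    for heads in sentences:
        for position, head in enumerate(heads, start=1):
            if head == 0:  # the root has no dependency link
                continue
            total_distance += abs(head - position)
            link_count += 1
    if link_count == 0:
        raise ValueError("no dependency links found")
    return total_distance / link_count


# Hypothetical hand-made parses (assumption: not taken from the corpus).
# "The student read the paper"
short_links = [2, 3, 0, 5, 3]
# "The paper that the student read was long"
long_links = [2, 7, 6, 5, 6, 2, 0, 7]

print(mean_dependency_distance([short_links]))              # sentence level
print(mean_dependency_distance([long_links]))               # sentence level
print(mean_dependency_distance([short_links, long_links]))  # text level
```

In this toy case the sentence with the embedded relative clause yields the larger value, which is the sense in which a higher MDD is taken to indicate heavier decoding load.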

Our finding of decreasing decoding difficulty is inconsistent with the results of the vast majority of previous studies (e.g. Lei and Yan, 2016; Plavén-Sigray et al., 2017; Vergoulis et al., 2019; Wang et al., 2022; Wen and Lei, 2022), which found an increasing trend in text difficulty. The reason for the inconsistency may be that these studies did not separate text difficulty into the dimensions of encoding and decoding difficulty. Since text difficulty is thought to be multi-dimensional, it is worthwhile to measure it from multiple perspectives. Meanwhile, our finding is in accordance with a line of dependency-based studies (e.g. Futrell et al., 2015; Lei and Wen, 2019; Lu and Liu, 2020; Liu et al., 2022). For example, Futrell et al. (2015) found a minimization of dependency distance by analyzing a large-scale parsed corpus of 37 languages. Lei and Wen (2019) verified this minimization in the English language from a diachronic perspective and concluded that dependency distance minimization is driven by the evolution of human cognitive mechanisms. Lu and Liu (2020) found that, besides sentences, noun phrases also show the tendency of dependency distance minimization, thus extending this tendency to the level of phrases. These studies drew on genres other than academic writing. By contrast, we analyzed research article abstracts, a typical academic genre. Our results thus reaffirm that dependency distance minimization also holds in academic texts.

Regarding the disciplinary variations, the abstracts in the social sciences diverge from those in the natural sciences and humanities in that the social sciences show no significant decrease in decoding difficulty. One reason for the divergence may be that, compared with the natural sciences and humanities, the social sciences are in effect cross-disciplinary areas that employ the methods of the natural sciences to explore human beings and society, subjects traditionally within the scope of the humanities. They resemble the natural sciences in terms of research orientation, modes of knowledge production, and methods, and resemble the humanities in terms of interpretation and textual construction (Li et al., 2022; Widdowson, 2007). This cross-disciplinary feature demands constant updates and a complex mixture of research theory, paradigm, and practice. However, a social scientist cannot possibly be versed in all of them. For example, a social scientist who is good at questionnaire surveys may find it difficult to understand a study based on social network analysis. Therefore, it is difficult to significantly reduce readers’ decoding difficulty, even though writers may strive to control it. Another reason is that social scientists may intentionally make their writing difficult to read. Since the social sciences are sometimes not regarded as being as hard or scientific as the natural sciences, social scientists could be eager to be acknowledged as real scientists (Xiao et al., 2022, 2023). Bearing this in mind, social scientists may resist simplifying their writing. Although clear writing is related to clear thinking (Langer and Flihan, 2000), simplicity could also be taken to mean easiness (Montoro, 2018). Therefore, social scientists may wish to make their papers complex, so as to emphasize the scientific side of their research and gain a sense of presence in the scientific community (Kuteeva and Airey, 2014).

Conclusion

This study investigated the diachronic change in text difficulty of research article abstracts across the areas of natural sciences, social sciences, and humanities. A cognitive information-theoretic approach that uses entropy and mean dependency distance as indicators of cognitive encoding/decoding difficulty was adopted. The results show that, in general, the cognitive encoding difficulty of abstracts has been increasing in the past two decades, while the cognitive decoding difficulty has been decreasing. The increase in encoding difficulty could be attributed to the informative trait of abstracts and the accumulative nature of academic knowledge, and the decrease in decoding difficulty could be attributed to the promotional feature of abstracts, the popularization of academic knowledge, and the mechanism of dependency distance minimization in human languages. Regarding the disciplinary variations, the humanities show no significant diachronic change in encoding difficulty, possibly due to their multi-dimensional and interpretive nature; the social sciences show no significant diachronic change in decoding difficulty, possibly because the cross-disciplinary feature of the social sciences makes it difficult to significantly reduce readers’ decoding difficulty and because social scientists may intentionally make their writing difficult to read, hoping to be acknowledged as real scientists.
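As an illustration of the entropy index, the short Python sketch below computes Shannon entropy (Shannon, 1948) over the word-frequency distribution of a text, H = -Σ p(w) log2 p(w). It rests on several assumptions that should be read as such: entropy is taken over word types, tokenization is a naive lowercase whitespace split, and the plain plug-in (maximum likelihood) estimator is used, which may differ from the estimator applied in this study. The function name `word_entropy` and the sample strings are hypothetical.

```python
import math
from collections import Counter


def word_entropy(text: str) -> float:
    """Plug-in Shannon entropy (in bits) of the word distribution of a text.

    Assumption: tokens are obtained by a lowercase whitespace split,
    which ignores punctuation handling and lemmatization.
    """
    tokens = text.lower().split()
    if not tokens:
        raise ValueError("text contains no tokens")
    counts = Counter(tokens)
    total = len(tokens)
    # H = -sum over word types of p * log2(p)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


# Hypothetical toy inputs (assumption: not drawn from the corpus).
print(word_entropy("we test we test we test"))          # repetitive wording
print(word_entropy("entropy indexes lexical variety"))  # no repeated words
```

A text that keeps repeating the same words scores lower than one of similar length with more varied wording, which is why higher entropy is read as a heavier information load. Because the plug-in estimate depends on text length, comparisons are only meaningful between samples of comparable size or with a bias-corrected estimator.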

This study has several implications. Theoretically, we deconstructed text difficulty into two aspects, i.e. the encoding difficulty and the decoding difficulty, by drawing a theoretical basis from cognitive science and information theory. This novel approach does not regard text difficulty as an indivisible and static construct but as a result of the dynamic interactions among readers, texts, and tasks, thus broadening the dimensions of text difficulty research. Methodologically, we have proposed a new way of calculating difficulty, which is theoretically sound and methodologically neat, as well as easy to use and interpret. However, this method has not yet received attention in the field of text difficulty research. More interesting findings could be revealed if the methods in our study were adopted in follow-up studies on text difficulty. Practically, as one of the few cross-disciplinary studies, this study deepens the understanding of the disciplinary variations in academic writing and the essence of research article abstracts. Our results thus offer guidance to research article writers and readers, the scientific community, and the publishing industry. For research article writers, it is necessary to have a basic understanding of the characteristics of academic texts in their disciplines. Bearing this in mind, writers could strive to approach the norms and conventions in their disciplinary communities, so as to make their work accepted by community members. For readers, especially non-native speakers of English who may feel anxious about their English reading, it is suggested that they focus on scientific knowledge rather than the English language, as we found that the decoding difficulty has been decreasing while the encoding difficulty has been increasing. A reasonable distribution of cognitive resources would benefit reading comprehension. Given the increasing encoding difficulty, a minimum prerequisite knowledge of related concepts, topics, theories, and paradigms would be helpful. For the scientific community, it is suggested that researchers reach a consensus on the disciplinary variations and establish standards of scientific writing accordingly (as is done by some journals and associations), so as to maximize the efficiency of academic communication. For academic publishers, it is worth attempting to incorporate the metrics of encoding/decoding difficulty in the assessment of potential high-impact articles and the prediction of hot topics.

Despite its significance, this study has several limitations. First, we employed the systematic stratified random sampling method to build our corpora, which requires the manual selection of articles and is thus very laborious. Future studies could use big data tools such as web crawlers to automatically retrieve articles, covering a wider range of fields, journals, articles, and periods. Second, since this is an initial attempt to study cognitive encoding/decoding difficulty with a combination of entropy and dependency, the indexes may not be exhaustive. Future studies could use more indexes, such as relative entropy, normalized dependency distance, and dependency relations, to explore cognitive encoding/decoding difficulty in a more fine-grained way. Third, since we only studied abstracts, caution is needed when generalizing our findings to the full texts. The same methods could be applied to full-length research articles to reveal their unique features.
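As a pointer for such follow-up work, one of the suggested indexes, relative entropy (Kullback-Leibler divergence), could be sketched in Python as below. This is only an illustrative sketch under stated assumptions and is not part of the present study: the two word distributions are estimated over their shared vocabulary with additive smoothing (the hypothetical parameter `alpha`) so that no probability is zero, and tokenization is a naive lowercase whitespace split. The function name `relative_entropy` and the sample strings are likewise hypothetical.

```python
import math
from collections import Counter


def relative_entropy(text_p: str, text_q: str, alpha: float = 1.0) -> float:
    """Kullback-Leibler divergence D(P || Q) in bits between word distributions.

    Assumption: both distributions use additive (Laplace-style) smoothing
    with constant `alpha` over the union vocabulary, so every word has a
    non-zero probability in both texts.
    """
    if alpha <= 0:
        raise ValueError("alpha must be positive to avoid zero probabilities")
    counts_p = Counter(text_p.lower().split())
    counts_q = Counter(text_q.lower().split())
    vocab = set(counts_p) | set(counts_q)
    if not vocab:
        raise ValueError("both texts are empty")
    total_p = sum(counts_p.values()) + alpha * len(vocab)
    total_q = sum(counts_q.values()) + alpha * len(vocab)
    divergence = 0.0
    for word in vocab:
        p = (counts_p[word] + alpha) / total_p
        q = (counts_q[word] + alpha) / total_q
        divergence += p * math.log2(p / q)
    return divergence


# Hypothetical toy inputs (assumption: not drawn from the corpus).
earlier = "the results show a clear effect"
later = "the findings reveal a robust effect"
print(relative_entropy(later, earlier))  # divergence of later from earlier wording
print(relative_entropy(later, later))    # identical texts give 0.0
```

Note that the measure is asymmetric, so the direction of comparison (for example, later abstracts against earlier ones) has to be fixed in advance, and the value depends on the smoothing constant chosen.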

Data availability

The datasets generated during and/or analyzed during the current study are available from the corresponding author on reasonable request.

References

Alghamdi EA, Gruba P, Velloso E (2022) The relative contribution of language complexity to second language video lectures difficulty assessment. Mod Language J 106:393–410

Ante L (2022) The relationship between readability and scientific impact: evidence from emerging technology discourses. J Informetr 16:101252

Becher T (1994) The significance of disciplinary differences. Stud High Educ 19:151–161

Becher T, Trowler P (2001) Academic tribes and territories. McGraw-Hill Education, UK

Benjamin RG (2012) Reconstructing readability: recent developments and recommendations in the analysis of text difficulty. Educ Psychol Rev 24:63–88

Bentz C, Alikaniotis D, Cysouw M, Ferrer-i-Cancho R (2017) The entropy of words-learnability and expressivity across more than 1000 languages. Entropy 19:275

Bottle R, Rennie J, Russ S, Sardar Z (1983) Changes in the communication of chemical information I: some effects of growth. J Inf Sci 6:103–108

Crossley SA, Skalicky S, Dascalu M (2019a) Moving beyond classic readability formulas: new methods and new models. J Res Read 42:541–561

Crossley SA, Kyle K, Dascalu M (2019b) The tool for the automatic analysis of Cohesion 2.0: integrating semantic similarity and text overlap. Behav Res Methods 51:14–27

Dale E, Chall JS (1948) A formula for predicting readability: instructions. Educ Res Bull 27:37–54

Didegah F, Thelwall M (2013) Which factors help authors produce the highest impact research? Collaboration, journal and document properties. J Informetr 7:861–873

Dolnicar S, Chapple A (2015) The readability of articles in tourism journals. Ann Tour Res 52:161–166

Dowling M, Hammami H, Zreik O (2018) Easy to read, easy to cite? Econ Lett 173:100–103

Filighera A, Steuer T, Rensing C (2019) Automatic text difficulty estimation using embeddings and neural networks. In: Scheffel M, Broisin J, Pammer-Schindler V, Ioannou A, Schneider J (eds) Transforming learning with meaningful technologies, vol 11722. Springer, Cham, pp. 335–348

Flesch R (1948) A new readability yardstick. J Appl Psychol 32:221–233

François T, Miltsakaki E (2012) Do NLP and machine learning improve traditional readability formulas? In: Proceedings of the First Workshop on Predicting and Improving Text Readability for target reader populations, June 2012. Association for Computational Linguistics, Montréal, Canada, pp. 49–57

Fulcher G (1997) Text difficulty and accessibility: reading formulae and expert judgement. System 25:497–513

Futrell R, Mahowald K, Gibson E (2015) Large-scale evidence of dependency length minimization in 37 languages. Proc Natl Acad Sci USA 112:10336–10341

Gazni A (2011) Are the abstracts of high impact articles more readable? Investigating the evidence from top research institutions in the world. J Inf Sci 37:273–281

Gibson E (1998) Linguistic complexity: locality of syntactic dependencies. Cognition 68:1–76

Gibson E, Futrell R, Piantadosi SP, Dautriche I, Mahowald K, Bergen L, Levy R (2019) How efficiency shapes human language. Trends Cogn Sci 23:389–407

Goldman SR, Lee CD (2014) Text complexity: state of the art and the conundrums it raises. Elem School J 115:290–300

Graesser AC, McNamara DS, Louwerse MM, Cai Z (2004) Coh-Metrix: analysis of text on cohesion and language. Behav Res Methods Instrum Comput 36:193–202

Graf-Vlachy L (2022) Is the readability of abstracts decreasing in management research? Rev Manag Sci 16:1063–1084

Gullifer J, Titone D (2020) Characterizing the social diversity of bilingualism using language entropy. Bilingualism 23:283–294

Hartley J (2016) Is time up for the Flesch measure of reading ease? Scientometrics 107:1523–1526

Hartley J, Pennebaker JW, Fox C (2003) Abstracts, introductions and discussions: how far do they differ in style? Scientometrics 57:389–398

Hartley J, Sotto E, Pennebaker J (2002) Style and substance in Psychology: are influential articles more readable than less influential ones? Soc Stud Sci 32:321–334

Hausser J, Strimmer K (2009) Entropy inference and the James-Stein estimator, with application to nonlinear gene association networks. J Mach Learn Res 10:1469–1484

Hausser J, Strimmer K (2014) Entropy: estimation of entropy, mutual information and related quantities. R package version 1

Hiranuma S (1999) Syntactic difficulty in English and Japanese: a textual study. UCL Work Pap Linguist 11:309–322

Hudson R (2010) Resilient regions in an uncertain world: wishful thinking or a practical reality? Camb J Reg Econ Soc 3:11–25

Hyland K (2000) Disciplinary discourse: social interactions in academic writing. Longman, London

Hyland K, Tse P (2005) Evaluative that constructions: Signalling stance in research abstracts. Funct Language 12:39–63

Hyland K (2015) Genre, discipline and identity. J Engl Acad Purp 19:32–43

Hyland K, Jiang F (2017) Is academic writing becoming more informal? Engl Specif Purp 45:40–51

Jiang FK, Hyland K (2017) Metadiscursive nouns: interaction and cohesion in abstract moves. Engl Specif Purp 46:1–14

Jin T, Duan H, Lu X, Ni J, Guo K (2021) Do research articles with more readable abstracts receive higher online attention? Evidence from science. Scientometrics 126:8471–8490

Juola P (2013) Using the Google N-Gram corpus to measure cultural complexity. Lit Linguist Comput 28:668–675

Karimi H (2022) Greater entropy leads to more explicit referential forms during language production. Cognition 225:105093

Kincaid JP, Fishburne RP, Rogers RL, Chissom BS (1975) Derivation of new readability formulas (automated readability index, fog count, and Flesch reading ease formula) for Navy enlisted personnel. Research Branch Report, Chief of Naval Technical Training: Naval Air Station Memphis, pp. 8–75

Kintsch W, Van Dijk T (1978) Toward a model of text comprehension and production. Psychol Rev 85:363–394

Klare GR (1963) Measurement of readability. Iowa State University Press, Ames, IA

Kuteeva M, Airey J (2014) Disciplinary differences in the use of English in higher education: reflections on recent language policy developments. High Educ 67:533–549

Lambert VA, Lambert CE (2011) Writing an appropriate title and informative abstract. Pac Rim Int J Nurs Res 15:171–172

Landauer T, Foltz P, Laham D (1998) An introduction to latent semantic analysis. Discourse Process 25:259–284

Langer JA, Flihan S (2000) Writing and reading relationships: constructive tasks. In: Indrisano R, Squire JR (eds) Perspectives on writing: research, theory, and practice. International Reading Association, pp. 112–139

Lei L, Yan S (2016) Readability and citations in information science: evidence from abstracts and articles of four journals (2003–2012). Scientometrics 108:1155–1169

Lei L, Wen J (2019) Is dependency distance experiencing a process of minimization? A diachronic study based on the State of the Union addresses. Lingua 239:102762

Li Y, Nikitina L, Riget PN (2022) Development of syntactic complexity in Chinese university students’ L2 argumentative writing. J Engl Acad Purp 56:101099

Liu H (2008) Dependency distance as a metric of language comprehension difficulty. J Cogn Sci 9:159–191

Liu H, Xu C, Liang J (2017) Dependency distance: a new perspective on syntactic patterns in natural languages. Phys Life Rev 21:171–193

Liu Z (2016) A diachronic study on British and Chinese cultural complexity with Google Books Ngrams. J Quant Linguist 23:361–373

Liu X, Zhu H, Lei L (2022) Dependency distance minimization: a diachronic exploration of the effects of sentence length and dependency types. Humanit Soc Sci Commun 9:420

Lu J, Liu H (2020) Do English noun phrases tend to minimize dependency distance? Aust J Linguist 40:246–262

Manning CD, Surdeanu M, Bauer J, Finkel JR, Bethard S, McClosky D (2014) The Stanford CoreNLP natural language processing toolkit. In: Proceedings of 52nd annual meeting of the Association for Computational Linguistics: System Demonstrations. Baltimore, Maryland, Association for Computational Linguistics. pp. 55–60

Marino Fages D (2020) Write better, publish better. Scientometrics 122:1671–1681

McLaughlin GH (1969) SMOG grading-a new readability formula. J Read 12:639–646

Mesmer HA, Cunningham JW, Hiebert EH (2012) Toward a theoretical model of text complexity for the early grades: Learning from the past, anticipating the future. Read Res Q 47:235–258

Montoro R (2018) Investigating syntactic simplicity in popular fiction: a corpus stylistics approach. In: Rethinking language, text and context. Routledge, London, pp. 63–78

National Governors Association Center for Best Practices, Council of Chief State School Officers (2010) Common Core State Standards for English language arts. http://www.corestandards.org/wp-content/uploads/ELA_Standards.pdf. Accessed 10 Nov 2022

Nicholas D, Huntington P, Watkinson A (2003) Digital journals, Big Deals and online searching behavior: a pilot study. Aslib Proc: New Inf Perspect 55:84–109

Omidian T, Shahriari H, Siyanova-Chanturia A (2018) A cross-disciplinary investigation of multi-word expressions in the moves of research article abstracts. J Engl Acad Purp 36:1–14

Ouyang J, Jiang J, Liu H (2022) Dependency distance measures in assessing L2 writing proficiency. Assess Writ 51:100603

Peterson S, Ostendorf M (2009) A machine learning approach to reading level assessment. Comput Speech Language 23:89–106

Plavén-Sigray P, Matheson GJ, Schiffler BC, Thompson WH (2017) The readability of scientific texts is decreasing over time. Elife 6:e27725

Saeeaw S, Tangkiengsirisin S (2014) Rhetorical variation across research article abstracts in Environmental Science and Applied Linguistics. Engl Language Teach 7:81–93

Sawyer AG, Laran J, Xu J (2008) The readability of marketing journals: are award-winning articles better written? J Mark 72:108–117

Sayood K (2018) Information theory and cognition: a review. Entropy 20:706

Shannon CE (1948) A mathematical theory of communication. Bell Syst Tech J 27:379–423

Sienkiewicz J, Altmann EG (2016) Impact of lexical and sentiment factors on the popularity of scientific papers. R Soc Open Sci 3:160140

Smeuninx N, De Clerck B, Aerts W (2020) Measuring the readability of sustainability reports: a corpus-based analysis through standard formulae and NLP. Int J Bus Commun 57:52–85

Suntara W, Usaha S (2013) Research article abstracts in two related disciplines: rhetorical variation between Linguistics and Applied Linguistics. Engl Language Teach 6:84–99

Tesnière L (1959) Eléments de la syntaxe structurale. Klincksieck, Paris

Tohalino JA, Quispe LV, Amancio DR (2021) Analyzing the relationship between text features and grants productivity. Scientometrics 126:4255–4275

Trudgill P (2011) Sociolinguistic typology: social determinants of linguistic complexity. Oxford University Press, Oxford

Valencia SW, Wixson KK, Pearson PD (2014) Putting text complexity in context: refocusing on comprehension of complex text. Elem School J 115:270–289

Venhuizen NJ, Crocker MW, Brouwer H (2019) Expectation-based comprehension: modeling the interaction of world knowledge and linguistic experience. Discourse Process 56:229–255

Vergoulis T, Kanellos I, Tzerefos A, Chatzopoulos S, Dalamagas T, Skiadopoulos S (2019) A study on the readability of scientific publications. In: Doucet A, Isaac A, Golub K, Aalberg T, Jatowt A (eds) Digital libraries for open knowledge, vol 11799. Springer, Cham, pp. 36–144

Wang S, Liu X, Zhou J (2022) Readability is decreasing in language and linguistics. Scientometrics 127:4697–4729

Warren NL, Farmer M, Gu T, Warren C (2021) Marketing ideas: How to write research articles that readers understand and cite. J Mark 85:42–57

Wen J, Lei L (2022) Adjectives and adverbs in life sciences across 50 years: implications for emotions and readability in academic texts. Scientometrics 127:4731–4749

Widdowson HG (2007) Discourse analysis. Oxford University Press, Oxford

Xiao W, Sun S (2020) Dynamic lexical features of PhD theses across disciplines: a text mining approach. J Quant Linguist 27:114–133

Xiao W, Liu J, Li L (2022) How is information content distributed in RA introductions across disciplines? An entropy-based approach. Res Corpus Linguist 10:63–83

Xiao W, Li L, Liu J (2023) To move or not to move: an entropy-based approach to the informativeness of research article abstracts across disciplines. J Quant Linguist 30:1–26

Yeung AW, Goto TK, Leung WK (2018) Readability of the 100 most-cited neuroimaging papers assessed by common readability formulae. Front Hum Neurosci 12:308

Zhu H, Lei L (2018) British cultural complexity: an entropy-based approach. J Quant Linguist 25:190–205

Zipf GK (1949) Human behavior and the principle of least effort: an introduction to human ecology. Addison-Wesley Press, Boston

Acknowledgements

This work was supported by the Social Science Foundation of Chongqing [Grant number 2019QNYY51]; the Science Foundation of Chongqing [Grant number cstc2020jcyj-msxmX0554]; the Fund of the Interdisciplinary Supervisor Team for Graduates Programs of Chongqing Municipal Education Commission [Grant number YDSTD1923]; the Fundamental Research Funds for the Central Universities [Grant number 2021CDJSKZX07]; and the Graduate Research Innovation Program of Chongqing [Grant number CYS22081].

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethical approval

This article does not contain any studies with human participants performed by any of the authors.

Informed consent

This article does not contain any studies with human participants performed by any of the authors.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zhao, X., Li, L. & Xiao, W. The diachronic change of research article abstract difficulty across disciplines: a cognitive information-theoretic approach. Humanit Soc Sci Commun 10, 194 (2023). https://doi.org/10.1057/s41599-023-01710-1
