Abstract

Pseudoscientific beliefs are widespread and have potentially harmful consequences. Being able to identify their presence and recognize the factors characterizing their endorsement is crucial to understanding their prevalence. In this preregistered study, we validated the English version of the Pseudoscience Endorsement Scale and investigated its correlates. A group of volunteers (n = 510), representative of the U.S. population, responded to this scale and to questionnaires measuring the presence of paranormal, denialist, and conspiracist beliefs. The validation resulted in a shorter version of the scale, the sPES. Participants also completed a scientific literacy questionnaire as well as bullshit detection and cognitive reflection tests. Scores obtained on the questionnaires corresponding to different unwarranted beliefs correlated with each other, suggesting a possible common basis. Scientific knowledge, cognitive reflection scores, and bullshit sensitivity were negatively associated with scores on the pseudoscience scale. Of note, bullshit receptivity was the main contributor in a model predicting pseudoscience endorsement.


Introduction

Different types of epistemically unwarranted beliefs, that is, beliefs lacking substantial evidence to justify them (Lobato et al., 2014), are present in our societies. Common subcategories are belief in paranormal phenomena, i.e., those that, if genuine, would be in conflict with basic principles of science (Broad, 1949); conspiracy theories: “lay beliefs that attribute the ultimate cause of an event, or the concealment of an event from public knowledge, to a secret, unlawful, and malevolent plot by multiple actors working together” (Swami et al., 2010, p. 749); pseudoscientific beliefs, defined by Losh and Nzekwe (2011, p. 579) as “cognitions about material phenomena that, although they lay claim to be ‘science’, use non-scientific evidentiary processes including authoritative assertion, anecdotes, or unelaborated ‘natural’ causes”; and science denialism, which refers to a “motivated rejection […] of well‐established scientific theories, simulating from a pseudoskeptical standpoint a false controversy among scientists” (Fasce and Picó, 2019, p. 619), and is considered by some authors to be a subtype of pseudoscience (Lobato et al., 2014; Fasce and Picó, 2019).

In the present study, we revised and translated into English the Pseudoscience Endorsement Scale (PES), originally created by Torres et al. (2020) in Spanish. Unlike other questionnaires, which intermingle different types of unjustified beliefs (Lobato et al., 2014; Majima, 2015; Fasce and Picó, 2019; Huete-Pérez et al., 2022), include assessments of scientific knowledge (Johnson and Pigliucci, 2004; Losh and Nzekwe, 2011), or exclusively evaluate the use of complementary and alternative medicine (Astin, 1998; Lindeman, 2011), the PES focuses only on the endorsement of pseudoscientific beliefs, thus avoiding other possible confounding variables, while still encompassing the variety of pseudoscientific myths and beliefs about pseudotherapies that proliferate in today’s society.

Our first goal was to validate this scale and to study the relationship between the presence of pseudoscientific beliefs and of other unwarranted beliefs. Following previous studies (Lobato et al., 2014; Fasce and Picó, 2019; Majima, 2015; Torres et al., 2020; Huete-Pérez et al., 2022), our hypothesis is that, despite possible conceptual and distributional differences (see below), endorsement of pseudoscientific beliefs positively correlates with the presence of other types of unwarranted beliefs (i.e., paranormal beliefs, science denialism and conspiracist beliefs).

Pseudoscientific beliefs are particularly interesting because they seem to be more widespread than other types of unwarranted beliefs. For instance, 59% and 68.6% of the Spanish population believe in the effectiveness of pseudoscientific therapies, such as homoeopathy and acupuncture, respectively; while only 22.7% and 27.9% believe in paranormal phenomena and superstitions, respectively (FECYT, 2017). Furthermore, believing in the effectiveness of certain pseudosciences may carry associated risks to people’s health and economy. For example, if they decide to deal with their illnesses or pathologies with (non-effective) pseudoscientific therapies they might face increased morbidity and even fatal consequences (Lim et al., 2011; Johnson et al., 2018a, 2018b). Hence, being able to adequately measure the presence of these beliefs and understanding the cognitive factors influencing their appearance is important because it would allow the design of strategies aimed to diminish their harmful influence.

Taking this into account, as a second goal, we aimed to study the endorsement of pseudoscientific beliefs in relation to possible cognitive correlates and key sociodemographic variables. An interesting aspect of pseudoscientific beliefs is their relation to formal education. Paranormal beliefs are known to be negatively related to education level (Majima, 2015; Aarnio and Lindeman, 2005), which suggests that formal education is an effective tool against the spreading of this kind of belief. In contrast, some data indicate that there could even be a positive relation between the length of education and endorsement of pseudoscientific belief (Astin, 1998; Barnes et al., 2009; CIS, 2018), suggesting that these beliefs might be resistant to formal education. In fact, pseudoscientific beliefs have been observed to be widespread among professionals with higher education, such as physicians (Posadzki et al., 2012) and teachers (Ferrero et al., 2016).

Even though achieving higher education, in general, appears not to be sufficient to prevent the endorsement of pseudoscientific beliefs, it could be the case that receiving specific scientific instruction does promote the rejection of pseudoscience. In this sense, Fasce and Picó (2019) observed a negative association between scientific knowledge and endorsement of pseudoscientific beliefs (note, however, that Majima, 2015, failed to observe this association). A positive relation between scientific knowledge and reduced pseudoscientific beliefs offers an encouraging possibility. Nevertheless, the predisposition to acquire scientific knowledge itself could be modulated by cognitive and meta-cognitive factors related to reasoning styles and analytic thinking. In relation to this, Fasce and Picó (2019) observed that the presence of pseudoscientific beliefs correlated with scores on the Rational-Experiential Information Styles self-report questionnaire (Epstein et al., 1996; see also Majima et al., 2022 for similar results with an abbreviated version of the scale). Specifically, endorsement of pseudoscience among Fasce and Picó’s participants was positively correlated with scores on the faith in intuition subscale (i.e., the extent to which an individual relies on intuitive thinking), and negatively associated with scores on the need for cognition subscale (i.e., the individual’s level of enjoyment of and engagement in rational, logical, and analytic thinking). However, in a previous study, Lobato et al. (2014) observed no correlation between the need for cognition and the endorsement of pseudoscientific beliefs. Also in conflict with the results by Fasce and Picó (2019), Majima (2015), who applied a Japanese-adapted version of the same test (Information-Processing Style Inventory Short Form; Naito et al., 2004, study 2), observed a positive association between the scores in their non-paranormal pseudoscience scale and results obtained on the need for cognition-equivalent dimension, as well as a null association with results of the faith in intuition-equivalent scale.

Among other factors, discrepancies between the results of these studies might be related to the use of self-report measures, which might not be the best tool to adequately capture reasoning strategies. In this sense, Fasce and Picó (2019; see also Majima et al., 2022) confirmed their results with regard to analytical thinking by means of the Cognitive Reflection Test (CRT; Frederick, 2005). Correct responses in this questionnaire have been assumed to indicate the ability to resist reporting intuitive answers (“System 1”-based responses in terms of Stanovich and West, 2000), and engage in reflective, effortful reasoning (“System 2”-based processes). Note, however, that recent studies indicate that many correct responses to the test are, in fact, obtained intuitively. Taking this into account, CRT scores could be reflecting, not the ability to correct intuitive responses by means of deliberation, but the capacity to detect potential conflicts between heuristic and logical intuitions (Bago and De Neys, 2019; Šrol and De Neys, 2021).

Another variable that could play a role in the development and maintenance of pseudoscientific beliefs is gullibility, which has been defined as “an individual’s propensity to accept a false premise in the presence of untrustworthiness cues” (Teunisse et al., 2020, p. 2). Forer (1949) studied gullibility in his classic demonstration of the Barnum effect: the tendency to rate universally valid personality descriptions as highly accurate assessments of our own personality. Forer himself linked this effect to epistemically unwarranted beliefs such as those related to crystal-gazing, astrology or graphology. More recently, in their study of receptivity to pseudo-profound bullshit (i.e., apparently impressive statements which are presented as meaningful but are essentially vacuous), Pennycook, Cheyne, Barr, Koehler, and Fugelsang (2015) suggested that a general gullibility factor could be responsible for the tendency of some of their volunteers to accept both their stimuli and epistemically unwarranted beliefs. In this sense, some individuals might have an “uncritical open mind” (Pennycook et al., 2015, p. 559) leading them toward accepting statements as true, which could influence their endorsement of epistemically unwarranted beliefs, including those related to pseudoscience.

Finally, endorsement of pseudoscientific beliefs has been observed to differ with regard to several sociodemographic characteristics. For instance, some studies indicate that they are more prevalent among women (Lobato et al., 2014; Majima, 2015; Huete-Pérez et al., 2022). These differences might be related to the predominant role of women in community health, as they are the ones who usually assume the role of caregivers. Indeed, women are known to be more prone to use alternative and complementary medicine (Bishop and Lewith, 2010; Klein et al., 2015; Peltzer and Pengpid, 2018), which could explain the differences with regard to pseudoscientific beliefs in general. Moreover, pseudoscientific beliefs have been shown to be more frequent among individuals with higher socioeconomic status (FECYT, 2017; CIS, 2018; Eisenberg et al., 1993), which has been attributed to ideas of sophistication and exclusivity often associated with these kinds of beliefs (Fasce and Picó, 2019).

All in all, following previous studies, we expect the presence of pseudoscientific beliefs to be positively predicted by gullibility, as measured by a bullshit detection questionnaire and negatively predicted by analytic thinking (i.e., reflective as opposed to intuitive) and scientific knowledge. In relation to sociodemographic characteristics, and following previous observations, we expect that endorsement of pseudoscientific beliefs will be greater for women than for men (Lobato et al., 2014; Majima, 2015; Huete-Pérez et al., 2022) and will be higher for individuals with higher education level (FECYT, 2017; Astin, 1998; CIS, 2018) and socioeconomic status (FECYT, 2017; CIS, 2018).

Method

Prior to data collection, our hypotheses and the corresponding analyses were pre-registered at AsPredicted.org: https://aspredicted.org/x7mx5.pdf.

Participants

A total of 510 volunteers, representative of the U.S. population and recruited through the online experiment platform Prolific (https://www.prolific.co/), participated in this study. Supplementary Table S1 displays the distribution of the participants according to their age, sex, and ethnicity. Half of the participants were women and the other half were men. Their ages ranged from 18 to 80 (mean = 45.99, SD = 15.85).

The ethics committee of the university (Institutional Review Board IRB00003099, Universitat de Barcelona) approved the study protocols. All the volunteers provided informed consent prior to their participation. Each participant received £3.74 ($4.52) approximately (£13.61/h, with a median time of completion of 16.5 min) as compensation for their contribution to the study.

Materials

Pseudoscience Endorsement Scale

The main aim of the study was to validate an English version of the PES. We translated the Spanish Pseudoscience Endorsement Scale (Torres et al., 2020) into English following common translation and back-translation procedures (Sierro et al., 2016). The scale includes 20 items referring to popular pseudoscientific myths and disciplines. Each item consists of a statement (e.g., “Radiation derived from the use of a mobile phone increases the risk of a brain tumour”) that the participants had to rate on a scale from 1 (“Totally disagree”) to 7 (“Totally agree”). The level of endorsement of pseudoscience is measured by averaging the responses to all the items. High scores on this scale indicate strong endorsement of pseudoscientific beliefs. Supplementary Table S2 includes the type of statement about pseudoscience (myths or disciplines), the topic referred to by each item, and key references justifying their inclusion as examples of pseudoscience. The scale is available at https://osf.io/xbyz4.
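As a minimal sketch of the scoring rule just described, a respondent's PES score can be computed as the mean of the 20 item ratings. The function name `pes_score`, the input checks, and the example responses are illustrative assumptions and are not part of the published materials.

```python
from statistics import mean

N_ITEMS = 20                     # the full PES has 20 items
MIN_RATING, MAX_RATING = 1, 7    # 1 = "Totally disagree", 7 = "Totally agree"


def pes_score(ratings):
    """Return the mean rating across all PES items for one respondent.

    Hypothetical helper: the name and the validation are assumptions.
    """
    if len(ratings) != N_ITEMS:
        raise ValueError(f"expected {N_ITEMS} ratings, got {len(ratings)}")
    if any(not MIN_RATING <= r <= MAX_RATING for r in ratings):
        raise ValueError("ratings must lie between 1 and 7")
    return mean(ratings)


# Made-up responses for a single respondent, for illustration only.
example = [4, 3, 5, 2, 6, 4, 3, 5, 4, 7, 2, 3, 4, 5, 3, 4, 2, 6, 4, 3]
print(pes_score(example))
```

Higher values returned by this function correspond to stronger endorsement of pseudoscientific beliefs.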

Revised Paranormal Beliefs Scale

The Revised Paranormal Beliefs Scale (RPBS; Tobacyk, 2004) is a twenty-six-item questionnaire with seven subscales assessing endorsement of paranormal beliefs (e.g., “Some people have an unexplained ability to predict the future”). The participants provided their responses on a Likert-like scale ranging from 1 (“Strongly disagree”) to 7 (“Strongly agree”). The level of endorsement of paranormal beliefs is the mean of the responses to each item, with higher scores indicating stronger paranormal beliefs. The RPBS had an excellent internal consistency in our sample (ω = 0.94). We used global scores on the RPBS in our analyses because we did not have specific predictions for the different subscales. Nevertheless, results regarding the association between each of them and pseudoscientific beliefs are presented as supplementary materials.

Science denialism items—Pseudoscientific Beliefs Scale

Participants also responded to the Science Denialism items included in the Pseudoscientific Belief Scale (SD-PBS) by Fasce and Picó (2019), which are nine statements reflecting science denialism, that is, the rejection of sound and proven scientific theories on the basis of fake arguments (e.g., “Vaccines are unsafe, some of them cause diseases such as autism”). Volunteers had to provide their responses by means of a Likert-like scale ranging from 1 (“Strong disagreement”) to 5 (“Strong agreement”). The SD-PBS measure showed poor internal consistency in our sample (ω = 0.63). According to the individual item reliability analysis, item 4 was negatively influencing the reliability of this scale, so we dropped it for the subsequent analysis. A new reliability analysis showed a McDonald’s ω of 0.64. Mean scores of the remaining eight items were calculated for each participant, with higher scores reflecting more science denialism.

Generic Conspiracist Beliefs Scale

We used the Generic Conspiracist Beliefs Scale (GCB; Brotherton et al., 2013) to assess endorsement of conspiracist beliefs, that is, the tendency of participants to believe that an event is the result of a conspiracy when a plainer explanation is more likely. Volunteers were presented with 15 statements describing generic conspiracies (e.g., “Secret organizations communicate with extraterrestrials, but keep this fact from the public”). They provided their responses on a Likert-like scale ranging from 1 (“Definitely not true”) to 5 (“Definitely true”). The level of conspiracist beliefs is reflected in the average scores of all the items, with higher values reflecting stronger conspiracist beliefs. The GCB measure presented an excellent internal consistency in our sample (ω = 0.95).

Bullshit detection

In order to assess the inclination to assign overstated judgments to meaningless statements, we followed Pennycook et al. (2015, study 4) and presented the volunteers with 10 motivational quotes (e.g., “Your teacher can open the door, but you must enter by yourself”) and 10 bullshit sentences. The bullshit items consist of seemingly impressive statements that are presented as true and significant but are actually meaningless and empty (e.g., “Hidden meaning transforms unparalleled abstract beauty”). Pennycook et al. (2015) originally gathered these items from two websites that create sentences by pseudorandomly shuffling profound-sounding words: http://wisdomofchopra.com and http://sebpearce.com/bullshit/. Volunteers had to rate the profoundness (i.e., the level “of deep meaning; of great and broadly inclusive significance”) of each item on a five-point scale ranging from 1 (“Not at all profound”) to 5 (“Very profound”). This scale showed very high internal consistency in our sample (ω = 0.92). Following Pennycook et al. (2015), different measures were calculated for this questionnaire: mean ratings for motivational quotes; mean ratings for bullshit sentences (i.e., bullshit receptivity); and mean bullshit sensitivity scores (i.e., profundity ratings for the motivational quotes minus profundity ratings for the bullshit items).
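The three measures derived from this questionnaire can be sketched as follows. This is a minimal illustration under the definitions given above; the function name `bullshit_measures`, the dictionary keys, and the example ratings are assumptions made for the sake of the example.

```python
from statistics import mean


def bullshit_measures(motivational, bullshit):
    """Compute the three profundity measures for one respondent.

    Both arguments are sequences of ratings from 1 ("Not at all profound")
    to 5 ("Very profound"). Hypothetical helper; names are assumptions.
    """
    motivational_mean = mean(motivational)   # mean rating of motivational quotes
    receptivity = mean(bullshit)             # bullshit receptivity
    # Bullshit sensitivity: motivational ratings minus bullshit ratings.
    sensitivity = motivational_mean - receptivity
    return {
        "motivational": motivational_mean,
        "receptivity": receptivity,
        "sensitivity": sensitivity,
    }


# Made-up ratings for a single respondent, for illustration only.
quotes = [4, 5, 3, 4, 4, 5, 3, 4, 4, 4]
nonsense = [2, 3, 1, 2, 2, 3, 2, 1, 2, 2]
print(bullshit_measures(quotes, nonsense))
```

A respondent who rates both item types as equally profound obtains a sensitivity of zero, whatever their overall level of receptivity.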

Science Literacy Knowledge Questionnaire

The Science Literacy Knowledge Questionnaire (SLKQ; Majima, 2015) is designed to assess scientific knowledge. It consists of eleven statements about scientific topics. The participants had to judge whether they were true or false (e.g., “The continents on which we live have been moving their location for millions of years and will continue to move in the future”). The SLKQ showed poor internal consistency in our sample (ω = 0.58). According to the individual item reliability analysis, items 4 and 10 were negatively influencing the reliability of this scale, so we dropped them for the subsequent analysis. A subsequent reliability analysis showed a McDonald’s ω of 0.62. The score on the questionnaire is calculated as the sum of correct responses, with higher values indicating higher scientific knowledge.

Cognitive Reflection Test

We gathered the participants’ responses on the Cognitive Reflection Test (CRT; Sirota and Juanchich, 2018). This scale includes seven multiple choice questions, consisting of mathematical word problems, with four response options each, only one of them being correct (e.g., “A bat and a ball cost £1.10 in total. The bat costs £1.00 more than the ball. How much does the ball cost? 5 pence; 10 pence; 9 pence; 1 pence.”). The CRT measure had acceptable internal consistency in our sample (ω = 0.71). The score for this test is calculated as the sum of correct responses.

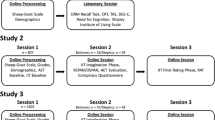

Procedure

The entire study was conducted online. All the questionnaires were designed through the online survey platform Qualtrics (http://www.qualtrics.com) and presented through the online experiment platform Prolific (https://www.prolific.co/). The participants first responded to the PES. Then, the remaining questionnaires were presented in random order. Before finishing, volunteers indicated their sex, age, political ideology (on a 1–7 scale, where 1 was “very left-wing/liberal” and 7 was “very right-wing/conservative”), years of schooling, and socioeconomic status (on a scale from 1, “the poorest people” in the country, to 10, “the richest people” in the country). Finally, Prolific provided us with the ethnicity of the volunteers.

Design and analysis strategy

We first validated the English version of the PES and then we conducted a correlational study including this and other measures. We started by analysing the psychometric properties and empirical structure of the English version of the PES with IBM SPSS Statistics (version 26.0.0.1). Then, we conducted frequentist and Bayesian correlational analyses with JASP (version 0.16.3.0) in which we included endorsement of pseudoscientific, paranormal, denialist and conspiracist beliefs as well as all the cognitive variables and sociodemographic data. These analyses were complemented with a comparison between scores obtained by men and women and specific correlations for each of them. Finally, as a complementary analysis, we constructed linear regression models aimed at comparing the influence of the different predictors over the four types of unwarranted beliefs.

Results

The dataset that supports the findings of this study is available at https://osf.io/xbyz4.

Psychometric properties of the PES

A reliability analysis on the PES data (mean = 3.74, SD = 1.02) revealed very high internal consistency of item scores, McDonald’s ω = 0.92. Hotelling’s T2 index of equality, T2 = 2038.13, F(19,491) = 103.48, p < 0.001, showed that all the items were interrelated, and Tukey’s test of non-additivity, F(1,9671) = 18.36, p < 0.001, indicated that they were not additive. We explored the individual item distributions to determine whether any particular item was affecting the additivity property. Kolmogorov–Smirnov (K–S) test showed that none of the items followed a normal distribution, all K–S values < 0.25, and all ps < 0.001. None of the items were excluded following kurtosis and skewness analyses (Kim, 2013). Item 10 (i.e., “Nutritional supplements like vitamins or minerals can improve the state of one’s health and prevent diseases”) was the only item with extreme outliers. We performed a second reliability analysis without this item, which still showed an excellent internal consistency of the item scores, ω = 0.92. Hotelling’s T2 also remained significant, T2 = 1596.26, F(18,492) = 85.72, p < 0.001. Nevertheless, in this case, Tukey’s test showed additivity, F(1,9162) = 2.23, p = 0.135. It seems that the non-additivity of the scale was related to the distribution of the scores on this item.

Empirical structure of the PES

Our data were suitable for principal components analysis (PCA), as the Kaiser–Meyer–Olkin (KMO) test showed a high measure of sampling adequacy, KMO = 0.94. Bartlett’s test of sphericity was also significant, χ2(190) = 4056.46, p < 0.001, an indicator of a high correlation between items. When the PCA was performed, we observed three components with eigenvalues over 1.0, which explained 39.43%, 6.13%, and 5.50% of the total variance, respectively. Nevertheless, most items loaded higher on component 1, and the variance percentages explained by the other two components were very low. We therefore opted to conduct a parallel analysis (oblimin rotation), which extracted only one component. Given this pattern of results, we accepted the one-component solution, suggesting that the PES is providing a general measure of pseudoscientific beliefs. Finally, eight items (i.e., items 1, 2, 3, 7, 10, 16, 18, and 20) showed weak loadings (i.e., values below 0.6). We removed them from the subsequent analysis to make the scale more robust and reliable. The short version of the scale (henceforth, sPES) still showed very high internal consistency (ω = 0.90).
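The derivation of the short form can be illustrated with a brief sketch. It assumes that sPES scores are the mean of the twelve retained items, by analogy with the scoring of the full scale; the names `DROPPED_ITEMS`, `RETAINED_ITEMS`, and `spes_score`, as well as the example responses, are hypothetical.

```python
from statistics import mean

# Items removed for weak loadings, as listed in the text (1-based numbering).
DROPPED_ITEMS = {1, 2, 3, 7, 10, 16, 18, 20}
RETAINED_ITEMS = [i for i in range(1, 21) if i not in DROPPED_ITEMS]


def spes_score(ratings):
    """Mean rating over the retained items, given all 20 PES ratings.

    Assumption: the short form is scored as a mean, like the full PES.
    """
    if len(ratings) != 20:
        raise ValueError(f"expected 20 ratings, got {len(ratings)}")
    # Convert 1-based item numbers to 0-based list positions.
    return mean([ratings[i - 1] for i in RETAINED_ITEMS])


# Made-up responses for a single respondent, for illustration only.
responses = [4, 3, 5, 2, 6, 4, 3, 5, 4, 7, 2, 3, 4, 5, 3, 4, 2, 6, 4, 3]
print(RETAINED_ITEMS)
print(spes_score(responses))
```

Keeping the original item numbering in `RETAINED_ITEMS` makes it straightforward to score the short form from data collected with the full 20-item questionnaire.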

Correlational analyses

In the following, we present the results of correlational analyses obtained with the sPES (results obtained with the full version were very similar and are included in Supplementary Tables S3–S6). We conducted Kendall’s tau for testing all correlations since the Shapiro–Wilk test revealed that none of the variables followed a normal distribution, all Ws(510) > 0.86, and all ps < 0.023.
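For readers unfamiliar with Kendall's tau, the following sketch computes the coefficient by direct pair counting. It assumes the tau-b variant, which corrects for tied values; the text does not state which variant was computed, and this simple quadratic-time function is an illustration rather than the routine used by JASP.

```python
from itertools import combinations
from math import sqrt


def kendall_tau_b(x, y):
    """Kendall's tau-b for two equal-length sequences (O(n^2) pair counting)."""
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")
    concordant = discordant = tied_x_only = tied_y_only = 0
    for (x1, y1), (x2, y2) in combinations(zip(x, y), 2):
        dx, dy = x1 - x2, y1 - y2
        if dx == 0 and dy == 0:
            continue              # tied on both variables: counted nowhere
        if dx == 0:
            tied_x_only += 1      # tied on x only
        elif dy == 0:
            tied_y_only += 1      # tied on y only
        elif dx * dy > 0:
            concordant += 1       # pair ordered the same way on x and y
        else:
            discordant += 1       # pair ordered in opposite ways
    untied_x = concordant + discordant + tied_y_only   # pairs not tied on x
    untied_y = concordant + discordant + tied_x_only   # pairs not tied on y
    if untied_x == 0 or untied_y == 0:
        raise ValueError("tau-b is undefined when a variable is constant")
    return (concordant - discordant) / sqrt(untied_x * untied_y)


# Made-up scores with ties, for illustration only.
print(kendall_tau_b([1, 2, 2, 3], [1, 2, 3, 3]))
```

Because the coefficient depends only on the ordering of pairs, it does not require normally distributed variables, which is the reason given above for preferring it here.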

Table 1 shows that the sPES (mean = 3.58, SD = 1.14) was positively correlated with the other three measures of unwarranted beliefs: RPBS, SD-PBS, and GCB. As for the cognitive measures (see Table 2), sPES scores were positively correlated with profoundness ratings for both bullshit statements and motivational quotes, but negatively correlated with the bullshit sensitivity measure (i.e., the higher the endorsement of pseudoscientific beliefs, the lower the ability to distinguish bullshit statements from motivational ones), with the SLKQ (i.e., the higher the scores on the sPES, the lower the scientific knowledge), and with correct responses on the CRT. Pseudoscientific beliefs also correlated positively with age and political ideology (i.e., the higher the tendency to score right-wing/conservative, the higher the mean scores on the sPES), and negatively with years of schooling. Socioeconomic status was not significantly associated with the endorsement of pseudoscientific beliefs (p = 0.072).

An independent samples t-test indicated that men (mean = 3.68, SD = 1.13) scored slightly higher than women (mean = 3.47, SD = 1.15) on the sPES, t(508) = 2.02, p = 0.044, d = 0.18. Note, however, that the Bayesian analysis indicated anecdotal evidence favouring the null hypothesis, BF01 = 1.41. When correlations were performed separately according to sex, women and men showed the same pattern of correlations as when the whole sample was considered, with only one exception. In men, the correlation between mean scores on the sPES and the bullshit sensitivity measure did not reach significance (rτ = −0.05, p = 0.229, BF01 = 5.72).
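The size of the sex difference can be approximated from the summary statistics reported above. The sketch below assumes 255 participants per group (half of the 510 volunteers) and uses the pooled-standard-deviation form of Cohen's d; the function name `cohens_d` is hypothetical. Because the inputs are rounded to two decimals, values recomputed this way may differ slightly from those obtained from the raw data.

```python
from math import sqrt


def cohens_d(mean1, sd1, n1, mean2, sd2, n2):
    """Cohen's d for two independent groups, using the pooled SD."""
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2)
    return (mean1 - mean2) / sqrt(pooled_var)


# Reported summary statistics; group sizes of 255 are an assumption
# based on the sample being half men and half women (n = 510).
d = cohens_d(3.68, 1.13, 255, 3.47, 1.15, 255)
print(round(d, 2))
```

By conventional benchmarks, a d of this magnitude falls below the threshold usually labelled a small effect, consistent with the weak evidence noted in the Bayesian analysis.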

Regression analyses

Although it had not been originally planned in our preregistration, we ran four different forced entry regression models (see Table 3), respectively including scores reflecting pseudoscientific beliefs, paranormal beliefs, science denialism and conspiracist beliefs as dependent variables, and reflectiveness, bullshit receptivity, bullshit sensitivity, scientific knowledge, sex, age, years of schooling and socioeconomic status as predictors. Collinearity diagnostics discarded multicollinearity issues between the predictors (variance inflation factor < 1.63, tolerance > 0.62). Percentages of explained variance for the final models were 33% for pseudoscientific beliefs, 32% for paranormal beliefs, 23% for science denialism, and 19% for conspiracist beliefs.

Individuals with higher scientific literacy showed fewer epistemically unwarranted beliefs of the four types. So did participants less inclined to accept bullshit statements as profound. Correct scores on the CRT negatively predicted scores on the paranormal, denialist and conspiracist scales, but only approached significance in the case of pseudoscientific beliefs. Participants with more bullshit sensitivity showed more endorsement of pseudoscientific, paranormal, and conspiracist beliefs.

General discussion

In this study, we first validated an English version of the PES, and then we investigated the association between different types of epistemically unwarranted beliefs as well as possible cognitive factors and sociodemographic variables influencing them.

Regarding our validation, the English version of the PES showed very high internal consistency and a one-component solution appeared to be the most adequate. Nevertheless, eight of the items showed weak loadings, which led us to eliminate them, resulting in the short version of the scale, sPES.

With regards to the association between different kinds of epistemically unwarranted beliefs, as predicted, the presence of pseudoscientific beliefs in our participants was associated with endorsement of the other three belief categories. This result suggests that, despite conceptual divergences between them, different kinds of epistemically unwarranted beliefs might share an underlying basis (Lobato et al., 2014).

We were also interested in investigating possible cognitive correlates of the endorsement of pseudoscientific beliefs. First, we observed a significant positive association between the presence of pseudoscientific beliefs and gullibility. In our study, believers in pseudoscience tended to rate the bullshit sentences in the bullshit detection scale as more profound. We thus replicate previous observations by Pennycook et al. (2015), who reported a significant correlation between receptivity to bullshit items and a measure of belief in the efficacy of different instances of complementary and alternative medicine, which partially overlaps with the content of our measure of pseudoscientific beliefs. In contrast with their results, we also observed a significant negative effect of bullshit sensitivity (i.e., the difference between profundity ratings to motivational and bullshit items) over endorsement of pseudoscientific beliefs. Nevertheless, according to our regression analysis, the willingness to accept bullshit statements as profound is more relevant for endorsement of pseudoscientific beliefs than the ability to discriminate between doubtfully significant sentences. Moreover, our study indicates that the same can be said about denialist, paranormal, and conspiracist beliefs.

Second, scientific knowledge appeared to have a protective role against pseudoscientific beliefs in our study. We thus replicate the negative association between scientific knowledge and endorsement of pseudoscientific beliefs observed by Fasce and Picó (2019; though see Majima, 2015). Furthermore, we extended this observation to conspiracist, denialist and paranormal beliefs. Pseudoscience and science denialism are obviously associated with scientific topics, so it is reasonable to expect them to be negatively associated with scientific knowledge. As for conspiracist beliefs, scientists are considered main actors in some of the most widespread conspiracies (e.g., chemtrails, fake moon landing, HIV- and COVID19-related conspiracies, etc.) and, although the conspiracist beliefs scale used in our study (Brotherton et al., 2013) does not refer to specific conspiracies, it includes items such as “Groups of scientists manipulate, fabricate, or suppress evidence in order to deceive the public”, which clearly refer to distrust in science. In this sense, an association between a lack of scientific knowledge and conspiracist beliefs is to be expected. In contrast, paranormal beliefs, although inherently lacking scientific support, are not as directly related to knowledge of scientific facts. Nevertheless, note that scientific knowledge is closely related to the concept of scientific (i.e., critical) thinking, and both concepts are considered constituents of the more complex construct of scientific literacy (Siarova et al., 2019). From this perspective, the association between paranormal beliefs and scientific literacy in our study (see also Majima, 2015) aligns with the results of previous studies showing a reduction of certain paranormal beliefs after educational interventions based on scientific thinking strategies (Barberia et al., 2018; Rodríguez-Ferreiro et al., 2021).

Finally, believers in pseudoscience presented lower scores on the CRT. Previous studies based on self-report measures of thinking style (Lobato et al., 2014; Fasce and Picó, 2019; Majima, 2015) provided conflicting results regarding the role of reflectiveness (i.e., analytic style) over pseudoscience endorsement (for a similar lack of agreement between previous results with regard to paranormal beliefs see Majima, 2015; Lasikiewicz, 2015; Irwin, 2015; Genovese, 2005). Our data, based on a direct measure of thinking style, the Cognitive Reflection Test, are in line with those obtained by Fasce and Picó (2019) and Majima et al. (2022) in showing that believers in pseudoscience obtain lower scores on this test. Furthermore, our study goes beyond the specific dimension of pseudoscientific beliefs, and confirms that a similar pattern is observed in relation to denialist (see also Fasce and Picó, 2019), paranormal (see also Majima et al., 2022; Sirota and Juanchich, 2018; Rizeq et al., 2021; Ståhl and van Prooijen, 2018) and conspiracist beliefs (see also Rizeq et al., 2021).

This result could be taken to indicate either that sceptics are better able to suppress intuitive incorrect answers in favour of correct responses obtained through effortful deliberation (Stanovich and West, 2000) or that they are better at detecting potential conflicts between their heuristic and logical intuitions (Bago and De Neys, 2019; Šrol and De Neys, 2021; Raoelison et al., 2020). Nevertheless, when entered into a regression analysis with the other predictors, the effect of CRT scores on pseudoscientific beliefs was very weak, making their contribution less relevant than those of other variables such as gullibility or scientific knowledge.
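The contrast between a reliable bivariate correlation and a weak unique contribution in a regression model can be illustrated with a minimal simulation. The sketch below does not use the study's data or reproduce its analysis: it relies on synthetic data, and all variable names and coefficients are hypothetical values chosen only to show how a predictor that shares variance with another predictor (here, CRT with scientific knowledge) may correlate with the outcome and still receive a standardized coefficient close to zero.

```python
import numpy as np

def standardize(x):
    """Return x rescaled to mean 0 and standard deviation 1."""
    return (x - x.mean()) / x.std()

def simulate(n=5000, seed=0):
    """Generate synthetic scores; every coefficient here is hypothetical."""
    rng = np.random.default_rng(seed)
    knowledge = rng.normal(size=n)
    # CRT shares variance with knowledge but has no direct path to the outcome.
    crt = 0.6 * knowledge + 0.8 * rng.normal(size=n)
    receptivity = -0.3 * knowledge + rng.normal(size=n)
    # The outcome depends only on knowledge and receptivity.
    pseudo = -0.4 * knowledge + 0.5 * receptivity + rng.normal(size=n)
    return knowledge, crt, receptivity, pseudo

def standardized_betas(predictors, outcome):
    """Ordinary least squares on z-scored variables (no intercept needed)."""
    X = np.column_stack([standardize(p) for p in predictors])
    y = standardize(outcome)
    betas, *_ = np.linalg.lstsq(X, y, rcond=None)
    return betas

knowledge, crt, receptivity, pseudo = simulate()

# Bivariate association between CRT and the outcome.
r_crt = np.corrcoef(crt, pseudo)[0, 1]

# Unique contribution of each predictor when all are entered together.
betas = standardized_betas([knowledge, crt, receptivity], pseudo)

print(f"r(CRT, pseudoscience) = {r_crt:.2f}")
for name, b in zip(["knowledge", "CRT", "receptivity"], betas):
    print(f"beta({name}) = {b:.2f}")
```

In this toy setup the negative correlation of CRT with the outcome arises entirely through its overlap with knowledge, so its coefficient shrinks towards zero once knowledge is in the model. Whether the same mechanism accounts for the pattern observed in our data cannot be determined from the simulation.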

Regarding sociodemographic characteristics, our data did not show robust differences in the endorsement of pseudoscientific beliefs between men and women. This result contrasts with those observed in some previous studies (e.g., Lobato et al., 2014; Majima, 2015; Huete-Pérez et al., 2022; Majima et al., 2022), although other studies have failed to find sex differences (Fasce and Picó, 2019). Our hypotheses regarding educational level and socioeconomic status were not confirmed either. On the one hand, although the effect was small and unreliable, participants indicating more years of schooling showed lower endorsement of pseudoscientific beliefs (note, however, that this effect did not survive when included with other variables, such as scientific knowledge, in a regression model). On the other hand, our data provided evidence favouring a lack of association between socioeconomic status and pseudoscientific beliefs. These results contrast with previous observations of stronger endorsement of some particular pseudoscientific beliefs among people with higher educational and socioeconomic status. For example, Astin (1998) found that more educated individuals and those with higher income showed a stronger tendency to use alternative medicine (see Barbadoro et al., 2011; Thomas and Coleman, 2004, for similar results). The discrepancy with our results might stem from the fact that our scale is not restricted to pseudoscientific remedies and treatments but includes other relevant pseudoscientific domains, where these demographic variables might not operate in the same way. In fact, Fasce et al. (2020) recently observed that pseudoscientific beliefs, measured by their Pseudoscientific Belief Scale (Fasce and Picó, 2019), were more prevalent among people with pre-university studies than among people with university studies, a result in line with ours.

Regarding the two sociodemographic variables for which we had no a priori hypothesis, political ideology and age, we found that older participants and those self-identifying as right-wing/conservative were more prone to endorse pseudoscience. This result is, again, partially consistent with those of Fasce et al. (2020), who found a positive correlation between pseudoscientific endorsement and conservatism, but not age, and Majima et al. (2022), who also observed that older participants presented more pseudoscientific beliefs.

All in all, our results indicate that the four types of epistemically unwarranted beliefs could share a similar cognitive basis, characterized by gullibility and grounded in a lack of reflective thinking strategies (or conflict detection abilities). Moreover, scientific literacy also appears to have a protective role against these kinds of misbeliefs. Nevertheless, the correlational nature of our study prevents us from drawing strong conclusions regarding the direction of the associations observed in our results. Our hypothesis is that cognitive factors influence the development of epistemically unwarranted beliefs. However, it could also be the case that endorsement of unwarranted beliefs influences those factors, or that they all depend on a third mechanism not considered in our research. Future studies manipulating these variables should be conducted to confirm our hypothesis.

Data availability

The study was pre-registered at AsPredicted https://aspredicted.org/x7mx5.pdf and the dataset that supports our findings is available at the OSF repository https://osf.io/xbyz4.

References

Aarnio K, Lindeman M (2005) Paranormal beliefs, education, and thinking styles. Personal Individ Diff 39(7):1227–1236. https://doi.org/10.1016/j.paid.2005.04.009

Astin JA (1998) Why patients use alternative medicine. JAMA 279(19):1548. https://doi.org/10.1001/jama.279.19.1548

Bago B, De Neys W (2019) The Smart System 1: evidence for the intuitive nature of correct responding on the bat-and-ball problem. Think Reason 25(3):257–299. https://doi.org/10.1080/13546783.2018.1507949

Barbadoro P, Chiatti C, D’Errico MM, Minelli A, Pennacchietti L, Ponzio E, Prospero E (2011) Complementary and Alternative Medicine (CAM) among adults in Italy: use and related satisfaction. Eur J Integr Med 3(4):e325–e332. https://doi.org/10.1016/j.eujim.2011.09.001

Barberia I, Tubau E, Matute H, Rodríguez-Ferreiro J (2018) A short educational intervention diminishes causal illusions and specific paranormal beliefs in undergraduates. PLoS ONE 13(1):e0191907. https://doi.org/10.1371/journal.pone.0191907

Barnes PM, Bloom B, Nahin RL (2009) Complementary and alternative medicine use among adults and children: United States, 2007. Natl Health Stat Rep 12:1–23

Bishop FL, Lewith GT (2010) Who uses CAM? A narrative review of demographic characteristics and health factors associated with CAM use. Evid-Based Complement Altern Med 7(1):11–28. https://doi.org/10.1093/ecam/nen023

Broad CD (1949) The relevance of psychical research to philosophy. Philosophy 24(91):291–309

Brotherton R, French CC, Pickering AD (2013) Measuring belief in conspiracy theories: the generic conspiracist beliefs scale. Front Psychol 4:279. https://doi.org/10.3389/fpsyg.2013.00279

CIS—Centro de Investigaciones Sociológicas. (2018) Barómetro de Febrero 2018. Estudio 3205. http://www.cis.es/cis/opencm/ES/1_encuestas/estudios/ver.jsp?estudio=14383

Eisenberg DM, Kessler RC, Foster C, Norlock FE, Calkins DR, Delbanco TL (1993) Unconventional medicine in the United States. Prevalence, costs, and patterns of use. New Engl J Med 328(4):246–252. https://doi.org/10.1056/NEJM199301283280406

Epstein S, Pacini R, Denes-Raj V, Heier H (1996) Individual differences in intuitive–experiential and analytical–rational thinking styles. J Personal Soc Psychol 71(2):390–405. https://doi.org/10.1037/0022-3514.71.2.390

Fasce A, Adrián-Ventura J, Avendaño D (2020) Do as the Romans do: on the authoritarian roots of pseudoscience. Public Underst Sci 29(6):597–613. https://doi.org/10.1177/0963662520935078

Fasce A, Picó A (2019) Conceptual foundations and validation of the Pseudoscientific Belief Scale. Appl Cogn Psychol. https://doi.org/10.1002/acp.3501

FECYT (2017) Percepción Social de la Ciencia y la Tecnología 2016. https://www.fecyt.es/es/system/files/publications/attachments/2018/07/informe_epscyt_2016_completo_def.pdf

Ferrero M, Garaizar P, Vadillo MA (2016) Neuromyths in education: prevalence among spanish teachers and an exploration of cross-cultural variation. Front Hum Neurosci 10. https://doi.org/10.3389/fnhum.2016.00496

Forer BR (1949) The fallacy of personal validation: a classroom demonstration of gullibility. J Abnorm Soc Psychol 44(1):118–123. https://doi.org/10.1037/h0059240

Frederick S (2005) Cognitive reflection and decision making. J Econ Perspect 19(4):25–42. https://doi.org/10.1257/089533005775196732

Genovese JEC (2005) Paranormal beliefs, schizotypy, and thinking styles among teachers and future teachers. Personal Individ Diff 39(1):93–102. https://doi.org/10.1016/j.paid.2004.12.008

Huete-Pérez D, Morales-Vives F, Gavilán JM, Boada R, Haro J (2022) Popular epistemically unwarranted beliefs inventory (PEUBI): a psychometric instrument for assessing paranormal, pseudoscientific and conspiracy beliefs. Appl Cogn Psychol. https://doi.org/10.1002/acp.4010

Irwin H (2015) Thinking style and the formation of paranormal belief and disbelief. Aust J Parapsychol 15(2):121–139

Johnson M, Pigliucci M (2004) Is knowledge of science associated with higher skepticism of pseudoscientific claims. Am Biol Teacher 66(8):536–548. https://doi.org/10.2307/4451737

Johnson SB, Park HS, Gross CP, Yu JB (2018a) Complementary medicine, refusal of conventional cancer therapy, and survival among patients with curable cancers. JAMA Oncol 4(10):1375. https://doi.org/10.1001/jamaoncol.2018.2487

Johnson SB, Park HS, Gross CP, Yu JB (2018b) Use of alternative medicine for cancer and its impact on survival. J Natl Cancer Inst 110(1):121–124. https://doi.org/10.1093/jnci/djx145

Kim HY (2013) Statistical notes for clinical researchers: assessing normal distribution (2) using skewness and kurtosis. Restor Dent Endod 38(1):52–54. https://doi.org/10.5395/rde.2013.38.1.52

Klein SD, Torchetti L, Frei-Erb M, Wolf U (2015) Usage of complementary medicine in Switzerland: results of the Swiss Health Survey 2012 and development since 2007. PLoS ONE 10(10):e0141985. https://doi.org/10.1371/journal.pone.0141985

Lasikiewicz N (2015) Perceived stress, thinking style, and paranormal belief. Imagin Cogn Person 35(3):306–320. https://doi.org/10.1177/0276236615595235

Lim A, Cranswick N, South M (2011) Adverse events associated with the use of complementary and alternative medicine in children. Arch Dis Childh 96(3):297–300. https://doi.org/10.1136/adc.2010.183152

Lindeman M (2011) Biases in intuitive reasoning and belief in complementary and alternative medicine. Psychol Health 26:371–382. https://doi.org/10.1080/08870440903440707

Lobato E, Mendoza J, Sims V, Chin M (2014) Examining the relationship between conspiracy theories, paranormal beliefs, and pseudoscience acceptance among a University Population. Appl Cogn Psychol 28(5):617–625. https://doi.org/10.1002/acp.3042

Losh SC, Nzekwe B (2011) The influence of education major: how diverse preservice teachers view pseudoscience topics. J Sci Educ Technol 20(5):579–591. https://doi.org/10.1007/s10956-011-9297-0

Majima Y (2015) Belief in pseudoscience, cognitive style and science literacy. Appl Cogn Psychol 29(4):552–559. https://doi.org/10.1002/acp.3136

Majima Y, Walker AC, Turpin MH, Fugelsang JA (2022) Culture as a moderator of epistemically suspect beliefs. Front Psychol 13. https://doi.org/10.3389/fpsyg.2022.745580

Naito M, Suzuki K, Sakamoto A (2004) Development of rational and intuitive information-Processing Style Inventory. Jpn J Personal 13(1):67–78. https://doi.org/10.2132/personality.13.67

Peltzer K, Pengpid S (2018) Prevalence and determinants of traditional, complementary and alternative medicine provider use among adults from 32 countries. Chin J Integr Med 24(8):584–590. https://doi.org/10.1007/s11655-016-2748-y

Pennycook G, Cheyne JA, Barr N, Koehler DJ, Fugelsang JA (2015) On the reception and detection of pseudo-profound bullshit. Judgm Decis Mak 10(6):549–563. https://doi.org/10.1017/S1930297500006999

Posadzki P, Alotaibi A, Ernst E (2012) Prevalence of use of complementary and alternative medicine (CAM) by physicians in the UK: a systematic review of surveys. Clin Med 12(6):505–512. https://doi.org/10.7861/clinmedicine.12-6-505

Raoelison M, Thompson VA, De Neys W (2020) The smart intuitor: cognitive capacity predicts intuitive rather than deliberate thinking. Cognition 204:104381. https://doi.org/10.1016/j.cognition.2020.104381

Rizeq J, Flora DB, Toplak ME (2021) An examination of the underlying dimensional structure of three domains of contaminated mindware: paranormal beliefs, conspiracy beliefs, and anti-science attitudes. Think Reason 27(2):1–25. https://doi.org/10.1080/13546783.2020.1759688

Rodríguez-Ferreiro J, Vadillo MA, Barberia I (2021) Debiasing causal inferences: over and beyond suboptimal sampling. Teach Psychol. https://doi.org/10.1177/00986283211048394

Siarova H, Sternadel D, Szőnyi E (2019) Research for CULT Committee—science and scientific literacy as an educational challenge. 1–64. https://www.europarl.europa.eu/committees/en/archives/8/home

Sierro G, Rossier J, Mason OJ, Mohr C (2016) French validation of the O-LIFE Short Questionnaire. Eur J Psychol Assess 32(3):195–203. https://doi.org/10.1027/1015-5759/a000249

Sirota M, Juanchich M (2018) Effect of response format on cognitive reflection: validating a two- and four-option multiple choice question version of the Cognitive Reflection Test. Behav Res Methods 50(6):2511–2522. https://doi.org/10.3758/s13428-018-1029-4

Šrol J, De Neys W (2021) Predicting individual differences in conflict detection and bias susceptibility during reasoning. Think Reason 1–31. https://doi.org/10.1080/13546783.2019.1708793

Ståhl T, van Prooijen JW (2018) Epistemic rationality: skepticism toward unfounded beliefs requires sufficient cognitive ability and motivation to be rational. Personal Individ Differ 122:155–163. https://doi.org/10.1016/j.paid.2017.10.026

Stanovich KE, West RF (2000) Individual differences in reasoning: implications for the rationality debate? Heurist Biases 421–440. https://doi.org/10.1017/S0140525X00003435

Swami V, Chamorro-Premuzic T, Furnham A (2010) Unanswered questions: a preliminary investigation of personality and individual difference predictors of 9/11 conspiracist beliefs. Appl Cogn Psychol 24(6):749–761. https://doi.org/10.1002/acp.1583

Teunisse AK, Case TI, Fitness J, Sweller N (2020) I should have known better: development of a self-report measure of gullibility. Personal Soc Psychol Bull 46(3):408–423. https://doi.org/10.1177/0146167219858641

Thomas K, Coleman P (2004) Use of complementary or alternative medicine in a general population in Great Britain. Results from the National Omnibus survey. J Public Health 26(2):152–157. https://doi.org/10.1093/pubmed/fdh139

Tobacyk JJ (2004) A revised Paranormal Belief Scale. Int J Transpers Stud 23(1):94–98. https://doi.org/10.24972/ijts.2004.23.1.94

Torres MN, Barberia I, Rodríguez‐Ferreiro J (2020) Causal illusion as a cognitive basis of pseudoscientific beliefs. Br J Psychol 111(4):840–852. https://doi.org/10.1111/bjop.12441

Acknowledgements

This study was funded by grant PID2019-106102GB-I00 from Ministerio de Ciencia e Innovación/Agencia Estatal de Investigación, (MCIN/AEI/10.13039/501100011033).

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethical approval

The ethics committee of the university (Institutional Review Board IRB00003099, Universitat de Barcelona) approved the study protocols. All the research was conducted following the regulations stated by the Declaration of Helsinki. At the start of the experiment, the consent statement was displayed on the screen to the volunteers, who indicated their willingness to take part in the study by providing their Prolific ID.

Informed consent

At the start of the experiment, the consent statement was displayed on the screen to the volunteers, who indicated their willingness to take part in the study by providing their Prolific ID.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Torres, M.N., Barberia, I. & Rodríguez-Ferreiro, J. A validation of the Pseudoscience Endorsement Scale and assessment of the cognitive correlates of pseudoscientific beliefs. Humanit Soc Sci Commun 10, 176 (2023). https://doi.org/10.1057/s41599-023-01681-3
