Abstract

Technological pedagogical content knowledge (TPACK) has evolved as a valuable model for understanding how teachers use technology in education to support pedagogy with appropriate content knowledge for successful teaching and learning. Many self-report questionnaires have been developed for TPACK. However, few studies have assessed extensions of these questionnaires for specific contexts. This study aims to develop and validate a questionnaire scale to assess elementary school teachers’ TPACK and Use of the Internet (UotI). We used the scale to report the internal relationships within TPACK and between TPACK and UotI. The study examined whether the model reflects a transformative perspective on how the domains (TPACK and UotI) interact. The initial scale consisted of 43 items, which were validated and assessed for reliability through several procedures: face and content validity, a pilot study, and a measurement model in partial least squares structural equation modeling (PLS-SEM). The scale was distributed to 1100 elementary school teachers. The findings indicate that 40 scale items are valid and reliable for assessing teachers’ TPACK and UotI. The significant relationships among all factors support a transformative view of the scale.

Introduction

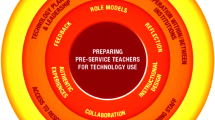

Technological pedagogical content knowledge (TPACK) is a framework that describes the knowledge teachers need for effective teaching with technology. The framework was first introduced under the term “Technological Pedagogical Content Knowledge” (TPCK) (Mishra and Koehler, 2006). The acronym TPCK was later changed to TPACK (pronounced “tee-pack”) to make it simpler and easier to remember. TPACK describes the relationships and complexities among three essential domains of knowledge: technology, pedagogy, and content. These domains integrate educational methods and technologies with a basic comprehension of the content. When teachers teach content with sound pedagogical and technological knowledge, they intuitively understand the interplay among the three domains: content knowledge (CK), pedagogical knowledge (PK), and technological knowledge (TK).

Teachers, including those who work in elementary schools, must be media- and information-literate so that they can critically assess media, texts, and information that are meaningful to their students. This knowledge helps teachers integrate technology, including the Use of the Internet (UotI), into teaching. Teaching activities require Internet skills, such as searching for information with search engines and using presentation programs, office programs, and other resources (Aslan and Zhu, 2017). It is therefore important to understand how, and to what extent, teachers use the Internet during teaching. This research combined TPACK and UotI to develop a scale based on the perceptions of Indonesian elementary school teachers. This study aims to validate a self-report scale that extends TPACK questionnaires with UotI in the context of elementary school teachers. The internal relationships (TPACK–UotI) are also reported, examining whether the model reflects a transformative perspective on how the domains (TPACK and UotI) interact (Fig. 1).

Instruments to assess TPACK

Several TPACK instruments have been developed and validated. Schmidt et al. (2009b) developed the first and most cited instrument. Using data from 124 pre-service teachers, they examined the scale’s reliability with Cronbach’s alpha and its construct validity. Seven TPACK factors were computed: TK (Technological Knowledge), PK (Pedagogical Knowledge), CK (Content Knowledge), PCK (Pedagogical and Content Knowledge), TCK (Technological and Content Knowledge), TPK (Technological and Pedagogical Knowledge), and TPACK. The final valid and reliable scale comprised 45 TPACK items; 18 items were removed or revised. Many researchers have used the scale in their studies. However, many failed to identify and support the seven components (e.g., Cetin-Dindar et al., 2018; Chai et al., 2012; Koh et al., 2010; Luik et al., 2018; Sang et al., 2011).
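In the TPACK framework, the seven factors correspond to the three base domains (technology, pedagogy, content) and every overlap among them. This gives 2³ − 1 = 7 non-empty combinations. The short Python sketch below is an illustration added here and is not part of any cited instrument. It enumerates those combinations and maps them to the conventional labels. The function name `tpack_domains` is hypothetical.

```python
from itertools import combinations

# Illustrative only: the three base knowledge domains of the TPACK framework.
BASE_DOMAINS = ("T", "P", "C")  # technology, pedagogy, content


def tpack_domains(base=BASE_DOMAINS):
    """Enumerate every non-empty combination of the base domains.

    Single domains and pairs get a trailing 'K' (e.g. 'TP' -> 'TPK');
    the combination of all three is conventionally written 'TPACK'.
    """
    labels = []
    for size in range(1, len(base) + 1):
        for combo in combinations(base, size):
            name = "".join(combo)
            labels.append("TPACK" if size == len(base) else name + "K")
    return labels


print(tpack_domains())  # ['TK', 'PK', 'CK', 'TPK', 'TCK', 'PCK', 'TPACK']
```

The enumeration also shows why instruments that recover fewer factors (for example, the three-factor solution reported by Luik et al., 2018) collapse some of these overlaps.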

Koh et al. (2010) used exploratory factor analysis (EFA) to examine the TPACK instrument in Singapore, with 1185 pre-service teachers as respondents. Although they adapted the survey instrument of Schmidt et al. (2009b), the seven TPACK components were not confirmed. Five constructs were reported in the validation process: TK, CK, Knowledge of Pedagogy (KP), Knowledge of Teaching with Technology (KTT), and Knowledge from Critical Reflection (KCR). Chai et al. (2012) also failed to confirm the seven TPACK constructs. They validated their TPACK scale with 214 pre-service teachers and identified eight constructs: TK, CK subject teaching 1 (CKCS1), CK subject teaching 2 (CKCS2), PK, TPK, TCK, PCK, and TPACK. Shinas et al. (2013) conducted a study in the USA in which factor analysis was the main statistical approach to validity. The respondents were 365 pre-service teachers enrolled in a 6-month educational technology course. Confirmatory factor analysis (CFA) identified eight constructs: TK, PK, Mathematical Content Knowledge (CKM), Science Content Knowledge (CLS), Literacy Content Knowledge (CKL), Social Science Content Knowledge (CKSS), TPK, and TPACK (Shinas et al., 2013).

Valtonen et al. (2015) focused on the importance of 21st-century teaching skills, which require teachers to use a variety of approaches and technology-supported activities. They described the development and validation of a TPACK instrument for 21st-century education. In the first phase of their research, 94 pre-service teachers completed a self-assessment instrument; in the second phase, 267 pre-service teachers completed it. The data were analyzed through EFA with oblique rotation, and 36 valid and reliable items were retained. The constructs PK, CK old, CK21st, TK, PCK21st, TCK21st, TPK21st, and TPACK were reported in the first phase. PK21st, CK old, CK21st, TK, PCK21st, TPK21st, and TCK21st emerged in the second phase (Valtonen et al., 2015).

Sang et al. (2011) validated a TPCK questionnaire for Chinese pre-service teachers, called the “CTPCK” scale. The sample was randomly divided into two subsamples (n1 = 229 and n2 = 207). An EFA with varimax rotation dropped six of the 48 initial items. The study identified eight TPACK constructs: PCK, PK, TPACK, CK, TK, TPK, TCK, and TKW (Sang et al., 2011). Baser et al. (2016) validated a self-assessed TPACK survey for pre-service English teachers, the TPACK-EFL. After qualitative content validation with experts and users of the measures, they analyzed data from 174 and 204 pre-service teachers in two rounds of EFA. The first round identified five TPACK constructs. After adjustment, the second EFA found that the scale was comparable to the survey components of Schmidt et al. (2009b). It yielded seven TPACK constructs (TK, PK, CK, TCK, PCK, TPK, and TPACK) (Baser et al., 2016).

Luik et al. (2018) reported a TPACK survey of 413 pre-service teachers at the University of Tartu, Estonia. Estonia is a technologically advanced country with extensive technology integration in its educational institutions. In this setting, the study identified three TPACK constructs (technological, pedagogical, and content), which were confirmed by factor analysis (Luik et al., 2018). Cetin-Dindar et al. (2018) used a mixed-methods approach to investigate pre-service teachers’ TPACK development in chemistry courses. Data were collected from 17 pre-service teachers who spent one semester in a material development course in educational technology. In contrast to earlier investigations, this study used Cronbach’s alpha reliability tests and qualitative coding analysis to investigate the process. The analysis yielded seven valid and reliable constructs: TK, PK, CK, PCK, TCK, TPK, and TPACK (Cetin-Dindar et al., 2018).

Schmid et al. (2020) used CFA with 117 pre-service upper secondary school teachers (63 women, 52 men, and 2 with no gender information) from two cohorts: fall 2018 (n = 49) and spring 2019 (n = 68). The questionnaire was adapted from the TPACK constructs of Schmidt et al. (2009b). The findings verified 28 of 41 items across seven TPACK constructs similar to those of Schmidt et al. (2009b). In brief, no single TPACK scale is appropriate for all situations, study objects, subjects, and settings. Further studies of TPACK in various contexts and settings are still needed.

UotI

Modern society has experienced incremental growth in available knowledge and new technologies, and the use of the Internet (UotI) has become significant for educational purposes (Goldie, 2016). Many significant forms of Internet-based education have emerged (Habibi et al., 2021; Çelik et al., 2012; Wang et al., 2019). The YouTube EDU program, for example, provides access to millions of educational resources (Çelik et al., 2012; Habibi et al., 2021). Likewise, iTunes, Apple’s educational media collection, allows learners to bypass conventional classes in favor of free, on-demand mobile learning (Chen and You, 2010; Khan et al., 2015; Habibi et al., 2021). Compared with prior generations, today’s students can access vast amounts of digital data, interact with others via mobile technology, and play more games (Mahat et al., 2022). Internet-based learning has also been reported to be an effective technique (Sadikin et al., 2021).

In elementary education, the basic sources of information are teachers and learning materials (Laine and Tirri, 2016). However, the Internet is a vital additional tool for extending learning experiences (Wang et al., 2019). Teachers face a rising demand to incorporate innovative solutions into their classrooms that allow students to use technology, especially the Internet. Teachers should actively use Internet-based devices in the classroom to improve the teaching and learning experience (Wang and Suwanthep, 2017). New technological advancements may drive or constrain teachers. Their views may lead them to adopt a negative or favorable attitude toward technology use in teaching and learning activities (Osakwe et al., 2017; Shatto and Erwin, 2017). It is therefore critical to understand teachers’ perspectives on UotI in order to address students’ learning needs. Teachers’ perceptions of their Internet use during teaching are a significant component of educational progress and are therefore emphasized in this scale development.

Methodology

This study validated an instrument to measure TPACK extended with UotI. We also used the instrument to report the internal relationships, examining whether the model reflects a transformative perspective on how the domains (TPACK and UotI) interact. We modified prior instruments to collect data from Indonesian elementary school teachers. The instrument covers TPACK’s seven knowledge domains (TK, CK, PK, PCK, TCK, TPK, and TPACK) and UotI. We reviewed and adapted scholarly articles that addressed a variety of existing techniques for evaluating TPACK (Habibi et al., 2020a; Schmidt et al., 2009b) and UotI (Aslan and Zhu, 2017; Habibi et al., 2020a). All items were translated and edited for face and content validity. Informed consent was obtained from all respondents. The IRB of the authors’ university waived the need for ethics approval. The study was conducted in accordance with relevant guidelines and regulations.

Face and content validity

Face validity was assessed through a group discussion with four elementary school teachers and one school principal, who judged the simplicity and clarity of the instrument. Some survey items were revised based on the discussion. After face validity had established simplicity and clarity, the instrument was discussed with five experts to ensure relevance as part of content validity. Two items were dropped because they fell outside the context of Indonesian elementary education. Next, the instrument was emailed to 10 experts in educational technology to compute a content validity index (CVI) (Halek et al., 2017). Each item was rated for relevance, clarity, and simplicity on a 4-point scale (Habibi et al., 2020a; Lynn, 1986). The CVI was measured at the item level (I-CVI) and at the scale level (S-CVI). The I-CVI was calculated by dividing the number of experts who rated an item 3 or 4 by the total number of experts (Lynn, 1986). With 10 experts, the I-CVI should not be lower than 0.78. The S-CVI is defined as the average proportion of items on a scale rated 3 or 4 on the 1–4 scale (Halek et al., 2017), and it should not be lower than 0.80. In this study, all I-CVI scores were above 0.78 and all S-CVI scores were above 0.80. These steps established the initial validity of the instrument.
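The I-CVI and S-CVI computations described above can be sketched in a few lines of Python. This is a minimal illustration with several assumptions:

- The ratings matrix is hypothetical and is not the study’s data.
- Each row holds one item’s ratings from the 10 experts on the 1–4 scale.
- The S-CVI uses the averaging definition given in the text, that is, the mean of the I-CVIs.

```python
def item_cvi(ratings, relevant_min=3):
    """I-CVI: share of experts who rated the item 3 or 4 on the 1-4 scale."""
    return sum(1 for r in ratings if r >= relevant_min) / len(ratings)


def scale_cvi_ave(matrix):
    """S-CVI (averaging approach): mean of the item-level CVIs."""
    icvis = [item_cvi(row) for row in matrix]
    return sum(icvis) / len(icvis)


# Hypothetical ratings (not the study's data): 3 items x 10 experts.
expert_ratings = [
    [4, 4, 3, 4, 4, 3, 4, 4, 4, 4],  # 10 of 10 experts rated 3 or 4
    [4, 3, 3, 4, 2, 4, 4, 3, 4, 4],  # 9 of 10
    [3, 4, 2, 4, 4, 3, 2, 4, 4, 3],  # 8 of 10
]
I_CVI_MIN, S_CVI_MIN = 0.78, 0.80  # cut-offs given in the text for 10 experts

icvis = [item_cvi(row) for row in expert_ratings]
s_cvi = scale_cvi_ave(expert_ratings)
print(icvis)  # [1.0, 0.9, 0.8]
print(f"S-CVI = {s_cvi:.2f}")  # S-CVI = 0.90
print(all(v >= I_CVI_MIN for v in icvis), s_cvi >= S_CVI_MIN)  # True True
```

An item with an I-CVI below 0.78 would be a candidate for revision or removal under this rule.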

Findings

Pilot study

The survey instrument was distributed to elementary school teachers (n = 69) for a pilot study; 47 respondents were female and 22 were male. Eleven respondents were younger than 25 years, 28 were between 25 and 35 years old, and the others (n = 36) were older than 35. The pilot data were analyzed with Cronbach’s alpha reliability tests, for which values should exceed 0.700. All alpha values exceeded 0.700, ranging from 0.827 to 0.921 (TK = 0.916; PK = 0.920; CK = 0.848; PCK = 0.899; TCK = 0.827; TPK = 0.877; TPACK = 0.921; UotI = 0.873).
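For a subscale with k items, Cronbach’s alpha is α = k/(k − 1) × (1 − Σσᵢ² / σ_total²). Here σᵢ² is the variance of item i and σ_total² is the variance of the respondents’ summed scores. The Python sketch below is minimal, and its response data are hypothetical. It uses sample variances throughout. Population variances would give the same α, because the n/(n − 1) factor cancels in the ratio.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha.

    items: list of k lists, one per item, each holding one score per respondent
    (all lists must have the same length).
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha requires at least two items")
    # Each respondent's total score across the k items.
    totals = [sum(scores) for scores in zip(*items)]
    sum_item_var = sum(variance(scores) for scores in items)
    return k / (k - 1) * (1 - sum_item_var / variance(totals))


# Hypothetical 5-point Likert responses: 3 items x 5 respondents.
pilot_items = [
    [4, 5, 3, 4, 2],
    [4, 4, 3, 5, 2],
    [5, 5, 3, 4, 3],
]
alpha = cronbach_alpha(pilot_items)
print(round(alpha, 3), alpha > 0.700)  # 0.913 True
```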

Descriptive statistics

For the main data collection, the instrument (40 items) was distributed to 1100 teachers, and 1001 responses were usable. Almost 10% of the responses (99 of 1100) were dropped because of incomplete or missing data; Table 1 presents the complete information on the main data. The mean, standard deviation, skewness, and kurtosis were calculated. Descriptive results for the individual items showed good variation in the response patterns. The descriptive statistics for all variables are presented in Table 2. The highest mean was for CK (M = 4.2408; SD = 0.56733), and the lowest was for PCK (M = 3.6310; SD = 0.79719). Skewness and kurtosis were computed in SPSS to check that the data were approximately normally distributed; values should lie between −2 and 2 (Garson, 2008). All skewness and kurtosis values fall within this range. Skewness values range from −0.367 to 1.219, and kurtosis values range from −0.614 to 0.547.
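SPSS reports bias-adjusted sample skewness (G1) and excess kurtosis (G2). The sketch below implements those standard adjusted formulas in plain Python and applies the ±2 screening rule from the text. The function names are hypothetical and the data are invented for illustration.

```python
from math import sqrt


def skew_kurtosis(x):
    """Bias-adjusted sample skewness (G1) and excess kurtosis (G2)."""
    n = len(x)
    if n < 4:
        raise ValueError("need at least four observations")
    mean = sum(x) / n
    m2 = sum((v - mean) ** 2 for v in x) / n  # central moments
    m3 = sum((v - mean) ** 3 for v in x) / n
    m4 = sum((v - mean) ** 4 for v in x) / n
    if m2 == 0:
        raise ValueError("zero variance")
    g1 = m3 / m2 ** 1.5
    g2 = m4 / m2 ** 2 - 3
    skew = sqrt(n * (n - 1)) / (n - 2) * g1
    kurt = (n - 1) / ((n - 2) * (n - 3)) * ((n + 1) * g2 + 6)
    return skew, kurt


def within_normal_range(x, bound=2.0):
    """Screening rule from the text: both statistics inside [-2, 2]."""
    s, k = skew_kurtosis(x)
    return -bound <= s <= bound and -bound <= k <= bound


s, k = skew_kurtosis([1, 2, 3, 4, 5])
print(round(s, 3), round(k, 3))  # 0.0 -1.2
print(within_normal_range([1, 1, 1, 1, 1, 1, 1, 1, 1, 10]))  # False (strong right skew)
```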

Measurement model

The measurement model for the main data was assessed for construct reliability, convergent validity, and discriminant validity. Cronbach’s alpha (α) and composite reliability (CR) were used to quantify construct reliability (Fornell and Larcker, 1981); both CR and α should exceed 0.700. The α and CR values of all constructs range from 0.878 to 0.950: CK (α = 0.878; CR = 0.925), PK (α = 0.915; CR = 0.932), TK (α = 0.918; CR = 0.935), PCK (α = 0.878; CR = 0.925), TCK (α = 0.878; CR = 0.925), TPK (α = 0.878; CR = 0.925), PCK (α = 0.901; CR = 0.938), TPACK (α = 0.929; CR = 0.946), and UotI (α = 0.941; CR = 0.950). These results support the reliability of the scale (Table 3). For convergent validity, Fornell and Larcker (1981) indicate that the average variance extracted (AVE) should be >0.500. The AVE values range from 0.654 to 0.897 (TK = 0.672, CK = 0.803, PK = 0.664, TPK = 0.842, PCK = 0.897, TCK = 0.806, TPACK = 0.779, UotI = 0.654). The item loadings on their constructs range from 0.697 to 0.918, which also suggests that the survey has convergent validity.
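Composite reliability and AVE are both computed from a construct’s standardized outer loadings λ. For k indicators, CR = (Σλ)² / ((Σλ)² + Σ(1 − λ²)) and AVE = Σλ² / k. The following Python sketch applies these standard formulas and the thresholds from the text (CR > 0.700, AVE > 0.500) to hypothetical loadings. It is not SmartPLS output.

```python
def composite_reliability(loadings):
    """CR from standardized outer loadings (error variance of each item = 1 - loading**2)."""
    total = sum(loadings)
    error = sum(1 - lam ** 2 for lam in loadings)
    return total ** 2 / (total ** 2 + error)


def average_variance_extracted(loadings):
    """AVE: mean of the squared standardized loadings."""
    return sum(lam ** 2 for lam in loadings) / len(loadings)


# Hypothetical standardized loadings for a four-item construct.
loadings = [0.82, 0.78, 0.85, 0.74]
cr = composite_reliability(loadings)
ave = average_variance_extracted(loadings)
print(f"CR = {cr:.3f}, AVE = {ave:.3f}")  # CR = 0.875, AVE = 0.638
print("reliable:", cr > 0.700, "convergent:", ave > 0.500)  # reliable: True convergent: True
```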

Henseler et al. (2015) suggested that the heterotrait–monotrait (HTMT) ratio of correlations can be used to examine discriminant validity in variance-based structural equation modeling. HTMT achieves higher specificity and sensitivity rates (97–99%) than cross-loadings and the Fornell–Larcker criterion (Habibi et al., 2022; Ab Hamid et al., 2017). Discriminant validity is assessed by computing the HTMT value for each pair of constructs. The value should preferably be <0.85, but it can be as high as 0.90 when the constructs are conceptually similar (Benitez et al., 2020; Henseler et al., 2015; Ogbeibu et al., 2018). The HTMT values in this study range from 0.012 to 0.253. This indicates that the constructs are empirically distinct from one another and that discriminant validity is established (see Table 4).
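Following Henseler et al. (2015), the HTMT for two constructs is the mean correlation between items of different constructs (heterotrait). It is divided by the geometric mean of the average within-construct item correlations (monotrait). The minimal pure-Python sketch below rests on these assumptions:

- It uses signed Pearson correlations.
- It expects at least two items per construct.
- It runs on hypothetical data.

It illustrates the criterion and is not the SmartPLS implementation.

```python
from itertools import combinations
from math import sqrt
from statistics import fmean


def pearson(x, y):
    """Pearson correlation of two equal-length score lists."""
    mx, my = fmean(x), fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def htmt(block_i, block_j):
    """HTMT ratio for two constructs, each given as a list of item-score lists."""
    if len(block_i) < 2 or len(block_j) < 2:
        raise ValueError("each construct needs at least two items")
    hetero = fmean(pearson(a, b) for a in block_i for b in block_j)
    mono_i = fmean(pearson(a, b) for a, b in combinations(block_i, 2))
    mono_j = fmean(pearson(a, b) for a, b in combinations(block_j, 2))
    if mono_i <= 0 or mono_j <= 0:
        raise ValueError("average within-construct correlation must be positive")
    return hetero / sqrt(mono_i * mono_j)


# Hypothetical item scores for two constructs (5 respondents each).
construct_a = [[4, 5, 3, 4, 2], [4, 4, 3, 5, 2]]
construct_b = [[3, 4, 4, 4, 3], [2, 4, 3, 4, 3]]
value = htmt(construct_a, construct_b)
print(f"HTMT = {value:.3f}", value < 0.85)  # prints True for these hypothetical data
```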

The measurement model results were finalized after a few items were dropped during computation in SmartPLS 3.3. UotI11 was deleted because of its low loading. In addition, the HTMT values of two relationships (TPK and TPACK; TPK and TCK) exceeded 0.900, so two items (TPK1 and TPK4) were dropped. The PLS algorithm was recalculated after dropping the indicators that did not meet the criteria (loading and HTMT values). The initial computation of the measurement model can be seen in Appendix 1.

Correlations among the TPACK–UotI subscales

Because multiple correlations were estimated, a Bonferroni correction was applied to the Pearson product–moment correlations (r). The standard cut-off (0.05) was divided by the number of coefficients estimated, which lowered the significance level to p < 0.001. This final set of analyses examined the relationships within the TPACK–UotI scale. All eight subscales were significantly correlated with one another at the 0.001 level. The value of r ranges from −1 to 1 and indicates the strength and direction of the relationship between two constructs. Values between 0 and 1 indicate a positive correlation, 0 indicates no correlation, and values between 0 and −1 indicate a negative correlation. All correlations are positive; the highest emerged between TPACK and TPK (r = 0.781), TPACK and TPK (r = 0.798), CK and PK (r = 0.759), and TPACK and UotI (r = 0.723) (Table 5).
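Counting all pairwise correlations among eight subscales gives C(8, 2) = 28 tests. A Bonferroni correction then gives 0.05/28 ≈ 0.0018, so the p < 0.001 threshold used here is at least that strict. The sketch below computes the corrected threshold and an approximate two-sided p-value for r using Fisher’s z transformation, z = atanh(r)·√(n − 3). This normal approximation is an assumption made to keep the example dependency-free. An exact test uses the t distribution with n − 2 degrees of freedom (for example, `scipy.stats.pearsonr`). The usage lines take r = 0.723 (TPACK and UotI) and n = 1001 responses from the text, purely for illustration.

```python
from math import atanh, comb, sqrt
from statistics import NormalDist


def bonferroni_threshold(n_subscales, family_alpha=0.05):
    """Per-test alpha when all pairwise correlations among subscales are tested."""
    n_tests = comb(n_subscales, 2)
    return family_alpha / n_tests


def fisher_z_p_value(r, n):
    """Approximate two-sided p-value for H0: rho = 0 (large-sample Fisher z test)."""
    if not -1 < r < 1:
        raise ValueError("r must lie strictly between -1 and 1")
    z = atanh(r) * sqrt(n - 3)
    return 2 * NormalDist().cdf(-abs(z))


threshold = bonferroni_threshold(8)  # 0.05 / 28
p = fisher_z_p_value(0.723, 1001)  # TPACK-UotI correlation reported in the text
print(f"per-test alpha = {threshold:.4f}")  # per-test alpha = 0.0018
print("significant:", p < min(threshold, 0.001))  # significant: True
```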

Discussion

The findings of the current study suggest that the instrument is valid and reliable for assessing TPACK–UotI among elementary school teachers. It can be further refined and used in future studies. This work was designed to examine elementary school teachers’ TPACK–UotI. For TPACK, several instruments have been developed for specific contexts over the years (Schmidt et al., 2009b; Chai et al., 2012; Shinas et al., 2013; Cetin-Berber and Erdem, 2015; Valtonen et al., 2015; Baser et al., 2016; Akyuz, 2018; Luik et al., 2018; Schmid et al., 2020). The findings of the current study support prior studies that identified the seven TPACK domains (Akyuz, 2018; Baser et al., 2016; Cetin-Berber and Erdem, 2015; Schmid et al., 2020; Schmidt et al., 2009b; Valtonen et al., 2015). For example, Akyuz (2018) identified the TPACK domains among pre-service mathematics teachers through CFA and reliability assessment, with 21 items found to be valid and reliable. In contrast, the findings differ from prior work that failed to identify the seven TPACK domains (Chai et al., 2012; Luik et al., 2018; Shinas et al., 2013). For instance, Luik et al. (2018) identified three factors (pedagogy, content, and technology). By developing an instrument for elementary school teachers’ TPACK, this study adds to the range of TPACK scales across settings and methodological approaches. In this study, content validity, a pilot study, and a measurement model were used for the data analysis.

Besides TPACK, the validation involved UotI as an extended variable. Prior studies have associated TPACK with technology integration in education. Examples include TPK and technology integration (Chuang and Ho, 2015), TPACK and intention to use technology (Joo et al., 2018), TPACK and behavioral intention to adopt technology in teaching (Teo et al., 2017), PK and use of YouTube (Krauskopf et al., 2012), and digital nativity and TPACK (Kabakci Yurdakul, 2018). This study validated a scale that includes elementary school teachers’ perceived UotI during teaching. The scale could support future research on technology integration related to TPACK.

Valid and reliable TPACK instruments already exist, but this instrument is distinctive because it assesses TPACK–UotI as perceived by elementary school teachers. This study built on previous research by developing a new, comprehensive survey instrument focused on elementary school teachers. The instrument assesses their knowledge in each of the seven TPACK constructs and UotI. Readers should note that this survey was created specifically for elementary schools. Efforts to measure elementary school teachers’ TPACK–UotI will help future work in this area. Adding more items to the constructs could improve the instrument’s reliability and validity, and the instrument can be revised and refined in future work.

Conclusion

The instrument created for this study can guide future research and promote the development of TPACK–UotI. Classroom observations of teachers are also recommended to assess their TPACK levels, and more studies are needed on how TPACK affects classroom behaviors. The instrument could also be used to examine how elementary school teachers’ attitudes toward teaching and technology change between face-to-face and online courses. Using and customizing this instrument can inspire research that measures the development of TPACK–UotI in other contexts and settings. The results can help teacher education programs design and implement measures that encourage technology integration in education. The valid and reliable survey instrument developed in this research can also help educational programs and institutions understand how to promote UotI in teaching.

Data availability

Data is accessible online: https://figshare.com/s/f181150ead9fc946c587.

References

Ab Hamid MR, Sami W, Sidek MH (2017) Discriminant validity assessment: use of Fornell and Larcker criterion versus HTMT criterion. J Phys Conf Ser 890:012163. https://doi.org/10.1088/1742-6596/890/1/012163

Akyuz D (2018) Measuring technological pedagogical content knowledge (TPACK) through performance assessment. Comput Educ 125:212–225. https://doi.org/10.1016/j.compedu.2018.06.012

Aslan A, Zhu C (2017) Investigating variables predicting Turkish pre-service teachers’ integration of ICT into teaching practices. Br J Edu Technol 48(2):552–570. https://doi.org/10.1111/bjet.12437

Baser D, Kopcha TJ, Ozden MY (2016) Developing a technological pedagogical content knowledge (TPACK) assessment for pre-service teachers learning to teach English as a foreign language. Comput Assist Lang Learn 29(4):749–764. https://doi.org/10.1080/09588221.2015.1047456

Benitez J, Henseler J, Castillo A et al. (2020) How to perform and report an impactful analysis using partial least squares: guidelines for confirmatory and explanatory IS research. Inf Man 57(2):1–16. https://doi.org/10.1016/j.im.2019.05.003

Çelik S, Toptaş V, Karaca T (2012) iTunes University: potentials and applications. Proc Soc Behav Sci 64:199–210. https://doi.org/10.1016/j.sbspro.2012.11.048

Cetin-Berber D, Erdem AR (2015) An investigation of Turkish pre-service teachers’ technological, pedagogical and content knowledge. Computers 4(3):234–250. https://doi.org/10.3390/computers4030234

Cetin-Dindar A, Boz Y, Yildiran Sonmez D, Demirci Celep N (2018) Development of pre-service chemistry teachers’ technological pedagogical content knowledge. Chem Educ Res Pract. https://doi.org/10.1039/C7RP00175D

Chai CS, Koh JHL, Ho HNJ et al. (2012) Examining pre-service teachers’ perceived knowledge of TPACK and cyberwellness through structural equation modeling. Australas J Educ Technol 28(6):1000–1019. https://doi.org/10.14742/ajet.807

Chen W, You M (2010) Student response to an internet-mediated industrial design studio course. Int J Technol Des Educ 20(2):151–174. https://doi.org/10.1007/s10798-008-9068-2

Chuang HH, Ho C-J (2015) An investigation of early childhood teachers’ technological pedagogical content knowledge (TPACK) in Taiwan. Ahi Evr Üniv Kırr Eği Fak Derg 12(2):99–117

Fornell C, Larcker DF (1981) Structural equation models with unobservable variables and measurement error. Thousand Oaks, CA: Sage Publications

Garson GD (2008) Topics in multivariate analysis. Statnotes 9(5):1–21

Goldie JGS (2016) Connectivism: a knowledge learning theory for the digital age? Med Teach 38(10) https://doi.org/10.3109/0142159X.2016.1173661

Habibi A, Yaakob MFM, Al-Adwan AS (2021) m-Learning management system use during Covid-19. Inf Dev https://doi.org/10.1177/02666669211035473

Habibi A, Yusop FD, Razak RA (2020a) The role of TPACK in affecting pre-service language teachers’ ICT integration during teaching practices: Indonesian context. Educ Inf Technol 25(3):1929–1949. https://doi.org/10.1007/s10639-019-10040-2

Habibi A, Riady Y, Al-Adwan SA, Albelbisi AN (2022) Beliefs and knowledge for pre-service teachers’ technology integration during teaching practice: an extended theory of planned behavior. Comput Sch 1–26 https://doi.org/10.1080/07380569.2022.2124752

Halek M, Holle D, Bartholomeyczik S (2017) Development and evaluation of the content validity, practicability and feasibility of the Innovative dementia-oriented Assessment system for challenging behaviour in residents with dementia. BMC Health Serv Res 17(1) https://doi.org/10.1186/s12913-017-2469-8

Henseler J, Ringle CM, Sarstedt M (2015) A new criterion for assessing discriminant validity in variance-based structural equation modeling. J Acad Mark Sci 43(1) https://doi.org/10.1007/s11747-014-0403-8

Joo YJ, Park S, Lim E (2018) Factors influencing pre-service teachers’ intention to use technology: TPACK, teacher self-efficacy, and Technology Acceptance Model. Educ Technol Soc 21(3):48–59

Kabakci Yurdakul I (2018) Modeling the relationship between pre-service teachers’ TPACK and digital nativity. Educ Technol Res Dev 66(2):267–281. https://doi.org/10.1007/s11423-017-9546-x

Khan AI, Al-Shihi H, Al-Khanjari ZA, Sarrab M (2015) Mobile Learning (M-Learning) adoption in the Middle East: lessons learned from the educationally advanced countries. Telemat Inform 32(4):909–920. https://doi.org/10.1016/j.tele.2015.04.005

Koh JHL, Chai CS, Tsai CC (2010) Examining technological pedagogical content knowledge of Singapore pre-service teachers with a large-scale survey. J Comput Assist Learn 26(6):563–573. https://doi.org/10.1111/j.1365-2729.2010.00372.x

Krauskopf K, Zahn C, Hesse FW (2012) Leveraging the affordances of Youtube: the role of pedagogical knowledge and mental models of technology functions for lesson planning with technology. Comput Educ 58(4):1194–1206. https://doi.org/10.1016/j.compedu.2011.12.010

Laine S, Tirri K (2016) How Finnish elementary school teachers meet the needs of their gifted students. High Abil Stud 27(2):149–164

Luik P, Taimalu M, Suviste R (2018) Perceptions of technological, pedagogical and content knowledge (TPACK) among pre-service teachers in Estonia. Educ Inf Technol 23(2):741–755. https://doi.org/10.1007/s10639-017-9633-y

Lynn MR (1986) Determination and quantification of content validity. Nurs Res 35(6):382–386. https://doi.org/10.1097/00006199-198611000-00017

Mahat J, Alias N, Yusop FD (2022) Systematic literature review on gamified professional training among employees. Interact Learn Environ 1–20. https://doi.org/10.1080/10494820.2022.2043910

Mishra P, Koehler MJ (2006) Technological pedagogical content knowledge: a framework for teacher knowledge. Teach Coll Rec 108(6):1017–1054. https://doi.org/10.1111/j.1467-9620.2006.00684.x

Ogbeibu S, Senadjki A, Gaskin J (2018) The moderating effect of benevolence on the impact of organisational culture on employee creativity. J Bus Res 90:334–346. https://doi.org/10.1016/j.jbusres.2018.05.032

Osakwe J, Dlodlo N, Jere N (2017) Where learners’ and teachers’ perceptions on mobile learning meet: a case of Namibian secondary schools in the Khomas region. Technol Soc 49:16–30. https://doi.org/10.1016/j.techsoc.2016.12.004

Sadikin A, Habibi A, Sanjaya E, Setiawan DC, Susanti T, Saudagar F (2021) Factors influencing pre-service teachers’ satisfaction and intention to use the Internet: a structural equation modeling. Int J Interact Mob Technol 15(2):110–123

Sang G, Valcke M, van Braak J, Tondeur J, Zhu C (2011) Predicting ICT integration into classroom teaching in Chinese primary school: exploring the complex interplay of teacher-related variables. J Comput Assist Learn 27(2):160–172

Schmid M, Brianza E, Petko D (2020) Developing a short assessment instrument for Technological Pedagogical Content Knowledge (TPACK.xs) and comparing the factor structure of an integrative and a transformative model. Comput Educ 157:103967. https://doi.org/10.1016/j.compedu.2020.103967

Schmidt DA, Baran E, Thompson AD et al. (2009b) Technological pedagogical content knowledge (TPACK): The development and validation of an assessment instrument for pre-service teachers. J Res Technol Educ 42(2):123–149. https://doi.org/10.1080/15391523.2009.10782544

Shatto B, Erwin K (2017) Teaching millennials and generation Z: Bridging the generational divide. Creat Nurs 23(1). https://doi.org/10.1891/1078-4535.23.1.24

Shinas VH, Yilmaz-Ozden S, Mouza C, Karchmer-Klein R, Glutting JJ (2013) Examining domains of technological pedagogical content knowledge using factor analysis. J Res Technol Educ 45(4):339–360. https://doi.org/10.1080/15391523.2013.10782609

Teo T, Milutinović V, Zhou M et al. (2017) Traditional vs. innovative uses of computers among mathematics pre-service teachers in Serbia. Interact Learn Environ 25(7):6483–6511. https://doi.org/10.1080/10494820.2016.1189943

Valtonen T, Sointu ET, Mäkitalo-Siegl K, Kukkonen J (2015) Developing a TPACK measurement instrument for 21st century pre-service teachers. Seminar.net 11(2):87–100

Wang F, Suwanthep J (2017) Constructivism-based mobile application for EFL vocabulary learning. Int J Learn 3(2) https://doi.org/10.18178/IJLT.3.2.106-112

Wang LYK, Lew SL, Lau SH, Leow MC (2019) Usability factors predicting continuance of intention to use cloud e-learning application. Heliyon 5(6) https://doi.org/10.1016/j.heliyon.2019.e01788

Acknowledgements

We thank all respondents of the study.

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethics statement

The need for ethics approval was waived by the IRB of the authors’ university. The study is in accordance with relevant guidelines and regulations.

Informed consent

Informed consent was obtained from all respondents.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Sofyan, S., Habibi, A., Sofwan, M. et al. TPACK–UotI: the validation of an assessment instrument for elementary school teachers. Humanit Soc Sci Commun 10, 55 (2023). https://doi.org/10.1057/s41599-023-01533-0

DOI: https://doi.org/10.1057/s41599-023-01533-0

- Springer Nature Limited
