Abstract

The widespread problems with scientific fraud, questionable research practices, and the reliability of scientific results have led to an increased focus on research integrity (RI). International organisations and networks have been established, declarations have been issued, and codes of conduct have been formulated. The abstract principles of these documents are now also being translated into concrete topic areas that research performing organisations (RPOs) and research funding organisations (RFOs) should focus on. However, so far, we know very little about disciplinary differences in the need for RI support from RPOs and RFOs. This paper attempts to fill this knowledge gap. It reports on a comprehensive focus group study comprising 30 focus group interviews carried out in eight European countries and addressing the following research question: “Which RI topics would researchers and stakeholders from the four main areas of research (humanities, social science, natural science incl. technical science, and medical science incl. biomedicine) prioritise for RPOs and RFOs?” The paper presents the results of these focus group interviews and gives an overview of the priorities of the four main areas of research. It ends with six policy recommendations and a reflection on how the results of the study can be used in RPOs and RFOs.


Introduction and literature background

Scientific research is vital for extending the frontiers of knowledge. Universities and other research performing organisations (RPOs) are the cradle of competence, knowledge, and curiosity, playing a pivotal role in society by informing social, political, and economic decision-making. Research funding organisations (RFOs) contribute to this crucial role of science by setting directions and priorities for research and by allocating the necessary funding. The authority and societal relevance of research depend on the trustworthiness of research results. However, science is fallible, contextually situated, and “never pure” (Shapin, 2010); it progresses by learning from mistakes and from the refutation of its own hypotheses, and it is itself a source of uncertainties and dilemmas (Beck, 1992). Therefore, it is crucial that the scientific community and the public can trust researchers and their organisations, knowing that they uphold research integrity (RI), defined as “the attitude and habit of the researchers to conduct their research according to appropriate ethical, legal and professional frameworks, obligations and standards” (ENERI, 2019).

However, over the last 20 years, an alarmingly high number of RI-related problems have been identified and reported. These include cases of scientific fraud and widespread problems with questionable research practices (Steneck, 2006; Fanelli, 2009; Bouter et al., 2016; Ravn and Sørensen, 2021) as well as problems with the reliability of scientific results (Ioannidis, 2005; Resnik and Shamoo, 2017; Baker, 2016). Ultimately, such violations of good research practice risk diminishing the public’s and the research community’s trust in science, its institutions, and its practitioners (Roberts et al., 2020; Edwards and Roy, 2017). Therefore, for reasons of both validity and trust (Bouter et al., 2016), research integrity should be strengthened among researchers and research institutions.

To strengthen RI, international networks have been established, such as the European Network of Research Integrity Offices (ENRIO) and the World Conferences on Research Integrity Foundation (WCRI). These organisations have issued different guidance documents on, for instance, RI principles (the Singapore Statement) (WCRI, 2010), RI in international collaborations (the Montreal Statement) (WCRI, 2013), criteria for the advancement of researchers (the Hong Kong Principles) (Moher et al., 2020), and RI investigations (ENERI and ENRIO, 2019). There are good examples of RPOs and RFOs successfully putting RI policies into practice (Mejlgaard et al., 2020; Lerouge and Hol, 2020). There is also recognition that RPOs need support to put RI principles into practice, as outlined in, for instance, the European Code of Conduct for Research Integrity (ALLEA, 2017) or in the recommendations from the National Academies of Sciences, Engineering, and Medicine (2017) in the USA. RPOs and RFOs are expected to develop concrete organisational policies that are, or will be, implemented across disciplines in the research ecosystem. However, there is little evidence on how best to address this important task and make abstract RI principles and codes of conduct concrete and relevant for researchers across different disciplines, organisations, and national contexts. A recent scoping review of available evidence showed that most RI practice guidance was developed for research in general, applicable to all research fields (Ščepanović et al., 2021). The majority of RI documents were guidelines developed by RPOs, which focused on researchers. Only a few RI practices originating from RFOs were identified. While medical science had many guidance documents and support structures in place (patient-centred data management plans, ethical review boards, etc.), the main research areas of natural science, social science, and the humanities did not have much discipline-specific RI guidance.

If we look at factors influencing the implementation of practices for RI in RPOs and RFOs, there is a great deal of evidence on factors negatively influencing RI but only a few studies on how to make a positive change in research environments at the institutional level (Gaskell et al., 2019). A Cochrane systematic review of interventions to promote RI (Marušić et al., 2016) showed that only a few have been shown to be effective. There was only low-quality evidence on training students about plagiarism, and no studies at that time showed successful interventions at the organisational level (Marušić et al., 2016). More recently, Haven et al. (2020) showed that a transparent research climate is important for enhancing RI.

A study of RI-related changes in a research organisation showed that the responses of researchers were influenced not only by academic and professional training and experience, but also by the micro-organisational context in which the change was implemented (Owen et al., 2021). Furthermore, developing skills for RI and advocating and supporting RI implementation with specific interventions may not be sufficient and should be complemented by periodic formal research assessment exercises to ensure that the RI practices have been fully implemented (Owen et al., 2021). Economic modelling of institutional rewards for research further suggests that research institutions should balance both an “effort incentive policy” (to increase research productivity) and an “anti-fraud policy” (to deal with misconduct) (Le Maux et al., 2019).

The point of departure for our focus group study is the idea that research organisations need to have a clear plan for how to promote RI (Mejlgaard et al., 2020). This plan must describe which policies and actions an organisation will apply to promote RI and point to relevant guidelines that can support researchers across the main areas of research. The first step in such a plan is to identify the most relevant topics. Some topics have already been identified by the research community, such as training and mentoring for RI, improving research culture, protecting both whistleblowers and researchers accused of misconduct (Forsberg et al., 2018), as well as research methodology and reporting/publishing (EViR Funders’ Forum, 2020).

Our focus group study is part of the EC-funded project “Standard Operating Procedures for Research Integrity” (SOPs4RI), which aims to support transformational RI processes across European research.Footnote 1 Ahead of the focus group study, a broad, three-round Delphi consultation of research policy experts and institutional leaders was conducted to identify priority topics for RI promotion plans (Labib et al., 2021). This resulted in two lists of RI topics, with nine topics for RPOs and 11 topics for RFOs identified as important.Footnote 2

However, neither the Delphi study (Labib et al., 2021) nor any other study tells us anything about disciplinary differences in the relevance of these RI topics. We know from the literature on, for example, epistemic cultures that there are notable differences in how research processes are carried out and what constitutes scientific knowledge (e.g., Knorr Cetina, 1999; Knorr Cetina & Reichmann, 2015). These differences also lead to variation in the perception of questionable research practices (QRPs) across research areas (e.g., Ravn and Sørensen, 2021). Therefore, we might expect disciplinary differences in the requirements for research integrity guidelines and organisational support. However, so far, we know very little about what such differences entail. In the present paper, we attempt to fill this knowledge gap by examining how best to promote RI across the main areas of research.Footnote 3 We conducted 30 focus group interviews across Europe in an attempt to answer the question, “Which RI topics would researchers and stakeholders from the four main areas of research (humanities, social science, natural science incl. technical science, and medical science incl. biomedicine) prioritise for RPOs and RFOs?” The paper reports on the results of these focus group interviews and gives an overview of the priorities of the four main areas of research. The paper ends with policy recommendations and a reflection on how the results of our study can be used in RPOs and RFOs.

Methods

Research and interview design

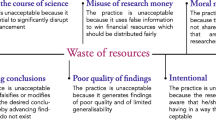

Through a focus group study design, we aimed to explore how the main areas of research (humanities, social science, natural science incl. technical science, and medical science incl. biomedicine) perceived and prioritised a number of different RI topics relevant for RPOs and RFOs, respectively (based on Labib et al., 2021 and Mejlgaard et al., 2020). The focus group interviews consisted of three parts (cf. Document S3: Moderator/interview guide). First, some open questions were introduced that related to the different main areas of research’s needs for RI support. Then, a discussion followed on selected RI topics. Finally, a sorting exercise was introduced where nine and 11 topics for RPOs and RFOs, respectively, were sorted and ranked. Here, based on discussion and consensus within the group, participants were asked to place the topics within one of three categories: “very important”, “somewhat important”, and “of none or minimal importance” for enhancing RI within their main area of research. The exercise was carried out via pre-printed, laminated cards (see examples in Document S5). On one side of the card was the name of the RI topic under discussion—on the other side the subtopics associated with this topic. In this way, the interviewees could use the subtopics to understand the meaning of the topic, discuss the importance of it for their research area and, finally, place it in one of the three categories. Table 1 shows the topics, including subtopics, that were sorted and ranked, and this paper reports on the results of this sorting exercise.

Sample and recruitment strategy

The study comprises 30 focus group interviews across eight European countries.Footnote 4 The interviews were conducted between December 2019 and April 2020. Fourteen of the focus groups concentrated on RPOs and involved researchers exclusively, while 16 groups focused on RFOs and included researchers as well as relevant stakeholders. A total of 147 researchers and stakeholders participated in the study.Footnote 5 Table 2 displays the distribution of participants by research area, gender, and academic level. Tables S1 and S2 give a more detailed overview of the composition of each focus group.

See Table S4 for further information on the number of invitations sent out, acceptance rates, and cancellations.

Study participants were recruited based on a number of sampling criteria to ensure overall variation in research areas and disciplines as well as in stakeholder representation in the mixed focus groups. For both the researcher-only groups (targeting RPOs) and the mixed groups (targeting RFOs), each group was homogeneous with regard to area of research. Furthermore, the RPO groups were composed of researchers with shared methodological and epistemic approaches in terms of “research orientation”. In the humanities groups focusing on RPOs, one group was composed of researchers from language disciplines, one from historical disciplines, and the last from communication disciplines. In the social sciences, the groups were divided between qualitative researchers (two groups) and quantitative researchers (one group). In the natural science groups, three groups were composed of researchers doing laboratory/experimental/applied/field research and one of researchers doing theoretical work. Finally, in the medical science groups, two groups conducted basic research and two were clinical/translational/public health in character. The exact disciplines represented in these groups are shown in Table S1.

In the 16 groups focusing on RFOs, four groups were conducted per main area of research (humanities, social science, natural science incl. technical science, and medical science incl. biomedicine). All groups consisted of both researchers and relevant stakeholders. In the composition of these groups, variation in stakeholder representation was key. Stakeholders included research integrity and research ethics committee members, public and private funders, representatives from RPO management and industry, trade unions, publishers, etc. (see Table S2 for a full account of participants).

The following sample criteria were also applied to enhance representation and diversity and to introduce heterogeneity into the groups to counterbalance group homogeneity:

- One stakeholder employed in a high-level management position in a research funding organisation (RFO) and one stakeholder from a research integrity office (RIO) should be included in each of the groups.
- Both senior/permanent position holders and junior researchers/non-permanent position holders should be represented in the groups. Interdependent participants (e.g., a lab leader and an employee from the same lab) should not be recruited to the same group.
- The gender composition of the focus groups should be balanced.
- Two to three different disciplines should be represented in each focus group.
- At least two types of stakeholders should be included in each mixed focus group. Stakeholders must have discipline-specific knowledge.
- The selected disciplines should be broadly representative of research being conducted in the four main areas.

Not all focus groups met all sampling criteria (see Tables S1 and S2). However, across the complete sample, the variation stipulated by the criteria was achieved. Overall, the focus group study applied a purposeful sampling strategy (Patton, 1990) based on the pre-selected criteria outlined above. Moreover, the study used snowball/chain sampling: relevant volunteers from existing networks, together with new volunteers recruited at, for instance, conferences, were asked to act as gatekeepers and assist with the recruitment of relevant researchers and stakeholders within their organisations and institutions. This strategy was supplemented by an approach in which participants were identified from institutional webpages and invited by e-mail.

Ethical considerations

Ethical approval

Ethical approval was obtained from the Research Ethics Committee at Aarhus University (ref. no. 2019-0015957). In addition, national approval was obtained in Croatia.

Risk and inconveniences

The focus group study posed a small risk of disclosing sensitive information, for instance concerning research misconduct cases. In the focus group introduction, the facilitators emphasised the issue of confidentiality, and by signing the informed consent form, participants agreed to maintain the confidentiality of information discussed during the focus group interview.

Informed consent

The informed consent form followed the guidelines of Aarhus University. For the face-to-face interviews, consent forms were signed before the commencement of the interviews. For the online focus group interviews, consent was given verbally and subsequently provided in a written version.

Data management and privacy

The focus group invitation letter included a link to the privacy policy specifying the procedures for data management and privacy in compliance with the General Data Protection Regulation (GDPR).

Coding and analytical strategy

Recording and transcription

All interviews were conducted in English, recorded, and transcribed to enhance accuracy and reliability. All transcribed interviews were coded in the software programme NVivo (ver. 12), which is designed to facilitate data management, analysis, and reporting. The coding process mainly followed a deductive coding strategy and was directed by a set of pre-defined categories that relate to the list of RI topics and subtopics explored through the moderator/interview guide (cf. Document S3, Table 1, and Sørensen et al., 2020). The coding process also made use of a more explorative approach, in which new topics and cross-cutting themes emerged through an inductive coding procedure. The data were coded through first- and second-cycle coding (Saldaña, 2013) by one of the authors but discussed collectively.

Analytical strategy and construction of heat maps

The analytical strategy prioritised within-case analyses of each of the discussed RI topics in order to understand its particular significance for the different main areas of research, the specific dynamics and correlations at play, and context-dependent implications that may reflect particularly important national and institutional variation. The analytical strategy also included a thematic across-case comparison that complemented and strengthened the explanatory force of the individual within-case analyses by focusing on differences and similarities across the main areas of research. To visualise the prioritisation of topics in the sorting exercise and the associated discussions, 10 heat maps were constructed through two rounds of coding. In the first round, two researchers analysed the outputs from the sorting exercises (pictures, cf. Document S5, and transcriptions) in order to place each topic in one of the five categories applied: “very important”, “important”, “somewhat important”, “of minimal importance”, and “not important”.Footnote 7 This was done for each of the 30 focus groups. In the second round, disparities in the coding were analysed and discussed. After this, both coders’ final codings were given a score from 1 to 5 (the lowest category, “not important”, scored 1, the next-lowest category 2, and so on). Subsequently, the average score was calculated and used as the basis of the heat maps (Figs. 1–10).
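To make the scoring step concrete, the following is a minimal Python sketch of the averaging described above. The five-point scale follows the text; the function names, the hypothetical codings, and the rule used for the combined (hexagon) column, an unweighted mean across focus groups, are assumptions for illustration and not the authors' procedure or data.

```python
# Five-point coding scale from the Methods section:
# "not important" scores 1, the next-lowest category 2, and so on up to 5.
SCALE = {
    "not important": 1,
    "of minimal importance": 2,
    "somewhat important": 3,
    "important": 4,
    "very important": 5,
}


def cell_score(coder_a: str, coder_b: str) -> float:
    """Average the two coders' final codings for one topic in one focus group."""
    return (SCALE[coder_a] + SCALE[coder_b]) / 2


def combined_score(cell_scores: list[float]) -> float:
    """Combine per-group scores for one topic into a single value.

    ASSUMPTION: an unweighted mean across focus groups; the exact rule
    used for the combined column is not stated in the text.
    """
    return sum(cell_scores) / len(cell_scores)


if __name__ == "__main__":
    # Hypothetical codings for one topic in three focus groups
    # (illustrative values only, not data from the study).
    codings = [
        ("very important", "important"),
        ("somewhat important", "somewhat important"),
        ("very important", "very important"),
    ]
    cells = [cell_score(a, b) for a, b in codings]
    print(cells)                            # [4.5, 3.0, 5.0]
    print(round(combined_score(cells), 2))  # 4.17
```

Because any combined value of this kind is an average, a topic can receive a middling combined score even when several individual groups rated it "very important".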

Fig. 1. The heat map displays the results of the sorting exercise of nine RI topics (horizontal rows) across the 14 RPO focus groups (vertical columns). The column on the left side with hexagons shows the combined results for all 14 RPO focus groups. The color chart at the top explains what the heat map colors mean. The construction of the heat maps is explained in the “Methods” section.

Fig. 2. The heat map displays the results of the sorting exercise of nine RI topics (horizontal rows) across the three humanities RPO focus groups (vertical columns). The column on the left side with hexagons shows the combined results for all three humanities RPO focus groups. The color chart at the top explains what the heat map colors mean. The construction of the heat maps is explained in the “Methods” section.

Fig. 3. The heat map displays the results of the sorting exercise of nine RI topics (horizontal rows) across the three social science RPO focus groups (vertical columns). The column on the left side with hexagons shows the combined results for all three social science RPO focus groups. The color chart at the top explains what the heat map colors mean. The construction of the heat maps is explained in the “Methods” section.

Fig. 4. The heat map displays the results of the sorting exercise of nine RI topics (horizontal rows) across the four natural science RPO focus groups (vertical columns). The column on the left side with hexagons shows the combined results for all four natural science RPO focus groups. The color chart at the top explains what the heat map colors mean. The construction of the heat maps is explained in the “Methods” section.

Fig. 5. The heat map displays the results of the sorting exercise of nine RI topics (horizontal rows) across the four medical science RPO focus groups (vertical columns). The column on the left side with hexagons shows the combined results for all four medical science RPO focus groups. The color chart at the top explains what the heat map colors mean. The construction of the heat maps is explained in the “Methods” section.

Fig. 6. The heat map displays the results of the sorting exercise of 11 RI topics (horizontal rows) across the 16 RFO focus groups (vertical columns). The column on the left side with hexagons shows the combined results for all 16 RFO focus groups. The color chart at the top explains what the heat map colors mean. The construction of the heat maps is explained in the “Methods” section.

Fig. 7. The heat map displays the results of the sorting exercise of 11 RI topics (horizontal rows) across the four humanities RFO focus groups (vertical columns). The column on the left side with hexagons shows the combined results for all four humanities RFO focus groups. The color chart at the top explains what the heat map colors mean. The construction of the heat maps is explained in the “Methods” section.

Fig. 8. The heat map displays the results of the sorting exercise of 11 RI topics (horizontal rows) across the four social science RFO focus groups (vertical columns). The column on the left side with hexagons shows the combined results for all four social science RFO focus groups. The color chart at the top explains what the heat map colors mean. The construction of the heat maps is explained in the “Methods” section.

Fig. 9. The heat map displays the results of the sorting exercise of 11 RI topics (horizontal rows) across the four natural science RFO focus groups (vertical columns). The column on the left side with hexagons shows the combined results for all four natural science RFO focus groups. The color chart at the top explains what the heat map colors mean. The construction of the heat maps is explained in the “Methods” section.

Fig. 10. The heat map displays the results of the sorting exercise of 11 RI topics (horizontal rows) across the four medical science RFO focus groups (vertical columns). The column on the left side with hexagons shows the combined results for all four medical science RFO focus groups. The color chart at the top explains what the heat map colors mean. The construction of the heat maps is explained in the “Methods” section.

Results

In this section, we present the results of the sorting exercise that was carried out as part of the 30 focus group interviews. We present the results in two subsections. First, we report on the results from the 14 focus groups focusing on topics for RPOs. Then, we present the results from the 16 focus groups that discussed RFO-related topics. In both subsections, we first show the combined results of all the focus groups within the subsection in a heat map. After this, we present the results per main area of research (humanities, social science, natural science, and medical science), reported in one heat map per main area. Finally, we end both subsections with a table that summarises the most important RI topics for the four main areas of research.

Research performing organisations

This subsection presents the results from the 14 focus groups that discussed a set of nine research integrity topics for RPOs. Sixty-seven researchers took part in the interviews, which were carried out in eight countries. The interviewees represented key approaches and core disciplines within the four main areas of research. Table S1 describes the 14 focus groups in detail, including the number of interviewees in each group, the approaches and disciplines represented, seniority level, gender balance, and the country where the focus group interview was conducted.

The combined results of the RPO focus groups

Figure 1 shows the results of the 14 focus groups that discussed the importance of the nine RPO topics. The heat map depicts the scores from each of the 14 groups as well as the combined score across all groups for each topic. The combined scores show that two topics (“Supervision and mentoring” and “Research environment”) were considered very important, five topics were considered important, whereas the last two topics (“Collaborative research among RPOs” and “Declaration of competing interests”) received the combined score “somewhat important”. However, if we look at how the individual groups scored these two topics, they were rated “very important” in four and six groups, respectively. Our focus group interviews thus confirmed the importance of the RPO topics listed in Table 1.

The results per main area of research for RPOs

If we look separately at the four main areas of research, there are, however, also notable differences in the perceived importance of individual topics. Figure 2 shows the results of the sorting exercise in the humanities focus groups. Apart from “Collaborative research among RPOs”, all topics were perceived to be important. The topic “Research environment” was considered the most important.

Across the humanities groups, the topic “Responsible supervision and mentoring” was seen as a foundation for a solid research culture, as expressed by this interviewee, for example: “It holds up the quality. So I think that it’s very, very important that we have a good sense that responsibility is key in, when you supervise, when you mentor …” (Senior-level researcher in history of ideas, focus group 1). On the other hand, the topics “Research ethics structures” and “Data practices and management” were assessed rather differently among the three groups. For some, ethics does not play any role: “The actors I look at, they’re all dead so [all laugh]” (Assistant professor in archaeology, focus group 1). For others, it is seen as fundamental: “I mean it’s such a crucial, but basic thing, I think that its, yer, having a framework in place that is ethical” (Postdoc in theoretical linguistics, focus group 11).

These differences also reflect variation in the experiences and needs of the different disciplinary subfields within the humanities. As a main research area, the humanities consist of many disciplinary fields that vary in the methods they use and, in some cases, even belong to different epistemic cultures (Knorr Cetina, 1999; Ravn and Sørensen, 2021). In other words, there are differences in the way in which they “do research” and understand what good research is, and these differences manifest themselves in dissimilar needs for guidelines and SOPs. Accordingly, the interviewees in the humanities groups would like RPOs to consider disciplinary differences when formulating research integrity policies and guidelines in order to ensure that the policies are relevant: “… we are always held to standards that have nothing to do with our practice” (Senior-level researcher in history of ideas, focus group 1). According to the interviewees, this would also help RPOs ensure the legitimacy of their policies and procedures.

Differences in the way research is carried out can probably also explain why “Collaborative research among RPOs” got a low score in the humanities groups. Across the humanities, publications on average have fewer co-authors than publications within other main areas of research (Henriksen, 2016).

The need to consider disciplinary differences was also evident in the focus group interviews with social scientists. In these interviews, eight of the nine topics were assessed as important or very important (Fig. 3). For example, one participant described “Research environment” as follows: “it’s very important because it creates a culture […]. If an institute or I’m new at some place and already their culture has integrity then I might learn by doing from them.” (Postdoc, focus group 16). “Research ethics structures” was the only topic that the social science groups considered to be of minimal importance for RPOs to develop guidelines and SOPs for. The discussions in the groups showed that this was not because the topic was considered unimportant per se, but because it was seen as a topic that RPOs already handled well. “Collaborative research among RPOs” also received a relatively low combined score. However, it is important to note the difference between the quantitative group, which found this topic very important, and the two qualitative groups, which found it somewhat important.

In the natural science groups (including technical science), all issues except one were considered to be important or very important (Fig. 4). The only topic that got the combined score “somewhat important” was “Declaration of competing interests”. However, here, there were noteworthy differences between the four groups. Two groups found it very important, while one group thought this topic was already well covered: “… they [RPOs] all have clear guidelines on what you have to do in case of competing interest. You have different ones but they’re all pretty clear. It’s a very important topic, of course, but it’s being handled in a quite appropriate way as far as I know” (Associate professor in bioscience and engineering, focus group 17). The last group questioned the effect of declarations of competing interests: “I don’t think that declaration is the most important thing. The principle is important but not the declaration. What is declaration? [laughter] If you sign something that you don’t do, it’s not necessary that you’ll follow this […]” (Senior researcher in geoscience, focus group 23).

In contrast to the humanities and the qualitative methods groups within social science, the natural and technical science researchers could relate to most RI topics discussed in the focus groups. In these groups, “classic problems” within RI, such as conflicts on authorship, publication pressure, and problems related to supervision, were discussed across the groups. In addition, problems related to collaborative work, especially between universities and industry, were also pointed out as important, most likely because university-industry collaboration plays an important role within technical science and can sometimes generate conflicts, as this interviewee explains:

So, if you publish anything with [company name] or any of the companies, they will wanna read it, and they have to sign off on specifically what you write, and they will ask you to change some sentences that they don’t like. So, they never changed what they said, but they changed some of the wordings. […] So, I felt like they should just shush, like they shouldn’t have any say in what I write, because it’s my paper, it’s my data, but it’s also their data, and if I work with somebody in the university, they will also have permission to say that, right. We all have to agree on what it is, we say […] (Postdoc in chemistry, focus group 6).

The importance of thinking about disciplinary differences when formulating policies and guidelines for RI topics was also evident in the natural science groups. For example, all three experimental groups perceived “Research ethics structures” to be very important, whereas the theoretical group considered this topic “not important”. One of the interviewees explained the lack of importance of ethics structures for theoretical natural science with a lack of connection between theoretical work and living beings: “… I guess it doesn’t have any importance because it’s theoretical, it’s not on anything living” (Postdoc in chemistry, focus group 6).

Finally, the interviewees in the medical science focus groups (Fig. 5) found it particularly important to focus on “Education and training in RI”, “Responsible supervision and mentoring”, and “Research environment”, as the following quotes illustrate:

On research environment:

[…] if we don’t support a research culture and a research environment there’s nothing for us to do. […] we have to be fertilised with good energy to make some good projects, and if there’s no culture where there’s fair procedures for appointments, where there’s adequate education and skills training, […] if none of these things are in place, there’s no need for us to do what we’re doing (Associate professor in clinical nursing, focus group 10).

On supervision and mentoring:

Ph.D students are expensive, because we have to send them to courses, conferences all these things, and we have to put so much energy trying to read the articles, to promote their science, and when something goes wrong, we are the main person taking the fall for it. So we need to have our names standing out if we don’t, therefore coaching and supervision is very, very important (Associate professor in oncology, focus group 10).

On education and training:

So, how do we perceive the term responsible science? Each one perceives it in a different way […] For me what we need is education […] (Professor in medical law and ethics, focus group 30).

“Dealing with breaches of RI”, “Research ethics structures”, and “Data practices and management” were also assessed as important issues for RPOs to focus on, whereas the results for the last three topics are less clear. “Declaration of competing interests” received a different score in each of the four groups. The interviewees saw it as important, but also as a mere formality: “It’s something that is always written, even though it’s just a little star at the end, ‘if there are any cases of conflict of interest, do not’. […] I think what we’re saying is that it’s important, but what I’m trying to say is, that it’s already written in many of the documents […]” (Associate professor in clinical nursing, focus group 10). “Publication and communication” and “Collaborative research among RPOs” also scored relatively low, but here there seems to be a difference between the clinical and basic science groups: these topics were perceived as more important by the clinical groups than by the basic research groups.

Interviewees also pointed out that procedures and standards often differ considerably between RPOs and countries. One interviewee expressed it in this way:

Where should she apply for ethics? [There are] some other EU regulations that we have to follow on top of the local, regional, national requirements for ethics, so just to say that the mix of who we are and where we work influences, it makes a lot of, I don’t know, confusion somehow. And I think some rules and guidelines would be beneficial at times (Associate professor in clinical nursing, focus group 10).

This is often a challenge in collaborative projects. The interviewees therefore requested not only more guidelines and SOPs but also harmonisation across RPOs and countries, as one of them put it: “If you are going to set up an office of research integrity at the European level (like USA) that would be very interesting. I think that would be a good idea” (Senior researcher in biophysics, focus group 30).

Top-prioritised topics per main area of research for RPOs

As this subsection has shown, there are notable differences between the main areas of research. We therefore end this subsection with an overview of the top-prioritised topics per main area of research (Table 3), showing which topics each main area considers most important. Only topics that were rated “very important” in at least three out of four groups (or in two out of three groups for the main areas in which only three groups were conducted) are included.

Research funding organisations

In addition to the 14 focus group interviews focusing on the RPOs, 16 further focus group interviews were carried out to discuss the perceived importance of the 11 topics that Labib et al. (2021) identified as important for RFOs. In this subsection, we report on the results from these focus groups. As in the RPO groups, we worked with the four main areas of research and conducted four focus group interviews within each of them. The interviewees were both researchers from the particular main area of research and relevant stakeholders, such as people in management positions at RPOs, representatives of funders, RIOs, and so on (see Table S2 for details). We did not divide the groups into different approaches within the four main areas of research but invited the interviewees as representatives of their main area. This means, for example, that both qualitative and quantitative researchers took part in all four focus group interviews within social science.

The combined results of the RFO focus groups

The combined results of the 16 focus groups that discussed the importance of the 11 topics identified by Labib et al. (2021) are shown in Fig. 6. This combined heat map shows that nine of the 11 topics are considered “important”, while two topics, “Intellectual property rights” and “Collaboration within funded projects”, were only regarded as “somewhat important”. Still, these two topics were considered “very important” or “important” in seven and eight of the 16 groups, respectively. The combined heat map thus testifies to general support for the 11 topics identified by Labib et al. (2021).

The results per main area of research for RFOs

Despite this general validation of the 11 topics, the focus group interviews also revealed some disciplinary differences in the perceived importance of the topics. We therefore continue this subsection by examining how each of the four main areas of research perceived the importance of the topics. We begin with the humanities and the heat map in Fig. 7, which shows the importance assigned to the 11 RFO-related topics by the interviewees in the humanities groups. Across the four groups, eight of the topics were found to be either important or very important for funders to focus on in order to support the RI of their beneficiaries. Two topics (“Intellectual property issues” and “Collaboration within funded projects”) were only considered “somewhat important”, while “Declaration of competing interests” was found to be of minimal importance.

Although the interviewees from the humanities were relatively positive towards the idea that RFOs develop their own guidelines and SOPs for at least eight of the 11 topics, they also expressed concern that this might lead to increased bureaucracy, as one interviewee put it: “Yeah, it’s hard to say because I think that there’s already like a lot of bureaucracy […] I wonder if maybe we need to readdress where the bureaucracy is focused, when it comes to these things” (Associate researcher in digital humanities, focus group 13).

Further, although there are clear patterns in the way in which the humanities prioritise different topics, it is also important to note the relatively large differences between the groups. These differences are larger than within the other main areas of research and show how difficult it is to talk about the humanities as such across disciplinary, institutional, and national differences. When interpreting the differences in the perceptions of the importance of the topics, one also has to take the different subtopics related to each topic into consideration (cf. Table 1). Some subtopics might be important for some interviewees, while others are of lesser importance.

Turning now to social science, the first notable feature of the heat map (Fig. 8) is that it is much greener than the humanities heat map (Fig. 7). This points to generally stronger support within social science for the idea that RFOs can help enhance RI through guidelines and SOPs. Except for “Intellectual property rights”, which the interviewees found hard to relate to and not especially relevant for their area of research, the combined results show that the interviewees found all topics “important” or “very important”. Two topics, “Research ethics structures” and “Publication & communication”, were even considered “very important” by all groups. Three other topics (“Dealing with breaches of RI”, “Selection & evaluation of proposals”, and “Collaboration within funded projects”) were placed in the “very important” category in three of the four focus groups, showing strong support for the idea that RFOs should make guidelines and SOPs for these topics.

Despite a generally positive attitude towards RFOs providing guidelines and SOPs to beneficiaries, the interviewees in the social science focus groups also warned against possible negative bureaucratic side effects of adding more guidelines and SOPs to already existing ones:

P2: […] we are spending more and more human resources and, along with that financial resources to explain how did we spent our money (Management position at university, focus group 22).

P1: I fully agree. And I think we are creating way too much burden, administrating, which could be used for actual productive scientific work (Associate professor of psychology, focus group 22).

If we look at the results from the natural science groups (Fig. 9), which in our study also include technical science, we again see a strong overall validation of the 11 topics identified in the Delphi study. As was the case within social science, all topics are placed in the “very important” category in at least two of the four groups. However, although the overall picture is that all 11 topics are important for RFOs to address (except “Publication & communication”, which only received the combined score “somewhat important”), the discussions in the four focus groups revealed substantial differences in the perceived importance of individual topics. In most cases, these differences can be explained by disciplinary, institutional, and especially national differences. For example, “Dealing with breaches of RI” was considered “very important” in three groups, but not in the group conducted in Denmark. There, it was found to be “not important”, not because the topic was not seen as important per se, but because a legal system for handling scientific misconduct is already in place in Denmark. As one of the interviewees explained,

[…] in Denmark we have actually a legal framework for dealing with this. It’s not something we invented at [name of RPO], it’s a standard for all Danish universities. So we also need to respect our system, we might not completely agree with the system, but then we need to work on changing the system but not having this overruled by a funding agency (RIO, focus group 7).

However, not all countries have such systems in place:

[…] from a funder’s perspective if you for instance were funding research in Italy or Greece, then from a funder’s perspective there might be a need for you to deal with breaches of research integrity, because there might not be any system at the university (RIO, focus group 7).

In the medical science groups (including biomedicine), the division of work between RPOs and RFOs was at the centre of the discussions. For example, a representative of a funder said: “[…] [I]t’s important to state that you find this important as a funder, but I don’t think it’s important for the funding agency, whatever the source of money is, to control this, to monitor this. That would be at the university level” (Private funding org. representative, focus group 8). Another interviewee said that “[…] research integrity is more handled at the […] university where they already have committees etc. in place to handle this” (Professor of molecular pharmacology, focus group 8).

Although most of the topics were seen as important in themselves, interviewees pointed out that there must be a balance of responsibilities between RPOs and RFOs. They emphasised that for topics such as “Research ethics structures”, “Independence”, “Updating and implementing the RI policy”, and “Publication and communication”, funders should be careful not to interfere with the internal affairs of RPOs. Instead of making their own guidelines and SOPs, funders could require that procedures be in place at the beneficiary institutions. One representative of a funder said that they “[…] use the structures that are set in place by the universities” (Public funding org. representative, focus group 19), and a representative of a different funder said that

[…] it would make little sense to make rules that are in addition to or maybe even in conflict with the rules that actually govern whether people do or do not get approval from ethics committees. So I would say no to having separate rules, but yes to stating as a funder that you expect people to adhere to the rules that are already in place (Private funding org. representative, focus group 8).

Across groups, interviewees said that “Declaration of competing interests” and “Selection and evaluation of proposals” were more obvious topics for the funders to develop their own standards for.

Finally, as Fig. 10 shows, three topics received the combined score “somewhat important”: “Funders’ expectations of RPOs”, “Collaboration within funded projects”, and “Monitoring of funded applications”. According to the interviewees, these topics are difficult both to implement and to follow up on effectively. The relatively low score of “Monitoring of funded applications” also reflected a fear of unnecessary paperwork and bureaucracy: “[…] it’s really difficult to do this [monitoring of funded applications] without adding much more paperwork and kind of administrative, you know, also for the researchers […]” (Administrative employee in science communication, focus group 9).

Top-prioritised topics per main area of research for RFOs

We end this subsection by summarising the main results from the focus group interviews on the RFO topics. Table 4 shows the topics that the four main areas of research would particularly like RFOs to focus on. As in Table 3, the threshold has been set quite high so that the topics included are the ones that at least three out of four groups found very important.

Discussion and recommendations

In the previous section, we presented the results of the sorting exercise conducted in the 30 focus group interviews. In the focus group discussions, the interviewees recognised the importance of the RI topics listed in Table 1 (based on Labib et al., 2021 and Mejlgaard et al., 2020). However, the results also revealed differences in how the four main areas of research perceived the importance of individual topics. Some topics were more important for some areas than for others, as summarised in Tables 3 and 4. These results can help fill the knowledge gap identified in the Introduction concerning disciplinary differences in the need for organisational RI support. In this section, we first discuss the implications of these results in the form of six recommendations (I-VI) and end with a reflection on the strengths and limitations of our study.

Consider disciplinary differences

The study clearly shows that research and funding organisations must consider disciplinary differences when formulating research integrity policies. Variation across and within research areas influences RI perceptions and results in different challenges in terms of, for instance, data management, ethical considerations, and authorship distribution, which in turn call for discipline-specific RI support and guidelines. Researchers clearly want RI policies that are sensitive to disciplinary differences. Attention to such prioritisations will not only help organisations make the most of their resources and RI efforts; tailored policies and guidelines will also be of higher quality and relevance and, as a result, gain greater legitimacy among researchers. As shown in the Results section, there are also important differences within the main areas of research that need to be considered when RI policy initiatives are designed and implemented. These differences seem to be particularly evident within the humanities but were also identified within social science between qualitative and quantitative research approaches. Differences in the need for RI support and guidance are, for instance, evident in the demand for research ethics structures and requirements as well as in publication and communication issues. These needs relate to the nature of one’s research as well as the types of collaborations formed. As for ethical requirements, there is important variation within the humanities as to whether research entails the need to protect human subjects, animals, the environment, and data. For example, a linguist working with children and other vulnerable groups faces different ethical issues and needs than a researcher of medieval history working with 800-year-old texts. Standard ethical requirements and review procedures are not always experienced as aligned with the research performed and its associated risks and impacts, and such requirements should be adapted to disciplinary contexts and the research activities performed.

RPOs: build a sound research environment

Despite disciplinary variation, the study generally points to the research environment as a key RI topic area for RPOs to address. The research environment – the cultural norms and values of an institution (Valkenburg et al., 2021, p. 5) and its handling of appointments, incentive structures, conflicts, competition, diversity issues, and so on – is also seen as an underlying construct for managing and cultivating other aspects of RI. Issues such as hyper-competitiveness, performance pressures, and power imbalances were emphasised in the focus groups as main obstacles to a sound RI environment. These findings are in line with other recent studies drawing attention to the profound effects that strong organisational cultures have on reinforcing responsible research (Forsberg et al., 2018; Haven et al., 2020).

In our opinion, although individual researchers carry responsibilities and have to live up to professional standards (Steneck, 2006), the “responsibilisation” of RI is unevenly targeted at the individual researcher rather than linked to institutions and the science system (cf. also Davies, 2019, p. 1250; Bonn and Pinxten, 2019). Our study confirms the importance of paying more attention to the institutional level. We therefore urge research organisations to consider how they can build a sound research environment. In practice, institutions could, for example, ensure fair and transparent procedures for appointments, assessments, and promotions. They could also address hyper-competition, excessive publication pressure, and diversity issues. Moreover, institutions could provide adequate education and skills training at both junior and senior levels and secure mentoring arrangements (Labib et al., 2021, May 14). Mejlgaard et al. (2020) point out that such responsible research processes should be supported by transparency, quality assessments, and clear procedures for handling allegations of misconduct (see also www.sops4ri.eu and Lerouge and Hol, 2020, for additional actions and tools).

RFOs: adapt RI topics into concrete actions

For RFOs, procedures for managing breaches of RI, securing research ethics structures, and addressing publication and communication issues were pointed out as especially important topics. We therefore recommend that RFOs review their evaluation and funding procedures and formulate policies on how funding proposals are selected, reviewed, and monitored. While there is consensus among study participants that RFOs have an important role to play in implementing sound and effective RI policies, the interviewees also emphasised that RFOs should refrain from establishing RI procedures that run parallel to, and diverge from, those of research organisations. Instead, funding organisations could take an active role in making sure that RPOs properly address RI issues, that is, by ensuring that they have clear policies, governance structures, and guidelines in place. Increased collaboration and harmonisation of RI practices between RFOs and RPOs represent an untapped potential that deserves greater attention. Further insights into specific policies, good practices, and clearer demarcations of RFO responsibilities within the wider science system could advance existing RI policies and standards and help sustain the current momentum of “responsibilisation” in scientific governance (Davies, 2019) and of RI as a new discourse “in the making” (Owen et al., 2021, p. 10).

Remember organisational and national differences

Besides disciplinary differences, RPOs and RFOs should also consider national legislation and organisational differences, since such contextual factors were found to affect the importance attached to different RI topics and the level of attention given to established RI practices and procedures. For instance, variations in funding and in the legal and institutional structures for handling allegations and breaches of research integrity create different demands for change and for effort. Our study was designed to elicit understandings of differences between main areas of research and does not provide systematic evidence for differences between types of organisations. Nevertheless, RI institutionalisation has been shown to be highly dependent on micro-organisational structures and effects (Owen et al., 2021). In general, RI measures and policies should be created with a view to existing national and organisational RI landscapes and adapted to local contexts. In this regard, we recommend that organisational integrity plans and initiatives be developed in close dialogue with all stakeholders (management, staff, and researchers from all disciplines) in order to make these policies as useful and effective as possible.

Avoid bureaucracy and unnecessary use of resources

When formulating RI policies and implementing new procedures, organisations should avoid unnecessary use of resources and excessive bureaucracy. Across disciplines, institutions, and countries, researchers were concerned with striking a balance between implementing sound and relevant procedures that can stimulate RI practices and avoiding unnecessary bureaucracy. Researchers expressed a willingness to work thoroughly with research integrity issues, but they feared that new policies and standards would be placed on top of already existing requirements. For them, this would imply a loss of valuable research time. On this basis, we recommend that organisations carefully consider existing as well as future RI policies. Duplication and parallel systems should be avoided, and existing policies should be evaluated through cost-benefit analyses. Raising awareness of, and disseminating information about, already established guidance and support structures could also promote better use of existing resources. One overall message was clearly conveyed: RI requirements and tools have to be meaningful, flexible, and practical for researchers to use if they are to support and promote RI in practice.

Make a plan to improve RI

Finally, based on the study and the five previous recommendations, we recommend that RPOs and RFOs develop a coherent plan for how they want to implement, promote, and sustain RI (see also Mejlgaard et al., 2020; Bouter, 2020). We acknowledge that research institutions have limited resources and therefore have to prioritise, also when it comes to RI actions and policies. The topics and subtopics assessed by researchers and stakeholders in this study could provide a first checklist, not only for RPOs and RFOs but also for smaller units such as faculties or research departments. Such a checklist can help them ensure that relevant guidelines and policies are in place to assist researchers in conducting their research according to appropriate ethical, legal, and professional standards (ENERI, 2019), and adapt these guidelines and policies to organisational and disciplinary needs.

Strengths and limitations of the study

Given the scale and scope of the present study, we have collected an unprecedented amount of data on the disciplinary importance and prioritisation of a large number of RI topics. Apart from its size, a strength of our study is its complex design, comprising 30 focus groups conducted across eight countries with researchers as well as other relevant stakeholders. This rigorous approach allowed us to explore in depth the similarities and differences in perceptions of RI topics across the four main areas of research. The voices of 147 researchers and stakeholders carry qualitative weight in exploring existing challenges to fostering RI and in providing nuanced understandings of the RI requirements of the different main areas of research. Although most core disciplines within the four main areas of research were represented in the focus groups, a potential weakness of the study is that not all disciplines were represented. Further, owing to its focus on main areas of research (e.g., the humanities), the study cannot give detailed accounts of the need for RI support within specific disciplines (e.g., history or literature studies).

Data availability

All relevant documents and reports from this focus group study can be accessed via the study’s OSF page: https://osf.io/e9u8t/. In early 2022, all transcripts from the focus group interviews will also be made openly available on this page (in anonymised form).

Notes

SOPs4RI includes a number of sub-studies in addition to the focus group study: two literature scoping reviews (Gaskell et al., 2019), an expert interview study with 23 research-integrity experts across RPOs (Ščepanović et al., 2019), a Delphi study with a panel of 68 RPO and 52 RFO research-integrity experts (Labib et al., 2021), a survey, and a pilot study (www.sops4ri.eu).

The Delphi study identified 12 topics for RPOs and 11 topics for RFOs to be included in the RI policies of these institutions (see Table 1, “Ranked list of RI topics”, in Labib et al., 2021). However, the 12 RPO topics were later merged into nine topics, presented in Mejlgaard et al. (2020). This study uses the list of nine topics for RPOs.

Throughout this paper, “main areas of research” refers to the humanities, social sciences, natural sciences (including technical science), and medical sciences (including biomedicine). Each of these main areas of research consists of a number of disciplines. Social science, for example, covers disciplines such as political science, law, and economics.

The focus group interviews were carried out in Denmark, Spain, the Netherlands, Germany, Belgium, Croatia, Italy, and Greece. Twenty-two of them were conducted face to face, while the remaining eight had to be carried out online because of the COVID-19 pandemic.

The interviewees were not paid for participating. They took part because they were interested in the topic of research integrity and/or because they felt obliged to contribute to what they saw as an important discussion. After the focus group interviews, they received a small gift (a box of chocolates or a book voucher).

See Table S4 for further information on the number of invitations sent out, acceptance rates, and cancellations.

In some cases, participants placed a topic in-between two of the three pre-specified categories. We therefore ended up with five categories in the heat maps.

References

ALLEA–All European Academies (2017) The European code of conduct for research integrity. Revised edition. https://www.allea.org/wp-content/uploads/2017/05/ALLEA-European-Code-of-Conduct-for-Research-Integrity-2017.pdf. Accessed 21 July 2021

Baker M (2016) 1,500 scientists lift the lid on reproducibility. Survey sheds light on the “crisis” rocking research. Nature 533:452–454

National Academies of Sciences, Engineering, and Medicine (NASEM) (2017) Fostering integrity in research. The National Academies Press, Washington, DC. https://doi.org/10.17226/21896

Beck U (1992) Risk society: towards a new modernity. Sage, London

Bonn NA, Pinxten W (2019) A decade of empirical research on research integrity: what have we (not) looked at? J Empir Res Hum Res Ethics 14(4):338–352

Bouter L (2020) What research institutions can do to foster research integrity. Sci Eng Ethics 26:2363–2369. https://doi.org/10.1007/s11948-020-00178-5

Bouter LM, Tijdink J, Axelsen N et al (2016) Ranking major and minor research misbehaviors: results from a survey among participants of four World Conferences on Research Integrity. Res Integr Peer Rev 1:17. https://doi.org/10.1186/s41073-016-0024-5

Davies SR (2019) An ethics of the system: talking to scientists about research integrity. Sci Eng Ethics 25(4):1235–1253. https://doi.org/10.1007/s11948-018-0064-y

Edwards MA, Roy S (2017) Academic research in the 21st Century: maintaining scientific integrity in a climate of perverse incentives and hypercompetition. Environ Eng Sci 34:51–61

ENERI–European Network of Research Ethics and Research Integrity (2019) What is research integrity? https://eneri.eu/what-is-research-integrity/. Accessed 21 July 2021

ENERI–European Network of Research Ethics and Research Integrity, ENRIO–European Network of Research Integrity Offices (2019) Recommendations for the Investigation of Research Misconduct: ENRIO Handbook. http://www.enrio.eu/wp-content/uploads/2019/03/INV-Handbook_ENRIO_web_final.pdf. Accessed 21 July 2021

EViR Funders’ Forum (2020) Guiding Principles. https://evir.org/our-principles/. Accessed 21 July 2021

Fanelli D (2009) How many scientists fabricate and falsify research? A systematic review and meta-analysis of survey data. PLoS ONE 4(5):e5738. https://doi.org/10.1371/journal.pone.0005738

Forsberg EM, Anthun FO, Bailey S et al (2018) Working with research integrity – guidance for research performing organisations: the Bonn PRINTEGER statement. Sci Eng Ethics 24(4):1023–1034. https://doi.org/10.1007/s11948-018-0034-4

Gaskell G, Ščepanović R, Buljan I et al (2019) D3.2: Scoping reviews including multi-level model of research cultures and research conduct. https://sops4ri.eu/wp-content/uploads/D3.2_Scoping-reviews-including-multi-level-model-of-research-cultures-and-research-conduct-1.pdf. Accessed 21 July 2021

Haven T, Pasman HR, Widdershoven G et al (2020) Researchers’ perceptions of a responsible research climate: a multi focus group study. Sci Eng Ethics 26:3017–3036. https://doi.org/10.1007/s11948-020-00256-8

Henriksen D (2016) The rise in co-authorship in the social sciences (1980–2013). Scientometrics 107(2):455–476. https://doi.org/10.1007/s11192-016-1849-x

Ioannidis JPA (2005) Why most published research findings are false. PLoS Med 2(8):e124. https://doi.org/10.1371/journal.pmed.0020124

Knorr-Cetina K (1999) Epistemic cultures: how the sciences make knowledge. Harvard University Press, Cambridge, Massachusetts

Knorr-Cetina K, Reichmann W (2015) Epistemic cultures. In: International encyclopedia of the social & behavioral sciences, 2nd edn. Elsevier

Labib K, Evans N, Scepanovic R et al (2021) Education and training policies for research integrity: Insights from a focus group study. https://doi.org/10.31219/osf.io/p38nw

Labib K, Roje R, Bouter L et al (2021) Important topics for fostering research integrity by research performing and research funding organizations: a Delphi consensus study. Sci Eng Ethics 27. https://doi.org/10.1007/s11948-021-00322-9

Le Maux B, Necker S, Rocaboy Y (2019) Cheat or perish? A theory of scientific customs. Res Policy 48(9). https://doi.org/10.1016/j.respol.2019.05.001

Lerouge I, Hol A (2020) Towards a research integrity culture at universities: From recommendations to implementation. League of European Research Universities. https://www.leru.org/publications/towards-a-research-integrity-culture-at-universities-from-recommendations-to-implementation. Accessed 21 July 2021

Marušić A, Wager E, Utrobicic A et al. (2016) Interventions to prevent misconduct and promote integrity in research and publication. Cochrane Database Syst Rev 4(4):MR000038. https://doi.org/10.1002/14651858.MR000038.pub2

Mejlgaard N, Bouter LM, Gaskell G et al. (2020) Research integrity: nine ways to move from talk to walk. Nature 586(7829):358–360

Moher D, Bouter L, Kleinert S et al. (2020) The Hong Kong principles for assessing researchers: fostering research integrity. PLoS Biol 18(7):e3000737

Owen R, Pansera M, Macnaghten P, Randles S (2021) Organisational institutionalisation of responsible innovation. Res Policy 50(1):104132. https://doi.org/10.1016/j.respol.2020.104132

Patton MQ (1990) Qualitative evaluation and research methods, 2nd edn. Sage Publications, Inc, Thousand Oaks, CA, US

Ravn T, Sørensen M (2021) Exploring the Gray Area: Similarities and Differences in Questionable Research Practices (QRPs) Across Main Areas of Research. Sci Eng Ethics 27. https://doi.org/10.1007/s11948-021-00310-z

Resnik DB, Shamoo AE (2017) Reproducibility and research integrity. Account Res 24(2):116–123. https://doi.org/10.1080/08989621.2016.1257387

Roberts LL, Sibum HO, Mody CCM (2020) Integrating the history of science into broader discussions of research integrity and fraud. Hist Sci 58(4):354–368

Saldaña J (2013) The coding manual for qualitative researchers, 2nd edn. SAGE Publications Ltd, London, UK

Ščepanović R, Labib K, Buljan I et al. (2021) Practices for research integrity promotion in research performing organisations and research funding organisations: a scoping review. Sci Eng Ethics 27(4). https://doi.org/10.1007/s11948-021-00281-1

Ščepanović R, Tomić V, Buljan I, Marušić A (2019) D3.3: report on the results of the explorative interviews. https://sops4ri.eu/wp-content/uploads/D3.3_Report-on-the-results-of-the-explorative-interviews-1.pdf. Accessed 21 July 2021

Shapin S (2010) Never pure: historical studies of science as if it was produced by people with bodies, situated in time, space, culture, and society, and struggling for credibility and authority. The Johns Hopkins University Press, Baltimore

Sørensen MP, Ravn T, Bendtsen A-K et al. (2020) D5.2: report on the results of the focus group interviews. https://www.sops4ri.eu/wp-content/uploads/D5.2_Report-on-the-Results-of-the-Focus-Group-Interviews.pdf. Accessed 21 July 2021

Steneck NH (2006) Fostering integrity in research: definitions, current knowledge, and future directions. Sci Eng Ethics 12(1):53–74. https://doi.org/10.1007/PL00022268

Valkenburg G, Dix G, Tijdink J et al. (2021) Expanding research integrity: a cultural-practice perspective. Sci Eng Ethics 27(10). https://doi.org/10.1007/s11948-021-00291-z

WCRI–World Conference on Research Integrity (2010) Singapore statement on research integrity. https://wcrif.org/guidance/singapore-statement. Accessed 21 July 2021

WCRI–World Conference on Research Integrity (2013) Montreal statement on research integrity in cross-boundary research collaborations. https://wcrif.org/guidance/montreal-statement. Accessed 21 July 2021

Acknowledgements

We would like to express our gratitude to the 147 participants in the 30 focus group interviews who took the time to share their knowledge and experiences with us. We would also like to thank the many people who helped us set up the interviews at the different institutions around Europe. For reasons of anonymity, we cannot mention your names here, but without your help it would not have been possible to conduct this study. We would further like to thank our SOPs4RI colleagues George Gaskell (London), Rea Roje and Ivan Buljan (Split), Krishma Labib, Natalie Evans and Guy Widdershoven (Amsterdam), Wolfgang Kaltenbrunnen and Josephine Bergmans (Leiden), Eleni Spyrakou (Athens), Giuseppe A. Veltri (Trento), and Anna Domaradzka (Warsaw) for their help and support. Thank you also to Amalie Due Svendsen and Anders Møller Jørgensen (Aarhus), Jonathan Bening (Leiden), Dan Gibson (Leiden), Vasileios Markakis (Athens), and Andrijana Perković Paloš (Split), who helped transcribe the interviews. The SOPs4RI project is funded by the European Union’s Horizon 2020 research and innovation programme under grant agreement No 824481.

Author information

Contributions

MPS: Conceptualisation, Methodology, Investigation, Formal analysis, Validation, Writing – original draft, Writing – review & editing, Supervision, Project administration. TR: Conceptualisation, Methodology, Investigation, Formal analysis, Validation, Writing – original draft, Writing – review & editing. AM: Conceptualisation, Investigation, Writing – original draft, Writing – review & editing, Supervision. ARE: Conceptualisation, Investigation, Formal analysis, Validation, Writing – review & editing, Visualisation. PK: Conceptualisation, Investigation, Writing – review & editing, Visualisation. JKT: Conceptualisation, Investigation, Writing – review & editing, Supervision. AKB: Investigation, Formal analysis, Validation, Writing – review & editing.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Sørensen, M.P., Ravn, T., Marušić, A. et al. Strengthening research integrity: which topic areas should organisations focus on? Humanit Soc Sci Commun 8, 198 (2021). https://doi.org/10.1057/s41599-021-00874-y

DOI: https://doi.org/10.1057/s41599-021-00874-y

- Springer Nature Limited