Abstract

Meta-analyses summarize a field’s research base and are therefore highly influential. Despite their value, the standards for an excellent meta-analysis, one that is potentially award-winning, have changed in the last decade. Each step of a meta-analysis is now more formalized, from the identification of relevant articles to coding, moderator analysis, and reporting of results. What was exemplary a decade ago can be somewhat dated today. Using the award-winning meta-analysis by Stahl et al. (Unraveling the effects of cultural diversity in teams: A meta-analysis of research on multicultural work groups. Journal of International Business Studies, 41(4):690–709, 2010) as an exemplar, we adopted a multi-disciplinary approach (e.g., management, psychology, health sciences) to summarize the anatomy (i.e., fundamental components) of a modern meta-analysis, focusing on: (1) data collection (i.e., literature search and screening, coding), (2) data preparation (i.e., treatment of multiple effect sizes, outlier identification and management, publication bias), (3) data analysis (i.e., average effect sizes, heterogeneity of effect sizes, moderator search), and (4) reporting (i.e., transparency and reproducibility, future research directions). In addition, we provide guidelines and a decision-making tree for when even foundational and highly cited meta-analyses should be updated. Based on the latest evidence, we summarize what journal editors and reviewers should expect, authors should provide, and readers (i.e., other researchers, practitioners, and policymakers) should consider about meta-analytic reviews.

Resume

Les méta-analyses résument la base d’un domaine de recherche et sont donc très influentes. Malgré leur valeur, les normes d’une excellente méta-analyse, celle qui peut être récompensée, ont changé au cours de la dernière décennie. Chaque étape d’une méta-analyse est désormais plus formalisée, de l’identification des articles pertinents au codage, à l’analyse des modérateurs et à la communication des résultats. Ce qui était exemplaire il y a dix ans peut être quelque peu daté aujourd’hui. En prenant comme exemple la méta-analyse primée de Stahl, Maznevski, Voigt et Jonsen (2010), nous avons adopté une approche pluridisciplinaire (par exemple, management, psychologie, sciences de la santé) pour résumer l’anatomie (c’est-à-dire les composantes fondamentales) d’une méta-analyse moderne, en nous concentrant sur : (i) la collecte de données (c’est-à-dire la recherche et le tri de la littérature, le codage), (ii) la préparation des données (c’est-à-dire le traitement des tailles d’effet multiples, l’identification et la gestion des valeurs aberrantes, le biais de publication), (iii) l’analyse des données (c’est-à-dire les tailles d’effet moyennes, l’hétérogénéité des tailles d’effet, la recherche de modérateurs) et (iv) la production de rapports (c’est-à-dire la transparence et la reproductibilité, les futures orientations de recherche). En outre, nous fournissons des lignes directrices et un arbre décisionnel pour les cas où même les méta-analyses fondamentales et fortement citées devraient être mises à jour. Sur la base des dernières données, nous résumons ce à quoi les rédacteurs et les réviseurs de revues doivent s’attendre, ce que les auteurs doivent fournir et ce que les lecteurs (c’est-à-dire les autres chercheurs, les praticiens et les décideurs politiques) doivent envisager concernant les études méta-analytiques.

Resumen

Los meta-análisis resumen la base de investigación de un campo y, por esto, son altamente influyentes. A pesar de su valor, los estándares para un meta-análisis excelente, uno que sea potencialmente galardonado, han cambiado en la última década. Cada paso de un meta-análisis está ahora más formalizado, desde la identificación de los artículos relevantes para codificar, el análisis del moderador, y el reporte de los resultados. Lo que era ejemplar hace una década atrás puede estar obsoleto hoy. Usando el análisis galardonado de Stahl, Maznevski, Voigt, y Jonsen (2010) como ejemplar, adoptamos un enfoque multidisciplinario (por ejemplo, administración, psicología, ciencias de la salud) para resumir la anatomía (es decir, los componentes fundamentales) de un meta-análisis moderno, enfocándonos en: (i) recolección de datos (es decir, la búsqueda de literatura y examinación, codificación), (ii) preparación de los datos (es decir, los tratamientos de efectos de los tamaños de efecto múltiples, identificación y gestión de atípicos, sesgo de publicación), (iii) análisis de datos (es decir, tamaños del efecto promedio, la heterogeneidad de los tamaños del efecto, y la búsqueda de moderador), y (iv) reporte (es decir, transparencia y reproducibilidad, direcciones de investigación futura). Asimismo, proporcionamos lineamientos y un árbol de toma de decisiones para cuando se deben actualizar los meta-análisis de base y altamente citados. Sobre la base de las últimas evidencias, resumimos lo que los editores y revisores de revistas deben esperar, los autores deben proporcionar, y lectores (es decir, otros investigadores, profesionales, y formuladores de políticas) deben considerar acerca de las revisiones meta-analíticas.

Resumo

Meta-análises resumem a base de pesquisa de um campo e, portanto, são altamente influentes. Apesar de seu valor, os padrões para uma excelente meta-análise, uma que seja potencialmente digna de ser premiada, mudaram na última década. Cada etapa de uma meta-análise é agora mais formalizada, desde a identificação de artigos relevantes até a codificação, análise do moderador e relato dos resultados. O que era exemplar há uma década pode ser um pouco ultrapassado hoje. Usando a premiada meta-análise de Stahl, Maznevski, Voigt e Jonsen (2010) como um exemplo, adotamos uma abordagem multidisciplinar (por exemplo, gestão, psicologia, ciências da saúde) para resumir a anatomia (ou seja, componentes fundamentais) de uma meta-análise moderna, com foco em: (i) coleta de dados (ou seja, pesquisa da literatura e triagem, codificação), (ii) preparação de dados (ou seja, tratamento de múltiplas magnitudes de efeito, identificação e gestão de outliers, viés de publicação), (iii) análise de dados (isto é, médias magnitudes de efeito, heterogeneidade de magnitudes de efeito, pesquisa de moderador) e (iv) relatórios (isto é, transparência e reprodutibilidade, direções de pesquisas futuras). Além disso, fornecemos diretrizes e uma árvore de tomada de decisão para quando até mesmo meta-análises fundamentais e altamente citadas devem ser atualizadas. Com base em evidências mais recentes, resumimos o que editores de periódicos e revisores devem esperar, autores devem fornecer e os leitores (ou seja, outros pesquisadores, praticantes e formuladores de políticas) devem considerar a respeito de revisões meta-analíticas.

抽象

荟萃分析总结一个领域的研究基础, 因而具有很大的影响。尽管有其价值, 有潜能获奖的出色的荟萃分析标准在过去十年中有了变化。荟萃分析的每个步骤, 从相关文章的识别到编码、调节变数分析、以及结果报告, 现在都更加正式化。十年前的样板在今天可能是过时的。我们以Stahl、Maznevski、Voigt和Jonsen(2010)获奖的荟萃分析为例, 采用多学科方法(例如管理学、心理学、健康科学)来总结现代荟萃分析的解剖结构(即基本成分) , 重点关注:(i)数据收集(即文献搜索与筛选, 编码), (ii) 数据准备(即多效应大小的处理, 异常值的识别与管理, 发布偏差), (iii)数据分析(即平均效应大小, 效果大小的异质性, 调节变数的搜索)和(iv)报告(即透明度和可重复性, 未来研究方向)。此外, 我们还提供了何时应更新甚至是基础的和高引用率的荟萃分析的指南和决策树。根据最新证据, 我们针对荟萃分析, 综述总结了期刊编辑和审阅者应有的期望, 作者应提供的内容, 以及读者(即其他研究人员、从业人员和政策制定者)应有的考虑。

Introduction

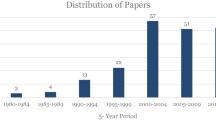

Scientific knowledge is the result of a multi-generational collaboration where we cumulatively generate and connect findings gleaned from individual studies (Beugelsdijk, van Witteloostuijn, & Meyer, 2020). Meta-analysis is critical to this process, being the methodology of choice to quantitatively synthesize existing empirical evidence and draw evidence-based recommendations for practice and policymaking (Aguinis, Pierce, Bosco, Dalton, & Dalton, 2011; Davies, Nutley, & Smith, 1999). Although meta-analyses were first formally conducted in the 1970s, it was not until the following decade that they began to be promoted (e.g., Hedges, 1982; Hedges & Olkin, 1985; Hunter, Schmidt, & Jackson, 1982; Rosenthal & Rubin, 1982), after which they spread across almost all quantitative fields, including business and management (Cortina, Aguinis, & DeShon, 2017). Aguinis, Pierce, et al. (2011) reported a staggering increase from 55 business and management-related articles using meta-analysis for the 1970–1985 period to 6918 articles for the 1994–2009 period.

Although there are several notable examples of meta-analysis, there are many more that are of suspect quality (Ioannidis, 2016). Consequently, we take the opportunity to discuss components of a modern meta-analysis, noting how the methodology has continued to advance considerably (e.g., Havránek et al., 2020). To illustrate the evolution of meta-analysis, we use the award-winning contribution by Stahl, Maznevski, Voigt and Jonsen (2010), who effectively summarized and made sense of the voluminous correlational literature on team diversity and cultural differences.

It is difficult to overstate how relevant Stahl et al.’s (2010) topic of diversity has become. Having a diverse workforce that reflects the larger society has only grown as a social justice issue over the last decade (Fujimoto, Härtel, & Azmat, 2013; Tasheva & Hillman, 2019). Furthermore, team diversity also has potential organizational benefits, the “value-in-diversity” thesis (Fine, Sojo, & Lawford-Smith, 2020). Consequently, their meta-analysis speaks to the innumerable institutional efforts to increase diversity as well as those who question these efforts’ effectiveness (e.g., the “Google’s Ideological Echo Chamber” memo that challenged whether increasing gender diversity in the programming field would increase performance; Fortune, 2017).

The focus of our article is on meta-analytic methodology. Stahl et al.’s study makes a useful contrast because, although its methodology was advanced for its time, the field has evolved rapidly. We draw upon recently established developments to contrast traditional versus modern meta-analytic methodology, summarizing our recommendations in Table 1. Our goal is to assist authors planning to carry out a meta-analytical study, journal editors and reviewers asked to evaluate their resulting work, and consumers of the knowledge produced (i.e., other researchers, practitioners, and policymakers) by highlighting common areas of concern. Accordingly, we offer recommendations and, perhaps more importantly, specific implementation guidelines that make our recommendations concrete, tangible, and realistic.

Modern methodology

Using Stahl et al. as an exemplar, we summarize the anatomy (i.e., fundamental components) of a modern meta-analysis, focusing on: (1) data collection (i.e., literature search and screening, coding), (2) data preparation (i.e., treatment of multiple effect sizes, outlier identification and management, publication bias), (3) data analysis (i.e., average effect sizes, heterogeneity of effect sizes, moderator search), and (4) reporting (i.e., transparency and reproducibility, future research directions). Stahl et al. graciously shared their database with us, which we re-analyzed using more recently developed procedures.

Stage 1: Data Collection

Data collection is the creation of the database that enables a meta-analysis. Inherently, there is tension between making a meta-analysis manageable, that is small enough that it can be finished, and making it comprehensive and broad to make a meaningful contribution. With the research base growing exponentially but research time and efficiency remaining relatively constant, the temptation is to limit the topic arbitrarily by journals, by language, by publication year, or by the way constructs are measured (e.g., specific measure of cultural distance). The risk is that the meta-analysis is so narrowly conceived that, as Bem (1995: 172) puts it, “Nobody will give a damn.” One solution is to acknowledge that meta-analysis is increasingly becoming a “Big Science” project, requiring larger groups of collaborators. Although well-funded meta-analytic laboratories do exist, they are almost exclusively in the medical field. In business, it is likely that influential reviews will increasingly become the purview of well-managed academic crowdsourcing projects (i.e., Massive Peer Production) whose leaders can tackle larger topics (i.e., community augmented meta-analyses; Tsuji, Bergmann, & Cristia, 2014), such as exemplified by Many Labs (e.g., Klein et al., 2018).

With a large team or a smaller but more dedicated group, researchers have a freer hand in determining how to define the topic and the edges that define the literature. To this end, Tranfield, Denyer and Smart (2003) discussed that the identification of a topic, described as Phase 0, “may be an iterative process of definition, clarification, and refinement” (Tranfield et al., 2003: 214). Relatedly, Siddaway, Wood and Hedges (2019) highlighted scoping and planning as key stages that precede the literature search and screening procedures. Indeed, it is useful to conduct a pre-meta-analysis scoping study, ensuring that the research question is small enough to be manageable and large enough to be meaningful, that there is a sufficient research base for analysis, and that other recent reviews have not already addressed the same topic. Denyer and Tranfield (2008) stressed how an author’s prior and prolonged interest in the topic is immensely helpful, exemplified by a history of publishing in a particular domain. In fact, deep familiarity with the nuances of a field assists in every step of a meta-analytic review. Consistent with this point, Stahl et al.’s References section shows this familiarity, containing multiple publications by the first two authors. Gunter Stahl has emphasized cultural values while Martha Maznevski has focused on team development, with enough overlap between the two that Maznevski published in a handbook edited by Stahl (Maznevski, Davison, & Jonsen, 2006).

Once a worthy topic within one’s capabilities has been established, the most arduous part of meta-analysis begins. First is the literature search and screening (i.e., locating and obtaining relevant studies) and second is coding (i.e., extracting the data contained within the primary studies).

Literature search and screening

Bosco, Steel, Oswald, Uggerslev and Field (2015) alluded to academia’s “Tower of Babel” or what Larsen and Bong (2016) more formally labeled as the construct identity fallacy. These terms convey the idea that there can be dozens of terms and scores of measures for the same construct (i.e., jingle) and different constructs can go by the same name (i.e., jangle), such as cultural distance versus the Kogut and Singh index (Beugelsdijk, Ambos, & Nell, 2018; Maseland, Dow, & Steel, 2018). Furthermore, many research fields have exploded in size, almost exponentially (Bornmann & Mutz, 2015), making a literature search massively harder. Then there are the numerous databases within which the targeted articles may be hidden due to their often flawed or archaic organization (Gusenbauer & Haddaway, 2020), especially their keyword search functions. As per Spellman’s (2015) appraisal, “Our keyword system has become worthless, and we now rely too much on literal word searches that do not find similar (or analogous) research if the same terms are not used to describe it” (Spellman, 2015: 894).

Given this difficulty and that literature searches often occur in an iterative manner, where researchers are learning the parameters of the search as they conduct them (i.e., “Realist Search”; Booth, Briscoe, & Wright, 2020), there is an incentive to filter or simplify the procedure and to not properly document such a fundamentally flawed process so as to not leave it open to critique from reviewers’ potentially idealistic standards (Aguinis, Ramani, & Alabduljader, 2018). The result can be an implicit selection bias, where the body of articles is a subset of what is of interest (Lee, Bosco, Steel, & Uggerslev, 2017). Rothstein, Sutton and Borenstein (2005) described four types of bias: availability bias (selective inclusion of studies that are easily accessible to the researcher), cost bias (selective inclusion of studies that are available free or at low costs), familiarity bias (selective inclusion of studies only from one’s own field or discipline), and language bias (selective inclusion of studies published in English). The last of these is particularly common as well as particularly ironic in international business (IB) research. To this list, we would like to add citation bias due to The Matthew Effect (Merton, 1968). With increased public information on citation structures thanks to software such as Google Scholar, there is the risk of a selective inclusion of those studies that are heavily cited, at the expense of studies that have not been picked up (yet). Each of these biases can be addressed, respectively, by searching the grey literature, finding access to pay-walled scientific journals, including databases outside one’s discipline, engaging in translation (at least those languages used in multiple sources), and not using a low citation rate as an exclusion criterion.

How was Stahl et al.’s literature search process? Adept for its time. They drew from multiple databases, which is recommended (Harari, Parola, Hartwell, & Riegelman, 2020), and they supplemented with a variety of other techniques, including manual searches. They provided a sensible set of keywords but also contacted researchers operating in the team field to acquire the “grey literature” of obscure or unpublished works. Some other techniques could be added, such as Ones, Viswesvaran and Schmidt’s (2017) suggestion that “snowballing” (aka “ancestry searching” or “pearl growing”; Booth, 2008) should be de rigueur. In other words, “by working from the more contemporary references for meta-analysis, tracking these references for the prior meta-analytic work on which they relied, and iteratively continuing this process, it is possible to identify a set of common early references with no published predecessors” (Aguinis, Dalton, Bosco, Pierce, & Dalton, 2011: 9). At present, however, some of Stahl et al.’s efforts would likely be critiqued in terms of replicability or reproducibility and transparency (Aguinis et al., 2018; Beugelsdijk et al., 2020). For example, if the keywords “team” and “diversity” are entered as search terms, Google Scholar alone yields close to two million hits. Other screening processes must have occurred but are not reported; instead, Stahl et al. provided a sampling of techniques designed to reassure reviewers that they made a concerted effort (e.g., “searches were performed on several different databases, including…. search strategies included…”, Stahl et al., 2010: 697).

Presently, in efforts to increase transparency and replicability, the PRISMA (Preferred Reporting Items for Systematic reviews and Meta-Analyses) method is often recommended, which requires being extremely explicit about the exact databases, the exact search terms, and the exact results, including duplicates and filtering criteria (Moher, Liberati, Tetzlaff, & Altman, 2009). Although more onerous, the PRISMA-P version goes even further in terms of transparency, advocating pre-registering of the entire systematic review protocol encapsulated in a 17-item checklist (Moher et al., 2015). And, at present, the 2020 version of PRISMA recommends a 27-item checklist, not including numerous sub-items, again with the goal of improving the trustworthiness of systematic reviews (Page et al., 2020). Given the attempt to minimize decisions in situ, proper adherence to the PRISMA protocols can be difficult when searches occur in an iterative manner, as researchers find new terms or measures as promising leads for relevant papers. When this happens, especially during the later stages of data preparation, researchers face the dilemma of either re-conducting the entire search process with the added criteria (substantively increasing the workload) or ignoring the new terms or measures (leading to a less than exhaustive search). New software has been developed to support search processes that are refined while they are being carried out, such as www.covidence.org, www.hubmeta.com, or https://revtools.net/ (with many more options curated at http://systematicreviewtools.com/, The Systematic Review Tool Box). These tools provide a computer-assisted walk-through of the search as well as the screening process, which starts with deduplication and filtering on abstract or title, followed by full-text filtering (with annotated decisions). Reviewers should expect that this information be reported in a supplemental file, along with the final list of all articles coded and details regarding effect sizes, sample sizes, measures, moderators, and other specific details that would enable readers to readily reproduce the creation of the meta-analytic database.
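
As a minimal illustration of the screening sequence just described (deduplication, then title or abstract filtering, then full-text filtering with annotated decisions), the following Python sketch tallies the counts that a PRISMA-style flow diagram reports. The `Record` fields, the duplicate-matching rule (same DOI or same normalized title), and the function name `prisma_flow` are assumptions made for this example; they are not part of PRISMA or of any of the tools named above.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Record:
    """One search hit. All field names are hypothetical, chosen for this sketch."""
    title: str
    source: str                 # database the hit came from
    doi: str = ""
    abstract_ok: bool = True    # decision at title/abstract screening
    full_text_ok: bool = True   # decision at full-text screening
    reason: str = ""            # annotated reason when excluded


def _keys(rec):
    """Keys used to spot duplicates: a normalized title and, if present, the DOI."""
    keys = {"t:" + "".join(ch for ch in rec.title.lower() if ch.isalnum())}
    if rec.doi:
        keys.add("d:" + rec.doi.lower())
    return keys


def prisma_flow(records):
    """Return the counts for each screening stage plus exclusion reasons."""
    seen, unique = set(), []
    for rec in records:
        keys = _keys(rec)
        if keys & seen:
            continue            # duplicate of an earlier hit
        seen |= keys
        unique.append(rec)
    screened_in = [r for r in unique if r.abstract_ok]
    included = [r for r in screened_in if r.full_text_ok]
    excluded = [r for r in unique if not (r.abstract_ok and r.full_text_ok)]
    return {
        "identified": len(records),
        "duplicates_removed": len(records) - len(unique),
        "screened": len(unique),
        "full_text_assessed": len(screened_in),
        "included": len(included),
        "exclusion_reasons": dict(Counter(r.reason for r in excluded)),
    }
```

Keeping every decision on the record itself, rather than deleting excluded hits, is what allows the full flow to be regenerated and reported in a supplemental file.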

It is a challenge to determine that a search approach has been thorough and exhaustive, given that reviewers may have an incomplete understanding of the search criteria or of how many articles can be expected. In other words, although the authors may have reported detailed inclusion and exclusion criteria, as per MARS (Kepes, McDaniel, Brannick, & Banks, 2013), how can reviewers evaluate their adequacy? We anticipate that in the future this need for construct intimacy may be emphasized and a meta-analysis would require first drawing upon or even publishing a deep review of the construct. For example, prior to publishing their own award-winning monograph on Hofstede’s cultural value dimensions (Taras, Kirkman, & Steel, 2010), two of the authors published a review of how culture itself was assessed (Taras, Rowney, & Steel, 2009), as well as a critique of the strengths and challenges of Hofstede’s measure (Taras & Steel, 2009). Another example is a pre-meta-analytic review of institutional distance (Kostova, Beugelsdijk, Scott, Kunst, Chua, & van Essen, 2020), where several of the authors previously published on the topic (e.g., Beugelsdijk, Kostova, Kunst, Spadafora, & van Essen, 2018; Kostova, Roth, & Dacin, 2008; Scott, 2014). Once authors have demonstrated prolonged and even affectionate familiarity with the topic (“immersion in the literature”; DeSimone, Köhler, & Schoen, 2019: 883), reviewers may be further reassured that the technical aspects of the search were adequately carried out if a librarian (i.e., an information specialist) was reported to be involved (Johnson & Hennessy, 2019).

Coding of the primary studies

Extracting all the information from a primary study can be a lengthy procedure, as a myriad of material is typically needed beyond the basics of sample size and the estimated size of a relationship between variables (i.e., correlation coefficient). This includes details required for psychometric corrections, conversion from different statistical outputs to a common effect size (e.g., r or d), and study conditions and context that permit later moderator analysis (i.e., conditions under which a relationship between variables is weaker or stronger). Properly implementing procedures such as applying psychometric corrections for measurement error and range restriction is not always straightforward (Aguinis, Hill, & Bailey, 2021; Hunter, Schmidt, & Le, 2006; Schmidt & Hunter, 2015; Yuan, Morgeson, & LeBreton, 2020). However, while this used to be a manual process requiring intimate statistical knowledge (e.g., including knowledge of how to correct for various methodological and statistical artifacts), fortunately, this process is increasingly semi-automated. For example, the meta-analytic program psychmeta (the psychometric meta-analysis toolkit) provides conversion to correlations for “Cohen’s d, independent samples t values (or their p values), two-group one-way ANOVA F values (or their p values), 1df χ2 values (or their p values), odds ratios, log odds ratios, Fisher z, and the common language effect size (CLES, A, AUC)” (Dahlke & Wiernik, 2019).
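
To make the idea of converting different statistical outputs to a common effect size concrete, the sketch below implements a few standard textbook conversions to the correlation metric in plain Python. It is an illustration only and is not the psychmeta implementation; the function names are hypothetical, and the conversions from F and χ² return unsigned values, so the direction of the effect must be coded from the primary study.

```python
import math


def d_to_r(d, n1=None, n2=None):
    """Cohen's d to r. Assumes equal group sizes unless n1 and n2 are given."""
    if n1 is None or n2 is None:
        a = 4.0
    else:
        a = (n1 + n2) ** 2 / (n1 * n2)   # correction for unequal group sizes
    return d / math.sqrt(d * d + a)


def t_to_r(t, df):
    """Independent-samples t value (with its degrees of freedom) to r."""
    return t / math.sqrt(t * t + df)


def f_to_r(f, df_error):
    """Two-group one-way ANOVA F to |r|; the sign is not recoverable from F."""
    return math.sqrt(f / (f + df_error))


def chi2_to_r(chi2, n):
    """1-df chi-square to |r| (phi), where n is the total sample size."""
    return math.sqrt(chi2 / n)


def fisher_z_to_r(z):
    """Fisher z back to r."""
    return math.tanh(z)


if __name__ == "__main__":
    print(round(t_to_r(2.0, 96), 3))   # t = 2.0 with 96 df
    print(round(d_to_r(0.5), 3))       # d = 0.5, equal group sizes assumed
```

Recording which conversion was applied to each primary study, alongside the converted value, keeps this step reproducible.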

However, a pernicious coding challenge is related to the literature search and screening process described earlier. For initial forays into a topic, a certain degree of conceptual “clumping” is necessary to permit sufficient studies for meta-analytic summary, in which we trade increased measurement variance for a larger database. As more studies become available, it is possible to make more refined choices and to tease apart broad constructs into component dimensions or adeptly merge selected measures to minimize mono-method bias (Podsakoff, MacKenzie, & Podsakoff, 2012). For example, Richard, Devinney, Yip and Johnson’s (2009) study on organizational performance found not all measures to be commensurable, such as return on total assets often being radically different from return on sales. As a result, only a subset of the obtained literature actually represents the target construct, and this subset can be difficult to determine.

Stahl et al. methodically reported how they coded cultural diversity as well as each of their dependent variables. This is an essential start, but, reflecting the previous problem of construct proliferation, more information regarding how each dependent variable was operationalized in each study would be a welcome addition. Although some information regarding the exact measures used is available directly from the authors, which was readily provided upon request, today many journals require these data to be perpetually archived and available through an Open Science repository. The issue of commensurability applies here, as one of Stahl et al.’s dependent variables was creativity. Ma’s (2009) meta-analysis on creativity divided the concept into three groups, with many separating problem-solving from artistic creativity. With only five studies on creativity available, mingling of different varieties of creativity is necessary. Still, it is important to note that Stahl et al. chose to treat studies that focused on the quality of ideas generated (e.g., Cady & Valentine, 1999) as an indicator of creativity along with more explicit measures, such as creativity of story endings (Paletz, Peng, Erez, & Maslach, 2004), leaving room for this to be re-explored as the corpus of results expanded.

To help alleviate concerns of commensurability, it is commendable that Stahl et al. used two independent raters to code the articles, documenting agreement using Cohen’s kappa. Notably, kappa is used to quantify interrater reliability for qualitative decisions, where there is a lack of an irrefutable gold standard or “the ‘correctness’ of ratings cannot be determined in a typical situation” (Sun, 2011: 147). Too often, kappa is used indiscriminately to include what should be indisputable decisions, such as sample size, and when there is disagreement, coders can simply reference the original document. Qualitative judgements, where there are no factual sources to adjudicate, reflect kappa’s intended purpose. Consequently, kappa can be inflated simply by including prosaic data entry decisions that reflect transcription (where it may suffice to mention double-coding with errors rectified by referencing the original document), and, with Stahl et al. reporting kappa “between .81 and .95” (Stahl et al., 2010: 699), it is unclear how it was used in this case.
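
The inflation described above can be illustrated with a short, self-contained calculation of Cohen’s kappa. The codes below are invented for the example and are not drawn from Stahl et al.’s data: ten qualitative judgements on which two raters agree eight times, followed by ten transcription-type entries (hypothetical sample sizes) on which they necessarily agree.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters assigning nominal codes to the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must code the same non-empty set of items")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement: product of the raters' marginal proportions per category.
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / n ** 2
    if expected == 1.0:
        return 1.0  # both raters used a single, identical category throughout
    return (observed - expected) / (1 - expected)


# Hypothetical qualitative judgements (is the measure "creativity" or "other"?).
judge_a = ["creat"] * 5 + ["other"] * 5
judge_b = ["creat"] * 4 + ["other"] * 5 + ["creat"]

# Hypothetical transcription entries (sample sizes copied from the articles).
sizes = [f"n={v}" for v in (40, 52, 61, 75, 88, 93, 104, 120, 133, 150)]

kappa_judgement = cohens_kappa(judge_a, judge_b)
kappa_pooled = cohens_kappa(judge_a + sizes, judge_b + sizes)
print(round(kappa_judgement, 2), round(kappa_pooled, 2))
```

Under these assumed inputs, pooling the transcription entries with the judgements raises kappa even though agreement on the judgements themselves is unchanged, which is why the two kinds of coding decisions are better reported separately.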

Consequently, reviewers should expect authors to provide additional reassurance beyond kappa that they grouped measures appropriately. This is not simply a case of using different indices of interrater agreement (LeBreton & Senter, 2008), which often prove interchangeable themselves, but using a battery of options to show measurement equivalence and that these measures are tapping into approximately the same construct. Although few measures will be completely identical (i.e., parallel forms), there are the traditional choices of showing different types of validity evidence (Wasserman & Bracken, 2003). For example, Taras et al. (2010) were faced with over 100 different measures of culture in their meta-analysis of Hofstede’s Values Survey Module. Their solution, which they document over several pages, was to begin with the available convergent validity evidence, that is, factor-analytic or correlational studies. Given that the available associations were incomplete, they then proceeded to content validity evidence, examining not just the definitions but also the survey items for consistency with the target constructs. Finally, for more contentious decisions, they drew on 14 raters to gather further evidence regarding content validity.

As can be seen, demonstrating that different measures tap into the same construct can be laborious, and preferably future meta-analyses should be able to draw on previously established ontologies or taxonomic structures. As mentioned, there are some sources to rely on, such as Richard et al.’s (2009) work on organizational performance, Versteeg and Ginsburg’s (2017) assessment of rule of law indices, or Stanek and Ones’ (2018) taxonomy of personality and cognitive ability. Unfortunately, this work is still insufficient for many meta-analyses, and such a void is proving a major obstacle to the advancement of science. The multiplicity of overlapping terms and measures creates a knowledge management problem that is increasingly intractable for the individual researcher to solve. Larsen, Hekler, Paul and Gibson (2020) argued that a solution is manageable, but we need a sustained “collaborative research program between information systems, information science, and computer science researchers and social and behavioral science researchers to develop information system artifacts to address the problem” (Larsen et al., 2020: 1). Once we have an organized system of knowledge, they concluded: “it would enable scholars to more easily conduct (possibly in a fully automated manner) literature reviews, meta-analyses, and syntheses across studies and scientific domains to advance our understanding about complex systems in the social and behavioral sciences” (Larsen et al., 2020: 9).

Stage 2: Data Preparation

Literature search, screening, and coding provide the sample of primary studies and the preliminary meta-analytic database. Next, there are three aspects of the data preparation stage that leave quite a bit of discretionary room for the researcher, thus requiring explicit discussion useful not only for meta-analysts but also for reviewers as well as research consumers. First, there is the treatment of multiple effect sizes reported in a given primary-level study. Second, there is the identification and treatment of outliers. Third, there is the issue of publication bias.

Treatment of multiple effect sizes

A single study may choose to measure a construct in a variety of ways, each producing its own effect size estimate. In other words, effect sizes are calculated using the same sample and reported separately for each measure. Separately counting each result violates the principle of statistical independence, as all are based on the same sample. Stahl et al. chose to average effect sizes within articles, which addresses this issue; however, more effective options are now available (López‐López, Page, Lipsey, & Higgins, 2018).

Typically, the goal is to focus on the key construct, and so Schmidt and Hunter (2015) recommended the calculation of composite scores, drawing on the correlations between the different measures. Unless the measures are unrelated (which suggests that they assess different constructs and therefore should not be grouped), the resulting composite score will have better coverage of the underlying construct as well as higher reliability. Other techniques include the Robust Variance Estimation (RVE) approach (Tanner-Smith & Tipton, 2014), which considers the dependencies (i.e., covariation) between correlated effect sizes (i.e., from the same sample). Another option is adopting a multilevel meta-analytic approach, where Level 1 includes the effect sizes, Level 2 is the within-study variation, and Level 3 is the between-study variation (Pastor & Lazowski, 2018; Weisz et al., 2017). A potential practical limitation is that these alternatives to composite scores pose large data demands, as they typically require 40–80 studies per analysis to provide acceptable estimates (Viechtbauer, López-López, Sánchez-Meca, & Marín-Martínez, 2015).
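
The difference between averaging effect sizes and forming a composite can be sketched with the standard formula for the correlation between a variable x and the unit-weighted sum of k standardized measures, r = Σ r_xy / √(k + 2 Σ r_yy), where the second sum runs over the distinct pairs of measures. The code below is a minimal illustration under that assumption; the function name and the numbers are hypothetical, and the sketch does not address the reliability of the composite.

```python
import math


def composite_r(r_xy, r_yy_pairs):
    """Correlation of x with the unit-weighted sum of k standardized measures.

    r_xy       : correlations of x with each of the k measures
    r_yy_pairs : intercorrelations among the measures, one per distinct pair
    """
    k = len(r_xy)
    if len(r_yy_pairs) != k * (k - 1) // 2:
        raise ValueError("expected one intercorrelation per pair of measures")
    # Variance of a sum of k standardized variables: k plus twice the covariances.
    var_sum = k + 2 * sum(r_yy_pairs)
    return sum(r_xy) / math.sqrt(var_sum)


if __name__ == "__main__":
    r_xy = [0.30, 0.20]          # hypothetical: two measures of one outcome
    simple_mean = sum(r_xy) / len(r_xy)
    composite = composite_r(r_xy, [0.50])
    print(round(simple_mean, 3), round(composite, 3))
```

When the measures are perfectly intercorrelated the composite equals the simple average; as the intercorrelation drops, the composite correlation exceeds the average, which is why averaging within a study tends to understate the construct-level relationship.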

Outlier identification and management

Although rarely carried out (Aguinis, Dalton, et al., 2011), outlier analysis is strongly recommended for meta-analysis. Some choices include doing nothing, reducing the weight given to the outlier, or eliminating the outlier altogether (Tabachnik & Fidell, 2014). However, whatever the choice, it should be transparent, with the option of reporting results both with and without outliers. To detect outliers, the statistical package metafor provides a variety of influential case diagnostics, ranging from externally standardized residuals to leave-one-out estimates (Viechtbauer, 2010). There are multiple outliers in Stahl et al.’s dataset, such as Polzer, Crisp, Jarvenpaa and Kim (2006) for Relationship Conflict, Maznevski (1995) for Process Conflict, and Gibson and Gibbs (2006) for Communication. In particular, Cady and Valentine (1999), which is the largest study for the outcome measure of Creativity and reports the sole negative correlation of − 0.14, almost triples the residual heterogeneity (Tau2), increasing it from 0.025 to 0.065. As is the nature of outliers, and as will be shown later, their undue influence can substantially tilt results by their inclusion or exclusion.
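As an illustration of a leave-one-out diagnostic, the sketch below re-estimates the between-study variance with each study removed in turn. It is a simplified stand-in, not the metafor implementation: it swaps in the closed-form DerSimonian-Laird estimator rather than restricted maximum likelihood, and the correlations and sample sizes are hypothetical values that merely mimic a large study with a lone negative correlation.

```python
def dl_tau2(effects, variances):
    """DerSimonian-Laird (method-of-moments) estimate of tau^2."""
    w = [1.0 / v for v in variances]
    sw = sum(w)
    mean = sum(wi * y for wi, y in zip(w, effects)) / sw
    q = sum(wi * (y - mean) ** 2 for wi, y in zip(w, effects))
    c = sw - sum(wi ** 2 for wi in w) / sw
    return max(0.0, (q - (len(effects) - 1)) / c)

def leave_one_out_tau2(effects, variances):
    """tau^2 re-estimated with each study omitted in turn."""
    results = []
    for i in range(len(effects)):
        kept_effects = effects[:i] + effects[i + 1:]
        kept_variances = variances[:i] + variances[i + 1:]
        results.append(dl_tau2(kept_effects, kept_variances))
    return results

# Hypothetical correlations and sample sizes; the last study is the outlier
rs = [0.30, 0.35, 0.25, 0.40, -0.14]
ns = [60, 80, 50, 70, 200]
# Large-sample sampling variance of a correlation: (1 - r^2)^2 / (n - 1)
vs = [(1 - r ** 2) ** 2 / (n - 1) for r, n in zip(rs, ns)]

full_tau2 = dl_tau2(rs, vs)
loo = leave_one_out_tau2(rs, vs)
print(f"tau^2 with all studies: {full_tau2:.4f}")
for r, t in zip(rs, loo):
    print(f"  omitting r = {r:+.2f}: tau^2 = {t:.4f}")
```

A study whose removal sharply reduces the estimated heterogeneity is a candidate for the closer inspection described in this section, with results reported both with and without it.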

Like the Black Swan effect, an outlier may be a legitimate effect size drawn by chance from the ends of a distribution, which would relinquish its outlier status as more effects reduce or balance its impact. Aguinis, Gottfredson and Joo (2013) offered a decision tree involving a sequence of steps to first identify outliers (i.e., whether a particular observation is far from the rest) and then decide whether specific outliers are errors, interesting, or influential. Based on the answer, a researcher can decide to eliminate it (i.e., if it is an error), retain it as is or decrease its influence, and then, regardless of the choices, it is recommended to report results with and without the outliers. Stahl et al. retained outliers, which is certainly preferable to using arbitrary cutoffs such as two standard deviations below or above the mean to omit observations from the analysis (a regrettable practice that artificially creates homogeneity; Aguinis et al., 2013). However, we do not have information on whether these outliers could have been errors.

Publication bias

Publication bias refers to a focus on statistically significant or strong effect sizes rather than a representative sample of results. This can happen for a wide variety of reasons, including underpowered studies and questionable research practices such as p-hacking (Meyer, van Witteloostuijn & Beugelsdijk, 2017; Munafò et al., 2017), and it occurs frequently in a variety of fields (Ferguson & Brannick, 2012; Ioannidis, Munafò, Fusar-Poli, Nosek, & David, 2014), although not all (Dalton, Aguinis, Dalton, Bosco, & Pierce, 2012). When it does occur, it has the potential to severely distort findings (Friese & Frankenbach, 2020). It is notable that Stahl et al. tested for publication bias, while only 3–30% of meta-analyses include this step (Aguinis, Dalton, et al., 2011; Kepes, Banks, McDaniel, & Whetzel, 2012). To test for publication bias, Stahl et al. used the fail-safe N, devised by Rosenthal (1979) for experimental research. Although Rosenthal focused on the common “file drawer” problem, his statistic is more of a general indicator of the stability of meta-analytic results (Carson, Schriesheim, & Kinicki, 1990; Dalton et al., 2012). In particular, the fail-safe N estimates the number of null studies that would be needed to change the average effect size of a group of studies to a specified statistical significance level, especially non-significance (e.g., p > .05).
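For readers unfamiliar with the statistic, the following sketch shows Rosenthal's fail-safe N computed from study-level z scores: the squared sum of the z scores divided by the squared critical value, minus the number of studies. The function name and the z scores are hypothetical; the one-tailed critical value of 1.645 is the conventional choice and is stated here as an assumption.

```python
def failsafe_n(z_scores, z_crit=1.645):
    """Rosenthal's fail-safe N.

    Number of additional studies averaging z = 0 needed to pull the
    combined (Stouffer) z down to z_crit (assumed one-tailed p = .05).
    """
    k = len(z_scores)
    return sum(z_scores) ** 2 / z_crit ** 2 - k

# Hypothetical z scores from five primary studies
significant_set = [2.0, 2.5, 1.8, 3.0, 2.2]
null_set = [0.5, -0.3, 0.2]

n_significant = failsafe_n(significant_set)
n_null = failsafe_n(null_set)
print(f"fail-safe N, significant literature: {n_significant:.1f}")
print(f"fail-safe N, null literature:        {n_null:.1f}")
```

When the combined result is already non-significant, the formula returns a negative number, so the statistic has no meaningful interpretation in that case.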

While at one time the fail-safe N was a recommended component of a state-of-the-science meta-analysis, this time has now passed. It has a variety of problems. For example, if the published literature indicates a lack of relationship, that is the null itself, the equation becomes unworkable. Indeed, Stahl et al. were unable to give a fail-safe N precisely for the variables which were not significant in the first place. Consequently, for decades, researchers have recommended its disuse (Begg, 1994; Johnson & Hennessy, 2019; Scargle, 2000). Sutton (2009: 442) described it as “nothing more than a crude guide”, and Becker (2005: 111) recommended that “the fail-safe N should be abandoned in favor of other more informative analyses.” At the very least, the fail-safe N should be supplemented.

Although there are no perfect methods to detect or correct for publication bias, there are a wide variety of better options (Kepes et al., 2012). We can use selection-based methods and compare study sources, typically published versus unpublished, with the expectation that there should be little difference between the two (Dalton et al., 2012). Also, there are a variety of symmetry-based methods, essentially where the expectation is that sample sizes or standard errors should be unrelated to effect sizes. One of the most popular of these symmetry techniques is Egger’s regression test, which we applied to Stahl et al. Confirming Stahl et al.’s findings, there was no detectable publication bias.
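The logic of Egger's regression test can be sketched as follows: each effect is divided by its standard error, the result is regressed on precision (the inverse of the standard error), and an intercept that departs from zero signals funnel-plot asymmetry. This is a bare-bones ordinary least squares version written for illustration; the function name and both data sets are hypothetical, and the t statistic would be referred to a t distribution with k − 2 degrees of freedom.

```python
import math

def egger_test(effects, std_errors):
    """Return (intercept, t statistic) of Egger's regression."""
    x = [1.0 / se for se in std_errors]                 # precision
    y = [e / se for e, se in zip(effects, std_errors)]  # standardized effect
    k = len(x)
    mean_x, mean_y = sum(x) / k, sum(y) / k
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    rss = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
    residual_variance = rss / (k - 2)
    se_intercept = math.sqrt(residual_variance * (1.0 / k + mean_x ** 2 / sxx))
    return intercept, intercept / se_intercept

std_errors = [0.05, 0.05, 0.10, 0.10, 0.15, 0.15]
# Hypothetical symmetric literature: effects scatter evenly around 0.20
symmetric = [0.22, 0.18, 0.25, 0.15, 0.21, 0.19]
# Hypothetical asymmetric literature: less precise studies report larger effects
asymmetric = [0.22, 0.18, 0.40, 0.30, 0.56, 0.44]

b0_sym, t_sym = egger_test(symmetric, std_errors)
b0_asym, t_asym = egger_test(asymmetric, std_errors)
print(f"symmetric:  intercept = {b0_sym:.3f}, t = {t_sym:.2f}")
print(f"asymmetric: intercept = {b0_asym:.3f}, t = {t_asym:.2f}")
```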

Henmi and Copas (2010) developed a simple method for reducing the effect of publication bias, which uses fixed-effect model weighting to reduce the impact of errant heterogeneity. Alternatively, the classic Trim-and-Fill technique (Duval, 2005) can also be employed, which will impute the “missing” correlations. For a more sophisticated option, there is the precision-effect test and a precision-effect estimate with standard errors (PET-PEESE), which can detect as well as correct for publication bias (see Stanley and Doucouliagos 2014 for illustrative examples and code for Stata and SPSS). Stanley (2017) identified when PET-PEESE becomes unreliable, typically when there are few studies, excessive heterogeneity, or small sample sizes, which are often the same conditions that weaken the effectiveness of meta-analytic techniques in general.
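A minimal sketch of the two PET-PEESE regressions follows. Both are weighted least squares fits with inverse-variance weights: PET regresses effect sizes on their standard errors, PEESE regresses them on the squared standard errors, and in each case the intercept is read as the estimated effect of a hypothetical study with no sampling error. The helper names and data are assumptions made for illustration, and the significance test that normally decides between the two intercepts is omitted.

```python
def wls_line(y, x, w):
    """Weighted least squares fit of y = intercept + slope * x."""
    sw = sum(w)
    mean_x = sum(wi * xi for wi, xi in zip(w, x)) / sw
    mean_y = sum(wi * yi for wi, yi in zip(w, y)) / sw
    sxx = sum(wi * (xi - mean_x) ** 2 for wi, xi in zip(w, x))
    sxy = sum(wi * (xi - mean_x) * (yi - mean_y) for wi, xi, yi in zip(w, x, y))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope

def pet(effects, std_errors):
    """Precision-effect test: predictor is the standard error."""
    weights = [1.0 / se ** 2 for se in std_errors]
    return wls_line(effects, std_errors, weights)

def peese(effects, std_errors):
    """Precision-effect estimate with standard error: predictor is SE squared."""
    weights = [1.0 / se ** 2 for se in std_errors]
    return wls_line(effects, [se ** 2 for se in std_errors], weights)

# Hypothetical literature in which effects grow with the standard error
std_errors = [0.05, 0.08, 0.10, 0.12, 0.15]
effects = [0.05 + 3.0 * se for se in std_errors]

pet_intercept, pet_slope = pet(effects, std_errors)
peese_intercept, peese_slope = peese(effects, std_errors)
naive_mean = sum(effects) / len(effects)
print(f"unadjusted mean effect: {naive_mean:.3f}")
print(f"PET intercept:          {pet_intercept:.3f}")
print(f"PEESE intercept:        {peese_intercept:.3f}")
```

In this constructed example both adjusted estimates fall below the unadjusted mean, because the small-study effects are attributed to selective reporting rather than to the underlying relationship.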

Stage 3: Data Analysis

Meta-analyses are overwhelmingly used to understand what is the overall (i.e., average) size of the relationship between variables across primary-level studies (DeSimone et al., 2019; Carlson & Ji, 2011). However, meta-analysis is just as useful, if not more so, to understand when and where a relationship is likely to be stronger or weaker (Aguinis, Pierce, et al., 2011). Consequently, we discuss the three basic elements of the data analysis stage – average effect sizes, heterogeneity, and moderators – and we emphasize theory implications.

Reflecting that many meta-analytic methodologies were under debate at that time, Stahl et al. used a combination of techniques, including psychometric meta-analysis, both a fixed-effect and random-effect approach, as well as converting correlations to Fisher’s z after psychometric adjustments. The motivation for this blend of techniques is clear: each has its advantages (Wiernik & Dahlke, 2020). However, procedures have been refined and, consequently, we contrast Stahl et al.’s results with a modern technique that better accomplishes their aim: Morris estimators (Brannick, Potter, Benitez, & Morris, 2019).

Average effect sizes

During the early years of meta-analysis, the main question of interest was: “Is there a consistent relationship between two variables when examined across a number of primary-level studies that seemingly report contradictory results?” As Gonzalez-Mulé and Aguinis (2018) reviewed, for many meta-analyses, this is all they provided. Showing association and connection represents the initial stages of theory testing, and most meta-analyses have some hypotheses attached to these estimates. Given that this is the lower-hanging empirical and theoretical fruit, much of it has already been plucked and, today, is unlikely by itself to satisfy demands for a novel contribution. An improved test of theory at this stage is not just positing that a relationship exists, and that it is unlikely to be zero, but how big it is (Meehl, 1990); in other words, “Instead of treating meta-analytic results similarly to NHST (i.e., limiting the focus to the presence or absence of an overall relationship), reference and interpret the MAES (meta-analytic effect sizes) alongside any relevant qualifying information” (DeSimone et al., 2019: 884). To this end, researchers have typically drawn on Cohen (1962), who made very rough benchmark estimates based on his review of articles published in the 1960 volume of Journal of Abnormal and Social Psychology. Contemporary effect-size estimates have been compiled by Bosco, Aguinis, Singh, Field and Pierce (2015), who drew on 147,328 correlations reported in 1660 articles, and by Paterson, Harms, Steel and Credé (2016), who summarized results from more than 250 meta-analyses. Both ascertained that Cohen’s categorizations of small, medium, and large effects do not accurately reflect today’s research in management and related fields.
Averaging Bosco et al.’s Table 2 and Paterson et al.’s Table 3, a better generic distribution remains, as per Cohen, 0.10 for small (i.e., 25th percentile), but shifts to 0.18 for medium (i.e., 50th percentile) and 0.32 for large (i.e., 75th percentile). Using these distributions of effect sizes, or those compiled from other analogous meta-analyses, meta-analysts can go beyond the simple conclusion that a relationship is different from zero and, instead, critically evaluate the size of the effect within the context of a specific domain.

Stahl et al. adopted a hybrid approach to calculate average effect sizes. Initially, they reported estimates using Schmidt and Hunter’s (2015) psychometric meta-analysis, correcting for dichotomization (i.e., uneven splits) and attenuation due to measurement error. They then departed from Schmidt’s and Hunter’s approach by transforming correlations to Fisher’s zs (Borenstein, Hedges, Higgins, & Rothstein, 2009) and weighting by N − 3, the inverse of sampling error after Fisher’s transformation. As Stahl et al. clearly acknowledged, this is a fixed-effects approach that assumes the existence of a single population effect. In contrast, a random-effects model assumes that there are multiple population effects that motivate the search for moderators (i.e., factors that account for substantive variability of observed effects).
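The fixed-effect pooling step described above can be sketched in a few lines: correlations are transformed to Fisher's z, averaged with weights of N − 3, and the result is transformed back to the correlation metric. This is an illustrative reconstruction under stated assumptions, not Stahl et al.'s actual script; it omits the psychometric corrections, and the correlations and sample sizes are hypothetical.

```python
import math

def pooled_r_fixed(correlations, sample_sizes, z_crit=1.96):
    """Fixed-effect mean correlation via Fisher's z with N - 3 weights.

    Returns the pooled r and a 95% confidence interval (assumed z_crit).
    """
    zs = [math.atanh(r) for r in correlations]   # Fisher's z transformation
    ws = [n - 3 for n in sample_sizes]           # inverse of var(z) = 1 / (n - 3)
    mean_z = sum(w * z for w, z in zip(ws, zs)) / sum(ws)
    se = 1.0 / math.sqrt(sum(ws))
    lower = math.tanh(mean_z - z_crit * se)
    upper = math.tanh(mean_z + z_crit * se)
    return math.tanh(mean_z), (lower, upper)

# Hypothetical primary studies
rs = [0.10, 0.25, 0.30]
ns = [50, 100, 200]
pooled, (ci_low, ci_high) = pooled_r_fixed(rs, ns)
print(f"pooled r = {pooled:.3f}, 95% CI [{ci_low:.3f}, {ci_high:.3f}]")
```

Because the standard error contains no between-study variance component, the interval is narrower than a random-effects interval would be whenever true effects vary across studies.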

Where does this leave Stahl et al., who corrected for attenuation but used a Fisher’s z transformation with an underlying fixed-effect approach? If correlations are between ± 0.30, Fisher’s z transformed versus untransformed correlations are almost identical. For Stahl et al.’s data, 81% of their effect sizes fell within this special case of near equivalence, making the matter almost moot. Similarly, Schmidt and Hunter (2015) used an attenuation factor, which can change weights drastically, but here the average absolute difference between raw and corrected correlation is less than 0.02, minimizing this concern. Consequently, although we do not recommend Stahl et al.’s fixed-effects approach, results should be close to equivalent to other methods, as noted by Aguinis, Gottfredson and Wright (2011).
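The near equivalence of raw and Fisher-transformed correlations at modest magnitudes is easy to verify numerically. The short check below, with an illustrative helper name, prints the gap between z and r at three values.

```python
import math

def fisher_z_gap(r):
    """Difference between Fisher's z and the untransformed correlation."""
    return math.atanh(r) - r

for r in (0.10, 0.30, 0.60):
    print(f"r = {r:.2f}  z = {math.atanh(r):.4f}  gap = {fisher_z_gap(r):.4f}")
```

The gap stays below 0.01 up to r = 0.30 but grows to roughly 0.09 at r = 0.60, which is why the transformation matters little for a data set dominated by small correlations.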

As mentioned earlier, we re-analyzed Stahl et al.’s data using Morris weights. To calculate variance of effect sizes across primary-level studies, we used N − 1 in the formula rather than N, as the effect sizes are estimates and not population values. To calculate residual heterogeneity (i.e., whether variation of effect sizes is due to substantive rather than artifactual reasons), Morris estimators rely on restricted maximum likelihood. We conducted all analyses using the metafor (2.0-0) statistical package (Viechtbauer, 2010) in R (version 3.5.3). We found that the average effect size for creativity, for example, increased from Stahl et al.’s 0.16 to 0.18, although it was non-significant (p = .20). Moreover, using the random-effects model, which increased the size of confidence intervals due to the inclusion of the random-effects variance component (REVC), none of the effects were significant, with a caveat due to the consideration of outliers. If we exclude Cady and Valentine (1999), the effect size of creativity increases to 0.29 and becomes significant (p = 0.02). In sum, Stahl et al. provided an excellent example that methodological choices, here regarding outliers and the model, are influential enough that a meta-analysis’ major conclusions can hinge upon them.

Stahl et al. presented a single column of effect sizes, which is now insufficient for modern meta-analyses. What is preferred is a grid of them. For example, meta-analytic structural equation modeling (MASEM) is based on expanding the scope of a meta-analysis from bivariate correlations to creating a full meta-analytic correlation matrix (Bergh et al., 2016; Cheung, 2018; Oh, 2020). Given that this allows for additional theory testing options enabled by standard structural equation modeling, the publication of a meta-analysis can pivot on its use of MASEM. Options range from factor analysis to path analysis, such as determining the total variance provided by predictors or if a predictor is particularly important (e.g., dominance or relative weights analysis). It also allows for mediation tests, that is, the “how” of theory or “reasons for connections.” It is even possible to use MASEM to test for interaction effects. Traditionally, the correlation between the interaction term and other variables is not reported and often must be requested directly from the original authors. Doing so is a high-risk endeavor given researchers’ traditionally low response rate (Aguinis, Beaty, Boik, & Pierce, 2005; Polanin et al., 2020a), but the rise of Open Science and the concomitant Individual Participant Data (IPD) means that this information is increasingly available. Amalgamating IPD across multiple studies is usually referred to as a mega-analysis, and, as suggested here, can be used to supplement a standard meta-analysis (Boedhoe et al., 2019; Kaufmann, Reips, & Merki, 2016).

Reviewers will note that, as researchers move from simply an average of bivariate relationships towards MASEM, they can encounter incomplete and nonsensical matrices. For incomplete matrices, Landis (2013) and Bergh et al. (2016) provided sensible recommendations for filling blank cells in a matrix, such as drawing on previously published meta-analytic values or expanding the meta-analysis to target missing correlations. Nonsensical matrices (that occur increasingly as correlation matrices expand) create a non-positive definite “Frankenstein” matrix, stitched together from incompatible moderator patches. Landis (2013), as well as Sheng, Kong, Cortina and Hou (2016), provided remedies, such as excluding problematic cells or collapsing highly correlated variables into factors to avoid multicollinearity. In addition, we can employ more advanced methods that incorporate random effects and dovetail meta-regression with MASEM (e.g., Jak & Cheung, 2020). The benefit is a mature science that can adjust a matrix so that the resulting regression equations represent specific contexts. For example, synthetic validity is a MASEM application in which validity coefficients are predicted based on a meta-regression of job characteristics, meaning that we can create customized personnel selection platforms that are orders of magnitude less costly, faster, and more accurate (Steel, Johnson, Jeanneret, Scherbaum, Hoffman, & Foster, 2010).
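Whether a pooled correlation matrix is usable for MASEM can be screened before any model is fitted. One simple check, sketched below, attempts a Cholesky factorization, which succeeds only for positive definite matrices. The function name and the two example matrices are hypothetical; the second is deliberately built from mutually incompatible correlations of the kind that can arise when cells come from different sets of studies.

```python
import math

def is_positive_definite(matrix):
    """Return True if a symmetric matrix admits a Cholesky factorization."""
    n = len(matrix)
    lower = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            partial = sum(lower[i][k] * lower[j][k] for k in range(j))
            if i == j:
                pivot = matrix[i][i] - partial
                if pivot <= 0.0:
                    return False      # factorization fails: not positive definite
                lower[i][j] = math.sqrt(pivot)
            else:
                lower[i][j] = (matrix[i][j] - partial) / lower[j][j]
    return True

# Hypothetical pooled matrices for three variables
coherent = [
    [1.0, 0.5, 0.4],
    [0.5, 1.0, 0.3],
    [0.4, 0.3, 1.0],
]
# A and B correlate .90, A and C correlate .90, yet B and C correlate -.90
incoherent = [
    [1.0, 0.9, 0.9],
    [0.9, 1.0, -0.9],
    [0.9, -0.9, 1.0],
]

print("coherent matrix usable:  ", is_positive_definite(coherent))
print("incoherent matrix usable:", is_positive_definite(incoherent))
```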

Heterogeneity of effect sizes

A supplement to our previous discussion of average effect sizes is the degree of dispersion around the average effect. As noted by Borenstein et al. (2009), “the goal of a meta-analysis should be to synthesize the effect sizes, and not simply (or necessarily) to report a summary effect. If the effects are consistent, then the analysis shows that the effect is robust across the range of included studies. If there is modest dispersion, then this dispersion should serve to place the mean effect in context. If there is substantial dispersion, then the focus should shift from the summary effect to the dispersion itself. Researchers who report a summary effect are indeed missing the point of the synthesis” (Borenstein et al., 2009: 378).

Stahl et al. examined whether the homogeneity Q statistic was significant, meaning that sufficient variability of effects around the mean exists, as a precursor to moderator examination. A modern meta-analysis should complement the Q statistic with other ways of assessing heterogeneity, because Q often leads to Type II errors (i.e., incorrect conclusions that heterogeneity is not present; Gonzalez-Mulé & Aguinis, 2018), especially when there is publication bias (Augusteijn, van Aert, & van Assen, 2019). Further reporting of heterogeneity by Stahl et al. is somewhat unclear. They provided in Table 2 “Variance explained by S.E. (%)” and “Range of effect sizes,” which were not otherwise explained. This oversight is, as Gonzalez-Mulé and Aguinis (2018) documented, regrettably common. In fact, they found that 16% of meta-analyses from major management journals fail to report heterogeneity at all. Stahl et al. reported the range of effect sizes for creativity was − .14 to .48. However, the actual credibility interval, after removing the outlier, was .03 to .55, indicating that the result typically generalizes and can be strong. As per Gonzalez-Mulé and Aguinis, we recommend providing at a minimum: credibility intervals, \(T^{2}\) (i.e., SDr or the REVC), and \(I^{2}\) (i.e., percentage of total variance attributable to \(T^{2}\)). The ability to further assess heterogeneity is facilitated by recent methodological advances, such as the use of a Bayesian approach that corrects for artificial homogeneity created by small samples (Steel, Kammeyer-Mueller, & Paterson, 2015), and by the use of asymmetric distributions in cases of skewed credibility intervals (Baker & Jackson, 2016; Jackson, Turner, Rhodes, & Viechtbauer, 2014; Possolo, Merkatas, & Bodnar, 2019).
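The recommended heterogeneity statistics can be computed together, as in the following sketch. It makes several simplifying assumptions that should be read as such: the between-study variance is estimated with the DerSimonian-Laird method rather than restricted maximum likelihood, the credibility interval is set at 80% (a common but not universal convention), and the effect sizes and sampling variances are hypothetical.

```python
import math

def heterogeneity_summary(effects, variances, cred_z=1.2816):
    """Q, T^2, I^2, random-effects mean, and a credibility interval.

    cred_z = 1.2816 gives an 80% credibility interval (assumed convention).
    """
    k = len(effects)
    w = [1.0 / v for v in variances]
    sw = sum(w)
    fixed_mean = sum(wi * y for wi, y in zip(w, effects)) / sw
    q = sum(wi * (y - fixed_mean) ** 2 for wi, y in zip(w, effects))
    df = k - 1
    c = sw - sum(wi ** 2 for wi in w) / sw
    t2 = max(0.0, (q - df) / c)                      # between-study variance
    i2 = 100.0 * (q - df) / q if q > df else 0.0     # % of variance beyond sampling error
    w_re = [1.0 / (v + t2) for v in variances]
    re_mean = sum(wi * y for wi, y in zip(w_re, effects)) / sum(w_re)
    half_width = cred_z * math.sqrt(t2)
    return {
        "Q": q,
        "T2": t2,
        "I2": i2,
        "mean": re_mean,
        "credibility_interval": (re_mean - half_width, re_mean + half_width),
    }

# Hypothetical effect sizes and sampling variances
effects = [0.05, 0.48, 0.22, 0.35, 0.10]
variances = [0.010, 0.012, 0.008, 0.015, 0.009]
summary = heterogeneity_summary(effects, variances)
for name, value in summary.items():
    print(name, value)
```

Unlike a confidence interval, the credibility interval describes the spread of true effects, so it does not shrink toward the mean as more studies are added.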

Moderator search

Moderating effects, which account for substantive heterogeneity, can be organized around Cattell’s Data Cube or the Data Box (Revelle & Wilt, 2019): (1) sample (e.g., firm or people characteristics), (2) variables (e.g., measurements), and (3) occasions (e.g., administration or setting). Typical moderator variables include country (e.g., developing vs developed), time period (e.g., decade) and published vs unpublished status (where comparison between the two can indicate the presence of publication bias). Particularly important from an IB perspective is the language and culture of survey administration, which has been shown to influence response styles (Harzing, 2006; Smith & Fischer, 2008) and response rates (Lyness & Brumit Kropf, 2007). Theory is often addressed as part of the moderator search, as per Cortina’s (2016) review, “a theory is a set of clearly identified variables and their connections, the reasons for those connections, and the primary boundary conditions for those connections” (Cortina, 2016: 1142). Moderator search usually establishes the last of these – boundary conditions – although not exclusively. For example, Bowen, Rostami and Steel (2010) used the temporal sequence as a moderator to clarify the causal relationship between innovation and firm performance. Of note, untheorized moderators (e.g., control variables) are still a staple of meta-analyses but should be clearly delineated as robustness tests or sensitivity analyses (Bernerth & Aguinis, 2016).

After establishing average effect sizes (i.e., connections), Stahl et al. grappled deeply with the type of diversity, a boundary condition inquiry that determines how specific contexts affect these connections or effect sizes. Stahl et al. differentiated between the role of surface level (e.g., racio-ethnicity) vs deep level (e.g., cultural values) diversity and noted trade-offs. They expected diversity to be associated with higher levels of creativity, but at the potential cost of lower satisfaction and greater conflict, negative outcomes that likely diminish as team tenure increases. Note how well these moderators match up to core theoretical elements. Page’s (2008) book on diversity, The Difference, covers in detail the four conditions that lead to diversity creating superior performance. This includes that the task should be difficult enough that it needs more than a single brilliant problem solver (i.e., task complexity), that those in the group should have skills relevant to the problem (i.e., type of diversity), that there is synergy and sharing among the group members (i.e., team dispersion), and that the group should be large and genuinely diverse (i.e., team size). A clear connection between theory, data, and analysis is a hallmark of a great paper, reflected in that the more a meta-analysis attempts to test an existing theory, the larger the number of citations it receives (Aguinis, Dalton, et al., 2011).

However, the techniques that Stahl et al. used to assess moderators have evolved considerably. Stahl et al. used subgrouping methodology, which comes in two different forms: comparison of mean effect sizes and analysis of variance (Borenstein et al., 2009). The use of such a subgrouping approach has come into debate. To begin with, subgrouping should be reserved for categorical variables as otherwise it requires dichotomizing continuous moderators, usually using a median split, which reduces statistical power (Cohen, 1983; Steel & Kammeyer-Mueller, 2002). Also, it appears that Stahl et al. used a fixed-effects model although meta-analytic comparisons are typically based on a random-effects model (Aguinis, Sturman, & Pierce, 2008), with some exceptions, such as when the subgroups are considered exhaustive (e.g., before and after a publication year; Borenstein & Higgins, 2013) or when the research question focuses on dependent correlates differing within the same situation (Cheung & Chan, 2004). Furthermore, standard Wald-type comparisons result in massive increases in Type I errors (Gonzalez-Mulé & Aguinis, 2018), and, although useful to contrast two sets of correlations to determine whether they differ, they have limited application in determining moderators’ explanatory power (Lubinski & Humphreys, 1996). A superior alternative to subgrouping is meta-regression analysis or MARA (Aguinis, Gottfredson, & Wright, 2011; Gonzalez-Mulé & Aguinis, 2018; Viechtbauer et al., 2015). Essentially, MARA is a regression model in which effect sizes are the dependent variable and the moderators are the predictors (e.g., Steel & Kammeyer-Mueller, 2002). MARA tests whether the size of the effects can be predicted by fluctuations in the values of the hypothesized moderators, which therefore are conceptualized as boundary conditions for the size of the effect. If there are enough studies, MARA enables simultaneous testing of several moderators.
Evaluating the weighting options for the predictors, Viechtbauer et al. settled on the Hartung–Knapp method as the best alternative. Other recommendations for MARA are given by Gonzalez-Mulé and Aguinis (2018), such as making the sensible observation that we should use \(R^{2}_{\text{Meta}}\), which adjusts \(R^{2}\) to reflect \(I^{2}\), the known variance after excluding sampling error. Gonzalez-Mulé and Aguinis (2018) also included the R code to conduct all analyses as well as an illustrative study. Some analysis programs, such as metafor, provide \(R^{2}_{\text{Meta}}\) by default.
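A stripped-down meta-regression with a single continuous moderator can be sketched as follows. The sketch rests on several assumptions: residual heterogeneity is estimated with a method-of-moments estimator instead of restricted maximum likelihood, the Hartung–Knapp adjustment is not implemented, and the explained-heterogeneity statistic is computed as the proportional reduction in between-study variance once the moderator is entered, which may differ in detail from the formula given by Gonzalez-Mulé and Aguinis (2018). All names and data are hypothetical.

```python
def wls_line(y, x, w):
    """Weighted least squares fit of y = intercept + slope * x."""
    sw = sum(w)
    mean_x = sum(wi * xi for wi, xi in zip(w, x)) / sw
    mean_y = sum(wi * yi for wi, yi in zip(w, y)) / sw
    sxx = sum(wi * (xi - mean_x) ** 2 for wi, xi in zip(w, x))
    sxy = sum(wi * (xi - mean_x) * (yi - mean_y) for wi, xi, yi in zip(w, x, y))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope

def total_tau2(y, v):
    """Between-study variance with no moderators (DerSimonian-Laird)."""
    w = [1.0 / vi for vi in v]
    sw = sum(w)
    mean = sum(wi * yi for wi, yi in zip(w, y)) / sw
    q = sum(wi * (yi - mean) ** 2 for wi, yi in zip(w, y))
    return max(0.0, (q - (len(y) - 1)) / (sw - sum(wi ** 2 for wi in w) / sw))

def residual_tau2(y, x, v):
    """Method-of-moments residual variance after one moderator."""
    w = [1.0 / vi for vi in v]
    a, b = wls_line(y, x, w)
    q_e = sum(wi * (yi - a - b * xi) ** 2 for wi, xi, yi in zip(w, x, y))
    s0, s1 = sum(w), sum(wi * xi for wi, xi in zip(w, x))
    s2 = sum(wi * xi * xi for wi, xi in zip(w, x))
    t0, t1 = sum(wi ** 2 for wi in w), sum(wi ** 2 * xi for wi, xi in zip(w, x))
    t2 = sum(wi ** 2 * xi * xi for wi, xi in zip(w, x))
    # trace of (X'WX)^-1 X'W^2 X for the two-column design [1, x]
    trace = (s2 * t0 - 2.0 * s1 * t1 + s0 * t2) / (s0 * s2 - s1 ** 2)
    return max(0.0, (q_e - (len(y) - 2)) / (s0 - trace))

def meta_regression(y, x, v):
    tau2_null, tau2_mod = total_tau2(y, v), residual_tau2(y, x, v)
    intercept, slope = wls_line(y, x, [1.0 / (vi + tau2_mod) for vi in v])
    r2 = 0.0 if tau2_null == 0.0 else max(0.0, (tau2_null - tau2_mod) / tau2_null)
    return {"intercept": intercept, "slope": slope,
            "tau2_null": tau2_null, "tau2_residual": tau2_mod, "r2": r2}

# Hypothetical data: effect sizes decline as the moderator (e.g., team tenure) rises
moderator = [1, 2, 3, 4, 5, 6]
effects = [0.35, 0.30, 0.22, 0.18, 0.10, 0.05]
variances = [0.004] * 6
fit = meta_regression(effects, moderator, variances)
print(fit)
```

Keeping the moderator continuous, rather than splitting it at the median, is what preserves the statistical power that subgrouping sacrifices.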

Stage 4: Reporting

A modern meta-analysis must be transparent and reproducible – meaning that all steps and procedures need to be described in such a way that a different team of researchers would obtain similar results with the same data. At present, this is among our greatest challenges. In psychology, half of 500 effect sizes sampled from 33 meta-analyses were not reproducible based on the available information (Maassen, van Assen, Nuijten, Olsson-Collentine, & Wicherts, 2020). Also, a modern meta-analysis not only provides a summary of past findings but also points towards the next steps. Consequently, it should consider future research directions, not just in terms of what studies should be conducted but when subsequent meta-analyses could be beneficial and what they should address.

Transparency and reproducibility

As Hohn, Slaney and Tafreshi (2020: 207) concluded: “It is vitally important that meta-analytic work be reproducible, transparent, and able to be subjected to rigorous scrutiny so as to ensure that the validity of conclusions of any given question may be corroborated when necessary.” Stahl et al. provided their database to assist with our review, allowing the assessment of reproducibility because both of our analyses relied on the same meta-analytic data (Jasny, Chin, Chong, & Vignieri, 2011). Such responsiveness is commendable but also highlights the problem of using researchers’ personal computers as archives. The data are often difficult to obtain, lost, or incomplete, and even authors of recent meta-analyses, who claim that the references or data are available upon request, and such availability is an explicit requirement for many journals, are sporadically responsive (Wood, Müller, & Brown, 2018). Hence the call for Open Science, Open Data, Open Access, and Open Archive, and the increasing number of journals that have adopted this standard of transparency (Aguinis, Banks, Rogelberg, & Cascio, 2020; Vicente-Sáez & Martínez-Fuentes, 2018). Along with the complete database, if the statistical process deviates from standard practice, ideally a copy of the analysis script should be made available in an Open Science archive. The advantages of such heightened transparency and reproducibility are several (Aguinis et al., 2018; Polanin, Hennessy, & Tsuji, 2020b), but it does introduce considerable challenges (Beugelsdijk et al., 2020).

To begin with, journal articles are an abridged version of the available data and the analysis process. By themselves, they can hide a multitude of virtues and vices. As per Stahl et al., we were unable to completely recreate some steps (though we did approximate them) as they were not sufficiently specified. Adopting an Open Science framework, choices can be examined and updated, improving the research quality, as it encourages increased vigilance by the source authors.

As Marshall and Wallace (2019: 1) concluded, “Clearly, existing processes are not sustainable: reviews of current evidence cannot be produced efficiently and, in any case, often go out of date quickly once they are published. The fundamental problem is that current EBM [evidence-based medicine] methods, while rigorous, simply do not scale to meet the demands imposed by the voluminous scale of the (unstructured) evidence base.” Although originating from the medical field, this critique equally applies to management and IB (Rousseau, 2020). Our traditional methods of reporting, which Stahl et al. adopted, consist of flagging the extracted studies with an asterisk in the reference section or making the data available upon request from the authors. This is at present insufficient. Science is a social endeavor, and we need to be able to build on past meta-analyses to enable future ones; by making meta-analyses reproducible, that is, by providing access to the coding database, we are also making the process cumulative (Polanin et al., 2020b). In fact, Open Science can be considered as a stepping stone towards living systematic reviews (LSRs; Elliot et al., 2017), essentially reviews that are continuously updated in real time. Having found traction in medicine, LSRs are based around critical topics that can enable broad collaborations (along with advances in technological innovations, such as online platforms and machine learning), although not without their own challenges (Millard, Synnot, Elliott, Green, McDonald, & Turner, 2019).

Such data sharing is not without its perils, exacerbating the moral hazards associated with a common pool resource, that is, the publication base (Alter & Gonzalez, 2018; Hess & Ostrom, 2003). Traditionally, in a meta-analysis, the information becomes “consumed” once published or “extracted” in a meta-analysis, and the research base needs time to “regenerate,” that is, to grow sufficiently that a new summary is justified. Since there is no definitive point when regeneration occurs, we encounter a tragedy of the commons, where one instrumental strategy is to rush marginal meta-analyses to the academic market, shopping them to multiple venues in search of acceptance (i.e., science’s first mover advantage; Newman 2009). Open Science is likely to exacerbate this practice, as the cost of updating meta-analyses would be substantially reduced and, as Beugelsdijk et al. (2020: 897) discussed, “There would be nothing to stop others from using the fruits of their labor to write a competing article”. For example, in the field of ecology, the authors of a meta-analysis on marine habitats admirably provided their complete database, which was rapidly re-analyzed by a subsequent group with a slightly different taxonomy (Kinlock et al., 2019). In a charitable reply, they viewed this as an endorsement of Open Science, concluding “Without transparent methods, explicitly defined models, and fully transparent data and code, this advancement in scientific knowledge would have been delayed if not unobtainable” (Kinlock et al., 2019: 1533). However, as they noted, it took a team of ten authors over two years to create the original database, and posting it allowed others to supersede them with relatively minimal effort. If the original authors adopt an Open Science philosophy for their meta-analytic database (which we strongly recommend), subsequent free-riding or predatory authors could take advantage and, by adding marginal updates, publish.
Reviewers should be sensitive to whether a new meta-analysis provides a substantive threshold of contribution, preferably with the involvement of the previous lead authors upon whose work they are building (especially if recent). To help guide decisions, we further address this issue in our subsequent section, “The next generation of meta-analyses.” In addition, journals can help to mitigate the moral hazard associated with meta-analysis’ common pool resource by allowing pre-registration and conditional pre-approval of large meta-analyses.

Future research directions

A good section on “future research directions,” based on a close study of the entire field’s findings, although perhaps sporadically used (Carlson & Ji, 2011), can be as invaluable as the core results themselves. This information allows meta-analysts to steer the field itself. We can expect meta-analysts to expound on the gap between what is already known and what is required to move forward. The components of a good Future Research section touch on many of the very stages we previously emphasized here, especially Data Collection, Data Analysis, and Reporting.

During Data Collection, researchers have had to be sensitive to inclusion and exclusion criteria and how constructs were defined and measured. This provides several insights. To begin with, the development of inclusion and exclusion criteria, along with addressing issues of commensurability, allows researchers to consider construct definition and its measurement. Was the construct well defined? Often, there are as many definitions as there are researchers, so this is an opportunity to provide some clarity. With an enhanced understanding, an evaluation of the measures can proceed, especially where they could be improved. How well do they assess the construct? Should some be favored and others abandoned?

During Data Analysis, researchers likely attempted to assemble a correlation matrix to conduct meta-analytic structural equation modeling and meta-regression. One of the more frustrating aspects of this endeavor is when the matrix is almost complete, but some cells are missing. Here is where the researcher can direct future projects towards understudied elements, as well as highlight that other relationships have been overly emphasized, perhaps to the point of recommending a moratorium. Similarly, the issues of heterogeneity and moderators come up. The results may generalize, but this may be due to overly homogenous samples or settings. Also, there was likely a need for some moderators to address theory, but the field simply did not report or contain them. Additionally, informing reviewers that the field is not yet able to address such ambitions often helps curtail a critique of their absence. This is where Stahl et al. primarily dedicated their own Future Research Agenda: process moderators should be considered (alone and in combination) and different cultural settings should be explored. In short, the researcher should stress how every future study should contextualize or describe itself (i.e., based on the likely major moderators).

Finally, we emphasized during Reporting the need for an Open Science framework. For a meta-analyst, often the greatest challenge is not the choice of statistical technique but getting enough foundational studies, especially those that fully report and are of high quality. The methodological techniques tend to converge at higher k, and statistical legerdemain can mitigate but not overcome an inherent lack of data. Fortunately, the Open Science movement and the increased availability of a study’s underlying data (i.e., IPD) open new possibilities. Contextual and other detailed information may not be reported in a study, often due to journal space limitations, but it is needed for meta-analytic moderator analyses. With Open Science, this information will be increasingly available, allowing for the improved application of many sophisticated techniques. For example, Jak and Cheung’s (2020) one-stage MASEM incorporates continuous moderators for MARA but requires a minimum of 30 studies. Consequently, researchers should consider what new findings would be possible with a growing research base. In short, journal editors and reviewers should expect a synopsis of when a follow-up meta-analysis would be appropriate and what the next update could accomplish with a greater and more varied database to rely on.

The next generation of meta-analyses

Is Stahl et al. the last word on diversity? Of course not. The entire point of Stahl et al.’s future research direction section was that it should be acted upon. Since Stahl et al., there have been a variety of advances in diversity research, such as the greater adoption of Blau’s index used to calculate the actual proportion of diversity (Blau, 1977; Harrison & Klein, 2007), and Shemla et al.’s (2016) conclusions that perceived levels of diversity can be more revealing than the objective measures on which Stahl et al. focused. Furthermore, not only do research bases refine and grow, at times exponentially, but meta-analytical methodology continues to evolve. With the increased popularity of meta-analysis, we can expect continued technical refinements and advances, some of which we touched upon in our article. We have shown that some of the newer techniques affected Stahl et al.’s findings, which proved sensitive to outliers and whether a fixed- or random-effects model was used. As for the near future, Marshall and Wallace (2019), as well as Johnson, Bauer and Niederman (2017), argued that we will see increased adoption of machine-learning systems in literature search and screening, which already exist but tend to be in the domain of well-funded health topics such as immunization (Begert, Granek, Irwin, & Brogly, 2020). Machine learning is a response to the fact that the “torrential volume of unstructured published evidence has rendered existing (rigorous, but manual) approaches to evidence synthesis increasingly costly and impractical” (Johnson et al., 2017: 8). The typical machine-learning strategy is to constantly sort the remaining articles based on researchers’ previous choices, until these researchers reject (screen out) a substantial number of articles in a row, whereupon screening stops.
Since the system cannot predict perfectly, there is a tradeoff between false negatives and false positives, meaning that adopters will accept missing approximately 4–5% of relevant articles to reduce screening time by 30–78% (Créquit, Boutron, Meerpohl, Williams, Craig, & Ravaud, 2020). Complementing these efforts, meta-analyses may draw on a variant of the “Mark–Recapture” method commonly used in ecology to determine a population’s size. For example, to determine the number of fish in a pond, some are captured, marked, and released. The number of these marked fish recaptured during a subsequent effort provides an estimate of the total population through the Lincoln–Petersen method. As this applies to meta-analysis, when one has a variety of terms and databases to search for a construct, subsequent searches showing an ever-increasing number of duplicate articles (i.e., articles previously “marked” and “recaptured”) provide a strong indicator of thoroughness. This combination of research base growth and improved search and analysis means that meta-analyses should have a half-life and perhaps a short one (Shojania, Sampson, Ansari, Ji, Doucette, & Moher, 2007).
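The mark–recapture logic can be illustrated with a minimal sketch. It treats two literature searches as the "capture" and "recapture" efforts and applies the standard Lincoln–Petersen estimator, N ≈ (n1 × n2) / m, where n1 and n2 are the numbers of relevant articles found by each search and m is their overlap. The function names and the article identifiers are hypothetical, and the estimator assumes the two searches are independent and that every relevant article is equally likely to be found, which is rarely true of database searches, so the result is best read as a rough indicator rather than a precise count.

```python
from typing import Hashable, Set


def lincoln_petersen(first_search: Set[Hashable], second_search: Set[Hashable]) -> float:
    """Estimate the total number of relevant articles from two searches.

    first_search:  article IDs found ("marked") by the first search
    second_search: article IDs found by the second search
    """
    marked = len(first_search)
    captured = len(second_search)
    recaptured = len(first_search & second_search)  # duplicates across searches
    if recaptured == 0:
        # With no overlap the estimator is undefined (division by zero).
        raise ValueError("The searches share no articles; no estimate is possible.")
    return marked * captured / recaptured


def search_coverage(first_search: Set[Hashable], second_search: Set[Hashable]) -> float:
    """Share of the estimated article population already retrieved."""
    found = len(first_search | second_search)
    return found / lincoln_petersen(first_search, second_search)


if __name__ == "__main__":
    # Hypothetical article IDs: 80 from one database, 60 from another, 40 shared.
    database_a = set(range(0, 80))
    database_b = set(range(40, 100))
    print(lincoln_petersen(database_a, database_b))  # estimated population size
    print(search_coverage(database_a, database_b))   # estimated share retrieved
```

As the overlap between successive searches grows, the estimated population approaches the number of articles already in hand, which is the sense in which rising duplication signals thoroughness.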

Despite these ongoing advances, it is not uncommon for IB, management, and related fields to rely on meta-analyses not just one decade old but two, three, or four, which can be contrasted with the Cochrane Database of Systematic Reviews where the median time for an update is approximately 3 years (Bashir, Surian, & Dunn, 2018; Bastian, Doust, Clarke, & Glasziou, 2019). For example, the classic meta-analysis on job satisfaction by Judge, Heller and Mount (2002) is still considered foundational and is cited hundreds of times each year, although it relies on an unpublished personality matrix from the early 1980s, a choice of matrix that, as Park et al. (2020: 25) noted, “can substantively alter their conclusions”. Because of this reliance on very early and very rough estimates, newer meta-analyses indicate that many of Judge et al.’s core findings do not replicate (Steel, Schmidt, Bosco, & Uggerslev, 2019). Exactly because techniques evolve and research bases continue to grow, it is critical to update meta-analyses, even those, or perhaps especially those, that have become classics in a field.

This issue of meta-analytic currency has been intensely debated, culminating in a two-day international workshop by the Cochrane Collaboration’s Panel for Updating Guidance for Systematic Reviews (Garner et al., 2016). Drawing on this panel’s work, as well as similar recommendations by Mendes, Wohlin, Felizardo and Kalinowski (2020), we provide a revised set of guidelines, summarized in Figure 1. Next, we apply this sequence of steps to Stahl et al. Step one is the consideration of currency. Does the review still address a relevant question? In the case of Stahl et al., its topic has increased in relevance, as reflected by its frequent citations and the widespread concern with diversity. Step two is the consideration of methodology and/or the research base. Have any new relevant methods been developed? Did Stahl et al. miss any appropriate applications? Meta-analysis has indeed rapidly developed, and, as we review here, there are numerous refinements that could be applied, from outlier analysis to MASEM. Alternatively, have any new relevant studies been published, or has new information become available? This is related to currency (Step one), as, within the thousand studies alone that cited Stahl et al., the meta-analytic database would likely double or triple. Also, an expanded research base enables the application of more sophisticated analysis techniques. Step three is the probable impact of the new methodology and/or studies. Can they be expected to change the findings or reduce uncertainty? As already shown here, taking a random-effects approach changed statistical significance. Of note, there merely needs to be a likelihood of impact, not an inevitability. For example, narrowing extremely wide confidence intervals without changing the average effect size is still a valuable contribution simply because it reduces uncertainty.
Similarly, providing a previously unavailable complete meta-analytic database in an Open Science archive (enabling cumulative growth) can still be considered impactful (especially as it motivates all researchers to data-share or risk their meta-analysis being rapidly superseded).
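The three steps described above can be sketched as a short decision function. This is only one reading of the prose, not a reproduction of Figure 1: the class name, field names, and returned messages are hypothetical, and each answer still rests on the analyst's own judgment.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class UpdateAssessment:
    """Judgment calls for one existing meta-analysis (hypothetical field names)."""
    addresses_relevant_question: bool   # Step one: currency
    new_methods_available: bool         # Step two: methodology
    new_studies_available: bool         # Step two: research base
    may_change_findings: bool           # Step three: probable impact
    may_reduce_uncertainty: bool        # Step three: e.g., narrower confidence intervals
    adds_open_database: bool = False    # Step three: complete data in an open archive


def update_recommended(a: UpdateAssessment) -> Tuple[bool, str]:
    """Walk through the three steps in order and report where the sequence stops."""
    if not a.addresses_relevant_question:
        return False, "Step one: the question is no longer relevant."
    if not (a.new_methods_available or a.new_studies_available):
        return False, "Step two: no new methods or studies to draw on."
    # Only a likelihood of impact is needed, not a certainty.
    if not (a.may_change_findings or a.may_reduce_uncertainty or a.adds_open_database):
        return False, "Step three: an update is unlikely to have an impact."
    return True, "All three steps passed: an update is warranted."


if __name__ == "__main__":
    # Answers suggested by the text for Stahl et al.
    stahl = UpdateAssessment(
        addresses_relevant_question=True,
        new_methods_available=True,
        new_studies_available=True,
        may_change_findings=True,
        may_reduce_uncertainty=True,
    )
    print(update_recommended(stahl))
```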

By all standards, Stahl et al.’s meta-analysis is now worthy of updating, but, as mentioned, it is certainly not alone. Given that our Table 1 focuses on modern meta-analytic practices, it makes a useful litmus test in conjunction with Figure 1’s decision framework for determining whether newer meta-analyses should be pursued or whether existing ones provide a sufficiently novel contribution. The more of the elements expounded in Table 1 that the more recent meta-analysis has compared to its predecessor, the more it deserves favorable treatment.

Conclusions

We have discussed key methodological junctures in the design and execution of a modern meta-analytic study. We have shown that each stage in a meta-analytical study requires a series of critical decisions. These decisions are critical because they have an impact on the results obtained and substantive conclusions for theory as well as implications for practice and policymaking. We have discussed Stahl et al.’s meta-analysis as an exemplar to explain why their article was selected for the 2020 JIBS Decade Award, but also to show how the field of meta-analysis has progressed since. Table 1 summarizes recommendations and their implementation guidelines for a modern meta-analysis. By following the different steps described in Table 1, we make explicit the anatomy of a successful meta-analysis, and we summarize what authors can be expected to do, what reviewers can be expected to ask for, and what consumers of meta-analytic reviews (i.e., other researchers, practitioners, and policymakers) can be expected to look for. Like any research method, meta-analysis is nuanced, and this is not an exhaustive list of all technical aspects or possible contributions or permutations. We can, though, summarize its spirit. When a phenomenon has been researched from a wide variety of perspectives, pulling these studies together and effectively exploring and explaining the shifting effect sizes and signs is invariably enriching.

References

Aguinis, H., Banks, G. C., Rogelberg, S., & Cascio, W. F. 2020. Actionable recommendations for narrowing the science-practice gap in open science. Organizational Behavior and Human Decision Processes, 158: 27–35.

Aguinis, H., Beaty, J. C., Boik, R. J., & Pierce, C. A. 2005. Effect size and power in assessing moderating effects of categorical variables using multiple regression: A 30-year review. Journal of Applied Psychology, 90(1): 94–107.

Aguinis, H., Dalton, D. R., Bosco, F. A., Pierce, C. A., & Dalton, C. M. 2011a. Meta-analytic choices and judgment calls: Implications for theory building and testing, obtained effect sizes, and scholarly impact. Journal of Management, 37(1): 5–38.

Aguinis, H., Gottfredson, R. K., & Joo, H. 2013. Best-practice recommendations for defining, identifying, and handling outliers. Organizational Research Methods, 16(2): 270–301.

Aguinis, H., Gottfredson, R. K., & Wright, T. A. 2011b. Best-practice recommendations for estimating interaction effects using meta-analysis. Journal of Organizational Behavior, 32(8): 1033–1043.

Aguinis, H., Hill, N. S., & Bailey, J. R. 2021. Best practices in data collection and preparation: Recommendations for reviewers, editors, and authors. Organizational Research Methods. https://doi.org/10.1177/1094428119836485.

Aguinis, H., Pierce, C. A., Bosco, F. A., Dalton, D. R., & Dalton, C. M. 2011c. Debunking myths and urban legends about meta-analysis. Organizational Research Methods, 14(2): 306–331.

Aguinis, H., Ramani, R. S., & Alabduljader, N. 2018. What you see is what you get? Enhancing methodological transparency in management research. Academy of Management Annals, 12: 83–110.

Aguinis, H., Sturman, M. C., & Pierce, C. A. 2008. Comparison of three meta-analytic procedures for estimating moderating effects of categorical variables. Organizational Research Methods, 11(1): 9–34.

Alter, G., & Gonzalez, R. 2018. Responsible practices for data sharing. American Psychologist, 73(2): 146–156.

Augusteijn, H. E. M., van Aert, R. C. M., & van Assen, M. A. L. M. 2019. The effect of publication bias on the Q test and assessment of heterogeneity. Psychological Methods, 24(1): 116–134.

Baker, R., & Jackson, D. 2016. New models for describing outliers in meta-analysis. Research Synthesis Methods, 7(3): 314–328.

Bashir, R., Surian, D., & Dunn, A. G. 2018. Time-to-update of systematic reviews relative to the availability of new evidence. Systematic Reviews, 7(1): 195.

Bastian, H., Doust, J., Clarke, M., & Glasziou, P. 2019. The epidemiology of systematic review updates: A longitudinal study of updating of Cochrane reviews, 2003 to 2018. medRxiv: 19014134.

Becker, B. J. 2005. Failsafe N or file-drawer number. In H. R. Rothstein, A. J. Sutton, & M. Borenstein (Eds.), Publication bias in meta-analysis: Prevention, assessment and adjustments: 111–125. West Sussex: Wiley.

Begert, D., Granek, J., Irwin, B., & Brogly, C. 2020. Using automation for repetitive work involved in a systematic review. CCDR, 46(6): 174–179.

Begg, C. B. 1994. Publication bias. In H. M. Cooper & L. V. Hedges (Eds.), The handbook of research synthesis: 399–409. New York: Russell Sage.

Bem, D. J. 1995. Writing a review article for Psychological Bulletin. Psychological Bulletin, 118(2): 172–177.

Bergh, D. D., Aguinis, H., Heavey, C., Ketchen, D. J., Boyd, B. K., Su, P., et al. 2016. Using meta-analytic structural equation modeling to advance strategic management research: Guidelines and an empirical illustration via the strategic leadership-performance relationship. Strategic Management Journal, 37(3): 477–497.

Bernerth, J., & Aguinis, H. 2016. A critical review and best-practice recommendations for control variable usage. Personnel Psychology, 69(1): 229–283.

Beugelsdijk, S., Ambos, B., & Nell, P. 2018a. Conceptualizing and measuring distance in international business research: Recurring questions and best practice guidelines. Journal of International Business Studies, 49(9): 1113–1137.

Beugelsdijk, S., Kostova, T., Kunst, V. E., Spadafora, E., & van Essen, M. 2018b. Cultural distance and firm internationalization: A meta-analytical review and theoretical implications. Journal of Management, 44(1): 89–130.