Abstract

Anxiety, a condition characterized by intense fear and persistent worry, affects millions each year and, when severe, is distressing and functionally impairing. Numerous machine learning frameworks have been developed and tested to predict features of anxiety and anxiety traits. This study extended these approaches by using a small set of interpretable judgment variables (n = 15) and contextual variables (demographics, perceived loneliness, COVID-19 history) to (1) understand the relationships between these variables and (2) develop a framework to predict anxiety levels [derived from the State Trait Anxiety Inventory (STAI)]. This set of 15 judgment variables, including loss aversion and risk aversion, models biases in reward/aversion judgments extracted from an unsupervised, short (2–3 min) picture rating task (using the International Affective Picture System) that can be completed on a smartphone. The study cohort consisted of 3476 de-identified adult participants from across the United States who were recruited using an email survey database. Using a balanced Random Forest approach with these judgment and contextual variables, STAI-derived anxiety levels were predicted with up to 81% accuracy and 0.71 AUC ROC. Normalized Gini scores showed that the most important predictors (age, loneliness, household income, employment status) contributed a total of 29–31% of the cumulative relative importance and up to 61% was contributed by judgment variables. Mediation/moderation statistics revealed that the interactions between judgment and contextual variables appears to be important for accurately predicting anxiety levels. Median shifts in judgment variables described a behavioral profile for individuals with higher anxiety levels that was characterized by less resilience, more avoidance, and more indifference behavior. This study supports the hypothesis that distinct constellations of 15 interpretable judgment variables, along with contextual variables, could yield an efficient and highly scalable system for mental health assessment. These results contribute to our understanding of underlying psychological processes that are necessary to characterize what causes variance in anxiety conditions and its behaviors, which can impact treatment development and efficacy.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Introduction

Anxiety disorders affected ~12% of the US population in 20211 and affects 4% of the population worldwide2,3. Anxiety disorders are characterized by intense fear and persistent worry in the absence of a defined threat4 and are among the most common causes of disability worldwide. Anxiety disorders begin early in life5,6 and increase the risk of subsequent mood disorders, substance misuse, suicidal behavior and economic disadvantage7.

The diagnosis of an anxiety disorder involves clinical determination of the severity of symptoms and the presence of specific symptom constellations based on clinical assessment, commonly augmented by surveys and symptom inventories8. Recently, automated approaches have been tested for predicting anxiety, as determined by clinical assessment or surveys, with a primary focus on using machine learning (ML) approaches9 with large variable sets (e.g., >100)10,11,12 including clinical data13,14,15, questionnaires16,17, wearable biosensors18,19,20, social media posts21,22,23, neural measures from MRI24,25,26 and cognitive science variables27,28,29,30,31. These large variable sets add multiple dimensions to the characterization of anxiety across study participants producing higher accuracies and lower unexplained variance. They model complex relationships between the predictors and the outcome, yet can present challenges ranging from significant computational requirements and prohibitive privacy concerns, to lengthy and costly data acquisitions. The current study sought to contribute to current ML-based anxiety level prediction efforts by using a small set of cognitive science variables, that can be acquired in 2–3 min on a small digital device, like a smartphone. Currently, 92% of the US population32 and 85% of the world population33 can access such devices.

Cognitive science studies focused on judgment behavior are hypothesized to be relevant to anxiety given the overlap in the neural systems implicated in both34,35,36. Abnormalities in reward/aversion judgment have been linked to dopamine system dysfunction in depression, addiction, suicidality, and chronic stress37,38,39, and individuals with anxiety have shown salient alterations in reward/aversion judgment40,41,42. A number of reward/aversion variables are thought to represent biases in judgment43,44, such as loss aversion (LA)45 and risk aversion (RA)46. Heightened RA47,48,49 and heightened LA50,51 have been reported in those with anxiety using a range of distinct monetary and emotional stimuli that describe reward/aversion judgment.

Reward/aversion judgment has been studied using operant keypress tasks to frame reinforcement reward in humans52,53,54,55,56, and been used to quantify judgment variables like LA56,57. These cognitive science studies have compared keypress-based LA to other LA frameworks such as prospect theory (e.g., Lee et al.58), connected keypress methods to imaging of reward/aversion circuitry (e.g., refs. 52,59,60,61) and connected LA from operant keypressing to reward/aversion circuitry62. The keypress framework allows the modeling of human behavior using variance and entropic variables to produce a set of at least 15 features that characterize an individual’s reward/aversion judgment (e.g., LA, RA, and others, Table 1). These features have been linked to brain structure differences in the context of (1) substance use disorder60 and (2) the characterization of substance use disorder and depression63.

These 15 judgment variables can also be computed from a picture rating task that takes 2–3 min64. The picture rating task was adapted from the operant keypress task, and is implementable on a smartphone or digital device (Fig. 1, Table 1). Judgment variables from the shorter picture rating task are consistent across multiple data sets64,65. The 15 judgment variables derived from the picture rating task, when combined with a small set of demographic and survey variables, have been used to predict other mental health and medical health conditions with high accuracy using ML: depression history65, suicidality66, and vaccine uptake67. Based on results from these prior publications, we hypothesized that a small set of 15 judgment variables (see Fig. 1, Table 1), with contextual variables theorized to affect judgment and mental function (in this case: demographics, perceived loneliness, and COVID-19 history), might facilitate the prediction of anxiety levels.

A An example picture from the picture rating task where participants were asked to rate how much they liked or disliked an imagine on a scale of −3 (dislike very much) and +3 (like very much), with 0 being neutral. B Visual representation of the x–y plane for relative preference theory (RPT) value function fitting and resulting features extracted. C Visual representation of the x–y plane for RPT limit function fitting and resulting features extracted. D Visual representation of the x–y plane for RPT tradeoff function fitting and resulting features extracted. E Each of the 15 features and their abbreviated terms.

A short picture rating task (see “Methods”) was administered to 4019 (3476 following data exclusion) de-identified participants in December 2021. Participants rated 48 unique color images from the International Affective Picture System (IAPS)68,69 on a scale of −3 (dislike very much) to +3 (like very much). Anxiety scores were derived from the state component of the State-Trait Anxiety Inventory (STAI) questionnaire70, a validated anxiety questionnaire. Random Forest (RF) and balanced Random Forest (bRF) techniques were used to classify anxiety scores into ‘higher’ versus ‘lower’ classes and to understand the relative importance of the predictors using Gini scores. Post hoc mediation/moderation analyses were conducted to understand the interactions between judgment and contextual variables that may underly anxiety level prediction. Lastly, contextual and judgment variable differences were assessed against anxiety levels.

This study took the perspective that the power of psychological constructs depends on their capacity to make meaningful predictions. The use of mathematical cognitive science to predict survey-based anxiety measures contributes to our understanding of how psychological processes underlie the variance in anxiety conditions and behaviors, which might impact treatment development and efficacy.

Results

This study assessed anxiety levels (derived from STAI questionnaire) in relation to contextual and 15 picture rating-derived judgment variables. Judgment variables include Loss Aversion (LA)45, Risk Aversion (RA)46, Loss Resilience (LR), Ante, Insurance, Total Reward Risk (Total RR), Total Aversion Risk (Total AR), Peak Positive Risk (Peak PR), Peak Negative Risk (Peak NR), Reward Tipping Point (Reward TP), Aversion Tipping Point (Aversion TP), Reward-Aversion tradeoff (RA tradeoff), Tradeoff range, Reward-Aversion consistency (RA consistency) and Consistency range (see Fig. 1B–D and Table 1).

Classification analysis: Random Forest (RF) and balanced Random Forest (bRF)

For the classification of ‘higher’ and ‘lower’ anxiety levels, the bRF performed better than RF in terms of sensitivity, specificity, AUC ROC, and balanced accuracy at all three threshold values with the best performance for the threshold of 35 (Table 2). For the bRF classification, the out of bag (OOB) accuracy and accuracy ranged from 72 to 81% and AUC ROC ranged from 0.71 to 0.74 (Table 2) which was much higher than the chance levels obtained from the permutation analysis (Supplementary Table 3). The sensitivity ranged from 56 to 74% with the lowest values corresponding to a greater class imbalance at a threshold of 55. A greater class imbalance was noted as the threshold values increased as depicted in the ‘Percentage of data’ column of Table 2. When the threshold value was 55, the percentage of participants with high anxiety was only 12% (418/3476) of the dataset.

For RF classification, the OOB accuracy and test dataset accuracy ranged from 72 to 88% and AUC ROC ranged from 0.52 to 0.72 (Table 2). The sensitivity ranged from 3 to 74% with the lowest values corresponding to a greater class imbalance at a threshold of 55. RF had the worst performance at the threshold of 55, with the output metrics close to the chance levels (Supplementary Table 3).

Multi-dimensional scaling (MDS) plots demonstrated how ‘higher’ and ‘lower’ clusters were better distinguished at lower STAI-S thresholds (i.e., Supplementary Fig. 2) and how bRF always produced better data segregation between the two classes as compared to RF.

Relative importance of features

Age, loneliness, income, and employment were consistently the most important individual features for bRF based on the mean decrease in Gini scores (see Fig. 2B, D, F). This was also the case for RF with anxiety thresholds of 35 and 45 (Fig. 2A, C). Together, these four variables contributed between 29 and 33% of the relative importance (Table 3). For the RF analysis with a high threshold of 55 (only 12% of the cohort in the ‘higher’ group and 3% sensitivity), age and loneliness remained the top-most contributing variables.

The predictors are arranged according to the mean decrease in Gini scores with the most important predictors on the top. The red box outlines the top contextual variables, and the blue box outlines the 15 judgment variables while the red * in the blue box points to the contextual variables in the cluster of judgment variables. Plots (A), (C), (E) corresponds to RF analyses with thresholds of 35, 45, and 55, respectively, and (B), (D), (F) corresponds to bRF with thresholds of 35, 45, and 55, respectively.

The 15 judgment variables contributed a combined 55–61% of the relative importance (Fig. 2 and Table 3). Other contextual variables (education group, education in years, marital status, race/ethnicity, sex, COVID-19 test, and diagnosis) were lower in classification importance, contributing a combined relative importance of 11–21%.

Mediation and moderation analysis

Mediation and moderation analysis were used to define statistical interactions between judgment variables and the most important contextual variables (age, loneliness, income, and employment). These contextual variables were defined as the mediator (Me) or moderator (Mo), judgment variables were defined as the independent variable, and STAI-S scores were defined as the dependent variable. These analyses revealed nine mediation (Table 4A and Supplementary Table 4) and seven moderation results (Table 4B). Age acted as a mediator when loss resilience, Aversion TP, Tradeoff Range, and Consistency range were independent variables. Loneliness appeared as the mediator in four mediation analyses with ante, insurance, Total AR, and Consistency range, whereas employment only mediated Consistency range as the independent variable.

Independent and Me variables were then switched to test if the judgment variables acted as mediators. No significant mediation results were found when contextual variables were independent variables and judgment variables were mediators.

With regard to moderation analyses, age was found to also be involved in four moderations, with ante, insurance, Peak PR, and Peak NR as the independent variables. Loneliness was involved in one moderation with Peak PR, and employment was implicated in two moderations with insurance and Peak NR. There were no mediation or moderation results with the income variable.

Note that age, loneliness, and employment interacted with different judgment variables when acting as a mediators versus when acting as a moderators (see Table 4A and Table 4B).

Post hoc analysis of contextual variable differences

The seven demographic variables (excluding years of education), perceived loneliness, and COVID-19 history were assessed for differences across anxiety scores using Wilcoxon rank-sum and Kruskal Wallis tests. All contextual variables significantly varied by anxiety score (p-value < 0.05) (Table 5A). For the majority of contextual variables, boxplots depicted ascending or descending trends (Supplementary Fig. 3). For example, anxiety scores were higher (1) with higher levels of perceived loneliness, (2) among younger individuals, (3) in females, (4) among individuals with lower household income, (5) among individuals with lower education levels, and (6) in individuals reporting a history of COVID-19 infection (test and diagnosis).

Post hoc analysis of judgment variable differences

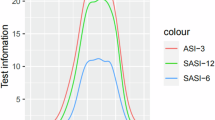

Judgment variables were analyzed by ‘higher’ and ‘lower’ anxiety scores for the three threshold values (Fig. 3). Eleven out of the 15 judgment variables differed using the one-sided Wilcoxon rank sum test (significance α < 0.05) and 8 out 15 differed after correction for multiple comparisons (significance α < 0.0083, after Bonferroni correction). The alternative hypothesis, and the respective p-values for each test are reported in Table 5B. The alternative hypothesis was defined as the judgment variable distribution median being greater in the ‘higher’ anxiety group than the ‘lower’ anxiety group, or vice versa. The ‘higher’ anxiety group had higher medians for loss aversion (threshold = 35, p < 0.05), ante (threshold = 45, 55, p < 0.05), Peak PR (threshold = 45, p < 0.0083; 55, p < 0.05), and Total RR (threshold = 35, p < 0.05; 45, 55, p < 0.0083) when compared to the ‘lower’ anxiety group (Table 5B). The ‘higher’ anxiety group had lower medians for risk aversion (threshold = 45, p < 0.05), loss resilience (threshold = 35, 45, 55, p < 0.0083), Peak NR (threshold = 35, p < 0.0083), Aversion TP (threshold = 35, 45, p < 0.0083), Total AR (threshold = 35, p < 0.0083), Tradeoff Range (threshold = 35, 45, 55, p < 0.0083), and RA consistency (threshold = 35, p < 0.05; 45, p < 0.0083) (Table 5B). Insurance, Reward TP, RA Tradeoff, and Consistency Range showed no significant differences across all threshold values.

Discussion

This study evaluated how well a small set of judgment and contextual variables (i.e., demographics, perceived loneliness, and COVID-19 infection history), could together predict state anxiety levels. The study produced four major findings. First, prediction accuracy ranged from 72.4 to 81.3% with balanced Random Forest (bRF), and sensitivities decreased (74.1% to 56.1%) as the threshold for classifying anxiety was increased. Regardless of the threshold change, all prediction models maintained a relatively high AUC ROC (0.72 to 0.71), comparable with findings in the literature, and distinct from a permutation analysis showing AUC ROC outcomes approximating 0.50. bRF produced uniformly higher sensitivity outcomes than RF approaches. Second, four contextual variables (age, income, employment, and perceived loneliness) had the highest relative importance based on normalized Gini scores across most RF and bRF analyses (5 out of 6 analyses), and contributed a cumulative of 29–33% of relative importance to prediction. Other contextual variables such as race/ethnicity, sex, and COVID-19 infection history showed minimal importance across all analyses. All 15 judgment variables consistently showed similar importance and contributed a cumulative importance ranging from 55 to 61%. Third, nine of the 15 judgment variables were involved in mediation or moderation with contextual variables. Age, employment, and perceived loneliness mediated or moderated relationships with distinct judgment variables to model anxiety scores, consistent with other reports regarding the relationship of cognitive science measures and contextual variables65,66,67,71,72,73,74,75. Fourth, all contextual variables exhibited significant differences in anxiety scores, and 11 of the 15 judgment variables differed when assessed for median shifts across ‘higher’ and ‘lower’ anxiety groups, indicating that a constellation of judgment alterations are predictive of anxiety levels.

Prediction results from this study were comparable to recent research deploying advanced machine learning algorithms to predict anxiety and other mental health conditions9, with a number of limitations and advantages. Over the past decade there have been six general types of data used for predicting anxiety, with considerable heterogeneity in terms of how anxiety is defined, and the machine learning algorithms used. These six general frameworks for prediction included variables from: neuroimaging, physiological signals, survey-based assessments, social media posts, clinical or medical health records and behavioral tasks. Neuroimaging studies targeting anxiety prediction reported high accuracies ranging between 79 and 90%24,25 and low correlation r = 0.28 (using Gaussian Process Regression)26 but present challenges surrounding the collection of expensive, computationally-intensive, and complex MRI data that requires supervision of trained individuals at specific imaging sites. With similar caveats, studies using bio-signals and physiological signals18,19,20, that involved the use of multiple wearable sensors and collection of data under supervision, reported higher accuracies and model fits of 84.3%, 89.8% and r = 0.81. Survey-based studies have utilized extensive sets of demographic variables and lengthy questionnaires16,17, to predict anxiety with sensitivities between 62 and 73%. Other studies using demographics, lifestyle, and health surveys10,11, and transcripts of recorded interviews12 predicted self-reported anxiety with accuracies between 75–86%. Studies using social media platforms like Reddit predicted anxiety with 75% precision21, 78% accuracy22) from posts in mental health discussion groups, and Gruda et al.23 used tweets to predict anxiety scores based on the level of anxiety assessed by volunteers in those tweets. Some studies13,14,15 required access to clinical and medical records of thousands of participants and reported accuracies of 73–89%11,13,14,18,20,25.

A number of studies have utilized cognitive science variables derived from behavioral tasks to study anxiety27,28,31 and neuroticism29 (a general trait that is considered as a vulnerability factor for anxiety76). For instance, Yamamori et al.27 used approach-avoidance reinforcement learning tasks with hierarchical logistic regression (reporting p < 0.05) to model task-induced anxiety. Aupperle et al.28 reported significant correlations (p < 0.01) between measures from computer-based approach-avoidance conflict task and self-reported anxiety measures from anxiety sensitivity index and Behavioral Inhibition/Activation Scale. Park et al.29 reported attenuated processing of gains and losses (using functional MRI responses to a Monetary Incentive Delay task) with higher polygenic risk scores for neuroticism using a general linear model (p < 0.001). Forthman et al.30 predicted repetitive negative thinking (a trait that negatively impacts anxiety) from 20 principal components of behavioral and cognitive variables (derived from detailed neuropsychological and behavioral assessment) and polygenic risk scores using a machine learning ensemble method with R2 of 0.037 (standard error = 0.002). Richter et al.31 utilized thorough behavioral testing completed by participants under supervision, and reported a sensitivity of 71.4% and a specificity of 70.8% using RF in individuals with anxiety and/or depression. The current study complements these publications, and supports their findings by using a machine learning-based approach and a short cognitive science task that can be performed without supervision on personal electronic device to predict anxiety with high accuracy and sensitivity.

Four contextual variables (age, loneliness, income, and employment status) were salient for the prediction of anxiety, having a cumulative relative importance of 29–33%. Although the relationship between anxiety levels and demographic measures was consistent with the literature (as described below), most of the other demographic measures did not contribute as much to anxiety level prediction. For instance, sex (gender assigned at birth) consistently contributed less than 1% of relative importance and race/ethnic background contributed 1–1.5% of relative importance across analyses. The 15 judgment variables contributed a cumulative relative importance ranging from 55 to 61%. These variables quantify irrationality, or biases, in judgment (i.e., the bounds to rationality as described by Kahneman44), and support prior publications pointing to the importance of reward/aversion variables for the study anxiety27,28,41,77. Gini scores were minimally different across the 15 judgment variables, suggesting further research is needed to assess how these judgment variables interact or cluster together. When the threshold used for segregating ‘higher’ versus ‘lower’ anxiety groups was increased, education and marital status increased in feature importance for prediction, raising a hypothesis that some demographic variables may be more important for predicting severe anxiety. A history of COVID-19 infection was not salient for predicting current anxiety and was consistently one of the least important features. This result contrasts with other literature showing large-scale societal concern about COVID-19 illness, the pandemic and related anxiety78,79. This study only used the “state” and not the “trait” component from the STAI, to reflect current experience. The study thus does not address any relationship between prior COVID-19 history and long-term trait anxiety, nor address people’s thoughts about infection.

Mediation and moderation models were used to quantify relationships between contextual and judgment variables involved in the prediction of anxiety levels. Three of the four most important contextual variables (age, employment, and loneliness) interacted with judgment variables to predict, or model, anxiety scores in mediation and moderation frameworks. These relationships were not observed with income and no significant mediation were found when contextual variables (age, employment, income, and loneliness) acted as independent variables and judgment variables were the mediator variables. On the other hand, significant mediations with contextual variables as mediators indicated that the contextual variables statistically modulated the relationship between judgment variables and anxiety scores; that is, they sat in the causal pathway between judgment variables and anxiety levels. Seven unique judgment variables were involved in nine significant mediation models with age, employment, and loneliness as mediators. Moderation analyses reflected how an interaction between a contextual variable and judgment variable might predict anxiety scores; these relationships were observed for only four judgment variables across seven significant moderation models. In total, 9 of the 15 judgment variables were thus involved in either mediation or moderation, indicating that contextual variables affect the impact of a majority of the judgment variables on anxiety level prediction. From a psychological perspective, these findings demonstrate how context (e.g., age, employment status, perceived loneliness) modulates or interacts with judgment variables to model anxiety, and how these relationships between judgment and context may aide the assessment of anxiety and ultimately, other mental health conditions. Others have noted that psychological processes occur in a context, and this study supports their work65,66,67,71,72,73,74,75.

In the current study, anxiety scores significantly varied by the contextual variables used to classify anxiety scores. Anxiety scores increased with increasing levels of perceived loneliness, where participants who often, or always, avoid spending time with others, or spend most of their time alone, had higher levels of anxiety. This is consistent with previous literature where anxiety increased as a function of loneliness80,81,82 and higher anxiety was related to avoidant social behavior83. Consistent with the literature, anxiety scores were predominately higher in females, as compared to males84,85,86,87, and in young adults (aged 18–39 years), as compared to older adults aged (40–70)87. As others have published, anxiety scores were higher in participants indicating lower household income levels88,89 and lower education levels90. In alignment with other reports, anxiety scores also varied with different levels of employment where retired participants reported the lowest anxiety scores and participants that were unemployed89,91 or had more than one job had the highest levels of anxiety. Anxiety scores also varied with marital status, in alignment with other reports, where participants classifying as ‘single’, ‘separated’, and ‘living with partner’ reported higher anxiety than others (e.g., ‘married’, ‘divorced’, ‘widowed’)89. As reported elsewhere our participants reporting mixed race backgrounds had higher anxiety92 than other racial/ethnic groups (e.g., white, African American, Hispanic, Asian). Lastly, individuals who reported previous COVID-19 experienced more anxiety. This finding is consistent with other studies in adults93,94,95 and a longitudinal study of adolescents with anxiety disorders that found SARS-COV-2 infection was associated with a 30% worsening in anxiety severity96, regardless of treatment status. Altogether, this concordance with the literature supports the broader set of findings.

Most judgment variables showed significant differences between ‘higher’ and ‘lower’ anxiety groups, suggesting three general constructs. The first being that the ‘higher’ anxiety group had higher loss aversion50,51 which corresponds to an overweighting of bad outcomes relative to good ones44. The ‘higher’ anxiety group also had higher Peak Positive Risk and Total Reward Risk, indicating that there was a higher uncertainty that must be overcome to approach stimuli, and that the interactions between reward and the associated risk were higher. Both observations point to difficulties with initiating behavior toward positive things, per Markowitz’s decision utility equation113. The same participants had lower Peak Negative Risk as compared to the low anxiety group, indicating there was a lower uncertainty to avoid events. The ‘higher’ anxiety group also had lower Total Aversion Risk suggesting that interactions between aversion and the associated risk were lower. Similarly, they had lower Aversion Tipping Point which corresponds to the intensity of aversion beyond which avoidant choices are made, suggesting lower values in those with higher anxiety scores more readily make avoidant choices. Together, this set of judgment variables quantifies how individuals with high anxiety overweight bad outcomes relative to good ones, have difficulty approaching positive stimuli (i.e., more rewarding and non-aversive items), yet readily seem to avoid negative ones. A second construct suggests that individuals with difficulty approaching positive stimuli seem to be more open to risk-seeking. Participants with high anxiety scores had higher ante and lower risk aversion indicating they would be more willing to play a game with uncertain outcomes, and that they do not prefer actions that lead to certain outcomes (i.e., they prefer two birds in the bush vs. one in the hand). This contrasts with studies that used emotional and monetary stimuli to observe heightened risk aversion in individuals with anxiety47,48. This difference in observations might depend on how a question is placed in the context of gain or loss (i.e., framing effects)44,97. The third construct was identified by low loss resilience, tradeoff range, and consistency metrics. Specifically, a lower Tradeoff range in high-anxiety persons is consistent with a restrictive portfolio of positive and negative preferences. Lower Reward Aversion consistency suggests a likelihood of indifference or that a person neither likes nor dislikes a particular stimulus. People with high anxiety scores are less loss resilient, meaning they have a reduced ability to rebound from bad outcomes. The three constructs describe a behavioral profile for high anxiety persons as having less resilience, more avoidance, and more indifference behavior. Together, these three general groupings of judgment variables point to known features of anxiety49 but provide a lawful, quantitative framework56,57 for framing the condition and support the hypothesis that unique constellations of judgment variables underlie other mental health conditions like depression65 and suicidality66. The current findings support calls for the development of a standard model of mind98, albeit based on processes of judgment and agency as opposed to variables focused primarily on cognition.

Several limitations need be considered. First, the participants were recruited from the United States, and region and culture may influence the importance of judgment variables in predicting anxiety as psychiatric symptoms differ across cultures99,100,101,102,103,104. Second, participants with mental health conditions were oversampled to meet criteria for other survey components not discussed here. This oversampling could bias results and more generalized samples are needed to validate and extend our findings. Third, all variables were self-reported and not collected from clinical records or framed as a double-blinded trial with investigator-administered survey instruments. Fourth, the cohort was sampled during the COVID-19 pandemic, in which greater incidents of loneliness and anxiety have been reported78,79. It will be important to prospectively investigate if similar behavioral patterns predict anxiety in the absence of a pandemic. Fifth, the survey did not request participants to differentiate between white non-Hispanic and non-white; more in-depth questions regarding racial and ethnic backgrounds should be considered in future data collections.

The current study used a computational cognition framework to assess how biases in human judgment might contribute to predicting anxiety levels. Using a small set of judgment and contextual variables (including demographics, perceived loneliness, and COVID-19 history) with a balanced Random Forest framework, this study achieved high accuracies up to 88.51% and AUC ROC values of 0.69–0.74 for predicting state anxiety levels derived from the STAI70. Judgment variables were extracted from a short (2–3 min), simple, and unsupervised picture rating task that can be easily completed on a personal electronic device. In these prediction analyses, the four most important variables (age, employment, income, and loneliness) were contextual variables that contributed 29–33% of the relative importance and judgment variables contributed up to 61% of the relative importance for prediction. Furthermore, age, loneliness, and employment status significantly mediated and moderated the relationship between judgment variables and anxiety scores—indicating statistically mechanistic relationships between these variables, and suggesting that both cognitive variables and contextual variables are important for accurately predicting anxiety levels. Judgment variables differed across participants with higher and lower anxiety scores providing a behavioral profile for participants with higher anxiety scores. That is to say, individuals with higher anxiety scores overweighted bad outcomes relative to good ones, had difficulty approaching positive stimuli, yet readily avoided negative ones. Along with this higher avoidance, they also had lower resilience and higher indifference, consistent with prior reports49. This study supports the hypothesis that a small set of interpretable judgment and contextual variables can accurately predict psychiatric symptoms and provide a computational cognitive framework to better understand and classify anxiety and other mental health conditions.

Methods

Participant recruitment

Gold Research Inc. (San Antonio, Texas) recruited study participants from multiple vendors in December 2021. 4019 de-identified participants (mean age ± std = 51.4 ± 14.9 years) were randomly sampled from the general U.S. population using an email survey database accessed by Gold Research, Inc. and a double opt-in methodology as described in detail in refs. 66,67,94,105,106, and in the Supplemental Material. All participants provided informed consent following oversight by Northwestern University’s and the University of Cincinnati’s Institutional Review Board and in accordance with the Declaration of Helsinki (see “Ethical statement” and refs. 66,67,94,105,106). Participants were balanced to meet the U.S. Census Bureau’s demographic criteria at the time of the survey (December 2021) and oversampled by 15% of the sample for mental health conditions (see Supplemental Material). The survey was composed of several blocks of questions using questionnaires (detailed below) for depression, anxiety, suicidality, addiction, psychosis, violent ideation, disruptive and destructive behaviors, perceived loneliness, along with demographic, self-reported mental health, and COVID-19 history questionnaires. Participants also completed a 48-item picture rating task (Fig. 1) split into two 24 picture blocks.

Ethical statement

Participation was offered with language noting that Gold Research was administering an emotional health questionnaire on behalf of Northwestern University, with the phrasing: “We will be evaluating how different emotions and experiences are connected and may relate to our emotional health.” All participants provided written informed consent, including their primary participation in the study and the secondary use of their anonymized, de-identified (i.e., all identifying information removed by Gold Research Inc. prior to retrieval by the research group) data in secondary analyses (see Supplemental Material). The study was approved by the Institutional Review Boards for Northwestern University (NU) and University of Cincinnati (UC) in accordance with the Declaration of Helsinki (approval number STU00213665 for NU and 2023-0164 for UC).

Data filtering

Gold Research excluded participants using four criteria: (1) participants selected the same response throughout any section of the questionnaire (e.g., selecting option “1” for all questions), (2) participants indicated they had ten or more clinician-diagnosed illnesses out of a possible 17 (data not described here), (3) if both education level and years of education did not match, and (4) if they completed the questionnaire in less than 800 s. After filtering for these criteria, Gold Research provided the research team data from 4019 participants. These data were further screened using responses from the picture rating task. These procedures have been adapted from Azcona et al.64 and are detailed in the Supplemental Material under Data filtering based on picture rating task. In short, participants were excluded if there was minimal variance in picture ratings (i.e., all pictures were rated the same or varied only by one point) and the quantitative feature set derived from the picture rating task was incomplete and/or there were extreme outliers (see Judgment variables from picture rating task and Supplemental Material). Using these exclusion criteria, 3476 participants were cleared for statistical analyses.

Contextual variables from survey questionnaires

Participants completed the survey using the online platform provided by Gold Research, Inc. Participants were asked to self-report (a) perceived loneliness in the past month (loneliness), (b) demographics including age, gender assigned at birth (sex), annual household income (income), marital status (marital), employment status (employment), level of education (edu), number of years of education (edu_years), race/ethnicity (race/ethnicity), and (c) two COVID-19 questions: (i) if the participant had ever tested positive for COVID-19 (test) and (ii) if the participant was ever diagnosed by a clinician with COVID-19 (diagnosis). The complete text regarding these questions is listed under the Survey Questions section in Supplemental Material. The response set to (a)–(c) is referred to as ‘contextual variables’ hereafter. Following data filtering as described above and in refs. 94,105,106, the 3476 participants were categorized as predominately female (61.5%), married (51.4%), white (85.7%), employed full-time (35.8%) with some college education (29.6%), and on average older (mean age = 51 years), see Supplementary Table 1 for a complete summary.

Anxiety questionnaire

This study assessed anxiety in relation to contextual and picture rating-derived variables (described below). We used the State-Trait Anxiety Inventory (STAI) questionnaire which is commonly used to measure trait and state anxiety70. It is used in clinical settings to quantify anxiety. The STAI consists of 20 questions for current state anxiety, and 20 questions for trait anxiety. In this study, only the 20-state anxiety (STAI-S) questions were deployed in the online survey. Participants were instructed to answer each question based on a 4-point Likert scale (1 = Not at all; 2 = Somewhat; 3 = Moderately so; 4 = Very much so) based on how they feel right now, that is, at the time of the survey. The questions were scored following the instructions in the score key for the form Y-1 of STAI (https://oml.eular.org/sysModules/obxOml/docs/ID_150/State-Trait-Anxiety-Inventory.pdf). The scored sum of STAI-S ranged from 20 to 80 and is hereafter referred to as ‘STAI-S score’ and/or ‘anxiety score’. STAI-S score distributions are shown in Fig. 3 with three red arrows marking the threshold values used in classification as described under ‘Classification analysis’. The STAI thresholds of 35, 45, and 55 roughly corresponds to 50th percentile (median), 75th percentile and 90th percentile, respectively, of the STAI-S scores (Fig. 3).

Picture rating task

Participants were shown 48 unique color images from the International Affective Picture System (IAPS)68,69. Six picture categories were used: (1) sports, (2) disasters, (3) cute animals, (4) aggressive animals, (5) nature (beach vs. mountains), and (6) adults in bathing suits, with eight pictures per category (48 pictures in total, a sample image is shown in Fig. 1A), with all pictures in a category having similar published calibration. These images act as mildly emotional stimuli that are employed to assess both positive and negative value (i.e., reward or liking vs. aversion or disliking) and have been broadly used and validated in research of human emotion, attention, and preference68,69. Images were displayed on participants’ personal devices with a maximum size of 1204 × 768 pixels. Below each picture was a rating scale from −3 (dislike very much) to +3 (like very much), where 0 indicated a neutral point (Fig. 1A). While there was no time limit for selecting a picture rating, participants were asked in the instructions to rate the images as quickly as possible and to use their first impression; specific instructions can be found in the Supplemental Material. Once a rating was selected, the next image was displayed.

Judgment variables derived from a picture rating task

Data from the picture rating task were analyzed using a computational framework to characterize preference judgments. Referred to as relative preference theory (RPT)56,57,62, this framework has been adapted to derive judgment features from picture ratings64,66,67 as opposed to operant keypressing52,56,57,59,60,61,62,107. For each participant, picture ratings from each of the six image categories were split into two sets—positive and negative. For each of these two sets, and for all six categories, the mean, Shannon entropy56,108, and variance were calculated, yielding a tuple denoted as \(\left({{\boldsymbol{K}}}^{{\boldsymbol{+}}}{\boldsymbol{,}}{{\boldsymbol{\sigma }}}^{{\boldsymbol{+}}}{\boldsymbol{,}}{{\boldsymbol{H}}}^{{\boldsymbol{+}}}\right)\) for the positive ratings and \(\left({{\boldsymbol{K}}}^{{\boldsymbol{-}}}{\boldsymbol{,}}{{\boldsymbol{\sigma }}}^{{\boldsymbol{-}}}{\boldsymbol{,}}{{\boldsymbol{H}}}^{{\boldsymbol{-}}}\right)\) for the negative ratings. This resulted in a total of 36 \(\left({\boldsymbol{K}}{\boldsymbol{,}}{\boldsymbol{\sigma }}{\boldsymbol{,}}{\boldsymbol{H}}\right)\) variables. Next, for each participant, the mean across the six categories was computed for each \(\left({\boldsymbol{K}}{\boldsymbol{,}}{\boldsymbol{\sigma }}{\boldsymbol{,}}{\boldsymbol{H}}\right)\) variable, resulting in six additional variables: mean \(\left({{\boldsymbol{K}}}^{{\boldsymbol{+}}}{\boldsymbol{,}}{{\boldsymbol{\sigma }}}^{{\boldsymbol{+}}}{\boldsymbol{,}}{{\boldsymbol{H}}}^{{\boldsymbol{+}}}\right)\) representing reward behavior and mean \(\left({{\boldsymbol{K}}}^{{\boldsymbol{-}}}{\boldsymbol{,}}{{\boldsymbol{\sigma }}}^{{\boldsymbol{-}}}{\boldsymbol{,}}{{\boldsymbol{H}}}^{{\boldsymbol{-}}}\right)\) representing aversion behavior. For each participant, three separate curves for value, limit, and tradeoff functions (see Fig. 1B–D were plotted using MATLAB and the library polyfit, following other publications (see details in Supplemental Material)56,57,62,64,107. Representative curves from 500 randomly selected participants out of the 3476 cohort are shown in Supplementary Fig. 1.

Goodness of fit for these functions was assessed by computing \({R}^{2}\) values, adjusted \({R}^{2}\) values (accounting for degrees of freedom), and \(F\)-statistics for each participant’s model fit (Supplementary Table 2A). Individual participants’ \(\left({\boldsymbol{K}}{\boldsymbol{,}}{\boldsymbol{H}}\right)\) value functions were fit by concave logarithmic, or power-law functions (Supplementary Table 2, Supplementary Fig. 1). \({R}^{2}\) values ranged from 0.85 to 0.94 for logarithmic fits of the value function, which was considered very high. Concave quadratic fits across individual participants’ \(\left({\boldsymbol{K}}{\boldsymbol{,}}{\boldsymbol{\sigma }}\right)\) data are displayed in Supplementary Fig. 1, and goodness of fit assessed using the same metrics as with the \(\left({\boldsymbol{K}}{\boldsymbol{,}}{\boldsymbol{H}}\right)\) data (Supplementary Table 2A). All \({R}^{2}\) values for the quadratic fits exceeded 0.80 and ranged from 0.84 to 0.96. Lastly, radial functions were fit to test for trade-offs in the distribution of \({{\boldsymbol{H}}}^{{\boldsymbol{-}}}\) and \({{\boldsymbol{H}}}^{{\boldsymbol{+}}}\) values across categories for each individual participant. Supplementary Fig. 1 displays radial fits across individual participants’ \(\left({{\boldsymbol{H}}}^{{\boldsymbol{+}}}{\boldsymbol{,}}{{\boldsymbol{H}}}^{{\boldsymbol{-}}}\right)\) and \(\left({{\boldsymbol{H}}}^{{\boldsymbol{+}}}{\boldsymbol{,}}{{\boldsymbol{H}}}^{{\boldsymbol{-}}}\right)\) data points for a random sample of participants.

The RPT framework fits reward/aversion curves and derives mathematical features from these graphical plots that are psychologically interpretable, scalable, recurrent, and discrete56,57,64. At least 15 variables can be extracted from this framework64, including Loss Aversion (LA)45, Risk Aversion (RA)46, Loss Resilience (LR), Ante, Insurance, Total Reward Risk (Total RR), Total Aversion Risk (Total AR), Peak Positive Risk (Peak PR), Peak Negative Risk (Peak NR), Reward Tipping Point (Reward TP), Aversion Tipping Point (Aversion TP), Reward-Aversion tradeoff (RA tradeoff), Tradeoff range, Reward-Aversion consistency (RA consistency) and Consistency range (Fig. 1B–D, Table 1). Each variable describes a quantitative component of the reward/aversion processing involved with judgment behavior (see details about each feature in Supplemental Material). The term ‘judgment variables’ will be used hereafter in reference to these features. Summary statistics for all 15 judgment variables obtained from all participants are summarized in Supplementary Table 2B.

Classification analysis

All analyses were performed in R. Judgment variables and contextual variables, including demographics, perceived loneliness, and COVID-19 questions, were used in the classification analyses. Random Forest (RF) and balanced Random Forest (bRF) analyses were used to classify anxiety scores into ‘higher’ and ‘lower’ classes (see below). The open access package ‘randomForest’ in R was used to train the RF and bRF models on training dataset.

Random Forest (RF) and balanced Random Forest (bRF) analysis

Anxiety scores were divided into two classes ‘higher’ and ‘lower’ classes based on three threshold values of 35, 45 and 55 as shown in Fig. 3 marked with red arrows. All values below a given threshold were labeled as ‘lower’ and values above and equal to the threshold were labeled as ‘higher’. Data were divided into train and test sets with a 70:30% ratio. RF and bRF approaches were implemented for each of the three thresholds using the command ‘randomForest’ from the package ‘randomForest’ in R. The number of variables randomly sampled as candidates at each split was 5 and the number of trees grown was 1000. The bRF performs random down-sampling of the majority class at each bootstrap sample to match the number of samples in majority and minority classes. The bRF approach was used in addition to the standard RF analysis because of the greater class imbalance when the anxiety score threshold was set to 45 and 55 (i.e., the ‘lower’ class occupied 70% and 88% of the dataset respectively). Note that bRF only performs down-sampling during training of the model. Once the model is trained, it calculates the prediction metrics on the complete, imbalanced train and test sets.

Out of bag (OOB) accuracy was reported for the training set. The model was then tested with the imbalanced test dataset and accuracy, sensitivity, specificity, AUC ROC (area under the receiving operating characteristics curve), and Balanced Accuracy (mean of sensitivity and specificity) were reported. Here, ‘higher’ was considered the positive class. For each threshold, percentages of each class relative to the entire dataset were reported. The entire procedure was repeated for each threshold value (i.e., 35, 45, 55). The multi-dimensional scaling (MDS) scaling coordinates of the proximity matrix for RF and bRF analyses were plotted to see how segregated the two clusters of lower and higher anxiety scores were, using the command ‘MDSplot’ in the package ‘randomForest’. The proximity matrix contains the frequency for each pair of data points. If two data points occupy the same terminal node through one decision tree, their proximity is increased by one. At the end of the run of all decision trees, the proximities are normalized by dividing by the number of trees. The MDS plots display how segregated the clusters were for the classification performed by RF or bRF.

To compare the performance of the classifiers to chance levels, permutation analysis was conducted for RF and bRF for each of the three threshold levels with 100 iterations. For each iteration, the ‘lower’/‘higher’ labels were shuffled randomly and RF and bRF analyses were run with the procedure described above. The above-mentioned output metrics were averaged over the 100 iterations to produce a model of chance effects.

Relative importance of features

The judgment and contextual variables were sorted based on the mean decrease in Gini scores and were plotted by decreasing feature importance, with the most important features appearing on the top of the plot. Gini score is a fundamental outcome of the random forest algorithms as it shows for each feature how large was the discriminative value for separating the data points into different classes. That is, how important was each variable in the classification, or how much was the uncertainty reduced in the model, leading to accurate predictions. The higher the mean decrease Gini score, the more important the feature was for the classification. The relative importance of the features was analyzed by normalizing the Gini score of each feature to the sum of all Gini scores. This gives the relative proportion of importance for each feature, with the sum for all features being 1. The Gini score plots, and the relative importance of the features were used in this study as an associated sensitivity analysis for the random forest algorithms.

Post hoc analysis: mediation and moderation analysis

Mediation and moderation were used as post hoc analyses to understand how judgment variables and the most important contextual variables, based on Gini scores, interact to model anxiety scores. These statistical mechanisms aide interpretation of the prediction results and follow procedures we have published before109,110.

Mediation analysis

Mediation was utilized to elucidate statistically causal relationship between judgment variables, contextual variables, and anxiety scores. The mediation model defines the relationship between an independent variable (X) and dependent variable (Y) with the inclusion of a third mediator variable (Me). Two sets of analyses were conducted where Y was the anxiety score and: (i) X were each of the judgment variables and Me were each of the most important contextual variables based on Gini score from RF and bRF analyses (i.e., the top four scores); (ii) X were the most important contextual variables and Me were the judgment variables.

The mediation model proposes that instead of a direct statistical causal relationship between X and Y, X influences Me, which then influences Y. Beta coefficients and their standard error (s) terms from the following linear regression equations, following the four-step process of Baron and Kenny (1986)111,112, were used to calculate Sobel p-values and mediation effect percentages (Teff):

Step 1-3:

Step 4: Sobel’s test was then used to test if \({c}^{{\prime} }\) was significantly lower than \(c\) using the following equation:

Using a standard 2-tail z-score table, the Sobel p-value (\({p}_{{Sobel}})\) was determined from the Sobel z-score and the mediation effect percentage (Teff) was calculated using the following equation:

Mediation was considered significant if p-values associated with terms a, b, and c were <0.05 from Step 1–3 and \({p}_{{Sobel}}\) < 0.05111 and \({T}_{{eff}}\) > 50%109,110.

Secondary mediation analysis was run by switching variables assigned to X and Me to see if the mediation effects were directed. If \({p}_{{Sobel}}\) > 0.05 and \({T}_{{eff}}\) < 50% for the secondary mediation analysis, this supported that Me was in the causal pathway between X and Y.

Moderation analysis

The moderation model proposes that the strength and direction of the relationship between an independent variable (X) and dependent variable (Y) is controlled by the moderator variable (Mo). In this study, X were each of the judgment variables, Mo were each of the most important contextual variables based on Gini score from RF and bRF analysis, and Y was the anxiety score. Moderation is characterized by the interaction term between X and Mo in the linear regression equation as given below:

Moderation was considered significant if \({p}_{{\beta }_{3}}\le 0.05\) (the interaction term \({\beta }_{3}\) is significantly different than zero) and \({p}_{{overall}}\le 0.05\) (for the overall model)109,110. To check if the overall model was significant, we used F-test.

To test if the coefficient of the interaction term (\({\beta }_{3})\) was significantly different than zero, we built full and restricted models and used partial F-tests to test the null hypothesis.

Full model:

Restricted Model:

Null hypothesis:

Alternative hypothesis:

If \({p}_{{\beta }_{3}}\), associated with the partial F-test was less than 0.05, we rejected our null hypothesis regarding the interaction term.

Post hoc analysis: variable differences by anxiety score

These post hoc analyses assessed if the contextual variables and judgment variables differed by anxiety score.

Contextual variable differences

Anxiety scores were assessed for differences by the different levels of contextual variables (except years of education) using Wilcoxon rank-sum test for questions with two levels and Kruskal Wallis test for questions with more than two levels. The ten contextual variables tested were loneliness, age, sex, income, marital status, employment status, education level, ethnicity, and COVID-19 test and diagnosis. Boxplots and p-values were reported.

Judgment variable differences

Since judgment variables were continuous, they were divided into corresponding ‘higher’ and ‘lower’ groups following the respective grouping of anxiety scores at each of the three thresholds (35, 45, and 55), and tested using the one-sided Wilcoxon rank-sum test. Alternative hypotheses for each test, and the respective p-values, were reported. Bonferroni correction was done across all six tests (two tests for each of the three thresholds) for each judgment variable. The alternative hypothesis indicated if the judgment variable distributions differed between participants in ‘higher’ and ‘lower’ anxiety classes (for example, if a given judgment variable was higher in the ‘higher’ anxiety class as compared to the ‘lower’ anxiety class, or vice versa).

Data availability

Data were de-identified before being provided to the investigators. Data are available in Microsoft Excel format and include relative preference variables, demographic metrics and survey variables inclusive of anxiety variables. The data may be accessed in Appendix 1, Supplementary Information.

Code availability

Computational behavior analysis used code published in refs. Azcona et al. 2022 and Kim et al. 2010. ML analyses used parameters as detailed in the Methods and Appendix 2, Supplementary Information. Mediation/moderation analyses used code sequences as detailed in refs. Bari et al. 2021 and Vike et al. 2022.

References

CDC, FastStats: Mental Health. Center for Disease Control and Prevention (CDC). https://www.cdc.gov/nchs/fastats/mental-health.htm (2021).

Ritchie, H. & Dattani, M. R. S. Mental Health - Our World in Data. Published online at OurWorldInData.org. https://ourworldindata.org/mental-health#risk-factors-for-mental-health (2021).

G. 2019 M. D. C.-T. L. Psychiatry, undefined 2022, Global, regional, and national burden of 12 mental disorders in 204 countries and territories, 1990–2019: a systematic analysis for the Global Burden of Disease Study 2019. Lancet Psychiatry 9, 137–150 (2022).

Chand, S. P. & Marwaha, R. Anxiety - StatPearls - NCBI Bookshelf (StatPearls, 2021).

Beesdo, K., Pine, D. S., Lieb, R. & Wittchen, H. U. Incidence and risk patterns of anxiety and depressive disorders and categorization of generalized anxiety disorder. Arch. Gen. Psychiatry 67, 47–57 (2010).

Beesdo-Baum, K. & Knappe, S. Developmental epidemiology of anxiety disorders. Child Adolesc. Psychiatr. Clin. N. Am. 21, 457–478 (2012).

Asselmann, E., Wittchen, H. U., Lieb, R. & Beesdo-Baum, K. Sociodemographic, clinical, and functional long-term outcomes in adolescents and young adults with mental disorders. Acta Psychiatr. Scand. 137, 6–17 (2018).

Bystritsky, A., Khalsa, S. S., Cameron, M. E. & Schiffman, J. Current diagnosis and treatment of anxiety disorders. P T 38, 30–44 (2013).

Iyortsuun, N. K., Kim, S. H., Jhon, M., Yang, H. J. & Pant, S. A review of machine learning and deep learning approaches on mental health diagnosis. Healthcare 11, 285 (2023).

van Eeden, W. A. et al. Predicting the 9-year course of mood and anxiety disorders with automated machine learning: a comparison between auto-sklearn, naïve Bayes classifier, and traditional logistic regression. Psychiatry Res. 299, 113823 (2021).

Pavlova, I. et al. Determinants of anxiety levels among young males in a threat of experiencing military conflict-Applying a machine-learning algorithm in a psychosociological study. PLoS ONE 15, e0239749 (2020).

Demiris, G. et al. Spoken words as biomarkers: Using machine learning to gain insight into communication as a predictor of anxiety. J. Am. Med. Inform. Assoc. 27, 929–933 (2020).

Dabek, F. & Caban, J. J. A neural network based model for predicting psychological conditions. Lect. Notes Comput. Sci. 9250, 252–261 (2015).

Sau, A. & Bhakta, I. Predicting anxiety and depression in elderly patients using machine learning technology. Health. Technol. Lett. 4, 238–243 (2017).

Nemesure, M. D., Heinz, M. V., Huang, R. & Jacobson, N. C. Predictive modeling of depression and anxiety using electronic health records and a novel machine learning approach with artificial intelligence. Sci. Rep. https://doi.org/10.1038/s41598-021-81368-4 (2021).

Simjanoski, M. et al. Lifestyle predictors of depression and anxiety during COVID-19: a machine learning approach. Trends Psychiatry Psychother. 44, 44–45 (2022).

Bokma, W. A. et al. Predicting the naturalistic course in anxiety disorders using clinical and biological markers: a machine learning approach. Psychol. Med. 52, 57–67 (2022).

Katsis, C. D., Katertsidis, N. S. & Fotiadis, D. I. An integrated system based on physiological signals for the assessment of affective states in patients with anxiety disorders. Biomed. Signal Process Control 6, 261–268 (2011).

Perpetuini, D. et al. Prediction of state anxiety by machine learning applied to photoplethysmography data. PeerJ 9, e10448 (2021).

Ihmig, F. R. et al. On-line anxiety level detection from biosignals: machine learning based on a randomized controlled trial with spider-fearful individuals. PLoS ONE 15, e0231517 (2020).

Thorstad, R. & Wolff, P. Predicting future mental illness from social media: a big-data approach. Behav. Res. Methods 51, 1586–1600 (2019).

Kim, J., Lee, J., Park, E. & Han, J. A deep learning model for detecting mental illness from user content on social media. Sci. Rep. 10, 11846 (2020).

Gruda, D. & Hasan, S. Feeling anxious? Perceiving anxiety in tweets using machine learning. Comput. Hum. Behav. 98, 245–255 (2019).

Ball, T. M., Stein, M. B., Ramsawh, H. J., Campbell-Sills, L. & Paulus, M. P. Single-subject anxiety treatment outcome prediction using functional neuroimaging. Neuropsychopharmacology 39, 1254–1261 (2014).

Hilbert, K., Lueken, U., Muehlhan, M. & Beesdo-Baum, K. Separating generalized anxiety disorder from major depression using clinical, hormonal, and structural MRI data: A multimodal machine learning study. Brain Behav. 7, e00633 (2017).

Portugal, L. C. L. et al. Predicting anxiety from wholebrain activity patterns to emotional faces in young adults: a machine learning approach. Neuroimage Clin. https://doi.org/10.1016/j.nicl.2019.101813 (2019).

Yamamori, Y., Robinson, O. J. & Roiser, J. P. Approach-avoidance reinforcement learning as a translational and computational model of anxiety-related avoidance. Elife 12, RP87720 (2023).

Aupperle, R. L., Sullivan, S., Melrose, A. J., Paulus, M. P. & Stein, M. B. A reverse translational approach to quantify approach-avoidance conflict in humans. Behav. Brain Res. 225, 455–463 (2011).

Park, H. et al. Polygenic risk for neuroticism moderates response to gains and losses in amygdala and caudate: evidence from a clinical cohort. J. Affect Disord. 293, 124–132 (2021).

Forthman, K. L. et al. Transdiagnostic behavioral and genetic contributors to repetitive negative thinking: a machine learning approach. J. Psychiatr. Res. 162, 207–213 (2023).

Richter, T., Fishbain, B., Markus, A., Richter-Levin, G. & Okon-Singer, H. Using machine learning-based analysis for behavioral differentiation between anxiety and depression. Sci. Rep. 10, 1–12 (2020).

Martin, M. Computer and Internet Use in the United States: 2018 American Community Survey Reports (www.census.gov/acs) (2021).

Pews Research Center. (2019) Pew Research Center: Mobile Fact Sheet. https://www.pewinternet.org/factsheet/mobile/#targetText=The.

Hartley, C. A. & Phelps, E. A. Anxiety and decision-making. Biol. Psychiatry 72, 113–118 (2012).

Hartley, C. A. & Phelps, E. A. Changing fear: the neurocircuitry of emotion regulation. Neuropsychopharmacology 35, 136–146 (2010).

Rangel, A., Camerer, C., & Montague, P. R. A framework for studying the neurobiology of value-based decision making. Nat. Rev. Neurosci. 9, 545–556 (2008).

Pedersen, M. L. et al. Computational phenotyping of brain-behavior dynamics underlying approach-avoidance conflict in major depressive disorder. PLoS Comput. Biol. 17, e1008955 (2021).

Ironside, M. et al. Approach-avoidance conflict in major depressive disorder: congruent neural findings in humans and nonhuman primates. Biol. Psychiatry 87, 399–408 (2020).

Bogdan, R. & Pizzagalli, D. A. Acute stress reduces reward responsiveness: implications for depression. Biol. Psychiatry 60, 1147–1154 (2006).

Dillon, D. G. et al. Peril and pleasure: an rdoc-inspired examination of threat responses and reward processing in anxiety and depression. Depress Anxiety 31, 233–249 (2014).

Breiter, H. C. & Gasic, G. P. A General Circuitry Processing Reward/Aversion Information and Its Implications for Neuropsychiatric Illness. Cognitive Neurosciences III, Third Edition 1043–1065 (2004).

Breiter, H. C., Gasic, G. P. & Makris, N. in Complex Systems Science in Biomedicine, 763–810 (2007).

Tversky, A. & Kahneman, D. Judgment under uncertainty: heuristics and biases. Science 185, 1124–1131 (1974).

Kahneman, D. & Tversky, A. On the interpretation of intuitive probability: a reply to Jonathan Cohen. Cognition 7, 409–411 (1979).

Chen, X., Voets, S., Jenkinson, N. & Galea, J. M. Dopamine-dependent loss aversion during effort-based decision-making. J. Neurosci. 40, 661–670 (2020).

Wang, S., Krajbich, I., Adolphs, R. & Tsuchiya, N. The role of risk aversion in non-conscious decision making. Front Psychol. 3, 50 (2012).

Charpentier, C. J., Aylward, J., Roiser, J. P. & Robinson, O. J. Enhanced risk aversion, but not loss aversion, in unmedicated pathological anxiety. Biol. Psychiatry 81, 1014–1022 (2017).

Lorian, C. N. & Grisham, J. R. Clinical implications of risk aversion: an online study of risk-avoidance and treatment utilization in pathological anxiety. J. Anxiety Disord. 25, 840–848 (2011).

Giorgetta, C. et al. Reduced risk-taking behavior as a trait feature of anxiety. Emotion 12, 1373–1383 (2012).

Charpentier, C. J., De Martino, B., Sim, A. L., Sharot, T. & Roiser, J. P. Emotion-induced loss aversion and striatal-amygdala coupling in low-anxious individuals. Soc. Cogn. Affect Neurosci. 11, 569–579 (2016).

Xu, P. et al. Amygdala–prefrontal connectivity modulates loss aversion bias in anxious individuals. Neuroimage 218, 116957 (2020).

Aharon, I. et al. Beautiful faces have variable reward value: fMRI and behavioral evidence. Neuron 32, 537–551 (2001).

Strauss, M. M. et al. fMRI of sensitization to angry faces. Neuroimage 26, 389–413 (2005).

Elman, I. et al. Probing reward function in post-traumatic stress disorder with beautiful facial images. Psychiatry Res. 135, 179–183 (2005).

Levy, B. et al. Gender differences in the motivational processing of facial beauty. Learn Motiv 39, 136–145 (2008).

Kim, B. W. et al. Recurrent, robust and scalable patterns underlie human approach and avoidance. PLoS ONE 5, e10613 (2010).

Livengood, S. L. et al. Keypress-based musical preference is both individual and lawful. Front Neurosci. 11, 136 (2017).

Lee, S. et al. The commonality of loss aversion across procedures and stimuli. PLoS ONE 10, e0135216 (2015).

Perlis, R. H. et al. Association of a polymorphism near CREB1 with differential aversion processing in the insula of healthy participants. Arch. Gen. Psychiatry 65, 882–892 (2008).

Makris, N. et al. Decreased volume of the brain reward system in alcoholism. Biol. Psychiatry 64, 192–202 (2008).

Gasic, G. P. et al. BDNF, relative preference, and reward circuitry responses to emotional communication. Am. J. Med. Genet., Part B: Neuropsychiatr. Genet. 150, 762–781 (2009).

Viswanathan, V. et al. Age-related striatal BOLD changes without changes in behavioral loss aversion. Front Hum. Neurosci. 9, 1–12 (2015).

Vike, N. L. et al. Characterizing major depressive disorder and substance use disorder using heatmaps and variable interactions: The utility of operant behavior and brain structure relationships. PLoS ONE 19, e0299528(2024).

Azcona, E. A. et al. Discrete, recurrent, and scalable patterns in human judgement underlie affective picture ratings. https://doi.org/10.48550/arxiv.2203.06448 (2022).

Stefanopoulos, H. et al. In ICBHI 2022 Proceedings (figshare, 2022).

Lalvani, S. et al. Predicting suicidality with small sets of interpretable reward behavior and survey variables. Nat. Ment. Health 2024, 1–14 (2024).

Vike, N. L. et al. Predicting COVID-19 vaccination uptake using a small and interpretable set of judgment and demographic variables: cross-sectional cognitive science study. JMIR Public Health Surveill. 10, e47979 (2024).

Lang, P. & Bradley, M. International affective picture system (IAPS): technical manual and affective ratings. Psychology https://www.unifesp.br/dpsicobio/adap/instructions.pdf (1999).

Lang, P. J., Margaret, M. B. & Cuthbert, B. N. International affective picture system (IAPS): Affective Ratings of Pictures and Instruction Manual. Technical Report A-8. University of Florida, Gainesville, FL (2008).

Spielberger, C. D. Manual for the State-Trait Anxiety Inventory (Consulting Psychologists, 1983).

Schafer, K. M. The Status of Suicidality Prediction Research: A Meta-Analysis. https://diginole.lib.fsu.edu/islandora/object/fsu:723003/datastream/PDF/download (2019).

Schafer, K. M. et al. Suicidal ideation, suicide attempts, and suicide death among Veterans and service members: a comprehensive meta-analysis of risk factors. Mil. Psychol. 34, 129–146 (2022).

Schafer, K. M., Wilson, E. & Joiner, T. Traumatic brain injury and suicidality among military veterans: the mediating role of social integration. J. Affect Disord. 338, 414–421 (2023).

Resnik, P., Foreman, A., Kuchuk, M., Musacchio Schafer, K. & Pinkham, B. Naturally occurring language as a source of evidence in suicide prevention. Suicide Life Threat Behav. 51, 88–96 (2021).

Shing, H. C., Resnik, P. & Oard, D. W. A prioritization model for suicidality risk assessment. In Proceedings of the Annual Meeting of the Association for Computational Linguistics, 8124–8137 (2020).

Clark, L. A., Watson, D. & Mineka, S. Temperament, personality, and the mood and anxiety disorders. J. Abnorm Psychol. 103, 103–116 (1994).

Breiter, H. C., Aharon, I., Kahneman, D., Dale, A. & Shizgal, P. Functional imaging of neural responses to expectancy and experience of monetary gains and losses. Neuron 30, 619–639 (2001).

Hawes, M. T., Szenczy, A. K., Klein, D. N., Hajcak, G. & Nelson, B. D. Increases in depression and anxiety symptoms in adolescents and young adults during the COVID-19 pandemic. Psychol. Med. 52, 3222–3230 (2022).

Lakhan, R., Agrawal, A. & Sharma, M. Prevalence of depression, anxiety, and stress during COVID-19 pandemic. J. Neurosci. Rural Pr. 11, 519–525 (2020).

Narchal, R. & Mcdavitt, S. A. Loneliness and anxiety sensitivity: understanding behavioural avoidance in the lonely. Acta Psychopathol. 3, 58 (2017).

Cacioppo, J. T. et al. Lonely traits and concomitant physiological processes: the MacArthur social neuroscience studies. Int. J. Psychophysiol. 35, 143–154 (2000).

Heinrich, L. M. & Gullone, E. The clinical significance of loneliness: a literature review. Clin. Psychol. Rev. 26, 695–718 (2006).

Hayward, C. & Wilson, K. A. Anxiety sensitivity: a missing piece to the agoraphobia-without-panic puzzle. Behav. Modif. 31, 162–173 (2016).

Lewinsohn, P. M., Lewinsohn, M., Gotlib, I. H., Seeley, J. R. & Allen, N. B. Gender differences in anxiety disorders and anxiety symptoms in adolescents. J. Abnorm Psychol. 107, 109–117 (1998).

McLean, C. P. & Anderson, E. R. Brave men and timid women? A review of the gender differences in fear and anxiety. Clin. Psychol. Rev. 29, 496–505 (2009).

Pigott, T. A. Gender differences in the epidemiology and treatment of anxiety disorders. J. Clin. Psychiatry 60, 4–15 (1999).

Nakazato, K. & Shimonaka, Y. The Japanese State-Trait Anxiety Inventory: age and sex differences. Percept. Mot. Skills 69, 611–617 (1989).

Ridley, M., Rao, G., Schilbach, F. & Patel, V. Poverty, depression, and anxiety: causal evidence and mechanisms. Science (1979) 370, eaay0214 (2020).

Watterson, R. A., Williams, J. V. A., Lavorato, D. H. & Patten, S. B. Descriptive epidemiology of generalized anxiety disorder in Canada. Can. J. Psychiatry 62, 24–29 (2017).

Brenes, G. A. Age differences in the presentation of anxiety. Aging Ment. Health 10, 298–302 (2006).

Hiswåls, A.-S., Walander, A., Soares, J. & Macassa, G. Employment status employment status, anxiety and depression in a municipal context. Res Health Sci. 2, 12 (2017).

Fisher, S., Reynolds, J. L., Hsu, W. W., Barnes, J. & Tyler, K. Examining multiracial youth in context: ethnic identity development and mental health outcomes. J. Youth Adolesc. 43, 1688–1699 (2014).

Mazza, M. G. et al. Anxiety and depression in COVID-19 survivors: role of inflammatory and clinical predictors. Brain Behav. Immun. 89, 594–600 (2020).

Woodward, S. F. et al. Anxiety, post-COVID-19 syndrome-related depression, and suicidal thoughts and behaviors in COVID-19 Survivors: cross-sectional study. JMIR Form. Res. 6, e36656 (2022).

Dorri, M. et al. Psychological problems and reduced health-related quality of life in the COVID-19 survivors. J. Affect Disord. Rep. 6, 100248 (2021).

Strawn, J. R. et al. The impact of COVID-19 infection and characterization of long COVID in adolescents with anxiety disorders: a prospective longitudinal study. J. Am. Acad. Child Adolesc. Psychiatry, https://doi.org/10.1016/j.jaac.2022.12.027 (2023).

Tversky, A. & Kahneman, D. The framing of decisions and the psychology of choice. Science 211, 453–458 (1981).

Laird, J. E., Lebiere, C. & Rosenbloom, P. S. A standard model of the mind: toward a common computational framework across artificial intelligence, cognitive science, neuroscience, and robotics. AI Mag. 38, 13–26 (2017).

Bemme, D. & Kirmayer, L. J. Global Mental Health: interdisciplinary challenges for a field in motion. Transcult. Psychiatry 57, 3–18 (2020).

Kirmayer, L. J. The politics of diversity: pluralism, multiculturalism and mental health. Transcult. Psychiatry 56, 1119–1138 (2019).

Kpanake, L. Cultural concepts of the person and mental health in Africa. Transcult. Psychiatry 55, 198–218 (2018).

Weine, S. M., Langenecker, S. & Arenliu, A. Global mental health and the National Institute of Mental Health Research Domain Criteria. Int. J. Soc. Psychiatry 64, 436–442 (2018).

Bhugra, D. Sexual variation and mental health. Nord J. Psychiatry 72, S27–S29 (2018).

Bredström, A. Culture and context in mental health diagnosing: scrutinizing the DSM-5 revision. J. Med. Human. 40, 347–363 (2019).

Bari, S. et al. The prevalence of psychotic symptoms, violent ideation, and disruptive behavior in a population with SARS-CoV-2 infection: preliminary study. JMIR Form. Res 6, e36444 (2022).

Vike, N. L. et al. The relationship between a history of high-risk and destructive behaviors and COVID-19 infection: preliminary study. JMIR Form. Res. 7, e40821 (2023).

Viswanathan, V. et al. A quantitative relationship between signal detection in attention and approach/avoidance behavior. Front Psychol. 8, 122 (2017).

Shannon, C. E. & Weaver, W. The Mathematical Theory of Communication Vol. 1, 117 (University of Illinois Press, 1949).

Bari, S. et al. Integrating multi-omics with neuroimaging and behavior: a preliminary model of dysfunction in football athletes. Neuroimage: Rep. 1, 100032 (2021).

Vike, N. L. et al. A preliminary model of football-related neural stress that integrates metabolomics with transcriptomics and virtual reality. iScience 25, 103483 (2022).

Baron, R. M. & Kenny, D. A. The moderator-mediator variable distinction in social psychological research. conceptual, strategic, and statistical considerations. J. Pers. Soc. Psychol. 51, 1173–1182 (1986).

Kenny, D. A. Mediation. http://davidakenny.net/cm/mediate.htm (2021).

Markowitz, H. The utility of wealth. J. Political Econ. 60, 151–158 (1952).

Acknowledgements

We thank Carol Ross, Angela Braggs-Brown, Thomas M. Talavage, Eric Nauman, and Marc Cahay at College of Engineering and Applied Science, University of Cincinnati who significantly impacted the transfer of research funding to University of Cincinnati (UC), allowing this work to be completed. Funding for this work was provided by Office of Naval Research award N00014-21-1-2216 [H.C.B.], Office of Naval Research award N00014-23-1-2396 [H.C.B. (contact), A.K.K.] and Jim Goetz donation to the University of Cincinnati, College of Engineering and Applied Science (H.C.B.).

Author information

Authors and Affiliations

Contributions

Study concept/design: H.C.B., A.K.K. and S.B. Acquisition of original data: H.C.B., B.W.K., S.B., N.L.V., S.L., L.S., M.B. and A.K.K. Coding of statistical tools: S.B. and B.W.K. (with guidance from H.C.B. and A.K.K.). Analysis of data: S.B. and B.W.K. (with guidance from H.C.B. and A.K.K.). Interpretation of data: S.B., H.C.B. and A.K.K. (with input from B.W.K., N.L.V., S.L., L.S., B.W.K., M.B. and N.M.). Statistical assessment: S.B. and B.W.K. (with guidance from H.C.B. and A.K.K.). Authored original draft: S.B. and H.C.B. Generated figures: S.B., B.W.K. and H.C.B. Revision of manuscript for content: all authors. All authors approved the final version of the paper for submission.

Corresponding author

Ethics declarations

Competing interests

S.B., B.W.K., N.V., S.L., L.S., M.B., A.K. and H.C.B. submitted a provisional patent “Integrating Cognitive Science with Machine Learning for High Accuracy Prediction of Anxiety Disorders”. The provisional application is led by University of Cincinnati (Office of Innovation) in conjunction with Northwestern University, Application # 63/648,898. The other authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Bari, S., Kim, BW., Vike, N.L. et al. A novel approach to anxiety level prediction using small sets of judgment and survey variables. npj Mental Health Res 3, 29 (2024). https://doi.org/10.1038/s44184-024-00074-x

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s44184-024-00074-x

- Springer Nature Limited