Abstract

Predictive coding theory suggests the brain anticipates sensory information using prior knowledge. While this theory has been extensively researched within individual sensory modalities, evidence for predictive processing across sensory modalities is limited. Here, we examine how crossmodal knowledge is represented and learned in the brain, by identifying the hierarchical networks underlying crossmodal predictions when information of one sensory modality leads to a prediction in another modality. We record electroencephalogram (EEG) during a crossmodal audiovisual local-global oddball paradigm, in which the predictability of transitions between tones and images are manipulated at both the stimulus and sequence levels. To dissect the complex predictive signals in our EEG data, we employed a model-fitting approach to untangle neural interactions across modalities and hierarchies. The model-fitting result demonstrates that audiovisual integration occurs at both the levels of individual stimulus interactions and multi-stimulus sequences. Furthermore, we identify the spatio-spectro-temporal signatures of prediction-error signals across hierarchies and modalities, and reveal that auditory and visual prediction errors are rapidly redirected to the central-parietal electrodes during learning through alpha-band interactions. Our study suggests a crossmodal predictive coding mechanism where unimodal predictions are processed by distributed brain networks to form crossmodal knowledge.

Similar content being viewed by others

Introduction

Predictive-coding theory postulates that the brain is constantly predicting upcoming events based on an internal model established by learning the statistical regularity in sensory experience1,2. This is achieved by a bidirectional and hierarchical signaling cascade, where the top-down prediction represents the information inferred from prior knowledge to explain away the input, and the bottom-up prediction error is generated to refine the prediction when there is an unexplained discrepancy. This coding is thought to be the basis for diverse cognitive processes, such as perceptual inference, reinforcement learning, and motivational attention3,4,5.

Considerable evidence of predictive coding has been provided within single sensory modalities. For example, the mismatch negativity (MMN), a surprise response to oddball stimuli, has been interpreted as a prediction-error signal in the domains of audition6,7,8, vision9,10 and tactility11,12. The hierarchical organization of the unimodal predictive coding has also been examined, for example, by the local-global oddball paradigm with auditory13 and visual stimuli14. In this paradigm, regularities were controlled at the stimulus-to-stimulus level (local regularity) and the multi-stimulus sequence level (global regularity). The hierarchical prediction-error signals have been identified in both monkeys and humans15,16,17, and hierarchical prediction signals have been extracted with a quantitative predictive-coding model18.

To further generalize the predictive-coding theory, it is critical to verify how predictive coding can operate across multiple sensory modalities, particularly, how prior knowledge can be transferred across sensory domains. During audiovisual events, it has been found that visual information can facilitate the early auditory response, suggesting a prediction established by the crossmodal transition from the visual to auditory domains and thus speeding up the process of upcoming auditory input19,20. Furthermore, the concept of a crossmodal prediction error comes into play once a crossmodal transition is violated. For instance, a visual stimulus V followed by an auditory stimulus A is learned, and a crossmodal prediction error is generated when this expected transition is disrupted by replacing A with a different auditory stimulus A’. This error, which arises when visual information is inadequate to infer upcoming auditory stimuli, serves to explain phenomena such as the McGurk fusion and combination of audiovisual speech19,21,22. However, it remains unclear how crossmodal transitions are predicted at different hierarchical levels, and how sensory information from different modalities converges along the hierarchy that leads to the emergence of crossmodal prediction errors.

To address this, we use a crossmodal audiovisual local-global paradigm in which auditory and visual stimuli are delivered one at a time in sequences, and the unimodal and crossmodal transitions are controlled at two hierarchical levels of temporal regularities. By delivering stimuli one at a time, we can independently control the occurrences of both unimodal and crossmodal transitions, which cannot be done if both auditory and visual stimuli are presented simultaneously. To examine how the brain learns and represents unimodal and crossmodal transitions at the two levels, we record electroencephalogram (EEG) and analyze the data with a model-fitting approach18. Three predictive-coding models are tested: one with auditory and visual processes not integrated, one with audiovisual integration at the global level, and one with audiovisual integration at both the local and global levels. The best-fitting model reveals that audiovisual integration occurs at both levels, indicating a convergence of the auditory and visual information to create crossmodal predictions, even at the lower level of stimulus-to-stimulus transitions. We further show that the auditory and visual prediction-error signals are rapidly re-routed from the electrodes around the contralateral temporal and parieto-occipital areas to the electrodes around the central-parietal area during learning via the alpha-band interactions. Our findings demonstrate that the principles of hierarchical predictive coding, initially understood in the context of unimodal processing, can also comprehensively describe the learning and encoding of multilevel statistics observed in crossmodal transitions, with the engagement of more widespread brain networks.

Results

A crossmodal audiovisual local-global oddball paradigm

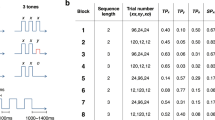

We designed a crossmodal audiovisual local-global oddball paradigm in which crossmodal transitions were created between a tone (denoted as A) and a checkerboard (denoted as V). These two stimuli were arranged to create four sequences: AAA, AAV, VVV, and VVA. Within each sequence, all stimuli were presented for 200 msec with a 300 msec stimulus onset asynchrony. A random interval of 1600–1900 msec was inserted between the offset of one sequence and the onset of the next sequence (Fig. 1a). Four sequence blocks were created, each one with 120 trials of stimulation sequences but with distinct predictability in the crossmodal transitions. In each block, a frequent sequence (100 trials) and an infrequent sequence (20 trials) were assigned, with the first two stimuli in both sequences being of the same modality and the last being of different modalities (Fig. 1b). Each block was presented twice: once with both the visual and auditory stimuli delivered from the left side, and once with both stimuli delivered from the right side (Fig. 1a). The purpose of this lateral delivery was to enable post-hoc confirmation of the lateralization in sensory responses, such as whether EEG responses to an image in the left visual field would appear mostly at the electrodes around the right occipital areas (we called these “right occipital electrodes” based on the areas they covered). Participants were asked to pay attention to the stimuli while maintaining eye fixation on a white dot.

a Demonstration of the left- and right-side stimulation delivery. The white dot represents the fixation. The visual stimuli are displayed on left or right relative to the fixation, and the auditory stimuli are presented on the left or right speaker. ISI: inter-stimulus interval. b Configuration of trial number and trial types in the four blocks. Each block was presented twice. A represents a tone and V represents a green-and-black checkerboard. A and V are used to form three-stimulus sequences.

During the experiment, 64-channel EEG signals were recorded, and no task-related behavioral responses were required. The EEG data were preprocessed to reduce artifacts and impact of volume conduction (see details in Methods). For each trial, channel, and participant, we quantified the event-related spectral perturbation (ERSP) by calculating the time-frequency representation with baseline normalization (the decibel method; baseline: –0.2 to 0 s, relative to the first stimulus). In trials where stimulation was applied to the left side, the channel assignments for ERSP were mirrored from left to right. This mirroring aimed to align the sensory responses with trials where stimulation was delivered to the right side and ensured the left hemisphere to serve as the contralateral side in both scenarios. After the mirroring, the ERSPs were then averaged across trials for each trial type in each block, and for each participant and each channel.

Univariate analysis of neural responses for audiovisual integration

First, we used a univariate analysis to evaluate whether audiovisual integration occurred in our crossmodal paradigm. Importantly, we define audiovisual integration as the neural processes where auditory and visual stimuli influence each other23,24, without necessarily converging into a singular higher-level concept, such as the audiovisual speech perception25. Under this definition, if integration occurred, its degree would be different between Blocks 1 and 2 due to different numbers of A-to-V transitions. Therefore, the neural responses to AAA would differ in these two blocks. Similarly, the neural responses to VVV would differ in Blocks 3 and 4 due to different numbers of V-to-A transitions.

To evaluate this, we contrasted the averaged ERSPs: AAA in Block 1 – AAA in Block 2, and VVV in Block 3 – VVV in Block 4. We first identified significant contrast responses across participants for each channel by bootstrap analysis (n = 30 participants, 1000 resamples, alpha = 0.05, two-tailed, with false discovery rate correction). An example of the significant contrast response at channel Pz is shown in Fig. 2a (examples from other channels Fcz, C3 and Pz are shown in Supplementary Fig. 1). For each of the two contrasts, we calculated the overall significant contrast responses by averaging significant values across all channels, replacing non-significant ones with zeros. From the overall significant contrast responses (shown in Fig. 2b), we observed that the AAA contrast exhibited positive differences in the theta and gamma frequency bands, which were followed by negative differences in the alpha and beta bands. Conversely, the VVV contrast displayed only negative differences in the alpha and beta bands. Overall, significant negative alpha and beta power responses were identified in both contrasts.

a Example responses of AAA in Blocks 1 and 2 at channel Pz, and their contrast. Time zero represents the onset of the last stimulus of a sequence. The three black vertical dashed lines represent the onsets of three stimuli of a sequence. The black horizontal dashed lines delineate the ranges of theta (4–8 Hz), alpha (8–13 Hz), beta (13–30 Hz) and gamma (30–100 Hz) bands. The black contours represent significant differences. b The significant contrast responses averaged across all channels in two contrasts. c The contrast responses were averaged across time points after the last stimulus and frequency binds for four bands for each contrast. The averaged responses were tested, and the white triangles represent significant differences.

To further evaluate significant differences in EEG topography, we averaged the contrast responses across time points (0–0.9 s from the last stimulus onset) and within specific frequency bands—theta (4–8 Hz), alpha (8–13 Hz), beta (13–30 Hz), and gamma (30–100 Hz)—for each contrast, channel, and participant. Then, we identified significant responses across participants for each channel by permutation analysis (n = 30 participants, 1000 permutations, alpha = 0.05, two-tailed, cluster-based correction). For the AAA contrast (Fig. 2c), significant differences primarily appeared in the alpha and beta bands at the parieto-occipital electrodes, indicating that a region typically associated with visual processing was active during the processing of purely auditory stimuli. Conversely, for the VVV contrast, significant differences were primarily observed in the alpha band at both the frontal and parieto-occipital electrodes, and in the beta band at the central and parieto-occipital electrodes. This implies that visual activations may move toward the frontal and central scalp distributions in the crossmodal context.

To further confirm the presence of audiovisual integration in the paradigm, we compared the responses to AAA from the crossmodal paradigm to those from a classical auditory local-global oddball paradigm (see details in Methods). The results, depicted in Supplementary Fig. 2, reveal a consistent disparity in the alpha band within the parieto-occipital region, similar to what is shown in Fig. 2c.

Three hypotheses of crossmodal transitions across hierarchy

After confirming the integration of audio and visual stimuli in our paradigm, we further investigate how the crossmodal knowledge can form across hierarchical levels. In our paradigm, the regularities of crossmodal transitions can be established at two hierarchical levels within a single block. The local regularity is established based on the stimulus-to-stimulus transition probability (TP), defined as the conditional probability of a particular stimulus occurring, given the preceding stimulus. We denoted the probabilities of the crossmodal A-to-V and V-to-A transitions as TPA→V and TPV→A, respectively. On the other hand, the probabilities of the unimodal A-to-A and V-to-V transitions were denoted as TPA→A and TPV→V, respectively. At a higher level, the global regularity is established based on the sequence probability (SP), defined as the probability of sequences AAA, AAV, VVV, or VVA within a block. These were denoted as SPAAA, SPAAV, SPVVV, and SPVVA, respectively.

To understand how the brain processes local and global regularities in the crossmodal sequences, we first need to know how it integrates auditory and visual information. For instance, if no audiovisual integration occurs during the processing of two consecutive stimuli (local integration), then the probabilities of A-to-V and V-to-A transitions cannot be determined, resulting in TPA→V and TPV→A being zero. Similarly, without audiovisual integration at the sequence level (global integration), AAV and VVA cannot be recognized as single sequences, and therefore, SPAAV and SPVVA are zero. Here, we present three hypotheses on how the brain integrates auditory and visual information at the local and global levels, which were subsequently verified using EEG data. To elucidate these hypotheses, we use the processing sequences of AAA and AAV as illustrative examples (Fig. 3a).

a Three hypotheses of how crossmodal transitions are considered in probabilities, shown with the example of AAA and AAV sequences. The transition probability is calculated with the transitions between the stimuli or omission. The black curved arrow represents the transition between the stimuli with the same modality or between a stimulus and omission, the orange curved arrow represents the transition between the stimuli with different modalities. The sequence probability is calculated with the occurrences of the sequence types. The black rectangle rim represents the sequence type composed of an identical modality, while the orange rectangle rim represents the sequence type composed of different modalities. The streams of audition and vision represent the independent processes of auditory and visual stimuli. The stream of audiovisual integration highlighted in the orange solid line represents that stimuli of the two modalities are processed together; that is, the crossmodal transitions can be learned. b The model structures are built upon the three hypotheses. Each model consists of two sensory streams (A and V) and three levels (S, 1, and 2). For neuronal populations (white diamonds), A0, A1, A2 were denoted as populations in the auditory stream at the level S, level 1, and level 2, respectively. V0, V1, V2 were denoted as populations in the visual stream at the level S, level 1, and level 2, respectively. Prediction signals (green solid downward arrows), prediction error signals (blue solid upward arrows), and sensory inputs (black solid upward arrows) are shown. The horizontal orange bar represents the integration between two streams. c The model values of the three hypotheses. The example of the model values for AAV and AAA sequences in Block 1 are shown. The values are calculated when the last stimulus of the sequence (underlined) is received. The dashed arrow represents the signal with a zero value. d The contrast values in Block 1 (first row) are obtained by comparing the model values of AAV to AAA shown in panel a. The green solid lines are removed since no prediction signals are left after the contrast. The two white diamonds being overlaid represent the neuronal populations grouped in the integration layer, and the values are combined by summation when the signals are sent from and to an integration layer. e The model contrast values of three models in four blocks.

Hypothesis 1: Auditory and visual information are processed separately, but instead are processed independently within their respective auditory and visual streams. Since the auditory and visual streams are independent from each other, the auditory stream perceives AAA and AAV as AAA and AA, respectively (Fig. 3a). In this scenario, TPA→V equals zero, and TP is determined based on the A-to-A transition (TPA→A) and A-to-O transition (TPA→O), where O represents omissions at the end of the sequence. It is important to note that the A-to-O transition needs to be considered, since at the stimulus-to-stimulus transition level, the last A in a sequence will continue to predict the next stimulus item (including omission)18. This need can be illustrated by a simplified example comparing two sequences without a global structure: AAAAAAAA and AAAAOAAA. If omissions are not considered as events, TP will be the same for both sequences. However, the anticipation of the upcoming A tone clearly differs between these two scenarios because the omission is anticipated in the second sequence. Similarly, SPAAV equals zero, and SP applies only to sequences AAA and AA (thus SPAAA and SPAA are nonzero). This hypothesis presents an unlikely scenario, and it serves as a benchmark for other hypotheses.

Hypothesis 2: Auditory and visual information are processed independently at the local level but are integrated at the global level. In this scenario, auditory and visual information are processed separately to determine TP, as in Hypothesis 1, but are processed jointly to determine SP. Consequently, AAA and AAV are considered as sequences (thus SPAAA and SPAAV are nonzero), and there is no sequence AA (SPAA is equal to zero).

Hypothesis 3: Auditory and visual information are integrated at both the local and global levels. In this scenario, auditory and visual information are combined to determine both TP and SP. Consequently, the A-to-V transition is now considered, making TPA→V nonzero. SP is determined in the same manner as in Hypothesis 2.

Therefore, the TP and SP values vary not only across the blocks but also across the three hypotheses, as detailed in Table 1.

Quantitative models for evaluating the hypotheses

In order to evaluate these hypotheses using EEG data, we need to quantify the interplay among sensory, prediction, and prediction-error signals within the varying configurations of audiovisual integration proposed in each hypothesis. To achieve this, we adapted a hierarchical predictive-coding model originally designed to elucidate the auditory local-global paradigm15,18, and incorporated varying interactions between auditory and visual streams. Each of the three hypotheses was represented by a corresponding model, each featuring unique structural elements and signal flows (Fig. 3b).

All three models consist of two key structures: the auditory and visual sensory streams (A and V streams), and three hierarchical levels (Levels S, 1, and 2). Level S receives sensory input, and Levels 1 and 2 encode the local and global regularities, respectively. Using the A stream as an example, the neuronal population at Level S (denoted as A0) receives an auditory input (denoted as SA) and a top-down first-level prediction of that input (denoted as P1A) from Level 1. When the stimulus is A, SA = 1, and otherwise, SA = 0. The first-level prediction error (denoted as PE1A), which is the absolute difference between SA and P1A, is sent to Level 1. At Level 1, the neuronal population (denoted as A1) receives PE1A and a second-level prediction (denoted as P2A) from Level 2. The second-level prediction error (denoted as PE2A), which is the absolute difference between PE1A and P2A, is sent to the neural population at Level 2 (denoted as A2). The V stream functions similarly, and there are first-level and second-level predictions and prediction errors (denoted as P1V, P2V, PE1V, and PE2V). SV is set to 1 when the stimulus is V and 0 otherwise.

The key distinction among the three models is whether auditory and visual streams are integrated at Level 1 (local level) and Level 2 (global level) (Fig. 3b):

Model 1: To model Hypothesis 1, which proposes no audiovisual integration, this model features discrete auditory and visual streams, and the auditory and visual signals operate independently.

Model 2: To model Hypothesis 2, which proposes audiovisual integration at the global level, this model incorporates an integration layer at Level 2 (shown as a horizontal orange bar). Within the integration layer, the auditory and visual streams are “linked”, allowing for crossmodal sequences to be considered for SP.

Model 3: To model Hypothesis 3, which proposes audiovisual integration at both the local and global levels, this model incorporates integration layers at both Levels 1 and 2. The integration layer at Level 1 allows for crossmodal A-to-V and V-to-A transitions to be considered in TP.

Theoretical values of prediction and prediction-error signals

Next, we examined the steady-state values of that each signal would reach once TP and SP have been learned. Importantly, our analysis focused only on the signals generated by the last stimulus in each sequence, since all sequences in a block shared the same first two stimuli and crossmodal transitions occurred only at the last stimulus. We assessed the steady-state values of the prediction signals (P1A, P2A, P1V, and P2V) for the last stimulus with the principle outlined by Chao et al.18: The optimal value for each prediction signal is determined by minimizing the mean squared prediction error within the corresponding neural population. Utilizing this principle, once determining TP and SP, we can derive the values for all relevant prediction and prediction-error signals for each sequence (see further details in Methods).

To further elucidate model values in three models, we use sequences AAV and AAA in Block 1 as illustrative examples (Fig. 3c) (comprehensive model values for all blocks can be found in Supplementary Fig. 3). Here, we highlight several key points:

-

The values of the prediction signals are identical for sequences AAV and AAA. This is because the prediction of the upcoming last stimulus does not depend on the identity of the last stimulus.

-

In Models 1 and 2, local audiovisual integration is absent, and TPA→V = 0. Therefore, the last stimulus V in AAV is unpredictable based on stimulus transitions, and local prediction P1V = 0.

-

In Model 1, global audiovisual integration is absent, and SPAAV = 0. Therefore, the last stimulus V in AAV is unpredictable based on sequence structure, leading to global prediction P2V = 0.

-

In the integration layer where two streams are linked, different prediction signals are sent down in the auditory and visual streams to minimize their respective prediction errors. For example, in Model 2, the values of P2A and P2V differ since they aim to minimize different prediction errors (i.e., PE2A and PE2V, respectively).

To extract the prediction error, we conducted within-block contrasts: contrasting the model values between sequences AAV and AAA (AAV – AAA) in Blocks 1 and 2, and the model values between sequences VVA and VVV (VVA – VVV) in Blocks 3 and 4. These contrasts eliminated the prediction signals, which are identical for both sequences within a block, and contained only the contrast values of sensory and prediction-error signals. An illustrative example of these contrast values in Block 1 is shown in Fig. 3d.

For the integration layers, we propose that the neuronal populations from both streams would spatially cluster in the brain to facilitate effective information integration. This clustering would resemble the specialized subareas for processing specific modalities in the multisensory superior temporal sulcus26,27. In the model, neuronal populations are grouped across both auditory and visual streams to represent this clustering (Fig. 3d). This is especially crucial in Model 3. In Model 3, PE2A and PE2V are collectively transmitted from grouped neuronal populations at Level 1 to grouped neuronal populations at Level 2. Consequently, we combined them into a single composite signal, PE2A/V ( = PE2A + PE2V), based on the assumption that they cannot be spatially separated due to the limited resolution of EEG.

The comprehensive contrast values for all blocks are shown in Fig. 3e. Additionally, we constructed alternative models featuring modified elements related to integrated neuronal populations and signals (see details in Supplementary Fig. 4). These alternative models were subsequently evaluated. Moreover, we calculated the TP under the assumption that the last stimulus (e.g., last A in AAA) will continue to predict the next stimulus item (transition from the last A to the omission). We also tested models with different TP calculations: (1) excluding A-to-O transitions in AAA and V-to-O transitions and (2) considering the overall occurrences of a single stimulus rather than the transition between two stimuli.

Model evaluation with EEG data

Next, we tested the three hypotheses by quantifying how well the modeled contrast values (as in Fig. 3e) fit the EEG data. To capture prediction-error signals in accordance with the model shown in Fig. 3d, we contrasted the averaged ERSPs from different trial types: AAV – AAA in Blocks 1 and 2, and VVA – VVV in Blocks 3 and 4. Significant contrast responses were identified as those significantly different from zero using bootstrap analysis (n = 30 participants, 1000 resamples, alpha = 0.05, two-tailed, with false discovery rate correction), and non-significant responses were replaced with zeros. An example of a significant contrast response is shown in Fig. 4a, b shows the overall significant contrast responses, averaged across all channels for each block. These responses were mainly observed after the last stimulus, exhibiting varying patterns across blocks (also see Supplementary Result 1 for further details). We then pooled all the significant contrast responses into a tensor with three dimensions: Block (4 blocks), Channel (60 channels), and Time-Frequency (450 time points × 100 frequency bins).

a Example responses of AAV and AAA in Block 1 at channel Pz, and their contrast. The same fashion is the same as Fig. 2a. b The significant contrast responses averaged across all channels in the four blocks.

To fit the 3D tensor with the models, we used a decomposition approach to break down the tensor into various components of sensory and prediction-error signals, based on their corresponding contrast values in the models. For example, in the case of Model 1, we restricted the tensor to break down into 6 designated components: SA, SV, PE1A, PE1V, PE2A, and PE2V. Each of these components was activated across all four blocks, precisely matching the activation profiles shown in Fig. 3e. This was achieved by fixing the Block dimension to the modeled contrast values in the parallel factor analysis (PARAFAC), a high-dimensional decomposition method28,29. We performed PARAFAC 50 times, each with a random initialization.

Following this, we assessed which model offered a better explanation of the data. First, we used the core consistency diagnostic (CORCONDIA) to quantify the interaction between components29. A higher CORCONDIA indicates less interaction between the components, indicating a more appropriate fit for the decomposition28,30. In Fig. 5a, CORCONDIA was found to be 0 ± 0.1% in Model 1, 14.8 ± 8.8% in Model 2, and 83.3 ± 0.6% in Model 3 (mean ± standard deviation, n = 50 random initializations). Model 3 showed a significantly higher CORCONDIA value compared to Model 1 (n = 50 random initializations for each, two-sample t test, alpha = 0.05, two-tailed, Bonferroni correction) (t[98] = 1005.1, p < 1e–16) and Model 2 (t[98] = 35.29, p < 0.001). Additionally, we assessed the model’s goodness of fit using the Bayesian Information Criterion (BIC). A lower BIC value suggests a better balance between the model’s fit and its complexity. Model 3 showed the lowest BIC value compared to Model 1 (t[98] = −8.66, p < 1e–14) and Model 2 (t[98] = −9.38, p < 1e–15). Furthermore, Model 3 also outperformed the other alternative models outlined in Supplementary Fig. 4 (see Supplementary Table 1) and models with different TP (Supplementary Table 2).

a The core consistency diagnostic (CORCONDIA) and Bayesian information criterion (BIC) gained with data fitting 50 times respectively in each of the three models. The mean and standard deviation are shown with a black error bar, and fits are shown with circles. b The time-frequency representation in four blocks at channel FCz, P1, and C4 are reconstructed from Model 3. The actual responses are the significant contrast responses, as the comparison to the reconstructed representations.

These results consistently indicated that Model 3 was the best-fitting model. To further substantiate this, we reconstructed the significant contrast responses using the 5 components derived from Model 3 (see details in Methods) and demonstrated that Model 3 was effective in capturing the diverse responses across different channels and blocks (Fig. 5b).

Hierarchical prediction-error signals extracted from Model 3

Figure 6a shows the 5 components in Model 3 (SA, SV, PE1A, PE1V, and PE2A/V) extracted from PARAFAC. Each component extracted from PARAFAC is represented by the score or loading matrix (activation values) along the original dimensions: Block, Channel, and Time-Frequency. The first dimension was anchored to the four modeled contrast values. The remaining two dimensions captured how the component was activated across 60 channels (Channel), and across 450 time points and 100 frequency bins (Time-Frequency), describing its spatio-spectro-temporal signature.

a The sensory components (SA and SV) and the prediction-error components (PE1A, PE1V and PE2A/V), showing the model contrast values across four blocks, the topography, and the time-frequency representation. The time-frequency representations are normalized to equalize the strengths for visualization only by dividing the responses with the standard deviation of the corresponding frequency band. b The frequency profile of the five components. The solid vertical lines represent the range of the frequency bands, labeled on top (T: Theta; A: Alpha; B: Beta; G: Gamma). c The temporal profile of the gamma band. The peak latencies of the extracted components are shown.

SA, the sensory signal in the auditory stream, was found at the bilateral temporal and central electrodes with increases in the theta (4–8 Hz), alpha (8–13 Hz), beta (13–30 Hz), and gamma (30–100 Hz) bands after the last stimulus. SV, the sensory signal in the visual stream, was found at the contralateral parieto-occipital electrodes with increases in the theta and gamma bands after the last stimulus and then decreases in the alpha, beta, and gamma bands.

PE1A, the first-level prediction-error signal in the auditory stream, was found at the bilateral temporal and central electrodes with increases in the theta, alpha, beta, and gamma bands and then a decrease in the gamma band. PE1V, the first-level prediction-error signal in the visual stream was found at the bilaterally parieto-occipital electrodes with increases in the theta band and gamma bands and then decreases in the alpha and beta bands. PE2A/V, the second-level prediction-error signal in the integrated stream, was found at the central-parietal electrodes with an increase in the gamma band and then decreases in the theta, alpha, and gamma bands, and a long-lasting decrease in beta band oscillations.

Spatially, SV was activated more in the contralateral area due to the lateral stimulation delivery. On the other hand, SA was activated in both hemispheres, which could result from less focal sound delivery by the speakers compared to headphones. For the prediction errors, PE1V appeared in the bilateral scalp distribution and PE1A appeared at the central electrodes, suggesting that the sensory signals located at the electrodes around the contralateral sensory areas now propagated to the central electrodes and both contralateral and ipsilateral electrodes. Spectrally, we measured the peak value for each component in each frequency band the 0–0.9 s time window (Fig. 6b). All five components exhibited higher values in the gamma band. Temporally, we focused on the dynamics of gamma-band signals by averaging the values across frequency bins within the gamma band for each component (Fig. 6c). The peak latencies for SA, SV, PE1A, PE1V, and PE2A/V were 136 msec, 192 msec, 332 msec, 324 msec, and 328 msec, respectively. These latencies suggested a sequential bottom-up signal propagation from sensory input to prediction error, in line with signal flows in our model. Also, we could also observe the first-level prediction-error components inherit spatial and spectral characteristics of the sensory components, e.g., SA and PE1A both contained positive power responses in all frequency bands after the last stimulus onset in the bilateral temporal and central electrodes. Peak latencies for other frequency bands are provided in Supplementary Fig. 5.

Crossmodal integration during learning

The PARAFAC topographic results suggested that the unimodal prediction-error signals, PE1A and PE1V, were redirected to the central-parietal electrodes to generate the crossmodal prediction-error signal PE2A/V. To explore how this integration connectome was established during learning, we assessed the functional connectivity between the electrodes involved in prediction-error signaling across different stages within a block. For each component (PE1A, PE1V, or PE2A/V), we selected the five channels exhibiting the highest values based on the PARAFAC results in the Channel dimension. This resulted in 25 connections between PE1A and PE2A/V. Meanwhile, there were only 24 connections between PE1V and PE2A/V, since there was one channel shared between them (Fig. 7a). To assess learning, we divided the trials within a single block into 11 continuous time windows. Trials from each of these windows were then grouped across all four blocks.

a The channels selected from the prediction-error components: FC1, FCz, C1, C2 and Cz for PE1A (blue dots), P7, PO3, PO5, PO7 and O1 for PE1V (green dots), and P1, P2, Pz, PO3 and POz for PE2A/V (orange dots). The 25 connections are paired between the channels from PE1A and PE2A/V (blue solid lines). The 24 connections are paired between the channels from PE1V and PE2A/V (green solid lines). b Examples of the mean pairwise phase consistency for the connections between PE1A and PE2A/V in three time windows in one participant. c For each window, frequency band, and each pair of connected components, the mean and standard deviation are shown with a black error bar, and individuals are shown with circles.

To measure spectral functional connectivity, we used pairwise phase consistency (PPC), a method measuring phase synchronization between two signals across trials while minimizing bias related to the number of trials31. For each connection, time window, and participant, we calculated PPC values and then averaged them across the relevant connections (either 25 or 24, depending on the components involved). Fig. 7b shows examples of these averaged PPC values for the connections between PE1A and PE2A/V across three time windows in one participant. Then, these averaged PPC values were further averaged across frequency bins within four distinct frequency bands (theta, alpha, beta, and gamma) and across time points (0–0.9 s after the last stimulus). For the connections between PE1A and PE2A/V, we compared the averaged PPC values across adjacent time windows for each frequency band to illustrate changes in functional connectivity throughout the learning stages (Fig. 7c). Similarly, for connections between PE1V and PE2A/V, the averaged PPC values were compared to depict changes of functional connectivity.

The pairwise comparisons revealed a significantly positive difference in the alpha band between PE1A and PE2A/V (n = 30 participants, 1000 resamples, alpha = 0.05, two-tailed, Bonferroni correction). Significantly positive differences were observed in the theta and alpha bands between PE1V and PE2A/V. These results showed that the alpha-band interactions between the central-parietal electrodes and the electrodes representing unimodal prediction-error signals gradually strengthened during learning, suggesting that the central-parietal electrodes gradually emerged as a hub for integrating crossmodal information.

Discussion

We combined a crossmodal experimental paradigm with a hypothesis-driven analysis to examine hierarchical crossmodal predictive coding for sequence processing. We demonstrated that hierarchical crossmodal predictions can be established by learning TP and SP in a higher-order brain region that integrates information from different sensory streams. Our finding provides a generalized framework for crossmodal predictive coding and reveals neural signatures of its representation and learning.

From the predictive coding perspective, convergence of sensory inputs along the hierarchy plays a critical role to minimize prediction errors. To demonstrate this, we calculated the theoretical values of the overall prediction error for each block in each model; that is the minimum of mean-squared prediction error, the principle in model value calculation. For each block (e.g., Block 1), the squared values of PE1A, PE1V, PE2A, and PE2V of each trial type (e.g., AAV or AAA) were weighted by the probability of its occurrence and then summed together. This summed value represents the overall prediction error, which was the same between Blocks 1 and 3, since the model values were the same in these two blocks with just the two sensory streams swapped. Similarly, the overall prediction error was the same between Blocks 2 and 4. The overall prediction errors in Model 3 were found to be the smallest among the three models (Supplementary Fig. 6). Convergence early at functional level of stimulus-to-stimulus transitions enables the brain to achieve the smallest sum of prediction errors, which is in line with the general framework of predictive coding theory.

The central-parietal electrodes, which showed minimal overlap with the extracted auditory and visual sensory signal areas (i.e., SA and SV), may facilitate the integration of crossmodal information, as suggested by our activity and connectivity analyses. The connectivity strength between the central-parietal electrodes and the electrodes of two unimodal prediction-error signals increased during learning, particularly in the alpha frequency. We also observed reduced alpha-beta power when comparing crossmodal and unimodal contexts, as well as during the processing of unimodal sequences within crossmodal contexts. This aligns with the decreased beta-band power reported in previous studies on multimodal tasks32,33. Additionally, the PE2A/V component exhibited a prolonged negative deflection in the beta band. The desynchronization of the alpha-beta oscillation also suggests a top-down transmission to update predictions for further error minimization15,34,35. These findings imply that the central-parietal electrodes, as a hub, receives audiovisual information and updates crossmodal predictions, carried via alpha-beta oscillations. Furthermore, gamma oscillations are thought to facilitate bottom-up transmission of prediction errors from lower to higher neuronal layers36,37,38. Additionally, the temporal sequence of peak gamma oscillations observed in SA and SV, followed by those at the central-parietal electrodes of PE2A/V, suggests a directional flow of information from sensory to higher-order regions over time.

However, we also observed theta and alpha oscillations evoked around the onset of gamma oscillations in the components of both sensory inputs and unimodal prediction errors. In contrast, reduced theta and alpha oscillations were observed in the components of crossmodal prediction errors, indicating less coupling between the low and high frequency oscillations. Evidence of phase-amplitude cross-frequency coupling has been discovered in connections among different brain regions and large-scale networking between regions such as the hippocampus and prefrontal areas39,40,41,42. Further advanced recording and methodologies such as Electrocorticography (ECoG) in the hippocampus area and whole cortical surface can be combined with cross-frequency coupling analysis to verify if lower- and higher-hierarchy crossmodal processing involves different ranges of distributed networks.

In addition, while central-parietal activation in EEG may reflect activity in the multisensory superior temporal areas in imaging, intracranial EEG, and MEG studies in the crossmodal context43,44,45, further recording modalities should be considered to verify where crossmodal predictive coding occurs across the hierarchical structure. Moreover, studies have shown that convergence of sensory processing across modalities can occur already in subcortical and lower cortical areas. For example, inferior and superior colliculus have both been found to receive inputs of different sensory modalities46,47. This could also be achieved by interconnections between sensory-specific primary cortices, which have been identified between the auditory and visual areas, and between auditory and somatosensory areas23,24,27,48. We suggest that early convergence could occur when crossmodal transitions are processed at a short timescale, such as visual speech occurring a few hundred msec ahead and leading to the prediction of acoustical input of speech spectrograms and phonetic features49. When considering complex sequences with multiple time scales, probability computations would be operated at a more distributed brain network. Our study used arbitrary stimuli to create two-level temporal regularities, while real-world stimuli can consist of more than two timescales of regularities, e.g. in music and language50. It is needed to further reveal this central-parietal hub or a larger brain network processing complex sequences with natural stimuli and thus generalize crossmodal predictive coding in the multi-level hierarchy.

There is still room for further investigation of the hierarchical crossmodal predictive coding model, including probability encoding, neural representations of crossmodal prediction signals, trial-by-trial prediction updates, functional causality between hierarchical prediction-error signals, and interpretation of atypical audiovisual integration. First, the current model’s TP and SP are specifically designed for the local-global paradigm. There is no explicit mechanism explaining why and how these two probability types are encoded. A more systematic and biologically plausible approach would be to encode probabilities conditioned on histories of different timescales. For example, ranging from lower-level probabilities like p(A | A) (equivalent to TPA→A), to higher-order probabilities like p(A | AA) (equivalent to SPAAA), p(A | AAA), and so on. To further validate this approach, a paradigm with more diverse stimulus sequences is needed.

Second, crossmodal prediction signals were not estimated directly. We modeled the prediction-error signals when established crossmodal predictions were violated, and degrees of prediction errors also reflected crossmodal predictability. To extract neural representations of prediction signals in order to construct a comprehensive predictive coding network, we would need to break the interdependence between crossmodal predictions and crossmodal prediction errors by considering their timing delay. That is, a prediction is activated before input is received and then a prediction error is evoked after input is received.

Third, while we could provide some estimate of the learning effect by dividing EEG trials in one block into several segments, our model is limited by design to steady-state values, and trial-by-trial dynamics of crossmodal prediction updates over time remains unknown. Although Hierarchical Gaussian Filter is a way to measure the dynamics of how prediction errors are optimized at different levels51,52, the hierarchical structure cannot be shaped when different timescales of statistical regularities are learned and affect hierarchical interactions. Still, our model with the hierarchical structure and analyses could be greatly improved with the explicit inclusion of learning over time.

Fourth, our study used PPC to investigate connections among the prediction-error components, without directional information. To address it, we could use directed connectivity measures, such as directed transfer function, partial directed coherence, and Granger causality, to estimate causal links from the lower-level prediction-error signals to the higher-level prediction-error signals. Moreover, our model approach is not restricted solely to ERSP and can be applied to not only other recording modalities such as the blood-oxygen-level-dependent (BOLD) signal, but also other analytical metrics, including directed functional connectivity. For example, we can combine this model approach and the contrast of directed functional connectivity to further investigate regional information flows among hierarchical prediction-error signals.

Finally, altered multisensory integration has been used to explain the difficulties of involving in audiovisual communication experienced by individuals with autism spectrum disorder53,54. In contrast, multisensory integration can allow older people to compensate for declines in physical functions (e.g., reduced visual or auditory acuity) by inferring information from other sensory sources55. Our task with a model approach can provide a platform to evaluate multisensory integration from a hierarchical view and offer insights into the neural mechanism about how and why deficits or gains in multisensory integration happen.

Methods

Participants

Thirty participants were recruited for this study (15 males and 15 females; age = 22.0 ± 2.4 years). The inclusion criteria were: (1) age between 20 and 50 years, (2) no difficulty in understanding the experimental procedure due to apparent deficits in cognition, hearing and vision, and (3) no medical history of neurological or psychological diagnosis. Signed consent was received from the participants before the experiment, and all protocols were approved by the research ethics committees of The University of Tokyo (No. 21–372) and National Taiwan University Hospital (No. 201906081RINA). All ethical regulations relevant to human research participants were followed.

Stimuli and task

The auditory stimulus (A) was created with three superimposed sinusoidal waves (500, 1000 and 1500 Hz; volume = 55 dB), and the visual stimulus (V) was a 16*16 green-black checkerboard (size: 4 degree visual angle with a 70 cm viewing distance) presented against a gray background (1920*1220 pixels). These two stimuli were arranged to create four sequences: AAA, AAV, VVV, and VVA with 200-msec duration and 300-msec SOA. A random interval of 1600–1900 msec (50 msec as a step) was inserted between the offset of one sequence and the onset of the next sequence.

Four blocks were respectively assigned a frequent sequence and an infrequent sequence. Block 1 consisted of 100 trials of AAV and 20 trials of AAA. Block 2 consisted of 20 trials of AAV and 100 trials of AAA. Block 3 consisted of 100 trials of VVA and 20 trials of VVV. Block 4 consisted of 20 trials of VVA and 100 trials of VVV. Each block was constructed as follows: The 120 trials were notionally divided into four phases of 30 trials each. Each phase was built from 25 frequent and 5 infrequent sequences in pseudorandom order, yielding an even distribution of trial types within the block.

For unimodal auditory local-global oddball paradigm, two auditory stimuli were created with superimposed sinusoidal waves: 500, 1000 and 1500 Hz for A tone, and 350, 700 and 1400 Hz for A’ tone. These two tones were arranged to create 2 sequences: AAA and AAA’. The setting of stimulation delivery was the same as above, but the sequence AAV in Blocks 1 and 2 was replaced with the sequence AAA’. In the xY Block, as the same stimulus sequence proportion in Block 1, there were 100 trials of AAA’ and 20 trials of AAA. In the xx Block (as Block 2), there were 20 trials of AAA’ and 100 trials of AAA.

Each block was presented twice, once for the left stimulation delivery, where the visual stimuli were delivered 5.28 degree visual angle away from the screen center and the auditory stimuli were delivered from the left speaker, and once for the right stimulation delivery. This led to the total eight block representations (runs) for the main task, and four runs for the unimodal task. We first demonstrated the four runs in a random order, to avoid the carry-over effect of visual stimuli from the main task, and then, the eight runs were delivered randomly. There was a break between runs. A white fixation dot was presented at a visual angle of 3.85 degrees below the screen center. The experimental protocol was programmed with the MATLAB-based PsychtoolBox56.

Probability calculation

The values of the transition probability (TP) and the sequence probability (SP) differ across Blocks and Hypotheses. Here, we first demonstrate the calculations of TP and SP for Block 1 in Hypothesis 1. For a single sequence AAA, there are two A-to-A transitions, and one A-to-O transition. For a single sequence AAV, there are one A-to-A transitions, and one A-to-O transition. Therefore, the total number of A-to-A transitions (denoted as TNA→A) is 2*20 (20 trials of sequence AAA) + 1*100 (100 trials of sequence AAV) = 140. Similarly, the total number of A-to-O transitions (denoted as TNA→O) is 1*20 (sequence endings for 20 trials of sequence AAA) + 1*100 (100 trials of sequence AAV) = 120. Since there is no A-to-V transition in Hypothesis 1, the total number of A-to-V transitions (denoted as TNA→V) is zero.

TPA→A, TPA→V, and TPA→O then can be calculated by dividing the number of corresponding transitions by the total number of transitions ( = 140 + 120 + 0 = 260), which results in 140/260, 0, and 120/260, respectively (as shown in Table 1). For SP, there are 20 trials of AAA, 0 trial of AAV (no global integration), and 100 trials of AA. Thus, SPAAA, SPAAV, SPAA are 20/120, 0, 100/120, respectively. The comprehensive calculations are formulized below.

For Block 1 in Hypothesis 1:

In Hypothesis 2, TPs are calculated based on Equations [1 ~ 6] as in Hypothesis 1. For SP, since there is global integration for sequence AAV, SPAAA, SPAAV, SPAA are 20/120, 100/120, and 0 respectively. In Hypothesis 3, SP are calculated as in Hypothesis 2, and TPs are calculated as in Hypothesis 1, but with different transition numbers. With local integration, TNA→A is still 140, but TNA→V is 1*100 (100 trials of sequence AAV) = 100, and TNA→O is 1*20 (sequence endings for 20 trials of sequence AAA) = 20.

For Block 2, the only difference from Block 1 is the number of trials. In this block, there are 100 trials of AAA and 20 trials of AAV. The calculations of TP and SP can be conducted according to Equations [1 ~ 9], with the numbers 100 and 20 being swapped.

For Blocks 3 and 4, the only difference from Blocks 1 and 2 is the modality. The calculations of TP and SP can be conducted based on the same principle, but with the modality A and V being swapped. For example, TPA→V is swapped to TPV→A, and SPAAV is swapped to SPVVA.

Model value calculation

The model values were calculated from TP and SP based on Chao et al.18. To demonstrate this, we use Block 1 as an example (see Fig. 3c). For the A-to-A transition (with the probability of TPA→A), SA = 1, and thus PE1A = | 1 – P1A | (|.| represents the absolute value). For the other transitions (A-to-O or A-to-V, the probability is 1 – TPA→A), SA = 0, and thus PE1A = | 0 – P1A | . Therefore, the sum of the mean of squared prediction errors at the auditory stream at the first level, denoted as MSE1A, is:

which is a function of P1A and can be minimized by P1A = TPA→A. A similar calculation can be applied to Level 2, where PE2A = | PE1A – P2A | . For sequence AAA (with the probability of SPAAA), SA = 1 and PE1A = | 1 – P1A | , and thus PE2A = | | 1 – P1A | – P2A | . For the other sequences (AA or AAV, the probability is 1 – SPAAA), SA = 0 and PE1A = | 0 – P1A | , and thus PE2A = | | 0 – P1A | – P2A | . Therefore, the corresponding mean-squared error, denoted as MSE2A, is:

which is a function of P2A and can be minimized by P2A = SPAAA*( | 1 – P1A | ) + (1– SPAAA)*P1A. For P1V and P2V, similar calculations can be applied with the replacement from TPA→A to TPA→V and from SPAAA to SPAAV. Furthermore, the same calculations can be applied to Block 2 but with different values of TP and SP. For Blocks 3 and 4, similar calculations are done by replacing TPA→A with TPV→A, TPA→V with TPV→V, SPAAA with SPVVA, and SPAAV with SPVVV. The comprehensive calculations are shown below. For different hypotheses, different TP and SP values are used.

For Blocks 1 and 2:

For Blocks 3 and 4:

Once, the predictions are determined and the prediction errors are calculated as:

EEG acquisition and preprocessing

EEG was recorded by a 64-channel 10–20 system Quick Cap and Neuroscan SynAmps RT amplifier (Compumedics Neuroscan USA, Ltd. Charlotte, NC, USA). The impedances were kept below 10 kΩ for the two eye electrodes and below 5 kΩ for the rest of the electrodes. The signals were collected with an online reference electrode near Cz, a 500 Hz sampling rate, and an online band-pass filter between 0.01 and 100 Hz. Event codes were sent at the onset of the first stimulus in a sequence. We used EEGLAB (Swartz Center for Computational Neuroscience)57 for data preprocessing including extracting epochs from −1 to +1.9 s relative to the onset of the first stimuli (function: pop_epoch.m), and manually removing bad epochs when there was an obvious movement artifact or 80% of amplitudes were over ±100 microvolts. Then, components containing muscular and eye artifacts were removed using independent component analysis (ICA) (function: pop_ica.m) with ADJUST toolbox58 (function: interface_ADJ.m). Current source density (CSD) analysis was conducted using CSD Toolbox to reduce the impact of volume conduction (function: CSD.m)59. For CSD calculation, the montage of spherical coordinates was created using the channel information of the Quick Cap without channels CB1 and CB2 (function: Get_GH.m). The smoothing constant lambda was set as 1e–5, and the head radius was set as 10 cm.

Event-related spectral perturbation (ERSP)

We used Fieldtrip Toolbox60 to quantify the time-frequency representation (from 1 to 100 Hz) of the preprocessed EEG for each trial, channel, and participant. The Morlet wavelet transformation was used with the wavelet cycles of 7 (function: ft_freqanalysis.m). To measure the ERSP for each trial, channel, and participant, we performed the baseline normalization by calculating the decibel with the baseline period from –0.2 to 0 s (time zero as the onset of the first stimulus) (function: ft_freqbaseline.m).

To make the left hemisphere the contralateral side for both left-side and right-side stimulation delivery, for trials where stimulation was applied to the left side, the ERSPs’ channel assignment was mirrored from left to right, and for trials where stimulation was applied to the right side, the channel layout was unchanged. Then, the ERSPs were then averaged across trials for each trial type in each block, and for each participant and each channel.

Significant contrast responses

Two types of contrasts were performed. First, to validate audiovisual integration (Fig. 2), we contrasted the averaged ERSP of AAA between Blocks 1 and 2 and the averaged ERSP of VVV between Blocks 3 and 4, for each participant and each channel. The significance across participants for each contrast and each channel was estimated using bootstrap analysis (function: bootci.m). For the topography, for each contrast, channel, and participant, the contrast responses were averaged across time points between 0 and 0.9 s after the last stimulus onset and across bins within the four frequency bands. The significance across participants was estimated using permutation analysis with the cluster correction set as at least two channels with close distance (function: ft_timelockstatisitcs.m). Second, to extract prediction-error signals (Fig. 4) for model-fitting, we contrasted the averaged ERSP of AAV to AAA in Blocks 1 and 2 and the averaged ERSP of VVA to VVV in Blocks 3 and 4, for each participant and each channel. For each contrast, channel, the significance across participants was estimated using bootstrap analysis.

Also, the signal-to-noise ratios in ERSPs may vary between the frequent and rare sequences due to the differing number of trials for each sequence type. To evaluate this potential bias, we conducted the same analysis with equalized trial numbers for the frequent and rare sequences. Specifically, we randomly selected trials from the frequent sequence to match the number of the rare sequence. For instance, in Block 1, which consisted of a total of 40 AAA trials, 40 AAV trials were randomly selected from a pool of 200 trials. Finally, the ERSPs were then averaged across the same trials for each trial type in each block, participant, and channel. The new contrast responses (Supplementary Fig. 7) and the extracted components (Supplementary Fig. 8) exhibited similar patterns to the results obtained from the original method (Supplementary Fig. 1 and Fig. 6).

PARAFAC analysis

PARAFAC analysis is a generalization of the principal component analysis to higher-order arrays. This method decomposes a tensor into a sum of outer products of vectors, which helps in identifying underlying patterns or structures in the multi-dimensional data. We performed the analysis using the N-way toolbox61 (function: parafac.m). The input was the significant contrast responses (Block × Channel × Time-Frequency). The option of “Fixmode” was applied to the Block dimension with the modeled contrast values while no constraint was applied on dimensions of Channel and Time-Frequency. The convergence criterion was set to be 1e − 6. We repeated the decomposition 50 times, each time with a set of random orthogonalized values for initialization. Each component decomposed from PARAFAC can be described by score or loading matrices (activation values) in the original dimensions: 4 model contrast values in the Block dimension, 60 activation values in the Channel dimension, and 450*100 activation values in the Time-Frequency dimension. The basic concept of PARAFAC is illustrated in Supplementary Fig. 9. Furthermore, we utilized two surrogate datasets to illustrate our model-fitting approach using PARAFAC, as depicted in Supplementary Fig. 10.

To evaluate the decomposition a CORCONDIA of 80~90% is considered a good consistency, and values below 50% indicate a problematic model with high interaction between components28,30. On the other hand, BIC was calculated to correct the residual sum of squares (RSS) with the complexity of the model:

Where u represents the size of inputs (e.g., 4 blocks × 60 channels × 450*100 time-frequency) and w represents the size of estimated outputs. See the values of w for the three models in Supplementary Table 1.

From 50 decompositions (each with random initialization), we acquired 50 CORCONDIA values and 50 BIC values. These values were compared between models using two-sample t test, and alpha was set as 0.05 with Bonferroni correction (function: ttest2.m).

Response reconstruction

We reconstructed the responses from 5 extracted components (i.e., SA, SV, PE1A, PE1V, and PE2A/V) with dimensions of Block, Channel, and Time-Frequency. The response for Block i and Channel j was reconstructed (denoted as Recon) as follow:

Where c represents the component index, where c = 1 (SA), 2 (SV), 3 (PE1A), 4 (PE1V), and 5 (PE2A/V). For component c, Blockc represents the 4 model contrast values, Channelc represents the 60 activation values in the Channel dimension, and TimeFrequencyc represents the 450*100 activation values in the Time-Frequency dimension.

Connectivity analysis

For the spectral functional connectivity, we performed PPC with Fieldtrip Toolbox (function: ft_connectivityanalysis.m). We paired 25 connections between PE1A and PE2A/V, and 24 connections between PE1V and PE2A/V because of one shared channel. We then segmented EEG responses in each block into 11 learning stages using a moving average approach. N is the number of trials in a phase of a block after bad epochs were excluded, and we created three segments (N/3 trials) for one phase and thus twelve segments in total for one block. Then, we used a window width of two segments and a step size of one segment to have 11 learning stages. For example, in a clean block (no bad trials, N = 30 from 120/4), and a segment has 10 trials (from 30/3). The first stage would include trials from 1st to 20th (first and second segments), and the second stage would include trials from 11th to 30th (second and third segments). For each stage, the trials from the four blocks were then pooled together.

PPC was evaluated at 450 time points and 100 frequency bins (1~100 Hz). These PPC values were then averaged across frequency bins for 4 frequency bands (theta: 4–8 Hz, alpha: 8–13 Hz, beta: 13–30 Hz, and gamma: 30–100 Hz), across time points (0–0.9 s relative to the last stimulus), and across connections (25 or 24 between two components). After average, there were 30 averaged PPC values for each time window, each pair of connected components, and each frequency band. We compared the value between each two windows within each participant for each pair using bootstrap analysis.

Statistics and reproducibility

The sample size in the current study (N = 30) was determined based on previous EEG/MEG studies with similar designs (N = 29 in Bekinschtein et al.13; N = 10 in Wacongne et al.17). For EEG analysis, we followed the general guideline to subjectively remove data containing bad artifacts, and an average of ~2.2% of the total 1920 trials for each participant was excluded (mean: 43.5 ± 38.3 trials). For statistical comparisons and data fitting analysis, the details including dimensional information of EEG datasets, the number of resampling, the correction for statistical threshold, key parameters, and toolbox functions were provided.

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.

Data availability

The raw EEG data, processed data, and source data underlying main and Supplementary Figs. have been deposited in Open Science Framework (OSF)62.

Code availability

The code to calculate model values of predictions and prediction errors based on the three hypotheses has been deposited in OSF62.

References

Clark, A. Whatever next? Predictive brains, situated agents, and the future of cognitive science. Behav. Brain Sci. 36, 181–204 (2013).

Friston, K., Thornton, C. & Clark, A. Free-Energy Minimization and the Dark-Room Problem. Front. Psychol. 3, 130 (2012).

den Ouden, H. E. M., Kok, P. & de Lange, F. P. How Prediction Errors Shape Perception, Attention, and Motivation. Front. Psychol. 3, 548 (2012).

Friston, K. Does predictive coding have a future? Nat. Neurosci. 21, 1019–1021 (2018).

Knill, D. C. & Pouget, A. The Bayesian brain: the role of uncertainty in neural coding and computation. Trends Neurosci. 27, 712–719 (2004).

Näätänen, R. The Mismatch Negativity: A Powerful Tool for Cognitive Neuroscience. Ear Hear. 16, 6–18 (1995).

Näätänen, R., Paavilainen, P., Rinne, T. & Alho, K. The mismatch negativity (MMN) in basic research of central auditory processing: A review. Clin. Neurophysiol. 118, 2544–2590 (2007).

Wacongne, C., Changeux, J. P. & Dehaene, S. A neuronal model of predictive coding accounting for the mismatch negativity. J. Neurosci. 32, 3665–3678 (2012).

Heslenfeld, D. J. Visual Mismatch Negativity. In Detection of Change: Event-Related Potential and fMRI Findings (ed. Polich, J.) 41–59 (Springer US, 2003). https://doi.org/10.1007/978-1-4615-0294-4_3.

Stefanics, G., Astikainen, P. & Czigler, I. Visual mismatch negativity (vMMN): a prediction error signal in the visual modality. Front. Hum. Neurosci. 8, 1074 (2015).

Shen, G., Smyk, N. J., Meltzoff, A. N. & Marshall, P. J. Using somatosensory mismatch responses as a window into somatotopic processing of tactile stimulation. Psychophysiology 55, e13030 (2018).

Shinozaki, N., Yabe, H., Sutoh, T., Hiruma, T. & Kaneko, S. Somatosensory automatic responses to deviant stimuli. Brain Res. Cogn. Brain Res. 7, 165–171 (1998).

Bekinschtein, T. A. et al. Neural signature of the conscious processing of auditory regularities. Proc. Natl. Acad. Sci. 106, 1672–1677 (2009).

Blundon, E. G., Rumak, S. P. & Ward, L. M. Sequential search asymmetry: Behavioral and psychophysiological evidence from a dual oddball task. PLOS One 12, e0173237 (2017).

Chao, Z. C., Takaura, K., Wang, L., Fujii, N. & Dehaene, S. Large-Scale Cortical Networks for Hierarchical Prediction and Prediction Error in the Primate Brain. Neuron 100, 1252–1266.e3 (2018).

El Karoui, I. et al. Event-Related Potential, Time-frequency, and Functional Connectivity Facets of Local and Global Auditory Novelty Processing: An Intracranial Study in Humans. Cereb. Cortex 25, 4203–4212 (2015).

Wacongne, C. et al. Evidence for a hierarchy of predictions and prediction errors in human cortex. Proc. Natl. Acad. Sci. 108, 20754–20759 (2011).

Chao, Z. C., Huang, Y. T. & Wu, C. T. A quantitative model reveals a frequency ordering of prediction and prediction-error signals in the human brain. Commun. Biol. 5, 1–18 (2022).

van Wassenhove, V., Grant, K. W. & Poeppel, D. Visual speech speeds up the neural processing of auditory speech. Proc. Natl. Acad. Sci. 102, 1181–1186 (2005).

Vroomen, J. & Stekelenburg, J. J. Visual Anticipatory Information Modulates Multisensory Interactions of Artificial Audiovisual Stimuli. J. Cogn. Neurosci. 22, 1583–1596 (2010).

Colin, C. et al. Mismatch negativity evoked by the McGurk–MacDonald effect: a phonetic representation within short-term memory. Clin. Neurophysiol. 113, 495–506 (2002).

Olasagasti, I., Bouton, S. & Giraud, A. L. Prediction across sensory modalities: A neurocomputational model of the McGurk effect. Cortex 68, 61–75 (2015).

Driver, J. & Noesselt, T. Multisensory interplay reveals crossmodal influences on ‘sensory-specific’ brain regions, neural responses, and judgments. Neuron 57, 11–23 (2008).

Stein, B. E., Stanford, T. R. & Rowland, B. A. The Neural Basis of Multisensory Integration in the Midbrain: Its Organization and Maturation. Hear. Res. 258, 4–15 (2009).

Mcgurk, H. & Macdonald, J. Hearing lips and seeing voices. Nature 264, 746–748 (1976).

Barraclough, N. E., Xiao, D., Baker, C. I., Oram, M. W. & Perrett, D. I. Integration of Visual and Auditory Information by Superior Temporal Sulcus Neurons Responsive to the Sight of Actions. J. Cogn. Neurosci. 17, 377–391 (2005).

Schroeder, C. E. & Foxe, J. J. The timing and laminar profile of converging inputs to multisensory areas of the macaque neocortex. Brain Res. Cogn. Brain Res. 14, 187–198 (2002).

Bro, R. & Kiers, H. A. A new efficient method for determining the number of components in PARAFAC models. J. Chemom. 17, 274–286 (2003).

Harshman, R. A. & Lundy, M. E. PARAFAC: Parallel factor analysis. Comput. Stat. Data Anal. 18, 39–72 (1994).

Pouryazdian, S., Beheshti, S. & Krishnan, S. CANDECOMP/PARAFAC model order selection based on Reconstruction Error in the presence of Kronecker structured colored noise. Digit. Signal Process. 48, 12–26 (2016).

Vinck, M., van Wingerden, M., Womelsdorf, T., Fries, P. & Pennartz, C. M. The pairwise phase consistency: A bias-free measure of rhythmic neuronal synchronization. NeuroImage 51, 112–122 (2010).

Göschl, F., Friese, U., Daume, J., König, P. & Engel, A. K. Oscillatory signatures of crossmodal congruence effects: An EEG investigation employing a visuotactile pattern matching paradigm. NeuroImage 116, 177–186 (2015).

Roa Romero, Y., Senkowski, D. & Keil, J. Early and late beta-band power reflect audiovisual perception in the McGurk illusion. J. Neurophysiol. 113, 2342–2350 (2015).

Arnal, L. H. & Giraud, A. L. Cortical oscillations and sensory predictions. Trends Cogn. Sci. 16, 390–398 (2012).

Jiang, Y. et al. Constructing the hierarchy of predictive auditory sequences in the marmoset brain. eLife 11, e74653 (2022).

Bastos, A. M. et al. Visual Areas Exert Feedforward and Feedback Influences through Distinct Frequency Channels. Neuron 85, 390–401 (2015).

Fries, P. A mechanism for cognitive dynamics: neuronal communication through neuronal coherence. Trends Cogn. Sci. 9, 474–480 (2005).

Michalareas, G. et al. Alpha-Beta and Gamma Rhythms Subserve Feedback and Feedforward Influences among Human Visual Cortical Areas. Neuron 89, 384–397 (2016).

Bragin, A. et al. Gamma (40-100 Hz) oscillation in the hippocampus of the behaving rat. J. Neurosci. Off. J. Soc. Neurosci. 15, 47–60 (1995).

Canolty, R. T. et al. High gamma power is phase-locked to theta oscillations in human neocortex. Science 313, 1626–1628 (2006).

Sirota, A. et al. Entrainment of neocortical neurons and gamma oscillations by the hippocampal theta rhythm. Neuron 60, 683–697 (2008).

Cohen, M. X., Elger, C. E. & Fell, J. Oscillatory activity and phase-amplitude coupling in the human medial frontal cortex during decision making. J. Cogn. Neurosci. 21, 390–402 (2009).

Besle, J., Bertrand, O. & Giard, M.-H. Electrophysiological (EEG, sEEG, MEG) evidence for multiple audiovisual interactions in the human auditory cortex. Hear. Res. 258, 143–151 (2009).

Schelenz, P. D. et al. Multisensory integration of dynamic emotional faces and voices: method for simultaneous EEG-fMRI measurements. Front. Hum. Neurosci. 7, 729 (2013).

Starke, J., Ball, F., Heinze, H. J. & Noesselt, T. The spatio-temporal profile of multisensory integration. Eur. J. Neurosci. 51, 1210–1223 (2020).

Champoux, F. et al. A role for the inferior colliculus in multisensory speech integration. Neuroreport 17, 1607–1610 (2006).

Meredith, M. A. & Stein, B. E. Interactions among converging sensory inputs in the superior colliculus. Science 221, 389–391 (1983).

Schroeder, C. E. et al. Somatosensory input to auditory association cortex in the macaque monkey. J. Neurophysiol. 85, 1322–1327 (2001).

O’Sullivan, A. E., Crosse, M. J., Liberto, G. M. D., de Cheveigné, A. & Lalor, E. C. Neurophysiological Indices of Audiovisual Speech Processing Reveal a Hierarchy of Multisensory Integration Effects. J. Neurosci. 41, 4991–5003 (2021).

Dehaene, S., Meyniel, F., Wacongne, C., Wang, L. & Pallier, C. The Neural Representation of Sequences: From Transition Probabilities to Algebraic Patterns and Linguistic Trees. Neuron 88, 2–19 (2015).

Mathys, C. D. et al. Uncertainty in perception and the Hierarchical Gaussian Filter. Front. Hum. Neurosci. 8, 825 (2014).

Iglesias, S. et al. Hierarchical Prediction Errors in Midbrain and Basal Forebrain during Sensory Learning. Neuron 80, 519–530 (2013).

Meyer, G. F. & Noppeney, U. Multisensory integration: from fundamental principles to translational research. Exp. Brain Res. 213, 163–166 (2011).

Saalasti, S., Tiippana, K., Kätsyri, J. & Sams, M. The effect of visual spatial attention on audiovisual speech perception in adults with Asperger syndrome. Exp. Brain Res. 213, 283–290 (2011).

Freiherr, J., Lundström, J. N., Habel, U. & Reetz, K. Multisensory integration mechanisms during aging. Front. Hum. Neurosci. 7, 863 (2013).

Kleiner, M., Brainard, D. & Pelli, D. What’s new in psychtoolbox-3. Perception 36, 1–16 (2007).

Delorme, A. & Makeig, S. EEGLAB: an open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. J. Neurosci. Methods 134, 9–21 (2004).

Mognon, A., Jovicich, J., Bruzzone, L. & Buiatti, M. ADJUST: An automatic EEG artifact detector based on the joint use of spatial and temporal features. Psychophysiology 48, 229–240 (2011).

Kayser, J. & Tenke, C. E. Principal components analysis of Laplacian waveforms as a generic method for identifying ERP generator patterns: I. Evaluation with auditory oddball tasks. Clin. Neurophysiol. 117, 348–368 (2006).

Oostenveld, R., Fries, P., Maris, E. & Schoffelen, J. M. FieldTrip: Open Source Software for Advanced Analysis of MEG, EEG, and Invasive Electrophysiological Data. Comput. Intell. Neurosci. 2011, 156869 (2010).

Andersson, C. A. & Bro, R. The N-way toolbox for MATLAB. Chemom. Intell. Lab. Syst. 52, 1–4 (2000).

Huang, Y. T. et al. Crossmodal Hierarchical Predictive Coding for Audiovisual Sequences in the Human Brain. Open Science Framework. https://doi.org/10.17605/OSF.IO/4S2E3 (2024).

Acknowledgements

We thank Kai-Jie Liang and Shih-Yao Mao for helping with participant recruitment and experiment preparation, and Felix B. Kern for proofreading. We also thank Hui-Fen Mao and Shih-Yao Mao for their help in managing IRB, and Shih-Yao Mao. This work was supported by World Premier International Research Center Initiative (WPI), MEXT, Japan (to Z.C.C.), and the Ministry of Science and Technology of Taiwan (MOST 109-2410-H-002-106-MY3) (to C.W. and H.M.).

Author information

Authors and Affiliations

Contributions

Z.C.C. conceptualized the study. Y.T.H., C.W., and Z.C.C. designed the experimental protocol, and Y.T.H., Y.M.F., and C.F. collected the data (Y.M.F. and C.F. contributed equally). Y.T.H. and Z.C.C. proposed the theoretical models and performed the data analyses. Y.T.H wrote the manuscript, and Z.C.C., C.W., and S.K. helped with editing. All authors contributed to and have approved the final paper.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Communications Biology thanks Jordan Hamm and the other, anonymous, reviewer(s) for their contribution to the peer review of this work. Primary Handling Editors: Julio Hechavarria and Joao Valente. [A peer review file is available].

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Huang, Y.T., Wu, CT., Fang, YX.M. et al. Crossmodal hierarchical predictive coding for audiovisual sequences in the human brain. Commun Biol 7, 965 (2024). https://doi.org/10.1038/s42003-024-06677-6

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s42003-024-06677-6

- Springer Nature Limited