Abstract

Digital health technologies (DHTs) have become progressively more integrated into the healthcare of people with multiple sclerosis (MS). To ensure that DHTs meet end-users’ needs, it is essential to assess their usability. The objective of this study was to determine how DHTs targeting people with MS incorporate usability characteristics into their design and/or evaluation. We conducted a scoping review of DHT studies in MS published from 2010 to the present using PubMed, Web of Science, OVID Medline, CINAHL, Embase, and medRxiv. Covidence was used to facilitate the review. We included articles that focused on people with MS and/or their caregivers, studied DHTs (including mHealth, telehealth, and wearables), and employed quantitative, qualitative, or mixed methods designs. Thirty-two studies that assessed usability were included, representing a minority (26%) of the studies that assessed DHTs in MS. Mobile applications were the most common type of DHT (n = 23, 70%). Overall, studies were highly heterogeneous with respect to which usability principles were considered and how usability was assessed. These findings suggest that there is a major gap in the application of standardized usability assessments to DHTs in MS. Improvements in the standardization of usability assessments will have implications for the future of digital health care for people with MS.

Introduction

Digital health technologies (DHTs) offer complementary methods to track and manage symptoms, improve treatment adherence, and increase access to healthcare for diverse patient populations1,2. In the context of a heterogeneous and prognostically challenging neurodegenerative disorder such as multiple sclerosis (MS), DHTs have significant potential to promote disease management and personalized patient care3,4. The potential impact of DHTs in MS is even more apparent when one considers the nature of clinical assessments in this population. Specifically, evaluations typically occur at 6-12-month intervals and require clinical visits for a comprehensive examination. This low frequency of patient consultation is reported to contribute to un/under-reported disease progression5. A potential solution is increasing the frequency of clinical consultations, but this is constrained by time, cost, and geography, leading to inequity in healthcare access. To address some of these gaps in care provision, DHTs have become more integrated into the long-term care of people with MS6,7,8.

While the need for innovative digital solutions in MS care is clear, a recent review of 30 unique mobile health applications found that they did not meet the needs of people with MS9. Several factors may thwart the successful implementation of DHTs, including, but not limited to, the level of digital and health literacy of end users and the perceived usefulness of, or satisfaction with, a DHT10. Indeed, it is well established that the incorporation of usability principles into the design and evaluation of DHTs is fundamental to ensuring they are appropriately targeted to the end users’ needs and adopted long-term11,12,13. Usability describes the extent to which DHTs can be “used by specified users to achieve specified goals with effectiveness, efficiency, and satisfaction in a specified context of use”14, and typically considers core principles such as effectiveness, learnability, physical comfort/acceptance, ease of maintenance/repairability, and operability15. The evaluation of usability is also important in the development and commercialization of DHTs. Government authorities such as the U.S. Food and Drug Administration recommend that medical devices (including DHTs) not yet on the market provide a report summarizing potential target users, training necessary for the operation of the product, usability testing, and any problems encountered during technology evaluation16. Furthermore, the National Health Service in the UK requires consideration of usability and accessibility principles when approving health apps solicited from industry17. Despite such a clear need for usability evaluation of DHTs, there has yet to be a comprehensive assessment of DHT usability in the context of MS. Other groups have assessed the usability of mobile health applications1,18, but there is a need to conduct a comprehensive usability evaluation of all DHTs employed by people with MS, especially wearable technologies.
The aim of this scoping review was, therefore, to examine the extent to which usability principles have been considered in DHTs for people with MS and to summarize the methods of usability evaluation. A preliminary search of the Joanna Briggs Institute Database of Systematic Reviews and Implementation Reports was conducted to confirm that there are no current or in-progress scoping or systematic reviews on the same topic.

By following a Population Concept Context mnemonic, we generated a primary review question: how has usability been considered in studies of DHTs targeting people with MS? This primary review question was then divided into four sub-questions:

1. What are the participant characteristics (e.g., age, gender, disease severity) included in studies of DHTs in the context of MS?
2. What are the components (e.g., type of technology and delivery platform, development stage) of DHTs targeting people with MS?
3. What assessment methods (e.g., questionnaires, interviews) of usability are incorporated into the design and/or evaluation of DHTs for people with MS?
4. What usability outcomes (e.g., accessibility, flexibility) are reported from the evaluation of DHTs for people with MS?

Results

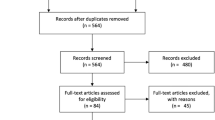

Selection of sources of evidence

A total of 5990 studies were identified in the search process (see Fig. 1). Following duplicate removal (n = 1432), the titles and abstracts of 4558 studies were screened, and 4262 studies were deemed irrelevant. A total of 279 studies moved to full-text review, where 247 articles were excluded; of note, 89 studies did not assess usability. Inter-rater agreement for title and abstract screening was 0.45, and between 0.57 and 0.73 for full-text review (given there were three raters for this stage). These values indicate moderate to substantial agreement between reviewers, respectively19. A total of 32 studies were included in the final narrative synthesis.

Data extracted from Covidence. Exclusion criteria: 1. Review papers, animal studies, unpublished trial data, conference abstracts, opinion pieces, case studies, and letters; 2. No reported usability outcome measure; 3. Studies with data in MS patients that is not accessible, or that is presented in conjunction with co-morbidities; 4. DHT not for patient populations with MS (primary diagnosis), healthcare practitioners, formal care providers, and researchers; 5. Machine learning and AI studies to assess healthcare data for MS. κ = Cohen’s kappa value.

Description of Included Studies and Participants

The characteristics of the included studies and participants are summarized in Table 1. Most studies were conducted in Europe (n = 18, 56%), followed by North America (n = 9, 28%). Four studies (13%) involved multi-country investigations. More than half of the studies were published between 2020 and 2023 (n = 19, 59%). Most studies used a mixed-methods (n = 16, 50%) or quantitative (n = 15, 47%) design. One study (3%) used a qualitative design.
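The percentages reported alongside each count follow directly from the 32 included studies. As a minimal sketch, assuming whole-number rounding (the helper name `pct` is ours, not part of the review's workflow), the study-design breakdown can be reproduced as follows:

```python
def pct(count: int, total: int) -> int:
    """Return count/total as a percentage rounded to a whole number."""
    return round(100 * count / total)


TOTAL_STUDIES = 32  # number of studies in the final narrative synthesis

# Study-design counts as reported in the text above.
design_counts = {"mixed methods": 16, "quantitative": 15, "qualitative": 1}

design_pct = {design: pct(n, TOTAL_STUDIES) for design, n in design_counts.items()}

for design, n in design_counts.items():
    print(f"{design}: n = {n}, {design_pct[design]}%")
```

The three designs account for all 32 studies, which is a quick consistency check on the reported counts.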

Across the studies, the total number of participants was 1213, with the sample sizes ranging between 4 and 126. Most participants were female (71%), with RRMS (73%). Two studies (6.3%) included people with CIS. Of the 28 (86%) studies that reported mean/median age of participants, the age ranged between 36.8 and 56.8 years old. Across all participants, the mean EDSS scores ranged between 1 and 6.5, while the mean disease duration ranged between 6 and 24 years. The education level of participants was reported in 11 (34%) studies and ranged between 8th grade and doctorate level.

Description of Usability Components of Digital Health Technologies (DHTs)

The characteristics of the DHTs are summarized in Table 2. The majority of DHTs were application-based (apps) (n = 23, 70%), followed by wearables (n = 7, 21%), Website/Internet (n = 6, 18%), and others (n = 2, 6%): a game console and a virtual reality system. Five studies used a combination of apps and wearables (n = 4) or apps and Internet/Website (n = 1). Most DHTs were implemented in the patient’s home or community (n = 30, 94%). The remaining two DHTs (6%) were used in a hospital/clinic setting. Of the 30 DHTs implemented in a patient’s home, eight were additionally evaluated within a research facility (n = 7) or hospital (n = 1). All DHTs were evaluated by people with MS (n = 32), with seven DHTs (22%) evaluated by two user groups: people with MS and formal health/social care providers (n = 6) or family caregivers (n = 1). Over half of the DHTs were final versions (n = 17, 53%), while the remainder were in various stages of iterative development (n = 15, 47%). The DHTs were intended for various uses, including remote self-management, education, symptom assessment and monitoring, cognitive and physical rehabilitation, and supporting therapeutic interventions.

Methods of Usability Evaluation

The methods of usability evaluations within each study are detailed in Table 2. When considering the number of methods used to evaluate DHTs across the studies, there was an even split, wherein half of the studies implemented a single method of evaluation (n = 16, 50%), while the remaining studies utilized two (n = 9, 28%) or more methods (n = 7, 22%). Usability of the DHTs was most commonly evaluated using questionnaires (n = 26, 81%), followed by interviews (n = 12, 37%), task completion tests (n = 9, 28%), think aloud protocols (n = 4, 12%), focus groups (n = 3, 9%), and others (n = 4, 12%) which included patient feedback. Within the 26 studies that utilized questionnaires, most included scales developed by the research team (n = 15, 58%). Of the studies that used a standardized questionnaire, the most common scale was the System Usability Scale (SUS)20, used in seven studies (27%).

Description of Usability Outcomes

Table 2 summarizes the usability characteristics across the included studies. There was a wide variety and number of usability characteristics reported across studies, with 20 unique usability characteristics reported in over a third of studies (n = 12, 37.5%). Three studies (9%) reported usability as a general term. Across all studies, the most frequently assessed usability characteristic was user satisfaction (n = 17, 53%). Other usability characteristics assessed were adherence (n = 6, 19%), acceptability (n = 5, 16%), feasibility (n = 5, 16%), usefulness (n = 4, 12.5%), and efficiency (n = 3, 9%). Seven usability characteristics were reported by only two independent studies. Most studies reported two (n = 13, 40.5%) or more (n = 13, 40.5%) usability characteristics, while six studies (19%) reported a single usability characteristic. While the overall conclusions from usability assessment varied, studies most often reported a combination of positive feedback and suggestions for improving future iterations of the DHTs. Two studies explicitly mentioned that participant feedback from usability assessment was incorporated into DHT development21,22.

Discussion

The current scoping review is the first to examine usability characteristics and testing methods in DHTs with a specific focus on people with MS. Usability was evaluated in less than a third of relevant studies, indicating limited consideration of this important topic within the context of DHTs in MS. Evaluation of usability was highly heterogeneous across studies, both in terms of the number of reported characteristics and assessment methods. The most evaluated DHTs were mobile applications and most studies used different types of questionnaires to assess usability. DHTs were evaluated by people with MS, with limited inclusion of health/social care providers or family caregivers in the process. Below, we first summarize key findings and knowledge gaps, and subsequently make recommendations for future work to advance the design and implementation of DHTs in MS.

DHTs have become a rapidly evolving modern health intervention tool, especially amidst the COVID-19 pandemic, and likely beyond23. The importance of evaluating the usability of DHTs has been recently highlighted in the context of other chronic diseases, such as Parkinson’s disease, and in elderly individuals24,25,26,27. A recent review of mobile applications in MS reported that only six of 14 studies had evaluated usability18. In the current review, which encompassed the broad spectrum of DHTs, the 32 included studies represented only 26% of relevant DHT studies in MS. Usability assessment was therefore lacking in roughly three-quarters of DHT studies in MS. Furthermore, we found minimal involvement of caregivers and health/social care professionals. The inclusion of usability assessments by formal care providers and family caregivers should be considered given the importance of MS caregivers in patient care28,29. Evaluation of usability is a critical part of the development and effective use of DHTs, and therefore should be considered in emerging DHTs targeting people with MS.

Our findings show that the evaluation methods and usability outcomes assessed were very heterogeneous across studies. Indeed, this heterogeneity in usability assessment and lack of consistency across studies have been reported across other neurodegenerative and chronic diseases, such as dementia, diabetes, and cardiovascular disease24,30. Usability assessment approaches similarly varied more broadly in studies investigating medical devices across several populations depending on the DHT and its intended use31. In the current study, the most frequently used method to evaluate usability was questionnaires, and most studies implemented independent, non-validated, questionnaires developed by the research team. The most commonly used standardized questionnaire was the SUS. Although a valid and reliable measure, the SUS is a self-reported measure with inherent bias and was not intended to be comprehensive in its approach to evaluate usability32,33. Further, the SUS is not specific nor adapted to a particular DHT type, limiting its ability to describe specific aspects of usability.
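For readers unfamiliar with the SUS, its standard scoring procedure is short enough to sketch in code. The example below assumes the usual ten-item form with responses from 1 (strongly disagree) to 5 (strongly agree), where odd-numbered items are positively worded and even-numbered items are negatively worded. The function name and the example responses are hypothetical and do not come from any study in this review.

```python
from typing import Sequence


def sus_score(responses: Sequence[int]) -> float:
    """Score one completed 10-item SUS questionnaire on a 0-100 scale."""
    if len(responses) != 10 or any(r not in range(1, 6) for r in responses):
        raise ValueError("SUS needs ten responses, each an integer from 1 to 5")
    total = 0
    for item_number, rating in enumerate(responses, start=1):
        if item_number % 2 == 1:
            # Odd items are positively worded: contribution is rating - 1.
            total += rating - 1
        else:
            # Even items are negatively worded: contribution is 5 - rating.
            total += 5 - rating
    # The summed contributions (0-40) are rescaled to 0-100.
    return total * 2.5


# Hypothetical responses from a single participant (items 1 to 10).
example_responses = [4, 2, 4, 1, 5, 2, 4, 2, 4, 3]
example_score = sus_score(example_responses)
print(example_score)
```

Because the result is a single number built from self-reported agreement ratings, it illustrates why the SUS alone cannot describe device-specific aspects of usability such as wear-time.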

The usability of a DHT can encompass a wide range of characteristics and these outcomes will vary based on the intended use of the DHT. We reported a large number of different usability outcome characteristics across studies, with the most common usability characteristic evaluated being user satisfaction. Indeed, the SUS questionnaire, the most used usability assessment method, primarily captures user satisfaction, with additional sub-scales for learnability and usability. The usability characteristics reported varied across studies but were somewhat consistent within DHT type. For example, DHT studies that incorporated a wearable component assessed tolerance or wear-time, whereas self-management DHTs used terms such as engagement and acceptability. Furthermore, a large number of studies reported unique descriptors of usability. It is important to capture multiple characteristics of usability; however, consistent terminology and descriptions of usability outcomes are needed for cross-study comparisons and validation. There is a clear need to develop more standardized and comprehensive approaches to assess the usability of DHTs for people with MS.

The dramatic rise in remote patient management necessitates a framework to effectively evaluate the intended use and quality of DHTs to enhance and optimize user experience. Herein, we provide recommendations to advance research on DHTs in MS. Usability assessments and outcomes should be tailored for the intended use of the DHT and the target user. Usability testing does not require an enormous time investment; in fact, the likelihood of acquiring novel information after six to nine users is minimal34. We found that an average of 35 participants assessed usability across the studies included in this review, which is indeed sufficient to reliably and effectively assess DHT usability. Nearly half of the included studies were still developing their DHT, yet only two studies explicitly reported that participant feedback from usability testing was incorporated into subsequent DHT development21,22. Future studies should also consider usability in the context of people with MS who experience additional barriers when accessing DHTs, such as low socio-economic status, rurality, older age, and more severe disability35.
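The diminishing return from additional test users can be illustrated with the model from the cited work34, in which the expected proportion of usability problems found by n users is 1 - (1 - λ)^n. The sketch below assumes a per-user detection rate of λ = 0.31, a commonly quoted average that varies considerably between projects. Both that value and the function name are illustrative assumptions rather than estimates from this review.

```python
def proportion_found(n_users: int, detection_rate: float = 0.31) -> float:
    """Expected share of usability problems found by n_users test users.

    detection_rate is the assumed probability that a single user
    reveals any given problem (an illustrative value, not data).
    """
    return 1.0 - (1.0 - detection_rate) ** n_users


for n in (1, 3, 6, 9, 12):
    print(f"{n:>2} users: {proportion_found(n):.0%} of problems expected")

# Marginal gain from adding a tenth user under the same assumption.
gain_tenth_user = proportion_found(10) - proportion_found(9)
```

Under this assumption the curve flattens quickly, so most of the discoverable problems are expected to surface within the first six to nine users.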

The future of DHTs in MS requires an updated standardized usability framework, and more targeted usability outcomes specific to the type and application of DHT in patient care. Implementation of both qualitative and quantitative measures is important36,37. To update currently used standardized methods, like the SUS, objective and comprehensive measures of usability assessments are needed that do not rely only on self-report. Future work should therefore apply a mixed methods approach to assess usability and implement user feedback during stages of DHT development. Incorporating these considerations, we recommend the following to improve the assessment of DHT usability:

1. Common and clearly defined usability characteristics of DHTs should be evaluated. For example, user satisfaction and acceptability characteristics could be rated on a numeric scale.
2. Additional criteria specific to the type of DHT and user (patient or caregiver) should be assessed separately. For example, wearables should include wear-time, and apps that require tests should include task completion time.
3. Qualitative measures, such as interviews or focus groups, should be conducted in conjunction with quantitative measures. These should assess user feedback and aim to report the subtle challenges with usability not captured by quantitative measures.

These usability metrics, if combined in the form of a summative score, could be useful to compare across studies of various DHT types. Usability results from these metrics should be integrated into the development of the DHT. Finally, the current scoping review highlights a major gap in the application of standardized usability evaluations and outcomes of current DHTs implemented in MS care. Importantly, our results highlight the opportunity to implement improved methods of usability assessment which will have major implications in the future of mobile care for people living with MS.

This review has several limitations that warrant consideration. First, we have included only English-language articles due to a lack of resources for translation. It is possible that articles published in other languages may have included additional information on usability evaluations of DHTs designed for people with MS. Telehealth and virtual telerehabilitation studies were also excluded in the current review. Furthermore, given the heterogeneity in usability principles and methods used to evaluate usability, it was not possible to synthesize the quantitative and qualitative data reported accurately. Nonetheless, we extracted and included these data in Supplementary Fig. 4 for interested readers. The usability of most DHTs was reported to be good, with satisfaction ranging from approximately 80-90%. The lowest usability scores were typically associated with wearable technologies. Few studies further evaluated the 10-20% of people with MS who struggled with DHT usage. Future research should focus on this sub-group of the MS population to understand how to develop more targeted and usable DHTs.

Methods

We followed the Joanna Briggs Institute guidance for scoping reviews38,39. The review is reported in accordance with the PRISMA Extension for Scoping Reviews40 (Supplementary Fig. 1). We have registered our protocol prospectively in the Open Science Framework: https://osf.io/y7gqp/.

Eligibility Criteria

Participants

Studies focusing on adults (≥18 years old) with MS and/or their caregivers were considered for inclusion. Any subtype of MS, including Clinically/Radiologically Isolated Syndrome (CIS/RIS), Relapsing-Remitting MS (RRMS), Primary Progressive MS (PPMS), and Secondary Progressive MS (SPMS) was eligible for inclusion. We excluded animal studies and studies involving mixed populations (i.e., MS and other conditions) where data from people with MS could not be separated from other conditions. We further excluded studies focusing on formal health and/or social care professionals.

Concept

Studies that described usability characteristics (e.g., comfort, ease of use, accessibility, flexibility) and/or usability testing methods (e.g., questionnaires, task completion, “Think-Aloud” protocols, interviews, heuristic testing, and focus groups) of DHTs were included. We excluded multicomponent studies in which data on the DHT component could not be extracted. Studies on electronic medical records, medical monitoring devices, machine learning, artificial intelligence, biomedical applications, systems for intelligent processing of genetic data, and assistive devices were excluded.

Context

We considered studies conducted in any geographic location and setting (e.g., hospital settings, primary care, community care, or at home) and published in English. Our pre-screening results found limited studies on DHTs in the context of MS prior to 2010. Therefore, to focus on the most recent and relevant DHT studies, we considered studies published from 2010 until the present.

Types of Sources

We included peer-reviewed quantitative, qualitative, and mixed-methods studies. We recognize that computer-based digital technology development may be conducted outside of academia and published in non-traditional or non-peer-reviewed outlets – but in the context of providing evidence-based information for health and social care providers and researchers, peer-reviewed evidence is considered the gold standard. We excluded systematic and non-systematic reviews, dissertations, conference abstracts and proceedings, observational studies, case reports, opinion pieces, commentaries, and protocols.

Search Strategy

We implemented a systematic, peer-reviewed three-step search strategy in line with the framework developed by the Joanna Briggs Institute and in consultation with a health sciences librarian. The process began with a preliminary search in PubMed to find key articles relevant to the three components of the research question: usability, DHTs, and MS. Using these articles, a list of keywords was developed for the search strategy, and the syntax was modified such that it could be applied to the other databases. The systematic search was then run in five databases: Web of Science, OVID Medline, CINAHL, Embase, and medRxiv. Specific to the medRxiv search, only the MS search component was used. An example of the search terms used in Medline can be found in Supplementary Fig. 2.

Selection of Sources of Evidence

All results were uploaded to Covidence (Veritas Health Innovation, Melbourne, Australia) to facilitate de-duplication, screening of titles and abstracts, full-text review, and extraction. Title and abstract screening was pilot-tested by two reviewers on a random sample of 10 studies before screening. Following pilot testing, all authors were involved in both screening phases, with two independent reviewers examining each article. When reviewers disagreed about the inclusion status of a citation, another reviewer examined the citation, and a three-way discussion was held to reach a consensus. Full texts for all potentially relevant articles were uploaded to Covidence for further screening by three independent reviewers. Discrepancies in the inclusion/exclusion of full-text studies were resolved during a consensus meeting. A manual search of reference lists from the included full-text articles was then performed to identify additional studies that met the inclusion criteria.

Data Charting Process

Data extraction was completed using the Covidence 2.0 customizable template with categories adapted from the Joanna Briggs Institute38. Extracted variables included study characteristics (authors, year of publication, country of origin, study design), participant characteristics (age, sex, disease duration, Expanded Disability Status Scale (EDSS), and MS phenotype), DHT information (name, type, purpose, implementation setting, stage of DHT development) and usability considerations (evaluation method, questionnaire type, and DHT evaluator). We piloted the template, which led to the inclusion of “not reported” options for several items and the addition of examples to some item definitions to enhance consistency and ease of use. Three independent reviewers performed data extraction. When reviewers disagreed about the inclusion status of a citation, another reviewer examined the citation, and a three-way discussion was held to reach a consensus.
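The extraction template described above can be pictured as a single record per study. The following is only a sketch of such a record: the field names are our own shorthand, `None` stands in for the “not reported” option, and the example values are invented for illustration rather than taken from any included study or from the Covidence template itself.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ExtractionRecord:
    # Study characteristics
    authors: str
    year: int
    country: str
    study_design: str
    # Participant characteristics (None means "not reported")
    mean_age: Optional[float] = None
    percent_female: Optional[float] = None
    disease_duration_years: Optional[float] = None
    mean_edss: Optional[float] = None
    ms_phenotypes: List[str] = field(default_factory=list)
    # DHT information
    dht_name: Optional[str] = None
    dht_type: Optional[str] = None
    dht_purpose: Optional[str] = None
    implementation_setting: Optional[str] = None
    development_stage: Optional[str] = None
    # Usability considerations
    evaluation_methods: List[str] = field(default_factory=list)
    questionnaire_type: Optional[str] = None
    evaluators: List[str] = field(default_factory=list)

    def not_reported(self) -> List[str]:
        """Names of the single-valued fields left as 'not reported'."""
        return [name for name, value in vars(self).items() if value is None]


# Invented example record; the values are hypothetical.
record = ExtractionRecord(
    authors="Example et al.",
    year=2021,
    country="Canada",
    study_design="mixed methods",
    mean_age=45.2,
    ms_phenotypes=["RRMS"],
    dht_type="mobile application",
    evaluation_methods=["questionnaire", "interview"],
    questionnaire_type="SUS",
    evaluators=["people with MS"],
)
print(record.not_reported())
```

Keeping “not reported” explicit, rather than leaving fields blank, makes it straightforward to count how many studies omitted a given characteristic.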

Data analysis

Data synthesis was performed in Microsoft Excel (Microsoft Office, 2019). We calculated inter-rater agreement during title and abstract and full-text screening (before the consensus meeting) using Cohen’s Kappa. No formal measures of agreement were used during the data extraction because differences in capitalization and punctuation generated messages of inconsistency, even if the critical content was the same between reviewers. Our focus was on the synthesis of descriptive features of the studies relative to usability, not on a synthesis of actual study results. We used descriptive statistics (frequencies, medians, and ranges), with data presented graphically and in tabular format as appropriate. We generated descriptive summaries of study characteristics (i.e., frequency/distribution of publication year, country in which the study was conducted, and study design), participant characteristics (mean/median age, disease duration, and Expanded Disability Status Scale (EDSS) score, and frequency/distribution of sex and MS phenotype), DHTs included in the literature (frequency/distribution of type, implementation setting, and stage of DHT development), and usability considerations (frequency/distribution of usability characteristics, testing methods, and usability evaluator).
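Cohen’s kappa compares the observed agreement between two raters with the agreement expected by chance: κ = (p_o - p_e) / (1 - p_e). The sketch below is a plain implementation for two raters, followed by the interpretation bands of Landis and Koch19. The screening decisions are invented for illustration, and the function names are ours. The review itself reports only the resulting kappa values, not how they were computed, so this is not a reconstruction of the authors' calculation.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(rater_a: Sequence[Hashable], rater_b: Sequence[Hashable]) -> float:
    """Cohen's kappa for two raters labelling the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("Both raters must label the same non-empty set of items")
    n = len(rater_a)
    # Observed agreement: share of items given the same label by both raters.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of each rater's marginal label proportions.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        raise ValueError("Kappa is undefined when chance agreement equals 1")
    return (observed - expected) / (1.0 - expected)


def landis_koch_label(kappa: float) -> str:
    """Verbal label for a kappa value using the Landis and Koch bands."""
    if kappa < 0.0:
        return "poor"
    for upper, label in ((0.20, "slight"), (0.40, "fair"), (0.60, "moderate"),
                         (0.80, "substantial")):
        if kappa <= upper:
            return label
    return "almost perfect"


# Invented screening decisions for ten citations.
reviewer_1 = ["in", "in", "in", "in", "out", "out", "out", "out", "out", "out"]
reviewer_2 = ["in", "in", "in", "out", "out", "out", "out", "out", "out", "in"]

example_kappa = cohens_kappa(reviewer_1, reviewer_2)
print(f"kappa = {example_kappa:.2f} ({landis_koch_label(example_kappa)})")
```

Under these bands, the values reported in the Results (0.45 for title and abstract screening, 0.57 to 0.73 for full-text review) fall in the moderate and substantial ranges.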

Reporting summary

Further information on research design is available in the Nature Research Reporting Summary linked to this article.

Data availability

No data sets were generated or analyzed during the current study. The aggregated data analyzed in this study are available from the corresponding author upon reasonable request. This scoping review uses peer-reviewed articles and therefore does not require ethical approval.

Code availability

No code was generated during the current study.

References

Spreadbury, J. H., Young, A. & Kipps, C. M. A comprehensive literature search of digital health technology use in neurological conditions: review of digital tools to promote self-management and support. J Med Internet Res 24, e31929 (2022).

Dillenseger, A. et al. Digital biomarkers in multiple sclerosis. Brain Sci 11, 1519 (2021).

Ziemssen, T. & Haase, R. Digital innovation in multiple sclerosis management. Brain Sciences 12, 40 (2022).

Gromisch, E. S., Turner, A. P., Haselkorn, J. K., Lo, A. C. & Agresta, T. Mobile health (mHealth) usage, barriers, and technological considerations in persons with multiple sclerosis: a literature review. JAMIA Open 4, ooaa067 (2020).

Marziniak, M. et al. The use of digital and remote communication technologies as a tool for multiple sclerosis management: narrative review. JMIR Rehabil Assist Technol 5, e5 (2018).

Marrie, R. A. et al. Use of eHealth and mHealth technology by persons with multiple sclerosis. Multiple Sclerosis and Related Disorders 27, 13–19 (2019).

Scholz, M., Haase, R., Schriefer, D., Voigt, I. & Ziemssen, T. Electronic health interventions in the case of multiple sclerosis: from theory to practice. Brain Sciences 11, 180 (2021).

Specht, B. et al. Multiple Sclerosis in the Digital Health Age: Challenges and Opportunities - A Systematic Review. medRxiv 2023-11 (2023).

Giunti, G., Guisado Fernández, E., Dorronzoro Zubiete, E. & Rivera Romero, O. Supply and demand in mhealth apps for persons with multiple sclerosis: systematic search in app stores and scoping literature review. JMIR Mhealth Uhealth 6, e10512 (2018).

Cummins, N. & Schuller, B. W. Five crucial challenges in digital health. Frontiers in Digital Health 2, 536203 (2020).

Aiyegbusi, O. L. Key methodological considerations for usability testing of electronic patient-reported outcome (ePRO) systems. Qual Life Res 29, 325–333 (2020).

Maramba, I., Chatterjee, A. & Newman, C. Methods of usability testing in the development of eHealth applications: A scoping review. Int J Med Inform 126, 95–104 (2019).

Yen, P.-Y. & Bakken, S. Review of health information technology usability study methodologies. J Am Med Inform Assoc 19, 413–422 (2012).

Grassi, P. A., Garcia, M. E. & Fenton, J. L. Digital Identity Guidelines: Revision 3. NIST SP 800-63-3 https://nvlpubs.nist.gov/nistpubs/SpecialPublications/NIST.SP.800-63-3.pdf, https://doi.org/10.6028/NIST.SP.800-63-3 (2017)

Story, M. F. Maximizing usability: the principles of universal design. Assist Technol 10, 4–12 (1998).

Ranzani, F. & Parlangeli, O. In Textbook of Patient Safety and Clinical Risk Management (eds Donaldson, L., Ricciardi, W., Sheridan, S. et al.) Ch. 32, Springer, Cham, https://doi.org/10.1007/978-3-030-59403-9_32 (2021)

Mathews, S. C. et al. Digital health: a path to validation. npj Digit. Med. 2, 1–9 (2019).

Howard, Z., Win, K. T. & Guan, V. Mobile apps used for people living with multiple sclerosis: A scoping review. Multiple Sclerosis and Related Disorders 73, 104628 (2023).

Landis, J. R. & Koch, G. G. The measurement of observer agreement for categorical data. Biometrics 33, 159–174 (1977).

Brooke, J. SUS. A quick and dirty usability scale. Usability Eval Indus 189–194, 4–7 (1996).

Thomas, S. et al. Creating a digital toolkit to reduce fatigue and promote quality of life in multiple sclerosis: participatory design and usability study. JMIR Formative Research 5, e19230 (2021).

Hsieh, K., Fanning, J., Frechette, M. & Sosnoff, J. Usability of a fall risk mHealth app for people with multiple sclerosis: mixed methods study. JMIR Human Factors 8, e25604 (2021).

Abernethy, A. et al. The Promise of Digital Health: Then, Now, and the Future. NAM Perspect, https://doi.org/10.31478/202206e (2022)

Agarwal, P. et al. Assessing the quality of mobile applications in chronic disease management: a scoping review. NPJ Digit Med 4, 46 (2021).

Wang, Q. et al. Usability evaluation of mHealth apps for elderly individuals: a scoping review. BMC Medical Informatics and Decision Making 22, 317 (2022).

Deb, R., Bhat, G., An, S., Shill, H. & Ogras, U. Y. Trends in Technology Usage for Parkinson’s Disease Assessment: A Systematic Review. medRxiv 2021-02 (2021).

Debelle, H. et al. Feasibility and usability of a digital health technology system to monitor mobility and assess medication adherence in mild-to-moderate Parkinson’s disease. Frontiers in Neurology 14, 1111260 (2023).

Dunn, J. Impact of mobility impairment on the burden of caregiving in individuals with multiple sclerosis. Expert Rev Pharmacoecon Outcomes Res 10, 433–440 (2010).

Lorefice, L. et al. What do multiple sclerosis patients and their caregivers perceive as unmet needs? BMC Neurology 13, 177 (2013).

McKay, F. H. et al. Evaluating mobile phone applications for health behaviour change: A systematic review. J Telemed Telecare 24, 22–30 (2018).

Bitkina, O. V. L., Kim, H. K. & Park, J. Usability and user experience of medical devices: An overview of the current state, analysis methodologies, and future challenges. International Journal of Industrial Ergonomics 76, 102932 (2020).

Lewis, J. R. & Sauro, J. The Factor Structure of the System Usability Scale. In Human Centered Design (ed. Kurosu, M.) 94–103, Springer, Berlin, Heidelberg, https://doi.org/10.1007/978-3-642-02806-9_12 (2009).

Peres, S. C., Pham, T. & Phillips, R. Validation of the System Usability Scale (SUS): SUS in the Wild. Proceedings of the Human Factors and Ergonomics Society Annual Meeting 57, 192–196 (2013).

Nielsen, J. & Landauer, T. K. A mathematical model of the finding of usability problems. In Proceedings of the INTERACT ’93 and CHI ’93 Conference on Human Factors in Computing Systems 206–213, Association for Computing Machinery, New York, NY, USA, https://doi.org/10.1145/169059.169166 (1993).

Marrie, R., Kosowan, L., Cutter, G., Fox, R. & Salter, A. Disparities in telehealth care in multiple sclerosis. Neurology-Clinical Practice 12, 223–233 (2022).

Johnson, S. G., Potrebny, T., Larun, L., Ciliska, D. & Olsen, N. R. Usability methods and attributes reported in usability studies of mobile apps for health care education: scoping review. JMIR Medical Education 8, e38259 (2022).

Wohlgemut, J. M. et al. Methods used to evaluate usability of mobile clinical decision support systems for healthcare emergencies: a systematic review and qualitative synthesis. JAMIA Open 6, ooad051 (2023).

Peters, M. D. J. et al. Guidance for conducting systematic scoping reviews. JBI Evidence Implementation 13, 141–146 (2015).

Peters, M. D. J. et al. Updated methodological guidance for the conduct of scoping reviews. JBI Evid Synth 18, 2119–2126 (2020).

Tricco, A. C. et al. PRISMA Extension for Scoping Reviews (PRISMA-ScR): Checklist and Explanation. Ann. Intern. Med. https://doi.org/10.7326/M18-0850 (2018).

Acknowledgements

This work was completed as part of an endMS Scholar Program for Researchers IN Training (SPRINT) interdisciplinary learning project while Adam Groh, Colleen Lacey, and Fiona Tea were enrolled in the program. The SPRINT is part of the endMS National Training Program funded by MS Canada.

Author information

Contributions

AG: Conceptualization, Data curation, Writing – original draft. CL: Conceptualization, Data curation, Writing – original draft. FT: Conceptualization, Data curation, Writing – original draft. AF: Conceptualization, Data curation, Supervision, Writing – review & editing. All authors have read and agreed to the published version of the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Tea, F., Groh, A.M.R., Lacey, C. et al. A scoping review assessing the usability of digital health technologies targeting people with multiple sclerosis. npj Digit. Med. 7, 168 (2024). https://doi.org/10.1038/s41746-024-01162-0
