Abstract

Vitrinite reflectance (VR) is a critical measure of source rock maturity in geochemistry. Although VR is a widely accepted measure of maturity, its accurate measurement often proves challenging and costly. Rock–Eval pyrolysis offers the advantages of being cost-effective, fast, and providing accurate data. Previous studies have employed empirical equations and traditional machine learning methods using T-max data for VR prediction, but these approaches often yielded subpar results. Therefore, the quest to develop a precise method for predicting vitrinite reflectance based on Rock–Eval data becomes particularly valuable. This study presents a novel approach to predicting VR using advanced machine learning models, namely ExtraTree and XGBoost, along with new ways to prepare the data, such as winsorization for outlier treatment and principal component analysis (PCA) for dimensionality reduction. The depth and three Rock–Eval parameters (T-max, S1/TOC, and HI) were used as input variables. Three model sets were examined: Set 1, which involved both Winsorization and PCA; Set 2, which only included Winsorization; and Set 3, which did not include either. The results indicate that the ExtraTree model in Set 1 demonstrated the highest level of predictive accuracy, whereas Set 3 exhibited the lowest level of accuracy, confirming the methodology's effectiveness. The ExtraTree model obtained an overall R2 score of 0.997, surpassing traditional methods by a significant margin. This approach improves the accuracy and dependability of virtual reality predictions, showing significant advancements compared to conventional empirical equations and traditional machine learning methods.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Introduction

Indicating Kerogen's thermal maturity is an important parameter in petroleum exploration because a rock's potential to generate hydrocarbons is directly related to Kerogen's quantity, quality, and maturity. Kerogen is the primary organic matter that can generate gas or oil during its evolution and thermal cracking. Geoscientists use a variety of methods to assess the source rocks and determine the quantity, type, and level of thermal maturity of the organic material within them1. There are several indicators of maturity characterization. Vitrinite reflectance (VR) is one of the main ways to measure how mature kerogen is, and it also shows how much hydrocarbon can be produced2. As a maceral, vitrinite is found in many kerogens, and as temperature increases, it undergoes complex reactions that increase reflectance. Thus, organic matter's thermal maturity is proportional to vitrinite reflectance. The determination of maturation by vitrinite is generally carried out with sophisticated microscopic instrumentation and expertise. It is possible to figure out the vitrinite reflectance and thermal maturity by looking at the reflectance levels at hundreds of sample points spread out over a very small area3. In addition to vitrinite reflectance, other maturity indicators such as the thermal alteration index (TAI) and the conodont alteration index (CAI) also contribute to assessing maturity. However, these indicators face significant limitations, including the lack of necessary macroorganisms and organic matter prior to the middle Paleozoic, the specificity of carbonate rocks, and subjective interpretation errors, which limit their widespread application4.

Vitrinite reflectance (VR) is considered a robust diagnostic tool for maturity investigation, applicable across various maturity levels5. Using vitrinite reflectance to figure out thermal maturity has been used a lot in the oil industry. However, this method has problems like mistakes made by humans, the lack of vitrinite particles in some cases, high costs, and analysis that takes a long time3,6. Rock–Eval pyrolysis, an alternative approach, is a well-known and highly effective geochemical technique that provides valuable insights into organic matter properties7,8. This technique is widely used in the evaluation of petroleum source rocks because it determines parameters such as S1 (formerly generated hydrocarbon), S2 (remaining hydrocarbon generation potential), TOC (total organic carbon), T-max (temperature of S2 maximum), HI (hydrogen index), and OI (oxygen index)6. In many cases, scientists use T-max as a measure of thermal maturity. However, some researchers dispute the direct correlation between T-max and thermal maturity suggesting that relying solely on T-max may lead to inaccuracies. Factors such as changes in Rock–Eval apparatus conditions, sample matrix, and bitumen's heavy components7,8. affect the parameter T-max, which is critical for thermal maturity determination. These factors can lead to inaccurate conclusions about the proper maturity level9. Additionally, situations with multiple S2 peak maxima or weak intensity further complicate T-max determination10. Moreover, T-max's susceptibility to facies effects makes it unreliable for thermal maturity interpretation11.

Despite the limitations mentioned earlier, RockEval offers certain advantages over vitrinite reflectance (VR), including convenient usage, prompt measurement, non-subjectivity, and availability in sediments with no vitrinite particles. Based on these advantages, it is intriguing to make it an alternative to vitrinite reflectance through the simple procedure of Rock–Eval pyrolysis. Some researchers investigated the relationship between T-max and VR parameters and proposed equations to calculate vitrinite reflectance based on T-max data. However, it is important to note that, despite their usefulness, all the equations mentioned earlier have certain limitations. For example, in some cases, they may suffer from low coefficients of determination or provide incorrect predictions. Additionally, these types of vitrinite reflectance calculations are based on simple correlations using only one parameter (T-max), which increases uncertainties3,10,12,13,14.

On the other hand, machine learning techniques have significant advantages when it comes to complex problems. With the help of machine learning, it is possible to predict the Vitrinite reflectance and thermal maturity by using a combination of Rock–Eval parameters. This approach provides the benefits of Rock–Eval, such as convenient usage and data availability, while also addressing the deficiencies of vitrinite reflectance, such as time-consuming, subjective errors, and data unavailability. Several researchers have used machine learning to forecast thermal maturity through various methods, such as utilizing well-log data or determining vitrinite and maturity automatically. However, only a few studies have attempted to predict vitrinite reflectance.

Instead of using core samples, many studies attempted to determine VR and thermal maturity by evaluating wire logging data. Feng, et al.15 have introduced an innovative approach for forecasting the thermal maturity of shale oil reservoirs. This method utilizes both conventional logs and nuclear magnetic resonance (NMR) logs. The validation using well log data showed minimal mean absolute and relative errors, confirming the method's reliability in evaluating thermal maturity in shale oil fields15. A separate study by Safaei-Farouji and Kadkhodaie16 looks at how well individual and hybrid machine learning methods work at estimating vitrinite reflectance using data from petrophysical well logs. The system utilizes several techniques, such as radial basis function, neural network, support vector machine, Gaussian process regression, random forest, and decision tree. The combination of the WACM and ICA has demonstrated superior performance and exhibits a high level of accuracy and dependability in estimating VR. This makes it a useful tool for evaluating source rocks and studying petroleum systems in the Perth Basin16. However, the suggested papers' reliance on logging data instead of core samples presents numerous issues. Firstly, logging data is inherently indirect, which means it may not provide accurate or precise information. Additionally, logging data is limited to the reservoir interval, where the source rocks are found, and is not accessible outside this region. Furthermore, in comparison to current papers, our research is far more precise.

As a noble work, Sadeghtabaghi et al.17 look into how to use machine learning and Rock–Eval pyrolysis data to predict vitrinite reflectance (VR) values. The study compares the performance of 15 different machine-learning models and empirical equations for VR prediction in the Persian Gulf. For predicting vitrinite reflectance, the decision tree (DT) model worked the best. It had the smallest average absolute relative deviation (AARD) in both the train and total data sets. Additionally, the DT model exhibited the highest coefficient of determination (R2) in all three train, test, and total data groups. The DT model's inputs for the constructed models included the depth, T-max, S1/TOC, and HI parameters17.

This paper aims to improve the accuracy of VR not only by changing the models but also by implementing new preprocessing methods that have been ignored in many previous studies. As a result, this paper investigates the effect of outlier treatment methods and dimensionality reduction techniques on enhancing the results. Besides, a K-fold cross-validation is utilized in this study to address the reliability of the test results and the robustness of the models, a crucial aspect that is often overlooked in other similar research studies. This study presents a new and effective approach that can accurately predict vitrinite reflectance using Rock–Eval parameters. The method achieves a high level of accuracy, with an overall R2 score of 0.997, surpassing traditional methods by a significant margin.

Geological setting

In this study, in order to achieve the highest level of generality in the results, the dataset is carefully chosen among various sources. Rock formations in various oil fields consist of sediments from a diverse range of ages. The samples belong to 10 distinct oil fields that are distributed in a broad area of the Iranian sector of the Persian Gulf, which is known as the world’s richest region in terms of hydrocarbon resources. The dataset includes various source rocks, primarily the Pabdeh, Gurpi, and Kazhdumi Formations, as well as the Ahmadi member of Sarvak. These formations consist of diverse lithologies, including shale, calcareous shale, marl, argillaceous limestone, and dark bituminous limestone. The samples in question span from the Paleocene to the lower Cretaceous period17. Figure 1 uses red points to show the geographical locations of oilfields from which data are collected.

The Location of 10 oilfields in the Iranian sector of the Persian Gulf from which the dataset samples were taken. Oilfield locations are shown in red points. This image is taken from the google map and it is available via https://www.google.com/maps/@27.157306,49.7984314,1441295m/data=!3m1!1e3?entry=ttu.

Material and method

Dataset overview

The study's dataset consists of 118 data points from 10 distinct oil fields which were mentioned in the previous section. The analysis was initiated with a comprehensive examination of the dataset. The objective was to undergo a comprehensive model evaluation journey, considering various aspects of data preprocessing and model performance. It is important to mention that no preprocessing methods were used when the dataset was gathered.

Table 1 provides a summary of the statistical characteristics of the measured samples before any preprocessing steps.

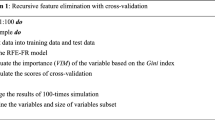

Subsequently, the dataset is carefully divided into an 80% training set and a 20% testing set. There are several preprocessing procedures that can be used to prepare the dataset for modeling and divide it into the training and testing parts18,19,20. Figure 2 illustrates the procedures employed in this study to guarantee the high quality of the dataset for future stages.

Outlier treatment: winsorization

Input data commonly suffers from inaccuracies, errors, or extreme values that manifest as outliers. Treating such outliers can significantly improve the performance of machine learning methods. To this end, various outlier detection and correction techniques have been introduced, one of which is Winsorization. This technique is named after the concept of transforming data to mirror the skyline of Windsor Castle, where extreme values are flattened to achieve a smoother and less jagged profile. Winsorization can be applied at various levels, such as 5% or 1%, by trimming the lowest and highest percentages of data. This technique is extensively used across different fields, including finance and statistics, where outliers can substantially impact analysis outcomes. Analysts strive to create a more stable and robust representation of the data by employing Winsorization, while also accounting for the potential influence of extreme values21.

The process for this method begins with input data, followed by the identification of outliers, according to Fig. 3. To facilitate this, the mean and standard deviation of the dataset are calculated. Based on these statistics, upper and lower thresholds are defined to determine the acceptable range for data values. The data is then winsorized by replacing outliers beyond these thresholds with the nearest threshold values. After this step, a check is performed to determine if any outliers remain. If outliers are still detected, the process iterates; otherwise, it proceeds to output the winsorized data. The integrity of the winsorized data is then verified, and a final report is generated, summarizing the results and ensuring the dataset is ready for further analysis21.

Dimension reduction and decorrelation: principal component analysis (PCA)

The efficacy of machine learning algorithms heavily depends on the dimensionality of input parameters and their correlation. Therefore, it becomes imperative to address these issues to enhance the performance of such algorithms. The present study employs principal component analysis (PCA) as a tool to not only reduce dimensionality but also to decorrelate the input variables. This approach has the potential to improve model accuracy and efficiency, making it an essential tool in the field of machine learning. PCA allows for transforming the original variables into a new set of uncorrelated variables, known as principal components while retaining most of the variability present in the data22,23. Figure 4 illustrates the PCA process, beginning with input data. Initially, the data is standardized to ensure all features contribute equally to the analysis. Following this, the covariance matrix is computed to capture the relationships between the features. The next step is eigendecomposition, which breaks down the covariance matrix into eigenvalues and eigenvectors. Eigenvalues are used to quantify the variance captured by each eigenvector, which represents directions in the feature space. The principal components are selected based on the eigenvalues, and the data is then projected onto this new space defined by these components, resulting in transformed data that retains the most significant patterns while reducing dimensionality. This step-by-step process enhances the interpretability and efficiency of data analysis in various applications22,23,24.

Ensemble methods: bagging and boosting

Ensemble methods, such as bagging and boosting, enhance machine learning model performance and are utilized in the study. These ensemble methods combine the predictions of multiple models to improve the overall performance and accuracy of the system25.

Bagging is an ensemble technique that involves training numerous instances of the same base model on different subsets of the training data sampled with replacement. For regression, the final prediction is obtained by averaging the individual model predictions or by majority voting for classification26,27. In the current study, bagging is implemented using the ExtraTrees algorithm, which is an ensemble method based on decision trees. ExtraTrees enhances the model’s robustness by introducing additional randomness in the tree-building process26,28,29.

Bagging trains several models at the same time and then combines their results. Boosting, on the other hand, trains weak models one after the other, giving more weight to cases that were wrongly classified each time. The final model is a weighted combination of these weak learners30,31. In this study, the researchers employed XGBoost, an efficient and scalable implementation of gradient boosting, to enhance predictive accuracy. XGBoost’s ability to handle missing data, regularization techniques, and parallel computation makes it a suitable choice for boosting models30,31,32.

ExtraTree and XGBoost are better at estimating vitrinite reflectance than linear regression, support vector machines (SVM), neural networks, and decision trees. This is because they can deal with complex, non-linear relationships and interactions in the data. Linear models are limited to linear relationships, making them less effective for this task. SVMs, while powerful, are computationally expensive and require extensive tuning. Neural networks can capture complex patterns but demand significant computational resources and a large amount of data. Decision trees, although interpretable, are prone to overfitting, a problem mitigated by the ensemble nature of ExtraTree and the regularization in XGBoost. ExtraTree’s increased randomness in split selection reduces overfitting and enhances model robustness, while XGBoost incorporates regularization and handles missing values inherently. Both models provide high predictive power, efficiency, and interpretability, making them superior choices for this geological application28,33.

Result and discussion

Winsorization outlier detection

To ensure data reliability, the data preprocessing efforts began with implementing the Winsor outlier detection method, a strategy rooted in domain expertise. Values below the 5th percentile and above the 95th percentile were identified as outliers and rectified.

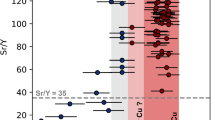

Boxplots were implemented for individual features to substantiate the efficacy of the outlier detection strategy, as presented in Fig. 5. These visualizations provided compelling evidence of the successful removal of data points lying beyond Q3 + 1.5 times the Interquartile Range (IQR) and below Q1 − 1.5 times the IQR33,34. This visual confirmation underscored the rigor of outlier identification and resolution35.

A comparative exploration of dataset statistics was conducted for post-outlier consideration, comparing outcomes in Tables 1 and 2. The noticeable shift in central tendency highlighted the transformative effect of outlier detection on elevating data quality.

Relationship between features

With a heightened awareness of data quality, the study addressed multicollinearity among certain features using a correlation heatmap analysis (Fig. 6). The correlation heatmap shows the correlations between the input features and the target variable. Note that features, also known as independent variables, should not have a high correlation with each other. If their correlation falls between − 0.3 and 0.3, they can be considered independent variables. If their correlation exceeds 0.3 or falls below − 0.3, they are considered highly correlated33,36,37,38. The p-values in Fig. 6 indicate the statistical significance of the correlations. A p-value less than 0.05 typically suggests that the correlation is significant and not due to random chance39.

Analysis

-

a)

T-max vs. Depth: There is a strong correlation between Depth and T-max, with a correlation coefficient of 0.82. This suggests that these two features are closely related. The reason for this correlation could be that as the depth increases, the organic matter produces more light and volatile components, which in turn increases the temperature of peak pyrolysis or T-max.

-

b)

S1/TOC vs. Depth: The S1/TOC and depth variables have a negative correlation value of 0.30. A negative correlation between both attributes means that if one attribute grows, the other attribute declines. Nevertheless, the modest correlation implies that these two attributes may be almost unrelated.

-

c)

HI vs. Depth: The correlation between depth and HI is positive, with a value of 0.58. The positive correlation suggests that these two features exhibit similar behavior and move in the same direction. It may be that the amount of hydrogen in organic matter, which HI measures, tends to increase due to thermal cracking that occurs at higher temperatures and depths. So, as the depth increases, the HI value also tends to rise.

-

d)

T-max vs. S1/TOC: T-max and S1/TOC have a negative correlation of -0.37. As a result, these two features tend to move in opposite directions. S1/TOC can indicate the hydrocarbon already produced, which increases with depth. It can also partially demonstrate thermal maturity, similar to T-max. However, it behaves the opposite way after a certain depth and decreases with increasing depth.

-

e)

T-max vs. HI: There seems to be a positive correlation between T-max and HI, with a value of 0.55, meaning that they tend to move in the same direction. Based on the type of kerogen, HI can be used to assess the quality of hydrocarbons. However, it is worth noting that interpreting the relationship between HI and T-max is quite complex and is influenced by various factors. In fact, for a particular type of kerogen, increasing T-max might decrease the amount of HI.

-

f)

S1/TOC vs. HI: The S1/TOC and HI features seem to have a weak negative correlation of − 0.21, indicating that they tend to move in opposite directions. However, this correlation is not statistically significant, suggesting that the two features are likely independent.

Finally, after analyzing the correlation between the target and features, it can be concluded that VR is strongly and positively correlated with Depth (correlation coefficient of 0.85, p-value 0.020) and T-max (0.71, p-value 0.021), suggesting that as Depth and T-max increase, VR also significantly increases. There is a weaker but still significant positive correlation between HI and VR (0.24, p-value 0.010), implying a slight positive relationship. The correlation between S1/TOC and VR is very weak (0.10) and likely non-significant. Thus, depth and T-max are the most influential features in VR, followed by HI.

Application of principal component analysis

According to the previous section, principal component analysis (PCA) should be implemented because of the high correlation between some features. The PCA method is used to address the issue of multicollinearity and reduce the dataset's dimensions. To better understand the effectiveness of PCA, a metric with the name of Explained Variance Ratio (EVR) has been implemented, which helps to determine the percentage of the total variance of the dataset captured by each principal component. By looking at Fig. 7, we can say that three components are enough because they capture 95% of the dataset's total variance. This is because they include both dimension reduction and information retention. Although 2 components are better in terms of dimensionality reduction, 85 percent of the total variance of the dataset, which is captured by 2 components, is much lower than 95% for 3 components. As a result, PCA with 3 components is a better choice.

This study further validated the efficacy of the dimensionality reduction strategy by examining the correlation among the three retained principal components, as shown in Fig. 8. Because the correlation between the components is so low, this visualization shows that they were not related to each other. This proves that the multicollinearity was successfully reduced.

These results, showcased in Table 3, provided an updated analysis of dataset statistics with three components.

Comparison of predictions

While evaluating the model, several metrics were utilized to gauge its accuracy and predictive capabilities on both the training and testing datasets. These metrics included the Average Absolute Relative Deviation (AARD), R-squared (R2), and Root Mean Squared Error (RMSE). Each of these metrics provided a unique perspective on the model's performance, allowing for a comprehensive understanding of its strengths and limitations.

where N, Oiexp, Oipred, and \(\overline{O }\) represent the total number of data samples, measured VR, predicted VR, and mean VR, respectively. Table 4 shows the results of predictions based on metrics from Eqs. (1), (2), and (3) for sets 1, 2, and 3.

The modeling involved three sets for both the ExtraTree and XGBoost models, reflecting different combinations of preprocessing techniques:

Set 1: After both PCA and Winsorization.

Set 2: With winsorization and without PCA.

Set 3: Without both PCA and Winsorization.

This comprehensive approach led to the implementation of six distinct models, each showing the impact of preprocessing choices on model performance.

Set 1: Models are preferred due to the combined benefits of outlier winsorization and PCA. These models exhibit better performance on the test dataset, with reduced errors and higher predictive accuracy than Sets (2) and (3). Outlier winsorization effectively mitigates extreme values, whereas PCA contributes to the model. The simplification leads to superior results in the test dataset.

Set 2: Models that underwent outlier winsorization without PCA perform reasonably well on the test dataset. However, their performance falls slightly short of that of Set 1. While outlier winsorization proves effective in enhancing generalization, the absence of PCA limits the extent of improvement achievable.

Set 3: Models lacking both PCA and outlier winsorization exhibit the poorest test dataset performance among the three sets. They struggle to handle extreme values, leading to higher errors and reduced predictive accuracy, which highlights the necessity of data preprocessing, particularly outlier treatment, for achieving better test dataset results.

It is important to note that ExtraTree models outperform their XGBoost counterparts on all three sets of test datasets.

In addition to comparing the results of different sets (Set 1, Set 2, and Set 3), the performance of the models will be compared with the best model of the Sadeghtabaghi et al.17 study, which is the Decision Trees (DT). The DT results are shown in Table 5.

-

a.

Train RMSE and R2: The ExtraTree model (Set 1) achieved impressive results with a train RMSE of 0.004 and a train R2 of 0.998; in contrast, the DT model had a train RMSE of 0.025 and a train R2 of 0.929, and the XGBoost model (Set 1) also performed well with a train RMSE of 0.012 and a train R2 of 0.990. Furthermore, the ExtraTree (Set 2) and XGBoost (Set 2) models outperformed the DT model with respect to train RMSE and train R2. Even the ExtraTree (Set 3) and XGBoost (Set 3) models, which had the highest train RMSE and lowest train R2, still performed better than the Sadeghtabaghi et al.17 model.

-

b.

Test RMSE and R2: In the test set, the models performed significantly better than DT. For example, ExtraTree (Set 1) achieved a test RMSE of 0.01 and a test R2 of 0.993, whereas DT had a test RMSE of 0.02 and a test R2 of 0.929.

In the test set, XGBoost (Set 1) also outperformed DT, with a test RMSE of 0.02 and a test R2 of 0.975. Similar trends were observed for ExtraTree (Set 2) and XGBoost (Set 2), as well as ExtraTree (Set 3) and XGBoost (Set 3).

c. Overall RMSE, R2, and AARD: When considering overall performance across all cases, models consistently outperformed DT. For instance, ExtraTree (Set 1) achieved an overall RMSE of 0.06 and an overall R2 of 0.997, whereas DT had an overall RMSE of 0.03 and an overall R2 of 0.929.

Hyperparameters optimization using a grid search

Grid search involves an exhaustive exploration of predefined hyperparameter combinations, systematically evaluating their impact on model performance. This meticulous process played a pivotal role in fine-tuning models, enhancing predictive accuracy and generalization capabilities40,41. For both the ExtraTree (Set 1) and XGBoost (Set 1) models, a range of hyperparameters, including tree depths and the number of estimators, were explored. This comprehensive search ensured that many possible configurations, such as different numbers for depth for each model, were considered. Ultimately, this process led to the identification of the optimal hyperparameters. Table 6 shows the selected hyperparameters for ExtraTree and XGBoost models within the context of each preprocessing case.

The meticulously optimized hyperparameters signify the culmination of the exhaustive optimization process. They ensure that models are precisely tuned for optimal performance across diverse preprocessing sets.

Figures 9 and 10 visually confirm that ExtraTree models consistently outperform XGBoost models across various preprocessing sets. These plots demonstrate that ExtraTree models generate predictions that closely align with the outcomes, indicating their superior ability to capture underlying data patterns.

Figures 11 and 12 show the relative deviation of the developed models, revealing that both ExtraTree and XGBoost models achieve relative deviations between -5 and 5 for the majority of the dataset. This outcome serves as evidence of the models’ accuracy and efficiency.

k-fold cross-validation

As a cornerstone of model evaluation, tenfold cross-validation was implemented to assess model performance rigorously across multiple subsets of the data. tenfold cross-validation outcomes for both models are shown in Table 7.

This approach provided a more comprehensive view of model stability and generalization38.

The performance of ExtraTree(1) and XGBoost(1) on the test set was exceptional, with 0.993 R2-score and 0.974 R2-score, respectively. This indicates that the models could make accurate predictions on new data after PCA and outlier Winsor preprocessing. The tenfold cross-validation results provided a comprehensive view of the models’ performance on different subsets of data. ExtraTree and XGBoost consistently showed high R2 values of around 0.977 and 0.955, respectively, for fold means. This suggests that, on average, ExtraTree (1) performed slightly better across all cross-validation folds. The fact that both models have a minimal variance in R2 values across folds indicates their consistent performance. This implies that the models are stable and robust.

The models are also good at capturing a lot of the variation in the data, as shown by the consistently high R2 values across cross-validation folds and test set evaluations. In other words, they excellently explain the relationship between independent and dependent variables. The strong performance on cross-validation reinforces the models’ ability to generalize to new data, which is consistent with their performance on the test set.

Conclusion

This study presents a novel method for predicting VR using advanced machine learning models, ExtraTree and XGBoost, enhanced by Winsorization for outlier treatment and PCA for dimensionality reduction. These techniques significantly improve model performance, with the ExtraTree model achieving an R2 score of 0.993. Also, several important advantages have been mentioned below.

-

The proposed models offer a reliable and efficient method for assessing the thermal maturity of source rocks, optimizing petroleum exploration by reducing costs and enhancing decision-making processes. Besides, these models enhanced the accuracy of the previous works related to the prediction of vitrinite reflectance using machine learning or other traditional methods.

-

The study underscores the critical role of data preprocessing in machine learning modeling, emphasizing the importance of outlier treatment and dimensional reduction techniques in addition to selecting an appropriate modeling algorithm. In this study, the integration of Winsorization mitigates the impact of extreme values, while PCA reduces dimensionality and decorrelates input variables. The results showed that Winsorization and PCA significantly enhance predictive performance.

-

Across all preprocessing sets, ExtraTree models consistently outperformed XGBoost models, demonstrating superior predictive accuracy.

-

The stability and generalization capabilities of the models were confirmed by ten-fold cross-validation, with consistent R2 values across folds, indicating effective capture of data variance and accurate predictions on unseen data.

As recommendations for future study, the validation of the proposed workflow can be conducted across various underground conditions to ensure robustness. Also, future research can explore alternative outlier detection methods such as Isolation Forest or DBSCAN and other dimensionality reduction techniques like t-SNE or UMAP to enhance model robustness and accuracy.

Data availability

The data will be available upon request. The corresponding author, Hadi Mahdavi Basir, should be contacted for this purpose.

References

Allen, P. A. & Allen, J. R. Basin analysis: Principles and application to petroleum play assessment. (John Wiley & Sons, 2013).

Cardott, B. J. Introduction to vitrinite reflectance as a thermal maturity indicator. AAPG Search and Discovery article (2012).

Wust, R. A., Hackley, P. C., Nassichuk, B. R., Willment, N. & Brezovski, R. In SPE Asia Pacific Unconventional Resources Conference and Exhibition. SPE-167031-MS (SPE).

McCarthy, K. et al. Basic petroleum geochemistry for source rock evaluation. Oilfield Rev. 23, 32–43 (2011).

Maehlmann, R. F. & Le Bayon, R. Vitrinite and vitrinite like solid bitumen reflectance in thermal maturity studies: Correlations from diagenesis to incipient metamorphism in different geodynamic settings. Int. J. Coal Geol. 157, 52–73 (2016).

Dembicki, H. Practical petroleum geochemistry for exploration and production (Elsevier, 2022).

Espitalié, J. et al. Rapid method for source rock characterization, and for determination of their petroleum potential and degree of evolution. Rev. Inst. Fr. Pet 31, 23–42 (1977).

Record, C. U. I. S. Méthode rapide de caractérisation des roches mètres, de leur potentiel pétrolier et de leur degré d’évolution. Rev. Inst. Fr. Pet. 32, 23–42 (1977).

Espitalié, J. (Editions Technip, 1986).

Peters, K. E. Guidelines for evaluating petroleum source rock using programmed pyrolysis. AAPG Bull. 70, 318–329 (1986).

Snowdon, L. R. Rock-Eval Tmax suppression: Documentation and amelioration. AAPG Bull. 79, 1337–1348 (1995).

Jarvie, D. M. Shale resource systems for oil and gas: Part 2—Shale-oil resource systems. (2012).

Jarvie, D. M., Morelos, A. & Han, Z. Detection of pay zones and pay quality, Gulf of Mexico: Application of geochemical techniques (2001).

Galimov, E. & Rabbani, A. Geochemical characteristics and origin of natural gas in southern Iran. Geochem. Int. 39, 780–792 (2001).

Feng, C. et al. Prediction of vitrinite reflectance of shale oil reservoirs using nuclear magnetic resonance and conventional log data. Fuel 339, 127422 (2023).

Safaei-Farouji, M. & Kadkhodaie, A. A comparative study of individual and hybrid machine learning methods for estimation of vitrinite reflectance (Ro) from petrophysical well logs. Model. Earth Syst. Environ. 8, 4867–4881 (2022).

Sadeghtabaghi, Z., Talebkeikhah, M. & Rabbani, A. R. Prediction of vitrinite reflectance values using machine learning techniques: A new approach. J. Pet. Explor. Prod. 11, 651–671 (2021).

Kaleem, W., Tewari, S., Fogat, M. & Martyushev, D. A. A hybrid machine learning approach based study of production forecasting and factors influencing the multiphase flow through surface chokes. Petroleum 10, 354–371 (2024).

Tewari, S. & Dwivedi, U. In Abu Dhabi International Petroleum Exhibition and Conference. D012S122R001 (SPE).

Tewari, S. & Dwivedi, U. In 2019 IEEE Region 10 Symposium (TENSYMP), pp. 90–95 (IEEE).

Dixon, W. J. & Yuen, K. K. Trimming and winsorization: A review. Statistische Hefte 15, 157–170 (1974).

Wold, S., Esbensen, K. & Geladi, P. Principal component analysis. Chemometr. Intell. Lab. Syst. 2, 37–52 (1987).

Labrín, C. & Urdinez, F. In R for Political Data Science, pp. 375–393 (Chapman and Hall/CRC, 2020).

Bro, R. & Smilde, A. K. Principal component analysis. Anal. Methods 6, 2812–2831 (2014).

Dietterich, T. G. in International workshop on multiple classifier systems. 1–15 (Springer).

Breiman, L. Bagging predictors. Mach. Learn. 24, 123–140 (1996).

Talebkeikhah, M., Sadeghtabaghi, Z. & Shabani, M. A comparison of machine learning approaches for prediction of permeability using well log data in the hydrocarbon reservoirs. J. Hum. Earth Future 2, 82–99 (2021).

Kharazi Esfahani, P., Peiro Ahmady Langeroudy, K. & Khorsand Movaghar, M. R. Enhanced machine learning—ensemble method for estimation of oil formation volume factor at reservoir conditions. Sci. Rep. 13, 15199 (2023).

Geurts, P., Ernst, D. & Wehenkel, L. Extremely randomized trees. Mach. Learn. 63, 3–42 (2006).

Schapire, R. E. The boosting approach to machine learning: An overview. In Nonlinear estimation and classification, pp. 149–171 (2003).

Freund, Y., Schapire, R. & Abe, N. A short introduction to boosting. J. Jpn. Soc. Artif. Intell. 14, 1612 (1999).

Peiro Ahmady Langeroudy, K., Kharazi Esfahani, P. & Khorsand Movaghar, M. R. Enhanced intelligent approach for determination of crude oil viscosity at reservoir conditions. Sci. Rep. 13, 1666 (2023).

Kharazi Esfahani, P., Akbari, M. & Khalili, Y. A comparative study of fracture conductivity prediction using ensemble methods in the acid fracturing treatment in oil wells. Sci. Rep. 14, 648 (2024).

Hubert, M. & Vandervieren, E. An adjusted boxplot for skewed distributions. Comput. Stat. Data Anal. 52, 5186–5201 (2008).

Ghosh, D. & Vogt, A. In Joint statistical meetings, pp. 3455–3460.

Sedgwick, P. Pearson’s correlation coefficient. BMJ 345 (2012).

Schober, P., Boer, C. & Schwarte, L. A. Correlation coefficients: Appropriate use and interpretation. Anesth. Anal. 126, 1763–1768 (2018).

Rodriguez, J. D., Perez, A. & Lozano, J. A. Sensitivity analysis of k-fold cross validation in prediction error estimation. IEEE Trans. Pattern Anal. Mach. Intell. 32, 569–575 (2009).

Thiese, M. S., Ronna, B. & Ott, U. P value interpretations and considerations. J. Thorac. Dis. 8, E928 (2016).

Liashchynskyi, P. & Liashchynskyi, P. Grid search, random search, genetic algorithm: a big comparison for NAS. arXiv preprint arXiv:1912.06059 (2019).

Feurer, M. & Hutter, F. Hyperparameter optimization. In Automated machine learning: Methods, systems, challenges, pp. 3–33 (2019).

Acknowledgements

We would like to thank Zahra Sadeghtabaghi for sharing the dataset.

Funding

This research received no specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

Author information

Authors and Affiliations

Contributions

Parsa Kharazi Esfahani: modeling, data science, writing—original draft and visualization; Hadi Mahdavi Basir: validation, supervision, writing—review and editing; Ahmad Reza Rabbani: approach validation and results consistency.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Kharazi Esfahani, P., Mahdavi Basir, H. & Rabbani, A.R. A rigorous workflow and comparative analysis for accurate determination of vitrinite reflectance using data-driven approaches in the Persian Gulf region. Sci Rep 14, 20366 (2024). https://doi.org/10.1038/s41598-024-71521-0

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-024-71521-0

- Springer Nature Limited