Abstract

Bioinformatics tools are essential for performing analyses in the omics sciences. Given the numerous experimental opportunities arising from advances in omics and easier access to high-throughput sequencing platforms, these tools play a fundamental role in research projects. Despite the considerable progress made possible by bioinformatics tools, many of them are tailored to specific analytical goals, which makes it challenging for non-bioinformaticians to integrate their results into a customized pipeline. To address this problem, we developed BioPipeline Creator, a user-friendly Java-based GUI that allows different software tools to be integrated into a repertoire while ensuring easy user interaction through an accessible graphical interface. Consisting of client and server components, BioPipeline Creator simplifies the use of various bioinformatics tools for users without advanced computer skills. The client can run on less powerful devices or workstations, allowing users to keep their operating system without having to switch to another compatible system. The server performs the processing tasks and can run the analysis on the user's local network or on a remote one. BioPipeline Creator is compatible with the major operating systems and is available at https://github.com/allanverasce/bpc.git.

Introduction

The development of computational tools in bioinformatics has transformed the possibilities of biological analysis and made bioinformatics an indispensable resource for numerous research projects in the omics sciences. These software applications have evolved in recent years and have become critical components and, in many cases, integral parts of various biological workflows. They can process the large volumes of data being generated and thereby enable new discoveries. In genomics, the advent of next-generation sequencing platforms and the increased accessibility of these technologies have expanded their application to tasks such as gene expression analysis, gene prediction, genome assembly and annotation, genetic diversity analysis, and comparative genomic analysis1.

Many tools used in omics research are designed to perform automated actions sequentially based on an input file containing biological information, typically using the result of one step as input for the next step, creating a so-called pipeline or workflow, such as the widely used Broad Institute’s Genome Analysis Toolkit (GATK) bioinformatics pipeline2. In structural genomics, GATK is a comprehensive software package that includes genomics tools for variant detection, genotyping, and annotation. It provides a standardized pipeline for processing next-generation sequencing data, including read alignment, variant calling, and quality control. It has been used extensively in large genome projects, such as the 1000 Genomes Project and the Cancer Genome Atlas2.

Another popular set of bioinformatics workflows is provided by the ENCODE (Encyclopedia of DNA Elements) Data Coordinating Center, which offers pipelines specifically designed for the analysis of functional genomics data such as ChIP-seq (Chromatin Immunoprecipitation Sequencing), RNA-seq (RNA Sequencing), and DNase-seq (DNase I Hypersensitive Site Sequencing). These pipelines comprise various tools and algorithms for processing raw data and performing quality control, yielding valuable insights into gene regulation and chromatin structure3.

In environmental genomics, microbial ecology, and metagenomics, 16S rRNA gene analysis, a valuable resource for the discovery and classification of microorganisms, has opened new frontiers with software such as QIIME (Quantitative Insights Into Microbial Ecology). QIIME, a pipeline that has become particularly important in metagenomics, is a comprehensive suite of tools for analyzing microbial communities using high-throughput sequencing data. It offers a range of analysis modules, such as quality filtering, taxonomic classification, and diversity analysis. QIIME has been widely used in microbiome studies and has contributed to our understanding of the role of microbial communities in diverse environments, health, and disease4.

Galaxy is an online platform widely used in the field of bioinformatics that provides an accessible and user-friendly approach for analyzing genomic, metagenomic, transcriptomic, and proteomic data5. One of its main advantages is its intuitive interface, which allows researchers to perform complex analyses of biological data even without in-depth programming experience. In addition, Galaxy offers a wide range of pre-installed tools and pipelines that simplify the analysis process and save researchers time6.

However, Galaxy also has some disadvantages. Its dependence on the Internet can be an obstacle, as a stable connection is required to access the platform and use it efficiently6. In addition, it is difficult to install new software for use within the platform, and the space available for storing files and working with data is limited. Processing capacity may also be limited compared with on-premises solutions, which can slow down computationally intensive analyses6.

Significant advances in sequencing technologies, the development of bioinformatics tools, and the modernization of omics approaches have opened new horizons for biological and medical research. Despite these new possibilities, most tools developed to analyze such data are designed to perform specific analyses and are usually command-line based. When users need to integrate the results of these tools into a personalized, private pipeline, this can become a difficult task, especially for researchers whose routine focuses on laboratory work and who have little experience with computers7,8.

Modern experiments, especially in the "omics" fields, often involve large amounts of data, so bioinformatics analysis is clearly necessary in a research laboratory. Despite the modernization of bioinformatics software in recent years, the most user-friendly software is available in paid versions, while the free versions of bioinformatics tools are predominantly command-line based and inaccessible to many non-bioinformaticians who want to perform genomics, metagenomics, or other omics analyses8. Therefore, this paper proposes BioPipeline Creator, a user-friendly Java-based GUI for managing and customizing biological data pipelines, whose intuitive graphical user interface simplifies the user's interaction with the system and allows a range of bioinformatics tools to be run.

BioPipeline Creator consists of two parts. The server part is responsible for processing, providing the software modules, and transmitting the results, completely abstracting the complexity of the tools from the user. The client part has a graphical interface that displays all modules previously installed and registered on the server in a simple and intuitive way, helping the user to enter data and customize the appropriate analysis pipeline.

Materials and methods

Programming language

The programming language chosen for the development of BioPipeline Creator was Java (https://www.oracle.com/), selected for its robustness and cross-platform capabilities. The Swing API was used to create the graphical user interface, and Apache NetBeans version 17 was used as the IDE (Integrated Development Environment).

Maven (https://maven.apache.org/) played a crucial role in managing the project's dependencies. Its ease of use in automatically managing dependencies, including the creation of a JAR package with bundled dependencies, made it the preferred choice.

Network communication

The interaction between the client and server software components of BioPipeline Creator was established through the use of Java socket classes (https://docs.oracle.com/javase/7/docs/api/java/net/Socket.html). This design allows users (the client part) to submit jobs remotely without having to be physically present in the network structure in which the server part of the software operates.
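To illustrate the kind of exchange that Java sockets make possible, the following minimal sketch submits a job from a client to a server over TCP on the loopback interface. The one-line text protocol ("SUBMIT <project> <pipeline>" answered by "ACCEPTED <jobId>"), the class name, and the job identifier are hypothetical and are not BioPipeline Creator's actual wire format.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.OutputStreamWriter;
import java.io.PrintWriter;
import java.net.ServerSocket;
import java.net.Socket;
import java.nio.charset.StandardCharsets;

// Hypothetical line-based protocol, for illustration only:
//   client -> server: "SUBMIT <project> <pipeline>"
//   server -> client: "ACCEPTED <jobId>" or "ERROR <reason>"
class JobSocketDemo {

    /** Server side: accepts one connection and answers a single request. */
    static void serveOnce(ServerSocket server, String jobId) throws IOException {
        try (Socket client = server.accept();
             BufferedReader in = new BufferedReader(
                     new InputStreamReader(client.getInputStream(), StandardCharsets.UTF_8));
             PrintWriter out = new PrintWriter(
                     new OutputStreamWriter(client.getOutputStream(), StandardCharsets.UTF_8), true)) {
            String request = in.readLine();
            if (request != null && request.startsWith("SUBMIT ")) {
                // A real server would queue the pipeline here before replying.
                out.println("ACCEPTED " + jobId);
            } else {
                out.println("ERROR unknown request");
            }
        }
    }

    /** Client side: sends one request and returns the server's one-line reply. */
    static String submit(String host, int port, String project, String pipeline) throws IOException {
        try (Socket socket = new Socket(host, port);
             PrintWriter out = new PrintWriter(
                     new OutputStreamWriter(socket.getOutputStream(), StandardCharsets.UTF_8), true);
             BufferedReader in = new BufferedReader(
                     new InputStreamReader(socket.getInputStream(), StandardCharsets.UTF_8))) {
            out.println("SUBMIT " + project + " " + pipeline);
            return in.readLine();
        }
    }

    /** Runs server and client in one JVM; port 0 asks the OS for any free port. */
    static String roundTrip(String project, String pipeline) {
        try (ServerSocket server = new ServerSocket(0)) {
            Thread serverThread = new Thread(() -> {
                try {
                    serveOnce(server, "job-1");
                } catch (IOException e) {
                    throw new RuntimeException(e);
                }
            });
            serverThread.start();
            String reply = submit("127.0.0.1", server.getLocalPort(), project, pipeline);
            serverThread.join();
            return reply;
        } catch (IOException | InterruptedException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(roundTrip("demo", "NGSReadsTreatment>SPAdes>Prokka"));
    }
}
```

In a deployment, the server would loop on `accept()` and hand each connection to a worker thread; listening on a non-loopback address is what lets clients on other machines submit jobs.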

Database

MySQL version 8.0.32 is the default DBMS used for monitoring and controlling processing steps and projects, as well as for storing information about the integration of new tools and their parameters into the BioPipeline Creator. However, users have the option to use SQLite version 3.38.5 (https://www.sqlite.org/) as an alternative to the default database. The Spring Security Crypto module, which is available in the Maven repository, was used to encrypt the administrator password.
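In the Spring Security Crypto module, `BCryptPasswordEncoder.encode` produces a salted hash and `matches` checks a candidate password against it. The sketch below shows the same store-a-salted-hash idea using only the JDK, with PBKDF2 as a plainly swapped-in algorithm so that it runs without extra dependencies. The class name, the `iterations:salt:hash` storage format, and the iteration count are assumptions for illustration.

```java
import java.security.GeneralSecurityException;
import java.security.MessageDigest;
import java.security.SecureRandom;
import java.util.Base64;
import javax.crypto.SecretKeyFactory;
import javax.crypto.spec.PBEKeySpec;

// JDK-only stand-in for a password encoder: salted PBKDF2-HMAC-SHA256.
// Stored form (hypothetical): "<iterations>:<base64 salt>:<base64 hash>".
class AdminPasswordHasher {
    private static final int ITERATIONS = 120_000; // illustrative work factor
    private static final int KEY_BITS = 256;
    private static final SecureRandom RANDOM = new SecureRandom();

    /** Hashes a password with a fresh random salt. */
    static String hash(char[] password) {
        byte[] salt = new byte[16];
        RANDOM.nextBytes(salt);
        byte[] derived = derive(password, salt, ITERATIONS);
        Base64.Encoder b64 = Base64.getEncoder();
        return ITERATIONS + ":" + b64.encodeToString(salt) + ":" + b64.encodeToString(derived);
    }

    /** Re-derives the hash with the stored salt and compares in constant time. */
    static boolean matches(char[] password, String stored) {
        String[] parts = stored.split(":");
        if (parts.length != 3) {
            return false;
        }
        int iterations = Integer.parseInt(parts[0]);
        byte[] salt = Base64.getDecoder().decode(parts[1]);
        byte[] expected = Base64.getDecoder().decode(parts[2]);
        return MessageDigest.isEqual(expected, derive(password, salt, iterations));
    }

    private static byte[] derive(char[] password, byte[] salt, int iterations) {
        PBEKeySpec spec = new PBEKeySpec(password, salt, iterations, KEY_BITS);
        try {
            return SecretKeyFactory.getInstance("PBKDF2WithHmacSHA256")
                    .generateSecret(spec).getEncoded();
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException("PBKDF2 is not available", e);
        } finally {
            spec.clearPassword();
        }
    }

    public static void main(String[] args) {
        String stored = hash("admin".toCharArray()); // initial default password
        System.out.println(matches("admin".toCharArray(), stored)); // true
        System.out.println(matches("guess".toCharArray(), stored)); // false
    }
}
```

Only the resulting hash string would be stored in the MySQL or SQLite table; the plain password never is.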

Status control via Telegram

The graphical user interface of BioPipeline Creator uses a color scheme that reflects the processing status in real time. Each status change updates the pipeline element currently being processed and displays it in a specific color: yellow for elements being processed, green for completed elements, and red for processing errors. In addition, a Telegram bot (Telegram Bot API version 6.0, https://core.telegram.org/bots/api) sends messages about the processing status, including any errors, to the user in real time. This remote monitoring makes the control process more dynamic and eliminates the need to watch the user's device on site.
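A minimal sketch of the two pieces described above: a status type carrying the three display colors, and a Telegram Bot API `sendMessage` call (parameters `chat_id` and `text`) built with the JDK's `java.net.http` client. The class name, message format, and the choice of a form-encoded POST are assumptions; the bot token and chat id are placeholders the user would supply.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

class StatusNotifier {

    /** Pipeline element states; rgb is 0xRRGGBB, e.g. new java.awt.Color(rgb) in a Swing view. */
    enum StepStatus {
        RUNNING(0xFFFF00), COMPLETED(0x00FF00), ERROR(0xFF0000);

        final int rgb;

        StepStatus(int rgb) {
            this.rgb = rgb;
        }
    }

    /** Human-readable status line, e.g. "[demo] SPAdes: ERROR". */
    static String message(String project, String tool, StepStatus status) {
        return "[" + project + "] " + tool + ": " + status;
    }

    /** Builds a Bot API sendMessage call as a form-encoded POST. */
    static HttpRequest sendMessageRequest(String botToken, long chatId, String text) {
        String form = "chat_id=" + chatId
                + "&text=" + URLEncoder.encode(text, StandardCharsets.UTF_8);
        return HttpRequest.newBuilder(URI.create("https://api.telegram.org/bot" + botToken + "/sendMessage"))
                .header("Content-Type", "application/x-www-form-urlencoded")
                .POST(HttpRequest.BodyPublishers.ofString(form))
                .build();
    }

    /** Sends the request (needs network access and a real bot token); returns the HTTP status. */
    static int send(HttpClient client, HttpRequest request) throws Exception {
        HttpResponse<String> response = client.send(request, HttpResponse.BodyHandlers.ofString());
        return response.statusCode();
    }

    public static void main(String[] args) throws Exception {
        if (args.length < 2) {
            System.out.println("usage: StatusNotifier <botToken> <chatId>");
            return;
        }
        String text = message("demo", "SPAdes", StepStatus.ERROR);
        HttpRequest request = sendMessageRequest(args[0], Long.parseLong(args[1]), text);
        System.out.println("HTTP " + send(HttpClient.newHttpClient(), request));
    }
}
```

Keeping request construction separate from sending makes the notification logic testable without network access.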

Available tasks and tools

The tools described below have already been tested and implemented in BioPipeline Creator, and all of them are available in the BioPipeline Creator version 1.0 database. They are included for illustrative purposes only, to demonstrate the efficiency and customizability of the analysis pipelines. Any Linux-based tool is a candidate for BioPipeline Creator, and the selection of tools depends on the analysis the user wishes to perform.

Data quality assessment

The tool implemented to assess the quality of the raw sequencing data was FastQC (https://www.bioinformatics.babraham.ac.uk/projects/fastqc/), run through the R package fastqcr version 0.1.3 (https://github.com/kassambara/fastqcr) on R version 4.4.0 (https://cran.r-project.org/). FastQC assesses the quality of raw sequencing data in FASTQ format. It evaluates the sequencing reads and reports the presence of adapters, sequences containing Ns, mean and unexpected GC content, read duplication, and other information that is relevant when removing poor-quality data.
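Two of the per-read metrics mentioned above, GC content and the number of ambiguous N bases, are simple to compute from 4-line FASTQ records. The toy sketch below is an illustration of those metrics only, not FastQC's implementation; the class and method names are hypothetical, and GC content is computed here over called (non-N) bases as a stated choice.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.stream.Collectors;

// Toy per-read metrics of the kind FastQC summarizes; not FastQC's own algorithm.
class FastqMetrics {

    static final class Read {
        final String id;
        final String sequence;
        final String quality;

        Read(String id, String sequence, String quality) {
            this.id = id;
            this.sequence = sequence;
            this.quality = quality;
        }
    }

    /** Parses 4-line FASTQ records: "@id", sequence, "+", quality string. */
    static List<Read> parse(String fastq) {
        List<String> lines = fastq.lines().filter(l -> !l.isEmpty()).collect(Collectors.toList());
        if (lines.size() % 4 != 0) {
            throw new IllegalArgumentException("Truncated FASTQ input");
        }
        List<Read> reads = new ArrayList<>();
        for (int i = 0; i < lines.size(); i += 4) {
            String header = lines.get(i);
            String seq = lines.get(i + 1);
            String plus = lines.get(i + 2);
            String qual = lines.get(i + 3);
            if (!header.startsWith("@") || !plus.startsWith("+") || seq.length() != qual.length()) {
                throw new IllegalArgumentException("Malformed FASTQ record " + (i / 4 + 1));
            }
            reads.add(new Read(header.substring(1), seq, qual));
        }
        return reads;
    }

    /** Fraction of G/C among called (non-N) bases. */
    static double gcFraction(String seq) {
        int gc = 0;
        int called = 0;
        for (char c : seq.toUpperCase().toCharArray()) {
            if (c == 'G' || c == 'C') {
                gc++;
            }
            if (c != 'N') {
                called++;
            }
        }
        return called == 0 ? 0.0 : (double) gc / called;
    }

    /** Number of ambiguous N bases in a read. */
    static int countN(String seq) {
        int n = 0;
        for (char c : seq.toCharArray()) {
            if (c == 'N' || c == 'n') {
                n++;
            }
        }
        return n;
    }

    public static void main(String[] args) {
        String fastq = "@r1\nACGTNN\n+\nIIIIII\n@r2\nGGCC\n+\nIIII\n";
        for (Read r : parse(fastq)) {
            System.out.printf("%s GC=%.2f N=%d%n", r.id, gcFraction(r.sequence), countN(r.sequence));
        }
    }
}
```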

Redundancy removal in reads and treatment for quality improvement

NGSReadsTreatment (version 1.4)9 was used to remove redundant reads from raw or treated datasets. This tool uses a probabilistic data structure known as a cuckoo filter to remove redundancy in NGS data. The treatment step, which aims to improve data processing performance, uses NGSReadsTreatment version 2.0, which is also integrated into BioPipeline Creator. To retain the best possible quality, this version compares the average quality values of the reads in the treated dataset (which contains unique reads) with those of the reads in the raw dataset during redundancy elimination, so that if the same read is found in both datasets, the read with the higher average quality is kept in the final dataset.
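The selection rule described above, keeping the copy of a duplicated read with the higher average quality, can be sketched as follows. As a plainly swapped-in simplification, an exact `LinkedHashMap` keyed by sequence replaces NGSReadsTreatment's cuckoo filter, and quality strings are assumed to use Phred+33 encoding; the class and read names are hypothetical.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Exact, map-based stand-in for the cuckoo-filter approach: duplicates are
// detected by identical sequence, and the copy with the higher mean quality wins.
class DedupByQuality {

    static final class Read {
        final String id;
        final String sequence;
        final String quality;

        Read(String id, String sequence, String quality) {
            this.id = id;
            this.sequence = sequence;
            this.quality = quality;
        }

        /** Mean Phred score, assuming Phred+33 (Sanger / Illumina 1.8+) encoding. */
        double meanQuality() {
            if (quality.isEmpty()) {
                return 0.0;
            }
            long sum = 0;
            for (int i = 0; i < quality.length(); i++) {
                sum += quality.charAt(i) - 33;
            }
            return (double) sum / quality.length();
        }
    }

    /** Keeps one read per distinct sequence, preferring the higher mean quality. */
    static List<Read> removeDuplicates(List<Read> reads) {
        Map<String, Read> best = new LinkedHashMap<>(); // preserves first-seen order
        for (Read r : reads) {
            best.merge(r.sequence, r,
                    (kept, candidate) -> candidate.meanQuality() > kept.meanQuality() ? candidate : kept);
        }
        return new ArrayList<>(best.values());
    }

    public static void main(String[] args) {
        List<Read> reads = List.of(
                new Read("raw_1", "ACGT", "!!!!"),     // mean Q0
                new Read("treated_1", "ACGT", "IIII"), // mean Q40
                new Read("raw_2", "GGGG", "5555"));    // mean Q20
        for (Read r : removeDuplicates(reads)) {
            System.out.println(r.id + " " + r.sequence + " Q" + r.meanQuality());
        }
    }
}
```

The trade-off is memory: the map stores every distinct sequence, whereas a cuckoo filter stores only short fingerprints and accepts a small false-positive rate, which is what makes it practical for large NGS datasets.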

Genome de novo assembly

SPAdes (version 3.15.4)10 and MEGAHIT (version 1.2.9)11 were integrated into the pipeline because they are widely used for de novo genome assembly.

Contig Reordering

Mauve allows users to reorder contigs against a reference genome, which facilitates the organization of assembly results. When a reference genome is available, users can easily reorder the result of a de novo assembly. This reordering is valuable for analyses aimed at completing genome assemblies and closing gaps12.

Evaluation of gene completeness

BUSCO (version 5.4.4)13 was used to assess gene completeness. This software works with datasets for a wide range of organisms and is extensively used by the scientific community.

Annotation of prokaryotic genomes

Prokaryotic genomes are annotated with Prokka (version 1.14.6)14. Prokka rapidly identifies coding sequences in assembled prokaryotic genomes and annotates the corresponding genes, offering a straightforward and fast approach that is very useful for annotating the results of prokaryotic de novo assemblies.

Tool validation

For validation, BioPipeline Creator was run on five NGS datasets containing raw data from prokaryotic genomes. All raw data used, in FASTQ format, are publicly available from the National Center for Biotechnology Information (NCBI) under the following accession numbers: Mycobacterium tuberculosis KZN 4207 (SRA number SRR1144800), Escherichia coli KLY (SRR1424625), Escherichia coli 266917 (SRR5470155), Corynebacterium glutamicum ATCC 13032 (SRR638977), and Escherichia coli O111:H- str. 11128 (ERR351258). The datasets were downloaded with the fastq-dump utility of the SRA Toolkit, using the “--split-files” option for paired data. The tests with Trinity and Salmon were performed with the test datasets distributed with each software.
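The download step can be scripted from Java with `ProcessBuilder`, a common way for a Java program to launch command-line tools. This sketch assumes `fastq-dump` is installed and on the PATH; the class name and the `--paired` switch of the demo are hypothetical, and whether each accession above is paired has to be checked per run.

```java
import java.io.File;
import java.io.IOException;
import java.util.ArrayList;
import java.util.List;

// Wraps the SRA Toolkit's fastq-dump; assumes it is installed and on the PATH.
class SraDownloader {

    /** Builds the fastq-dump command; --split-files writes _1/_2 files for paired runs. */
    static List<String> command(String accession, boolean paired) {
        List<String> cmd = new ArrayList<>();
        cmd.add("fastq-dump");
        if (paired) {
            cmd.add("--split-files");
        }
        cmd.add(accession);
        return cmd;
    }

    /** Runs fastq-dump in outDir, streaming its output to the console, and returns the exit code. */
    static int download(String accession, boolean paired, File outDir)
            throws IOException, InterruptedException {
        Process process = new ProcessBuilder(command(accession, paired))
                .directory(outDir)
                .inheritIO()
                .start();
        return process.waitFor();
    }

    public static void main(String[] args) throws Exception {
        if (args.length < 1) {
            System.out.println("usage: SraDownloader <accession> [--paired]");
            return;
        }
        boolean paired = args.length > 1 && args[1].equals("--paired");
        int exit = download(args[0], paired, new File("."));
        System.out.println(args[0] + " finished with exit code " + exit);
    }
}
```

Passing the arguments as a list, rather than as one shell string, avoids quoting problems with file names.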

Results

The BioPipeline Creator

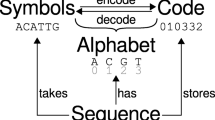

BioPipeline Creator is based on a client/server architecture (Fig. 1). This design concentrates the installation and configuration of tools on the BioPipeline Creator server, so client users do not have to install the tools on their own devices or customize their operating systems to use the tools required for their analyses.

An important aspect of this design is that the server can be configured for remote access. This allows more flexible use, since users do not need to be physically present on the same local network to use the functionality of BioPipeline Creator. More detailed information on this subject is available in the user guide.

Figure 2 shows the main interface of BioPipeline Creator, which allows users to design a customized pipeline. In the example shown, four tools are registered in BioPipeline Creator: NGSReadsTreatment, which removes redundant reads obtained during sequencing; SPAdes, which assembles the genome; Mauve, which orders the contigs; and Prokka, which annotates the genome.

The “Tools” section lists the tools available in the user's repertoire; to use them in the pipeline, the user simply selects them and drags them into the “Pipeline” workflow section, as shown in Fig. 2. In this way, the user has complete control over the order in which the tools process the data. If a tool produces output that is incompatible with the input expected by the next step, the user receives a notification about this output-input incompatibility.
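The output-input check can be modeled by giving each tool a set of accepted input formats and one output format, then comparing adjacent steps. The sketch below is a hypothetical model: the class, the format labels, and the assignment of formats to tools are simplified assumptions for illustration, not BioPipeline Creator's internal representation.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;
import java.util.TreeSet;

// Hypothetical model of the output-input compatibility check between adjacent steps.
class PipelineValidator {

    static final class Tool {
        final String name;
        final Set<String> accepts; // input formats the tool can read
        final String produces;     // primary output format

        Tool(String name, Set<String> accepts, String produces) {
            this.name = name;
            this.accepts = accepts;
            this.produces = produces;
        }
    }

    /** Returns one warning per adjacent pair whose formats do not match; empty means valid. */
    static List<String> check(List<Tool> pipeline) {
        List<String> warnings = new ArrayList<>();
        for (int i = 0; i + 1 < pipeline.size(); i++) {
            Tool from = pipeline.get(i);
            Tool to = pipeline.get(i + 1);
            if (!to.accepts.contains(from.produces)) {
                warnings.add(from.name + " produces " + from.produces
                        + " but " + to.name + " expects " + new TreeSet<>(to.accepts));
            }
        }
        return warnings;
    }

    // Illustrative format labels only; real tools have richer inputs and outputs.
    static final Tool READS_TREATMENT = new Tool("NGSReadsTreatment", Set.of("FASTQ"), "FASTQ");
    static final Tool SPADES = new Tool("SPAdes", Set.of("FASTQ"), "FASTA");
    static final Tool MAUVE = new Tool("Mauve", Set.of("FASTA"), "FASTA");
    static final Tool PROKKA = new Tool("Prokka", Set.of("FASTA"), "GFF");

    public static void main(String[] args) {
        System.out.println(check(List.of(READS_TREATMENT, SPADES, MAUVE, PROKKA))); // []
        System.out.println(check(List.of(SPADES, READS_TREATMENT)));
    }
}
```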

Within the same window, there is a data input area to receive data for processing. The format of the input data can vary depending on the tool selected. In the scenario shown in Fig. 2, it is a paired-end read library in FASTQ format that is to be processed. It is important to note that the user must enter a reference file in the “Reference File” field if the tool requires one.

BioPipeline Creator provides two options for retrieving the results of an analysis: “FullResult” and “LastResult”. With these options, users decide whether to receive the results of all steps, including all intermediate files generated by the pipeline, or only the result of the last tool in the pipeline.
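The difference between the two options amounts to which step outputs are returned. A minimal sketch, assuming each pipeline step writes to its own output directory; the enum, class, and directory names are hypothetical.

```java
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.List;

// Hypothetical selection logic for the two result options.
class ResultSelector {

    enum ResultMode { FULL_RESULT, LAST_RESULT }

    /** stepOutputs holds one output directory per pipeline step, in execution order. */
    static List<Path> select(List<Path> stepOutputs, ResultMode mode) {
        if (mode == ResultMode.FULL_RESULT || stepOutputs.isEmpty()) {
            return List.copyOf(stepOutputs); // every intermediate result
        }
        return List.of(stepOutputs.get(stepOutputs.size() - 1)); // final tool only
    }

    public static void main(String[] args) {
        List<Path> outputs = List.of(
                Paths.get("project", "01_NGSReadsTreatment"),
                Paths.get("project", "02_SPAdes"),
                Paths.get("project", "03_Mauve"),
                Paths.get("project", "04_Prokka"));
        System.out.println(select(outputs, ResultMode.FULL_RESULT));
        System.out.println(select(outputs, ResultMode.LAST_RESULT));
    }
}
```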

Figure 3 shows the windows for adding tools to the BioPipeline Creator repertoire. Here, the user can add tools installed on their workstation or server and make them available to other users. The user specifies the executable file of the tool, e.g. “/opt/SPAdes/bin/spades.py”, along with its parameters and default values. This configuration is saved in the BioPipeline Creator database, so the step does not have to be repeated later.
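A registered tool, as described above, boils down to an executable path plus ordered parameters with default values, which can be turned into an argument list for `ProcessBuilder`. The class below is a hypothetical shape for such an entry, not BioPipeline Creator's database schema; the SPAdes options `-1`, `-2`, `-o`, and `--careful` are real, while the file names are placeholders.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical shape of a registered tool: executable path plus ordered default parameters.
class ToolDefinition {
    final String name;
    final String executable;
    final Map<String, String> defaults; // flag -> default value; "" marks a bare flag

    ToolDefinition(String name, String executable, Map<String, String> defaults) {
        this.name = name;
        this.executable = executable;
        this.defaults = new LinkedHashMap<>(defaults); // defensive copy that keeps order
    }

    /** Turns the stored definition into an argument list for ProcessBuilder. */
    List<String> command() {
        List<String> cmd = new ArrayList<>();
        cmd.add(executable);
        for (Map.Entry<String, String> e : defaults.entrySet()) {
            cmd.add(e.getKey());
            if (!e.getValue().isEmpty()) {
                cmd.add(e.getValue());
            }
        }
        return cmd;
    }

    /** Example entry using real SPAdes options; file names are placeholders. */
    static ToolDefinition spadesExample() {
        Map<String, String> params = new LinkedHashMap<>();
        params.put("-1", "reads_1.fastq");
        params.put("-2", "reads_2.fastq");
        params.put("-o", "spades_out");
        params.put("--careful", "");
        return new ToolDefinition("SPAdes", "/opt/SPAdes/bin/spades.py", params);
    }

    public static void main(String[] args) {
        System.out.println(String.join(" ", spadesExample().command()));
    }
}
```

An insertion-ordered map keeps parameters in the order the administrator entered them, which matters for tools that expect positional arguments.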

Access to the repertoire window for adding new tools to BioPipeline Creator is protected by an encrypted password. This protection preserves the integrity of the information already added by preventing unauthorized access and accidental changes. When opening this menu, the user is prompted for the administrator password, which is initially set to "admin" and can be changed the first time BioPipeline Creator is used. This does not restrict normal use of BioPipeline Creator: a guest user can add parameters and change parameter values without access to the administrator session, but these additions and changes apply only to the current project.

On the project creation screen, the user can create a new project to which any of the added tools can be assigned. The parameters and values entered on the previous screen can be customized for this specific project, and the user can also enter new parameters and values that apply only to the project in question.
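The project-level behavior described above can be expressed as a merge rule: start from the defaults stored in the database, let project values replace defaults with the same flag, and append new flags, without ever modifying the stored defaults. This is a hypothetical sketch of that rule; the class name is an assumption, and `-t` (threads) and `--careful` are real SPAdes options used only as examples.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical merge rule: database defaults, then project-level changes on top.
class ProjectParameters {

    /**
     * Returns the parameters a project actually runs with. Project values replace
     * defaults with the same flag and new flags are appended; the stored defaults
     * are copied, never modified, so other projects keep seeing them unchanged.
     */
    static Map<String, String> effective(Map<String, String> toolDefaults,
                                         Map<String, String> projectOverrides) {
        Map<String, String> merged = new LinkedHashMap<>(toolDefaults);
        merged.putAll(projectOverrides);
        return merged;
    }

    public static void main(String[] args) {
        Map<String, String> defaults = new LinkedHashMap<>();
        defaults.put("-t", "8");          // thread count stored in the database
        defaults.put("-o", "spades_out");
        Map<String, String> project = new LinkedHashMap<>();
        project.put("-t", "16");          // guest change, valid for this project only
        project.put("--careful", "");     // project-specific extra flag
        System.out.println(effective(defaults, project)); // {-t=16, -o=spades_out, --careful=}
        System.out.println(defaults);                      // {-t=8, -o=spades_out}
    }
}
```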

An additional feature of BioPipeline Creator is the ability to monitor the progress of pipeline steps directly through the Telegram messaging app on a mobile device. This eliminates the need for the user to be physically present in front of their device to follow the processing of their analysis. Further details can be found in the BioPipeline Creator user manual.

Because BioPipeline Creator does not modify the tools it runs, the results of an analysis are consistent with those obtained by running the tools independently. Nonetheless, BioPipeline Creator offers several advantages: (1) users on a local network can use bioinformatics tools without local installations on each device, and software and library management is centralized; (2) both beginners and advanced users can create and run custom pipelines; (3) tools become more accessible to users with limited computer skills but with omics analysis needs; (4) long-term customization and reproducibility of analyses are facilitated by adding new tools and changing the parameter values of each tool; (5) the server can be configured for remote BioPipeline Creator runs, if required; (6) new tools can be added to a personalized and shareable repertoire, expanding execution and application possibilities within a research group; and (7) analysis progress within the pipeline can be monitored conveniently through the graphical user interface or through real-time updates on a smartphone via Telegram. Compared with currently available web-based tools, (8) BioPipeline Creator does not require users to wait in a long queue of jobs, which reduces the overall time needed to complete an analysis; users also do not face storage and processing quotas, as the only limitation is the availability of local resources; and (9) users can edit pipelines by adding new tools, parameters, and parameter values directly to the database through the configuration menu (config). Adding parameters to the database requires the administrator password, which prevents accidents such as deleting previously added parameters or tools. Parameters stored in the database can be shared with all other users, whereas parameters added directly to the current project are valid only for that specific project and user.

The BioPipeline Creator Client is compatible with the Windows, Linux, and macOS operating systems. The server requires a Linux system, whereas the client can run on simpler devices or workstations with any of these operating systems, allowing users to keep their operating system without a dual-boot installation or migration to another compatible system.

With BioPipeline Creator, users gain access to a personal toolbox of robust, easy-to-use, and versatile bioinformatics tools, which opens many possibilities for building data analysis pipelines.

Scalability test

To evaluate the scalability of BioPipeline Creator, ten workstations running the client version were used, including five with the Windows 10 operating system and two with Ubuntu 22.10. The server, which runs BioPipeline Creator_Server and processes the pipelines, is equipped with an Intel® Xeon® Silver 4214R CPU @ 2.40 GHz × 24 and a 3.5 TB hard disk, and runs Ubuntu 20.04.4 LTS.

The extended Docker version of BioPipeline Creator_Server was used, which allows the number of clients and the number of tools running in parallel to be customized. These settings can be changed in the “config.properties” file through the “maxClients” and “maxParallelTools” parameters. The aim of the test was to process simultaneously a pipeline that removed duplicate reads from the input dataset (NGS reads), assembled the treated reads (SPAdes), and annotated the resulting genome (Prokka). Although the same dataset was used on all workstations, each pipeline was treated as a separate processing instance, so that the repetition of datasets did not affect the test results.
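The two settings map naturally onto standard Java concurrency primitives. The sketch below is an assumption about how such limits could be enforced, not a description of BioPipeline Creator_Server's code: it reads `maxClients` and `maxParallelTools` with `java.util.Properties`, gates client sessions with a `Semaphore`, and caps concurrent tool runs with a fixed-size thread pool. The class name and fallback values are hypothetical.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Semaphore;
import java.util.concurrent.TimeUnit;

// Sketch of how maxClients / maxParallelTools could be read and enforced.
class ServerLimits {
    final int maxClients;
    final int maxParallelTools;

    ServerLimits(Properties props) {
        // Fallback values are hypothetical, used only when a key is absent.
        this.maxClients = positiveInt(props, "maxClients", 10);
        this.maxParallelTools = positiveInt(props, "maxParallelTools", 4);
    }

    /** Parses config.properties content given as text. */
    static ServerLimits fromText(String text) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(text));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return new ServerLimits(props);
    }

    private static int positiveInt(Properties props, String key, int fallback) {
        String raw = props.getProperty(key);
        if (raw == null) {
            return fallback;
        }
        int value = Integer.parseInt(raw.trim());
        if (value < 1) {
            throw new IllegalArgumentException(key + " must be at least 1");
        }
        return value;
    }

    /** Permits for concurrent client sessions: acquire on connect, release on disconnect. */
    Semaphore clientSlots() {
        return new Semaphore(maxClients, true);
    }

    /** Runs at most maxParallelTools tool jobs at once; extra jobs wait in the queue. */
    ExecutorService toolPool() {
        return Executors.newFixedThreadPool(maxParallelTools);
    }

    public static void main(String[] args) throws InterruptedException {
        ServerLimits limits = fromText("maxClients=10\nmaxParallelTools=3\n");
        ExecutorService pool = limits.toolPool();
        for (int i = 1; i <= 6; i++) {
            int job = i;
            pool.submit(() -> System.out.println("tool job " + job + " on " + Thread.currentThread().getName()));
        }
        pool.shutdown();
        pool.awaitTermination(1, TimeUnit.MINUTES);
    }
}
```

With `maxParallelTools=3`, the six demo jobs share three worker threads, so at most three run at any moment.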

Details regarding the SRA number, genomic library, and dataset size can be found in Tables 1 and 2. The entire test was completed in 52 min 15 s 19 ms.

It is worth mentioning that the installation of BioPipeline Creator_Server can be performed on any workstation or server as long as it has the appropriate hardware configurations to run the tools selected by the user. The resource requirements, such as RAM, hard disk space and processor, vary for each tool and are usually described in the respective manuals and can be set by the user. Consequently, resource limitations or requirements are not directly related to BioPipeline Creator, as its purpose is to provide users with the flexibility to aggregate omics tools and create personalized pipelines. If BioPipeline Creator_Client is used in a local network, it can also be installed on other users' machines, simplifying access to the repertoire tools without the need for a separate installation on each local machine.

To determine the minimum hardware requirements for running the BioPipeline Creator Client, tests were performed on a laptop with an Intel i5 processor, 4 GB of RAM, and 320 GB of disk space, running Windows 10 (or later) or Linux.

Conclusion

BioPipeline Creator makes it possible for beginners and researchers who are not bioinformaticians to make better use of bioinformatics resources in omics fields. Owing to its flexible and user-friendly approach to omics tools, it broadens the application of bioinformatics and facilitates the use of a wider range of tools than was previously practical for users without computer or programming skills, for whom the lack of a graphical interface, large numbers of dependencies, and installation difficulties were obstacles that BioPipeline Creator removes.

BioPipeline Creator centralizes the complexity on the server side, so the setup only needs to be performed once and all users involved benefit from easier use. In addition to providing a user-friendly interface for terminal-based bioinformatics tools, BioPipeline Creator allows a workstation or server to be preconfigured with a repertoire of additional tools tailored to a group's needs or routines, and these tools can use all available computing resources. This makes it accessible to users with a wide range of computer skills, from basic to advanced, and its flexibility makes it an attractive alternative to other available pipelines, especially web-based ones, because it can draw on the group's own computing resources and deliver results faster and more accessibly. It should also be noted that processing large datasets through web-based services can expose users to submission limits, or even to data quality degradation and data loss during transfer, which can lead to processing problems.

The flexibility of BioPipeline Creator allows the analysis of both prokaryotic and eukaryotic data. The validation of BioPipeline Creator involved creating a pipeline for a prokaryotic dataset that performed redundancy removal (NGSReadsTreatment), assembly (SPAdes), contig ordering (Mauve), and annotation (Prokka). Transcript assembly was also performed with Trinity, and a pipeline for transcript quantification was created with Salmon. With BioPipeline Creator, users can combine different bioinformatics tools and functions to create a personalized analysis tailored to their specific needs.

Data availability

The datasets analyzed in this study are publicly available in the NCBI database (https://www.ncbi.nlm.nih.gov/); their SRA numbers, genomic library details, and sizes are listed in Table 1.

References

Iqbal, M. N. et al. BMT: Bioinformatics mini toolbox for comprehensive DNA and protein analysis. Genomics 112, 4561–4566. https://doi.org/10.1016/j.ygeno.2020.08.010 (2020).

McKenna, A. et al. The Genome Analysis Toolkit: A MapReduce framework for analyzing next-generation DNA sequencing data. Genome Res. 20, 1297–1303. https://doi.org/10.1101/gr.107524.110 (2010).

The ENCODE Project Consortium. An integrated encyclopedia of DNA elements in the human genome. Nature 489, 57–74. https://doi.org/10.1038/nature11247 (2012).

Caporaso, J. G. et al. QIIME allows analysis of high-throughput community sequencing data. Nat. Methods 7, 335–336. https://doi.org/10.1038/nmeth.f.303 (2010).

Goecks, J. et al. Galaxy: A comprehensive approach for supporting accessible, reproducible, and transparent computational research in the life sciences. Genome Biol. 11, R86. https://doi.org/10.1186/gb-2010-11-8-r86 (2010).

Afgan, E. et al. The Galaxy platform for accessible, reproducible and collaborative biomedical analyses: 2016 update. Nucleic Acids Res. 44(W1), W3–W10. https://doi.org/10.1093/nar/gkw343 (2016).

Leipzig, J. A review of bioinformatic pipeline frameworks. Brief. Bioinform. https://doi.org/10.1093/bib/bbw020 (2016).

Smith, D. R. The battle for user-friendly bioinformatics. Front. Genet. 4, 187. https://doi.org/10.3389/fgene.2013.00187 (2013).

Gaia, A. S., de Sá, P. H., de Oliveira, M. S. & Veras, A. A. NGSReadsTreatment: A cuckoo filter-based tool for removing duplicate reads in NGS data. Sci. Rep. 9. https://doi.org/10.1038/s41598-019-48242-w (2019).

Bankevich, A. et al. SPAdes: A new genome assembly algorithm and its applications to single-cell sequencing. J. Comput. Biol. 19, 455–477. https://doi.org/10.1089/cmb.2012.0021 (2012).

Li, D., Liu, C.-M., Luo, R., Sadakane, K. & Lam, T.-W. MEGAHIT: An ultra-fast single-node solution for large and complex metagenomics assembly via succinct de Bruijn graph. Bioinformatics 31, 1674–1676. https://doi.org/10.1093/bioinformatics/btv033 (2015).

Rissman, A. I. et al. Reordering contigs of draft genomes using the Mauve aligner. Bioinformatics 25, 2071–2073. https://doi.org/10.1093/bioinformatics/btp356 (2009).

Simão, F. A., Waterhouse, R. M., Ioannidis, P., Kriventseva, E. V. & Zdobnov, E. M. BUSCO: Assessing genome assembly and annotation completeness with single-copy orthologs. Bioinformatics 31, 3210–3212. https://doi.org/10.1093/bioinformatics/btv351 (2015).

Seemann, T. Prokka: Rapid prokaryotic genome annotation. Bioinformatics 30, 2068–2069. https://doi.org/10.1093/bioinformatics/btu153 (2014).

Acknowledgements

We thank the Brazilian Research Council (CNPq) and the Federal University of Pará; this work received support from PROPESP/UFPA. This work is part of the research developed by BIOD (Bioinformatics, Omics, and Development Research Group, www.biod.ufpa.br).

Author information

Contributions

Cléo Maia Cordeiro: Software; Gislenne da Silva Moia and Mônica Silva de Oliveira: Software, Test Tool and Writing the user’s manual; Lucas da Silva e Silva and Sávio S. Costa: Writing draft manuscript and Test Tool; Maria Paula da Cruz Schneider, Rafael A. Baraúna, Diego Assis das Graças, and Artur Silva: Writing the draft manuscript, Review Methodology; Adonney Allan O. Veras: Software, Methodology, Writing - Review & Editing, Supervision, Conceptualization, and Project administration.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Maia Cordeiro, C., da Silva Moia, G., de Oliveira, M.S. et al. BioPipeline Creator—a user-friendly Java-based GUI for managing and customizing biological data pipelines. Sci Rep 14, 16572 (2024). https://doi.org/10.1038/s41598-024-67409-8

DOI: https://doi.org/10.1038/s41598-024-67409-8

- Springer Nature Limited