Abstract

This paper introduces a novel meta-heuristic algorithm named Rhinopithecus Swarm Optimization (RSO) to address optimization problems, particularly those involving high dimensions. The proposed algorithm is inspired by the social behaviors of different groups within the rhinopithecus swarm. RSO categorizes the swarm into mature, adolescent, and infancy individuals. Under this division of labor, each category of individuals employs its own search method: vertical migration, concerted search, or mimicry. To evaluate the effectiveness of RSO, we conducted experiments using the CEC2017 test set and three constrained engineering problems. Each function in the test set was run independently 36 times. Additionally, we used the Wilcoxon signed-rank test and the Friedman test to analyze the performance of RSO compared to eight well-known optimization algorithms: Dung Beetle Optimizer (DBO), Beluga Whale Optimization (BWO), Salp Swarm Algorithm (SSA), African Vultures Optimization Algorithm (AVOA), Whale Optimization Algorithm (WOA), Atomic Retrospective Learning Bare Bone Particle Swarm Optimization (ARBBPSO), Artificial Gorilla Troops Optimizer (GTO), and Harris Hawks Optimization (HHO). The results indicate that RSO exhibited outstanding performance on the CEC2017 test set in both 30 and 100 dimensions. Moreover, RSO ranked first in both dimensions, surpassing the mean rank of the second-ranked algorithms by 7.69% and 42.85%, respectively. Across the three classical engineering design problems, RSO consistently achieved the best results. Overall, it can be concluded that RSO is particularly effective for solving high-dimensional optimization problems.

Introduction

In recent years, a growing number of optimization problems, such as route planning1,2,3, resource allocation4,5,6, and other real-world problems, have received increased attention from scholars. The optimal solution of such a problem is a combination of multiple variables. Researchers use optimization algorithms to find this optimal solution, or a solution as close to it as possible.

Optimization algorithms play a crucial role in practical applications. In manufacturing7,8,9,10, optimization algorithms can be employed to optimize production processes, reduce costs, and improve efficiency. In the field of transportation planning11,12, they can help optimize traffic flow, reduce congestion, and improve road usage. In healthcare13,14, these algorithms can be utilized to optimize the allocation of hospital resources and improve the efficiency of healthcare services. Another important application area is financial and investment management15,16. Optimization algorithms can help investors find the best asset allocation in their portfolios to minimize risk and achieve expected returns. Furthermore, optimization algorithms have been applied in the development of Deep Learning (DL) and Machine Learning (ML)17,18,19. In ML and DL, optimization algorithms can improve the performance of models by tuning hyperparameters.

There are various types of optimization algorithms. These include gradient descent algorithms20 based on mathematics, dynamic programming algorithms21 based on operations research, and optimization algorithms22,23,24 based on heuristics. Among these, meta-heuristic optimization algorithms have a unique advantage in solving more complex optimization problems. They do not rely on specific problem knowledge but instead explore and exploit the solution space to find the optimal or near-optimal solution. These algorithms are usually designed based on natural phenomena and group behavior. Dehghani25 introduced the Coati Optimization Algorithm (COA), which mimics coati behavior in nature. Al-Betar26 proposed a novel nature-inspired swarm-based optimization algorithm called the Elk Herd Optimizer (EHO), inspired by the breeding process of elk herds. Zhao27 proposed the Sea-Horse Optimizer (SHO), inspired by the behavior of seahorses, mainly mimicking their movement, predation, and breeding behaviors. Hashim28 mathematically modeled the foraging and reproduction behaviors of snakes to present the Snake Optimizer (SO). Abdollahzadeh29 introduced the African Vultures Optimization Algorithm (AVOA), inspired by the foraging and navigation behaviors of African vultures. Zhong30 designed the Beluga Whale Optimization (BWO) algorithm, inspired by the behaviors of beluga whales. MiarNaeimi31 proposed the Horse Herd Optimization Algorithm (HOA), which imitates the social behaviors of horses at different ages. Heidari32 introduced the Harris Hawks Optimization (HHO) algorithm, inspired by the cooperative behavior and chasing style of Harris hawks in nature.

To further improve the performance of existing optimization algorithms, researchers have introduced several enhancements. Based on Bare-Bone Particle Swarm Optimization (BBPSO), Zhou33 proposed an atomic retrospective learning BBPSO (ARBBPSO) algorithm by introducing the renewal strategy of motion around nuclei and the retrospective learning strategy. Additionally, mutation methods34,35 are employed to enhance the exploration capabilities of optimization algorithms. Abed-alguni36 introduced the island-based Cuckoo Search with Polynomial Mutation (iCSPM), which replaces the Lévy flight method in the original Cuckoo Search with the highly disruptive polynomial mutation method.

Although these meta-heuristic optimization algorithms can solve some optimization problems well, high-dimensional optimization problems remain a challenge. High-dimensional optimization problems usually involve a large number of parameters or variables, leading to a dramatic increase in the dimension of the solution space. In high-dimensional space, the increase in the width and depth of the solution space results in a higher number of locally optimal solutions, making it more difficult to escape from a local optimum. Additionally, high-dimensional optimization problems suffer from the “curse of dimensionality”37. As the dimension increases, valid data points become extremely sparse and the distances between them grow, which affects the convergence speed and stability of the algorithm.

When solving high-dimensional optimization problems, although the Honey Badger Algorithm (HBA)38 and the Salp Swarm Algorithm (SSA)39 converge quickly in local search, they tend to fall into local optima due to insufficient global search capability. Due to their weak exploitation abilities, the Chimp Optimization Algorithm (ChOA)40 and the Whale Optimization Algorithm (WOA)41 show low convergence accuracy in high-dimensional optimization problems. The Artificial Gorilla Troops Optimizer (GTO)42 and the Dung Beetle Optimizer (DBO)43 struggle to escape local optima due to a lack of local search capability when dealing with high-dimensional problems.

To address these challenges, we propose a novel swarm-based algorithm, Rhinopithecus Swarm Optimization (RSO), inspired by the social behaviors of rhinopithecus. In RSO, we introduce three distinct search strategies: vertical migration, concerted search, and mimicry. Additionally, RSO divides the swarm into different categories, with each category allocated a corresponding search strategy and specific learning objectives. This approach enables individuals in the swarm to explore the solution space from multiple perspectives, thus increasing the coverage of the search space. Consequently, the proposed algorithm is designed to cope with high-dimensional optimization challenges.

The main contributions of this paper are as follows:

1. We propose a novel Rhinopithecus Swarm Optimization (RSO) algorithm, which can solve optimization problems and complex engineering design problems well.

2. Three different search strategies of RSO are devised based on the social behaviors of different groups in the swarm. These strategies aim to escape local optima by enhancing global and local search capabilities.

3. RSO is evaluated against eight well-known optimization algorithms. The results verify its superior performance in solving optimization challenges and complex engineering design problems, especially in high-dimensional optimization problems.

The rest of this paper is organized as follows: Section "Methods" introduces the details of RSO. Section "Experiments" shows the results and analysis of the simulation experiments. Section "Engineering design problems" introduces the engineering problems and the experimental results. Section "Conclusions and future works" summarizes this work and details some possible future directions.

Methods

Optimization problems become more challenging with increasing dimensionality. In high-dimensional optimization problems, the number of local optimal solutions increases with dimension, making it easier for traditional optimization algorithms to fall into local optima. Therefore, the challenge is to improve the efficiency of searching the large-scale solution space to overcome the curse of dimensionality. To better address these problems, we have developed a novel Rhinopithecus Swarm Optimization (RSO) algorithm inspired by the social behavior of the rhinopithecus swarm, including vertical migration, concerted search, and mimicry. In this section, we discuss some details of rhinopithecus behavior and of RSO.

Behavior of rhinopithecus

Rhinopithecus is an agile and intelligent animal, excelling in leaping and climbing among the canopy and often foraging while traversing trees. Rhinopithecus exhibit strong adaptability, with their habitat spanning various regions from low to high altitudes. Due to the influence of external factors such as ambient temperature and food distribution, the rhinopithecus swarm frequently displays vertical migration tendencies. Within the swarm, individuals of various age structures play distinct roles during these migration events. Mature rhinopithecus are responsible for guiding the migration direction of the swarm. Adolescent rhinopithecus, being less robust than the mature individuals, typically learn survival skills by emulating mature rhinopithecus and generally seek habitats around them. Infant rhinopithecus lack the ability to search for survival resources and usually rely on older individuals to acquire these resources for them.

Rhinopithecus swarm optimization algorithm

Inspired by the social behavior of the rhinopithecus swarm, we have introduced the Rhinopithecus Swarm Optimization (RSO) algorithm. The ways the rhinopithecus swarm searches for survival positions provide new strategies for the optimization algorithm, enhancing its exploration and exploitation capabilities. In RSO, the search space represents the natural habitat of rhinopithecus. The survival position of an individual in the search space corresponds to a solution of the optimization algorithm. Individuals occupy superior or inferior survival positions based on their survival experience, with older individuals typically occupying better positions.

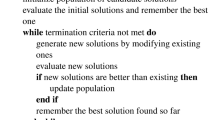

In RSO, individuals are ranked by their survival positions. We categorize the top 40% of individuals as mature rhinopithecus, those ranking between 40% and 70% as adolescent rhinopithecus, and the remaining individuals as infancy rhinopithecus. The individual with the best survival position in the rhinopithecus swarm is referred to as the king rhinopithecus, who usually emerges from the mature individuals. The king rhinopithecus and the mature individuals jointly lead the migration of the groups. The flowchart and pseudo-code of RSO are shown in Fig. 1 and Algorithm 1.
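The grouping described above can be illustrated with a short Python sketch. It assumes a minimization problem in which a lower fitness value means a better survival position; the function name `partition_swarm` and the use of integer division for the group boundaries are illustrative choices, not taken from the paper.

```python
def partition_swarm(fitness):
    """Group individual indices by fitness rank (lower fitness = better position).

    Hypothetical helper: the 40% / 30% / 30% split follows the text, while the
    rounding rule (integer division) is an assumption.
    """
    order = sorted(range(len(fitness)), key=lambda i: fitness[i])
    n = len(order)
    mature_end = n * 4 // 10       # top 40% of the ranking
    adolescent_end = n * 7 // 10   # ranks between 40% and 70%
    return {
        "king": order[0],                          # best individual overall
        "mature": order[:mature_end],
        "adolescent": order[mature_end:adolescent_end],
        "infancy": order[adolescent_end:],
    }


groups = partition_swarm([5, 3, 9, 1, 7, 2, 8, 6, 4, 0])
```

With this split, the king is always the first member of the mature group, which is consistent with the statement that the king usually emerges from the mature individuals.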

Vertical migration

The vertical migratory behavior of rhinopithecus plays a crucial role in the survival of the swarm. Typically, the rhinopithecus swarm periodically migrates between lower and higher altitudes depending on food distribution and climatic conditions. At low temperatures, rhinopithecus prefer to be active at lower altitudes, where the physiological stress of the cold is reduced and food is relatively more abundant. At other temperatures, the rhinopithecus swarm displays the opposite migratory trend, preferring higher-altitude areas where food is of relatively higher quality.

Researchers have found that rhinopithecus possess a certain degree of spatial cognition. They may be able to memorize positions they have inhabited and incorporate past experience and foraging strategies to guide migration. Mature and king rhinopithecus usually have richer experience and spatial cognition of the migration process, playing a leadership role in the swarm migration. These individuals retain optimal positions for two temperature conditions, as shown in Eq. (1). During the next migration, they search for a new survival position by integrating previous survival experience. This approach helps the rhinopithecus swarm select better positions effectively.

where KingR stands for the king rhinopithecus and MR stands for the mature rhinopithecus.

Each time the mature rhinopithecus search for a candidate position, they try to move towards the position of the king rhinopithecus. Their candidate solution is calculated by Eq. (2).

where \(KingR_{a}\) stands for the locations of the king rhinopithecus and \(MR_{b}\) stands for the locations of the mature rhinopithecus. \(Gausi(\alpha ,\beta )\) denotes a random value drawn from a Gaussian distribution with expectation \(\alpha\) and variance \(\beta\).
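Because \(Gausi(\cdot ,\cdot )\) is parameterized by a variance, while most random-number libraries expect a standard deviation, a small wrapper may help avoid a silent scaling error when implementing the update rules. The sketch below is only an illustration of that sampling step, not of the update equations themselves; the function name `gausi` and the seeded generator are assumptions.

```python
import math
import random


def gausi(mean, variance, rng):
    """Draw one value from a Gaussian with the given mean and variance.

    Hypothetical helper: random.Random.gauss takes a standard deviation,
    so the variance is converted with a square root first.
    """
    return rng.gauss(mean, math.sqrt(variance))


rng = random.Random(0)  # fixed seed, used here only for reproducibility
samples = [gausi(2.0, 4.0, rng) for _ in range(20000)]
sample_mean = sum(samples) / len(samples)
sample_var = sum((s - sample_mean) ** 2 for s in samples) / (len(samples) - 1)
```

For a large sample, `sample_mean` and `sample_var` should be close to the requested mean and variance.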

Concerted search

In the rhinopithecus swarm, adolescent rhinopithecus are in the growth stage. Although they have some ability to search for migratory positions, they lack the experience of mature and king rhinopithecus. Consequently, adolescent rhinopithecus often exhibit uncertainty in choosing search paths and migration positions. In such cases, they actively seek guidance from mature rhinopithecus and the king rhinopithecus, relying on their environmental cognition and survival experience to make decisions. Typically, adolescent rhinopithecus communicate information about their historical positions to the king and mature rhinopithecus. The king and mature individuals then use their own experience to guide the adolescents in making judgments. In RSO, adolescent rhinopithecus are set to communicate two historical positions to the king and mature rhinopithecus, respectively.

where AR stands for the adolescent rhinopithecus.

The king rhinopithecus and the mature individuals each give the adolescent individuals their own advice. The adolescent rhinopithecus weigh both suggestions equally and combine them to generate a candidate solution, which is calculated using Eq. (4). By leveraging the survival experience of the older individuals, adolescent rhinopithecus can select better positions. This strategy allows RSO to explore the solution space more effectively, thereby improving its ability to escape from local optima.

where \(KingR_{a}\) stands for the locations of the king rhinopithecus, \(MR_{b}\) stands for the locations of the mature rhinopithecus, and \(AR_{c}\) stands for the locations of the adolescent rhinopithecus. \(Gausi(\gamma ,\delta )\) denotes a random value drawn from a Gaussian distribution with expectation \(\gamma\) and variance \(\delta\). \(Gausi(\epsilon ,\zeta )\) denotes a random value drawn from a Gaussian distribution with expectation \(\epsilon\) and variance \(\zeta\).

Mimicry

Infant rhinopithecus are usually in the early stages of learning and development. They have not fully mastered the elements of their environment and survival skills, making it very difficult for them to find suitable positions for migration. During this vital developmental stage, infancy individuals depend on other group members, especially adolescent and mature rhinopithecus, to lead them in their migration. In the searching process, infancy rhinopithecus communicate information about their position to these older individuals in various ways. Adolescent and mature rhinopithecus understand the habits of the infancy individuals based on this positional information. Thus, they can better integrate their own experience to guide the infancy rhinopithecus during migration.

In RSO, after receiving guidance from mature and adolescent rhinopithecus, the infancy individuals consider the suggestions from both groups and determine their candidate positions through comprehensive consideration, calculated using Eq. (5). This group-assisted approach helps infancy rhinopithecus navigate the growth stage seamlessly, allowing them to learn survival skills by imitating the search strategies of older individuals. This strategy enhances the ability to exchange information between different groups, which in turn improves the local search capability of RSO.

where \(MR_{b}\) stands for the locations of the mature rhinopithecus, \(AR_{c}\) stands for the locations of the adolescent rhinopithecus, and IR stands for the position of the infancy rhinopithecus. \(Gausi(\eta ,\theta )\) denotes a random value drawn from a Gaussian distribution with expectation \(\eta\) and variance \(\theta\). \(Gausi(\iota ,\kappa )\) denotes a random value drawn from a Gaussian distribution with expectation \(\iota\) and variance \(\kappa\).

Consent to participate

All authors participated in the manuscript and agreed to participate in it.

Experiments

The CEC2017 function set is widely used to measure the comprehensive performance of optimization algorithms. All functions in the test set have undergone rotation and displacement, increasing the difficulty for optimization algorithms to find the optimum. The test set contains 29 test functions, each available in four dimensions: 10, 30, 50, and 100. The difficulty of solving these problems increases with the dimension. Based on the specific properties of the functions in the search space, they can be categorized into Unimodal Functions \((F_1-F_2)\), Simple Multimodal Functions (\(F_3-F_9\)), Hybrid Functions (\(F_{10}-F_{19}\)), and Composite Functions (\(F_{20}-F_{29}\)).

Table 1 shows the details of the CEC2017 benchmark functions. Unimodal Functions have only one significant optimum in the search space, making the optimization target clear. Simple Multimodal Functions contain multiple local optima, increasing the probability of falling into a local optimum. Hybrid Functions combine different properties such as linear and nonlinear components and low and high dimensional combinations, making the search space more complex. Composite Functions comprise combinations of simple functions, including linear combinations, nonlinear combinations, combinatorial functions, and transformation functions.

To verify the comprehensive ability of RSO to solve optimization problems, we performed tests in 30 and 100 dimensions using the CEC2017 benchmark. In the study, we used a series of well-known optimization algorithms as the control group, including DBO, BWO, SSA, AVOA, WOA, ARBBPSO, GTO, and HHO. To ensure the reliability of the results, the control group algorithms used the same parameter settings as in their original papers. The proposed algorithm, RSO, is a parameter-free meta-heuristic algorithm.

The time complexity of RSO is \(O(m \cdot n)\), where \(m\) represents the population size and \(n\) represents the number of iterations. The proposed algorithm evaluates each individual only once per iteration without additional computations, resulting in a relatively low time complexity.

Mean Error (ME) was used to measure the performance of the algorithms. ME is defined as the mean error over multiple experiments, where the error of a single experiment is calculated as \(|Real_{optimal} - Theoretical_{optimal}|\). To minimize the impact of chance on the experimental results and performance analysis, we conducted 36 independent experiments for each test function. The errors of these experiments were averaged to obtain the ME. The results of the experiments are shown in Tables 2, 3, 4, 5, 6, 7, 8, 9 and 10. The Mean, Best, Median, Worst, Standard Deviation (Std), and p-value of the 36 runs were recorded.
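As an illustration of how these per-function statistics can be derived from the raw runs, the following Python sketch computes the error of each run and then the Mean, Best, Median, Worst, and Std. The function name `summarize_runs` and the sample values are hypothetical, and the use of the sample standard deviation is an assumption, since the text does not say which estimator was used.

```python
import statistics


def summarize_runs(found_optima, theoretical_optimum):
    """Summarize the absolute errors of independent runs on one test function."""
    errors = [abs(value - theoretical_optimum) for value in found_optima]
    return {
        "mean": statistics.fmean(errors),    # this is the Mean Error (ME)
        "best": min(errors),
        "median": statistics.median(errors),
        "worst": max(errors),
        "std": statistics.stdev(errors),     # sample std (assumed estimator)
    }


# Hypothetical values from four runs on a function whose true optimum is 100.
summary = summarize_runs([102.0, 101.0, 104.0, 101.0], 100.0)
```

In the experiments described here, `found_optima` would hold the 36 best values returned by one algorithm on one function.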

When the dimension was set to 30, out of 29 functions, RSO achieved 10 first rankings, 8 second rankings, 6 third rankings, 2 fourth rankings, 2 sixth rankings, and 1 eighth ranking. RSO obtained the highest number of first rankings among all algorithms, 3 more than ARBBPSO, which had the second-highest number. In each type of function in CEC2017, RSO outperformed nearly all the algorithms in the control group, especially for Simple Multimodal Functions, where RSO obtained 4 first rankings out of 7 test functions. However, the test results of RSO on Unimodal Functions were inferior to those of SSA and AVOA.

As the dimension was adjusted to 100, RSO still maintained outstanding performance, with the number of first rankings increasing to 19. While SSA received first rankings in only 6 functions, several algorithms did not achieve any first rankings. In all four types of CEC2017 functions, RSO showed significant advantages over the control group. In each category of test functions, the number of first rankings obtained by RSO was not less than that of the control group. It was only on \(F_3\) of Simple Multimodal Functions that RSO ranked relatively behind.

To visualize the iteration process of the algorithms in 30 and 100 dimensions, we selected two test functions from each of the four function types to obtain the convergence curves and box plots, as shown in Figs. 2, 3, 4, 5, 6, 7, 8 and 9. Additionally, we applied a logarithmic transformation to the ME used in the convergence curves and box plots to enhance the clarity of these figures.

From the convergence curves of these algorithms in 30 dimensions, it is notable that RSO has a significant advantage in local search ability in \(F_{2}\) and \(F_{22}\). This advantage is attributed to the mimicry strategy, which helps individuals update positions by communicating with individuals of other groups, significantly enhancing the local search capability. In \(F_{12}\) and \(F_{14}\), RSO continuously updated the best value throughout the iteration process, demonstrating its excellent global search capability. This is because the vertical migration strategy assists individuals in updating their positions based on the optimal positions of the swarm, thus strengthening global search capability. Due to the concerted search strategy, RSO individuals can simultaneously consider the optimal positions of the swarm while updating their positions by combining the individual positions of other groups. This enables RSO to exhibit an outstanding ability to escape local optima in \(F_{4}\). Although RSO was trapped in local optima in \(F_{8}\) and \(F_{25}\), the upper quartiles of RSO in the box plots were among the smallest. This result indicates that RSO is accurate in these two functions.

Regarding the iteration process in 100 dimensions, the three search strategies also make a significant difference. It is noteworthy that RSO performed better in Unimodal Functions compared to its performance in 30 dimensions. In particular, RSO shows the ability to escape local optima during the iteration process in \(F_2\). Although RSO converged early in \(F_{5}\), \(F_{6}\), and \(F_{21}\), the mean best values of RSO were consistently lower than those of other algorithms. In \(F_{10}\), \(F_{16}\), and \(F_{20}\), RSO demonstrates the ability to escape local optima due to its strong global and local search capabilities. Additionally, it converged to the lowest mean best value, validating the high accuracy of RSO in these functions.

We further investigated the experimental results using the Wilcoxon signed-rank test and Friedman test. The test results are shown in Tables 11 and 12, where ’+’ denotes that RSO performs better than other algorithms, ’-’ denotes that RSO performs worse than other algorithms, and ’\(\approx\)’ denotes that RSO performs equally well as other algorithms. “Mean Rank” denotes the average ranking following 36 independent runs, and “Rank” denotes the overall final ranking.
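To make the "Mean Rank" column more concrete, the sketch below computes Friedman-style average ranks in pure Python: algorithms are ranked on each function by their error (rank 1 is best, ties share the average rank), and the ranks are then averaged over all functions. This is the standard Friedman ranking procedure and is offered only as an assumption about how such a column is typically obtained; the function name `mean_ranks` and the numbers are hypothetical and are not results from the paper.

```python
def mean_ranks(errors_by_algorithm):
    """Average rank of each algorithm across functions (lower error = better).

    errors_by_algorithm maps an algorithm name to a list of errors,
    one entry per test function. Tied values receive the average rank.
    """
    names = list(errors_by_algorithm)
    n_funcs = len(errors_by_algorithm[names[0]])
    totals = {name: 0.0 for name in names}
    for f in range(n_funcs):
        ordered = sorted(names, key=lambda a: errors_by_algorithm[a][f])
        i = 0
        while i < len(ordered):
            j = i
            # Extend j over the block of algorithms tied with position i.
            while (j + 1 < len(ordered)
                   and errors_by_algorithm[ordered[j + 1]][f]
                   == errors_by_algorithm[ordered[i]][f]):
                j += 1
            shared_rank = (i + j) / 2 + 1  # mean of ranks i+1 .. j+1
            for k in range(i, j + 1):
                totals[ordered[k]] += shared_rank
            i = j + 1
    return {name: totals[name] / n_funcs for name in names}


# Hypothetical errors of three algorithms on three functions.
ranks = mean_ranks({"A": [1.0, 2.0, 3.0], "B": [2.0, 2.0, 1.0], "C": [3.0, 5.0, 2.0]})
```

The algorithm with the smallest mean rank is the one placed first in the final "Rank" column.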

Results in Table 11 indicate that, in 30 dimensions, RSO outperformed algorithms in the control group on up to all 29 functions. Furthermore, RSO significantly outperformed the other algorithms, except ARBBPSO, in Simple Multimodal, Hybrid, and Composite Functions. However, the performance of RSO in Unimodal Functions was weaker than its performance in other types of functions, and in particular it underperformed SSA and AVOA.

Table 12 shows the performance analysis of RSO in CEC2017 in 100 dimensions. RSO outperformed each algorithm in the control group in at least 20 out of the 29 functions. Specifically, compared to DBO, BWO, WOA, GTO, and HHO, RSO excelled in all Unimodal, Hybrid, and Composite Functions. In Multimodal Functions, RSO performed significantly better than the control group in almost all functions, and performed significantly worse than SSA, AVOA, and HHO in only one function.

In the experimental results for both 30 and 100 dimensions, the Mean Rank of RSO ranked first, surpassing the second-ranked algorithms by 7.69% and 42.85%, respectively. Although RSO performed well in both settings, its performance advantage in 100 dimensions was considerably larger than in 30 dimensions. This indicates that RSO is more effective at solving high-dimensional optimization problems than low-dimensional ones.

Engineering design problems

In this section, we applied RSO to three engineering design problems44, namely 10-Bar Truss Design (10-BTD), Gear Train Design (GTD), and Three-Bar Truss Design (3-BTD), to demonstrate its efficiency. These problems require both satisfying constraints and searching for an optimal solution. The effectiveness of optimization algorithms is often significantly affected by the constraints and the limited solution space. Therefore, an additional mechanism called a Constraint Handling Technique (CHT) is required to address these challenges. This technique ensures that the final solution meets the constraints by imposing penalties on solutions that violate them. To ensure the fairness of the experiment, we ran each algorithm independently 36 times on every problem, with a maximum of 500 iterations and a population size of 50.
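A penalty-based constraint handling technique can be sketched as follows. The text does not specify the exact penalty form, so the static quadratic penalty, the coefficient `1e6`, and the function name `penalized` are all assumptions made for illustration. Constraints are written in the common form \(g(x) \le 0\).

```python
def penalized(objective, constraints, coefficient=1e6):
    """Wrap an objective so that constraint violations are penalized.

    Hypothetical static penalty: each constraint g(x) <= 0 contributes
    coefficient * max(0, g(x))**2 to the objective value.
    """
    def wrapped(x):
        violation = sum(max(0.0, g(x)) ** 2 for g in constraints)
        return objective(x) + coefficient * violation
    return wrapped


# Toy problem: minimize x^2 subject to x >= 1, written as 1 - x <= 0.
fitness = penalized(lambda x: x[0] ** 2, [lambda x: 1.0 - x[0]])
feasible_value = fitness([2.0])     # no penalty is added
infeasible_value = fitness([0.5])   # penalty dominates the objective
```

Any swarm-based optimizer can then minimize `fitness` directly, since infeasible candidates receive much worse values than feasible ones.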

10-bar truss design

The 10-BTD is a significant engineering design problem whose objective is to minimize the weight of the truss structure while satisfying the frequency constraints. The details of the problem are as follows:

Minimize:

Subject to:

With bounds:

Tables 13 and 14 present the results of RSO and other algorithms on 10-BTD. In Table 13, RSO obtains the best solution with \({\bar{x}}\) = (3.3828E-03, 1.4758E-03, 3.6725E-03, 1.4262E-03, 6.4502E-05, 4.5776E-04, 2.4450E-03, 2.3391E-03, 1.1955E-03, 1.2612E-03) and optimal value 5.2662E+02. Table 14 shows that RSO achieves better Best, Mean, Worst, and Std values than the other algorithms. To visualize the iteration process of these algorithms on 10-BTD, the convergence curves and error bars of RSO and the control group are shown in Fig. 10. Although RSO converged early in the iteration process, the distance between the upper and lower quartiles in the box plot was the smallest. This indicates that while RSO may have been trapped in a local optimum, it exhibits robust stability when solving the 10-BTD problem.

Gear train design

The GTD is a critical engineering problem focusing on the gear ratio minimization in a compound gear train arrangement. The details of the problem are as follows:

Minimize:

Subject to:

With bounds:

The experimental results of RSO and the control group on GTD are shown in Tables 15 and 16. Table 15 shows that RSO, GTO, and HHO obtain the same optimal value 0.0000E+00 with three different sets of decision variables. Furthermore, Table 16 presents the statistical analysis of the experimental results of RSO and the control group. RSO, GTO, and HHO also achieve the best values on all four metrics. To visualize the optimization process of these algorithms on GTD, the convergence curves and error bars of RSO and the control group are shown in Fig. 11.

Three-bar truss design

The 3-BTD is a classical problem from civil engineering. Its optimization objective is the weight minimization of the truss structure. The primary constraints are based on the stress limitations of each bar, ensuring the structure can safely withstand applied loads without exceeding material stress limits. The details of this problem are as follows:

Minimize:

Subject to:

With bounds:

The optimal results of the experiments on 3-BTD for RSO and the control group are shown in Table 17. The results indicate that RSO obtains the best solution with \({\bar{x}}\) = (7.8868E-01, 4.0825E-01) and optimal value 2.638958E+02. In addition, Table 18 presents the statistical analysis of the experimental results. Although the difference in the Mean of RSO, GTO, DBO, ARBBPSO, SSA, and HHO across 36 independent experiments was small, RSO achieved the best values in the other three metrics. In particular, the lowest Std indicates that RSO is robust in solving the 3-BTD problem.
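The reported solution can be checked against the classical three-bar truss formulation that is widely used in the literature: minimize \((2\sqrt{2}x_1 + x_2)\,l\) subject to three stress constraints, with \(l = 100\), \(P = 2\), and \(\sigma = 2\). The Python sketch below assumes this classical formulation, which may differ in detail from the one used in this study; the function names are illustrative.

```python
import math

# Constants of the classical formulation (assumed, not stated in this text).
BAR_LENGTH = 100.0
LOAD = 2.0
STRESS_LIMIT = 2.0


def truss_weight(x1, x2):
    """Objective of the classical three-bar truss problem."""
    return (2.0 * math.sqrt(2.0) * x1 + x2) * BAR_LENGTH


def truss_constraints(x1, x2):
    """Stress constraints g1, g2, g3; each must satisfy g <= 0."""
    root2 = math.sqrt(2.0)
    denom = root2 * x1 ** 2 + 2.0 * x1 * x2
    g1 = (root2 * x1 + x2) / denom * LOAD - STRESS_LIMIT
    g2 = x2 / denom * LOAD - STRESS_LIMIT
    g3 = 1.0 / (root2 * x2 + x1) * LOAD - STRESS_LIMIT
    return g1, g2, g3


# Decision variables as reported for RSO, rounded to five significant digits.
weight = truss_weight(0.78868, 0.40825)
g1, g2, g3 = truss_constraints(0.78868, 0.40825)
```

Under these assumptions, `weight` agrees with the reported optimal value 2.638958E+02 up to the rounding of the decision variables, and the first constraint is close to active, which is typical for the optimum of this problem.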

To visualize the optimization process of these algorithms on 3-BTD, the convergence curves and error bars of RSO and the control group are shown in Fig. 12. RSO exhibits a rapid downward trend in the early iterations. This suggests that RSO is able to approach better solutions quickly at an early stage.

Conclusions and future works

In this paper, we design a novel meta-heuristic optimization algorithm called Rhinopithecus Swarm Optimization (RSO). The proposed algorithm draws inspiration from the social behaviors of rhinopithecus. In RSO, we categorize the population into mature, adolescent and infancy rhinopithecus, each performing one of three search methods: vertical migration, concerted search, and mimicry, respectively. These search methods enhance global and local search capabilities, decreasing the possibility of falling into local optima in high-dimensional space.

To validate the performance of RSO, we conducted benchmark tests using 29 test functions from CEC2017 and three classical engineering design problems. Furthermore, the Wilcoxon signed-rank and Friedman tests were used to analyze the experimental results. Eight well-known optimization algorithms were selected as the control group, including DBO, BWO, SSA, AVOA, WOA, ARBBPSO, GTO, and HHO. Both the experimental results and statistical analysis reveal that RSO achieves better accuracy than the control group. However, the proposed algorithm still has some limitations that need to be addressed. In particular, RSO performed worse than SSA and AVOA in Unimodal Functions in 30 dimensions. Additionally, compared to ARBBPSO, RSO shows an insignificant advantage in Multimodal, Hybrid, and Composite Functions. Thus, enhancing its optimization capability in low-dimensional problems is an important part of our ongoing work. We also aim to develop new search strategies for RSO to tackle multi-objective problems in the future.

Data availability

Data will be made available by the corresponding author on reasonable request.

Code availability

Code will be made available by the corresponding author on reasonable request.

References

Karakatič, S. Optimizing nonlinear charging times of electric vehicle routing with genetic algorithm. Expert Syst. Appl. 164, 114039. https://doi.org/10.1016/j.eswa.2020.114039 (2021).

Sitek, P., Wikarek, J., Rutczyńska-Wdowiak, K., Bocewicz, G. & Banaszak, Z. Optimization of capacitated vehicle routing problem with alternative delivery, pick-up and time windows: A modified hybrid approach. Neurocomputing 423, 670–678. https://doi.org/10.1016/j.neucom.2020.02.126 (2021).

Liu, W., Dridi, M., Ren, J., El Hassani, A. H. & Li, S. A double-adaptive general variable neighborhood search for an unmanned electric vehicle routing and scheduling problem in green manufacturing systems. Eng. Appl. Artif. Intell. 126, 107113. https://doi.org/10.1016/j.engappai.2023.107113 (2023).

Jain, D. K., Tyagi, S. K. S., Neelakandan, S., Prakash, M. & Natrayan, L. Metaheuristic optimization-based resource allocation technique for cybertwin-driven 6G on IoE environment. IEEE Trans. Industr. Inf. 18, 4884–4892. https://doi.org/10.1109/TII.2021.3138915 (2022).

Chai, Y., Li, G., Qin, S., Feng, J. & Xu, C. A neurodynamic optimization approach to nonconvex resource allocation problem. Neurocomputing 512, 178–189. https://doi.org/10.1016/j.neucom.2022.09.044 (2022).

Chen, H., Zhang, X., Wang, L., Xing, L. & Pedrycz, W. Resource-constrained self-organized optimization for near-real-time offloading satellite earth observation big data. Knowl.-Based Syst. 253, 109496. https://doi.org/10.1016/j.knosys.2022.109496 (2022).

Luo, Q., Du, B., Rao, Y. & Guo, X. Metaheuristic algorithms for a special cutting stock problem with multiple stocks in the transformer manufacturing industry. Expert Syst. Appl. 210, 118578. https://doi.org/10.1016/j.eswa.2022.118578 (2022).

Zhao, Y., Hu, H., Song, C. & Wang, Z. Predicting compressive strength of manufactured-sand concrete using conventional and metaheuristic-tuned artificial neural network. Measurement 194, 110993. https://doi.org/10.1016/j.measurement.2022.110993 (2022).

Abdel-Basset, M., Mohamed, R., Jameel, M. & Abouhawwash, M. Nutcracker optimizer: A novel nature-inspired metaheuristic algorithm for global optimization and engineering design problems. Knowl.-Based Syst. 262, 110248. https://doi.org/10.1016/j.knosys.2022.110248 (2023).

Kumar, S. et al. Chaotic marine predators algorithm for global optimization of real-world engineering problems. Knowl.-Based Syst. 261, 110192. https://doi.org/10.1016/j.knosys.2022.110192 (2023).

Toaza, B. & Esztergár-Kiss, D. A review of metaheuristic algorithms for solving TSP-based scheduling optimization problems. Appl. Soft Comput. 148, 110908. https://doi.org/10.1016/j.asoc.2023.110908 (2023).

Sahraei, M. A. & Çodur, M. K. Prediction of transportation energy demand by novel hybrid meta-heuristic ANN. Energy 249, 123735. https://doi.org/10.1016/j.energy.2022.123735 (2022).

Liu, W., Dridi, M., Fei, H. & Hassani, A. H. E. Hybrid metaheuristics for solving a home health care routing and scheduling problem with time windows, synchronized visits and lunch breaks. Expert Syst. Appl. 183, 115307. https://doi.org/10.1016/j.eswa.2021.115307 (2021).

Abdelmaguid, T. F. Bi-objective dynamic multiprocessor open shop scheduling for maintenance and healthcare diagnostics. Expert Syst. Appl. 186, 115777. https://doi.org/10.1016/j.eswa.2021.115777 (2021).

Farahani, M. S. & Hajiagha, S. H. R. Forecasting stock price using integrated artificial neural network and metaheuristic algorithms compared to time series models. Soft. Comput. 25, 8483–8513. https://doi.org/10.1007/s00500-021-05775-5 (2021).

Yuen, M.-C., Ng, S.-C., Leung, M.-F. & Che, H. A metaheuristic-based framework for index tracking with practical constraints. Complex Intell. Syst. 8, 4571–4586. https://doi.org/10.1007/s40747-021-00605-5 (2022).

Ikeda, S. & Nagai, T. A novel optimization method combining metaheuristics and machine learning for daily optimal operations in building energy and storage systems. Appl. Energy 289, 116716. https://doi.org/10.1016/j.apenergy.2021.116716 (2021).

Ng, C. S. W., Amar, M. N., Ghahfarokhi, A. J. & Imsland, L. S. A survey on the application of machine learning and metaheuristic algorithms for intelligent proxy modeling in reservoir simulation. Comput. Chem. Eng. 170, 108107. https://doi.org/10.1016/j.compchemeng.2022.108107 (2023).

Chakraborty, S., Saha, A. K., Ezugwu, A. E., Chakraborty, R. & Saha, A. Horizontal crossover and co-operative hunting-based whale optimization algorithm for feature selection. Knowl.-Based Syst. 282, 111108. https://doi.org/10.1016/j.knosys.2023.111108 (2023).

Carmon, Y., Duchi, J. C., Hinder, O. & Sidford, A. Accelerated methods for nonconvex optimization. SIAM J. Optim. 28, 1751–1772. https://doi.org/10.1137/17M1114296 (2018).

Liu, D., Xue, S., Zhao, B., Luo, B. & Wei, Q. Adaptive dynamic programming for control: A survey and recent advances. IEEE Trans. Syst. Man Cybern. Syst. 51, 142–160. https://doi.org/10.1109/TSMC.2020.3042876 (2021).

Elaziz, M. A. et al. Cooperative meta-heuristic algorithms for global optimization problems. Expert Syst. Appl. 176, 114788. https://doi.org/10.1016/j.eswa.2021.114788 (2021).

Braik, M. S. Chameleon swarm algorithm: A bio-inspired optimizer for solving engineering design problems. Expert Syst. Appl. 174, 114685. https://doi.org/10.1016/j.eswa.2021.114685 (2021).

Braik, M., Hammouri, A., Atwan, J., Al-Betar, M. A. & Awadallah, M. A. White shark optimizer: A novel bio-inspired meta-heuristic algorithm for global optimization problems. Knowl.-Based Syst. 243, 108457. https://doi.org/10.1016/j.knosys.2022.108457 (2022).

Dehghani, M., Montazeri, Z., Trojovská, E. & Trojovský, P. Coati optimization algorithm: A new bio-inspired metaheuristic algorithm for solving optimization problems. Knowl.-Based Syst. 259, 110011. https://doi.org/10.1016/j.knosys.2022.110011 (2023).

Al-Betar, M. A., Awadallah, M. A., Braik, M. S., Makhadmeh, S. & Doush, I. A. Elk herd optimizer: A novel nature-inspired metaheuristic algorithm. Artif. Intell. Rev. 57, 48. https://doi.org/10.1007/s10462-023-10680-4 (2024).

Zhao, S., Zhang, T., Ma, S. & Wang, M. Sea-horse optimizer: A novel nature-inspired meta-heuristic for global optimization problems. Appl. Intell. 53, 11833–11860. https://doi.org/10.1007/s10489-022-03994-3 (2023).

Hashim, F. A. & Hussien, A. G. Snake optimizer: A novel meta-heuristic optimization algorithm. Knowl.-Based Syst. https://doi.org/10.1016/j.knosys.2022.108320 (2022).

Abdollahzadeh, B., Gharehchopogh, F. S. & Mirjalili, S. African vultures optimization algorithm: A new nature-inspired metaheuristic algorithm for global optimization problems. Comput. Ind. Eng. https://doi.org/10.1016/j.cie.2021.107408 (2021).

Zhong, C., Li, G. & Meng, Z. Beluga whale optimization: A novel nature-inspired metaheuristic algorithm. Knowl.-Based Syst. 251, 109215. https://doi.org/10.1016/j.knosys.2022.109215 (2022).

MiarNaeimi, F., Azizyan, G. & Rashki, M. Horse herd optimization algorithm: A nature-inspired algorithm for high-dimensional optimization problems. Knowl.-Based Syst. 213, 106711. https://doi.org/10.1016/j.knosys.2020.106711 (2021).

Heidari, A. A. et al. Harris hawks optimization: Algorithm and applications. Futur. Gener. Comput. Syst. 97, 849–872. https://doi.org/10.1016/j.future.2019.02.028 (2019).

Zhou, G., Guo, J., Yan, K., Zhou, G. & Li, B. An atomic retrospective learning bare bone particle swarm optimization. In Advances in Swarm Intelligence: Proceedings of 14th International Conference, ICSI 2023, Shenzhen, China, July 14–18, 2023, Part I, 168–179, https://doi.org/10.1007/978-3-031-36622-2_14 (Springer-Verlag, Berlin, 2023).

Abed-alguni, B. H., Alawad, N. A., Barhoush, M. & Hammad, R. Exploratory cuckoo search for solving single-objective optimization problems. Soft. Comput. 25, 10167–10180. https://doi.org/10.1007/s00500-021-05939-3 (2021).

Abed-alguni, B. H., Paul, D. & Hammad, R. Improved salp swarm algorithm for solving single-objective continuous optimization problems. Appl. Intell. 52, 17217–17236. https://doi.org/10.1007/s10489-022-03269-x (2022).

Abed-alguni, B. H. Island-based cuckoo search with highly disruptive polynomial mutation. Int. J. Artific. Intell. 17, 57–82 (2019).

Aremu, O. O., Hyland-Wood, D. & McAree, P. R. A machine learning approach to circumventing the curse of dimensionality in discontinuous time series machine data. Reliabil. Eng. Syst. Safe. 195, 106706. https://doi.org/10.1016/j.ress.2019.106706 (2020).

Hashim, F. A., Houssein, E. H., Hussain, K., Mabrouk, M. S. & Al-Atabany, W. Honey badger algorithm: New metaheuristic algorithm for solving optimization problems. Math. Comput. Simul. 192, 84–110. https://doi.org/10.1016/j.matcom.2021.08.013 (2022).

Mirjalili, S. et al. Salp swarm algorithm: A bio-inspired optimizer for engineering design problems. Adv. Eng. Softw. 114, 163–191. https://doi.org/10.1016/j.advengsoft.2017.07.002 (2017).

Khishe, M. & Mosavi, M. R. Chimp optimization algorithm. Expert Syst. Appl. 149, 113338. https://doi.org/10.1016/j.eswa.2020.113338 (2020).

Mirjalili, S. & Lewis, A. The whale optimization algorithm. Adv. Eng. Softw. 95, 51–67. https://doi.org/10.1016/j.advengsoft.2016.01.008 (2016).

Abdollahzadeh, B., Gharehchopogh, F. S. & Mirjalili, S. Artificial gorilla troops optimizer: A new nature-inspired metaheuristic algorithm for global optimization problems. Int. J. Intell. Syst. 36, 5887–5958. https://doi.org/10.1002/int.22535 (2021).

Xue, J. & Shen, B. Dung beetle optimizer: a new meta-heuristic algorithm for global optimization. J. Supercomput. 79, 7305–7336. https://doi.org/10.1007/s11227-022-04959-6 (2023).

Kumar, A. et al. A test-suite of non-convex constrained optimization problems from the real-world and some baseline results. Swarm Evol. Comput. 56, 100693. https://doi.org/10.1016/j.swevo.2020.100693 (2020).

Funding

This work was supported by the Natural Science Foundation of Hubei Province (2023AFB003) and the Education Department Scientific Research Program Project of Hubei Province of China (Q20222208).

Author information

Contributions

G.Z.: Conceptualization, Methodology, Writing, Original draft preparation; D.W.: Validation, Software; G.Z.: Methodology, Software; J.D.: Formal analysis, Investigation; J.G.: Writing (review and editing), Conceptualization, Funding acquisition, Supervision, Methodology. All authors read and approved the final manuscript and agreed to its publication.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zhou, G., Wang, D., Zhou, G. et al. A rhinopithecus swarm optimization algorithm for complex optimization problem. Sci Rep 14, 15628 (2024). https://doi.org/10.1038/s41598-024-66450-x

DOI: https://doi.org/10.1038/s41598-024-66450-x
