Abstract

The diagnosis of acute appendicitis and concurrent surgery referral is primarily based on clinical presentation, laboratory and radiological imaging. However, utilizing such an approach results in as much as 10–15% of negative appendectomies. Hence, in the present study, we aimed to develop a machine learning (ML) model designed to reduce the number of negative appendectomies in pediatric patients with a high clinical probability of acute appendicitis. The model was developed and validated on a registry of 551 pediatric patients with suspected acute appendicitis that underwent surgical treatment. Clinical, anthropometric, and laboratory features were included for model training and analysis. Three machine learning algorithms were tested (random forest, eXtreme Gradient Boosting, logistic regression) and model explainability was obtained. Random forest model provided the best predictions achieving mean specificity and sensitivity of 0.17 ± 0.01 and 0.997 ± 0.001 for detection of acute appendicitis, respectively. Furthermore, the model outperformed the appendicitis inflammatory response (AIR) score across most sensitivity–specificity combinations. Finally, the random forest model again provided the best predictions for discrimination between complicated appendicitis, and either uncomplicated acute appendicitis or no appendicitis at all, with a joint mean sensitivity of 0.994 ± 0.002 and specificity of 0.129 ± 0.009. In conclusion, the developed ML model might save as much as 17% of patients with a high clinical probability of acute appendicitis from unnecessary surgery, while missing the needed surgery in only 0.3% of cases. Additionally, it showed better diagnostic accuracy than the AIR score, as well as good accuracy in predicting complicated acute appendicitis over uncomplicated and negative cases bundled together. This may be useful in centers that advocate for the conservative treatment of uncomplicated appendicitis. Nevertheless, external validation is needed to support these findings.

Similar content being viewed by others

Introduction

Acute appendicitis represents one of the most common surgical emergencies, especially in pediatric patients presenting with abdominal pain1,2. The incidence is relatively stable at 1 per 1000 per year in Western countries, whereas a trend towards an increase in incidence is observed in newly industrialized countries3. Although making the diagnosis is usually straightforward in patients presenting with classical symptoms, such as migrating abdominal pain and nausea, atypical presentations commonly lead to unnecessary explorations and delays in treatment4,5. Burdened by the historical data suggesting high mortality and morbidity in untreated appendicitis, management of suspected appendicitis has been based for a long time on the principle of early exploration of wide indications to prevent perforation6.

A prevailing opinion is that untreated appendicitis will eventually progress to perforation thus causing inevitable worsening in clinical outcomes7. Accordingly, medical professionals use advanced diagnostic modalities, such as ultrasound, computerized tomography (CT) and magnetic resonance imaging (MRI) to correctly identify this pathology.

However, available data suggests that spontaneous resolution of appendicitis is more common than previously thought and that delay in treatment after hospital admission doesn’t increase rates of perforation8. Moreover, insights in the large registries of appendectomies point out that although perforation is associated with increased mortality, mortality rates of negative appendectomies in patients undergoing surgery for non-specific abdominal pain are also increased beyond what could be explained by an underlying condition concealed by the appendectomy9.

In the absence of consistent international guidelines, the diagnosis of appendicitis and concurrent surgery referral is mostly based on clinical data, standard laboratory findings, and ultrasound10. The diagnostic accuracy of such an approach can vary significantly depending on sex, race, and hospital experience, but overall, the incidence of negative appendectomies is around 10–15%11. Several risk scores were developed to aid in the diagnosis of acute appendicitis in a pediatric population: the appendicitis inflammatory response score (AIR), Alvarado score, pediatric appendicitis score (PAS), and the pediatric appendicitis risk calculator (pARC)12. AIR and pARC seem to offer higher specificity and positive predictive values compared to the Alvarado and the PAS scores, yet, the rate of negative appendectomies remains relatively high even with the use of the aforementioned scores13.

Machine learning (ML) recently emerged as a useful modality for the improvement of management strategies in virtually all fields of medicine14. The created learning models leverage large amounts of data to extract complex statistical patterns with predictive power superior to that of standard modalities15. The usefulness of ML models is largely dependent on the amount and quality of data on which it is trained, but also on the adequate setting of model accuracy.

The primary aim of the present study was to develop an ML model which can reduce the number of negative appendectomies in pediatric patients with a high clinical probability of acute appendicitis. A secondary aim was to construct a model that would differentiate patients with complicated acute appendicitis from patients who either have uncomplicated appendicitis or no appendicitis.

Materials and methods

Study design and ethical considerations

The data for this study were gathered from the patient’s records from the department of pediatric surgery, University Hospital of Split, with suspected acute appendicitis who underwent appendectomy. The data was gathered from January 2019 to July 2023. The Ethics Committee of the University Hospital of Split approved the study protocol (approval number 500-03/22-01/188; date of approval November 28, 2022) conforming to the World Health Organization Declaration of Helsinki of 1975 as revised in 2013, and the International Conference on Harmonization Guidelines on Good Clinical Practice. The patients’ anonymity was strictly maintained.

Inclusion and exclusion criteria

All pediatric patients (0–17 years of age) that were referred to urgent surgical treatment under assumption of acute appendicitis were included in the study. Exclusion criteria were age > 17 years, the presence of any significant comorbidity (chronic cardiac, renal, or gastrointestinal condition), body mass index (BMI) ≥ 35 kg/m2, incidental appendectomy, and PHD that is neither appendicitis nor histologically normal appendix (e.g. neuroendocrine tumors or enterobiasis) or if no histopathology report was available.

Study aims and data preparation

We defined three study goals:

-

Development of a model for the prediction of negative and positive acute appendicitis cases based on the PHD.

-

Comparison of performance of the appendicitis prediction model to the acute (AIR) score.

-

The differentiation between complicated from uncomplicated appendicitis cases and negative appendectomies.

The initial set of features contained patient data, features from complete and differential blood counts, biochemistry measures (such as sodium concentration and C-reactive protein—CRP), and clinical examination features (presence of abdominal pain and rebound tenderness or guarding). All patients underwent surgical treatment, which in the majority of cases was done as three port laparoscopic appendectomy. In very few cases standard open appendectomy was performed. Operative technique was chosen on surgeon’s preference.

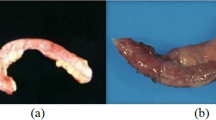

Twenty-two features were included for model training and analysis: age, sex, symptoms duration, height, weight, BMI, body temperature, white blood cells (WBC) count, CRP, neutrophil percentage, lymphocyte percentage, thrombocyte/lymphocyte ratio (TLR), neutrophil/lymphocyte ratio (NLR), mean platelet volume (MPV), mean corpuscular hemoglobin concentration (MCHC), sodium concentration, rebound tenderness, and the presence or absence of signs and symptoms such as vomiting, nausea, and pain migration. The outcome feature was PHD. Patients with confirmed pathohistological diagnosis (PHD) of acute appendicitis were subdivided as having either uncomplicated appendicitis (catarrhal or phlegmonous) or complicated appendicitis (gangrenous or gangrenous-perforated) based on histopathology reports.

Features were excluded if more than 30% of data was missing and/or they were highly correlated with some of the chosen features, such as hemoglobin count, hematocrit, mean corpuscular hemoglobin, etc.

In total, the dataset consisted of 614 pediatric patients. Patients were excluded if more than two missing values were present among the features that were determined to be highly important based on previous studies: neutrophils percentage, lymphocytes count, WBC count, CRP, and sodium concentration features. After applying the above exclusion criteria, 551 patients was included in the final analysis. Among 551 patients, 47 cases were negative for appendicitis, 252 had uncomplicated appendicitis, and 252 had complicated appendicitis, indicating an imbalanced dataset. The final dataset contained missing values as presented in Table 1.

Prediction model training, optimization, and validation

We tested three ML algorithms: random forest, eXtreme gradient boosting (XGBoost), and Logistic regression. The first two algorithms were chosen based on their known effectiveness in dealing with tabular data and imbalanced datasets, while logistic regression was chosen as a baseline model16,17,18.

For model training and validation, the nested cross-validation approach was used, with five-fold inner and outer cross-validation, repeated 10 times (Fig. 1). Consequently, each outer fold was split into training (80%) and test sets (20%), with stratification on the target variables due to the imbalanced nature of the dataset. Inner cross-validation was performed on each outer fold’s training set to tune the hyperparameters and perform threshold shifting. Missing values were imputed by utilizing the Bagged Trees algorithm (using the “step_impute_bag” function from the “recipes” package in the R programming language). To avoid data leakage, the imputation of the validation and test datasets was performed only based on estimates calculated from their training counterparts.

Threshold shifting on the receiver operator characteristic curve (ROC) was used to address the data imbalance. Since our goal was to build a model that improves the detection of negative cases without sacrificing the ability to detect positive cases, we chose to tune our model hyperparameters and perform threshold shifting based on a custom metric that locates the point of highest specificity on the ROC curve for which sensitivity is still 1 (max (specificity/sensitivity) = 1).

The threshold applied to the test set is determined as the second lowest threshold obtained from inner cross-validation. In terms of retaining high sensitivity on the test set, this is a more conservative approach compared to taking the mean threshold. In other words, we imposed a constraint that the model should be 100% accurate in diagnosing true appendicitis for patients in the training data. This way, we can be as certain as possible that when the model outputs a negative diagnosis, it is not a false negative, but a true negative.

The mean and standard error of the target metrics were obtained by averaging the results from 50 outer-fold test sets (five-fold outer cross-validation repeated 10 times). The best model was chosen based on the mean specificity score while retaining maximum sensitivity.

Modeling was performed using Python (version 3.9.5, Python Software Foundation, Wilmington, DE, USA) and R Programming Languages (R Core Team, 2023, Vienna, Austria). Python packages used were “numpy”, “pandas”, “scikit-learn”, and “xgboost”. Within the R ecosystem, “dplyr”, “tidyr”, “purrr”, “ggplot2”, “plotly”, “tidymodels”, “ranger”, “xgboost”, and “fastshap” libraries were used. For random forest models, tuned hyperparameters were the number of variables to possibly be used for splitting at each node, and minimal node size to split at. For XGBoost, aside from the two hyperparameters already mentioned, the learning rate, gamma, number of trees, subsample ratio and maximum tree depth was tuned as well.

Feature importance and model explainability

The significance of individual features was evaluated using shapley additive explanation (SHAP) values19. The values were obtained using the “fastshap” package with 10,000 simulations. By focusing on a specific feature within many feature coalitions, Shapley values measure the impact of each feature on shifting the prediction from the average model prediction to the final model output. Consequently, these values facilitate the explanation of predictions from ML models.

Statistical analysis

The normality of data distributions was tested using the Kolmogorov–Smirnov test. For two group comparisons, the significance of differences was assessed using the t-test for normally distributed data or the Mann–Whitney test for deviations from normality. For comparisons involving more than two groups, ANOVA was used when data from all groups was normally distributed, and the Kruskal–Wallis test otherwise. The Chi-squared test was used for non-numerical features. A p-value threshold of 0.05 was used when considering statistical significance. The R programming language was used for all statistical analyses and visualizations.

Ethical approval

The Ethics Committee of the University Hospital of Split approved the study protocol (approval no: 500-03/22-01/188; date of approval: November 28, 2022). Informed consent was not applicable for this study.

Results

Patient characteristics

In total, 551 patients were included in model training and evaluation, among which 252 patients had uncomplicated appendicitis, 252 had complicated appendicitis, and 47 patients had a negative PHD. Patient features and characteristics are described in Tables 2, 3.

Model for appendicitis prediction

The goal of this research was to develop a model that can reduce the number of negative appendectomies (appendectomies due to misdiagnosed appendicitis). Since surgical appendectomy is a low-risk procedure, it is considered the gold standard for treating acute appendicitis. Nevertheless, although rare, complications occurring during or after surgery are still possible.

As an alternative, conservative antibiotic treatment can be used. This approach results in low morbidity and mortality rates with a moderate recurrence rate. However, it's important to note that delaying surgical intervention can potentially lead to the development of complications. Since complications due to delayed surgery pose a more significant risk for the patient compared to surgical risks, surgery is usually the preferred option.

Taking the above facts into consideration, it is clear that the repercussion of false negative diagnoses is much higher than the cost of false positives. Consequently, while building a model for better identification of false positives, care should be taken to keep the number of false negatives as low as possible.

Following this logic, we chose to tune our model hyperparameters and thresholds with a custom metric that uses threshold shifting on the ROC curve to achieve the highest possible specificity score, while retaining maximum sensitivity. The threshold applied to the test set is determined as the second lowest threshold obtained from inner cross-validation. In terms of retaining high sensitivity on the test set, this is a more conservative approach compared to taking the mean threshold. In other words, we imposed a constraint that the model should be 100% accurate in diagnosing true appendicitis for patients in the training data. This way, we can be as certain as possible that when the model outputs a negative diagnosis, it is not a false negative, but a true negative.

Model characteristics

Labeling negative PHD findings as zeros, and positive (uncomplicated and complicated) as ones, we performed binary classification according to the above criteria using three different models—logistic regression, XGBoost boosted trees, and random forest (Table 4).

The best results were obtained with the random forest model, with a mean specificity and sensitivity of 0.17 ± 0.01 and 0.997 ± 0.001, respectively (Fig. 2). It is important to note that the false negative findings are practically all uncomplicated appendicitis cases, not complicated (gangrenous) ones. We can quantify this by calculating sensitivity separately for uncomplicated and complicated appendicitis patient groups (Table 5). The sensitivity was 0.995 ± 0.002 for uncomplicated appendicitis detection and 0.9996 ± 0.0004 for complicated appendicitis detection.

To better understand what the model has learned, we calculated Shapley values on one of the outer fold models to determine feature importance (Fig. 3). The top 10 most important features seem to have been CRP, WBC count, symptoms duration, neutrophils percentage, sodium concentration, TLR, lymphocytes percentage, MCHC, NLR, and pain migration.

Feature importance based on approximate Shapley values for appendicitis prediction. CRP C-reactive protein, WBC white blood cell, TLR thrombocyte to lymphocyte ratio, MCHC mean corpuscular hemoglobin concentration, NLR neutrophil to lymphocyte ratio, MPV mean platelet volume, BMI body mass index, RDW red blood cell distribution width.

Comparison to the AIR score

To confirm the added value of our model, we also determined the sensitivities and specificities of AIR scores over all possible score thresholds by averaging values over the same 50 outer folds used in the evaluation of our model (Table 6, Fig. 4a). Performance for the threshold of AIR score greater than 0 in terms of sensitivity was similar to ours, but the specificity was 0. For an AIR score threshold set to values greater than 1, the specificity was similar to that of our model, but sensitivity was significantly lower (p = 5.674\(\cdot\)10−14, paired student’s t-test). Aside from the region of high sensitivity, our model outperformed the AIR score across most other sensitivity–specificity combinations as well (Fig. 4b).

Figure 5 shows the relationship between the feature values and model predictions for the six most important features.

In terms of thresholds at which feature values increase the probability of appendicitis, we see a lot of similarity to AIR score cutoffs—around 10 mg/L for CRP, 10 × 109/L for WBC count, and 80% for neutrophils percentage.

Model for prediction of complicated appendicitis

To account for the possibility of using conservative antibiotic treatment another classification model was used in which negative cases of appendicitis were bundled with uncomplicated ones and labeled as zeros, and only complicated ones were labeled as ones. Keeping everything else the same as in the above approach, the random forest model again provided the best predictions (Table 7), with a joint mean specificity of 0.129 ± 0.009 at the sensitivity of 0.994 ± 0.002 (Fig. 6). This model should be used when opting for the conservative approach to appendicitis treatment in which only patients with complicated cases of appendicitis undergo immediate surgery.

If we separate the specificities for negative and uncomplicated cases of appendicitis (Table 8), we obtain a specificity of 0.25 ± 0.02 for negative PHDs and 0.107 ± 0.008 for uncomplicated cases of appendicitis.

The top 10 most important features according to Shapley values seem to have been CRP, sodium concentration, NLR, lymphocyte percentage, symptoms duration, WBC count, neutrophils percentage, rebound tenderness, body temperature and vomiting (Fig. 7).

Feature importance based on approximate Shapley values for prediction of complicated appendicitis. CRP C-reactive protein, NLR neutrophil to lymphocyte ratio, WBC white blood cell, TLR thrombocyte to lymphocyte ratio, RDW red blood cell distribution width, MPV mean platelet volume, MCHC mean corpuscular hemoglobin concentration, BMI body mass index.

Discussion

This study presents an ML model primarily aimed at the reducing negative appendectomies in the pediatric population. Although similar models have so far been developed to support the diagnosis of acute appendicitis, we believe that many of them have limited clinical usefulness as most models were steered towards higher specificity at the cost of sensitivity, inappropriately increasing the risk of misdiagnosing acute appendicitis. Additionally, some of the studies developed models with data from patients who did not have PHD findings, since they did not receive surgical treatment, which has significant impact on development of the ML models and results10,20,21,22,23. Furthermore, in some studies, the issue of finding the optimal balance between sensitivity and specificity was not discussed, and the authors believe that this is crucial if such models are to be used in clinical decision-making21,23.

The model was trained using data from pediatric patients who were already highly suspected of having appendicitis based on clinical judgment and who underwent surgery. In this high-risk group, all patients who actually had appendicitis were considered “true positives”, while those who turned out not to have appendicitis after surgery were “false positives”. There were no patients in the dataset who didn’t have surgery, so the model couldn’t learn to identify “true negatives” (no appendicitis, no surgery) or “false negatives” (appendicitis missed by the model). This model is intended for pediatric patients that a surgeon would typically recommend operating owing to high suspicion for appendicitis. In this population, our model would miss appendicitis in only 3 out of 1000 cases (99.7% sensitivity). In comparison, a hypothetical surgeon operating on all 1000 high-risk patients based on clinical judgment alone would correctly identify all appendicitis cases but would be wrong about the need for surgery 8.5% of the time (0% specificity). Our model achieved 17% specificity in this high-risk group, meaning that for every 1000 patients, it would prevent 17 unnecessary surgeries in children who didn’t actually have appendicitis, while still catching almost all true appendicitis cases (missing only 3). In summary, for pediatric patients already highly suspected of having appendicitis, our model could help prevent some unnecessary surgeries compared to current clinical judgment, while missing very few cases of true appendicitis.

It is important to note that there is a much higher chance that the false negative model predictions would be uncomplicated appendicitis cases, as evident from very high sensitivity in the case of gangrenous appendicitis detection (99.96%). This is relevant since some authors suggest that only complicated appendicitis should be immediately surgically treated, while uncomplicated cases can initially be treated conservatively using antibiotics (approximately 20–30% of these patients will end up in surgery)24,25. Therefore, provided that we apply antibiotic therapy in patients with high-risk but negative ML model results, the sensitivity could be increased even further.

This line of thought led us to test another classification model in which negative appendectomies were bundled with uncomplicated, and tested against complicated. Keeping everything else the same as in the above-noted approach, we obtained comparable sensitivity (99.4%), and somewhat lower specificity (12.9%). Theoretically, such an ML model could be used when opting for the alternative approach to appendicitis treatment in which only patients with complicated appendicitis undergo immediate surgery, whereas uncomplicated ones are initially treated with antibiotics. This approach would increase the number of false positives saved from surgery at the cost of assigning negative outputs to uncomplicated appendicitises. However, since the mislabeled uncomplicated appendicitises were those with the lowest probabilities of appendicitis according to the model, the proportion of patients who were labeled as zeros by the model and have to go to surgery after conservative treatment would most likely be lower than presumed 20%. In this regard, it is worth noting that increasing evidence suggests that not all patients with appendicitis will progress to perforation and that perforation occurs predominantly before the patients arrive at the hospital8,26. In addition, the mortality rate seems to be lower after perforation and larger after negative appendectomy than previously thought, indicating the importance of correct over rapid diagnosis6. Moreover, although we did not perform cost-benefit analysis, it is reasonable to suggest that reducing the number of unnecessary appendectomies would result in lower overall costs, even when accounting for missed cases.

In order to further establish the applicability of our appendicitis prediction model, we compared it to the AIR score27. To the best of our knowledge, this study is the first of its kind to make this comparison. For an AIR score threshold set to values greater than 1, the specificity was similar to that of our model, but at the cost of lower sensitivity (99.7% vs. 97.9%). On the other hand, if the cutoff for the low probability group was set at 5, as suggested by the authors of the AIR score, our model would significantly outperform the AIR score in terms of sensitivity, while still providing useful specificity values (99.7%/17% vs. 31%/92%)27. Nonetheless, as we observed a lot of similarity to AIR score cutoffs (around 10 mg/dL for CRP, 10 × 109/L for WBC count, and 80% for Neutrophils), the AIR score might be useful in settings where one needs a quick approximation of appendicitis or when only basic computational devices are available. However, the ability of ML models to shift the threshold values when necessary and capture nonlinear feature interactions results in superior model performance.

Several other authors developed ML models aimed at improving of diagnosis and management of patients with suspected acute appendicitis10,22,28,29,30,31. Unlike our study in which we aimed to preserve sensitivity as much as possible, other authors opted for high specificity and relatively low sensitivity despite similar AUC scores32. Furthermore, not all models were built based on definitive histopathological data for all patient cases, whereas some models were built on different populations (pediatric and non-pediatric population) or had significantly different numbers of false positive appendectomies, thus limiting our ability to compare the results10,22. Overall, it seems that different authors created models that are of similar accuracy to ours, but our model mostly stands out because of its aforementioned clinical relevance. On the other hand, studies defining the decision-making process in appendicitis using artificial neural networks (ANNs) yielded impressive results (91% sensitivity with 85% specificity, and 100% sensitivity with 97% specificity) but ANNs are burdened with significant data overfitting, and hence, without proper validation can lead to overly optimistic results33,34,35. For example, the study by Prabhudesai et al. involved training and testing an ANN on a limited dataset of only 60 patients34. The absence of methodologies such as bootstrapping and cross-validation in their approach significantly increases the risk of overfitting in the model34,36.

The present study is burdened by several limitations. Firstly, data was collected retrospectively. Secondly, the registry contains the data from only one hospital, and the results should thus be validated on other populations to provide better generalizability. Finally, the dataset was overall imbalanced towards positive appendicitis diagnosis, but this issue cannot inherently be resolved, given the restrictive nature of clinical decision-making for appendectomy referral. On the other hand, the greatest strengths of this study include the fact that the model is based on definitive histopathological reports, but does not require radiological techniques, such as ultrasound and CT, thus making the model widely available, even in underdeveloped regions. Finally, unlike most available ML models, our model maintained extremely high sensitivity, thus ascertaining that virtually every patient with acute appendicitis is adequately recognized as a candidate for appendectomy.

In conclusion, this study presents a machine learning (ML) model designed to reduce the number of unnecessary appendectomies in the pediatric population at high risk of acute appendicitis. The developed ML model aims to achieve high sensitivity to minimize the risk of missing an acute appendicitis diagnosis. Moreover, the model demonstrated superior diagnostic accuracy compared to the AIR score in this population. These findings suggest the potential use of ML models in assisting clinicians in making accurate decisions. Additionally, by presenting a model that predicts complicated (gangrenous) appendicitis cases, the study addresses the needs of centers that endorse performing emergency surgery only in patients with complicated appendicitis. In these centers, implementing such a model could be beneficial for appendicitis management.

Ultimately, the developed ML model could prevent 17% of high-risk patients from undergoing unnecessary appendectomies while maintaining a low rate of missed diagnoses (0.3%). However, to determine whether these models are beneficial in the diagnostic approach to patients with suspected appendicitis, they need to be externally validated in large cohorts.

Data availability

The data that support the findings of this study are available from the corresponding author, upon reasonable request.

Abbreviations

- AIR score:

-

Appendicitis inflammatory response score

- ANN:

-

Artificial neural networks

- AUC:

-

Area under curve

- BMI:

-

Body mass index

- MCHC:

-

Mean corpuscular hemoglobin concentration

- ML:

-

Machine learning

- MPV:

-

Mean platelet volume

- NLR:

-

Neutrophil to lymphocyte ratio

- pARC:

-

Pediatric appendicitis risk calculator

- PAS:

-

Pediatric appendicitis score

- PHD:

-

Pathohistological diagnosis

- RDW:

-

Red blood cell distribution width

- ROC:

-

Receiver operator characteristic curve

- SHAP:

-

Shapley additive explanation

- TLR:

-

Thrombocyte to lymphocyte ratio

- WBC:

-

White blood cells

- XGBoost:

-

EXtreme gradient boosting

References

Abu Foul, S. et al. Is early appendectomy in adults diagnosed with acute appendicitis mandatory? A prospective study. World J. Emerg. Surg. 14, 2 (2019).

Becker, C. & Kharbanda, A. Acute appendicitis in pediatric patients: An evidence-based review. Pediatr. Emerg. Med. Pract. 16, 1–20 (2019).

Ferris, M. et al. The global incidence of appendicitis: A systematic review of population-based studies. Ann. Surg. 266, 237–241 (2017).

Snyder, M. J., Guthrie, M. & Cagle, S. Acute appendicitis: Efficient diagnosis and management. Am. Fam. Phys. 98, 25–33 (2018).

Wang, Z. H., Ye, J., Wang, Y. S. & Liu, Y. Diagnostic accuracy of pediatric atypical appendicitis: Three case reports. Medicine 98, e15006 (2019).

Jumah, S. & Wester, T. Non-operative management of acute appendicitis in children. Pediatr. Surg. Int. 39, 11 (2022).

Howell, E. C., Dubina, E. D. & Lee, S. L. Perforation risk in pediatric appendicitis: Assessment and management. Pediatr. Health Med. Ther. 9, 135–145 (2018).

Di Saverio, S. et al. Diagnosis and treatment of acute appendicitis: 2020 update of the WSES Jerusalem guidelines. World J. Emerg. Surg. 15(1), 27 (2020).

Coccolini, F. et al. Conservative treatment of acute appendicitis. Acta Biomed 89, 119–134 (2018).

Marcinkevics, R., Wolfertstetter, P. R., Wellmann, S., Knorr, C. & Vogt, J. E. Using machine learning to predict the diagnosis, management and severity of pediatric appendicitis. Front. Pediatr. 9, 662183 (2021).

Jukić, M., Nizeteo, P., Matas, J. & Pogorelić, Z. Trends and predictors of pediatric negative appendectomy rates: A single-centre retrospective study. Children 10, 887 (2023).

Pogorelić, Z., Rak, S., Mrklić, I. & Jurić, I. Prospective validation of Alvarado score and pediatric appendicitis score for the diagnosis of acute appendicitis in children. Pediatr. Emerg. Care 31, 164–168 (2015).

Pogorelić, Z. et al. Validity of appendicitis inflammatory response score in distinguishing perforated from non-perforated appendicitis in children. Children 8, 309 (2021).

Sim, J. Z. T., Fong, Q. W., Huang, W. & Tan, C. H. Machine learning in medicine: What clinicians should know. Singap. Med. J. 64, 91–97 (2023).

Shin, S. et al. Machine learning vs. conventional statistical models for predicting heart failure readmission and mortality. ESC Heart Fail. 8, 106–115 (2021).

Chen, C., Liaw, A. & Breiman, L. Using Random Forest to Learn Imbalanced Data 1–12 (University of California, 2014).

Velarde G, Sudhir A, Deshmane S, Deshmunkh A, Sharma K, Joshi V. Evaluating XGBoost for Balanced and Imbalanced Data: Application to Fraud Detection. arXiv preprint. https://arxiv.org/abs/2303.15218 [cs.LG]. Accessed 12 Dec 2023. (2023).

More, A. S. & Rana, D. P. An experimental assessment of random forest classification performance improvisation with sampling and stage wise success rate calculation. Procedia Comput. Sci. 172, 1–8 (2020).

Lundberg S, Lee SI. A Unified Approach to Interpreting Model Predictions. arXiv preprint. https://arxiv.org/abs/1705.07874 [cs.AI]. Accessed 12 Dec 2023. (2017).

Phan-Mai, T. A. et al. Validity of machine learning in detecting complicated appendicitis in a resource-limited setting: Findings from Vietnam. Biomed. Res. Int. 2023, 5013812 (2023).

Mijwil, M. M. & Aggarwal, K. A diagnostic testing for people with appendicitis using machine learning techniques. Multimed. Tools Appl. 81, 7011–7023 (2022).

Akbulut, S. et al. Prediction of perforated and nonperforated acute appendicitis using machine learning-based explainable artificial intelligence. Diagnostics 13, 1173 (2023).

Bhandarkar, S. et al. Emergent applications of machine learning for diagnosing and managing appendicitis: A state-of-the-art review. Surg. Infect. 1(25), 7–18 (2024).

Yeh, D. D. et al. Multicenter study of the treatment of appendicitis in America: Acute, perforated, and gangrenous (MUSTANG), an EAST multicenter study. Ann. Surg. 273(3), 548–556 (2021).

Minneci, P. C. et al. Association of nonoperative management using antibiotic therapy vs laparoscopic appendectomy with treatment success and disability days in children with uncomplicated appendicitis. JAMA 324, 581–593 (2020).

Li, J. Revisiting delayed appendectomy in patients with acute appendicitis. World J. Clin. Cases 9, 5372–5390 (2021).

Andersson, M. & Andersson, R. E. The appendicitis inflammatory response score: A tool for the diagnosis of acute appendicitis that outperforms the Alvarado score. World J. Surg. 32, 1843–1849 (2008).

Reismann, J. et al. Diagnosis and classification of pediatric acute appendicitis by artificial intelligence methods: An investigator-independent approach. PLoS One 14, e0222030 (2019).

Akmese, O. F., Dogan, G., Kor, H., Erbay, H. & Demir, E. The use of machine learning approaches for the diagnosis of acute appendicitis. Emerg. Med. Int. 2020, 7306435 (2020).

Aydin, E. et al. A novel and simple machine learning algorithm for preoperative diagnosis of acute appendicitis in children. Pediatr. Surg. Int. 36, 735–742 (2020).

Stiel, C. et al. The modified Heidelberg and the AI appendicitis score are superior to current scores in predicting appendicitis in children: A two-center cohort study. Front. Pediatr. 8, 592892 (2020).

Afzal, B. et al. Is it possible to predict the severity of acute appendicitis? Reliability of predictive models based on easily available blood variables. World J. Emerg. Surg. 18, 10 (2023).

Hsieh, C. H. et al. Novel solutions for an old disease: Diagnosis of acute appendicitis with random forest, support vector machines, and artificial neural networks. Surgery 149, 87–93 (2011).

Prabhudesai, S. G. et al. Artificial neural networks: Useful aid in diagnosing acute appendicitis. World J. Surg. 32, 305–309 (2008).

Park, S. H. & Han, K. Methodologic guide for evaluating clinical performance and effect of artificial intelligence technology for medical diagnosis and prediction. Radiology 286, 800–809 (2018).

Ghareeb, W. M., Emile, S. H. & Elshobaky, A. Artificial intelligence compared to Alvarado scoring system alone or combined with ultrasound criteria in the diagnosis of acute appendicitis. J. Gastrointest. Surg. 26(3), 655–658 (2022).

Author information

Authors and Affiliations

Contributions

IM participated in conceptualization, methodology, investigation, formal analysis and original draft preparation. ZB participated in visualization, investigation, formal analysis and original draft preparation. MK participated in visualization, investigation, formal analysis and original draft preparation. JV participated in visualization, investigation, formal analysis and reviewing and editing of the manuscript. KB participated in visualization, investigation, formal analysis and original draft preparation. ZP participated in conceptualization, resources, project administration and reviewing and editing of the manuscript. JB participated in conceptualization, resources, project administration and reviewing and editing of the manuscript.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Males, I., Boban, Z., Kumric, M. et al. Applying an explainable machine learning model might reduce the number of negative appendectomies in pediatric patients with a high probability of acute appendicitis. Sci Rep 14, 12772 (2024). https://doi.org/10.1038/s41598-024-63513-x

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-024-63513-x

- Springer Nature Limited