Abstract

Remote sensing image fusion aims to obtain a high-resolution multispectral (HRMS) image that exhibits neither spatial nor spectral distortion relative to the single-source images. In this paper, a novel fusion algorithm based on Bayesian estimation for remote sensing images is proposed from the new perspective of risk decisions. An observation model based on Bayesian estimation is constructed for remote sensing image fusion. Three categories of probabilities, namely prior, conditional and posterior probabilities, are calculated after an intensity-hue-saturation (IHS) transformation is applied to the original low-resolution MS image. To obtain the desired HRMS image, a fusion rule based on Bayesian decisions uses the corrected posterior probabilities to decide which pixels to select from the panchromatic (PAN) image and from the intensity component of the MS image. The selected pixels constitute a new component that is fed into the inverse IHS transformation to yield the fused image. Extensive experiments on the Pleiades, WorldView-3, and IKONOS datasets demonstrate the effectiveness of the proposed method.


Introduction

Due to technical limitations, satellites such as Pleiades, WorldView-3 and IKONOS cannot directly acquire high-spatial-resolution multispectral (HRMS) images. Instead, they carry both panchromatic (PAN) and multispectral (MS) sensors. PAN sensors provide high-spatial-resolution images without spectral information, whereas MS sensors provide low-spatial-resolution multispectral (LRMS) images1. However, HRMS images are needed to describe the ground truth in applications such as map updating, ocean monitoring and land-use classification2. Remote sensing image fusion is the most commonly used way to obtain them: it integrates the complementary information in PAN and MS images to produce HRMS images3.

Remote sensing image fusion methods mainly include component substitution (CS), multiresolution analysis (MRA), and model-based methods. CS methods attempt to find a new high-spatial-resolution component similar to the PAN image, or directly replace one of the components obtained by transforming the MS image with the PAN image. Examples include the intensity-hue-saturation (IHS) transform4, principal component analysis (PCA)5 and the Gram‒Schmidt (GS) method6. Such methods generally yield fused images with clear details, textures and contours but large color distortions. In contrast, MRA methods decompose or transform the input images at multiple scales and fuse them scale by scale, which preserves spectral information effectively but loses some high-frequency details. Examples include the contourlet transform (CT), the shearlet transform (ST), and their nonsubsampled versions NSCT and NSST7.

In recent years, model-based methods have received much attention. Deep-learning-based methods are the most popular, for example the multiscale and multidepth convolutional neural network (CNN) for pansharpening8, the deep residual network for pansharpening9, pansharpening via a conditional invertible neural network10, and Laplacian pyramid networks for multispectral pansharpening11. Such methods learn complex features well and can produce high-quality fused images, but CNN-based deep models require complex training procedures and large amounts of resources, which makes them time-consuming. For example, in the early days of deep learning, AlexNet had only 5 convolutional layers12, whereas the well-known GoogLeNet has 22 layers and the residual network (ResNet) has 152 layers13. Such networks require huge amounts of data for training and testing, and a single training session usually takes 2–3 days. In contrast, methods based on Bayesian theory or Markov random fields (MRFs) exploit the attributes of remote sensing images to estimate the change state of pixels. They make it easy to construct efficient, high-quality fusion models with simple algorithms and fast computation14. For example, Khademi et al.15 developed a remote sensing image fusion method that combines an MRF-based prior model with a Bayesian framework, Upla et al.16 used an MRF to model the spatial dependencies among pixels, and Yang et al.17 proposed an efficient and high-quality pansharpening model based on conditional random fields. These methods fuse remote sensing images effectively with simple computations; for example, the method of Yang et al.17 obtains a fused HRMS image in only 4.6 s.

To overcome the spatial or spectral distortion caused by the above-mentioned methods, a new Bayesian decision-based fusion algorithm for remote sensing images is presented. The proposed algorithm first constructs an observation model based on Bayesian estimation after performing an IHS transformation on the original LRMS image. Bayesian decision theory is then used to design a fusion rule that yields a new high-spatial-resolution component. After this component replaces the I component provided by the forward IHS transformation, an inverse IHS transformation is performed to obtain the fused image. The main contributions of this paper are twofold: (1) we define an observation model based on Bayesian estimation for remote sensing image fusion, and (2) we introduce Bayesian decision theory to design a fusion rule that produces a new I component, which replaces the original I component to yield a high-quality HRMS image.

Bayesian decision theory

Bayesian decision theory estimates unknown states with subjective probabilities under incomplete information, then modifies their occurrence probabilities with the Bayesian formula, and finally makes optimal decisions by using the modified probability10.

The basic ideas of Bayesian decision theory are as follows: (1) determine the prior probabilities, i.e., the probabilities of the various natural states that are determined before any experiment or investigation is conducted; (2) use the Bayesian formula to convert the prior probabilities into posterior probabilities, yielding a new probability distribution over the natural states according to the information derived from experiments or investigations; and (3) make decisions according to the posterior probabilities. Let \(\theta = (\theta_{1}, \theta_{2}, \ldots, \theta_{i}, \ldots, \theta_{d})\) represent \(d\) kinds of natural states, and let \(P(\theta_{i})\) be the prior probability of the occurrence of natural state \(\theta_{i}\). Let \(x\) represent the experimental or investigational result, and let \(P(x|\theta_{i})\) denote the probability that the result is exactly \(x\) in state \(\theta_{i}\). These results carry information about the natural state \(\theta\), which can be used to understand and correct the occurrence probabilities of the natural states. The corrected probability is defined as follows:

$$P(\theta_{i}|x) = \frac{P(x|\theta_{i})P(\theta_{i})}{\sum_{j = 1}^{d} P(x|\theta_{j})P(\theta_{j})}$$(1)

Equation (1) is the Bayes formula. Generally, the estimates \(P(\theta_{1}|x), P(\theta_{2}|x), \ldots, P(\theta_{d}|x)\) of the occurrence probabilities of the natural states \(\theta_{1}, \theta_{2}, \ldots, \theta_{d}\) are more accurate than the prior probability distribution. We call \(P(\theta_{i}|x)\) the posterior probability of \(\theta_{i}\) given \(x\). Decision-makers make decisions based on the values of \(P(\theta_{i}|x)\), which is called a Bayesian decision: the higher the posterior probability of a state, the better the corresponding scheme.
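To make the decision step concrete, the following minimal Python sketch applies Eq. (1) to a toy problem with two natural states and then picks the state with the largest posterior probability. The prior and likelihood values are invented purely for illustration, and the helper names `posterior` and `bayes_decision` are hypothetical, not part of the original method.

```python
def posterior(priors, likelihoods):
    """Bayes formula: P(theta_i | x) = P(x | theta_i) P(theta_i) / sum_j P(x | theta_j) P(theta_j)."""
    joint = [p * l for p, l in zip(priors, likelihoods)]  # numerators P(x | theta_i) P(theta_i)
    evidence = sum(joint)                                  # denominator P(x)
    return [j / evidence for j in joint]


def bayes_decision(priors, likelihoods):
    """Return the index of the state with the highest posterior, plus all posteriors."""
    post = posterior(priors, likelihoods)
    best = max(range(len(post)), key=post.__getitem__)
    return best, post


# Toy numbers (hypothetical): state 0 is more likely a priori,
# but the observation x is much more probable under state 1.
best, post = bayes_decision(priors=[0.7, 0.3], likelihoods=[0.2, 0.9])
print(best, [round(p, 3) for p in post])  # 1 [0.341, 0.659]
```

Here the observation reverses the prior preference, which is exactly the correction of occurrence probabilities described above.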

Proposed method

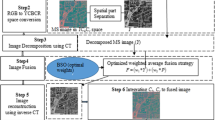

It is well known that an MS image can be decomposed into intensity (I), hue (H) and saturation (S) components by the IHS transformation18. The purpose of this study is to design a novel fusion rule that integrates the PAN image and the \(I\) component of the MS image into a new intensity component \(I^{new}\), and then replaces \(I\) with \(I^{new}\) to obtain the desired HRMS image. However, when the PAN image and the \(I\) component of the MS image are fused, there are two or more candidate pixels at each location, so a deterministic judgement cannot decide which image should supply the fused pixel at that location. The key problem is therefore how to choose pixels from the PAN image and the \(I\) component of the MS image to construct \(I^{new}\). Bayesian decision theory can effectively solve this uncertain problem. In this section, we construct \(I^{new}\) based on Bayesian decisions and obtain the fused image by the inverse IHS transform. The framework of the proposed method is illustrated in Fig. 1.

Flowchart of the proposed approach. LRMS is the upsampled MS image; IHS is the IHS transformation; H and S are the hue and saturation components; \(\overline{\mathbf{Z}}\) is the class of the fused \(I\) component; \(P(PAN_{(i,j)})\) and \(P(\mathbf{I}_{(i,j)})\) are the prior probabilities of the pixel at coordinate \((i,j)\) in the PAN image and the I component, respectively; \(P(\overline{\mathbf{Z}}|PAN_{(i,j)})\) and \(P(\overline{\mathbf{Z}}|\mathbf{I}_{(i,j)})\) are the conditional probabilities that the pixel at coordinate \((i,j)\) in the PAN image and in the I component, respectively, belongs to class \(\overline{\mathbf{Z}}\); \(P(PAN_{(i,j)}|\overline{\mathbf{Z}})\) and \(P(\mathbf{I}_{(i,j)}|\overline{\mathbf{Z}})\) are the posterior probabilities of the pixel at coordinate \((i,j)\) in the PAN image and the I component, respectively; \(I^{new}\) is the new \(I\) component; and FMS is the fused image.

As shown in Fig. 1, we first upsample the MS image by a factor of 4 in each direction and interpolate it to obtain the LRMS image, whose size equals that of the corresponding PAN image. Second, we apply the IHS transformation to obtain the \(I\) component of the LRMS image. Third, we normalize the PAN image and the \(I\) component of the LRMS image to obtain the prior probabilities of the natural states, i.e., the PAN image and the \(I\) component of the LRMS image. Fourth, considering that the intensity variation of a single pixel causes spectral variation in its neighbouring pixels, we divide the PAN image and the \(I\) component of the LRMS image into V patches with a sliding-window technique, and calculate from \(y_{v}^{pan}\) and \(y_{v}^{I}\) the conditional probabilities that the result is exactly the class \(\overline{\mathbf{Z}}\) of the fused \(I\) component. Fifth, we use the Bayesian formula to calculate the probabilities that the pixels in the PAN image or in the \(I\) component of the LRMS image are selected, and select the pixels with the higher probability to constitute the new component \(I^{new}\). In this way, the three categories of probabilities, namely prior, conditional and posterior probabilities, are calculated after the IHS transformation of the LRMS image. Finally, we replace \(I\) with \(I^{new}\) and obtain FMS (the fused image) by the inverse IHS transform. Each step is described in detail below.
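The first step above does not specify the interpolation kernel. As an illustration only, the sketch below assumes separable bilinear interpolation implemented with NumPy's `np.interp`; the function name `upsample_bilinear` is hypothetical, and other kernels (for example, bicubic) would be equally valid choices.

```python
import numpy as np


def upsample_bilinear(band, factor=4):
    """Separable bilinear interpolation of a 2-D band by an integer factor."""
    h, w = band.shape
    # Output sample positions in input-pixel coordinates (pixel centres aligned).
    ys = (np.arange(h * factor) + 0.5) / factor - 0.5
    xs = (np.arange(w * factor) + 0.5) / factor - 0.5
    # Interpolate along each row (x direction) ...
    rows = np.stack([np.interp(xs, np.arange(w), r) for r in band])            # (h, w*factor)
    # ... then along each column (y direction); np.interp clamps at the borders.
    return np.stack([np.interp(ys, np.arange(h), c) for c in rows.T], axis=1)  # (h*factor, w*factor)


# A 4-band 64 x 64 MS image becomes a 256 x 256 LRMS image, matching the PAN size.
ms = np.random.default_rng(0).random((4, 64, 64))
lrms = np.stack([upsample_bilinear(b) for b in ms])
print(lrms.shape)  # (4, 256, 256)
```

Because bilinear interpolation forms convex combinations of neighbouring pixels, the upsampled values never leave the range of the input band.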

Observation model

Let the observed PAN image of size \(m \times n\) be arranged as a matrix \(\mathbf{PAN} = [(x_{1,1}, x_{1,2}, \ldots, x_{1,n}), (x_{2,1}, x_{2,2}, \ldots, x_{2,n}), \ldots, (x_{m,1}, x_{m,2}, \ldots, x_{m,n})]\), where \(x_{i,j}\) is the value of the pixel at location \((i,j)\). Analogously, \(\mathbf{MS} = [\mathbf{Q}_{1}, \mathbf{Q}_{2}, \ldots, \mathbf{Q}_{K}]\) denotes the observed MS image, where \(\mathbf{Q}_{k} = [(q_{1,1}^{k}, q_{1,2}^{k}, \ldots, q_{1,n'}^{k}), (q_{2,1}^{k}, q_{2,2}^{k}, \ldots, q_{2,n'}^{k}), \ldots, (q_{m',1}^{k}, q_{m',2}^{k}, \ldots, q_{m',n'}^{k})]\) is the observed \(k\)th MS band of size \(m' \times n'\). Correspondingly, the HRMS image is defined as \(\mathbf{HRMS} = [\mathbf{F}_{1}, \mathbf{F}_{2}, \ldots, \mathbf{F}_{K}]\), where \(\mathbf{F}_{k} = [(f_{1,1}^{k}, f_{1,2}^{k}, \ldots, f_{1,n}^{k}), (f_{2,1}^{k}, f_{2,2}^{k}, \ldots, f_{2,n}^{k}), \ldots, (f_{m,1}^{k}, f_{m,2}^{k}, \ldots, f_{m,n}^{k})]\) is the \(k\)th HRMS band of size \(m \times n\). Generally, there is a linear relationship between \(\mathbf{PAN}\), \(\mathbf{LRMS}\) and \(\mathbf{HRMS}\)19, which can be expressed as follows:

where \(\phi\) is a low-pass filtering or downsampling operation, \(u\) and \(\tau\) are noise terms, and \(\varphi\) is a sparse matrix whose entries are spectral response functions describing the spectral relationship between the PAN and MS images.
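The observation model can be simulated as follows, which is also how reduced-resolution test pairs are commonly generated. This sketch assumes that \(\phi\) is a box low-pass filter followed by decimation (implemented as block averaging), that \(\varphi\) reduces to one spectral-response weight per band, and that \(u\) and \(\tau\) are zero-mean Gaussian noise; none of these choices is specified in the text, and the function name `degrade` is hypothetical.

```python
import numpy as np


def degrade(hrms, weights, factor=4, noise_std=0.0, seed=None):
    """Simulate LRMS = phi(HRMS) + u and PAN = varphi(HRMS) + tau for a (K, m, n) HRMS cube."""
    rng = np.random.default_rng(seed)
    k, m, n = hrms.shape  # m and n must be divisible by factor
    # phi (assumed): factor x factor block averaging = box low-pass filter + decimation.
    lrms = hrms.reshape(k, m // factor, factor, n // factor, factor).mean(axis=(2, 4))
    # varphi (assumed): weighted sum of the K bands with spectral-response weights.
    pan = np.tensordot(weights, hrms, axes=1)
    # u and tau (assumed): additive zero-mean Gaussian noise.
    lrms = lrms + noise_std * rng.standard_normal(lrms.shape)
    pan = pan + noise_std * rng.standard_normal(pan.shape)
    return lrms, pan


hrms = np.ones((4, 8, 8))
lrms, pan = degrade(hrms, weights=np.full(4, 0.25))
print(lrms.shape, pan.shape)  # (4, 2, 2) (8, 8)
```

Setting `noise_std=0` corresponds to the noise-free case \(u = 0\), \(\tau = 0\) considered next.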

If an HRMS image existed, it would contain the spectral information of the MS image and have the same spatial resolution as the PAN image. In practice, however, \(\mathbf{HRMS}\) cannot be acquired by commercial optical satellites, so \(\mathbf{PAN}\) and \(\mathbf{MS}\) must be used to estimate a desired image \(\overline{\mathbf{FMS}}\) that is close to the HRMS image. When \(u = 0\) and \(\tau = 0\), \(\overline{\mathbf{FMS}}\) is expressed as follows:

where \(estimate(\cdot)\) is the estimation operation; in this paper, we adopt Bayesian estimation. In CS-based fusion methods, Eq. (4) can be rewritten as follows:

where \(\overline{\mathbf{Z}}\) is the desired component that replaces the corresponding estimated component, and \(\mathbf{Z}_{n}\) is the \(n\)th component produced by the transformation. In this paper, \(n = 1\) and \(\mathbf{Z}_{1} = I\) in Eq. (5), so the desired \(\overline{\mathbf{Z}}\) is the required \(I^{new}\), i.e., the fused \(I\) component.

Fusion algorithm based on Bayesian decision

Generally, the \(I\) component is consistent with the PAN image, whereas the H and S components carry the spectral information of the MS image. Therefore, many CS-based methods replace \(I\) with the PAN image to produce an HRMS image. Unlike traditional CS methods, the proposed method first upsamples and interpolates the MS image into the LRMS image and transforms the LRMS image into the \(I\), H and S components. A new \(I\) component is then estimated by Bayesian decision from the original \(I\) component and the PAN image. Finally, the \(I\) component of the LRMS image is replaced with the new \(I\) component, and the inverse transformation yields the HRMS image. The details are as follows:

First, we apply the IHS transformation to the LRMS image to produce the I, H and S components. This process is expressed as follows:

$$[I, H, S] = LRMStoIHS(\mathbf{LRMS})$$(6)

where \(LRMStoIHS( \cdot )\) is the operation of forward IHS transformation.
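The text does not state which IHS variant is used. One common choice is the linear IHS transform sketched below for three bands: a 3 × 3 matrix maps (R, G, B) to (I, v1, v2), from which H and S are obtained as an angle and a magnitude. The matrix, the helper names `lrms_to_ihs` and `ihs_to_rgb`, and the three-band restriction are assumptions for illustration; four-band data are usually handled with a generalized IHS in which I is the mean of all bands.

```python
import numpy as np

# One common linear IHS matrix (assumption): rows produce I, v1 and v2 from (R, G, B).
IHS_MATRIX = np.array([
    [1 / 3, 1 / 3, 1 / 3],
    [-np.sqrt(2) / 6, -np.sqrt(2) / 6, 2 * np.sqrt(2) / 6],
    [1 / np.sqrt(2), -1 / np.sqrt(2), 0.0],
])


def lrms_to_ihs(lrms):
    """Forward transform of a (3, m, n) image; returns I, H, S and the (v1, v2) pair."""
    i, v1, v2 = np.tensordot(IHS_MATRIX, lrms, axes=1)
    hue = np.arctan2(v2, v1)  # H as an angle in radians
    sat = np.hypot(v1, v2)    # S as the magnitude of (v1, v2)
    return i, hue, sat, (v1, v2)


def ihs_to_rgb(i, v1, v2):
    """Inverse transform: apply the inverse matrix at every pixel."""
    return np.tensordot(np.linalg.inv(IHS_MATRIX), np.stack([i, v1, v2]), axes=1)


lrms = np.random.default_rng(1).random((3, 5, 5))
i, h, s, (v1, v2) = lrms_to_ihs(lrms)
print(np.allclose(ihs_to_rgb(i, v1, v2), lrms))  # True
```

With this matrix, the I component is simply the per-pixel mean of the three bands.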

Second, we consider that an individual MS or PAN sensor acquires the values of all pixels in an MS or PAN image with the same parameters, and therefore treat each pixel as independent of the others within an image. Accordingly, we normalize the PAN image and the \(I\) component of the LRMS image and take the normalized values as the prior probabilities of the pixels. Let the prior probability of the pixel at coordinate \((i,j)\) in the PAN image be \(P(PAN_{(i,j)})\); correspondingly, the prior probability of the pixel at coordinate \((i,j)\) in the \(I\) component of the LRMS image is \(P(\mathbf{I}_{(i,j)})\). Subsequently, following the observation that the intensity variation of a single pixel causes spectral variation in its neighbouring pixels7, we consider the spatial and spectral influence between a single pixel and its neighbours when calculating the posterior probabilities of the pixels in the LRMS and PAN images. In this paper, we assume that a pixel can influence the spatial or spectral characteristics of the pixels directly adjacent to it. Thus, for a single pixel, the eight directly adjacent pixels affect the probability that the current pixel is selected. Therefore, an overlapping 3 × 3 sliding window is used to divide the PAN image and the \(I\) component of the LRMS image, each of size \(m \times n\), into V patches, \(\{y_{v}^{pan} | v = 0, 1, \ldots, V - 1\}\) and \(\{y_{v}^{I} | v = 0, 1, \ldots, V - 1\}\), scanned from top to bottom. Each patch is thus a tuple, and we can make the naive assumption of class-conditional independence.
Consider the two tuples \(y_{v}^{pan} = [y_{v,(1,1)}^{pan}, y_{v,(1,2)}^{pan}, \ldots, y_{v,(r,s)}^{pan}]\) and \(y_{v}^{I} = [y_{v,(1,1)}^{I}, y_{v,(1,2)}^{I}, \ldots, y_{v,(r,s)}^{I}]\), where \(y_{v}^{pan}\) and \(y_{v}^{I}\) are the \(v\)th image patches in the PAN image and the \(I\) component of the LRMS image, respectively, and \((r,s)\) is the element coordinate in the corresponding patch, with \(r = 3\) and \(s = 3\) for the last element. The attribute values in each tuple are assumed to be conditionally independent of each other. Then, based on Bayes' law15, the probability that the result is exactly the class of the fused image in the state of coordinate \((i,j)\) can be calculated by the following formulas:

where \(\overline{\mathbf{Z}}\) is the class of the fused \(I\) component; \(P(\overline{\mathbf{Z}}|PAN_{(i,j)})\) is the probability that the pixel at coordinate \((i,j)\) in the PAN image is assigned to class \(\overline{\mathbf{Z}}\); \(P(\overline{\mathbf{Z}}|\mathbf{I}_{(i,j)})\) is the probability that the pixel at coordinate \((i,j)\) in the \(I\) component is assigned to class \(\overline{\mathbf{Z}}\); and \(P(\overline{\mathbf{Z}}|y_{v,(r,s)}^{pan})\) and \(P(\overline{\mathbf{Z}}|y_{v,(r,s)}^{I})\) are the prior probabilities at coordinate \((r,s)\) in a window of the PAN image and the \(I\) component, respectively.
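The following sketch shows one plausible reading of the prior and conditional probabilities, under assumptions that the text leaves open: the prior of each pixel is its min-max normalized value, each pixel's window is the 3 × 3 neighbourhood centred on it (edges replicated, so V = m × n), and the conditional probability is the product of the nine per-element terms, as the class-conditional independence assumption suggests. The product is computed in log space to avoid underflow. The names `prior_probability`, `window_patches` and `conditional_probability` are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def prior_probability(img):
    """Min-max normalization to [0, 1]; each value is read as that pixel's prior (assumption)."""
    img = np.asarray(img, dtype=float)
    lo, hi = img.min(), img.max()
    return (img - lo) / (hi - lo) if hi > lo else np.zeros_like(img)


def window_patches(prior):
    """Overlapping 3 x 3 windows centred on every pixel (edges replicated): shape (m, n, 3, 3)."""
    return sliding_window_view(np.pad(prior, 1, mode="edge"), (3, 3))


def conditional_probability(prior, eps=1e-12):
    """Product of the nine window terms under the independence assumption, via a log-sum."""
    logs = np.log(np.clip(window_patches(prior), eps, 1.0))
    return np.exp(logs.sum(axis=(-2, -1)))


pan = np.arange(16).reshape(4, 4)
p_pan = prior_probability(pan)
print(window_patches(p_pan).shape)  # (4, 4, 3, 3)
cond_pan = conditional_probability(p_pan)
```

The same two calls applied to the \(I\) component give its prior and conditional probabilities.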

Third, according to Bayes formula in Eq. (1), the posterior probabilities of the pixels in the PAN image and the \(I\) component can be expressed as follows:

Fourth, we estimate the new \(I\) component from the PAN image and the \(I\) component of the LRMS image based on Bayesian decision theory. According to Eq. (5), the estimation process is defined as follows:

where \(I^{new}\) and \(I\) are the \(I\) components after and before the estimation, respectively.

In the estimation process, the key problem is determining which pixels of the PAN image or of the \(I\) component before fusion are selected as members of \(I^{new}\). The estimated component \(I^{new}(i,j)\) therefore follows the fusion rule

where \(I^{new}(i,j)\) and \(I(i,j)\) are the pixels at coordinate \((i,j)\) in the \(I\) component after and before fusion, respectively.
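A sketch of the posterior computation and the selection rule follows. It assumes that, at each pixel, the evidence \(P(\overline{\mathbf{Z}})\) is the sum of the two candidates' numerators, and that ties are resolved in favour of the PAN pixel; both are illustrative assumptions, and the helper names `posterior_pair` and `fuse_intensity` are hypothetical. Whether the PAN image is histogram-matched to \(I\) before selection is not stated in the text, so the sketch simply takes the values as given.

```python
import numpy as np


def posterior_pair(prior_pan, cond_pan, prior_i, cond_i):
    """Posteriors P(PAN_(i,j) | Z) and P(I_(i,j) | Z), normalized over the two candidates."""
    joint_pan = cond_pan * prior_pan               # P(Z | PAN_(i,j)) * P(PAN_(i,j))
    joint_i = cond_i * prior_i                     # P(Z | I_(i,j))   * P(I_(i,j))
    evidence = joint_pan + joint_i                 # assumed stand-in for P(Z) at each pixel
    safe = np.where(evidence > 0, evidence, 1.0)   # avoid 0/0 where both numerators vanish
    return joint_pan / safe, joint_i / safe


def fuse_intensity(pan, i_comp, post_pan, post_i):
    """Fusion rule: keep the PAN pixel where its posterior is at least as large, else keep I."""
    return np.where(post_pan >= post_i, pan, i_comp)


post_pan, post_i = posterior_pair(
    prior_pan=np.array([[0.8, 0.1]]), cond_pan=np.array([[0.5, 0.5]]),
    prior_i=np.array([[0.2, 0.9]]), cond_i=np.array([[0.5, 0.5]]),
)
i_new = fuse_intensity(np.array([[10.0, 20.0]]), np.array([[1.0, 2.0]]), post_pan, post_i)
print(i_new.tolist())  # [[10.0, 2.0]]
```

Since both posteriors share the same denominator, the selection depends only on the numerators; the normalization matters only when the posterior values themselves are reported.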

Finally, we replace \(I\) with \(I^{new}\) and apply the inverse transformation to obtain the fused image as follows:

$$\overline{\mathbf{FMS}} = IHSto\overline{\mathbf{FMS}}(I^{new}, H, S)$$(13)

where \(IHSto\overline{\mathbf{FMS}}(\cdot)\) is the inverse IHS transformation.
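For a linear IHS transform, the substitution and inverse transformation can be written compactly. The sketch below, which assumes the common three-band linear IHS matrix (a hypothetical choice, not one confirmed by the text), checks numerically that replacing \(I\) by \(I^{new}\) and inverting is equivalent to adding \(I^{new} - I\) to every band, the well-known fast IHS shortcut. The helper names `substitute_and_invert` and `fast_ihs` are illustrative.

```python
import numpy as np

# Assumed three-band linear IHS matrix; rows produce I, v1 and v2.
T = np.array([
    [1 / 3, 1 / 3, 1 / 3],
    [-np.sqrt(2) / 6, -np.sqrt(2) / 6, 2 * np.sqrt(2) / 6],
    [1 / np.sqrt(2), -1 / np.sqrt(2), 0.0],
])


def substitute_and_invert(lrms, i_new):
    """Forward IHS, replace I with I_new, then apply the inverse IHS transform."""
    ihs = np.tensordot(T, lrms, axes=1)  # stacked (I, v1, v2), shape (3, m, n)
    ihs[0] = i_new                       # component substitution
    return np.tensordot(np.linalg.inv(T), ihs, axes=1)


def fast_ihs(lrms, i_new):
    """Equivalent shortcut: add the intensity difference to each band."""
    return lrms + (i_new - lrms.mean(axis=0))


rng = np.random.default_rng(2)
lrms, i_new = rng.random((3, 6, 6)), rng.random((6, 6))
fms = substitute_and_invert(lrms, i_new)
print(np.allclose(fms, fast_ihs(lrms, i_new)))  # True
```

The equivalence holds because the first column of the inverse matrix is (1, 1, 1), so a change in I alone propagates equally to all bands; this is the form used by fast IHS fusion, which never needs H and S explicitly.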

We show the resulting algorithm based on Bayesian decision in Algorithm 1.

Experimental results and analysis

Experimental setup

In this section, we conduct full-scale and reduced-scale experiments on the Pleiades, WorldView-3 and IKONOS datasets, which are sourced from http://www.kosmos-imagemall.com. The spatial resolutions of the MS and PAN images are 1.84 and 0.46 m for Pleiades, 1.24 and 0.31 m for WorldView-3, and 3.28 and 0.82 m for IKONOS, respectively. In the reduced-scale experiments, we downsample the original PAN and MS images by a factor of 4 following Wald's protocol20, and the original MS image is regarded as the ground truth. The full-scale experiments fuse the images at the original scale without a reference image. In all experiments, the pre-fusion MS images are 64 × 64 pixels, and the upsampled (by a factor of 4) and interpolated MS images and the corresponding PAN images are 256 × 256 pixels. The proposed method is compared with six popular fusion methods: the spectral preservation fusion (SRS) method4, the band-dependent spatial detail (BDSD) injection method21, the GSA method22, the AIHS and multiscale guided filter (AIMG) method23, the robust BDSD (RBDSD) method24, and the spectral preserved model (SPM) method25. All comparison methods are run with the open-source code released by their authors.

Eight assessment indices19,26 (shown in Table 1) are used to evaluate the fused images obtained by the different fusion methods. In Table 1, the symbol ↑ denotes that a higher value implies better performance, while the symbol ↓ denotes that a lower value implies better performance. Five of them, PSNR, RASE, RMSE, SAM and ERGAS, are used to assess the experimental results on reduced-scale data with a reference image. They are defined as follows.

(1) RMSE calculates the difference in pixel values between the fused image and the reference image. A smaller RMSE indicates that the fused image is closer to the reference image. It is defined as

$$RMSE = \sqrt{\frac{1}{MN}\sum_{i = 1}^{M}\sum_{j = 1}^{N}(F_{i,j} - R_{i,j})^{2}}$$(14)

where \(F_{i,j}\) and \(R_{i,j}\) are the pixel values at coordinate \((i,j)\) in the fused image \(F\) and the reference image \(R\), respectively, and \(M \times N\) is the image size.

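(2) RASE reflects the average spectral performance of the fusion method. It is defined as

$$RASE = \frac{100}{\overline{R}}\sqrt{\frac{1}{K}\sum_{k = 1}^{K} RMSE_{k}^{2}}$$(15)

where \(\overline{R}\) is the mean radiance of the \(K\) bands of the reference image and \(RMSE_{k}\) is the RMSE of the \(k\)th band.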

(3) SAM measures the similarity of spectral information between two images; its ideal value is 0. It is defined as

$$SAM = \arccos\left(\frac{\langle u_{R}, u_{F}\rangle}{\|u_{R}\|_{2} \cdot \|u_{F}\|_{2}}\right)$$(16)

where \(u_{R}\) and \(u_{F}\) represent the spectral vectors of the reference image and the fused image at a pixel, respectively; the angle is usually averaged over all pixels.

(4) ERGAS reflects the overall quality of the fused image; a smaller ERGAS value denotes smaller spectral distortion. It is defined as

$$ERGAS = 100\frac{h}{l}\sqrt{\frac{1}{K}\sum_{k = 1}^{K}\left(\frac{RMSE_{k}}{\overline{F_{k}}}\right)^{2}}$$(17)

where \(h/l\) represents the ratio between the pixel sizes of the PAN and MS images, and \(\overline{F_{k}}\) is the mean value of the \(k\)th band of the fused image.

(5) PSNR is commonly used to measure image quality; the higher the value, the better the image quality. It is defined as

$$PSNR = 20\lg\left(\frac{\max(F)}{RMSE}\right)$$(18)
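The five reference-based indices can be computed as in the following NumPy sketch for images stored as (K, M, N) arrays. It assumes that the mean radiance in RASE is taken over the reference image, averages the SAM angle over all pixels, and uses PSNR in its conventional form \(20\lg(\max(F)/RMSE)\). ERGAS follows Eq. (17) and divides by the fused-band mean; Wald's original formulation uses the reference-band mean instead. The function names are illustrative.

```python
import numpy as np


def rmse(f, r):
    """Eq. (14): root-mean-square error over all pixels (and bands, if present)."""
    return np.sqrt(np.mean((f - r) ** 2))


def rase(f, r):
    """Eq. (15) for (K, M, N) arrays; the mean radiance is taken over the reference image."""
    per_band = np.array([rmse(fk, rk) for fk, rk in zip(f, r)])
    return 100.0 / r.mean() * np.sqrt(np.mean(per_band ** 2))


def sam(f, r):
    """Eq. (16) evaluated at every pixel, then averaged; result in radians."""
    dot = np.sum(f * r, axis=0)
    norms = np.linalg.norm(f, axis=0) * np.linalg.norm(r, axis=0)
    cos = np.divide(dot, norms, out=np.ones_like(dot), where=norms > 0)
    return float(np.mean(np.arccos(np.clip(cos, -1.0, 1.0))))


def ergas(f, r, ratio=1 / 4):
    """Eq. (17); ratio = h / l, the PAN-to-MS pixel-size ratio."""
    per_band = np.array([rmse(fk, rk) / fk.mean() for fk, rk in zip(f, r)])
    return 100.0 * ratio * np.sqrt(np.mean(per_band ** 2))


def psnr(f, r):
    """Conventional PSNR in dB, using the peak value of the fused image."""
    return 20.0 * np.log10(f.max() / rmse(f, r))


ref = np.random.default_rng(3).random((4, 16, 16)) + 0.5
fused = ref + 0.01
print(round(float(rmse(fused, ref)), 4))  # 0.01
```

SAM is insensitive to a uniform scaling of the spectra, so `sam(2 * ref, ref)` is (numerically) zero even though RMSE is not.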

Three indices, \(D_{\lambda}\), \(D_{S}\) and QNR, are used to assess the experimental results on full-scale data without a reference image. They are defined as follows.

(1) \(D_{\lambda}\) is defined as

$$D_{\lambda} = \sqrt{\frac{1}{K(K - 1)}\sum_{t = 1}^{K}\sum_{d = 1, d \ne t}^{K}|Q(M_{t}, M_{d}) - Q(F_{t}, F_{d})|^{2}}$$(19)

where \(M\) and \(F\) are the original MS image and the fused MS image, respectively, \(M_{t}\), \(M_{d}\), \(F_{t}\) and \(F_{d}\) (\(t, d \in \{1, \ldots, K\}\)) are their \(t\)th and \(d\)th bands, and \(Q\) is the universal image quality index.

(2) \(D_{S}\) is defined as

$$D_{S} = \sqrt{\frac{1}{K}\sum_{t = 1}^{K}|Q(F_{t}, PN) - Q(M_{t}, PN_{L})|^{2}}$$(20)

where \(PN_{L}\) and \(PN\) represent the degraded PAN image and the original PAN image, respectively.

(3) QNR combines \(D_{\lambda}\) and \(D_{S}\) and is defined as

$$QNR = (1 - D_{\lambda}) \cdot (1 - D_{S})$$(21)
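The no-reference indices can likewise be sketched. For brevity, \(Q\) is computed globally over each band, whereas common implementations average it over sliding blocks; \(D_{S}\) compares each fused band with the full-resolution PAN image and each original MS band with the degraded PAN image, as in the usual QNR protocol; and both distortion indices use squared differences as in Eq. (19). The function names are illustrative.

```python
import numpy as np
from itertools import permutations


def q_index(x, y):
    """Universal image quality index (Wang and Bovik), computed over the whole band."""
    x, y = np.ravel(x).astype(float), np.ravel(y).astype(float)
    mx, my = x.mean(), y.mean()
    cov = np.mean((x - mx) * (y - my))
    return 4 * cov * mx * my / ((x.var() + y.var()) * (mx ** 2 + my ** 2))


def d_lambda(ms, fused):
    """Eq. (19): spectral distortion over ordered band pairs (t, d), t != d."""
    k = len(ms)
    diffs = [(q_index(ms[t], ms[d]) - q_index(fused[t], fused[d])) ** 2
             for t, d in permutations(range(k), 2)]
    return np.sqrt(sum(diffs) / (k * (k - 1)))


def d_s(ms, fused, pan, pan_low):
    """Spatial distortion: fused bands vs. PAN, original MS bands vs. degraded PAN."""
    diffs = [(q_index(f, pan) - q_index(m, pan_low)) ** 2 for m, f in zip(ms, fused)]
    return np.sqrt(np.mean(diffs))


def qnr(ms, fused, pan, pan_low):
    """Eq. (21)."""
    return (1 - d_lambda(ms, fused)) * (1 - d_s(ms, fused, pan, pan_low))


# Sanity check: pixel-replicated bands keep every Q value, so QNR is (numerically) 1.
ms = np.random.default_rng(4).random((4, 16, 16))
fused = np.stack([np.kron(b, np.ones((4, 4))) for b in ms])
print(round(float(qnr(ms, fused, fused.mean(axis=0), ms.mean(axis=0))), 6))  # 1.0
```

Replicating each pixel 4 × 4 times leaves all means, variances and covariances unchanged, which is why this degenerate "fusion" scores perfectly; it is a test of the code, not a meaningful fusion result.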

Experimental results

In this section, five groups of Pleiades, WorldView-3 and IKONOS images are used to evaluate the performance of the proposed method. The first and second groups, shown in Figs. 2 and 3, respectively, are the reduced-scale experiments; the corresponding quantitative assessment results are listed in Table 2. The third, fourth and fifth groups, shown in Figs. 4, 5 and 6, respectively, are the full-scale experiments; the corresponding quantitative assessment results are listed in Table 3. To compare the fused images more closely, we select a small area in each image, enlarge it by a factor of 2, and place the enlarged area in a larger box. Therefore, in each of the five groups, every image, including the ground truth, contains two rectangles marked in red, the larger of which is an enlargement of the smaller one. The details are given below.

(1) Reduced-scale experiments.

The reduced-scale experiments in Figs. 2 and 3 are conducted on the Pleiades dataset. Figures 2(a) and 3(a) are the ground truths, Figures 2(b) and 3(b) are the degraded PAN images, and Figures 2(c)-(i) and 3(c)-(i) show the fusion results of the different methods. In both groups, the SRS method considers only the spectral contribution rate of each band of the MS image, and the BDSD method considers only the spatial and spectral dependence when fusing the input images; consequently, their results exhibit spatial and spectral distortion. The GSA method improves the replaced component, and the AIMG method uses a guided filter to extract PAN details that enhance the spatial resolution of the LRMS image; their fused Pleiades images therefore show effective spatial enhancement but various degrees of spectral distortion. Although the RBDSD method improves on BDSD and the SPM method improves on SRS, their results are spatially oversharpened. The result obtained by the proposed method is the closest to the ground truth. In addition, we apply the five reference-based indices (PSNR, RASE, RMSE, SAM and ERGAS) together with the ground truth to evaluate the fusion results in Figs. 2 and 3. Table 2 shows that the proposed method achieves the best values on all five indices for both groups of images. Furthermore, we removed the reference images, treated the results of the two groups as full-scale results, and assessed them with the three no-reference indices \(D_{\lambda}\), \(D_{S}\) and QNR. On these indices, Table 2 shows that the proposed method achieves the second-best values on all three indicators for Fig. 2 but is not in the top three for Fig. 3. Therefore, the proposed method performs best in the reduced-scale experiments when evaluated against the ground truth; however, when the degraded Pleiades data are treated as real data for full-scale evaluation, its quantitative results are not the best.

(2) Full-scale experiments.

The full-scale experiments in Figs. 4, 5 and 6 are conducted on the Pleiades, WorldView-3 and IKONOS datasets. Figures 4(a), 5(a) and 6(a) are the upsampled MS images, Figures 4(b), 5(b) and 6(b) are the corresponding full-scale PAN images, and Figures 4(c)-(i), 5(c)-(i) and 6(c)-(i) show the fusion results of the different methods. In these three groups, the result of the RBDSD method is slightly blurry, and the result of the SRS method suffers from serious spectral distortion and spatial oversharpening. Compared with SRS, the AIMG and SPM methods produce less spectral distortion but still show some spatial oversharpening. The BDSD and GSA methods perform better than AIMG and SPM, but still worse than the proposed method. Furthermore, we apply the three no-reference indices \(D_{\lambda}\), \(D_{S}\) and QNR to evaluate the fusion results in Figs. 4, 5 and 6. Table 3 shows that the proposed method achieves the best values of \(D_{\lambda}\), \(D_{S}\) and QNR for Fig. 4, the best values of \(D_{S}\) and QNR for Figs. 5 and 6, and the second-best value of \(D_{\lambda}\) for Fig. 6. Note that because the full-scale experiments are conducted on real data without ground truth, the results in Figs. 4, 5 and 6 cannot be measured with the PSNR, RASE, RMSE, SAM and ERGAS indicators. Overall, these experimental results indicate that the proposed method outperforms the compared methods in the full-scale experiments.

Conclusion

In this paper, a novel fusion algorithm based on Bayesian decision is proposed for pansharpening PAN and MS images. In the proposed method, an observation model based on Bayesian estimation is first established for remote sensing image fusion. Then, three categories of probabilities are calculated from the intrinsic properties of the PAN and MS images to construct the Bayesian formula. Finally, Bayesian decision theory is introduced to design a fusion rule, which is employed to obtain a new I component that replaces the original I component to produce a high-quality HRMS image. Experimental results on full-scale and reduced-scale data from the Pleiades, WorldView-3 and IKONOS datasets indicate that the proposed method performs better than several related and popular fusion methods.

Data availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

References

Shen, K., Yang, X., Lolli, S. & Vivone, G. A continual learning-guided training framework for pansharpening. ISPRS J. Photogramm. 196, 45–57. https://doi.org/10.1016/j.isprsjprs.2022.12.015 (2023).

Yilmaz, C. S., Yilmaz, V. & Gungor, O. A theoretical and practical survey of image fusion methods for multispectral pansharpening. Inform. Fusion. 79, 1–43. https://doi.org/10.1016/j.inffus.2021.10.001 (2022).

Vivone, G. Multispectral and hyperspectral image fusion in remote sensing: A survey. Inform. Fusion. 89, 405–417. https://doi.org/10.1016/j.inffus.2022.08.032 (2023).

Zhou, X. et al. A GIHS-based spectral preservation fusion method for remote sensing images using edge restored spectral modulation. ISPRS J. Photogramm. 88(2), 16–27. https://doi.org/10.1016/j.isprsjprs.2013.11.011 (2014).

Wang, Z., Ziou, D., Armenakis, C., Li, D. & Li, Q. A comparative analysis of image fusion methods. IEEE Trans. Geosci. Remote Sens. 43(6), 1391–1402. https://doi.org/10.1109/TGRS.2005.846874 (2005).

Malpica, J. A. Hue adjustment to IHS pan-sharpened IKONOS imagery for vegetation enhancement. IEEE Geosci. Remote Sens. Lett. 4, 27–31. https://doi.org/10.1109/lgrs.2006.883523 (2007).

Wu, L., Yin, Y., Jiang, X. & Cheng, T. C. E. Pan-sharpening based on multi-objective decision for multi-band remote sensing images. Pattern Recognit. 118, 1–15. https://doi.org/10.1016/j.patcog.2021.108022 (2021).

Yuan, Q., Wei, Y., Meng, X., Shen, H. & Zhang, L. A multi-scale and multidepth convolutional neural network for remote sensing imagery pan-sharpening. IEEE J. Sel. Topics Appl. Earth Observ. Remote Sens. 11(3), 978–989. https://doi.org/10.1109/JSTARS.2018.2794888 (2018).

Wei, Y., Yuan, Q., Shen, H. & Zhang, L. Boosting the accuracy of multispectral image pansharpening by learning a deep residual network. IEEE Geosci. Remote Sens. Lett. 14(10), 1795–1799. https://doi.org/10.1109/LGRS.2017.2736020 (2017).

Wang, J. M., Lu, T., Huang, X., Zhang, R. Q. & Feng, X. X. Pan-sharpening via conditional invertible neural network. Inform. Fusion. 101, 101980. https://doi.org/10.1016/j.inffus.2023.101980 (2024).

Jin, C., Deng, L. J., Huang, T. Z. & Vivone, G. Laplacian pyramid networks: A new approach for multispectral pansharpening. Inform. Fusion. 78, 158–170. https://doi.org/10.1016/j.inffus.2021.09.002 (2022).

Yim, J., Joo, D., Bae, J. & Kim, J. A gift from knowledge distillation: Fast optimization, network minimization and transfer learning. IEEE Confer. Comput. Vis. Pattern Recogn. 754, 7130–7138. https://doi.org/10.1109/CVPR.2017.754 (2017).

Szegedy, C., Liu, W., Jia, Y., Sermanet, P., Reed, S., Anguelov, D., Erhan, D., Vanhoucke, V. & Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 1–9. https://doi.org/10.1109/CVPR.2015.7298594 (2015).

Khademi, G. & Ghassemian, H. Incorporating an adaptive image prior model into Bayesian fusion of multispectral and panchromatic images. IEEE Geosci. Remote Sens. Lett. 15(6), 917–921. https://doi.org/10.1109/LGRS.2018.2817561 (2018).

Upla, K. P., Joshi, M. V. & Gajjar, P. P. An edge preserving multiresolution fusion: use of contourlet transform and MRF prior. IEEE Trans. Geosci. Remote Sens. 53(6), 3210–3220. https://doi.org/10.1109/TGRS.2014.2371812 (2015).

Yang, Y., Lu, H. Y., Huang, S. Y., Fang, Y. M. & Tu, W. An efficient and high-quality pansharpening model based on conditional random fields. Inform. Sci. 553, 1–18. https://doi.org/10.1016/j.ins.2020.11.046 (2021).

Wu, L. & Jiang, X. Pansharpening based on spectral-spatial dependence for multibands remote sensing images. IEEE Access 10, 76153–76167. https://doi.org/10.1109/ACCESS.2022.3192461 (2022).

Han, C., Zhang, H., Gao, C., Jiang, C., Sang, N. & Zhang, L. A remote sensing image fusion method based on the analysis sparse model. IEEE J. Sel. Topics Appl. Earth Observ. Remote Sens. 9(1), 439–453. https://doi.org/10.1109/JSTARS.2015.2507859 (2016).

Wald, L., Ranchin, T. & Mangolini, M. Fusion of satellite images of different spatial resolutions: Assessing the quality of resulting images. Photogramm. Eng. Remote Sens. 63, 691–699. https://doi.org/10.1016/S0924-2716(97)00008-7 (1997).

Garzelli, A., Nencini, F. & Capobianco, L. Optimal MMSE pan sharpening of very high resolution multispectral images. IEEE Trans. Geosci. Remote Sens. 46(1), 228–236. https://doi.org/10.1109/TGRS.2007.907604 (2008).

Aiazzi, B., Baronti, S. & Selva, M. Improving component substitution pansharpening through multivariate regression of MS+Pan data. IEEE Trans. Geosci. Remote Sens. 45(10), 3230–3239. https://doi.org/10.1109/TGRS.2007.901007 (2007).

Yang, Y. et al. Remote sensing image fusion based on adaptive IHS and multiscale guided filter. IEEE Access 4, 4573–4582. https://doi.org/10.1109/access.2016.2599403 (2016).

Vivone, G. Robust band-dependent spatial-detail approaches for panchromatic sharpening. IEEE Trans. Geosci. Remote Sens. 57(9), 6421–6433. https://doi.org/10.1109/tgrs.2019.2906073 (2019).

Wu, L., Jiang, X., Peng, J., Wu, G. S. & Xiong, X. Z. A spectral preserved model based on spectral contribution and dependence with detail injection for pansharpening. Sci. Rep. 13, 1–10. https://doi.org/10.1038/s41598-023-33574-5 (2023).

Wu, L., Jiang, X., Yin, Y., Cheng, T. C. E. & Sima, X. Multi-band remote sensing image fusion based on collaborative representation. Inform. Fusion 90, 23–35. https://doi.org/10.1016/j.inffus.2022.09.004 (2022).

Funding

This work was supported by the Jiangxi Provincial Natural Science Foundation (No. 20212BAB202028, 20232BAB201026), by the Science and Technology Project in Jiangxi Province Department of Education (No. GJJ202301, GJJ212306), and by the Jiangxi University Humanities and Social Science Research Project (No. GL22127, GL21141).

Author information

Contributions

L.W. developed the mechanics modelling, designed and analysed the algorithm, and wrote the original draft; X.J. performed sample preparation and data analysis, provided the conceptualization, and acquired funding; W.Z. handled data collection, data preprocessing and data analysis; Y.H. used hardware and software to carry out the simulation experiments and revised the paper; K.L. provided supervision and constructive comments, performed data re-analysis during the revision of the paper, and gave final approval of the version to be submitted.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wu, L., Jiang, X., Zhu, W. et al. Bayesian decision based fusion algorithm for remote sensing images. Sci Rep 14, 11558 (2024). https://doi.org/10.1038/s41598-024-60394-y

DOI: https://doi.org/10.1038/s41598-024-60394-y
