Abstract

Interpreting chest X-rays is a complex task, and artificial intelligence (AI) algorithms for this purpose are currently being developed. It is important to perform external validations of these algorithms before implementing them. This study therefore aims to externally validate an AI algorithm’s diagnoses in real clinical practice, comparing them to a radiologist’s diagnoses. The aim is also to identify diagnoses the algorithm may not have been trained for. A prospective observational study was conducted for the external validation of the AI algorithm in a region of Catalonia, comparing the AI algorithm’s diagnosis with that of the reference radiologist, considered the gold standard. The external validation was performed with a sample of 278 images and reports, 51.8% of which showed no radiological abnormalities according to the radiologist's report. Analysing the validity of the AI algorithm, the average accuracy was 0.95 (95% CI 0.92; 0.98), the sensitivity was 0.48 (95% CI 0.30; 0.66) and the specificity was 0.98 (95% CI 0.97; 0.99). The conditions where the algorithm was most sensitive were external implants, upper abdominal conditions and cardiac and/or valvular conditions. On the other hand, the conditions where the algorithm was least sensitive were those of the mediastinum, vessels and bone. The algorithm has been validated in the primary care setting and has proven to be useful when identifying images with or without conditions. However, in order to be a valuable tool to help and support experts, it requires additional real-world training to enhance its diagnostic capabilities for some of the conditions analysed. Our study emphasizes the need for continuous improvement to ensure the algorithm’s effectiveness in primary care.

Introduction

Radiology is the speciality of medicine that uses different imaging techniques to detect and treat diseases. One of the most widely used imaging techniques in this field is radiography (X-ray), used to generate images of the inside of the body, which has the advantage of being quick to perform and requiring low levels of radiation1,2. Within the scope of X-rays, one of the most common tests is the chest X-ray3,4,5,6, used to detect pulmonary and cardiovascular diseases, among others.

Despite the importance of this speciality and the volume of radiological tests performed, the lack of radiologists able to interpret them7,8,9,10,11, as well as the increase in interpretation errors as a consequence of a heavy workload, are becoming increasingly evident12,13,14,15. Furthermore, radiological diagnosis is a component of clinical competencies in various specialities, including family and community medicine. It is a common practice for practitioners in this field to interpret chest X-rays, despite their lower degree of expertise16. This fact highlights the need and, therefore, the opportunity to introduce tools such as artificial intelligence (AI) into this field to support radiologists and other healthcare practitioners who need to interpret an X-ray.

Technological support tools, such as computer-aided diagnostics (CAD), have long been used in this area. However, the advent of deep learning and machine learning models in recent years has offered the potential to develop new support tools aiming to overcome the primary limitations of CAD and enhance accuracy17,18. Deep learning models, compared to CAD, are built to train and work with large databases, to have constant improvement over time by learning from errors, and to have the ability to detect more than one condition at a time, all of which makes them much more powerful than CAD.

In this context, AI algorithms, including deep and machine learning models, can assist in diagnosis, potentially enhancing diagnostic accuracy. However, while AI will not replace professionals, it is essential to acknowledge that the implementation of AI in routine clinical practice must be both safe and effective20,21. One of the current concerns lies in the fact that many of the studies on applications of new AI models only present in silico validation, a phenomenon called “digital exceptionalism”, without performing external validation in the actual implementation environment. External validation is important, as it allows the accuracy of the model to be estimated in a population different from the training population, selected in real clinical practice, thus allowing the subsequent generalisation of the results22,23,24,25,26.

A recent study that externally validated an AI algorithm designed to classify chest X-rays as normal or abnormal, using a cohort of real images from two primary care centres, indicated that additional training with data from these environments was required to enhance the algorithm’s performance, particularly in clinical settings different from its initial training environment27.

In this context, external validation, crucial for ensuring non-discrimination and equity in healthcare, should be a key requirement for the widespread implementation of AI algorithms. However, it is not yet specifically mandated by European legislation (Regulation 2017/745) and is therefore not a prerequisite for marketing an AI algorithm28. For this reason, different groups of experts around the world have developed guidelines to stipulate the essential requirements for using AI algorithms as a complementary diagnostic tool. Yet, while expert groups emphasise the necessity of external validation to confirm an algorithm's practical potential in clinical settings, this requirement is not yet mandated in the Regulation26,29,30.

The implementation of AI in healthcare appears to be an imminent reality that can offer significant benefits to both professionals and the general population. However, it is essential to implement safe and validated tools in real clinical settings to maintain fairness. In this sense, this study aims to externally validate an AI algorithm's diagnoses in real primary care settings, comparing them to a radiologist’s diagnoses. The aim is also to identify diagnoses the algorithm may not have been trained for.

Materials and methods

The study protocol has been previously described and published32. Nevertheless, the most relevant points of the present study are described below.

The ChestEye AI algorithm

Oxipit33, one of the leading companies in AI medical image reading, has developed a fully automatic computer-aided diagnosis (CAD) AI algorithm for reading chest X-rays trained with more than 300,000 images, available through a web platform called ChestEye. The ChestEye imaging service has been certified as a Class II medical device on the Australian Register of Therapeutic Goods and has also been CE marked33. The web platform reads the inserted chest X-ray and returns the automatic report with the ability to detect 75 conditions, which cover 90% of diagnoses, as well as a heat map to show the locations of the findings. Thus, ChestEye allows radiologists to analyse only the most relevant X-rays33,34.

Study design

A prospective observational study was conducted for the external validation of the AI algorithm in a region of Catalonia, among users who were scheduled for chest radiography at the Osona Primary Care Center. For each user, the report of the reference radiologist (considered the gold standard) was obtained. Subsequently, the research team input the image into the AI algorithm to obtain its diagnosis. This allowed for the comparison of the AI's performance with the reference standard in terms of accuracy, sensitivity, specificity, positive predictive value and negative predictive value.

Description of the study population, time frame, and data collection

The study was carried out at the Catalan Institute of Health’s Primary Care Centre Vic Nord (Osona, Catalonia, Spain), a reference centre where all chest X-rays in the region are performed (with a coverage of 125,000 users). At this same centre, convenience recruitment was carried out from 7 February 2022 to 31 May 2022. The study was explained, and the information sheet and informed consent were given to all patients who came for a chest X-ray and met the inclusion criteria32.

The reference population of the study was the entire population of the Osona region who underwent chest X-rays at the study centre and agreed to participate in the study. The study included only anteroposterior chest X-rays of patients over 18 years of age and excluded pregnant women and poor-quality chest X-rays (poor exposure, non-centred or rotated images).

Sample size

Due to a problem with the image collection centre, the sample size calculated in the protocol32 could not be reached. For this reason, the sample was recalculated by increasing the precision by one percentage point. Thus, in order to validate the algorithm, a sample of 450 images was needed to estimate an overall accuracy expected to be around 80%, with a confidence level of 95%, a precision level of 5% and an expected replacement rate of 15%.

Procedure

Once recruitment was completed, the Technical Service of the Catalan Institute of Health of Central Catalonia extracted the patients’ anonymous and automated images and their corresponding non-anonymised reports. The images and reports were then coded together so that they could be related.

The research team then entered all the images into the AI model to extract their interpretation (the diagnosis or the possibility of no abnormalities). At the same time, three general practitioners interpreted the reference radiologists’ reports, without seeing the images in order to avoid assumptions, with the aim of extracting the diagnoses described.

Finally, the group of general practitioners grouped all the conditions detectable by the AI model into 9 categories according to the anatomy of the thorax in order to build an individual and grouped study. The categories were: external implants, mediastinal findings or conditions, cardiac and valvular conditions, vessel conditions, bone conditions, pleural or pleural space conditions, upper abdominal findings or conditions, pulmonary parenchymal findings or conditions, and others.
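As an illustration of how such a grouping can be applied per image, the following minimal Python sketch maps individual conditions to anatomical categories. The mapping shown is a hypothetical assumption covering a handful of conditions named in this study; it is not the authors' actual assignment, and the fallback to "others" for unmapped conditions is likewise an assumption.

```python
# Hypothetical condition-to-category mapping (illustrative only; the
# study's real assignment of conditions to categories is not given here).
CATEGORY_OF = {
    "consolidation": "pulmonary parenchymal",
    "nodule": "pulmonary parenchymal",
    "abnormal rib": "bone",
    "enlarged heart": "cardiac and valvular",
    "enlarged aorta": "vessel",
}


def categories_for(conditions):
    """Return the set of anatomical categories present in one image.

    An image with several conditions in the same category counts once
    for that category. Unmapped conditions fall back to "others"
    (an assumption made for this sketch).
    """
    return {CATEGORY_OF.get(condition, "others") for condition in conditions}


# One image with three findings, two of them in the same category.
image_findings = {"consolidation", "nodule", "abnormal rib"}
print(sorted(categories_for(image_findings)))
```

Working with sets keeps the grouped analysis at the image level: two parenchymal findings in the same image contribute a single positive for the parenchymal category.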

Statistical analysis

To validate the algorithm, the AI algorithm’s diagnoses were compared with those of the gold standard. The accuracy of the algorithm and the confusion matrix were obtained from the images classified as true positive (TP), true negative (TN), false positive (FP) and false negative (FN). Sensitivity and specificity were also calculated. These measurements were obtained for the total sample, for each condition and for each of the categories according to anatomy. Analyses were performed with R software version 4.2.1 and all confidence intervals were 95%.
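The per-condition comparison described above can be sketched as follows. This is a minimal illustration in Python (the study's own analyses were run in R), assuming each image is represented by the set of conditions reported by the radiologist and the set reported by the algorithm. The function names and the toy data are hypothetical.

```python
def confusion_counts(reference, predicted, condition):
    """Count TP, TN, FP and FN for one condition across paired images.

    `reference` and `predicted` are equal-length lists of sets: the
    conditions in the radiologist's report and in the AI output.
    """
    tp = tn = fp = fn = 0
    for ref, pred in zip(reference, predicted):
        in_ref, in_pred = condition in ref, condition in pred
        if in_ref and in_pred:
            tp += 1
        elif in_ref:
            fn += 1
        elif in_pred:
            fp += 1
        else:
            tn += 1
    return tp, tn, fp, fn


def diagnostic_metrics(tp, tn, fp, fn):
    """Accuracy, sensitivity and specificity from confusion counts."""
    nan = float("nan")
    return {
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "sensitivity": tp / (tp + fn) if tp + fn else nan,
        "specificity": tn / (tn + fp) if tn + fp else nan,
    }


# Toy data for five images (hypothetical, not study data).
reference = [{"consolidation"}, set(), {"nodule"}, {"consolidation", "nodule"}, set()]
predicted = [{"consolidation"}, {"nodule"}, set(), {"consolidation"}, set()]

for condition in ("consolidation", "nodule"):
    counts = confusion_counts(reference, predicted, condition)
    print(condition, counts, diagnostic_metrics(*counts))
```

Because an image can carry more than one condition, the counts are computed separately for each condition rather than from a single multi-class confusion matrix.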

Ethics committee

The University Institute for Research in Primary Health Care Jordi Gol i Gurina (Barcelona, Spain) ethics committee approved the trial study protocol (approval code: 21/288). Written informed consent was requested from all patients participating in the study.

Ethical considerations

Radiologists’ assessment and decisions were not influenced by this study, as the normal radiology referral workflow was not affected. This project was approved by the Research Ethics Committee (REC) from the Foundation University Institute for Primary Health Care Research Jordi Gol i Gurina (IDIAPJGol) (P21/288-P). The study was performed in accordance with relevant guidelines/regulations, and informed consent was obtained from all participants. All research was performed in accordance with the Declaration of Helsinki.

Results

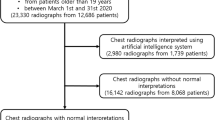

Of the 471 patients who agreed to participate in the study and provide their images and reports, 278 formed the final sample for external validation of the model; the reduction was mainly due to computer-related issues when extracting the data. In some cases, the automatic extraction did not return both the image and the report, i.e., either the image or the report was missing. In addition, some reports did not include the interpretation of the image, as it was a follow-up X-ray. In these cases, the report only indicated whether or not there were changes with respect to the previous report and, therefore, they had to be discarded from the analysis (Fig. 1). Of the final sample, 144 images (51.8%) showed no radiological abnormalities according to the radiologist's report.

Although the final sample consisted of 278 images, it is possible that an image may be suggestive of one or more conditions or anatomical abnormalities. In this sense, the sum of the unaltered images plus the images with one or more abnormalities does not correspond to the total sample (n = 278). Of the conditions or anatomical abnormalities for which the algorithm was trained, the AI model identified 33 in the sample, and the most prevalent were: nodule (n = 37, 13.3%), consolidation (n = 28, 10.1%), abnormal rib (n = 17, 6.1%), enlarged heart (n = 15, 5.4%) and aortic sclerosis (n = 8, 2.9%). On the other hand, the reference radiologist identified 35 in the sample, and the most prevalent were: consolidation (n = 30, 10.8%), pulmonary emphysema (n = 20, 7.2%), enlarged aorta (n = 14, 5.0%), enlarged heart (n = 12, 4.3%), hilar prominence (n = 12, 4.3%) and linear atelectasis (n = 11, 3.9%) (Table 1).

Analysing the validity of the AI algorithm, the average accuracy was 0.95 (95% CI 0.92; 0.98), the average sensitivity was 0.48 (95% CI 0.30; 0.66) and the average specificity was 0.98 (95% CI 0.97; 0.99). The accuracy, sensitivity and specificity values for each condition can be seen in Table 2. The values for true positives, true negatives, false positives, and false negatives for each condition are presented in Table 1 of the supplementary information.

The reference radiologist identified a list of conditions for which the algorithm was not trained, and which were therefore classified as “Other”. The most prevalent were bronchial wall thickening (n = 13, 4.68%), fibroscarring lesions or abnormalities (n = 11, 3.96%) and chronic pulmonary abnormalities (n = 11, 3.96%) (Table 3).

Figures 2 and 3 show some examples of the AI algorithm’s performance in cases where the algorithm’s diagnosis was successful and in cases where errors occurred.

Image of patient (upper-left) where according to the radiologist's report there is only consolidation, but the algorithm detects an abnormal rib (upper-right), consolidation (lower-left) and two nodules (lower-right). It is worth noting the confusion of a consolidation with mammary tissue and of two nodules with the two mammary areolae.

In order to perform a more general analysis, the conditions were grouped into 10 groups according to chest anatomy, considering only the diagnoses for which the AI model was trained. According to the radiologist, the most prevalent groupings found were lung parenchymal conditions (n = 71, 25.5%), bone conditions (n = 21, 7.5%) and vessel conditions (n = 18, 6.47%). According to the AI model, the most prevalent conditions were lung parenchymal conditions (n = 57, 20.5%), bone conditions (n = 21, 7.5%) and cardiac and/or valvular conditions (n = 15, 5.4%) (Table 4).

Finally, Table 5 shows the accuracy, sensitivity and specificity values for each group. Of these, it is worth mentioning the low sensitivity values for mediastinal conditions (0.0, 95% CI 0.0; 0.96), vessel conditions (0.29, 95% CI 0.11; 0.52) and bone conditions (0.24, 95% CI 0.08; 0.47). On the other hand, high sensitivity values were recorded for external implants (0.67, 95% CI 0.22; 0.96), upper abdominal conditions (0.67, 95% CI 0.30; 0.93) and cardiac and/or valvular conditions (0.67, 95% CI 0.35; 0.90). It is also worth mentioning the model’s strong ability to detect images that do not have radiological abnormalities. The values for true positives, true negatives, false positives, and false negatives for each grouping are presented in Table 1 of the supplementary information.
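The width of these intervals reflects how few positive cases some groups contain. The following minimal Python sketch computes an exact (Clopper-Pearson) binomial interval using only the standard library. The choice of this interval method is an assumption, since the text does not state which method was used, and the count of 4 detections out of 6 cases is hypothetical; the actual counts are in the supplementary information.

```python
from math import comb


def binom_cdf(k, n, p):
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))


def clopper_pearson(k, n, alpha=0.05):
    """Exact two-sided confidence interval for a proportion k/n."""

    def solve(g, target):
        # g is increasing in p on [0, 1]; bisect for g(p) == target.
        lo, hi = 0.0, 1.0
        for _ in range(60):
            mid = (lo + hi) / 2
            if g(mid) < target:
                lo = mid
            else:
                hi = mid
        return (lo + hi) / 2

    # Lower bound: P(X >= k | p) = alpha / 2 (defined as 0 when k == 0).
    lower = 0.0 if k == 0 else solve(
        lambda p: 1 - binom_cdf(k - 1, n, p), alpha / 2
    )
    # Upper bound: P(X <= k | p) = alpha / 2 (defined as 1 when k == n).
    upper = 1.0 if k == n else solve(
        lambda p: 1 - binom_cdf(k, n, p), 1 - alpha / 2
    )
    return lower, upper


# Hypothetical group: 4 of 6 positive images detected (sensitivity 0.67).
low, high = clopper_pearson(4, 6)
print(f"sensitivity = {4 / 6:.2f}, 95% CI {low:.2f}; {high:.2f}")
```

With so few positives, the interval for this hypothetical group spans roughly 0.22 to 0.96, which illustrates why the group-level sensitivity estimates should be read with caution.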

Discussion

The aim of this study was to perform an external validation, in real clinical practice, of the diagnostic capability of an AI algorithm with respect to the reference radiologist for chest X-rays, as well as to detect possible diagnoses for which the algorithm had not been trained. The overall accuracy of the algorithm was 0.95 (95% CI 0.92–0.98), the sensitivity was 0.48 (95% CI 0.30–0.66) and the specificity was 0.98 (95% CI 0.97–0.99). The results obtained have further highlighted, as indicated by different expert groups26,28,29, the need for external validations of AI algorithms in a real clinical context in order to establish the necessary measures and adaptations to ensure safety and effectiveness in any environment. Therefore, in the context of the model developed, it is important to understand and interpret what each of the results obtained indicates.

High accuracy values were observed in most cases (ranging between 0.7–1). The accuracy is the proportion of correctly classified results among the total number of cases examined. This value was high since, both for each condition and for the groups of conditions, the capacity to detect true negatives was good, taking into account that most of the images analysed were found to have no abnormalities (51.8%). An AI algorithm that quickly determines that there is no abnormality can function as a triage tool, streamlining the diagnostic process, allowing the professional to focus on other tests, and helping to reduce waiting lists, waiting times for diagnoses and even expenses on secondary tests.
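The dependence of accuracy on true negatives can be made explicit with the identity accuracy = prevalence × sensitivity + (1 − prevalence) × specificity. The Python sketch below applies it to the average sensitivity (0.48) and specificity (0.98) reported in this study; the prevalence values are hypothetical, and because the reported figures are averages over conditions, the result is an illustration rather than a reproduction of the study's accuracy.

```python
def accuracy_from_rates(sensitivity, specificity, prevalence):
    """Accuracy implied by sensitivity, specificity and prevalence."""
    return prevalence * sensitivity + (1 - prevalence) * specificity


# Hypothetical per-condition prevalences; 0.48 and 0.98 are the
# average sensitivity and specificity reported in the text.
for prevalence in (0.02, 0.05, 0.10, 0.25):
    accuracy = accuracy_from_rates(0.48, 0.98, prevalence)
    print(f"prevalence {prevalence:.2f} -> accuracy {accuracy:.3f}")
```

When a condition is rare, accuracy is driven almost entirely by specificity, so a high accuracy can coexist with a low sensitivity.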

With sensitivity referring to the ability to detect an abnormality when there really is one, high sensitivity values were shown when detecting anatomical findings or abnormalities such as sternal cables, enlarged heart, abnormal ribs, spinal implants, cardiac valve, or interstitial markings. On the other hand, low sensitivity values were observed for most conditions, indicating that the algorithm had limited ability to detect certain conditions like those in the mediastinum, vessels, or bones. These findings align with the results of a study that performed an external validation of a similar algorithm in an emergency department35. Additionally, the algorithm exhibited low sensitivity in detecting pulmonary emphysema, linear atelectasis, and hilar prominence, which are prevalent conditions in the primary care setting31.

Low sensitivity was also observed when detecting nodules, with the algorithm finding more nodules than the reference radiologist, in most cases confusing them with areolae in the breast tissue. Although it is important to detect any warning signs, and the professional remains in charge of making the clinical judgement and determining the need for complementary tests, it is possible that this external validation has detected a gender bias in the training of the algorithm. When it comes to chest imaging, it is important to distinguish the physiological aspects of breast tissue, and any changes it may undergo during various life stages, from signs of conditions or abnormalities36. Other studies have also detected a high false positive value in the detection of nodules due to other causes such as fat, pleura or interstitial lung disease37.

Finally, specificity being the ability to correctly identify images in which there are no radiological abnormalities, the results showed high values for all condition groupings, since the algorithm was able to detect images with no abnormalities.

Following the authors' desire to contribute to the improvement of the AI model, some radiologists’ findings were identified that were overlooked during the algorithm's training, especially related to bronchial conditions, including chronic bronchopathy, bronchiectasis, and bronchial wall thickening. Additionally, the algorithm missed common chronic conditions often seen in primary care, including chronic pulmonary abnormalities, COPD, and fibrocystic abnormalities. Furthermore, it was noted that certain condition names within the AI algorithm should be adjusted to align with names used in the radiology field. Interstitial markings could be changed to interstitial abnormality, consolidation to condensation, aortic sclerosis to valvular sclerosis, and abnormal rib to rib fracture.

Once the main variables that characterise the algorithm's capacity were discussed, the results obtained differ from the majority of published studies, since most of them obtained a higher algorithm capacity. However, it should be noted that most of these are internal validations and not tested in real clinical practice settings38,39,40.

A study in Korea performed an internal and external validation of an AI algorithm capable of detecting the 10 most prevalent chest X-ray abnormalities and was able to demonstrate the difference in sensitivity and specificity values. The internal validation obtained sensitivity and specificity values between 0.87–0.94 and 0.81–0.98, respectively. On the other hand, the external validation obtained sensitivity and specificity values between 0.61–1.00 and 0.71–0.98, respectively41. This difference can also be seen in a study in Michigan, where internal and external validation of an AI algorithm capable of detecting the most common chest X-ray abnormalities was performed42, and in a study at the Seoul University School of Medicine, where an algorithm for lung cancer detection in population screening was validated43.

Therefore, the results obtained from the external validation show the need to increase the sensitivity of the algorithm for most conditions. Considering that AI should serve as a diagnostic support tool and the ultimate responsibility for medical decisions rests with the practitioner, it is ideal for the algorithm to flag potential abnormalities for the practitioner to review and confirm. This ensures the highest diagnostic effectiveness. Recent studies have shown that the use of an AI algorithm to support the practitioner significantly improves diagnostic sensitivity and specificity and reduces image reading time20,44.

Enhanced sensitivity could help address the shortage of specialised radiologists globally, especially in Central Catalonia's primary care setting, where this validation was conducted45,46. More and more, general practitioners are tasked with interpreting X-rays. In this context, the advancement of these tools can be a valuable asset in the diagnostic process.

Limitations and strengths

One significant limitation of the study was the small sample size for certain specific conditions. This was due to difficulties in obtaining the required number of cases, as these conditions are not very common in real clinical practice. Consequently, the external validation for these conditions yielded less reliable estimates. However, by representing reality, a large volume of images without radiological abnormalities was obtained and this allowed for a good external validation of the model's ability to detect images without abnormalities.

In addition, the radiologist's reference diagnosis was not always the practitioner’s own, but that of a group of radiologists. This could represent a limitation, since there was no consensus among them, but there was no desire to alter actual clinical practice. In addition, the study aimed to test the algorithm in primary care settings. For this reason, a double interpretation of the images was performed: initially by the radiologist and subsequently, the radiologist's report was interpreted by the family and community physician.

Finally, another limitation was the lack of information on the sex of the users analysed. Given the results obtained, we consider it very relevant to conduct another study that separates the capabilities of the algorithm according to sex, since it seems that they might not be the same. In addition, since we have a small sample for most of the conditions, separating the analyses according to sex in the present study would be unreliable and not comparable.

On the other hand, the greatest strength of the study is that it presents an external validation in real clinical practice in primary care and there are currently few studies that have done so. Most studies present an internal validation, but it is very important to perform an external validation in order to estimate the accuracy of the model in a population other than the training population, thus allowing the results to be generalised.

Conclusion

The findings of this study demonstrate the validation of an AI algorithm for reading chest X-rays in the primary care setting, achieved by comparing its diagnoses with those made by a radiologist. The algorithm has been validated in the primary care setting using measures such as accuracy, sensitivity and specificity, and has proven to be useful by being able to identify images with or without abnormalities. However, further training is needed to increase its diagnostic capability for some of the conditions analysed. It is important that training is done in a real environment, with real images, in order to perform robust external validations. Our analysis highlights the need for continuous improvement to ensure that the algorithm is a reliable and effective tool in the primary care environment.

The role of AI in healthcare should be to assist and support practitioners. Being able to reliably detect images without abnormalities can have a very positive impact: reducing waiting times for diagnoses and secondary tests to rule out conditions, streamlining practitioners' work and, ultimately, favouring patient care and, indirectly, patients' health.

Data availability

The datasets generated and/or analysed during the current study are not publicly available because our manuscript was based on confidential and sensitive health data. However, they are available from the corresponding author upon reasonable request.

References

Novelline, R. A. & Squire, L. F. Squire’s fundamentals of radiology. La Editorial U, editor. (2004).

W H. Learning radiology: Recognizing the basics. Sciences EH, editor. (2015).

Hwang, E. J. et al. Development and validation of a deep learning-based automated detection algorithm for major thoracic diseases on chest radiographs. JAMA Netw. Open 2(3), e191095. https://doi.org/10.1001/jamanetworkopen.2019.1095 (2019).

Wu, J. T. et al. Comparison of chest radiograph interpretations by artificial intelligence algorithm vs radiology residents. JAMA Netw. Open 3(10), e2022779. https://doi.org/10.1001/jamanetworkopen.2020.22779 (2020).

Santos, Á. & Solís, P. Posición SERAM sobre la necesidad de informar la radiología simple. Soc Española Radiol Médica 1, 1–47 (2015).

UNSCEAR. Sources and effects of ionizing radiation volume I: Source. Vol. I, United Nations Scientific Committee on the Effects of Atomic Radiation. 1–654 (2000).

Rimmer, A. Radiologist shortage leaves patient care at risk, warns royal college. BMJ https://doi.org/10.1136/bmj.j4683 (2017).

Bhargavan, M., Sunshine, J. H. & Schepps, B. Too few radiologists?. Am. J. Roentgenol. 178(5), 1075–1082 (2002).

Chew, C., O’Dwyer, P. J. & Young, D. Radiology and the medical student: Do increased hours of teaching translate to more radiologists?. BJR Open 3(1), 20210074 (2021).

Lyon, M. et al. Rural ED transfers due to lack of radiology services. Am. J. Emerg. Med. 33(11), 1630–1634 (2015).

European A. Radiology services in Europe : Harnessing growth is health system dependent (2022).

Patlas, M. N., Katz, D. S. & Scaglione, M. Errors in Emergency and Trauma Radiology. (Springer, 2007). Available from: https://download.bibis.ir/Books/Medical/_old/Errors in Emergency and Trauma Radiology.pdf. Accessed 28 Sep 2023.

Ruutiainen, A. T., Durand, D. J., Scanlon, M. H. & Itri, J. N. Increased error rates in preliminary reports issued by radiology residents working more than 10 consecutive hours overnight. Acad. Radiol. 20(3), 305–311 (2013).

Hanna, T. N. et al. Emergency radiology practice patterns: Shifts, schedules, and job satisfaction. J. Am. Coll. Radiol. 14(3), 345–352 (2017).

Bruls, R. J. M. & Kwee, R. M. Workload for radiologists during on-call hours: Dramatic increase in the past 15 years. Insights Imaging 11(1), 1–7 (2020).

Sociedad Española de Medicina de Família y Comunitaria (semFYC). Programa de la especialidad de medicina familiar y comunitaria [Internet]. Ministerio de Sanidad y Consumo. 2005. Available from: https://www.semfyc.es/wp-content/uploads/2016/09/Programa-Especialidad.pdf. Accessed 28 Sep 2023.

Koenigkam Santos, M. et al. Artificial intelligence, machine learning, computer-aided diagnosis, and radiomics: Advances in imaging towards to precision medicine. Radiol. Bras. 52(6), 387–396 (2019).

Neri, E. et al. What the radiologist should know about artificial intelligence – an ESR white paper. Insights Imaging 10(1), 44 (2019).

Hwang, E. J. et al. Use of artificial intelligence-based software as medical devices for chest radiography: A position paper from the Korean society of thoracic radiology. Korean J. Radiol. 22(11), 1743–1748 (2021).

Kim, J. H., Han, S. G., Cho, A., Shin, H. J. & Baek, S. E. Effect of deep learning-based assistive technology use on chest radiograph interpretation by emergency department physicians: a prospective interventional simulation-based study. BMC Med. Inform. Decis. Mak. 21(1), 1–9 (2021).

Kaviani, P. et al. Performance of a chest radiography AI algorithm for detection of missed or mislabeled findings: A multicenter study. Diagnostics 12(9), 2086 (2022).

Bleeker, S. E. et al. External validation is necessary in prediction research: A clinical example. J. Clin. Epidemiol. 56(9), 826–832 (2003).

Kim, D. W., Jang, H. Y., Kim, K. W., Shin, Y. & Park, S. H. Design characteristics of studies reporting the performance of artificial intelligence algorithms for diagnostic analysis of medical images: Results from recently published papers. Korean J. Radiol. 20(3), 405–410 (2019).

Dupuis, M., Delbos, L., Veil, R. & Adamsbaum, C. External validation of a commercially available deep learning algorithm for fracture detection in children. Diagn. Interv. Imaging 103(3), 151–159 (2022).

Mutasa, S., Sun, S. & Ha, R. Understanding artificial intelligence based radiology studies: What is overfitting?. Clin. Imaging 65, 96–99 (2020).

Liu, X. et al. Reporting guidelines for clinical trial reports for interventions involving artificial intelligence: The CONSORT-AI extension. Nat. Med. 2(10), e537–e548 (2020).

Kim, C. et al. Multicentre external validation of a commercial artificial intelligence software to analyse chest radiographs in health screening environments with low disease prevalence. Eur. Radiol. 33(5), 3501–3509 (2023).

Reglamento (UE) 2017/745 DEL PARLAMENTO EUROPEO Y DEL CONSEJO-de 5 de abril de 2017-sobre los productos sanitarios, por el que se modifican la Directiva 2001/83/CE, el Reglamento (CE) n.o 178/2002 y el Reglamento (CE) n.o 1223/2009 y por el que se derogan las Directivas 90/385/CEE y 93/42/CEE del Consejo.

Vasey, B. et al. Reporting guideline for the early-stage clinical evaluation of decision support systems driven by artificial intelligence: DECIDE-AI. Nat. Med. 28(5), 924–933 (2022).

Taylor, M. et al. Raising the bar for randomized trials involving artificial intelligence: The SPIRIT-artificial intelligence and CONSORT-artificial intelligence guidelines. J. Invest. Dermatol. 141(9), 2109–2111 (2021).

van Beek, E. J. R., Ahn, J. S., Kim, M. J. & Murchison, J. T. Validation study of machine-learning chest radiograph software in primary and emergency medicine. Clin. Radiol. 78(1), 1–7. https://doi.org/10.1016/j.crad.2022.08.129 (2023).

Catalina, Q. M., Fuster-Casanovas, A., Solé-Casals, J. & Vidal-Alaball, J. Developing an artificial intelligence model for reading chest X-rays protocol for a prospective validation study. JMIR Res. Protoc. 11(11), e39536 (2022).

Oxipit. Oxipit ChestEye secures medical device certification in Australia. 2020. Available from: https://oxipit.ai/news/oxipit-ai-medical-imaging-australia/. Accessed 22 Sep 2023.

Oxipit. Study: AI found to reduce bias in Radiology Reports. 2019. Available from: https://oxipit.ai/news/study-ai-found-to-reduce-bias-in-radiology-reports/. Accessed 22 Sep 2023.

Hwang, E. J. et al. Deep learning for chest radiograph diagnosis in the emergency department. Radiology 293(3), 573–580. https://doi.org/10.1148/radiol.2019191225 (2019).

Salas Pérez, R., Teixidó Vives, M., Picas Cutrina, E. & Romero, N. I. Diferentes aspectos de las calcificaciones mamarias. Imagen Diagnóstica 4(2), 52–57 (2013).

Koo, Y. H. et al. Extravalidation and reproducibility results of a commercial deep learning-based automatic detection algorithm for pulmonary nodules on chest radiographs at tertiary hospital. J. Med. Imaging Radiat. Oncol. 65(1), 15–22 (2021).

Futoma, J., Simons, M., Panch, T., Doshi-Velez, F. & Celi, L. A. The myth of generalisability in clinical research and machine learning in health care. Lancet Digit. Heal. 2(9), e489–e492 (2020).

Nam, J. G. et al. Development and validation of deep learning-based automatic detection algorithm for malignant pulmonary nodules on chest radiographs. Radiology 290(1), 218–228 (2019).

Park, S. H. Diagnostic case-control versus diagnostic cohort studies for clinical validation of artificial intelligence algorithm performance. Radiology 290, 272–273 (2019).

Nam, J. G. et al. Development and validation of a deep learning algorithm detecting 10 common abnormalities on chest radiographs. Eur. Respir. J. 57(5), 2003061 (2021).

Sjoding, M. W. et al. Deep learning to detect acute respiratory distress syndrome on chest radiographs: A retrospective study with external validation. Lancet Digit. Heal. 3(6), e340–e348 (2021).

Lee, J. H. et al. Performance of a deep learning algorithm compared with radiologic interpretation for lung cancer detection on chest radiographs in a health screening population. Radiology 297(3), 687–696. https://doi.org/10.1148/radiol.2020201240 (2020).

Sung, J. et al. Added value of deep learning-based detection system for multiple major findings on chest radiographs: A randomized crossover study. Radiology 299(2), 450–459 (2021).

Sequía de radiólogos en España con plantillas al 50%. Available from: https://www.redaccionmedica.com/secciones/radiologia/marti-de-gracia-vivimos-una-situacion-critica-de-escasez-de-radiologos--4663. Accessed 25 Jun 2022.

Esquerrà, M. et al. Abdominal ultrasound: A diagnostic tool within the reach of general practitioners. Aten primaria/Soc Española Med Fam y Comunitaria 44(10), 576–583 (2012).

Acknowledgements

The authors would like to thank all the users who agreed to participate in the study and the radiology team at the Osona study centre for their help in recruiting patients. We also thank the general practitioners for their participation in interpreting the radiologist's reports, and the professionals at Oxipit for their help in describing the more technical aspects of the algorithm. This study was carried out as part of the Industrial Doctorates programme of Catalonia and obtained a Bayès Grant.

Author information

Contributions

Q.M.C., A.F.C., J.V.A. and J.S.C. participated in defining the project idea and developing the protocol. Q.M.C. and A.F.C. participated in data preparation, and Q.M.C. performed the data analysis and interpretation. J.V.A., A.R.C. and A.E.B. interpreted the radiologist's reports for all X-rays in the study. A.F.C., J.V.A., J.S.C., A.R.C. and A.E.B. participated in drafting the article and reviewing it extensively.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Miró Catalina, Q., Vidal-Alaball, J., Fuster-Casanovas, A. et al. Real-world testing of an artificial intelligence algorithm for the analysis of chest X-rays in primary care settings. Sci Rep 14, 5199 (2024). https://doi.org/10.1038/s41598-024-55792-1

DOI: https://doi.org/10.1038/s41598-024-55792-1

- Springer Nature Limited