Abstract

This paper proposes a fluid classifier for a tight reservoir using a quantum neural network (QNN). It is difficult to identify the fluid in tight reservoirs, and the manual interpretation of logging data, which is an important means to identify the fluid properties, has the disadvantages of a low recognition rate and non-intelligence, and an intelligent algorithm can better identify the fluid. For tight reservoirs, the logging response characteristics of different fluid properties and the sensitivity and relevance of well log parameter and rock physics parameters to fluid identification are analyzed, and different sets of input parameters for fluid identification are constructed. On the basis of quantum neural networks, a new method for combining sample quantum state descriptions, sensitivity analysis of input parameters, and wavelet activation functions for optimization is proposed. The results of identifying the dry layer, gas layer, and gas–water co-layer in the tight reservoir in the Sichuan Basin of China show that different input parameters and activation functions affect recognition performance. The proposed quantum neural network based on hybrid parameters and a wavelet activation function has higher fluid identification accuracy than the original quantum neural network model, indicating that this method is effective and warrants promotion and application.

Similar content being viewed by others

Introduction

Tight oil and gas reservoirs, which are situated in most parts of the world, have become a key research topic among petroleum and natural gas engineers, and their exploration and development has affected the world’s energy supply and demand patterns. In China, tight oil and gas reservoirs have become a crucial resource through which the unconventional oil and gas industry can increase reserves and production, and are widely distributed in the following basins: Ordos Basin1, Sichuan Basin2,3, Tarim Basin4, and Qaidam Basin5. Tight reservoirs are characterized by low porosity, low permeability, and strong inhomogeneity, and fluid identification crucially facilitates the formulation and adjustment of field development plans. It is difficult to identify fluids based on traditional statistical methods and cross-plots6,7,8. Fluid identification in tight reservoirs is particularly crucial; thus, its speed, accuracy, and economic sustainability should be optimized.

Datasets for fluid identification comprise seismic data9,10 and well log data11; the latter facilitates the effective analysis of the lithology and physical properties of reservoirs, and promotes the identification of fluid properties12,13. The fluid response of tight reservoirs is not apparent; well log data are affected by various factors, and the fluid properties of reservoirs cannot be accurately identified using only the data of single logs or reservoir parameters. The rapid development of big data has enabled successful reservoir evaluation and interpretation through data-driven machine learning methods14; algorithms such as the classification committee machine6,15, clustering16, neural networks17,18, discriminant analysis19, decision tree20, random forest21, and support vector machine22 have become prevalent, and integrated discriminant methods that combine many of these techniques have been utilized in reservoir fluid identification. Fluid identification based on nuclear magnetic logging and a Formation MicroScanner Image (FMI) is costly8,23, and development wells are rarely logged; by contrast, conventional logging data are available and abundant; hence, they form the basis of the input parameters for most fluid identification models6,17,20,21,22.

The fluid types in tight reservoirs are complex, and the observation samples are linearly non-separable. Hence recognition models and model input parameters must be investigated; thus, new intelligent algorithms can be built. Quantum neural networks combine quantum computing with neural networks, and they apparently optimize the computational efficiency of neural networks23,24. Since Kak proposed quantum neural networks, scholars have proposed quantum-derived neural networks25, quantum dot neural networks26, quantum cellular neural networks27, quantum associative storage algorithms28, and the construction of neural networks through the utilization of quantum rotating gates and controlled non-gates29. Due to research developments, applications in prediction30,31, image recognition32, classification33,34, and fault diagnosis35 have advanced. Quantum neural networks are applicable to reservoir evaluation36,37,38; however, they have not been applied to fluid identification in tight reservoirs.

In recent years, due to the enhancement of computer hardware performance and the continuous optimization of algorithms, artificial neural networks (ANN) and machine learning (ML) have revolutionized various fields39. In the field of computer vision, deep learning models such as convolutional neural networks (CNN) have surpassed human performance in image recognition tasks40,41. In the field of natural language processing, models such as recurrent neural networks (RNN) and long short-term memory (LSTM) can understand and generate natural languages42,43. In the field of medicine, artificial neural networks and machine learning have been widely utilized in tasks such as disease diagnosis, drug discovery, and the optimization of therapeutic programs44,45,46. In the field of energy, artificial neural network (ANN) models have been built to predict various energy-related problems, such as wax deposition, building energy efficiency, and oil field recovery, and a large amount of experimental data has been utilized47,48,49. Moreover, artificial neural networks can be combined with machine learning to predict reservoir porosity and permeability, and the ANN model exhibits satisfactory performance in predicting reservoir permeability and porosity, providing a data-driven approach for oil exploration and extraction50,51.

Fluid identification represents a difficult production and scientific problem for oil and gas exploration in tight reservoirs. Therefore, we refer to the novel quantum computing results to construct a novel recognition model that can enhance recognition accuracy. This study constructs a quantum neural network method based on a wavelet function (QNNw), which can effectively process data with large ambiguity and linearly indistinguishable data, and it utilizes the following preprocessing methods: observation samples for tight reservoirs, vector parameterization to analyze the sensitivity and correlation of the input parameters, and a wavelet function to activate the hidden layer. Thus, the model is optimized. To analyze the influence of different QNNs on identification performance, the proposed method (QNNw) is compared with a quantum neural network method that is based on a sigmoid function (QNNs).

The remainder of this paper is structured as follows. Data acquisition and preparation are described in “Methods and model training/methodology” section, and the proposed QNN is explained. “Experimental investigations and discussion” section presents the results and discussion, and “Conclusions” section offers the conclusions.

Methods and model training/methodology

Fluid feature extraction

Well log and rock physics parameters

Tight reservoir evaluation refers to the detection and quantification of lithology and fluid types through the interpretation of wellbore and well log data52. The input parameters of fluid identification can be divided into two main categories: (1) Well log parameters, which include borehole diameter (CAL), gamma ray (GR), transverse velocity (S-wave velocity, SV), longitudinal velocity (P-wave velocity, PV), shallow lateral resistivity (LLS), compensated neutron log (CN), deep lateral resistivity (LLD), and acoustic log (AC); \({C}_{1}=\{{\text{CAL}},{\text{GR}},{\text{SV}},{\text{PV}},{\text{LLS}},{\text{CN}},{\text{LLD}},{\text{AC}}\}\). (2) Rock physics parameters, which are sensitive to reservoir fluids53, include Poisson’s ratio \(\upnu\), bulk modulus \({\text{K}}\), shear modulus \(\upmu\), Young’s modulus \({\text{E}}\), Lamé coefficient \(\uplambda\), and longitudinal-to-transverse velocity ratio \({V}_{p}/{V}_{s}\), which can be obtained using seismic or logging data as follows:

Young’s modulus \({\text{E}}\)

Bulk modulus \({\text{K}}\)

Shear modulus \(\upmu\)

Poisson’s ratio \(\upnu\)

Lamé coefficient λ

where \(\Delta ts\)(\(\mathrm{\mu s}/{\text{ft}}\)) denotes S-wave time-difference, \(\Delta tp\) (\(\mathrm{\mu s}/{\text{ft}}\)) denotes P-wave time-difference, \(\rho\) (g/cm3) denotes density,

The rock physics parameters are expressed using the following formula: \({C}_{2}=\{E,K,\upmu ,\upnu ,\uplambda , \lambda \times \rho , \mu \times \rho , {V}_{p}/{V}_{s}\}\).

When a tight reservoir contains natural gas, the general Lamé coefficient × density (λ × ρ), shear modulus × density (μ × ρ), Poisson’s ratio \(v\), bulk modulus K, and \(\lambda /\mu\) all exhibit different response characteristics. Goodway analyzed the sensitivity of rock physical parameters and gave an amplitude versus offset (AVO) analysis based on fluid factors such as λ × ρ, μ × ρ, and \(\lambda /\mu\)54. Thus, in addition to identifying clastic lithologies and fluids with various rock physics parameters, the hybrid parameters of various rock physics parameters can also be utilized; thus, lithologies and fluids can be identified.

Fluid sensitivity analysis

Fluid characterization is dependent on the reliability and exactness of well log or rock physics parameters correlation55. For tight reservoirs, the sensitivity of well log parameters and rock physics parameters is analyzed using the sensitivity factor \({{\text{S}}}_{i}\) between the observed values of different parameters; \({{\text{S}}}_{i}\) considers the relative distance using a vector norm, and is computed as

where \(d\) denotes the number of parameters, \(x\) denotes the value of the well log parameters and rock physics parameters of the dry layer in the tight reservoir, \({\upomega }^{d}\mathrm{ denotes}\) the set of parameters of the dry layer, \(y\) denotes the value of the well log and rock physics parameters of the fluid layer corresponding to \(x\), and \({\omega }^{f}\) denotes the set pertaining to the property parameters of the fluid layer.

Equation (1) quantifies the sensitivity of the same parameter for the dry and fluid layers; however, it negates the correlation between the parameters. Due to the presence of redundant information between parameters, modeling and solution become more difficult, and the effectiveness of fluid identification may be affected. Scatter plots are the simplest and most effective method of analyzing the correlation of input parameters11. To provide optimized input parameters for the tight reservoir fluid identification model, we analyze the correlation between two sets of well log and rock physics parameters.

Quantum neural network

Architecture of quantum neural network

Many methods of combining the quantum theory with biological neural networks exist; therefore, quantum neural network models can be implemented. We utilize Narayanan’s quantum neural network to construct a model for tight reservoir fluid identification24,56 that is similar in structure to a feedforward neural network (BPNN); however, the following exception can be observed: a quantum neuron is utilized as the hidden layer unit. In the implicit layer, a multilevel transfer function can adjust the quantum intervals during training. Figure 1 indicates that the quantum neural network comprises n input neurons, m hidden neurons, and one output neuron24,56.

Sample preprocessing method: Suppose there are N d-dimensional fluid observation samples \(\left({x}_{i},{y}_{i}\right),{x}_{i}\in {R}^{d},{y}_{i}\in {R}^{1}\), where input data \({x}_{i}\) comprises corresponding output data \({y}_{i}\). The fluid identification parameters exhibit different magnitudes and large differences in sample values. We normalize d-dimensional fluid input samples \({\text{S}}=\{{{\text{s}}}_{1},{{\text{s}}}_{2},\dots ,{{\text{s}}}_{n}\}\) before inputting them to the QNN (Eq. 7) with values in the interval [0, 1]:

where \({{\text{a}}}_{i}={\text{min}}\,{x}_{i}, {{\text{b}}}_{i}={\text{max}}\,{x}_{i}, i=\mathrm{1,2},\cdots ,m\).

First layer

The input layer comprises \(n\) quantum neurons denoting the input parameters. The input \({x}_{i}\) of datasets \(\left({x}_{i},{y}_{i}\right),{x}_{i}\in {R}^{d},{y}_{i}\in {R}^{1}\) is preprocessed in the interval \(\left[0, 1\right]\), and encoded into the input state of the quantum neural network. The first layer converts them to quantum states \({\upphi }_{{\text{ij}}}\); thus, their phase values (Eq. 3) lie in the interval [0, π/2]24.

Second layer

The implicit layer receives the output value pertaining to the quantum neuron of the first layer, and the output of the j-th quantum neuron of the hidden layer is

where f denotes the activation function of the hidden content layer, and \({\mathrm{\alpha }}_{j}\) denotes the reversal parameter, which is determined for the initial value interval \(\left[\mathrm{0,1}\right]\); the model learns and changes these parameters to attain the optimal value. \({\upbeta }_{j}\) denotes the quantum transition position that determines the shapes of quantum intervals, which represent adjustable parameters of the quantum neural network.

Third layer

This layer performs a weighted summation of the output \({h}_{j}\) of the hidden layer. The output of the input layer neurons can be obtained as

where \({\widehat{y}}_{k}\) denotes the actual output of the quantum neural network, \({\text{g}}\) denotes the chosen output function, and \({w}_{jk}\) denotes the connection power pertaining to the quantum neuron, which is situated in the hidden layer of the quantum neuron in the output layer.

Since the wavelet function exhibits satisfactory time–frequency localization characteristics and satisfactory resolution of the logging signal in both the time and frequency domains, it is utilized as the activation function (Eq. 6), herein denoted as QNNw, for the implicit layer of the quantum neural network; furthermore, the sigmoid function (Eq. 7), herein denoted as QNNs, is utilized for the output layer.

Learning algorithm of quantum neural network

Suppose there are N d-dimensional fluid observation samples \(\left({x}_{i},{y}_{i}\right),{x}_{i}\in {R}^{d},{y}_{i}\in {R}^{1}\), \({\text{y}}=({{\text{y}}}_{1},{{\text{y}}}_{2},\dots ,{{\text{y}}}_{N})\) is the actual output, \(\widehat{{\text{y}}}=({\widehat{{\text{y}}}}_{1},{\widehat{{\text{y}}}}_{2},\dots ,{\widehat{{\text{y}}}}_{N})\) is the desired output, and the loss function is the mean squared error (MSE).

Minimizing the loss function is an optimization problem. The aim of learning is to minimize the root mean square error by modifying the network parameters. If we apply the gradient descent method to update the parameters \({\uptheta }_{ij}\), \({\mathrm{\alpha }}_{j}\), and \({w}_{jk}\) of the quantum neural network, the update formula for \({\uptheta }_{ij}\) can be described as

The update formula for \({\mathrm{\alpha }}_{j}\) can be expressed as

The update formula for \({w}_{jk}\) can be expressed as

where \(\gamma\) denotes the learning efficiency.

The QNN process for fluid identifications is expressed as follows:

-

Step 1 Select feature parameters, including well log parameter and rock physics parameters.

-

Step 2 Obtain the sample set, and divide it into training and test data.

-

Step 3 Perform sensitivity and correlation analyses to obtain the input parameters of fluid identification.

-

Step 4 Input training data, train the QNN, and determine the optimal parameters.

-

Step 5 Input training data pertaining to the optimal parameters, and train the WQNN.

-

Step 6 Perform a comparative analysis based on accuracy, recall, and loss.

Experimental investigations and discussion

Study area and fluid identification solution design

Study area and data

The study area is located in the Xujiahe Formation, Sichuan Basin, China, which is mainly located in Chengdu, Deyang, Mianyang, and Jiangyou in western Sichuan. The thickness of Xujiahe Formation is 400–700 m, with a maximum thickness of 1000 m near An County, which is adjacent to Longmen Mountain. The average porosity of the tight reservoirs is only 4.2%, and the differences between the logging curves of gas- and non-gas-bearing sands, as well as those of sands with different gas abundances, are small. The tight gas reservoirs in the study area are divided into gas layer (GL), water-bearing gas layer (WBGL), gas-bearing water layer (GBWL), and dry layer (DL) formations. For layers in tight reservoirs, the quantization {DL, GBWL, WBGL, GL} is expressed as {0.15, 0.40, 0.65, 0.90}, whose values are all fractional. The transfer function of the output layer is set to a sigmoid activation function; thus, the actual and desired output values can be bounded in the interval \(\left[0, 1\right]\).

Fluid identification scheme design

The number of samples pertaining to each layer varies for the tight reservoirs located in the study area. To realize more globally representative network training samples, they were randomly selected; thus, the fluid identification model was trained. 70% of the samples are utilized for training, and the data generated by the sampling method Bootstrap for cross-validation. 30% of the samples are utilized for testing. To enhance the reliability of the experimental comparison, different input parameters of the fluid recognition model were utilized, the QNN and WQNN network models were trained and tested 30 times with identical training and test samples, and the experimental results were averaged for utilization as the evaluation index of the network training results.

Selection of model input parameters

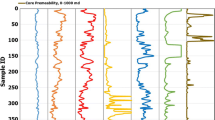

Input parameters for the fluid identification model were determined by sensitivity and correlation analyses. The sensitivity factor \({{\text{S}}}_{i}\) between the parameter values of the same well log parameter of the dry and fluid layers (Eq. 6) was calculated; thus, the fluid sensitivity parameters of the target reservoir were obtained (Fig. 2). According to the results pertaining to the sensitivity analysis of the input parameters (Fig. 1), the sensitivity values of each well log parameters were calculated using Eq. (6); with the exception of the parameter “AC” which has a sensitivity value of 0.03, the sensitivity factor \({{\text{S}}}_{i}\) of the other parameters CAL, GR, SWV, PWV, LLS, LLD, CN and LLD are all greater than 0.19, and the sensitivity values are all high They can be initially identified as fluid identification model input parameters. Further correlation analysis was performed on the initially selected sensitivity parameters. In the scatter plot depicted in Fig. 3, the well log parameters SV and PV exhibit apparent correlation, similar to LLS and LLD; therefore, only SV and LLS are retained as model input parameters. The sensitivity and correlation analyses create a scenario in which the well log parameters CAL, GR, SV, LLS, and CN are defined as the final input parameters 1 (IP1) for fluid identification.

The rock physics parameters were obtained as per the preceding process. According to the results of the sensitivity analysis of the input parameters illustrated in Fig. 4, for the rock physics parameters (Fig. 2), \(\mu\), K, E, λ × ρ, and \(\upupsilon\) can be selected as model input parameters. In the scatter plot of Fig. 5, K and E exhibit apparent correlation, and only K is retained as a model input parameter. Through sensitivity and correlation analyses, the reservoir rock physics parameters \(\mu\), K, E, λ × ρ, and \(\upupsilon\) can be obtained as the final input parameters 2 (IP2) for fluid identification.

IP1 and IP2 can be combined to obtain the input parameters of the fluid recognition model, IP3 = \({S}_{1}=\{{\text{CAL}},{\text{GR}},{\text{SV}},{\text{PV}},{\text{LLS}},{\text{CN}},{\text{LLD}},{\text{AC}},E,K,\upmu ,\upnu ,\uplambda , \lambda \times \rho , \mu \times \rho , {V}_{p}/{V}_{s}\}\). The QNN model was trained with IP1, IP2, and IP3 (Fig. 6). Initially, the loss function values of all three inputs reduces quickly, and this decrease slows down when the number of iterations exceeds approximately 200. The loss function value of the rock physics parameters, an input parameter of the fluid identification model, is greater than those of IP1 and IP3. When the number of iterations approximates 1800, IP3 loss function value is significantly smaller than that of IP1; thus, for tight reservoir fluid identification, IP3 can be utilized as the final input parameter.

Table 1 depicts the range of input variable values for the proposed model. Table 1 indicates that the input variable values of four types of reservoirs, namely gas layer, dry layer, water-bearing gas layer, and gas-bearing water layer, are overlapped, which is a linear non-separation scenario; due to this phenomenon, the difficulty of classification is increased.

The trained fluid recognition model was utilized to classify the test samples, and the calculated classification accuracies that utilize IP1, IP2, and IP3 are illustrated in Fig. 7. When IP3 was selected, the classification accuracy was much lower than that of IP1 and IP3. The IP3 classification accuracy is generally more optimal than that of IP1 when the number of training sessions is > 1000. The normalized confusion matrix between the true values of the test samples and the classification results of the model when IP1 and IP3 are calculated without considering IP2 is depicted in Fig. 8. Regardless of the type of input parameters utilized, the QNN-based fluid recognition model performs effectively for DL and GBWL; for GL and WBGL, the model is less effective; and for GL and WBGL, IP3 is generally more optimal than IP1.

The effect of different input parameters on the fluid identification results is further analyzed using a confusion matrix, where diagonal elements indicate correctly identified reservoir types, with larger values implying higher classification accuracy. Other elements indicate misclassified reservoir types. Figure 8 indicates that when a sigmoid activation function and IP1 are utilized, the Recall is 96% for the dry layer; 100% for the gas-bearing water layer; 81.25% for the water-bearing gas layer, with 18.75% being identified as a gas-bearing water layer; and 88% for the gas layer, with 12% being identified as the water-bearing gas layer. When IP3 is, the recall is 100% for the dry layer and gas-bearing water layer; 75% for the water-bearing gas layer, 6.25% is identified as a gas-bearing water layer, 18.75 is identified as a gas layer; and the gas layer is 100%. In terms of recognition results, IP3 is better than IP1.

If we consider the actual problem (i.e., fluid identification based on logging data), the recognition performance of different methods is depicted in Fig. 9, which is sufficient for the performance evaluation of the model in the test set. The blue bars indicate the number of correctly classified samples, and the red bars indicate the number of incorrectly classified samples. The three methods exhibit different recognition accuracies for the dry layer, water layer, gas layer, and gas–water layer; however, the overall recognition accuracy of the quantum neural network is higher.

Comparison with QNN

We compare the performance of the QNN at the fluid recognition in which the sigmoid activation function (QNNs) and wavelet function (QNNw) are utilized, and we utilize a confusion matrix, where TP denotes the number of correctly classified positive samples, TN denotes the number of correctly classified negative samples, FN denotes the number of positive samples that are incorrectly classified as negative, and FP denotes the number of negative samples that are incorrectly classified as positive. Herein, the recognition effectiveness of QNN when different activation functions were utilized is evaluated using recall, precision (accuracy), and \({F}_{\beta }\), where recall denotes the percentage of reservoir fluid correctly classified as Class \(\omega\); precision denotes the percentage of reservoir fluid that are exactly have Class \(\omega\) between all those were classified as Class \(\omega\); and correctness rate represents the ratio of the correct fluid type identified to the whole identified into that class. \({F}_{\beta }\) denotes a combined metric through which the single class accuracy and recall can be assessed, where \(\upbeta =1\).

Figures 10 and 11 indicate that the precision of the water-bearing gas layer increases from 0.75 to 1 when the wavelet function is utilized for QNN; however, the precision of the gas layer decreases slightly, from 1 to 0.96. With regard to recall, only the dry layer remains unchanged, whereas the recall of the water-bearing gas layer is enhanced from 0.96 to 1, and the recall increases from 0.8928 to 0.9213; however, the recall value for the water-bearing gas layer decreases slightly. The F1-score for the dry layer remains unchanged, whereas the F1 for the water-bearing gas layer, the gas-bearing water layer, and the gas layer all improve. The variation in the recall and precision in regard to F1 indicates that the fluid identification of tight reservoirs is enhanced when the quantum neural network utilizes wavelet functions. The dry layer exhibits the most optimal classification effect, possessing the highest F1-score, recall, and precision, and the gas-bearing water layer ranks second. The gas layer exhibits a higher precision and a slightly lower F1-score and recall, whereas the water-bearing gas layer exhibits a higher recall and a slightly worse recall for the other metrics.

We utilized Eqs. (11) and (12) as activation functions for QNN; the activation functions are recorded as QNNs and QNNw, and the corresponding results are depicted in Figs. 12 and 13. Both QNNs and QNNw can achieve a satisfactory recognition effect; however, overall differences exist: the wavelet functions were somewhat more effective than the s-functions, and the smoothness observed on the plots was somewhat more satisfactory, without the violent vibration phenomenon, as was the case with sigmoid functions. Subsequently, accuracy was introduced; thus, the classification performance of QNNs and QNNw was compared as follows: the number of correctly classified reservoir fluids divided by the total of such fluids, which is expressed as

For the test dataset, when the number of training times exceeded 1000, the average recognition accuracy of both QNNs and QNNw was above 90%; however, the average recognition accuracy of QNNw was higher than that of QNNs, and the fluid recognition accuracy pertaining to the QNNw test dataset was 98%. The misidentified samples were concentrated in the gas layer, and some were identified as water-bearing gas layers.

Machine learning algorithms have been widely utilized in fluid identification, and can be compared and analyzed based on accuracy, recall, and \({F}_{\beta }\). To facilitate comparison, when considering only the accuracy, the accuracy of the quantum wavelet neural network proposed herein is higher than the accuracy of the decision tree classification model by 80.1221, the accuracy of the nearest neighbor algorithm classification model by 86.35, and the accuracy of the dynamic classification committee machine by 91.3915.

Conclusions

The selection of input and model parameters for a quantum neural network model crucially impacts classification results. This study considered the aforementioned questions and attempted to enhance specific applications. By focusing on the problem of fluid identification in a tight reservoir, we analyzed the parameter selection of the identification model, performed sensitivity and correlation analyses, constructed an identification model and determined its parameters, and proposed a specific application of the algorithm. The main observations and the advantage of the proposed model are as follows:

-

(1)

A quantum neural network-based fluid identification scheme was investigated. Three sets of input indicators for the model were determined through sensitivity and correlation analyses, and the network was trained by applying known categories of reservoirs. The results indicated that quantum neural networks can be effectively utilized for fluid identification in tight reservoirs.

-

(2)

The performance of hybrid parameters was significantly more optimal than that of single-type parameters. Through the sensitivity and correlation analysis, well log parameter, rock physics parameters, and hybrid parameters were selected as different model inputs. The results indicated that the fluid identification effect is most optimal when mixed parameters are utilized.

-

(3)

Different activation functions of a quantum neural network affect the recognition result. Because a wavelet function exhibits satisfactory time–frequency localization characteristics, a Morlet wavelet function was utilized as an activation function in the hidden layer. Whether the input parameters were logging or mixed parameters, the recognition effect was more optimal than with a sigmoid function.

Data availability

The datasets generated during and/or analyzed during the current study are available from the corresponding author on reasonable request.

References

Bai, Z. et al. Log interpretation method of resistivity low-contrast oil pays in Chang 8 tight sandstone of Huanxian area, Ordos Basin by support vector machine. Sci. Rep. 12(1), 1–11. https://doi.org/10.1038/s41598-022-04962-0 (2022).

Ma, T., Gui, J. & Chen, P. Logging evaluation on mechanical-damage characteristics of the vicinity of the wellbore in tight reservoirs. J. Pet. Explor. Prod. Technol. 11, 3213–3224 (2021).

Tan, D., Luo, L. & Song, L. Differential precipitation mechanism of cement and its impact on reservoir quality in tight sandstone: A case study from the Jurassic Shaximiao formation in the central Sichuan Basin, SW China. J. Pet. Sci. Eng. 221, 111263 (2023).

Peng, J., Han, H., Xia, Q. & Li, B. Fractal characteristic of microscopic pore structure of tight sandstone reservoirs in Kalpintag Formation in Shuntuoguole area, Tarim Basin. Pet. Res. 5(1), 17 (2020).

Tang, W. et al. Astronomical forcing of favorable sections of lacustrine tight reservoirs in the lower Shangganchaigou formation of the Gasi Area, Western Qaidam Basin. In IFEDC, SSGG 659–673. https://doi.org/10.1007/978-981-19-2149-0_59 (2022).

Tan, M., Bai, Y., Zhang, H., Li, G. & Wang, A. Fluid typing in tight sandstone from wireline logs using classification committee machine. Fuel 271, 117601. https://doi.org/10.1016/j.fuel.2020.117601 (2020).

Zhang, J., Zhang, G. & Huang, L. Crack fluid identification of shale reservoir based on stress-dependent anisotropy. J. Appl. Geophys. 16(2), 209–217 (2019).

Jiang, C. et al. Identification of fluid types and their implications for petroleum exploration in the shale oil reservoir: A case study of the Fengcheng Formation in the Mahu Sag, Junggar Basin, Northwest China. Mar. Pet. Geol. 147, 105966 (2023).

Zhang, G., Pan, X., Li, Z., Sun, C. & Yin, X. Seismic fluid identification using a nonlinear elastic impedance inversion method based on a fast Markov chain Monte Carlo method. Pet. Sci. 12, 406–416 (2015).

Xiong, J. et al. Fluid identification method and application of pre-stack and post-stack integration based on seismic low-frequency. Pet. Res. 2, 90–96. https://doi.org/10.1016/j.ptlrs.2017.06.006 (2017).

Das, B. & Chatterjee, R. Well log data analysis for lithology and fluid identification in Krishna–Godavari Basin, India. Arab. J. Geosci. 11, 231. https://doi.org/10.1007/s12517-018-3587-2 (2018).

Zhang, L. et al. Probability distribution method based on the triple porosity model to identify the fluid properties of the volcanic reservoir in the Wangfu fault depression by well log. Comput. Geosci. 21, 241–246 (2017).

Luo, G., Xiao, L., Shi, Y. & Shao, R. Machine learning for reservoir fluid identification with logs. Pet. Sci. Bull. 01, 24–33 (2022).

Li, N. et al. Application status and prospects of artificial intelligence in well logging and formation evaluation. Acta Pet. Sin. 42(4), 508–522 (2021).

Bai, Y. et al. Dynamic classification committee machine-based fluid typing method from wireline logs for tight sandstone gas reservoir. Chin. J. Geophys. 64(5), 1745–1758 (2021).

Liu, L., Sun, S., Yu, H., Yue, X. & Zhang, D. A modified fuzzy C-means (FCM) clustering algorithm and its application on carbonate fluid identification. J. Appl. Geophys. 129, 28–35 (2016).

He, M., Gu, H. & Wan, H. Log interpretation for lithology and fluid identification using deep neural network combined with MAHAKIL in a tight sandstone reservoir. J. Pet. Sci. Eng. 194, 107498 (2020).

Tan, M., Liu, Q. & Zhang, S. A dynamic adaptive radial basis function approach for total organic carbon content prediction in organic shale. Geophysics 78(6), 445–459 (2013).

Yan, X., Cao, H., Yao, F. & Ba, J. Bayesian lithofacies discrimination and pore fluid detection in tight sandstone reservoir. Oil Geophys. Prospect. 47(6), 945–950 (2012).

Zhao, Q., Yang, B., Li, X., Wang, S. & Wei, J. Application of cross-plot-based decision tree template method in fluid identification. Well Logg. Technol. 42(6), 641–646 (2018).

He, J., Wen, X., Li, B., Chen, Q. & Li, L. The pre-stack fluid identification method based on random forest algorithm. Acta Pet. Sin. 43(3), 376–385 (2022).

Fan, X. et al. Using image logs to identify fluid types in tight carbonate reservoirs via apparent formation water resistivity spectrum. J. Pet. Sci. Eng. 178, 937–947 (2019).

Kak, S. On quantum neural computing. Inf. Sci. 83(3–4), 143–160 (1995).

Aliabadi, F. & Majidi, M. H. Chaos synchronization using adaptive quantum neural networks and its application in secure communication and cryptography. Neural Comput. Appl. 34, 6521–6533. https://doi.org/10.1007/s00521-021-06768-z (2022).

Menneer, T. & Narayanan, A. Quantum-inspired neural networks. In Department of Computer Science, University of Exeter, Exeter, Technical Report, UK 329 (1995).

Behrman, E. C., Niemel, J., Steck, J. E. & Skinner, S. R. A quantum dot neural network. In Proc. 4th workshop on Physics of Computation, Portland, USA 22–24 (1996).

Toth, G. et al. Quantum cellular neural networks. Superlattice Microstruct. 20(4), 473–478 (1996).

Ventura, D. & Martinez, T. Quantum associative memory with exponential capacity. In Proc. 1998 IEEE World Congress on Computational Intelligence, Anchorage, USA 509–513 (1998).

Matsui, N., Takai, M. & Nishimura, H. A network model based on qubitlike neuron corresponding to quantum circuit. Electron. Commun. Jpn. 83(10), 67–73 (2000).

Liu, G. & Ma, W. A quantum artificial neural network for stock closing price prediction. Inf. Sci. 598, 75–85 (2022).

Wang, J., Li, H., Yang, H. & Wang, Y. Intelligent multivariable air-quality forecasting system based on feature selection and modified evolving interval type-2 quantum fuzzy neural network. Environ. Pollut. 274, 116429. https://doi.org/10.1016/j.envpol.2021.116429 (2021).

Wang, Y., Wang, Y. & Chen, C. Development of variational quantum deep neural networks for image recognition. Neurocomputing 501, 566–582. https://doi.org/10.1016/j.neucom.2022.06.010 (2022).

Gandhi, V., Prasad, G., Coyle, D., Behera, L. & Mcginnity, T. M. Evaluating quantum neural network filtered motor imagery brain–computer interface using multiple classification techniques. Neurocomputing 170, 161–167 (2015).

Dong, N., Kampffmeyer, M., Voiculescu, I. & Xing, E. Negational symmetry of quantum neural networks for binary pattern classification. Pattern Recogn. 129, 108750. https://doi.org/10.1016/j.patcog.2022.108750 (2022).

Gao, Z., Ma, C., Song, D. & Liu, Y. Deep quantum inspired neural network with application to aircraft fuel system fault diagnosis. Neurocomputing 238, 13–23. https://doi.org/10.1016/j.neucom.2017.01.032 (2017).

Li, J., Liang, S. & Fan, Y. Ultra deep reservoir evaluation based on quantum neural network. Comput. Dig. Eng. 46(12), 2499–2505 (2018).

Zhao, Y., Wang, W. & Li, P. Recognition of water-flooded layer based on quantum neural networks. Prog. Geophys. 4(5), 1971–1979 (2019).

Wang, T. Application of quantum neural networks in lithology identification in Leijia Area. J. Changchun Univ. 25(4), 56–63 (2015).

Panghald, S. & Kumar, M. Neural network method: Delay and system of delay differential equations. Eng. Comput. 38, 2423 (2021).

Tian, Y. Artificial intelligence image recognition method based on convolutional neural network algorithm. IEEE Access 8, 125731 (2020).

Amini, S. & Ghaemmaghami, S. Lowering mutual coherence between receptive fields in convolutional neural networks. Electron. Lett. 55, 325 (2019).

Song, J. & Yam, Y. Complex recurrent neural network for computing the inverse and pseudo-inverse of the complex matrix. Appl. Math. Comput. 93, 195 (1998).

Lichao, S. et al. Individualized short-term electric load forecasting using data-driven meta-heuristic method based on LSTM network. Sensors 22, 7900 (2022).

Currie, G. et al. Machine learning and deep learning in medical imaging: Intelligent imaging. J. Med. Imaging Radiat. 50, 477–487 (2019).

Konstantinos, P. et al. Artificial intelligence in nuclear medicine physics and imaging. Hell. J. Nucl. Med. 26, 57 (2023).

Mouchlis, V. D. et al. Advances in de novo drug design: From conventional to machine learning methods. Int. J. Mol. Sci. 22, 1676 (2021).

Mohammadali, A. Data-driven approaches for predicting wax deposition. Energy 265, 126296 (2023).

Le, T. L. et al. A comparative study of PSO-ANN, GA-ANN, ICA-ANN, and ABC-ANN in estimating the heating load of buildings’ energy efficiency for smart city planning. Appl. Sci. 9, 2630 (2019).

Moosavi, R. S. et al. ANN-based prediction of laboratory-scale performance of CO2-foam flooding for improving oil recovery. Nat. Resour. Res. 28, 1619 (2019).

Ahmadi, M. et al. Connectionist model predicts the porosity and permeability of petroleum reservoirs by means of petro-physical logs: Application of artificial intelligence. J. Pet. Sci. Eng. 123, 183 (2014).

Ahmadi, A. M. et al. Reservoir permeability prediction by neural networks combined with hybrid genetic algorithm and particle swarm optimization. Geophys. Prospect. 61, 582 (2013).

Hasan, M. M., Akter, F. & Deb, P. K. Formation characterization and identification of potential hydrocarbon zone for titas gas field, Bangladesh using wireline log data. Int. J. Sci. Eng. Res. 4(5), 1512–1518 (2013).

Zhang, J., Huang, H. & Zhu, B. Fluid identification based on P-wave anisotropy dispersion gradient inversion for fractured reservoirs. Acta Geophys. 65, 1081–1093 (2017).

Goodway, W., Chen, T. & Downton, J. Improved AVO fluid detection and lithology discrimination using lame petrophysical parameters; "λρ", "μρ", and "λ/μ fluid stack": From P and S inversions. In Expanded Abstracts of 67th Annual Internet SEG Mtg 183–186 (1997).

Ebong, E. D., Akpan, A. E. & Urang, J. G. 3D structural modelling and fluid identification in parts of Niger Delta Basin, southern Nigeria. J. Afr. Earth Sci. 158, 103565. https://doi.org/10.1016/j.jafrearsci.2019.103565 (2019).

Narayanan, A. & Menneer, T. Quantum artificial neural network architectures and components. Inf. Sci. 128(3–4), 231–255 (2000).

Acknowledgements

This work is supported by the Natural Science Foundation of Sichuan Province (Grant No. 2022NSFSC0510), the Geomathematics Key Laboratory of Sichuan Province (Grant No. scsxdz2021yb01), and the Sichuan Mineral Resources Research Center (Grant No. SCKCZY2022-YB009).

Author information

Authors and Affiliations

Contributions

D.L. was responsible for the topic selection, model construction and manuscript writing. Y.L. supervised this work and gave some suggestions. Y.Y. and X.W. was responsible for calculation and numerical simulation.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Luo, D., Liang, Y., Yang, Y. et al. Hybrid parameters for fluid identification using an enhanced quantum neural network in a tight reservoir. Sci Rep 14, 1064 (2024). https://doi.org/10.1038/s41598-023-50455-z

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-023-50455-z

- Springer Nature Limited