Abstract

The grey wolf optimizer is an effective and well-known meta-heuristic algorithm, but it suffers from insufficient population diversity, a tendency to fall into local optima, and unsatisfactory convergence speed. Therefore, we propose a hybrid grey wolf optimizer (HGWO), based mainly on the exploitation phase of the harris hawks optimization. It also includes population initialization with Latin hypercube sampling, a nonlinear convergence factor with local perturbations, and some extended exploration strategies. In HGWO, the grey wolves gain harris hawks-like flight capabilities during position updates, which greatly expands the search range and improves global search ability. By incorporating a greedy strategy, grey wolves relocate only if the new location is superior to the current one. This paper assesses the performance of HGWO by comparing it with other heuristic algorithms and enhanced schemes of the grey wolf optimizer. The evaluation is conducted using 23 classical benchmark test functions and CEC2020. The experimental results reveal that the HGWO algorithm performs well in terms of its global exploration ability, local exploitation ability, convergence speed, and convergence accuracy. Additionally, the enhanced algorithm demonstrates considerable advantages in solving engineering problems, thus substantiating its effectiveness and applicability.

Introduction

Among the various stochastic optimization methods, population-based algorithms inspired by nature are widely acclaimed and preferred. These approaches replicate problem-solving strategies employed by living organisms, as they pursue survival, which is the ultimate objective for all living beings. In order to achieve this objective, organisms have continuously evolved and adapted in diverse ways. Consequently, seeking inspiration from nature, the most effective and longstanding optimizer on Earth, is a sensible approach.

Swarm intelligence algorithms are a class of nature-inspired algorithms based on group behavior, which make use of mutual collaboration and information exchange among individuals to search for optimal or near-optimal solutions in the solution space. These algorithms are flexible and robust, self-organising and adaptive, and are capable of handling multimodal, high-dimensional, nonlinear and uncertain problems as well as multi-objective optimization and dynamic optimization1,2. These algorithms primarily reflect the behavior and representation of certain social aspects of a group of animals and their evolutionary process. Furthermore, they are characterized by mathematical simplicity, independence from gradient methods, and minimal mathematical derivation. These algorithms approach the problem in a meta-heuristic manner, wherein optimization is performed stochastically and the optimization process initially provides stochastic solutions3. As a result, optimization is achieved through an iterative process. Moreover, these algorithms exhibit stochastic features that allow them to avoid settling for the best solution among neighbouring candidate solutions, instead extensively exploring the entire search space.

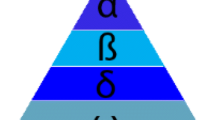

Swarm intelligence algorithms have now developed into a large family that is widely used by researchers. They are mainly inspired by animal behaviors such as migration, hunting, foraging, mating, nesting, and defending against enemies, and can be broadly classified into four types: insect-based, terrestrial animal-based, bird-based, and aquatic animal-based. Well-known examples include the ant colony algorithm (ACO)4, particle swarm algorithm (PSO)5, grey wolf optimizer (GWO)6, whale optimization algorithm (WOA)7, ant lion optimizer (ALO)8, artificial bee colony algorithm (ABC)9, moth-flame optimization (MFO)10, grasshopper optimization algorithm (GOA)11, dragonfly algorithm (DA)12, bat algorithm (BA)13, starling murmuration optimizer (SMO)14, salp swarm algorithm (SSA)15, african vulture optimization algorithm (AVOA)16, harris hawks optimization (HHO)17, aquila optimizer (AO)18, nutcracker optimizer (NOA)19, bird swarm algorithm (BSA)20, artificial gorilla troops optimizer (AGTO)21, quantum-based avian navigation optimizer algorithm (QANA)22, cheetah optimizer (CO)23, mountain gazelle optimizer (MGO)24, red fox optimization algorithm (RFOA), sailfish optimizer (SFO), krill herd algorithm (KHA)25, yellow saddle goatfish algorithm (YSGA), artificial fish swarm algorithm (AFSA)26, pride lion optimizer (LPO)27 and so on. The classification of the nature-inspired algorithms is shown in Fig. 1.

In addition to swarm intelligence algorithms, nature-inspired algorithms include evolutionary-based, physics/chemistry-based and human-based algorithms, which do not directly use swarm behavior. The most widely known evolutionary algorithms are genetic algorithms (GA)28, differential evolution (DE)29 and evolution strategies (ES)30. Physics/chemistry-based algorithms are built on mathematical models inspired by the laws of physics and chemistry, among which henry gas solubility optimization (HGSO)31 and the arithmetic optimization algorithm (AOA)32 are cited frequently. Human-based algorithms often do not directly engage with nature; instead, they rely on factors such as human emotions, behavior, social interaction, and politics to develop systematic models. Representative algorithms from recent years include social network search (SNS)33, political optimizer (PO)34, and anarchic society optimization (ASO)35.

The similarity between these types of nature-inspired algorithms lies in the fact that the solution is improved iteratively until a termination criterion is met, and that the optimization process is divided into two phases: exploration and exploitation36. Exploration refers to the tendency of an algorithm to behave highly stochastically, so that solutions undergo major changes. Larger variations in the solutions lead to greater exploration of the search space and thus to the discovery of its promising regions. Exploitation, in contrast, produces smaller changes, so that solutions tend to search locally. The global optimum of a specific optimization problem can be found by striking the proper balance between exploration and exploitation.

Among the huge number of swarm intelligence algorithms, the grey wolf optimizer (GWO), proposed by Seyedali Mirjalili et al. in 2014, was inspired by the tracking, encircling, and hunting behaviors of grey wolf packs. GWO requires few setup parameters, is easy to understand and implement, and offers fast convergence and high solution accuracy. Moreover, GWO has been applied to engineering optimization37,38, economic load dispatch39,40,41,42, path planning43,44,45,46,47 and other fields. The no free lunch (NFL) theorem48 logically proves that no single optimization technique can solve all optimization problems. In other words, the average performance of all algorithms is equal when all optimization problems are considered. This theorem has, to some extent, contributed to the rapid growth in the number of algorithms proposed in the last decade. At the same time, numerous schemes have been proposed to improve the performance of various algorithms in practical applications49,50,51,52,53,54, which is one of the motivations for this paper.

As an early swarm intelligence algorithm, the grey wolf optimizer shares issues common to many swarm intelligence algorithms, including limited global search ability and difficulty escaping from local optima. To address these issues, Mohammad et al.37 proposed one of the best improvements, called I-GWO. Its dimensional learning-based hunting (DLH) strategy enables each wolf to construct a neighborhood in which neighboring information can be shared among wolves. Mahdis et al.38 introduced a search strategy called representative-based hunting (RH), which combines three efficient trial vectors inspired by the behavior of head wolves to improve population exploration and diversity. Mohammad et al.55 proposed a gaze cues learning-based grey wolf optimizer that benefits from two new search strategies, neighbor gaze cues learning (NGCL) and random gaze cues learning (RGCL), inspired by the gaze cues behavior of wolves. NGCL enhances exploitation and the ability to avoid local optima, and RGCL improves the population diversity as well as the balance between exploration and exploitation. Wang et al.56 combined the principle of survival of the fittest (SOF) with differential evolution (DE) to iteratively eliminate the wolves with the lowest fitness values, while an equal number of new wolves are generated randomly. Ahmed et al.57 fused memory, evolutionary operators and stochastic local search techniques into the grey wolf optimizer, and further integrated linear population size reduction (LPSR) to achieve impressive performance on numerous benchmark functions and engineering optimization problems. Akbari et al.58 disregarded the social hierarchy in the GWO and randomly chose three agents to guide the population renewal mechanism. By incorporating a greedy algorithm, grey wolves will relocate only if the new location is superior to the current one. Shahrzad et al.59 added evolutionary population dynamics (EPD) to GWO, similar to the principle of survival of the fittest, where the main operation is to eliminate the worst search agent after each iteration and randomly regenerate it around the α, β and δ wolves, which helps to fully utilize the information carried by each search agent. Jagdish et al.60 used exploratory equations and opposition-based learning (OBL) to refine GWO, and validated it with statistical, diversity, and convergence analyses on 23 classical benchmark test problems. Sharma et al.61 combined the exploitation and exploration capabilities of the cuckoo search and grey wolf optimizer respectively, achieving a balanced algorithm that performed effectively in high-dimensional problems. Singh et al.62 improved the utilization of particle swarm optimization by incorporating the exploration capabilities of the grey wolf optimizer, which generated a variant whose solution quality and stability improved considerably over the original two. Chi et al.63 transferred the exploratory capabilities of the aquila optimizer (AO) to the grey wolf optimizer, choosing with random probability whether or not to give the wolf flight capability, in order to better balance exploration and exploitation during iterations.

The main contributions of this paper are as follows.

(1) Latin hypercube sampling is used to initialize the population so that it covers the whole solution space as evenly as possible, which improves the global search performance of the optimization algorithm.

(2) The original linear reduction function in the grey wolf optimizer is replaced by a nonlinear function with local perturbations that mimics the actual hunting style, so that the exploration and exploitation capabilities of the algorithm are balanced.

(3) To mitigate the overreliance on the α wolf and avoid getting stuck in a local optimum, the position updates of grey wolves are modified to consider the average position of all individuals rather than solely moving towards the three wolves with the lowest fitness values. Moreover, random selection using Lévy flights is incorporated to enhance the exploration range.

(4) The grey wolf optimizer is hybridized with the exploitation phase of the harris hawks optimization. Multiple active time-varying position updating strategies in the HHO algorithm give grey wolves harris hawks-like flight capabilities and a wide field of view. Combined with the greedy strategy, a more appropriate position is selected for the final update to further accelerate the convergence of the population.

(5) The proposed algorithm's performance is assessed on 23 classical test functions and CEC2020, and compared with other algorithms and improvement schemes of the grey wolf optimizer.

(6) Four engineering design problems are used to evaluate the effectiveness of the proposed algorithm in solving real-world problems.

The subsequent sections of this paper are organized as follows: "Grey wolf optimizer" section provides a concise overview of the grey wolf optimizer. "Proposed HGWO" section introduces the HGWO algorithm. In "Experimental results and discussion" section, we conduct the relevant experiments and analyses. Additionally, "Real application of HGWO in engineering" section presents four classical engineering problems. Lastly, "Conclusions and future work" section concludes the entire paper by offering a summary and outlook.

Grey wolf optimizer

GWO simulates the social behavior of four ranks of grey wolves, namely \(\alpha ,\beta ,\delta\) and \(\omega\) wolves. The individual behaviors of these four types of wolves are influenced by the social hierarchy, with α wolves holding the highest status, and the wolves' collective collaborative and communicative behaviors drive the optimization process. GWO searches for the best solution by treating every agent's position as a candidate solution to the optimization problem. The \(\alpha ,\beta\) and \(\delta\) wolves are regarded as the best, second-best, and third-best solutions respectively, and the remaining wolves (\(\omega\) wolves) are guided by them towards the promising region. A detailed explanation of GWO can be found in the Online Appendix.
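As a reminder of how the three leading wolves guide the rest of the pack, the following minimal sketch implements the position update of the standard GWO as it is commonly stated (coefficients \(A = 2a r_{1} - a\) and \(C = 2 r_{2}\), followed by averaging the three guided positions). The function name `gwo_update` and the list-based representation are illustrative choices, not taken from this paper or its Online Appendix.

```python
import random

def gwo_update(x, leaders, a, rng=None):
    """One standard GWO position update of wolf x guided by [x_alpha, x_beta, x_delta]."""
    rng = rng or random.Random()
    new_x = []
    for j in range(len(x)):
        guided = []
        for leader in leaders:
            A = 2 * a * rng.random() - a   # |A| > 1 favours exploration, |A| < 1 exploitation
            C = 2 * rng.random()           # random weight on the leader's position
            D = abs(C * leader[j] - x[j])  # distance to the (weighted) leader
            guided.append(leader[j] - A * D)
        new_x.append(sum(guided) / len(guided))  # average of the three guided positions
    return new_x

# Example: a wolf at (5, 5) guided by three leaders, with convergence factor a = 1.
print(gwo_update([5.0, 5.0], [[1.0, 1.0], [2.0, 2.0], [3.0, 3.0]], a=1.0, rng=random.Random(0)))
```

With \(a = 0\) the coefficient \(A\) vanishes and the wolf lands exactly on the mean of the three leaders, which illustrates why the late iterations of GWO are purely exploitative.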

Proposed HGWO

Latin hypercube sampling to initialize populations

Latin hypercube sampling (LHS) is a method of approximate random sampling from a multivariate parameter distribution proposed by McKay et al.64 in 1979. When the sample size is small, simple random sampling does not spread the samples well over the entire interval. In contrast, Latin hypercube sampling stratifies the domain uniformly and can obtain sample values in the tails with fewer samples. The convergence speed and accuracy of the algorithm are affected to some extent by the uniformity of the initial population distribution, and a randomly generated initial population cannot guarantee a reasonable distribution or sufficient population diversity in the search space. Because Latin hypercube sampling combines uniform stratification with equal-probability sampling, it generates variables that cover the entire distribution space. Therefore, Latin hypercube sampling is used during algorithm initialization to cover the entire search space as much as possible, further increasing the diversity of the initial population and improving the search performance65,66,67. The steps of Latin hypercube sampling are as follows.

(1) Suppose \(N\) samples are drawn from a hypercube of dimension \(D\), where \(x_{i}^{j} \in [lb,ub]\) lies in the definition domain, \(i = 1,2,...,N\) indexes the sample individual, and \(j = 1,2,...,D\) indexes the dimension of the sample individual.

(2) Partition each dimension of the hypercube into \(N\) subintervals in the corresponding definition domain, i.e., \(lb^{j} = x_{1}^{j} \le x_{2}^{j} \le ... \le x_{N}^{j} = ub^{j}\), which finally yields \(N^{D}\) small hypercubes.

(3) Generate a matrix \(A_{N \times D}\) with \(N\) rows and \(D\) columns, in which each column is a random permutation of \(1,2,...,N\).

(4) Each row of the matrix \(A_{N \times D}\) corresponds to a small hypercube; a sample is then randomly selected from each of these small hypercubes to obtain \(N\) samples.
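The four steps above can be sketched in a few lines of Python. This is a minimal illustration under the assumption of a common scalar bound \([lb, ub]\) for every dimension; the function name `latin_hypercube` and its parameters are illustrative and are not taken from the paper's MATLAB implementation.

```python
import random

def latin_hypercube(n_samples, dim, lb, ub, rng=None):
    """Draw n_samples points in [lb, ub]^dim with exactly one point per stratum in every dimension."""
    rng = rng or random.Random()
    width = (ub - lb) / n_samples  # step (2): width of each of the N subintervals
    # Step (3): one random permutation of the N strata per dimension (the columns of A).
    columns = []
    for _ in range(dim):
        strata = list(range(n_samples))
        rng.shuffle(strata)
        columns.append(strata)
    # Step (4): row i selects stratum columns[j][i] in dimension j and samples uniformly inside it.
    return [
        [lb + (columns[j][i] + rng.random()) * width for j in range(dim)]
        for i in range(n_samples)
    ]

# Example: 30 wolves in a 5-dimensional search space bounded by [-100, 100].
population = latin_hypercube(n_samples=30, dim=5, lb=-100.0, ub=100.0, rng=random.Random(0))
print(len(population), len(population[0]))
```

Because every column is a permutation, each of the \(N\) subintervals of every dimension contains exactly one sample, which is the stratification property that plain uniform sampling lacks.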

To demonstrate the effectiveness of the Latin hypercube sampling initialization strategy visually, the grey wolf population was initialized with both random initialization and Latin hypercube sampling initialization, and the resulting population distribution maps are shown in Fig. 2.

In Fig. 2, when employing a stochastic approach for population initialization, the majority of individuals in the population are concentrated in the fringe region, resulting in reduced population diversity in the central region. Conversely, employing Latin hypercube sampling for population initialization yields a more uniform distribution of grey wolf individuals across the entire search space, thus showcasing the intuitive efficacy of the Latin hypercube sampling initialization strategy. Additionally, the experimental section of this article provides quantitative evidence supporting the effectiveness of the Latin hypercube sampling initialization strategy.

Nonlinear convergence factors with local perturbations

In the original grey wolf optimizer, the convergence factor decreases linearly from 2 to 0. Because this factor directly governs the algorithm's optimization ability, the linear schedule leads to an imbalance between the search ability in the early and late iterations. Meanwhile, considering that the hunting behavior of grey wolves is a complex nonlinear process, this paper constructs a new nonlinear decreasing convergence factor based on the characteristics of the sigmoid function. The convergence factor is also perturbed locally by a random number \(gamrnd\) drawn from the gamma distribution, which improves the global search ability of the algorithm in later iterations and reduces the possibility of falling into a local optimum. The mathematical expression of the improved convergence factor is as follows.

where \(e\) is the base of the natural logarithm and \(\varepsilon\) is the perturbation adjustment coefficient; after many tests, \(\varepsilon = 0.1\) was found to give the best effect. Fig. 3 shows the two convergence factors over 500 iterations. It can be seen clearly that, in the early stage, the improved convergence factor is larger and decays more slowly, which gives the wolves strong global search ability to explore widely between the lower and upper bounds. In the middle stage, the value declines faster, which helps the wolves move quickly towards the prey position and accelerates the convergence of the population. In the late stage, the value of \(a\) is smaller and decreases relatively slowly. At this time, the local search ability of the wolf pack is strengthened, and a fine search is carried out in a small area close to the prey to reduce the omission of solutions. As a consequence, the nonlinear convergence factor proposed in this paper balances global and local search ability more effectively than that of the original grey wolf optimizer.
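To make the qualitative behavior described above concrete, the sketch below compares the linear factor of the original GWO with a sigmoid-shaped factor carrying a small gamma-distributed perturbation. The linear schedule is the standard one; the sigmoid form, the `steepness` parameter and the gamma shape and scale of 1 are purely hypothetical stand-ins chosen for illustration and should not be read as the expression used in this paper.

```python
import math
import random

def linear_factor(t, t_max):
    """Original GWO convergence factor: decreases linearly from 2 to 0."""
    return 2.0 * (1.0 - t / t_max)

def sigmoid_factor(t, t_max, eps=0.1, steepness=10.0, rng=None):
    """Hypothetical sigmoid-shaped factor with a gamma perturbation (NOT the paper's formula)."""
    rng = rng or random.Random()
    # Slow decay early, fast decay around the midpoint, slow decay late.
    base = 2.0 / (1.0 + math.exp(steepness * (t / t_max - 0.5)))
    # Local perturbation scaled by eps; the gamma shape and scale of 1 are assumptions.
    perturbed = base + eps * rng.gammavariate(1.0, 1.0)
    return min(2.0, perturbed)  # keep the factor inside the usual [0, 2] range

for t in (0, 100, 250, 400, 500):
    print(t, round(linear_factor(t, 500), 3), round(sigmoid_factor(t, 500, rng=random.Random(t)), 3))
```

With `eps=0` the sketch reproduces the three regimes discussed in the text: above the linear factor early on, a steep drop in the middle, and a small, slowly changing value at the end.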

Expanded exploration

In the grey wolf optimizer, wolves move entirely towards the positions of the α, β and δ wolves and have limited ability to cope when caught in a local optimum. Accordingly, a position update formula for extended exploration is proposed.

where \(X_{M} (t)\) denotes the mean position vector of all grey wolves at iteration \(t\), and \(r\) is a random vector in the interval [0,1]. The position update formula proposed in this paper modifies the original formula by diminishing the absolute influence of the α, β, and δ wolves on individuals while introducing mutual attraction among individuals. It replaces the strategy of moving solely towards the three wolves with the lowest fitness values. Furthermore, it reduces the random influence of the α wolf in position updating. As a result, the grey wolf population avoids excessive dependence on the leaders and being trapped in local optima, particularly when the fitness value of the α wolf is notably lower than that of the β and δ wolves.

Lévy flight is a random walk that simulates a random flight process and has been widely validated for improving optimization algorithms68,69,70,71. By traversing the search space in a randomized and meandering manner, it enables efficient global search and effectively approaches the optimal solution. To mitigate the limitations of the grey wolf optimizer in escaping local optima and to increase the probability of discovering the optimal solution, this paper introduces a novel position update formula that performs extended exploration in tandem with the Lévy flight.

where \(\otimes\) denotes element-wise multiplication; \(k\) is the step control parameter; \(\lambda\) takes the constant value 0.01; \(u\) and \(v\) follow normal distributions, \(u \sim N(0,\sigma_{u}^{2} )\), \(v \sim N(0,\sigma_{v}^{2} )\); \(\beta\) usually takes a value in [0,2]; in this paper, \(\beta = 2R\), where \(R\) is a random number in [0,2].
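A Lévy-distributed step built from the two normal variables \(u\) and \(v\) is commonly generated with Mantegna's algorithm, which the sketch below follows. It assumes \(\sigma_{v} = 1\) and a fixed \(\beta = 1.5\), a frequent choice in the literature rather than the random \(\beta\) described here, and the function names are illustrative.

```python
import math
import random

def mantegna_sigma(beta):
    """Standard deviation sigma_u of u in Mantegna's algorithm (sigma_v is taken as 1)."""
    numerator = math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
    denominator = math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2)
    return (numerator / denominator) ** (1 / beta)

def levy_step(dim, beta=1.5, rng=None):
    """Return one Levy-distributed step per dimension: u / |v|^(1/beta)."""
    rng = rng or random.Random()
    sigma_u = mantegna_sigma(beta)
    steps = []
    for _ in range(dim):
        u = rng.gauss(0.0, sigma_u)
        v = rng.gauss(0.0, 1.0)
        steps.append(u / abs(v) ** (1 / beta))
    return steps

# Scale the raw steps by the constant 0.01 mentioned in the text.
scaled = [0.01 * s for s in levy_step(dim=5, rng=random.Random(0))]
print(scaled)
```

Most steps are small, but the heavy tail occasionally produces a very long jump, which is what lets a wolf leave the basin of a local optimum.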

The selection of the two aforementioned position update formulas is based on a randomly sampled number \(c\) from the interval [0, 1]. During the early search phase, when the second position update formula is selected with a certain probability, the large step size allows for an expansion of the search scope and promotes exploration and discovery. This is beneficial for enhancing the diversity of the population and significantly reduces the risk of the grey wolf algorithm getting trapped in local optima. In the later search phase, when the global optimal solution range has been largely determined, the first position update formula continues to attempt to escape from local optima and improve the solution quality.

Location update based on HHO

The position update of the grey wolf optimizer is guided by the three fittest wolves, although they are unaware of the exact location of the prey. Consequently, this search method is susceptible to converging to local optima. The harris hawks optimization (HHO)17 is a population-based, nature-inspired optimization technique that draws inspiration from the cooperative behavior of harris hawks in nature, specifically their surprise attacks during the chase. HHO incorporates multiple time-varying position update strategies that contribute to its comparatively fast convergence speed. The hybridization of GWO and HHO can improve GWO's convergence rate and enhance search accuracy. When the exploitation strategies of HHO are combined with GWO, they form a more complex and effective cooperation mechanism. By leveraging HHO's methods for information exchange, local search, and global search, wolves in the GWO can cooperate and coordinate more effectively, thus accelerating the search process and increasing search diversity.

Harris hawks can adjust their behavior based on the prey's escape energy level. During the prey's escape process, its energy \(E\) will significantly diminish.

Given the differences in escape energy between prey, \(E_{0}\) (the initial value of the escape energy) is made to vary randomly within [− 1, 1] during the iterations of the algorithm. When \(\left| E \right| \ge 1\), HHO's exploitation strategy is not performed in HGWO; instead, the exploration area is expanded as much as possible to reduce solution omissions. When \(\left| E \right| < 1\), the position of the prey is replaced by that of the α wolf, and the harris hawks begin to raid their prey. However, the prey often escapes one step ahead of the harris hawks. Thus, based on the prey's escape behavior and their own pursuit strategy, the harris hawks have evolved four attack strategies. Suppose \(\eta\) represents the escape probability of the prey, which is a random number in [0,1], with a successful escape when \(\eta < 0.5\) and a failed escape otherwise.
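For orientation, the original HHO models the escape energy as \(E = 2E_{0}(1 - t/T)\), with \(E_{0}\) redrawn uniformly from [− 1, 1] and \(T\) the maximum number of iterations. The sketch below assumes that HGWO keeps this form unchanged; the function name is illustrative.

```python
import random

def escape_energy(t, t_max, rng=None):
    """Escape energy of the prey in the original HHO: E = 2 * E0 * (1 - t / T)."""
    rng = rng or random.Random()
    e0 = rng.uniform(-1.0, 1.0)  # initial energy E0, redrawn at every call
    return 2.0 * e0 * (1.0 - t / t_max)

# |E| can reach 2 early on, but is bounded by 1 from the halfway point onwards.
for t in (0, 125, 250, 375, 500):
    print(t, round(escape_energy(t, 500, random.Random(t)), 3))
```

Under this form, \(\left| E \right| \ge 1\) can only occur in the first half of the run, so the HHO-style exploitation is always active from iteration \(T/2\) onwards.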

Soft besiege

When \(\eta \ge 0.5\) and \(\left| E \right| \ge 0.5\), the quarry retains ample vigor to attempt to elude the pursuit through evasive hops. In this case, the current position update can be done using Eq. (11).

where \(R\) is a random number within the range [0,1] and determines the magnitude of the random leaps of the prey during the process of escaping.

Hard besiege

When \(\eta \ge 0.5\) and \(\left| E \right| < 0.5\), the prey is exhausted and has no choice but to stay on the ground. At this point, the harris hawks close in on it directly and finally perform the surprise attack.

Soft besiege with progressive rapid dives

When \(\eta < 0.5\) and \(\left| E \right| \ge 0.5\), the prey still has sufficient escape energy and therefore a chance to escape. In this situation, harris hawks adopt a careful strategy of gradually gaining momentum through rapid dives before launching an attack.

After this position update, if the fitness value does not improve, another strategy would be executed, where \(s = rand(1,D)\).

In conclusion, the siege method can be outlined as follows.

Hard besiege with progressive rapid dives

When \(\eta < 0.5\) and \(\left| E \right| < 0.5\), the prey does not have enough energy to escape, so harris hawks conduct an aggressive siege involving successive rapid dives in order to close the gap between themselves and the prey.

Similarly, this type of siege can be summarized as shown in Eq. (19).
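Independently of the individual equations, the case analysis over \(\eta\) and \(\left| E \right| \) described in this section can be gathered into a small dispatcher. The sketch below also includes the soft and hard besiege updates as they appear in the original HHO, with the α wolf taking the role of the prey; the jump strength \(J = 2(1 - R)\) is the original HHO choice and is assumed here, and all function names are illustrative.

```python
import random

def select_strategy(eta, energy):
    """Map the escape probability eta and the escape energy E to the strategy named in the text."""
    if abs(energy) >= 1.0:
        return "exploration"  # HHO exploitation is skipped
    if eta >= 0.5:
        return "soft besiege" if abs(energy) >= 0.5 else "hard besiege"
    if abs(energy) >= 0.5:
        return "soft besiege with progressive rapid dives"
    return "hard besiege with progressive rapid dives"

def hard_besiege(x, x_alpha, energy):
    """Original HHO hard besiege: X(t+1) = X_alpha - E * |X_alpha - X(t)|."""
    return [xa - energy * abs(xa - xi) for xi, xa in zip(x, x_alpha)]

def soft_besiege(x, x_alpha, energy, rng=None):
    """Original HHO soft besiege: X(t+1) = (X_alpha - X) - E * |J * X_alpha - X|, J = 2 * (1 - R)."""
    rng = rng or random.Random()
    jump = 2.0 * (1.0 - rng.random())  # random jump strength of the prey
    return [(xa - xi) - energy * abs(jump * xa - xi) for xi, xa in zip(x, x_alpha)]

print(select_strategy(eta=0.7, energy=0.8))
print(hard_besiege([1.0, 2.0], [3.0, 3.0], energy=0.5))
```

The two strategies with progressive rapid dives additionally try a Lévy-flight-based move when the first dive does not improve the fitness; they are omitted here to keep the sketch short.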

The various position updating formulas described in the "Expanded exploration" and "Location update based on HHO" sections are combined with a greedy strategy to further accelerate the convergence of the algorithm. The final position updating formula is shown below.
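The greedy idea itself is simple: among the candidate positions produced by the different update formulas, keep the best one only if it improves on the wolf's current position. The following minimal sketch assumes a minimization problem; `greedy_update` and `sphere` are illustrative names.

```python
def sphere(x):
    """Simple test objective: sum of squares, minimum 0 at the origin."""
    return sum(v * v for v in x)

def greedy_update(current, candidates, fitness):
    """Return the best candidate if it beats the current position, otherwise stay put."""
    best = min(candidates, key=fitness)
    return best if fitness(best) < fitness(current) else current

current = [1.0, 1.0]
candidates = [[2.0, 2.0], [0.5, 0.5]]
print(greedy_update(current, candidates, sphere))
```

Because a wolf never accepts a worse position, its fitness is non-increasing over the iterations, at the cost of one extra fitness evaluation per candidate.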

Pseudocode of HGWO

The whole algorithm performs the above processes iteratively until the preset maximum number of iterations is reached. The flowchart of HGWO is shown in Fig. 4, and the pseudocode is given in Algorithm 1.

Experimental results and discussion

Experimental setup

In the field of stochastic optimization, it is common to quantitatively evaluate the performance of different algorithms using a set of mathematical test functions that have known optimal values. However, it is important to vary the characteristics of the test functions in order to draw reliable conclusions. In this study, we assess the performance of HGWO on 23 classical benchmark test functions commonly used in the literature to measure the exploration and exploitation capabilities of newly proposed or improved algorithms72,73,74. Detailed information about these test functions, including their formulation, dimensionality (Dim), search space restriction (Range), and optimal reported value (Fmin), can be found in the Online Appendix. Furthermore, this study validates the performance of HGWO using the latest and challenging numerical optimization competition test suite: CEC202075,76,77. This suite consists of 10 test functions that cover a range of characteristics, including unimodal, multimodal, hybrid, and composition functions, and are specifically designed to challenge both previously proposed and newly proposed metaheuristic algorithms. The characteristics of these test suites are described in the Online Appendix.

To ensure the fairness and reasonableness of the simulation experiments, all algorithms in this paper have a population size of 30 and a maximum number of iterations set at 500. The simulations are conducted on a computer system consisting of an Intel Core i5-6300HQ CPU with a clock speed of 3.20 GHz, 12.0 GB of RAM, and Windows 10 Professional. The simulation software used is MATLAB R2022a. Each test function is solved 20 times to generate statistical results. The algorithms are then compared using various performance metrics, including the mean and standard deviation of the best solution obtained in the final iteration. Smaller values of these metrics indicate better algorithmic performance in terms of avoiding local solutions and identifying the global optimum. Qualitative results, such as convergence curves, grey wolf trajectories, search history, and average fitness of the population, are described and analyzed subsequently.
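The reporting protocol described above (independent runs, then the best value, mean and standard deviation of the final solutions) can be sketched as follows. A plain random search stands in for the optimizer so that the example stays self-contained; it is not HGWO, and the use of the sample standard deviation is an assumption about how the statistics were computed.

```python
import random
import statistics

def sphere(x):
    """Unimodal test objective with optimum 0 at the origin."""
    return sum(v * v for v in x)

def random_search(objective, dim, lb, ub, pop_size, max_iter, rng):
    """Stand-in optimizer (NOT HGWO): best of pop_size * max_iter uniform samples."""
    best = float("inf")
    for _ in range(max_iter):
        for _ in range(pop_size):
            candidate = [rng.uniform(lb, ub) for _ in range(dim)]
            best = min(best, objective(candidate))
    return best

def summarize(objective, runs=20, pop_size=30, max_iter=500, dim=5, lb=-100.0, ub=100.0):
    """Run the optimizer `runs` times with different seeds; return (best, mean, std) of the finals."""
    finals = [
        random_search(objective, dim, lb, ub, pop_size, max_iter, random.Random(seed))
        for seed in range(runs)
    ]
    return min(finals), statistics.mean(finals), statistics.stdev(finals)

best, mean, std = summarize(sphere, max_iter=50)  # fewer iterations to keep the demo fast
print(best, mean, std)
```

Fixing one seed per run makes the statistics reproducible while keeping the runs independent of each other.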

Compare results using 23 classic test functions

Comparison with standard optimization algorithms

The performance of several optimization algorithms is compared in this paper by solving the 23 benchmark functions mentioned above. The algorithms considered include the standard grey wolf optimizer (GWO)6, whale optimization algorithm (WOA)7, golden jackal optimization (GJO)78, multi-verse optimizer (MVO)79, salp swarm algorithm (SSA)15, seagull optimization algorithm (SOA)80, arithmetic optimization algorithm (AOA)32, and the HGWO proposed in this paper. The parameter configurations for each algorithm were obtained from their respective sources, and the specific settings can be found in Table 1.

The optimal values, mean values, and standard deviations obtained are presented in Tables 2 and 3, with the best values highlighted in bold for easy comparison. The comparison of the results by the number of wins (W), ties (T), and losses (L) for each algorithm is shown at the end of each table. Moreover, the convergence curves of each algorithm on the benchmark functions can be observed in Fig. 5.

Comparison with other hybridization algorithms of GWO

To better assess the effectiveness of HGWO, this study compares it with three other hybrid optimization algorithms: AGWO_CS81, PSOGWO82, and AGWO63. The experimental conditions are consistent with those described in the previous subsection. The results and convergence curves can be found in Tables 2 and 3, and Fig. 6, respectively.

Exploitation analysis

The experimental results of each algorithm on the unimodal benchmark functions are presented in Table 2. Unimodal benchmark functions, which only contain one minimum value, are ideal for testing the exploitation performance of the algorithm83. For F1–F4, the HGWO algorithm can achieve the theoretical optimal solution within approximately 50 generations, with a mean and standard deviation of zero. This indicates that the HGWO algorithm demonstrates both strong solution accuracy and stability. The performance results of each algorithm in solving F1–F7 in different dimensions can be found in Tables 4 and 5. Upon comparing the solution outcomes, it becomes evident that the HGWO algorithm proposed in this paper outperforms most of the comparison algorithms.

Exploration analysis

The benchmark functions F8–F13 are multimodal and exhibit multiple extremes that increase with dimensionality. They serve as effective tools for evaluating an algorithm's exploration ability and its capacity to escape local optima83. Tables 2, 4, and 5 compare each algorithm's performance in solving F8–F13 in various dimensions. Despite the increasing difficulty with higher dimensionality, the HGWO algorithm consistently performs exceptionally well in most of the multi-peaked functions, showing no major fluctuations in stability. The multi-peaked function is known to have numerous local optimal solutions. The favorable experimental results presented in the table provide quantitative evidence of the HGWO algorithm's ability to effectively escape these local optima during the optimization process.

The F14–F23 benchmark functions are fixed-dimension multimodal problems. Table 3 demonstrates that the majority of algorithms are capable of locating the theoretical optimum. However, the HGWO algorithm outperforms most algorithms in terms of mean and standard deviation, indicating strong and stable search performance.

The convergence curves of various algorithms for solving the benchmark functions are depicted in Figs. 5 and 6. It is evident that the HGWO algorithm outperforms the majority of algorithms in terms of convergence speed for both unimodal and multimodal functions. Additionally, the HGWO algorithm possesses the capability to escape local optima by utilizing its variable position update formula, particularly in F1–F4 and F9–F11, where it can achieve the theoretical optimum or a value close to it within approximately 50 iterations.

Statistical analysis

In the previous statistical process, we determined the optimal value, mean, and standard deviation of 20 experimental results for each algorithm. This allowed us to compare the superiority of the algorithms. To further compare the differences between the HGWO and other algorithms, we conducted a statistical analysis using the Wilcoxon rank-sum test with a significance level of 5%, based on the aforementioned simulations. The resulting P-values from the experiments are presented in Table 6.

The Wilcoxon rank-sum test is a non-parametric statistical test of the null hypothesis that two samples come from the same distribution; here it is used to determine whether the results of HGWO differ significantly from those of each comparison algorithm. If the P-value is less than 0.05, the null hypothesis is rejected and the difference between HGWO and the other algorithm is considered statistically significant. Conversely, if the P-value is greater than 0.05, no significant difference between HGWO and the other algorithm can be claimed (indicated in bold in the table). When the P-value is exactly 0.05, the result is noted as "NaN", indicating that a significance judgement could not be made. As an algorithm cannot be compared with itself, the table does not include P-values for HGWO.

The findings presented in Table 6 demonstrate that the P-values of the test conducted between HGWO and the comparison algorithms, for all 23 benchmark functions, are less than 0.05 in over 95% of cases. As a result, the null hypothesis is rejected, indicating a significant difference between the computation outcomes of HGWO and the other 10 algorithms.
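As an illustration of how such P-values arise, the following minimal sketch implements a two-sided rank-sum test with the normal approximation in plain Python. The function name `rank_sum_test` and the synthetic samples are assumptions made for illustration; they are not the authors' code or data, and statistical packages may apply additional corrections (for ties or continuity) that are omitted here.

```python
import math
import random


def rank_sum_test(x, y):
    """Two-sided Wilcoxon rank-sum test using the normal approximation.

    Returns (z, p). Tied values receive average ranks; no tie correction
    of the variance and no continuity correction are applied.
    """
    # Pool both samples, remembering which group each value came from.
    pooled = sorted([(v, 0) for v in x] + [(v, 1) for v in y])
    n = len(pooled)
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        avg = (i + j) / 2 + 1  # average of the 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[k] = avg
        i = j + 1
    n1, n2 = len(x), len(y)
    # Rank sum of the first sample and its null mean and standard deviation.
    w = sum(r for r, (_, g) in zip(ranks, pooled) if g == 0)
    mean = n1 * (n1 + n2 + 1) / 2
    sd = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (w - mean) / sd
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail probability
    return z, p


# Synthetic final fitness values from 20 runs of two hypothetical algorithms.
rng = random.Random(1)
runs_a = [rng.gauss(0.0, 1.0) for _ in range(20)]
runs_b = [rng.gauss(5.0, 1.0) for _ in range(20)]
z, p = rank_sum_test(runs_a, runs_b)
print(f"z = {z:.3f}, p = {p:.3g}, significant at 5%: {p < 0.05}")
```

A P-value below 0.05 from such a comparison only indicates that the two sets of runs differ; which algorithm is better still has to be read from the mean and standard deviation.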

Comparison of results using CEC2020

To further investigate the quality of the HGWO and assess its ability for exploration, exploitation, and avoidance of local optima, we employed the CEC2020 suite, which is renowned for its challenging benchmarks. This suite encompasses four types of functions: unimodal function (F24), basic functions (F25–F27), hybrid functions (F28–F30), and composition functions (F31–F33). We evaluated the performance of HGWO using these benchmarks and compared it with well-known algorithms such as GWO, WOA, SSA, and AGWO. Each algorithm underwent 20 independent runs with 500 iterations and 30 search agents.

As presented in Table 7, our findings indicate that HGWO outperforms other algorithms in 5 test functions and demonstrates comparable performance to excellent heuristic algorithms in 5 test functions. Consequently, we conclude that HGWO can be classified as a superior optimizer.

Impact analysis of the modifications

Experimental and statistical results have thus far demonstrated that the hybrid grey wolf optimizer (HGWO) surpasses the other comparison algorithms. In this subsection, we analyze the impact of several factors on HGWO's performance, including the Latin hypercube sampling (LHS) method used for population initialization, the convergence factor with local perturbations, and the enhancement of the position update formula. To assess these factors, we integrate each improvement strategy into the GWO algorithm separately, denoting the resulting variants as GWO1, GWO2, and GWO3, respectively. The results of solving the CEC2020 test functions with each individual improvement strategy are presented in Table 8. The fundamental parameter settings remain unchanged from the previous section.

In the CEC2020 test functions, performance improves when each individual improvement strategy is added to GWO, giving GWO1, GWO2, and GWO3. The LHS method in GWO1 yields only a limited improvement, as it enhances the uniformity of the population distribution at the start of the iteration and does not contribute to subsequent iterations. GWO2 improves performance on nine functions; the exception is F9, where neither its optimal solution nor its mean value is better. Overall, the results in Table 8 are satisfactory, particularly for GWO3, whose improved position update formulas effectively enhance the algorithm's convergence accuracy and speed.
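To make the role of LHS in initialization concrete, the following is a minimal sketch of Latin hypercube sampling for an initial population, written in plain Python. The function name `latin_hypercube`, its parameters, and the example bounds are assumptions for illustration and are not taken from the authors' implementation.

```python
import random


def latin_hypercube(n_agents, lower, upper, rng=random):
    """Draw n_agents points so that, in every dimension, each of the
    n_agents equal-width intervals contains exactly one point."""
    dim = len(lower)
    population = [[0.0] * dim for _ in range(n_agents)]
    for d in range(dim):
        # Assign one interval (stratum) per agent in random order.
        strata = list(range(n_agents))
        rng.shuffle(strata)
        width = (upper[d] - lower[d]) / n_agents
        for i, s in enumerate(strata):
            # Sample uniformly inside the assigned interval.
            population[i][d] = lower[d] + (s + rng.random()) * width
    return population


# Example: 30 search agents in a 5-dimensional box [-100, 100]^5.
wolves = latin_hypercube(30, [-100.0] * 5, [100.0] * 5, random.Random(42))
print(len(wolves), len(wolves[0]))
```

Because the stratification is applied only once, when the population is created, it affects the starting distribution and has no direct influence on later iterations, which is consistent with the limited effect reported for GWO1.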

Ethical approval

Written informed consent for publication of this paper was obtained from the Shenyang University and all authors.

Real application of HGWO in engineering

To assess the applicability of HGWO in real-world optimization problems, this section employs HGWO to tackle the design of welded beams, pressure vessels, three-bar trusses, and speed reducers. These engineering optimization problems exhibit varying levels of solution difficulty and complex constraints. By comparing the solutions obtained by HGWO with those of the 10 algorithms discussed in the preceding sections, the effectiveness and applicability of HGWO in solving engineering design optimization problems can be properly evaluated. The mathematical description of these engineering optimization problems is provided in the Online Appendix.

Constrained optimization problems

Constrained optimization problems are a common class of mathematical programming problems in engineering applications. They are of practical importance because they are difficult to solve and arise in a wide range of fields. The constrained optimization problem can be represented by a unified mathematical equation.

where \(X\) is the search space of the constrained optimization problem, \(g_{i} ({\varvec{x}})\) represents the inequality constraint, and \(h_{j} ({\varvec{x}})\) represents the equation constraint.
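The equation itself is not reproduced in this text. A standard form that is consistent with the symbols defined above is sketched below; the objective symbol \(f\) and the constraint counts \(m\) and \(n\) are assumptions and may differ from the authors' notation.

```latex
\begin{aligned}
\min_{\boldsymbol{x} \in X} \quad & f(\boldsymbol{x}) \\
\text{s.t.} \quad & g_{i}(\boldsymbol{x}) \le 0, \quad i = 1, \dots, m, \\
& h_{j}(\boldsymbol{x}) = 0, \quad j = 1, \dots, n
\end{aligned}
```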

This paper examines the challenge of solving constrained optimization problems by introducing the FAD (feasibility and dominance) criterion. The FAD criterion acts as a mechanism for handling constraints, allowing for the effective resolution of the complexities associated with them84. When assessing the performance of search agents, the constraint violation value (CV) is added exclusively to the original fitness function value. The formula for an infeasible solution is presented below.

In the search agent iteration, the appropriate individual is selected using Eq. (23) to achieve a feasible solution with a CV value of zero. If both individuals are not feasible solutions, the individual with the smaller CV value is chosen.
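The pairwise selection described here can be sketched as follows. This is a minimal illustration of a feasibility-first comparison for minimization; the function names, the summation of violations, and the tolerance `eps` for equality constraints are assumptions and are not taken from the paper's Eq. (23).

```python
def constraint_violation(g_values, h_values=(), eps=1e-6):
    """Total constraint violation (CV) of one candidate.

    g_values: inequality constraint values, satisfied when g <= 0.
    h_values: equality constraint values, satisfied when |h| <= eps
              (eps is an assumed tolerance).
    """
    cv = sum(max(0.0, g) for g in g_values)
    cv += sum(max(0.0, abs(h) - eps) for h in h_values)
    return cv


def is_better(fit_a, cv_a, fit_b, cv_b):
    """Return True if candidate a is preferred over candidate b."""
    if cv_a == 0.0 and cv_b == 0.0:
        return fit_a < fit_b   # both feasible: lower fitness wins
    if cv_a == 0.0 or cv_b == 0.0:
        return cv_a == 0.0     # feasible beats infeasible
    return cv_a < cv_b         # both infeasible: smaller CV wins


# A feasible candidate with worse fitness still beats an infeasible one.
print(is_better(10.0, 0.0, 1.0, 0.5))
# Between two infeasible candidates, the smaller violation is chosen.
print(is_better(100.0, 0.1, 1.0, 0.2))
```

With this rule, fitness values are only compared directly when both candidates are feasible, so no penalty coefficient has to be tuned.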

Pressure vessel design

The structure of the pressure vessel design (PVD) problem is shown in Fig. 7. Its goal is to minimize the total cost while meeting production needs. There are four design variables: shell thickness \(T_{s} (x_{{1}} )\), head thickness \(T_{h} (x_{{2}} )\), inner radius \(R(x_{{3}} )\), and vessel length \(L(x_{{4}} )\), where \(T_{s} (x_{{1}} )\) and \(T_{h} (x_{{2}} )\) are integer multiples of 0.0625, and \(R(x_{{3}} )\) and \(L(x_{{4}} )\) are continuous variables85.
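For orientation, the sketch below evaluates the pressure vessel formulation that is commonly used in the metaheuristics literature. The paper's own formulation is given in its Online Appendix and is not reproduced here, so the coefficients, the constraint set, and the helper names (`pvd_cost`, `pvd_constraints`, `snap_to_multiple`) should be read as assumptions. The thickness step is passed in explicitly rather than hard-coded.

```python
import math


def pvd_cost(x):
    """Total cost (material, forming, welding) of a candidate design."""
    ts, th, r, length = x
    return (0.6224 * ts * r * length
            + 1.7781 * th * r ** 2
            + 3.1661 * ts ** 2 * length
            + 19.84 * ts ** 2 * r)


def pvd_constraints(x):
    """Inequality constraints; each value must be <= 0 to be feasible."""
    ts, th, r, length = x
    return [
        -ts + 0.0193 * r,                       # minimum shell thickness
        -th + 0.00954 * r,                      # minimum head thickness
        -math.pi * r ** 2 * length
        - (4.0 / 3.0) * math.pi * r ** 3
        + 1_296_000.0,                          # minimum enclosed volume
        length - 240.0,                         # maximum vessel length
    ]


def snap_to_multiple(value, step):
    """Round a thickness to the nearest positive integer multiple of step."""
    return max(step, round(value / step) * step)


# A hypothetical candidate, chosen only to illustrate the evaluation.
candidate = (1.0, 0.5, 42.0, 200.0)
print(pvd_cost(candidate))
print(all(g <= 0 for g in pvd_constraints(candidate)))
```

In an optimizer, the two thickness variables would be snapped to an admissible multiple before the cost and constraint values are computed, so that the continuous search operates on a discrete-feasible design.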

Table 9 shows the minimum cost and optimal solution obtained by each algorithm for the pressure vessel design problem. HGOW's counterpart methods are all outperformed: HGWO achieves the lowest total cost.

Welded beam design

The welded beam design (WBD) problem is presented in Fig. 8. The optimization problem aims to identify four design variables that satisfy the constraints on shear stress \(\tau\), bending stress \(\theta\), buckling load \(P_{c}\), end deflection \(\delta\), and the boundary conditions. These variables are the weld thickness \(h(x_{1} )\) and the beam's length \(l(x_{2} )\), height \(t(x_{3} )\), and thickness \(b(x_{4} )\). The objective is to minimize the production cost of the welded beam, and the problem is a standard nonlinear programming problem86.

Table 10 shows that the majority of algorithms obtain good results for the welded beam design problem. However, the HGWO proposed in this paper surpasses all of them in solution precision and attains the lowest fabrication cost.

Three-bar truss design

The three-bar truss design (TTD) problem is illustrated in Fig. 9. Its main aim is to minimize the volume while satisfying the stress, deflection, and buckling constraints on each truss member33. Since the cross-sectional areas of the rods \(A_{1} (x_{1} )\) and \(A_{3} (x_{3} )\) in the three-bar truss are the same, only the cross-sectional areas of two rods, \(A_{1} (x_{1} )\) and \(A_{2} (x_{2} )\), need to be selected as optimization variables.

Based on the results presented in Table 11, HGWO outperforms the other methods in solving the three-bar truss design problem and achieves the lowest objective value.

Speed reducer design

The speed reducer is a crucial element of the gearbox in mechanical systems and is applied in many scenarios; its structure is shown in Fig. 10. The objective of the speed reducer design (SRD) problem is to minimize the weight of the gearbox while satisfying 11 constraints87. The problem has seven variables: the tooth face width \(b(x_{1} )\), the gear module \(m(x_{2} )\), the number of teeth in the pinion \(z(x_{3} )\), the length of the first shaft between bearings \(l_{1} (x_{4} )\), the length of the second shaft between bearings \(l_{2} (x_{5} )\), the diameter of the first shaft \(d_{1} (x_{6} )\), and the diameter of the second shaft \(d_{2} (x_{7} )\).

Table 12 shows the minimum cost and optimal solution obtained by each algorithm for the speed reducer design problem. Compared with GWO, WOA, MVO, SSA, AOA, SOA, GJO, AGWO_CS, PSOGWO, and AGWO, HGWO achieves the lowest fabrication cost.

These findings show that the HGWO algorithm achieves lower production costs than the other algorithms on four engineering optimization problems of varying complexity. This highlights the effectiveness and applicability of the HGWO algorithm in engineering scenarios.

Conclusions and future work

In this paper, a hybrid grey wolf optimizer (HGWO) is proposed, which incorporates the exploitation phase of the harris hawks optimization (HHO) into the grey wolf optimizer (GWO) for solving global optimization problems. Firstly, the LHS method is introduced in the population initialization phase to distribute the search agents more evenly in the search space and improve population diversity. Secondly, a new nonlinear convergence factor is constructed based on the properties of the sigmoid function, and a random number that follows the gamma distribution is added as a perturbation to better balance the exploration and exploitation stages. Thirdly, to prevent the algorithm from falling into local optima during the iterative process, the influence of the alpha wolves is weakened in the position update, and interactions between randomly selected individuals are increased and combined with Levy flights, which greatly enhances the exploratory ability of the algorithm. Finally, incorporating the exploitation phase of HHO, with its information sharing and local search method, helps the "wolves" in the GWO to cooperate better, thus speeding up the search process and increasing the diversity of the search. A greedy selection strategy, which keeps a new position only if it is superior to the current one, is also used to further accelerate convergence. Quantitative comparisons and convergence behavior analyses against a variety of standard optimization algorithms and different improved variants of the grey wolf optimizer on 23 classical benchmark functions and CEC2020 show that the HGWO algorithm achieves a better balance between global and local search and significantly improves solution accuracy, convergence speed, and the ability to escape local optima. In addition, the algorithm has been applied to engineering problems such as pressure vessel design, welded beam design, three-bar truss design, and speed reducer design. The experimental results show that HGWO yields a marked improvement.

In future research, HGWO will be used for other real-world problems, including wireless network coverage, machine learning and logistics distribution. Furthermore, the low-parameter nature of the algorithm and its ability to be easily parallelized for simultaneous processing make it an attractive option for future applications on mobile devices, such as UAV trajectory planning.

Data availability

The datasets generated during and/or analysed during the current study are available from the corresponding author on reasonable request.

References

Talbi, E.-G. Metaheuristics: From Design to Implementation. (John Wiley & Sons, 2009).

Blum, C., Puchinger, J., Raidl, G. R. & Roli, A. Hybrid metaheuristics in combinatorial optimization: A survey. Appl. Soft Comput. 11, 4135–4151 (2011).

Kar, A. K. Bio inspired computing–a review of algorithms and scope of applications. Expert Syst. Appl. 59, 20–32 (2016).

Dorigo, M. Optimization, learning and natural algorithms. Ph. D. Thesis, Politecnico di Milano (1992).

Kennedy, J. & Eberhart, R. Particle swarm optimization. In Proceedings of ICNN’95-International Conference on Neural Networks vol. 4 1942–1948 (IEEE, 1995).

Mirjalili, S., Mirjalili, S. M. & Lewis, A. Grey wolf optimizer. Adv. Eng. Softw. 69, 46–61 (2014).

Mirjalili, S. & Lewis, A. The whale optimization algorithm. Adv. Eng. Softw. 95, 51–67 (2016).

Mirjalili, S. The ant lion optimizer. Adv. Eng. Softw. 83, 80–98 (2015).

Karaboga, D. An Idea Based on Honey Bee Swarm for Numerical Optimization. (2005).

Mirjalili, S. Moth-flame optimization algorithm: A novel nature-inspired heuristic paradigm. Knowl. Based Syst. 89, 228–249 (2015).

Saremi, S., Mirjalili, S. & Lewis, A. Grasshopper optimisation algorithm: Theory and application. Adv. Eng. Softw. 105, 30–47 (2017).

Mirjalili, S. Dragonfly algorithm: A new meta-heuristic optimization technique for solving single-objective, discrete, and multi-objective problems. Neural Comput. Appl. 27, 1053–1073 (2016).

Yang, X. & Hossein Gandomi, A. Bat algorithm: A novel approach for global engineering optimization. Eng. Comput. 29, 464–483 (2012).

Zamani, H., Nadimi-Shahraki, M. H. & Gandomi, A. H. Starling murmuration optimizer: A novel bio-inspired algorithm for global and engineering optimization. Comput. Methods Appl. Mech. Eng. 392, 114616 (2022).

Mirjalili, S. et al. Salp swarm algorithm: A bio-inspired optimizer for engineering design problems. Adv. Eng. Softw. 114, 163–191 (2017).

Abdollahzadeh, B., Gharehchopogh, F. S. & Mirjalili, S. African vultures optimization algorithm: A new nature-inspired metaheuristic algorithm for global optimization problems. Comput. Ind. Eng. 158, 107408 (2021).

Heidari, A. A. et al. Harris hawks optimization: Algorithm and applications. Future Gener. Comput. Syst. 97, 849–872 (2019).

Abualigah, L. et al. Aquila optimizer: A novel meta-heuristic optimization algorithm. Comput. Ind. Eng. 157, 107250 (2021).

Abdel-Basset, M., Mohamed, R., Jameel, M. & Abouhawwash, M. Nutcracker optimizer: A novel nature-inspired metaheuristic algorithm for global optimization and engineering design problems. Knowl. Based Syst. 262, 110248 (2023).

Meng, X.-B., Gao, X. Z., Lu, L., Liu, Y. & Zhang, H. A new bio-inspired optimisation algorithm: Bird swarm algorithm. J. Exp. Theor. Artif. Intell. 28, 673–687 (2016).

Abdollahzadeh, B., Soleimanian Gharehchopogh, F. & Mirjalili, S. Artificial gorilla troops optimizer: A new nature-inspired metaheuristic algorithm for global optimization problems. Int. J. Intell. Syst. 36, 5887–5958 (2021).

Zamani, H., Nadimi-Shahraki, M. H. & Gandomi, A. H. QANA: Quantum-based avian navigation optimizer algorithm. Eng. Appl. Artif. Intell. 104, 104314 (2021).

Akbari, M. A., Zare, M., Azizipanah-Abarghooee, R., Mirjalili, S. & Deriche, M. The cheetah optimizer: A nature-inspired metaheuristic algorithm for large-scale optimization problems. Sci. Rep. 12, 10953 (2022).

Abdollahzadeh, B., Gharehchopogh, F. S., Khodadadi, N. & Mirjalili, S. Mountain Gazelle optimizer: A new nature-inspired metaheuristic algorithm for global optimization problems. Adv. Eng. Softw. 174, 103282 (2022).

Gandomi, A. H. & Alavi, A. H. Krill herd: A new bio-inspired optimization algorithm. Commun. Nonlinear Sci. Numer. Simul. 17, 4831–4845 (2012).

Neshat, M., Sepidnam, G., Sargolzaei, M. & Toosi, A. N. Artificial fish swarm algorithm: a survey of the state-of-the-art, hybridization, combinatorial and indicative applications. Artif. Intell. Rev. 42, 965–997 (2014).

Wang, B., Jin, X. & Cheng, B. Lion pride optimizer: An optimization algorithm inspired by lion pride behavior. Sci. China Inf. Sci. 55, 2369–2389 (2012).

Holland, J. H. Genetic algorithms. Sci. Am. 267, 66–73 (1992).

Storn, R. & Price, K. Differential evolution—A simple and efficient heuristic for global optimization over continuous spaces. J. Glob. Optim. 11, 341–359 (1997).

Beyer, H.-G. & Schwefel, H.-P. Evolution strategies—A comprehensive introduction. Natural Comput. 1, 3–52 (2002).

Hashim, F. A., Houssein, E. H., Mabrouk, M. S., Al-Atabany, W. & Mirjalili, S. Henry gas solubility optimization: A novel physics-based algorithm. Future Gener. Comput. Syst. 101, 646–667 (2019).

Abualigah, L., Diabat, A., Mirjalili, S., Abd Elaziz, M. & Gandomi, A. H. The arithmetic optimization algorithm. Comput. Methods Appl. Mech. Eng. 376, 113609 (2021).

Bayzidi, H., Talatahari, S., Saraee, M. & Lamarche, C.-P. Social network search for solving engineering optimization problems. Comput. Intell. Neurosci. 2021, e8548639 (2021).

Askari, Q., Younas, I. & Saeed, M. Political optimizer: A novel socio-inspired meta-heuristic for global optimization. Knowl. Based Syst. 195, 105709 (2020).

Ahmadi-Javid, A. Anarchic society optimization: A human-inspired method. In 2011 IEEE Congress of Evolutionary Computation (CEC) 2586–2592 (2011). https://doi.org/10.1109/CEC.2011.5949940.

Eiben, A. E. & Schippers, C. A. On evolutionary exploration and exploitation. Fundamenta Informaticae 35, 35–50 (1998).

Nadimi-Shahraki, M. H., Taghian, S. & Mirjalili, S. An improved Grey Wolf optimizer for solving engineering problems. Expert Syst. Appl. 166, 113917 (2021).

Banaie-Dezfouli, M., Nadimi-Shahraki, M. H. & Beheshti, Z. R-GWO: Representative-based grey wolf optimizer for solving engineering problems. Appl. Soft Comput. 106, 107328 (2021).

Kamboj, V. K., Bath, S. K. & Dhillon, J. S. Solution of non-convex economic load dispatch problem using Grey Wolf Optimizer. Neural Comput. Appl. 27, 1301–1316 (2016).

Jayabarathi, T., Raghunathan, T., Adarsh, B. R. & Suganthan, P. N. Economic dispatch using hybrid grey wolf optimizer. Energy 111, 630–641 (2016).

Pradhan, M., Roy, P. K. & Pal, T. Grey wolf optimization applied to economic load dispatch problems. Int. J. Electr. Power Energy Syst. 83, 325–334 (2016).

Singh, D. & Dhillon, J. S. Ameliorated grey wolf optimization for economic load dispatch problem. Energy 169, 398–419 (2019).

Dewangan, R. K., Shukla, A. & Godfrey, W. W. Three dimensional path planning using Grey wolf optimizer for UAVs. Appl. Intell. 49, 2201–2217 (2019).

Qu, C., Gai, W., Zhong, M. & Zhang, J. A novel reinforcement learning based grey wolf optimizer algorithm for unmanned aerial vehicles (UAVs) path planning. Appl. Soft Comput. 89, 106099 (2020).

Zhang, S., Zhou, Y., Li, Z. & Pan, W. Grey wolf optimizer for unmanned combat aerial vehicle path planning. Adv. Eng. Softw. 99, 121–136 (2016).

Tsai, P.-W., Nguyen, T.-T. & Dao, T.-K. Robot path planning optimization based on multiobjective grey wolf optimizer. 166–173 (Springer, 2017).

Hou, Y., Gao, H., Wang, Z. & Du, C. Improved grey wolf optimization algorithm and application. Sensors 22, 3810 (2022).

Wolpert, D. H. & Macready, W. G. No free lunch theorems for optimization. IEEE Trans. Evol. Comput. 1, 67–82 (1997).

Nadimi-Shahraki, M. H., Taghian, S., Mirjalili, S. & Faris, H. MTDE: An effective multi-trial vector-based differential evolution algorithm and its applications for engineering design problems. Appl. Soft Comput. 97, 106761 (2020).

Zamani, H., Nadimi-Shahraki, M. H. & Gandomi, A. H. CCSA: Conscious neighborhood-based crow search algorithm for solving global optimization problems. Appl. Soft Comput. 85, 105583 (2019).

Nadimi-Shahraki, M. H., Zamani, H. & Mirjalili, S. Enhanced whale optimization algorithm for medical feature selection: A COVID-19 case study. Comput. Biol. Med. 148, 105858 (2022).

Nadimi-Shahraki, M. H., Banaie-Dezfouli, M., Zamani, H., Taghian, S. & Mirjalili, S. B-MFO: A binary moth-flame optimization for feature selection from medical datasets. Computers 10, 136 (2021).

Nadimi-Shahraki, M. H. et al. Migration-based moth-flame optimization algorithm. Processes 9, 2276 (2021).

Nadimi-Shahraki, M. H., Fatahi, A., Zamani, H., Mirjalili, S. & Abualigah, L. An improved moth-flame optimization algorithm with adaptation mechanism to solve numerical and mechanical engineering problems. Entropy 23, 1637 (2021).

Nadimi-Shahraki, M. H., Taghian, S., Mirjalili, S., Zamani, H. & Bahreininejad, A. GGWO: Gaze cues learning-based grey wolf optimizer and its applications for solving engineering problems. J. Comput. Sci. 61, 101636 (2022).

Wang, J.-S. & Li, S.-X. An improved grey wolf optimizer based on differential evolution and elimination mechanism. Sci. Rep. 9, 7181 (2019).

Ahmed, R. et al. Memory, evolutionary operator, and local search based improved Grey Wolf optimizer with linear population size reduction technique. Knowl. Based Syst. 264, 110297 (2023).

Akbari, E., Rahimnejad, A. & Gadsden, S. A. A greedy non-hierarchical grey wolf optimizer for real-world optimization. Electron. Lett. 57, 499–501 (2021).

Saremi, S., Mirjalili, S. Z. & Mirjalili, S. M. Evolutionary population dynamics and grey wolf optimizer. Neural Comput. Appl. 26, 1257–1263 (2015).

Bansal, J. C. & Singh, S. A better exploration strategy in Grey Wolf optimizer. J. Ambient Intell. Hum. Comput. 12, 1099–1118 (2021).

Sharma, S., Kapoor, R. & Dhiman, S. A novel hybrid metaheuristic based on augmented grey wolf optimizer and cuckoo search for global optimization. In 376–381 (IEEE, 2021).

Singh, N. & Singh, S. Hybrid algorithm of particle swarm optimization and grey wolf optimizer for improving convergence performance. J. Appl. Math. 2017, (2017).

Ma, C. et al. Grey wolf optimizer based on Aquila exploration method. Expert Syst. Appl. 205, 117629 (2022).

Mckay, M. D., Beckman, R. J. & Conover, W. J. A comparison of three methods for selecting values of input variables in the analysis of output from a computer code. Technometrics 42, 55–61 (2000).

He, Z., Pan, Y., Wang, K., Xiao, L. & Wang, X. Area optimization for MPRM logic circuits based on improved multiple disturbances fireworks algorithm. Appl. Math. Comput. 399, 126008 (2021).

Rosli, S. J. et al. A hybrid modified method of the sine cosine algorithm using latin hypercube sampling with the cuckoo search algorithm for optimization problems. Electronics 9, 1786 (2020).

Tharwat, A. & Schenck, W. Population initialization techniques for evolutionary algorithms for single-objective constrained optimization problems: Deterministic vs. stochastic techniques. Swarm Evolut. Comput. 67, 100952 (2021).

Deepa, R. & Venkataraman, R. Enhancing Whale optimization algorithm with Levy flight for coverage optimization in wireless sensor networks. Comput. Electr. Eng. 94, 107359 (2021).

Dixit, D. K., Bhagat, A. & Dangi, D. An accurate fake news detection approach based on a Levy flight honey badger optimized convolutional neural network model. Concurr. Comput: Pract. Exp. 35, e7382 (2023).

Seyyedabbasi, A. WOASCALF: A new hybrid whale optimization algorithm based on sine cosine algorithm and levy flight to solve global optimization problems. Adv. Eng. Softw. 173, 103272 (2022).

Kaidi, W., Khishe, M. & Mohammadi, M. Dynamic Levy flight chimp optimization. Knowl. Based Syst. 235, 107625 (2022).

Li, S., Chen, H., Wang, M., Heidari, A. A. & Mirjalili, S. Slime mould algorithm: A new method for stochastic optimization. Futur. Gener. Comput. Syst. 111, 300–323 (2020).

Faramarzi, A., Heidarinejad, M., Mirjalili, S. & Gandomi, A. H. Marine predators algorithm: A nature-inspired metaheuristic. Expert Syst. Appl. 152, 113377 (2020).

Faramarzi, A., Heidarinejad, M., Stephens, B. & Mirjalili, S. Equilibrium optimizer: A novel optimization algorithm. Knowl. Based Syst. 191, 105190 (2020).

Acharya, D. & Das, D. K. A novel human conception optimizer for solving optimization problems. Sci. Rep. 12, 21631 (2022).

Ferahtia, S. et al. Red-tailed hawk algorithm for numerical optimization and real-world problems. Sci. Rep. 13, 12950 (2023).

Bakır, H. Fitness-distance balance-based artificial rabbits optimization algorithm to solve optimal power flow problem. Expert Syst. Appl. 240, 122460 (2023).

Chopra, N. & Mohsin Ansari, M. Golden jackal optimization: A novel nature-inspired optimizer for engineering applications. Expert Syst. Appl. 198, 116924 (2022).

Mirjalili, S., Mirjalili, S. M. & Hatamlou, A. Multi-verse optimizer: A nature-inspired algorithm for global optimization. Neural Comput. Appl. 27, 495–513 (2016).

Dhiman, G. & Kumar, V. Seagull optimization algorithm: Theory and its applications for large-scale industrial engineering problems. Knowl. Based Syst. 165, 169–196 (2019).

A Novel Hybrid Metaheuristic Based on Augmented Grey Wolf Optimizer and Cuckoo Search for Global Optimization. In IEEE Conference Publication | IEEE Xplore. https://ieeexplore.ieee.org/abstract/document/9478142.

Hybrid Algorithm of Particle Swarm Optimization and Grey Wolf Optimizer for Improving Convergence Performance. https://www.hindawi.com/journals/jam/2017/2030489/.

Naruei, I. & Keynia, F. Wild horse optimizer: A new meta-heuristic algorithm for solving engineering optimization problems. Eng. Comput. 38, 3025–3056 (2022).

Deb, K. An efficient constraint handling method for genetic algorithms. Comput. Methods Appl. Mech. Eng. 186, 311–338 (2000).

Agushaka, J. O. & Ezugwu, A. E. Advanced arithmetic optimization algorithm for solving mechanical engineering design problems. PLOS ONE 16, e0255703 (2021).

Yıldız, B. S. et al. A new chaotic Lévy flight distribution optimization algorithm for solving constrained engineering problems. Expert Syst. 39, e12992 (2022).

Hashim, F. A., Hussain, K., Houssein, E. H., Mabrouk, M. S. & Al-Atabany, W. Archimedes optimization algorithm: a new metaheuristic algorithm for solving optimization problems. Appl. Intell. 51, 1531–1551 (2021).

Acknowledgements

This research was funded by the National Natural Science Foundation of China: Grant No. 71672117; China Postdoctoral Science Foundation: Grant No. 2020M680979; Doctoral Start-up Foundation of Liaoning Province of China: Grant No. 2020-BS-263; the funding project of Northeast Geological S&T Innovation Center of China Geological Survey: Grant No. QCJJ2022-24.

Author information


Contributions

B.T. (Bb Tu): Data Curation, Writing—Original Draft, Writing—Review & Editing, Funding Acquisition. F.W. (F Wang): Conceptualization, Methodology, Software, Investigation, Formal Analysis, Writing—Original Draft. Y.H. (Y Huo): Conceptualization, Funding Acquisition, Resources, Supervision, Writing—Review & Editing. X.W. (Xt WANG): Visualization, Investigation.


Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Tu, B., Wang, F., Huo, Y. et al. A hybrid algorithm of grey wolf optimizer and harris hawks optimization for solving global optimization problems with improved convergence performance. Sci Rep 13, 22909 (2023). https://doi.org/10.1038/s41598-023-49754-2


DOI: https://doi.org/10.1038/s41598-023-49754-2
