Abstract

Agriculture plays a pivotal role in the economies of developing countries by providing livelihoods, sustenance, and employment opportunities in rural areas. However, crop diseases pose a significant threat to both farmers’ incomes and food security. Furthermore, these diseases also show adverse effects on human health by causing various illnesses. Till date, only a limited number of studies have been conducted to identify and classify diseased cauliflower plants but they also face certain challenges such as insufficient disease surveillance mechanisms, the lack of comprehensive datasets that are properly labelled as well as are of high quality, and the considerable computational resources that are necessary for conducting thorough analysis. In view of the aforementioned challenges, the primary objective of this manuscript is to tackle these significant concerns and enhance understanding regarding the significance of cauliflower disease identification and detection in rural agriculture through the use of advanced deep transfer learning techniques. The work is conducted on the four classes of cauliflower diseases i.e. Bacterial spot rot, Black rot, Downy Mildew, and No disease which are taken from VegNet dataset. Ten deep transfer learning models such as EfficientNetB0, Xception, EfficientNetB1, MobileNetV2, EfficientNetB2, DenseNet201, EfficientNetB3, InceptionResNetV2, EfficientNetB4, and ResNet152V2, are trained and examined on the basis of root mean square error, recall, precision, F1-score, accuracy, and loss. Remarkably, EfficientNetB1 achieved the highest validation accuracy (99.90%), lowest loss (0.16), and root mean square error (0.40) during experimentation. It has been observed that our research highlights the critical role of advanced CNN models in automating cauliflower disease detection and classification and such models can lead to robust applications for cauliflower disease management in agriculture, ultimately benefiting both farmers and consumers.

Similar content being viewed by others

Introduction

“Cauliflower” word has been originated from the Italic word cavolfiore, which means “cabbage flower.” It is plant that grows annually and is produced by seed. It comes from Brassica oleracea, with the genus Brassica and the family Brassicaceae (or mustard). After cabbage, it is the second most popular ‘cole’ crop in the world1. The cauliflower consists of parts such as the Head, floret, and stem. The edible part of the cauliflower is head which is also called curd, as shown in Fig. 1. Cauliflower also benefits human health as it contains phytonutrients that reduce cancer risk2. In addition, this cruciferous vegetable provides fibre that lowers the chance of heart problems and contains essential nutrients such as choline to help people with good sleep, learning, muscular movement, and sharp memory3.

Parts of cauliflower2.

Cauliflowers are grown in various countries across the globe, like India, China, Spain, The USA, Mexico, and Bangladesh, with hundreds of varieties that are being commercialized, as shown in Table 1. As far as India is mentioned, the annual production and acreage of cauliflower are about 7,887,000 million tons and 2.5 lac hectares, respectively4.

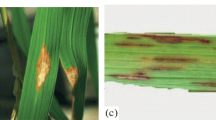

Although cauliflower has various health-based benefits, its disease infection is the main drawback of its production5. When cauliflowers are cultivated, they are infected by either bacteria or fungus, which gives birth to various diseases such as bacterial soft rot (Erwinia and Pseudomonas species), blackleg (Leptosphaeria maculans), black rot, downy mildew (Hyaloperonospora parasitica), powdery mildew (Erysiphe cruciferarum), ring spot (Mycosphaerella brassicicola), white rust (Albugo Candida), etc.6.

The rotten or infected cauliflowers throw a terrible impact on the health of human beings. When they consume them, they cause allergies like sneezing, itching, watery eyes, coughing, difficulty breathing, ear and skin infections, gastrointestinal diseases, etc. The pesticides or insecticides sprayed on them to keep the cauliflowers away from bacteria also cause severe health issues to humans like dizziness, diarrhea, nausea, acute as well as chronic poisoning, Alzheimer, cancer, asthma, bronchitis, etc. Other than human health issues, cauliflower production quality and quantity have also been degraded in the agricultural sector.

In fact, traditional cauliflower disease detection methods suffer from numerous limitations in agriculture. They often rely on subjective human visual inspection, leading to errors and inconsistency. Manual inspection is time-consuming and delays disease detection, enabling rapid infection spread. The cost of training and maintaining agricultural experts for disease identification is prohibitive for many farmers, particularly in remote areas. These methods often miss early or asymptomatic infections, depend on specific environmental conditions, lack data documentation, and are not easily scalable. They rely heavily on expert knowledge, limiting their applicability. In contrast, advanced deep transfer learning techniques offer automated, accurate, fast, and scalable disease detection with continuous crop monitoring, addressing these shortcomings. Hence it is essential to have early detection of such diseases so that appropriate measures will be taken to escalate the profit and yield of cauliflower cultivation7.

Artificial intelligence (AI) has become a transformative force across various industries, and its impact on agriculture, a profession employing approximately 58% of India's population, is undeniable8. As the population continues to grow exponentially, the challenges in ensuring food security and sustaining agricultural businesses have intensified. Integrating AI into agriculture is pivotal, not only for enhancing agricultural efficiency but also for mitigating adverse environmental impacts. It is imperative that rural farmers, the backbone of the agricultural sector, equip themselves with tools to swiftly detect and address crop-related issues.

Deep learning models have emerged as powerful tools in plant disease detection, offering a potent solution to the challenges faced by the agricultural sector in India and worldwide. These models, especially Convolutional Neural Networks (CNNs), are great at recognizing images. This makes them perfect for studying visual data like images of plant leaves, which are often used to diagnose diseases. Deep learning models learn complex patterns and features by being trained on large sets of labelled pictures of healthy and unhealthy plants. This lets those spot even minor signs of disease9 as most of the time, standard methods of detection can’t get to this level of detail. Additionally, deep learning models can also be tuned and changed to fit different types of crops and diseases. This makes them useful in a wide range of farming situations. Their real-time processing capabilities allow for rapid disease identification, offering farmers timely insights to take appropriate action. The integration of deep learning models in handheld devices or smartphones can empower rural farmers with accessible and user-friendly tools for on-the-spot disease diagnosis, ultimately contributing to increased crop yields, sustainable agriculture, and food security10.

In this context, AI holds tremendous promise for diagnosing and managing plant diseases, identifying pests, addressing malnutrition in crops, and even detecting and managing weed infestations. The ability of AI to offer practical and effective solutions to these challenges is undeniable. To harness this potential, publicly available large datasets have been harnessed to train machine learning and deep learning algorithms, paving the way for streamlined disease detection and classification in farming crops, including fruits, plants, and vegetables10.

Against this backdrop, the primary objective of this paper is crystal clear: to identify and detect various diseases afflicting cauliflower crops using advanced deep-transfer learning techniques. By doing so, our research aims to not only protect agricultural yields but, more importantly, to safeguard human health by preventing the consumption of contaminated produce. The following contributions were made to carry out the research:

-

The images of four classes like bacterial spot rot, downy mildew, black rot, and no disease of cauliflower disease dataset is initially taken.

-

In the next step, collected image data is pre processed by reducing its original size to 224 × 224, and various morphological operations are applied such as erosion and dilation.

-

In this phase, images have been visualized graphically to find out the pixel intensity and generate red green and blue histograms to study the pattern of data.

-

Further, characteristics of image data such as mean intensity, min/max value, extent, perimeter, area, etc., are calculated and extreme points are generated to obtain the cropped image. Additionally, adaptive thresholding is also applied so that the background and foreground part of the image can be differentiated to enhance the classification accuracy of the model followed by the splitting of train and test dataset..

-

After splitting of the dataset, various transfer learning classifiers such as Xception, EfficientNetB0, EfficientNetB1, EfficientNetB2, EfficientNetB3, EfficientNetB4, MobileNetV2, DenseNet201, ResNet152V2, and InceptionResNetV2 are taken and trained with the dataset.

-

In the last phase, the performance of all these models have been examined by computing their accuracy and loss as well as generating the confusion matrix to obtain the values of another set of performance metrics.

Organization of the paper

In the “Introduction” section, we briefly described cauliflower, its diseases, and its influence on human health, as well as how AI can detect such sick crops. Section “Background” describes researchers’ use of AI learning models to detect plant and cauliflower-based diseases. This research is based on a cauliflower disease detection system, so section “Materials and methods” discusses the method to develop such a system, where the results are analyzed and compared to state-of-the-art results in section “Analyzing the results”. Section “Conclusion” concludes the article with problems and future scope.

Background

Researchers have shown an impressive contribution to detecting and classifying various cauliflower diseases to protect humans from harmful diseases.

The researchers in paper11, identify the diseases affecting cauliflower plants, to enhance cauliflower production efficiency in Bangladesh’s agricultural sector. K-means clustering was used for image segmentation following preprocessing, and ten relevant features were extracted. For classification, various methods were assessed, with the Random Forest algorithm achieving an overall accuracy of 81.68%. Additionally, Convolutional Neural Networks (CNNs), MobileNetV2, InceptionV3, VGG16, and ResNet50 were employed, with InceptionV3 achieving the highest accuracy of 90.08% among these methods. The researchers in paper12 mentioned about the novel dataset which they had created by collecting the cauliflower leaves. The work was conducted on MATLAB and various traditional machine learning techniques were applied which included decision tree, random forest, support vector machine, naïve Bayes, and sequential minimal optimization to detect diseased leaf.

Likewise, in paper2 researchers created VegNet dataset which consist of various classes of diseased cauliflower plants such as black rot, downy mildew, and bacterial spot rot. These photographs were meticulously shot between December 20th and January 15th, when cauliflower plants were in full bloom and illnesses were most visible. Their dataset was rigorously organized and will be used to develop and validate machine learning-based automated algorithms for detecting cauliflower illnesses. An analysis into multiple convolutional neural network (CNN) models paired with transfer learning methods was conducted in research article13. The major purpose was to classify four cauliflower diseases: bacterial soft rot, black rot, buttoning, and white rust. This study's dataset includes approximately 2500 photos. InceptionV3 outperformed the other CNN models tested, with a test accuracy of 93.93%.

Likewise, the researchers in paper14 presented an online expert system to assist cauliflower farmers in identifying and managing diseases affecting their crops. Their system operated by processing images captured using smartphones or handheld devices, classifying them to pinpoint specific cauliflower diseases. The targeted diseases include ‘black rot,’ ‘bacterial soft rot,’ ‘white rust,’ as well as ‘downy mildew.’ To implement it, they used a dataset comprising 776 images. The process involved initial image segmentation using the K-means clustering algorithm to isolate disease-affected regions. Subsequently, two types of features, statistical and co-occurrence, were extracted from these segmented regions. For disease classification, six different algorithms were employed: Kstar, LMT (Logistic Model Tree), BayesNet, BPN (Back Propagation Neural Network), Random Forest, and J48 where the results indicated that the Random Forest classifier outperformed all others, achieving an accuracy rate of approximately 89.00% for cauliflower disease recognition. The researchers in paper15 used LeNet image processing and deep learning techniques for the classification of cauliflower samples into four categories: healthy, powdery mildew-infected, black rot-infected, and bacterial soft rot-infected. A carefully curated dataset of 655 color images representing these categories was employed, with 70% of the data allocated for model training. Results indicated the model's remarkable ability to accurately classify healthy cauliflowers, those with black rot, and those affected by powdery mildew, achieving a perfect 100% classification rate. Additionally, it demonstrated a highly impressive 99% accuracy in identifying cauliflower specimens afflicted by bacterial soft rot. In paper16, the researchers proposed a convolutional neural network (CNN) with transfer learning for the detection and classification of surface defects in fresh-cut cauliflower, aiming to overcome the inefficiencies of manual detection methods. The dataset comprises 4,790 cauliflower images categorized as diseased, healthy, mildewed, and browning. To optimize the model, the parameters of MobileNet were fine-tuned to enhance the accuracy and training speed. This involved selecting optimal hyper-parameters, adjusting frozen layer counts, and integrating ImageNet parameters with in-house trained ones. Comparisons were made with InceptionV3, NASNetMobile, and VGG19,. Experimental results highlighted the MobileNet model's exceptional performance, achieving a 0.033 loss, 99.27% accuracy, and a 99.24% F1 score on the test set with specific parameter settings. In the research paper denoted as17, an expert system was introduced, which synergized agricultural and medical expertise with machine vision. This system analyzed images taken using smartphones or portable devices to categorize plant diseases, offering valuable support to farmers in managing their crop health issues. The primary focus of their investigation revolved around the detection of eggplant diseases, using a transfer learning technique based on convolutional neural networks (CNNs). Several transfer learning models, including DenseNet201, Xception, and ResNet152V2, were utilized in their study. Among these models, DenseNet201 exhibited the highest level of accuracy, achieving an impressive 99.06% accuracy rate in the identification of diseases. The authors in study18 introduced a model to identify diseases in the plant leaves using advanced CNN model. Four deep learning models (Inception V3, VGG16, DenseNet201, and ResNet152V2,) were evaluated for their accuracy in detecting plant diseases. The research also involved the development of a web-based application for diagnosing plant diseases from leaf images. The dataset comprised 28,310 photos of leaves from three crops: potato, pepper, and tomato were taken. Their proposed model achieved impressive results, with a training and validation accuracy of 99.44% and 98.70% respectively in the experiments. In a certain research study referred to as19, a novel approach was proposed by the researchers. They combined the capabilities of MobileNetV2 and Xception models by integrating the features they extract, with the goal of improving the performance of plant disease detection. The outcomes of their study revealed that, when dealing with the entire Plant Village dataset, MobileNetV2 achieved an accuracy rate of 97.32%, Xception achieved 98.30%, and the ensemble model outperformed both with the highest accuracy rate of 99.10%. Notably, the accuracy of Xception and MobileNetV2 models saw improvements of 0.8% and 1.8%, respectively, when the ensemble approach was employed. Furthermore, the ensemble model showcased exceptional performance, achieving an impressive score of 99.52% across all evaluation metrics in a dataset defined by the user. In paper20, the researchers proposed a deep learning architecture called EfficientNet to classify tomato diseases, using a dataset of 18,161 tomato leaf images, both plain and segmented. They applied two segmentation models i.e. U-net as well as Modified U-net to segment the leaves and assessed their performance] in binary, six, and ten class classification which had groups like healthy vs. unhealthy leaves. The Modified U-net segmentation model achieved impressive results with 98.66% accuracy, 98.5% Intersection over Union (IoU), and a Dice score of 98.73% for leaf segmentation. EfficientNet-B7 outperformed in binary class with 99.95% accuracy and six-class classifications with 99.12% accuracy.

By using a publicly accessible dataset containing 54,306 images of both diseased as well as healthy plant leaves obtained under controlled settings, the researchers in paper21 conducted training for a deep convolutional neural network. The objective was to enable the model to identify 14 different crop species and distinguish between 26 diseases or their absence. Remarkably, our trained model achieved an impressive accuracy rate of 99.35% when evaluated on a separate test dataset, showcasing the practicality of this approach.

Besides this, the aforementioned contribution of the researchers is also presented in Table 2 to compare as well as analyse their work so that some research gaps can be traced out.

After assaying the table on the hand, it has been found that the study of the researchers underscores few shortcomings such as relatively small size and limited testing. The presence of class imbalance raises concerns about model performance, necessitating further exploration into fine-tuning the convolutional neural network (CNN) layers to achieve higher accuracy. Moreover, the computational demands associated with the chosen models, as well as the need for a more diverse dataset for broader real-time applicability, highlight the gaps in practical implementation. Additionally, their work also hints at limited generalization and emphasizes the importance of reducing data dependency. Therefore, the research gap centres on the need for comprehensive investigations addressing these limitations to pave the way for more robust and widely applicable cauliflower disease detection systems in agriculture.

But on the other hand, it has been also found that artificial intelligence techniques are beneficial in detecting the quality of agricultural products. From the background study, it has been found that deep learning models have shown a strong learning ability not only in the case of feature extraction but also in the classification to classify various cauliflower-based diseases. Hence based on it, we have combined the applied advanced deep learning models to develop a cauliflower disease detection model.

Materials and methods

The flow of the research has been mentioned and framed in this section (as shown in Fig. 2). The section holds a description of the dataset used, pre-processing techniques applied, exploratory data analysis, extracting the features, applying learning models, and parameters to predict the performance of the used models.

Dataset used

For this research paper, two files of cauliflower images have been taken, i.e., an original and an augmented image file in which the three classes of diseases were described. In addition, the image of disease-free cauliflower has also been included in the dataset. For this dataset, the original images of six hundred fifty-six and seven thousand three hundred and sixty augmented were compiled to create the dataset. All the images of cauliflower, i.e., the disease affected and disease free, have been assembled from the Manikganj which is the vegetable production area of Bangladesh. Table 3 shows the total number of images taken from each dataset class2.

Data pre-processing

The images of size 224*224 were initially imported and displayed for OpenCV (name, flag)-based data preprocessing using the window (name, flag) command. Later, the images are resized by adjusting the image's height and width to preserve the aspect ratio. As the original images have three color channels i.e. Red, Green, and Blue, so they are converted to grayscale by using the method cvtColor(), as shown in Fig. 3 to reduce the complexity of the data and simplify the architecture of computer vision models. Besides this, two morphological operations i.e. dilation as well as erosion are also applied to either add or remove the pixels from the boundaries of the image so that the from the output image, smallest value of the pixel can be obtained.

Exploratory data analysis

The image data has also been visually demonstrated to present the pixel intensity of the image as shown in Fig. 4. A histogram of red, green, and blue colors has been shown, which contains the quantified value of the of pixels to represent the value of their intensities.

Accordingly, Histogram Equalization (HE) is used to broaden the intensity range. In other terms, the histogram equalization method works on the distribution of those intensity values that are frequently shown, which as a result, improves the contrast in the image.

Feature extraction

Extracting the features from the complete image is an important phase as it reduces the space as well as the time complexity of the model to process the data. Hence, in this section, the required region has been extracted by initially obtaining the properties of the images in the form of a number of parameters that include the image's area, which is the product of its height along with width as shown in Eq. (1).

where height and width defines the shape attribute and are computed using Eqs. (2) and (3)

In addition, we computed epsilon from Eq. (4) for determining the distance of x and y points which belong to their respective class, the aspect ratio (Eq. 5) to find the relationship between the width and height of image and the perimeter of image is obtained by using Eq. (6).

The other parameters of the image such as extent (Eq. 7) which is the ratio of an area in an image and the rectangle that bounds the feature while as, equivalent diameter is calculated from the Eq. (8).

Furthermore, max, min value along with the locations is calculated using Eq. (9–12) followed by the mean color for finding the intensity values of the color using Eq. (13).

Additionally, the extreme left and rightmost points are determined, with the Eqs. (14, 15) ones being mainly accountable for computing these points, where 0 denotes the computation of quantities in the horizontal direction.

On the other hand, extreme bottommost and topmost points are acquired, using the Eqs. (16, 17) where 1 denotes evaluating values in the vertical direction.

All the values for the images of four classes of dataset using these parameters have been computed and shown in Table 4.

After obtaining the different values of the images, continuous curves were generated to obtain the extreme points and the largest contour based on which the image had been cropped. The cropped image has been later sent for adaptive thresholding, where the neighboring pixels are considered at a time to compute the threshold value for any specific region for performing segmentation. Well, in adaptive thresholding, we already know that for each smaller region, a threshold value is calculated, and as there are so many regions, there are various threshold values.

For this research, we have used OpenCV () to perform an adaptive threshold operation in which cv2.adaptiveThreshold() has been used. Five parameters have been passed, i.e., an array of the input image, assignment of maximum value to the pixel, type of adaptive thresholding, size of neighborhood pixels for calculating the threshold value, and a constant value subtracted from the mean of the neighborhood pixels (in Fig. 5).

After features were extracted, the data was divided into training and validation sets with 3:1 ratio utilizing 1384, 1440, 1648, and 1416 training images from the datasets for bacterial spot rot, black rot, downy mildew, and no disease. On the contrary, 346, 360, 412, and 354 validation images were taken from the same dataset, as mentioned earlier.

Applied models

After the images from the dataset are enhanced efficiently, they are fed to the pre-trained models for further computation, such as ResNet152V2, Xception, EfficientNetB1, EfficientNetB0, EfficientNetB3, EfficientNetB2, EfficientNetB4, DenseNet201, and InceptionResNetV2.

Xception: The Xception model is the 71-layer deep CNN model in which the layer slices the output into three segments and then sends it to the next set of filters. The 1*1 filter is for the single convolutional level, while as 3*3 filter is for the three convolutional layers. It has also been studied that, unlike the CNN model, the Xception model involves both point-wise and depth-wise convolution. In this research work, 20,861,480 parameters of Xception with output shape (None, 7,7,2048) have been used. Besides this, the crux of the architecture has globalaveragepooling2d (None, 2048) with 0 parameters, dense layer (None, 256) with 524,288 parameters, batch normalization (None, 256) with 1024 parameters, activation function and dropout (None, 256) with 0 parameters, and at the end second dense layer (None,2) with 1285 parameters. Figure 6 shows the block view and dataflow of Xception model22.

EfficientNet models: In this research work, various versions of EfficientNet models have been used such as EfficientNetB0, EfficientNetB1, EfficientNetB2, and EfficientNetB3. EfficientNet neural network is basically a new scaled up baseline network that use AutoML MNAS framework to optimize the efficiency and accuracy. The model consists of various blocks such as input layer, rescaling, normalization, zero padding, Conv2D, Batch Normalization, and Activation as shown in Fig. 723.

MobileNetV2: This type of model has 53 convolution layers and 1 average pool layer. It consists of two main components i.e., inverted residual block and bottle residual block as shown in Fig. 8. In addition to this, mobilenetv2 architecture also has two types of convolution layers i.e., 1 × 1 convolution and 3 × 3 depth wise convolution. Besides it, each block of it has 3 types of different layers i.e., 1 × 1 convolution layer with Relu6 activation function, depth wise convolution, and 1 × 1 convolution with non-linear layer24. In this research work, 2,257,984 parameters of MobileNetV2 with output shape (None, 7,7,1280) has been used in which global average pooling and dropout (None, 1280) with 0 parameter and dense layer (None, 6) with 7686 parameter is being used.

DenseNet201: DenseNet201 is a CNN model which is utilized to bring out features by learning weights of the input from the ImageNet dataset. The DenseNet201 has shown the best performance on various datasets because of having direct connections from all preceding layers to all subsequent layers which are shown in figure. In this research work, we have added two dense layers for classification having 128 and 64 neurons respectively. The feature extraction network i.e. DenseNet201 followed by softmax activation function for multi class—classification as shown in Fig. 925. The total parameters of DenseNet201 used are 18,815,813 out of which 18,586,245 and 229,568 are trainable and non-trainable parameters, respectively. Other layers that have been used are globalaveragepooling2d (None, 1920) with 0 parameter, dense layer, batch normalization, activation function, dropout (None, 256) with parameters 0,491520,1024,0,0 respectively. At the end the dense layer (None, 5) with parameter 1285 is being used for the classification of the input.

ResNet152V2: Residual network is a convolutional neural network which comprise of thousands of convolution layers. V2 uses batch normalisation before each weight layer, whereas the previous version of ResNetV1, did not. ResNet's impressive performance in image recognition and localization tasks demonstrates the significance of many visual recognition tasks. In this research work, 152 layers of residual network have been used for classification which has reshape step, first dense layer, flatten step, second dense layer, a dropout layer, and finally an activation function (not in sequential form) for classifying the image as shown in Fig. 1026. ResNet152V2 has 58,858,245 parameters, with 58,713,989 trainable parameters and 144,256 non-trainable parameters. Besides this, the other layers that have been used in the architecture are globalaveragepooling2d (None, 2048) with 0 parameters, dense layer, batch normalization, activation function, and dropout having output shape (None, 256) each with 524288, 1024, 0, 0 respectively. At the end, dense layer is being used with output shape (None, 5) and 1285 parameter value.

InceptionResNetV2: It is a hybridization of ResNetV2 and Inception model in order to preserve the various characteristics of the multi-convolutional network and improve the model's classification accuracy. This enhanced version of the Inception architecture expedited the model and dramatically enhanced performance. The InceptionResNetV2’s architecture is demonstrated in Fig. 1127. InceptionResNetV2 has a total parameter count of 54,732,261: out of which 54,671,205 are for trainable parameters and 61,056 are for non-trainable parameters. This architecture has globalaveragepooling2d with output shape (None, 1536) with 0 weights, dense layer, batch normalization, activation function, and dropout with output shape (None, 256) and 393216, 1024, 0, 0.8 parameters. At the end, the architecture has second dense layer of output shape (None, 5) with 1285 parameters.

Evaluative parameters

Various evaluation parameters have been employed to evaluate all applied models. Accuracy is one of these measures, measuring the degree of precision attained by the model during training and validation on the dataset. The metrics also include loss, which reflects how well the model has been trained and verified and is the opposite of accuracy. In this assessment method, the root mean square error is also used as a statistic.

Similarly, we have evaluated the performance of models using precision, F1 score, and recall. Precision refers to how well the model predicts the class, whereas recall refers to the number of times the model correctly defines the relevant class. The F1 score, in this instance, indicates the average of recall and accuracy. The F1 score essentially acts as an indicator of a model's precision when used on a certain dataset. Table 5 provides the formulae that the models used to determine these parameters28, 29.

Analyzing the results

The execution of the models such as Xception, EfficientNet B0, EfficientNetB1, EfficientNetB2, EfficientNetB3, EfficientNetB4, MobileNetV2, DenseNet201, ResNet152V2, and InceptionResNetV2 that have been used to detect and classify various diseases of cauliflower have been evaluated using the performance evaluation parameters. These models' layers were hyper-tuned to enhance the classification accuracy. The learning rate taken was 0.0001, the sigmoidal activation function, and the dropout was 0.5 was used to perform multi-class. Initially, the models were computed during the training and validation phases for the combined dataset, and later, the confusion matrix was generated to evaluate their performances for different classes such as that have been taken.

Table 6 shows that EfficientNetB1 obtained the highest accuracy value of 99.37%, Xception got the best loss, and root mean square error value by 0.19 and 0.44, respectively. Likewise, during the validation phase, the best accuracy has been again obtained by EfficientNetB1 by 99.90%, along with the loss and root mean error square value by 0.16 and 0.40, respectively. On the contrary, the model that computed the least accuracy among all is ResNet152V2 by 52.42% and the worst loss as well as rmse value by 5.92 and 2.43, respectively.

The graphical analysis of the models has also been presented in Fig. 12 to study the pattern of their curves for training and validation accuracy as well as loss. All the models have been trained and tested for 30 epochs, out of which the best epoch has been located at which the model obtained the highest value.

On studying the curves of all the models, it has been observed that they have shown some noisy signals for both cases except a few, such as training and validation loss of ResNet152V2 and Inception ResNetV2. It has also been found that the Xception model, along with all the versions of the EfficientNet model, showed the best line of a curve at various points of epochs. Hence from the curve nature of models, it can be analyzed that the models do not show any modeling error such as overfitting and underfitting except MobileNetV2.

Based on these results, we have also tested the performance of the models based on specific parameters shown in Table 7. It has been found that Xception generated the highest precision, recall, and F1 score value by 99.65%, 99.8%, and 99.62% as compared to the other models. On the contrary, the least obtained by MobileNetV2 was by 61.81% precision score, 63.61% recall, and 68.45% F1 score.

In addition, we have also evaluated models’ performances for various classes of this dataset, such as bacterial spot rot, black rot, downy mildew, and no disease. A confusion matrix of all the applied models has been generated for all the classes, such as bacterial spot rot, black rot, downy mildew, and no disease. The confusion matrix identifies which component of the classification model makes errors when making predictions, allowing us to identify both the classification model’s errors and, more importantly, the types of errors that occurred as shown in Fig. 13.

The diagonal values of this confusion matrix represent the true positive values, and the summation of horizontal values of any particular class represents the false negative. Likewise, the summation of vertical values of any specific class represents the false positive, and from the rest of the values, we compute the true negative output of the class. Using these values, we computed the accuracy, loss, rmse, recall, precision, and F1-score values of models for different classes, as shown in Table 8.

From the table, it has been found that during the training phase, for the bacterial spot rot class, Xception obtained the highest accuracy value of 99.25%, and MobileNetV2 computed the best loss and rmse value by 0.16 and 0.40, respectively. In the same way, for the Black Rot class, Xception once again obtained the highest accuracy by 99.59%, while the best loss and rmse of 0.16 and 0.40, respectively, were obtained by EfficientNetB3. Likewise, for Downy Mildew, EfficientNetB2 obtained the highest accuracy by 99.46%, DenseNet201 obtained the best loss, and rmse value by 0.25 and 0.53, respectively. At the end for the no disease class, Xception computed the highest accuracy, loss, and root mean square error value by 99.25%, 0.19, and 0.44, respectively.

During the bacterial spot rot class validation phase, InceptionResNetV2 obtained the highest accuracy value by 99%, and EfficientNetB1 computed the best loss and rmse value by 0.15 and 0.39, respectively. In the same way, for the Black Rot class, EfficientNetB1 obtained the highest accuracy, loss, and root mean square error by 99.59%, 0.16, and 0.40, respectively. Likewise, Downy Mildew EfficientNetB1 obtained the best accuracy, loss, and root mean square error value by 99.59%, 0.16, and 0.40, respectively. At the end for the no disease class, EfficientNetB2 computed the highest root mean square error, loss, and accuracy value by 0.49, 0.24, 99.41% respectively.

In addition, the recall, precision, and F1 score value of models for different classes have also been computed in Fig. 14. The Xception model obtained the best precision value of 99.9% for all the classes except no disease. Similarly, the highest recall and F1 score of 99.9% has been obtained for every class except black rot and downy mildew, respectively. All four versions of EfficientNet models, i.e., EfficientNetB0, EfficientNetB1, EfficientNetB2, and EfficientNetB3, obtained the highest F1 score, recall, and precision value by 99.9% for bacterial rot and no disease classes. The lowest value of precision, recall, and MobileNetV2 obtained an F1 score of 28.57%, 50%, and 48.71% for black rot and no disease, respectively. DenseNet201 showed the best performance among all the models as this model computed the highest recall, precision, and F1 score of 99.9% for all the classes. ResNet152V2 obtained the highest precision and F1 score for class Downy Mildew by 83.33% and the highest recall of 99.9% for black rot. InceptionResNetV2 obtained the highest precision value of 99.9% for all the classes except downy mildew. Similarly, the highest recall of 99.9% has been obtained by all the classes except Black rot. In the end, the best F1 score of 99.9% has been obtained for the classes such as bacterial spot rot and no disease class.

Besides this, the computational time of the applied models have been also computed and mentioned in Table 9. It has been found that ResNet152V2 took the maximum time i.e. 1 h 36 min 2 s while as the least has been computed by EfficientNetB0 i.e. 24 min 49 s.

In addition, we have also compared the accuracy of our proposed system of this research work with the accuracies obtained by the existing techniques in Table 10. Our research work has been found to perform better to identify and classify cauliflower diseases, as out of the ten applied models, EfficientNetB1 obtained the top accuracy of 99.90%. InceptionV3 calculated the lowest accuracy value in the deep neural network by 93.93%. On the contrary, while comparing the results of a machine and deep learning over the best model i.e. EfficientNetB1, the best one has been taken by deep neural networks only.

Conclusion

Human health depends on vegetables which is an important part of agriculture. Information technology helps vegetable producers to increase yields, promote global food security and sustainable agriculture. To prevent people from becoming ill, we have developed a cauliflower disease detection system as part of this research. To summarise the work, 7360 images from four distinct classes which includes bacterial spot rot, downy mildew, black rot, and no disease, had been used for the training of models such as. Here, Gaussian, Erosion, and Dilation techniques were used to pre-process the images before analyzing the RGB pixel intensities. The contour feature extraction and adaptive thresholding techniques were used to generate morphologic values and cropped images. Ten models were subsequently trained and validated using the aforementioned four-class dataset where EfficientNetB1 obtained the highest validation accuracy of 99.90%, loss of 0.16, as well as root mean square error of 0.40. In addition, there are few limitations, such as the bacterial spot rot class, which contains the image of a dataset with no diseases and can lead to the misbalance or misclassification of other input classes. Additionally, the contour feature extraction failed on a few images, which can also lead to incorrect classification and detection of the input image. Consequently, in the future, the contour feature extraction on the images of the VegNet dataset should be enhanced, and a vegetable disease detection system should be proposed that detects not only cauliflower but also diseases on other vegetables.

Overall, this work signifies a crucial step towards harnessing the power of information technology in agriculture. By providing accurate and efficient cauliflower disease detection, we contribute to increased yields, global food security, and sustainable farming practices. As we continue to refine and expand our methods, we aim to make a lasting impact on agriculture, ultimately benefitting both farmers and consumers worldwide.

Data availability

The data that support the findings of this study is publically available on the given link: https://data.mendeley.com/datasets/t5sssfgn2v/3 (Accessed Date: 13th September, 2023).

Change history

24 November 2023

A Correction to this paper has been published: https://doi.org/10.1038/s41598-023-47732-2

References

Yaseen, A. A. & Ahmed, S. J. Interaction effect of planting date and foliar application on some vegetative growth characters and yield of broccoli (Brassica olerasea var italica) grown under unheated plastic tunnel. J. Garmian Univ. 4, 405–418 (2017).

Rajbongshi, A., Sara, U. S., Shakil, R., Akter, B. & Uddin, M. S. VegNet: An extensive dataset of cauliflower images to recognize the diseases using machine learning and deep learning models. In Mendeley Data, V3. https://doi.org/10.17632/t5sssfgn2v.3 (2022).

Abdull Razis, A. F. & Noor, N. M. Cruciferous vegetables: Dietary phytochemicals for cancer prevention. Asian Pac. J. Cancer Prev. 14(3), 1565–1570 (2013).

Sharma, S. R., Singh, P. K., Chable, V. & Tripathi, S. K. A review of hybrid cauliflower development. J. New Seeds 6(2–3), 151–193 (2004).

Dan, A., Jain, R., Dwivedi, R. K. & Kumar, A. Evaluation of socio-economic conditions of cauliflower (Brassica oleracea) growers in Chaka block of Allahabad district Uttar Pradesh. J. Pharmacogn. Phytochem. 9(5), 148–151 (2020).

Nan schiller. How to identify, prevent, and control common cauliflower diseases. In Gardener’s Path 1–4 (2018).

Kar, A., Mandal, K. & Singh, B. Environmental fate of chlorantraniliprole residues on cauliflower using QuEChERS technique. Environ. Monit. Assess. 185(2), 1255–1263 (2013).

Dubey, S. R. & Jalal, A. S. Fruit and vegetable recognition by fusing colour and texture features of the image using machine learning. Int. J. Appl. Pattern Recogn. 2(2), 160–181 (2015).

Kumar, Y., Singh, R., Moudgil, M. R. & Kamini, F. A systematic review of different categories of plant disease detection using deep learning-based approaches. Arch. Comput. Methods Eng. 30, 1–23 (2023).

Dhiman, B., Kumar, Y. & Kumar, M. Fruit quality evaluation using machine learning techniques: Review, motivation and future perspectives. Multimedia Tools Appl. 81(12), 16255–16277 (2022).

Maria, S. K. et al. Cauliflower disease recognition using machine learning and transfer learning. In Smart Systems: Innovations in Computing 359–375 (Springer, 2022).

Orin, T. Y., Mojumdar, M. U., Siddiquee, S. M. T. & Chakraborty, N. R. Cauliflower leaf disease detection using computerized techniques. In 2021 IEEE 6th International Conference on Computing, Communication and Automation (ICCCA) 730–733 (IEEE, 2021).

Abdul Malek, M., Reya, S. S., Zahan, N., Hasan, Z. & Uddin, M. S. Deep learning-based cauliflower disease classification. In Computer Vision and Machine Learning in Agriculture, Volume 2 171–186 (Springer, 2022).

Rajbongshi, A., Islam, M. E., Mia, M. J., Sakif, T. I., & Majumder, A. A Comprehensive Investigation to Cauliflower Diseases Recognition: An Automated Machine Learning Approach. International Journal on Advanced Science, Engineering and Information Technology, 12(1), 32–41. https://doi.org/10.18517/ijaseit.12.1.15189 (2022).

Pourdarbani, R. & Sabzi, S. Diagnosis of common cauliflower diseases using image processing and deep learning. J. Env. Sci. Stud. 8(3), 7087–7092 (2023).

Li, Y., Xue, J., Wang, K., Zhang, M. & Li, Z. Surface defect detection of fresh-cut cauliflowers based on convolutional neural network with transfer learning. Foods 11(18), 2915 (2022).

Saad, I. H., Islam, M. M., Himel, I. K. & Mia, M. J. An automated approach for eggplant disease recognition using transfer learning. Bull. Electr. Eng. Inf. 11(5), 2789–2798 (2022).

Bakr, M., Abdel-Gaber, S., Nasr, M. & Hazman, M. DenseNet based model for plant diseases diagnosis. Eur. J. Electr. Eng. Comput. Sci. 6(5), 1–9 (2022).

Sutaji, D. & Yıldız, O. LEMOXINET: Lite ensemble MobileNetV2 and Xception models to predict plant disease. Ecol. Inf. 70, 101698 (2022).

Chowdhury, M. E. et al. Automatic and reliable leaf disease detection using deep learning techniques. AgriEngineering 3(2), 294–312 (2021).

Mohanty, S. P., Hughes, D. P. & Salathé, M. Using deep learning for image-based plant disease detection. Front. Plant Sci. 7, 1419 (2016).

Moid, M. A. & Chaurasia, M. A. Transfer learning-based plant disease detection and diagnosis system using Xception. In 2021 Fifth International Conference on I-SMAC (IoT in Social, Mobile, Analytics and Cloud)(I-SMAC) 1–5 (IEEE, 2021).

Agarwal, V. Complete Architectural Details of all EfficientNet Models. Medium (2021). https://towardsdatascience.com/complete-architectural-details-of-all-efficientnet-models-5fd5b736142.

Tsang, S. H. Review: MobileNetV2—Light Weight Model (Image Classification). Medium (2021).

Mahum, R. et al. A novel framework for potato leaf disease detection using an efficient deep learning model. Hum. Ecol. Risk Assess. Int. J. 29(2), 303–326 (2023).

Sivakumar, P., Mohan, N. S. R., Kavya, P. & Teja, P. V. S. Leaf disease identification: Enhanced cotton leaf disease identification using deep CNN Models. In 2021 IEEE International Conference on Intelligent Systems, Smart and Green Technologies (ICISSGT) 22–26 (IEEE, 2021).

Papers with Code—Inception-ResNet-v2 Explained (2022). https://paperswithcode.com/method/inception-resnet-v2.

Goel, N., Kaur, S. & Kumar, Y. Machine learning-based remote monitoring and predictive analytics system for crop and livestock. In AI, Edge and IoT-based Smart Agriculture 395–407 (Academic Press, 2022).

Chohan, M., Khan, A., Chohan, R., Katpar, S. H. & Mahar, M. S. Plant disease detection using deep learning. Int. J. Recent Technol. Eng. 9(1), 909–914 (2020).

Acknowledgements

This study is supported via funding from Prince Sattam bin Abdulaziz University project number (PSAU/2023/R/1445).

Author information

Authors and Affiliations

Contributions

G.P.K., J.K., Y.K., carried out the experiments; G.P.K., J.K. and M.W., J.S., M.F.I. wrote the manuscript; G.P.K., J.K, Y.K., A.C., M.W., J.S., M.F.I.; conceived the original idea; G.P.K., J.K., Y.K., A.C. and M.F.I. analyses the results; M.W., J.S., M.F.I.; supervised the project. All authors reviewed the manuscript.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The original online version of this Article was revised: The Acknowledgements section in the original version of this Article was omitted. The Acknowledgements section now reads: “This study is supported via funding from Prince Sattam bin Abdulaziz University project number (PSAU/2023/R/1445).”

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Kanna, G.P., Kumar, S.J., Kumar, Y. et al. Advanced deep learning techniques for early disease prediction in cauliflower plants. Sci Rep 13, 18475 (2023). https://doi.org/10.1038/s41598-023-45403-w

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-023-45403-w

- Springer Nature Limited