Abstract

This piece of work deals with a time truncated sampling scheme for cancer patients using exponentiated half-logistic distribution (EHLD) based on indeterminacy. We have studied time truncated schemes like repetitive acceptance sampling plan (RASP) under indeterminacy. We have estimated the projected scheme parameters such as sample size and acceptance and rejection sample numbers for known indeterminacy parameters. In addition to the projected sampling scheme quantities, the corresponding tables are generated for various values of indeterminacy parameters. The results of a sampling scheme show that the average sample number (ASN) decreases as indeterminacy values increase. It leads that the indeterminacy parameter is played a crucial portrayal in ASN. A comparative study is carried out with existing sampling schemes based on indeterminacy and classical sampling schemes. The evaluated sampling schemes are exemplified with the help of cancer data. From tables and exemplification, we wind up that the projected RSP scheme under indeterminacy desired a smaller sample size than the existing schemes.

Similar content being viewed by others

Introduction

Cancer, one of the most severe and lethal diseases, necessitates aberrant cell development that intensifies. It is a cancerous tumor with irregular cell proliferation that has the potential to attack or spread to other human body organs. For further information, check1. The rise may also be immediate, passing directly through the blood or lymphatic system. Different organs may be affected by cancer, and each type of cancer has unique traits. In relation to the location of cancers, various types of cancer are identified, including cervical cancer, lung cancer, gynecological cancer, skin cancer, brain cancer, breast cancer, etc. Breast cancer is the most common type of cancer. In recent years, the function of statistical analysis in cancer biology has grown increasingly significant in determining the various treatment alternatives. The length of time that elapses between the start of a specific time period and the occurrence of a chosen event is the subject of remission times or survival time data analysis. The purpose is usually to assess how different therapies effect remission time or survival time, and using easily available information about each patient adds to the uncertainty of the statistical distribution.

The goal of the current study is to determine how long cancer patients remain in remission after receiving EHLD. States and organizations perform tens of thousands of clinical trials annually to describe diseases and evaluate alternative therapies. The outcomes have a direct impact on how individuals are treated, thus it is critical to accurately assess the information presented in order to save both time and money. The majority of states use exploratory tools based on specified individuals to estimate the expected life or survival of patients. Acceptance sampling plans under indeterminacy would be one quality control methodology to save money and time when testing the patient's remission time or survival time. The oncologists are brainstorming to estimate the average remission time of the patients after attacked cancer due to their new method of treatment. In these situations the oncologists are paying attention to testing the null hypothesis of the average remission time of the patients is equal to the specified average remission time of the patients against the alternative hypothesis that the average remission time of the patients varies significantly. The null hypothesis could be rejected if the average remission time of the patients due to melanoma cancer, called as acceptance number of patients is greater than or equal to the specified average remission time of the patients due to melanoma cancer.

Numerous authors concentrate on studied single sampling plan (SSP) based time truncated life test for a variety of distributions. Some related article can also be explored2,3,4,5,6,7,8. More particulars related to SSP could available in9. The scheme of repetitive group acceptance sampling plan (RGASP) was first initiated by Sherman10. The improvement of single acceptance sampling plan is known as repetitive sampling plan for more details please explore11,12,13,14.

Although the aforementioned authors employed traditional statistics to examine the SSP and RASP, many real-world applications related to cancer patients' longevity may not. Recently, neutrosophic statistics have drawn the attention of more scholars in these circumstances. The development of more details about the neutrosophic logics, their quantification of determinacy, and their indeterminacy15. Various researchers considered neutrosophic logic for different real troubles and showed its competence as compared with fuzzy logic, for more information see16,17,18,19,20,21. The idea of neutrosophic statistics was given using the idea of neutrosophic logic22,23,24. Neutrosophic statistics commit information regarding the quantification of determinacy and measure of indeterminacy. Neutrosophic statistics become conventional statistics if no evidence is enrolled about the quantification of indeterminacy. The SSP using neutrosophic statistics is developed by Aslam25,26.

A fuzzy environment and the sample strategies at hand could not provide accounting data that is relevant to the measure of indeterminacy. Some works related to a single sampling plan using a fuzzy approach can also be explored27,28,29,30,31,32. More recently33,34 developed SSP and RASP to test average wind speed and COVID-19 patients for Weibull distribution under indeterminacy. When all of the observations in the sample or population are determined, the current sampling strategies based on classical statistics are employed. In practice, given the uncertainty environment, certain observations in the sample or population may be uncertain. In the latter scenario, the sampling plan for EHLD utilizing the RASP under classical statistics cannot be condemned. We were unable to identify a time-truncated sampling plan for EHLD under indeterminacy after searching the literature. We hope that the time-truncated sample strategy for EHLD under indeterminacy will be more useful for medical practitioners and industrial engineers when it comes to lot sizing in indeterminate contexts. As a result, we are motivated to examine RASP for EHLD in the presence of indeterminacy in order to calculate the average remission time. While testing to ascertain the average remission time, it is expected that the created sample design will have a lower ASN than the existing sampling designs.

In "Methodologies", we propose the RASP for EHLD under indeterminacy. A comparative study is given in "Comparative studies" and a real example based on the remission time of the patients due to melanoma cancer data is provided in "Applications of proposed plan for remission times of melanoma patients". In the end, concluding remarks, suggestions and future research works are demonstrated in "Conclusions".

Methodologies

This section's goal is to provide an overview of the EHLD using neutrosophic statistics. This section will also show how to use the RASP to study the typical length of remission for melanoma cancer patients based on uncertain circumstances.

Exponentiated half-logistic distribution under indeterminacy

We will provide a brief summary of the EHLD. The EHLD was acquainted and contemplated quite comprehensively by35, further36 studied for various acceptance sampling plans for this distribution. Suppose that \(f\left({t}_{N}\right)=f\left({t}_{L}\right)+f\left({t}_{U}\right){I}_{N};{I}_{N}\epsilon \left[{I}_{L},{I}_{U}\right]\) be a neutrosophic probability density function (npdf) with determinate part \(f\left({t}_{L}\right)\), indeterminate part \(f\left({t}_{U}\right){I}_{N}\) and indeterminacy period \({I}_{N}\epsilon \left[{I}_{L},{I}_{U}\right]\) for more details refer33. Remember that \({t}_{N}\epsilon \left[{t}_{L},{t}_{U}\right]\) be a neutrosophic random variable (NRV) follows the npdf. The npdf is the oversimplification of pdf under conventional statistics. The anticipated neutrosophic form of \(f\left({t}_{N}\right)\epsilon \left[f\left({t}_{L}\right),f\left({t}_{U}\right)\right]\) turns to pdf under classical statistics when \({I}_{L}\)=0. Using this background, the npdf of the EHLD is outlined as under

where \(\sigma\) and \(\theta\) are scale and shape parameters, respectively. It is significant to note that the developed npdf of the EHLD is the oversimplification of pdf of the EHLD based on conventional statistics. The neutrosophic form of the npdf of the EHLD reduces to the EHLD when \({I}_{L}\)=0. The neutrosophic cumulative distribution function (ncdf) of the EHLD is given by

The average lifetime of the NEHLD is given by

Repetitive sampling plan under indeterminacy

The traditional RASP based on the truncated life test sampling scheme is initiated by37. The step-by-step procedure to adopt the repetitive acceptance sampling plan under indeterminacy is stated below:

Step 1: From a lot choose a sample of size n. Conduct a life testing for these sample for a pre-specified time say \({t}_{0}\). Indicate the average \({\mu }_{0N}\) and indeterminacy parameter \({I}_{N} \epsilon \left[{I}_{L},{I}_{U}\right]\).

Step 2: Accept \({H}_{0}:{\mu }_{N}={\mu }_{0N}\) if specified average quantity \({\mu }_{0N}\) is less than or equal to \({c}_{1}\) (i.e., \({\mu }_{0N}\le {c}_{1}\)). If specified average quantity \({\mu }_{0N}\) is more than \({c}_{2}\) (i.e., \({\mu }_{0N}\) > \({c}_{2}\)) then we reject \({H}_{0}:{\mu }_{N}={\mu }_{0N}\) and conclude the test, where \({c}_{1}\le {c}_{2}\).

Step 3: If \({{c}_{1}<\mu }_{0N}\le {c}_{2}\) then go to Step 1 and do again the entire procedure.

The developed RASP based on above indeterminacy methodology is consists of \(n,{c}_{1},{c}_{2}\) and \({I}_{N}\), where \({I}_{N}\epsilon \left[{I}_{L},{I}_{U}\right]\) is known as uncertainty level and it is predetermined. RASP is a generalization of SSP under uncertainty studied in "Comparative studies". The proposed RASP is reduced to a SSP under uncertainty when \({c}_{1}={c}_{2}\). It is a convention to assume that \({t}_{0}=d{\mu }_{0}\) where \(d\) is the termination factor. The operating characteristic (OC) function would be obtained based on lot acceptance probability for more details refer10 and it is defined as:

where \(P_{a} \left( {p_{N} } \right)\) is the chance of accepting under \({H}_{0}:{\mu }_{N}={\mu }_{0N}\) whereas \(P_{r} \left( {p_{N} } \right)\) is the chance of rejecting at \({H}_{0}:{\mu }_{N}={\mu }_{0N}\), these are obtained in the following expressions:

where \(p_{N}\) is the chance of rejecting \({H}_{0}:{\mu }_{N}={\mu }_{0N}\) and it is obtained from Eq. (2) and Eq. (3) and it is defined by

Where \(\vartheta =ln\left(\frac{1+{2}^{-1/\theta }}{1-{2}^{-1/\theta }}\right)\).

Using Eqs. (5) and (6) the Eq. (4) becomes

The researcher is paying attention to concern the developed scheme to test \({H}_{0}:{\mu }_{N}={\mu }_{0N}\) such that the chance of accepting \({H}_{0}:{\mu }_{N}={\mu }_{0N}\) while it is true ought to be more than \(1-\alpha\) (\(\alpha\) is type-I ) for \(\mu /{\mu }_{0}\) and the chance of accepting \({H}_{0}:{\mu }_{N}={\mu }_{0N}\) while it is wrong ought to be smaller than \(\beta\) (type-II error) for \({\upmu }_{{\rm N}}/{\upmu }_{0{\rm N}}=1\). In producer opinion, the chance of approval should be greater than or equal to \(1-\alpha\) at acceptable quality level (AQL), \(p_{1N}\). In the same way, in consumer opinion the lot rejection chance ought to be less than or equal to \(\beta\) at limiting quality level (LQL),\(p_{2N}\). The intended quantities would be obtained by solving the following two inequalities simultaneously.

where \({p}_{1N}\) and \({p}_{2N}\) are respectively given by

and

The estimated intended quantities of the developed scheme should be minimizing the average sample number (ASN) at AQL. The ASN of the developed sampling scheme in terms of fraction defective (\(p_{N}\)) is given below:

The intended quantities for the created method would therefore be determined by resolving the nonlinear programming problem for optimization shown below.

The values of the intended quantities \(\left\{n,{c}_{1}, {c}_{2}\right\}\) for various values of \(\beta\) = {0.25, 0.10, 0.05}; \(\alpha =0.10\); \(d=\left\{0.5, 1.0\right\}\), \({\mu }_{N}/{\mu }_{0N}\)={1.2, 1.3, 1.4, 1.5, 1.8, 2.0} and \({I}_{N}\)= {0.0, 0.02, 0.04, 0.05} for shape parameter \(\theta =\left\{{1,5}, 2.0, 1.0\right\}\) are presented in Tables 1, 2, 3, 4, 5 and 6. Tables 1 and 2 are shown for the EHLD for \(\theta =1.5\), Tables 3 and 4 for \(\theta =2.0\), Tables 5 and 6 for \(\theta =1\) (half-logistic distribution). From these tables, we pointed out the below few points.

-

a.

When the values of \(d\) increases from 0.5 to 1.0 the value of \(ASN\) decreases.

-

b.

It is pointed out that if the shape parameter increases from \(\theta =1 to \theta =2\) the values of \(ASN\) decreases when other parameters are fixed.

-

c.

Further, it is observed that the indeterminacy value \({I}_{N}\) also showing a considerable effect to derogating the \(ASN\).

Comparative studies

This section's goal is to examine the projected RASP's effectiveness in relation to ASN. The average hypothesis may be examined more affordably the lower the ASN. If no uncertainty or indeterminacy is established while remembering the average value, note that the sampling plan developed is an oversimplification of the plan based on conventional statistics. When \({I}_{N}\)=0, the developed RSP becomes the on-hand sampling plan. In Tables 1, 2, 3, 4, 5 and 6 the first spell of column i.e. at \({I}_{N }\)= 0 is the plan parameter of the traditional or existing RASP. From the results from the tables, we would conclude that the ASN is large in traditional RASP as compared with the proposed RASP. For example, when \(\alpha =0.10, \beta =0.25,\) \({\upmu }_{{\rm N}}/{\upmu }_{0{\rm N}}\)=1.3, \(\theta\)=1.5 and \(d\)=0.5 from Table 1, it can be seen that \(ASN\)=107.11 from the plan under classical statistics and \(ASN\)=95.82 for the projected RASP when \({I}_{N}\) = 0.05. Furthermore, when \(\theta\)=1 the EHLD becomes a half-logistic distribution (HLD), we have constructed Tables 5 and 6 for half-logistic distribution for comparison purpose. Table 5 depicts that EHLD shows less ASN as compared with HLD. For example when \(\alpha =0.10,\beta =0.10,\) \({\upmu }_{{\rm N}}/{\upmu }_{0{\rm N}}\)= 1.5, \(d\)= 0.5 and \({I}_{N}\)= 0.04 the Table 5 shows that the ASN is 100.99 where as proposed plan values are ASN = 67.83 for \(\theta\) = 1.5 and ASN = 51.76 for \(\theta\) = 2.0. From this study, it is concluded that the projected plan under indeterminacy is efficient over the existing RASP under traditional statistics with respect to sample size. We have also compared our proposed RASP under indeterminacy with SSP under indeterminacy developed by38. The results show that RASP is superior to the SSP for same specific parameters. For example when \(\alpha =0.10,\beta =0.10,\) \({\upmu }_{{\rm N}}/{\upmu }_{0{\rm N}}\) = 1.4, \(d\)= 0.5, \({I}_{N }\)= 0.04 and \(\theta\) = 1.5 the ASN in SSP is 105 whereas in RASP the ASN is 67.83. Operating characteristic (OC) curve of plan of the EHLD when \(\alpha =0.10, \beta =0.10,\theta =2\), \({\upmu }_{{\rm N}}/{\upmu }_{0{\rm N}}\)= 1.3 and \(d\) = 0.5 is depicted in Fig. 1. From Fig. 1, we conclude that indeterminacy parameter shows significant effect on reduce the ASN. Therefore, the application of the proposed plan for testing the null hypothesis \({H}_{0}:{\mu }_{N}={\mu }_{0N}\) demands a lesser ASN as compared to the on hand plan. Moreover, the OC curve comparison between SSP and RASP is also displayed in Fig. 2. The OC curve in Fig. 2 also shows that RASP is superior to the SSP for the same specific parameters. The researchers advised as proposed RASP under uncertainty is more economical to apply in a medical study specifically for remission time of the patients due to melanoma cancer.

Applications of proposed plan for remission times of melanoma patients

The present section deals with the postulation of the developed sampling scheme for the EHLD under the indeterminacy obtained by means of a real paradigm. This data set is picked out from39 and it constitutes the remission times, in months for 30 melanoma cancer patients at stages 2 to 4. For ready reference, the data is given below.

Remission time (months): 33.7, 3.9, 10.5, 5.4, 19.5, 23.8, 7.9, 16.9, 16.6, 33.7, 17.1, 8.0, 26.9, 21.4, 18.1, 16.0, 6.9, 11.0, 24.8, 23.0, 8.3, 10.8, 12.2, 12.5, 24.4, 7.7, 14.8, 8.2, 8.2 and 7.8.

Melanoma is a very dangerous kind of skin cancer which develops in the cells (melanocytes) that develop melanin and it creates the color change in the skin.

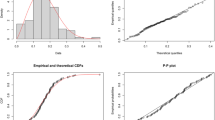

It is establish that the remission times of melanoma patients data comes from the EHLD with shape parameter \(\widehat{\theta }=\) 1.4097 and scale parameter \(\widehat{\sigma }=7.3811\) and the maximum distance between the real time data and the fitted of EHLD is found from the Kolmogorov–Smirnov test as 0.1324 and also the p-value is 0.6687. The demonstration of the goodness of fit for the given model is shown in Fig. 3, the empirical and theoretical cdfs and Q-Q plots for the EHLD for the remission times of melanoma patients’ data. In Tables 7 and 8 presented the plan quantities for the fitted shape parameter. It is assumed there is indeterminacy in measuring remission time and let it is 0.05. The measurements of remission time for cancer patients with respect to interval measures and fuzzy-type data sets were studied by various authors, for instance, refer40,41,42. For the proposed plan, the shape parameter is \({\widehat{\theta }}_{N}=(1+0.05)\times 1.4097 \approx 1.4802\) when \({I}_{U}\) = 0.05. Assume that a medical researcher would like to employ the developed RSP for EHLD under indeterminacy to guarantee the remission time of melanoma cancer patients is at least 6 months using the truncated life test for 3 months (thus d = 0.5). Suppose that medical researchers are paying attention to test \({H}_{0}:{\mu }_{N}=4.7893\) with the support of the developed RASP when \({I}_{U}\) = 0.05,\(\alpha =0.10\), \({\upmu }_{{\rm N}}/{\upmu }_{0{\rm N}}\)= 1.5, \(d\) = 0.5 and \(\beta\) = 0.10. From Table 7, it can be noted that n = 40, \({c}_{1}\)= 5, \({c}_{2}\)= 9 and ASN = 68.35. Thus, the RASP for EHLD under indeterminacy could be enforced in the following way: picking out a random sample of 40 melanoma cancer patients from the indoor group of patients, and conducting the truncated life test of remission time for 3 months. The developed RASP scheme could be adopted in the following way: hypothesis \({H}_{0}:{\mu }_{N}=4.7893\) will be accepted if the average remission time of melanoma cancer patients in 6 months is less than five patients, but a lot of patients should be rejected as soon as the remission time of melanoma cancer patients exceeds nine patients. Contrary, the experimentation could be repeated. From remission time data shows that seven patients before the average remission time of melanoma cancer patients of 4.7893. Therefore, the medical practitioners would have to repeat the entire procedure until accept/reject the hypothesis. Accordingly, it is competent that the developed sampling will be taken into consideration to check the typical length of remission for melanoma cancer patients based on the real application.

Conclusions

In order to design an exponentiated half-logistic distribution based on indeterminacy for a time-truncated repetitive sampling strategy, a thorough investigation of melanoma cancer patients was conducted. The sample scheme parameters are determined for the identified values of the indeterminacy parameters. For simple reference, we have given lengthy tables including the values of the known indeterminacy constants. The developed sampling strategy is compared to the available conventional statistical strategies. The results show that the designed sampling plan is more cost-effective than the on-hand SSP under indeterminacy and conventional sampling plans. Furthermore, the proposed RASP under indeterminacy is more cost effective than the single sample strategy. It is also noticed that indeterminacy values play a vital role in ASN, when the indeterminacy quantities increase at that time the ASN quantity is decreased. Hence, the proposed sample strategies are convenient for researchers, particularly in medical experimentation, because medical experimentation requires more costly and qualified specialists. As a result, the created sampling strategy under indeterminacy is required to be valid for testing the average number of melanoma cancer patients. The real examples based on the melanoma cancer patients for developed sampling scheme under indeterminacy show a piece of evidence. The suggested sampling strategy for big data analytics could be applied to various scientific and technical disciplines. The next step in the research would be to develop multiple dependent state sampling plans and multiple dependent state repeating sampling plans for different lifetime distributions.

Data availability

Data is available in Supplementary Material file. Source of the data link is also provided.

References

Dollinger, M., Rosenbaum, E. & Cable, G. Understanding cancer In Everyone's Guide to Cancer Therapy. (Andrews and McMeel, 1991).

Al-Omari, A. & Al-Hadhrami, S. Acceptance sampling plans based on truncated life tests for extended exponential distribution. Kuwait J. Sci. 45, 30–41 (2018).

Al-Omari, A. I. Time truncated acceptance sampling plans for generalized inverted exponential distribution. Electron. J. Appl. Stat. Anal. 8, 1–12 (2015).

Balakrishnan, N., Leiva, V. & López, J. Acceptance sampling plans from truncated life tests based on the generalized Birnbaum–Saunders distribution. Commun. Stat. Simul. Comput. 36, 643–656 (2007).

Kantam, R. R. L., Rosaiah, K. & Rao, G. S. Acceptance sampling based on life tests: Log-logistic model. J. Appl. Stat. 28, 121–128 (2001).

Lio, Y. L., Tsai, T.-R. & Wu, S.-J. Acceptance sampling plans from truncated life tests based on the Birnbaum–Saunders distribution for percentiles. Commun. Stat. Simul. Comput. 39, 119–136 (2009).

Lio, Y. L., Tsai, T.-R. & Wu, S.-J. Acceptance sampling plans from truncated life tests based on the Burr type XII percentiles. J. Chin. Inst. Ind. Eng. 27, 270–280 (2010).

Tsai, T.-R. & Wu, S.-J. Acceptance sampling based on truncated life tests for generalized Rayleigh distribution. J. Appl. Stat. 33, 595–600 (2006).

Yan, A., Liu, S. & Dong, X. Variables two stage sampling plans based on the coefficient of variation. J. Adv. Mech. Des. Syst. Manuf. 10, 1–12 (2016).

Sherman, R. E. Design and evaluation of a repetitive group sampling plan. Technometrics 7, 11–21 (1965).

Aslam, M., Lio, Y. L. & Jun, C.-H. Repetitive acceptance sampling plans for burr type XII percentiles. Int. J. Adv. Manuf. Technol. 68, 495–507 (2013).

Singh, N., Singh, N. & Kaur, H. A repetitive acceptance sampling plan for generalized inverted exponential distribution based on truncated life test. Int. J. Sci. Res. Math. Stat. Sci. 5, 58–64 (2018).

Yan, A. & Liu, S. Designing a repetitive group sampling plan for weibull distributed processes. Math. Probl. Eng. 2016, 5862071 (2016).

Yen, C.-H., Chang, C.-H. & Aslam, M. Repetitive variable acceptance sampling plan for one-sided specification. J. Stat. Comput. Simul. 85, 1102–1116 (2015).

Smarandache, F. Neutrosophic probability, set, and logic, ProQuest information & learning. Neutrosophy (Ann Arbor, Michigan, USA) 105, 118–123 (1998).

Abdel-Basset, M., Mohamed, M., Elhoseny, M., Chiclana, F. & Zaied, A.E.-N.H. Cosine similarity measures of bipolar neutrosophic set for diagnosis of bipolar disorder diseases. Artif. Intell. Med. 101, 101735 (2019).

Nabeeh, N. A., Smarandache, F., Abdel-Basset, M., El-Ghareeb, H. A. & Aboelfetouh, A. An integrated neutrosophic-topsis approach and its application to personnel selection: A new trend in brain processing and analysis. IEEE Access 7, 29734–29744 (2019).

Peng, X. & Dai, J. Approaches to single-valued neutrosophic MADM based on MABAC, TOPSIS and new similarity measure with score function. Neural Comput. Appl. 29, 939–954 (2018).

Pratihar, J., Kumar, R., Dey, A. & Broumi, S. Transportation problem in neutrosophic environment. In Neutrosophic Graph Theory and Algorithms. (IGI Global, 2020).

Pratihar, J., Kumar, R., Edalatpanah, S. & Dey, A. Modified Vogel’s approximation method for transportation problem under uncertain environment. Complex Intell. Syst. 4, 1–12 (2020).

Smarandache, F. & Khalid, H. E. Neutrosophic Precalculus and Neutrosophic Calculus. (Infinite Study, 2015).

Chen, J., Ye, J. & Du, S. Scale effect and anisotropy analyzed for neutrosophic numbers of rock joint roughness coefficient based on neutrosophic statistics. Symmetry 9, 208 (2017).

Chen, J., Ye, J., Du, S. & Yong, R. Expressions of rock joint roughness coefficient using neutrosophic interval statistical numbers. Symmetry 9, 123 (2017).

Smarandache, F. Introduction to Neutrosophic Statistics. (Infinite Study, 2014).

Aslam, M. Design of sampling plan for exponential distribution under neutrosophic statistical interval method. IEEE Access 6, 64153 (2018).

Aslam, M. A new attribute sampling plan using neutrosophic statistical interval method. Complex Intell. Syst. 5, 1–6 (2019).

Afshari, R. & Sadeghpour, G. B. Designing a multiple deferred state attribute sampling plan in a fuzzy environment. Am. J. Math. Manag. Sci. 36, 328–345 (2017).

Jamkhaneh, E. B., Sadeghpour, G. B. & Yari, G. Important criteria of rectifying inspection for single sampling plan with fuzzy parameter. Int. J. Contemp. Math. Sci. 4, 1791–1801 (2009).

Jamkhaneh, E. B., Sadeghpour, G. B. & Yari, G. Inspection error and its effects on single sampling plans with fuzzy parameters. Struct. Multidiscip. Optim. 43, 555–560 (2011).

Sadeghpour, G. B., Baloui, J. E. & Yari, G. Acceptance single sampling plan with fuzzy parameter. Iran. J. Fuzzy Syst. 8, 47–55 (2011).

Tong, X. & Wang, Z. Fuzzy acceptance sampling plans for inspection of geospatial data with ambiguity in quality characteristics. Comput. Geosci. 48, 256–266 (2012).

Uma, G. & Ramya, K. Impact of fuzzy logic on acceptance sampling plans–A review. Autom. Auton. Syst. 7, 181–185 (2015).

Aslam, M. Testing average wind speed using sampling plan for Weibull distribution under indeterminacy. Sci. Rep. 11, 7532 (2021).

Rao, G. S. & Aslam, M. Inspection plan for COVID-19 patients for Weibull distribution using repetitive sampling under indeterminacy. BMC Med. Res. Methodol. 21, 229 (2021).

Cordeiro, G. M., Alizadeh, M. & Ortega, E. M. M. The exponentiated half-logistic family of distributions: Properties and applications. J. Probab. Stat. 2014, 864396 (2014).

Rao, G. S., Rosaiah, K. & Rameshnaidu, C. Design of multiple-deferred state sampling plans for exponentiated half logistic distribution. Cogent Math. Stat. 7, 1857915 (2020).

Balamurali, S. & Jun, C.-H. Repetitive group sampling procedure for variables inspection. J. Appl. Stat. 33, 327–338 (2006).

Rao, G. S. & Peter, J. K. Testing average traffic fatality using sampling plan for exponentiated half logistic distribution under indeterminacy. Sci. Afr. 23, e01646 (2023).

Lee, E. T. & Wang, J. Statistical Methods for Survival Data Analysis (Wiley, 2003).

Gholami, S., Alasty, A., Salarieh, H. & Hosseinian-Sarajehlou, M. On the control of tumor growth via type-I and interval type-2 fuzzy logic. J. Mech. Med. Biol. 15, 1550083 (2015).

Hansen, R. P., Vedsted, P., Sokolowski, I., Søndergaard, J. & Olesen, F. Time intervals from first symptom to treatment of cancer: A cohort study of 2,212 newly diagnosed cancer patients. BMC Health Serv. Res. 11, 284 (2011).

Naderi, H., Mehrabi, M. & Ahmadian, M. T. Adaptive fuzzy controller design of drug dosage using optimal trajectories in a chemoimmunotherapy cancer treatment model. Inf. Med. Unlocked 27, 100782 (2021).

Acknowledgements

The authors are deeply thankful to the editor and reviewers for their valuable suggestions to improve the quality of the paper.

Author information

Authors and Affiliations

Contributions

G.S.R. contributed simulation work and methodology, P.J.K. contributed concept and manuscript preparation.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Rao, G.S., Kirigiti, P.J. Repetitive sampling inspection plan for cancer patients using exponentiated half-logistic distribution under indeterminacy. Sci Rep 13, 13743 (2023). https://doi.org/10.1038/s41598-023-40445-6

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-023-40445-6

- Springer Nature Limited