Abstract

This research focuses on predictive modeling of the relationships between rocks' dynamic properties and on the optimization of neural network models. For this purpose, the rocks' dynamic properties were measured in terms of quality factor (Q), resonance frequency (FR), acoustic impedance (Z), oscillation decay factor (α), and dynamic Poisson’s ratio (v). Rock samples were tested in both longitudinal and torsion modes, and the ratios of the two measurements were taken to reduce data variability and make the parameters dimensionless for analysis. Results showed that with increasing excitation frequency, the stiffness of the rocks first increased because of the plastic deformation of pre-existing cracks and then decreased due to the development of new microcracks. After the evaluation of the rocks’ dynamic behavior, v was estimated by prediction modeling. Overall, 15 models were developed using backpropagation neural network algorithms including feed-forward, cascade-forward, and Elman. Among all models, the feed-forward model with 40 neurons was considered the best because of its comparatively good performance in the learning and validation phases. Its coefficient of determination (R2 = 0.797) was higher than that of the other models. To further improve its quality, the model was optimized using a meta-heuristic algorithm (i.e., particle swarm optimizer). The optimizer improved its R2 value from 0.797 to 0.954. The outcomes of this study demonstrate the effective use of a meta-heuristic algorithm to improve model quality, and they can serve as a reference for problems in data modeling, pattern recognition, data classification, etc.


Introduction

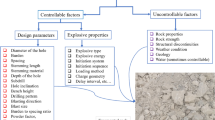

Rock dynamics has a wide range of applications in rock engineering projects, including tunneling and excavation, reservoir modeling, hydrocarbon reserve estimation, geotechnical earthquake engineering, drilling and blasting, rock fragmentation processes, and subsurface imaging1,2,3,4. Knowledge of rock dynamics provides valuable information for discerning the engineering response of rocks subjected to dynamic loading conditions. Seismic events, shock vibrations, and impact loads are common sources of dynamic loading that affect particles' displacement, velocity, and acceleration5. Repeated loading cycles or an increasing loading rate considerably alter the mechanical characteristics of rocks. Rocks are not perfectly elastic materials; rather, they are brittle, anisotropic, discontinuous, and heterogeneous. Therefore, the strains induced in rocks are not fully recoverable even in the elastic domain3, 6. Over time, the coalescence of these micro strains may lead to major deformation or failure.

The Poisson’s ratio is one of those important parameters that play a significant role in the design work of underground structures such as the foundation of megastructures, radioactive waste repositories, tunnels, large caverns, etc.7,8,9. It is the ratio of lateral strain to the axial strain of a rock specimen under static or dynamic loading10. The Poisson’s ratio may be dynamic or static depending on the state of stress. The dynamic Poisson’s ratio is determined by testing rock specimens non-destructively as per the standard procedure described by the American Society for Testing and Materials (ASTM C21511) to measure their acoustic wave velocities or resonance frequencies. These parameters are further utilized to find the dynamic Poisson's ratio. On the other hand, to measure static Poisson’s ratio rock samples are tested destructively as per the ASTM D314812.

In underground excavation, the subsurface imaging and determination of dynamic properties (specifically dynamic elastic moduli, dynamic Poisson’s ratio, acoustic impedance, quality factor, etc.) considerably help to ascertain the dynamic characteristics of rocks5. Generally, the dynamic Poisson’s ratio shows higher values than the static one because rocks are considered more sensitive toward dynamic loading13. The incongruity between the static-dynamic Poisson’s ratio is attributed to several factors such as rock lithology, mineral composition, loading conditions, strain rate, strain amplitude, etc.14,15,16.

Determination of dynamic Poisson’s ratio either in the field or laboratory needs calibrated instruments and good expertise15. Apart from the experimentation, dynamic Poisson’s ratio can be estimated by empirical relationships. Researchers have developed several empirical relationships based on rock density, strength, acoustic wave velocities, porosity, permeability, and elastic moduli15, 17,18,19,20,21,22.

The statistical models generate varying outputs for a single parameter due to two major reasons: the dependent variable is regressed with a different set of predictors, and the input variables in the empirical equations employ mean values that often lead to underestimation or overestimation of the outputs23, 24. In conventional statistical analysis, multicollinearity and overfitting significantly affect the prediction ability of multiple linear-nonlinear regression models. Apart from these traditional approaches, the use of artificial intelligence and machine learning algorithms gives satisfactory outcomes.

Unlike regression analysis, neural network-based prediction models do not consider mean values rather they use data variance. Thus, they provide optimal solutions for both linear and nonlinear problems of experimentally measured data. Recently published studies have proposed different models for the estimation of Poisson’s ratio. Zhang and Bentley in 200515 studied the static-dynamic behavior of clastic rocks and determined Poisson’s ratio from two independent factors: solid rock and dry or wet cracks. Al-anazi et al. 201125 used an alternating conditional expectation algorithm to predict Poisson’s ratio from measured data of porosity, pore pressure, bulk density, compressional, and shear wave travel time. Asoodeh in 201326 estimated the Poisson’s ratio from conventional well-log data using a radial basis neural network, Sugeno fuzzy inference system, neuro-fuzzy algorithm, and simple averaging method. He used the outputs obtained from each expert to construct a committee machine with an intelligent system using a hybrid genetic algorithm-pattern search technique. The integrated outcomes were much better than the output of the individual one. Abdulraheem in 201921 performed a neural network and fuzzy logic type-2 analysis to estimate Poisson’s ratio of rocks. He found that the neural network model had better prediction ability than the fuzzy logic model.

In light of the above discussion, it is evident that the artificial neural network has a competitive edge over conventional statistical modeling techniques. In regression analysis, highly correlated predictors produce multicollinearity and overfitting, which is why such input variables are excluded from the empirical equations to overcome dimensionality problems16. The artificial neural network can predict target values with minimum estimated residuals and can handle the aforementioned issues without excluding a single input variable. It can accommodate several rock properties as input variables to fully define the rock's characteristics. Despite these advantages, it has the drawbacks of a slow learning rate and a tendency to get trapped in local minima18. Training neural networks with a powerful optimizer such as particle swarm optimization (PSO) overcomes these problems and improves the performance of neural network models. PSO is a population-based stochastic optimization approach modeled on the social behavior of bird flocks or fish schools, and it can solve continuous or discrete optimization problems27. In comparison with other optimizers, PSO has a less complex structure and simple parameter relationships28.

This study measures the dynamic properties of rocks, especially Poisson’s ratio, using the cyclic excitation frequency method. This approach has not been addressed comprehensively in the literature. In addition, although several attempts have been made to develop predictive relationships for different physico-mechanical parameters of rocks such as compressive strength, tensile strength, shear strength, elastic moduli, porosity, permeability, acoustic wave velocities, etc.16, 29,30,31,32, limited literature is available on the predictive modeling of dynamic Poisson’s ratio. Apart from the conventional modeling techniques, this research favors using a gradient descent-free optimizer such as PSO for the training of a selected neural network model.

The first objective of this study is to anticipate the dynamic behavior of selected sedimentary rocks in terms of their quality factor, resonance frequency, oscillation decay factor, acoustic impedance, and Poisson's ratio. Secondly, this research develops neural network models for the estimation of dynamic Poisson’s ratio using 3 neural network algorithms including feed-forward, cascade-forward, and Elman. The third objective is to train the proposed neural network model with particle swarm optimization to enhance model performance. The outcomes of this study can be used as a reference to solve the problems related to data modeling, prediction analysis, and indirect estimation of rock properties.

Materials and methods

The representative rock boulders of sandstone, limestone, dolomite, and marl were obtained from their outcrop exposed in the eastern part of the Salt Range, Punjab, Pakistan. The stratigraphic sequence, geological age, characteristics, and features of these rock units are described in Table 1. The collected rock boulders were free from major discontinuities and carried into the laboratory to prepare the required number of core specimens. A total of 50 NX-size (i.e., length to diameter ratio of 2 to 2.5 with a diameter of 54.7 mm) rock core samples were prepared and put into the desiccator to minimize the effect of moisture content on their dynamic properties.

Laboratory testing

A series of non-destructive tests was conducted on the rock core samples to measure their dynamic properties using an Erudite Resonance Frequency Meter as per the ASTM C-215 standard. This instrument mainly consists of a vibrator, a receiver, and a control panel, as shown in Fig. 1. To measure the dynamic properties of the rocks, a loading frequency of 7–16 kHz was passed through the rock core samples clamped between the vibrator and receiver. The rock specimens were tested in longitudinal mode and torsion mode to measure their resonance frequency and quality factor in each case. The measured resonance frequency was used to determine the elastic moduli and the oscillation decay factor, and the elastic moduli were in turn used to find the acoustic impedance and Poisson’s ratio. The experimentally acquired dataset of rock dynamic properties is shown in Table 2. Equations (1), (2) and (3) are described in the ASTM C21511 testing standard, whereas Eqs. (4), (5) and (6) are explained by Kramer 19966 for earthen materials. Mathematically, these parameters can be expressed as follows:

where: The subscripts P and S denote values measured in longitudinal mode and torsion mode respectively. Q is the quality factor, FR is the resonance frequency, Bandwidth is the difference between the corner frequencies, E is the modulus of elasticity, Dcylinder is a constant equal to 520 L/d², where L and d are the sample length and diameter respectively, G is the modulus of rigidity, Bcylinder is a constant equal to 400 L/A, where L and A are the sample length and cross-sectional area respectively, W is the weight of the specimen, v is the dynamic Poisson’s ratio, α is the oscillation decay factor, Z is the acoustic impedance, and \(\rho\) is the sample density.
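The numbered equations referred to above do not appear in this text. As a hedged reconstruction from the symbol definitions, the relations that ASTM C215 and standard elasticity theory give for the quality factor, the two moduli, and Poisson's ratio can be written as below. The numbering and ordering are assumptions, and the forms used for α and Z are left out rather than guessed.

```latex
\begin{align}
Q &= \frac{F_R}{\text{Bandwidth}} \\
E &= D_{\text{cylinder}}\, W\, F_{R_P}^{2}, \qquad D_{\text{cylinder}} = 520\,\frac{L}{d^{2}} \\
G &= B_{\text{cylinder}}\, W\, F_{R_S}^{2}, \qquad B_{\text{cylinder}} = 400\,\frac{L}{A} \\
v &= \frac{E}{2G} - 1
\end{align}
```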

After the experimentation, the acquired dataset was made dimensionless by simply dividing the parameter values measured in the longitudinal and torsion modes. The whole dataset was split into a training dataset (80% of the total population of the data) and a testing dataset (the remaining 20% of the total population of the data). The training dataset was fed to neural network algorithms (i.e. feed-forward, cascade-forward, and Elman) to estimate dynamic Poisson’s ratio through prediction modeling. For this purpose, MATLAB coding was used to execute the designed neural network system. The developed prediction models were validated by an additional validating dataset. The best-performing model was selected and optimized using a particle swarm optimizer to further ameliorate it.
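The ratio step and the 80/20 split described above can be sketched in plain Python. This is a minimal illustration, not the authors' MATLAB code: the function names, the fixed seed, the direction of the ratio (longitudinal divided by torsion), and the sample values are all assumptions.

```python
import random

def mode_ratio(longitudinal, torsional):
    """Divide each longitudinal-mode value by its torsion-mode counterpart."""
    return [p / s for p, s in zip(longitudinal, torsional)]

def split_80_20(rows, seed=0):
    """Shuffle rows reproducibly and return (training, testing) lists."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    cut = int(round(0.8 * len(shuffled)))
    return shuffled[:cut], shuffled[cut:]

# Hypothetical quality-factor readings (not the paper's data).
q_p = [24.0, 22.0, 26.0, 21.0, 27.0]   # longitudinal mode
q_s = [12.0, 11.0, 13.0, 14.0, 15.0]   # torsion mode
q_r = mode_ratio(q_p, q_s)             # dimensionless ratio
train, test = split_80_20(q_r)
```

Seeding the shuffle keeps the split reproducible, which matters when several network architectures are compared on the same partition.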

The neural network model works in two phases: (1) the configuration phase and (2) the training phase. In the configuration phase, a model is designed stochastically after selecting the number of neurons, hidden layers, number of variables, weights, and biases. The model is run, and its outcomes are compared with the target data. In case of failure, the model is tuned parametrically or architecturally, until desired results are obtained. In architectural tuning, the structure of the network is modified by varying the number of neurons, hidden layers, etc. Whereas, in parametric tuning weights or biases are changed for network modification. In this research architectural modification was carried out by varying the number of neurons between 10 and 50 and keeping hidden layers constant (i.e., only 2 hidden layers). Before selecting the best one, models must be validated by experimentally acquired data. After selecting the model, the training or optimization phase starts. An optimizer is selected based on its learning rate and resistance to getting trapped in local minima. Almost all gradient descent optimizers face this issue, that’s why a gradient descent free meta-heuristic optimizer (i.e., PSO) was selected in this study.

A brief overview of the neural network algorithms and particle swarm optimization follows.

Artificial neural network

A neural network is a web of neurons that works on the principle of human brain intelligence33, 34. The artificial neurons or nodes are the core processing units of this adaptive system. The neural network is analogous to the biological neural network and nowadays is getting popularity to solve various complex problems related to artificial intelligence. There are several applications of neural networks in regression analysis, time series forecasting, signal processing, pattern recognition, decision-making, etc.35, 36. The performance of a neural network model is attributed to the design of its architecture. The simple architecture of the neural network is comprised of the input layer and output layer of the neurons connected with the synaptic weights. One or more hidden layers can be introduced between the input layer and the output layer to enhance its performance as shown in Fig. 2. The positive and negative values of the estimated synaptic weights reflect excitatory and inhibitory connections respectively. The input layer receives the input data, processes it, and transfers signals to the hidden layers with the estimated weights and biases. Most of the computations are done at hidden layers. Finally, the output layer gets weighted signals from hidden layers, processes them, and checks whether the estimated scores are close to the target data or not. If the estimated values are not within the defined constraints or threshold limits, then signals are sent back for their recalculation. At this stage, the applied training function adjusts the weights and minimizes the residuals between the target and output data.

In an artificial neural network, two important points need to be addressed: (1) the number of neurons in the hidden layer and (2) a suitable activation function. Too few neurons underfit the model on complex data; conversely, too many neurons overfit it. The number of neurons in the hidden layers is selected by trial and error because no universal method has yet been developed that provides guidelines in this regard37. The activation function defines the output of a node given its input or set of input values. The purelin and poslin functions are considered linear transfer functions, whereas the sigmoid and hyperbolic tangent are nonlinear transfer functions. In most cases, nonlinear transfer functions are preferred because of their better performance. The basic structure of an artificial neuron is described in Fig. 3.

Feed-forward neural network

The feed-forward neural network is one of the earliest and simplest neural networks and has been widely used for regression and classification purposes38. In this hierarchical neural network, the connections between the signal processing units are free from any loop or cycle. Unlike recurrent neural networks, it transfers information only in the forward direction, from the input layer to the output layer through the hidden layers (if any). A feed-forward neural network with no hidden nodes is known as a single-layer perceptron (SLP), whereas a feed-forward neural network with one or more hidden layers is called a multi-layer perceptron (MLP). Mathematically, it can be expressed as follows:

Set the output values for the input nodes in layer L0.

Calculate the product sum of synaptic weights and the output of the previous layer at each node. The node outputs in a layer are considered input signals for the subsequent layer.

Compute the output for the output layer Lk.

where: \({\mathrm{H}}_{\mathrm{i}}^{\mathrm{n}}\) is the product sum of weights and previous layer node outputs along with bias \({\mathrm{B}}_{\mathrm{i}}^{\mathrm{n}}\) for ith perceptron in the layer Ln, \({\mathrm{W}}_{\mathrm{li}}^{\mathrm{n}}\) is the weight for ith perceptron in layer Ln connected with the lth node in the layer Ln-1, \({\mathrm{O}}_{\mathrm{i}}^{\mathrm{n}}\) is the output for ith perceptron in the layer Ln, \({\mathrm{r}}_{\mathrm{n}}\) is the number of nodes in the layer Ln, and \(\mathrm{\varphi }\) is the transfer function.
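The layer-by-layer computation described above can be sketched in plain Python. The sketch assumes the usual form implied by the symbol definitions, \(H_i^n = \sum_{l} W_{li}^n O_l^{n-1} + B_i^n\) and \(O_i^n = \varphi(H_i^n)\). The function names, the 2-2-1 layout, and all weight values are hypothetical and are not taken from the study.

```python
import math

def layer_forward(prev_outputs, weights, biases, phi):
    """Return O_i = phi(H_i) with H_i = B[i] + sum_l W[l][i] * O_prev[l]."""
    outputs = []
    for i, bias in enumerate(biases):
        h = bias + sum(weights[l][i] * o for l, o in enumerate(prev_outputs))
        outputs.append(phi(h))
    return outputs

def feed_forward(x, layers):
    """Propagate x through a list of (weights, biases, phi) layers."""
    out = x
    for weights, biases, phi in layers:
        out = layer_forward(out, weights, biases, phi)
    return out

def identity(h):
    """Linear transfer function for the output layer."""
    return h

# Hypothetical 2-2-1 network: weights[l][i] links node l of the
# previous layer to node i of the current layer.
hidden = ([[0.5, -0.5], [0.25, 0.75]], [0.0, 0.1], math.tanh)
output = ([[1.0], [-1.0]], [0.2], identity)
y = feed_forward([1.0, 2.0], [hidden, output])
```

Information flows strictly from one layer to the next, so the network output depends only on the current input vector.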

Cascade-forward neural network

The cascade-forward neural network is a modified form of a feed-forward neural network that works similarly to its parent feed-forward algorithm. In the cascade-forward models, the input layer is connected to all subsequent layers to get better results. For example, in a three-layer cascade-forward model, the input layer is connected to both the hidden layer and output layer as shown in Fig. 4. This additional connection improves the learning rate of the model to obtain the required outputs with minimum computational time. The nonlinear and linear activation functions are applied to the hidden layers and an output layer respectively to reach the optimized status. The generalized mathematical expression can be expressed as follows:

where: O is the output, W is the synaptic weight, X is the input signal, B is the bias, \({\mathrm{\varphi }}_{\mathrm{i}}\) is the activation function from the input layer to the output layer, \({\mathrm{\varphi }}_{\mathrm{o}}\) is the activation function from the hidden layer to the output layer, and \(\mathrm{\varphi }\) is the activation function from the input layer to the hidden layer.
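The direct input-to-output connection that distinguishes the cascade-forward model can be illustrated with a short sketch. It assumes a single hidden layer with a tanh transfer function and a linear output node. The function name, argument layout, and numeric values are hypothetical, and the study's exact equation may combine the terms differently.

```python
import math

def cascade_forward(x, w_hidden, b_hidden, w_out, b_out, w_direct):
    """Three-layer cascade-forward pass with a scalar, linear output.

    hidden_j = tanh(b_hidden[j] + sum_i w_hidden[j][i] * x[i])
    output   = b_out + sum_j w_out[j] * hidden_j + sum_i w_direct[i] * x[i]
    """
    hidden = [
        math.tanh(b + sum(w * xi for w, xi in zip(row, x)))
        for row, b in zip(w_hidden, b_hidden)
    ]
    via_hidden = sum(w * h for w, h in zip(w_out, hidden))
    direct = sum(w * xi for w, xi in zip(w_direct, x))  # input -> output link
    return b_out + via_hidden + direct

# Hypothetical parameters: with zero direct weights the model reduces
# to an ordinary feed-forward pass.
x = [1.0, 2.0]
plain = cascade_forward(x, [[0.5, 0.25]], [0.0], [2.0], 0.1, [0.0, 0.0])
cascaded = cascade_forward(x, [[0.5, 0.25]], [0.0], [2.0], 0.1, [1.0, -1.0])
```

Setting the direct weights to zero recovers the feed-forward result, which makes the contribution of the extra connection easy to isolate.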

Elman neural network

Elman net is a recurrent type of neural network that was designed to recognize and predict the learned values or events39. This neural network mainly consists of an input layer, hidden layer, context layer, and output layer as shown in Fig. 5. Like a conventional multilayer perceptron, Elman net also has connections among the input layer, hidden layer, and output layer; however, an additional “context layer” is added to its architecture. The outputs of the hidden layer are fed to the context layer as input signals. The context layer processes these input signals, stores the values from the previous time step, and feeds them forward to the hidden layer as the input signals. In the case of any delay, this time cycle uses the previously stored values in the current time step, which reduces the overall computational time. According to Elman 199040, the outputs of both the input layer and the context layer activate the hidden layer and then the outputs of the hidden layer are transmitted to the output layer. The hidden layer output units also activate the context layer. The output signals are compared with the teacher input signals and their estimated residuals are fed back for the adjustment of their synaptic weights. In the Elman net, the nonlinear sigmoid transfer function is applied to the hidden layer. While the output layer uses a linear purelin transfer function. The nonlinear-linear combination of transfer functions enhances the performance of this recurrent neural network. There must be a suitable number of neurons in the hidden layers to fit the function properly. This recurrent connection makes the Elman net favorable to detecting and generating time-varying patterns.
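The role of the context layer can be sketched as a single recurrent time step. The sketch assumes a sigmoid hidden layer, a linear output node, and a context layer that simply copies the previous hidden activations. The function names and parameter values are hypothetical.

```python
import math

def elman_step(x, context, w_in, w_ctx, b_hidden, w_out, b_out):
    """One time step; returns (output, new_context)."""
    hidden = []
    for j in range(len(b_hidden)):
        h = b_hidden[j]
        h += sum(w_in[j][i] * x[i] for i in range(len(x)))
        h += sum(w_ctx[j][k] * context[k] for k in range(len(context)))
        hidden.append(1.0 / (1.0 + math.exp(-h)))  # sigmoid hidden layer
    y = b_out + sum(w_out[j] * hidden[j] for j in range(len(hidden)))  # linear output
    return y, hidden  # hidden activations become the next context

def run_sequence(xs, n_hidden, params):
    """Feed a sequence of input vectors through the network."""
    context = [0.0] * n_hidden  # context starts empty
    ys = []
    for x in xs:
        y, context = elman_step(x, context, *params)
        ys.append(y)
    return ys

# Hypothetical one-neuron network fed the same input twice.
params = ([[1.0]], [[1.0]], [0.0], [1.0], 0.0)
ys = run_sequence([[0.0], [0.0]], 1, params)
```

The two outputs differ even though the inputs are identical, because the second step also sees the stored hidden state from the first.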

Particle swarm optimization (PSO)

Kennedy and Eberhart in 199541 proposed the Particle Swarm Optimization (PSO) algorithm to find an optimal solution. PSO works on the principles of the socio-biological behavior of birds in a flock42, 43. Each bird searches for food randomly and shares this information with the other birds in the flock, and this mutual collaboration leads the flock to the best hunt. The same scenario can be simulated to find the best solution in a multidimensional space. Being a metaheuristic algorithm, PSO tries to find a best solution that is very close to the true global optimum44,45,46.

In a population of P particles, the position and velocity of the jth particle at iteration i can be expressed as follows:

The position and velocity of each particle are updated at the next iteration:

where: U is the particle velocity, w is the inertia weight whose value is chosen between 0 and 1, c1 and c2 are the cognitive and social coefficients, r1 and r2 are random numbers between 0 and 1, pbest is the best position found so far by the particle itself, and gbest is the best position found so far by any particle in the swarm.
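The update rule can be sketched with the standard PSO form, in which the new velocity is \(w\,U + c_1 r_1 (pbest - X) + c_2 r_2 (gbest - X)\) and the new position is the old position plus the new velocity. Here X denotes the particle position, a symbol assumed for illustration. The test function, search bounds, iteration count, and inertia weight of 0.5 are also assumptions, while c1 = 1.5, c2 = 2.5, and 50 particles follow the settings reported later in the text.

```python
import random

def pso_minimize(f, dim, n_particles=50, n_iter=200, w=0.5, c1=1.5, c2=2.5,
                 lo=-5.0, hi=5.0, seed=0):
    """Minimize f over dim variables; returns (gbest, gbest_val, history)."""
    rng = random.Random(seed)
    X = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    U = [[0.0] * dim for _ in range(n_particles)]
    pbest = [x[:] for x in X]
    pbest_val = [f(x) for x in X]
    g = min(range(n_particles), key=lambda j: pbest_val[j])
    gbest, gbest_val = pbest[g][:], pbest_val[g]
    history = [gbest_val]
    for _ in range(n_iter):
        for j in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                U[j][d] = (w * U[j][d]
                           + c1 * r1 * (pbest[j][d] - X[j][d])   # cognitive pull
                           + c2 * r2 * (gbest[d] - X[j][d]))     # social pull
                X[j][d] += U[j][d]
            val = f(X[j])
            if val < pbest_val[j]:
                pbest[j], pbest_val[j] = X[j][:], val
                if val < gbest_val:
                    gbest, gbest_val = X[j][:], val
        history.append(gbest_val)
    return gbest, gbest_val, history

def sphere(x):
    """Simple test objective with its minimum at the origin."""
    return sum(v * v for v in x)

best_x, best_val, history = pso_minimize(sphere, dim=2)
```

For network training, the objective would be the prediction error as a function of the flattened weights and biases. The sketch updates gbest as soon as any particle improves, which is one of several common variants.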

Results and discussion

The behavior of rocks subjected to the excitation frequencies

To investigate the dynamic behavior of rocks, samples were tested non-destructively at their ambient conditions under a set of excitation frequencies ranging from 7 to 16 kHz. The intact rock samples may have hidden flaws, microcracks, internal defects, etc.47. The propagation of high-frequency stress waves through rock samples causes the plastic deformation of microcracks. Such alterations can make a stiffer rock weaker and vice versa48.

In this study rock samples were tested in both longitudinal and torsion modes. To anticipate the overall dynamic behavior of rocks, a ratio factor was determined by using the parameters measured in the longitudinal and torsion modes. Figure 6 shows the variations in the mean ratio factor values against increasing excitation frequencies. The ratio factor was determined in terms of the quality factor ratio (Qr), resonance frequency ratio (FRr), acoustic impedance ratio (Zr), oscillation decay factor ratio (αr), and dynamic Poisson’s ratio (v). The quality factor is a dimensionless parameter that describes the compactness of the rock. Figure 6a illustrates that the quality factor increases up to a certain level and then starts to decrease. This behavior signifies that an increasing excitation frequency produces the plastic deformation of microcracks, and the rock becomes stiffer. After getting a peak value, new microcracks start to develop that significantly affect the stiffness of the rock. Consequently, the quality factor declines.

Resonance is a phenomenon in which a material’s natural frequency synchronizes with the applied frequency6, so the material’s particles vibrate with greater amplitude. In a stiffer material, particles have less chance to vibrate with greater amplitude than in a loose material. Figure 6b demonstrates that the resonance frequency decreases to its lowest value and then starts to increase. The possible reason for this behavior is the plastic deformation of pre-existing cracks, which enhances the rock stiffness. The acoustic impedance and oscillation decay factor can be explained in the same manner. Both parameters describe the soundness of the rock. A shattered or internally disrupted rock would have a lag time for stress wave propagation49. Therefore, Fig. 6c and d show a decreasing trend for acoustic impedance and oscillation decay factor respectively. Such decreasing and increasing trends are attributed to the plastic deformation of cracks and the development of new microcracks respectively.

Poisson’s ratio is the ratio of the transverse strain to the longitudinal strain. Figure 6e shows that the Poisson’s ratio decreases as the stiffness of the material increases50. The plastic deformation reduces the transverse strain more than the longitudinal strain. Therefore, the entire fraction diminishes, which leads to a reduction in Poisson's ratio. These results were noted only for the selected carbonate and silicate rocks. There is no guarantee that similar results would be observed in other rocks, because each rock type is composed of different minerals and behaves differently under excitation frequencies.

Correlation and sensitivity analysis

Table 3 shows the bivariate correlation between the measured parameters. A negative sign indicates an inverse relationship and a positive sign a direct one. Poisson’s ratio, as the dependent variable, did not correlate strongly with the other variables; its correlation values ranged from − 0.19 to 0.16. A bivariate correlation with a Pearson coefficient (R) above 0.5 is treated as significant, and a strong correlation between predictors leads to overfitting of the model16. Resonance frequencies and quality factors correlated somewhat more strongly with each other, with values from 0.24 to 0.68. Oscillation decay factors and impedances had very strong correlations with one another, ranging from 0.89 to 0.99. Neural network-based models can accommodate highly correlated parameters without affecting model performance, which is why they have a competitive edge over multi-linear regression modeling. However, in this study, to avoid dimensionality and overfitting issues, the same parameters measured in longitudinal and torsion modes were divided to get unitless factor ratios. These ratio parameters were regressed against Poisson’s ratio using neural network modeling.
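The screening described above can be sketched as a pairwise Pearson check that flags predictor pairs whose absolute correlation exceeds 0.5. The function names and the small dataset are hypothetical and do not reproduce Table 3.

```python
import math

def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def flag_strong_pairs(columns, threshold=0.5):
    """Return (name_a, name_b, r) for every pair with |r| above threshold."""
    names = list(columns)
    flagged = []
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            r = pearson_r(columns[a], columns[b])
            if abs(r) > threshold:
                flagged.append((a, b, r))
    return flagged

# Hypothetical measurements (not the paper's data).
columns = {
    "Zp": [1.0, 2.0, 3.0, 4.0],
    "Zs": [2.0, 4.0, 6.0, 8.5],
    "v": [0.20, 0.18, 0.21, 0.19],
}
flagged = flag_strong_pairs(columns)
```

In this made-up example only the two impedance columns are flagged, mirroring the kind of pair that the ratio transformation is meant to collapse into a single input.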

Sensitivity analysis was performed to find the degree of importance of the input variables. In this technique, input variable values are varied to gauge their influence on the target variable51. In linear and nonlinear modeling, it helps to select more robust parameters for regression. In this study, the unitless factor ratios (quality factor ratio, resonance frequency ratio, impedance ratio, and oscillation decay factor ratio) were each regressed against Poisson’s ratio in a one-to-one relationship. Their model equations are given in Fig. 7. To evaluate the sensitivity of the input variables, a ± 50% variation with respect to the mean value was applied to the regressors. Figure 7a and b show that the quality factor ratio (range = 0.17–0.21) and oscillation decay factor ratio (range = 0.18–0.20) respectively are less sensitive to data variation and show smaller differences between predicted and estimated values (range = 0.10–0.29). On the other hand, Fig. 7c and d illustrate that the resonance frequency ratio (range = − 1.00–1.38) and impedance ratio (range = − 1.11–1.36) are more sensitive to data variability: even a ± 10% variation leads to high residual errors between predicted and estimated values (range = 0.10–0.29).

Data modeling and optimization

Apart from traditional linear and nonlinear regression analysis, this study focuses on using the artificial neural network for prediction modeling. For this purpose, backpropagation neural network algorithms including feed-forward, cascade-forward, and Elman were employed for the estimation of dynamic Poisson’s ratio. Overall, 15 neural network models were developed and one of them was selected for optimization. The number of neurons plays an important role in developing the best-fit model, and a suitable number can be selected by trial and error. In the case of the feed-forward neural network, 5 models were developed with the number of neurons varied from 10 to 50. The feed-forward model with 40 neurons was considered the best one, with a coefficient of determination (R2) of 0.783. Across the feed-forward models, R2 ranged from 0.662 to 0.783 (see Fig. 8). Validation against independent data also favored the model with 40 neurons, which had a coefficient of determination (R2) of 0.797, whereas the rest of the models had R2 values from 0.692 to 0.758.
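For readers comparing models on R2, a minimal sketch of one common definition is given below. It assumes the form 1 − SS_res/SS_tot, which can differ from the squared Pearson coefficient that some toolboxes report. The text does not state which definition was used, and the sample values are hypothetical.

```python
def r_squared(target, predicted):
    """Coefficient of determination, defined as 1 - SS_res / SS_tot."""
    mean_t = sum(target) / len(target)
    ss_res = sum((t - p) ** 2 for t, p in zip(target, predicted))
    ss_tot = sum((t - mean_t) ** 2 for t in target)
    return 1.0 - ss_res / ss_tot

# Hypothetical targets and predictions (not the paper's data).
target = [1.0, 2.0, 3.0, 4.0]
predicted = [1.1, 1.9, 3.2, 3.8]
score = r_squared(target, predicted)
```

A perfect prediction gives 1.0, and predicting the mean of the targets for every sample gives 0.0.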

In the case of the cascade-forward neural network, 5 models were developed by varying the number of neurons from 10 to 50. Among these, the cascade-forward model with 40 neurons performed best and had the highest R2 (i.e., 0.531); R2 across the models ranged from 0.391 to 0.531. All the models were validated with the experimentally acquired data, and the model with 40 neurons again had the highest coefficient of determination (i.e., R2 = 0.543). The validation results of the cascade-forward neural network models were weaker than those of the feed-forward neural network models. As illustrated in Fig. 9, their validation R2 values ranged from 0.389 to 0.543.

For the Elman neural network, 5 models were developed with the number of neurons varied from 10 to 50. Unlike the feed-forward and cascade-forward cases, the Elman model with 20 neurons had the highest R2 (0.599), while R2 across the models ranged from 0.228 to 0.599. An independent dataset was used for the validation of these models. Results showed that the model with 20 neurons had a higher value of R2 (i.e., R2 = 0.601) than the rest of the models. The R2 of the remaining Elman models ranged from 0.237 to 0.573, as shown in Fig. 10.

It is evident from the above discussion that the feed-forward algorithm produced better outcomes than the cascade-forward and Elman algorithms. Based on the model validation results, the feed-forward model with 40 neurons was considered the best one and was optimized further to obtain a higher-quality model (see Fig. 11a). Figure 11b shows the relationship between the error variance and the number of neurons. In every case, the feed-forward models had the lowest error variance. In the cascade-forward and Elman models, however, a slight fluctuation in error variance was observed with an increasing number of neurons. The cascade-forward models showed their minimum error variance at 10, 30, and 50 neurons, whereas the Elman models showed lower error variance at 20 and 40 neurons. This indicates that the number of neurons considerably affects model performance and must be selected after rigorous analysis.

Among all backpropagation algorithms, the feed-forward model with 40 neurons was chosen for optimization because of its best performance. The optimization was carried out using the particle swarm optimization algorithm. PSO has a competitive edge over traditional optimizers due to its simplicity, ease of implementation, robustness, and computational accuracy. The cognitive and social coefficients were set to 1.5 and 2.5 respectively, whereas the inertia weight and random numbers were selected between 0 and 1. Overall, 1000 iterations were carried out with a swarm size of 50. Under these conditions, the feed-forward model was trained to get a plausible model. Figure 12a shows that after the optimization the coefficient of determination of the model improved from 0.783 to 0.96. Model validation with an experimentally acquired dataset showed that R2 increased from 0.797 to 0.954 (see Fig. 12b). Figure 12c illustrates the error histogram of the model after optimization, that is, the error between predicted and target values. In this case, the bin size and instances were set at 20 and 14 respectively. The histogram shows minimal error values across the instances, which implies that the model was good enough for prediction.

Conclusions

In this research, the dynamic behavior of rocks was investigated under ambient conditions. The values of Qp, Qs, FRp, FRs, Zp, Zs, αp, αs, and v ranged over 20–28, 10–17, 1.56–2.68 kHz, 1.02–1.76 kHz, 9–20 MPa·s/m, 6–13 MPa·s/m, 0.24–0.33 kHz, 0.19–0.34 kHz, and 0.16–0.22, respectively. The mean values of the ratio factors Qr, FRr, Zr, and αr and of v ranged over 1.366–1.773, 1.524–1.562, 1.523–1.561, 0.906–1.612, and 0.160–0.218, respectively. The dynamic response of the rocks reveals that their stiffness first increases with excitation frequency and then starts to decrease due to the development of new microcracks.

After the evaluation of the dynamic behavior of rocks, prediction modeling was performed to estimate the dynamic Poisson’s ratio. It was regressed on Qr, FRr, Zr, and αr using three backpropagation neural network algorithms: feed-forward, cascade-forward, and Elman. Overall, 15 models were developed by varying the number of neurons from 10 to 50. For the feed-forward and cascade-forward algorithms, the models with 40 neurons were found more plausible than the rest. In the learning phase, their coefficients of determination were 0.783 and 0.531, respectively, whereas in the validation stage they were 0.797 and 0.543, respectively. For the Elman neural network algorithm, the model with 20 neurons was considered the best, with a coefficient of determination of 0.774. Among all models, the feed-forward model with 40 neurons performed best. Therefore, the feed-forward net was chosen for optimization to obtain a more robust model.
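The count of 15 models follows from crossing the three algorithms with five hidden-layer sizes. The short sketch below enumerates that grid; the step of 10 neurons is an assumption inferred from the neuron counts discussed for Fig. 11b (10, 20, 30, 40, and 50), and the variable names are illustrative.

```python
from itertools import product

ALGORITHMS = ("feed-forward", "cascade-forward", "Elman")
NEURON_COUNTS = range(10, 51, 10)  # 10, 20, 30, 40, 50 (assumed step of 10)

# One configuration per (algorithm, number of neurons) pair.
configs = list(product(ALGORITHMS, NEURON_COUNTS))

for algorithm, neurons in configs:
    print(f"{algorithm}: {neurons} neurons")
print(len(configs))  # 3 algorithms x 5 neuron counts = 15
```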

Results showed that optimizing the selected model with the particle swarm optimization algorithm further improved its quality. After training and validation of the model, its Pearson’s correlation coefficient and coefficient of determination increased from 0.885 to 0.980 and from 0.797 to 0.954, respectively.
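For reference, the two quality measures quoted above can be computed as in the following sketch. The formulas are the standard definitions; the function names and the sample values are hypothetical and are not taken from the study's dataset.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson's correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def r_squared(target, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_t = sum(target) / len(target)
    ss_res = sum((t - p) ** 2 for t, p in zip(target, predicted))
    ss_tot = sum((t - mean_t) ** 2 for t in target)
    return 1.0 - ss_res / ss_tot


# Hypothetical target and predicted Poisson's ratio values (illustration only).
target = [0.16, 0.18, 0.20, 0.22]
predicted = [0.17, 0.18, 0.19, 0.22]

print(pearson_r(target, predicted))
print(r_squared(target, predicted))
```

When the coefficient of determination is taken from a linear fit of predicted against target values, it equals the square of Pearson's coefficient. As a plain arithmetic check, 0.885² ≈ 0.783 and 0.980² ≈ 0.960, which coincide with the learning-phase coefficients of determination reported before and after optimization.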

The outcomes of this study show that optimization makes the neural network model more accurate and robust. This approach can be used to solve several problems regarding data modeling. Furthermore, the results on rock behavior under dynamic cyclic loading can serve as a reference in the design of mega-structures and for anticipating the response of construction materials subjected to dynamic loading.

Data availability

The datasets generated and/or analyzed during the current study are not publicly available due to some restrictions but are available from the corresponding author on reasonable request.

References

Duncan, J. M. Factors of safety and reliability in geotechnical engineering. J. Geotech. Geoenviron. Eng. 126(4), 307–316 (2000).

Branets, L. V., Ghai, S. S., Lyons, S. L. & Wu, X. H. Challenges and technologies in reservoir modeling. Commun. Comput. Phys. 6(1), 1 (2009).

Ahmed, M. F., Waqas, U., Arshad, M. & Rogers, J. D. Effect of heat treatment on dynamic properties of selected rock types taken from the Salt Range in Pakistan. Arab. J. Geosci. 11, 1–13 (2018).

Shreyas, S. K. & Dey, A. Application of soft computing techniques in tunnelling and underground excavations: State of the art and future prospects. Innov. Infrastruct. Solut. 4, 1–15 (2019).

Zhang, Q. B. & Zhao, J. A review of dynamic experimental techniques and mechanical behaviour of rock materials. Rock Mech. Rock Eng. 47, 1411–1478 (2014).

Kramer, S. L. Geotechnical Earthquake Engineering (Prentice Hall, 1996).

Unlu, T. & Gercek, H. Effect of Poisson’s ratio on the normalized radial displacements occurring around the face of a circular tunnel. Tunn. Undergr. Space Technol. 18(5), 547–553 (2003).

Kwon, S., Cho, W. J. & Lee, J. O. An analysis of the thermal and mechanical behavior of engineered barriers in a high-level radioactive waste repository. Nucl. Eng. Technol. 45(1), 41–52 (2013).

Ji, S. et al. Poisson’s ratio and auxetic properties of natural rocks. J. Geophys. Res. Solid Earth 123(2), 1161–1185 (2018).

Howard, G. C. & Fast, C. R. Hydraulic fracturing, monograph series. Soc. Pet. Eng. AIME 2, 34 (1970).

ASTM C215. Standard test method for fundamental transverse, longitudinal, and torsional resonant frequencies of concrete specimens. ASTM Stand. 1–7 (2019).

ASTM D3148. Standard test method for elastic moduli of intact rock core specimens in uniaxial compression. ASTM Stand. (2002).

Kolesnikov, Y. I. Dispersion effect of velocities on the evaluation of material elasticity. J. Min. Sci. 45, 347–354 (2009).

Wang, Z. Dynamic versus static elastic properties of reservoir rocks. In Seismic and Acoustic Velocities in Reservoir Rocks (eds Wang, Z. & Nur, A. M.) (2001).

Zhang, J. J. & Bentley, L. Factors determining Poisson’s ratio. CREWES Res. Rep. 17, 123–139 (2005).

Waqas, U. & Ahmed, M. F. Prediction modeling for the estimation of dynamic elastic Young’s modulus of thermally treated sedimentary rocks using linear–nonlinear regression analysis, regularization, and ANFIS. Rock Mech. Rock Eng. 53, 5411–5428 (2020).

Khandelwal, M. & Singh, T. N. Predicting elastic properties of schistose rocks from unconfined strength using intelligent approach. Arab. J. Geosci. 4, 435–442 (2011).

Momeni, E., Armaghani, D. J., Hajihassani, M. & Amin, M. F. M. Prediction of uniaxial compressive strength of rock samples using hybrid particle swarm optimization-based artificial neural networks. Measurement 60, 50–63 (2015).

Mohamad, E. T., Armaghani, D. J., Momeni, E., Yazdavar, A. H. & Ebrahimi, M. Rock strength estimation: A PSO-based BP approach. Neural Comput. Appl. 30, 1635–1646 (2018).

Abdi, Y., Garavand, A. T. & Sahamieh, R. Z. Prediction of strength parameters of sedimentary rocks using artificial neural networks and regression analysis. Arab. J. Geosci. 11, 1–11 (2018).

Abdulraheem, A. Prediction of Poisson's ratio for carbonate rocks using ANN and fuzzy logic type-2 approaches. In: International Petroleum Technology Conference, OnePetro. (2019).

Mohamadian, N. et al. A geomechanical approach to casing collapse prediction in oil and gas wells aided by machine learning. J. Pet. Sci. Eng. 196, 107811 (2021).

Kumar, B. R., Vardhan, H., Govindaraj, M. & Saraswathi, S. P. Artificial neural network model for prediction of rock properties from sound level produced during drilling. Geomech. Geoeng. 8(1), 53–61 (2013).

Zhang, L. Engineering Properties of Rocks (Butterworth-Heinemann, 2016).

Al-Anazi, B. D., Al-Garni, M. T., Muffareh, T., & Al-Mushigeh, I. Prediction of Poisson's ratio and Young's modulus for hydrocarbon reservoirs using alternating conditional expectation Algorithm. In: SPE Middle East Oil and Gas Show and Conference, OnePetro (2011).

Asoodeh, M. Prediction of Poisson’s ratio from conventional well log data: A committee machine with intelligent systems approach. Energy Sources A 35(10), 962–975 (2013).

Shi, Y. Particle swarm optimization: developments, applications and resources. In: Proceedings of the 2001 Congress on Evolutionary Computation, IEEE Cat. No. 01TH8546. 1, 81–86 (2001).

Anemangely, M., Ramezanzadeh, A., Tokhmechi, B., Molaghab, A. & Mohammadian, A. Drilling rate prediction from petrophysical logs and mud logging data using an optimized multilayer perceptron neural network. J. Geophys. Eng. 15(4), 1146–1159 (2018).

Najibi, A. R. & Asef, M. R. Prediction of seismic-wave velocities in rock at various confining pressures based on unconfined data. Geophysics 79(4), D235–D242 (2014).

Behnia, D., Behnia, M., Shahriar, K. & Goshtasbi, K. A new predictive model for rock strength parameters utilizing GEP method. Procedia Eng. 191, 591–599 (2017).

Parsajoo, M., Armaghani, D. J., Mohammed, A. S., Khari, M. & Jahandari, S. Tensile strength prediction of rock material using non-destructive tests: A comparative intelligent study. Transp. Geotech. 31, 100652 (2021).

Abdel Azim, R. & Aljehani, A. Neural network model for permeability prediction from reservoir well logs. Processes 10(12), 2587 (2022).

Hopfield, J. J. Neural networks and physical systems with emergent collective computational abilities. Proc. Natl. Acad. Sci. 79(8), 2554–2558 (1982).

Sabah, M. et al. A machine learning approach to predict drilling rate using petrophysical and mud logging data. Earth Sci. Inf. 12, 319–339 (2019).

Anemangely, M., Ramezanzadeh, A. & Behboud, M. M. Geomechanical parameter estimation from mechanical specific energy using artificial intelligence. J. Petrol. Sci. Eng. 175, 407–429 (2019).

Billings, S. A. Nonlinear System Identification: NARMAX Methods in the Time, Frequency, and Spatio-Temporal Domains (Wiley, 2013).

Aggarwal, C. C. Neural Networks and Deep Learning (Springer, 2018).

Schmidhuber, J. Deep learning in neural networks: An overview. Neural Netw. 61, 85–117 (2015).

Fausett, L. V. Fundamentals of Neural Networks: Architectures, Algorithms and Applications (Pearson Education India, 2006).

Elman, J. L. Finding structure in time. Cogn. Sci. 14(2), 179–211 (1990).

Kennedy, J. & Eberhart, R. Particle swarm optimization. Proc. ICNN’95-Int. Conf. Neural Netw. 4, 1942–1948 (1995).

Ashrafi, S. B., Anemangely, M., Sabah, M. & Ameri, M. J. Application of hybrid artificial neural networks for predicting rate of penetration (ROP): A case study from Marun oil field. J. Petrol. Sci. Eng. 175, 604–623 (2019).

Sabah, M., Mehrad, M., Ashrafi, S. B., Wood, D. A. & Fathi, S. Hybrid machine learning algorithms to enhance lost-circulation prediction and management in the Marun oil field. J. Petrol. Sci. Eng. 198, 108125 (2021).

Matinkia, M., Hashami, R., Mehrad, M., Hajsaeedi, M. R. & Velayati, A. Prediction of permeability from well logs using a new hybrid machine learning algorithm. Petroleum 9(1), 108–123 (2023).

Assari, M., Anemangaly, M. & Ramezanzadeh, A. Shear wave velocity prediction from petrophysical logs using MLP-PSO algorithm. In: 4th International Workshop on Rock Physics, (2017).

Poli, R., Kennedy, J. & Blackwell, T. Particle swarm optimization: An overview. Swarm Intell. 1, 33–57 (2007).

Waqas, U., Rashid, H. M. A., Ahmed, M. F., Rasool, A. M. & Al-Atroush, M. E. Damage characteristics of thermally deteriorated carbonate rocks: A review. Appl. Sci. 12(5), 2752 (2022).

Liu, E., Huang, R. & He, S. Effects of frequency on the dynamic properties of intact rock samples subjected to cyclic loading under confining pressure conditions. Rock Mech. Rock Eng. 45, 89–102 (2012).

Walsh, J. B. The effect of cracks on the uniaxial elastic compression of rocks. J. Geophys. Res. 70(2), 399–411 (1965).

Gercek, H. Poisson’s ratio values for rocks. Int. J. Rock Mech. Min. Sci. 44(1), 1–13 (2007).

Frey, H. C. & Patil, S. R. Identification and review of sensitivity analysis methods. Risk Anal. 22(3), 553–578 (2002).

Acknowledgements

This research is supported by the Structures and Material (S&M) Research Lab of Prince Sultan University.

Author information

Contributions

Conceptualization and Original Draft Preparation, U.W. and H.M.A.R.; writing—review and editing, M.F.A., A.M.R. and M.E.A.-A. All authors have read and agreed to the published version of the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Waqas, U., Ahmed, M.F., Rashid, H.M.A. et al. Optimization of neural-network model using a meta-heuristic algorithm for the estimation of dynamic Poisson’s ratio of selected rock types. Sci Rep 13, 11089 (2023). https://doi.org/10.1038/s41598-023-38163-0

DOI: https://doi.org/10.1038/s41598-023-38163-0
