Abstract

Fundus images captured in real time for detecting multiple diseases are prone to quality issues such as poor illumination and noise, which reduce the visibility of anomalies. Enhancing retinal fundus images is therefore essential for improving the prediction rate of eye diseases. In this paper, we propose a Lab color space-based technique for retinal image enhancement. Existing works do not consider the relation between the color spaces of a fundus image when selecting the channel on which to perform retinal image enhancement. Our unique contribution is to use the color dominance of an image, quantified by the distribution of information in the blue channel, to perform enhancement in Lab space, followed by a series of steps that optimize overall brightness and contrast. The test set of the Retinal Fundus Multi-disease Image Dataset is used to evaluate the performance of the proposed enhancement technique in identifying the presence or absence of retinal abnormalities. The proposed technique achieved an accuracy of 89.53 percent.


Introduction

According to the 2019 World Report on Vision by the World Health Organization (WHO), of the estimated 2.2 billion visually impaired people worldwide, 1 billion cases of vision impairment could have been prevented or treated1. The initial non-invasive procedure in a routine eye clinical setting is to capture retinal fundus images and analyze them for anomalies. Examination of the eye can also provide an early indication of other diseases such as hypertension, diabetes, and cardiovascular diseases2,3,4. Thus, screening and examination of the eye, along with proper treatment, help prevent vision loss and protect against the risk of other diseases.

Typical quality issues in fundus images are caused by noise, illumination, contrast, and sharpened regions within the image. Ophthalmologists need to view the features of retinal images to suggest appropriate treatment. Improper lighting may result in dark or bright images, which reduces the visibility of anomalies5. Image enhancement is therefore an essential step in the image pre-processing stage: it overcomes illumination or contrast issues in captured retinal images and improves the visibility of anomalies6. Existing enhancement algorithms follow three main approaches: histogram-based, transformation-based, and filter-based7. Among the histogram-based approaches, Contrast Limited Adaptive Histogram Equalization (CLAHE) has been found to be effective8,9,10. Enhancement techniques are mainly applied in one of three ways: (1) converting the color image to grayscale and enhancing the grayscale image; (2) splitting a color space into its channels (e.g., splitting the BGR (Blue, Green, and Red) color space into blue, green, and red channels), enhancing an individual channel, and merging the enhanced channel back; or (3) applying the enhancement directly on the color space11,12. Setiawan et al. chose the green channel of the RGB (Red, Green, and Blue) color model and applied CLAHE5. Alwazzan et al. applied a Wiener filter followed by CLAHE on the green channel and merged the result with the red and blue channels of the RGB color model13. Jin et al. converted the input image from RGB to the Lab color space and applied CLAHE on its normalized individual components14. Lab is also called the CIELAB color space and is defined by the International Commission on Illumination (CIE); its L and ab components represent lightness and chromaticity, respectively. The “Related works” section discusses further research in this domain.
Most existing retinal enhancement methods focus on improving contrast by choosing the green channel or the luminosity channel. However, the color information of a retinal image varies with the retinal disease a patient is suffering from, as well as with other image quality issues. Choosing only the green channel can lose the information in the optic disc and information about anomalies such as drusen and cotton wool spots. It is therefore important to base the choice of channel for enhancement on the color dominance of the retinal image, selecting the channel that displays more artifacts.

The unique contribution of this paper is to use the relation between color spaces to identify color dominance in a retinal image. The RGB and Lab color spaces are used to select the channel to be enhanced, so that fundus images are enhanced efficiently. The variance of the blue channel is calculated to identify the color dominance of the selected retinal image. Depending on this value, either the a* or the b* channel of the Lab color space is chosen to enhance its artifacts. Existing research primarily uses the L channel of Lab space to enhance the contrast of fundus images, and the other two channels are generally less explored for image enhancement. In this research, by contrast, the information in the a* and b* channels is used to enhance a retinal image dataset covering multiple diseases. Instead of considering only the overall average value of the performance metrics, this paper analyzes the metrics for each retinal disease category individually. This shows how suitable the proposed image enhancement is for multiple fundus diseases with different anomalies.

The rest of this paper is organized as follows. Sections “Related works” and “Proposed enhancement method” present an overview of related works and the proposed enhancement methodology. The experiments and the discussion of results are presented in Sections “Results” and “Discussion”. Finally, the conclusion of this work is given in Section “Conclusion”.

Related works

Image enhancement is an essential process in the design of computer-aided diagnosis solutions, and retinal images are particularly susceptible to image quality issues. Over the years, researchers have experimented with different methods to enhance the visibility of artifacts in fundus images. Gupta et al.15 proposed an enhancement technique that applies adaptive gamma correction on the luminosity channel of Lab color space. Its weights are calculated from the cumulative distribution of the histogram of input image pixels. The contrast of the enhanced image in Lab space is further improved by applying a quantile-based histogram. On the MESSIDOR dataset, the authors achieved a PSNR of 27.67 and an SSIM of 0.66 with a quantile value of 3, and a PSNR of 28.40 and an SSIM of 0.69 with a quantile value of 5. Mohammed et al.16 applied CLAHE on the normalized luminosity channel of Lab space after segmenting the retinal region. The enhanced luminosity channel is rescaled and merged with the other two chromatic channels of Lab color space. The authors achieved a PSNR of 24.42, a local contrast index of 0.57, and an entropy of 5.63. Zhou et al.7 proposed fundus image enhancement based on luminosity and contrast. The authors applied gamma correction on the luminance gain matrix, which is obtained by converting the image from RGB to HSV color space. The resulting image is converted to Lab color space via an intermediate conversion to RGB. CLAHE is applied on the L channel, and the output is converted to RGB color space. From a private dataset of 4000 images, 961 poor-quality images were extracted and tested. The average image quality assessment score for low-quality images improved from 0.0404 to 0.4565. Kumar et al.20 followed a similar approach but applied a weighted average histogram on the luminosity channel instead of CLAHE.
The authors assessed the enhancement using the following metrics: edge-based contrast measure (EBCM), contrast-enhanced image quality (CEIQ), naturalness image quality evaluator (NIQE), visual saliency-induced index (VSI), and modified measure of enhancement (MEME). Singh et al.17 addressed the non-uniform illumination problem with two radiance-based histogram equalization techniques for retinal vessel enhancement, RIHE-RRVE and RIHE-RVE; one is recursive and the other non-recursive. A tunable parameter is estimated to split the histogram into sub-bands and to calculate the radiance value. If the radiance value is below the threshold, histogram equalization is applied. Performance was evaluated on the DRIVE, STARE, and CHASE databases using entropy, PSNR, Euclidean measure, and visual quality inspection. Qureshi et al.18 converted the RGB image to CIECAM02 color space and converted the lightness component of this color space to grayscale. Texture features of the fundus image are then enhanced by applying a non-linear contrast enhancement technique on the resulting grayscale image. Evaluated on all images of the MESSIDOR and DRIVE datasets, the method achieved mean values of 4.60 entropy, 23.78 PSNR, and 8.78 contrast-to-noise ratio. Dissopa et al.19 enhanced local image contrast by applying CLAHE in Lab space. This was followed by histogram rescaling and stretching, which standardize the brightness range to Hubbard’s brightness range of fundus images for different histogram clip limits. Performance was measured in terms of quaternion structural similarity, global contrast factor, and lightness order error. In a technique by Wang et al.21, the fundus image is decomposed into three layers: base, detail, and noise.
A visual adaptation model then performs three operations. It corrects non-uniform illumination using a luminance map at the base layer, enhances the detail layer through weighted fusion, and denoises the noise layer. The authors calculated the local contrast index and entropy for quantitative assessment. To improve blurred retinal images, Xiong et al.22 applied separate techniques to the background and foreground of 319 images. Background pixels are estimated using an illumination map and a transmission map. Foreground pixels are enhanced by combining Mahalanobis distance and entropy-based enhancement methods.

Table 1 presents an overall comparative analysis of the discussed image enhancement techniques. The analysis shows that current research mainly applies enhancement to the luminosity channel. To the best of our knowledge, existing works have not quantified color information to guide image enhancement. In the proposed method, we quantify the spread of information in the color channels by calculating the variance, and we perform image enhancement based on this color information.

Proposed enhancement method

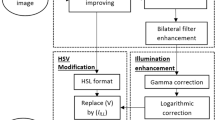

The proposed retinal fundus image enhancement method consists of two stages, Stage 1 and Stage 2, whose flow is presented in Figure 1. Stage 1 focuses on selecting the color channel for image enhancement. Stage 2 focuses on noise removal and on brightness and contrast optimization.

Fundus image dataset

The Retinal Fundus Multi-Disease Image Dataset (RFMiD) is chosen in this research to evaluate the proposed method. It contains images of different color dominance, and the proposed method is based on color dominance. RFMiD is recent (published in 2021) and, to the best of our knowledge, the only publicly available dataset that includes 45 retinal disease categories plus one set of healthy fundus images23. Figure 2 shows samples of retinal images from the RFMiD dataset with different resolutions and varied color dominance. The images were captured with one of three cameras, at resolutions of 4288 × 2848, 2048 × 1536, and 2144 × 1424. The RFMiD dataset contains 1920 training images, 640 test images, and 640 validation images. This paper evaluates the effectiveness of the enhancement technique for binary classification of fundus images as normal or disease-affected. Table 2 shows the distribution of healthy and unhealthy retinal images. To assess whether the proposed enhancement method suits other publicly available datasets, we tested the algorithm on the DRIVE and MESSIDOR datasets; the results are tabulated in Tables 7, 8, and 9.

Stage 1

The input retinal images from the RFMiD dataset have different resolutions. Each retinal image is cropped by applying a square mask to the retinal region. The cropped retinal image in RGB color space is split into its red, green, and blue channels, and the variance of the blue channel is calculated using Eq. (1).
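Eq. (1) is assumed here to be the standard population variance of the blue channel. Under that assumption, it can be written as:

\[
\sigma^2 = \frac{1}{mn}\sum_{i=1}^{m}\sum_{j=1}^{n}\left(X_{i,j} - \mu\right)^2,
\qquad
\mu = \frac{1}{mn}\sum_{i=1}^{m}\sum_{j=1}^{n} X_{i,j}
\]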

where \(X_{i,j}\), \(\mu\), m, and n represent the individual pixels, the mean, and the number of rows and columns of the blue channel, respectively.
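A minimal NumPy sketch of the crop and variance step follows. The text does not say how the square mask is located. The bounding-box crop below is therefore a hypothetical stand-in that treats pixels whose brightest channel exceeds an assumed threshold of 10 as retina. Images are assumed to be 8-bit arrays in OpenCV's BGR channel order, so index 0 is the blue channel.

```python
import numpy as np

def crop_retina(bgr: np.ndarray, thresh: int = 10) -> np.ndarray:
    """Hypothetical crop: bounding box of pixels brighter than `thresh`."""
    fg = bgr.max(axis=2) > thresh                 # foreground (retina) mask
    rows = np.flatnonzero(fg.any(axis=1))
    cols = np.flatnonzero(fg.any(axis=0))
    if rows.size == 0:                            # no retina found: leave unchanged
        return bgr
    return bgr[rows[0]:rows[-1] + 1, cols[0]:cols[-1] + 1]

def blue_variance(bgr: np.ndarray) -> float:
    """Population variance of the blue channel, as in Eq. (1)."""
    blue = bgr[..., 0].astype(np.float64)         # BGR order: index 0 is blue
    mu = blue.mean()
    return float(np.mean((blue - mu) ** 2))       # (1/mn) * sum (X_ij - mu)^2
```

The variance is computed in float64, which avoids the overflow that squaring `uint8` differences directly would cause.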

The variance measure is selected to capture the spread (variability) of the data. Table 3 shows the variance calculated for each channel of the RGB, HSV, and Lab color spaces. Of all the channels listed in Table 3, the variance of the blue channel shows the most direct relation with the color dominance of fundus images. Red-dominant images have low blue variance, whereas non-red-dominant images have high blue variance. Both human vision and the Lab color space are based on the opponent color model. Because Lab color space reflects human visual perception, it is chosen in this research. The a* channel of Lab space encodes the red-green axis of an image, and the b* channel encodes the yellow-blue axis. The retinal image in RGB color space is therefore converted to Lab space using the transformations described in Eqs. (2)–(5)24. This conversion passes through the intermediate X, Y, and Z components of the CIE XYZ color space, and \({X_n}\), \({Y_n}\), \({Z_n}\) represent the CIE XYZ tristimulus values of the reference white point.
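For reference, Eqs. (2)–(5) are assumed here to follow the standard CIE definitions. R, G, and B are taken as linear values in [0, 1], and the matrix is the commonly used sRGB/D65 one. The exact coefficients in the cited reference may differ.

\[
\begin{bmatrix} X \\ Y \\ Z \end{bmatrix} =
\begin{bmatrix}
0.4125 & 0.3576 & 0.1804 \\
0.2127 & 0.7152 & 0.0722 \\
0.0193 & 0.1192 & 0.9503
\end{bmatrix}
\begin{bmatrix} R \\ G \\ B \end{bmatrix}
\]
\[
L^* = 116\, f\!\left(\frac{Y}{Y_n}\right) - 16, \qquad
a^* = 500\left[f\!\left(\frac{X}{X_n}\right) - f\!\left(\frac{Y}{Y_n}\right)\right], \qquad
b^* = 200\left[f\!\left(\frac{Y}{Y_n}\right) - f\!\left(\frac{Z}{Z_n}\right)\right]
\]
\[
f(t) = \begin{cases} t^{1/3}, & t > \left(\tfrac{6}{29}\right)^3 \\[4pt] \dfrac{t}{3\left(\tfrac{6}{29}\right)^2} + \dfrac{4}{29}, & \text{otherwise} \end{cases}
\]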


After repeated experiments analyzing the relation between blue variance and image color dominance, a threshold of \(\theta = 1500\) was fixed. For red-dominant images, i.e., those with blue variance \(\sigma^2 \le \theta\), CLAHE is applied on the a* channel of Lab space. For non-red-dominant images, with \(\sigma^2 > \theta\), CLAHE is applied on the b* channel of Lab space. The enhanced channel is merged with the two unchanged Lab channels, and the result is converted back to RGB. The transformation from Lab to RGB color space involves an intermediate conversion to CIE XYZ color space, described in Eqs. (6)–(9)24:
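Under the same assumption of standard CIE definitions, Eqs. (6)–(9) invert the forward conversion. Here \(M\) denotes the 3 × 3 RGB-to-XYZ matrix of Eqs. (2)–(5):

\[
f^{-1}(t) = \begin{cases} t^{3}, & t > \tfrac{6}{29} \\[4pt] 3\left(\tfrac{6}{29}\right)^2\left(t - \tfrac{4}{29}\right), & \text{otherwise} \end{cases}
\]
\[
Y = Y_n\, f^{-1}\!\left(\frac{L^*+16}{116}\right), \qquad
X = X_n\, f^{-1}\!\left(\frac{L^*+16}{116} + \frac{a^*}{500}\right), \qquad
Z = Z_n\, f^{-1}\!\left(\frac{L^*+16}{116} - \frac{b^*}{200}\right)
\]
\[
\begin{bmatrix} R \\ G \\ B \end{bmatrix} = M^{-1}\begin{bmatrix} X \\ Y \\ Z \end{bmatrix}
\]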


where R, G, and B are the components of RGB color space; X, Y, and Z are the components of CIE XYZ color space; and \({X_n}\), \({Y_n}\), \({Z_n}\) represent the CIE XYZ tristimulus values of the reference white point.

The output obtained by applying Algorithm 1 to the input fundus image is passed to the next phase, Stage 2.

Stage 2

Stage 2 focuses on noise removal and on brightness and contrast optimization of the output image from Stage 1. Of the red, green, and blue channels of the RGB color space, the green channel is chosen for further improvement because it is proportional to the L channel of the Lab color space7. The green channel also shows more artifacts than the other two channels and is less prone to noise. In this research, therefore, the modified (RGB)’ image from Stage 1 is split into its channels, and CLAHE is applied on the green channel. The enhanced green channel is then merged with the red and blue channels. Because the image has undergone repeated enhancements, noise removal is essential. A bilateral filter removes noise using Eq. (10), in which the output image (\(X_{output}\)) is a weighted average of the pixels of the input image (\(X_{input}\))25.
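Eq. (10) is assumed here to be the standard bilateral filter. \(\mathcal{N}(i)\), \(\sigma_s\), and \(\sigma_r\) are illustrative symbols for the neighborhood of pixel \(i\) and for the spatial and range standard deviations. In that standard form, the output is:

\[
X_{output}[i] = \frac{\sum_{j \in \mathcal{N}(i)} w_{ij}\, X_{input}[j]}{\sum_{j \in \mathcal{N}(i)} w_{ij}},
\qquad
w_{ij} = \exp\!\left(-\frac{\lVert p_i - p_j \rVert^2}{2\sigma_s^2}\right)\exp\!\left(-\frac{\left(X_{input}[i] - X_{input}[j]\right)^2}{2\sigma_r^2}\right)
\]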


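where the weight \(w_{ij}\) is the product of two terms. The photometric term decreases with the intensity difference between pixels \(X_{input}[i]\) and \(X_{input}[j]\). The spatial term decreases with the Euclidean distance between them. \(p_i\) denotes the position of the \(i{\textrm{th}}\) pixel.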

A bilateral filter is preferred over the popular Gaussian filter because it preserves edges between regions of an image while reducing noise, by applying a non-linear function over the image pixels. The brightness and contrast of the filtered image are then auto-optimized by calculating the gain parameter (\(\alpha\)) and the bias parameter (\(\beta\)) using Eqs. (11)–(13). The values of \(\alpha\) and \(\beta\) are calculated automatically for each input image to produce the final enhanced retinal fundus image.

Method evaluation

The proposed enhancement technique is applied to the RFMiD image dataset to test disease prediction accuracy. A pre-trained VGG16 model is trained using transfer learning on the training set (1920 images). It is validated on the validation set (640 images) and evaluated on the test set (640 images) to estimate accuracy. Three layers are added on top of the VGG16 model: a fully connected layer with 512 nodes and a ReLU activation function, a dropout layer, and a final layer with one node and a sigmoid activation function. The model is trained with a stochastic gradient descent (SGD) optimizer, and dropout is used for regularization. The code for the proposed method was developed in Python, and the VGG16 model was trained on a Power Ai 9 server with 16 GB RAM at 8Hz. The experimental results are analyzed and discussed in the following sections.
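The classifier described above can be sketched in Keras. The text specifies the 512-unit ReLU layer, the dropout layer, the single sigmoid output, and the SGD optimizer. Everything else is an assumption: the 224 × 224 input size, the Flatten layer, the frozen convolutional base, the dropout rate of 0.5, the learning rate and momentum, and the helper name `build_vgg16_classifier`. Passing `weights=None` skips the ImageNet download, for example when only checking the architecture.

```python
from tensorflow import keras

def build_vgg16_classifier(weights="imagenet", input_shape=(224, 224, 3),
                           dropout=0.5, lr=1e-3):
    """Hypothetical binary (healthy vs. disease) classifier on top of VGG16."""
    base = keras.applications.VGG16(include_top=False, weights=weights,
                                    input_shape=input_shape)
    base.trainable = False  # assumed: freeze the pre-trained convolutional base
    x = keras.layers.Flatten()(base.output)
    x = keras.layers.Dense(512, activation="relu")(x)
    x = keras.layers.Dropout(dropout)(x)
    out = keras.layers.Dense(1, activation="sigmoid")(x)  # P(disease present)
    model = keras.Model(inputs=base.input, outputs=out)
    model.compile(optimizer=keras.optimizers.SGD(learning_rate=lr, momentum=0.9),
                  loss="binary_crossentropy", metrics=["accuracy"])
    return model
```

Training would then call `model.fit` on the enhanced training images with the validation images passed as `validation_data`.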

Results

After the proposed enhancement technique is applied, a pre-trained VGG16 model is trained and validated on the training and validation sets of the RFMiD dataset. It is then evaluated on the test set to identify the presence or absence of retinal abnormalities. Model performance is measured by accuracy and F1 score. Accuracy is the ratio of the number of correct predictions to the total number of predictions, and the F1 score is the harmonic mean of precision and recall. Visual analysis of the results is carried out both in RGB color space and in grayscale. Figure 3 compares the original input, the Stage 1 output, and the Stage 2 output in color. It also compares the grayscale input image with the grayscale output of the proposed technique.

Table 4 tabulates the distribution of retinal disorders in the RFMiD training dataset. The enhancement technique is evaluated on the training set of the RFMiD dataset using three metrics: mean square error (MSE), peak signal-to-noise ratio (PSNR), and Universal Quality Index (UQI). UQI combines loss of correlation, luminance distortion, and contrast distortion into a single metric26. The similarity between the original input image and the enhanced image is evaluated using the structural similarity index measure (SSIM) and the Pearson correlation coefficient27. The information variability of the input and enhanced images is estimated by comparing their Shannon entropy; a higher Shannon entropy indicates higher information variability in an image. Each metric is calculated using Eqs. (14)–(19) for each channel of the RGB color space and then averaged. The results are tabulated in Tables 5 and 6 and analyzed in the following section.
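The standard form of the mean square error, consistent with the symbols defined below, is:

\[
\mathrm{MSE} = \frac{1}{mn}\sum_{i=1}^{m}\sum_{j=1}^{n}\bigl(X(i,j) - Y(i,j)\bigr)^2
\]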

where X and Y represent enhanced and input images, respectively; m and n represent the number of rows and columns.
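The corresponding standard PSNR definition, in decibels, is:

\[
\mathrm{PSNR} = 10\log_{10}\frac{R^2}{\mathrm{MSE}}
\]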

where R represents the maximum fluctuation present in the input image.
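In its standard form, SSIM is the product of three comparison terms. In these terms, \(\mu\) and \(\sigma\) denote local means and standard deviations, \(\sigma_{xy}\) the covariance, and \(C_1\), \(C_2\), \(C_3\) small constants that stabilize the divisions:

\[
\mathrm{SSIM}(x,y) = l(x,y)\,c(x,y)\,s(x,y), \quad
l(x,y) = \frac{2\mu_x\mu_y + C_1}{\mu_x^2 + \mu_y^2 + C_1}, \quad
c(x,y) = \frac{2\sigma_x\sigma_y + C_2}{\sigma_x^2 + \sigma_y^2 + C_2}, \quad
s(x,y) = \frac{\sigma_{xy} + C_3}{\sigma_x\sigma_y + C_3}
\]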

where l(x,y), c(x,y), and s(x,y) represent the luminance, contrast, and structure comparisons between the input (x) and enhanced (y) images.

Pearson’s correlation coefficient, \(r_{1}\)
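The standard definition, using the symbols defined below, is:

\[
r_1 = \frac{\sum_{i}(x_i - x_m)(y_i - y_m)}{\sqrt{\sum_{i}(x_i - x_m)^2}\,\sqrt{\sum_{i}(y_i - y_m)^2}}
\]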

where \(x_i\) and \(y_i\) denote the intensity of the \(i\)th pixel of images 1 and 2, respectively, and \(x_m\) and \(y_m\) are the mean intensities of images 1 and 2.

Shannon entropy, H(X)
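The standard definition is given below, assuming a base-2 logarithm so that entropy is measured in bits:

\[
H(X) = -\sum_{i} p(x_i)\log_2 p(x_i)
\]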

where \(p(x_i)\) is the normalized histogram frequency of intensity level \(x_i\) in the input image X.

Universal Quality Index, UQI
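Wang and Bovik define UQI as \(Q = \frac{4\sigma_{xy}\mu_x\mu_y}{(\sigma_x^2+\sigma_y^2)(\mu_x^2+\mu_y^2)}\). The NumPy sketch below computes MSE, PSNR, Pearson correlation, Shannon entropy, and UQI, and averages a metric over the RGB channels as the text describes. It makes three assumptions. First, UQI is evaluated globally over the whole image; the original index is computed in sliding windows and averaged, so this is a simplification. Second, \(R = 255\) is used for 8-bit images. Third, SSIM is omitted, since scikit-image provides it as `skimage.metrics.structural_similarity`.

```python
import numpy as np

def mse(x, y) -> float:
    x, y = np.asarray(x, np.float64), np.asarray(y, np.float64)
    return float(np.mean((x - y) ** 2))

def psnr(x, y, r: float = 255.0) -> float:
    e = mse(x, y)
    return float("inf") if e == 0 else float(10.0 * np.log10(r ** 2 / e))

def pearson(x, y) -> float:
    x = np.asarray(x, np.float64).ravel()
    y = np.asarray(y, np.float64).ravel()
    xc, yc = x - x.mean(), y - y.mean()
    return float(np.sum(xc * yc) / np.sqrt(np.sum(xc ** 2) * np.sum(yc ** 2)))

def shannon_entropy(x) -> float:
    """Entropy in bits of an 8-bit image's normalized histogram."""
    counts = np.bincount(np.asarray(x, np.uint8).ravel(), minlength=256)
    p = counts[counts > 0] / counts.sum()
    return float(-np.sum(p * np.log2(p)))

def uqi_global(x, y) -> float:
    """Global (single-window) UQI; undefined for constant images."""
    x = np.asarray(x, np.float64).ravel()
    y = np.asarray(y, np.float64).ravel()
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(), y.var()
    cxy = np.mean((x - mx) * (y - my))
    return float(4.0 * cxy * mx * my / ((vx + vy) * (mx ** 2 + my ** 2)))

def channel_average(metric, x, y) -> float:
    """Apply a two-image metric to each color channel and average the results."""
    return float(np.mean([metric(x[..., c], y[..., c]) for c in range(x.shape[-1])]))
```

For example, `channel_average(uqi_global, enhanced, original)` gives a per-image UQI in the spirit of the averaged values reported in Tables 5 and 6. It will not exactly reproduce windowed UQI.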

Discussion

We evaluated the performance of the proposed enhancement technique using MSE, PSNR, and UQI. Table 5 displays the MSE, PSNR, and UQI values for each retinal disease category. Of all the disease categories, fundus images with Retinitis Pigmentosa (RP) achieved the lowest MSE (864.15), with a PSNR of 28.77 and a high UQI of 0.86. A UQI above 0.85 is achieved for images with three abnormalities: Macular Scar (MS), Retinitis (RS), and Retinitis Pigmentosa (RP). All three are related to anomalies or inflammation in the arteries, retinal haemorrhage, etc. Their MSE values are comparatively low, in the range of the 1000s or below, and their PSNR values exceed 28; by comparison, CRVO has an MSE of 2290.57. Parafoveal Telangiectasia (PT) achieved a high PSNR of 30.00.

Retinal disorders related to the choroid, to features in the fovea, and to vessels are ARMD, ST, RT, ERM, CRS, RPEC, and TV. Enhancing images with these disorders achieves a UQI of 0.83, with SSIM above 78% and a Pearson correlation coefficient above 93%. Their MSE values range from 1142.40 to 1579.63, and their PSNR values lie between 28.74 and 29.68. For diseases with symptoms in the optic disc (ODP, ODE, ODC), a UQI of 0.81 or 0.82 is achieved, with PSNR values from 28.45 to 29.43 and a Pearson correlation coefficient of at least 94%. The MSE of Media Haze is higher than that of the other retinal abnormalities. UQI lies between 0.77 and 0.79 for DR, MH, CRVO, PT, and EDN. The MSE values of these disorders are between 1920.08 and 2307.89, except for EDN, whose MSE is 1522.62. For the other retinal anomalies (DN, MYA, BRVO, TSLN, LS, CSR, MHL, and AH), UQI lies between 0.80 and 0.82. Their PSNR values are above 29.0, except for CSR and DN, which have PSNR values of 28.66 and 28.99, respectively. This analysis shows that high UQI is achieved for retinal images with artery- or vessel-inflammation-related disorders such as RP, RS, and MS. For these, SSIM remains at 80% or above and the Pearson correlation coefficient at 96%. The Pearson correlation coefficient is above 90% for all disease categories except Exudation, at 88%. A structural similarity of at least 73% is maintained between the input and enhanced images. Overall, the proposed enhancement method achieves an average UQI of 0.81 and an average PSNR of 29.12. It raises the average Shannon entropy from 5.82 for the input images to 6.12 for the enhanced images. It also maintains an average structural similarity of 78% and an average Pearson correlation coefficient of 94% between the input and enhanced images. Figure 3 compares samples of the input and enhanced images in color and in grayscale. The features are well enhanced and more prominent in the enhanced image than in the original image.
The closer sectional view in Figure 4 shows that anomalies along the path of retinal vessels, such as red haemorrhages, are well enhanced. The path of blood vessels serves as vital evidence for identifying conditions such as retinal pigment epithelium changes. Figure 4 also shows that proliferated retinal vessels in the optic cup are more visible in the enhanced retinal image than in the original input image. Enhancing both the retinal vessels and the optic cup in a fundus image is challenging, because enhancing one tends to suppress the other. An advantage of the proposed method is that it effectively enhances both the vessels and the optic cup in images of different resolutions.

We tested the suitability of the proposed enhancement technique on two other publicly available datasets, MESSIDOR and DRIVE. Tables 7, 8, and 9 compare the proposed technique with other existing enhancement methods in terms of PSNR, SSIM, and entropy. The results show that the proposed enhancement technique based on color dominance outperforms the other considered techniques by achieving a higher PSNR. We further evaluated how well the proposed technique helps identify the presence or absence of disease in the RFMiD dataset. As Table 10 shows, the VGG16 model achieves 89.53% test accuracy and an F1 score of 0.89 in detecting the presence or absence of retinal abnormality when the proposed enhancement technique is used. Without enhancement, the same task achieves 87.81% test accuracy and an F1 score of 0.86.

Conclusion

Retinal image enhancement is an essential pre-processing step that makes retinal anomalies easier to see when identifying the disease a patient suffers from. This paper proposes an efficient retinal image enhancement technique based on the color dominance of an image. In Stage 1, the variance of the blue channel is calculated to determine the color dominance of the input image, and either the a* or the b* channel of Lab color space is chosen for enhancement. A variance at or below the threshold indicates that the blue channel carries little information, so the a* channel is chosen. For values above the threshold, the b* channel is chosen. The Contrast Limited Adaptive Histogram Equalization (CLAHE) technique is applied to the selected channel. The enhanced image is converted back to RGB and passed to Stage 2. In Stage 2, the green channel is enhanced. This is followed by noise removal with a bilateral filter and automatic optimization of brightness and contrast.

The proposed image enhancement technique is analyzed on the training set of the RFMiD dataset using UQI, MSE, PSNR, Shannon entropy, SSIM, and the Pearson correlation coefficient. The analysis shows that images with Macular Scar, Retinitis, and Retinitis Pigmentosa achieved the highest UQI values after enhancement, at 0.85 and above. Evaluated over the 1920 training images, the technique achieved an average UQI of 0.81, a PSNR of 27.90, and an MSE of 1757.6. It also achieved 75% structural similarity and a 96% Pearson correlation coefficient. Further, the RFMiD dataset and the pre-trained VGG16 deep learning model are used to analyze how well the technique helps identify the presence or absence of retinal disease. The model trained on images enhanced with the proposed technique achieved 89.53% test accuracy and an F1 score of 0.89 on the RFMiD test set. The model trained on images without any enhancement achieved 87.81% test accuracy and an F1 score of 0.86. The proposed enhancement technique was also evaluated on the MESSIDOR and DRIVE datasets. There, it outperforms the other considered methods by achieving higher PSNR values.

Data availability

The dataset used in this research is Retinal Fundus Multi-Disease Dataset. It is available in the online repository - https://ieee-dataport.org/open-access/retinal-fundus-multi-disease-image-dataset-rfmid.

References

World Health Organization. World report on vision. https://www.iapb.org/wp-content/uploads/2020/09/world-vision-report-accessible1.pdf (accessed 8 November 2022).

MacGillivray, T. J. et al. Retinal imaging as a source of biomarkers for diagnosis, characterization and prognosis of chronic illness or long-term conditions. Br. J. Radiol. 87(1040), 20130832. https://doi.org/10.1259/bjr.20130832 (2014).

Yang, W., Xu, H., Yu, X. & Wang, Y. Association between retinal artery lesions and nonalcoholic fatty liver disease. Hep. Intl. 9(2), 278–282. https://doi.org/10.1007/s12072-015-9607-3 (2015).

Chang, Y.-S. et al. Risk of retinal vein occlusion following end-stage renal disease. Medicine 95(16), 3474. https://doi.org/10.1097/MD.0000000000003474 (2016).

Setiawan, A. W., Mengko, T. R., Santoso, O. S. & Suksmono, A. B. Color retinal image enhancement using CLAHE. In International Conference on ICT for Smart Society 1–3. https://doi.org/10.1109/ICTSS.2013.6588092 (2013).

Daniel, E. & Anitha, J. Optimum green plane masking for the contrast enhancement of retinal images using enhanced genetic algorithm. Optik 126(18), 1726–1730.

Zhou, M., Jin, K., Wang, S., Ye, J. & Qian, D. Color retinal image enhancement based on luminosity and contrast adjustment. IEEE Trans. Biomed. Eng. 65(3), 521–527. https://doi.org/10.1109/TBME.2017.2700627 (2018).

Pisano, E. D. et al. Contrast limited adaptive histogram equalization image processing to improve the detection of simulated spiculations in dense mammograms. J. Digit. Imaging 11(4), 193–200. https://doi.org/10.1007/BF03178082 (1998).

Ramlugun, G. S., Nagarajan, V. K. & Chakraborty, C. Small retinal vessels extraction towards proliferative diabetic retinopathy screening. Expert Syst. Appl. 39(1), 1141–1146. https://doi.org/10.1016/j.eswa.2011.07.115 (2012).

GeethaRamani, R. & Balasubramanian, L. Retinal blood vessel segmentation employing image processing and data mining techniques for computerized retinal image analysis. Biocybern. Biomed. Eng. 36(1), 102–118. https://doi.org/10.1016/j.bbe.2015.06.004 (2016).

Banić, N. & Lončarić, S. Smart light random memory sprays Retinex: A fast Retinex implementation for high-quality brightness adjustment and color correction. J. Opt. Soc. Am. A 32(11), 2136. https://doi.org/10.1364/JOSAA.32.002136 (2015).

Sonali, S. S., Singh, A. K., Ghrera, S. P. & Elhoseny, M. An approach for de-noising and contrast enhancement of retinal fundus image using CLAHE. Opt. Laser Technol. 110, 87–98. https://doi.org/10.1016/j.optlastec.2018.06.061 (2019).

Alwazzan, M. J., Ismael, M. A. & Ahmed, A. N. A hybrid algorithm to enhance colour retinal fundus images using a wiener filter and CLAHE. J. Digit. Imaging 34(3), 750–759. https://doi.org/10.1007/s10278-021-00447-0 (2021).

Jin, K. et al. Computer-aided diagnosis based on enhancement of degraded fundus photographs. Acta Ophthalmol. 96(3), 320–326. https://doi.org/10.1111/aos.13573 (2018).

Gupta, B. & Tiwari, M. Color retinal image enhancement using luminosity and quantile based contrast enhancement. Multidimension. Syst. Signal Process. 30(4), 1829–1837. https://doi.org/10.1007/s11045-019-00630-1 (2019).

Jawad, E. M., Hazim, H. J. M. & Daway, G. Retinal image enhancement by using adapted histogram equalization based on segmentation and lab color space. Int. J. Intell. Eng. Syst. 15(3), 614–622. https://doi.org/10.22266/ijies2022.0630.52 (2022).

Singh, N., Kaur, L. & Singh, K. Histogram equalization techniques for enhancement of low radiance retinal images for early detection of diabetic retinopathy. Eng. Sci. Technol. Int. J. 22(3), 736–745. https://doi.org/10.1016/j.jestch.2019.01.014 (2019).

Qureshi, I., Ma, J. & Shaheed, K. A hybrid proposed fundus image enhancement framework for diabetic retinopathy. Algorithms 12(1), 14. https://doi.org/10.3390/a12010014 (2019).

Dissopa, J., Kansomkeat, S. & Intajag, S. Enhance contrast and balance color of retinal image. Symmetry 13(11), 2089. https://doi.org/10.3390/sym13112089 (2021).

Kumar, R. & Kumar Bhandari, A. Luminosity and contrast enhancement of retinal vessel images using weighted average histogram. Biomed. Signal Process. Control 71, 103089. https://doi.org/10.1016/j.bspc.2021.103089 (2022).

Wang, J., Li, Y.-J. & Yang, K.-F. Retinal fundus image enhancement with image decomposition and visual adaptation. Comput. Biol. Med. 128, 104116. https://doi.org/10.1016/j.compbiomed.2020.104116 (2021).

Xiong, L., Li, H. & Xu, L. An enhancement method for color retinal images based on image formation model. Comput. Methods Programs Biomed. 143, 137–150. https://doi.org/10.1016/j.cmpb.2017.02.026 (2017).

Pachade, S. et al. Retinal fundus multi-disease image dataset (RFMiD): A dataset for multi-disease detection research. Data 6(2), 14. https://doi.org/10.3390/data6020014 (2021).

Kuru, K. & Girgin, S. A bilinear interpolation based approach for optimizing hematoxylin and eosin stained microscopical images. In Pattern recognition in bioinformatics (eds Loog, M. et al.) 178–179 (Springer, Berlin, Heidelberg, 2011).

Gadde, A., Narang, S. K. & Ortega, A. Bilateral filter: Graph spectral interpretation and extensions. In 2013 IEEE International Conference on Image Processing 1222–1226. https://doi.org/10.1109/ICIP.2013.6738252 (2013).

Wang, Z. & Bovik, A. C. A universal image quality index. IEEE Signal Process. Lett. 9(3), 81–84. https://doi.org/10.1109/97.995823 (2002).

Neto, A. M., Rittner, L., Leite, N., Zampieri, D. E., Lotufo, R. & Mendeleck, A. Pearson’s correlation coefficient for discarding redundant information in real time autonomous navigation system. In 2007 IEEE International Conference on Control Applications 426–431. https://doi.org/10.1109/CCA.2007.4389268 (2007).

Author information


Contributions

P.C. and R.J.K. designed the research, and P.C. implemented the method. P.C. and R.J.K. evaluated the methodology, performed the analysis, interpreted the results, and wrote the manuscript. The authors declare complete consent for publication.


Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

C, P., R, J.K. Retinal image enhancement based on color dominance of image. Sci Rep 13, 7172 (2023). https://doi.org/10.1038/s41598-023-34212-w


DOI: https://doi.org/10.1038/s41598-023-34212-w

