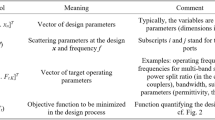

Abstract

The operation of high-frequency devices, including microwave passive components, can be impaired by fabrication tolerances as well as by incomplete knowledge of operating conditions (temperature, input power levels) and material parameters (e.g., substrate permittivity). Although the accuracy of manufacturing processes is always limited, the effects of parameter deviations can be accounted for in advance at the design phase through optimization of suitably selected statistical performance figures. Perhaps the most popular one is the yield, which provides a straightforward assessment of the likelihood of fulfilling performance conditions imposed upon the system given the assumed deviations of designable parameters. The latter are typically quantified by means of probability distributions pertinent to the fabrication process. The fundamental obstacle to yield-driven design is its high computational cost. The primary mitigation approach nowadays is the employment of surrogate modeling methods. Yet, the construction of reliable metamodels becomes problematic for systems featuring a large number of degrees of freedom. Our work proposes a technique for fast yield optimization of microwave passives, which relies on response feature technology as well as variable-fidelity simulation models. Utilization of response features enables efficient handling of issues related to the system response nonlinearities. Meanwhile, the incorporation of variable-resolution simulations allows for accelerating the yield estimation process, which translates into a remarkably low overall cost of yield optimization. Our approach is verified with the use of three microstrip couplers. Comprehensive benchmarking demonstrates its superiority in terms of computational efficiency over state-of-the-art algorithms, whereas reliability is corroborated by electromagnetic-driven Monte Carlo simulations.

Introduction

Standard microwave design procedures, including parametric optimization, normally neglect possible deviations of dimensions and material parameters from their nominal values. At the same time, manufacturing processes are always imperfect, and so is the knowledge pertaining to the material properties (e.g., substrate relative permittivity) or operational conditions (possible geometrical distortions of the device, temperature, signal power level, etc.). All of them may exert some, typically undesirable, effects on electrical characteristics of the device1. The uncertainties pertinent to operating conditions (also referred to as epistemic2) are systematic and can be accounted for by ensuring satisfactory system performance within the prescribed condition range (e.g., input signal level). On the other hand, fabrication tolerances, e.g., inaccuracy of chemical etching for PCB-based components, or mechanical milling for waveguide structures, are of stochastic nature. These have to be described by means of process-specific probability distributions, typically Gaussian of a given mean and covariance matrix. As the performance requirements imposed on contemporary microwave devices are often stringent, tolerance-induced deviations of designable parameters from their nominal values may readily result in the system failing to meet the specifications. Consequently, the ability to quantify the effects of uncertainties is instrumental in estimating the design robustness and, even more importantly, in enhancing it. This necessitates optimization of suitably formulated statistical performance figures3, and is often labeled as tolerance-aware design4, robust design5, or design centering6. For microwave circuits, the performance requirements are typically formulated in a minimax form (e.g., through acceptance thresholds for return loss, transmission, power split, or phase responses), which makes yield7 one of the most appropriate metrics of design robustness.

Evaluation of the system robustness under fabrication tolerances requires statistical analysis8, which is a CPU-intensive procedure when conducted utilizing full-wave electromagnetic (EM) simulations. At the same time, EM analysis is required to maintain reliability. The latter may be especially critical for compact microwave passives that normally feature considerable cross-coupling effects being byproducts of such miniaturization strategies as transmission line meandering9 or exploiting the slow-wave phenomenon10. The excessive computational cost of direct EM-driven statistical analysis (e.g., Monte Carlo simulation) has fostered the development of accelerated methods. One possibility is to reduce the problem complexity (e.g., worst case analysis11), yet accuracy degradation may be unacceptable. As of now, the most popular uncertainty quantification methods rely on surrogate modeling techniques, which enable massive system evaluations at minimal expense. Some of the widely used techniques include polynomial chaos expansion (PCE)12,13, neural networks14, and response surface approximation15. Unfortunately, utilization of data-driven surrogates is encumbered by the curse of dimensionality16, which may be mitigated to a certain extent by dimensionality reduction (e.g., the employment of principal component analysis17), improved modeling schemes (e.g., PC kriging18), usage of multi-fidelity simulations (e.g., co-kriging19), or the usage of physics-based surrogates such as space mapping20.

The quantification of the effects of uncertainties is a foundation of robust design procedures (yield-driven optimization21, tolerance-aware design22), which aim at improving the system immunity to manufacturing and other tolerances. In particular, optimization of yield attempts to directly increase the likelihood of fulfilling the performance conditions imposed upon the circuit under the assumed parameter deviations. Robust optimization can also be considered in a geometrical sense (by placing the design near the center of the feasible region), or addressed by increasing the acceptable levels of tolerances that ensure meeting the specifications (e.g., tolerance hypervolume maximization23). Again, the biggest challenge of EM-driven statistical design is the computational expenditure entailed by repetitive circuit simulations. Most practical optimization frameworks employ surrogate modeling methods24,25,26,27,28,29,30,31,32, both data-driven24,25 and physics-based30. In some cases, when the considered statistical figures of merit are functions of statistical moments of the response of the system at hand, polynomial chaos expansion models can be used to avoid numerical integration of the underlying probability distributions33. For microwave components and minimax-type specifications, Monte Carlo analysis34 carried out with the aid of the surrogate may be unavoidable to evaluate the robust objective function. Rendering reliable metamodels for yield optimization is, however, more demanding than for statistical analysis purposes due to a larger part of the parameter space that has to be covered. The alleviation is possible by adopting iterative approaches (e.g., sequential approximate optimization35), in which the model is locally rendered in the proximity of the current design, so that the training data acquisition cost may be kept low. Meanwhile, the model domain is relocated accordingly in the course of the optimization procedure.
The response feature technology36 offers an alternative way of handling the problem, in which the design specifications are formulated at the level of suitably defined characteristic (feature) points of the circuit outputs. The close-to-linear relationship between the feature point coordinates and circuit dimensions facilitates surrogate model construction, thereby enabling acceleration of various design procedures37.

This paper introduces a technique for fast yield optimization of microwave passive components, which capitalizes on the feature-based framework reported in38, and further expedites the robust design process by incorporating variable-resolution EM models. Following38, our methodology utilizes local feature-based surrogates, employed for cost-effective estimation of the circuit yield, and embeds the search process in the trust region framework to control design relocation and to ensure convergence. An additional speedup is achieved by estimating response feature sensitivity at the level of low-resolution EM analysis. Due to reasonably good correlations between EM models of various fidelities, zero-order correction turns out to be sufficient to ensure acceptable surrogate accuracy. As a result, the computational expense of the robust design procedure is cut down, and amounts to around twenty high-fidelity EM simulations of the circuit at hand. Comprehensive verification experiments carried out for three microstrip couplers demonstrate superior performance of the proposed method over the benchmark. The latter includes several recent surrogate-assisted techniques, as well as the feature-based approach of38.

Yield optimization of microwave passives using multi-fidelity response features

This section delineates the proposed yield optimization algorithm. Background information concerning nominal (“Nominal optimization problem formulation” section) and robust design problem formulation (“Yield optimization” section) is followed by recalling the concept and the properties of response features (“Response features for low-cost yield estimation” section), as well as variable-resolution computational models (“Variable-resolution EM models for further cost reduction” section). The variable-fidelity feature-based surrogates are discussed in “Yield optimization using variable-resolution feature-based surrogates” section, whereas the complete optimization procedure is summarized in “Complete algorithm” section.

Nominal optimization problem formulation

The fabrication yield is defined with regard to design specifications pertinent to the microwave component of interest. These, in turn, are defined using a set of conditions for the scattering parameters (or the functions thereof). In this paper, the verification structures considered in “Numerical verification” section are branch-line and rat-race couplers; therefore, the specific performance requirements used to illustrate the discussed concept will pertain to this type of circuit. For couplers, the effects of manufacturing inaccuracies manifest themselves as modifications of the power split ratios, but also as relocation of the operating frequencies/bandwidths. Maximization of yield aims at adjusting the system geometry parameters to enhance the probability of fulfilling the specs given the parameter deviations. All of these will be rigorously formulated in the remaining part of this section.

Consider an N-band coupler described by n geometry parameters aggregated into a vector x = [x1 … xn]T. Further, let [fL.k fR.k] denote the kth operating band, k = 1, …, N, with fL.k and fR.k being the lower and upper ends of the band, respectively. Within that band, the matching and isolation characteristics are not to exceed a user-defined value Smax (e.g., − 20 dB).

Finally, let Dk be the tolerance thresholds for the power split deviations at the center frequency f0.k = [fR.k + fL.k]/2, and Sk stand for the intended power split ratio. The following conditions are to be fulfilled for the design x to satisfy the aforementioned specifications, where Sj1(x,f), j = 1, …, 4, stands for the respective S-parameter response:
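The displayed conditions are not reproduced in this text. A form consistent with the definitions above is sketched below, assuming that port 1 is the input, that |S11| and |S41| are the matching and isolation responses, that |S21| and |S31| are the transmission responses (all in dB), and that the power split is measured as |S31| − |S21|; the sign convention in (3) is an assumption. For k = 1, …, N:

$$
\begin{aligned}
|S_{11}(x,f)| &\le S_{\max}, \quad f \in [f_{L.k},\, f_{R.k}] && (1)\\
|S_{41}(x,f)| &\le S_{\max}, \quad f \in [f_{L.k},\, f_{R.k}] && (2)\\
\bigl|\,|S_{31}(x,f_{0.k})| - |S_{21}(x,f_{0.k})| - S_k\,\bigr| &\le D_k && (3)
\end{aligned}
$$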

The fulfillment of (1)–(3) is equivalent to the circuit operating at the required bandwidth and ensuring the assumed power division for all frequency intervals [fL.k fR.k].

Although the conditions (1)–(3) define the minimum requirements, it is normally possible, through optimization, to improve the circuit performance further. This can be done, e.g., by improving both matching and isolation over the target bandwidths, or by broadening the bandwidths at the Smax level. The design that is optimized in the former sense will be referred to as the nominal one, and denoted as x(0). It is obtained by solving

subject to the equality constraint

The design x(0) refers to the best achievable design that can be attained without considering manufacturing tolerances. In this work, it will be used as a starting point for yield optimization.

Yield optimization

Let dx denote a vector of deviations of the circuit parameters from their nominal values. The deviations originate from manufacturing inaccuracy and are quantified using probability distributions that are specific to the fabrication process. In this work, we assume a joint Gaussian distribution with zero mean and standard deviation σ (common for all parameters). In a more generic setup, the distribution can be determined by a covariance matrix that accounts for the circuit topology (e.g., correlations between deviations for certain parameters).

The fabrication yield Y is computed by integrating the probability density function p(x,x(0)) that describes deviations of x from the nominal design x(0). The integration is executed over the set Xf, which contains all designs satisfying the performance specifications (e.g., conditions (1) through (3) for the considered coupling structure). We have39

As the geometry of the feasible set is not known explicitly, in practice, the yield is estimated through numerical integration, most often by employing Monte Carlo simulation. Given a set of random observables dx(k), k = 1, …, Nr, allocated according to the density function p, we get

where H(x) = 1 if the performance specifications are satisfied for the design x, H(x) = 0 if the specs are violated.
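The Monte Carlo estimate described above can be sketched in a few lines. This is a minimal illustration, not the authors' implementation: `estimate_yield`, `toy_specs`, and all numerical values are hypothetical, σ is treated as a standard deviation, deviations are clipped to ±d_max (an assumption about how the limit is enforced), and the toy feasibility test stands in for an EM-based evaluation of the indicator H(x).

```python
import random

def estimate_yield(x0, meets_specs, sigma, d_max=None, n_samples=20000, seed=0):
    """Fraction of randomly perturbed designs x0 + dx that satisfy the specs."""
    rng = random.Random(seed)
    passed = 0
    for _ in range(n_samples):
        # Independent zero-mean Gaussian deviation for every parameter.
        dx = [rng.gauss(0.0, sigma) for _ in x0]
        if d_max is not None:
            # Assumption: deviations are clipped to [-d_max, d_max].
            dx = [max(-d_max, min(d_max, d)) for d in dx]
        x = [xi + di for xi, di in zip(x0, dx)]
        if meets_specs(x):  # plays the role of the indicator H(x)
            passed += 1
    return passed / n_samples

def toy_specs(x):
    """Hypothetical stand-in for the specs: each parameter within 0.03 of 1.0."""
    return all(abs(xi - 1.0) <= 0.03 for xi in x)

y_toy = estimate_yield([1.0, 1.0], toy_specs, sigma=0.017, d_max=0.05)
```

In the actual procedure, each call to `meets_specs` would require a circuit response, which is why the source replaces direct EM analysis with a fast surrogate at this step.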

The yield optimization task is formulated as

Solving (8) entails repetitive yield estimations, which is associated with a prohibitive CPU cost when realized directly with the use of EM analysis. As elaborated on in the introduction, the majority of practical approaches employ fast surrogate models for the purpose of evaluating (7). However, building the surrogate can also generate considerable expenses and be numerically challenging for circuits described by many parameters. This paper incorporates two mechanisms intended to mitigate these issues: a response feature approach (“Response features for low-cost yield estimation” section) and variable-resolution EM models (“Variable-resolution EM models for further cost reduction” section).

Response features for low-cost yield estimation

Following38, the yield estimation while solving the problem (8) is expedited using the response feature technology36. Feature-based modeling40 and optimization41 benefit from re-formulating the design problem in terms of properly selected characteristic points of the circuit outputs, and from the weakly nonlinear dependence of the frequency and level coordinates of these points on circuit dimensions. This section discusses how the performance specifications considered in “Nominal optimization problem formulation” section can be expressed in terms of response features, which will be used in “Yield optimization using variable-resolution feature-based surrogates” section to realize reduced-cost yield maximization.

The choice of the feature points depends on design specifications assumed for the system. Going back to the coupler example of “Nominal optimization problem formulation” section (cf. conditions (1)–(3)), it can be concluded that the feature points should account for − 20 dB levels for the matching and isolation responses, and also for the power split at the center frequency. Figure 1 illustrates this case for an exemplary branch-line coupler. Note that for certain feature points the relevant information is carried by their frequency coordinates, level coordinates, or both.

Conceptual illustration of response features for a microwave coupler: S-parameters and feature points representing the −20 dB level of the |S11| (matching) and |S41| (isolation) responses (circles), along with |S21| and |S31| (transmission) at the intended operating frequency f0 = 1.5 GHz (squares). The characteristic point coordinates permit assessment of whether the performance requirements are satisfied (100 MHz matching/isolation bandwidth centered around f0, and maximal power split deviation of 0.5 dB at f0): (a) coupler geometry, (b) design fulfilling specs, (c) design infringing power split and bandwidth conditions.

Throughout this paper, the feature points data will form a feature vector P, the entries of which will be the frequency and/or level coordinates of corresponding features. For the considered coupler example, we have

In (9), the frequencies f1 through f4 correspond to the − 20 dB level of |S11| (f1 and f2) and |S41| (f3 and f4), whereas l1 and l2 are the levels of the transmission characteristics |S21| and |S31|, respectively, at the (target) coupler center frequency. This can be generalized to the example of a multi-band coupler, where we have

The secondary subscript is the index of the circuit band. In general, the composition of the feature vector is problem- and performance-specification-dependent. The coordinates of the feature vector are garnered from EM-simulated characteristics of the circuit at hand.
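As an illustration of how the feature vector of the single-band coupler could be extracted from sampled responses, the sketch below locates the −20 dB crossings of |S11| and |S41| nearest to f0 by linear interpolation and reads the transmission levels at f0. All function names are hypothetical, and the extraction rules (nearest crossing on each side of f0, linear interpolation) are assumptions rather than the authors' procedure.

```python
def crossings(freqs, mag_db, level_db):
    """Frequencies at which a sampled response crosses level_db (linear interpolation)."""
    found = []
    for i in range(len(freqs) - 1):
        a = mag_db[i] - level_db
        b = mag_db[i + 1] - level_db
        if a == 0.0:
            found.append(freqs[i])
        elif a * b < 0.0:
            t = a / (a - b)
            found.append(freqs[i] + t * (freqs[i + 1] - freqs[i]))
    return found

def level_at(freqs, mag_db, f0):
    """Response level at f0, linearly interpolated between samples."""
    for i in range(len(freqs) - 1):
        if freqs[i] <= f0 <= freqs[i + 1]:
            t = (f0 - freqs[i]) / (freqs[i + 1] - freqs[i])
            return mag_db[i] + t * (mag_db[i + 1] - mag_db[i])
    raise ValueError("f0 lies outside the simulated frequency range")

def band_edges(freqs, mag_db, f0, level_db):
    """Nearest crossings of level_db below and above f0."""
    found = crossings(freqs, mag_db, level_db)
    below = [f for f in found if f < f0]
    above = [f for f in found if f > f0]
    if not below or not above:
        raise ValueError("response does not cross the level on both sides of f0")
    return max(below), min(above)

def feature_vector(freqs, s11, s21, s31, s41, f0, level_db=-20.0):
    """Feature vector [f1, f2, f3, f4, l1, l2] for a single-band coupler."""
    f1, f2 = band_edges(freqs, s11, f0, level_db)
    f3, f4 = band_edges(freqs, s41, f0, level_db)
    return [f1, f2, f3, f4, level_at(freqs, s21, f0), level_at(freqs, s31, f0)]
```

The `ValueError` branch corresponds to a response from which the features cannot be recognized; a practical implementation would need a policy for such cases.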

Having defined the vector P, we are in a position to reformulate the performance requirements (conditions (1) through (3)) in terms of its coordinates:

As mentioned at the beginning of this section, the benefit of replacing (1)–(3) by (11)–(13) is that the relationships between the feature point coordinates and circuit dimensions are considerably less nonlinear than the analogous relationships for the entire frequency characteristics. This facilitates the construction of surrogate models and also accelerates the optimization process.
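A feature-level specification check for the single-band coupler might look as follows. This is a sketch under assumptions: the function name is hypothetical, the bandwidth conditions are taken to mean that the −20 dB crossings enclose the target band, and the power split is taken as |l1 − l2| compared against the intended ratio. The default values mirror the example of Fig. 1 (target band 1.45 GHz to 1.55 GHz, 0.5 dB tolerance, equal split).

```python
def meets_feature_specs(p, f_lo=1.45, f_hi=1.55, split_db=0.0, tol_db=0.5):
    """Check coupler specs from the feature vector p = [f1, f2, f3, f4, l1, l2].

    f1, f2: -20 dB crossings of |S11|; f3, f4: -20 dB crossings of |S41|;
    l1, l2: |S21| and |S31| levels (dB) at the center frequency.
    """
    f1, f2, f3, f4, l1, l2 = p
    # Matching and isolation bands must both cover [f_lo, f_hi].
    bandwidth_ok = f1 <= f_lo and f2 >= f_hi and f3 <= f_lo and f4 >= f_hi
    # Assumed form of the power split condition.
    split_ok = abs(abs(l1 - l2) - split_db) <= tol_db
    return bandwidth_ok and split_ok
```

Because the check involves only a handful of comparisons, it can be applied to every Monte Carlo sample at negligible cost once the feature vector is predicted by a surrogate.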

Variable-resolution EM models for further cost reduction

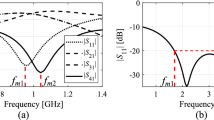

The second mechanism used in this work to accelerate the robust design process lies in the employment of multi-fidelity EM models. Reducing the discretization density of the structure speeds up the simulation, albeit at the expense of accuracy, which can manifest itself through frequency and/or level shifts of the circuit characteristics. On the other hand, as the underlying physics of the low- and high-fidelity models is the same, EM models of different resolutions are normally well correlated, assuming that the model resolution is not pushed too far. This is illustrated in Fig. 2 for the coupler of Fig. 1a, where we can observe low- and high-fidelity model responses for three randomly generated designs. In this case, the high-fidelity model features about 80,000 mesh cells, whereas the low-fidelity model is set up with approximately 20,000 cells.

S-parameters for the coupler considered in Fig. 1 evaluated using the low- (gray) and high-fidelity (black) EM simulation. Observe the considerable misalignment, especially in terms of frequency shifts; yet the overall shape and the amount of misalignment are consistent across the designs shown in plots (a) through (c).

As explained in “Response features for low-cost yield estimation” section, in this work, the robust design problem will be solved at the level of response features, therefore, we are mainly interested in correlation between the coordinates of the feature points of low- and high-fidelity models, or, more specifically, the feature point sensitivities. Figure 3 shows the differentials Δpj = pj(xk) − pj(xl) of the high- and low-fidelity model computed for all pairs of designs selected from a ten-element random set {xk}k = 1, …, 10, generated in the vicinity of a nominal vector x(0) for a coupler of Fig. 1a.

Scatter plots of feature coordinate differentials Δpj = pj(xk) − pj(xl) computed for all pairs of ten designs {xk}k = 1,…,10, generated in the vicinity of a nominal vector x(0) for the microwave coupler considered in Fig. 1. The horizontal and vertical axes correspond to the differentials of the high- and low-fidelity model, respectively.

The features are selected as presented in Fig. 1 (see also (9)). It can be observed that the correlation between the models is excellent, with the linear correlation coefficients equal to 0.99, 0.97, 0.97, 0.97, 0.96, and 0.96 for f1, f2, f3, f4, l1, and l2, respectively. This means that response feature sensitivity predicted by the low-fidelity model will be in good agreement with the sensitivity evaluated with the high-fidelity one. Still, the computational expenditure associated with sensitivity estimation is considerably lower, typically by a factor of three to four.
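The correlation analysis above can be reproduced for any single feature coordinate along the following lines. This is a sketch with hypothetical function names; it assumes that "all pairs" means the 45 unordered pairs of the ten designs and that the reported coefficient is the Pearson correlation between high- and low-fidelity differentials.

```python
import math
from itertools import combinations

def pairwise_differentials(values):
    """Differences p(x_k) - p(x_l) over all unordered design pairs (k < l)."""
    return [a - b for a, b in combinations(values, 2)]

def pearson(u, v):
    """Linear (Pearson) correlation coefficient of two equal-length sequences."""
    mu, mv = sum(u) / len(u), sum(v) / len(v)
    cov = sum((a - mu) * (b - mv) for a, b in zip(u, v))
    su = math.sqrt(sum((a - mu) ** 2 for a in u))
    sv = math.sqrt(sum((b - mv) ** 2 for b in v))
    return cov / (su * sv)

def feature_correlation(hi_values, lo_values):
    """Correlation between high- and low-fidelity differentials of one feature."""
    return pearson(pairwise_differentials(hi_values),
                   pairwise_differentials(lo_values))
```

Note that a constant offset between the two models cancels in the differentials, which is why a frequency-shifted low-fidelity response can still yield a high correlation.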

Yield optimization using variable-resolution feature-based surrogates

The core of the proposed yield optimization follows the technique introduced in38, which is enhanced by utilization of the variable-resolution EM models. The yield is maximized in an iterative fashion using the trust-region (TR) procedure42.

In every iteration, a new design x(i+1) being an approximation of the optimum vector x* is produced, i = 0, 1, … (here, the nominal design x(0) is the starting point), by solving

The yield YP is computed at the level of response features as in (11)–(13), with the feature point coordinates predicted using a linear (regression) model L(i) of P(x) comprising the response features

in which JP.L is the approximation of the Jacobian matrix JP

estimated by finite differentiation with the use of the low-fidelity EM model. Let M denote the evaluation time ratio between the high- and low-fidelity models. The cost of constructing the model L(i) equals 1 + n/M equivalent high-fidelity simulations, where n is the parameter space dimensionality, as compared with 1 + n simulations when the Jacobian is estimated at the high-fidelity level. Assuming conservatively that M = 3, the computational savings reach sixty percent for n = 10.
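The construction of the linear model and its cost can be sketched as follows. This is an illustration under assumptions, not the authors' code: the function names are hypothetical, forward differences with a fixed step `h` are assumed, and `features_lo` stands for any callable returning the low-fidelity feature vector. The model is anchored at the high-fidelity feature vector (zero-order correction) and uses low-fidelity sensitivities.

```python
def fd_jacobian(features_lo, x, h=1e-3):
    """Forward-difference Jacobian of the low-fidelity feature vector at x.

    Uses one baseline and n perturbed low-fidelity evaluations in this sketch.
    Returns a list of rows: jac[r][j] = d p_r / d x_j.
    """
    p0 = features_lo(x)
    columns = []
    for j in range(len(x)):
        xp = list(x)
        xp[j] += h
        pj = features_lo(xp)
        columns.append([(a - b) / h for a, b in zip(pj, p0)])
    return [[columns[j][r] for j in range(len(x))] for r in range(len(p0))]

def linear_model(p_hi, jac, x_ref):
    """Linear predictor: high-fidelity features at x_ref plus low-fidelity slopes."""
    def predict(x):
        return [p + sum(row[j] * (x[j] - x_ref[j]) for j in range(len(x)))
                for p, row in zip(p_hi, jac)]
    return predict

def relative_cost(n, m):
    """Cost (1 + n/M) relative to the all-high-fidelity cost (1 + n)."""
    return (1.0 + n / m) / (1.0 + n)

savings = 1.0 - relative_cost(10, 3.0)  # about 0.61 for n = 10, M = 3
```

The value of `savings` agrees with the sixty-percent figure quoted above for M = 3 and n = 10.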

Adjusting the size parameter d(i) is an important consideration in TR frameworks. It is normally done based on the gain ratio r, defined as the ratio between the observed improvement of the merit function (here, the yield) and the improvement predicted by the linear model. It should be noted that evaluating the yield at the candidate design x(i+1) requires rebuilding the linear model at x(i+1), which would turn out to be a waste of computational resources if r < 0, i.e., if the candidate design is rejected (according to the TR principles42, the design x(i+1) is only retained if r > 0). For the sake of cost savings, in this work, the gain ratio is evaluated as r = [YP#(x(i+1)) − YP(x(i))]/[YP(x(i+1)) − YP(x(i))], where YP# is obtained from a linear model defined as in (15), but with the vector P(x(i)) replaced by P(x(i+1)) derived from the EM-evaluated system output at x(i+1). This only requires one EM analysis. The approximation is due to using the same Jacobian matrix for both x(i) and x(i+1), which is tenable as the distance between these two vectors is normally small (comparable to or smaller than the maximum assumed parameter deviation).
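The reduced-cost gain ratio can be sketched as below. The names are hypothetical, and `yield_from_model(model, x)` is an assumed callable that returns the yield estimated at design x from a feature predictor (for instance, by Monte Carlo sampling of the model output). The key point illustrated is that the Jacobian is reused and only the anchor of the linear model changes.

```python
def linear_model(p_ref, jac, x_ref):
    """Linear feature predictor anchored at x_ref with Jacobian rows jac."""
    def predict(x):
        return [p + sum(row[j] * (x[j] - x_ref[j]) for j in range(len(x)))
                for p, row in zip(p_ref, jac)]
    return predict

def gain_ratio(yield_from_model, p_old, p_new, jac, x_old, x_new):
    """Observed-to-predicted yield improvement with a reused Jacobian.

    p_old, p_new: EM-evaluated feature vectors at x_old and x_new.
    """
    model_old = linear_model(p_old, jac, x_old)
    # Same Jacobian, re-anchored at the candidate: one new EM analysis only.
    model_new = linear_model(p_new, jac, x_new)
    y_old = yield_from_model(model_old, x_old)
    predicted = yield_from_model(model_old, x_new) - y_old
    observed = yield_from_model(model_new, x_new) - y_old
    # A zero predicted improvement is treated as no gain (a choice of this sketch).
    return observed / predicted if predicted != 0 else 0.0
```

A full Jacobian update is then needed only for candidates that are actually accepted.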

The actual yield estimation with the linear predictor L(i) is executed as Monte-Carlo-based integration of (6), with the use of a large number of randomly allocated observables to reduce the estimation variance. For this purpose, the feature-based performance specifications (11)–(13) are verified for the output of the model L(i) obtained for each observable generated using the probability distribution assumed for input tolerances. The cost of this process is small in comparison to EM simulation of the circuit.

Complete algorithm

The flow diagram of the yield enhancement procedure introduced in this section is presented in Fig. 4. As emphasized before, we use the nominal design x(0) as the starting point for the robust design process. The high-resolution EM model is only utilized to evaluate the circuit response at the current design, whereas response feature sensitivities are estimated using the low-fidelity model. The linear regression model is constructed upon extracting feature point coordinates, and utilized to obtain the candidate design x(i+1). The gain ratio is evaluated as explained in “Yield optimization using variable-resolution feature-based surrogates” section. It is used to decide about the acceptance of the candidate design and to adjust the search region size parameter d(i). The algorithm is terminated if either ||x(i+1) − x(i)|| < ε (convergence in argument), or d(i+1) < ε (reduction of the TR size). In the numerical experiments of “Numerical verification” section, we employ the termination threshold ε = 10−3.
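The outer loop of the procedure can be outlined as follows. This is a schematic sketch only: `propose` and `gain_ratio` are hypothetical callables standing for the surrogate-based subproblem solution and the gain ratio evaluation, the radius update thresholds (0.25, 0.75) and factors (3, 2) are common trust-region choices assumed here rather than taken from the source, and the toy one-dimensional problem merely exercises the control flow.

```python
import math

def trust_region_loop(x0, propose, gain_ratio, d0=0.05, eps=1e-3, max_iter=100):
    """Trust-region iteration with the two termination conditions of the text."""
    x, d = list(x0), d0
    for _ in range(max_iter):
        x_new = propose(x, d)      # candidate found within radius d
        r = gain_ratio(x, x_new)   # observed vs. predicted improvement
        step = math.sqrt(sum((a - b) ** 2 for a, b in zip(x_new, x)))
        accepted = r > 0
        if accepted:
            x = list(x_new)
        # Assumed radius update rule (not specified in the source).
        if r < 0.25:
            d /= 3.0
        elif r > 0.75:
            d *= 2.0
        # Convergence in argument, or reduction of the TR size.
        if (accepted and step < eps) or d < eps:
            break
    return x, d

# Toy problem: maximize f(x) = -(x - 1)^2 using its linear model.
def f(x):
    return -(x[0] - 1.0) ** 2

def grad(x):
    return -2.0 * (x[0] - 1.0)

def toy_propose(x, d):
    g = grad(x)
    return [x[0] + d] if g > 0 else [x[0] - d] if g < 0 else list(x)

def toy_gain(x, x_new):
    predicted = grad(x) * (x_new[0] - x[0])
    return (f(x_new) - f(x)) / predicted if predicted != 0 else 0.0

x_opt, d_final = trust_region_loop([0.0], toy_propose, toy_gain, d0=0.5)
```

In the actual algorithm, each pass through the loop would involve one high-fidelity simulation at the candidate design and, upon acceptance, n low-fidelity simulations to refresh the Jacobian.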

Numerical verification

The proposed yield optimization framework is validated using three examples of microstrip couplers. It is also compared to four surrogate-assisted methods and the feature-based approach of38. The optimization procedure reliability is verified via Monte Carlo analysis performed with the use of the EM simulation models of the respective structures.

Case studies

In order to validate the yield optimization procedure presented in this work, we use three microstrip couplers: a miniaturized rat-race coupler (Coupler I)43 (Fig. 5a), a compact branch-line coupler, BLC (Coupler II)44 (Fig. 5b), and a dual-band branch-line coupler (Coupler III)45 (Fig. 5c). Table 1 gathers the necessary data on all circuits, which include independent geometry parameters, dielectric substrates, the setup of low- and high-fidelity models, performance specifications, as well as nominal designs. In all cases, the computational models are evaluated using the time-domain solver of CST Microwave Studio. Also, in all cases, the input parameter tolerances are assumed to follow independent zero-mean Gaussian distributions with a standard deviation of 0.017 mm, and maximum deviations limited to dmax = 0.05 mm.

Verification circuits used for validation of the yield optimization framework of “Yield optimization of microwave passives using multi-fidelity response features” section: (a) miniaturized rat-race coupler with folded transmission lines (Coupler I)43, (b) compact branch-line coupler (Coupler II)44, (c) dual-band branch-line coupler (Coupler III)45.

Reference algorithms

The performance of the introduced optimization procedure is benchmarked against several methods that are outlined in Table 2. All of these are surrogate-assisted techniques that represent different approaches to robust design using data-driven models. In Algorithm 1, the EM model is entirely replaced by a kriging surrogate built in a sufficiently spacious domain, allocated in the vicinity of the nominal design. This approach is straightforward but the cost of constructing the model may be large owing to the extent of the domain. Algorithm 2 utilizes a sequential approximate optimization approach, with several local surrogates rendered along the optimization path, which allows for lowering the cost of individual model construction at the expense of repeating the process across a few iterations. Overall, this method is expected to offer computational savings over Algorithm 1, especially for parameter spaces of larger dimensions.

Performance-driven yield enhancement21: (a) S-parameters of a microwave coupler for design x(0) (nominal), design x(1) (degraded power split), and design x(2) (enhanced bandwidth at − 20 dB); for clarity, only relevant characteristics are shown; the directions important for yield manipulation are determined by designs x(1) and x(2); (b) surface S(t) parameterized by vector t = [t1 t2]T is delimited by the designs x(0), x(1), and x(2); the model domain XS is a union of intervals SI(t) for − 1 ≤ t1, t2 ≤ 1.

Algorithm 3 employs the performance-driven modeling concept47, in which the extent of the metamodel domain is larger along the important directions (here, representing more consequential variations of the circuit yield), and smaller along the remaining directions (see Fig. 6). This makes it possible to combine the advantages of Algorithms 1 and 2, i.e., to conclude the robust design process using a single model, while maintaining a relatively low cost of data acquisition. Finally, Algorithm 4 is the framework reported in38, which utilizes the same mechanisms as described in “Response features for low-cost yield estimation”, “Yield optimization using variable-resolution feature-based surrogates” and “Complete algorithm” sections, but the entire optimization process is conducted using the high-resolution EM model.

The purpose of the verification experiments is to analyze the performance indicators of the presented and the benchmark algorithms, in particular, the computational complexity and reliability. The latter is validated through EM-based Monte Carlo (MC) simulation, carried out with the use of 500 random points drawn from the probability distribution assumed for input tolerances. The number of points is restricted because of the high cost of massive EM analyses; as a consequence, the accuracy of the MC yield estimate is limited to about two percent.
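The two-percent figure is consistent with the binomial standard error of a Monte Carlo yield estimate. The following worked estimate assumes independent samples and is offered as a plausibility check rather than as the authors' derivation. For N_r = 500:

$$
\sigma_Y = \sqrt{\frac{Y(1-Y)}{N_r}}, \qquad
\sigma_Y\big|_{Y=0.5} = \sqrt{\frac{0.25}{500}} \approx 0.022, \qquad
\sigma_Y\big|_{Y=0.9} = \sqrt{\frac{0.09}{500}} \approx 0.013
$$

The worst case occurs at Y = 0.5, and the error decreases as the yield approaches one.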

Results and discussion

Table 3 gathers the yield-enhanced solutions for Circuits I through III found by the proposed variable-resolution feature-based procedure. Tables 4, 5, and 6 gather the comparison data for all the circuits and all benchmark algorithms. Observe that, as expected, the incorporation of variable-resolution models leads to further improvement of the cost efficacy of the yield optimization routine. The cost is already remarkably low for Algorithms 3 and 4, with the average number of high-fidelity EM simulations being 105 and 31, respectively. Yet, the proposed approach brings these numbers even lower, to an average of twenty, which corresponds to 36-percent savings over Algorithm 4, and 81-percent savings over Algorithm 3. As explained in “Yield optimization of microwave passives using multi-fidelity response features” section, this is due to the fact that most EM analyses are executed to estimate the Jacobian matrix, and carrying out this task using the low-fidelity models reduces this cost by a factor of about three for the considered coupler circuits.
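As a quick check of the quoted savings, using the rounded average simulation counts stated above (the per-circuit figures are in Tables 4, 5, and 6):

$$
1 - \frac{20}{31} \approx 0.355, \qquad 1 - \frac{20}{105} \approx 0.810
$$

These values agree with the 36-percent and 81-percent figures up to rounding of the averaged counts.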

In terms of design quality, the solutions obtained using the proposed approach are comparable to those identified using the benchmark methods. The same can be said about reliability, as confirmed through EM-based Monte Carlo simulations. It should be reiterated that the variance of the MC-estimated yield is relatively high (up to two percent), as emphasized before, due to a relatively low number of observables used in the process. This means that the yield differences of up to two or three percent are statistically insignificant.

It can also be noticed that the differences between MC- and surrogate-model-estimated yield values are the highest for Circuit III, which is because this circuit is the most difficult to model. For example, the relative RMS error of the surrogate used by Algorithm 1 is better than four percent for Circuits I and II, but it exceeds six percent for Circuit III, despite using as many as 800 training data samples. Figures 7, 8 and 9 provide visualization of EM-based Monte Carlo simulation at the nominal and robust designs obtained using the proposed algorithm, for Circuits I, II, and III, respectively. Again, MC is based on 500 random samples.

Visualization of EM-based Monte Carlo analysis for Circuit I: (a) at the nominal design, and (b) at the optimal design rendered with the use of the procedure introduced in this work. MC is executed using 500 random data points. Gray curves represent EM simulations, whereas the circuit characteristics at the nominal (a) and optimal design (b) are shown black.

Visualization of EM-based Monte Carlo analysis for Circuit II: (a) at the nominal design, and (b) at the optimal design rendered with the use of the procedure introduced in this work. MC is executed using 500 random data points. Gray curves represent EM simulations, whereas the circuit characteristics at the nominal (a) and optimal design (b) are shown black.

Visualization of EM-based Monte Carlo analysis for Circuit III: (a) at the nominal design, and (b) at the optimal design rendered with the use of the procedure introduced in this work. MC is executed using 500 random data points. Gray curves represent EM simulations, whereas the circuit characteristics at the nominal (a) and optimal design (b) are shown black.

Observe that recognition of the response features may prove problematic due to misshaped circuit responses, which may occur for design cases with large statistical variations. Nevertheless, as indicated by the results of EM-driven Monte Carlo simulations (Figs. 7, 8, 9), such a situation might only happen for parameter variations at least an order of magnitude larger than those assumed in this work (i.e., dmax = 0.05 mm). In practice, such large variations are unrealistic for PCB technology, as they would imply, e.g., an etching error of the circuit slits of around one millimeter (i.e., comparable to the slit width). The actual manufacturing procedures (chemical etching or mechanical milling) are considerably more accurate, with deviations corresponding to the levels assumed in this work.

The computational cost of our procedure amounts to around 16, 20 and 23 EM simulations for the structures featuring 6, 9, and 10 parameters, respectively (see Tables 4, 5, 6). Thus, the dependence of the cost on the number of design variables is close to linear: the ratio between the computational cost for Circuit II (described by the largest number of design variables) and Circuit I (described by the smallest number of design variables) equals around 1.5, which is close to the ratio between the respective numbers of design variables. This suggests that, for higher-dimensional cases, the computational cost of the proposed procedure would increase roughly in proportion to the number of geometry parameters describing the microwave component of interest. The relationship between the computational cost and the number of design variables is visualized in Fig. 10.
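The scaling argument can be reproduced from the three cost figures quoted above. The following sketch is an illustration rather than part of the original analysis: it assumes that the costs (16, 20, 23) pair with the parameter counts (6, 9, 10) in the order in which they are listed, and fits an ordinary least-squares line through the three points. The helper `least_squares_line` is a hypothetical name introduced here.

```python
# (number of design variables, approximate cost in EM simulations),
# paired in the order quoted in the text (an assumption of this sketch).
data = [(6, 16), (9, 20), (10, 23)]


def least_squares_line(points):
    """Return (slope, intercept) of the ordinary least-squares line."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


slope, intercept = least_squares_line(data)

# Ratios between the largest and the smallest test case.
cost_ratio = data[-1][1] / data[0][1]
size_ratio = data[-1][0] / data[0][0]

print(f"slope     = {slope:.2f} EM simulations per design variable")
print(f"intercept = {intercept:.2f} EM simulations")
print(f"cost ratio = {cost_ratio:.2f}, parameter-count ratio = {size_ratio:.2f}")
```

With only three data points, the fit indicates a trend rather than a validated cost model. Under the stated pairing, the slope is about 1.65 EM simulations per additional design variable, and the cost ratio (about 1.44) is somewhat smaller than the parameter-count ratio (about 1.67).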

The remarkable cost reduction obtained using our approach is achieved at the expense of limiting the scope of its applicability to structures whose responses feature discernible characteristic points, which have to be defined to enable estimation of the yield. Examples of such structures include microwave filters and impedance matching transformers. In the case of microwave filters, the response features may be defined as the local maxima of the return loss within the pass-band, as well as the crossing points at the edges of the pass-band. A similar definition may be employed in the case of impedance matching transformers, where the response features may include local maxima of the reflection characteristics, as well as points defining the bandwidth at the assumed target level, e.g., −20 dB. Overall, the proposed methodology may not be as versatile as other frameworks that do not impose any constraints on the response structure of the component under design. Yet, the characteristics of many real-world microwave passives are inherently structured. Consequently, the employment of feature-based techniques such as the proposed one is only slightly hindered by the aforementioned factors.
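To make the notion of response features more concrete, the sketch below extracts the two kinds of characteristic points mentioned above (crossings of a target level and in-band local maxima) from a reflection response. It is a minimal illustration and not the feature-extraction routine used in this work: the function `extract_features`, the −20 dB target, and the synthetic two-pole Chebyshev-like response standing in for an EM simulation are all assumptions made for this example.

```python
import numpy as np


def extract_features(freq, s11_db, level_db=-20.0):
    """Return (crossings, maxima) for a sampled reflection response.

    crossings: frequencies at which the response crosses level_db,
               found by linear interpolation between adjacent samples.
    maxima:    (frequency, level) pairs of local maxima located between
               the first and the last crossing.
    """
    freq = np.asarray(freq, dtype=float)
    s11_db = np.asarray(s11_db, dtype=float)

    below = s11_db < level_db
    # Indices i where the response changes side between samples i and i+1.
    idx = np.nonzero(below[:-1] != below[1:])[0]
    crossings = [
        freq[i] + (level_db - s11_db[i]) * (freq[i + 1] - freq[i])
        / (s11_db[i + 1] - s11_db[i])
        for i in idx
    ]

    maxima = []
    if len(crossings) >= 2:
        lo, hi = crossings[0], crossings[-1]
        for i in range(1, len(freq) - 1):
            is_peak = s11_db[i] > s11_db[i - 1] and s11_db[i] >= s11_db[i + 1]
            if lo < freq[i] < hi and is_peak:
                maxima.append((freq[i], s11_db[i]))
    return crossings, maxima


# Synthetic response (hypothetical stand-in for an EM simulation):
# second-order Chebyshev shape centered at 1 GHz with -25 dB in-band ripple.
freq = np.linspace(0.8, 1.2, 401)          # GHz
x = (freq - 1.0) / 0.05                    # normalized frequency
ripple = 10.0 ** (-25.0 / 10.0)
eps2 = ripple / (1.0 - ripple)
t2 = 2.0 * x**2 - 1.0                      # Chebyshev polynomial T2(x)
s11_sq = eps2 * t2**2 / (1.0 + eps2 * t2**2)
s11_db = 10.0 * np.log10(s11_sq + 1e-12)   # small floor avoids log10(0)

crossings, maxima = extract_features(freq, s11_db)
print("level crossings [GHz]:", [round(float(c), 4) for c in crossings])
print("in-band maxima [GHz, dB]:",
      [(round(float(f), 4), round(float(v), 2)) for f, v in maxima])
```

For this synthetic response, the routine identifies two crossings of the target level and a single in-band maximum. A yield condition such as "the maximum stays below the target level and the crossings enclose the required band" can then be evaluated directly on these few points instead of the entire frequency sweep.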

Conclusion

This work introduced a novel technique for cost-efficient optimization of the fabrication yield of microwave passives. The presented methodology employs an ensemble of acceleration mechanisms, including regression-based surrogate modeling at the level of response features, as well as variable-fidelity EM simulations. Both permit reliable and fast estimation of the yield, the maximization of which exploits a sequential approximate optimization paradigm together with the trust-region framework, which governs design relocation and secures convergence of the procedure. Numerical verification of our procedure has been carried out using three microstrip couplers, and its efficacy has been compared to several surrogate-assisted algorithms. The results demonstrate that incorporation of the aforementioned algorithmic tools gives a competitive edge over the benchmark, with computational savings exceeding ninety percent. In absolute terms, the average cost of yield optimization corresponds to only twenty EM circuit simulations at the high-fidelity level, which is 36 percent cheaper than for the feature-based algorithm exclusively using high-fidelity models (one of the benchmark methods). At the same time, the reported speedup does not compromise the reliability of yield evaluation, as corroborated using EM-based MC analysis. The proposed framework is simple to implement, and can be viewed as a CPU-efficient replacement for conventional statistical design methods, particularly for circuits whose responses exhibit easily distinguishable characteristic points.

Data availability

The datasets generated during and/or analysed during the current study are available from the corresponding author on reasonable request.

References

Teberio, F., et al. Waveguide band-pass filter with reduced sensitivity to fabrication tolerances for Q-band payloads. In IEEE MTT-S International Microwave Symposium (IMS), Honolulu, HI, USA 1464–1467 (2017).

Kapse, I., Prasad, A. K. & Roy, S. Analyzing impact of epistemic uncertainty in high-speed circuit simulation using fuzzy variables and global polynomial chaos surrogates. In IEEE MTT-S International Conference on Numerical Electromagnetic Multiphysics Modeling Optimization for RF, Microwave, and Terahertz Applications (NEMO), Seville, Spain 320–322 (2017).

Chen, Z. et al. A large-signal statistical model and yield estimation of GaN HEMTs based on response surface methodology. IEEE Microw. Wirel. Compon. Lett. 26(9), 690–692 (2016).

Biernacki, R., Chen, S., Estep, G., Rousset, J. & Sifri, J. Statistical analysis and yield optimization in practical RF and microwave systems. In IEEE MTT-S International Microwave Symposium Digest, Montreal 1–3 (2012).

Ma, B., Lei, G., Liu, C., Zhu, J. & Guo, Y. Robust tolerance design optimization of a PM claw pole motor with soft magnetic composite cores. IEEE Trans. Magn. 54(3), paper No. 8102404 (2018).

Scotti, G., Tommasino, P. & Trifiletti, A. MMIC yield optimization by design centering and off-chip controllers. IET Proc. Circuits Devices Syst. 152(1), 54–60 (2005).

Zhang, J., Feng, F., Na, W., Yan, S. & Zhang, Q. Parallel space-mapping based yield-driven EM optimization incorporating trust region algorithm and polynomial chaos expansion. IEEE Access 7, 143673–143683 (2019).

Pietrenko-Dabrowska, A. & Koziel, S. Performance-driven yield optimization of high-frequency structures by kriging surrogates. Appl. Sci. 12(7), paper No. 3697 (2022).

Firmansyah, T., Alaydrus, M., Wahyu, Y., Rahardjo, E. T. & Wibisono, G. A highly independent multiband bandpass filter using a multi-coupled line stub-SIR with folding structure. IEEE Access 8, 83009–83026 (2020).

Chen, S. et al. A frequency synthesizer based microwave permittivity sensor using CMRC structure. IEEE Access 6, 8556–8563 (2018).

Prasad, A. K. & Roy, S. Reduced dimensional Chebyshev-polynomial chaos approach for fast mixed epistemic-aleatory uncertainty quantification of transmission line networks. IEEE Trans. Compon. Packag. Manuf. Technol. 9(6), 1119–1132 (2019).

Kersaudy, P., Mostarshedi, S., Sudret, B., Picon, O. & Wiart, J. Stochastic analysis of scattered field by building facades using polynomial chaos. IEEE Trans. Antenna Propag. 62(12), 6382–6393 (2014).

Sengupta, M. et al. Application-specific worst case corners using response surfaces and statistical models. IEEE Trans. Comput. Aided Des. Integr. Circuits Syst. 24(9), 1372–1380 (2005).

Matoglu, E., Pham, N., De Araujo, D., Cases, M. & Swaminathan, M. Statistical signal integrity analysis and diagnosis methodology for high-speed systems. IEEE Trans. Adv. Packag. 27(4), 611–629 (2004).

Zhang, J., Feng, F., Jin, J. & Zhang, Q.-J. Efficient yield estimation of microwave structures using mesh deformation-incorporated space mapping surrogates. IEEE Microw. Wirel. Compon. Lett. 30(10), 937–940 (2020).

Koziel, S., Mahouti, P., Calik, N., Belen, M. A. & Szczepanski, S. Improved modeling of miniaturized microwave structures using performance-driven fully-connected regression surrogate. IEEE Access 9, 71470–71481 (2021).

Ochoa, J. S. & Cangellaris, A. C. Random-space dimensionality reduction for expedient yield estimation of passive microwave structures. IEEE Trans. Microw. Theory Tech. 61(12), 4313–4321 (2013).

Leifsson, L., Du, X. & Koziel, S. Efficient yield estimation of multi-band patch antennas by polynomial chaos-based kriging. Int. J. Numer. Model. 33(6), e2722 (2020).

Kennedy, M. C. & O’Hagan, A. Predicting the output from complex computer code when fast approximations are available. Biometrika 87, 1–13 (2000).

Abdel-Malek, H. L., Hassan, A. S. O., Soliman, E. A. & Dakroury, S. A. The ellipsoidal technique for design centering of microwave circuits exploiting space-mapping interpolating surrogates. IEEE Trans. Microw. Theory Tech. 54(10), 3731–3738 (2006).

Pietrenko-Dabrowska, A. Rapid tolerance-aware design of miniaturized microwave passives by means of confined-domain surrogates. Int. J. Numer. Model. 33(6), e2779 (2021).

Laxminidhi, T. & Pavan, S. Efficient design centering of high-frequency integrated continuous-time filters. IEEE Trans. Circuits Syst. I 54(7), 1481–1488 (2007).

Wu, Q., Chen, W., Yu, C., Wang, H. & Hong, W. Multilayer machine learning-assisted optimization-based robust design and its applications to antennas and arrays. IEEE Trans. Antenna Propag. 69, 6052–6057 (2021).

Li, Y., Ding, Y. & Zio, E. Random fuzzy extension of the universal generating function approach for the reliability assessment of multi-state systems under aleatory and epistemic uncertainties. IEEE Trans. Reliab. 63(1), 13–25 (2014).

Easum, J. A., Nagar, J., Werner, P. L. & Werner, D. H. Efficient multiobjective antenna optimization with tolerance analysis through the use of surrogate models. IEEE Trans. Antenna Propag. 66(12), 6706–6715 (2018).

Rossi, M., Dierck, A., Rogier, H. & Vande-Ginste, D. A stochastic framework for the variability analysis of textile antennas. IEEE Trans. Antenna Propag. 62(16), 6510–6514 (2014).

Zhang, J. et al. Polynomial chaos-based approach to yield-driven EM optimization. IEEE Trans. Microw. Theory Tech. 66(7), 3186–3199 (2018).

Du, J. & Roblin, C. Stochastic surrogate models of deformable antennas based on vector spherical harmonics and polynomial chaos expansions: Application to textile antennas. IEEE Trans. Antenna Propag. 66(7), 3610–3622 (2018).

Tomy, G. J. K. & Vinoy, K. J. A fast polynomial chaos expansion for uncertainty quantification in stochastic electromagnetic problems. IEEE Antenna Wirel. Propag. Lett. 18(10), 2120–2124 (2019).

Pietrenko-Dabrowska, A., Koziel, S. & Golunski, L. Low-cost yield-driven design of antenna structures using response-variability essential directions and parameter space reduction. Sci. Rep. 12, art. No. 15185 (2022).

Zhang, J. et al. A novel surrogate-based approach to yield estimation and optimization of microwave structures using combined quadratic mappings and matrix transfer functions. IEEE Trans. Microw. Theory Tech. 70(8), 3802–3816 (2022).

Zhang, J., Feng, F., Na, W., Jin, J. & Zhang, Q. J. Adaptively weighted training of space-mapping surrogates for accurate yield estimation of microwave components. In International Microwave Symposium (IMS), Los Angeles, CA, USA 64–67 (2020).

Rayas-Sanchez, J. E., Koziel, S. & Bandler, J. W. Advanced RF and microwave design optimization: A journey and a vision of future trends. IEEE J. Microw. 1(1), 481–493 (2021).

Rayas-Sanchez, J. E. & Gutierrez-Ayala, V. EM-based Monte Carlo analysis and yield prediction of microwave circuits using linear-input neural-output space mapping. IEEE Trans. Microw. Theory Tech. 54(12), 4528–4537 (2006).

Koziel, S. & Bekasiewicz, A. Sequential approximate optimization for statistical analysis and yield optimization of circularly polarized antennas. IET Microw. Antenna Propag. 12(13), 2060–2064 (2018).

Koziel, S. Fast simulation-driven antenna design using response-feature surrogates. Int. J. RF Microw. CAE 25(5), 394–402 (2015).

Koziel, S. & Bandler, J. W. Rapid yield estimation and optimization of microwave structures exploiting feature-based statistical analysis. IEEE Trans. Microw. Theory Tech. 63(1), 107–114 (2015).

Pietrenko-Dabrowska, A. & Koziel, S. Design centering of compact microwave components using response features and trust regions. AUE Int. J. Electr. Commun. 14, 8550 (2021).

Kim, D., Kim, M. & Kim, W. Wafer edge yield prediction using a combined long short-term memory and feed-forward neural network model for semiconductor manufacturing. IEEE Access 8, 215125–215132 (2020).

Pietrenko-Dabrowska, A. & Koziel, S. Simulation-driven antenna modeling by means of response features and confined domains of reduced dimensionality. IEEE Access 8, 228942–228954 (2020).

Koziel, S. & Pietrenko-Dabrowska, A. Expedited feature-based quasi-global optimization of multi-band antennas with Jacobian variability tracking. IEEE Access 8, 83907–83915 (2020).

Conn, A. R., Gould, N. I. M. & Toint, P. L. Trust Region Methods (SIAM, 2000).

Koziel, S. & Pietrenko-Dabrowska, A. Reduced-cost surrogate modeling of compact microwave components by two-level kriging interpolation. Eng. Opt. 52(6), 960–972 (2019).

Tseng, C. & Chang, C. A rigorous design methodology for compact planar branch-line and rat-race couplers with asymmetrical T-structures. IEEE Trans. Microw. Theory Tech. 60(7), 2085–2092 (2012).

Xia, L., Li, J., Twumasi, B. A., Liu, P. & Gao, S. Planar dual-band branch-line coupler with large frequency ratio. IEEE Access 8, 33188–33195 (2020).

Queipo, N. V. et al. Surrogate-based analysis and optimization. Prog. Aerosp. Sci. 41(1), 1–28 (2005).

Koziel, S. & Pietrenko-Dabrowska, A. Performance-Driven Surrogate Modeling of High-Frequency Structures (Springer, 2020).

Acknowledgements

The authors would like to thank Dassault Systemes, France, for making CST Microwave Studio available. This work was supported in part by the Icelandic Centre for Research (RANNIS) Grant 217771, and by National Science Centre of Poland Grant 2018/31/B/ST7/02369.

Author information

Contributions

Conceptualization, A.P. and S.K.; methodology, A.P. and S.K.; software, A.P. and S.K.; validation, A.P. and S.K.; formal analysis, S.K.; investigation, A.P. and S.K.; resources, S.K.; data curation, A.P. and S.K.; writing - original draft preparation, A.P. and S.K.; writing - review and editing, A.P. and S.K.; visualization, A.P. and S.K.; supervision, S.K.; project administration, S.K.; funding acquisition, S.K. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Pietrenko-Dabrowska, A., Koziel, S. Rapid yield optimization of miniaturized microwave passives by response features and variable-fidelity EM simulations. Sci Rep 12, 22440 (2022). https://doi.org/10.1038/s41598-022-26562-8

DOI: https://doi.org/10.1038/s41598-022-26562-8