Abstract

Emergency departments (EDs) are experiencing complex demands. An ED triage tool, the Score for Emergency Risk Prediction (SERP), was previously developed using an interpretable machine learning framework. It achieved a good performance in the Singapore population. We aimed to externally validate the SERP in a Korean cohort for all ED patients and compare its performance with Korean triage acuity scale (KTAS). This retrospective cohort study included all adult ED patients of Samsung Medical Center from 2016 to 2020. The outcomes were 30-day and in-hospital mortality after the patients’ ED visit. We used the area under the receiver operating characteristic curve (AUROC) to assess the performance of the SERP and other conventional scores, including KTAS. The study population included 285,523 ED visits, of which 53,541 were after the COVID-19 outbreak (2020). The whole cohort, in-hospital, and 30 days mortality rates were 1.60%, and 3.80%. The SERP achieved an AUROC of 0.821 and 0.803, outperforming KTAS of 0.679 and 0.729 for in-hospital and 30-day mortality, respectively. SERP was superior to other scores for in-hospital and 30-day mortality prediction in an external validation cohort. SERP is a generic, intuitive, and effective triage tool to stratify general patients who present to the emergency department.

Similar content being viewed by others

Introduction

Emergency department (ED) triage is a critical process for emergency patients who need appropriate treatment and for hospitals that need optimal resource allocation1,2. During a pandemic, ED triage is much needed to distinguish patients with high acuity, as there was an increase in the number of cases presenting to ED with higher acuity after COVID-193.

Several early warning-scoring systems, such as the National Early Warning System (NEWS) or the Modified Early Warning System (MEWS), have been established to identify the risk of catastrophic deterioration and inpatient deaths4. The Canadian Emergency Department Triage and Acuity Scale (CTAS) is a well-recognized and validated triage system that prioritizes patient care by the severity of illness5.

Based on the CTAS, the Korean Triage and Acuity Scale (KTAS) was developed to assess the patient’s severity in Korea6. Despite its potential, there were some problems, such as dependence on subjective medical staff assessment during ED triage1,7,8.

Several digital machine learning-based triage systems have been proposed for ED triage7,9,10. However, the black box property of machine learning makes it hard to interpret and implement in real-world situations. Few studies focus on interpretation to solve the black box problem11,12,13.

Interpretable AI includes reasoning processes that can help make AI predictions understandable for triage in ED14. Xie et al. developed the Score for Emergency Risk Prediction (SERP) based on the Singapore population12. It used the AutoScore framework to generate and interpret the score13. However, this was a single-center study, and external validation will be critical for generalization. This study aims to validate the SERP score derived from the Singapore population on the Korean population and compare the prediction result to that of conventional scores for various perspectives.

Results

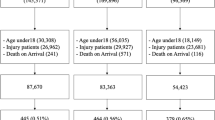

As shown in Fig. 1, during the study period from 2016 to 2020 in SMC, 373,172 patients visited the ED. Among them, 87,649 patients were excluded, and 285,523 patients were included in the final analysis (Fig. 1). The mortality rate of the whole cohort was 1.60% for in-hospital death and 3.80% for death at 30 days.

The distribution of ED patients’ demographics is shown in Table 1. The pre-pandemic period cohort included 232,982 ED visits (mean [SD] patient age, 59.9 [17.1] years; 119,681 [51.6%] female). Whereas the pandemic period cohort included 53,541 ED visits (mean [SD] patient age, 56.1 [17.4] years; 27,114 [50.6%] female).

There were differences between the pre-pandemic and pandemic periods, especially in vital signs and mortality prevalence. Systolic blood pressure and Diastolic Blood Pressure during the pandemic (mean [SD] 130.3 [24.9] and 77.5 [15.1]) were higher than those during the pre-pandemic period (134.1 [24.6] and 81.5 [15.3]). The 30-day mortality was 4.0% during the pre-pandemic period and 2.5% during the pandemic. Regarding the comorbidities, cancer, diabetes, and stroke were the most common diseases. Moreover, patient severity at scene was quite different, the pandemic period saw higher severity patients (1637 (0.8%) vs. 103 (0.2%) (pre-pandemic) for KTAS1, 15,715 (7.2%) and 2762 (5.9%) (pre-pandemic) for KTAS2.

The SERP-30d achieved better performance than KTAS for in-hospital and 30-day mortality prediction, with an AUC of 0.813 (95% CI 0.809–0.817) and 0.795 (95% CI 0.789–0.801), respectively (Table 2). In contrast, KTAS achieved an AUC of 0.717 (0.712–0.722) and 0.741 (0.733–0.749) which results in more than 40% improvement.

The SERP-30d score showed good calibration (based on the Kolmogorov Smirnov test for calibration data: P = 0.405). The SERP-30d calibration plot on the validation data set is illustrated in Supplementary Fig. 1. As shown in Supplementary Table 2, the results before and after the pandemic period based on 2020 were very different. All SERP performance after the COVID season was superior to that before the COVID season.

In terms of score accuracy, we compared the performance at the same sensitivity and specificity level from 0.7 to 0.9. As shown in Table 3, the SERP score achieved a higher sensitivity than KTAS at the same specificity level. For example, at the same 0.7 sensitivity, the specificity of SERP was 0.790, whereas KTAS was 0.568. This result shows that SERP can detect more patient with a higher mortality risk than KTAS.

Regarding the alarm fatigue problem, we compared the performance between scores at the same mortality event occurrence. As shown in Fig. 2, KTAS results in more alarms for the same event than SERP. For example, for 9937 and 7925 events, KTAS raised 263,172 and 143,382 alarms, respectively, whereas the SERP score resulted in only 211,848 and 85,134, a decrease of 19% and 40% of alarms, respectively.

Discussion

We validated the SERP score to predict mortality in the ED using SMC data. The results of SERP in our main two aspects (performance and alarm fatigue) were better than conventional ED triage scores. Also, SERP resulted in fewer false alarms for the same event occurrence. Excessive false alarms can reduce productivity and result in alarm fatigue, putting critical patients at risk15.

Previous studies on machine learning usually focus on accuracy7,9,10. However, only a few studies have demonstrated interpretability for easy use of the model. One of the critical points is the importance of real-world application. In the complex and busy ED environment, it is necessary to make the model light and interpretable. The other strength of SERP is that it requires few features for development. The features in the SERP are routinely collected during triage—so implementing the SERP score in the ED is not a big challenge.

There is growing consensus among researchers related to efforts for the real-world application of AI in healthcare and practical issues regarding the implementation of AI into existing clinical workflows16,17. Brajer et al. suggested a machine-learning model fact sheet reporting for end-users18. Visualization-based efforts such as population, patient, and temporal level feature importance, or nomograms, could be adopted19,20,21,22. Like the Transparent reporting of a multivariable prediction model for individual prognosis or diagnosis (TRIPOD) or The Strengthening the Reporting of Observational Studies in Epidemiology (STROBE) guidelines for reporting machine learning results23,24, there should be a guideline for the standardization of user interfaces (UIs) and a format for clinical decision support for end-users, including clinicians and patients25,26. In terms of data-sharing, privacy, and interoperability across multiple platforms, hospital policies and national laws are also important. Lack of standardization, black box transparency, proper evaluation, and problems with patient safety are the other major key issues for AI implementation16,17.

The characteristics of the patient populations could be quite different in different hospitals and countries. Although the SERP validation performance was good for long-term outcomes, conventional indexes such as NEWS, MEWS, and KTAS were equivalent for short-term outcomes. There may be a role for customization of a new SERP score for Korea. We recognized that as the mortality timeframe increased from 2 to 30 days, the performance worsened in the conventional indexes but improved in the ML-based score.

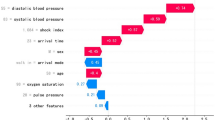

The subgroup analysis showed a difference in the performance between the pandemic and pre-pandemic periods. This could be due to the different patient mix during the pandemic27,28. We also identified differences in feature importance between the pandemic and pre-pandemic periods. During the COVID season, the top three important features were related to vital signs, whereas age was the second most important variable during the pre-pandemic period. Finally, the rate of admission and transfer were higher during the pandemic, even though patient illnesses were less severe based on KTAS.

There are some limitations to this study. First, it is a retrospective study and needs to be further evaluated prospectively, although the strengths of this validation are the multi-center and multi-nation nature of this evaluation. Second, we only considered Korean SMC data, which may not represent all Koreans. In the future, we intend to conduct the same validation with more hospitals in Korea or the National Emergency Department Information System (NEDIS), which is a nationwide registry of ED data29. As the variable used for SERP score is not complicated, we can consider international validation of the score, applying to other nationwide registry ED using Common Data Model or Pan Asia Trauma Outcome Study.

In this study, we validated the SERP score with Korean data. Its performance was better than the conventional indexes in terms of accuracy and false alarms.

Methods

Study setting

This was a retrospective validation study of the SERP score using data from the Samsung Medical Center (SMC) in Korea. SMC is a tertiary hospital located in a metropolitan city in Korea. The hospital has approximately 2000 inpatient beds. More than 80,000 patients visit the ED annually.

The Electronic Health Records (EHR) were obtained from the Clinical Data Warehouse at SMC. This study was approved by the Samsung Medical Center Institutional Review Board (2022-05-083-001), and a waiver of consent was granted for EHR data collection and analysis because of the retrospective and de-identified nature of the data.

All methods were performed in accordance with the relevant guidelines and regulations24.

Population

The population for the validation cohort was ED visits from 2016 to 2020. All patients who visited the ED from January 2016 to December 2020 were initially included. We excluded patients who were under the age of 20 years, did not come for emergency treatment, left without being seen by a clinician, had missing triage data, or were dead on arrival (DOA) (see Fig. 1)15. To assess the impact of the COVID-19 pandemic, we defined two non-overlapping cohorts based on “pre” and “post” pandemic periods.

SERP score

Three SERP scores were validated using the primary outcomes of 30-day and in-hospital mortality from the ED visits. Each score was developed using the AutoScore framework, which is an automatic and interpretable score generator for risk prediction using machine learning and logistic regression12,13.

For outlier data, we assumed that extreme ranges of vital sign data were input errors and designated them as “missing” based on clinical knowledge. For example, any vital signs value under 0, heart rate above 300/min, respiration rate above 50/min, systolic blood pressure above 300 mm Hg, diastolic blood pressure above 180 mm Hg, or oxygen saturation as measured by pulse oximetry above 100% were treated as a missing value and imputed with the median value from a training cohort. Missing rates of each variable are presented in the “Supplemental Tables S1”.

Statistical analysis

The data were analyzed using R software, version 3.5.3 (R Foundation for Statistical Computing).

For the descriptive summaries of baseline characteristics of the study population, frequency (percentages) for categorical variables and mean (SD) for continuous variables were reported.

Performance evaluation

We compared the validation performance of SERP with conventional indexes such as NEWS, MEWS, and KTAS, in terms of two main aspects4. First, how accurately can the SERP score predict the outcome compared to a conventional index? The predictive power of validation was measured using the AUC in the receiver operating characteristic (ROC) curve. Other metrics such as sensitivity, specificity, and positive predictive value, were calculated under a certain threshold from 0.7 to 0.9 for the comparison. We also identified the calibration plot for the agreement between predictions and the observed outcome30. Second, can SERP reduce the false alarm rate more than the conventional index? The alarm rate is important for the validation of SERP because false alarms can result in alarm fatigue31. Alarm fatigue can make medical staff tired and cause critical alerts to be missed. Finally, it could affect patient safety and quality of care in the clinical environment. Therefore, an ideal SERP should have high sensitivity and a low false alarm rate. We compared the frequency of alarming events with the KTAS.

Data availability

Data was available in study site clinical data warehouse. The datasets generated and analyzed during the current study are not publicly available due dataset includes although is de-identified, part of patient information, but are available from the corresponding author on reasonable request.

Abbreviations

- ED:

-

Emergency department

- SERP:

-

Score for emergency risk prediction

- SMC:

-

Samsung Medical Center

- EHR:

-

Electronic health record

- DOA:

-

Dead on arrival

- KTAS:

-

Korean triage acuity scale

- CART:

-

Cardiac arrest risk triage

- NEWS:

-

National early warning system

- MEWS:

-

Modified early warning system

- RAPS:

-

Rapid Acute Physiology Score

- REMS:

-

Rapid Emergency Medicine Score

- CTAS:

-

Canadian Emergency Department Triage and Acuity Scale

- AUROC:

-

Area under the receiver operating characteristic curve

- SD:

-

Standard deviation

- CI:

-

Confidence interval

- NEDIS:

-

National Emergency Department Information System of Korea

- TRIPOD:

-

Transparent reporting of a multivariable prediction model for individual prognosis or diagnosis

- STROBE:

-

The Strengthening the Reporting of Observational Studies in Epidemiology

- UI:

-

User interfaces

References

Htay, T. & Aung, K. Review: Some ED triage systems better predict ED mortality than in-hospital mortality or hospitalization. Ann. Intern. Med. 170, JC47. https://doi.org/10.7326/ACPJ201904160-047 (2019).

Zachariasse, J. M. et al. Performance of triage systems in emergency care: A systematic review and meta-analysis. BMJ Open 9, e026471. https://doi.org/10.1136/bmjopen-2018-026471 (2019).

Alharthi, S., Al-Moteri, M., Plummer, V. & Al Thobiaty, A. The impact of COVID-19 on the service of emergency department. Healthcare (Basel). https://doi.org/10.3390/healthcare9101295 (2021).

Latten, G. H. P. et al. Frequency of alterations in qSOFA, SIRS, MEWS and NEWS scores during the emergency department stay in infectious patients: A prospective study. Int. J. Emerg. Med. 14, 69. https://doi.org/10.1186/s12245-021-00388-z (2021).

Elkum, N. B., Barrett, C. & Al-Omran, H. Canadian Emergency DepartmentTriage and Acuity Scale: Implementation in a tertiary care center in Saudi Arabia. BMC Emerg. Med. 11, 3. https://doi.org/10.1186/1471-227X-11-3 (2011).

Kwon, H. et al. The Korean Triage and Acuity Scale: Associations with admission, disposition, mortality and length of stay in the emergency department. Int. J. Qual. Health Care 31, 449–455. https://doi.org/10.1093/intqhc/mzy184 (2019).

Yu, J. Y., Jeong, G. Y., Jeong, O. S., Chang, D. K. & Cha, W. C. Machine learning and initial nursing assessment-based triage system for emergency department. Healthc. Inform. Res. 26, 13–19. https://doi.org/10.4258/hir.2020.26.1.13 (2020).

Farrohknia, N. et al. Emergency department triage scales and their components: A systematic review of the scientific evidence. Scand. J. Trauma Resusc. Emerg. Med. 19, 42. https://doi.org/10.1186/1757-7241-19-42 (2011).

Choi, S. W., Ko, T., Hong, K. J. & Kim, K. H. Machine learning-based prediction of Korean triage and acuity scale level in emergency department patients. Healthc. Inform. Res. 25, 305–312. https://doi.org/10.4258/hir.2019.25.4.305 (2019).

Levin, S. et al. Machine-learning-based electronic triage more accurately differentiates patients with respect to clinical outcomes compared with the emergency severity index. Ann. Emerg. Med. 71, 565-574.e562. https://doi.org/10.1016/j.annemergmed.2017.08.005 (2018).

Yun, H., Choi, J. & Park, J. H. Prediction of critical care outcome for adult patients presenting to emergency department using initial triage information: An XGBoost algorithm analysis. JMIR Med. Inform. 9, e30770. https://doi.org/10.2196/30770 (2021).

Xie, F. et al. Development and assessment of an interpretable machine learning triage tool for estimating mortality after emergency admissions. JAMA Netw. Open 4, e2118467. https://doi.org/10.1001/jamanetworkopen.2021.18467 (2021).

Xie, F., Chakraborty, B., Ong, M. E. H., Goldstein, B. A. & Liu, N. AutoScore: A machine learning-based automatic clinical score generator and its application to mortality prediction using electronic health records. JMIR Med. Inform. 8, e21798. https://doi.org/10.2196/21798 (2020).

Rudin, C. et al. Interpretable machine learning: Fundamental principles and 10 grand challenges. ArXiv abs/2103.11251 (2021).

Lee, Y. J. et al. A multicentre validation study of the deep learning-based early warning score for predicting in-hospital cardiac arrest in patients admitted to general wards. Resuscitation 163, 78–85. https://doi.org/10.1016/j.resuscitation.2021.04.013 (2021).

Kelly, C. J., Karthikesalingam, A., Suleyman, M., Corrado, G. & King, D. Key challenges for delivering clinical impact with artificial intelligence. BMC Med. 17, 195. https://doi.org/10.1186/s12916-019-1426-2 (2019).

He, J. et al. The practical implementation of artificial intelligence technologies in medicine. Nat. Med. 25, 30–36. https://doi.org/10.1038/s41591-018-0307-0 (2019).

Brajer, N. et al. Prospective and external evaluation of a machine learning model to predict in-hospital mortality of adults at time of admission. JAMA Netw. Open 3, e1920733. https://doi.org/10.1001/jamanetworkopen.2019.20733 (2020).

Singh, D. et al. Assessment of machine learning-based medical directives to expedite care in pediatric emergency medicine. JAMA Netw. Open 5, e222599. https://doi.org/10.1001/jamanetworkopen.2022.2599 (2022).

King, Z. et al. Machine learning for real-time aggregated prediction of hospital admission for emergency patients. medRxiv. 2022.2003.2007.22271999. https://doi.org/10.1101/2022.03.07.22271999 (2022).

Wu, T. T., Zheng, R. F., Lin, Z. Z., Gong, H. R. & Li, H. A machine learning model to predict critical care outcomes in patient with chest pain visiting the emergency department. BMC Emerg. Med. 21, 112. https://doi.org/10.1186/s12873-021-00501-8 (2021).

Spangler, D., Hermansson, T., Smekal, D. & Blomberg, H. A validation of machine learning-based risk scores in the prehospital setting. PLoS ONE 14, e0226518. https://doi.org/10.1371/journal.pone.0226518 (2019).

Brand, R. A. Standards of reporting: The CONSORT, QUORUM, and STROBE guidelines. Clin. Orthop. Relat. Res. 467, 1393–1394. https://doi.org/10.1007/s11999-009-0786-x (2009).

Collins, G. S., Reitsma, J. B., Altman, D. G. & Moons, K. G. Transparent reporting of a multivariable prediction model for individual prognosis or diagnosis (TRIPOD): The TRIPOD Statement. BMC Med. 13, 1. https://doi.org/10.1186/s12916-014-0241-z (2015).

Bohr, A. & Memarzadeh, K. The rise of artificial intelligence in healthcare applications. Artif. Intell. Healthc. https://doi.org/10.1016/B978-0-12-818438-7.00002-2 (2020).

de Hond, A. A. H. et al. Guidelines and quality criteria for artificial intelligence-based prediction models in healthcare: A scoping review. NPJ Digital Med. 5, 2. https://doi.org/10.1038/s41746-021-00549-7 (2022).

Anderson, K. N. et al. Changes and inequities in adult mental health-related emergency department visits during the COVID-19 pandemic in the US. JAMA Psychiat. https://doi.org/10.1001/jamapsychiatry.2022.0164 (2022).

Chang, H. et al. Impact of COVID-19 pandemic on the overall diagnostic and therapeutic process for patients of emergency department and those with acute cerebrovascular disease. J. Clin. Med. 9, 3842 (2020).

Jeong, J. et al. Development and validation of a scoring system for mortality prediction and application of standardized W statistics to assess the performance of emergency departments. BMC Emerg. Med. 21, 71. https://doi.org/10.1186/s12873-021-00466-8 (2021).

Van Calster, B. et al. Calibration: The Achilles heel of predictive analytics. BMC Med. 17, 230. https://doi.org/10.1186/s12916-019-1466-7 (2019).

Sendelbach, S. & Funk, M. Alarm fatigue: A patient safety concern. AACN Adv. Crit. Care. 24, 378–386. https://doi.org/10.1097/NCI.0b013e3182a903f9 (2013) (quiz 387–388).

Funding

This research was supported by a grant of the Korea Health Technology R&D Project through the Korea Health Industry Development Institute (KHIDI), funded by the Ministry of Health & Welfare, Republic of Korea (Grant Number : HI19C1328).

Author information

Authors and Affiliations

Contributions

All authors made a substantial contribution to the concept and design of the manuscript. Conceptualization: W.C.C., L.N.; data curation: J.Y.Y., S.Y.; formal analysis: J.Y.Y.; investigation: L.N., X.F.; methodology: L.N., X.F., M.E.H.O.; visualization: J.Y.Y.; writing—original draft: J.Y.Y., writing—review and editing: J.Y.Y., X.F., L.N., M.E.H.O., Y.Y.N., W.C.C.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Yu, J.Y., Xie, F., Nan, L. et al. An external validation study of the Score for Emergency Risk Prediction (SERP), an interpretable machine learning-based triage score for the emergency department. Sci Rep 12, 17466 (2022). https://doi.org/10.1038/s41598-022-22233-w

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-022-22233-w

- Springer Nature Limited