Abstract

The use of virtual reality (VR) is frequently accompanied by motion sickness, and approaches for preventing it are not yet well established. We explored whether the synchronized presentation of sound and motion reduces visually induced motion sickness (VIMS). A total of 25 participants experienced simulated bicycle riding for 5 min, with or without synchronized sound and motion, presented on a head-mounted display. The VIMS scores measured by the fast motion sickness scale and the simulator sickness questionnaire were significantly lower when participants experienced the riding scene with both sound and motion than when they experienced it with sound only, motion only, or neither. Furthermore, analysis of the EEG signal showed that higher VIMS was accompanied by a significant increase in alpha and theta power in the parietal and occipital lobes. We therefore demonstrate that the simultaneous presentation of sound and motion, closely synchronized with the visual flow speed, is effective in reducing VIMS during simulated bicycle riding in a VR environment.

Introduction

Virtual reality (VR) is an artificial environment created by humans using computers and other components, and it has been used in a number of research fields1,2. Formerly, VR was primarily implemented on televisions, monitors, or large computer screens; however, small and lightweight head-mounted displays have recently become popular, not only in academia but also among the public3,4,5. VR has been applied to various purposes, including medicine. VR video content is used in rehabilitation and clinical fields through virtual reality exposure therapy, as well as in educational programs, video games, sports, and tourist guides6,7. Recently, a review evaluating the status of research on VR motion sickness was reported8. In addition, the most successful predictor of individual motion sickness experiences is reported to be quantitative data on the kinematics of postural activity5,9. However, the adverse effects of VR content remain the main unresolved concern7,10.

Visually induced motion sickness (VIMS) is a comprehensive term that includes virtual reality motion sickness and cybersickness, and it occurs with a frequency of 30–80% when using VR11,12,13. Although the probability and degree of VIMS depend on the simulator type and task, it derives from the motion sickness that occurs when the human sensory organs receive conflicting inputs from the visual and vestibular systems3,7,14. Immersion in a virtual environment is known to cause motion sickness-like symptoms15. VIMS symptoms include dizziness, drowsiness, exhaustion, cold sweat, nausea, discomfort, stomach symptoms, headache, and vomiting16,17. Previous studies have investigated the level of VIMS using various auditory and visual cues in settings such as driving situations and VR games; however, no consensus has been reached on how to reduce VIMS18,19,20. Furthermore, proposed methods for reducing VIMS (e.g., restricting the field of view) could degrade the VR experience, and immediate application of these techniques has been limited21,22. Although the basic origins of VIMS remain unknown, two explanations are popular in the literature: the sensory conflict theory and the postural instability theory22,23. According to the sensory conflict theory, motion sickness is generated when visual, somatosensory, and vestibular signals do not correspond with a person's anticipated experiences22,24. The postural instability theory suggests that motion sickness is more likely to occur when an individual's mechanisms for maintaining postural stability are disrupted25. However, the neural mechanisms involved in VIMS have not yet been explained26.

The most commonly used qualitative evaluations are the Motion Sickness Susceptibility Questionnaire (MSSQ), the Simulator Sickness Questionnaire (SSQ), and the fast motion sickness scale (FMS)27,28,29. A previous study that investigated the effects of vibration and airflow on VIMS while participants viewed a visual scene of riding a bicycle found that airflow significantly reduced VIMS30. According to several studies evaluating the level of VIMS by questionnaire, simulator sickness is reported to be caused by an inconsistency between the actual sensory inputs and those expected from real experience, in terms of the correspondence of visual, auditory, somatosensory, and vestibular information13,22,28,29. Therefore, presenting more than one modality of sensory input in a coinciding manner is thought to reduce VIMS. Although questionnaires have been used to assess VIMS in many studies, there has been a barrier to evaluating VIMS objectively and quantitatively11,13.

Functional magnetic resonance imaging (fMRI), electroencephalography (EEG), electrogastrography (EGG), electrocardiography (ECG), heart rate variability (HRV), and galvanic skin response (GSR) are physiological signals that can be used to measure the response to VR, including motion sickness31. EEG is a method of recording the electrical activity of the brain that is used in electrophysiological monitoring32,33. Furthermore, because of its high accuracy and portability, EEG is one of the best methods for measuring the brain mechanisms of motion sickness34,35. Several studies have found changes in EEG frequency bands during VR motion sickness28,36,37,38. Previous studies have shown that the alpha and gamma band powers of the occipital area increase as subjects' subjective motion sickness-related scores increase during auto-driving tasks. These results are attributed to sensory conflict arising in the process of synthesizing signals from different sensory modalities, such as visual, vestibular, auditory, and somatosensory signals, to produce unified percepts of the external environment36. Another study, using EEG analysis in a VR-based dynamic 3D environment, reported an increase in alpha and beta band power in the parietal and motor areas together with an increase in subjective motion sickness-related scores. These areas have been associated with the integration of multiple sources of sensory information38. An fMRI analysis of brain responses to auditory and visual associations in humans demonstrated sound-induced changes in visual motion perception. These findings show that processing auditory and visual stimuli at the same time activates a distributed neural system in multimodal brain areas. Additionally, a direct relation was identified between subjects' perceptual experiences and activity in the cortex and subcortex39. It has been suggested that the occipital, parietal, and frontal areas act together in mediating the interaction of stimuli from different modalities39,40. However, brain activation in response to multiple sensory stimuli has not been investigated in relation to VR motion sickness. Thus, it is necessary to quantify the level of motion sickness with the support of objective data such as bio-signals, using a VIMS-inducing head-mounted display (HMD) to investigate changes in brain activity (EEG) together with SSQ and FMS scores according to an individual's level of motion sickness.

Therefore, this study aimed to investigate differences in VIMS under various sensory stimuli, using questionnaire surveys and the activation of cortical areas of the brain observed in a VIMS-inducing environment. We hypothesized that synchronized visual and somatosensory stimulation would reduce the severity of VIMS more effectively than unsynchronized sensory stimuli.

Results

Questionnaire results

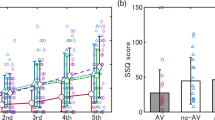

The SSQ and FMS scores quantify subjective feedback from the participants. One-way repeated measures ANOVA on the SSQ and FMS scores for each task showed statistically significant differences between the tasks (p < 0.05) (Table 1). After Bonferroni correction, the SSQ and FMS scores showed a statistically significant decrease in the VR with sound and riding a bicycle task compared with the VR task (p < 0.05). In contrast, there were no significant differences in SSQ and FMS scores among the VR, VR with sound, and VR with riding a bicycle tasks (p > 0.05) (Fig. 1).

Bonferroni correction of SSQ total score and FMS score according to task performance. SSQ simulator sickness questionnaire, FMS fast motion sickness scale, Task1 virtual reality, Task2 virtual reality with sound, Task3 virtual reality with riding a bicycle, Task4 virtual reality with sound and riding a bicycle; *p < 0.05.

Power changes under different conditions

One-way repeated measures ANOVA of relative alpha and theta power for all tasks showed statistically significant differences between the tasks (p < 0.05) (Table 2). After Bonferroni correction, the relative alpha and theta power showed a statistically significant decrease in the VR with sound and riding a bicycle task compared with the VR task (p < 0.05). In contrast, there were no significant differences in relative alpha and theta power among the VR, VR with sound, and VR with riding a bicycle tasks (p > 0.05) (Table 2). Figure 2 shows the relative power for each task condition. An alternating change in alpha and theta power was prominent in both the parietal and occipital areas in the VR, VR with sound, VR with riding a bicycle, and VR with sound and riding a bicycle tasks (Fig. 2).

Comparison of relative power in parietal and occipital area according to task performance by Bonferroni correction. (A) Parietal area; (B) Occipital area; Task1 virtual reality, Task2 virtual reality with sound, Task3 virtual reality with riding a bicycle, Task4 virtual reality with sound and riding a bicycle; *p < 0.05.

Motion-sickness-related spectral changes

To study the EEG correlates of motion sickness across subjects, the time–frequency responses of each task were averaged by level of motion sickness (Fig. 3). The parietal and occipital areas (Fig. 3A,E) exhibited predominant spectral increases in all frequency bands as the motion sickness level increased. Figure 3 shows the average spectral changes in the parietal and occipital areas of 25 subjects and the brain topography during each task. ERSP responses were related to the level of motion sickness in each task. Frequency responses at 4–8 Hz and 8–13 Hz were observed in the parietal lobe with increasing levels of motion sickness. The subjects’ dB power also showed synchronized responses in the 4–8 Hz and 8–13 Hz ranges. This motion sickness-related phenomenon was observed in both the parietal and occipital areas.

Event-related spectral perturbation analysis of the parietal and occipital area between the tasks. (A) Parietal virtual reality task; (B) Parietal virtual reality with sound task; (C) Parietal virtual reality with riding a bicycle task; (D) Parietal virtual reality with sound and riding a bicycle; (E) Occipital virtual reality task; (F) Occipital virtual reality with sound task; (G) Occipital virtual reality with riding a bicycle task; (H) Occipital virtual reality with sound and riding a bicycle; (A–H) horizontal axis: time; vertical axis: frequency; dotted line: start time of task; time–frequency response of parietal and occipital area from 25 subjects (see text).

Discussion

In the present study, we used EEG and questionnaire analyses to investigate whether the synchronized presentation of sound and motion reduces motion sickness while experiencing a simulated bicycle ride in a VR environment. The results showed that SSQ and FMS scores decreased significantly during the VR with sound and riding a bicycle task, which provided subjects with both types of additional sensory stimulation, compared with the VR task, which provided neither sound nor motion stimulation. In addition, the parietal and occipital lobes exhibited significant EEG power changes in response to vestibular and visual stimuli. The ERSP of the spectral changes revealed differences in the subjects' level of motion sickness during each task. During the VR task, the dB power in the theta, alpha, beta, and gamma bands increased in the parietal and occipital lobes relative to the dB power recorded during the VR with sound and riding a bicycle task. We found that synchronized visual and somatosensory stimulation effectively reduces the severity of VIMS compared with unsynchronized sensory stimulation.

Motion sickness felt by an individual in a VR environment can be measured using a questionnaire. In this study, SSQ total scores and FMS scores were higher in the VR task and lower in the VR with sound and riding a bicycle task28,29,30. Many studies have suggested that higher SSQ total and FMS scores indicate more severe motion sickness symptoms. In 2020, Sawada et al. found that when a synchronized stimulus of engine sound and vibration was applied while subjects experienced a simulated motorcycle ride in a VR environment, SSQ and FMS scores decreased significantly, and motion sickness was reduced13. A study that compared direct participation in a VR game with only watching showed that motion sickness levels were reduced when subjects participated in the game. However, sound stimulation alone did not reduce motion sickness41. Several studies have shown that when motion sickness is induced, alpha and theta band power near the parietal lobe shows the most observable variations on EEG. These results have been related to the location of the parietal lobe, a transition region between the somatosensory and motor cortex that is involved in the integration of spatial information, including somatosensory information from vestibular sensory system input17,28. These studies concur with our results. In 2020, Li et al. compared EEG changes in HMD-based VR roaming scenes with various road conditions and found that alpha and theta power in the parietal lobe increased as motion sickness levels increased42. Previous theta wave studies have concluded that the increase in parietal theta power with motion sickness is related to increased integration of sensory input and motor planning43. It has also been suggested that theta oscillations play a role in coordinating the activity of various brain regions to update the motor plan in response to somatosensory input44. Therefore, increased alpha and theta power in the parietal lobe is thought to be associated with motion sickness.

Previous studies that investigated the correlation between motion sickness and EEG activity in a virtual reality-based driving simulator have reported that parietal, motor, and occipital regions exhibit power changes in the alpha and theta bands in response to vestibular stimulation31,38. In 2015, Naqvi et al. reported a significant increase in occipital lobe alpha power when the SSQ score increased. It has also been reported that increased alpha power in the parietal and occipital regions is likely to indicate the presence of motion sickness28. Studies on the correlation between VR symptoms and EEG recordings showed that nausea and theta power in the occipital lobe were positively correlated31. It has been suggested that conflicts in visual information have the greatest influence and, by reducing the role of the visual domain, result in an increase in theta power. Some studies describe changes in motion sickness together with increased power in the lower frequencies (delta, theta, and alpha). In particular, alpha and theta have been shown to increase in synchronization as motion sickness levels increase33,42. Certain conditions, such as fatigue and dizziness, are associated with an increase in alpha power45. It may therefore be reasoned that changes in frequency power are due to decreased vigilance caused by physical exertion in the VR environment. Also, changes in parietal and occipital regions are especially noticeable during stress46. Consequently, this change in occipital lobe power could be an indirect result of multi-sensory system conflict, which increases brain load compared with the steady state because the subject continues to look at the virtual environment despite motion sickness47,48. Motion sickness is related to the inconsistent input of motor signals transmitted by the sensory system. The vestibular apparatus has been implicated in the pathogenesis of motion sickness36. Since patients with bilateral vestibular loss do not suffer from motion sickness, it has been established that the vestibular system is involved in inducing motion sickness. Moreover, vestibular afferents project to various cortical areas that receive input from the visual, auditory, and somatosensory systems4. An fMRI study investigating the neural correlates of auditory input and vestibular contribution reported that the regions activated for multisensory processing include the inferior and posterior insula, inferior parietal lobule, and cerebellar uvula49.

However, motion-sickness-induced EEG power changes are not consistent among all of the cited studies. One reason could be the different paradigms used to induce motion sickness33,34,47. In this study, we used a combination of visual and vestibular inputs. Compared with a single-modality scheme, this could increase realism with respect to the changes in EEG power evoked by sensory stimulation in a VR environment. Additionally, the study used a repeated measures within-subject design to measure VR motion sickness for multiple sensory stimuli. However, motion sickness could change across repeated VR exposures15. Therefore, attention should be paid to the exposure interval and washout period. This study also has several limitations. First, it is difficult to generalize the results of this study, because the age range of the recruited subjects (20s) is rather limited and the sample size is small. Second, since only a limited selection of sensory stimulus types was used in this study, we suggest conducting future studies that include multi-sensory feedback, such as tactile and temperature factors. Third, prior VR experience and gender differences were not taken into consideration in this study. In addition, it may be difficult to determine whether physiological differences in measured EEG signal changes are caused by the emotional or physical impact of the task. Moreover, the barrier to evaluating VIMS objectively and quantitatively arises from the fact that motion sickness is a subjective experience. Physiological and neurophysiological data generally have a weak relationship with subjective experience, so objective data cannot be used to contradict voluntary subjective reports. Therefore, it is important to emphasize that motion sickness is an individual problem.

Conclusion

The present study was conducted to investigate changes in cerebral cortex activity and questionnaire scores that might be related to reductions in motion sickness induced in a VR environment. The results indicate that discrepancies between visual perception and somatosensory input are associated with increases in motion sickness. Thus, they provide insights that could be used in the development of VR applications that reduce motion sickness. They also suggest that the relationship between visual perception and somatosensory input is important for the user to adjust to the VR environment and that synchronization of sensory stimulation is necessary.

Methods

Participants

Twenty-five healthy, right-handed volunteers (17 males and 8 females) with no history of cardiovascular, gastrointestinal, or vestibular diseases or drug or alcohol abuse, no current medication use, and normal or corrected-to-normal vision participated in this study (Table 3). All experiments were performed in accordance with the relevant guidelines and regulations of the Declaration of Helsinki. The measurements were conducted following the protocol approved by the Institutional Review Board (IRB) of Dankook University (DKU 2021-03-069). All participants were given a comprehensive set of instructions regarding the experiment, agreed to the experimental protocol, and provided written informed consent to participate in the study.

Signal acquisition

Thirty-two-channel EEG signals were acquired at a sampling rate of 128 Hz using an EMOTIV EPOC Flex (Emotiv, San Francisco, CA, USA). The electrode locations were based on the international 10–20 system provided in the MATLAB toolbox EEGLAB (http://sccn.ucsd.edu/eeglab)33,50. The acquired EEG signals were first inspected to remove bad EEG channels. A high-pass filter with a cut-off frequency of 1 Hz and a transition bandwidth of 0.2 Hz was used to remove baseline drift and breathing artifacts. Then, a low-pass filter with a cut-off frequency of 50 Hz and a transition bandwidth of 7 Hz was applied to remove muscular artifacts and line noise38. Artifacts such as eye movements were removed using Independent Component Analysis (ICA) in the EEGLAB toolbox31,50. Figure 4 depicts a flowchart of the procedure for EEG signal processing.

Independent component analysis

The filtered EEG signals were decomposed into independent brain sources by ICA for biomedical time series analysis using EEGLAB33,50. The ICA algorithm can separate N sources from N EEG channels. The summation of source signals at the EEG sensors is assumed to be linear and instantaneous, that is, the propagation delays are negligible. We assume that the sources of muscle activity, eye, and cardiac signals are not time-locked to EEG activity, which reflects the synaptic activity of cortical neurons. As a result, the sources' time courses are assumed to be statistically independent. For EEG analysis, the rows of the input matrix X represent the EEG signals recorded at different electrodes, the rows of the output data matrix U = WX represent the time courses of activation of the ICA components, and the columns of the inverse matrix W⁻¹ represent the projection strengths of the respective components onto the scalp sensors. The scalp topographies of the components reveal the location of the sources. We obtained useful components for time–frequency and ERSP analysis after removing the sources of muscle activity, eye movement, eye blinking, and single-electrode noise23,51.
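
The matrix notation above can be made concrete with a small numerical sketch. The example below only illustrates the roles of X, W, U, and W⁻¹; it does not perform ICA. The two source time courses, the mixing matrix `A`, and the helper functions are hypothetical, and the unmixing matrix is obtained by inverting the known `A`, whereas a real ICA algorithm (such as the one in EEGLAB) has to estimate W from X alone.

```python
# Illustration of the ICA notation only: a hypothetical 2-channel example
# in which the mixing matrix A is known, so W = A^-1 can be written directly.
# A real ICA algorithm has to estimate W from the recorded data X alone.

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def inverse_2x2(m):
    """Invert a 2x2 matrix given as a list of two rows."""
    (a, b), (c, d) = m
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]

# Rows of S: two assumed source time courses (4 samples each).
S = [[1.0, 0.0, -1.0, 0.0],
     [0.5, 0.5, -0.5, -0.5]]
# Hypothetical mixing matrix: column j is the scalp projection of source j.
A = [[1.0, 0.4],
     [0.2, 1.0]]

X = matmul(A, S)        # rows: signals recorded at the two electrodes
W = inverse_2x2(A)      # unmixing matrix (estimated by ICA in practice)
U = matmul(W, X)        # rows: component activation time courses, U = WX
W_inv = inverse_2x2(W)  # columns: projection strengths onto the sensors
```

In this toy case the rows of `U` reproduce the assumed sources and the columns of `W_inv` reproduce the columns of `A`, which is the sense in which the columns of W⁻¹ give the scalp projection of each component.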

Questionnaires (FMS and SSQ)

During each experiment, the severity of sickness during EEG recording was reported orally by each subject using the FMS, a continuous scale ranging from 0 (no sickness at all) to 20 (severe sickness). It allows a quick estimate of the subject’s motion sickness level through verbal reporting13. In addition, the traditional SSQ was administered after each experiment to provide overall motion sickness rating information. The SSQ is composed of 16 questions that check the symptoms of motion sickness, including general discomfort, fatigue, headache, nausea, and vertigo; each item is rated from 0 (no symptoms) to 3 (severe symptoms)26. The SSQ total score ranges from 0 to 235, with higher scores indicating higher severity of symptoms.
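
The stated 0 to 235 range of the SSQ total score is consistent with the widely used weighted scoring of Kennedy et al. (1993), which the following sketch assumes; the text itself does not give the scoring rule. The item-to-subscale assignment, the item order, the subscale weights, and the function name `ssq_scores` are therefore assumptions for illustration rather than the procedure used by the authors.

```python
# Item numbers (1-16) per subscale and the weights follow the commonly used
# Kennedy et al. (1993) scoring; they are an assumption, not from this paper.
NAUSEA = (1, 6, 7, 8, 9, 15, 16)
OCULOMOTOR = (1, 2, 3, 4, 5, 9, 11)
DISORIENTATION = (5, 8, 10, 11, 12, 13, 14)

def ssq_scores(ratings):
    """Return weighted SSQ subscale and total scores from 16 ratings (0-3)."""
    if len(ratings) != 16 or any(r not in (0, 1, 2, 3) for r in ratings):
        raise ValueError("expected 16 integer ratings between 0 and 3")

    def raw(items):
        # Unweighted sum of the ratings that belong to one subscale.
        return sum(ratings[i - 1] for i in items)

    n, o, d = raw(NAUSEA), raw(OCULOMOTOR), raw(DISORIENTATION)
    return {
        "nausea": n * 9.54,
        "oculomotor": o * 7.58,
        "disorientation": d * 13.92,
        "total": (n + o + d) * 3.74,
    }
```

Under this scoring, rating every item 3 gives a raw sum of 63 across the three subscales and a total of 63 × 3.74 = 235.62, which matches the upper bound quoted above.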

Time–frequency analysis

The dynamics of the ICA power spectra were tested throughout the experiment using time–frequency analysis. The power of the EEG signal was calculated in 2-s windows with 50% overlap. The total duration of the signal was 5 min, i.e., 300 s. For each participant, the absolute power was calculated for 32 electrodes and decomposed with the Fast Fourier Transform (FFT) function in MATLAB into five bands: delta (up to 4 Hz), theta (4–8 Hz), alpha (8–12 Hz), beta (12–30 Hz), and gamma (> 30 Hz). The delta band was excluded from the analyses of the data collected in the current study because it could be influenced by eye blinking and motion artifacts52. Two types of power were calculated from the bands: absolute and relative power. Relative power, the ratio of a band's power to the total power, was derived from the absolute power obtained by the frequency transform. It helps determine how much a given band contributes to the overall EEG53.
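
A minimal sketch of the relative-power computation described above is given here in Python rather than MATLAB. It uses a plain discrete Fourier transform over 2-s windows with 50% overlap at the stated 128 Hz sampling rate. The lower delta edge (1 Hz) and upper gamma edge (50 Hz) are assumptions taken from the filter settings, the total power is assumed to be the sum over these five bands, no window taper is applied, and the function names and the synthetic test signal are hypothetical.

```python
import cmath
import math

FS = 128        # sampling rate in Hz (from the text)
WIN = 2 * FS    # 2-s window
HOP = WIN // 2  # 50% overlap
# Band edges in Hz; the 1 Hz and 50 Hz limits are assumed from the filters.
BANDS = {"delta": (1, 4), "theta": (4, 8), "alpha": (8, 12),
         "beta": (12, 30), "gamma": (30, 50)}

def mean_power_spectrum(signal):
    """Average |DFT|^2 over overlapping windows (bin k is k * FS / WIN Hz)."""
    starts = range(0, len(signal) - WIN + 1, HOP)
    if len(starts) == 0:
        raise ValueError("signal is shorter than one window")
    n_bins = WIN // 2 + 1
    twiddle = [cmath.exp(-2j * math.pi * m / WIN) for m in range(WIN)]
    total = [0.0] * n_bins
    for start in starts:
        seg = signal[start:start + WIN]
        for k in range(n_bins):
            coeff = sum(seg[i] * twiddle[(k * i) % WIN] for i in range(WIN))
            total[k] += abs(coeff) ** 2
    return [p / len(starts) for p in total]

def relative_band_power(signal):
    """Return each band's share of the summed power of all bands."""
    spectrum = mean_power_spectrum(signal)
    resolution = FS / WIN  # 0.5 Hz per bin
    absolute = {}
    for name, (lo, hi) in BANDS.items():
        absolute[name] = sum(p for k, p in enumerate(spectrum)
                             if lo <= k * resolution < hi)
    total = sum(absolute.values())
    return {name: power / total for name, power in absolute.items()}

# Synthetic check signal: 4 s of a 10 Hz (alpha) sine plus a weaker 6 Hz
# (theta) sine, standing in for one EEG channel.
demo = [2.0 * math.sin(2 * math.pi * 10 * t / FS)
        + math.sin(2 * math.pi * 6 * t / FS) for t in range(4 * FS)]
shares = relative_band_power(demo)
```

Because the 10 Hz component has twice the amplitude of the 6 Hz component, it carries four times the power, so most of the relative power in `shares` falls in the alpha band and the remainder in theta. On real recordings an FFT routine and a window taper would normally replace the plain DFT used here for self-containment.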

Event related spectral perturbations (ERSP)

The time sequence of ICA activations was subjected to the Fast Fourier Transform (FFT) with overlapping moving windows. Spectra in each epoch were smoothed by a 2-window moving average to reduce random error. Spectra prior to event onset were considered baseline spectra for every trial. The mean baseline spectra were converted into dB power and subtracted from the spectral power after stimulus onset so that the spectral ‘perturbation’ from the baseline could be visualized54,55. This procedure was applied to all epochs, and the results were then averaged to yield an ERSP image. For all cases, the continuous EEG signals were segmented into several epochs, each of which contained the sampled EEG data from −100 to 300 s with the stimulus onset at 0 s. The ERSP image mainly showed spectral differences after the event, since the baseline spectra from before event onset were removed. Therefore, ERSP analysis allows each component to be compared across the four tasks.
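
The baseline correction described above can be sketched as follows. This is a simplified illustration for a single epoch, assuming the spectrogram is already available as linear power per time step and frequency bin; the function name `ersp`, the argument layout, and the toy numbers are hypothetical, and the smoothing and across-epoch averaging steps are omitted.

```python
import math

def ersp(times, power):
    """Baseline-corrected spectrogram in dB for one epoch.

    times: time of each spectrogram column in seconds; negative values
           are pre-stimulus and form the baseline.
    power: power[t][f], linear spectral power per time step and frequency.
    Returns the post-onset rows (t >= 0) as dB change from baseline.
    """
    baseline_rows = [row for t, row in zip(times, power) if t < 0]
    if not baseline_rows:
        raise ValueError("no pre-stimulus samples to form a baseline")
    n_freqs = len(power[0])
    # Mean baseline spectrum per frequency, converted to dB.
    baseline_db = [
        10 * math.log10(sum(r[f] for r in baseline_rows) / len(baseline_rows))
        for f in range(n_freqs)
    ]
    return [[10 * math.log10(row[f]) - baseline_db[f] for f in range(n_freqs)]
            for t, row in zip(times, power) if t >= 0]

# Toy spectrogram with two frequency bins and two baseline time steps.
toy_times = [-2, -1, 0, 1]
toy_power = [[1.0, 4.0], [1.0, 4.0], [2.0, 4.0], [4.0, 2.0]]
toy_ersp = ersp(toy_times, toy_power)
```

In the toy data, a doubling of power relative to baseline appears as roughly +3 dB, an unchanged bin as 0 dB, and a halving as roughly −3 dB, which is how increases and decreases are read from an ERSP image.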

Experimental protocol

For the bicycle riding HMD-VR scene, a four-task experimental protocol was designed. Before each experiment, subjects were given a 10-min explanation of the experiment and completed an SSQ questionnaire to familiarize themselves with the laboratory environment. A baseline EEG was then recorded with the EEG electrode cap on and the eyes closed, referred to as the “baseline phase”32. Then, in the bicycle riding HMD-VR phase, each of the four tasks was experienced: (1) VR task: sitting in a chair and watching a simulated bicycle ride through the HMD; (2) VR with sound task: sitting in a chair and watching the bicycle ride through the HMD while listening to the screen sounds through earphones; (3) VR with riding a bicycle task: riding a stationary bicycle while watching the bicycle ride through the HMD; and (4) VR with sound and riding a bicycle task: riding a stationary bicycle while watching the bicycle ride through the HMD and listening to the screen sounds through earphones. The VR scene comprised a 360° projection (Fig. 5). Earphones delivered sound coordinated with the environment while the subject rode the bicycle wearing the HMD. The auditory stimulus intensity in the earphones during the 5 min was 56 dB or 90 dB, according to the subject's selection56. The bicycle speed was self-selected within a range of 13 to 16 km/h57. Each task was experienced for 5 min, with a 10-min recovery time between tasks15,58,59. The task order was randomized. Furthermore, subjects verbally reported their level of motion sickness once per minute during each task and completed the SSQ immediately after finishing the task13,60. To minimize influences on the EEG data recording, the laboratory temperature was kept constant, and the measuring environment was quiet and free of noise.

Statistical analysis

Data were statistically analyzed with SPSS version 25.0 (SPSS, Inc., Chicago, IL, USA). The Shapiro–Wilk test was used to test the data for normal distribution. SSQ, FMS, and EEG data were compared across the four tasks using one-way repeated measures ANOVA. Changes in EEG-band relative power were compared between tasks, and the relative theta, alpha, beta, and gamma power in the parietal and occipital lobes was then compared for all tasks using one-way repeated measures ANOVA. Post hoc pairwise comparisons between tasks were performed using Bonferroni correction. For one-way repeated measures ANOVA, the effect size statistic is usually eta squared (η²), which was interpreted as a large (η² = 0.14), medium (η² = 0.06), or small (η² = 0.01) effect. Eta squared (η²) was calculated to determine the effect size for the EEG-band relative power of the parietal and occipital areas (Table 2). Statistical significance was accepted at p < 0.05.
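
For readers who want to see what the analysis computes, the sketch below works through a one-way repeated measures ANOVA and a Bonferroni adjustment in plain Python. It is not the SPSS procedure itself: it reports partial eta squared (the repeated measures effect size reported by SPSS, assumed here to be what the text calls η²), does not test or correct for sphericity, and leaves the p-value of F to a statistics package. The scores are made-up numbers for three subjects and four tasks, not data from this study, and the function names are hypothetical.

```python
def rm_anova(scores):
    """One-way repeated measures ANOVA on scores[subject][condition].

    Returns (F, df_condition, df_error, partial_eta_squared).
    """
    n, k = len(scores), len(scores[0])
    grand = sum(map(sum, scores)) / (n * k)
    cond_means = [sum(row[j] for row in scores) / n for j in range(k)]
    subj_means = [sum(row) / k for row in scores]
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    # Residual variation after removing condition and subject effects.
    ss_error = ss_total - ss_cond - ss_subj
    df_cond, df_error = k - 1, (n - 1) * (k - 1)
    f_value = (ss_cond / df_cond) / (ss_error / df_error)
    partial_eta_sq = ss_cond / (ss_cond + ss_error)
    return f_value, df_cond, df_error, partial_eta_sq

def bonferroni(p_values):
    """Multiply each p-value by the number of comparisons, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]

# Made-up sickness scores: 3 subjects (rows) x 4 tasks (columns).
example = [[4, 3, 3, 1],
           [6, 5, 4, 2],
           [5, 4, 5, 3]]
f_value, df_cond, df_error, eta_p2 = rm_anova(example)

# Hypothetical uncorrected p-values from three pairwise comparisons.
adjusted = bonferroni([0.004, 0.03, 0.2])
```

With four tasks there are six possible pairwise comparisons, so a Bonferroni adjustment over all of them multiplies each uncorrected p-value by six, or equivalently tests each comparison at 0.05 / 6.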

Data availability

The datasets used and/or analyzed during the current study are available from the corresponding author on reasonable request.

References

Ratcliffe, N. & Newport, R. The effect of visual, spatial and temporal manipulations on embodiment and action. Front. Hum. Neurosci. 11, 227 (2017).

Roettl, J. & Terlutter, R. The same video game in 2D, 3D or virtual reality—How does technology impact game evaluation and brand placements?. PLoS One 13(7), e0200724 (2018).

Nooij, S. A. et al. Vection is the main contributor to motion sickness induced by visual yaw rotation: Implications for conflict and eye movement theories. PLoS One 12(4), e0175305 (2017).

Paillard, A. C. et al. Motion sickness susceptibility in healthy subjects and vestibular patients: Effects of gender, age and trait-anxiety. Res. Vestib. Sci. 23(4–5), 203–209 (2013).

Smart, L. J. et al. Simulation and virtual reality using nonlinear kinematic parameters as a means of predicting motion sickness in real-time in virtual environments. Hum. Factors. https://doi.org/10.1177/00187208211059623 (2021).

Abari, O., Bharadia, D., Duffield, A. & Katabi, D. Enabling high-quality untethered virtual reality. In 14th USENIX Symposium on Networked Systems Design and Implementation NSDI, vol. 17, pp. 531–544 (2017).

Saredakis, D. et al. Factors associated with virtual reality sickness in head-mounted displays: A systematic review and meta-analysis. Front. Hum. Neurosci. 14, 96 (2020).

Stanney, K. et al. Identifying causes of and solutions for cybersickness in immersive technology: Reformulation of a research and development agenda. Int. J. Hum. Comput. Stud. 36, 1783–1803 (2020).

Weech, S., Varghese, J. P. & Barnett-Cowan, M. Estimating the sensorimotor components of cybersickness. J. Neurophysiol. 120, 2201–2217 (2018).

Kourtesis, P., Collina, S., Doumas, L. A. & MacPherson, S. E. Validation of the virtual reality neuroscience questionnaire: Maximum duration of immersive virtual reality sessions without the presence of pertinent adverse symptomatology. Front. Hum. Neurosci. 13, 417 (2019).

Kourtesis, P., Collina, S., Doumas, L. A. & MacPherson, S. E. Technological competence is a pre-condition for effective implementation of virtual reality head mounted displays in human neuroscience: A technological review and meta-analysis. Front. Hum. Neurosci. 13, 342 (2019).

Rebenitsch, L. & Owen, C. Review on cybersickness in applications and visual displays. Virtual Real. 20(2), 101–125 (2016).

Sawada, Y. et al. Effects of synchronised engine sound and vibration presentation on visually induced motion sickness. Sci. Rep. 10(1), 1–10 (2020).

Reason, J. T. & Brand, J. J. Motion Sickness (Academic Press, 1975).

Howarth, P. A. & Hodder, S. G. Characteristics of habituation to motion in a virtual environment. Displays 29, 117–123 (2008).

Chen, Y. C. et al. Motion-sickness related brain areas and EEG power activates. In International Conference on Foundations of Augmented Cognition 348–354 (2009).

Wei, Y. et al. Motion sickness-susceptible participants exposed to coherent rotating dot patterns show excessive N2 amplitudes and impaired theta-band phase synchronization. Neuroimage 202, 116028 (2019).

Dziuda, Ł, Biernacki, M. P., Baran, P. M. & Truszczyński, O. E. The effects of simulated fog and motion on simulator sickness in a driving simulator and the duration of after-effects. Appl. Ergon. 45(3), 406–412 (2004).

Helland, A. et al. Driving simulator sickness: Impact on driving performance, influence of blood alcohol concentration, and effect of repeated simulator exposures. Accid. Anal. Prev. 94, 180–187 (2016).

Munafo, J., Diedrick, M. & Stoffregen, T. A. The virtual reality head-mounted display Oculus Rift induces motion sickness and is sexist in its effects. Exp. Brain Res. 235(3), 889–901 (2017).

Fernandes, A. S. & Feiner, S. K. Combating VR sickness through subtle dynamic field-of-view modification. In 2016 IEEE Symposium on 3D User Interfaces (3DUI) 201–210 (2016).

Oman, C. M. Motion sickness: A synthesis and evaluation of the sensory conflict theory. Can. J. Physiol. Pharmacol. 68(2), 294–303 (1990).

Storzer, L. et al. Bicycling and walking are associated with different cortical oscillatory dynamics. Front. Hum. Neurosci. 10, 61 (2016).

Warwick-Evans, L., Symons, N., Fitch, T. & Burrows, L. Evaluating sensory conflict and postural instability theories of motion sickness. Brain Res. Bull. 47(5), 465–469 (1998).

Stoffregen, T. A. & Smart, L. J. Jr. Postural instability precedes motion sickness. Brain Res. Bull. 47(5), 437–448 (1998).

Lim, H. K. et al. Test–retest reliability of the virtual reality sickness evaluation using electroencephalography (EEG). Neurosci. Lett. 743, 135589 (2021).

Keshavarz, B. & Hecht, H. Validating an efficient method to quantify motion sickness. Hum. Factors 53(4), 415–426 (2011).

Naqvi, S. A. A. et al. EEG based time and frequency dynamics analysis of visually induced motion sickness (VIMS). Australas. Phys. Eng. Sci. Med. 38(4), 721–729 (2015).

Zhang, X. & Sun, Y. Motion sickness predictors in college students and their first experience sailing at sea. Aerosp. Med. Hum. Perform. 91(2), 71–78 (2020).

D’Amour, S., Bos, J. E. & Keshavarz, B. The efficacy of airflow and seat vibration on reducing visually induced motion sickness. Exp. Brain Res. 235(9), 2811–2820 (2017).

Chen, Y.-C. et al. Spatial and temporal EEG dynamics of motion sickness. Neuroimage 49(3), 2862–2870 (2010).

Hsin-Hung, L. I. Study of Relationship Between Electroencephalogram Dynamics and Motion Sickness of Drivers in a Virtual Reality Dynamic Driving Environment in Hsinchu (National Chiao Tung University, 2005).

Min, B. C., Chung, S. C., Min, Y. K. & Sakamoto, K. Psychophysiological evaluation of simulator sickness evoked by a graphic simulator. Appl. Ergon. 35(6), 549–556 (2004).

Wu, J. P. EEG changes in man during motion sickness induced by parallel swing. Space Med. Med. Eng. 5(3), 200–205 (1992).

Chen, Y. et al. Assessing rTMS effects in MdDS: Cross-modal comparison between resting state EEG and fMRI connectivity. In 2017 39th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC) 1950–1953 (2017).

Chuang, S. W., Chuang, C. H., Yu, Y. H., King, J. T. & Lin, C. T. EEG alpha and gamma modulators mediate motion sickness-related spectral responses. Int. J. Neural Syst. 26(02), 1650007 (2016).

Lin, C. T., Tsai, S. F. & Ko, L. W. EEG-based learning system for online motion sickness level estimation in a dynamic vehicle environment. IEEE Trans. Neural Netw. Learn. Syst. 24(10), 1689–1700 (2013).

Lin, C. T., Chuang, S. W., Chen, Y. C., Ko, L. W., Liang, S. F. & Jung, T. P. EEG effects of motion sickness induced in a dynamic virtual reality environment. In 2007 29th Annual International Conference of the IEEE Engineering in Medicine and Biology Society 3872–3875 (2007).

Bushara, K. et al. Neural correlates of cross-modal binding. Nat. Neurosci. 6(2), 190–195 (2003).

Stein, B. E. Neural mechanisms for synthesizing sensory information and producing adaptive behaviors. Exp. Brain Res. 123(1), 124–135 (1998).

Keshavarz, B. & Hecht, H. Visually induced motion sickness and presence in videogames: The role of sound. Proceedings of the Human Factors and Ergonomics Society Annual Meeting 56(1), 1763–1767 (2012).

Li, X. et al. VR motion sickness recognition by using EEG rhythm energy ratio based on wavelet packet transform. Comput. Methods Programs Biomed. 188, 105266 (2020).

Caplan, J. B. et al. Human θ oscillations related to sensorimotor integration and spatial learning. J. Neurosci. 23(11), 4726–4736 (2003).

Bland, B. H. & Oddie, S. D. Theta band oscillation and synchrony in the hippocampal formation and associated structures: The case for its role in sensorimotor integration. Behav. Brain Res. 127(1–2), 119–136 (2001).

Ismail, L. E. & Karwowski, W. Applications of EEG indices for the quantification of human cognitive performance: A systematic review and bibliometric analysis. PLoS One 15, e0242857 (2020).

Chen, A. C. N., Dworkin, S. F., Haug, J. & Gehrig, J. Topographic brain measures of human pain and pain responsivity. Pain 37, 129–141 (1989).

Chelen, W. E., Kabrisky, M. & Rogers, S. K. Spectral analysis of the electroencephalographic response to motion sickness. Aviat. Space Environ. Med. 64(1), 24–29 (1993).

Heo, J. & Yoon, G. EEG studies on physical discomforts induced by virtual reality gaming. J. Electr. Eng. Technol. 15(3), 1323–1329 (2020).

Oh, S. Y., Boegle, R., Ertl, M., Stephan, T. & Dieterich, M. Multisensory vestibular, vestibular-auditory, and auditory network effects revealed by parametric sound pressure stimulation. Neuroimage 176, 354–363 (2018).

Delorme, A. & Makeig, S. EEGLAB: An open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. J. Neurosci. Methods 134(1), 9–21 (2004).

Snyder, K. L., Kline, J. E., Huang, H. J. & Ferris, D. P. Independent component analysis of gait-related movement artifact recorded using EEG electrodes during treadmill walking. Front. Hum. Neurosci. 9, 639 (2015).

Jeong, D., Yoo, S. & Jang, Y. Motion sickness measurement and analysis in virtual reality using deep neural networks algorithm. J. KCGS 25(1), 23–32 (2019).

Ko, K. E., Yang, H. C. & Sim, K. B. Emotion recognition using EEG signals with relative power values and Bayesian network. Int. J. Control Autom. Syst. 7(5), 865–870 (2009).

Pfurtscheller, G. & Aranibar, A. Evaluation of event-related desynchronization (ERD) preceding and following voluntary self-paced movement. Electroencephalogr. Clin. Neurophysiol. 46(2), 138–146 (1979).

Saxena, M. & Gupta, A. Exploration of temporal and spectral features of EEG signals in motor imagery tasks. In 2021 International Conference on COMmunication Systems and NETworkS 736–740 (2021).

Nakajima, S., Ino, S., Yamashita, K., Sato, M. & Kimura, A. Proposal of reduction method of Mixed Reality sickness using auditory stimuli for advanced driver assistance systems. In 2009 IEEE International Conference on Industrial Technology 1–5 (2009).

Bogacz, M. et al. Comparison of cycling behavior between keyboard-controlled and instrumented bicycle experiments in virtual reality. Transp. Res. Rec. 2674(7), 244–257 (2020).

Han, D. U. et al. Development of a method of cybersickness evaluation with the use of 128-channel electroencephalography. Sci. Emot. Sensib. 22(3), 3–20 (2019).

Akizuki, H. et al. Effects of immersion in virtual reality on postural control. Neurosci. Lett. 379(1), 23–26 (2005).

Lin, C. T. et al. Distraction-related EEG dynamics in virtual reality driving simulation. In 2008 IEEE International Symposium on Circuits and Systems 1088–1091 (2008).

Acknowledgements

This research was supported by the Basic Science Research Program through the National Research Foundation of Korea (NRF), funded by the Ministry of Education, Science and Technology (NRF-2021R1A2C1095047).

Author information

Contributions

Conceptualization: S.Y.P., S.S.Y.; Methodology: S.Y.P., S.S.Y.; Software: S.Y.P., J.W.K.; Validation: S.Y.P., J.W.K., S.S.Y.; Formal analysis: S.Y.P., J.W.K.; Investigation: S.Y.P., J.W.K.; Resources: S.S.Y.; Data curation: S.Y.P.; Writing and original draft preparation: S.Y.P.; Writing, review, and editing: S.S.Y.; Visualization: S.Y.P.; Supervision: S.S.Y.; Project administration: S.Y.P., J.W.K., S.S.Y.; Funding acquisition: J.W.K., S.S.Y.; All authors have read and agreed to the final version of the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Yeo, S.S., Kwon, J.W. & Park, S.Y. EEG-based analysis of various sensory stimulation effects to reduce visually induced motion sickness in virtual reality. Sci Rep 12, 18043 (2022). https://doi.org/10.1038/s41598-022-21307-z

DOI: https://doi.org/10.1038/s41598-022-21307-z
