Abstract

Augmented reality (AR) devices, such as smart glasses, enable users to see both the real world and virtual images simultaneously, contributing to an immersive experience in interactions and visualization. Recently, to reduce the size and weight of smart glasses, waveguides incorporating holographic optical elements in the form of advanced grating structures have been utilized to provide lightweight solutions instead of bulky helmet-type headsets. However, current waveguide displays often have limited display resolution, efficiency and field-of-view, with complex multi-step fabrication processes of lower yield. In addition, current AR displays often suffer from vergence-accommodation conflict in the augmented and virtual images, resulting in focusing-visual fatigue and eye strain. Here we report metasurface optical elements designed and experimentally implemented as a platform solution to overcome these limitations. Through careful dispersion control in the excited propagation and diffraction modes, we design and implement our high-resolution full-color prototype, via the combination of analytical–numerical simulations, nanofabrication and device measurements. With the metasurface control of the light propagation, our prototype device achieves a 1080-pixel resolution, a field-of-view of more than 40° and an overall input–output efficiency of more than 1%, and addresses the vergence-accommodation conflict through our focal-free implementation. Furthermore, our AR waveguide is achieved in a single metasurface-waveguide layer, aiding the scalability and process yield control.

Introduction

Augmented reality (AR) and mixed/merged reality (MR) devices enable the user to see both the real world and virtual images simultaneously, leading to considerable interest as next-generation mobile wearable devices beyond the smartphone1,2,3,4,5,6. Benchmarked by the iconic Google Glass, there have been many multifunctional displays including recent efforts by Microsoft Hololens and Magic Leap. A key element in the smart glasses is the optical waveguide that delivers virtual images from the display source to the eye, augmenting our vision through the transparent optical glasses. For compactness, recent optical elements have utilized holographic and diffractive optical elements (HOEs and DOEs) instead of bulky free-space optics headsets such as birdbath designs using bulk lenses and mirrors. These HOEs and DOEs have recently been implemented in various diffraction grating structures such as Bragg, volume, surface relief and blazed gratings7,8,9,10,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27,28.

AR devices with HOEs and DOEs serve as next-generation display technology, with improving display resolution, lower time-delay in images, and cost scalability29,30. While the AR display and sensing efforts have benefited from smartphone technology developments, most HOE- and DOE-based AR devices still have physical performance limitations, such as insufficient display resolution, small field-of-view, low input–output efficiencies, and limited manufacturing yield and scalability31,32, that hinder their widespread adoption. Furthermore, the vergence-accommodation conflict (VAC) is a common and important challenge for head-mounted displays (HMDs)33,34,35,36,37. Our eyes use multiple depth cues to perceive the depth of an image, including an accommodation depth (wherein our eyes adjust the focus of their lenses to acquire a clear image) and a vergence depth (based on the angle between our eyes to perceive a distance). For real-world images, the accommodation and vergence distances are the same. For AR display images, however, the virtual image comes from the display plane and not from the depth of the image. Hence, the binocular disparity used to set up the image’s virtual focal distance (vergence distance) does not match the virtual image source’s actual position (accommodation distance). The eyes' attempt to match the accommodation power to the vergence distance results in blurred images, fatigue and nausea in AR-MR imaging perception, especially for long-term display usage. Maxwellian-view displays, which project transmissive displays directly on the retina, can alleviate this conflict by reducing the dependence on the eye lens accommodation38,39,40,41, but they often rely on bulky free-space optical elements and have a small field-of-view.
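To make the mismatch between the two depth cues concrete, the following minimal sketch computes the vergence angle for a fixation distance and the vergence-accommodation mismatch expressed in diopters. The interpupillary distance of 63 mm and the example distances are illustrative assumptions, not values from this work, and the function names are hypothetical.

```python
import math


def vergence_angle_deg(ipd_m: float, distance_m: float) -> float:
    """Angle between the two lines of sight when both eyes fixate
    a point straight ahead at the given distance."""
    return math.degrees(2.0 * math.atan(ipd_m / (2.0 * distance_m)))


def vac_mismatch_diopters(vergence_distance_m: float,
                          accommodation_distance_m: float) -> float:
    """Difference between the vergence and accommodation demands in diopters (1/m)."""
    return abs(1.0 / vergence_distance_m - 1.0 / accommodation_distance_m)


if __name__ == "__main__":
    ipd = 0.063            # assumed interpupillary distance in meters
    virtual_object = 0.5   # assumed vergence distance of the virtual object (m)
    display_plane = 2.0    # assumed optical distance of the display plane (m)
    print("vergence angle (deg):", vergence_angle_deg(ipd, virtual_object))
    print("mismatch (D):", vac_mismatch_diopters(virtual_object, display_plane))
```

For a real-world object both distances coincide and the mismatch is zero; a fixed-focus display produces a nonzero mismatch whenever the rendered depth differs from the display plane.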

Metasurfaces can overcome the physical limitations of HOEs and DOEs, including our implementation of focal-free metalenses to overcome the vergence-accommodation conflict while preserving a large field-of-view. Conventional HOEs and DOEs utilize periodic grating structures for multiple beam diffraction, fine-tuned for red–green–blue wavelengths simultaneously. To achieve multiple focal positions, multiple HOE/DOE waveguides are implemented simultaneously, a complexity that can result in lower display quality from unwanted diffractions and in multi-step fabrication processing. Metasurfaces, with spatially-configured arrays of subwavelength-scale optical scatterers, are an alternative platform for direct wavefront shaping42,43,44,45,46,47,48,49,50. The unique versatility and multi-functionality of the metasurface platform have thus led to advances in flat-optics devices, all-dielectric platforms, polarization-spatial conversion, quantum photonics, metalenses51,52,53,54,55,56,57,58,59,60,61,62,63,64,65,66,67, and AR visors68,69,70. Utilizing the building blocks within the metasurface array, metasurface geometrical parameters (such as the size, shape and orientation) can control the reflected and transmitted wavefront from first-principles71,72,73,74,75,76,77,78,79,80,81,82,83,84,85,86,87,88,89,90,91,92,93,94. The subwavelength orientation also reduces the generation of spurious diffraction orders versus those observed in HOEs and DOEs, resulting in a higher waveguide input–output efficiency and suppression of unwanted effects such as virtual focal points, halos, and ghost images95,96,97,98,99,100,101,102,103,104,105,106,107.

Here we describe our metasurface waveguide for high resolution, field-of-view (FoV), and output efficiency, while preserving a focal-free operation. Our metasurface optical elements (MOEs) are implemented for full-color operation, with the careful dispersion control in the excited propagation and diffraction modes, through analytical–numerical modeling, rigorous nanofabrication, and measurement. Each pixel in the input and output MOEs is carefully optimized for the collective high resolution and output efficiencies, while realizing a focal-free Maxwellian-view display system to overcome the vergence-accommodation conflict. Implemented in a CMOS-compatible cleanroom and foundry service, our MOE waveguide simultaneously preserves a large FoV across the full-color gamut, in our multi-period single-layer demonstration.

Results

Figure 1 shows the key architecture and approach of our MOE AR display waveguide, with the AA’ cross-section (Fig. 1a) and top-view (Fig. 1b) highlights in the display beam propagation. First, we note that, by incorporating careful design of each pixel for the MOEs, the RGB spectrum is guided through the eye lens to the retina, enabling a close to Maxwellian-view operation, for focal-free display to address the vergence-accommodation conflict. Second, our designed MOE display has an FoV determined only by our metasurface grating structure, achieving ≈ 55° currently or higher, even with normal-index glass. Third, as shown in Fig. 1a, the In-MOE is at a slant angle on the glass waveguide to direct the wave propagation towards the Out-MOE. Without the slant angle, the diffraction would be in both positive and negative directions (of the MOE surface normal), leading to a lower (approximately half) efficiency. Together with rigorous coupled-wave analysis to minimize undesired diffraction modes, we are able to maximize the efficiencies of the desired modes towards the output, achieving efficiencies of more than 1%. This aids the embedding of augmented information and virtual images with real-world images, especially with outdoor light, as shown in the example of Fig. 1d. We also note that prior HOE-DOE AR waveguides, with multi-layer multi-glass waveguides, have spurious diffraction modes and hence efficiencies sizably lower than 1%, necessitating indoor operation or shielding outdoor light by 80%. Fourth, as shown in Fig. 1a, c, our MOE waveguide utilizes only a single glass layer for the whole RGB spectrum, reducing unwanted diffraction and increasing efficiency. Our single-layer implementation also brings compactness and lightweight operation, while simplifying the MOE fabrication and improving yield.

(a, b) Overview of our metasurface AR/MR waveguide glass architecture with cross-section and top views of the ray propagation, respectively with the cross-section area shown as AA′ in the top view. (c) Single glass waveguide compared with conventional multi-glass waveguide for each color and each focal plane. (d) Efficiency comparison for virtual image.

We start by defining the FoV bounds from conventional total internal reflection of a waveguide25,108,109,110, without a metasurface. As illustrated in Fig. 2a–d, for a ± 10° input, the ray-traced output would be ∓ 10°; one can obtain an increased output FoV with larger input angles. However, the waveguide total internal reflection requirement bounds the positive input angles to less than 13.3°. This limits the FoV to less than or ≈ 26.7°, for a glass waveguide of 1.46 refractive index. For one of the higher-index glasses at 1.6 refractive index, as shown in Fig. 2e, the FoV can be increased to 34.9° but rapidly faces an asymptotic limit. By controlling the wave propagation through multi-period metasurfaces, Fig. 2f shows the increase of the FoV to ≈ 55° for a normal-index glass at 1.46 refractive index. This illustration is for 646 nm, supplementing the 520 nm overview shown in Fig. 1a. Through rigorous coupled-wave analysis (RCWA)111,112,113,114, simple modal method (SMM)115,116,117,118,119,120, and finite-difference time-domain (FDTD)121, we optimize our MOE at each position for each wavelength. With the distance from the out-MOE to the eyebox set by design and a desired FoV fixed, we fine-tune our MOE grating periods for the desired input–output angle at each pixel, for each wavelength. We note that our MOE implementation is mostly bounded by nanofabrication lithography resolution.
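The quoted total-internal-reflection bounds are consistent with a simple estimate, sketched below under stated assumptions: a single-period in-coupling grating in air, first-order diffraction, a field symmetric about the surface normal, and guided angles allowed anywhere between the critical angle and grazing. With the grating equation n sin θ_g = sin θ_in + λ/Λ, requiring 1 ≤ n sin θ_g ≤ n at both field edges gives sin θ_max = (n − 1)/2. This is an idealized textbook-style bound and not necessarily the exact ray-tracing procedure behind Fig. 2; the function names are hypothetical.

```python
import math


def max_half_angle_deg(n_glass: float) -> float:
    """Largest symmetric half field angle (in air) that a single-period
    grating can couple into guided modes: sin(theta_max) = (n - 1) / 2."""
    return math.degrees(math.asin((n_glass - 1.0) / 2.0))


def max_symmetric_fov_deg(n_glass: float) -> float:
    """Full field-of-view for a field symmetric about the surface normal."""
    return 2.0 * max_half_angle_deg(n_glass)


def centering_wavelength_over_period(n_glass: float) -> float:
    """Value of lambda / period that places the field edges exactly at the
    critical-angle and grazing limits: (n + 1) / 2."""
    return (n_glass + 1.0) / 2.0


if __name__ == "__main__":
    for n in (1.46, 1.6):
        print(n, max_half_angle_deg(n), max_symmetric_fov_deg(n))
```

For n = 1.46 and n = 1.6 this gives half-angles of about 13.3° and 17.5°, that is, FoVs of about 26.6° and 34.9°, close to the bounds quoted above. Because the bound grows only as (n − 1)/2, raising the glass index alone yields slowly diminishing returns, which is the motivation for the multi-period metasurface approach.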

Reconstructed total internal reflection and input–output propagation of the conventional optical element waveguide. The chief ray angle is illustrated. (a) Overall input–output ray propagation. (b–d) Comparisons of 10°, 0°, and − 10° propagation (e) Designed FoVs for different waveguide glass refractive indices, versus angle in waveguide. The dashed vertical lines show the total internal reflection bounds for the two corresponding refractive indices. (f) Ray tracing of MOE for 646 nm showing FoV of 55°.

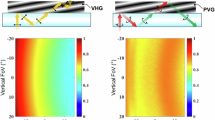

Figure 3 next shows the subsequent MOE efficiency computation and optimization for different propagation x-positions along the metasurface y-center, mapped for a range of SiNx refractive indices, fill factors and SiNx film heights. Our MOE incorporates a pixel-by-pixel phase control by introducing phase changes within a length of the wavelength. The abrupt phase shifts enable freedom in controlling the wavefront, with the propagation of light being governed by Fermat’s principle63. We observe that the efficiency is higher on the right side (positive-x) of each MOE and goes up to as much as 50% total input–output efficiency. This can be explained with Fig. 1a, where the input–output beams on the out-MOE are shown for our slanted input MOE design. In a slanted input and via the Littrow mounting effect, the output positive–negative diffraction lobes have unequal efficiencies, with the αP (positive diffraction lobe) having lower diffraction efficiencies than the αN (negative diffraction lobe, more than the surface normal and 90°, in the reverted beam direction). These 2D maps are for a center wavelength of 520 nm and the y-center; other wavelengths and y-positions simply have an offset in the efficiency map or perturbed efficiencies.
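As a hedged illustration of the pixel-by-pixel phase control, the sketch below uses the first-order grating equation n sin θ_g = sin θ_in + λ/Λ, which is equivalent to a local phase gradient dΦ/dx = 2π/Λ, to find the local period that steers a given input angle into a given angle inside the glass. The 50° in-glass angle and normal incidence are assumptions chosen only for illustration, and the function names are hypothetical; this simple relation fixes directions only and says nothing about the diffraction efficiencies mapped in Fig. 3.

```python
import math


def local_period_nm(wavelength_nm: float, theta_in_deg: float,
                    theta_glass_deg: float, n_glass: float) -> float:
    """Local grating period that sends light incident from air at theta_in
    into the first diffraction order at theta_glass inside the glass."""
    k = (n_glass * math.sin(math.radians(theta_glass_deg))
         - math.sin(math.radians(theta_in_deg)))
    if k <= 0:
        raise ValueError("requested angles need a non-positive grating vector")
    return wavelength_nm / k


def diffracted_angle_deg(wavelength_nm: float, theta_in_deg: float,
                         period_nm: float, n_glass: float) -> float:
    """First-order diffraction angle inside the glass for a given period."""
    s = (math.sin(math.radians(theta_in_deg)) + wavelength_nm / period_nm) / n_glass
    if abs(s) > 1.0:
        raise ValueError("first order is evanescent for these parameters")
    return math.degrees(math.asin(s))


def phase_gradient_rad_per_um(period_nm: float) -> float:
    """Equivalent local phase gradient dPhi/dx = 2*pi / period."""
    return 2.0 * math.pi / (period_nm * 1e-3)


if __name__ == "__main__":
    # 520 nm and n = 1.46 are taken from the text; the angles are assumed.
    period = local_period_nm(520.0, 0.0, 50.0, 1.46)
    print("period (nm):", period)
    print("check angle (deg):", diffracted_angle_deg(520.0, 0.0, period, 1.46))
    print("phase gradient (rad/um):", phase_gradient_rad_per_um(period))
```

Evaluating `local_period_nm` over a grid of positions and wavelengths gives a first-pass period map that a rigorous solver could then refine for efficiency.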

Numerical design map optimization of the metasurface waveguide glass, showing the metasurface optical waveguide display diffraction efficiencies for input/output MOE in the waveguide propagation direction for different positions in the waveguide x, fill factor (FF), and height parameters. Rigorous coupled-wave analysis (RCWA) numerical simulation is used in each design point of the 2D maps. (a) The height is fixed at 0.35 μm. Color scale bar denotes total intensity input–output propagation efficiencies in percentage. (b) Top panel for a fill factor (FF) of 0.4 and bottom panel for a fill factor of 0.5, with varying waveguide heights. The same color scale bar is used. In this implementation, optimal regions for the waveguide heights, fill factors and refractive indices of the metasurface are denoted. (c) Fill factor 0.46 and SiNx refractive index of 2.14 (at wavelength 521 nm) with wavelengths of 447 nm, 521 nm, and 644 nm for blue, green, and red, respectively, and the combined average.

In optimizing for high-quality images for AR devices, we observe two features in these 2D map plots: the average efficiency and the dark low-efficiency crossing lines. The efficiency fluctuations, including the dark crossing lines, can be understood by comparing the grating to the simple case of a slab waveguide, where a wave excites discrete modes in the gratings113. The diffraction properties and efficiency are mainly determined by the modes within the grating region. These modes propagate through the grating region with different effective indices and couple out at the grating-substrate interface108. The behavior of these modes results in the fluctuations and also causes the sharp dips in the efficiency maps of Fig. 3. To choose an implementation for a desired overall design (FoV, full color, and glass refractive index prototype), parameters that result in high average efficiencies and the lowest number of dark crossing lines must be identified. Higher refractive indices generally have higher average efficiency trends, but the number of dark lines almost doubles when the refractive index increases from 2.0 to 2.3. The fill factor and height shift the position of the dark crossing lines and have optimal conditions for highest average efficiencies, represented as bright islands where the brightest pixels are located. The change in optimal height of the MOE based on the wavelength (color) and the combined average efficiency map is shown in Fig. 3c. Similar trends in the map and an increase in optimal height with wavelength can be observed. Based on these modelled mapping results, a SiNx refractive index of 2.14, an MOE height of 0.35 μm and a fill factor of 0.46 are chosen as the best conditions, avoiding most of the dark crossing lines in our display implementation.
With each of the input and output MOEs having a maximum simulated efficiency close to 50% and a diffraction efficiency division between the three colors (one third efficiency at each input/output), the theoretical efficiency is calculated to be close to 2.8%, that is, (0.5 × 1/3)² ≈ 0.028.
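The arithmetic behind the 2.8% estimate can be sketched as follows. The reading assumed here is that the one-third color division applies at each of the two MOEs and that the input and output stages multiply; the function name and parameters are hypothetical.

```python
def theoretical_io_efficiency(per_moe_efficiency: float = 0.5,
                              n_colors: int = 3,
                              n_moes: int = 2) -> float:
    """Input-output efficiency for one color when each MOE's peak efficiency
    is shared equally among the colors and the MOE stages are cascaded."""
    per_color_per_moe = per_moe_efficiency / n_colors
    return per_color_per_moe ** n_moes


if __name__ == "__main__":
    eta = theoretical_io_efficiency()
    print(f"theoretical input-output efficiency: {100 * eta:.2f}%")
```

With the defaults this evaluates to (0.5/3)², about 2.8%, which sits above the measured input–output efficiency of more than 1% reported for the prototype.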

Incorporating our AR waveguide design implementations, we fabricated the nanostructured metasurface optical elements in a silicon foundry process, with nitride film deposition, deep-ultraviolet lithography and MOE nanopatterning. Figure 4 summarizes our fabricated MOE wafer, with Fig. 4b illustrating the cross-section scanning electron micrograph (SEM) of our grating structures, and Fig. 4c illustrating the top-view zoom-in (SEM) of our optimized full-color MOEs. We note that the fabrication dimensions and specifications meet our designs. After each step in the fabrication, the MOE feature critical dimensions are carefully examined to confirm the design fidelity of each metasurface region to achieve the AR waveguide.

Nanofabricated metasurface wafer and optical elements. (a) Wafer-scale nanofabrication of the metasurfaces. (b) Cross-section scanning electron micrograph (SEM) of the engineered grating ridges on the glass wafer. Scale bar: 1 μm. (c) Top-view SEM of the optimized full-color metasurface elements. Scale bar: 5 μm.

Figure 5 shows several experimental setups built specifically for the AR waveguide display characterization. First, Fig. 5a–c show the distortion calibration of the In-MOE and the focal length calibration of the Out-MOE, across the red–green–blue spectrum. The distortion calibration122,123 of the In-MOE is achieved by measuring the relative distances among nine laser spots at the Out-MOE. The small In-MOE distortion has little effect on the mono-MOE and the color-MOE. Based on the calibrated distortion, we modified the MOE and reduced the small distortions to negligible levels by improving the distortion calibration setup and optimizing the analysis software for the distortion calibration. The focal length calibration is achieved by tracking the nine laser spots out of the Out-MOE, which determines the focal spot astigmatism of the MOE, making it a key parameter for the design of our MOE. Figure 5d is the instrumentation for FoV124,125,126,127 and input–output efficiency characterization. After guiding the collimated laser beam into the In-MOE, we can obtain the FoV by scanning the beam size near the focal plane of the Out-MOE. The input–output efficiency is obtained by measuring the input and output powers. By changing the input beam size and position on the In-MOE, we can obtain the whole efficiency map of the MOE, which helps to improve the uniformity of the MOE. Figure 5e shows the setup we built for the modulation transfer function (MTF) characterization based on a point source mapping123. A focused laser beam passes through the In-MOE, the Out-MOE and an eye-equivalent lens, and is imaged on a CMOS camera.
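A point-source image can be reduced to an MTF by taking the magnitude of its Fourier transform and normalizing to the zero-frequency value. The sketch below is a generic illustration of that step, not the analysis software used in this work: it assumes a one-dimensional line-spread function (for example, the camera spot summed along one axis) and a known pixel pitch at the retina-equivalent plane, and it uses a synthetic Gaussian spot as stand-in data. All function names and numerical values are hypothetical.

```python
import cmath
import math


def mtf_from_lsf(lsf, pixel_pitch_mm):
    """Return (frequencies in line pairs/mm, MTF) from a sampled
    line-spread function, using a direct discrete Fourier transform."""
    n = len(lsf)
    total = sum(lsf)
    if n == 0 or total <= 0:
        raise ValueError("line-spread function must be non-empty with positive sum")
    freqs, mtf = [], []
    for k in range(n // 2 + 1):
        acc = sum(v * cmath.exp(-2j * math.pi * k * i / n)
                  for i, v in enumerate(lsf))
        freqs.append(k / (n * pixel_pitch_mm))
        mtf.append(abs(acc) / total)  # normalized so that MTF(0) = 1
    return freqs, mtf


def frequency_at_contrast(freqs, mtf, contrast=0.4):
    """First spatial frequency at which the MTF drops below the given
    contrast, found by linear interpolation; None if it never does."""
    for i in range(1, len(mtf)):
        if mtf[i - 1] >= contrast > mtf[i]:
            t = (mtf[i - 1] - contrast) / (mtf[i - 1] - mtf[i])
            return freqs[i - 1] + t * (freqs[i] - freqs[i - 1])
    return None


def gaussian_lsf(n, pixel_pitch_mm, sigma_mm):
    """Synthetic Gaussian spot profile used here as stand-in data."""
    center = (n - 1) / 2.0
    return [math.exp(-0.5 * (((i - center) * pixel_pitch_mm) / sigma_mm) ** 2)
            for i in range(n)]


if __name__ == "__main__":
    pitch = 0.002  # assumed sampling pitch in mm
    lsf = gaussian_lsf(256, pitch, 0.010)  # assumed 10 um spot width (sigma)
    freqs, mtf = mtf_from_lsf(lsf, pitch)
    print("frequency at 0.4 contrast (lps/mm):", frequency_at_contrast(freqs, mtf))
```

For real camera data, background subtraction and windowing would typically be needed before the transform; those steps are omitted here for brevity.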

Measurement setups of the metasurface waveguide field-of-view, input–output efficiency, and modulation transfer function. (a–c) Calibration of the fx and fy focal lengths and in-coupling distortion, across the red–green–blue spectrum. Note, for panel (c) the blue laser input shows up as violet in this figure because of the color camera capture. (d) Setup for the field-of-view and input–output efficiency characterization. (e) Point source setup for the modulation transfer function characterization.

By Fourier analysis of the measured images, Fig. 6 shows the quantified MTF of our MOE waveguide. The MTF specifies how the relative contrast of different spatial frequencies is handled by our MOE display system6. Here we select a Sony laser projector (MP-CL1, update rate per image of 60 Hz, resolution of 1920 × 720, projecting 43,200 lines/s) for the compact optical engine. In this setup we remove limitations from the optical engine on the MTF caused by the limited capability to balance all aberrations using off-the-shelf lenses. These aberrations include spherical aberration, chromatic aberration, astigmatism, field curvature, and distortion. The contrast sensitivity of the human eye depends on several conditions such as the luminance, the viewing angle of the object, and the surrounding illumination128,129,130. Based on our model, we aggregate these conditions and assume the contrast sensitivity of the human eye is above 0.4 (ref. 131) when the resolution of the image is below 1080 pixels (full resolution). In addition, the average diameter of the human retina is 24 mm (ref. 132) and its effective diameter within the FoV of our MOE is 20 mm. Therefore, we set the MOE display contrast target at 0.4, with a desired spatial frequency up to 27 lps/mm [1080 pixels ÷ 2 (pixels per line pair) ÷ 20 mm].
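The conversion between pixel count and spatial frequency on the retina used for this target can be written as a short helper. The two-pixels-per-line-pair factor and the 20 mm effective retinal extent are taken from the text; the function names are hypothetical.

```python
def pixels_to_lps_per_mm(pixels: float, retina_extent_mm: float,
                         pixels_per_line_pair: float = 2.0) -> float:
    """Spatial frequency (line pairs/mm) needed to resolve the given number
    of pixels across the given extent on the retina."""
    return pixels / pixels_per_line_pair / retina_extent_mm


def lps_per_mm_to_pixels(lps_per_mm: float, retina_extent_mm: float,
                         pixels_per_line_pair: float = 2.0) -> float:
    """Inverse conversion: resolvable pixels across the given extent."""
    return lps_per_mm * pixels_per_line_pair * retina_extent_mm


if __name__ == "__main__":
    print(pixels_to_lps_per_mm(1080, 20.0))   # target frequency for 1080 pixels
    print(lps_per_mm_to_pixels(13.0, 20.0))   # pixels resolvable at 13.0 lps/mm
```

With these values, 1080 pixels corresponds to 27 lps/mm, and a measured 13.0 lps/mm corresponds to 520 pixels across the same 20 mm extent.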

Measured modulation transfer function of metasurface waveguide prototypes: (a) Mono-MOE (red spectrum) and color-MOE (across the red–green–blue spectrum). (b) Mono-MOE (red spectrum) is compared across four positions in the MOE (MOE is symmetric with mirror plane at center in y direction). The target contrast is 0.4 at 27 lps/mm which is the full high-definition 1080-pixels for displays. For a contrast at 0.4, a 1064 × 1064 pixel display (≈ 29.0 lps/mm) for the red mono-MOE and a 520 × 520 pixel display (≈ 13.0 lps/mm, mainly limited by green) for the color-MOE are experimentally observed.

Illuminated by a focused red laser, the measured MTF of the mono-MOE is depicted as a grey plot in Fig. 6a. For a contrast at 0.4, a 1064 × 1064 pixel display (≈ 29.0 lps/mm) of the red mono-MOE is experimentally observed. This spatial frequency is slightly above our 27 lps/mm target for 1080 × 1080 resolution. For a contrast at 0.4 and our color-MOE, the display shows an experimental 520 × 520 pixel resolution. This follows from the ≈ 13.0 lps/mm limit, mainly bounded by the green segments of our MOE; the red and blue segments have higher resolution at ≈ 17.0 and ≈ 15.3 lps/mm, respectively, in this proof-of-principle demonstration. This green-segment resolution of the color-MOE can be improved via optimization of fabrication to increase input–output efficiency. The more complex color-MOE currently has a lower resolution than the mono-MOE because of beam overlap between the red–green–blue segments and the resulting reduced intensity contrast. In Fig. 6b, we show the comparison of the red mono-MOE across four different positions: center, left, bottom and corner. For a contrast at 0.4, the red mono-MOE shows the highest resolution at the center and bottom, with ≈ 29.0 and ≈ 30.4 lps/mm, respectively. The left edge and corner show slight degradation of resolution with ≈ 25.5 and ≈ 23.9 lps/mm, respectively, due to lower input/output efficiency at those points. Consequently, the resolution of the edge and corner can also be increased in a similar way to the color-MOE. We also note that, although the MTF of our system shown here is free of aberrations from lenses, the performance of the actual optical engine setup, which consists of off-the-shelf lenses, is currently bounded mainly by the slight mismatch between our optical engine and MOE caused by unbalanced aberrations. To overcome this limitation, we will use customized lenses in future work to match the optical engine to our MOE.

Discussion

With the MTF determined and as proof-of-principle, we build a test measurement system consisting of the optical engine, the MOEs (either the green mono-MOE or color-MOE prototypes), an eye-equivalent lens, and a retina-equivalent white screen as shown in Fig. 7. An example input image at 1080 × 720 pixels is illustrated in Fig. 7a. For the green mono-MOE, only the green laser in our laser beam scanning projector is turned on and therefore only the green channel of the original input image (Fig. 7a) is projected through the whole system onto the retina-equivalent white screen. This is depicted in Fig. 7b, for the metasurface display demonstration. We note that the boundary edges of the green image are not captured, and this is due to the cylinder lens pair size in our metasurface demonstration. For the color-MOE, all lasers (red, green and blue) in the laser beam scanning projector are turned on and therefore all RGB channels of the original input image are projected through our metasurface waveguide demonstration. This is illustrated in Fig. 7c, as a proof-of-principle. The image is reddish as the red segments of the color-MOE currently have higher efficiency than the green and blue segments. This can be re-balanced by optimizing the RGB efficiency of our color-MOE. To show the capability of our MOE in real-world applications, Fig. 8 illustrates the augmented reality image captured on a CMOS camera. The image was observed on a red mono-MOE prototype with the Sony laser projector for the optical engine. We also note that the main cause of the image degradation is the mismatch between the optical engine and MOEs, which introduces spherical and chromatic aberrations, astigmatism, field curvature and distortion. This formation of the multi-pixel displays across the input MOE, output MOE, waveguide, optical engine, and imaging sub-system, however, paves the way for nanostructured metasurfaces as a platform for potential AR displays.

Experimental proof-of-principle observations of our metasurface waveguide display. (a) Original input image, from the International Committee for Display Metrology (ICDM) test pattern, sourced from VESA flat-panel display measurement (FPDM) standard. (b) Green mono-MOE smart glass output green image with only green laser input. (c) Color-MOE smart glass output image with red, green and blue laser input. (d) Optical engine and optical lens coupling interface in the metasurface demonstration, with projected image at the retina-equivalent white screen location. Note that the camera photographs (b–d) and document printouts are displayed in lower resolution than actual viewing in live operation. The modulation transfer function (Fig. 6) is the more rigorous demonstration of the metasurface glass waveguide display performance.

Display results from our metasurface waveguide display. (a–d) Inset shows the original images displayed. Observed images captured via a CMOS camera. The superimposed image of panel (a) is from the International Committee for Display Metrology (ICDM) test pattern, sourced from VESA flat-panel display measurement (FPDM) standards.

Table 1 summarizes and compares the performance of our prototype with prototypical AR/MR waveguide displays29,133,134,135,136,137,138. These prior approaches use HOE-DOE and waveguides, typically consisting of in-coupling, intermediate, and out-coupling stages. They have, however, low output efficiencies (<< 1%) since their multiple diffraction elements and waveguides generate more undesired diffraction light, while our display architecture can be achieved with one waveguide and two MOEs. The prior DOEs also typically have smaller horizontal FoVs, below 40°. The prior multi-layer grating structures are also more challenging for scaling up the fabrication. The current limitation of our single-layer, two-MOE implementation is a smaller eyebox, resulting in cutting of the virtual image with eye movement from the center position. However, mechanical or optical methods for eyebox increase are currently widely researched, and more advanced metasurface concepts, including eye-tracking139 and increasing view-points140, can potentially overcome this. We also note the prior implementations are single-focal or dual-focal, with the user only observing the virtual object clearly when focusing their eyes on the focal plane. In other words, the user cannot see virtual objects at infinity if they are looking at a close-by object. In contrast, our MOE architecture is by design focal-free with the image projected onto the retina, alleviating the vergence-accommodation conflict and enabling the virtual object to be clearly seen wherever the user is focusing, from near to infinity. Enabled by numerical design-optimization and foundry-based nanofabrication, we demonstrate experimental proof-of-principle operation of metasurface optical elements towards AR/MR waveguide displays. Our metasurfaces have enabled an FoV greater than 40° and input–output efficiencies greater than 1%, and are based on a focal-free implementation, in support of augmented display technologies.

Methods

Device fabrication

First, a 350-nm Si-rich SiNx layer is deposited on 500-μm-thick fused silica wafers using low-pressure chemical vapor deposition (LPCVD, Tystar Titan II) with a gas mixture of SiH2Cl2 and NH3. The resulting silicon nitride layer is patterned via lithography either at Broadcom Inc., by an optimized ASML PAS5500-1150 scanner capable of 90 nm resolution with 280 nm of positive resist and 40 nm of bottom anti-reflective coating, or at UCSB Nanofab, with a 248 nm DUV ASML 5500 stepper using a positive resist of UV210 and top anti-reflective coating DUV42P-6 with thicknesses of 230 nm and 60 nm, respectively. Subsequently, the SiNx layer is etched at UCLA Nanolab by dry reactive ion etching in a ULVAC NLD 570 fluorine etching machine, using a photoresist etch mask. The etch parameters are 38 sccm argon, 4 sccm oxygen, and 38 sccm CHF3, 700 W ICP power, and 100 W RIE power at a pressure of 3 mTorr. The silicon nitride etch achieves a 3:1 aspect ratio with 150 nm feature sizes and a sidewall angle of 86 degrees with a mask selectivity of 1.8:1. The wafers are then diced. Each input–output MOE is then bonded to the glass waveguide for testing. Separate input–output MOEs are examined via SEM (Hitachi S4700) for sidewall and dimensional characterization.

References

Kress, B. C. Digital optical elements and technologies (EDO19): applications to AR/VR/MR. In Proceedings of SPIE 11062, Digital Optical Technologies 2019, Vol. 1106222 (2019).

Bottani, E. & Vignali, G. Augmented reality technology in the manufacturing industry: A review of the last decade. IISE Trans. 51, 284 (2019).

Dey, A., Billinghurst, M., Lindeman, R. W. & Swan, J. E. II. A systematic review of 10 years of augmented reality usability studies: 2005 to 2014. Front. Robot. AI 5, 37 (2018).

Kress, B. C. & Shin, M. Diffractive and holographic optics as optical combiners in head mounted displays. In UbiComp '13 Adjunct: Proceedings of the 2013 ACM conference on Pervasive and ubiquitous computing adjunct publication, 1479 (2013).

Berryman, D. R. Augmented reality: A review. Med. Ref. Serv. Q. 31, 212 (2012).

Rolland, J. & Cakmakci, O. Head-worn displays: The future through new eyes. Opt. Photon. News 20, 20 (2009).

Orange-Kedem, R. et al. 3D printable diffractive optical elements by liquid immersion. Nat. Commun. 12, 3067 (2021).

Goncharsky, A., Goncharsky, A., Melnik, D. & Durlevich, S. Nanooptical elements for visual verification. Sci. Rep. 11, 2426 (2021).

Yu, Y. et al. An edge-lit volume holographic optical element for an objective turret in a lensless digital holographic microscope. Sci. Rep. 10, 14580 (2020).

Banerji, S., Cooke, J. & Sensale-Rodriguez, B. Impact of fabrication errors and refractive index on multilevel diffractive lens performance. Sci. Rep. 10, 14608 (2020).

Goncharsky, A. & Durlevich, S. DOE for the formation of the effect of switching between two images when an element is turned by 180 degrees. Sci. Rep. 10, 10606 (2020).

Cho, S. Y., Ono, M., Yoshida, H. & Ozaki, M. Bragg-Berry flat reflectors for transparent computer-generated holograms and waveguide holography with visible color playback capability. Sci. Rep. 10, 8201 (2020).

Wang, H. & Piestun, R. Azimuthal multiplexing 3D diffractive optics. Sci. Rep. 10, 6438 (2020).

Arns, J. A., Colburn, W. S. & Barden, S. C. Volume phase gratings and their potentials for astronomical applications. Proc. SPIE 3355, 866 (1998).

Blanche, B. P., Gailly, P., Habraken, S., Lemaire, P. & Jamar, C. Volume phase holographic gratings: large size and high diffraction efficiency. Opt. Eng. 43, 2603 (2004).

Mukawa, H. et al. A full color eyewear display using planar waveguides with reflection volume holograms. J. Soc. Inf. Disp. 17, 185 (2009).

Guo, J., Tu, Y., Yang, L., Wang, L. & Wang, B. Design of a multiplexing grating for color holographic waveguide. Opt. Eng. 54, 125105 (2015).

Wu, Z., Liu, J. & Wang, Y. A high-efficiency holographic waveguide display system with a prism in-coupler. J. Soc. Inf. Disp. 21, 524 (2013).

Kämpfe, T., Kley, E. B., Tünnermann, A. & Dannberg, P. Design and fabrication of stacked, computer generated holograms for multicolor image generation. Appl. Opt. 46, 5482 (2007).

Gupta, M. C. & Peng, S. T. Diffraction characteristics of surface-relief gratings. Appl. Opt. 32, 2911 (1993).

Kwan, C. & Taylor, G. W. Optimization of the parallelogrammic grating diffraction efficiency for normally incident waves. Appl. Opt. 37, 7698 (1998).

Moharam, M. G. & Gaylord, T. K. Diffraction analysis of dielectric surface-relief gratings. J. Opt. Soc. Am. 72, 1385 (1982).

Preist, T. W., Harris, J. B., Wanstall, N. P. & Sambles, J. R. Optical response of blazed and overhanging gratings using oblique Chandezon transformations. J. Mod. Opt. 44, 1073 (1997).

Yokomori, K. Dielectric surface-relief gratings with high diffraction efficiencies. Appl. Opt. 23, 2303 (1984).

Tishchenko, A. V. Phenomenological representation of deep and high contrast lamellar gratings by means of the modal method. Opt. Quantum Electron. 37, 309 (2005).

Miller, J. M., de Beaucoudrey, N., Chavel, P., Turunen, J. & Cambril, E. Design and fabrication of binary slanted surface-relief gratings for a planar optical interconnection. Appl. Opt. 36, 5717 (1997).

Maikisch, J. S. & Gaylord, T. K. Optimum parallel-face slanted surface-relief gratings. Appl. Opt. 46, 3674 (2007).

Levola, T. & Laakkonen, P. Replicated slanted gratings with a high refractive index material for in and outcoupling of light. Opt. Express 15, 2067 (2007).

Kress, B. C. & Chatterjee, I. Waveguide combiners for mixed reality headsets: a nanophotonics design perspective. Nanophotonics 10, 41–74 (2021).

Richter, F. The Diverse Potential of VR & AR Applications. www.statista.com/chart/4602/virtual-and-augmented-reality-software-revenue (2016).

Virtual & Augmented Reality: The Next Big Computing Platform? www.goldmansachs.com/insights/pages/virtual-and-augmented-reality-report.html (2016).

Hall, S. & Takahashi, R. Augmented and virtual reality: The promise and peril of immersive technologies. McKinsey Insight Article (2017).

Cakmakci, O. & Rolland, J. Head-worn displays: A review. J. Disp. Technol. 2, 199 (2006).

Bharadwaj, S. R. & Candy, T. R. Accommodative and vergence responses to conflicting blur and disparity stimuli during development. J. Vis. 9, 4 (2009).

Hoffman, D. M., Girshick, A. R., Akeley, K. & Banks, M. S. Vergence-accommodation conflicts hinder visual performance and cause visual fatigue. J. Vis. 8, 33 (2008).

Lambooij, M., IJsselsteijn, W., Fortuin, M. & Heynderickx, I. Visual discomfort and visual fatigue of stereoscopic displays: A review. J. Imaging Sci. Technol. 53, 030201 (2009).

Reichelt, S., Haussler, R., Futterer, G. & Leister, N. Depth cues in human visual perception and their realization in 3D displays. In Proceedings of SPIE 7690, Three-Dimensional Imaging, Visualization, and Display 2010 and Display Technologies and Applications for Defense, Security, and Avionics IV, Vol. 7690, 76900B (2010).

Lin, T., Zhan, T., Zou, J., Fan, F. & Wu, S. Maxwellian near-eye display with an expanded eyebox. Opt. Express 28, 38616 (2020).

Chang, C., Cui, W., Park, J. & Gao, L. Computational holographic Maxwellian near-eye display with an expanded eyebox. Sci. Rep. 9, 18749 (2019).

Lee, J. S., Kim, Y. K., Lee, M. Y. & Won, Y. H. Enhanced see-through near-eye display using time-division multiplexing of a Maxwellian-view and holographic display. Opt. Express 27, 689 (2019).

Takaki, Y. & Fujimoto, N. Flexible retinal image formation by holographic Maxwellian-view display. Opt. Express 26, 22985 (2018).

Fan, J., Cheng, Y. & He, B. High-efficiency ultrathin terahertz geometric metasurface for full-space wavefront manipulation at two frequencies. J. Phys. D Appl. Phys. 54, 11 (2021).

Wei, Q., Huang, L., Zentgraf, T. & Wang, Y. Optical wavefront shaping based on functional metasurfaces. Nanophotonics 9, 987 (2020).

Wang, Z. et al. On-chip wavefront shaping with dielectric metasurface. Nat. Commun. 10, 3547 (2019).

Lee, G. et al. Metasurface eyepiece for augmented reality. Nat. Commun. 9, 4562 (2018).

Kildishev, A. V., Boltasseva, A. & Shalaev, V. M. Planar photonics with metasurfaces. Science 339, 1232009 (2013).

Yu, N. & Capasso, F. Flat optics with designer metasurfaces. Nat. Mater. 13, 139 (2014).

Genevet, P., Capasso, F., Aieta, F., Khorasaninejad, M. & Devlin, R. Recent advances in planar optics: From plasmonic to dielectric metasurfaces. Optica 4, 139 (2017).

Falcone, F. et al. Babinet principle applied to the design of metasurfaces and metamaterials. Phys. Rev. Lett. 93, 197401 (2004).

Hsiao, H. H., Chu, C. H. & Tsai, D. P. Fundamentals and applications of metasurfaces. Small Methods 1, 1600064 (2017).

Solntsev, A. S., Agarwal, G. S. & Kivshar, Y. S. Metasurfaces for quantum photonics. Nat. Photonics 15, 327–336 (2021).

Zhou, J. et al. Metasurface enabled quantum edge detection. Sci. Adv. 6, eabc4385 (2020).

Bekenstein, R. et al. Quantum metasurfaces with atom arrays. Nat. Phys. 16, 676–681 (2020).

Georgi, P. et al. Metasurface interferometry toward quantum sensors. Light Sci. Appl. 8, 70 (2019).

Altuzarra, C. et al. Imaging of polarization-sensitive metasurfaces with quantum entanglement. Phys. Rev. A 99, 020101 (2019).

Ebbesen, T. W., Lezec, H. J., Ghaemi, H., Thio, T. & Wolff, P. Extraordinary optical transmission through sub-wavelength hole arrays. Nature 391, 667 (1998).

Yin, L. et al. Subwavelength focusing and guiding of surface plasmons. Nano Lett. 5, 1399 (2005).

Liu, Z. et al. Focusing surface plasmons with a plasmonic lens. Nano Lett. 5, 1726–1729 (2005).

Huang, F. M., Zheludev, N., Chen, Y. & Garcia de Abajo, F. J. Focusing of light by a nanohole array. Appl. Phys. Lett. 90, 091119 (2007).

Sun, Z. & Kim, H. K. Refractive transmission of light and beam shaping with metallic nano-optic lenses. Appl. Phys. Lett. 85, 642 (2004).

Shi, H. et al. Beam manipulating by metallic nano-slits with variant widths. Opt. Express 13, 6815 (2005).

Verslegers, L. et al. Planar lenses based on nanoscale slit arrays in a metallic film. Nano Lett. 9, 235 (2009).

Yu, N. et al. Light propagation with phase discontinuities: Generalized laws of reflection and refraction. Science 334, 333 (2011).

Aieta, F. et al. Out-of-plane reflection and refraction of light by anisotropic optical antenna metasurfaces with phase discontinuities. Nano Lett. 12, 1702 (2012).

Aieta, F. et al. Aberration-free ultrathin flat lenses and axicons at telecom wavelengths based on plasmonic metasurfaces. Nano Lett. 12, 4932 (2012).

Memarzadeh, B. & Mosallaei, H. Array of planar plasmonic scatterers functioning as light concentrator. Opt. Lett. 36, 2569 (2011).

Ni, X., Ishii, S., Kildishev, A. V. & Shalaev, V. M. Ultra-thin, planar, Babinet-inverted plasmonic metalenses. Light Sci. Appl. 2, e72 (2013).

Nikolov, D. K. et al. Metaform optics: Bridging nanophotonics and freeform optics. Sci. Adv. 7, 18 (2021).

Bayati, E., Wolfram, A., Colburn, S., Huang, L. & Majumdar, A. Design of achromatic augmented reality visors based on composite metasurfaces. Appl. Opt. 60, 4 (2021).

Nikolov, D. K. et al. See-through reflective metasurface diffraction grating. Opt. Mater. Express 9, 10 (2019).

Brongersma, M. L. The road to atomically thin metasurface optics. Nanophotonics 10, 643–654 (2021).

Zou, H., Li, P. & Peng, P. An ultra-thin acoustic metasurface with multiply resonant units. Phys. Lett. A 384, 126151 (2020).

Guo, X., Ding, Y., Duan, Y. & Ni, X. Nonreciprocal metasurface with space–time phase modulation. Light Sci. Appl. 8, 123 (2019).

Kang, M., Feng, T., Wang, H.-T. & Li, J. Wave front engineering from an array of thin aperture antennas. Opt. Express 20, 15882 (2012).

Chen, X. et al. Dual-polarity plasmonic metalens for visible light. Nat. Commun. 3, 1198 (2012).

Sun, S. et al. High-efficiency broadband anomalous reflection by gradient meta-surfaces. Nano Lett. 12, 6223 (2012).

Sun, S. et al. Gradient-index meta-surfaces as a bridge linking propagating waves and surface waves. Nat. Mater. 11, 426 (2012).

Pors, A., Nielsen, M. G., Eriksen, R. L. & Bozhevolnyi, S. I. Broadband focusing flat mirrors based on plasmonic gradient metasurfaces. Nano Lett. 13, 829 (2013).

Zhang, S. et al. High efficiency near diffraction-limited mid-infrared flat lenses based on metasurface reflect arrays. Opt. Express 24, 18024 (2016).

Monticone, F., Estakhri, N. M. & Alù, A. Full control of nanoscale optical transmission with a composite metascreen. Phys. Rev. Lett. 110, 203903 (2013).

Jahani, S. & Jacob, Z. All-dielectric metamaterials. Nat. Nanotechnol. 11, 23 (2016).

Kuznetsov, A. I., Miroshnichenko, A. E., Brongersma, M. L., Kivshar, Y. S. & Luk’yanchuk, B. Optically resonant dielectric nanostructures. Science 354, aag2472 (2016).

Flanders, D. C. Submicrometer periodicity gratings as artificial anisotropic dielectrics. Appl. Phys. Lett. 42, 492 (1983).

Hasman, E., Kleiner, V., Biener, G. & Niv, A. Polarization dependent focusing lens by use of quantized Pancharatnam–Berry phase diffractive optics. Appl. Phys. Lett. 82, 328 (2003).

Levy, U., Kim, H.-C., Tsai, C.-H. & Fainman, Y. Near-infrared demonstration of computer-generated holograms implemented by using subwavelength gratings with space-variant orientation. Opt. Lett. 30, 2089 (2005).

Lin, D., Fan, P., Hasman, E. & Brongersma, M. L. Dielectric gradient metasurface optical elements. Science 345, 298 (2014).

Khorasaninejad, M. et al. Metalenses at visible wavelengths: Diffraction-limited focusing and subwavelength resolution imaging. Science 352, 1190 (2016).

Luo, W., Xiao, S., He, Q., Sun, S. & Zhou, L. Photonic spin Hall effect with nearly 100% efficiency. Adv. Opt. Mater. 3, 1102 (2015).

Zheng, G. et al. Metasurface holograms reaching 80% efficiency. Nat. Nanotechnol. 10, 308 (2015).

Kress, B. C. & Meyrueis, P. Applied Digital Optics: From Micro-optics to Nanophotonics (Wiley, 2009).

Lu, F., Sedgwick, F. G., Karagodsky, V., Chase, C. & Chang-Hasnain, C. J. Planar high-numerical-aperture low-loss focusing reflectors and lenses using subwavelength high contrast gratings. Opt. Express 18, 12606 (2010).

Fattal, D., Li, J., Peng, Z., Fiorentino, M. & Beausoleil, R. G. Flat dielectric grating reflectors with focusing abilities. Nat. Photonics 4, 466 (2010).

Arbabi, A., Horie, Y., Ball, A. J., Bagheri, M. & Faraon, A. Subwavelength-thick lenses with high numerical apertures and large efficiency based on high-contrast transmit arrays. Nat. Commun. 6, 7069 (2015).

West, P. R. et al. All-dielectric subwavelength metasurface focusing lens. Opt. Express 22, 26212 (2014).

Shalaginov, M. Y. et al. Reconfigurable all-dielectric metalens with diffraction-limited performance. Nat. Commun. 12, 1225 (2021).

Balli, F., Sultan, M., Lami, S. K. & Hastings, J. T. A hybrid achromatic metalens. Nat. Commun. 11, 3892 (2020).

Presutti, F. & Monticone, F. Focusing on bandwidth: Achromatic metalens limits. Optica 7, 624–631 (2020).

Lin, R. J. et al. Achromatic metalens array for full-colour light-field imaging. Nat. Nanotechnol. 14, 227–231 (2019).

Zhan, A. et al. Low-contrast dielectric metasurface optics. ACS Photonics 3, 209 (2016).

Estakhri, N. M., Neder, V., Knight, M. W., Polman, A. & Alù, A. Visible light, wide-angle graded metasurface for back reflection. ACS Photonics 4, 228 (2017).

Khorasaninejad, M. et al. Polarization-insensitive metalenses at visible wavelengths. Nano Lett. 16, 7229 (2016).

Devlin, R. C., Khorasaninejad, M., Chen, W. T., Oh, J. & Capasso, F. Broadband high-efficiency dielectric metasurfaces for the visible spectrum. Proc. Natl. Acad. Sci. 113, 10473 (2016).

Khorasaninejad, M. et al. Visible wavelength planar metalenses based on titanium dioxide. IEEE J. Sel. Top. Quantum Electron. 23, 4700216 (2017).

Vo, S. et al. Sub-wavelength grating lenses with a twist. IEEE Photonics Technol. Lett. 26, 1375 (2014).

Arbabi, A. et al. Miniature optical planar camera based on a wide-angle metasurface doublet corrected for monochromatic aberrations. Nat. Commun. 7, 13682 (2016).

Groever, B., Chen, W. T. & Capasso, F. Meta-lens doublet in the visible region. Nano Lett. 17, 4902 (2017).

Chen, W. T. et al. Immersion meta-lenses at visible wavelengths for nanoscale imaging. Nano Lett. 17, 3188 (2017).

Clausnitzer, T. et al. An intelligible explanation of highly-efficient diffraction in deep dielectric rectangular transmission gratings. Opt. Express 13, 10448 (2005).

Zheng, J., Zhou, C., Feng, J. & Wang, B. Polarizing beam splitter of deep-etched triangular-groove fused-silica gratings. Opt. Lett. 33, 1554 (2008).

Braig, C. C. et al. An EUV beamsplitter based on conical grazing incidence diffraction. Opt. Express 20, 1825 (2012).

Wan, C., Gaylord, T. K. & Bakir, M. S. Rigorous coupled-wave analysis equivalent-index-slab method for analyzing 3D angular misalignment in interlayer grating couplers. Appl. Opt. 55, 10006 (2016).

Moharam, M. G., Grann, E. B. & Pommet, D. A. Formulation for stable and efficient implementation of the rigorous coupled-wave analysis of binary gratings. J. Opt. Soc. Am. A 12, 1068 (1995).

Moharam, M. G., Pommet, D. A., Grann, E. B. & Gaylord, T. K. Stable implementation of the rigorous coupled wave analysis for surface-relief dielectric gratings: enhanced transmittance matrix approach. J. Opt. Soc. Am. A 12, 1077 (1995).

Moharam, M. G. & Gaylord, T. K. Rigorous coupled-wave analysis of planar-grating diffraction. J. Opt. Soc. Am. 71, 811 (1981).

Wan, C., Gaylord, T. K. & Bakir, M. S. Grating design for interlayer optical interconnection of in-plane waveguides. Appl. Opt. 55, 2601 (2016).

Allured, R. & McEntaffer, R. T. Analytical alignment tolerances for off-plane reflection grating spectroscopy. Exp. Astron. 36, 661 (2013).

Eisen, L. et al. Planar configuration for image projection. Appl. Opt. 45, 4005 (2006).

Cameron, A. The application of holographic optical waveguide technology to Q-sight family of helmet mounted displays. Proc. SPIE 7326, 1 (2009).

Kogelnik, H. Coupled-wave theory of thick hologram gratings. Bell Syst. Tech. J. 48, 2909 (1969).

Sheng, P., Stepleman, R. S. & Sanda, P. N. Exact eigenfunctions for square-wave gratings: Application to diffraction and surface-plasmon calculations. Phys. Rev. B 26, 2907 (1982).

Oskooi, A. et al. MEEP: A flexible free-software package for electromagnetic simulations by the FDTD method. Comput. Phys. Commun. 181, 687 (2010).

Lee, S., Abràmoff, M. D. & Reinhardt, J. M. Retinal image mosaicing using the radial distortion correction model. Proc. SPIE 6914, 691435 (2008).

Li, J., Su, J. & Zeng, X. A solution method for image distortion correction model based on bilinear interpolation. Comput. Opt. 43, 1 (2019).

Chen, Z., Sang, X., Li, H., Wang, Y. & Zhao, L. Ultra-lightweight and wide field of view augmented reality virtual retina display based on optical fiber projector and volume holographic lens. Chin. Opt. Lett. 17, 090901 (2019).

Martinez, C., Krotov, V., Fowler, D. & Haeberlé, O. Lens-free near-eye intraocular projection display, concept and first evaluation. In Computational Optical Sensing and Imaging, CW1C, Vol. 5 (2016).

Matthies, D. J., Haescher, M., Alm, R. & Urban, B. Properties of a peripheral head-mounted display (PHMD). Int. Conf. Hum. Comput. Interact. 528, 208–213 (2015).

Boreman, G. D. Modulation Transfer Function in Optical and Electro-optical Systems (SPIE Optical Engineering Press, 2001).

Barten, P. G. Physical model for the contrast sensitivity of the human eye. Proc. SPIE 1666, 57 (1992).

Barten, P. G. Contrast Sensitivity of the Human Eye and Its Effects on Image Quality (SPIE Optical Engineering Press, 1999).

Barten, P. G. Formula for the contrast sensitivity of the human eye. Proc. SPIE 5294, 231 (2003).

Kress, B. C. Digital optical elements and technologies (EDO19): Applications to AR/VR/MR. Proc. SPIE 11062, 1106222 (2019).

Guo, Y., Yao, G., Lei, B. & Tan, J. Monte Carlo model for studying the effects of melanin concentrations on retina light absorption. J. Opt. Soc. Am. A 25, 304 (2008).

Zhang, Y. & Fang, F. Development of planar diffractive waveguides in optical see-through head-mounted displays. Precis. Eng. 60, 482–496 (2019).

Kress, B. C. Optical waveguide combiners for AR headsets: Features and limitations. In Proceedings of SPIE 11062, Digital Optical Technologies 2019, Vol. 11062, 110620J (2019).

Kress, B. C. & Cummings, W. J. 11–1: invited paper: Towards the ultimate mixed reality experience: HoloLens display architecture choices. SID Symp. Dig. Tech. Pap. 48, 127 (2017).

Kress, B. C. & Cummings, W. J. Optical architecture of HoloLens mixed reality headset. Proc. SPIE 10335, 103350K (2017).

Microsoft HoloLens, Mixed Reality Technology for Business.

Magic Leap, Augmented reality platform for Enterprise.

Kim, J. et al. Foveated AR: Dynamically-foveated augmented reality display. ACM Trans. Graph. 38, 4 (2019).

Jo, Y., Yoo, C., Bang, K., Lee, B. & Lee, B. Eye-box extended retinal projection type near-eye display with multiple independent viewpoints. Appl. Opt. 60, 4 (2021).

Acknowledgements

The authors acknowledge discussions with Dr. James F. McMillan, Vikas Vepachedu, Leo Chen, Darwin Hu, and Hank Lee; lithography and fabrication at Broadcom; and funding from the National Science Foundation and industry. The authors also acknowledge that the original images in Figs. 7a and 8a are International Committee for Display Metrology (ICDM) test pattern images, sourced from the VESA flat-panel display measurement (FPDM) standards. A portion of this work was performed in the UCLA Nanolab, California NanoSystems Institute (CNSI) at UCLA and UCSB Nanofabrication Facility, which are open-access laboratories.

Author information

Contributions

H.B. and Y.L. designed the MOE layout and performed numerical simulations. H.B., Y.L., and C.W.W. designed the MOE AR system. H.B., Y.L., and H.Y. calibrated, optimized, and measured the MOE AR system. H.B., B.M., and T.G.L. performed the device nanofabrication. H.B., Y.L., H.Y., and C.W.W. contributed to discussion and revision of the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Boo, H., Lee, Y.S., Yang, H. et al. Metasurface wavefront control for high-performance user-natural augmented reality waveguide glasses. Sci Rep 12, 5832 (2022). https://doi.org/10.1038/s41598-022-09680-1

DOI: https://doi.org/10.1038/s41598-022-09680-1
