Abstract

European ash (Fraxinus excelsior) and narrow-leafed ash (F. angustifolia) are keystone forest tree species with a broad ecological amplitude and significant economic importance. Besides global warming both species are currently under significant threat by an invasive fungal pathogen that has been spreading progressively throughout the continent for almost three decades. Ash dieback caused by the ascomycete Hymenoscyphus fraxineus is capable of damaging ash trees of all age classes and often ultimately leads to the death of a tree after years of progressively developing crown defoliation. While studies at national and regional level already suggested rapid decline of ash populations as a result of ash dieback, a comprehensive survey at European level with harmonized crown assessment data across countries could shed more light into the population decline from a pan-European perspective and could also pave the way for a new conservation strategy beyond national boarders. Here we present data from the ICP Forests Level I crown condition monitoring from 27 countries resulting in > 36,000 observations. We found a substantial increase in defoliation and mortality over time indicating that crown defoliation has almost doubled during the last three decades. Hotspots of mortality are currently situated in southern Scandinavia and north-eastern Europe. Overall survival probability after nearly 30 years of infection has already reached a critical value of 0.51, but with large differences among regions (0.20–0.86). Both a Cox proportional hazard model as well as an Aalen additive regression model strongly suggest that survival of ash is significantly lower in locations with excessive water regime and which experienced more extreme precipitation events during the last two decades. Our results underpin the necessity for fast governmental action and joint rescue efforts beyond national borders since overall mean defoliation will likely reach 50% as early as 2030 as suggested by time series forecasting.

Similar content being viewed by others

Introduction

European ash (Fraxinus excelsior) and narrow-leafed ash (F. angustifolia) are key forest tree species with high ecological and economical value for the European forest sector1. As light demanding pioneer species, they succesfully inhabit a wide range of different ecosystems including riparian forests and mountain forests amongst others2. Their ability to particularly tolerate temporary flooding makes them both indispensable components of alluvial forests with strongly fluctuating hydrological regimes3. Moreover, both ash species are host to a wide range of other taxa including mammals, birds, invertebrates, and bryophytes. As such, Mitchell et al.4 estimated that from 953 species associated with European ash, 69 can be characterized as being “highly associated” with its host, which means that these species will be under threat of becoming rapidly extinct when the host populations decline.

However, European ash and narrow-leafed ash are currently endangered, since the invasive pathogen Hymenoscyphus fraxineus (T.Kowalski) Baral, Queloz & Hosoya has entered Europe around 19905. The disease is commonly known as ash dieback (hereafter abbreviated with “ADB”) and has devastatingly spread over entire Europe throughout the last 30 years6 with an expansion velocity of 30–70 km per year7,8,9,10. The epicentre of the disease is thought to be located in north-eastern Poland and has concentrically expanded through wind-dispersed ascospores as well as by human activity11. Briefly, leaves of ash trees are infected by windborne ascospores from where the fungus spreads via the petiole-shoot junction into the woody tissue of the tree. Recurrent infections cause rapid crown decline which often leads to mortality of trees after a few years12. Both species are thought to be equally susceptible against ADB12, while Fraxinus ornus as the third ash species occurring in Europe seems to be more resilient against the disease13 and is hence not considered in this study.

The extent to which the European population of F. excelsior and F. angustifolia has been declining since the arrival of the pathogen is largely unknown, which makes targeted conservation efforts at pan-European level challenging. National and regional reports on mortality rates were so far based upon literature reviews14, experimental forest sites15,16, and national forest inventory data17,18,19. However, the different approaches, datasets, and their specific sampling biases make it difficult to draw a conclusive pattern for European ash and narrow-leafed ash across their entire distributions. In particular, mapping mortality rates in space and time for entire Europe across the last three decades based on a consistent dataset could inform conservationists and policy makers at national and international levels about the current status of ash and can unravel mortality hotspots which should be given the highest priority for rescue and conservation programs in the near future. In this study we make use of survey data from the ICP Forests Level I network, providing detailed information on crown condition and diseases in annual resolution since 198720. In total, we analyzed 36,170 observations on ash trees from 1987 to 2020 in 27 countries and incorporated stand information as well as climate data in order to test for covariation between ash dieback-induced mortality and ecological variables that may accelerate or decelerate the decline as was recently suggested in earlier studies at regional scale17,19,21. We hypothesize that the mortality of both ash species has significantly increased throughout the last three decades resulting in a devastating ash dieback driven by ADB rather than by other abiotic and biotic agents. Moreover, we hypothesize that moist growing conditions, high abundance of ash, and moist weather conditions exacerbate mortality as they are likely to provide optimal growing conditions for the pathogen. Finally, we aim to use the past defoliation history to forecast the near future and unravel critical points for the long-term vitality and persistence for both species.

Results

Observed mortality and defoliation across Europe between 1987 and 2020

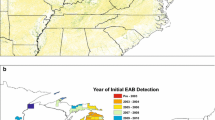

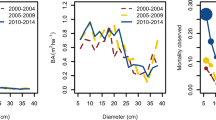

We identified 407 plots across 27 countries in which European ash or narrow-leafed ash occurred since the start of the survey in 1987 (Fig. 1, Table 1). This resulted in a total of 36,170 observations (plots × trees × years). Between 1987 and 2000 mortality frequency was moderate and occurred only sporadically in Spain, France, Romania, Slovakia, Italy, Lithuania and Moldova (range 0.006–0.15). Nevertheless, one stand in Belarus already showed 100% mortality between 1987 and 2000 (Fig. 2). Between 2000 and 2010 mortality accelerated mainly in Poland, Lithuania, Belarus, Sweden, Denmark, Germany, Czech Republic and at some local spots in France. While in most of these countries mortality rates were still low (1 to 3%), Poland and Sweden already showed significant mortality within stands (10% to 100%). However, in the following decade (2010–2020) ash mortality significantly accelerated with observed mortality in nearly all parts of Europe. Most notably, southern Scandinavia became a mortality hotspot with eight stands where all ash trees died within this period.

Generally, crown conditions of ash trees became progressively worse since the beginning of the survey across the entire range of occurrence. While average defoliation was 15% until the year 2000, it already reached 25% in 2010. After the latest survey in 2020 mean defoliation of European ash and narow-leafed ash has reached 38% on average. Mean defoliation showed strongest increase in the eastern, northern, and central part of the distribution since 1987 (Belarus, Denmark, Estonia, Latvia, Lithuania, Poland, Sweden, Germany, France, Luxembourg, Slovakia), and mostly non-significant trends in the southern part (Croatia, Moldova, Romania, Serbia, Slovenia, Spain, Switzerland, Turkey) (Fig. 3).

Mean defoliation across plots by survey country. Red dashed line shows linear time-series trend. 1Note that the downward trend in Denmark is caused by lost of of some plots and does not show recovery. 2Norway assessed ash only since 2013 and only dead trees were recorded without defoliation. 3Sweden changed its survey rotation since 2009 and only dead trees were recorded since then in 5 year intervals.

Survival probability after 30 years of infection history

The two different survival models (simultaneous/non-simultaneous infection) gave similar results for the first 10 years after infection, but diverged right after that period. After 20 years of infection model 1 which assumed an equal start of infection for all ash populations estimated a much higher survival probability of 0.95 (95% CI 0.939–0.959) while model 2 (assuming that infection happened delayed) estimated an overall survival probability of 0.7 (0.607–0.734). Overall survival probability after the last assessment of trees in 2020 was 0.84 (0.823–0.858) for model 1 and 0.5 for model 2 (0.404–0.628). The Kaplan-Maier step functions for both models are shown in Fig. 4.

Kaplan-Maier survival function and overall survival probabilities for two different models (black: infection started in 1992 reegardless of country, red: infection happened delayed with distinct infection timepoints in European countries). For Model 2 we assumed infection start of single countries according to dates of first arrival published in Vasaitis &Enderle 2017.

Cox proportional hazard model & Aalen additive regression model

Both the Cox-model and Aalens additive regression model unraveled significant covariates which determined survival of ash trees. The Cox-model found a significant influence of water status (p < 0.01) and a highly significant influence of extreme precipitation events (p < 0.001) as determinants for survival. Hazard ratios indicated that mortality risk increases towards sites having higher amount of excess water and also towards locations that have experienced more months with extreme precipitation during the last decades (Table 2). In concordance, the Aalen additive regression model revealed a strong negative impact of extreme precipitation events (slope: 0.003; p < 0.01), but also a compensating effect of extreme drought events (slope: -0.012; p < 0.01). Effect sizes of these two covariates were moderate and appeared mainly at time periods between 15 and 20 years after infection (Table 3; Supporting information S1).

Timer-series forecasting of crown condition development

Both estimators (ARIMA, exponential smoothing) gave similar forecasting results with continously increasing defoliation until the end of the forecasting period (2071) and strongly suggested that approximately until the year 2030 (exponential smoothing: 2031, ARIMA: 2033) mean defoliation could reach a critical threshold of 50% (Fig. 5). Confidence intervals were generally narrower for the ARIMA model compared to exponential smooting until 2036, but continously inreased after that time point towards more uncertain estimates. Both models were evaluated against the last ten years of observations (2010–2020) and exhibited high correlation between observed and forecasted data (Pearson-moment correlation 0.82 and 0.84, respectively). The ARIMA model showed generally better goodness-of-fit between observed and forecasted data with a MSE of 6.6% versus 16% (Supporting Information S2).

Mortality of ash versus background mortality

Mortality of ash trees was largely decoupled from background mortality within the same plots (Fig. 6). As already outlined above some mortality occurred in southern Europe in 1990 probably as a result of abiotic factors and therefore strongly correlated with background mortality. Throughout the remaining period there was literally no correlation between mortality of ash and background mortality (r = 0.15). Since 2010, ash mortality clearly accelerated and exceeded background mortality. By the end of 2020 10.8% of all surveyed ash trees had died while background mortality in the same plots was only 0.7%.

Discussion

Mortality of ash has clearly accelerated during the last three decades and has already reached a catastrophic peak in northern Europe where a large majority of ash trees have disappeared from active survey plots by the end of 2020. This is also in agreement with an estimated overall survival probability of 0.2 after nearly twenty years of infection history in this region. The numbers reported here could even represent the most optimistic estimate for survival of ash in Scandinavia, since some of the plots in Sweden and Denmark showed already severe crown defoliation before annual assessments were cancelled (Supporting Information S3). As an example, plot #55 from Denmark harboured 23 ash trees which had almost 95% defoliated crowns before the plot was abondoned in 2010 and most of the trees had meanwhile died. (Dr. Iben Margrete Thomsen, University of Copenhagen, pers. communication). Our observations are in concordance with local and regional surveys from national forest inventories, which reported massive decline of European ash in Southern Scandinavia in recent years. Solheim and Hietala22 monitored the progress of ash dieback in south-western Norway since 2008 and estimated a spread velocity of 51 km per year. Diaz-Yanez et al.17 investigated survival of European ash in Norwegian forest inventory plots and found a 74% increase in mortality rate after the disease has become prevalent in Norway. Although the Norwegian plots in our study are generally charaterized by very low frequency of ash, the results can nevertheless be interpreted as a strong argument for the efficient and devastating spread of ash dieback, because the fungus seems not to depend on high host density in order to conquer new territory quickly. Also in Denmark and Sweden European ash began to disappear rapidly after the disease arrived in 2003 and 2001, respectively15,23,24. In Sweden F. excelsior became red-listed in 2010 and later even reached the status “critically-endangered”15. Consequently and in concert with the results presented here, the evidence strongly suggests that Fraxinus excelsior is currently under extreme extinction risk in northern Europe.

Furthermore, other hotspots of ash mortality are currently situated in the Baltic region (Lithuania, Poland), in eastern Europe (Belarus), north-eastern and south western Germany, as well as in eastern France (Fig. 2). Although not as severe as in in the northern part, our results clearly demonstrate that the disease is establishing across all of Europe, which was also very recently confirmed by national forest inventory data19. Since the Baltic and eastern countries were the first which came in contact with the disease in the beginning of the 1990s, the pattern may be unanticipated and one would expect highest mortality in these countries rather than in the north or in the west. One explanation for this could be that the density of survey plots in which ash occurrs are lower in the eastern part compared to the west (134 vs. 175). This is indeed true for Estonia and Latvia, for instance, where European ash is most likely underrepresented when compared to data from national forest inventories. The second explanation could be that assesment schemes in some eastern countries were harmonized a little later and therefore some trees were probably already gone when the survey started. As an example, Poland started to assess European ash first in 2006 when the disease had already been affecting stands for more than 15 years which could be the reason for the observed discrepancy.

Southern Europe seems to suffer least from ash dieback since mortality rates are still low and happened only sporadically. Mortality in northern Spain and southern France which occurred early at the beginning of the survey was certainly not related to ash dieback, since both areas were free of the disease at that time25. Besides the fact that the infection history of Southern Europe is still short and therefore may have caused higher survival, abiotic reasons such as higher temperatures, lower rainfall and generally drier site condtions could have contributed as well as they may inhibit fungal growth21,26. Nevertheless, H. Fraxineus was very recently also identified for the first time on ash trees in north-western Spain27 and therefore we have to asume that the disease is still in its most infantile stage in the Southern region.

In general, mortality of ash during the last three decades was largely decoupled from background mortality and therefore unlikely to be caused by other biotic or abiotic reasons such as drought, storms, and others (Fig. 6). As already mentioned, only the very first incidences of mortality in 1990 were co-occurring with mortality in other species and therefore are not attributable to ash dieback. Since 2010 the European-wide mortality of ash has even exceeded background mortality and is continously rising, while background mortality in the same plots remains constant. This demonstrates that in fact the majority of all cases in which ash trees are currently dying can be assigned to ash dieback as the predominant cause of death. Although the specific cause of crown damage in the ICP Forests Level I data can generally be retrieved down to the species level in the case of fungal agents (e.g. Hymenoscyphus fraxineus as the main causal agent of ash dieback), it is difficult to incorporate such data in detailed analyses, since the specific cause was hardly assessed and often not recognized in the very early years of the infection history and given that the damage coding system was first introduced in 2005. Nevertheless, H. fraxineus (or Chalara fraxinea as its asexual stadium is named) is currently accounting for 53% of all damage causes where a specific biotic agent can be determined (data not shown). This corresponds to approximately every fourth ash tree in the survey (23.4%) which is already carrying the disease by 2020.

We aimed to assess the overall survival probability of ash after an exposure time of 28 years to ash dieback in order to provide meaningful planning horizons for conservation and ecosystem management. We found that the probability of survival was much lower when the exposure time—approximated by the first observation of ADB at national levels—was corrected for the infection history, since the disease spread concentrically from north-eastern Poland to other regions in Europe. The assumption that entire Europe was simultaneously infected in 1992 is too simplistic and would have caused much longer time spans from infection to death for regions further apart from the epicentre. This, in turn, generated much higher survival probabilities compared to the more realistic scenario of shifted infection history.

We estimated that the overall survival probability of ash after nearly three decades of exposure is approximately 50%, but with large differences among regions (e.g. northern group: 0.2, southern group: 0.8). Coker et al.14 estimated that mortality of ash in woodlands after 11 years of exposure reaches a maximum at 60% and remains constant afterwards. Although the values from Coker et al.14 and those from our study cannot directly be compared due to methodological differences, such a trend cannot be derived from our results. In contrast, the survival probability curve in our study shows a constant downward trend even after 27 years of exposure and the evidence strongly suggests that it will be continued until host availability has reached a value that may be critical for the survival of the pathogen.

We found significant site parameters critical for survival that were also unraveled in earlier studies such as water status. Trees growing in locations with moister growing conditions are under higher risk to die compared to those growing in drier locations. The hazard ratio suggests that trees growing at sites with excessive water status have a 1.8-fold higher risk of death compared to trees growing under sufficient water status and a 3.6-fold higher risk compared to trees growing under dry conditions. In accordance, trees growing at sites which experienced one more months with extreme rainfall surplus during the observation period had a 1.07 fold higher risk. Both covariates are likely to favour the disease, since moisture is a critical factor during infection and for the efficient spread of ascospores12. Excess moisture may also plays a critical role for growth of other wood-deacaying fungi such as Armillaria spec, which were recently found to be involved in subsequent root rot of ash trees after infection with ADB24,28.

Aalen´s additive regression model also unraveled months with extreme high temperature as well as months with extreme rainfall deficit as factors which reduce the risk of death. While this pattern is largely corroborated by other studies26, the effect sizes of both covariates in our model were only moderate and hence it deserves more investigation before concise conclusions can be drawn for those two environmental variables.

Lower host abundance was recently associated with lower mortality in Swiss ash stands19, but our study did not confirm this relationship. At continental scale ash trees were killed regardless of whether they occurred in high abundance or low abundance. This demonstrates probably once more the devastating and highly efficient spreading capacity of the pathogen which allows the fungus to enter new territory within a short time22,29.

This study shows the value of ICP Forests level I data for monitoring ash dieback at continental scale. Although F. excelsior and F. angustifolia are minor tree species in most of the survey countries, they nevertheless constitute keystone species with high importance for ecological communities and local economy4,18. In the British Isles and Ireland, the importance of ash is even higher as F. excelsior is occupying a larger proportion of woodlands compared to other countries30,31. Unfortunately, the United Kingdom never assessed ash for defoliation and left the ICP Forests Level I network in 2011 so that no data on ash dieback is currently available from there.

Overall our study indicates a strongly progressive pattern of ash decline across the last three decades and we estimated that an Europe-wide average defoliation of 50% could be reached as early as 2030. However, we also outlined that the disease strongly differs among regions, since the progress seems to be slower in the south compared to the north. The threshold of 50% defoliation can be seen indeed as a critical benchmark, since we estimated that trees that have reached this defoliation status died on average within the following 9 years (data not shown). A further decline without joint rescue efforts at European level could cause non-reversible loss of genetic diversity which in turn could cause a critical minimum population size vital for long-term survival. Although a minor proportion of ash trees is likely inheriting resistance against ADB32,33, this alone will not guarantee that the ash population will recover after the disease has reached an equilibrium state. Evans34 simulated that long-term recovery of ash is highly dependent on the proportion of ash trees carrying natural resistance and secondly on the degree of heritability of resistance. However, even under extreme high heritability assumptions, long-term recovery under natural conditions will remain low when the founder population consists of only few trees34. Hence, without efforts at European level it is very likely that the two ash species F. excelsior and F. angutifolia will face the same fate as dutch elm (Ulmus spec.), which largely disappeared in most parts of Europe after the outbreak of the devastating dutch elm disease35,36. Even though our results apply mainly to ash trees located in forests and probably exclude trees that are situated in open landscapes such as along alleys, parkways, and in yards, the urgency of the matter is nevertheless justified given that natural regeneration in forests will be driven by healthy seed trees which survive within forest sites.

Conservation efforts at national level have recently been undertaken in Great Britain, Ireland, Denmark, and Austria and new projects are currently starting in Germany and other countries. For instance, reference genomes for F. excelsior and F. angustifolia have been completely sequenced37,38 and novel markers which are able to discriminate between resistent and susceptible F. excelsior trees have been developed39. On the other hand, progeny tests which aim to evaluate resistance against ADB of several thousand saplings of European ash harvested from field-resistant mother trees are currently being tested in Austria (http://www.esche-in-not.at/index.php). Constant monitoring of Ash vitality in natural forest stands but also in field trials will particularly become crucial in the future given that the invasive Emerald ash borer (Agrilus planipennis, EAB) is already entering European territory and constitutes a threat to European ash species in the same order of magnitude as compared to H. Fraxineus. Emerald ash borer is currently responsible for mass decline of ash in Russia and was recently detected not more than 100 km from the eastern border of the European Union40. In analogy to the pathosystem between asian ash species and H. Fraxineus, ash species such as Fraxinus mandshurica or F. chinensis have most likely co-evolved with Emerald ash borer, which probably led to high natural genetic variation in host susceptibility within these species37. While such intra-specific variation could indeed guide national and international breeding programs (e.g. in hybrid breeding or through genomic selection) careful attention needs to be paid in order to avoid maladaptation if both traits (resistance against ADB and resistance against EAB) are negatively correlated. In any of these scenarios, we strongly believe that only a pan-European initiative will be capable of utilizing the already numerous gathered resources in order to avoid ash disappearing from the European forest landscape.

Methods

Data

We used the ICP Forests Level I forest damage survey since this dataset constitutes the only available systematic survey sample across Europe at a 16 × 16 km grid41. Within this dataset, crown parameters and damaging agents are assessed at an annual scale (but see exceptions in Table 1) by survey teams typically including 24 dominant trees per plot. Crown defoliation is assessed in 5%-steps with 99% indicating complete defoliation of a tree and 100% its death. Damaging agents are assessed and are divided into abiotic, biotic and other damage causes. We selected all plots where at least one ash tree (either F. excelsior or F. angustifolia) was recorded and analysed the complete survey period spanning the years 1987 to 2020. An ash tree was subsequently classified as dead when its defoliation status reached 100% and the tree did not occurr any longer in subsequent survey years.

Overall survival probability after 30 years

Mortality data in tree ecology is often treated as a binary variable that can be best accomodated by logistic regression analysis14,42,43. However, when the cause of death is primarily caused by a pathogen which resembles a mortality pattern close to a pandemic situation (i.e. a time-to-death approach), logistic regression will be rather unsuitable. The reason for this is that the data are usually censored, which either means that at a specific survey date not all subjects had yet experienced the event (i.e. death) or got lost without any further assessments conducted44. Consequently, we used a different approach that specifically treats time-to-event data and that is usually applied in medical data such as survival analysis for patients carrying a lethal disease45,46. We justify this because of two reasons: (i) ash dieback usuallly constitutes a dead end for infected trees, since the disease is highly lethal and progressive and recovery of trees is rarely observed12, (ii) ash dieback spreads through windborne ascospores at a fast rate so that we can assume that a large part of the ash population in Europe has already experienced high and equal infection pressure29.

We first estimated the overall survival probability of ash with the Kaplan–Maier step function written as:

with SKM being the overall survival probability, \(\overline{Y }\)(ts) being the trees at risk at time t, d(s) being trees that died since start of infection and \(\overline{Y }\)(s) being the trees at risk since the beginning of infection. Since the observation plots are usually re-visited each year, the Kaplan-Maier curve will display the overall survival probability and 95% confidence intervals at one-year steps. We calculated the overall survival probability for two different models: (i) Model 1 with simultaneous infection: in the first model we assumed that the start of infection happened in 1992 for the entire European population. This is the first date when ash dieback was observed in Europe (Northern Poland) and it is generally believed that this is the epicentre of the disease12. (ii) Model 2 with non-simultaneous infection: although the disease spread rapidly towards the west, east, and south from the epicentre, there were distinct time gaps between subsequent infections of ash populations located further apart from the epicentre. Hence, we gathered dates of first observations of ash dieback (Table 1) for all European countries from different literature resources6,22. Even though these dates are to some extent biased and may do not reflect the exact date of arrival of ash dieback at national level, they can nevertheless serve as a good proxy for the temporal delay in infection due to expansion history of the disease. In few cases, the time between last assessment and event [nominator of Eq. (1)] was negative in the second model, since some mortality already occurred before the estimated arrival of ash dieback. This affected only Spain and southern France, where some cases of mortality were attributable to other disturbance agents rather than to ash dieback (see “Discussion” below). These few cases were consequently removed from both models.

Cox proportional hazard model and Aalen additive regression model

In order to model the transition from “alive” to “dead” we used a Cox-proportional hazard model47 and, alternatively, an Aalen additive regression model48. Such models are commonly applied in clinical testing for explaining mortality patterns for patients under risk when the data is right- or left-censored49. Both models are capable of incorporating covariates of different structure and specifically relate those covariates to survival patterns or any other censored outcome. The Cox-model has the form:

where λ(t) is the single-tree hazard as a function of time t, λ0 is the so-called baseline hazard, X1,2 are the covariate vectors of subject i and β1,2 specify vectors of coefficients associated with the covariates49. The Aalen additive regression model was suggested as an alternative to the Cox-proportional hazard model, because some of the assumptions behind the Cox-model may not always be met by all included covariates (e.g. that all coefficients are constant over time) and because additive effects may reflect the nature of the underlying disease better than the proportionality assumption, in particular when the baseline hazard is very small. The additive model has the linear form:

with β0 being the baseline function and βi (t)xi being the regression functions which determine the influence of the covariates. In contrast to the Cox-proportional hazard model, regression functions in (3) can freely vary over time.

We used two key stand characteristics from the ICP Forests Level I dataset (water availability coded as 1 = insufficient, 2 = sufficient, 3 = excessive and humus type comprising 9 classes) as well as abundance of ash trees in each plot (number of ash trees/total number of trees) as survival predictors. The rationale behind the latter variable is that we hypothesize higher mortality rates when the host abundance is high compared to stands where only few ash trees occur.

Various recent studies showed that tree mortality, in particular in the very last years, has accelerated mainly as a result of climatically-driven changes such as warming and drought50,51. In order to disentangle whether the mortality pattern in ash between 1987 and 2020 has been driven stronger by ADB, climate or both, we calculated the monthly anomaly in maximum temperature and precipitation sums from 1995 to 2020 for all survey plots in which a few ash trees occurred. We used E-OBS daily gridded meteorological data at 0.25° spatial resolution52 and calculated the number of extreme events between 1995 and 2020 for each survey plot. Extreme events were defined as months which showed a standardized climatic anomaly of ≥ or ≤ two standard deviations above or below long-term average, respectively.

Since we expect that some covariates are not randomly distributed in space across the observation plots (i.e. the disease is generally stronger in the east compared to the west of Europe due to the infection history), we divided the data also into geographic strata so that each group (eastern, western, southern, and northern Europe) would have its distinct baseline hazard function, but common values for β. We employed the survival package in R53 for all analysis steps (overall survival probability, Cox model, Aalens additive regression model) described above.

Time-series forecasting of crown defoliation

Finally, we aim to project the past defoliation history (1987–2020) of ash in Europe into the near future in order to determine critical time horizons for conservation and rescue management. We first calculated average defoliation per year and plot including all ash trees and performed timeseries forecasting spanning 50 years from the last available observation (2021–2070). We used both an ARIMA (autoregressive integrated moving average) model as well as exponential smoothing (Holts linear trend method according to Holt54 as alternative approach. Briefly, a non-seasonal ARIMA model was fitted by determining the parameters p, d, and q where p is the order of the autoregressive part, d is the degree of first differencing, and q is the order of the moving average part. We first determined the three parameters by using the auto.arima function on the log transformed defoliation time series with the help of the forecast package in R55. In order to evaluate whether the determined parameters were correctly chosen, we visually inspected the partial autocorrelation plot and checked the model residuals by performing a Portmanteau-test. We calculated 95% confidence intervals around both estimators (ARIMA, exponential smoothing) and evaluated the goodness of the obtained forecasts by using the first 22 years (1987–2009) as training dataset and the last ten years of observations (2010–2020) as evaluation data set. The mean square error (MSE) was used to compare forecast results between the two methods. Since Sweden and Norway changed their monitoring system in 2009 and 2013, respectively (see Table 1), we used only the period 1987–2010 for the northern group (see also results below).

Data availability

This dataset will be available via Zenodo.org upon publication.

References

Hill, L. et al. The£ 15 billion cost of ash dieback in Britain. Curr. Biol. 29(9), R315–R316 (2019).

Pliûra, A. & Heuertz, M. EUFORGEN Technical Guidelines for Genetic Conservation and Use for Common Ash (Fraxinus excelsior) (Bioversity International, 2003).

Dufour, S. & Piégay, H. Geomorphological controls of Fraxinus excelsior growth and regeneration in floodplain forests. Ecology 89(1), 205–215 (2008).

Mitchell, R. J. et al. Ash dieback in the UK: a review of the ecological and conservation implications and potential management options. Biol. Conserv. 175, 95–109 (2014).

Przybył, K. Fungi associated with necrotic apical parts of Fraxinus excelsior shoots. For. Pathol. 32(6), 387–394 (2002).

Vasaitis, R., & Enderle, R. Dieback of European ash (Fraxinus spp.)-consequences and guidelines for sustainable management. Dieback of European ash (Fraxinus spp.). Report on COST Action FP1103 FRAXBACK. ISBN978-91-576-8696-1. (SLU Swedish University of Agricultural Sciences, 2017).

Børja, I. et al. Ash dieback in Norway-current situation. In Dieback of European ash (Fraxinus spp.): Consequences and Guidelines for Sustainable Management (eds Vasaitis, R. & Enderle, R.) 166–175 (Swedish University of Agricultural Sciences, 2017).

Ghelardini, L. et al. From the Alps to the Apennines: Possible spread of ash dieback in Mediterranean areas. In Dieback of European ash (Fraxinus spp.): Consequences and Guidelines for Sustainable Management (eds Vasaitis, R. & Enderle, R.) 140–149 (Swedish University of Agricultural Sciences, 2017).

Marçais, B., Husson, C., Godart, L. & Cael, O. Influence of site and stand factors on Hymenoscyphus fraxineus-induced basal lesions. Plant. Pathol. 65(9), 1452–1461 (2016).

Queloz, V., Hopf, S., Schoebel, C. N., Rigling, D. & Gross, A. Ash dieback in Switzerland: History and scientific achievements. In Dieback of European ash (Fraxinus spp.): Consequences and Guidelines for Sustainable Management (eds Vasaitis, R. & Enderle, R.) 68–78 (Swedish University of Agricultural Sciences, 2017).

Orton, E. S. et al. Population structure of the ash dieback pathogen, Hymenoscyphus fraxineus, in relation to its mode of arrival in the UK. Plant. Pathol. 67(2), 255–264 (2018).

Enderle, R., Stenlid, J. & Vasaitis, R. An overview of ash (Fraxinus spp.) and the ash dieback disease in Europe. CAB Rev. 14, 1–12 (2019).

Heinze, B., Tiefenbacher, H., Litschauer, R. & Kirisits, T. Ash dieback in Austria: History, current situation and outlook. in Dieback of European Ash (Fraxinus spp.): Consequences and Guidelines for Sustainable Management, 33–52 (2017).

Coker, T. L. et al. Estimating mortality rates of European ash (Fraxinus excelsior) under the ash dieback (Hymenoscyphus fraxineus) epidemic. Plants People Planet 1(1), 48–58 (2019).

Cleary, M., Nguyen, D., Stener, L. G., Stenlid, J., & Skovsgaard, J. P. Ash and ash dieback in Sweden: A review of disease history, current status, pathogen and host dynamics, host tolerance and management options in forests and landscapes. Dieback of European Ash (Fraxinus spp.): Consequences and Guidelines for Sustainable Management, 195–208 (2017).

Stocks, J. J., Buggs, R. J. & Lee, S. J. A first assessment of Fraxinus excelsior (common ash) susceptibility to Hymenoscyphus fraxineus (ash dieback) throughout the British Isles. Sci. Rep. 7(1), 1–7 (2017).

Díaz-Yáñez, O. et al. The invasive forest pathogen Hymenoscyphus fraxineus boosts mortality and triggers niche replacement of European ash (Fraxinus excelsior). Sci. Rep. 10(1), 1–10 (2020).

Enderle, R., Metzler, B., Riemer, U. & Kändler, G. Ash dieback on sample points of the national forest inventory in south-western Germany. Forests 9(1), 25 (2018).

Klesse, S. et al. Spread and severity of ash dieback in Switzerland: Tree characteristics and landscape features explain varying mortality probability. Front. For. Glob. Change 4, 18 (2021).

Timmermann, V., Potočić, N., Ognjenović, M. & Kirchner, T. Tree crown condition in 2020. In Forest Condition in Europe: The 2021 Assessment ICP Forests Technical Report under the UNECE Convention on Long-range Transboundary Air Pollution (Air Convention) (eds Michel, A. et al.) (Thünen Institute, 2021).

Chumanová, E. et al. Predicting ash dieback severity and environmental suitability for the disease in forest stands. Scand. J. For. Res. 34(4), 254–266 (2019).

Solheim, H. & Hietala, A. M. Spread of ash dieback in Norway. Balt. For. 23(1), 1–6 (2017).

Kjær, E. D. et al. Genetics of ash dieback resistance in a restoration context: Experiences from Denmark. In Dieback of European ash (Fraxinus spp.): Consequences and Guidelines for Sustainable Management (eds Vasaitis, R. & Enderle, R.) 106–114 (Swedish University of Agricultural Sciences, 2017).

Madsen, C. L. et al. Combined progress in symptoms caused by Hymenoscyphus fraxineus and Armillaria species, and corresponding mortality in young and old ash trees. For. Ecol. Manage. 491, 119177 (2021).

Trapiello, E., Schoebel, C. N. & Rigling, D. Fungal community in symptomatic ash leaves in Spain. Balt. For. 23(1), 68–73 (2017).

Grosdidier, M., Ioos, R. & Marçais, B. Do higher summer temperatures restrict the dissemination of Hymenoscyphus fraxineus in France?. For. Pathol. 48(4), e12426. https://doi.org/10.1111/efp.12426 (2018).

Stroheker, S., Queloz, V. & Nemesio-Gorriz, M. First report of Hymenoscyphus fraxineus causing ash dieback in Spain. New Dis. Rep. 44(2), e12054 (2021).

Chandelier, A., Gerarts, F., San Martin, G., Herman, M. & Delahaye, L. Temporal evolution of collar lesions associated with ash dieback and the occurrence of Armillaria in Belgian forests. For. Pathol. 46(4), 289–297. https://doi.org/10.1111/efp.12258 (2016).

Gross, A., Holdenrieder, O., Pautasso, M., Queloz, V. & Sieber, T. N. H ymenoscyphus pseudoalbidus, the causal agent of E uropean ash dieback. Mol. Plant Pathol. 15(1), 5–21 (2014).

Clark, J. & Webber, J. The ash resource and the response to ash dieback in Great Britain. In Dieback of European ash (Fraxinus spp.): Consequences and Guidelines for Sustainable Management (eds Vasaitis, R. & Enderle, R.) 228–237 (Swedish University of Agricultural Sciences, 2017).

Dandy, N., Marzano, M., Porth, E. F., Urquhart, J. & Potter, C. Who has a stake in ash dieback? A conceptual framework for the identification and categorisation of tree health stakeholders. In Dieback of European ash (Fraxinus spp.): Consequences and Guidelines for Sustainable Management (eds Vasaitis, R. & Enderle, R.) 15–26 (Swedish University of Agricultural Sciences, 2017).

Kjær, E. D., McKinney, L. V., Nielsen, L. R., Hansen, L. N. & Hansen, J. K. Adaptive potential of ash (Fraxinus excelsior) populations against the novel emerging pathogen Hymenoscyphus pseudoalbidus. Evol. Appl. 5(3), 219–228 (2012).

Plumb, W. J. et al. The viability of a breeding programme for ash in the British Isles in the face of ash dieback. Plants People Planet 2(1), 29–40 (2020).

Evans, M. R. Will natural resistance result in populations of ash trees remaining in British woodlands after a century of ash dieback disease?. R. Soc. Open Sci. 6(8), 190908 (2019).

Buggs, R. J. A. Changing perceptions of tree resistance research. Plants People Planet 2, 2–4. https://doi.org/10.1002/ppp3.10089 (2020).

Tomlinson, I. & Potter, C. ‘Too little, too late’? Science, policy and Dutch Elm Disease in the UK. J. Hist. Geogr. 36(2), 121–131 (2010).

Kelly, L. J. et al. Convergent molecular evolution among ash species resistant to the emerald ash borer. Nat. Ecol. Evol. 4, 1116–1128. https://doi.org/10.1038/s41559-020-1209-3 (2020).

Sollars, E. S. et al. Genome sequence and genetic diversity of European ash trees. Nature 541(7636), 212–216 (2017).

Stocks, J. J. et al. Genomic basis of European ash tree resistance to ash dieback fungus. Nat. Ecol. Evol. 3(12), 1686–1696 (2019).

Volkovitsh, M. G., Bieńkowski, A. O. & Orlova-Bienkowskaja, M. J. Emerald ash borer approaches the borders of the european union and kazakhstan and is confirmed to infest European ash. Forests 12(6), 691 (2021).

Eichhorn, J. et al. Part IV: Visual Assessment of Crown Condition and Damaging Agents. in Manual on Methods and Criteria for Harmonized Sampling, Assessment, Monitoring and Analysis of the Effects of Air Pollution on Forests. (Thünen Institute of Forest Ecosystems, 2016). Annex http://www.icp-forests.org/manual.htm.

Koontz, M. J., Latimer, A. M., Mortenson, L. A., Fettig, C. J. & North, M. P. Cross-scale interaction of host tree size and climatic water deficit governs bark beetle-induced tree mortality. Nat. Commun. 12(1), 1–13 (2021).

Taccoen, A. et al. Climate change impact on tree mortality differs with tree social status. For. Ecol. Manage. 489, 119048 (2021).

Therneau, T. A Package for Survival Analysis in R. https://cran.r-project.org/web/packages/survival/vignettes/survival.pdf. Accessed 26 May 2021

Godaert, L. et al. Prognostic factors of inhospital death in elderly patients: A time-to-event analysis of a cohort study in Martinique (French West Indies). BMJ Open 8(1), e018838 (2018).

Sargeran, K., Murtomaa, H., Safavi, S. M. R., Vehkalahti, M. M. & Teronen, O. Survival after diagnosis of cancer of the oral cavity. Br. J. Oral Maxillofac. Surg. 46(3), 187–191 (2008).

Cox, D. R. Regression models and life-tables. J. R. Stat. Soc. B 34(2), 187–202 (1972).

Aalen, O. O. A linear regression model for the analysis of life times. Stat. Med. 8(8), 907–925 (1989).

Therneau, T. M., & Grambsch, P. M. The cox model. In Modeling survival data: extending the Cox model, pp. 39–77. (Springer, 2000).

Neumann, M., Mues, V., Moreno, A., Hasenauer, H. & Seidl, R. Climate variability drives recent tree mortality in Europe. Glob. Change Biol. 23(11), 4788–4797 (2017).

Senf, C., Buras, A., Zang, C. S., Rammig, A. & Seidl, R. Excess forest mortality is consistently linked to drought across Europe. Nat. Commun. 11(1), 1–8 (2020).

Haylock, M. R. et al. A European daily high-resolution gridded data set of surface temperature and precipitation for 1950–2006. J. Geophys. Res. Atmos. 113, D20 (2008).

R Development Core Team. RStudio, R: A Language and Environment for Statistical Computing (R Development Core Team, 2017).

Holt, C. C. Forecasting Trends and Season-Als by Exponentially Weighted Averages. (Carnegie Institute of Technology, Pittsburgh ONR memorandum no. 52, 1957)

Hyndman, R. J. & Khandakar, Y. Automatic time series forecasting: the forecast package for R. J. Stat. Softw. 27(3), 1–22 (2008).

Acknowledgements

This study is carried out with the support of the European Regional Development Fund and the programme Mobilitas PLUSS (Project ID: MOBJD588) granted by the Estonian Research Agency (ETAG). The evaluation was based on data collected by partners of the official UNECE ICP Forests Network (http://icp-forests.net/contributors). Part of the data was co-financed by the European Commission (Data achieved at 12.04.2021). We also would like to thank Dr. Iben Margrete Thomsen (Department of Geosciences and Natural Resource Management, University of Copenhagen) for additional information regarding ash in Denmark.

Author information

Authors and Affiliations

Contributions

J.P.G.: Formal analysis, Methodology, Writing—original draft, Visualization, Project administration, Funding acquisition. T.S.: Resources, Writing—review & editing. V.T.: Resources, Writing—review & editing. N.P.: Resources, Writing—review & editing. M.L.: Conceptualization, Methodology, Writing—original draft, Supervision, Project administration, Funding acquisition.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

George, JP., Sanders, T.G.M., Timmermann, V. et al. European-wide forest monitoring substantiate the neccessity for a joint conservation strategy to rescue European ash species (Fraxinus spp.). Sci Rep 12, 4764 (2022). https://doi.org/10.1038/s41598-022-08825-6

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-022-08825-6

- Springer Nature Limited

This article is cited by

-

Ash dieback assessments on intensive monitoring plots in Germany: influence of stand, site and time on disease progression

Journal of Plant Diseases and Protection (2024)

-

Evaluation and identification of viruses for biocontrol of the ash dieback disease

Journal of Plant Diseases and Protection (2023)

-

FraxForFuture—research on European ash dieback in Germany

Journal of Plant Diseases and Protection (2022)