Abstract

The relational formulation of quantum mechanics is based on the idea that relational properties among quantum systems, rather than the independent properties of a single quantum system, are the most fundamental elements from which to construct quantum mechanics. In a recent paper (Yang in Sci Rep 8:13305, 2018), a basic relational quantum mechanics framework was formulated to derive quantum probability, Born’s rule, the Schrödinger equation, and measurement theory. This paper extends that reformulation in three respects. First, it gives a clearer explanation of the key concepts behind the framework for calculating measurement probability. Second, we provide a concrete implementation of the relational probability amplitude by extending the path integral formulation. The implementation not only clarifies the physical meaning of the relational probability amplitude, but also allows us to explain the double slit experiment elegantly, to describe the interaction history between the measured system and a series of measuring systems, and to calculate entanglement entropy based on the path integral and the influence functional. In return, the implementation brings new insight to the path integral itself by completing the explanation of why measurement probability can be calculated as the modulus square of the probability amplitude. Lastly, we clarify the connection between our reformulation and quantum reference frame theory. A complete relational formulation of quantum mechanics needs to combine the present work with quantum reference frame theory.


Introduction

Quantum mechanics was originally developed as a physical theory to explain the experimental observations of a quantum system in a measurement. In the early days of quantum mechanics, Bohr emphasized that the description of a quantum system depends on the measuring apparatus1,2,3. In more recent developments of quantum interpretations, the dependence of a quantum state on a reference system was further recognized. The relative state formulation of quantum mechanics4,5,6 asserts that a quantum state of a subsystem is only meaningful relative to a given state of the rest of the system. Similarly, in developing the theory of decoherence induced by the environment7,8,9, it was concluded that correlation information between two quantum systems is more basic than the properties of the quantum systems themselves. Relational Quantum Mechanics (RQM) further suggests that a quantum system should be described relative to another system. There is no absolute state for a quantum system10,11. Quantum theory does not describe the independent properties of a quantum system. Instead, it describes the relations among quantum systems, and how correlation is established through physical interaction during measurement. The reality of a quantum system is only meaningful in the context of measurement by another system. The RQM idea has also been interpreted as perspectivalism in the context of the modal interpretation of quantum mechanics14, where properties are assigned to a physical system from the perspective of a reference system.

The idea of RQM is thought-provoking. It essentially implies two aspects of relativity. The first aspect is the insistence that a quantum system must be described relative to a reference system. The reference system is arbitrarily selected. It can be an apparatus in a measurement setup, or another system in the environment. A quantum system can be described differently relative to different reference systems. The reference system itself is also a quantum system, called a quantum reference frame (QRF). There is extensive research on QRFs, particularly on how to ensure consistent descriptions when switching QRFs15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30,31,32. Notably, Refs.31,32,33,34,35 completely abandon any external reference system and the concept of absolute state. Physical description is constructed using relational variables from the very beginning within the framework of traditional quantum mechanics. In addition, all reference systems are treated as quantum systems instead of abstract entities. Treating a reference frame as a classical system, as is done in relativity theory, should be considered an approximation of a more fundamental theory based on QRFs.

The second aspect of RQM is more fundamental. Since the relational properties between two quantum systems are considered more basic than the independent properties of one system, the relational properties, rather than the independent properties, of quantum systems should be taken as the starting points for constructing the formulation of quantum mechanics itself. Questions associated with this aspect of RQM include how to quantify the relational properties between two quantum systems, and how to reconstruct quantum mechanics from relational properties. Note that the relational properties themselves are relative to a QRF. Different observers can ascribe different sets of relational properties to a quantum system, depending on their choices of QRF.

It is this second aspect of RQM that inspires the present work. The effort to reconstruct quantum mechanics itself from relational properties was initiated in the original RQM paper10 and had some success, for example, in deriving the Schrödinger equation. This reconstruction is based on a quantum logic approach. An alternative reconstruction that follows the RQM principle but is based on information theory has also been developed12,13. These reconstructions appear rather abstract and are not closely connected to the physical process of quantum measurement. We believe that the relational properties should be identified in a measurement event, given the idea that the reality of a quantum system is only meaningful in the context of measurement by another system. With this motivation, we recently proposed a formulation36 based on a detailed analysis of the quantum measurement process. What is novel in our formulation is a new framework for calculating the probability of an outcome when measuring a quantum system, which we briefly describe here. In searching for appropriate relational properties as the starting elements for the reconstruction, we recognize that a physical measurement is a probe-response interaction process between the measured system and the measuring apparatus. This important aspect of the measurement process, although well known, seems to have been overlooked in other reconstruction efforts. Our framework for calculating the probability, by contrast, explicitly and faithfully models this bidirectional process. As such, the probability is derived from the product of two quantities, each associated with a unidirectional process. The probability of a measurement outcome is proportional to the sum of products of probability amplitudes over all alternative measurement configurations. Properties of quantum systems such as superposition and entanglement are manifested through the rules for counting the alternatives.
As a result, the framework gives a formulation mathematically equivalent to Born’s rule. The wave function is found to be a summation of relational probability amplitudes, and the Schrödinger equation is derived when there is no entanglement in the relational probability amplitude matrix. Although the relational probability amplitude is the most basic quantity, there are mathematical tools, such as the wave function and the reduced density matrix, that describe the observed system without explicitly calling out the reference system. Thus, the formulation in Ref.36 is mathematically compatible with traditional quantum mechanics. We restrict our analysis to ideal projective measurements in order to focus on the key ideas, with the expectation that the framework can be extended to more complex measurement theory in future research.

What is missing in our initial reformulation36 is an explicit calculation of the relational probability amplitude, which is at the heart of the framework. In this paper, we choose the path integral method to calculate the relational probability amplitude, for the following reasons. First, the path integral offers an intuitive physical picture for calculating an abstract quantity such as a probability amplitude by summing over alternative paths. Since both the path integral and our formulation are based on the idea of summing over alternatives, the path integral technique can be naturally borrowed here. Second, the path integral is a well-developed theory that has been successfully applied in other physical theories such as quantum field theory. By connecting the relational formulation of quantum mechanics to the path integral, we hope to extend the relational formulation to more advanced quantum theories in the future. Third, we can bring new insight back to the path integral itself by explaining why measurement probability can be calculated as the modulus square of the probability amplitude. The outcome of implementing our formulation using the path integral is fruitful, as shown in later sections. Besides clarifying the physical meaning of the relational probability amplitude, the path integral formulation has interesting applications. For instance, it can describe the history of a quantum system that has interacted with a series of measuring systems in sequence. As a result, the double slit experiment can be elegantly explained by the formulation developed here. More significantly, the coordinate representation of the reduced density matrix derived from this implementation allows us to develop a method to calculate entanglement entropy using the path integral approach. We propose a criterion, based on the influence functional, for whether there is entanglement between the system and the external environment.
This enables us to calculate entanglement entropy of a physical system that interacts with classical fields, such as an electron in an electromagnetic field.

The paper is organized as follows. We first review the relational formulation of quantum mechanics in “Relational formulation of quantum mechanics”, with a clearer explanation of the framework for calculating the measurement probability. In “Path integral implementation”, the path integral implementation of the relational probability amplitude is presented. Section “Interaction history of a quantum system” generalizes the formulation to describe the history of the quantum state of an observed system that has interacted with a series of measuring systems in sequence. The calculation confirms the idea that a quantum state encodes information from earlier interactions. The formulation is applied to explain the double slit experiment in “The double slit experiment”. Section “The Von Neumann entropy” introduces a method to calculate the entanglement entropy between interacting systems based on the path integral implementation. A criterion, based on properties of the influence functional, to determine whether the entanglement entropy vanishes is discussed in “Discussion and conclusion”. Section “Discussion and conclusion” also explores the idea of combining the present formulation with QRF theory, and summarizes the conclusions.

Relational formulation of quantum mechanics

Terminologies

A Quantum System, denoted by the symbol S, is an object under study that follows the laws of quantum mechanics. An Apparatus, denoted A, can refer to a measuring device, the environment that S interacts with, or the system from which S is created. All systems are quantum systems, including any apparatus. Depending on the choice of observer, the boundary between a system and an apparatus can change. For example, in a measurement setup, the measuring system is an apparatus A and the measured system is S. However, the composite system \(S+A\) as a whole can be considered a single system relative to another apparatus \(A'\). In an ideal measurement of an observable of S, the apparatus is designed in such a way that at the end of the measurement, the pointer state of A has a distinguishable, one-to-one correlation with the eigenvalue of the observable of S.

The definition of an Observer is associated with an apparatus. An observer, denoted \({{\mathcal {O}}}\), is an entity that can operate the apparatus and read its pointer variable. Whether or not this observer is a quantum system is irrelevant in our formulation. An observer is defined to be physically local to the apparatus it is associated with. This rules out the situation in which \({\mathcal {O}}\) instantaneously reads the pointer variable of an apparatus that is space-like separated from \({\mathcal {O}}\).

In the traditional theory of ideal quantum measurement proposed by von Neumann38, both the measured system S and the measuring apparatus A follow the same laws of quantum mechanics. During the measurement process, A probes (or disturbs) S. This interaction alters the state of S, which in turn responds to A and alters the state of A as well. As a result, a correlation is established between S and A, allowing the measurement result for S to be inferred from the pointer variable of A.

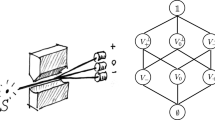

A Quantum Reference Frame (QRF) is a quantum system to which all descriptions of the relational properties between S and A are referred. There can be multiple QRFs. How the descriptions transform when switching QRFs is beyond the scope of this study, but we expect the theories developed in Refs.31,32,33 to be applicable here. In this paper, we only consider the description relative to one QRF, denoted F. It is also possible to choose A as the reference frame, in which case F and A are the same quantum system in a measurement31,33. Figure 1 shows a schematic illustration of the entities in the relational formulation.

Figure 1. Schematic illustration of the entities defined above. The overlap of the measured system S and apparatus A represents the interaction in a measurement event. The relational properties between S and A must be described relative to a QRF. There can be multiple QRFs; by selecting a different QRF, \({\mathcal {O}}\) can have a different description of the relational properties in a quantum measurement event.

A Quantum State of S describes the complete information an observer \({\mathcal {O}}\) can know about S. A quantum state encodes the relational information of S relative to A or other systems that S previously interacted with11. The information encoded in the quantum state is the complete knowledge an observer can have about S, as it determines the possible outcomes of the next measurement. As we will explain later, the state of a quantum system is not a fundamental concept. Instead, it is derived from the relational properties.

Measurement probability

In essence, quantum mechanics is a theory that predicts the probabilities of future measurement outcomes for a prepared quantum system. The relational formulation of quantum mechanics36 is based on a detailed analysis of the interaction process during the measurement of a quantum system. To begin with, we assume that a QRF, F, is chosen to describe the quantum measurement event. From experimental observations, we know that measuring a variable of a quantum system randomly yields one of multiple possible outcomes. Each potential outcome is obtained with a certain probability. We call each measurement with a distinct outcome a quantum measurement event. Denote these alternative potential outcomes by a set of kets \(\{|s_i\rangle \}\) for S, where \(i=0,\ldots ,N-1\), and a set of kets \(\{|a_j\rangle \}\) corresponding to the pointer readings of A, where \(j=0,\ldots ,M-1\). A potential measurement outcome is represented by a pair of kets \((|s_i\rangle , |a_j\rangle )\), and the probability of finding this outcome is denoted \(p_{ij}\). The framework for calculating the measurement probability \(p_{ij}\) is the central point of the formulation in Ref.36. Here we give a more detailed explanation of this framework in a non-selective measurement setting. A physical measurement is a bidirectional process: the measuring system probes the measured system, and the measured system responds back to the measuring system. Through this interaction the states of both systems are modified and correlation information is encoded. Although the bidirectional interaction process is well known, the following realization is not fully appreciated in the research literature.

Because a quantum measurement is a bidirectional process, the calculation of the probability of a measurement outcome must faithfully model such bidirectional process.

The bidirectional process does not necessarily imply two sequential steps. Instead, the probing and responding processes are understood as two aspects of one complete process in a measurement event. A classical probability problem offers an analogy. Suppose a special coin lands face up with probability p. Consider a measurement that requires tossing two such coins at the same time, and that is successful if the first coin lands face up and the second lands face down. The probability of a successful measurement event is obtained by multiplying two probabilities together, \(p(1-p)\). In a similar manner, given the bidirectional process in a quantum measurement event, the observable measurement probability should be calculated as the product of two weight quantities. One weight quantity is associated with the probing process from \(A\rightarrow S\), denoted \(Q^{A\rightarrow S} (|a_j\rangle \cap |s_i\rangle )\), and the other is associated with the responding process from \(S\rightarrow A\), denoted \(R^{S\rightarrow A} (|s_i\rangle \cap |a_j\rangle )\), so that

\[ p_{ij} \propto Q^{A\rightarrow S} (|a_j\rangle \cap |s_i\rangle )\, R^{S\rightarrow A} (|s_i\rangle \cap |a_j\rangle ). \tag{1} \]
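A minimal sketch of the classical analogy, assuming two independent tosses (the function name `success_probability` is hypothetical): enumerating the four joint outcomes shows that only the ordered outcome (up, down) contributes, and its weight is the product of the two single-coin probabilities.

```python
from fractions import Fraction
from itertools import product

def success_probability(p):
    """Exact probability that coin 1 lands up AND coin 2 lands down.

    Each coin lands up with probability p, independently of the other.
    """
    weights = {"up": p, "down": 1 - p}
    total = Fraction(0)
    # Enumerate all four joint outcomes of the two simultaneous tosses
    for first, second in product(weights, repeat=2):
        if first == "up" and second == "down":
            # Independent tosses: the joint weight is the product of two factors
            total += weights[first] * weights[second]
    return total

print(success_probability(Fraction(1, 3)))  # 2/9
```

If success were instead defined as "one up and one down in either order", both orderings would contribute and the result would be 2p(1-p).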

The difference between the classical coin-tossing analogy and a quantum measurement is that, in a quantum measurement, each aspect of the process is not necessarily assigned a non-negative real number. To see this, let us analyze the factors on which the weight \(Q^{A\rightarrow S} (|a_j\rangle \cap |s_i\rangle )\) depends. Intuitively, this quantity depends on three factors:

1. Likelihood of finding system S in state \(|s_i\rangle\) without interaction;
2. Likelihood of finding system A in state \(|a_j\rangle\) without interaction;
3. A factor that alters the above two likelihoods due to the passing of physical elements, such as energy and momentum, from \(A\rightarrow S\) in the probing process.

Similarly, the other weight quantity \(R^{S\rightarrow A} (|s_i\rangle \cap |a_j\rangle )\) depends on the same first two factors, and on a third factor due to the passing of physical elements from \(S\rightarrow A\) in the responding process. The probing and responding processes are two distinct aspects that constitute a measurement interaction. However, only the measurement probability is observable; the unidirectional process of probing or responding alone is not. There is therefore no reason to require each factor to be a non-negative real value, as long as the final result for \(p_{ij}\) is a non-negative real number. The requirement that a quantity be a non-negative real value applies only to measurable quantities such as \(p_{ij}\). We summarize this crucial but subtle point as follows:

Measurement probability of an observable event must be a non-negative real number. However, the requirement of being a non-negative real number is not applicable to non-measurable quantities for sub-processes that constitute a quantum measurement.

It is worth mentioning that this approach to constructing the measurement probability is an operational one, based on the probing and responding model. In principle, different models could yield the same probability value.

Next, we require that the probability \(p_{ij}\) be symmetric with respect to S and A. That is, the probability is the same for the process \(|a_j\rangle \rightarrow |s_i\rangle \rightarrow |a_j\rangle\) viewed from A and the process \(|s_i\rangle \rightarrow |a_j\rangle \rightarrow |s_i\rangle\) viewed from S. A natural way to meet this requirement is to represent the two weight quantities as matrix elements, i.e., \(Q^{A\rightarrow S} (|a_j\rangle \cap |s_i\rangle ) = Q^{AS}_{ji}\), and \(R^{S\rightarrow A} (|s_i\rangle \cap |a_j\rangle ) = R^{SA}_{ij}\). This implies that \(p_{ij}\) can be expressed as the product of two numbers,

\[ p_{ij} \propto Q^{AS}_{ji} R^{SA}_{ij}. \tag{2} \]

As just discussed, \(Q_{ji}^{AS}\) and \(R_{ij}^{SA}\) are not necessarily non-negative real numbers, since each alone models only a unidirectional process, which is not a complete measurement process and is therefore not observable. On the other hand, \(p_{ij}\) must be a non-negative real number since it models an observable measurement process. To satisfy this requirement, we further assume

\[ Q_{ji}^{AS} = (R_{ij}^{SA})^*. \tag{3} \]

Written in a different form, \(Q_{ji}^{AS} = (R^{SA})^\dag _{ji}\), which means \(Q^{AS} = (R^{SA})^\dag\). Equation (3) can be justified as follows. The three factors on which \(Q_{ji}^{AS}\) and \(R_{ij}^{SA}\) depend correspond to each other pairwise. The likelihoods of finding system S in state \(|s_i\rangle\) and system A in state \(|a_j\rangle\) without interaction are the same for both quantities. The third factor is triggered by the passing of physical elements during the interaction, which obeys conservation laws such as energy and momentum conservation. Conceivably, the third factors for \(Q_{ji}^{AS}\) and \(R_{ij}^{SA}\) must be equal in absolute value, but may differ in phase. With all these considerations, it is reasonable to assume \(|Q_{ji}^{AS}| = |R_{ij}^{SA}|\). We choose \(Q_{ji}^{AS} = (R_{ij}^{SA})^*\) so that \(Q_{ji}^{AS}R_{ij}^{SA} = |R_{ij}^{SA}|^2\) is a non-negative real number. These justifications will become clearer in the path integral implementation. With (3), Eq. (2) then becomes

\[ p_{ij} = \frac{1}{\Omega } Q_{ji}^{AS}R_{ij}^{SA} = \frac{1}{\Omega }|R_{ij}^{SA}|^2, \tag{4} \]

where \(\Omega\) is a real normalization factor. \(Q_{ji}^{AS}\) and \(R_{ij}^{SA}\) are called relational probability amplitudes. Given the relation in Eq. (3), we will not distinguish the notation R from Q, and will only use R. Note that \(Q_{ji}^{AS}\) and \(R_{ij}^{SA}\) are relative to the QRF F. A more accurate notation would explicitly call out the dependence on F. This is omitted here since, in the present work, we do not study how \(Q_{ji}^{AS}\) and \(R_{ij}^{SA}\) transform when switching from F to a different QRF. F is left implicit throughout the rest of this paper.
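The sketch below checks, for an arbitrary complex matrix, that pairing each response amplitude \(R_{ij}^{SA}\) with the conjugated probing amplitude of Eq. (3) always yields non-negative real probabilities, even though the individual amplitudes carry arbitrary phases. It is a hypothetical illustration rather than code from the formulation: the amplitude values are made up, R is stored as nested Python lists with R[i][j] indexed by S outcome i and pointer state j, and \(\Omega\) is assumed to be the sum of \(|R_{ij}^{SA}|^2\) so that the \(p_{ij}\) add up to 1.

```python
import cmath

def outcome_probabilities(R):
    """p_ij = Q_ji * R_ij / Omega with Q_ji = conj(R_ij).

    R[i][j] is the relational amplitude between outcome |s_i> of S and
    pointer state |a_j> of A (nested lists, hypothetical layout).
    """
    # Probing weight times response weight; each alone may be any complex number
    raw = [[r.conjugate() * r for r in row] for row in R]
    # Omega: real normalization so that all p_ij add up to 1
    omega = sum(w.real for row in raw for w in row)
    return [[w / omega for w in row] for row in raw]

# Made-up amplitudes with arbitrary phases
R = [[0.6 * cmath.exp(0.3j), 0.2 * cmath.exp(-1.1j)],
     [0.1j, 0.5 * cmath.exp(2.0j)]]

P = outcome_probabilities(R)
for row in P:
    print([round(p.real, 4) for p in row])
```

The phases drop out of every \(p_{ij}\); they only matter once amplitudes are summed over alternatives, as in the wave function construction that follows.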

To highlight the distinctive bidirectional process of quantum measurement, let us compare it with a measurement event in classical mechanics. In classical mechanics, for a fixed measurement setting and an identically prepared measured system, we expect the measurement result to be deterministic, with only one outcome. In mathematical notation, this means \(Q_{00}^{AS}=R_{00}^{SA}=1\). Furthermore, a classical measurement process is not considered to alter the state of the measured system. Rather, the measured system S alters the pointer variable of the measurement apparatus A; in this sense it is a unidirectional process. Therefore, the reliance on a bidirectional process to compute the measurement probability is unique to quantum mechanics. This process has not been well analyzed in the research literature, except in the transactional interpretation of quantum mechanics, which shares the idea that a quantum event is a bidirectional transaction48. However, there are fundamental differences between the transactional model and the measurement probability framework presented here, which will be discussed in detail in “Compared to the transactional interpretation”.

Wave function and density matrix

The relational matrix \(R^{SA}\) gives the complete description of S and provides a framework to derive the probabilities of future measurement outcomes. Although \(R^{SA}_{ij}\) is a probability amplitude rather than a real-valued probability, we assume it follows certain rules of classical probability theory, such as the multiplication rule and the summation over alternatives in intermediate steps.

The set of kets \(\{|s_i\rangle \}\), representing distinct measurement events for S, can be considered an eigenbasis of the Hilbert space \({{\mathcal {H}}}_S\) with dimension N, and \(|s_i\rangle\) is an eigenvector. Since each measurement outcome is distinguishable, \(\langle s_i|s_j\rangle = \delta _{ij}\). Similarly, the set of kets \(\{|a_j\rangle \}\) is an eigenbasis of the Hilbert space \({{\mathcal {H}}}_A\) with dimension M for the apparatus system A. The bidirectional process \(|a_j\rangle \rightleftharpoons |s_i\rangle\) is called a potential measurement configuration. A potential measurement configuration comprises the possible eigenvectors of S and A involved in the measurement event, together with the relational weight quantities. It can be represented by \(\Gamma _{ij}: \{|s_i\rangle , |a_j\rangle , R^{SA}_{ij}, Q^{AS}_{ji}\}\).

To derive the properties of S from the relational matrix R, we examine how the probability of measuring S with a particular outcome of variable q is calculated. It turns out that this probability is proportional to the sum of weights from all applicable measurement configurations, where the weight is defined as the product of the two relational probability amplitudes corresponding to the applicable measurement configuration. Identifying the applicable measurement configurations manifests the properties of a quantum system. For instance, before a measurement is actually performed, we do not know which event will occur to the quantum system, since it is completely probabilistic. It is therefore legitimate to generalize the potential measurement configuration to \(|a_j\rangle \rightarrow |s_i\rangle \rightarrow |a_k\rangle\). In other words, the potential measurement configuration starts from \(|a_j\rangle\) but can end at \(|a_j\rangle\) or at any other event \(|a_k\rangle\). Indeed, the most general form of potential measurement configuration in a bipartite system is \(|a_j\rangle \rightarrow |s_m\rangle \rightarrow |s_n\rangle \rightarrow |a_k\rangle\). Correspondingly, we generalize Eq. (2) by introducing a quantity for such a configuration,

\[ W_{jmnk}^{ASSA} = Q_{jm}^{AS} R_{nk}^{SA} = (R_{mj}^{SA})^* R_{nk}^{SA}. \tag{5} \]

The second step uses Eq. (3). This quantity is interpreted as a weight associated with the potential measurement configuration \(|a_j\rangle \rightarrow |s_m\rangle \rightarrow |s_n\rangle \rightarrow |a_k\rangle\). If no actual measurement is performed and no inferred information is available, the probability of finding S with a given outcome in a future measurement can be calculated by summing \(W_{jmnk}^{ASSA}\) over all applicable alternative potential measurement configurations.

With this framework, the remaining task in calculating the probability is to correctly count the applicable alternative potential measurement configurations. This task depends on the expected measurement outcome. For instance, suppose the expected outcome of an ideal measurement is event \(|s_i\rangle\), i.e., measuring variable q gives eigenvalue \(q_i\). The probability that event \(|s_i\rangle\) occurs, \(p_i\), is proportional to the sum of \(W_{jmnk}^{ASSA}\) over all possible configurations related to \(|s_i\rangle\). Mathematically, we select all \(W_{jmnk}^{ASSA}\) with \(m=n=i\), sum over the indices j and k, and obtain the probability \(p_i\):

\[ p_i \propto \sum _{j,k} W_{jiik}^{ASSA} = \sum _{j,k} (R_{ij}^{SA})^* R_{ik}^{SA} = \Big |\sum _{j} R_{ij}^{SA}\Big |^2. \tag{6} \]

This leads to the definition of the wave function \(\varphi _i = \sum _{j} R_{ij}\), so that \(p_i=|\varphi _i|^2\). The quantum state of S can be described either by the relational matrix R or by the set of variables \(\{\varphi _i\}\). The vector state of S relative to A is \(|\psi \rangle _S^A = (\varphi _0, \varphi _1,\ldots , \varphi _{N-1})^T\), where the superscript T denotes transposition. More specifically, we can define

\[ |\psi \rangle _S^A = \sum _{i} \varphi _i |s_i\rangle = \sum _{i,j} R_{ij}|s_i\rangle . \]

The justification for this definition is that the probability of finding S in eigenvector \(|s_i\rangle\) in a future measurement can be calculated from it by defining the projection operator \({\hat{P}}_i=|s_i\rangle \langle s_i|\). Noting that \(\{|s_i\rangle \}\) is an orthonormal eigenbasis, the probability is rewritten as

\[ p_i = \langle \psi |{\hat{P}}_i|\psi \rangle = |\varphi _i|^2. \tag{7} \]
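As a numerical check of Eq. (6), a minimal sketch with a made-up 2×2 relational matrix and hypothetical helper names: it sums the configuration weights \(W_{jmnk}^{ASSA} = (R_{mj})^* R_{nk}\) over \(m=n=i\) and all j, k, and compares the result with \(|\varphi _i|^2\) computed from \(\varphi _i = \sum _j R_{ij}\). Both routes are normalized so the probabilities sum to 1, since Eq. (6) fixes \(p_i\) only up to a constant.

```python
def weight(R, j, m, n, k):
    """Configuration weight W_jmnk = Q_jm * R_nk = conj(R_mj) * R_nk."""
    return R[m][j].conjugate() * R[n][k]

def probabilities_from_configurations(R):
    """Sum W_jmnk over configurations with m = n = i (j and k free), then normalize."""
    n_s, n_a = len(R), len(R[0])
    raw = [sum(weight(R, j, i, i, k) for j in range(n_a) for k in range(n_a)).real
           for i in range(n_s)]
    total = sum(raw)
    return [w / total for w in raw]

def probabilities_from_wavefunction(R):
    """phi_i = sum_j R_ij and p_i proportional to |phi_i|^2, then normalize."""
    phi = [sum(row) for row in R]
    raw = [abs(f) ** 2 for f in phi]
    total = sum(raw)
    return [w / total for w in raw]

R = [[0.6, 0.3j], [0.2 - 0.4j, 0.5]]  # made-up 2x2 relational matrix
print(probabilities_from_configurations(R))
print(probabilities_from_wavefunction(R))
```

The two lists agree because the double sum factorizes into \((\sum _j R_{ij})^*(\sum _k R_{ik})\); the cross terms with \(j\ne k\) are exactly the interference contributions.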

Equations (6) and (7) show why the measurement probability equals the modulus square of the wave function. They are derived under two conditions. First, the probability is calculated by modeling the bidirectional measurement process. Second, there are indistinguishable alternative potential measurement configurations in computing the probability. When the alternatives are distinguishable, even if only through inference from correlation information with another quantum system, the summation in (6) over-counts the applicable measurement configurations and should be modified accordingly. A more generic approach to describing the quantum state of S is the reduced density matrix formulation, which is defined as

\[ \rho _S = \frac{1}{\Omega } R^{SA}(R^{SA})^\dagger . \]

The probability \(p_i\) is then calculated using the projection operator \({\hat{P}}_i=|s_i\rangle \langle s_i|\) as

\[ p_i = \mathrm{Tr}({\hat{P}}_i\rho _S). \]
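The difference between the coherent count of Eq. (6) and the reduced density matrix shows up in a small example. The sketch assumes \(\rho _S = R R^\dagger /\Omega\) with \(\Omega = \mathrm{Tr}(R R^\dagger )\), i.e., the amplitudes \(R_{ij}\) traced over the pointer states of A. Then \(\mathrm{Tr}({\hat{P}}_i\rho _S) = \sum _j |R_{ij}|^2/\Omega\) keeps only configurations that start and end at the same pointer state, as when the pointer alternatives are distinguishable. The matrix is hypothetical, chosen so that the two pointer alternatives cancel in the coherent sum.

```python
def reduced_density_matrix(R):
    """rho_S = R R^dagger / Omega, with Omega = Tr(R R^dagger) (assumed form)."""
    n_s, n_a = len(R), len(R[0])
    rho = [[sum(R[m][j] * R[n][j].conjugate() for j in range(n_a)) for n in range(n_s)]
           for m in range(n_s)]
    omega = sum(rho[i][i].real for i in range(n_s))
    return [[x / omega for x in row] for row in rho]

def projective_probability(rho, i):
    """p_i = Tr(P_i rho_S) with P_i = |s_i><s_i|, which picks out rho_S[i][i]."""
    return rho[i][i].real

def coherent_probabilities(R):
    """Eq. (6)-style count: p_i proportional to |sum_j R_ij|^2, normalized."""
    phi = [sum(row) for row in R]
    raw = [abs(f) ** 2 for f in phi]
    total = sum(raw)
    return [w / total for w in raw]

R = [[0.5, 0.5], [0.5, -0.5]]  # hypothetical: pointer alternatives interfere
rho_S = reduced_density_matrix(R)
print([projective_probability(rho_S, i) for i in range(2)])  # [0.5, 0.5]
print(coherent_probabilities(R))                             # [1.0, 0.0]
```

Here the coherent count sends all weight to \(|s_0\rangle\), while the diagonal of \(\rho _S\) splits it evenly, because the cross terms \(j\ne k\) that produced the cancellation are absent.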

The effect of a quantum operation on the relational probability amplitude matrix can be expressed through an operator. By defining an operator \({\hat{M}}\) in the Hilbert space \({{\mathcal {H}}}_S\) with matrix elements \(M_{ik} = \langle s_i|\hat{M}|s_k\rangle\), one obtains the new relational probability amplitude matrix \(R^{SA}_{new}\) from the transformation of the initial matrix \(R^{SA}_{init}\),

\[ R^{SA}_{new} = M R^{SA}_{init}. \]

Consequently, up to normalization, the reduced density matrix becomes

\[ \rho _S^{new} = M \rho _S^{init} M^\dagger . \]
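The transformation rule can be checked numerically: applying M to the amplitudes and then forming \(R R^\dagger\) gives the same matrix as conjugating \(R R^\dagger\) by M, for any M, because \((MR)(MR)^\dagger = M(RR^\dagger )M^\dagger\). The sketch uses a Hadamard matrix as a stand-in for a unitary \({\hat{M}}\), a made-up \(R^{SA}_{init}\), and keeps \(\rho _S\) unnormalized; all three are illustrative assumptions.

```python
import math

def matmul(X, Y):
    """Matrix product of nested lists."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]

def dagger(X):
    """Conjugate transpose of nested lists."""
    return [[X[j][i].conjugate() for j in range(len(X))] for i in range(len(X[0]))]

R_init = [[0.6, 0.3j], [0.2 - 0.4j, 0.5]]          # made-up relational matrix
s = 1 / math.sqrt(2)
M = [[s, s], [s, -s]]                                # example unitary on H_S (Hadamard)

R_new = matmul(M, R_init)                            # R_new = M R_init
rho_init = matmul(R_init, dagger(R_init))            # unnormalized R R^dagger
rho_from_R = matmul(R_new, dagger(R_new))            # route 1: from the new amplitudes
rho_from_M = matmul(matmul(M, rho_init), dagger(M))  # route 2: M rho M^dagger

diff = max(abs(x - y) for r1, r2 in zip(rho_from_R, rho_from_M) for x, y in zip(r1, r2))
print(diff)
```

For a non-unitary M, such as a projection, the two routes still agree, but the trace changes, which is why the result is stated up to normalization.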

Entanglement measure

The description of S using the reduced density matrix \(\rho _S\) is valid regardless of whether there is entanglement between S and A. To determine whether S and A are entangled, a quantity characterizing the degree of entanglement, an entanglement measure, must be introduced. There are many entanglement measures39,40; the simplest is the von Neumann entropy, which is defined as

\[ H(\rho _S) = -\mathrm{Tr}(\rho _S \ln \rho _S). \]

Denoting the eigenvalues of the reduced density matrix \(\rho _S\) by \(\{\lambda _i\}, i=0,\ldots , N-1\), the von Neumann entropy is calculated as

\[ H(\rho _S) = -\sum _{i=0}^{N-1} \lambda _i \ln \lambda _i. \]

A change in \(H(\rho _S)\) implies there is change of entanglement between S and A. Unless explicitly pointed out, we only consider the situation that S is described by a single relational matrix R. In this case, the entanglement measure \(E=H(\rho _S)\).

\(H(\rho _S)\) enables us to distinguish different types of quantum dynamics. Given a quantum system S and an apparatus A, there are two types of dynamics between them. In the first type, there is no physical interaction and no change in the entanglement measure between S and A. S is not necessarily isolated, in the sense that it can still be entangled with A, but the entanglement measure remains unchanged. This type of dynamics is defined as time evolution. In the second type, there is a physical interaction and an exchange of correlation information between S and A, i.e., the von Neumann entropy \(H(\rho _S)\) changes. This type of dynamics is defined as a quantum operation. Quantum measurement is a special type of quantum operation with a particular outcome. Whether the entanglement measure changes thus classifies a dynamical process as either a time evolution or a quantum operation36,37.
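The sketch below illustrates the entropy and the two kinds of dynamics for a 2×2 relational matrix (a two-outcome S and a two-state pointer A). It assumes \(\rho _S = R R^\dagger /\mathrm{Tr}(R R^\dagger )\) and computes \(H(\rho _S) = -\sum _i \lambda _i \ln \lambda _i\) from the closed-form eigenvalues of a 2×2 Hermitian matrix. A phase rotation applied to S alone (\(R\rightarrow UR\)) stands in for time evolution, and a hypothetical CNOT-type coupling that flips A's pointer index when S is in \(|s_1\rangle\) stands in for an interaction; both are illustrative choices, not taken from the formulation.

```python
import cmath
import math

def reduced_density_matrix(R):
    """rho_S = R R^dagger / Tr(R R^dagger) for a 2 x 2 relational matrix (assumed form)."""
    rho = [[sum(R[m][j] * R[n][j].conjugate() for j in range(2)) for n in range(2)]
           for m in range(2)]
    omega = (rho[0][0] + rho[1][1]).real
    return [[x / omega for x in row] for row in rho]

def von_neumann_entropy(R):
    """H = -sum_i lambda_i ln lambda_i, using closed-form 2x2 Hermitian eigenvalues."""
    rho = reduced_density_matrix(R)
    a, d, b = rho[0][0].real, rho[1][1].real, rho[0][1]
    mean = (a + d) / 2
    radius = math.sqrt(((a - d) / 2) ** 2 + abs(b) ** 2)
    # 0 ln 0 is taken as 0; tiny eigenvalues from rounding are dropped
    return -sum(lam * math.log(lam) for lam in (mean + radius, mean - radius) if lam > 1e-15)

def apply_on_S(U, R):
    """Operation on S only: R_new = U R (pointer index untouched)."""
    return [[sum(U[i][k] * R[k][j] for k in range(2)) for j in range(2)] for i in range(2)]

def controlled_not(R):
    """Hypothetical S-A coupling |s_i a_j> -> |s_i a_(j XOR i)>, i.e. R_new[i][j] = R[i][j ^ i]."""
    return [[R[i][j ^ i] for j in range(2)] for i in range(2)]

R0 = [[0.6, 0.0], [0.8, 0.0]]  # product form: S in 0.6|s_0> + 0.8|s_1>, A in |a_0>
theta = 0.7
U = [[cmath.exp(-1j * theta), 0], [0, cmath.exp(1j * theta)]]  # phase rotation on S

H0 = von_neumann_entropy(R0)
H_evolved = von_neumann_entropy(apply_on_S(U, R0))
H_operated = von_neumann_entropy(controlled_not(R0))
print(H0, H_evolved, H_operated)
```

The rotation on S leaves \(H(\rho _S)\) at zero, whereas the coupling raises it to \(-0.36\ln 0.36 - 0.64\ln 0.64 \approx 0.653\), so in the classification above the first behaves as a time evolution and the second as a quantum operation.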

Results

Motivation for path integral implementation

As shown in the previous sections, the relational probability amplitude \(R_{ij}\) provides a complete description of the quantum system relative to a reference system. It is natural to ask what the physical meaning of this quantity is and how to mathematically calculate it. A concrete implementation of the relational quantum mechanics depends on how \(R_{ij}\) is calculated.

In the present work, the path integral is chosen as the implementation method for several reasons. First, the traditional path integral offers a physical model for computing the probability amplitude as a summation of contributions from all alternative paths, and a physical picture can help clarify abstract concepts. Second, mathematically, the technique of summing over alternatives in the path integral is similar to the method for calculating the measurement probability shown in (6), so the path integral machinery can be borrowed naturally to calculate the relational probability amplitude. Third, the path integral is a well-developed theory that has been successfully applied in other physical theories such as quantum field theory. By connecting the relational formulation of quantum mechanics to the path integral, we hope to extend the relational formulation to more advanced quantum theories in the future.

It is important to point out that we borrow only one idea from the path integral: how the relational probability amplitude is calculated. Nothing else is needed, because once the relational probability amplitude is calculated, the basic quantum theory, such as the Schrödinger equation and the measurement formulation, can be recovered as shown in Refs.36,37. In addition, the path integral implementation presented here brings valuable insight back to the path integral formulation of quantum mechanics itself. In Feynman’s original paper41, the fact that the measurement probability equals the modulus square of the probability amplitude, \(p_i=|\phi |^2\), is introduced as a postulate. The justification is that this postulate holds whenever there are indistinguishable alternatives during a measurement event42. However, as we mentioned in “Wave function and density matrix”, \(p_i=|\phi |^2\) is derived from two conditions. Indistinguishable alternatives are just one of them. Modeling the bidirectional measurement process when calculating the probability is the other key reason. In this sense, Feynman’s explanation of \(p_i=|\phi |^2\) is incomplete. By implementing the relational probability amplitude with the path integral formulation, we help to complete the justification.

Path integral implementation

Without loss of generality, the following discussion focuses on a quantum system in one spatial dimension. In the path integral formulation, the probability of finding a quantum system that moves from a point \(x_a\) at time \(t_a\) to a point \(x_b\) at time \(t_b\) is postulated to be the absolute square of a probability amplitude, i.e., \(P(b, a)=|K(b, a)|^2\) (as mentioned earlier, this postulate is not needed in our reformulation). The probability amplitude is further postulated to be the sum of the phase contributions from all alternative paths42:

where \({{\mathcal {D}}}x(t)\) denotes the integral over all possible paths from point \(x_a\) to point \(x_b\). \(K(b, a)\) is the wave function for S moving from \(x_a\) to \(x_b\)42. The wave function for finding the particle in a region \({{\mathcal {R}}}_b\) prior to \(t_b\) can be expressed as

where \(x_b\) is the position of the particle at time \(t_b\). The integral over region \({{\mathcal {R}}}_b\) can be interpreted as the integral over all paths ending at position \(x_b\), with the condition that each path lies in region \({{\mathcal {R}}}_b\), which precedes time \(t_b\). The rationale for this definition can be found in Feynman’s original paper41.
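For orientation, the standard Feynman expressions described in the two preceding paragraphs can be written in conventional notation as follows. Here \(L\) is assumed to be the Lagrangian of S. This is a sketch of the textbook forms, not a reproduction of the paper's own displays.

```latex
K(b,a) = \int_{x_a}^{x_b} e^{(i/\hbar)\,S[x(t)]}\,\mathcal{D}x(t),
\qquad
S[x(t)] = \int_{t_a}^{t_b} L\big(\dot{x}(t), x(t), t\big)\,dt,
\qquad
\varphi(x_b) = \int_{\mathcal{R}_b} e^{(i/\hbar)\,S[x(t)]}\,\mathcal{D}x(t).
```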

Now let’s consider how the relational matrix element can be formulated in a similar way. Define \({{\mathcal {R}}}_b^S\) as the region of finding system S prior to time \(t_b\), and \({{\mathcal {R}}}_b^A\) as the region of finding measuring system A prior to time \(t_b\). We denote the matrix element as \(R(x_b; y_b)\), where the coordinates \(x_b\) and \(y_b\) act as indices for the system S and the apparatus A, respectively. Borrowing the ideas described in Eq. (15), we propose that the relational matrix element is calculated as

where the action \(S_p^{SA}(x(t), y(t))\) consists of three terms

The last term is the action due to the interaction between S and A when each system moves along its particular path. The phase contributions from the three action terms correspond to the three factors mentioned in “Measurement probability”. For instance, \(e^{(i/\hbar )S^{S}_p}\) corresponds to the contribution to the likelihood of finding S at position \(x_a\), and \(e^{(i/\hbar )S^{SA}_{int}}\) corresponds to the factor due to the response from \(S\rightarrow A\). We remind the reader that the expressions in (17) and (18) are relative to the QRF F. We leave this dependency implicit, since no clarity is lost.
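Read together with the description of the three action terms, the relational matrix element and its action can be sketched as follows. The symbol \(S^{A}_p\) for the free action of A is our shorthand, chosen to match the notation \(S^{A'}_p\) used later in the text.

```latex
R(x_b; y_b) = \int_{\mathcal{R}_b^S}\int_{\mathcal{R}_b^A}
  e^{(i/\hbar)\,S_p^{SA}(x(t),\,y(t))}\,\mathcal{D}x(t)\,\mathcal{D}y(t),
\qquad
S_p^{SA}(x(t), y(t)) = S_p^{S}(x(t)) + S_p^{A}(y(t)) + S_{int}^{SA}(x(t), y(t)).
```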

We can validate (17) by deriving a formulation that is consistent with the traditional path integral. Suppose there is no interaction between S and A. Then the third term in Eq. (18) vanishes, and Eq. (17) decomposes into a product of two independent terms,

Noting that the coordinates \(y_a\) and \(y_b\) are equivalent to the index j in (7), the wave function of S can be obtained by integrating (19) over \(y_b\)

where the constant c is the result of integrating the second term in step two. The result is the same as (16) up to an unimportant constant.
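The following lattice sketch illustrates the no-interaction case. It assumes that the two path integrals have already been evaluated to arbitrary complex vectors on small grids; the values are hypothetical. R then becomes an outer product. Summing over the A index, the discrete analogue of integrating over \(y_b\), returns \(\varphi (x_b)\) times a constant c.

```python
import numpy as np

rng = np.random.default_rng(0)
n_s, n_a = 5, 4                                            # toy grid sizes for x_b and y_b
phi_S = rng.normal(size=n_s) + 1j * rng.normal(size=n_s)   # stands in for phi(x_b)
chi_A = rng.normal(size=n_a) + 1j * rng.normal(size=n_a)   # stands in for the factor from A

# Without S-A interaction the amplitude factorizes: R(x_b; y_b) = phi(x_b) chi(y_b).
R = np.outer(phi_S, chi_A)

# Summing over the A index leaves phi(x_b) multiplied by a constant c.
c = chi_A.sum()
print(np.allclose(R.sum(axis=1), c * phi_S))   # True
```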

Next, we consider the situation in which S and A become entangled as a result of an interaction. The third term in (18) does not vanish, and we can no longer define a wave function for S. Instead, a reduced density matrix, \(\rho = RR^\dag\), should be used to describe the state of the particle. Similar to (17), the element of the reduced density matrix is

Here \(x_b=x(t_b)\) and \(x'_b=x'(t_b)\). The path integral over \({{\mathcal {D}}}y'(t)\) takes the same region (therefore same end point \(y_b\)) as the path integral over \({{\mathcal {D}}}y(t)\). After the path integral, an integral over \(y_b\) is performed. (21) is equivalent to the J function introduced in Ref.42. We can rewrite the expression of \(\rho\) using the influence functional, \(F(x(t), x'(t))\),

where \(Z=Tr(\rho )\) is a normalization factor to ensure \(Tr(\varrho ) = 1\).
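On a discretized grid, \(\rho = RR^\dag\) is an ordinary matrix product over the A index, followed by division by \(Z=Tr(\rho )\). The following sketch uses hypothetical random entries and compares a factorized R (no interaction) with a generic, non-factorizable R (interaction). The normalized \(\varrho\) has unit purity only in the factorized case.

```python
import numpy as np

def normalized_reduced_density(R):
    """rho = R R^dagger (summing over the A index), divided by Z = Tr(rho)."""
    rho = R @ R.conj().T
    return rho / np.trace(rho).real

def purity(rho):
    return float(np.trace(rho @ rho).real)

rng = np.random.default_rng(1)
phi = rng.normal(size=4) + 1j * rng.normal(size=4)
chi = rng.normal(size=3) + 1j * rng.normal(size=3)
R_free = np.outer(phi, chi)                                       # no S-A interaction
R_int = rng.normal(size=(4, 3)) + 1j * rng.normal(size=(4, 3))    # generic, non-factorizable

rho_free = normalized_reduced_density(R_free)
rho_int = normalized_reduced_density(R_int)
print(purity(rho_free), purity(rho_int))   # 1 for the product case, below 1 for the generic one
```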

In summary, we have shown that the relational probability amplitude can be calculated explicitly through (17). \(R^{SA}_{ij}\) is defined as the sum of the quantity \(e^{iS_p/\hbar }\), where \(S_p\) is the action of the composite system \(S+A\) along a path. Physical interaction between S and A may change \(S_p\), which is the phase of the probability amplitude. But \(e^{iS_p/\hbar }\) itself is a probabilistic quantity rather than a quantity associated with a physical property. With this definition and the results in “Relational formulation of quantum mechanics”, we obtain formulations for the wave function (20) and the reduced density matrix (22) that are consistent with those of the traditional path integral formulation. Although the reduced density matrix expression (21) is equivalent to the J function in Ref.42, it has a richer physical meaning. For instance, we can calculate the entanglement entropy from the reduced density matrix, as discussed further in “The Von Neumann entropy”.

Interaction history of a quantum system

One of the benefits of implementing the relational probability amplitude with the path integral approach is that it is rather straightforward to describe the interaction history of a quantum system. We start with a simple case and later extend the formulation to the general case.

Suppose that up to time \(t_a\), a quantum system S interacts only with a measuring system A. The details of the interaction are not important in this discussion: S may interact with A for a short period of time or for the whole time up to \(t_a\). Assume that after \(t_a\) there is no further interaction between S and A. Instead, S starts to interact with another measuring system \(A'\), up to time \(t_b\). Denote the trajectories of \(S, A, A'\) as x(t), y(t), z(t), respectively. Up to time \(t_a\), the relational matrix element is given by (17),

Up to time \(t_b\), as S interacts with a different apparatus \(A'\), the relational properties are described by a different relational matrix, with the matrix element

We can split region \({{\mathcal {R}}}_b^S\) into two regions, \({{\mathcal {R}}}_a^S\) and \({{\mathcal {R}}}_{ab}^S\), where \({{\mathcal {R}}}_{ab}^S\) is a region between time \(t_a\) and time \(t_b\). This split allows us to express \(R(x_b, y_a, z_b)\) in terms of \(R(x_a, y_a)\),

where

From (26), one can derive the reduced density matrix element for S,

where

Normalizing the reduced density matrix element, we have

where the normalization factor \(Z=Tr(\rho )\).

The reduced density matrix allows us to calculate the probability of finding the system in a particular state. For instance, suppose we want to calculate the probability of finding the system in a state \(\psi (x_b)\). Let \(\hat{P}=|\psi (x_b)\rangle \langle \psi (x_b)|\) be the projection operator for state \(\psi (x_b)\). According to (10), the probability is calculated as

To find the particle at a particular position \({\bar{x}}_b\) at time \(t_b\), we substitute \(\psi (x_b)=\delta (x_b-{\bar{x}}_b)\) into (30),

Suppose further that there is no interaction between S and \(A'\) after time \(t_a\). Then the action \(S_p^{SA'}(x(t), z(t))\) consists of only two independent terms, \(S^{SA'}_p(x(t), z(t)) = S^S_p(x(t)) + S^{A'}_p(z(t))\). This allows us to rewrite (26) as a product of two terms:

Consequently, function \(G(x_a, x'_a; x_b, x'_b)\) is rewritten as

The integral in the above equation is simply a constant, denoted as C. Thus, \(G(x_a, x'_a; x_b, x'_b) = CK(x_a, x_b)K^*(x'_a, x'_b)\). Substituting this into (29), one obtains

The constant C is absorbed into the normalization factor. In the next section, we will use (34) and (31) to explain the double slit experiment.
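The no-interaction propagation after \(t_a\) can be checked in a discretized sketch. On a lattice, the kernel relation reduces to \(\rho _b = K\rho _a K^\dag\) followed by normalization, and splitting the interval at an intermediate time reproduces the same kernel. The lattice, the hopping Hamiltonian, \(\hbar =1\) and the time values are all hypothetical choices. K is built as the matrix exponential \(e^{-iHt/\hbar }\) rather than from a literal sum over paths.

```python
import numpy as np

def propagator(H, t, hbar=1.0):
    """K = exp(-i H t / hbar) for Hermitian H, built from its eigendecomposition."""
    E, V = np.linalg.eigh(H)
    return V @ np.diag(np.exp(-1j * E * t / hbar)) @ V.conj().T

# Hypothetical toy model: S hops between nearest neighbours on a 6-site lattice.
n_sites = 6
H = -(np.eye(n_sites, k=1) + np.eye(n_sites, k=-1))

# A reduced density matrix at t_a: equal weights on sites 2 and 3, no coherence.
rho_a = np.zeros((n_sites, n_sites), dtype=complex)
rho_a[2, 2] = rho_a[3, 3] = 0.5

K = propagator(H, 1.3)                    # kernel K(x_b, x_a) for t_b - t_a = 1.3
rho_b = K @ rho_a @ K.conj().T            # sum over x_a, x_a' of K(x_b,x_a) rho_a(x_a,x_a') K*(x_b',x_a')
rho_b /= np.trace(rho_b).real             # normalization factor Z

# Splitting [t_a, t_b] at an intermediate time composes two kernels into the same K.
K_split = propagator(H, 0.8) @ propagator(H, 0.5)
print(np.allclose(K, K_split))            # True
```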

We wish to generalize (24) and (29) to describe a series of interactions in the history of the quantum system S. Suppose S has interacted with a series of measuring systems \(A_1, A_2, \ldots , A_n\) along its history, but with only one measuring system at a time. Specifically, S interacts with \(A_1\) up to time \(t_1\). From \(t_1\) to \(t_2\), it interacts only with \(A_2\). From \(t_2\) to \(t_3\), it interacts only with \(A_3\), and so on. Furthermore, we assume there is no interaction among these measuring systems; they are all independent. Denote the trajectories of these measuring systems as \(y^{(1)}(t), y^{(2)}(t), \ldots , y^{(n)}(t)\), and \(y^{(1)}(t_1)=y^{(1)}_b, y^{(2)}(t_2)=y^{(2)}_b, \ldots , y^{(n)}(t_n)=y^{(n)}_b\). With this model, we can write down the relational matrix element for the whole history

where \({{\mathcal {R}}}_n^S\) is the region of finding the measured system S prior to time \(t_n\), and \({{\mathcal {R}}}_i^A\) is the region of finding measuring system \(A_i\) between times \(t_{i-1}\) and \(t_i\). The action

is the integral of the Lagrangian over a particular path p lying in region \({{\mathcal {R}}}_n^S\cup {{\mathcal {R}}}_j^A\). The total action among S and \(A_1, A_2, \ldots , A_n\) is written as a sum of the individual actions between S and each \(A_i\) because of the key assumption that S interacts with only one measuring system \(A_i\) at a time, and that the interactions with different \(A_i\) are independent of each other. This assumption further allows us to split the path integral over region \({{\mathcal {R}}}_n^S\) into two parts: one over the region prior to \(t_{n-1}\), \({{\mathcal {R}}}_{n-1}^S\), and the other over the region between \(t_{n-1}\) and \(t_{n}\), \({{\mathcal {R}}}_{n-1, n}^S\). Thus,

and

Similar to (21), the reduced density matrix element at \(t_n\) is

where

The normalized version of the reduced density matrix element is given by

and the probability of finding S in a position \({\bar{x}}_b^{(n)}\) at time \(t_n\) is

Recall that \(x_b^{(n)}, x_{b'}^{(n)}\) are two different positions of finding S at time \(t_n\). The probability of finding S at position \({\bar{x}}_b^{(n)}\) is simply a diagonal element of the reduced density matrix.
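On a lattice, the projector rule is a trace, and the position eigenstate \(\delta (x_b-{\bar{x}}_b)\) becomes a unit vector. The following sketch uses a hypothetical random density matrix and confirms that the probability reduces to the diagonal element at \({\bar{x}}_b\).

```python
import numpy as np

rng = np.random.default_rng(2)
M = rng.normal(size=(5, 5)) + 1j * rng.normal(size=(5, 5))
rho = M @ M.conj().T
rho /= np.trace(rho).real                  # a valid normalized density matrix

def probability(rho, psi):
    """p = Tr(P rho) with the projector P = |psi><psi|."""
    P = np.outer(psi, psi.conj())
    return np.trace(P @ rho).real

# Lattice stand-in for delta(x_b - xbar_b): the unit vector at site xbar.
xbar = 3
delta = np.zeros(5)
delta[xbar] = 1.0
print(np.isclose(probability(rho, delta), rho[xbar, xbar].real))   # True
```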

The formulation presented in this section confirms the idea that the relational probability amplitude matrix, and consequently the wave function of S, encodes the relational information along the history of interactions between S and other systems \(\{A_1, A_2,\ldots , A_n\}\). The idea was originally conceived in Ref.11 but was not fully developed there.
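The history formulas can be mimicked with qubits. This sketch makes the following assumptions:

- S is a qubit.
- Each \(A_i\) is a fresh qubit coupled to S by a controlled rotation with a hypothetical strength \(\theta _i\).
- S meets one apparatus at a time, and there is no coupling among the \(A_i\).

Under these assumptions, the populations of S are unchanged. The coherence after n steps equals the initial coherence multiplied by one overlap factor \(\cos (\theta _i/2)\) per apparatus, so the final reduced state records the whole sequence of interactions.

```python
import numpy as np

def interact_and_trace(rho_S, theta):
    """One step of the history: S (a qubit) controls a rotation R_y(theta) on a fresh
    apparatus qubit prepared in |0>; the apparatus is then traced out."""
    c, s = np.cos(theta / 2), np.sin(theta / 2)
    ry = np.array([[c, -s], [s, c]])
    # Controlled-R_y in the |s a> basis: identity on A if S = |0>, R_y on A if S = |1>.
    U = np.block([[np.eye(2), np.zeros((2, 2))], [np.zeros((2, 2)), ry]])
    rho_A = np.array([[1.0, 0.0], [0.0, 0.0]])
    rho = U @ np.kron(rho_S, rho_A) @ U.conj().T
    # Partial trace over A: view rho as rho[s, a, s', a'] and sum over a = a'.
    return np.einsum('iaja->ij', rho.reshape(2, 2, 2, 2))

rho = 0.5 * np.ones((2, 2))               # S starts in |+><+|
thetas = [0.4, 1.1, 0.7]                  # hypothetical couplings to A_1, A_2, A_3
for theta in thetas:
    rho = interact_and_trace(rho, theta)  # S meets one apparatus at a time

# Populations are untouched; the coherence carries one factor cos(theta_i / 2) per apparatus.
print(np.diag(rho), rho[0, 1], 0.5 * np.prod(np.cos(np.array(thetas) / 2)))
```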

The double slit experiment

In the double slit experiment, an electron passes through a slit configuration at screen C and is detected at position x on the destination screen B. Denote the probability that the particle is detected at position x as \(p_1=|\varphi _1(x)|^2\) when only slit \(T_1\) is open, and as \(p_2=|\varphi _2(x)|^2\) when only slit \(T_2\) is open. If both slits are open, the probability that the particle is detected at position x after passing through the double slit is \(p(x)=|\varphi _1(x)+\varphi _2(x)|^2\), which is different from \(p_1+p_2 = |\varphi _1(x)|^2+|\varphi _2(x)|^2\). Furthermore, when a detector is introduced to determine exactly which slit the particle goes through, the probability becomes \(|\varphi _1(x)|^2+|\varphi _2(x)|^2\). Feynman used this observation to introduce the concept of the probability amplitude and the rule that the measurement probability is the absolute square of the probability amplitude42. The reason, according to Feynman, is that the alternatives of passing through T1 or T2 are indistinguishable. Thus, these alternatives are “interfering alternatives” rather than “exclusive alternatives”. However, he does not show how the probability \(p(x)=|\varphi _1(x)+\varphi _2(x)|^2\) or \(p(x)=|\varphi _1(x)|^2+|\varphi _2(x)|^2\) is calculated. In this section we show that these results can be readily calculated by applying (34) and (31).

Denote the location of \(T_1\) as \(x_1\) and the location of \(T_2\) as \(x_2\). Suppose both slits are open. The electron passes through the double slit at \(t_a\) and is detected at the destination screen at \(t_b\). In this process, one only knows that the electron interacts with the slits; there is no information from which to infer exactly which slit it passes through. Assuming equal probability for the electron passing through either \(T_1\) or \(T_2\) at \(t_a\), the state vector is the superposition state \(|\psi _a\rangle =(1/\sqrt{2})(|x_1\rangle + |x_2\rangle )\). The reduced density operator at \(t_a\) is \(\varrho (t_a)=(1/2)(|x_1\rangle + |x_2\rangle )(\langle x_1| + \langle x_2|)\). Its matrix element is

where the property \(\langle x_a|x_i\rangle =\delta (x_a-x_i)\) is used in the last step. Later at time \(t_b\), according to (34), the density matrix element for the electron at a position \(x_b\) in the detector screen is

According to (31), the probability of finding the electron at a position \(x_b\) is

where \(\varphi _1(x_b)=K(x_b, x_1)\) and \(\varphi _2(x_b)=K(x_b, x_2)\). Therefore, the probability to find the electron showing up at position \(x_b\) is \(p(x_b, t_b) = (1/2)|\varphi _1(x_b, t_b)+\varphi _2(x_b, t_b)|^2\).
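The following numerical sketch assumes free propagation between the slits and the screen. K is therefore taken as the free-particle kernel \(K(x_b, x_a)=\sqrt{m/(2\pi i\hbar T)}\,e^{im(x_b-x_a)^2/(2\hbar T)}\) with \(T=t_b-t_a\). The parameter values \(\hbar =m=T=1\), \(x_1=-2\) and \(x_2=2\) are hypothetical. The density-matrix route reproduces \((1/2)|\varphi _1+\varphi _2|^2\), including the cross term.

```python
import numpy as np

hbar = m = 1.0
T = 1.0                                   # hypothetical flight time t_b - t_a
x1, x2 = -2.0, 2.0                        # hypothetical slit positions
xb = np.linspace(-10, 10, 2001)           # positions on the detection screen

def K(xb, xa):
    """Free-particle propagator from (xa, t_a) to (xb, t_a + T)."""
    pref = np.sqrt(m / (2j * np.pi * hbar * T))
    return pref * np.exp(1j * m * (xb - xa) ** 2 / (2 * hbar * T))

# Reduced density matrix at t_a in the slit basis {x1, x2}: pure superposition.
rho_a = 0.5 * np.array([[1, 1], [1, 1]], dtype=complex)
Kmat = np.stack([K(xb, x1), K(xb, x2)], axis=1)      # shape (len(xb), 2)

# p(x_b) = sum over x_a, x_a' of K(x_b, x_a) rho_a(x_a, x_a') K*(x_b, x_a')
p = np.einsum('ba,ac,bc->b', Kmat, rho_a, Kmat.conj()).real

phi1, phi2 = K(xb, x1), K(xb, x2)
print(np.allclose(p, 0.5 * np.abs(phi1 + phi2) ** 2))   # True
```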

Modified double slit experiment discussed on page 7 of Ref.42. Here a light source is placed behind the double slit to help detect which slit an electron passes through.

Now consider the modified experiment proposed by Feynman, shown in Fig. 2. A lamp is placed behind the double slit, which makes it possible to detect which slit the electron passes through. The photon is scattered by the electron, and by detecting the scattered photon one can infer which slit the electron passed through. In this sense, the electron is “measured” by the photon. The variable carried by the photon that tells which slit the electron passed through acts as a pointer variable. There is a one-to-one correlation between the pointer state of the photon and the slit the electron passes through. Denote the pointer states of the photon corresponding to the electron passing through \(T_1\) and \(T_2\) as \(|T_1\rangle\) and \(|T_2\rangle\), respectively. The state vector of the composite system of electron and photon right after scattering is \(|\Psi \rangle =1/\sqrt{2}(|x_1\rangle |T_1\rangle + |x_2\rangle |T_2\rangle )\). Thus the reduced density operator for the electron after passing the slit configuration is \({\hat{\rho }}_a = Tr_T(|\Psi \rangle \langle \Psi |)=\frac{1}{2}(|x_1\rangle \langle x_1| + |x_2\rangle \langle x_2|)\). Its matrix element is

Substituting this into (34), the reduced density matrix element at \(t_b\) is

From this, the probability of finding the electron at position \(x_b\) is

There is no interference term \(\varphi _1\varphi _2\) in (48). This result can be understood as follows. The interaction between the electron and the photon results in entanglement between them. The alternatives of passing through T1 or T2 become distinguishable, as they can be inferred from the correlation encoded in the photon. They are exclusive alternatives rather than interfering alternatives. Thus, the interference terms should be excluded to avoid over-counting the alternatives that contribute to the measurement probability (see Ref.36 for a more detailed explanation of this probability counting rule), resulting in (48). These considerations are consistent with Feynman’s concept of interfering versus exclusive alternatives. Here we advance these ideas further by giving a concrete calculation of the resulting probability.
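The following is the same sketch with the photon included. It again assumes free propagation and the hypothetical parameters above. It also assumes orthogonal pointer states \(|T_1\rangle\) and \(|T_2\rangle\). Tracing out the photon leaves \({\hat{\rho }}_a\) diagonal in the slit basis, and the cross term disappears from \(p(x_b)\).

```python
import numpy as np

hbar = m = T = 1.0
x1, x2 = -2.0, 2.0                        # hypothetical slit positions
xb = np.linspace(-10, 10, 2001)

def K(xb, xa):
    """Free-particle propagator from (xa, t_a) to (xb, t_a + T)."""
    pref = np.sqrt(m / (2j * np.pi * hbar * T))
    return pref * np.exp(1j * m * (xb - xa) ** 2 / (2 * hbar * T))

# Electron-photon state right after scattering, stored as Psi[slit, pointer],
# with orthogonal pointer states |T1>, |T2>.
Psi = np.zeros((2, 2), dtype=complex)
Psi[0, 0] = Psi[1, 1] = 1 / np.sqrt(2)
rho_a = Psi @ Psi.conj().T                # Tr_T |Psi><Psi| = diag(1/2, 1/2)

Kmat = np.stack([K(xb, x1), K(xb, x2)], axis=1)
p = np.einsum('ba,ac,bc->b', Kmat, rho_a, Kmat.conj()).real

phi1, phi2 = K(xb, x1), K(xb, x2)
print(np.allclose(p, 0.5 * (np.abs(phi1) ** 2 + np.abs(phi2) ** 2)))   # True
```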

The Von Neumann entropy

The definition of the von Neumann entropy in (13) requires taking the logarithm of the density matrix. This is a daunting computational task when the reduced density matrix element is defined through the path integral formulation, as in Eq. (22). A brute-force calculation of the eigenvalues of the reduced density matrix may be possible if one approximates the position continuum by a lattice with spacing \(\epsilon\) and then takes the limit \(\epsilon \rightarrow 0\). On the other hand, in quantum field theory there is a different approach to calculating entropy, based on the “replica trick”43,44,45. This approach allows one to calculate the von Neumann entropy without taking the logarithm. We briefly describe this approach and apply it here. In the case of finitely many degrees of freedom, the eigenvalues \(\lambda _i\) of the reduced density matrix lie in the interval [0, 1] and satisfy \(\sum _i\lambda _i=1\). This means the sum \(Tr(\rho ^n)=\sum _i\lambda _i^n\) converges. This statement is true for any \(n>1\), even when n is not an integer. Thus, the derivative of \(Tr(\rho ^n)\) with respect to n exists. It can be shown that

where \(H_S^{(n)}\) is the R\(\acute{e}\)nyi entropy, defined as

The replica trick calls for calculating \(\rho _S^n\) for integers n first and then analytically continuing to real number n. In this way, calculation of the von Neumann entropy is turned into the calculation of \(Tr(\rho _S^n)\). But first, we have to construct \(\rho _S^n\) from the path integral version of reduced density matrix element in (22).
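The following small numerical check uses the standard replica relations \(H = -\partial _n Tr(\rho ^n)|_{n=1} = \lim _{n\rightarrow 1} H^{(n)}\), with \(H^{(n)} = \ln Tr(\rho ^n)/(1-n)\). It assumes a hypothetical spectrum \(\{0.5, 0.3, 0.2\}\) for \(\rho _S\). Finite differences stand in for the analytic continuation in n. Both routes approach \(-\sum _i\lambda _i\ln \lambda _i\).

```python
import numpy as np

def trace_rho_n(evals, n):
    """Tr(rho^n) = sum_i lambda_i^n, well defined for real n > 0."""
    return np.sum(evals ** n)

evals = np.array([0.5, 0.3, 0.2])                    # hypothetical spectrum of rho_S
h = 1e-5
# Replica route: H = -d/dn Tr(rho^n) at n = 1 (central finite difference).
H_replica = -(trace_rho_n(evals, 1 + h) - trace_rho_n(evals, 1 - h)) / (2 * h)
# Renyi route: H^(n) = ln Tr(rho^n) / (1 - n), evaluated close to n = 1.
n = 1 + 1e-6
H_renyi = np.log(trace_rho_n(evals, n)) / (1 - n)
H_direct = -np.sum(evals * np.log(evals))            # definition via the logarithm
print(H_replica, H_renyi, H_direct)
```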

\(\rho _S^n\) is the product of n copies of the same density matrix at time \(t_b\), i.e., \(\rho _S^n = \rho _S^{(1)} \rho _S^{(2)} \ldots \rho _S^{(n)}\). To simplify the notation, we drop the subscript S. Since all calculations are at time \(t_b\), we also drop the subscript b in Eq. (22). Denote the element of the ith copy of the reduced density matrix as \(\rho (x^{(i)}_-, x^{(i)}_+)\), where \(x^{(i)}_- = x^{(i)}_L(t_b)\) and \(x^{(i)}_+ = x^{(i)}_R(t_b)\) are two different positions at time \(t_b\), and \(x^{(i)}_L(t)\) and \(x^{(i)}_R(t)\) are two different trajectories used to perform the path integral for the ith copy of the reduced density matrix element as defined in (22). With this notation, the matrix element of \(\rho _S^n\) is

The delta function is introduced in the calculation above to ensure that when multiplying two matrices, the second index of the first matrix element and first index of the second matrix element are identical in the integral. From (51), we find the trace of \(\rho ^n\) is

where \(mod(i, n)=i\) for \(i<n\) and \(mod(i, n)=0\) for \(i=n\). To simplify the notation further, we denote \(\Delta _{+-}^{(i)} = \delta (x^{(i)}_+ - x^{(mod(i, n)+1)}_-)\), \(dx_{+-}^{(i)}=dx^{(i)}_+dx^{(mod(i,n)+1)}_-\), and \(Z(n)=Tr(\rho ^n)\). The above equation can then be rewritten in a more compact form
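On a lattice, the delta functions in this construction become Kronecker deltas. The following sketch sums the product of n copies over all 2n indices \(x^{(i)}_-, x^{(i)}_+\) and keeps only the terms allowed by \(x^{(i)}_+ = x^{(mod(i,n)+1)}_-\). It then checks that the result equals the trace of the matrix power. The helper names and the random test matrix are hypothetical.

```python
import itertools
import numpy as np

def mod_next(i, n):
    """1-based index of the copy glued after copy i: mod(i, n) + 1 as defined in the text."""
    return (i % n) + 1

def trace_rho_n_glued(rho, n):
    """Tr(rho^n) as a sum over lattice indices x_-^(i), x_+^(i), i = 1..n, with Kronecker
    deltas standing in for delta(x_+^(i) - x_-^(mod(i,n)+1))."""
    d = rho.shape[0]
    total = 0.0 + 0.0j
    for xs in itertools.product(range(d), repeat=2 * n):
        x_minus = {i: xs[2 * (i - 1)] for i in range(1, n + 1)}
        x_plus = {i: xs[2 * (i - 1) + 1] for i in range(1, n + 1)}
        if all(x_plus[i] == x_minus[mod_next(i, n)] for i in range(1, n + 1)):
            total += np.prod([rho[x_minus[i], x_plus[i]] for i in range(1, n + 1)])
    return total

rng = np.random.default_rng(3)
M = rng.normal(size=(3, 3)) + 1j * rng.normal(size=(3, 3))
rho = M @ M.conj().T
rho /= np.trace(rho).real
print(np.isclose(trace_rho_n_glued(rho, 3), np.trace(np.linalg.matrix_power(rho, 3))))   # True
```

The brute-force sum scales as \(d^{2n}\), which is why it only serves as a check on small lattices.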

Substituting (22) into (53), we get

We have omitted the normalization so far. To remedy this, the normalized version of \(Z(n)=Tr(\rho ^n)\) is written as

where \(Z(1)=Z=Tr(\rho )\) as defined in (22). Once \({{\mathcal {Z}}}(n)\) is calculated, the von Neumann entropy is obtained through

Expression (54) appears very complicated. Let us validate it in the case where there is no interaction between S and A. One would expect no entanglement between S and A in this case, so the entropy should be zero. We can check whether this is indeed the case based on (54). Since there is no interaction between S and A, the influence functional is simply a constant, and (22) simplifies to

where \(\varphi (x_b)\) has been given in (20). Taking trace of the above equation, one gets

This gives

Equation (57) implies that S is in a pure state. Since, by definition, \(\varphi (x_b) = \langle x_b|\varphi \rangle = \int \delta (x-x_b)\varphi (x)dx\), one can rewrite (57) in the form of a pure state,

Multiplying a density matrix that represents a pure state by itself returns the same density matrix, up to the normalization factor. Using the same notation as in (51), we obtain

From this we can deduce that \(\rho ^n = Z^{n-1}\rho\). This gives \(Z(n) = Tr(\rho ^n) = Z^n\), and

It is independent of n, thus

as expected. The von Neumann entropy is non-zero only when there is an interaction between S and A, and the effect of the interaction is captured in the influence functional. A concrete form of the influence functional must be constructed in order to find examples in which the von Neumann entropy is non-zero.
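The pure-state argument can be checked numerically. The sketch assumes a random lattice amplitude for \(\varphi (x_b)\) and a constant influence-functional factor, both hypothetical. The unnormalized \(\rho\) then satisfies \(\rho ^n = Z^{n-1}\rho\), so the normalized ratio \(Z(n)/Z^n\) equals 1 for every n. Its derivative with respect to n, and hence the entropy, vanishes.

```python
import numpy as np

rng = np.random.default_rng(4)
phi = rng.normal(size=6) + 1j * rng.normal(size=6)     # unnormalized phi(x_b) on a 6-site lattice
c = 0.7 - 0.2j                                          # hypothetical constant influence-functional factor
rho = abs(c) ** 2 * np.outer(phi, phi.conj())          # rho(x_b, x_b') proportional to phi(x_b) phi*(x_b')
Z = np.trace(rho).real

for n in (2, 3, 4):
    rho_n = np.linalg.matrix_power(rho, n)
    # rho^n = Z^(n-1) rho, so the normalized trace Z(n) / Z^n is the same for every n
    print(n, np.allclose(rho_n, Z ** (n - 1) * rho), np.trace(rho_n).real / Z ** n)
```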

Discussion and conclusion

The G function

The G function introduced in (28) can be rewritten in terms of the influence functional. To do this, we first rewrite (26) as

Substituting this into (28), we have

Consequently, the normalized reduced density matrix element in (29) becomes

This gives the same result as in Ref.46. However, there is an advantage in using the G function instead of the influence functional F: the complexity of the path integral is entirely captured inside the G function, which makes it mathematically more convenient. This can be shown with the following modified double slit experiment. Suppose that after the electron passes the double slit, there is an electromagnetic field between the double slit and the destination screen. We then need to apply (29) instead of (34) to calculate the reduced density matrix element. In this case, we simply substitute (43) into (29) and obtain

The probability of finding the electron at position \(x_b\) is

From the definition of \(G(x_a, x_b; x'_a, x'_b)\) in (28), it is easy to derive the following property,

When \(x_a=x'_a\) and \(x_b=x'_b\), we have \(G(x_a, x_b; x_a, x_b) = G^*(x_a, x_b; x_a, x_b)\). Thus, \(G(x_a, x_b; x_a, x_b)\) must be a real function. We denote it as \(G_R(x_a, x_b)\). With these properties, (68) can be further simplified as

This is consistent with the requirement that \(p(x_b)\) be a real number. The second term, \(Re[G(x_1, x_b; x_2, x_b)]\), is a quantum interference effect that arises because the initial state after passing through the double slit is a pure state. This interference term also depends on the interaction between the electron and the electromagnetic field. If the electromagnetic field is tunable, tuning it changes the probability distribution \(p(x_b)\) through the interference term in (70). Presumably, the Aharonov−Bohm effect47 can be explained through (70) as well. The detailed calculation will be reported in a separate manuscript.
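The following finite-dimensional sketch illustrates the realness of \(p(x_b)\). As an assumption, G is modeled by a random unitary coupling S to a field with a few levels: the field starts in a fixed level and is traced out at the end. This is a discrete stand-in for the path integral in (28), not its evaluation. The model satisfies the conjugate-swap symmetry \(G(x_a, x_b; x'_a, x'_b) = G^*(x'_a, x'_b; x_a, x_b)\). We take this to be the property referred to above, since it reduces to the realness of \(G_R\) when primed and unprimed arguments coincide. The slit sites are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(5)
d_s, d_e = 5, 3                                         # toy sizes: lattice sites of S, levels of the field
A = rng.normal(size=(d_s * d_e, d_s * d_e)) + 1j * rng.normal(size=(d_s * d_e, d_s * d_e))
U, _ = np.linalg.qr(A)                                  # random unitary on S (x) field, |s e> ordering
W = U.reshape(d_s, d_e, d_s, d_e)[:, :, :, 0]           # W[x_b, e, x_a]: field starts in level 0

# G[x_a, x_b, x_a', x_b'] = sum_e W[x_b, e, x_a] * conj(W[x_b', e, x_a'])
G = np.einsum('bea,cef->abfc', W, W.conj())
print(np.allclose(G, G.transpose(2, 3, 0, 1).conj()))  # conjugate-swap symmetry

# Both slits open: pure state (|x1> + |x2>)/sqrt(2) at hypothetical sites 1 and 3.
i1, i2 = 1, 3
rho_a = np.zeros((d_s, d_s), dtype=complex)
rho_a[np.ix_([i1, i2], [i1, i2])] = 0.5

# p(x_b) = sum over x_a, x_a' of rho_a(x_a, x_a') G(x_a, x_b; x_a', x_b)
p = np.einsum('ac,abcb->b', rho_a, G)

def G_R(a):
    """G(x_a, x_b; x_a, x_b) as a function of x_b; real by the symmetry above."""
    return np.diagonal(G[a, :, a, :]).real

p_formula = 0.5 * (G_R(i1) + G_R(i2) + 2 * np.diagonal(G[i1, :, i2, :]).real)
print(np.allclose(p.imag, 0), np.allclose(p.real, p_formula))
```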

Influence functional and entanglement entropy

In “The Von Neumann entropy”, we showed that when there is no interaction, the influence functional is a constant, and therefore the entanglement entropy is zero. We can relax this condition to include interaction while still asking whether there is entanglement. Suppose the influence functional can be decomposed into the following product form,

Such a form of influence functional satisfies the rule42 \(F(x(t), x'(t)) = F^*(x'(t), x(t))\). Equation (71) implies that the entanglement entropy is still zero even though there is interaction between S and A. The reason is that the reduced density matrix element can still be written in the form of (57), but with

As long as (57) is valid, S is in a pure state, and therefore the reasoning from (57) to (63) applies here.

We now examine the entanglement entropy for some general forms of influence functional. The most general exponential functional in linear form is42

where g(t) is a real function. Clearly this form satisfies the condition specified in (71) since we can take \(f(x(t)) = exp[i\int x(t)g(t)dt]\) and \(f(x'(t)) = exp[i\int x'(t)g(t)dt]\). The entanglement entropy is zero with this form of influence functional.

On the other hand, the most general Gaussian influence functional is42

where \(\alpha (t, t')\) is an arbitrary complex function defined only for \(t>t'\). This form of influence functional cannot be decomposed to satisfy the condition specified in (71), so the entanglement entropy may be non-zero in this case. It would be of interest to study the influence functional of actual physical setups and to calculate the entanglement entropy explicitly.
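The following is a lattice sketch of this criterion. It rests on a simplifying assumption of ours: the influence factor acts only on the end points, \(\rho _F(x,x') = \varphi (x)\varphi ^*(x')F(x,x')\), rather than being a functional of whole paths. A product factor \(f(x)f^*(x')\) with \(f=e^{igx}\), an end-point version of the linear form above, keeps the state pure. A Gaussian decoherence-type factor \(e^{-\gamma (x-x')^2}\) does not factorize and yields a non-zero entropy. The grid, \(\varphi\), g and \(\gamma\) are hypothetical.

```python
import numpy as np

def entropy(rho):
    """Von Neumann entropy of rho / Tr(rho)."""
    lam = np.linalg.eigvalsh(rho / np.trace(rho).real)
    lam = lam[lam > 1e-12]
    return float(-np.sum(lam * np.log(lam)))

x = np.linspace(-3, 3, 61)
phi = np.exp(-x ** 2 / 2) * np.exp(1j * 0.8 * x)      # hypothetical pure-state amplitude
rho0 = np.outer(phi, phi.conj())                      # phi(x) phi*(x')

# Product-form factor F(x, x') = f(x) f*(x') with a linear phase f = exp(i g x).
g = 1.7
f = np.exp(1j * g * x)
F_prod = np.outer(f, f.conj())
# Non-factorizable Gaussian factor exp(-gamma (x - x')^2), a decoherence-type kernel.
gamma = 0.5
F_gauss = np.exp(-gamma * (x[:, None] - x[None, :]) ** 2)

# Element-wise products: rho_F(x, x') = rho0(x, x') F(x, x').
print(entropy(rho0 * F_prod), entropy(rho0 * F_gauss))
```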

Compared to the transactional interpretation

After our initial relational formulation of quantum mechanics36, it was brought to our attention that the bidirectional measurement process, which is important in the derivation of the measurement probability, appears to share some ideas with the transaction model48. In particular, the transaction model requires a handshake between a retarded “offer wave” from an emitter and an advanced “confirmation wave” from an absorber to complete a transaction in a quantum event. This is a bidirectional process. While it is encouraging that the bidirectional nature of a quantum measurement event has been recognized in the transaction model, there are several fundamental differences between the transaction model and the bidirectional measurement framework presented here. First, the transaction model considers the offer wave and the confirmation wave to be real physical waves. In our framework, we do not assume that such waves exist. Instead, probing and responding are just two aspects of a measurement event, and we require the probability calculation to faithfully model this bidirectional process. Second, in the transaction model, the randomness of measurement outcomes is due to the existence of different potential future absorbers, so the randomness in quantum mechanics depends on the existence of absorbers. There is no such assumption in our framework. Third, the transaction model derives that the amplitude of the confirmation wave at the emitter is proportional to the modulus square of the amplitude of the offer wave, which is related to the probability of completing a transaction with the absorber. This appears ambiguous, since it suggests that the confirmation wave is also a probability wave. In our formulation, we focus only on how the measurement probability is calculated, and we state clearly that the wave function is just a mathematical tool.

Outlook of the relational formulation

The framework for calculating the measurement probability in “Measurement probability” is the key to our reformulation. However, it is essentially an operational model based on a detailed analysis of the bidirectional measurement process, rather than a derivation from more fundamental physical principles. In particular, there may be a better justification for Eq. (3). The current model serves only as a step toward a deeper understanding of relational quantum mechanics, and it is desirable to continue searching for more fundamental physical principles that justify the calculation of the measurement probability. The fact that \(R^{SA}_{ij}\) is a complex number means that it actually comprises two independent real variables, the modulus and the phase. This implies that more degrees of freedom are needed for a complete description of a quantum event. Stochastic mechanics, for instance, introduces forward and backward velocities instead of a single classical velocity to describe the stochastic dynamics of a point particle. With the additional degree of freedom, two stochastic differential equations for the two velocities are derived. Then, through a mathematical transformation of the two velocity variables in \({\mathbb {R}}\) into one complex variable in \({\mathbb {C}}\), the two differential equations turn into the Schrödinger equation49,50,51,52,53. It will be interesting to investigate whether \(R^{SA}_{ij}\) can be implemented in the context of stochastic mechanics, where we expect \(R^{SA}_{ij}\) to decompose into two independent variables in \({\mathbb {R}}\), each a function of the velocity variables.

As discussed in the introduction, the RQM principle consists of two aspects. First, we need to reformulate quantum mechanics relative to a QRF that can be in a superposition state, and show how quantum theory transforms when switching QRFs. In this aspect, we accept the basic quantum theory as it is, including the Schrödinger equation, Born’s rule, and von Neumann measurement theory, but add the QRF into the formulations and derive the transformation theory for switching QRFs31,32,33. Second, we go deeper and reformulate the basic theory itself from relational properties, but relative to a fixed QRF. Here the fixed QRF is assumed to be in a simple eigenstate. This is what we do in Refs.36,37 and the present work. A complete RQM theory should combine these two aspects. This means reformulating the basic quantum theory from relational properties relative to a quantum reference frame that exhibits superposition behavior. Therefore, a future step is to investigate how the relational probability amplitude matrix should be formulated when the QRF possesses superposition properties, and how this matrix transforms when switching QRFs.

Conclusions

The full implementation of the probability amplitude using the path integral provides a concrete physical picture for the relational formulation of quantum mechanics, which was missing from the initial formulation36. It gives a clearer meaning to the relational probability amplitude. \(R^{SA}_{ij}\) is defined as the sum of the quantity \(e^{iS_p/\hbar }\), where \(S_p\) is the action of the composite system \(S+A\) along a path. Physical interaction between S and A may change \(S_p\), which is the phase of a complex number. Such a definition is consistent with the analysis in “Measurement probability” of the factors that determine the relational probability amplitude. In return, the implementation brings new insight back to the path integral itself by completing the justification of why the measurement probability can be calculated as the modulus square of the probability amplitude.

The path integral implementation allows us to develop formulations for some interesting physical processes and concepts. For instance, we can describe the interaction history between the measured system and a series of measuring systems or an environment. This confirms the idea that a quantum state essentially encodes the information of its previous interaction history11. A more interesting application of the coordinate representation of the reduced density matrix is the method for calculating entanglement entropy using the path integral approach. This potentially allows us to calculate the entanglement entropy of a physical system that interacts with classical fields, such as an electron in an electromagnetic field. Section “Influence functional and entanglement entropy” gives a criterion, based on the influence functional, for whether there is entanglement between the system and the external environment.

The present work significantly extends the initial formulation of RQM36 on several fronts. In addition to the concrete path integral implementation of the relational probability amplitude, we also provide a much clearer explanation of the framework for calculating the measurement probability from the bidirectional measurement process, as discussed in “Measurement probability”. At the conceptual level, a thorough analysis of the two aspects of relational quantum mechanics connects this work with QRF theories, which are currently under active investigation. As a result, one can conceive the next step toward a full relational formulation, as suggested in “Outlook of the relational formulation”.

References36,37 and this paper together show that quantum mechanics can be constructed by shifting the starting point from the independent properties of a quantum system to the relational properties between a measured quantum system and a measuring quantum system. In essence, quantum mechanics demands a new set of rules for calculating the probability of a potential outcome from the physical interaction in a quantum measurement. Although the bidirectional measurement process is still an operational model rather than one derived from first principles, we hope these reformulation efforts are a step towards a better understanding of quantum mechanics.

References

Bohr, N. Quantum mechanics and physical reality. Nature 136, 65 (1935).

Bohr, N. Can quantum-mechanical description of physical reality be considered complete? Phys. Rev. 48, 696–702 (1935).

Jammer, M. The Philosophy of Quantum Mechanics: The Interpretations of Quantum Mechanics in Historical Perspective, Chapter 6 (Wiley-Interscience, 1974).

Everett, H. “Relative state” formulation of quantum mechanics. Rev. Mod. Phys. 29, 454 (1957).

Wheeler, J. A. Assessment of Everett’s “relative state” formulation of quantum theory. Rev. Mod. Phys. 29, 463 (1957).

DeWitt, B. S. Quantum mechanics and reality. Phys. Today 23, 30 (1970).

Zurek, W. H. Environment-induced superselection rules. Phys. Rev. D 26, 1862 (1982).

Zurek, W. H. Decoherence, einselection, and the quantum origins of the classical. Rev. Mod. Phys. 75, 715 (2003).

Schlosshauer, M. Decoherence, the measurement problem, and interpretation of quantum mechanics. Rev. Mod. Phys. 76, 1267–1305 (2004).

Rovelli, C. Relational quantum mechanics. Int. J. Theor. Phys. 35, 1637–1678 (1996).

Smerlak, M. & Rovelli, C. Relational EPR. Found. Phys. 37, 427–445 (2007).

Höhn, P. A. Toolbox for reconstructing quantum theory from rules on information acquisition. Quantum 1, 38 (2017).

Höhn, P. A. Quantum theory from questions. Phys. Rev. A 95, 012102 (2017) (arXiv:1511.01130v7).

Bene, G. & Dieks, D. A perspectival version of the modal interpretation of quantum mechanics and the origin of macroscopic behavior. Found. Phys. 32(5), 645–671 (2002).

Aharonov, Y. & Susskind, L. Charge superselection rule. Phys. Rev. 155, 1428 (1967).

Aharonov, Y. & Susskind, L. Observability of the sign change of spinors under 2\(\pi\) rotations. Phys. Rev. 158, 1237 (1967).

Aharonov, Y. & Kaufherr, T. Quantum frames of reference. Phys. Rev. D 30(2), 368 (1984).

Palmer, M. C., Girelli, F. & Bartlett, S. D. Changing quantum reference frames. Phys. Rev. A 89(5), 052121 (2014).

Bartlett, S. D., Rudolph, T., Spekkens, R. W. & Turner, P. S. Degradation of a quantum reference frame. New J. Phys. 8(4), 58 (2006).

Poulin, D. & Yard, J. Dynamics of a quantum reference frame. New J. Phys. 9(5), 156 (2007).

Rovelli, C. Quantum reference systems. Class. Quantum Gravity 8(2), 317 (1991).

Poulin, D. Toy model for a relational formulation of quantum theory. Int. J. Theor. Phys. 45(7), 1189–1215 (2006).

Girelli, F. & Poulin, D. Quantum reference frames and deformed symmetries. Phys. Rev. D 77(10), 104012 (2008).

Loveridge, L., Miyadera, T. & Busch, P. Symmetry, reference frames, and relational quantities in quantum mechanics. Found. Phys. 48, 135–198 (2018).

Pienaar, J. A relational approach to quantum reference frames for spins. Preprint at arXiv:1601.07320 (2016).

Angelo, R. M., Brunner, N., Popescu, S., Short, A. & Skrzypczyk, P. Physics within a quantum reference frame. J. Phys. A 44(14), 145304 (2011).

Angelo, R. M. & Ribeiro, A. D. Kinematics and dynamics in noninertial quantum frames of reference. J. Phys. A 45(46), 465306 (2012).

Bartlett, S. D., Rudolph, T. & Spekkens, R. W. Reference frames, superselection rules, and quantum information. Rev. Mod. Phys. 79, 555 (2007).

Gour, G. & Spekkens, R. W. The resource theory of quantum reference frames: manipulations and monotones. New J. Phys. 10(3), 033023 (2008).

Bartlett, S. D., Rudolph, T., Spekkens, R. W. & Turner, P. S. Quantum communication using a bounded-size quantum reference frame. New J. Phys. 11, 063013 (2009).

Giacomini, F., Castro-Ruiz, E. & Brukner, Č. Quantum mechanics and the covariance of physical laws in quantum reference frames. Nat. Commun. 10, 494 (2019).

Vanrietvelde, A., Höhn, P., Giacomini, F. & Castro-Ruiz, E. A change of perspective: switching quantum reference frames via a perspective-neutral framework. Quantum 4, 225 (2020) (arXiv:1809.00556).

Yang, J. M. Switching quantum reference frames for quantum measurement. Quantum 4, 283 (2020) (arXiv:1911.04903v4).

Höhn, P., Smith, A. R. H. & Lock, M. The trinity of relational quantum dynamics. Preprint at arXiv:1912.00033 (2019).

Ballesteros, A., Giacomini, F. & Gubitosi, G. The group structure of dynamical transformations between quantum reference frames. Preprint at arXiv:2012.15769 (2020).

Yang, J. M. A relational formulation of quantum mechanics. Sci. Rep. 8, 13305 (2018) (arXiv:1706.01317).

Yang, J. M. Relational formulation of quantum measurement. Int. J. Theor. Phys. 58(3), 757–785 (2019) (arXiv:1803.04843).

Von Neumann, J. Mathematical Foundations of Quantum Mechanics, Chap. VI (Princeton University Press, Princeton, 1932/1955; translated by R. T. Beyer).

Nielsen, M. A. & Chuang, I. L. Quantum Computation and Quantum Information (Cambridge University Press, 2000).

Horodecki, R., Horodecki, P., Horodecki, M. & Horodecki, K. Quantum entanglement. Rev. Mod. Phys. 81, 865–942 (2009).

Feynman, R. Space-time approach to non-relativistic quantum mechanics. Rev. Mod. Phys. 20, 367 (1948).

Feynman, R. & Hibbs, A. Quantum Mechanics and Path Integrals, emended by D. F. Styer (Dover Publications, 2005).

Holzhey, C., Larsen, F. & Wilczek, F. Geometric and renormalized entropy in conformal field theory. Nucl. Phys. B 424, 443–467 (1994).

Calabrese, P. & Cardy, J. Entanglement entropy and conformal field theory. J. Phys. A 42, 504005 (2009).

Rangamani, M. & Takayanagi, T. Holographic Entanglement Entropy (Springer, 2017).

Feynman, R. & Vernon, F. Jr. The theory of a general quantum system interacting with a linear dissipative system. Ann. Phys. 24, 118–173 (1963).

Aharonov, Y. & Bohm, D. Significance of electromagnetic potentials in quantum theory. Phys. Rev. 115, 485–491 (1959).

Cramer, J. G. The transactional interpretation of quantum mechanics. Rev. Mod. Phys. 58(3), 647 (1986).

Nelson, E. Derivation of the Schrödinger equation from Newtonian mechanics. Phys. Rev. 150, 1079 (1966).

Nelson, E. Quantum Fluctuations (Princeton University Press, 1985).

Yasue, K. Stochastic calculus of variations. J. Funct. Anal. 41, 327–340 (1981).

Guerra, F. & Morato, L. I. Quantization of dynamical systems and stochastic control theory. Phys. Rev. D 27, 1774–1786 (1983).

Yang, J. M. Stochastic quantization based on information measures. Preprint at arXiv:2102.00392 (2021).

Acknowledgements

The author would like to thank the anonymous referees for their valuable comments, which motivated the author to improve the explanation of the measurement probability framework and to clarify the relevance of this work to quantum reference frame theories.

Author information

Contributions

J.M.Y. designed the study, conceived the ideas, performed the mathematical calculation, and wrote the manuscript.

Ethics declarations

Competing interests

The author declares no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Yang, J.M. Path integral implementation of relational quantum mechanics. Sci Rep 11, 8613 (2021). https://doi.org/10.1038/s41598-021-88045-6

DOI: https://doi.org/10.1038/s41598-021-88045-6

- Springer Nature Limited