Abstract

The continuously growing number of COVID-19 cases pressures healthcare services worldwide. Accurate short-term forecasting is thus vital to support country-level policy making. The strategies adopted by countries to combat the pandemic vary, generating different uncertainty levels about the actual number of cases. Accounting for the hierarchical structure of the data and accommodating extra-variability is therefore fundamental. We introduce a new modelling framework to describe the pandemic’s course with great accuracy and provide short-term daily forecasts for every country in the world. We show that our model generates highly accurate forecasts up to seven days ahead and use estimated model components to cluster countries based on recent events. We introduce statistical novelty in terms of modelling the autoregressive parameter as a function of time, increasing predictive power and flexibility to adapt to each country. Our model can also be used to forecast the number of deaths, study the effects of covariates (such as lockdown policies), and generate forecasts for smaller regions within countries. Consequently, it has substantial implications for global planning and decision making. We present forecasts and make all results freely available to any country in the world through an online Shiny dashboard.

Similar content being viewed by others

Introduction

Outbreaks of the COVID-19 pandemic have been causing worldwide socioeconomic and health concerns since December 20191, putting high pressure on healthcare services2. SARS-CoV-2, the causative agent of COVID-19, spreads efficiently and1,3, consequently, the effectiveness of control measures depends on the relationship between epidemiological variables, human behaviour, and government intervention to control the spread of the disease3,4. Different attempts to model the virus outbreaks in many countries have been made, involving mechanistic models5,6, and extensions based on susceptible-infected-recovered (SIR) systems7,8,9. Countries have also put together task forces to work with COVID-19 data and study the direct and indirect impact on the population, economy, banking and insurance, and financial markets2,10,11,12. In addition, funding agencies have put together rapid response calls worldwide for projects that can help to deal with this pandemic. However, further investment is still needed to foment priority research involving SARS-CoV-2, so as to establish high-level coordination of essential, policy-relevant, social and mental health science13,14.

This pandemic is associated with high basic reproduction numbers15,16, spreading with great speed since a significant number of infected individuals remain asymptomatic while still being able to transmit the virus17. Simulation studies18 show that the adoption of mitigation strategies is unlikely to be feasible to avoid going beyond healthcare systems’ capacity limits. Moreover, even if all patients were able to be treated, about 250,000 to over a million deaths are expected in the UK and the US, respectively18. A pressing concern here is how to avoid bringing healthcare systems to a collapse17,18. Knowing how the outbreak is progressing is crucial to predict whether or when this will happen, and therefore to plan and implement measures to reduce the number of cases so as to avoid it.

Policies for reducing the number of infected people, such as social distancing and movement restrictions, have been put in place in many countries, but for many others, a full lockdown may be very difficult (if not impossible) to implement. This also depends heavily on the country’s political leadership, socio-economic reality, and epidemic stage1,19. In this context, accurate short-term forecasting would prove itself invaluable, especially for systems on the brink of collapse and countries whose governments must consider trade-offs between lockdowns and avoiding full economical catastrophe.

The main problem is that not only is this disease new, but there are also many factors acting in concert, resulting in a seemingly unpredictable outbreak progression. Forecasting with great accuracy under these circumstances is very difficult. Here we propose a new modelling framework, based on a state-space hierarchical model, that can generate forecasts with excellent accuracy for up to seven days ahead. To aid policy making and effective implementation of restrictions or reopening measures, we provide all results as an R Shiny Dashboard, including week-long forecasts for every country in the world whose data is collected and made available by the European Centre for Disease Prevention and Control (ECDC).

Results

Our model displayed an excellent predictive performance for short-term forecasting. We validated the model by fitting it to the data up to 18-November-2020, after removing the last seven time points (from 19-November-2020 until 25-November-2020), and compared the forecasted values with the observed ones (Fig. 1A). We observe that it performs very well (Fig. 1B). The concordance correlation coefficient and Pearson correlation coefficient (a measure of precision) remain higher than 0.8, even for the last day ahead forecast. We observed an accuracy close to 1, showing our methodology has a high potential to expand the number of forecast days to more than seven. Interestingly, we observed that Spain reported zero cases on the 22nd and 23rd of November, while the forecast for these days are around 19, 950 (\(\approx 10^{4.3}-1\)). In these circumstances, the forecast could be considered a better depiction of reality than the reported observations.

We carried out this same type of validation study using data up to 18-November-2020, 13-May-2020, 6-May-2020, and up to 29-Apr-2020, and the results were very similar to the ones outlined above, although the validation study carried out in November showed better performance than the validation done in May (see Supplementary Materials). Furthermore, even though performance is expected to fall as the number of days ahead increases, there are still many countries where the forecasted daily number of new cases is very close to the observed one.

(A) Logarithm of the observed \(y_{it}\) versus the forecasted daily number of cases \(y^*_{it}\) for each country, for up to seven days ahead, where each day ahead constitutes one panel. The forecasts were obtained from the autoregressive state-space hierarchical negative binomial model, fitted using data up to 18-November-2020. The first day ahead corresponds to 19-November-2020, and the seventh to 25-November-2020. Each dot represents a country, and the sixteen countries shown in Fig. 2 are represented by blue triangles. We add 1 to the values before taking the logarithm. (B) Observed accuracy, concordance correlation coefficient (CCC) and Pearson correlation (r) between observed (\(y_{it}\)) and forecasted (\(y^*_{it}\)) values for each of the days ahead of 18-November-2020.

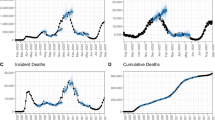

The autoregressive component in the model directly relates to the pandemic behaviour over time for each country (see Supplementary Materials). It is directly proportional to the natural logarithm of the daily number of cases, given what happened in the previous day. Therefore, it is sensitive to changes and can be helpful to detect a possible second wave. See, for example, its behaviour for Australia, Iceland and Ireland—it shows that the outbreak is decaying; however, it may still take time to subside completely (Fig. 2). In Austria, France, Italy, Japan, Serbia, South Korea, Spain and the UK, however, the outbreak is in the middle of another wave, having peaked in some of those countries or aiming at a peak. Hence, these countries must be very cautious when relaxing restrictions. In Brazil and the United States, it seems as if the outbreak is taking a long time to subside.

The estimates for the model parameters suggest that about 12.6% of the number of reported cases can be viewed as contributing to extra variability and possibly may consist of outlying observations (see the model estimates in the Supplementary Materials). These observations may be actual outliers, but this is likely a feature of the data collection process. In many countries, the data recorded for a particular day actually reflects tests that were done over the previous week or even earlier. This generates aggregated-level data, which is prone to exhibiting overdispersion, which is accounted for in our model, but for some observations, this variability is even greater, since they reflect a large number of accumulated suspected cases that were then confirmed. There is also a large variability between countries regarding their underlying autoregressive processes (see the estimates for the variance components in the Supplementary Materials). This corroborates that countries are dealing with the pandemic in different ways and may collect and/or report data differently.

We propose clustering the countries based on the behaviour of their estimated autoregressive parameter over the last 60 days (Fig. 3). This gives governments the opportunity to see which countries have had the most similar recent behaviour of the outbreak and study similar or different measures taken by these other countries to help determine policy. We observe, for example, that Spain, the UK and Russia have been experiencing a very similar situation recently; the same can be said about India and the US. Our R Shiny dashboard displays results in terms of forecasts and country clustering. It can be accessed at https://prof-thiagooliveira.shinyapps.io/COVIDForecast/. Through the dashboard, users can choose to highlight a different number of clusters, which may provide other insights.

Dendrogram representing the hierarchical clustering of countries based on their estimated autoregressive parameters \(\hat{\gamma }_{it}\) from 26-Sep-2020 to 25-Nov-2020. The clustering used Ward’s method and pairwise dynamic time warp distances between the countries’ time series. Each of 10 clusters is represented with a different colour. Country abbreviations: BSES = Bonaire, Saint Eustatius and Saba; IC Japan = Cases on an international conveyance - Japan; CAE = Central African Republic; DRC = Democratic Republic of the Congo; NMI = Northern Mariana Islands; SKN = Saint Kitts and Nevis; SVG = Saint Vincent and the Grenadines; STP = São Tomé and Príncipe; TC Islands = Turks and Caicos Islands; UAE = United Arab Emirates.

Discussion

Our modelling framework allows for forecasting the daily number of new COVID-19 cases for each country and territory for which data has been gathered by the ECDC. It introduces statistical novelty in terms of modelling the autoregressive parameter as a function of time. This makes it highly flexible to adapt to changes in the autoregressive structure of the data over time. In the COVID-19 pandemic, this translates directly into improved predictive power in terms of forecasting future numbers of daily cases. Our objective here is to provide a simple, yet not overly simplistic, framework for country-level decision-making, and we understand this might be easier for smaller countries when compared to nations of continental dimensions, where state-level decision-making should be more effective20. Moreover, the model can be adapted to other types of data, such as the number of deaths, and also be used to obtain forecasts for smaller regions within a country. A natural extension would be to include an additional hierarchical structure taking into account the nested relationship between cities, states, countries and ultimately continents, while also accommodating the spatial autocorrelation. This would allow for capturing the extra-variability introduced by aggregating counts over different cities and districts.

We remark that one must be very careful when looking at the forecasted number of cases. These values must not be looked at in isolation. It is imperative that the entire context is looked at and that we understand how the data is actually generated21. The model will obtain forecasts based on what is being reported, and therefore will be a direct reflection of the data collection process, be it appropriate or not. When data collection is not trustworthy, model forecasts will not be either. Our estimated number of outlying observations of approximately 12.6% is relatively high. When looking at each country’s time series, we observe that countries that display unusual behaviour in terms of more outlying observations are usually related to a poorer model predictive performance as well. This suggests that the data collection process is far from optimal. Therefore, looking at the full context of the data is key to implementing policy based on model forecasts.

As self-criticism, our model may possibly be simplistic in the sense that it relies on the previous observation to predict the next one but does not rely on mechanistic biological processes, which may be accommodated by SEIR-type models5,22,23. These types of models allow for a better understanding of the pandemic in terms of the disease dynamics and are able to provide long-term estimates6. However, as highlighted above, our objective is short-term forecasting, which is a task that is already very difficult. Long-term forecasting is even more difficult, and we believe it could even be speculative when dealing with its implications pragmatically. A more tangible solution to this issue is to combine the short-term forecasting power of our proposed modelling framework with the long-term projections provided by mechanistic models so as to implement policy that can solve pressing problems efficiently, while at the same time looking at how it may affect society in the long run. We acknowledge all models are wrong, including the one presented here. However, we have shown that it provides excellent forecasts for up to a week ahead, and this may be able to help inform the efficacy of country lockdown and/or reopening policies. This is especially useful when using our proposed method coupled with models that take into account the incubation period, variable lag times associated with disease progression, and the estimation of the impact of public health measures. Therefore, the practical relevance of our proposed method is stronger when linked to complementary long-term information.

Even though the model performs very well, we stress the importance of constantly validating the forecasts, since not only can the underlying process change, but also data collection practices change (to either better or worse). Many countries are still not carrying out enough tests, and hence the true number of infections is sub-notified24. This hampers model performance significantly, especially that of biologically realistic models5.

There is a dire need for better quality data21. This is a disease with a very long incubation period (up to 24 days25), with a median value varying from 4.5 to 5.8 days26, which makes it even harder to pinpoint exactly when the virus was actually transmitted from one person to the other. Furthermore, around \(97.5\%\) of those who develop symptoms will do so in between 8.2 and 15.6 days26. The number of reported new cases for today reflects a mixture of infections transmitted at any point in time over the last few weeks. The time testing takes also influences this. One possible alternative is to model excess deaths when compared to averages over previous years27,28. This is an interesting approach, since it can highlight the effects of the pandemic (see, e.g., the insightful visualisations at Our World in Data29, and the Finantial Times30). Again, this is highly dependent on the quality of the data collection process, not only for COVID-19 related deaths but also those arising from different sources. Many different teams of data scientists and statisticians worldwide are developing different approaches to work with COVID-19 data3,9,16,31. It is the duty of each country’s government to collect and provide accurate data. This way, it can be used with the objective of improving healthcare systems and general social wellbeing.

The developed R Shiny Dashboard displays seven-day forecasts for all countries and territories whose data are collected by the ECDC and clustering of countries based on the last 60 days. This can help governments currently implementing or lifting restrictions. It is possible to compare government policies between countries at similar pandemic stages to determine the most effective courses of action. These can then be tailored by each particular government to their own country. The efficiency of measures put in place in each country can also be studied using our modelling framework, since it easily accommodates covariates in the linear predictor. Then, the contribution of these country-specific effects to the overall number of cases can serve as an indicator of how they may be influencing the behaviour of the outbreaks over time.

Government policies are extremely dependent on the reality of each country. It has become clear that there are countries that are well able to withstand a complete lockdown, whereas others cannot cope with the economic downfall19. The issue is not only economic, but also of newly emerging health crises that are not due to COVID-19 lethality alone, but to families relying on day-to-day work to guarantee their food supplies. For these countries, there is a trade-off between avoiding a new evil versus amplifying pre-existing problems or even creating new ones. It is indeed challenging to create a one-size-fits-all plan in such circumstances, which makes it even more vital to strive for better data collection practices.

We hope to be able to contribute to the development of an efficient short-term response to the pandemic for countries whose healthcare systems are at capacity, and countries implementing reopening plans. By providing a means of comparing recent behaviour of the outbreak between countries, we also hope to provide a means to opening dialogue between countries going through a similar stage, and those who have faced similar situations before.

Methods

Data acquisition

The data was obtained from the European Centre for Disease Prevention and Control (ECDC) up to 14 December 2020 (after this date, the ECDC started to report aggregated weekly data; however, the methodology proposed here works for any other data source collecting daily data), and the code is implemented such that it downloads and processes the data from https://www.ecdc.europa.eu/en/geographical-distribution-2019-ncov-cases. We assumed non-available data to be zero prior to the first case being recorded for each country. Whenever the daily recorded data was negative (reflecting previously confirmed cases being discarded), we replaced that information with a zero. This is the case for only 18 out of 71,904 observations as of the 25th of November 2020.

According to the ECDC, the number of cases is based on reports from health authorities worldwide (up to 500 sources), which are screened by epidemiologists for validation prior to being recorded in the ECDC dataset.

Modelling framework

We introduce a class of state-space hierarchical models for overdispersed count time series. Let \(Y_{it}\) be the observed number of newly infected people at country i and time t, with \(i=1,\ldots ,N\) and \(t=1,\ldots ,T\). We model \(Y_{it}\) as a Negative binomial first-order Markov process, with

for \(t=2,\ldots ,T\). The parameterisation used here results in \(\text{ E }(Y_{it}|Y_{i,t-1})=\mu _{it}\) and \(\text{ Var }(Y_{it}|Y_{i,t-1})=\mu _{it}+\mu _{it}^2\psi\). The mean is modelled on the log scale as the sum of an autoregressive component (\(\gamma _{it}\)) and a component that accommodates outliers (\(\Omega _{it}\)), i.e.

To accommodate the temporal correlation in the series, the non-stationary autoregressive process \(\left\{ \gamma _{it} \right\}\) is set up as

where \(\eta _{it}\) is a Gaussian white noise process with mean 0 and variance \(\sigma _{\eta }^2\). Differently from standard AR(1)-type models, here \(\phi _{it}\) is allowed to vary over time through an orthogonal polynomial regression linear predictor using the time as covariate, yielding

where \(P_q(\cdot )\) is the function that produces the orthogonal polynomial of order q, with \(P_0(x)=1\) for any real number x; \(\beta _q\) are the regression coefficients and \(\varvec{b}_{i}\) is the vector of random effects, which are assumed to be normally distributed with mean vector \(\mathbf {0}\) and variance-covariance matrix \(\Sigma _b=\mathrm {diag}\left( {\sigma ^2_{b_0},\ldots ,\sigma ^2_{b_Q}}\right)\).

By assuming \(\phi _{it}\) varying by country over time, we allow for a more flexible autocorrelation function. Iterating (1) we obtain

for \(t = 3,\ldots ,T\). Note that in the particular case where \(\phi _{it}=\phi _{i}=\beta _{0}+b_{0i}\), then \(\gamma _{it}= \phi _{i}^{t-1}\gamma _{i1}+\phi _{i}^{t-2}\eta _{i2}+\phi _{i}^{t-3}\eta _{i3}+ \cdots + \phi _{i}\eta _{it-1}+\eta _{it}\), which is equivalent to a country-specific AR(1) process. On the other hand, if \(\phi _{it}=\phi _{i}=\beta _{0}\), then \(\gamma _{it}= \phi ^{t-1}\gamma _{i1}+\phi ^{t-2}\eta _{i2}+\phi ^{t-3}\eta _{i3}+ \cdots + \phi \eta _{it-1}+\eta _{it}\), which is equivalent to assuming the same autocorrelation parameter for all countries.

Finally, to accommodate extra-variability we introduce the observational-level random effect

where \(\lambda _{it}\sim \text{ Bernoulli }(\pi )\) and \(\omega _{it}\sim \text{ N }(0,\sigma ^2_{\omega })\). When \(\lambda _{it}=1\), then observation \(y_{it}\) is considered to be an outlier, and the extra variability is modelled by \(\sigma ^2_{\omega }\). This can be seen as a mixture component that models the variance associated with outlying observations.

To forecast future observations \(y_{i,t+1}^*\), we use the median of the posterior distribution of \(Y_{i,t+1}|Y_{it}\). This is reasonable for short-term forecasting, since the error accumulates from one time step to the other. We produce forecasts for up to seven days ahead.

We fitted models considering different values for Q. Even though the results for \(Q=3\) showed that all \(\beta _q\) parameters were different from zero when looking at the 95% credible intervals, we opted for \(Q=2\) for the final model fit, since it improves the convergence of the model, as well as avoids overfitting by assuming a large polynomial degree, while still providing the extra flexibility introduced by the autoregressive function (2). This can change, however, as more data becomes available for a large number of time steps.

Model validation

We fitted the model without using the observations from the last seven days and obtained the forecasts \(y_{it}^*\) for each country and day. We then compared the forecasts with the true observations \(y_{it}\) for each day ahead by looking initially at the Pearson correlation between them. We also computed the concordance correlation coefficient32,33, an agreement index that lies between \(-1\) and 1, given by

where \(\mu _{1}=\text{ E }\left( Y_{t}^*\right)\), \(\mu _{2}=\text{ E }\left( Y_{y}\right)\), \(\sigma _{1}^{2}= \text{ Var }\left( Y_{t}^*\right)\), \(\sigma _{2}^{2}= \text{ Var }\left( Y_{t}\right)\), and \(\sigma _{12}=\text{ Cov }\left( Y_{t}^*, Y_{2}\right)\). It can be shown that \(\rho ^{(CCC)}_t=\rho _t C_t\), where \(\rho _t\) is the Pearson correlation coefficient (a measure of precision), and \(C_t\) is the bias corrector factor (a measure of accuracy) at the \(t-\)th day ahead. \(\rho _t\) measures how far each observation deviated from the best-fit line while \(C_b \in [0,1]\) measures how far the best-fit line deviates from the identity line through the origin, defined as \(C_{b}=2\left( v+v^{-1}+u^{2}\right) ^{-1}\), where \(v = \sigma ^2_{1}/\sigma ^2_{2}\) is a scale shift and \(u = (\mu _{1} - \mu _{2}) / \sqrt{\sigma _1\sigma _2}\) is a location shift relative to the scale. When \(C_b=1\) then there is no deviation from the identity line.

Model implementation

The model is estimated using a Bayesian framework, and the prior distributions used are

We used 3 MCMC chains, 2,000 adaptation iterations, 50,000 as burn-in, and 50,000 iterations per chain with a thinning of 25. We assessed convergence by looking at the trace plots, autocorrelation function plots, and the Gelman-Rubin convergence diagnostic34.

All analyses were carried out using R software35, and JAGS36. Model fitting takes approximately 14 hours to run in parallel computing, in a Dell Inspiron 17 7000 with 10th Generation Intel Core i7 processor, 1.80GHz\(\times\)8 processor speed, 16GB RAM plus 20GB of swap space, 64-bit integers, and the platform used is a Linux Mint 19.2 Cinnamon system version 5.2.2-050202-generic.

Clustering

We used the last 60 values of the estimated autoregressive component to perform clustering so as to obtain sets of countries that presented a similar recent behaviour. First, we computed the dissimilarities between the estimated time series \(\varvec{\hat{\gamma }}_i\) for each pair of countries using the dynamic time warp (DTW) distance37,38. Let M be the set of all possible sequences of m pairs preserving the order of observations in the form \(r=((\hat{\gamma }_{i1}, \hat{\gamma }_{i^\prime 1}),\ldots ,(\hat{\gamma }_{im}, \hat{\gamma }_{i^\prime m}))\). Dynamic time warping aims to minimise the distance between the coupled observations \((\hat{\gamma }_{it}, \hat{\gamma }_{i^\prime t})\). The DTW distance may be written as

By using the DTW distance, we are able to recognise similar shapes in time series, even in the presence of shifting and/or scaling38.

Then, we performed hierarchical clustering using the matrix of DTW distances using Ward’s method, aimed at minimising the variability within clusters39, and obtained ten clusters. Finally, we produced a dendrogram of the results of the hierarchical clustering analysis, with each cluster coloured differently so as to aid visualisation.

Code availability

All code and datasets are available at https://github.com/Prof-ThiagoOliveira/covid_forecast.

References

Kassem, A. M. COVID-19: mitigation or suppression?. Arab. J. Gastroenterol. 21, 1–2 (2020).

Goodell, J. W. COVID-19 and finance: agendas for future research. Financ. Res. Lett.https://doi.org/10.1016/j.frl.2020.101512 (2020).

He, X. et al. Temporal dynamics in viral shedding and transmissibility of COVID-19. Nat. Med. 26, 672–676. https://doi.org/10.1038/s41591-020-0869-5 (2020).

West, R., Michie, S., Rubin, G. J. & Amlôt, R. Applying principles of behaviour change to reduce SARS-CoV-2 transmission. Nat. Hum. Behav. 4, 451–459. https://doi.org/10.1038/s41562-020-0887-9 (2020).

Baker, R. E., Peña, J. M., Jayamohan, J. & Jérusalem, A. Mechanistic models versus machine learning, a fight worth fighting for the biological community?. Biol. Lett. 14, 1–4. https://doi.org/10.1098/rsbl.2017.0660 (2018).

Giordano, G. et al. Modelling the COVID-19 epidemic and implementation of population-wide interventions in Italy. Nat. Med.https://doi.org/10.1038/s41591-020-0883-7 (2020).

Bjørnstad, O. N., Shea, K., Krzywinski, M. & Altman, N. Modeling infectious epidemics. Nat. Methodshttps://doi.org/10.1038/s41592-020-0822-z (2020).

Toda, A. A. Susceptible-infected-recovered (SIR) dynamics of COVID-19 and economic impact. arXiv:2003.11221v2 1–15 (2020). 2003.11221.

Calafiore, G. C., Novara, C. & Possieri, C. A Modified SIR model for the COVID-19 contagion in Italy. arXiv:2003.14391v1 1–6 (2020). 2003.14391.

Altena, E. et al. Dealing with sleep problems during home confinement due to the COVID-19 outbreak: practical recommendations from a task force of the European CBT-I Academy. J. Sleep Res.https://doi.org/10.1111/jsr.13052 (2020).

Cohen, J. & Kupferschmidt, K. Countries test tactics in ‘war’ against COVID-19. Science 367, 1287–1288. https://doi.org/10.1126/science.367.6484.1287 (2020).

Perkins, G. D. et al. International Liaison committee on resuscitation: COVID-19 consensus on science, treatment recommendations and task force insights. Resuscitation 151, 145–147. https://doi.org/10.1016/j.resuscitation.2020.04.035 (2020).

Holmes, E. A. et al. Multidisciplinary research priorities for the COVID-19 pandemic: a call for action for mental health science. Lancet Psychiatry 7, 547–560. https://doi.org/10.1016/S2215-0366(20)30168-1 (2020).

Wilson, M. P. & Jack, A. S. Coronavirus disease (COVID-19) in neurology and neurosurgery: a scoping review of the early literature. Clin. Neurol. Neurosurghttps://doi.org/10.1016/j.clineuro.2020.105866 (2020).

Lai, C. C., Shih, T. P., Ko, W. C., Tang, H. J. & Hsueh, P. R. Severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2) and coronavirus disease-2019 (COVID-19): the epidemic and the challenges. Int. J. Antimicrob. Agentshttps://doi.org/10.1016/j.ijantimicag.2020.105924 (2020).

Wang, H. et al. Phase-adjusted estimation of the number of Coronavirus Disease 2019 cases in Wuhan, China. Cell Discov. 6, 4–11. https://doi.org/10.1038/s41421-020-0148-0 (2020).

Muniyappa, R. & Gubbi, S. COVID-19 pandemic, Corona Viruses, and diabetes mellitus. Am. J. Physiol. Endocrinol. Metab. 318, 736–741. https://doi.org/10.1152/ajpendo.00124.2020 (2020).

Ferguson, N. M. et al. Impact of non-pharmaceutical interventions (NPIs) to reduce COVID-19 mortality and healthcare demand. Imp. Coll. 10(25561/77482), 3–20 (2020).

Mehtar, S. et al. Limiting the spread of COVID-19 in Africa: one size mitigation strategies do not fit all countries. Lancet Glob. Healthhttps://doi.org/10.1016/S2214-109X(20)30212-6 (2020).

White, E. R. & Hébert-Dufresne, L. State-level variation of initial COVID-19 dynamics in the United States: the role of local government interventions. medRxiv 1–14. https://doi.org/10.1101/2020.04.14.20065318 (2020).

Vespignani, A. et al. Modelling COVID-19. Nat. Rev. Phys.https://doi.org/10.1038/s42254-020-0178-4 (2020).

Satsuma, J., Willox, R., Ramani, A., Grammaticos, B. & Carstea, A. S. Extending the SIR epidemic model. Phys. A 336, 369–375. https://doi.org/10.1016/j.physa.2003.12.035 (2004).

De La Sen, M., Alonso-Quesada, S. & Ibeas, A. On the stability of an SEIR epidemic model with distributed time-delay and a general class of feedback vaccination rules. Appl. Math. Comput. 270, 953–976. https://doi.org/10.1016/j.amc.2015.08.099 (2015).

Li, R. et al. Substantial undocumented infection facilitates the rapid dissemination of novel coronavirus (SARS-CoV2). Science 368, 489–493. https://doi.org/10.1126/science.abb3221 (2020).

Guan, W. et al. Clinical characteristics of coronavirus disease 2019 in China. N. Engl. J. Med. 382, 1708–1720. https://doi.org/10.1056/NEJMoa2002032 (2020).

Lauer, S. A. et al. (COVID-19) From publicly reported confirmed cases: estimation and application. Ann. Int. Med.https://doi.org/10.7326/M20-0504 (2019).

Kysely, J., Pokorna, L., Kyncl, J. & Kriz, B. Excess cardiovascular mortality associated with cold spells in the Czech Republic. BMC Public Health 9, 1–11. https://doi.org/10.1186/1471-2458-9-19 (2009).

Nunes, B. et al. Excess mortality associated with influenza epidemics in Portugal, 1980 to 2004. PLoS ONEhttps://doi.org/10.1371/journal.pone.0020661 (1980).

Ritchie, H., Roser, M., Ortiz-Ospina, E. & Hasell, J. Coronavirus andemic (COVID-19) (2020).

FT Visual & Data Journalism Team. Coronavirus tracked: the latest figures as countries fight to contain the pandemic (2020).

Petropoulos, F. & Makridakis, S. Forecasting the novel coronavirus COVID-19. PLoS ONE 15, 1–8. https://doi.org/10.1371/journal.pone.0231236 (2020).

Lin, L. I. A concordance correlation coefficient to evaluate reproducibility. Biometrics 45, 255–268 (1989).

Oliveira, T., Hinde, J. & Zocchi, S. Longitudinal concordance correlation function based on variance components: an application in fruit color analysis. J. Agric., Biol., Environ. Stat.https://doi.org/10.1007/s13253-018-0321-1 (2018).

Gelman, A. & Rubin, D. B. Inference from iterative simulation using multiple sequences. Stat. Sci. 7, 457–472 (1992).

R Core Team. R: A Language and Environment for Statistical Computing (R Foundation for Statistical Computing, 2020).

Denwood, M. J. runjags: An R package providing interface utilities, model templates, parallel computing methods and additional distributions for MCMC models in JAGS. J. Stat. Softw.https://doi.org/10.18637/jss.v071.i09 (2016).

Muller, M. Dynamic Time Warping, vol. 2, 69–84 (Springer, 2007).

Montero, P. & Vilar, J. A. TSclust: an R package for time series clustering. J. Stat. Softw. 62, 1–43. https://doi.org/10.18637/jss.v062.i01 (2014).

Murtagh, F. & Legendre, P. Ward’s hierarchical agglomerative clustering method: which algorithms implement ward’s criterion?. J. Classif. 31, 274–295 (2014).

Acknowledgements

We would like to thank Prof. John Hinde for helpful comments when preparing this manuscript. T.P.O. received funding from the European Union's Horizon 2020 research and innovation programme under the Marie Skłodowska-Curie grant agreement No. 801215. T.P.O. gratefully acknowledges funding from the Roslin Institute Strategic Programme (ISP) grants.

Author information

Authors and Affiliations

Contributions

T.P.O. and R.A.M. conceived and implemented the modelling framework, and wrote the manuscript. T.P.O. produced the Shiny visualisation app.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Oliveira, T.P., Moral, R.A. Global short-term forecasting of COVID-19 cases. Sci Rep 11, 7555 (2021). https://doi.org/10.1038/s41598-021-87230-x

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-021-87230-x

- Springer Nature Limited

This article is cited by

-

Deep learning forecasting using time-varying parameters of the SIRD model for Covid-19

Scientific Reports (2022)

-

COVID-19: average time from infection to death in Poland, USA, India and Germany

Quality & Quantity (2022)

-

Modelling menstrual cycle length in athletes using state-space models

Scientific Reports (2021)