Abstract

The rate of mRNA translation depends on the initiation, elongation, and termination rates of ribosomes along the mRNA. These rates depend on many “local” factors, such as the abundance of free ribosomes and tRNA molecules in the vicinity of the mRNA molecule. All these factors are stochastic, and their experimental measurements are also noisy. An important question is how protein production in the cell is affected by this considerable variability. We develop a new theoretical framework for addressing this question. It models the rates as independent and identically distributed random variables and uses tools from random matrix theory to analyze the steady-state production rate. The analysis reveals a principle of universality: the average protein production rate depends only on the minimal value of the set of possible values that the random variable may attain. This may explain how total protein production can be stabilized despite the overwhelming stochasticity underlying cellular processes.


Introduction

During translation, complex molecular machines called ribosomes scan the mRNA codon by codon. The ribosome links amino acids together in the order specified by the codons to form a polypeptide chain. For each codon, the ribosome “waits” for a transfer RNA (tRNA) molecule that matches the codon and carries the corresponding amino acid, which is then incorporated into the growing polypeptide chain. When the ribosome reaches a stop codon encoding a termination signal, it detaches from the mRNA and the complete amino-acid chain is released.

Several ribosomes may read the same mRNA molecule simultaneously, and this form of “pipelining” increases the protein production rate. The dynamics of ribosome flow along the mRNA strongly affect both the production rate and the correct folding of the protein. A ribosome that is stalled for a long time may cause a “traffic jam” of ribosomes behind it and, consequently, depletion of the pool of free ribosomes. Mutations affecting protein translation rates may be associated with various diseases1, as well as with viral infection efficiency2.

Translation is a central metabolic process that consumes most of the energy in the cell3,4,5,6,7, so cells operate sophisticated regulation mechanisms to avoid and resolve ribosome traffic jams8,9,10,11. These issues have been studied extensively in recent years using various computational and mathematical models12. Further evidence of the importance of ribosome flow is that about half of the currently existing antibiotics target the bacterial ribosome by interfering with translation initiation, elongation, termination, and other regulatory mechanisms13,14. For example, aminoglycosides inhibit bacterial protein synthesis by binding to the 30S ribosomal subunit and stabilizing mismatched codon–anticodon pairs, which leads to mistranslation15. Understanding the mechanisms of ribosome-targeting antibiotics and the molecular mechanisms of bacterial resistance is crucial for developing new drugs that can effectively inhibit the synthesis of bacterial proteins16.

In summary, an important problem is to understand the dynamics of ribosome flow along the mRNA and how they affect the protein production rate. As in many cellular processes, a crucial puzzle is how proper functioning is maintained despite the large stochasticity in the cell, and how it is adjusted to the signals that the cell receives and to resource availability17,18. Translation and its measurement are affected by various types of stochasticity (see the review in19), as illustrated in Fig. 1. Specifically:

- All the chemical reactions related to the process are, of course, stochastic. So are factors such as cognate tRNA availability and the resulting translation rates (e.g., during the cell cycle), the structural accessibility of the \(5'\)-end to translation factors, the spatial organization of mRNAs inside the cell, and the existence of designated “translation factories”20,21,22,23.

- Different cells in a population are not identical, for example in the number of mRNA molecules and ribosomes per cell, and in many other aspects24.

- It was recently suggested that the ribosomes themselves are not identical25.

- The stochastic diffusion of translation substrates plays a key role in determining translation rates26. The mRNA molecules of the same gene diffuse (either actively or passively) to different regions in the cell, and this affects their translation properties27.

- The experimental approaches for measuring translation introduce various types of noise28,29. Thus, the translation parameters inferred from these data are also noisy.

- Processes such as mRNA methylation can affect all aspects of translation19,30.

- There are couplings between the translation process and other stochastic gene expression steps19,31, such as transcription32, mRNA stability33,34, and interaction with miRNA35,36 and RNA-binding proteins19.

A recent paper analyzes translation and concludes that “randomness, on average, plays a greater role than any non-random contributions to synthesis time”37.

Here, we develop a theoretical approach to analyze translation subject to spatial variation. It combines a deterministic computational model, called the ribosome flow model (RFM), with tools from random matrix theory. We model the variation in the initiation, elongation, and exit rates across several copies of the same mRNA by assuming that the rates in the RFM are independent and identically distributed (i.i.d.) random variables. That is, each random variable has the same probability distribution as the others, and all are mutually independent. This assumption is of course restrictive, and it is needed to obtain our closed-form theoretical results. Yet, it seems to have some empirical justification. For example, away from the ends of the coding sequence, the translation rates tend to be independent38. In addition, various noise sources (such as NGS noise) tend to be independent along the mRNA. Furthermore, in the "Generalizations" section we describe several generalizations in which the i.i.d. assumption can be relaxed.

We believe that our approach can be used to tackle various levels of stochasticity and uncertainty in translation and its measurements. Our main results (Theorems 1 and 2 below) reveal a new principle of universality. As the length of the mRNA molecule increases, the overall steady-state protein production rate converges, with probability one, to a constant value that depends only on the minimal possible value of the random variables. Roughly speaking, this suggests that much of the variability is “filtered out”. This may explain how the cell overcomes the variations in the many stochastic factors mentioned above.

The next section reviews the RFM and those of its dynamical properties that are relevant in our context. This is followed by our theoretical results. The "Generalizations" section describes several generalizations. The final section concludes and describes possible directions for further research.

Ribosome flow model (RFM)

Mathematical models of the flow of “biological particles” like RNA polymerase, ribosomes, and molecular motors are becoming increasingly important, as powerful experimental techniques provide rich data on the dynamics of such machines inside the cell39,40,41, sometimes in real time42. Computational models are particularly important in fields like synthetic biology and biotechnology, as they can provide qualitative and quantitative testable predictions on the effects of various manipulations of the genetic machinery. They are also helpful for understanding the evolution of cells and their biophysics43.

The standard computational model for the flow of biological particles is the asymmetric simple exclusion process (ASEP)44,45,46,47,48. This is a fundamental model from nonequilibrium statistical mechanics describing particles that hop randomly from a site to a neighboring site along an ordered (usually 1D) lattice. Each site may be either free or occupied by a single particle, and hops may take place only to a free target site, representing the fact that the particles have volume and cannot overtake one another. This simple exclusion principle generates an indirect coupling between the moving particles. The motion is assumed to be directionally asymmetric, i.e., there is some preferred direction of motion. In the totally asymmetric simple exclusion process (TASEP) the motion is unidirectional.
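The following minimal Monte Carlo sketch makes the exclusion mechanism concrete. It simulates the totally asymmetric variant (TASEP) with open boundaries. It assumes random-sequential updates, an entry probability alpha, an exit probability beta, and unit hop rate in the bulk. The function name `tasep_current` and all parameter values are hypothetical and serve only as an illustration.

```python
import numpy as np


def tasep_current(L, alpha, beta, sweeps=2500, burn_in=500, seed=0):
    """Time-averaged exit current of an open TASEP with L sites.

    Random-sequential update: each micro-step picks one of the L + 1 bonds
    uniformly at random. Bond 0 is the entry (accepted with probability
    alpha), bond L is the exit (probability beta), and bond b in 1..L-1
    moves a particle from site b-1 to site b. L + 1 micro-steps form one
    sweep, i.e. one unit of time, so alpha and beta must lie in [0, 1].
    """
    rng = np.random.default_rng(seed)
    occupied = np.zeros(L, dtype=bool)
    exits = 0
    for sweep in range(sweeps):
        for b in rng.integers(0, L + 1, size=L + 1):
            if b == 0:
                # Entry is possible only if the first site is free.
                if not occupied[0] and rng.random() < alpha:
                    occupied[0] = True
            elif b == L:
                # A particle on the last site leaves the lattice.
                if occupied[L - 1] and rng.random() < beta:
                    occupied[L - 1] = False
                    if sweep >= burn_in:
                        exits += 1
            elif occupied[b - 1] and not occupied[b]:
                # Hop forward only into a free site (exclusion principle).
                occupied[b - 1] = False
                occupied[b] = True
    return exits / (sweeps - burn_in)


J = tasep_current(L=50, alpha=1.0, beta=1.0)
print(f"estimated current: {J:.3f}")
```

With \(\alpha=\beta=1\), the system is in the maximal-current phase. For large L, the estimate should therefore lie close to, and slightly above, 1/4.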

TASEP and its variants have been used extensively to model and analyze natural and artificial processes including ribosome flow, vehicular and pedestrian traffic, molecular motor traffic, the movement of ants along a trail, and more43,49,50. However, due to the intricate indirect interactions between the hopping particles, analysis of TASEP is difficult, and closed-form results exist only in some special cases51,52.

The RFM53 is a deterministic, nonlinear, continuous-time ODE model that can be derived via a dynamic mean-field approximation of TASEP54. It is amenable to rigorous analysis using tools from systems and control theory. The RFM includes n sites ordered along a 1D chain. The normalized density (or occupancy level) of site i at time t is described by a state variable \(x_i(t)\) that takes values in the interval [0, 1]. Here \(x_i(t)=0\) [\(x_i(t)=1\)] indicates that site i is completely free [full] at time t. The transition from site i to site \(i+1\) is regulated by a parameter \(\lambda _i>0\). In particular, \(\lambda _0\) [\(\lambda _n\)] controls the initiation [termination] rate into [from] the chain. The rate at which particles exit the chain at time t is a scalar denoted by R(t) (see Fig. 2).

When modeling the flow of biological machines like ribosomes, the chain models an mRNA molecule coarse-grained into n sites. Each site is a codon or a group of consecutive codons, and R(t) is the rate at which ribosomes detach from the mRNA, i.e., the protein production rate. The values of the \(\lambda _i\)s encapsulate many biophysical properties, such as the number of available free ribosomes, the nucleotide context surrounding initiation codons, the codon composition of each site and the corresponding tRNA availability53,55,56. Note that these factors may vary across locations inside the cell.

Unidirectional flow along an n site RFM. State variable \(x_i(t)\in [0,1]\) represents the normalized density at site i at time t. The parameter \(\lambda _i>0\) controls the transition rate from site i to site \(i+1\), with \(\lambda _0\) [\(\lambda _n\)] controlling the initiation [termination] rate. \(R(t)\) is the output rate from the chain at time t.

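The dynamics of the RFM are described by n nonlinear first-order ordinary differential equations:

\[ \dot{x}_i(t) = \lambda _{i-1} x_{i-1}(t)\left( 1 - x_i(t)\right) - \lambda _i x_i(t)\left( 1 - x_{i+1}(t)\right) , \quad i=1,\dots ,n, \tag{1} \]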

where we define \(x_0(t): = 1\) and \(x_{n+1}(t) : = 0\). Every \(x_i\) is dimensionless, and every rate \(\lambda _i\) has units of \(1/{\text {time}}\). Eq. (1) can be explained as follows. The flow of particles from site i to site \(i+1\) is \(\lambda _{i} x_{i}(t) (1 - x_{i+1}(t) )\). This flow is proportional to \(x_i(t)\), i.e. it increases with the occupancy level at site i, and to \((1-x_{i+1}(t))\), i.e. it decreases as site \(i+1\) becomes fuller. This is a “soft” version of the simple exclusion principle. The maximal possible flow from site i to site \(i+1\) is the transition rate \(\lambda _i\). Eq. (1) is thus a simple balance law: the change in the density \(x_i\) equals the flow entering site i from site \(i-1\), minus the flow exiting from site i to site \(i+1\). The output rate from the last site at time t is \({R} (t):=\lambda _n x_n(t)\).
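Eq. (1) translates directly into code. The Python sketch below assumes NumPy and SciPy are available; the rate values are hypothetical. The right-hand side is the inflow \(\lambda _{i-1}x_{i-1}(1-x_i)\) minus the outflow \(\lambda _i x_i(1-x_{i+1})\), computed with the padding \(x_0:=1\) and \(x_{n+1}:=0\). The system is then integrated from an empty chain.

```python
import numpy as np
from scipy.integrate import solve_ivp


def rfm_rhs(t, x, lam):
    """Right-hand side of the RFM, Eq. (1), for rates lam = (lam_0, ..., lam_n)."""
    padded = np.concatenate(([1.0], x, [0.0]))  # x_0 := 1, x_{n+1} := 0
    # flow[i] = lam_i * x_i * (1 - x_{i+1}) is the flow from site i to site i+1
    flow = lam * padded[:-1] * (1.0 - padded[1:])
    return flow[:-1] - flow[1:]  # inflow minus outflow for sites 1..n


lam = np.array([1.0, 0.8, 1.5, 0.6, 1.2, 2.0])  # hypothetical rates, n = 5
sol = solve_ivp(rfm_rhs, (0.0, 500.0), np.zeros(lam.size - 1), args=(lam,),
                rtol=1e-9, atol=1e-12)
x_final = sol.y[:, -1]
R_final = lam[-1] * x_final[-1]  # output rate R(t) = lam_n x_n(t)
print("densities:", np.round(x_final, 4), "R:", round(R_final, 4))
```

At the end of the run, all the flows \(\lambda _i x_i(1-x_{i+1})\) should be (numerically) equal to the output rate.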

An important property of the RFM (inherited from TASEP) is that it can be used to model and analyze the formation of “traffic jams” of particles along the chain. It was shown that traffic jams during translation are common even under standard conditions57. Indeed, suppose that there exists an index j such that \(\lambda _j\) is much smaller than all the other rates. Then Eq. (1) gives

\[ \dot{x}_j(t) \approx \lambda _{j-1} x_{j-1}(t)\left( 1 - x_j(t)\right) . \]

This term is positive when \(x\in (0,1)^n\), so we can expect site j to fill up, i.e., \(x_j(t) \rightarrow 1\). Since then \(1-x_j(t)\approx 0\), using Eq. (1) again gives

\[ \dot{x}_{j-1}(t) \approx \lambda _{j-2} x_{j-2}(t)\left( 1 - x_{j-1}(t)\right) , \]

suggesting that site \(j-1\) will also fill up. In this way, a traffic jam of particles is formed “behind” the bottleneck rate \(\lambda _j\).
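The heuristic argument above can be checked numerically. This hypothetical sketch uses n = 10 and unit rates except for a single bottleneck \(\lambda _6 = 0.01\); these values are chosen for illustration only. It integrates Eq. (1) until the densities settle. Sites 1 to 6, behind the bottleneck, should end up nearly full, and sites 7 to 10 nearly empty.

```python
import numpy as np
from scipy.integrate import solve_ivp


def rfm_rhs(t, x, lam):
    """Right-hand side of Eq. (1) with the padding x_0 := 1, x_{n+1} := 0."""
    padded = np.concatenate(([1.0], x, [0.0]))
    flow = lam * padded[:-1] * (1.0 - padded[1:])
    return flow[:-1] - flow[1:]


n, j = 10, 6
lam = np.ones(n + 1)
lam[j] = 0.01  # bottleneck between site j and site j + 1
sol = solve_ivp(rfm_rhs, (0.0, 3000.0), np.zeros(n), args=(lam,),
                rtol=1e-8, atol=1e-10)
x_end = sol.y[:, -1]  # x_end[i - 1] is the density at site i
print("behind the bottleneck:", np.round(x_end[:j], 3))
print("after the bottleneck: ", np.round(x_end[j:], 3))
```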

Note that if \(\lambda _j=0\) for some index j then the RFM splits into two separate chains, so we always assume that \(\lambda _j>0\) for all \(j \in \{0,\dots ,n\}\).

The asymptotic behavior of the RFM has been analyzed using tools from contraction theory58, the theory of cooperative dynamical systems59, continued fractions and Perron-Frobenius theory60. We briefly review some of these results that are required later on.

Dynamical properties of the RFM

Let x(t, a) denote the solution of the RFM at time \(t \ge 0\) for the initial condition \(x(0)=a\). Since the state variables correspond to normalized occupancy levels, we always assume that a belongs to the closed n-dimensional unit cube:

\[ [0,1]^n := \left\{ x\in {{\mathbb {R}}}^n : x_i\in [0,1],\ i=1,\dots ,n\right\} . \]

Let \((0,1)^n\) denote the interior of \([0,1]^n\).

It was shown in59 (see also58) that there exists a unique \(e=e(\lambda _0,\dots ,\lambda _n)\in (0,1)^n\) such that for any \(a \in [0,1]^n\) the solution satisfies \(x(t,a)\in (0,1)^n\) for all \(t > 0\) and

\[ \lim _{t\rightarrow \infty } x(t,a) = e. \]

In other words, every state-variable remains well-defined in the sense that it always takes values in [0, 1], and the state converges to a unique steady-state that depends on the \(\lambda _i\)s, but not on the initial condition. At the steady-state, the flows into and out of each site are equal, and thus the density in the site remains constant. Note that the production rate \({R}(t)=\lambda _n x_n(t)\) converges to the steady-state value \({R} :=\lambda _n e_n\), as \(t\rightarrow \infty\). The rate of convergence to the steady-state e is exponential61.
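The following is a quick numerical illustration of this convergence result, not a proof. Eq. (1) is integrated with hypothetical random rates from three different initial conditions in \([0,1]^n\). All three runs should reach the same steady state e, up to integration error.

```python
import numpy as np
from scipy.integrate import solve_ivp


def rfm_rhs(t, x, lam):
    """Right-hand side of Eq. (1) with the padding x_0 := 1, x_{n+1} := 0."""
    padded = np.concatenate(([1.0], x, [0.0]))
    flow = lam * padded[:-1] * (1.0 - padded[1:])
    return flow[:-1] - flow[1:]


rng = np.random.default_rng(1)
n = 8
lam = rng.uniform(0.5, 2.0, size=n + 1)  # hypothetical rates
initial_conditions = [np.zeros(n), np.ones(n), rng.uniform(0.0, 1.0, size=n)]
finals = []
for a in initial_conditions:
    sol = solve_ivp(rfm_rhs, (0.0, 1000.0), a, args=(lam,), rtol=1e-10, atol=1e-12)
    finals.append(sol.y[:, -1])
spread = max(np.max(np.abs(f - finals[0])) for f in finals)
print(f"largest difference between final states: {spread:.2e}")
```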

At the steady state, the left-hand side of Eq. (1) is zero, and this gives

\[ \lambda _i e_i \left( 1-e_{i+1}\right) = {R}, \quad i=0,\dots ,n, \tag{2} \]

where we define \(e_0 :=1\) and \(e_{n+1} :=0\). In other words, at the steady state the flows into and out of each site are all equal to R.
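One straightforward, if not the most efficient, way to solve the steady-state equations \(\lambda _i e_i(1-e_{i+1})=R\), \(i=0,\dots ,n\), numerically is a shooting argument. It is shown here as a hypothetical Python sketch, not as a method from the cited works. For a trial value of R, the equations for \(i=n,n-1,\dots ,1\) give \(e_n=R/\lambda _n\) and then \(e_i=R/(\lambda _i(1-e_{i+1}))\), computed backwards. Every \(e_i\) increases with R, so the residual \(\lambda _0(1-e_1)-R\) is decreasing. Its unique root can therefore be found by bisection on \((0,\min _i\lambda _i)\).

```python
import numpy as np


def steady_state_bisection(lam, tol=1e-13, max_iter=200):
    """Solve lam_i e_i (1 - e_{i+1}) = R, i = 0..n, by bisection on R (n >= 1)."""
    lam = np.asarray(lam, dtype=float)
    n = lam.size - 1

    def backward(R):
        # e_{n+1} := 0, then e_i = R / (lam_i (1 - e_{i+1})) for i = n, ..., 1
        e = np.empty(n)
        e_next = 0.0
        for i in range(n, 0, -1):
            e_next = R / (lam[i] * (1.0 - e_next))
            if e_next >= 1.0:
                return None  # left the unit interval: R is too large
            e[i - 1] = e_next
        return e

    lo, hi = 0.0, lam.min()  # e in (0, 1) implies R < lam_i for every i
    for _ in range(max_iter):
        if hi - lo < tol:
            break
        mid = 0.5 * (lo + hi)
        e = backward(mid)
        if e is not None and lam[0] * (1.0 - e[0]) > mid:
            lo = mid  # the inflow lam_0 (1 - e_1) still exceeds R
        else:
            hi = mid
    return lo, backward(lo)  # lo is always a feasible trial value


lam = np.array([1.0, 0.8, 1.5, 0.6, 1.2, 2.0])  # hypothetical rates, n = 5
R, e = steady_state_bisection(lam)
print("R =", R, "e =", np.round(e, 6))
```

For n = 1 and \(\lambda _0=\lambda _1=1\), this returns R = 1/2 and \(e_1=1/2\). That agrees with the closed form \(R=\lambda _0\lambda _1/(\lambda _0+\lambda _1)\), which follows directly from the two equations.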

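Solving the set of nonlinear equations in Eq. (2) is not trivial. Fortunately, there exists a better representation of the mapping from the rates \(\lambda _0,\dots ,\lambda _n\) to the steady state \(e_1,\dots ,e_n\). Let \({{\mathbb {R}}}^k_{>0}\) denote the set of k-dimensional vectors with all entries positive. Define the \((n+2)\times (n+2)\) tridiagonal matrix

\[ {T}_n := \begin{bmatrix}
0 & \lambda _0^{-1/2} & 0 & 0 & \cdots & 0 & 0 \\
\lambda _0^{-1/2} & 0 & \lambda _1^{-1/2} & 0 & \cdots & 0 & 0 \\
0 & \lambda _1^{-1/2} & 0 & \lambda _2^{-1/2} & \cdots & 0 & 0 \\
\vdots & & & & \ddots & & \vdots \\
0 & 0 & 0 & \cdots & \lambda _{n-1}^{-1/2} & 0 & \lambda _n^{-1/2} \\
0 & 0 & 0 & \cdots & 0 & \lambda _n^{-1/2} & 0
\end{bmatrix} . \tag{3} \]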

This is a symmetric matrix, so all its eigenvalues are real. Since every entry of \({T}_n\) is non-negative and \({T}_n\) is irreducible, it admits a simple maximal eigenvalue \(\sigma >0\) (called the Perron eigenvalue or Perron root of \({T}_n\)), and a corresponding eigenvector \(\zeta \in {{\mathbb {R}}}^{n+2}_{>0}\) (the Perron eigenvector) that is unique (up to scaling)62.

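Given an RFM with dimension n and rates \(\lambda _0,\dots ,\lambda _n\), let \({T}_n\) be the matrix defined in Eq. (3). It was shown in63 that

\[ {R} = \sigma ^{-2}, \qquad e_i = \lambda _i^{-1/2}\,\sigma ^{-1}\,\frac{\zeta _{i+2}}{\zeta _{i+1}}, \quad i=1,\dots ,n, \]

where \(\zeta _k\) denotes the kth entry of \(\zeta\).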

In other words, the steady-state density and production rate in the RFM can be directly obtained from the spectral properties of \({T}_n\). In particular, this makes it possible to determine R and e even for very large chains using efficient and numerically stable algorithms for computing the Perron eigenvalue and eigenvector of a tridiagonal matrix.
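The sketch below illustrates this approach using SciPy's `eigh_tridiagonal`; the function name `rfm_spectral` is hypothetical. It builds the diagonal (all zeros) and the off-diagonal (\(\lambda _0^{-1/2},\dots ,\lambda _n^{-1/2}\)) of the matrix in Eq. (3). It then recovers the steady state from the Perron eigenpair \((\sigma ,\zeta )\). This assumes the standard form of the spectral representation: \(R=\sigma ^{-2}\) and \(e_i=\lambda _i^{-1/2}\sigma ^{-1}\zeta _{i+2}/\zeta _{i+1}\) for \(i=1,\dots ,n\), with \(\zeta\) indexed from 1.

```python
import numpy as np
from scipy.linalg import eigh_tridiagonal


def rfm_spectral(lam):
    """Steady state of the RFM from the Perron eigenpair of T_n in Eq. (3)."""
    lam = np.asarray(lam, dtype=float)
    off = lam ** -0.5  # off-diagonal entries lam_0^{-1/2}, ..., lam_n^{-1/2}
    w, v = eigh_tridiagonal(np.zeros(lam.size + 1), off)  # zero main diagonal
    sigma = w[-1]  # eigenvalues come in ascending order: the last is the Perron root
    zeta = v[:, -1] * np.sign(v[:, -1].sum())  # choose the positive Perron vector
    # zeta[k] is zeta_{k+1} in 1-based notation, so zeta_{i+2}/zeta_{i+1} = zeta[i+1]/zeta[i]
    e = off[1:] / sigma * zeta[2:] / zeta[1:-1]
    return sigma ** -2, e


lam = np.array([1.0, 0.8, 1.5, 0.6, 1.2, 2.0])  # hypothetical rates, n = 5
R, e = rfm_spectral(lam)
print("R =", R, "e =", np.round(e, 6))
```

Two simple sanity checks are available. With all rates equal to 1, the eigenvalues of \({T}_n\) are \(2\cos (k\pi /(n+3))\), \(k=1,\dots ,n+2\), so \(R=1/(4\cos ^2(\pi /(n+3)))\). For long chains where only R is needed, passing `eigvals_only=True` together with `select='i'` avoids computing eigenvectors.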

The spectral representation has several useful theoretical implications. It implies that \({R} ={R} (\lambda _0,\dots ,\lambda _n)\) is a strictly concave function on \({{\mathbb {R}}}^{n+1}_{>0}\). Thus, the problem of maximizing R under an upper bound on the sum of the rates always admits a unique solution63.

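Also, the spectral representation implies that the sensitivity of the steady state with respect to a perturbation in the rates becomes an eigenvalue sensitivity problem. Known results on the sensitivity of the Perron root64 imply that

\[ \frac{\partial }{\partial \lambda _i} {R} = \frac{2\,\zeta _{i+1}\zeta _{i+2}}{\lambda _i^{3/2}\,\sigma ^{3}\,\zeta '\zeta }, \quad i=0,\dots ,n, \]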

where \(\zeta '\) denotes the transpose of the vector \(\zeta\). It follows in particular that \(\frac{\partial }{\partial \lambda _i} {R} >0\) for all i, that is, an increase in any of the transition rates yields an increase in the steady-state production rate60.
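The sensitivity formula can be checked against finite differences. The hypothetical sketch below assumes the standard form of the Perron-root sensitivity for this representation: \(\partial R/\partial \lambda _i = 2\zeta _{i+1}\zeta _{i+2}/(\lambda _i^{3/2}\sigma ^3\zeta '\zeta )\), \(i=0,\dots ,n\), with \(\zeta\) indexed from 1. It compares this with central differences of \(R=\sigma ^{-2}\). All entries should also be positive, in line with the monotonicity statement above.

```python
import numpy as np
from scipy.linalg import eigh_tridiagonal


def perron_pair(lam):
    """Perron root sigma and positive Perron vector zeta of T_n (Eq. (3))."""
    lam = np.asarray(lam, dtype=float)
    w, v = eigh_tridiagonal(np.zeros(lam.size + 1), lam ** -0.5)
    return w[-1], v[:, -1] * np.sign(v[:, -1].sum())


def rate_sensitivity(lam):
    """dR/dlam_i = 2 zeta_{i+1} zeta_{i+2} / (lam_i^{3/2} sigma^3 zeta' zeta), i = 0..n."""
    lam = np.asarray(lam, dtype=float)
    sigma, zeta = perron_pair(lam)
    return 2.0 * zeta[:-1] * zeta[1:] / (lam ** 1.5 * sigma ** 3 * (zeta @ zeta))


rng = np.random.default_rng(2)
lam = rng.uniform(0.5, 2.0, size=11)  # hypothetical rates, n = 10
grad = rate_sensitivity(lam)

# Central finite differences of R = sigma^{-2}, one rate at a time.
h = 1e-6
basis = np.eye(lam.size)
fd = np.array([(perron_pair(lam + h * basis[i])[0] ** -2
                - perron_pair(lam - h * basis[i])[0] ** -2) / (2 * h)
               for i in range(lam.size)])
print(np.round(grad, 6))
```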

The RFM has been used to analyze various properties of translation. These include mRNA circularization and ribosome cycling65, maximizing the steady-state production rate under a constraint on the rates63,66, optimal down regulation of translation67, and the effect of ribosome drop off on the production rate68. More recent work focused on coupled networks of mRNA molecules. The coupling may be due to competition for shared resources like the finite pool of free ribosomes69,70, or due to the effect of the proteins produced on the promoters of other mRNAs71. Several variations and generalizations of the RFM have also been suggested and analyzed54,68,72,73,74,75.

Several studies compared predictions of the RFM with biological measurements, for example, protein levels and ribosome densities in translation53, and RNAP densities in transcription76. The results demonstrate a high correlation between gene expression measurements and the RFM predictions.

All previous works on the RFM assumed that the transition rates \(\lambda _i\) are deterministic. Here, we analyze for the first time the case where the rates are random variables. This may model, for example, the parallel translation of copies of the same mRNA molecule in different locations inside the cell. Variation in factors such as tRNA abundance across these locations implies that each mRNA is translated with different rates. It is natural to model this variability using tools from probability theory. For example, Ref.77 showed that the distribution of read counts related to a codon in ribo-seq experiments can be approximated using an exponentially modified Gaussian.

Our results analyze the average steady-state production rate given the random transition rates. Note that this provides a global picture of protein production in the cell, rather than the local production in any single chain. For example, when “drawing” the rates from a given distribution, one rate may turn out to be much smaller than the others, and this will generate a traffic jam in the corresponding chain. However, our analysis does not consider any specific chain. Rather, it considers the average steady-state production rate over all the chains drawn according to the distribution of the i.i.d. rates.

The following section describes our main results on translation with random rates.

Main results

Assume that the RFM rates are not constant, but rather are random variables with some known distribution supported over \({{\mathbb {R}}}_{\ge \delta }:= \{x\in {{\mathbb {R}}}: x\ge \delta \}\), where \(\delta >0\). What will the statistical properties of the resulting protein production rate be? In the context of the spectral representation given in Eq. (3), this amounts to the following question: given the distributions of the random variables \(\{\lambda _i\}_{i=0}^n\), what are the statistical properties of the maximal eigenvalue \(\sigma\) of the random matrix \({\mathsf {T}}_n\)?

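Recall that a random variable \({\mathsf {X}}\) is called essentially bounded if there exists \(0\le {b}<\infty\) such that \({{\mathbb {P}}}\left[ \left| {\mathsf {X}}\right| \le b\right] =1\), and then the \(L_\infty\) norm of \({\mathsf {X}}\) is

\[ ||{\mathsf {X}}||_\infty := \inf \left\{ b\ge 0 : {{\mathbb {P}}}\left[ \left| {\mathsf {X}}\right| \le b\right] =1\right\} . \]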

Roughly speaking, this is the maximal value that \(|{\mathsf {X}}|\) can attain. Clearly, bounded random variables are the relevant case in any biological model. In particular, if \({\mathsf {X}}\) is supported over \({{\mathbb {R}}}_{\ge \delta }\), with \(\delta >0\), then the random variable defined by \({\mathsf {W}}:= {\mathsf {X}}^{-1/2}\) is essentially bounded and \(||{\mathsf {W}} ||_\infty \le \delta ^{-1/2}\).

We can now state our main results. To increase readability, all the proofs are placed in the final section of this paper. To emphasize that the production rate is now a random variable, and that it depends on the length of the chain, from here on we use \({\mathsf {R}}_n\) to denote the production rate in the n-site RFM.

Theorem 1

Suppose that every rate \(\lambda _0,\dots ,\lambda _n\) in the RFM is drawn independently according to the distribution of a random variable \({\mathsf {X}}\) that is supported on \({\mathbb {R}}_{\ge \delta }\), with \(\delta >0\). Then, as \(n\rightarrow \infty\), the maximal eigenvalue of the matrix \({\mathsf {T}}_n\) converges to \(2 ||{\mathsf {X}}^{-1/2}||_\infty\) with probability one, and the steady-state production rate \({\mathsf {R}}_n\) in the RFM converges to

\[ \lim _{n\rightarrow \infty } {\mathsf {R}}_n = \left( 2\,||{\mathsf {X}}^{-1/2}||_\infty \right) ^{-2} \tag{6} \]

with probability one.

This result may explain how proper functioning is maintained in spite of significant variability in the rates: the steady-state production rate always converges to the value in Eq. (6), that depends only on \(||{\mathsf {X}}^{-1/2}||_\infty\). This also implies a form of universality with respect to the noises and uncertainties: the exact details of the distribution of \({\mathsf {X}}\) are not relevant, but only the single value \(||{\mathsf {X}}^{-1/2}||_\infty\).

In general, the convergence to the values in Theorem 1 as n increases is slow. Computer simulations may require values of n so large that they exhaust the computer’s memory before the results come close to the theoretical values. The next example demonstrates a case where the convergence is relatively fast.

Example 1

Recall that the probability density function of the half-normal distribution with parameters \((\mu ,\sigma )\) is

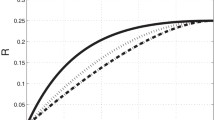

This may be interpreted as a kind of normal distribution, but with support over \([\mu ,\infty )\) only. Suppose that \({\mathsf {X}}\) has this distribution with parameters \(( \mu =1,\sigma = 0.1 )\). Note that \({\mathsf {X}}^{-1/2}\) has support (0, 1], so \(||{\mathsf {X}}^{-1/2}||_\infty =1\). In this case, Theorem 1 implies that \({\mathsf {R}}_n\) converges with probability one to 1/4 as n goes to infinity. For \(n\in \{50,500,1000\}\), we numerically computed \({\mathsf {R}}_n\) using the spectral representation for 10,000 random matrices. Figure 3 depicts a histogram of the results. It may be seen that as n increases the histogram becomes “sharper” and its center converges towards 1/4, as expected.

Histograms of 10,000 \({\mathsf {R}}_n\) values in Example 1 for \(n=50\) (green), \(n=500\) (blue), and \(n=1000\) (red). The theory predicts that as \(n\rightarrow \infty\), \({\mathsf {R}}_n\) converges to 1/4 with probability one.
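A scaled-down version of the experiment in Example 1 can be sketched in Python. The figure uses 10,000 matrices and n up to 1000. Here we use 200 trials and \(n\in \{50,500\}\), values chosen only to keep the run short. Half-normal samples are generated as \(\mu +\sigma |N(0,1)|\), and only the Perron root is computed.

As a design choice, the n = 50 chain reuses the first 51 rates of each n = 500 draw. Then \({\mathsf {T}}_{50}\) is a leading principal submatrix of \({\mathsf {T}}_{500}\), so by eigenvalue interlacing \({\mathsf {R}}_{500}\le {\mathsf {R}}_{50}\) in every trial. Also, every entry of \({\mathsf {T}}_n\) is at most 1 here, so its Perron root is at most the maximal row sum, 2, and every \({\mathsf {R}}_n\) is at least 1/4.

```python
import numpy as np
from scipy.linalg import eigh_tridiagonal


def production_rate(lam):
    """R = sigma^{-2}, where sigma is the largest eigenvalue of T_n (Eq. (3))."""
    m = lam.size + 1  # T_n is (n + 2) x (n + 2)
    top = eigh_tridiagonal(np.zeros(m), lam ** -0.5, eigvals_only=True,
                           select='i', select_range=(m - 1, m - 1))
    return top[0] ** -2


rng = np.random.default_rng(3)
mu, s = 1.0, 0.1  # half-normal parameters (mu, sigma) of Example 1
trials = 200
R50, R500 = np.empty(trials), np.empty(trials)
for t in range(trials):
    lam = mu + s * np.abs(rng.standard_normal(501))  # rates lam_0..lam_500
    R500[t] = production_rate(lam)
    R50[t] = production_rate(lam[:51])  # the same draw truncated to n = 50
print(f"n=50:  mean {R50.mean():.4f}, std {R50.std():.4f}")
print(f"n=500: mean {R500.mean():.4f}, std {R500.std():.4f}")
```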

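Theorem 1 does not provide any information on the rate of convergence to the limiting value of \({\mathsf {R}}_n\). This is important as in practice n is always finite. The next result addresses this issue. For \(\epsilon >0\), let

\[ a(\epsilon ) := {{\mathbb {P}}}\left[ {\mathsf {X}}^{-1/2} \ge ||{\mathsf {X}}^{-1/2}||_\infty - \epsilon \right] . \]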

Note that \(a(\epsilon ) \in (0,1]\). Intuitively speaking, \(a(\epsilon )\) is the probability that \({\mathsf {X}}^{-1/2}\) falls in the range \([\Vert {\mathsf {X}}^{-1/2}\Vert _\infty -\epsilon ,\Vert {\mathsf {X}}^{-1/2}\Vert _\infty ]\).
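For a concrete distribution, \(a(\epsilon )\) can be estimated by sampling. In this hypothetical sketch, \({\mathsf {X}}\) is uniform on [1, 2], as in Example 2, so \(||{\mathsf {X}}^{-1/2}||_\infty =1\). For \(0<\epsilon \le 1-2^{-1/2}\), an elementary computation then gives \(a(\epsilon )={\mathbb {P}}[{\mathsf {X}}\le (1-\epsilon )^{-2}]=(1-\epsilon )^{-2}-1\). We derived this reference value ourselves for the check; it is not taken from the text.

```python
import numpy as np


def a_empirical(samples, sup_norm, eps):
    """Monte Carlo estimate of a(eps) = P[X^{-1/2} >= ||X^{-1/2}||_inf - eps]."""
    return np.mean(samples ** -0.5 >= sup_norm - eps)


rng = np.random.default_rng(4)
x = rng.uniform(1.0, 2.0, size=1_000_000)  # X ~ U[1, 2], so ||X^{-1/2}||_inf = 1
eps = 0.05
estimate = a_empirical(x, sup_norm=1.0, eps=eps)
exact = (1.0 - eps) ** -2 - 1.0  # P[X <= (1 - eps)^{-2}] for X ~ U[1, 2]
print(f"a({eps}) ~ {estimate:.4f} (exact {exact:.4f})")
```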

Theorem 2

Suppose that every rate \(\lambda _0,\dots ,\lambda _n\) in the RFM is drawn independently according to the distribution of a random variable \({\mathsf {X}}\) that is supported on \({\mathbb {R}}_{\ge \delta }\), with \(\delta >0\). Pick two sequences of positive integers \(n_1<n_2<\dots\) and \(k_1<k_2<\dots\), with \(k_i<n_i\) for all i, and a decreasing sequence of positive scalars \(\epsilon _i\), with \(\epsilon _i \rightarrow 0\). Then, for any i, the steady-state production rate \({\mathsf {R}}_{n_i}\) in an RFM with \(n_i\) sites satisfies

with probability at least

Note that if we choose the sequences such that

and take \(i \rightarrow \infty\) then Theorem 2 yields Theorem 1. Yet, we state and prove both results separately in the interest of readability.

Example 2

Suppose that \({\mathsf {X}}\) has a uniform distribution over an interval \([\delta ,\gamma ]\) with \(0< \delta <\gamma\). From here on we assume for simplicity that \(\delta =1\) and \(\gamma =2\). Then for any \(\epsilon >0\) sufficiently small, we have

Fix \(d\in (0,1)\) and take \(\epsilon _i = n_i^{(d-1)/k_i}\). Then the condition in Eq. (9) becomes

and this will hold if \(k_i\) does not increase too quickly. We can write \(\epsilon _i\) as

so to guarantee that \(\epsilon _i \rightarrow 0\), we take \(k_i=(\log (n_i))^c\), with \(c\in (0,1)\), and then Eq. (9) indeed holds. Theorem 2 implies that

with probability at least

Example 3

As in Example 1, consider the case where \({\mathsf {X}}\) is half-normal with parameters \((\mu ,\sigma )\), where \(\mu >0\). Then \(\Vert {\mathsf {X}}^{-1/2}\Vert _\infty = \mu ^{-1/2}\), so

where \(z := (\mu ^{-1/2}-\epsilon )^{-2}\). Thus,

It is not difficult to show that this implies that

where \(c(\mu ,\sigma ) : = 2 \sqrt{ \frac{2}{\pi \sigma ^2}} \mu ^{3/2}\). To satisfy Eq. (9), fix \(p\in (0,1)\) and choose \(\epsilon _i\) such that \((c \epsilon _i)^{k_i} = n_i^{p-1}\). This implies that

Now, pick \(q\in (0,1)\) and take \(k_i = (\log ( n_i))^q\). Then Eq. (9) holds, and

Theorem 2 implies that for any \(p,q\in (0,1)\), we have

with probability at least

This shows that \({\mathsf {R}}_{n_i}\) “is close” to \(\mu /4\), and provides an explicit expression for the rate of convergence to \(\mu /4\).
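The role of the constant \(c(\mu ,\sigma )\) in Example 3 can be checked numerically. For the half-normal distribution, \(a(\epsilon )={\mathbb {P}}[{\mathsf {X}}\le z]\) with \(z=(\mu ^{-1/2}-\epsilon )^{-2}\). Since \({\mathsf {X}}=\mu +\sigma |N|\) with N standard normal, this probability equals \(\operatorname{erf}((z-\mu )/(\sigma \sqrt{2}))\).

The sketch below uses a hypothetical function name and takes its parameters from Example 1. It evaluates \(a(\epsilon )/\epsilon\) for decreasing \(\epsilon\). The ratio approaches \(c(\mu ,\sigma )\), i.e., \(a(\epsilon )\approx c(\mu ,\sigma )\,\epsilon\) for small \(\epsilon\).

```python
import math


def a_half_normal(eps, mu, s):
    """a(eps) for a half-normal X with location mu > 0 and scale s."""
    z = (mu ** -0.5 - eps) ** -2  # X^{-1/2} >= mu^{-1/2} - eps  <=>  X <= z
    return math.erf((z - mu) / (s * math.sqrt(2.0)))  # P[mu + s|N| <= z]


mu, s = 1.0, 0.1
c = 2.0 * math.sqrt(2.0 / (math.pi * s ** 2)) * mu ** 1.5  # c(mu, sigma) from Example 3
for eps in (1e-2, 1e-4, 1e-6):
    print(f"eps={eps:g}: a(eps)/eps = {a_half_normal(eps, mu, s) / eps:.4f}, c = {c:.4f}")
ratio = a_half_normal(1e-6, mu, s) / 1e-6
```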

Generalizations

The assumption that all the rates are i.i.d. random variables allows us to derive the general theoretical results in Theorems 1 and 2 above. However, this assumption is restrictive. In this section, we describe several cases with more relaxed assumptions on the rates. Our first generalization considers the case where the random variables may be non-identical, but all share the same support. In the second generalization, we allow an increasing (but small compared to n) number of random variables to have a different support from the majority of the other random variables. In these two cases, we show that the production rate converges to the same value as in Theorem 1.

We then turn to investigate the most general case, where the rates are arbitrary but bounded, and in this case provide lower and upper bounds on the production rate.

Analysis of the proofs of Theorems 1 and 2 shows that our results remain valid even if each rate \(\lambda _i\) is drawn from the distribution of a random variable \({\mathsf {X}}_i\), where the \({\mathsf {X}}_i\)s are not necessarily identically distributed. They must, however, all be independent, be supported on the positive semi-axis, and satisfy

\[ ||{\mathsf {X}}_i^{-1/2}||_\infty = ||{\mathsf {X}}_0^{-1/2}||_\infty , \quad i=0,\dots ,n, \tag{14} \]

namely, they all have the same bound. The next example demonstrates this.

Example 4

Consider \(n+1\) independent random variables with \({\mathsf {X}}_{0},{\mathsf {X}}_{1},\dots ,{\mathsf {X}}_{\frac{n-1}{2}}\) distributed according to the half-normal distribution with parameters \(( \mu =2,\sigma = 0.1 )\), and \({\mathsf {X}}_{\frac{n-1}{2}+1},\dots , {\mathsf {X}}_{n}\) distributed according to the uniform distribution on [2, 3]. Note that \(||{\mathsf {X}}_i^{-1/2}||_\infty =2^{-1/2}=1 / \sqrt{2}\), for all \(i=0,1,\dots ,n\). Thus, our theory predicts that in this case \({\mathsf {R}}_n\) converges with probability one to \((2/\sqrt{2})^{- 2} = 1/2\) as n goes to infinity. For \(n\in \{50,500,1000\}\), we numerically computed \({\mathsf {R}}_n\) using the spectral representation for 10,000 random matrices. Figure 4 depicts a histogram of the results. It may be seen that as n increases the histogram becomes “sharper” and its center converges towards 1/2, as expected.

Histograms of 10,000 \({\mathsf {R}}_n\) values in Example 4 for \(n=50\) (green), \(n=500\) (blue), and \(n=1000\) (red). The theory predicts that as \(n\rightarrow \infty\), \({\mathsf {R}}_n\) converges to 1/2 with probability one.

Our second generalization considers the case where, among the \(n+1\) random rates, d rates are drawn from the distributions of random variables \({\mathsf {Y}}_1,\dots , {\mathsf {Y}}_d\). These may have different distributions, need not satisfy the uniform support condition in Eq. (14), and may be dependent. Here \(d=d(n)\) is an integer that is allowed to grow with n, but more slowly than n. We assume that the rates modeled by these random variables are larger than those modeled by the other \(n+1-d\) random variables (see Eq. (15) below).

Theorem 3

Let \(d=d(n)>0\) be an integer such that \(\lim _{n\rightarrow \infty } \frac{d(n)}{n} =0\). Let \(\{{\mathsf {X}}_i\}_{i=0}^{n-d}\) be a set of \((n+1-d)\) independent random variables, supported on \({\mathbb {R}}_{\ge \delta }\), with \(\delta >0\), and satisfying

\[ ||{\mathsf {X}}_i^{-1/2}||_\infty = ||{\mathsf {X}}_0^{-1/2}||_\infty , \quad i=0,\dots ,n-d. \]

Also, let \(\{{\mathsf {Y}}_i\}_{i=1}^d\) be a set of d random variables supported on the positive semi-axis, and satisfy

Fix \(\epsilon >0\) and a positive integer k. Denote the concatenation of \(\{{\mathsf {Y}}_i\}_{i=1}^d\) and \(\{{\mathsf {X}}_i\}_{i=0}^{n-d }\) by \({\mathsf {Z}}\), namely, \({\mathsf {Z}} = ({\mathsf {Y}}_1,{\mathsf {Y}}_2,\ldots ,{\mathsf {Y}}_d,{\mathsf {X}}_0,{\mathsf {X}}_1,\ldots ,{\mathsf {X}}_{n-d })\). Let \({\mathcal {S}}^{n+1}\) denote the set of permutations on \(\{1,\dots ,n+1\}\). Fix a permutation \(\pi \in {\mathcal {S}}^{n+1}\), and let \({\mathsf {Z}}^\pi \triangleq \pi \circ {\mathsf {Z}}\). Suppose that every rate \(\lambda _i\) in the RFM is drawn independently according to the distribution of the corresponding entry of \({\mathsf {Z}}^\pi\). Then, as \(n\rightarrow \infty\), the steady-state production rate \({\mathsf {R}}_n\) in the RFM converges to

\[ \lim _{n\rightarrow \infty } {\mathsf {R}}_n = \left( 2\,||{\mathsf {X}}_0^{-1/2}||_\infty \right) ^{-2} \]

with probability one.

In other words, even in the presence of the “interfering” \({\mathsf {Y}}_i\)’s the theoretical result remains unchanged. The next example demonstrates Theorem 3.

Example 5

Consider the case where \(d(n)=\sqrt{n}\). Let \({\mathsf {X}}_0,\dots ,{\mathsf {X}}_{n-d}\) be i.i.d. random variables distributed according to the uniform distribution on [1/2, 1], and let \({\mathsf {Y}}_1,\dots ,{\mathsf {Y}}_{d}\) be i.i.d. random variables distributed according to the uniform distribution on [15, 20]. We draw the rates according to the vector \({\mathsf {Z}}^{\pi }\), with \(\pi\) a random permutation (implemented using the Matlab command randperm). Our theory predicts that in this case \({\mathsf {R}}_n\) converges with probability one to \((2||{\mathsf {X}}_i^{-1/2}||_\infty )^{-2} =(2 \sqrt{2})^{-2}=1/8\) as n goes to infinity. For \(n\in \{50,500,1500\}\), we numerically computed \({\mathsf {R}}_n\) using the spectral representation for 10,000 random matrices. Figure 5 depicts a histogram of the results. It can be seen that, as n increases, the histogram concentrates around a limiting value, despite the “interfering” \({\mathsf {Y}}_i\) random variables.

Histograms of 10,000 \({\mathsf {R}}_n\) values in Example 5 for \(n=50\) (green), \(n=500\) (blue), and \(n=1500\) (red). The theory predicts that as \(n\rightarrow \infty\), \({\mathsf {R}}_n\) converges to 1/8 with probability one.
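The mixture setting of Example 5 can be sketched in the same way. In this hypothetical Python version, \(d(n)=\sqrt{n}\) is rounded down to an integer. NumPy's `Generator.permutation` plays the role of Matlab's randperm, and only 200 trials per n are used.

The largest possible entry of \({\mathsf {T}}_n\) is \(\sqrt{2}\), coming from \({\mathsf {X}}_i\ge 1/2\), so the Perron root is at most \(2\sqrt{2}\). Every sample therefore satisfies \({\mathsf {R}}_n\ge 1/8\). We would expect the sample means to move toward 1/8 as n grows.

```python
import numpy as np
from scipy.linalg import eigh_tridiagonal


def production_rate(lam):
    """R = sigma^{-2}, where sigma is the largest eigenvalue of T_n (Eq. (3))."""
    m = lam.size + 1
    top = eigh_tridiagonal(np.zeros(m), lam ** -0.5, eigvals_only=True,
                           select='i', select_range=(m - 1, m - 1))
    return top[0] ** -2


def draw_rates(n, rng):
    """n + 1 rates: d = floor(sqrt(n)) draws of Y ~ U[15, 20], the rest X ~ U[1/2, 1]."""
    d = int(np.sqrt(n))
    y = rng.uniform(15.0, 20.0, size=d)
    x = rng.uniform(0.5, 1.0, size=n + 1 - d)
    return rng.permutation(np.concatenate((y, x)))  # random placement of the Y rates


rng = np.random.default_rng(5)
samples = {n: np.array([production_rate(draw_rates(n, rng)) for _ in range(200)])
           for n in (50, 500, 1500)}
for n, R in samples.items():
    print(f"n={n}: mean R_n = {R.mean():.4f} (predicted limit 0.125)")
```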

Our last and most general result considers the case where the random variables are arbitrary but bounded. In particular, they do not have to be independent or identically distributed. We use the notation \({\mathcal {I}}_k^{p}\) to denote the set of all runs of k consecutive integers from the set \(\{1,2,\dots ,p\}\). For example,

Theorem 4

Suppose that every rate \(\lambda _i\) in the RFM is drawn according to the distribution of a random variable \({\mathsf {X}}_i\) that is supported on \({\mathbb {R}}_{\ge \delta _i}\), with \(\delta _i>0\), for \(0\le i\le n\). Then the steady-state production rate \({\mathsf {R}}_n\) in the n-site RFM satisfies

with probability one.

In contrast to our previous analytical results, in this case the steady-state production rate does not necessarily converge to a deterministic value. Rather, we show that it is bounded above and below by two random quantities. However, it can be shown that when the random variables are i.i.d., both bounds converge to \((2||{\mathsf {X}}_0^{-1/2}||_\infty )^{-2}\) as \(n\rightarrow \infty\). In this sense, the bounds in Theorem 4 are tight.

Discussion

Cellular systems are inherently noisy, and it is natural to speculate that evolution has optimized them to function properly despite, or even to take advantage of, stochastic fluctuations.

Many studies have analyzed the fluctuations in protein production due to both extrinsic and intrinsic noise (see, e.g.18,78,79,80,81,82). Here, we developed a new approach, based on random matrix theory, for analyzing the average protein production rate from multiple copies of the same mRNA whose translation rates vary due, for example, to the different spatial locations of these mRNAs inside the cell. Our approach can also deal with experimental noise.

Our results have both theoretical and practical value. We show that, for i.i.d. rates, a single parameter of the distribution, namely the bound \(\delta\) on its support, determines the steady-state average production rate. The production rate is thus agnostic to many other details of the distribution, e.g., its mean and variance. This may explain how steady-state production is maintained despite the considerable stochasticity in the cell. This theoretical result holds regardless of whether one can actually determine the value of \(\delta\).
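As a worked instance of this statement, assume (for this illustration) that \(\delta\) is the largest lower bound on the support, i.e., the essential infimum of \({\mathsf {X}}\). Then the limit in Theorem 1 reduces to a function of \(\delta\) alone:

\[
\Vert {\mathsf {X}}^{-1/2}\Vert _\infty =\delta ^{-1/2} \quad \Longrightarrow \quad \lim _{n\rightarrow \infty }{\mathsf {R}}_n=\left( 2\delta ^{-1/2}\right) ^{-2}=\frac{\delta }{4}.
\]

For instance, rates uniform on [1/2, 1] and rates uniform on [1/2, 100] both have \(\delta =1/2\), and hence the same limit 1/8, even though their means and variances differ greatly.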

Our approach can also deal with phenomena that are not directly captured by the RFM, provided that their effects can be modeled as stochastic perturbations of the transition rates. Examples may include experimental noise, methylation, and interaction with miRNA. In particular, methylation affects a single nucleotide/codon, whereas miRNA affects a sequence of up to 7 codons.

It is important to note that our results hold for many possible distributions of the translation rates. For example, it has been suggested that decoding rate distributions resemble exponentially modified Gaussian or log-normal distributions77,83.

Currently, it is challenging to estimate the distribution of transition rates (and thus the bound \(\delta\) on its support). Indeed, approaches such as ribo-seq report averages over all mRNA molecules and all cells in a given population/sample. It is also difficult to estimate the protein translation rate. Usually, the measured quantity is the protein level, which depends not only on translation but also on the rate of transcription and on mRNA and protein dilution and decay79. Thus, in this respect, the theory presented here is ahead of current biological measurement capabilities. Nevertheless, our results may indicate general principles that can be tested experimentally. For example, the analysis suggests that as the length of the mRNA increases, while all its statistical properties (such as the initiation rate and codon usage) are kept identical, the translation rate becomes more uniform, that is, it varies less across mRNA copies.

The RFM, just like TASEP, is a phenomenological model for the flow of interacting particles and thus can be used to model and analyze phenomena such as the flow of packets in communication networks84, the transfer of a phosphate group through a serial chain of proteins during phosphorelay75, and more. The RFM is also closely related to a mathematical model for a disordered linear chain of masses, each coupled to its two nearest neighbors by elastic springs85, that was originally analyzed in the seminal work of Dyson86. In many of these applications it is natural to assume that the rates are subject to uncertainties or fluctuations and to model them as random variables; the results here then apply directly.

We believe that the approach described here can be generalized to other models of intra-cellular phenomena derived from the RFM75,87, and thus for analyzing additional aspects of translation and gene expression.

Proofs

The proofs of our main results are based on analyzing the spectral properties of the matrix \({\mathsf {T}}_n\) in Eq. (3) when the \(\lambda _i\)s are i.i.d. random variables. The problem that we study here is a classical problem in random matrix theory88, yet the matrix \({\mathsf {T}}_n\) is somewhat different from the standard matrices analyzed using the existing theory (e.g. the Wigner matrix). Hence, we provide a self-contained analysis based on combining probabilistic arguments with the Perron-Frobenius theory of matrices with non-negative entries (see e.g.62, Ch. 8).

Proof of Theorem 1

Recall that the rates \(\{\lambda _i\}_{i=0}^{n}\) are drawn independently according to the distribution of a random variable \({\mathsf {X}}\) that is supported on \({\mathbb {R}}_{\ge \delta }\), with \(\delta >0\). For simplicity of notation, let \({\mathsf {W}}_i := \lambda _i^{-1/2}\), \(i\in \{0,1,\ldots ,n\}\), and note that the \({\mathsf {W}}_i\) are i.i.d. and essentially bounded (by \(\delta ^{-1/2}\)), and that each \({\mathsf {W}}_i\) has the same distribution as \({\mathsf {X}}^{-1/2}\). In particular, \({\mathsf {W}}_0\equiv {\mathsf {X}}^{-1/2}\). With this definition, Eq. (3) can be written as:
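\[
{\mathsf {T}}_n=\begin{pmatrix}
0 & {\mathsf {W}}_0 & 0 & \cdots & 0 & 0\\
{\mathsf {W}}_0 & 0 & {\mathsf {W}}_1 & \cdots & 0 & 0\\
0 & {\mathsf {W}}_1 & 0 & \ddots & \vdots & \vdots \\
\vdots & \vdots & \ddots & \ddots & {\mathsf {W}}_{n-1} & 0\\
0 & 0 & \cdots & {\mathsf {W}}_{n-1} & 0 & {\mathsf {W}}_n\\
0 & 0 & \cdots & 0 & {\mathsf {W}}_n & 0
\end{pmatrix}.
\]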
Therefore, \({\mathsf {T}}_n\) is an \((n+2)\times (n+2)\) symmetric tridiagonal matrix, with zeros on its main diagonal, and bounded positive random variables \(\{{\mathsf {W}}_i\}_{i=0}^{n}\) on the super- and sub-diagonals.

Since \({\mathsf {T}}_n\) is symmetric, componentwise non-negative, and irreducible, it admits a simple maximal eigenvalue denoted \(\lambda _{\max }({\mathsf {T}}_n)\), and \(\lambda _{\max }({\mathsf {T}}_n) >0\). Our goal is to understand the asymptotic behavior of \(\lambda _{ \max }({\mathsf {T}}_n )\), as \(n\rightarrow \infty\). We begin with an auxiliary result that will be used later on.

Proposition 1

Suppose that the random variables \(\{{\mathsf {W}}_i\}_{i=0}^{n}\) are i.i.d. and essentially bounded. Fix \(\epsilon >0\) and an integer \(1\le k\le n+1\). Let \({{\mathcal {K}}}\) denote the event: there exists an index \(0\le \ell \le n-k+1\) such that \({\mathsf {W}}_{\ell },\dots ,{\mathsf {W}}_{\ell +k-1 }\ge \Vert {\mathsf {W}}_0\Vert _\infty -\epsilon\). Then as \(n\rightarrow \infty\) the probability of \({{\mathcal {K}}}\) converges to one.

In other words, as \(n\rightarrow \infty\) the probability of finding k consecutive random variables whose value is at least \(\Vert {\mathsf {W}}_0\Vert _\infty -\epsilon\) goes to one.

Proof

Fix \(\epsilon >0\) and a positive integer k. Let \(s : = \Vert {\mathsf {W}}_0\Vert _\infty -\epsilon\). For any \(j\in \{0,\dots ,n-k+1\}\), let \({{{\mathcal {K}}}} (j)\) denote the event: \({\mathsf {W}}_{j },\dots ,{\mathsf {W}}_{j+k -1}\ge s\). Then
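\[
{{\mathbb {P}}}\left( {{{\mathcal {K}}}}\right) \ge {{\mathbb {P}}}\left( \bigcup _{j=0}^{p} {{{\mathcal {K}}}}(jk)\right) ,
\]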
where p is the largest integer such that \((p+1)k\le n\). Since the \({\mathsf {W}}_i\)s are i.i.d.,
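\[
{{\mathbb {P}}}\left( \bigcup _{j=0}^{p} {{{\mathcal {K}}}}(jk)\right) =1-\prod _{j=0}^{p}\left( 1-{{\mathbb {P}}}\left( {{{\mathcal {K}}}}(jk)\right) \right) =1-\left( 1-{{\mathbb {P}}}\left( {\mathsf {W}}_0 \ge s \right) ^k\right) ^{p+1}.
\]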
The probability \({{\mathbb {P}}}\left( {\mathsf {W}}_0 \ge s \right)\) is positive by the definition of the essential supremum, and when \(n\rightarrow \infty\), we have \(p\rightarrow \infty\), so \({{\mathbb {P}}}\left( {{{\mathcal {K}}}}\right) \rightarrow 1\). \(\square\)
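The block argument above can be checked numerically. In the sketch below (hypothetical helper names), a success at index i means \({\mathsf {W}}_i\ge s\), with success probability a standing in for \({{\mathbb {P}}}({\mathsf {W}}_0\ge s)\). The exact probability of a run of k successes among the \(n+1\) variables is computed by dynamic programming and compared with the lower bound \(1-(1-a^k)^{p+1}\) from the proof; the values of a and k are illustrative only.

```python
def prob_run(m, k, a):
    """Exact probability that m i.i.d. Bernoulli(a) trials contain at least
    k consecutive successes (dynamic programming over the current run length)."""
    # dist[r] = P(no run of length k so far and the trailing run has length r)
    dist = [1.0] + [0.0] * (k - 1)
    for _ in range(m):
        new = [0.0] * k
        for r, p in enumerate(dist):
            new[0] += p * (1.0 - a)  # a failure resets the run
            if r + 1 < k:
                new[r + 1] += p * a  # a success extends the run
            # if r + 1 == k, the run reaches length k and that mass leaves dist
        dist = new
    return 1.0 - sum(dist)


def block_bound(n, k, a):
    """Lower bound from the proof for W_0, ..., W_n: 1 - (1 - a^k)^(p+1),
    where p is the largest integer such that (p+1)k <= n."""
    blocks = n // k  # equals p + 1
    return 1.0 - (1.0 - a ** k) ** blocks


a, k = 0.3, 3  # illustrative values; a plays the role of P(W_0 >= s)
for n in (10, 100, 1000):
    print(n, round(block_bound(n, k, a), 4), round(prob_run(n + 1, k, a), 4))
```

The bound only uses the disjoint blocks \(\{jk,\dots ,jk+k-1\}\), so it ignores runs that straddle block boundaries; it is therefore conservative, yet it still tends to one as n grows.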

The next result invokes Proposition 1 to provide a tight asymptotic lower bound on the maximal eigenvalue of \({\mathsf {T}}_n\).

Proposition 2

Suppose that the random variables \(\{{\mathsf {W}}_i\}_{i=0}^{n}\) are i.i.d. and essentially bounded. Fix \(\epsilon >0\) and an integer \(1\le k\le n+1\). Then the probability
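\[
{{\mathbb {P}}}\left( \lambda _{\max }({\mathsf {T}}_n) \ge 2\left( \Vert {\mathsf {W}}_0\Vert _\infty -\epsilon \right) \cos {\frac{\pi }{k+2}}\right)
\]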
goes to one as \(n\rightarrow \infty\).

Proof

Let \(s := \Vert {\mathsf {W}}_0\Vert _\infty -\epsilon\). Conditioned on the event \({{{\mathcal {K}}}}\), Proposition 1 implies that there exists an index \(\ell\) such that \({\mathsf {W}}_{\ell },\dots ,{\mathsf {W}}_{\ell +k-1 }\ge s\). Assume that \(\ell =0\) (the proof in the case \(\ell >0\) is very similar). Let \({\mathsf {M}}_{k}\) denote the \((k+1)\times (k+1)\) symmetric tridiagonal matrix:
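\[
{\mathsf {M}}_{k}=\begin{pmatrix}
0 & 1 & 0 & \cdots & 0\\
1 & 0 & 1 & \cdots & 0\\
\vdots & \ddots & \ddots & \ddots & \vdots \\
0 & \cdots & 1 & 0 & 1\\
0 & \cdots & 0 & 1 & 0
\end{pmatrix}.
\]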
Recall that the maximal eigenvalue of this matrix is \(\lambda _{{\mathsf {max}}} ({\mathsf {M}}_{k }) =2 \cos {\frac{\pi }{k+2}}\) (see e.g.89). Now, let \({\mathsf {P}}_n\) be the matrix obtained by replacing the \((k+1)\times (k+1)\) leading principal submatrix of \({\mathsf {T}}_n\) by \(s {\mathsf {M}}_k\). Note that \({\mathsf {T}}_n \ge {\mathsf {P}}_n\) (where the inequality is componentwise), and thus \(\lambda _{\max } ({\mathsf {T}}_n) \ge \lambda _{\max } ({\mathsf {P}}_n)\). By Cauchy’s interlacing theorem, the largest eigenvalue of \({\mathsf {P}}_n\) is greater than or equal to the largest eigenvalue of any of its principal submatrices. Thus,
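\[
\lambda _{\max } ({\mathsf {T}}_n) \ge \lambda _{\max } ({\mathsf {P}}_n) \ge \lambda _{\max } (s{\mathsf {M}}_k) =2\left( \Vert {\mathsf {W}}_0\Vert _\infty -\epsilon \right) \cos {\frac{\pi }{k+2}},
\]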
and this completes the proof of Proposition 2. \(\square\)

We can now complete the proof of Theorem 1. Recall that if A is an \(n\times n\) symmetric and componentwise non-negative matrix then (see, e.g.62, Ch. 8)
\[
\lambda _{{\mathsf {max}}}(A) \le \max _{1\le i\le n} \sum _{j=1}^{n} a_{ij}. \tag{21}
\]
In other words, \(\lambda _{{\mathsf {max}}}( {A} )\) is bounded from above by the maximum of the row sums of A. As any row of \({\mathsf {T}}_n\) has at most two nonzero elements, Eq. (21) implies that
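\[
\lambda _{\max }({\mathsf {T}}_n) \le 2\max _{0\le i\le n}{\mathsf {W}}_i \le 2\Vert {\mathsf {W}}_0\Vert _\infty \tag{22}
\]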
with probability one. Combining this with Proposition 2 implies that

with probability one. Since this holds for any \(\epsilon >0\) and any integer \(k>0\), this completes the proof of Theorem 1. \(\square\)

Proof of Theorem 2

Fix \(\epsilon >0\) and an integer \(1\le k\le n+1\). Let \({{\bar{a}}}(\epsilon ): = {{\mathbb {P}}}\left( {\mathsf {W}}_0 \ge \Vert {\mathsf {W}}_0\Vert _\infty -\epsilon \right)\). The proofs of Propositions 1 and 2 imply that
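\[
\lambda _{\max }({\mathsf {T}}_n) \ge 2\left( \Vert {\mathsf {W}}_0\Vert _\infty -\epsilon \right) \cos {\frac{\pi }{k+2}}
\]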
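with probability \({{\mathbb {P}}}({{\mathcal {K}}})\ge 1-(1-{{\bar{a}}}(\epsilon ) ^k )^{\left\lfloor \frac{n}{k} \right\rfloor }\). Fix \(b\in (0,1]\) and \(c>0\). The elementary bound \(0\le 1-b<\exp (-b)\) implies that \((1-b)^{c}<\exp (-bc)\), that is, \(1-(1-b)^{c } > 1-\exp (-bc)\). Applying this with \(b={{\bar{a}}}(\epsilon )^k\), which is positive by the definition of the essential supremum, and \(c=\left\lfloor n/k \right\rfloor\) gives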
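\[
{{\mathbb {P}}}\left( \lambda _{\max }({\mathsf {T}}_n) \ge 2\left( \Vert {\mathsf {W}}_0\Vert _\infty -\epsilon \right) \cos {\frac{\pi }{k+2}}\right) > 1-\exp \left( -\left\lfloor \frac{n}{k} \right\rfloor {{\bar{a}}}(\epsilon )^k\right) . \tag{24}
\]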
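Pick two sequences of positive integers \(n_1<n_2<\dots\) and \(k_1<k_2<\dots\), with \(k_i<n_i\) for all i, and a decreasing sequence of positive scalars \(\epsilon _i\), with \(\epsilon _i \rightarrow 0\), such that \(\left\lfloor n_i/k_i \right\rfloor {{\bar{a}}}(\epsilon _i)^{k_i}\rightarrow \infty\) (this is always possible by choosing each \(n_i\) large enough). Using Eq. (24) we get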

Combining this with the spectral representation of the steady-state in the RFM completes the proof of Theorem 2. \(\square\)

The proofs of Theorems 3 and 4 below are similar to the proof of Theorem 1, so we only describe the modifications of that proof that they require.

Proof of Theorem 3

The proof of Proposition 1 remains valid because \(d>0\) is sub-linear in n and we let \(n \rightarrow \infty\). Specifically, the d random variables \({\mathsf {Y}}_i\) split the remaining \(n-d+1\) entries of \({\mathsf {Z}}^{\pi }\) into at most \(d+1\) runs, so by the pigeonhole principle there must exist a sub-sequence of \({\mathsf {Z}}^{\pi }\), of length at least \((n-d+1)/(d+1)\), which consists of consecutive \({\mathsf {X}}_i\)’s; therefore, we can apply the proof of Proposition 1 to this sub-sequence. In this case, the range of the parameter p in the proof of Proposition 1 becomes \((p+1)k\le \left\lceil (n-d+1)/(d+1) \right\rceil\), and thus as long as \(n/d\rightarrow \infty\) we have \(p\rightarrow \infty\) as well. Hence, the conclusion of Proposition 2 remains valid. The bound in Eq. (22) also holds, due to the condition in Eq. (15). Thus, Eq. (23) holds, and this completes the proof of Theorem 3. \(\square\)
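The pigeonhole step can be checked directly. The d labels Y split the \(n-d+1\) labels X into at most \(d+1\) runs, so the longest run of X's has length at least \(\lceil (n-d+1)/(d+1)\rceil\), a quantity that grows without bound whenever \(n/d\rightarrow \infty\). The sketch below (hypothetical helper names, assuming \(d=\lfloor \sqrt{n}\rfloor\) as in Example 5) shuffles the labels with a random permutation and compares the longest run with this guarantee. For example, with \(n=10\) and \(d=2\), the arrangement XXXYXXXYXXX has no run longer than 3, which matches the bound exactly.

```python
import math
import random


def longest_x_run(labels):
    """Length of the longest block of consecutive 'X' labels."""
    best = current = 0
    for label in labels:
        current = current + 1 if label == "X" else 0
        best = max(best, current)
    return best


def guaranteed_run(n, d):
    """Pigeonhole bound: d Y's split the n - d + 1 X's into at most d + 1 runs."""
    return math.ceil((n - d + 1) / (d + 1))


rng = random.Random(0)
for n in (50, 500, 5000):
    d = math.isqrt(n)  # assumption: d(n) = floor(sqrt(n))
    labels = ["X"] * (n - d + 1) + ["Y"] * d
    rng.shuffle(labels)  # the random permutation pi
    print(n, d, guaranteed_run(n, d), longest_x_run(labels))
```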

Proof of Theorem 4

As in the proof of Theorem 1, define \({\mathsf {W}}_i : = {\mathsf {X}}_i^{-1/2}\), \(i\in \{0,1,\ldots ,n\}\). The proof of the lower bound in Theorem 4 is in fact the same as the derivation of Eq. (22). Indeed, in Eq. (22) we show that

which implies the lower bound in Eq. (17). The upper bound in Eq. (17) follows from the same arguments used to obtain Proposition 2. Indeed, for any \(1\le k\le n\), let \({\mathsf {I}}_k\) be any set of k consecutive indices in \(\{0,1,\ldots ,n\}\). Let \({\mathsf {P}}_n\) be the matrix obtained by replacing the \((k+1)\times (k+1)\) principal submatrix of \({\mathsf {T}}_n\) that corresponds to the indices \({\mathsf {I}}_k\) by \({\mathsf {M}}_k\cdot \min _{i\in {\mathsf {I}}_k}{\mathsf {W}}_i\). Note that \({\mathsf {T}}_n \ge {\mathsf {P}}_n\) (where the inequality is componentwise), and thus \(\lambda _{\max } ({\mathsf {T}}_n ) \ge \lambda _{\max } ({\mathsf {P}}_n )\). By Cauchy’s interlacing theorem, the largest eigenvalue of \({\mathsf {P}}_n\) is greater than or equal to the largest eigenvalue of any of its principal submatrices. Thus,
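\[
\lambda _{\max } ({\mathsf {T}}_n ) \ge \lambda _{\max } ({\mathsf {P}}_n ) \ge 2\cos \left( \frac{\pi }{k+2}\right) \min _{i\in {\mathsf {I}}_k}{\mathsf {W}}_i . \tag{27}
\]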
Now, since Eq. (27) holds for any choice of \(1\le k\le n\) and \({\mathsf {I}}_k\in {\mathcal {I}}_k^{n+1}\), we can maximize the r.h.s. of Eq. (27) with respect to these assignments, which implies the upper bound in Eq. (17). \(\square\)
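For a single realization of the rates, both sides of this argument can be evaluated numerically. The sketch below maximizes the right-hand side of Eq. (27) over all windows of consecutive indices to obtain an upper bound on \({\mathsf {R}}_n\), and uses the row-sum bound of Eq. (21) for a lower bound. It assumes \({\mathsf {R}}_n=\lambda _{\max }^{-2}({\mathsf {T}}_n)\). The exact expression of Eq. (17) is not reproduced here, so the lower bound in the sketch may differ in form from the one stated in Theorem 4. The non-identical rate distribution in the usage lines is hypothetical and chosen only for illustration.

```python
import math
import random


def lambda_max_tridiag(offdiag, tol=1e-10):
    """Largest eigenvalue of the zero-diagonal symmetric tridiagonal matrix
    with the given positive off-diagonal entries (Sturm-sequence bisection)."""
    dim = len(offdiag) + 1
    squares = [b * b for b in offdiag]

    def count_below(x):
        # Number of eigenvalues strictly below x (negative LDL^T pivots).
        q, count = -x, (1 if x > 0 else 0)
        for b2 in squares:
            q = -x - b2 / (q if q != 0.0 else 1e-300)
            count += q < 0
        return count

    lo, hi = 0.0, 2.0 * max(offdiag)
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if count_below(mid) == dim:
            hi = mid
        else:
            lo = mid
    return 0.5 * (lo + hi)


def theorem4_bounds(rates):
    """Return (lower, upper) bounds on R_n for arbitrary positive rates."""
    w = [lam ** -0.5 for lam in rates]  # W_i = X_i^(-1/2)
    m = len(w)
    # Upper bound on R_n: maximize 2*cos(pi/(k+2)) * min_{i in I_k} W_i over
    # all windows I_k of k consecutive indices (r.h.s. of Eq. (27)).
    best = 0.0
    for start in range(m):
        window_min = math.inf
        for end in range(start, m):
            window_min = min(window_min, w[end])
            k = end - start + 1
            best = max(best, 2.0 * math.cos(math.pi / (k + 2)) * window_min)
    # Lower bound on R_n: lambda_max(T_n) <= maximal row sum (Eq. (21)).
    row_sums = [w[0], w[-1]] + [w[i - 1] + w[i] for i in range(1, m)]
    return max(row_sums) ** -2, best ** -2


rng = random.Random(2)
n = 200
# Hypothetical non-identical rates: X_i uniform on [0.5 + i/n, 1.5 + i/n].
rates = [rng.uniform(0.5 + i / n, 1.5 + i / n) for i in range(n + 1)]
lower, upper = theorem4_bounds(rates)
exact = lambda_max_tridiag([lam ** -0.5 for lam in rates]) ** -2
print(f"{lower:.4f} <= R_n = {exact:.4f} <= {upper:.4f}")
```

The window search is quadratic in n, which is adequate for an illustration; a sliding-window minimum would reduce the cost if larger n were needed.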

References

Sauna, Z. & Kimchi-Sarfaty, C. Understanding the contribution of synonymous mutations to human disease. Nat. Rev. Genet. 12, 683–691 (2011).

Goz, E., Mioduser, O., Diament, A. & Tuller, T. Evidence of translation efficiency adaptation of the coding regions of the bacteriophage lambda. DNA Res. 24, 333–342 (2017).

Lane, N. & Martin, W. The energetics of genome complexity. Nature 467, 929–934 (2010).

Mahalik, S., Sharma, A. K. & Mukherjee, K. J. Genome engineering for improved recombinant protein expression in Escherichia coli. Microb. Cell Fact. 13, 1–13 (2014).

Buttgereit, F. & Brand, M. A hierarchy of ATP-consuming processes in mammalian cells. Biochem. J. 312, 163–167 (1995).

Russell, J. B. & Cook, G. M. Energetics of bacterial growth: balance of anabolic and catabolic reactions. Microbiol. Rev. 59, 48–62 (1995).

Gorochowski, T. E., Avcilar-Kucukgoze, I., Bovenberg, R. A., Roubos, J. A. & Ignatova, Z. A minimal model of ribosome allocation dynamics captures trade-offs in expression between endogenous and synthetic genes. ACS Synth. Biol. 5, 710–720 (2016).

Juszkiewicz, S. et al. Ribosome collisions trigger cis-acting feedback inhibition of translation initiation. eLife 9, e60038 (2020).

Juszkiewicz, S., Speldewinde, S. H., Wan, L., Svejstrup, J. & Hegde, R. S. The ASC-1 complex disassembles collided ribosomes. Mol. Cell 79, 603–614 (2020).

Mills, E. W. & Green, R. Ribosomopathies: there’s strength in numbers. Science 358, (2017).

Tuller, T. et al. An evolutionarily conserved mechanism for controlling the efficiency of protein translation. Cell 141, 344–354 (2010).

von der Haar, T. Mathematical and computational modelling of ribosomal movement and protein synthesis: an overview. Comput. Struct. Biotechnol. J. 1, e201204002 (2012).

Myasnikov, A. G. et al. Structure-function insights reveal the human ribosome as a cancer target for antibiotics. Nat. Commun. 7, 12856 (2016).

Johansson, M., Chen, J., Tsai, A., Kornberg, G. & Puglisi, J. Sequence-dependent elongation dynamics on macrolide-bound ribosomes. Cell Rep. 7, 1534–1546 (2014).

Lambert, T. Antibiotics that affect the ribosome. Rev. Sci. Tech. Off. Int. Epiz. 31, 57–64 (2012).

Wilson, D. N. Ribosome-targeting antibiotics and mechanisms of bacterial resistance. Nat. Rev. Microbiol. 12, 35–48 (2014).

Blake, W. J., Kaern, M., Cantor, C. R. & Collins, J. J. Noise in eukaryotic gene expression. Nature 422, 633–637 (2003).

Newman, J. R. S. et al. Single-cell proteomic analysis of S. cerevisiae reveals the architecture of biological noise. Nature 441, 840–846 (2006).

Sonneveld, S., Verhagen, B. & Tanenbaum, M. Heterogeneity in mRNA translation. Trends Cell Biol. 30, 606–618 (2020).

Korkmazhan, E., Teimouri, H., Peterman, N. & Levine, E. Dynamics of translation can determine the spatial organization of membrane-bound proteins and their mRNA. Proc. Natl. Acad. Sci. 114, 13424–13429 (2017).

Lecuyer, E. et al. Global analysis of mRNA localization reveals a prominent role in organizing cellular architecture and function. Cell 131, 174–187 (2007).

Besse, F. & Ephrussi, A. Translational control of localized mRNAs: restricting protein synthesis in space and time. Nat. Rev. Mol. Cell Biol. 9, 971–980 (2008).

Sabi, R. & Tuller, T. Novel insights into gene expression regulation during meiosis revealed by translation elongation dynamics. NPJ Syst. Biol. Appl. 5, 12 (2019).

Buettner, F. et al. Computational analysis of cell-to-cell heterogeneity in single-cell RNA-sequencing data reveals hidden subpopulations of cells. Nat. Biotechnol. 33, 155–160 (2015).

Genuth, N. R. & Barna, M. The discovery of ribosome heterogeneity and its implications for gene regulation and organismal life. Mol. Cell 71, 364–374 (2018).

Nieb, A., Siemann-Herzberg, M. & Takors, R. Protein production in Escherichia coli is guided by the trade-off between intracellular substrate availability and energy cost. Microb. Cell Fact. 18, 8 (2019).

Martin, K. C. & Ephrussi, A. mRNA localization: gene expression in the spatial dimension. Cell 136, 719 (2009).

Gerashchenko, M. & Gladyshev, V. Ribonuclease selection for ribosome profiling. Nucleic Acids Res. 45, e6 (2017).

Diament, A. & Tuller, T. Estimation of ribosome profiling performance and reproducibility at various levels of resolution. Biol. Direct 11, 24 (2016).

Zaccara, S., Ries, R. & Jaffrey, S. Reading, writing and erasing mRNA methylation. Nat. Rev. Mol. Cell Biol. 20, 608–624 (2019).

Bergman, S. & Tuller, T. Widespread non-modular overlapping codes in the coding regions. Phys. Biol. 17, 031002 (2020).

McGary, K. & Nudler, E. RNA polymerase and the ribosome: the close relationship. Curr. Opin. Microbiol. 16, 112–117 (2013).

Edri, S. & Tuller, T. Quantifying the effect of ribosomal density on mRNA stability. PLoS One 9, e102308 (2014).

Presnyak, V. et al. Codon optimality is a major determinant of mRNA stability. Cell 160, 1111–1124 (2015).

Bazzini, A., Lee, M. & Giraldez, A. Ribosome profiling shows that miR-430 reduces translation before causing mRNA decay in zebrafish. Science 336, 233–237 (2012).

Bergman, S., Diament, A. & Tuller, T. New computational model for miRNA-mediated repression reveals novel regulatory roles of miRNA bindings inside the coding region. Bioinformatics https://doi.org/10.1093/bioinformatics/btaa1021 (2020).

Sharma, A. K., Ahmed, N. & O’Brien, E. P. Determinants of translation speed are randomly distributed across transcripts resulting in a universal scaling of protein synthesis times. Phys. Rev. E 97, 022409 (2018).

Tuller, T. & Zur, H. Multiple roles of the coding sequence 5’ end in gene expression regulation. Nucleic Acids Res. 43, 13–28 (2015).

Ingolia, N. T. Ribosome profiling: new views of translation, from single codons to genome scale. Nat. Rev. Genet. 15, 205–213 (2014).

Newhart, A. & Janicki, S. M. Seeing is believing: visualizing transcriptional dynamics in single cells. J. Cell. Physiol. 229, 259–265 (2014).

Mayer, A. & Churchman, L. Genome-wide profiling of RNA polymerase transcription at nucleotide resolution in human cells with native elongating transcript sequencing. Nat. Protocols 11, 813–833 (2016).

Iwasaki, S. & Ingolia, N. T. Seeing translation. Science 352, 1391–1392 (2016).

Zur, H. & Tuller, T. Predictive biophysical modeling and understanding of the dynamics of mRNA translation and its evolution. Nucleic Acids Res. 44, 9031–9049 (2016).

MacDonald, C. T., Gibbs, J. H. & Pipkin, A. C. Kinetics of biopolymerization on nucleic acid templates. Biopolymers 6, 1–25 (1968).

MacDonald, C. T. & Gibbs, J. H. Concerning the kinetics of polypeptide synthesis on polyribosomes. Biopolymers 7, 707–725 (1969).

Spitzer, F. Interaction of Markov processes. Adv. Math. 5, 246–290 (1970).

Zia, R., Dong, J. & Schmittmann, B. Modeling translation in protein synthesis with TASEP: a tutorial and recent developments. J. Stat. Phys. 144, 405–428 (2011).

Shaw, L. B., Zia, R. K. & Lee, K. H. Totally asymmetric exclusion process with extended objects: a model for protein synthesis. Phys. Rev. E Stat. Nonlinear Soft Matter Phys. 68, 021910 (2003).

Schadschneider, A., Chowdhury, D. & Nishinari, K. Stochastic Transport in Complex Systems: From Molecules to Vehicles (Elsevier, 2011).

Pinkoviezky, I. & Gov, N. Transport dynamics of molecular motors that switch between an active and inactive state. Phys. Rev. E 88, 022714 (2013).

Derrida, B., Domany, E. & Mukamel, D. An exact solution of a one-dimensional asymmetric exclusion model with open boundaries. J. Stat. Phys. 69, 667–687 (1992).

Derrida, B., Evans, M. R., Hakim, V. & Pasquier, V. Exact solution of a 1D asymmetric exclusion model using a matrix formulation. J. Phys. A: Math. Gen. 26, 1493 (1993).

Reuveni, S., Meilijson, I., Kupiec, M., Ruppin, E. & Tuller, T. Genome-scale analysis of translation elongation with a ribosome flow model. PLoS Comp. Biol. 7, e1002127 (2011).

Zarai, Y., Margaliot, M. & Tuller, T. Ribosome flow model with extended objects. J. R. Soc. Interface 14, 20170128 (2017).

Tuller, T. et al. Composite effects of gene determinants on the translation speed and density of ribosomes. Genome Biol. 12, R110 (2011).

Dana, A. & Tuller, T. Efficient manipulations of synonymous mutations for controlling translation rate-an analytical approach. J. Comput. Biol. 19, 200–231 (2012).

Diament, A. et al. The extent of ribosome queuing in budding yeast. PLoS Comput. Biol. 14, e1005951 (2018).

Margaliot, M., Sontag, E. D. & Tuller, T. Entrainment to periodic initiation and transition rates in a computational model for gene translation. PLoS One 9, e96039 (2014).

Margaliot, M. & Tuller, T. Stability analysis of the ribosome flow model. IEEE/ACM Trans. Comput. Biol. Bioinform. 9, 1545–1552 (2012).

Poker, G., Margaliot, M. & Tuller, T. Sensitivity of mRNA translation. Sci. Rep. 5, 1–11 (2015).

Margaliot, M., Tuller, T. & Sontag, E. D. Checkable conditions for contraction after small transients in time and amplitude. In Feedback Stabilization of Controlled Dynamical Systems: In Honor of Laurent Praly (ed. Petit, N.) 279–305 (Springer International Publishing, 2017).

Horn, R. A. & Johnson, C. R. Matrix Analysis 2nd edn. (Cambridge University Press, 2013).

Poker, G., Zarai, Y., Margaliot, M. & Tuller, T. Maximizing protein translation rate in the nonhomogeneous ribosome flow model: a convex optimization approach. J. R. Soc. Interface 11, 20140713 (2014).

Magnus, J. On differentiating eigenvalues and eigenvectors. Econom. Theory 1, 179–191 (1985).

Margaliot, M. & Tuller, T. Ribosome flow model with positive feedback. J. R. Soc. Interface 10, 20130267 (2013).

Zarai, Y., Margaliot, M. & Tuller, T. On the ribosomal density that maximizes protein translation rate. PLoS One 11, 1–26 (2016).

Zarai, Y., Margaliot, M. & Tuller, T. Optimal down regulation of mRNA translation. Sci. Rep. 7, 41243 (2017).

Zarai, Y., Margaliot, M. & Tuller, T. A deterministic mathematical model for bidirectional excluded flow with Langmuir kinetics. PLoS One 12, e0182178 (2017).

Raveh, A., Margaliot, M., Sontag, E. & Tuller, T. A model for competition for ribosomes in the cell. J. R. Soc. Interface 13, 20151062 (2016).

Miller, J., Al-Radhawi, M. A. & Sontag, E. D. Mediating ribosomal competition by splitting pools. IEEE Control Syst. Lett. (2020) To appear.

Nanikashvili, I., Zarai, Y., Ovseevich, A., Tuller, T. & Margaliot, M. Networks of ribosome flow models for modeling and analyzing intracellular traffic. Sci. Rep. 9, 1703 (2019).

Raveh, A., Zarai, Y., Margaliot, M. & Tuller, T. Ribosome flow model on a ring. IEEE/ACM Trans. Comput. Biol. Bioinform. 12, 1429–1439 (2015).

Zarai, Y., Ovseevich, A. & Margaliot, M. Optimal translation along a circular mRNA. Sci. Rep. 7, 9464 (2017).

Zarai, Y., Margaliot, M. & Kolomeisky, A. B. A deterministic model for one-dimensional excluded flow with local interactions. PLoS One 12, 1–23 (2017).

Bar-Shalom, E., Ovseevich, A. & Margaliot, M. Ribosome flow model with different site sizes. SIAM J. Appl. Dyn. Syst. 19, 541–576 (2020).

Edri, S., Gazit, E., Cohen, E. & Tuller, T. The RNA polymerase flow model of gene transcription. IEEE Trans. Biomed. Circuits Syst. 8, 54–64 (2014).

Dana, A. & Tuller, T. The effect of tRNA levels on decoding times of mRNA codons. Nucleic Acids Res. 42, 9171–9181 (2014).

Paulsson, J. Summing up the noise in gene networks. Nature 427, 415–418 (2004).

Hausser, J., Mayo, A., Keren, L. & Alon, U. Central dogma rates and the trade-off between precision and economy in gene expression. Nat. Commun. 10, 1–15 (2019).

McAdams, H. H. & Arkin, A. Stochastic mechanisms in gene expression. Proc. Natl. Acad. Sci. 94, 814–819 (1997).

Sharma, R. Extrinsic noise acts to lower protein production at higher translation initiation rates. bioRxiv (2020).

Zarai, Y. & Tuller, T. Oscillatory behavior at the translation level induced by mRNA levels oscillations due to finite intracellular resources. PLoS Comput. Biol. 14, e1006055 (2018).

Dana, A. & Tuller, T. Properties and determinants of codon decoding time distributions. BMC Genom. Suppl. 6, S13 (2014).

Zarai, Y., Mendel, O. & Margaliot, M. Analyzing linear communication networks using the ribosome flow model. In Proc. 2015 IEEE International Conference on Computer and Information Technology; Ubiquitous Computing and Communications; Dependable, Autonomic and Secure Computing; Pervasive Intelligence and Computing 755–761 (2015).

Zarai, Y. & Margaliot, M. On minimizing the maximal characteristic frequency of a linear chain. IEEE Trans. Autom. Control 62, 4827–4833 (2017).

Dyson, F. The dynamics of a disordered linear chain. Phys. Rev. 92, 1331–1338 (1953).

Zarai, Y., Margaliot, M. & Tuller, T. Modeling and analyzing the flow of molecular machines in gene expression. In Systems Biology (eds. Rajewsky, N., Jurga, S. & Barciszewski, J.) 275–300 (Springer, Cham, 2018).

Bai, Z. & Silverstein, J. W. Spectral Analysis of Large Dimensional Random Matrices (Springer-Verlag, New York, 2010).

Da Fonseca, C. M. & Kowalenko, V. Eigenpairs of a family of tridiagonal matrices: three decades later. Acta Mathematica Hungarica 160, 376–389 (2020).

Acknowledgements

The authors thank Yoram Zarai for helpful comments. The work of MM is partially supported by a research Grant from the Israel Science Foundation (ISF). We thank the anonymous reviewers and the editor for many helpful comments and for the timely review process.

Author information

Contributions

All authors performed the research and wrote the paper together.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Margaliot, M., Huleihel, W. & Tuller, T. Variability in mRNA translation: a random matrix theory approach. Sci Rep 11, 5300 (2021). https://doi.org/10.1038/s41598-021-84738-0

DOI: https://doi.org/10.1038/s41598-021-84738-0

- Springer Nature Limited