Abstract

Biomarker selection and cancer classification play an important role in knowledge discovery using genomic data. Successful identification of gene biomarkers and biological pathways can significantly improve the accuracy of diagnosis and help machine learning models classify different types of cancer more accurately. In this paper, we propose a LogSum + L2 penalized logistic regression model and use a coordinate descent algorithm to solve it. The results of simulations and real experiments indicate that the proposed method is highly competitive among several state-of-the-art methods. The proposed model achieves excellent performance in group feature selection and classification problems.

Introduction

With the development of DNA microarray technology1,2, biological researchers can analyze the expression levels of thousands of genes simultaneously. Many studies have shown that microarray data can be used to classify different types of cancer and to address related questions, such as how long the incubation period is and which drugs are effective during diagnosis and treatment.

From a biological point of view3, only a small number of genes (biomarkers) strongly indicate the target cancer, while other genes are not related to the disease. Therefore, data with unrelated genes may introduce noise and make it harder for machine learning approaches to find the pathogenic genes that cause the disease. Moreover, from a machine learning perspective, the large number of genes (features) combined with few samples may cause overfitting4 and have a negative impact on classification performance. Because of these issues, effective gene (biomarker) selection methods are needed to help classify different cancer types and improve prediction accuracy.

In recent years, many methods for gene selection in microarray datasets have been developed; they can generally be divided into three categories: filters, wrappers, and embedded methods. Filter methods5,6,7,8 evaluate genes based on their discriminative power without considering their regulation correlations with other genes. The main disadvantage of filter methods is that they examine each gene separately and treat the genes as independent, thereby ignoring the possibility that genes have combined and grouping effects. This is a common problem with statistical methods such as the t-test, which also examine each gene individually.

Wrapper methods9,10,11 use feature assessment measures based on learning performance to select subsets of genes. Generally, they can identify a small number of related genes that notably improve learning performance. In some cases, the results of wrapper methods are better than those of filter methods. However, the main drawback of wrapper methods is their high computational cost.

A third set of feature selection approaches is the embedded methods12,13,14,15,16,17,18,19,20,21,22,23,24,25,26, which perform feature selection as part of the learning procedure in a single process. At similar learning performance, embedded methods are computationally more efficient than wrapper approaches. Hence, embedded methods have recently attracted a lot of attention in the literature. Regularization methods are important embedded techniques that perform feature selection and model training simultaneously. Many regularization methods have been proposed, such as Lasso12, SCAD13, adaptive Lasso14, MCP15, Lq (0 < q < 1)16, L1/217,18, LogSum19, etc. These methods perform well when features are selected independently. When the features are highly correlated, regularization methods that account for the grouping effect can be used to select groups of relevant features, such as group Lasso20, Elastic net21, Fused Lasso22, OSCAR23, adaptive Elastic net24, SCAD-L225, and L1/2 + L226.

On the other hand, many machine learning models have been used to analyze microarray gene expression data for cancer classification. For example, Furey et al. used support vector machines (SVMs) to classify cell and tissue types27. Medjahed et al. applied K-nearest neighbors (K-NN) to the diagnosis and classification of breast cancer28. Meanwhile, some researchers used logistic regression with optimization methods for binary cancer classification29,30,31,32,33. However, the traditional logistic regression model has two obvious shortcomings:

1. Feature selection problem.

All or most of the feature coefficients obtained by fitting the logistic regression model are nonzero, i.e., almost all of the features appear related to the classification target and the solution is not sparse. However, in many practical problems only a few key factors actually affect the model. This non-sparseness of logistic models increases the computational complexity and makes the results harder to interpret in practice.

2. Overfitting problem.

Logistic regression models can often achieve good precision on the training data, but the classification accuracy on test data outside the training set is not ideal. In fact, not only logistic regression but also many other data analysis models are affected by overfitting, which has become one of the hot research topics in statistics, machine learning and other fields.

In recent years, there has been growing interest in applying regularization techniques to logistic regression models to address these two shortcomings. For example, Tibshirani and Friedman34,35 proposed sparse logistic regression based on Lasso regularization and coordinate descent methods. Algamal et al.36,37 proposed the adaptive Lasso and the adjusted adaptive elastic net for gene selection in high-dimensional cancer classification. Similar to sparse logistic regression with L1 regularization, Cawley and Talbot30 investigated sparse logistic regression with Bayesian regularization. Liang et al.38 investigated the sparse logistic regression model with the L1/2 penalty for gene selection in cancer classification.

Inspired by the methods mentioned above, in this paper we propose a LogSum + L2 penalized logistic regression model. The main contributions of this paper are as follows.

1. Our proposed method can not only select sparse features (biomarkers), but also identify groups of relevant features (gene pathways). A coordinate descent algorithm is used to solve the LogSum + L2 penalized logistic regression model.

2. We also evaluate the capability of our proposed method and compare its performance with other regularization methods. The results of simulations and real experiments indicate that the proposed method is highly competitive among several state-of-the-art methods.

The rest of this paper is organized as follows. The “Related works” section introduces the related work. The “Methods” section presents the LogSum + L2 penalized logistic regression model and its optimization algorithm. The experimental results section analyzes the results on the simulated data and on the real data. The “Discussion and conclusion” section concludes this paper.

Related works

Sparse penalized logistic regression

We focus on binary classification using logistic regression (LR), which is a statistical method for modeling a binary classification problem. Suppose we have n samples and p genes. The gene matrix X and the vector y are the predictors and the dependent variable, respectively, and the n samples together form the dataset D. \(x_{ij}\) denotes the value of gene \(j\) for the \(i{\rm th}\) sample, and \(y_{i}\) is the corresponding label, which takes a value of 0 or 1: \(y_{i}\) = 0 indicates that the \(i{\rm th}\) sample is in Class 1 and \(y_{i}\) = 1 indicates that the \(i{\rm th}\) sample is in Class 2. Then, we define a classifier \(f(x) = \frac{{e^{x} }}{{(1 + e^{x} )}}\) such that, for any input x with class label \(y\), \(f(x)\) predicts y correctly. The \(LR\) model is given as follows:

In Eq. (1), \(\beta { = (}\beta_{0} {,}\beta_{1} {,}...{,}\beta_{p} {)}\) are the coefficients to be estimated, where \(\beta_{0}\) is the intercept. The log-likelihood function derived from Eq. (1) is defined as:

Then we can obtain the coefficients \(\beta\) by minimizing Eq. (2). In the cancer classification problem with high-dimensional, low-sample-size data \((p \gg n)\), directly solving the logistic model (2) leads to overfitting. Therefore, to solve this problem, we add a regularization term to (2), and the sparse logistic regression can be modelled as:

where \(l(\beta )\) is the loss function, \(p(\beta )\) is the penalty function, and \(\lambda > 0\) is a control parameter.
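As an illustration of the structure "loss plus \(\lambda\) times penalty" described above, the following minimal Python sketch evaluates a penalized logistic objective. It is not taken from the paper: the function names, the unnormalized sum over samples, the unpenalized intercept and the toy data are all assumptions made for the example, and the L1 penalty is used only as a simple stand-in for \(p(\beta )\).

```python
import math

def sigmoid(t):
    """Logistic function f(t) = e^t / (1 + e^t), written to avoid overflow."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)

def neg_log_likelihood(beta0, beta, X, y):
    """Negative log-likelihood of a logistic model (assumed unnormalized sum)."""
    total = 0.0
    for xi, yi in zip(X, y):
        p = sigmoid(beta0 + sum(b * v for b, v in zip(beta, xi)))
        p = min(max(p, 1e-12), 1.0 - 1e-12)  # guard against log(0)
        total -= yi * math.log(p) + (1 - yi) * math.log(1.0 - p)
    return total

def l1_penalty(beta):
    """Example penalty p(beta): the L1 norm (Lasso)."""
    return sum(abs(b) for b in beta)

def penalized_objective(beta0, beta, X, y, lam, penalty):
    """l(beta) + lam * p(beta); the intercept beta0 is left unpenalized here."""
    return neg_log_likelihood(beta0, beta, X, y) + lam * penalty(beta)

# Toy data (hypothetical): 4 samples, 2 features.
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.0, 0.0]]
y = [1, 0, 1, 0]
beta = [2.0, -1.0]
plain = penalized_objective(0.0, beta, X, y, 0.0, l1_penalty)
lasso = penalized_objective(0.0, beta, X, y, 0.5, l1_penalty)
```

Any other penalty discussed in this section can be passed in place of `l1_penalty`, as long as it maps the coefficient vector to a single number.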

A coordinate descent algorithm for different thresholding operators

The coordinate descent algorithm is a “one-at-a-time” approach40. Before considering the coordinate descent algorithm for the nonlinear logistic regularization, we first introduce the linear regression case. The objective function of the linear regression is as follows:

where \(y = (y_{1} , \ldots ,y_{n} )^{T}\) is the vector of n response variables, \(X_{i} = (x_{i1} ,x_{i2} , \ldots ,x_{ip} )\) is the ith input vector with dimensionality \(p\), and \(y_{i}\) is the corresponding response variable. \(||.||\) denotes the \(L_{2}\)-norm.

The “one-at-a-time” coordinate descent algorithm solves for \(\beta_{j}\) while the other coefficients \(\beta_{k \ne j}\) (the coefficients that remain after the \(j\hbox{th}\) element \(\beta_{j}\) is removed) are held fixed. Eq. (4) can be rewritten as:

In Eq. (5), k indexes the features other than the jth feature.

The first order derivative at \(\beta_{j}\) can be estimated as:

We define \(\tilde{y}_{i}^{\left( j \right)} = \mathop \sum _{k \ne j} x_{ik} \beta_{k}\) as the fitted value that excludes the contribution of the jth feature, \(\tilde{r}_{i}^{\left( j \right)} = y_{i} - \tilde{y}_{i}^{\left( j \right)}\), and \(w_{j} = \sum_{i = 1}^{n} {x_{ij} \tilde{r}_{i}^{(j)} }\), where \(\tilde{r}_{i}^{(j)}\) represents the partial residual with respect to the jth feature.
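The partial-residual quantities defined above can be sketched in a few lines of Python. This is an illustrative sketch, not the authors' implementation: the function names are hypothetical, and the unpenalized update \(\beta_{j} = w_{j} / \sum_{i} x_{ij}^{2}\) is the ordinary least-squares coordinate step, used here only to show how \(w_{j}\) drives a "one-at-a-time" sweep.

```python
def partial_residual_stat(X, y, beta, j):
    """Return w_j = sum_i x_ij * (y_i - sum_{k != j} x_ik * beta_k)."""
    w_j = 0.0
    for xi, yi in zip(X, y):
        fitted_without_j = sum(xi[k] * beta[k] for k in range(len(beta)) if k != j)
        w_j += xi[j] * (yi - fitted_without_j)
    return w_j

def coordinate_descent_ls(X, y, n_sweeps=50):
    """Unpenalized coordinate descent for least squares (illustration only)."""
    p = len(X[0])
    beta = [0.0] * p
    for _ in range(n_sweeps):
        for j in range(p):
            col_ss = sum(xi[j] ** 2 for xi in X)  # sum_i x_ij^2
            beta[j] = partial_residual_stat(X, y, beta, j) / col_ss
    return beta

# Toy data (hypothetical): y is exactly 2*x1 - 1*x2.
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
y = [2.0, -1.0, 1.0]
beta_hat = coordinate_descent_ls(X, y)
```

In the penalized variants discussed next, the division step is replaced by a thresholding operator applied to \(w_{j}\).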

To account for the correlation of features, the Elastic Net (\(L_{EN}\))21 was proposed, which emphasizes a grouping effect. The \(L_{EN}\) penalty function is given as follows:

The penalty function of \(L_{EN}\) is a combination of the \(L_{1}\) penalty and the ridge penalty, which correspond to \(a = 1\) and \(a = 0\), respectively. Therefore, Eq. (6) is rewritten as follows:

Zou and Hastie proposed the univariate solution21 for an \(L_{EN}\) penalized regression coefficient as follows:

where \(S(w_{j} ,\lambda a)\) is the soft thresholding operator, which reduces to that of the \(L_{1}\) penalty when a is equal to 1. Eq. (9) can be divided into three cases as follows:
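A minimal Python sketch of the soft thresholding operator and of an elastic-net coordinate update follows. The three branches of `soft_threshold` are the three cases mentioned above. The scaling \(1 + \lambda (1 - a)\) in `elastic_net_update` is the commonly used glmnet-style convention for standardized predictors and is an assumption here; the function names are hypothetical.

```python
def soft_threshold(w, t):
    """S(w, t): shrink w toward zero by t, and set it to zero if |w| <= t."""
    if w > t:
        return w - t      # case 1: w is above the threshold
    if w < -t:
        return w + t      # case 2: w is below the negative threshold
    return 0.0            # case 3: |w| <= t, coefficient is removed

def elastic_net_update(w, lam, a):
    """Univariate elastic-net update (assumed form): S(w, lam*a) / (1 + lam*(1 - a))."""
    return soft_threshold(w, lam * a) / (1.0 + lam * (1.0 - a))

lasso_value = elastic_net_update(3.0, 1.0, 1.0)   # a = 1: pure L1 penalty
mixed_value = elastic_net_update(3.0, 1.0, 0.5)   # a = 0.5: L1 plus ridge
ridge_value = elastic_net_update(3.0, 1.0, 0.0)   # a = 0: pure ridge shrinkage
```

With a = 1 the update is plain soft thresholding, and with a = 0 no coefficient is set exactly to zero, which is why the ridge part alone does not produce sparsity.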

Fan et al. proposed the SCAD penalty13, which can produce a sparse set of solutions and approximately unbiased estimates for large coefficients. Its penalty function is shown as follows:

Additionally, the SCAD thresholding operator is given as follows:

Like the SCAD penalty, Zhang et al. proposed the minimax concave penalty (MCP)15. Its penalty function is:

And the MCP thresholding operator is given as follows:

In Eq. (14), \(\gamma\) is an empirically chosen parameter.
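For orientation, the sketch below implements the widely used closed forms of the SCAD and MCP thresholding operators for standardized predictors. These are the standard textbook forms and are assumed here, since they may differ in notation from Eqs. (12) and (14); the function names and the default SCAD parameter `a = 3.7` are likewise assumptions.

```python
def soft_threshold(w, t):
    """S(w, t) = sign(w) * max(|w| - t, 0)."""
    if w > t:
        return w - t
    if w < -t:
        return w + t
    return 0.0

def scad_threshold(w, lam, a=3.7):
    """SCAD thresholding (standard form, requires a > 2)."""
    if abs(w) <= 2.0 * lam:
        return soft_threshold(w, lam)
    if abs(w) <= a * lam:
        return soft_threshold(w, a * lam / (a - 1.0)) / (1.0 - 1.0 / (a - 1.0))
    return w  # large coefficients are left unshrunk

def mcp_threshold(w, lam, gamma):
    """MCP thresholding (standard form, requires gamma > 1)."""
    if abs(w) <= gamma * lam:
        return soft_threshold(w, lam) / (1.0 - 1.0 / gamma)
    return w  # large coefficients are left unshrunk

small = mcp_threshold(0.5, 1.0, 3.0)   # below lam: set to zero
medium = mcp_threshold(2.0, 1.0, 3.0)  # shrunk, but less than the Lasso would
large = mcp_threshold(4.0, 1.0, 3.0)   # beyond gamma*lam: returned unchanged
```

Both operators return large inputs unchanged, which is the source of the near-unbiasedness for large coefficients mentioned above.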

Xu et al. proposed \(L_{1/2}\) regularization17, and its penalty function can be written as:

Then the univariate half thresholding operator for an \(L_{1/2}\) penalized linear regression coefficient is given as follows:

In Eq. (16), \(\phi_{\lambda } (w) = \frac{\lambda }{8}\left( {\frac{\left| w \right|}{3}} \right)^{{ - \frac{3}{2}}}\).

To account for the correlation of genes, Huang et al. proposed \(HLR\) regularization26, under which Eq. (15) can be rewritten as:

And the univariate half thresholding operator for the \(HLR\) penalized linear regression coefficient is as follows:

Theoretically, \(L_{0}\) regularization produces sparser solutions, but solving it is an NP-hard problem. Therefore, Candes et al.19 proposed the \(LogSum\) penalty, which approximates \(L_{0}\) regularization much more closely. We can write the penalty function of the \(LogSum\) regularization as follows:

where \(\varepsilon > 0\) should be set small so that the \(LogSum\) penalty closely resembles the \(L_{0}\)-norm. Equation (19) has a local minimum39:

where \(\lambda > 0\), \(0 < \varepsilon < \sqrt \lambda\), \(c_{1} = w_{j} - \varepsilon\), \(c_{2} = c_{1}^{2} - 4(\lambda - w_{j} \varepsilon )\).
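The constants \(c_{1}\) and \(c_{2}\) above are the linear coefficient and the discriminant of the quadratic obtained from the stationarity condition \(\beta - w_{j} + \lambda /(\beta + \varepsilon ) = 0\) for \(\beta > 0\). The Python sketch below returns the larger root when it exists. It is a reconstruction under assumptions, not the paper's Eq. (20): \(|w_{j}|\) is used in place of \(w_{j}\) so that negative inputs are handled by symmetry, and the function name is hypothetical.

```python
import math

def logsum_threshold(w, lam, eps):
    """LogSum thresholding sketch: larger root of the stationarity quadratic, or 0."""
    a = abs(w)
    c1 = a - eps
    c2 = c1 * c1 - 4.0 * (lam - a * eps)   # discriminant of the quadratic
    if c2 <= 0.0:
        return 0.0                          # no real stationary point: coefficient is zero
    root = (c1 + math.sqrt(c2)) / 2.0       # larger root, the local minimum
    if root <= 0.0:
        return 0.0
    return math.copysign(root, w)

b = logsum_threshold(3.0, 1.0, 0.1)
stationarity_residual = b - 3.0 + 1.0 / (b + 0.1)  # should be numerically zero
```

A fuller implementation might also compare the objective value at the root with its value at zero before accepting the root; that check is omitted here.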

Methods

LogSum + L2 penalized logistic regression model

In this paper, we propose the LogSum + L2 penalized logistic regression model for feature group selection. The LogSum + L2 penalty can be written as follows:

where \(\left\| {y - X\beta } \right\|^{2}\) is the loss function, \((y,X)\) is a data set, \(\varepsilon > 0\) is a constant, \(\lambda>0\), \(\lambda_{1} \ge 0\) and \(\lambda_{2} \ge 0\) are regularization parameters that control the complexity of the penalty function.

Figure 1 shows two-dimensional contour plots of the penalty functions of the L1, LEN, HLR and LogSum + L2 approaches. It demonstrates that the LogSum + L2 penalty is non-convex for the given parameters \({\lambda }_{1}\) and \({\lambda }_{2}\) in Eq. (21).

The LogSum + L2 thresholding operator is given as follows:

where \({\lambda} = 2\sqrt {\lambda_{1} \left( {1 + 2\lambda_{2} } \right)} - \left( {1 + 2\lambda_{2} } \right)\varepsilon\), \(\lambda_{1} + \lambda_{2} = 1\).

The proof of Eq. (22) is given as follows:

Consider a regression model of the following form:

where the response \(y \in R^{n}\), the predictors \(X = (x_{1} ,x_{2} ,...,x_{p} ),X \in R^{n \times p}\), and the error terms \(e = (e_{1} ,e_{2} ,...,e_{n} )\) are i.i.d. with mean 0 and variance \(\sigma^{2}\).

The \(Logsum + L_{2}\) regularization can be expressed as:

Its first partial derivative with respect to \(\beta_{k}\) is given as follows:

Equation (25) is obtained under the condition that the design matrix \(X\) is orthonormal. By setting the first partial derivative equal to zero, we obtain the estimator with its kth element \(\hat{\beta }_{k}\).

We first consider the case \(\beta_{k} > 0\). Let \(r_{i}^{(k)} = y_{i} - \sum_{j \ne k}^{p} {x_{ij} \beta_{j} }\) and \({w}_{k}=\sum_{i=1}^{n}{r}_{i}^{\left(k\right)}(-{x}_{ik})\). Setting the first partial derivative \(\frac{{\partial l_{{Logsum + L_{2} }} }}{{\partial \beta_{k} }} = 0\), we have:

and Eq. (26) is equivalent to the following:

Let

$$\Delta ={\left({w}_{k}+\left(1+2{\lambda }_{2}\right)\varepsilon \right)}^{2}-4{\lambda }_{1}\left(1+2{\lambda }_{2}\right)$$

We discuss the solutions of Eq. (27) according to the value of \(\Delta\).

1. If \(\Delta < 0\), Eq. (27) has no real root.

2. If \(\Delta = 0\), Eq. (27) has a unique root, that is, \({\widehat{\beta }}_{k}=\frac{{w}_{k}-\left(1+2{\lambda }_{2}\right)\varepsilon }{2\left(1+2{\lambda }_{2}\right)}\).

3. If \(\Delta > 0\), Eq. (27) has two roots, and we have

$${{(w}_{k}+\left(1+2{\lambda }_{2}\right)\varepsilon )}^{2}>4{\lambda }_{1}\left(1+2{\lambda }_{2}\right)$$

$${w}_{k}+\left(1+2{\lambda }_{2}\right)\varepsilon >2\sqrt{{\lambda }_{1}\left(1+2{\lambda }_{2}\right)}$$

Therefore, when \({w}_{k}\ge 2\sqrt{{\lambda }_{1}\left(1+2{\lambda }_{2}\right)}-\left(1+2{\lambda }_{2}\right)\varepsilon\), we obtain the estimator

For \({\beta }_{k}<0\), we can obtain the estimator in a similar way. Finally, we obtain the thresholding function of the \(Logsum + L_{2}\) regularization as Eq. (22).
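The case analysis above can be sketched as a small Python function. This is a reconstruction from the quantities stated in the text (the threshold \(2\sqrt{\lambda_{1}(1+2\lambda_{2})} - (1+2\lambda_{2})\varepsilon\), the discriminant condition, and the root at \(\Delta = 0\)), not a copy of Eq. (22). It assumes the stationarity condition \((1+2\lambda_{2})\beta - w + \lambda_{1}/(\beta + \varepsilon ) = 0\) for \(\beta > 0\), takes the larger root, and handles negative inputs by symmetry; the function name is hypothetical.

```python
import math

def logsum_l2_threshold(w, lam1, lam2, eps):
    """LogSum + L2 thresholding sketch (assumed form, see lead-in)."""
    k = 1.0 + 2.0 * lam2
    threshold = 2.0 * math.sqrt(lam1 * k) - k * eps
    a = abs(w)
    if a < threshold:
        return 0.0                                   # below the threshold: set to zero
    delta = (a + k * eps) ** 2 - 4.0 * lam1 * k      # discriminant, >= 0 at or above the threshold
    root = ((a - k * eps) + math.sqrt(max(delta, 0.0))) / (2.0 * k)
    if root <= 0.0:
        return 0.0
    return math.copysign(root, w)

# Example with lam1 + lam2 = 1, as stated for Eq. (22).
b = logsum_l2_threshold(3.0, 0.5, 0.5, 0.1)
stationarity_residual = (1.0 + 2.0 * 0.5) * b - 3.0 + 0.5 / (b + 0.1)
```

Setting `lam2 = 0` recovers the plain LogSum quadratic with \(\lambda = \lambda_{1}\), which is a quick consistency check on the assumed form.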

For the different thresholding operators, we also discuss three properties that a coefficient estimator should satisfy, as shown in Fig. 2:

(a) Unbiasedness: the resulting estimator is nearly unbiased when the true unknown parameter is large, to avoid unnecessary modeling bias;

(b) Sparsity: the resulting estimator is a thresholding rule, which automatically sets a small estimated coefficient to zero to reduce model complexity;

(c) Continuity: the resulting estimator is continuous, to avoid instability in model prediction.

Figure 2 shows four regularization methods: the \(L_{1}\), \(L_{EN}\), \(HLR\) and \(LogSum + L_{2}\) penalties with an orthogonal design matrix in the regression model. The estimators of \(L_{1}\) and \(L_{EN}\) are biased, whereas the \(HLR\) penalty is asymptotically unbiased. Similar to the \(HLR\) method, the \(LogSum + L_{2}\) approach also performs better than \(L_{1}\) and \(L_{EN}\) in terms of unbiasedness. All four regularization methods fulfil the requirements of sparsity and continuity.

A coordinate descent algorithm for the LogSum + L2 model

Inspired by Liang et al.38, Eq. (3) is linearized by one-term Taylor series expansion:

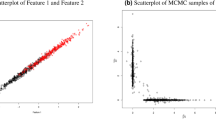

where \(\varepsilon > 0\), \(Z_{i} = X_{i} \tilde{\beta } + \frac{{Y_{i} - f(X_{i} \tilde{\beta })}}{{f(X_{i} \tilde{\beta })(1 - f(X_{i} \tilde{\beta }))}}\) is the estimated response, \(W_{i} = f(X_{i} \tilde{\beta })(1 - f(X_{i} \tilde{\beta }))\) is the weight and \(f(X_{i} \tilde{\beta }) = \frac{{exp(X_{i} \tilde{\beta })}}{{1 + exp(X_{i} \tilde{\beta })}}\). Redefine the partial residual for fitting current \(\tilde{\beta }_{j}\) as \(\tilde{Z}_{i}^{(j)} = \sum_{k \ne j} {x_{ik} \tilde{\beta }_{k} }\) and \(w_{j} = \sum_{i = 1}^{n} {W_{i} x_{ij} } (Z_{i} - \tilde{Z}_{i}^{(j)} )\). A pseudocode of the coordinate descent algorithm for the \(Logsum + L_{2}\) penalized logistic regression model is shown in Algorithm 1 (Fig. 3).
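The working response \(Z_{i}\), the weight \(W_{i}\) and the weighted statistic \(w_{j}\) defined above can be computed as in the following Python sketch. It is illustrative only: the function names are hypothetical, the intercept is assumed to be handled as a constant column of X if needed, and the small floor on \(W_{i}\) is an added numerical safeguard that is not part of the text.

```python
import math

def sigmoid(t):
    """Logistic function f(t) = exp(t) / (1 + exp(t)), written to avoid overflow."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)

def working_response_and_weights(X, y, beta):
    """Return (Z, W) for the current estimate beta, following the definitions in the text."""
    Z, W = [], []
    for xi, yi in zip(X, y):
        eta = sum(v * b for v, b in zip(xi, beta))   # X_i * beta
        p = sigmoid(eta)
        w = max(p * (1.0 - p), 1e-10)                # floor avoids division by zero (assumption)
        Z.append(eta + (yi - p) / w)
        W.append(w)
    return Z, W

def weighted_stat(X, Z, W, beta, j):
    """w_j = sum_i W_i * x_ij * (Z_i - sum_{k != j} x_ik * beta_k)."""
    total = 0.0
    for xi, zi, wi in zip(X, Z, W):
        fitted_without_j = sum(xi[k] * beta[k] for k in range(len(beta)) if k != j)
        total += wi * xi[j] * (zi - fitted_without_j)
    return total

# Toy data (hypothetical): two samples, two features, starting from beta = 0.
X = [[1.0, 0.0], [0.0, 1.0]]
y = [1, 0]
beta = [0.0, 0.0]
Z, W = working_response_and_weights(X, y, beta)
w0 = weighted_stat(X, Z, W, beta, 0)
w1 = weighted_stat(X, Z, W, beta, 1)
```

In a full coordinate descent sweep, each \(w_{j}\) would be passed through the thresholding operator and \(Z\) and \(W\) would be recomputed after the coefficients change.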

Experimental results and discussion

Analysis on simulated data

In this section, we analyze the performance of the proposed method (the \(LogSum + L_{2}\) penalized logistic regression model) by simulation. We compare the proposed method with three other methods, namely logistic regression with \(L_{1}\), \(L_{EN}\) and \(HLR\) regularization. We simulate data from the true model:

where \(X\sim N(0, \, 1)\), \(\varepsilon\) is the independent random error and σ is the parameter that controls the signal-to-noise ratio. Two scenarios are presented here. In each scenario, the dimension of the features is 1000. The details of the two scenarios are as follows.

1. In Scenario 1, the dataset consists of 200 observations, and we set σ = 0.3 to simulate the grouped-feature situation.

$$\beta = \left( {\underbrace {2,2,2,2,2}_{5},\underbrace {0, \ldots ,0}_{995}} \right);$$

$$x_{i} = \rho \times x_{1} + (1 - \rho ) \times x_{i} , \, i = 2,3,4,5;$$

where \(\rho\) is the correlation coefficient of the group features.

In this example, there is one set of related features. The ideal sparse regression method should select the 5 true features and set the coefficients of the other 995 noise features to zero.

2. In Scenario 2, the dataset consists of 400 observations, and we set σ = 0.4 and define two groups of features.

$$\beta = \left( {\underbrace {2,2,2,2,2,1.5, - 2,1.7,3, - 2.5,}_{10}\underbrace {3, \ldots ,3}_{10}\underbrace {0, \ldots ,0}_{980}} \right);$$

$${x}_{i}=\rho \times {x}_{1}+\left(1-\rho \right)\times {x}_{i},i=\mathrm{2,3},\dots ,10;$$

$${x}_{i}=\rho \times {x}_{11}+\left(1-\rho \right)\times {x}_{i},i=\mathrm{12,13},\dots ,20;$$

In this example, there are two sets of related group features. The ideal penalized logistic regression method should select the 20 true features and set the coefficients of the other 980 noise features to zero.
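To make the Scenario 1 design concrete, the following Python sketch generates one dataset of that shape. It is a sketch under stated assumptions rather than the authors' generator: the labeling rule (class 1 when \(X\beta + \sigma \varepsilon > 0\)), the standard normal noise, the seed handling and the function name are all assumptions, since the true-model equation is not reproduced in the text above.

```python
import random

def simulate_scenario1(n=200, p=1000, rho=0.2, sigma=0.3, seed=0):
    """Generate (X, y, beta) in the style of Scenario 1 (assumed labeling rule)."""
    rng = random.Random(seed)
    beta = [2.0] * 5 + [0.0] * (p - 5)               # 5 true features, the rest are noise
    X = [[rng.gauss(0.0, 1.0) for _ in range(p)] for _ in range(n)]
    for row in X:
        # x_i = rho * x_1 + (1 - rho) * x_i for i = 2..5 (indices 1..4 here)
        for i in range(1, 5):
            row[i] = rho * row[0] + (1.0 - rho) * row[i]
    y = []
    for row in X:
        eta = sum(b * v for b, v in zip(beta[:5], row[:5])) + sigma * rng.gauss(0.0, 1.0)
        y.append(1 if eta > 0.0 else 0)              # assumed thresholding of the noisy linear score
    return X, y, beta

# Small example call; the paper's setting would use the default n and p.
X, y, beta = simulate_scenario1(n=20, p=30, rho=0.6, seed=1)
```

Scenario 2 would follow the same pattern with 400 observations, σ = 0.4, the 20-element coefficient vector given above, and two correlated groups built from \(x_{1}\) and \(x_{11}\).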

In this experiment, we set the correlation coefficient \(\rho\) of the features to 0.2 and 0.6 in turn, in order to observe the test accuracy under different correlations. The \(L_{1}\) and \(L_{EN}\) approaches were executed with Glmnet (http://web.stanford.edu/~hastie/glmnet_matlab/, MATLAB version 2014-a). We use tenfold cross-validation (CV) to optimize the regularization or tuning parameters (which balance the tradeoff between data fit and model complexity) of the \(L_{1}\), \(L_{EN}\), \(HLR\) and \(LogSum + L_{2}\) approaches.

First, we divided the datasets at random into training sets and test sets. In our experiment, approximately 70% of the samples are used as the training set, and the rest are used as the test set. We repeated the simulations 30 times for each penalty method and computed the mean classification accuracy, sensitivity and specificity on the training and test datasets, respectively. To evaluate the quality of the features selected by the regularization approaches, the sensitivity and specificity of the feature selection performance39 were defined as follows:

where \(.*\) is the element-wise product, \(\left| . \right|_{0}\) counts the number of non-zero elements in a vector, and \(\overline{\beta }\) and \(\overline{\hat{\beta }}\) are the logical “not” of the vectors \(\beta\) and \(\hat{\beta }\).
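The feature-selection measures can be sketched in Python as below. The counts follow the description above (element-wise products of the support indicators of \(\beta\) and \(\hat{\beta }\) and of their logical negations); the final ratios use the usual definitions of sensitivity and specificity, which are assumed to match the omitted display equation. The function name and the example vectors are hypothetical.

```python
def beta_selection_metrics(beta_true, beta_hat):
    """Return (beta-sensitivity, beta-specificity) of the selected support."""
    pairs = list(zip(beta_true, beta_hat))
    tp = sum(1 for t, h in pairs if t != 0 and h != 0)   # |beta .* beta_hat|_0
    fn = sum(1 for t, h in pairs if t != 0 and h == 0)   # |beta .* not(beta_hat)|_0
    fp = sum(1 for t, h in pairs if t == 0 and h != 0)   # |not(beta) .* beta_hat|_0
    tn = sum(1 for t, h in pairs if t == 0 and h == 0)   # |not(beta) .* not(beta_hat)|_0
    sensitivity = tp / (tp + fn) if (tp + fn) > 0 else 0.0
    specificity = tn / (tn + fp) if (tn + fp) > 0 else 0.0
    return sensitivity, specificity

# Two true features, three noise features; one hit, one miss, one false selection.
beta_true = [2.0, 2.0, 0.0, 0.0, 0.0]
beta_hat = [1.0, 0.0, 0.3, 0.0, 0.0]
sens, spec = beta_selection_metrics(beta_true, beta_hat)
```

Only the pattern of zeros and non-zeros matters here, so the magnitude of an estimated coefficient does not affect either measure.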

The training results of the different methods on the simulated datasets are reported in Table 1. For all scenarios, our proposed \(LogSum + L_{2}\) procedure generally achieves higher or comparable classification performance relative to the \(L_{1}\), \(L_{EN}\) and \(HLR\) methods. For example, in Scenario 1 with \(\rho\) = 0.6, our proposed method attained 97.86% accuracy, 95.38% sensitivity and 100% specificity, an improvement of about 6% over the other methods. In both Scenario 1 and Scenario 2, the \(LogSum + L_{2}\) method shows the highest training accuracy for both \(\rho\) = 0.2 and \(\rho\) = 0.6. In summary, across the different scenarios and values of \(\rho\), the LogSum + L2 penalized logistic regression model performs best on the training data.

Table 2 shows the test results of the different methods on the simulated datasets. The performance of the LogSum + L2 penalized logistic regression model is still the best among the four methods. In Scenario 1, the \(LogSum + L_{2}\) approach shows similar values for \(\rho\) = 0.2 and \(\rho\) = 0.6. In Scenario 2, its sensitivity stands far apart from that of the other three methods, whereas its accuracy and specificity are not much different from theirs.

Table 3 shows the feature selection performance of all competing regularization methods in terms of β-sensitivity and β-specificity. The results are broadly similar to those in the previous two tables. For the same \(\rho\) value, the LogSum + L2 penalized logistic regression model selects the greatest number of features and has the highest sensitivity and specificity. Across \(\rho\) values, the performance at \(\rho\) = 0.6 is always higher than the performance at \(\rho\) = 0.2.

Analysis of real data

We use three publicly available lung cancer microarray datasets, downloaded from GEO (https://www.ncbi.nlm.nih.gov/geo/). Details of each dataset are given below:

1. GSE10072: Series GSE10072 is a gene expression signature of cigarette smoking and its role in lung adenocarcinoma development and survival. Tobacco smoking can cause 90% of lung cancer cases, but the molecular changes that lead to cancer development and affect survival are still unclear.

2. GSE19188: Series GSE19188 is a gene expression dataset for early-stage non-small-cell lung carcinoma (NSCLC). 156 tumor and normal samples are aggregated into the expected groups. The prognostic signature of 17 genes showed the best correlation with survival time after surgery.

3. GSE19804: Series GSE19804 is a dataset on genome-wide screening of transcriptional modulation in non-smoking female lung cancer in Taiwan. Although smoking is a major risk factor for lung cancer, only 7% of women with lung cancer in Taiwan have a history of smoking, which is much lower than the proportion among white women. Researchers extracted RNA from paired tumor and normal tissues for gene expression analysis to explain this phenomenon. This dataset and its reports comprehensively analyze the molecular characteristics of lung cancer in non-smoking women in Taiwan.

The GSE10072 dataset contains 22,284 microarray gene expression profiles, and GSE19188 and GSE19804 both have 54,675 microarray gene expression profiles. As with the simulated data, we randomly divide each dataset so that 70% of the samples form the training set and 30% form the test set. A brief introduction to these datasets is given in Table 4.

Table 5 reports the average training and test accuracies obtained by the different variable selection methods on the three datasets. The performance of the LogSum + L2 penalized logistic regression model is better than that of the other three approaches. For example, in terms of training accuracy on the GSE10072 dataset, the \(LogSum + L_{2}\) approach reached 99.43%, while the other three methods reached 98.32%, 99.04% and 98.21%, respectively. On the GSE19188 dataset, the test accuracy of the \(LogSum + L_{2}\) method is 75%, while the other three methods reach 51.46%, 47.56% and 46.19%, respectively. In terms of the number of selected genes, the LogSum + L2 penalized logistic regression model always selects the fewest genes and the \(L_{EN}\) approach selects the most.

To search for the common gene signatures selected by the different methods, we used the VENNY software to generate Venn diagrams. As shown in Fig. 4, we consider the common gene signatures selected by the logistic regression model with \(L_{1}\), \(L_{EN}\), \(HLR\) and \(LogSum + L_{2}\) regularizations, which are the most relevant signatures of lung cancer. Many genes selected by the LogSum + L2 penalized logistic regression model do not appear in the results of the other three regularization methods. For example, the \(LogSum + L_{2}\) approach selects 5, 6, and 3 unique genes from the GSE10072, GSE19188 and GSE19804 datasets, respectively. This means that the LogSum + L2 penalized logistic regression model can find different genes and pathways related to lung cancer compared with the other three regularization methods.

Figures 5, 6 and 7 show the interaction networks of all the features selected by the LogSum + L2 penalized logistic regression model. The integrative networks among these selected features are generated by cBioPortal from publicly available lung cancer datasets. The circles with thick borders represent the selected genes, and the remaining circles are color-coded on a gradient according to the genes' alteration frequencies in the databases. The hexagons represent target drugs, and those in yellow represent drugs approved by the FDA. The links connecting selected genes indicate that they have regulation correlations with group effect.

In the GSE10072 dataset (Fig. 5), we find a gene named EGFR, which has been confirmed as an important target gene of NSCLC40. It belongs to the ERBB receptor tyrosine kinase family, which includes other genes such as HER2, HER3 and HER4. Owing to the observed patterns of oncogenic mutation of EGFR and HER2, many studies report them as attractive options for targeted therapy in patients with NSCLC.

As shown in Fig. 6, three important genes, TUBB1, PRKD1 and STK11, have been selected from the GSE19188 dataset, and the genes PRKD1 and STK11 have a regulation correlation with group effect. In fact, many drugs have been developed to target the gene TUBB1, and many studies report that the genes PRKD1 and STK11 significantly influence patients' survival rates across all tumors41.

As shown in Fig. 7, four important genes, EPCAM, SMC3, HIST1H2BL and LMNA, and their regulation correlations with group effect have been selected from the GSE19804 dataset. Many studies report that the epithelial cell adhesion molecule (EPCAM), a tumor-associated calcium signal transducer, represents a true oncogene, and examine the relationship between the gene EPCAM and NSCLC42.

Table 6 summarizes the genes selected by the LogSum + L2 penalized logistic regression model. At the beginning of the experiments, the genes are identified by probe set ID; we therefore converted the probe set IDs to gene symbols using the DAVID website (https://david.ncifcrf.gov). According to the experimental results, the LogSum + L2 penalized logistic regression model can find some unique genes that cannot be identified by the other regularization models but are significantly related to the disease. Therefore, we believe that the LogSum + L2 penalized logistic regression model can accurately and efficiently identify cancer-related genes.

Discussion and conclusion

Successful identification of gene biomarkers and biological pathways can significantly improve the accuracy of diagnosis and help machine learning models classify different types of cancer more accurately. Many researchers have used logistic regression with optimization methods for binary cancer classification. However, the traditional logistic regression model has two obvious shortcomings: the feature selection problem and the overfitting problem. In this paper, we proposed the \(LogSum + L_{2}\) penalized logistic regression model. Our proposed method can not only select sparse features (biomarkers), but also identify groups of relevant features (gene pathways). A coordinate descent algorithm is used to solve the LogSum + L2 penalized logistic regression model. We also evaluated the capability of our proposed method and compared its performance with other regularization methods. The results of simulations and real experiments indicate that the proposed method is highly competitive among several state-of-the-art methods. The disadvantage of the proposed method is that its three regularization parameters need to be tuned by k-fold cross-validation.

In recent years, an increasing number of associations between microRNAs (miRNAs) and human diseases have been identified. Based on accumulating biological data, many computational models for inferring potential miRNA-disease associations have been developed43,44,45,46. As a future direction of our research, we will apply the proposed LogSum + L2 penalized logistic regression model to identify non-coding RNA biomarkers of complex human diseases.

References

Guyon, I., Weston, J., Barnhill, S. & Vapnik, V. Gene selection for cancer classification using support vector machines. Mach. Learn. 46(1–3), 389–422 (2002).

Heller, M. J. DNA microarray technology: Devices, systems, and applications. Annu. Rev. Biomed. Eng. 4(1), 129–153 (2002).

Greenbaum, D., Colangelo, C., Williams, K. & Gerstein, M. Comparing protein abundance and mRNA expression levels on a genomic scale. Genome Biol. 4(9), 1–8 (2003).

Hawkins, D. M. The problem of overfitting. J. Chem. Inf. Comput. Sci. 44(1), 1–12 (2004).

Dudoit, S., Fridlyand, J. & Speed, T. P. Comparison of discrimination methods for the classification of tumors using gene expression data. J. Am. Stat. Assoc. 97(457), 77–87 (2002).

Li, T., Zhang, C. & Ogihara, M. A comparative study of feature selection and multiclass classification methods for tissue classification based on gene expression. Bioinformatics 20(15), 2429–2437 (2004).

Lee, J. W., Lee, J. B., Park, M. & Song, S. H. An extensive comparison of recent classification tools applied to microarray data. Comput. Stat. Data Anal. 48(4), 869–885 (2005).

Ding, C. & Peng, H. Minimum redundancy feature selection from microarray gene expression data. J. Bioinform. Comput. Biol. 3(02), 185–205 (2005).

Monari, G. & Dreyfus, G. Withdrawing an example from the training set: An analytic estimation of its effect on a non-linear parameterised model. Neurocomputing 35(1–4), 195–201 (2000).

Rivals, I. & Personnaz, L. MLPs (mono-layer polynomials and multi-layer perceptrons) for nonlinear modeling. J. Mach. Learn. Res. 3, 1383–1398 (2003).

Liu, X. Y., Liang, Y., Wang, S., Yang, Z. Y. & Ye, H. S. A hybrid genetic algorithm with wrapper-embedded approaches for feature selection. IEEE Access 6, 22863–22874 (2018).

Guyon, I. & Elisseeff, A. An introduction to variable and feature selection. J. Mach. Learn. Res. 3, 1157–1182 (2003).

Fan, J. & Li, R. Variable selection via nonconcave penalized likelihood and its oracle properties. J. Am. Stat. Assoc. 96(456), 1348–1360 (2001).

Zhang, H. H. & Lu, W. Adaptive Lasso for Cox’s proportional hazards model. Biometrika 94(3), 691–703 (2007).

Zhang, C. H. Nearly unbiased variable selection under minimax concave penalty. Ann. Stat. 38(2), 894–942 (2010).

Rosset, S. & Zhu, J. Piecewise linear regularized solution paths. Ann. Stat. 35, 1012–1030 (2007).

Xu, Z., Zhang, H., Wang, Y., Chang, X. & Liang, Y. L1/2 regularization. Sci. China Inf. Sci. 53(6), 1159–1169 (2010).

Xu, Z., Chang, X., Xu, F. & Zhang, H. L1/2 regularization: A thresholding representation theory and a fast solver. IEEE Trans. Neural Netw. Learn. Syst. 23(7), 1013–1027 (2012).

Candes, E. J., Wakin, M. B. & Boyd, S. P. Enhancing sparsity by reweighted L1 minimization. J. Fourier Anal. Appl. 14(5–6), 877–905 (2008).

Yuan, M. & Lin, Y. Model selection and estimation in regression with grouped variables. J. R. Stat. Soc.: Ser. B (Stat. Methodol.) 68(1), 49–67 (2006).

Zou, H. & Hastie, T. Regularization and variable selection via the elastic net. J. R. Stat. Soc.: Ser. B (Stat. Methodol.) 67(2), 301–320 (2005).

Efron, B., Hastie, T., Johnstone, I. & Tibshirani, R. Least angle regression. Ann. Stat. 32(2), 407–499 (2004).

Fan, J. & Li, R. Variable selection for Cox’s proportional hazards model and frailty model. Ann. Stat. 30, 74–99 (2002).

Zou, H. & Zhang, H. H. On the adaptive elastic-net with a diverging number of parameters. Ann. Stat. 37(4), 1733 (2009).

Zeng, L. & Xie, J. Group variable selection via SCAD-L2. Statistics 48(1), 49–66 (2014).

Huang, H. H., Liu, X. Y. & Liang, Y. Feature selection and cancer classification via sparse logistic regression with the hybrid L1/2+2 regularization. PLoS ONE 11(5), e0149675 (2016).

Furey, T. S. et al. Support vector machine classification and validation of cancer tissue samples using microarray expression data. Bioinformatics 16(10), 906–914 (2000).

Medjahed, S. A., Saadi, T. A. & Benyettou, A. Breast cancer diagnosis by using k-nearest neighbor with different distances and classification rules. Int. J. Comput. Appl. 62(1), 1–5 (2013).

Zhou, X., Liu, K. Y. & Wong, S. T. Cancer classification and prediction using logistic regression with Bayesian gene selection. J. Biomed. Inform. 37(4), 249–259 (2004).

Cawley, G. C. & Talbot, N. L. Gene selection in cancer classification using sparse logistic regression with Bayesian regularization. Bioinformatics 22(19), 2348–2355 (2006).

Algamal, Z. Y. & Lee, M. H. A two-stage sparse logistic regression for optimal gene selection in high-dimensional microarray data classification. Adv. Data Anal. Classif. 13(3), 753–771 (2019).

Algamal, Z. An efficient gene selection method for high-dimensional microarray data based on sparse logistic regression. Electron. J. Appl. Stat. Anal. 10(1), 242–256 (2017).

Shevade, S. K. & Keerthi, S. S. A simple and efficient algorithm for gene selection using sparse logistic regression. Bioinformatics 19(17), 2246–2253 (2003).

Tibshirani, R. Regression shrinkage and selection via the lasso. J. R. Stat. Soc.: Ser. B (Methodol.) 58(1), 267–288 (1996).

Friedman, J., Hastie, T. & Tibshirani, R. Regularization paths for generalized linear models via coordinate descent. J. Stat. Softw. 33(1), 1 (2010).

Algamal, Z. Y. & Lee, M. H. Penalized logistic regression with the adaptive LASSO for gene selection in high-dimensional cancer classification. Expert Syst. Appl. 42(23), 9326–9332 (2015).

Algamal, Z. Y. & Lee, M. H. Regularized logistic regression with adjusted adaptive elastic net for gene selection in high dimensional cancer classification. Comput. Biol. Med. 67, 136–145 (2015).

Liang, Y. et al. Sparse logistic regression with a L1/2 penalty for gene selection in cancer classification. BMC Bioinform. 14(1), 198 (2013).

Xia, L. Y. et al. Descriptor selection via log-sum regularization for the biological activities of chemical structure. Int. J. Mol. Sci. 19(1), 30 (2018).

Jänne, P. A. et al. AZD9291 in EGFR inhibitor–resistant non–small-cell lung cancer. N. Engl. J. Med. 372(18), 1689–1699 (2015).

Nath, A. & Chan, C. Genetic alterations in fatty acid transport and metabolism genes are associated with metastatic progression and poor prognosis of human cancers. Sci. Rep. 6, 18669 (2016).

Pak, M. G., Shin, D. H., Lee, C. H. & Lee, M. K. Significance of EpCAM and TROP2 expression in non-small cell lung cancer. World J. Surg. Oncol. 10(1), 53 (2012).

Chen, X., Wang, L., Qu, J., Guan, N. N. & Li, J. Q. Predicting miRNA–disease association based on inductive matrix completion. Bioinformatics 34(24), 4256–4265 (2018).

Chen, X., Xie, D., Zhao, Q. & You, Z. H. MicroRNAs and complex diseases: From experimental results to computational models. Brief. Bioinform. 20(2), 515–539 (2019).

Chen, X., Yin, J., Qu, J. & Huang, L. MDHGI: Matrix decomposition and heterogeneous graph inference for miRNA-disease association prediction. PLoS Comput. Biol. 14(8), e1006418 (2018).

Chen, X., Yan, C. C., Zhang, X. & You, Z. H. Long non-coding RNAs and complex diseases: From experimental results to computational models. Brief. Bioinform. 18(4), 558–576 (2017).

Funding

This work is supported in part by the Key Project for University of Department of Education of Guangdong Province of China Funds (Natural) under Grant.

Author information

Contributions

X.Y.L. and S.B.W. conceived the study, designed and developed the method, and acquired and analyzed the data and results. W.Q.Z., Z.J.Y. and H.B.X. wrote, reviewed and revised the manuscript. X.Y.L. is the corresponding author. All authors have read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Liu, XY., Wu, SB., Zeng, WQ. et al. LogSum + L2 penalized logistic regression model for biomarker selection and cancer classification. Sci Rep 10, 22125 (2020). https://doi.org/10.1038/s41598-020-79028-0

DOI: https://doi.org/10.1038/s41598-020-79028-0
