Abstract

Holographic display is the only technology that can offer true 3D with all the required depth cues. Holographic head-worn displays (HWD) can provide continuous depth planes with the correct stereoscopic disparity for a comfortable 3D experience. Existing HWD approaches have small field-of-view (FOV) and small exit pupil size, which are limited by the spatial light modulator (SLM). Conventional holographic HWDs are limited to about 20° × 11° FOV using a 4 K SLM panel and have fixed FOV. We present a new optical architecture that can overcome those limitations and substantially extend the FOV supported by the SLM. Our architecture, which does not contain any moving parts, automatically follows the gaze of the viewer’s pupil. Moreover, it mimics human vision by providing varying resolution across the FOV resulting in better utilization of the available space-bandwidth product of the SLM. We propose a system that can provide 28° × 28° instantaneous FOV within an extended FOV (the field of view that is covered by steering the instantaneous FOV in space) of 60° × 40° using a 4 K SLM, effectively providing a total enhancement of > 3 × in instantaneous FOV area, > 10 × in extended FOV area and the space-bandwidth product. We demonstrated 20° × 20° instantaneous FOV and 40° × 20° extended FOV in the experiments.

Similar content being viewed by others

Introduction

Head-worn displays (HWDs) have been gathering more and more attention over the last decade, especially due to the increasing popularity of augmented reality and virtual reality applications1. The majority of HWDs are fixed focus stereoscopic systems2,3, which results in a mismatch between the accommodation (focus) and vergence (rotation of the eyes to maintain single binocular vision) responses and inevitably leads to viewing discomfort4,5.

Holographic HWDs, on the other hand, show computer generated holograms (CGHs) with the correct perspective and all the natural depth cues to each eye by emulating the wavefront pattern that would be emitted from the displayed 3D objects6,7. As a result, the user can focus directly on the gazed 3D object, eliminating the discomfort caused by the vergence-accommodation conflict. In holographic HWDs, CGHs are typically displayed on phase-only spatial light modulators (SLMs)8. The need for relay optics is not present for such displays, since the SLM can be imaged at an arbitrary distance away from the eye9.

Despite their great potential, current holographic HWDs suffer from field-of-view (FOV) and eyebox (exit pupil) limitations, due to the limited pixel counts of currently available SLMs. Total pixel count of the SLM puts an upper limit on the space-bandwidth product (SBP) of the system10,11,12,13. To alleviate the SBP limitations, holographic displays can utilize pupil tracking and deliver the object waves through two small eyeboxes around the eye pupil, which maximizes the FOV attainable from the system14. State-of-the-art SLMs can have resolutions of up to 4 K (3,840 horizontal and 2,160 vertical pixels), a display designed with such an SLM would have a limited SBP (= product of FOV and eyebox size) of 100 deg × mm in the horizontal axis. As an example, one can obtain a horizontal FOV of 20 degrees and an eyebox size of 5 mm. A FOV of 20 degrees is small for a true AR experience and a 5 mm static (non-moving) eyebox is also small for a comfortable viewing experience using a binocular HWD15. In order to match human vision capabilities, one might desire a FOV of 80 degrees and an eyebox size of 15 mm, which will require an SLM with pixel count that is in the order of 50,000 pixels in the horizontal axis (more than gigapixels in total). Such an SLM is beyond the capabilities of current technology and would be overkill from the system design perspective. Various methods have been attempted to increase the eyebox size and the FOV simultaneously with state-of-the-art SLMs, including time multiplexing16,17 and using multiple SLMs for spatial multiplexing18,19,20. Despite their advantages over using a single SLM, these approaches result in complex and high-cost systems.

Since it is not currently possible to rival the FOV of human vision using holographic displays, one solution is to implement a foveated display where only the CGH for the gazed object is being generated and the peripheral image is displayed with a low-resolution microdisplay14. Using the properties of the human visual system is common practice in other display technologies, such as light field displays21,22. It is possible to combine a travelling microdisplay with a wide field-of-view peripheral display and enable natural focus depth using either a light field or a multifocal/varifocal approach23. Various methods have been attempted to steer the FOV from one region to another, which generally involve utilizing motorized optical components. Such solutions suffer from actuator size and synchronization issues between multiple dynamic elements, as it is not possible to steer the FOV with a single dynamic component.

We propose a novel optical architecture for holographic HWD where the SLM is imaged at the rotation center of the eye. In this architecture, instantaneous FOV can be steered without using any dynamic optical components. A particular implementation of such a system in eyeglasses form factor is illustrated in Fig. 1.

Foveated holographic display with the eye-centric design concept in eyeglass format. (a) Red box shows the total FOV that can be delivered by the AR display. Within the FOV, an SLM is used to deliver high-resolution holographic images to the fovea. The peripheral content is delivered by a low-resolution microdisplay (e.g., micro-LED or OLED on transparent substrate) that can be placed on the eyeglass frame. (b) The high-resolution holographic image that is projected to the fovea appears sharp and the peripheral image appears blurred. (c) The eye-centric design ensures that the SLM image follows the fovea for all gaze directions by imaging the SLM to the rotation center of the eye. (d) Eye rotations are measured in real-time with a pupil tracker to update the hologram on the SLM and content on the peripheral display accordingly. (e) A close-up illustration of the eye-centric design.

Eye-centric design concept

In conventional holographic displays, steering the FOV (i.e., keeping the image centered at the fovea as the eye rotates) is not possible by changing the CGH. As illustrated in Fig. 2a–c, eye rotation causes the displayed image to shift towards the peripheral vision. SLM or lenses need to be moved mechanically to bring the image onto the fovea. Imaging the SLM at the rotation center of the eye, which we call the eye-centric design, is at the core of our proposed architecture. Since the SLM is imaged at the rotation center of the eye, changing gaze directions are handled by changing the direction of rays via CGH as illustrated in Fig. 2d–e. The direction of the rays are altered by adding diffractive lens terms and grating terms on the CGH without any moving components. In essence, a demagnified SLM image at the center of the eye projects the holographic image onto the fovea and the eye rotations are handled by adding tilt terms to the CGH. FOV, eyebox size and spatial resolution tradeoffs for different cases are discussed in the supplementary material.

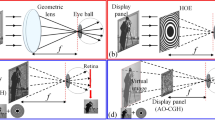

Eye-centric design concept. (a) Conventional display architecture where the SLM is imaged at the retina. (b) If the eye rotates, the SLM image shifts to the peripheral vision. (c) SLM movement is required to shift the image back to fovea. (d) In our eye-centric design the SLM is imaged at the rotation center of the eye projects a holographic image to the retina. (e) If the eye rotates, light from the SLM still enters through the pupil and always falls on the fovea. (f) Conventional displays have fixed FOV and eyebox size, therefore fixed spatial resolution across the FOV. In the eye-centric design, the eyebox size decreases towards the edges of the instantaneous FOV due to vignetting effects, which results in degrading resolution away from the foveal vision. As the instantaneous FOV follows the pupil, an extended FOV is achieved.

The eye-centric design has two major advantages compared to conventional holographic HWD architectures: (i) Instantaneous FOV can be digitally steered by only modifying the CGH to follow the gaze, providing a natural foveated display with no moving parts. (ii) The extended FOV is much larger than what is achievable with conventional holographic HWDs.

The eye-centric design can be realized using various optical components as illustrated in Fig. 3. The key point is to image the center of the SLM to the rotation center of the eye. If the SLM image is not located at the rotation center of the eye, the pupil, the fovea, and the SLM image will not be lined up for a gaze direction other than the nominal one, which results in a reduction in the instantaneous FOV. Imaging the SLM to the center of the eye can be achieved by using a flat holographic optical element (HOE) in front of the eye that can be optimized for small form factor, but color correction remains a challenge for wide FOV. Figure 3b shows an alternative design using a section of an ellipsoid as the combiner element. An SLM that is placed at one of the foci of the ellipsoid is imaged at the second focal point. This is a simpler solution, but the magnification is not constant across the FOV. Additional imaging optics can be used to design a more compact system with balanced magnification as illustrated in Fig. 3c.

For the single ellipsoidal mirror based imaging optics case, the numerical aperture of the imaging optics is the limiting factor for the extended FOV. A larger mirror would result in a wider area where the instantaneous FOV can be steered, but it would interfere with the user’s face as the eye relief is relatively short. This would not be an issue for the temporal gaze direction, but the extremity of the change in magnification for this case results in a very small eyebox size.

Since there are no additional optics required between the eye and the ellipsoid, eye relief can be adjusted by selecting the ellipsoid parameters accordingly. If a single ellipsoid mirror with smaller eye-relief is used, the ellipse would need to be tilted more for the light from the SLM to clear the viewer’s head, and the magnification would vary more from nasal to temple direction. However, using multiple lenses, freeform lenses embedded in solid optics, and HOEs can provide good optical solutions for future implementations of the core eye-centric architecture.

Results

For experimental verification of the eye-centric design, we used the imaging system in Fig. 3b. We placed the SLM at one of the focal points of the ellipsoid and the rotation center of the eye was aligned with the other focus. The ellipsoid mirror was tilted in the temple direction (towards the ear) by 17° to achieve better form factor as shown in Fig. 4.

Experimental setup. (a) Illustration of the optical setup. (b) Side view illustration of the setup, where the peripheral display is shown clearly. (c) Experimental setup without the peripheral display and beamsplitter. (d) Block diagram illustrating how an input image is displayed using the SLM and the peripheral display for the nominal gaze direction.

Figures 4 and 5 shows the foveated display with no moving parts. A low-resolution image of the periodic table is displayed on the peripheral display at all times. The high-resolution image of the viewed section is projected onto the fovea by updating the CGH on the SLM. The gaze angle is measured by a pupil tracker camera and the holograms are updated accordingly to steer the projected image. The SLM is limited to 30 fps, therefore, dynamic hologram update with real-time pupil tracker is not possible in this system. As the eye rotates (as seen by the pupil camera images), the CGH image on the fovea remains high-resolution at all times and the peripheral display image is low-resolution and blurred, mimicking human vision. The term “high resolution image” is used to refer to the central vision image provided as a hologram by the SLM. The low resolution image is used to refer to the peripheral image on an LCD, which is placed closer than the near-point of the human eye. Our experimental results are limited to about 20 degrees FOV and show about 10cycles/degrees resolution, which is below the retinal resolution limit. The limitations are mainly due to tight alignment tolerances and imperfections in producing the elliptical mirror. The content on the LCD is masked to provide only the regions outside the FOV provided by the CGH image. We demonstrated FOV steering in the horizontal direction only, so the instantaneous FOV is 20 × 20 degrees and extended FOV is up to 40 × 20 degrees in the experiments. The range was mainly limited by tight experimental tolerances and mounting aberrations in the bench setup. Simulation results in Supplementary Fig. 2 illustrate the extended FOV up to 60 × 40 degrees.

Experimental verification of foveated display. (a,c,e) Experimental results of the eye-centric foveated holographic display. Each row corresponds to a gaze direction shown in Fig. 4a. The periodic table is displayed by the SLM. As the eye rotates, a high-quality holographic view of different regions of the image is seen. (b,d,f) Corresponding pupil images captured by the camera. Note the shift of pupil center with respect to the yellow reference line due to eye rotation. (g) Simulation of human vision using the image in (a). The white rings indicate the field of view of the human eye (10°, 20° and 30° FOV). The resolution rapidly degrades away from the fovea.

Human vision was also simulated as seen in Fig. 5g, where only the resolution degradation of the human eye was taken into consideration. The minimal resolvable angle size was assumed to be linearly increasing with eccentricity, which is a commonly used approximation in both vision and computer graphics24,25. The simulation results show that the difference between the regions generated by the holographic and the peripheral displays is not completely eliminated, but it is not as severe as the difference observed in the camera images.

Figure 6 demonstrates the capability of providing true depth information of our foveated holographic display. Objects are displayed at different depths so only the gazed depth appears sharp, while others appear blurred due to expected focus blur effect. Note that coherent blur appears sharper in camera images and smoother to the eye. We can also display color holograms by time-sequentially updating the CGH on the SLM and turning on the correct light source as seen in Fig. 7.

Holographic display with correct focus blur. Different sections of the text are displayed at different depths. In (a) and (b), the upper-left section of the text is displayed at 100 cm and the rest of the text is displayed at 25 cm. As the camera focus is changed, different regions appear sharp or blurred accordingly. Similarly in (c) and (d), top and bottom rows are displayed at 25 cm and 100 cm, respectively.

Discussion

In this paper, we proposed the eye-centric approach for 3D holographic HWDs, which makes it possible to steer the instantaneous FOV within a larger extended FOV without using any moving parts or dynamic optical components. Given a reflective phase-only SLM, we have developed a design procedure for an eye-centric holographic HWD and described an algorithm to compute holograms for any optical setup as discussed in detail in the supplementary material. The experimental results verify that the proposed system is able to display 3D images in a wide, steerable FOV.

In conventional holographic HWDs, the rays from any virtual object point fill the entire eyebox. The eyebox size and resolution are constant across the FOV as shown in Fig. 2f. However, the resolution in our eye-centric architecture is not constant across the FOV. As illustrated in Fig. 2d–e, while rays from central object points fill the eyebox fully, those from the peripheral object points fill the eyebox partially, due to vignetting from the SLM. As the object points get closer to the periphery, the available eyebox size decreases gradually. This results in a degrading resolution towards the peripheral vision, mimicking the human visual system. Using this effect, it is possible to achieve an instantaneous FOV that is larger than the FOV achieved in conventional architectures as compared in Fig. 2f.

Magnification (M) is one of the key parameters of the ellipsoid for our display. Smaller magnification brings larger eyebox and FOV, but high enough magnification is needed for sufficient separation of the diffraction orders due to the pixelated structure of the SLM, which results in a small eyebox and small FOV. Therefore, an optimum point should be selected for a given SLM and desired display parameters. According to our analysis, M = 4 gives the best trade-off between eyebox size and instantaneous FOV. For detailed discussions on selecting the optimal magnification, see the supplementary material.

Experimental demonstrations were limited to an instantaneous FOV of 20 × 20 degrees. Zemax simulations show that a 28 × 28 degrees FOV is achievable with this architecture. While the FOV aspect ratio is linked to pixel aspect ratio (equal to 1 for square pixels), the resolution in each axis is linked to display aspect ratio (equal to 16:9 for our SLM). Conventional holographic display architectures can provide a static FOV of 20 × 11.25 degrees using a 4 K SLM. Therefore, our proposed architecture can effectively provide a total enhancement of 1.4 × 2.5 times improvement in horizontal and vertical axis. Furthermore, the architecture allows for steering the FOV within an extended FOV as the eye rotates. We show that 3 × 4 times improvement in horizontal and vertical extended FOV (> 10 × in extended FOV area) and a similar improvement in space-bandwidth product is achievable.

For eye-centric design to work as intended, the pupil position should be measured in real time to update the hologram on the SLM and the image on the peripheral display accordingly. We provided basic proof of concept demonstrations using off-line computed holograms and precise positioning of the camera during recording of the experimental images. Details of real-time pupil tracking26,27 and real time computation of CGH for generic architectures9 are discussed elsewhere. Fast hologram computation for eye-centric architecture will be the subject of future publications.

Materials and methods

In our experiments, we used Holoeye GAEA as the SLM, which is a 4 K (3,840 × 2,160 pixels) LCoS phase modulator chip with 3.74 μm pixel pitch. The light source is a LASOS MCS4 series 3 wavelength fiber coupled laser with wavelengths of 473 nm, 515 nm and 639 nm for blue, green and red colors, respectively. The ellipsoid mirror in our experiments has the equation parameters a = 125 mm, b = 86.96 mm and c = 86.96 mm, as defined in the supplementary material. We cut a section of the ellipsoid that gives 60° horizontal and 40° vertical FOV. The part is fabricated using diamond turning on aluminum substrate.

In the optical setup, the RGB laser beam out of a single mode fiber is collimated by a lens and the collimated beam is reflected towards the SLM by a pellicle beamsplitter to avoid ghosts. The incident beam on the SLM is modulated by the CGH and reflected towards the ellipsoid. The ellipsoid creates the Fourier plane at the eye pupil plane and the diffraction orders are filtered-out by the pupil. The ellipsoid also images the SLM to the rotation center of the eye and a holographic image forms on the retina. A pupil tracker camera measures the pupil position and the CGH on the SLM is updated accordingly.

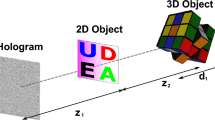

The 3D scene is modelled as a set of luminous points, similar to methods where 3D objects are represented by point clouds28,29,30, and the hologram corresponding to the entire scene is generated by superposing the holograms for the individual points. The hologram for a single point is computed with an algorithm based on ray-tracing, which makes use of Zernike polynomials to estimate the wavefront exiting the SLM31,32,33. As a final step, the iterative Fourier transform algorithm is employed for phase encoding34,35. The hologram calculation algorithm is discussed in further detail in the supplementary material.

As the fabricated ellipsoid is not transparent, a true augmented reality demonstration could not be made. In order to provide the peripheral image, we inserted a beam splitter between the eye and the ellipsoid and showed the low-resolution peripheral image on an LCD screen at 10 cm from the eye location without using any magnifier lenses in order to keep the overall complexity of the system low. The peripheral display can be located at any distance from the eye. We chose a distance of 10 cm to keep the sizes of both the peripheral display and the entire HWD bench top prototype small.

Data availability

The data supporting the figures in this paper and other findings of this study are available from the corresponding author upon request.

References

Rolland, J. & Cakmakci, O. Head-worn displays: the future through new eyes. Opt. Photonics News https://doi.org/10.1364/opn.20.4.000020 (2009).

Kress, B. & Starner, T. A review of head-mounted displays (HMD) technologies and applications for consumer electronics. In Photonic Applications for Aerospace, Commercial, and Harsh Environments IV (2013). https://doi.org/10.1117/12.2015654

Urey, H., Chellappan, K. V., Erden, E. & Surman, P. State of the art in stereoscopic and autostereoscopic displays. In Proceedings of the IEEE (2011). https://doi.org/10.1109/JPROC.2010.2098351

Lambooij, M., Fortuin, M., Ijsselsteijn, W. A. & Heynderickx, I. Measuring visual discomfort associated with 3D displays. In Stereoscopic Displays and Applications XX (2009). https://doi.org/10.1117/12.805977

Hoffman, D. M., Girshick, A. R., Akeley, K. & Banks, M. S. Vergence-accommodation conflicts hinder visual performance and cause visual fatigue. J. Vis. https://doi.org/10.1167/8.3.33 (2008).

Yaraş, F., Kang, H. & Onural, L. State of the art in holographic displays: a survey. IEEE/OSA J. Disp. Technol. https://doi.org/10.1109/JDT.2010.2045734 (2010).

Maimone, A., Georgiou, A. & Kollin, J. S. Holographic near-eye displays for virtual and augmented reality. In ACM Transactions on Graphics (2017). https://doi.org/10.1145/3072959.3073624

Slinger, C., Cameron, C. & Stanley, M. Computer-generated holography as a generic display technology. Computer (Long. Beach. Calif). (2005). https://doi.org/10.1109/MC.2005.260

Kazempourradi, S., Ulusoy, E. & Urey, H. Full-color computational holographic near-eye display. J. Inf. Disp. https://doi.org/10.1080/15980316.2019.1606859 (2019).

Macovski A. Hologram Information Capacity. J. Opt. Soc. Am. (1970).

Chen, J. S., Smithwick, Q. Y. J. & Chu, D. P. Coarse integral holography approach for real 3D color video displays. Opt. Express https://doi.org/10.1364/oe.24.006705 (2016).

Agour, M., Falldorf, C. & Bergmann, R. B. Holographic display system for dynamic synthesis of 3D light fields with increased space bandwidth product. Opt. Express https://doi.org/10.1364/oe.24.014393 (2016).

Yu, H., Lee, K., Park, J. & Park, Y. Ultrahigh-definition dynamic 3D holographic display by active control of volume speckle fields. Nat. Photonics https://doi.org/10.1038/nphoton.2016.272 (2017).

Häussler, R., Schwerdtner, A. & Leister, N. Large holographic displays as an alternative to stereoscopic displays. In Stereoscopic Displays and Applications XIX (2008). https://doi.org/10.1117/12.766163

Rolland, J. P., Thompson, K. P., Urey, H. & Thomas, M. See-through head worn display (HWD) architectures. In Handbook of Visual Display Technology (2012). https://doi.org/10.1007/978-3-540-79567-4_10.4.1

Liu, Y.-Z., Pang, X.-N., Jiang, S. & Dong, J.-W. Viewing-angle enlargement in holographic augmented reality using time division and spatial tiling. Opt. Express https://doi.org/10.1364/oe.21.012068 (2013).

Sando, Y., Barada, D. & Yatagai, T. Holographic 3D display observable for multiple simultaneous viewers from all horizontal directions by using a time division method. Opt. Lett. https://doi.org/10.1364/ol.39.005555 (2014).

Hahn, J., Kim, H., Lim, Y., Park, G. & Lee, B. Wide viewing angle dynamic holographic stereogram with a curved array of spatial light modulators. Opt. Express https://doi.org/10.1364/oe.16.012372 (2008).

Finke, G., Kozacki, T. & Kujawińska, M. Wide viewing angle holographic display with a multi-spatial light modulator array. In Optics, Photonics, and Digital Technologies for Multimedia Applications (2010). https://doi.org/10.1117/12.855778

Kozacki, T., Kujawinska, M., Finke, G., Hennelly, B. & Pandey, N. Extended viewing angle holographic display system with tilted SLMs in a circular configuration. Appl. Opt. https://doi.org/10.1364/AO.51.001771 (2012).

Lee, S. et al. Foveated retinal optimization for see-through near-eye multi-layer displays. IEEE Access https://doi.org/10.1109/ACCESS.2017.2782219 (2017).

Liu, M., Lu, C., Li, H. & Liu, X. Near eye light field display based on human visual features. Opt. Express https://doi.org/10.1364/oe.25.009886 (2017).

Kim, J. et al. Foveated AR: dynamically-foveated augmented reality display. ACM Trans. Graph. https://doi.org/10.1145/3306346.3322987 (2019).

Strasburger, H., Rentschler, I. & Jüttner, M. Peripheral vision and pattern recognition: a review. J. Vis. https://doi.org/10.1167/11.5.13 (2011).

Guenter, B., Finch, M., Drucker, S., Tan, D. & Snyder, J. Foveated 3D graphics. In ACM Transactions on Graphics (2012). https://doi.org/10.1145/2366145.2366183

Hedili, M. K., Soner, B., Ulusoy, E. & Urey, H. Light-efficient augmented reality display with steerable eyebox. Opt. Express https://doi.org/10.1364/oe.27.012572 (2019).

Başak, U. Y., Kazempourradi, S., Yilmaz, C., Ulusoy, E. & Urey, H. Dual focal plane augmented reality interactive display with gaze-tracker. OSA Contin. https://doi.org/10.1364/osac.2.001734 (2019).

Lucente, M. E. Interactive computation of holograms using a look-up table. J. Electron. Imaging https://doi.org/10.1117/12.133376 (1993).

Shimobaba, T., Nakayama, H., Masuda, N. & Ito, T. Rapid calculation algorithm of Fresnel computer-generated-hologram using look-up table and wavefront-recording plane methods for three-dimensional display. Opt. Express https://doi.org/10.1364/oe.18.019504 (2010).

Kim, S.-C., Dong, X.-B., Kwon, M.-W. & Kim, E.-S. Fast generation of video holograms of three-dimensional moving objects using a motion compensation-based novel look-up table. Opt. Express https://doi.org/10.1364/oe.21.011568 (2013).

Shi, Y., Cao, H., Gu, G. & Zhang, J. Technique of applying computer-generated holograms to airborne head-up displays. Hologr. Displays Opt. Elem. II(3559), 108–112 (2003).

Cao, H., Sun, J. & Chen, G. Bicubic uniform B-spline wavefront fitting technology applied in computer-generated holograms. In 2nd International Symposium on Advanced Optical Manufacturing and Testing Technologies: Advanced Optical Manufacturing Technologies (2006). https://doi.org/10.1117/12.674234

Li, S. et al. A practical method for determining the accuracy of computer-generated holograms for off-axis aspheric surfaces. Opt. Lasers Eng. https://doi.org/10.1016/j.optlaseng.2015.08.009 (2016).

Fienup, J. R. Iterative method applied to image reconstruction and to computer-generated holograms. Opt. Eng. https://doi.org/10.1117/12.7972513 (1980).

Wyrowski, F. & Bryngdahl, O. Iterative Fourier-transform algorithm applied to computer holography. J. Opt. Soc. Am. A https://doi.org/10.1364/josaa.5.001058 (1988).

Acknowledgements

The authors would like to thank Selen Esmer for helping with the rendering of 3D figures. This work was funded by the European Research Council (ERC)/ERC-AdG Advanced Grant Agreement (340200).

Author information

Authors and Affiliations

Contributions

M.K.H. designed the ellipsoid and got the parts fabricated. A.C., M.K.H., and E.U. conducted the experiments. A.C. and M.K.H analyzed the results. A.C. and E.U. developed the hologram computation algorithm. H.U. conceived and supervised the project. All co-authors wrote the manuscript.

Corresponding author

Ethics declarations

Competing interests

H.U., E.U., and M.K.H are inventors on a patent application describing the device. H.U. and E.U. have involvement with financial interest in CY Vision related to the subject matter discussed in this manuscript. A.C declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Cem, A., Hedili, M.K., Ulusoy, E. et al. Foveated near-eye display using computational holography. Sci Rep 10, 14905 (2020). https://doi.org/10.1038/s41598-020-71986-9

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-020-71986-9

- Springer Nature Limited

This article is cited by

-

Real-time 4K computer-generated hologram based on encoding conventional neural network with learned layered phase

Scientific Reports (2023)

-

End-to-end learning of 3D phase-only holograms for holographic display

Light: Science & Applications (2022)

-

Foveated glasses-free 3D display with ultrawide field of view via a large-scale 2D-metagrating complex

Light: Science & Applications (2021)