Abstract

We design a framework based on Mask Region-based Convolutional Neural Network to automatically detect and separately extract anatomical components of mosquitoes-thorax, wings, abdomen and legs from images. Our training dataset consisted of 1500 smartphone images of nine mosquito species trapped in Florida. In the proposed technique, the first step is to detect anatomical components within a mosquito image. Then, we localize and classify the extracted anatomical components, while simultaneously adding a branch in the neural network architecture to segment pixels containing only the anatomical components. Evaluation results are favorable. To evaluate generality, we test our architecture trained only with mosquito images on bumblebee images. We again reveal favorable results, particularly in extracting wings. Our techniques in this paper have practical applications in public health, taxonomy and citizen-science efforts.

Similar content being viewed by others

Explore related subjects

Find the latest articles, discoveries, and news in related topics.Introduction

Mosquito-borne diseases are still major public health concerns. Across the world today, surveillance of mosquito vectors is still a manual process. Steps include trap placement, collection of specimens, and identifying each specimen one by one under a microscope to determine the genus and species. Unfortunately, this process is cognitively demanding and takes hours to complete. This is because, mosquitoes that fall into traps include both vectors, and also many that are not vectors. Recently, AI approaches are being designed to automate classification of mosquitoes. Works like1,2,3,4 design machine learning models based on hand-crafted features from image data that are generated from either smartphones or digital cameras. Two recent 2020 papers design deep neural network techniques (that do not need hand-crafted features) to classify mosquitoes from image data generated via smartphones5,6. Other works process sounds of mosquito flight for classification, based on the notion that wing-beat frequencies are unique across mosquito species7,8,9,10.

In this paper, we demonstrate novel applications for mosquito images when processed using AI techniques Since, the most descriptive anatomical components of mosquitoes are the thorax, abdomen, wings and legs, we present a technique in this paper that extracts just the pixels comprising of these specific anatomical components from any mosquito image. Our technique is based on Mask Region-based Convolutional Neural Network11. Here, we first extract feature maps from our training dataset of 1500 smartphone images of 200 mosquito specimens spread across nine species trapped in Florida. Our network to extract feature maps is ResNet-101 with a Feature Pyramid Network12 (an architecture that can handle images at multiple scales, and one well suited for our problem). Subsequently, we detect and localize anatomical components only (denoted as foreground) in the images in the form of rectangular anchors. Once the foreground is detected, the next step is to segment the foreground pixels by adding a branch to mask (i.e., extract pixels of) each component present in the foreground. This is done in parallel with two other branches to classify the extracted rectangular anchors and to tighten them to improve accuracy. Evaluation of our technique reveals favorable results. We see that the thorax, wings, abdomen and legs are extracted with high Precision (i.e., very low False Positives). For legs though, False Negatives are high, since the number of background pixels overwhelm the number of leg pixels in the image. Nevertheless, we see that enough descriptive features within the leg of a mosquito are indeed extracted out, since mosquito legs are long, and the descriptive features do repeat across the leg.

We believe that extracting images of mosquito anatomy has impact towards (a) faster classification of mosquitoes in the wild; (b) new digital-based, larger-scale and low-cost training programs for taxonomists; (c) new and engaging tools to stimulate broader participation in citizen-science efforts and more. Also, to evaluate generality, we tested our architecture trained on mosquito images with images of bumblebees (which are important pollinators). We see excellent accuracy in extracting the wings, and to a certain extent, the thorax, hence demonstrating the generality of our technique for many classes of insects.

Results

We trained a Mask Region-based Convolutional Neural Network (Mask R-CNN) to automatically detect and separately extract anatomical components of mosquitoes-thorax, wings, abdomen and legs from images. For this study, we utilized 23 specimens of Aedes aegypti and Aedes infirmatus, and 22 specimens of Aedes taeniorhynchus, Anopheles crucians, Anopheles quadrimaculatus, Anopheles stephensi, Culex coronator, Culex nigripalpus and Culex salinarius. After imaging the specimens via multiple smartphones, our dataset was 1600 mosquito images. These were split into 1500 images for training the neural network, and 100 images for validation. Together, this dataset yielded 1600 images of thorax, 1600 images of abdomen, 3109 images of wings and 6223 images of legs. We trained our architecture illustrated in Fig. 1 on an Nvidia graphic processing unit (GPU) cluster of four GeForce GTX TITAN X cards having 3,583 cores and 12 GB memory each. It took 48 h to train and validate the architecture. For testing, we trapped and imaged (via smartphones) another set of 27 mosquitoes, i.e., three per species. The testing data set consisted of 27 images of thorax and abdomen, 48 images of wings and 105 images of legs.

First, we visually present results of our technique to extract anatomical components of a mosquito in Fig. 2 for one sample image among the nine species in our testing dataset. This figure is representative of all other images tested. We see that the anatomical components are indeed coming out clearly from image data. Next, we quantify performance for our entire dataset using four standard metrics: Precision, Recall, Intersection over Union (IoU) and Mean Average Precision (mAP). Precision is basically the fraction of relevant instances (here, pixels) among those instances (again, pixels) that are retrieved. Recall is the fraction of the relevant instances that were actually retrieved. IoU is a metric that assesses the ratio of areas of the intersection and the union among the predicted pixels and the ground truth. A higher IoU means more overlap between predictions and the ground-truth, and so better classification. To define our final metric, the Mean Average Precision (mAP), we define another metric, Average precision (AP), which is the average of all the Precision values for a range of Recall (0 to 100 for our problem) at a certain preset IoU threshold for a particular class among the four for our problem (i.e., wings, thorax, legs and abdomen). This metric essentially balances both Precision and Recall for a particular value of IoU for one class. Finally, the Mean Average Precision (mAP) is the average of AP values among all our four classes.

The Precision and Recall values for the validation and testing datasets are presented in Tables 1 and 2 respectively for various values of IoU. We see that the performance metrics in the validation dataset during training match the metrics during testing (i.e., unseen images) post training across all IoUs. This convinces us that our architecture is robust and not overfitted. Precision for all classes is high, which means that false positives are low. Recall is also high for the thorax, abdomen and wings, indicating low false negatives for these classes. However, Recall for legs class is relatively poor. It turns out that a non-trivial portion of the leg pixels are classified as the background in our architecture. While this may seem a bit discouraging, we once again direct readers to Fig. 2, wherein we can see that a very good portion of the legs are still identified and extracted correctly by our architecture (due to the high Precision). As such, the goal of gleaning the morphological markers from all anatomical components is still enabled. Finally, the mean Average Precision is presented in Table 3 for all classes. The lower numbers in Table 3, are due to poorer performance for classifying legs, as compared to thorax, abdomen and wings.

Results from a small experiment with bumblebee images We subsequently verified how our AI architecture that was trained only with mosquito images, performs, when tested with images of bumblebees. Bumblebees (Genus: Bombus) are important pollinators, and detecting them in nature is vital. Figure 3 presents our results for one representative image among three species of bumblebees, although our results are representative of more than 100 bumblebee images we tested. Our image source for bumblebees was Smithsonian National Museum of Natural History in Washington, D.C. Images can be found here13. As we see in Figure 3, our technique is robust in detecting and extracting wings. While the thorax is mostly extracted correctly, the ability to extract out the abdomen and legs is relatively poor. With these results, we are confident that our architecture in its present form, could be used to extract wings of many insects. With appropriate ground-truth data, only minimal tweaks to our architecture will be needed to ensure robust extraction of all anatomical components for a wide range of insects.

Discussion

We now present discussions on the significance of contributions in this paper.

(a) Faster classification of trapped mosquitoes Across the world, where mosquito-borne diseases are problematic, it is standard practice to lay traps, and then come next day to pick up specimens, freeze them and bring them to a facility, where expert taxonomists identify each specimen one-by-one under a microscope to classify the genus and species. This process takes hours each day, and is cognitively demanding. During rainy seasons and outbreaks, hundreds of mosquitoes get trapped, and it may take an entire day to process a batch from one trap alone. Based on technologies we design in this paper, we expect mobile cameras can assist in taking high quality pictures of trapped mosquito specimens, and the extracted anatomies can be used for classification by experts by looking at a digital monitor rather than peer through a microscope. This will result in lower cognitive stress for taxonomists and also speed up surveillance efforts. For interested readers, Table 4 presents details on morphological markers that taxonomists look for to identify mosquitoes used in our study14.

(b). AI and Cloud Support Education for Training Next-generation Taxonomists The process of training taxonomists today across the world consists of very few training institutes, which store a few frozen samples of local and non-local mosquitoes. Trainees interested in these programs are not only professional taxonomists, but also hobbyists. The associated costs to store frozen mosquitoes are not trivial (especially in low economy countries), which severely limit entry into these programs, and also make these programs expensive to enroll. With technologies like the ones we propose in this paper, digital support for trainees is enabled. Benefits include, potential for remote education, reduced operational costs of institutes, reduced costs of enrollment, and opportunities to enroll more trainees. These benefits when enabled in practice will have positive impact to taxonomy, entomology, public health and more.

(c). Digital Preservation of Insect Anatomies under Extinction Threats: Recently, there are concerning reports that insects are disappearing at rapid rates. We believe that digital preservation of their morphologies could itself aid preservation, as more and more citizen-scientists explore nature and share data to identify species under immediate threat. Preservation of insect images many also help educate future scientists across a diverse spectrum.

Conclusions

In this paper, we design a Deep Neural Network Framework to extract anatomical components-thorax, wings, abdomen and legs from mosquito images. Our technique is based on the notion of Mask R-CNN, wherein we learn feature maps from images, emplace anchors around foreground components, followed by classification and segmenting pixels corresponding to the anatomical components within anchors. Our results are favorable, when interpreted in the context of being able to glean descriptive morphological markers for classifying mosquitoes. We believe that our work in this paper has broader impact in public health, entomology, taxonomy education, and newer incentives to engage citizens in participatory sensing.

Methods

Generation of Image Dataset and Preprocessing

In Summer 2019, we partnered with Hillsborough county mosquito control district in Florida to lay outdoor mosquito traps over multiple days. Each morning after laying traps, we collected all captured mosquitoes, froze them in a portable container and took them to the county lab, where taxonomists identified them for us. For this study, we utilized 23 specimens of Aedes aegypti and Aedes infirmatus, and 22 specimens of Aedes taeniorhynchus, Anopheles crucians, Anopheles quadrimaculatus, Anopheles stephensi, Culex coronator, Culex nigripalpus and Culex salinarius. We point out that specimens of eight species were trapped in the wild. The An. stephensi specimens alone were lab-raised whose ancestors were originally trapped in India.

Each specimen was then emplaced on a plain flat surface, and then imaged using one smartphone (among iPhone 8, 8 Plus, and Samsung Galaxy S8, S10) in normal indoor light conditions. To take images, the smartphone was attached to a movable platform 4 to 5 inches above the mosquito specimen, and three photos at different angles were taken. One directly above, and two at \(45^{\circ }\) angles to the specimen opposite from each other. As a result of these procedures, we generated a total of 600 images. Then, 500 of these images were preprocessed to generate the training dataset, and the remaining 100 images were separated out for validation. For preprocessing, the images were scaled down to \(1024 \times 1024\) pixels for faster training (which did not lower accuracy). The images were augmented by adding Gaussian blur and randomly flipping them from left to right. These methods are standard in image processing, which better account for variances during run-time execution. After this procedure, our training dataset increased to 1500 images.

Note here that all mosquitoes used in this study are vectors. Among these, Aedes aegypti is particularly dangerous, since it spreads Zika fever, dengue, chikungunya and yellow fever. This mosquito is also globally distributed now.

Our Deep Neural Network Framework based on Mask R-CNN

To address our goal of extracting anatomical components from a mosquito image, a straightforward approach is to try a mixture of Gaussian models to remove background from the image1,15. But this will only remove the background, without being able to extract anatomical components in the foreground separately. There are other recent approaches in the realm also. One technique is U-Net16, wherein semantic segmentation based on deep neural networks is proposed. However, this technique does not lend itself to instance segmentation (i.e., segmenting and labeling of pixels across multiple classes). Multi-task Network Cascade17 (MNC) is an instance segmentation technique, but it is prone to information loss, and is not suitable for images as complex as mosquitoes with multiple anatomical components. Fully Convolutional Instance-aware Semantic Segmentation18 (FCIS) is another instance segmentation technique, but it is prone to systematic errors on overlapping instances and creates spurious edges, which are not desirable. DeepMask19 developed by Facebook, extracts masks (i.e., pixels) and then uses Fast R-CNN20 technique to classify the pixels within the mask. This technique though is slow as it does not enable segmentation and classification in parallel. Furthermore, it uses selective search to find out regions of interest, which further adds to delays in training and inference.

In our problem, we have leveraged Mask R-CNN11 neural network architecture for extracting masks (i.e. pixels) comprising of objects of interest within an image which eliminates selective search, and also uses Regional Proposal Network (RPN)21 to learn correct regions of interest. This approach best suited for quicker training and inference. Apart from that, it uses superior alignment techniques for feature maps, which helps prevent information loss. The basic architecture is shown in Fig. 1. Adapting it for our problem requires a series of steps presented below.

Annotation for Ground-truth First, we manually annotate our training and validation images using VGG Image Annotator (VIA) tool22. To do so, we manually (and carefully) emplace bounding polygons around each anatomical component in our training and validation images. The pixels within the polygons and associated labels (i.e., thorax, abdomen, wing or leg) serve as ground truth. One sample annotated image is shown in Fig. 4.

Generate Feature Maps using CNN Then, we learn semantically rich features in the training image dataset to recognize the complex anatomical components of the mosquito. To do so, our neural network architecture is a combination of the popular Res-Net101 architecture with Feature Pyramid Networks (FPN)12. Very briefly, ResNet-10123 is a CNN with residual connections, and was specifically designed to remove vanishing gradients at later layers during training. It is relatively simple with 345 layers. Addition of a feature pyramid network to ResNet was attempted in another study, where the motivation was to leverage the naturally pyramidal shape of CNNs, and to also create a subsequent feature pyramid network that combines low resolution semantically strong features with high resolution semantically weak features using a top-down pathway and lateral connections12. This resulting architecture is well suited to learn from images at different scales from only minimal input image scales. Ensuring scale-invariant learning is specifically important for our problem, since mosquito images can be generated at different scales during run-time, due to diversity in camera hardware and human induced variations.

Emplacing anchors on anatomical components in the image In this step, we leverage the notion of Regional Proposal Network (RPN)21 and results from the previous two steps, to design a simpler CNN that will learn feature maps corresponding to ground-truthed anatomical components in the training images. The end goal is to emplace anchors (rectangular boxes) that enclose the detected anatomical components of interest in the image.

Classification and pixel-level extraction Finally, we align the feature maps of the anchors (i.e., region of interest) learned from the above step into fixed sized feature maps which serve as input to three branches to: (a) label the anchors with the anatomical component; (b) extract only the pixels within the anchors that represents an anatomical component; and (c) tighten the anchors for improved accuracy. All three steps are done in parallel.

Loss functions

For our problem, recall that there are three specific sub-problems: labeling the anchors as thorax, abdomen, wings or leg; masking the corresponding pixels within each anchor; and a regressor to tighten anchors. We elaborate now on the loss functions used for these three sub-problems. We do so because, loss functions are a critical component during training and validation of deep neural networks to improve learning accuracy and avoid overfitting.

Labeling (or classification) loss For classifying the anchors, we utilized the Categorical Cross Entropy loss function, and it worked well. For a single anchor j, the loss is given by,

where p is the model estimated probability for the ground truth class of the anchor.

Masking loss Masking is most challenging, considering the complexity in learning to detect only pixels comprising of anatomical components in an anchor. Initially, we experimented with the simple Binary Cross Entropy loss function. With this loss function, we noticed good accuracy for pixels corresponding to thorax, wings and abdomen. But, many pixels corresponding to legs were mis-classified as background. This is because of class imbalance highlighted in Fig. 5, wherein we see significantly larger number of background pixels, compared to number of foreground pixels for anchors (colored blue) emplaced around legs. This imbalance leads to poor learning for legs, because the binary class entropy loss function is biased towards the (much more, and easier to classify) background pixels.

To fix this shortcoming, we investigated another more recent loss function called focal loss24 which lowers the effect of well classified samples on the loss, and rather places more emphasis on the harder samples. This loss function hence prevents more commonly occurring background pixels from overwhelming the not so commonly occurring foreground pixels during learning, hence overcoming class imbalance problems. The focal loss for a pixel i is represented as,

where p is the model estimated probability for the ground truth class, and \(\gamma\) is a tunable parameter, which was set as 2 in our model. With these definitions, it is easy to see that when a pixel is mis-classified and \(p \rightarrow 0\), then the modulating factor \((1-p)^\gamma\) tends to 1 and the loss (log(p)) is not affected. However, when a pixel is classified correctly and when \(p \rightarrow 1\), the loss is down-weighted. In this manner, priority during training is emphasized more on the hard negative classifications, hence yielding superior classification performance in the case of unbalanced datsets. Utilizing the focal loss gave us superior classification results for all anatomical components.

Regressor loss To tighten the anchors and hence improve masking accuracy, the loss function we utilized is based on the summation of Smooth L1 functions computed across anchor, ground truth and predicted anchors. Let (x, y) denote the top-left coordinate of a predicted anchor. Let \(x_a\) and \(x^*\) denote the same for anchors generated by the RPN, and the manually generated ground-truth. The notations are the same for the y coordinate, width w and height h of an anchor. We define several terms first, following which the loss function \(L_{reg}\) used in our architecture is presented.

Hyperparameters

For convenience, Table 5 lists values of critical hyperparameters in our finalized architecture.

Data availability

The sample dataset is available at https://github.com/mminakshi/Mosquito-Data/tree/master/Data.

Code availability

We have leveraged Matterport Github repository for Mask RCNN implementation. The code is open source and publicly available25.

References

Minakshi, M., Bharti, P. & Chellappan, S. Leveraging smart-phone cameras and image processing techniques to classify mosquito species. In Proceedings of the 15th EAI International Conference on Mobile and Ubiquitous Systems: Computing, Networking and Services, 77–86 (ACM, 2018).

De Los Reyes, A. M. M., Reyes, A. C. A., Torres, J. L., Padilla, D. A. & Villaverde, J. Detection of aedes aegypti mosquito by digital image processing techniques and support vector machine. In 2016 IEEE Region 10 Conference (TENCON), 2342–2345 (IEEE, 2016).

Fuchida, M., Pathmakumar, T., Mohan, R. E., Tan, N. & Nakamura, A. Vision-based perception and classification of mosquitoes using support vector machine. Appl. Sci. 7, 51 (2017).

Favret, C. & Sieracki, J. M. Machine vision automated species identification scaled towards production levels. Syst. Entomol. 41, 133–143 (2016).

Minakshi, M. et al. Automating the surveillance of mosquito vectors from trapped specimens using computer vision techniques. In ACM COMPASS (ACM, 2020).

Park, J., Kim, D. I., Choi, B., Kang, W. & Kwon, H. W. Classification and morphological analysis of vector mosquitoes using deep convolutional neural networks. Sci. Rep. 10, 1–12 (2020).

Chen, Y., Why, A., Batista, G., Mafra-Neto, A. & Keogh, E. Flying insect detection and classification with inexpensive sensors. J. Vis. Exp. (JoVE) e52111 (2014).

Mukundarajan, H., Hol, F. J., Castillo, E. A., Newby, C. & Prakash, M. Using mobile phones as acoustic sensors for the surveillance of spatio-temporal mosquito ecology (2016).

Vasconcelos, D., Nunes, N., Ribeiro, M., Prandi, C. & Rogers, A. Locomobis: a low-cost acoustic-based sensing system to monitor and classify mosquitoes. In 2019 16th IEEE Annual Consumer Communications & Networking Conference (CCNC), 1–6 (IEEE, 2019).

Ravi, P., Syam, U. & Kapre, N. Preventive detection of mosquito populations using embedded machine learning on low power iot platforms. In Proceedings of the 7th Annual Symposium on Computing for Development, 1–10 (2016).

He, K., Gkioxari, G., Dollár, P. & Girshick, R. Mask r-cnn. Proceedings of the IEEE International Conference on Computer Vision 2961–2969 (2017).

Lin, T.-Y. et al. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2117–2125 (2017).

Smithsonian homepage. https://www.si.edu/.

The importance of learning identification of larvae and adult mosquitoes. https://juniperpublishers.com/jojnhc/pdf/JOJNHC.MS.ID.555636.pdf.

Stauffer, C. & Grimson, W. E. L. Adaptive background mixture models for real-time tracking. In Computer Vision and Pattern Recognition, 1999. IEEE Computer Society Conference on., vol. 2, 246–252 (IEEE, 1999).

Ronneberger, O., Fischer, P. & Brox, T. U-net: Convolutional networks for biomedical image segmentation. In International Conference on Medical Image Computing and Computer-Assisted Intervention, 234–241 (Springer, 2015).

Dai, J., He, K. & Sun, J. Instance-aware semantic segmentation via multi-task network cascades. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 3150–3158 (2016).

Li, Y., Qi, H., Dai, J., Ji, X. & Wei, Y. Fully convolutional instance-aware semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2359–2367 (2017).

Pinheiro, P. O., Collobert, R. & Dollár, P. Learning to segment object candidates. In Advances in Neural Information Processing Systems 1990–1998 (2015).

Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision 1440–1448 (2015).

Ren, S., He, K., Girshick, R. & Sun, J. Faster R-CNN: towards real-time object detection with region proposal networks. In Advances in Neural Information Processing Systems 91–99 (2015).

Dutta, A. & Zisserman, A. The VGG image annotator (via). arXiv preprint arXiv:1904.10699 (2019).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 770–778 (2016).

Lin, T.-Y., Goyal, P., Girshick, R., He, K. & Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, 2980–2988 (2017).

Mask RCNN code. https://github.com/matterport/Mask_RCNN.

IFAS. Florida medical entomology laboratory. https://fmel.ifas.ufl.edu/mosquito-guide/species-identification-table/species-identification-table-adult/.

Glyshaw, P. & Wason, E. Anopheles quadrimaculatus. https://animaldiversity.org/accounts/Anopheles_quadrimaculatus/edu/mosquito-guide/species-identification-table/species-identification-table-adult/.

Dharmasiri, A. G. et al. First record of anopheles stephensi in Sri Lanka: a potential challenge for prevention of malaria reintroduction. Malaria J. 16, 326 (2017).

IFAS. Florida medical entomology laboratory. https://fmel.ifas.ufl.edu/publication/buzz-words/buzz-words-archive/is-it-culex-tarsalis-or-culex-coronator/.

Floore, T. A., Harrison, B. A. & Eldridge, B. F. The anopheles (anopheles) crucians subgroup in the united states (diptera: Culicidae) (Tech. Rep, Walter Reed Army Inst Of Research Washington Dc Department Of Entomology, 1976).

Nmnh-usnment01001576 smithsonian institution. https://collections.si.edu/search/results.htm?fq=tax_kingdom%3A%22Animalia%22&fq=online_media_type%3A%22Images%22&fq=data_source%3A%22NMNH+-+Entomology+Dept.%22&q=NMNH-USNMENT01001576&gfq=CSILP_6.

Nmnh-usnment01006317 smithsonian institution. https://collections.si.edu/search/results.htm?fq=tax_kingdom%3A%22Animalia%22&fq=online_media_type%3A%22Images%22&fq=data_source%3A%22NMNH+-+Entomology+Dept.%22&q=NMNH-USNMENT01006317&gfq=CSILP_6.

Nmnh-usnment01036430 smithsonian institution. https://collections.si.edu/search/results.htm?fq=tax_kingdom%3A%22Animalia%22&fq=online_media_type%3A%22Images%22&fq=data_source%3A%22NMNH+-+Entomology+Dept.%22&q=NMNH-USNMENT01036430&gfq=CSILP_6.

Acknowledgements

We acknowledge support from the Hillsborough County Mosquito Control District in helping us identify mosquitoes trapped in the wild.

Author information

Authors and Affiliations

Contributions

M.M. led the design of Mask R-CNN algorithms and data collection for anatomy extraction. P.B. and M.M. conducted experiments of various loss functions and fine tuned the neural network parameters. T.B. conducted experiments on performance evaluations and collaborated with M.M. in architecture design. S.K. assisted in data collection and annotation of ground-truth images. S.C. conceptualized the project, and coordinated all technical discussions and choices of parameters for the neural network architectures.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Minakshi, M., Bharti, P., Bhuiyan, T. et al. A framework based on deep neural networks to extract anatomy of mosquitoes from images. Sci Rep 10, 13059 (2020). https://doi.org/10.1038/s41598-020-69964-2

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-020-69964-2

- Springer Nature Limited

This article is cited by

-

Global mosquito observations dashboard (GMOD): creating a user-friendly web interface fueled by citizen science to monitor invasive and vector mosquitoes

International Journal of Health Geographics (2023)

-

Wing Interferential Patterns (WIPs) and machine learning for the classification of some Aedes species of medical interest

Scientific Reports (2023)

-

Gender identification of the horsehair crab, Erimacrus isenbeckii (Brandt, 1848), by image recognition with a deep neural network

Scientific Reports (2023)

-

replicAnt: a pipeline for generating annotated images of animals in complex environments using Unreal Engine

Nature Communications (2023)

-

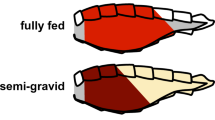

Classifying stages in the gonotrophic cycle of mosquitoes from images using computer vision techniques

Scientific Reports (2023)