Abstract

Face-selective neurons in the monkey temporal cortex discharge at different rates in response to pictures of different individual faces. Here we tested whether this pattern of response across single neurons in the face-selective area ML (located in the middle Superior Temporal Sulcus) tolerates two affine transformations: picture-plane inversion, which is known to decrease the average response of face-selective neurons, and a change in stimulus size. We recorded the response of 57 ML neurons in two awake and fixating monkeys. Face stimuli were presented at two sizes (10 and 5 degrees of visual angle) and two orientations (upright and inverted). Different faces elicited distinct patterns of activity across ML neurons that were reliable (i.e., predictable with a classifier) within a specific size and orientation condition. Despite a reduction in the average response magnitude of face-selective neurons to inverted faces, compared to upright faces, classifier performance was above chance for both upright and inverted faces. While decoding was largely preserved across changes in stimulus size, a classifier trained with one orientation condition and tested on the other did not perform above chance level. We conclude that different individual faces can be decoded from patterns of responses in the monkey area ML regardless of orientation or size, but with qualitatively different patterns of responses for upright and inverted faces.

Introduction

Single neurons that respond selectively to face compared to non-face visual stimuli were identified in the inferior temporal (IT) cortex of non-human primates over forty years ago1. Face-selective neurons in the IT cortex of macaque monkeys are characterized by their high category-selectivity (i.e., responding at least twice as much to faces as to other similar shapes and objects2,3,4,5,6) across scale and position changes of the retinal image4,7,8,9. Most face-selective neurons respond with different magnitudes, i.e. firing rates, to pictures of different individual faces2,3,10,11,12, offering a potential mechanism for achieving individual face discrimination13,14,15.

FMRI studies have defined a cortical face processing system in the monkey brain comprised of multiple interconnected, functionally-defined regions or ‘patches’3,4,5,16,17,18,19. In the last 10 years researchers have been able to use these fMRI maps to guide single cell recordings in monkeys, in order to understand the role of each functionally-defined patch, and to shed light on how face representations are successively transformed along the ventral visual pathway3,10,15,16.

Among the face-selective clusters identified in the monkey brain, area ML, in the middle lateral section of the Superior Temporal Sulcus (STS; see Fig. 1A) is the most consistently observed and investigated3,15,20. Using population decoding, studies have shown that activity in area ML, combined with MF (a face-selective patch in the fundus of the middle STS region), is identity-selective3,10,11,15,18,21.

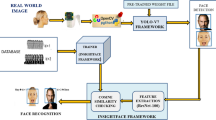

Figure 1. Functional maps and experimental stimuli. (A) Area ML was defined in both monkeys using an fMRI block-design localizer with 5 categories of objects (the contrast was defined as [faces] – [bodies, fruit, hands, and gadgets]). In both cases, MION activation is superimposed on a high resolution anatomical scan obtained with tungsten markers positioned in the recording chamber grid to indicate the recording position. The t-maps are thresholded at p < 0.05 (Family-Wise Error), corresponding to t > 4.9. The recording location in both monkeys is indicated by both the vertical position of the tungsten markers and blue arrows that have been superimposed on the scans. (B) Illustrative examples of the individual face pictures used (NB here we show examples of the orientation manipulation and not the size manipulation).

Based on these observations, the aim of the present study is to test whether the identity of 12 randomly selected faces can be decoded from single neuron output in area ML, and, most importantly, the extent to which this performance depends on stimulus size and picture-plane inversion. A number of studies combining functional imaging and single cell recordings have reported that the average firing rate of ML neurons is systematically lower when face stimuli are turned upside down3,4,18. However, one cannot infer from an overall reduction in the average firing rate of neurons whether each neuron’s preferences have changed. Thus, these previous studies did not test whether the unique patterns of responses across neurons elicited by different face identities were altered when the faces were turned upside down. In this paper we are specifically interested in whether the patterns of activity across neurons, in response to different faces, are influenced by stimulus size or picture-plane inversion (see Fig. 1B). On a single neuron basis, this influence would manifest as a change in the preferred facial identities dependent on size, inversion, or both.

Results

Analysis of average activity across neurons

Fifty-seven face-selective neurons were recorded in area ML across two monkeys (average Face Selectivity Index (FSI; see Methods) = 0.67, sd = 0.25). Average normalized firing rate was analyzed using a 2 (Size) × 2 (Orientation) × 12 (Face identity) repeated measures ANOVA corrected for violations of sphericity using the Greenhouse-Geisser method. Neurons in ML responded more strongly to upright than to inverted faces (main effect of Orientation, F(1,56) = 13.19, p < 0.001), which extends previous findings in this region3,5 and shows that the preference for upright faces remains even when averaging across multiple identities and stimulus sizes (Fig. 2A). There was also a main effect of Face identity, indicating that mean response strength was greater for some identities than others (F(8.26,462.49) = 2.67, p = 0.006). In contrast, the Size of the face stimulus did not change the response strength of ML neurons significantly (F(1,56) = 3.33, p = 0.08; see Fig. 2B), and there was no significant interaction between Size and Face identity (F(8.43,472.39) = 1.43, p = 0.17). Thus, we found no evidence that the average response profile across facial identities 1 through 12 was influenced by stimulus size. When averaging across face identities, there was also no interaction between Orientation and Size (F(1,56) = 0.24, p = 0.62), and the three-way interaction also failed to reach significance (F(8.43,471.90) = 1.86, p = 0.06). For individual monkey data, please see Fig. 2C.

Figure 2. Overall ANOVA results. (A) Upright compared to Inverted Faces (averaging across face identity and stimulus size). (B) Large faces compared to Small faces (averaging across face identity and orientation). (C) Individual Monkey Data; all four unique conditions (averaging across face identity), from left to right: Upright Large Faces; Inverted Large Faces; Upright Small Faces; Inverted Small Faces.

We found a significant interaction between Face identity and Orientation implying that picture-plane inversion modulated the average firing rate elicited by the 12 face identities, (F(8.49,475.57) = 4.90, p < 0.001; see Fig. 3A for single units). Hence, the individual face stimuli contributing to the strongest or weakest response were not the same across orientations. This same interaction was significant when the analysis was repeated for each subject separately (i.e. Monkey G, N = 32, p < 0.01; Monkey D, N = 25, p < 0.001; See Fig. 2C).

Figure 3. Variance across neurons. (A) The average net (raw – baseline) firing rates and standard error (error bars) for 2 randomly selected neurons responding to all 12 face stimuli (blue bars = upright; red bars = inverted). Top neuron was recorded in Monkey D and bottom neuron was recorded in Monkey G. (B) The average net (raw – baseline) firing rates and standard error (error bars) for 2 randomly selected neurons responding to all 12 face stimuli (blue bars = large; red bars = small). Top neuron was recorded in Monkey D and bottom neuron was recorded in Monkey G. (C) Line graph indicating the average normalized firing rate as a function of stimulus identity, after stimuli have been ranked based on the average response in the upright face condition (i.e. the upright identity that elicited the highest average firing rate was ranked as number 1 and is represented on the far left of the x-axis). (D) Line graph indicating the average normalized firing rate as a function of stimulus identity, after stimuli have been ranked based on the average response in the large face condition (i.e. the identity that elicited the highest average firing rate in the large face condition was ranked as number 1 and is represented on the far left of the x-axis). (E) The effect of stimulus orientation on each neuron’s response presented in a scatterplot where the horizontal axis reflects the z-score for each neuron’s response to upright faces (marker type distinguishes the 12 stimulus identities). The vertical axis reflects each neuron’s corresponding response to inverted faces (z-scores). (F) The effect of stimulus size in a scatterplot; same conventions as (E) except the horizontal axis represents the response to large faces and the vertical axis represents the response to small faces.

Ranking Analysis of identity preference

To determine analytically whether each neuron’s preference among the upright face stimuli was independent of its preference among the inverted face stimuli, we compared identity preference in the two orientation conditions using the rank approach22,23. For each neuron, the upright face trials were used for ranking the 12 identities according to response strength in descending order (from best to worst) and the inverted face trials were analysed using the upright face rankings (responses averaged across size). A one-way ANOVA on the ranked inverted face data showed no evidence of a decrease in response as a function of upright face rank (F(8.663,485.14) = 0.53, p = 0.88; G-G corrected; see Fig. 3C). Thus, these results provide no indication that identity preferences, when averaging across neurons, were the same in the upright and inverted conditions.

We also performed this same ranking analysis on the two size conditions (10 dva, hereafter referred to as ‘large’, and 5 dva, hereafter referred to as ‘small’). For each neuron, the large face trials were used for ranking the 12 identities according to response strength in descending order (from best to worst) and the small face trials were analysed using the large face rankings. For this analysis, responses were averaged across the manipulation of orientation (upright and inverted trials). A one-way repeated measures ANOVA on the ranked small face data revealed evidence for a preserved rank (F(8.196,458.959) = 3.599, p < 0.001; G-G corrected; see Fig. 3D). The ANOVA indicated there was significant variation in the average response across the ranked identities. Moreover, a follow-up test using orthogonal polynomial coefficients confirmed the linear trend from first to last identity (F(1,56) = 21.429, p < 0.001). This result indicates that the rank order of the identities, ranked based on responses in large face trials, was preserved in the small face trials.
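The ranking step used for both the orientation and the size comparison can be illustrated with a minimal sketch. It assumes that each neuron's mean responses to the 12 identities are already available as two lists, one per condition; the function names and the toy values (three identities, two neurons) are hypothetical and are not taken from our analysis code. The ANOVA and trend test applied to the ranked data are not reproduced here.

```python
def rank_by_reference(reference, other):
    """Re-order `other` by the descending response rank of `reference`.

    reference[i] and other[i] are one neuron's mean responses to identity i
    in the ranking condition (e.g. large) and the tested condition (e.g. small).
    """
    order = sorted(range(len(reference)), key=lambda i: reference[i], reverse=True)
    return [other[i] for i in order]


def mean_ranked_profile(reference_by_neuron, other_by_neuron):
    """Average the re-ordered responses across neurons (rank 1 first)."""
    ranked = [
        rank_by_reference(ref, oth)
        for ref, oth in zip(reference_by_neuron, other_by_neuron)
    ]
    return [sum(column) / len(ranked) for column in zip(*ranked)]


# Toy data: 2 neurons x 3 identities (hypothetical normalized responses).
large = [[0.2, 0.9, 0.5], [0.8, 0.1, 0.4]]
small = [[0.1, 0.7, 0.4], [0.6, 0.2, 0.3]]

profile = mean_ranked_profile(large, small)
print(profile)  # approximately [0.65, 0.35, 0.15]: response falls with rank
```

Because the ranks are derived from one condition and applied to independent trials from the other, a profile that declines from rank 1 to rank 12 indicates shared identity preferences, whereas a flat profile indicates that the preferences do not transfer.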

In Fig. 3A,B we provide the average responses of single neurons, across identity and orientation (Fig. 3A) and across identity and size (Fig. 3B). These provide further indication that identity preferences were altered by orientation but not by stimulus size. In order to examine whether this observation held for the entire population, beyond the four illustrative neurons, we z-scored the response to each identity, size and orientation for each ML neuron. In Fig. 3F we plotted the z-scores for large faces against the z-scores for small faces. In Fig. 3E we plotted the z-scores across neurons for upright faces against inverted faces. The stronger positive relationship evident in Fig. 3F (Spearman’s rho = 0.383, p < 0.001, 2-tailed), compared to Fig. 3E (Spearman’s rho = −0.02, p = 0.594, 2-tailed), provides further evidence that preferences across identities were largely preserved across the stimulus size manipulation but not across orientation.

Variability across neurons and classifier performance

The above analysis indicates that across the population of face-selective neurons recorded in area ML, there was a difference in the average response to upright and inverted faces. However, the same neurons showed a similar mean response to faces with different presentation sizes. Importantly, the ranking analysis revealed a key difference between these affine manipulations: stimulus rank was preserved across the size manipulation but not the orientation manipulation.

To further probe whether our image manipulations differentially affected identity decoding in area ML we used the correlation coefficient classifier described in the Methods section. The percentage of correct classifications (classifier score) is indicative of how accurately the population of ML neurons can identify an upright or inverted face. First, we classified face identity within the two orientation conditions (ignoring differences in size). The classification score obtained with upright faces was 38.62%, which was above the 99th percentile of the corresponding null distribution (median = 7.5%; 2nd percentile = 2.5%; 98th percentile = 16.67%). For the classifier on inverted face trials performance was 36.51%, which was also above the 99th percentile of the corresponding chance distribution (median = 7.5%; 2nd percentile = 2.5%; 98th percentile = 16.67%). To test whether the classifier score for inverted faces was different from the classifier score for upright faces we first created an upright performance distribution by repeating the classifier procedure, with correct identity labels, 1000 times (see Methods). The 5th and 95th percentiles for this upright score distribution were 30.83% and 45.83% respectively. The classification score for inverted faces (i.e. 36.51%) was in the 29th percentile of the upright distribution. Therefore, there was no evidence that the inverted score was sampled from a different distribution.

Finally, we tested cross-orientation classifier performance (i.e. training with upright data and testing with inverted and vice versa). The classifier performed poorly when trained with data from upright face trials and tested with data from inverted face trials (classifier performance = 10.10%), which was below the 75th percentile of the null distribution computed by training the classifier with randomly shuffled identity labels (chance distribution median score = 8.3%; 2nd percentile = 3.3%; 98th percentile = 14.17%). Likewise, when we trained the classifier with inverted face trials and tested against upright face data, classifier performance was low (9.19%) and below the 70th percentile of the chance distribution (median score = 8.3%; 2nd percentile = 2.5%; 98th percentile = 15%). Collectively these observations provide no evidence that the classifier could accurately decode identity across the orientation conditions at a level greater than chance. These results are consistent with the results of the rank analysis above, which also suggested there was little correspondence between the response pattern to upright and inverted faces.

We then examined classifier performance for identity when data were restricted to the large and small face trials (with orientation trials combined). The classification score for large face trial data was 31.54%, falling above the 99th percentile of the null distribution (median = 8.3%; 2nd percentile = 3.3%; 98th percentile = 15%). The classification score for small face trial data was also significantly above chance (classifier performance = 16.09%, 96th percentile; null distribution median score = 8.3%; 2nd percentile = 2.5%; 98th percentile = 16.67%). Interestingly, when the cross-condition decoding procedure was applied to the size manipulation (i.e. the classifier was trained with trials of one size and tested on trials of the other), the classifier performed at a level above the 95th percentile of the chance distribution obtained by shuffling identity labels. Trained with large face trials and tested with small face trials, classifier performance was 29.55%. This score was above the 99th percentile of the chance distribution (median score = 7.5%; 2nd percentile = 2.5%; 98th percentile = 16.67%). Results were similar when we trained the classifier with data from small face trials and tested against data from large face trials: classifier performance was 15.95%, which was above the 99th percentile of the chance distribution (median score = 8.3%; 2nd percentile = 3.3%; 98th percentile = 14.17%).

Discussion

Overall, our results obtained by recording in the face-selective middle patch (ML) of the monkey IT confirm that the individuality of a human face picture can be decoded from the firing rates of a modest number of face-selective neurons: using the output of 57 neurons, classifier performance was above chance for all four conditions. The observation that there is a distinct “neural code” for a set of 12 independent and “naturally occurring” human faces is an important replication of previous work13,15,24, here sampling face-selective units exclusively in area ML, a functionally defined area of the cortical face processing network in rhesus monkeys. Although we cannot distinguish between norm-based and feature-based coding (see15,24), we confirm that the pattern of activity elicited from a relatively small number of ML neurons varies with stimulus identity reliably across trials even without preselecting the preferred identity for any given neuron.

The average response of the neurons we recorded tolerated the change in scale and, on average, the neurons responded less to inverted faces than to upright faces, in line with previous observations3,4,25. It is worth noting that we tested a single octave change in size and, thus, it remains possible that a larger reduction in size would change the average response magnitude and the response profile of neurons dramatically (see7,8,9). In this study we made no attempt to equate the manipulation of size with the manipulation of orientation. Instead, using a ranking analysis, we report evidence that identity preferences were tolerant of a single octave decrease in stimulus size but not a 180-degree rotation in the picture-plane.

While there was no evidence that the cross-orientation classifier performed above chance, the performance of the cross-size classifier indicates that the population response to stimulus identity was tolerant of a change in stimulus size. These findings suggest that identity-selectivity at a population level is dependent on stimulus orientation but not on stimulus size. However, we also observed that classifier performance based on inverted trials was well above chance. Moreover, this performance was not significantly lower than classifier performance based on upright trials. This means that, even though the average response of the population is reduced and the identity preferences change when faces are inverted, there is no information loss for inverted faces in area ML. We note that this observation is in agreement with the lack of a behavioral inversion effect in macaque monkeys26,27,28,29.

While the role of the face-selective area ML in the monkey face processing network remains controversial, it has been suggested that area ML builds representations of face stimuli at a population level that are shape-dependent15. Our results are largely consistent with this conclusion, demonstrating that both the average response of a population and the population code are sensitive to changes in stimulus orientation. We also show that the distinct population response in area ML to individual faces is not simply sensitive to all affine image transformations; the pattern of responses across 57 neurons to 12 different faces tolerated a change in stimulus size. That is, scaling the faces down to half their original height resulted in no change in the average response magnitude, and the sampled neurons retained their identity-selectivity.

Methods

Subjects and Localization

We used fMRI to localize the face-selective patches in two male monkeys (Macaca mulatta), D and G. Animal care and experimental procedures were approved by the ethical committee of the KU Leuven medical school. All methods were performed in accordance with the relevant guidelines and regulations. To optimize the signal-to-noise ratio, we used an iron oxide contrast agent (monocrystalline iron oxide nanoparticle or MION; the details of this procedure are described elsewhere4,16,30,31,32). Eighty images of faces, bodies, fruits, manmade objects and hands (16 images per category) were presented to the monkeys in blocks during continuous fixation. These images have been used to isolate face-selective cells in previous studies of rhesus monkeys3,15 and were presented on a square canvas with a height that subtended a visual angle of 8°. Consistent with previous reports, there were several discrete regions (face-selective patches) in both monkeys that responded more to faces than the four other non-face categories. Single unit recordings were performed in three regions in the right hemisphere of both subjects. All recordings were in the lateral lip of the lower bank of the Superior Temporal Sulcus (STS; Fig. 1A) in the middle lateral face patch (ML). ML was located ~4 mm anterior to the interaural line in monkey D and ~6 mm anterior to the interaural line in monkey G (see Fig. 1A).

Single Cell Procedure and Analysis

We surgically implanted a plastic recording chamber in both monkeys that targeted ML and isolated 882 single neurons in total, using epoxylite-insulated tungsten microelectrodes (FHC) and standard electrophysiological procedures described in detail elsewhere4,16,17,22,23,31,33,34,35,36,37,38. Online isolation of single neuron activity was achieved using a level and time threshold (for more details see22,23,35). Stimuli were displayed on a CRT display (Philips Brilliance 202 P4; 1024 × 768 screen resolution; 75 Hz vertical refresh rate) at a distance of 57 cm from the monkey’s eyes. The 32 images (16 faces, 16 non-face objects) that were used to search for responsive neurons and measure their face-selectivity were taken from the 80 images that were used in the fMRI block-design localizer. The 16 non-face objects were taken from 4 different categories (headless bodies, hands, gadgets, and fruits), selected to be similar to faces in their round shape (e.g. an orange or a closed fist). All images were 8° of visual angle in height; width was allowed to vary. For electrophysiological recordings, however, the noise background was removed from these images and replaced with a uniform grey background and then gamma corrected.

The position of the subject’s right eye was continuously tracked by means of an infrared video-based tracking system (SR Research EyeLink; sampling rate 1 kHz). A monkey initiated a trial by fixating on a central fixation spot (size = 0.2° of visual angle) that was always present throughout the trial. The monkey was then required to fixate on this spot (within a 2° × 2° fixation window) for 300 ms prior to stimulus onset and during the stimulus presentation (300 ms). An additional 300 ms fixation period after stimulus offset was required before the monkey was rewarded for continuous fixation with a fluid reward. Trials were separated by an inter-stimulus interval of at least 500 ms, the exact duration being dependent on the oculomotor behavior of the monkey in between the trials (see Fig. 1B). In the main tests, the stimuli were at the center of the screen, behind the fixation spot. Each trial presented a monkey with a single stimulus, drawn from the set in a pseudo random order. Each stimulus was repeated at least twice for every neuron discriminated. In each recording session we recorded the first single unit encountered at the predetermined depth with respect to the silence associated with the sulcus, regardless of face-selectivity or visual responsiveness. Each unit thereafter was at least 150 µm deeper than the previous.

After a neuron’s spike was isolated, we recorded its response to Face/Non-face stimuli (at least 2 trials per stimulus) in order to compute the neuron’s face-selectivity index. Following previous studies3,4,16,17,23, we defined for each neuron a face-selectivity index as FSI = (mean net response faces − mean net response nonface objects)/(|mean net response faces| + |mean net response nonface objects|). We counted a neuron as being “face-selective” if the FSI was greater than 0 (meaning its average response to face stimuli was greater than its average response to non-face stimuli).
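As an illustration of the index defined above, the following minimal sketch computes the FSI from lists of net responses. The function name, the example firing rates and the handling of a zero denominator are assumptions made for this example only.

```python
def face_selectivity_index(face_responses, nonface_responses):
    """FSI = (F - N) / (|F| + |N|), with F and N the mean net responses."""
    mean_face = sum(face_responses) / len(face_responses)
    mean_nonface = sum(nonface_responses) / len(nonface_responses)
    denominator = abs(mean_face) + abs(mean_nonface)
    if denominator == 0:
        # Assumption: a neuron with no net response is treated as non-selective.
        return 0.0
    return (mean_face - mean_nonface) / denominator


# Hypothetical net firing rates (spikes/s), one value per stimulus.
faces = [30.0, 20.0, 40.0]    # mean = 30
nonfaces = [5.0, 10.0, 15.0]  # mean = 10

fsi = face_selectivity_index(faces, nonfaces)
print(fsi)  # (30 - 10) / (30 + 10) = 0.5
```

The index is bounded between −1 and 1 and is positive whenever the mean net response to faces exceeds that to non-face objects. When both means are positive, a face response twice as large as the non-face response gives FSI = 1/3.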

Without further selection, we then tested each neuron using an independent image set comprised of 12 achromatic faces of unfamiliar adult Caucasian individuals (6 females). The height of these stimuli subtended 10° of visual angle in the “large” condition and 5° in the “small” condition. The timing parameters were identical to those described for the category-search procedure. All images depicted neutral expressions and were frontward facing. External cues to facial identity (e.g., hair, ears, and neck) were removed using Adobe Photoshop. The luminance and root-mean square (RMS) contrast of all stimuli were adjusted to match the mean luminance and contrast values of the entire image set. To create the “inverted” stimuli we rotated the 12 “upright” faces 180° in the picture-plane.

Firing rate was computed for each unaborted trial in two analysis windows: a baseline window ranging from 250 to 50 ms before stimulus onset and a response window ranging from 50 to 350 ms after stimulus onset. Responsiveness of each recorded neuron was tested offline by a split-plot ANOVA with repeated measure factor baseline versus response window and between-trial factor stimulus. Only neurons for which either the main effect of the repeated factor or the interaction between the two factors was statistically significant (i.e. p < 0.05) were analyzed further. Net firing rate during a trial was calculated by subtracting the firing rate in the baseline window from that in the response window. There were 12 face identities that were presented in each of the four experimental conditions (upright large/upright small/inverted large/inverted small). There were, thus, 48 different conditions in total. These 48 conditions were repeated in at least 5 trials per neuron. In order to pool across neurons and monkeys, we normalized the data with respect to the maximum response across the 48 conditions (averaging across trials) for each neuron, using the net response.
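The net firing rate and the normalization described above can be sketched as follows. This is a minimal example, not our analysis code: spike times are assumed to be given in milliseconds relative to stimulus onset, the windows are treated as half-open intervals, the neuron's maximum condition mean is assumed to be positive, and all names and values are hypothetical.

```python
def firing_rate(spike_times_ms, start_ms, end_ms):
    """Spikes per second in [start_ms, end_ms); times are relative to onset."""
    n_spikes = sum(1 for t in spike_times_ms if start_ms <= t < end_ms)
    return n_spikes / ((end_ms - start_ms) / 1000.0)


def net_rate(spike_times_ms):
    """Response-window rate (50 to 350 ms) minus baseline rate (-250 to -50 ms)."""
    baseline = firing_rate(spike_times_ms, -250, -50)
    response = firing_rate(spike_times_ms, 50, 350)
    return response - baseline


def normalize_by_max(mean_net_by_condition):
    """Divide each condition mean by the neuron's largest condition mean.

    Assumes the largest mean is positive, as expected for a responsive neuron.
    """
    peak = max(mean_net_by_condition.values())
    return {cond: rate / peak for cond, rate in mean_net_by_condition.items()}


# One hypothetical trial: 2 baseline spikes (10 Hz) and 6 response spikes (20 Hz).
spikes = [-200, -100, 60, 100, 150, 200, 250, 300]
rate = net_rate(spikes)
print(rate)  # about 10 spikes/s

# Hypothetical trial-averaged net rates for three of the 48 conditions.
means = {("upright", "large"): 12.0, ("inverted", "large"): 6.0, ("upright", "small"): 9.0}
normalized = normalize_by_max(means)
print(normalized[("inverted", "large")])  # 0.5
```

Normalizing within each neuron places all neurons on a common scale with a maximum of 1, so that neurons with high absolute firing rates do not dominate the pooled analysis.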

Pattern Classifier

To assess face identity coding we used a correlation coefficient classifier10,39 based on a zero-one loss measure. In this analysis, a pattern classifier is trained on a subset of data to derive the presented stimulus from the pattern of activity in a population of neurons. The proportion of correct classifications (classifier accuracy) is indicative of how well the neural activity pattern represents face identity.

The classifier was trained on the pattern of activity across all 57 neurons on 83.3% of the trials and tested on the remaining 16.7%. To do this, we first examined classifier performance based on the upright face trials. Excluding trials where the stimulus was presented upside down, we used a subset of 6 randomly selected trials per combination of neuron (57 neurons) and face identity (12 identities). For the training, 12 vectors, one for each upright face identity, were created containing the average responses of all 57 neurons on 5 of the 6 selected trials (i.e. 12 vectors of 57 average responses).

For the test phase, a vector with the responses of all 57 neurons on a remaining 6th trial was correlated with each of the 12 vectors created using the training phase. The trained identity that yielded the highest correlation with the test identity was used as the predicted identity (the classifier was correct if the predicted identity was equal to the test identity). This procedure was repeated 6 times, once for each of the selected trials to serve as the test data. To minimize trial selection influence, this total procedure was repeated 100 times, with random permutations of the selection of trials for each neuron. To eliminate the influence of net differences in firing rate of the individual neurons in the correlation, for each permutation, before training and testing, the data were Z-scored (using the mean and standard deviation within each neuron, across orientation and stimuli). The average percentage of ‘correct’ decisions of the classifier was used as the classifier performance.
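The training and test phases can be illustrated with the minimal sketch below, which runs one pass of the leave-one-trial-out procedure on synthetic data. It is an assumption-laden illustration rather than our analysis code: the data are simulated Gaussian responses, the function names are hypothetical, and the Z-scoring step and the 100 random re-selections of trials are omitted for brevity.

```python
import random


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


def classify_leave_one_out(data):
    """data[identity][neuron] is a list of per-trial responses.

    Each trial serves once as test data; the remaining trials are averaged
    into one training vector per identity. Returns the proportion correct.
    """
    n_ids = len(data)
    n_trials = len(data[0][0])
    correct = 0
    for held_out in range(n_trials):
        templates = [
            [
                sum(r for k, r in enumerate(trials) if k != held_out) / (n_trials - 1)
                for trials in data[identity]
            ]
            for identity in range(n_ids)
        ]
        for identity in range(n_ids):
            test_vector = [trials[held_out] for trials in data[identity]]
            scores = [pearson(test_vector, template) for template in templates]
            predicted = scores.index(max(scores))
            correct += predicted == identity
    return correct / (n_ids * n_trials)


# Synthetic population: each neuron has a fixed tuning value per identity
# plus independent trial noise (all values hypothetical).
rng = random.Random(0)
N_IDS, N_NEURONS, N_TRIALS = 12, 57, 6
tuning = [[rng.gauss(0, 1) for _ in range(N_NEURONS)] for _ in range(N_IDS)]
data = [
    [[tuning[i][n] + rng.gauss(0, 0.5) for _ in range(N_TRIALS)] for n in range(N_NEURONS)]
    for i in range(N_IDS)
]

accuracy = classify_leave_one_out(data)
print(f"accuracy = {accuracy:.2f}, chance = {1 / N_IDS:.3f}")
```

For cross-condition decoding, the same logic applies with training vectors built from one condition (e.g. upright) and test vectors taken from the other (e.g. inverted).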

To test the statistical significance of the classifier performance against chance, a null distribution was generated by repeating the above described procedure 1000 times while randomly shuffling the identity labels of the stimuli during training. For each of these 1000 repetitions, classifier performance was obtained, resulting in a probability distribution. The proportion of data points from this distribution higher than the performance obtained by using the real face identities indicated the probability level of the classifier not being better than chance (in theory, 1/12 or 8.3%).
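The shuffling test can be sketched as follows. This minimal example makes several assumptions that are not part of the procedure reported above: the data are synthetic, identity labels are shuffled across individual training trials, a single train/test split is used, and only 200 shuffles are run to keep the example fast. All names are hypothetical.

```python
import random


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


def decoding_accuracy(train, test):
    """train and test are lists of (label, population_vector) pairs."""
    labels = sorted({label for label, _ in train})
    templates = {}
    for label in labels:
        vectors = [vec for lab, vec in train if lab == label]
        templates[label] = [sum(col) / len(vectors) for col in zip(*vectors)]
    hits = 0
    for label, vec in test:
        predicted = max(labels, key=lambda lab: pearson(vec, templates[lab]))
        hits += predicted == label
    return hits / len(test)


def null_scores(train, test, n_perm, rng):
    """Classifier scores obtained with shuffled training labels."""
    train_labels = [label for label, _ in train]
    scores = []
    for _ in range(n_perm):
        shuffled = train_labels[:]
        rng.shuffle(shuffled)
        permuted = [(lab, vec) for lab, (_, vec) in zip(shuffled, train)]
        scores.append(decoding_accuracy(permuted, test))
    return scores


def p_value(observed, scores):
    """Proportion of null scores higher than the observed score."""
    return sum(score > observed for score in scores) / len(scores)


# Synthetic data: 12 identities, 20 neurons, 5 training trials and 1 test trial each.
rng = random.Random(1)
N_IDS, N_NEURONS = 12, 20
tuning = [[rng.gauss(0, 1) for _ in range(N_NEURONS)] for _ in range(N_IDS)]


def noisy_trial(identity):
    return [t + rng.gauss(0, 0.5) for t in tuning[identity]]


train = [(i, noisy_trial(i)) for i in range(N_IDS) for _ in range(5)]
test = [(i, noisy_trial(i)) for i in range(N_IDS)]

observed = decoding_accuracy(train, test)
null = null_scores(train, test, n_perm=200, rng=rng)
p = p_value(observed, null)
print(f"observed = {observed:.2f}, p = {p:.3f}")
```

Percentiles of the resulting null distribution (e.g. the 2nd and 98th) can then be reported alongside the observed score, as in the Results.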

Data availability Statement

Requests for the data analyzed during this study should be directed to and will be fulfilled by the corresponding author Jessica Taubert (jesstaubert@gmail.com).

References

Gross, C. G., Rocha-Miranda, C. E. & Bender, D. B. Visual properties of neurons in inferotemporal cortex of the Macaque. Journal of neurophysiology 35, 96–111 (1972).

Perrett, D. I., Rolls, E. T. & Caan, W. Visual neurones responsive to faces in the monkey temporal cortex. Experimental brain research 47, 329–342 (1982).

Tsao, D. Y., Freiwald, W. A., Tootell, R. B. & Livingstone, M. S. A cortical region consisting entirely of face-selective cells. Science (New York, N.Y.) 311, 670–674, https://doi.org/10.1126/science.1119983 (2006).

Taubert, J., Van Belle, G., Vanduffel, W., Rossion, B. & Vogels, R. The effect of face inversion for neurons inside and outside fMRI-defined face-selective cortical regions. Journal of neurophysiology 113, 1644–1655, https://doi.org/10.1152/jn.00700.2014 (2015).

Bell, A. H. et al. Relationship between functional magnetic resonance imaging-identified regions and neuronal category selectivity. The Journal of neuroscience: the official journal of the Society for Neuroscience 31, 12229–12240, https://doi.org/10.1523/jneurosci.5865-10.2011 (2011).

Desimone, R., Albright, T. D., Gross, C. G. & Bruce, C. Stimulus-selective properties of inferior temporal neurons in the macaque. The Journal of neuroscience: the official journal of the Society for Neuroscience 4, 2051–2062 (1984).

Rolls, E. T. & Baylis, G. C. Size and contrast have only small effects on the responses to faces of neurons in the cortex of the superior temporal sulcus of the monkey. Experimental brain research 65, 38–48 (1986).

Tovee, M. J., Rolls, E. T. & Azzopardi, P. Translation invariance in the responses to faces of single neurons in the temporal visual cortical areas of the alert macaque. Journal of neurophysiology 72, 1049–1060 (1994).

Ito, M., Tamura, H., Fujita, I. & Tanaka, K. Size and position invariance of neuronal responses in monkey inferotemporal cortex. Journal of neurophysiology 73, 218–226, https://doi.org/10.1152/jn.1995.73.1.218 (1995).

Meyers, E. M., Borzello, M., Freiwald, W. A. & Tsao, D. Intelligent information loss: the coding of facial identity, head pose, and non-face information in the macaque face patch system. The Journal of neuroscience: the official journal of the Society for Neuroscience 35, 7069–7081, https://doi.org/10.1523/jneurosci.3086-14.2015 (2015).

Freiwald, W. A. & Tsao, D. Y. Functional compartmentalization and viewpoint generalization within the macaque face-processing system. Science (New York, N.Y.) 330, 845–851, https://doi.org/10.1126/science.1194908 (2010).

Hasselmo, M. E., Rolls, E. T. & Baylis, G. C. The role of expression and identity in the face-selective responses of neurons in the temporal visual cortex of the monkey. Behavioural brain research 32, 203–218 (1989).

Young, M. P. & Yamane, S. Sparse population coding of faces in the inferotemporal cortex. Science (New York, N.Y.) 256, 1327–1331 (1992).

Abbott, L. F., Rolls, E. T. & Tovee, M. J. Representational capacity of face coding in monkeys. Cerebral cortex (New York, N.Y.: 1991) 6, 498–505 (1996).

Chang, L. & Tsao, D. Y. The Code for Facial Identity in the Primate Brain. Cell 169, 1013–1028.e14, https://doi.org/10.1016/j.cell.2017.05.011 (2017).

Taubert, J., Van Belle, G., Vanduffel, W., Rossion, B. & Vogels, R. Neural Correlate of the Thatcher Face Illusion in a Monkey Face-Selective Patch. The Journal of neuroscience: the official journal of the Society for Neuroscience 35, 9872–9878, https://doi.org/10.1523/jneurosci.0446-15.2015 (2015).

Taubert, J., Goffaux, V., Van Belle, G., Vanduffel, W. & Vogels, R. The impact of orientation filtering on face-selective neurons in monkey inferior temporal cortex. Scientific reports 6, 21189, https://doi.org/10.1038/srep21189 (2016).

Freiwald, W. A., Tsao, D. Y. & Livingstone, M. S. A face feature space in the macaque temporal lobe. Nature neuroscience 12, 1187–1196, https://doi.org/10.1038/nn.2363 (2009).

Grimaldi, P., Saleem, K. S. & Tsao, D. Anatomical Connections of the Functionally Defined “Face Patches” in the Macaque Monkey. Neuron 90, 1325–1342, https://doi.org/10.1016/j.neuron.2016.05.009 (2016).

Tsao, D. Y., Freiwald, W. A., Knutsen, T. A., Mandeville, J. B. & Tootell, R. B. Faces and objects in macaque cerebral cortex. Nature neuroscience 6, 989–995, https://doi.org/10.1038/nn1111 (2003).

Dubois, J., de Berker, A. O. & Tsao, D. Y. Single-unit recordings in the macaque face patch system reveal limitations of fMRI MVPA. The Journal of neuroscience: the official journal of the Society for Neuroscience 35, 2791–2802, https://doi.org/10.1523/jneurosci.4037-14.2015 (2015).

Mysore, S. G., Vogels, R., Raiguel, S. E. & Orban, G. A. Shape selectivity for camouflage-breaking dynamic stimuli in dorsal V4 neurons. Cerebral cortex (New York, N.Y.: 1991) 18, 1429–1443, https://doi.org/10.1093/cercor/bhm176 (2008).

Popivanov, I. D., Jastorff, J., Vanduffel, W. & Vogels, R. Tolerance of macaque middle STS body patch neurons to shape-preserving stimulus transformations. Journal of cognitive neuroscience 27, 1001–1016, https://doi.org/10.1162/jocn_a_00762 (2015).

Leopold, D. A., Bondar, I. V. & Giese, M. A. Norm-based face encoding by single neurons in the monkey inferotemporal cortex. Nature 442, 572–575, https://doi.org/10.1038/nature04951 (2006).

Sugase-Miyamoto, Y., Matsumoto, N., Ohyama, K. & Kawano, K. Face inversion decreased information about facial identity and expression in face-responsive neurons in macaque area TE. The Journal of neuroscience: the official journal of the Society for Neuroscience 34, 12457–12469, https://doi.org/10.1523/jneurosci.0485-14.2014 (2014).

Dahl, C. D., Rasch, M. J., Tomonaga, M. & Adachi, I. The face inversion effect in non-human primates revisited - an investigation in chimpanzees (Pan troglodytes). Scientific reports 3, 2504, https://doi.org/10.1038/srep02504 (2013).

Rossion, B. & Taubert, J. What can we learn about human individual face recognition from experimental studies in monkeys? Vision research (in press).

Bruce, C. Face recognition by monkeys: absence of an inversion effect. Neuropsychologia 20, 515–521 (1982).

Rosenfeld, S. A. & Van Hoesen, G. W. Face recognition in the rhesus monkey. Neuropsychologia 17, 503–509 (1979).

Vanduffel, W. et al. Visual motion processing investigated using contrast agent-enhanced fMRI in awake behaving monkeys. Neuron 32, 565–577 (2001).

Popivanov, I. D., Jastorff, J., Vanduffel, W. & Vogels, R. Stimulus representations in body-selective regions of the macaque cortex assessed with event-related fMRI. NeuroImage 63, 723–741, https://doi.org/10.1016/j.neuroimage.2012.07.013 (2012).

Janssens, T., Zhu, Q., Popivanov, I. D. & Vanduffel, W. Probabilistic and single-subject retinotopic maps reveal the topographic organization of face patches in the macaque cortex. The Journal of neuroscience: the official journal of the Society for Neuroscience 34, 10156–10167, https://doi.org/10.1523/jneurosci.2914-13.2013 (2014).

Adab, H. Z., Popivanov, I. D., Vanduffel, W. & Vogels, R. Perceptual learning of simple stimuli modifies stimulus representations in posterior inferior temporal cortex. Journal of cognitive neuroscience 26, 2187–2200, https://doi.org/10.1162/jocn_a_00641 (2014).

Popivanov, I. D., Schyns, P. G. & Vogels, R. Stimulus features coded by single neurons of a macaque body category selective patch. Proceedings of the National Academy of Sciences of the United States of America 113, E2450–2459, https://doi.org/10.1073/pnas.1520371113 (2016).

Kaliukhovich, D. A. & Vogels, R. Divisive Normalization Predicts Adaptation-Induced Response Changes in Macaque Inferior Temporal Cortex. The Journal of neuroscience: the official journal of the Society for Neuroscience 36, 6116–6128, https://doi.org/10.1523/jneurosci.2011-15.2016 (2016).

Kumar, S., Popivanov, I. D. & Vogels, R. Transformation of Visual Representations Across Ventral Stream Body-selective Patches. Cerebral cortex (New York, N.Y.: 1991), 1–15, https://doi.org/10.1093/cercor/bhx320 (2017).

Vinken, K. & Vogels, R. Adaptation can explain evidence for encoding of probabilistic information in macaque inferior temporal cortex. Current biology: CB 27, R1210–R1212, https://doi.org/10.1016/j.cub.2017.09.018 (2017).

Kuravi, P. & Vogels, R. Effect of adapter duration on repetition suppression in inferior temporal cortex. Scientific reports 7, 3162, https://doi.org/10.1038/s41598-017-03172-3 (2017).

Zhang, Y. et al. Object decoding with attention in inferior temporal cortex. Proceedings of the National Academy of Sciences of the United States of America 108, 8850–8855, https://doi.org/10.1073/pnas.1100999108 (2011).

Acknowledgements

We thank Christophe Ulens, Marc De Paep, Sara De Pril, Wouter Depuydt, Astrid Hermans, Piet Kayenbergh, Gerrit Meulemans, Inez Puttemans and Stijn Verstraeten for technical and administrative assistance. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Author information

Contributions

The experiment was designed by all authors. Data collection was performed by J.T. The data were analyzed by J.T. and G.V.B. All authors wrote the main manuscript text and prepared the figures. All authors reviewed and approved the manuscript.

Ethics declarations

Competing Interests

The authors declare no competing interests.

Additional information

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Taubert, J., Van Belle, G., Vogels, R. et al. The impact of stimulus size and orientation on individual face coding in monkey face-selective cortex. Sci Rep 8, 10339 (2018). https://doi.org/10.1038/s41598-018-28144-z

DOI: https://doi.org/10.1038/s41598-018-28144-z
