Abstract

Data quality is of crucial importance in the field of automated or digitally assisted assembly. This paper presents a comprehensive data set of triangle meshes representing electrical and electronic components obtained by scraping Computer Aided Design (CAD) models from the Internet. Consisting of a total of 234 triangle meshes with labelled vertices, this data set was specifically created for segmentation tasks. Its versatility for multimodal tasks is underscored by the presence of various labels, including vertex labels, categories, and subcategories. This paper presents the data set and provides a thorough statistical analysis, including measures of shape, size, distribution, and inter-rater reliability. In addition, the paper suggests several approaches for using the data set, considering its multimodal characteristics. The data set and related findings presented in this paper are intended to encourage further research and advancement in the field of manufacturing automation, specifically spatial assembly.

Similar content being viewed by others

Background & Summary

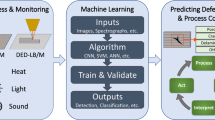

Manufacturers are under increasing pressure to deliver quality products while meeting their customers’ unique requirements. Many companies also face the growing challenge of efficiently producing customized products in a cost-effective manner1. One approach for this can be the use of automation or digital assistance solutions. However, there is often a lack of high-quality data, which companies have had to reengineer at great expense, creating a significant barrier to deploying such solutions2. Key to this is the need for precise and consistent geometric representations that support the integration of Design for Testability methodologies1. With this in mind, the research presents an in-depth analysis of 3D triangle meshes, which are critical components in understanding the geometric intricacies of electronic components. The study design involved collecting labels for 24 triangle meshes from three different raters, resulting in a total of 72 label files. These labels underwent a rigorous statistical evaluation, employing inter-rater reliability metrics like Cohen’s kappa, Fleiss’ kappa, and Randolph’s kappa, as well as advanced geometric descriptors such as optimal transport distance (OTD) and heat kernel signature (HKS). Descriptive statistics were also leveraged to provide foundational insights. The overarching objectives of this study are twofold: To establish a dependable benchmark for human performance in 3D mesh segmentation, a critical step is taken to define the essential features for the automated assembly of electrical and electronic components in control cabinets. This benchmark aims to serve as an invaluable guide for the development of future automation algorithms. Second, the study rigorously evaluates the geometric similarity between multiple mesh resolutions with the intent of confirming their interchangeability in applications where the granularity of tessellation is not a deciding factor. The data set can be used for many different use cases. Besides the domain-related use case for learning the geometric features of electronic components, the data set is additionally suitable for benchmarking of most mesh algorithms. Concerning triangle mesh downsampling algorithms, this data set holds a pivotal role. Apart from the semantic segmentation, the instance segmentation of the features is also conceivable, as well as the determination of the features by means of other methods such as 3D bounding boxes. Figure 1 shows the data preprocessing pipeline to convert standard for the exchange of product model data (STEP) files into corresponding suitable formats for deep learning.

Methods

Aggregation

As part of the work, 46,068 STEP files of electrical components were scraped from the internet. These components stem from a total of 75 different manufacturers. In addition to the components themselves, the metadata fields shown in Table 1 were scraped.

The completeness of the properties for all components is not given, because not all manufacturers list all mentioned information, which additionally shows the need for software-based information generation. The property “External document” refers to the official manufacturer documentation of the component. Since many components differ only in electrical characteristics but have identical geometry, all files were hashed using a Secure Hash Algorithm (SHA) 256 algorithm to identify geometric duplicates. This resulted in 21,134 geometrically different STEP files. During data preprocessing, a STEP file is converted into a STL triangle mesh via Open Cascade Technology. Triangle meshes and point clouds can be represented much better as tensors than STEP files and are therefore more suitable for Deep Learning (DL). Previous approaches of DL based on triangle meshes mostly work starting from Wavefront OBJ (OBJ) or object file format (OFF) files. Accordingly, the goal of data preprocessing is to convert and scale the files efficiently and correctly. All files in the data set can be filtered using the technology, category and subcategory to work with a subset. User-specific filters based on the scraped metadata can also be used to select components of a certain size, manufacturer, or electrical characteristics. The data set was then filtered to include only components relevant to control cabinet assembly, leaving 4,729 components. Figure 2 shows sample triangle meshes from the data set with their visualized geometric features.

Annotation

After filtering the files, a manual inspection of all remaining files was performed. The remaining files were converted to OBJ files, 234 of these files were subsequently labelled in Blender into five different classes as shown in Fig. 3: housing (dark blue, class ‘0’), contacting (green, class ‘1’), snap-on point (red, class ‘2’), cable entry (yellow, class ‘3’) and labelling area (orange, class ‘4’). The classes were chosen following extensive discussions with three domain experts from the control cabinet industry. Through thorough considerations on the final application and domain-specific use cases, the selected classes were determined based on their relevance to the assembly and disassembly processes of control cabinets. The labelling was done vertex-wise and all vertices were assigned to exactly one vertex group representing the different classes.

This chapter considers only the remaining 234 files. Special attention was paid to their geometry when selecting the labelled components. Due to the similarity and only minimal differences of some components, some criteria were considered during the selection of the components to prevent overfitting to one component shape. Only components that met one of the following criteria were selected:

-

Components with differences in width, height or length

-

Components with different numbers of contacts, snap-on points, cable entries or labelling areas

-

Components with features that deviate from the standard, e.g. angled contacts

-

Components with strongly asymmetrical features

Taking these criteria into account, a diligent effort was made to mitigate potential data bias as much as possible by having the test data labelled by three independent people. The majority vote was then calculated and used as ground truth. By combining the annotations of three different people, human-level performance can be approximated. This also provides valuable insight into the extent to which the data is consistently labelled by experts and how the labels affect the final segmented features.

Post processing

Since errors can occur during the conversion, they were corrected first and only then the scaling was performed. The goal is to generate a robust 2-manifold. The conversion in this work is done using an octree to represent the original mesh and constructs the surface by isosurface extraction3. Finally, a projection of the vertices onto the original mesh is performed to achieve high precision. In addition, a watertight 2-manifold is recovered by extracting outer surfaces between occupied and empty voxels and a projection-based optimization method4.

Labelling format

The files are available as high-resolution STL and downsampled OBJ and OFF files. While OBJ files represent exclusively a format for the representation of triangle networks, polygon networks can be represented additionally by means of OFF files. However, the double submission of the files is exclusively due to the fact that already published and established neural networks like MeshCNN5 or DiffusionNet6 mostly accept one of these two files as input in their inference pipeline. This allows for quick and easy experimentation with many different network architectures on the data set. For triangle meshes, vertex-wise, edge-wise or triangle-wise labels are common. The conversion of the labels into one of the other two formats is easily possible under definition of uniform conditions. In the context of the work, the conversion of the labels into other labels was also provided. The difference between vertex, edges and triangle labels is exemplified in Fig. 4.

It can be seen that the conversion is possible without any problems in the direction shown from (a) to (c), but it is not lossless. A conversion back from (c) to (a) would, for example, label the leftmost green vertex blue instead of green. Since both STL and OBJ and OFF files number the vertices and triangles sequentially from the beginning to the end of the file, label assignment is easy for vertex or triangle labels. In this case, the labels consist of a one-dimensional tensor of shape n×1 with n equal to the number of vertices or triangles of the triangle mesh depending which labelling format is chosen. In the case of edge labels, an implementation following Hanocka et al. is used.

Data Records

The Electrical and Electronic Components Dataset7 is available at the Harvard Dataverse. Components are labelled vertex-wise. The labels are in a txt-format and represent a one-dimensional tensor. In addition, components are labelled for classification tasks in technology, category, and subcategory. Possible values for these are the following classes:

-

Technology: Cabinet Engineering; Not Categorized; Fluid Engineering; Electrical Installation; Electrical Engineering

-

Category: Cables and Condcutors; Measuring + reporting devices; KNX (EIB); Power Source; Loads; Switch + Protection; Contactor, Relays; Plugs; Terminals

-

Subcategory: Accessories

All triangle meshes are scaled to about 12,000 triangles and are available as OBJ. In addition, the triangle meshes are in multiple form. The Electrical and Electronic Components Dataset consists of five subfolders as shown in Fig. 5.

In the “split” folder, the three files “train.txt”, “val.txt” and “test.txt” each contain the names of the components that are intended as a train/val/test split. The subfolder “test_raters” contains the test labels of the three independent labellers. All three subfolders contain 24 files. The “train”, “val”, and “test” folders are identical in structure and contain the triangle meshes in OBJ, OFF, and STL formats, respectively. While the former both are identical, the STL files represent triangle meshes with a higher resolution. The choice between OBJ and OFF files is based solely on the final algorithm used. The labels in the “test” folder were not manually relabelled but represent the majority vote of the other three labellers.

Technical Validation

In the field of data-driven research, the quality of a data set is of utmost importance, especially when it is based on manual annotations. This technical validation aims to provide a robust evaluation of a data set consisting of labelled triangle meshes. Four critical aspects are addressed: internal consistency, inter-rater reliability, geometric mesh similarity, and feature similarity.

Internal consistency

The consistency of labelling, particularly focusing on the mode and median being uniformly zero across all raters and data set partitions, underscores a coherent labelling strategy. This cohesiveness in central labels provides a stable and reliable baseline for subsequent vertex-wise analyses in geometric deep learning and other related applications. Ensuring such uniformity across different segments signifies that the labelling process was methodologically sound and adhered to a consistent norm or reference, thereby enhancing the reliability and applicability of the data set. A meticulous examination of the spread and variability in the labels, evidenced by subtle fluctuations in standard deviation values ranging from 1.1581 (validation set) to 1.2239 (rater 0), provides an implicit assurance of the data set’s integrity. This subtle range in spread does not allude to erratic variability but rather indicates a controlled and consistent labelling process. Furthermore, the tight range of variance values also corroborates the data set’s stability, providing a controlled environment where the data complexity is maintained without introducing unintended biases or skews. The data’s kurtosis and skewness were reviewed to ensure that any variability and asymmetry in the distribution were authentic and not a result of inconsistencies or errors in the labelling process. The validation set, exhibiting a pronounced kurtosis of 2.813, was specifically scrutinized to validate that it genuinely contains more extreme or rare cases, thereby enhancing the robustness of the data set by encompassing a wide array of potential real-world scenarios. The hierarchical pattern observed in the frequency distribution of each label, with label ‘0’ considerably dwarfing the others and comprising about 68% to 73% of the data set, was meticulously validated to ensure it reflects genuine data characteristics. The subsequent labels (‘1’ through ‘4’) present in a diminishing frequency order, each serving a specific and consistent role in representing various complexities and nuances in the triangle meshes. This hierarchical and consistent presence of labels, ensuring that neither is neglected nor disproportionately represented, validates that the data set can faithfully represent and be applied to a variety of network complexities and challenges.

Inter-rater reliability

Technical validation is crucial for establishing the reliability and applicability of this triangle mesh data set, both in academic and industrial contexts. The validation process begins with a thorough analysis of inter-rater reliability, employing multiple metrics such as Cohen’s, Fleiss’, and Randolph’s kappa.

These metrics, with all kappa statistics above 0.81, indicating almost perfect agreement levels8, are further supported by t-tests on continuous performance measures. This multi-metric approach robustly validates the reliability of the data set, making it useful for a range of applications, including high-performance machine learning models. In particular, the value of the data set is tied to its manually annotated labels, the reliability of which is assessed using a variety of metrics. While percentage agreement provides a basic measure, it lacks the nuance provided by the kappa statistic κ, which accounts for random agreement. This multi-faceted evaluation ensures the reliability of the data set, especially considering that these annotations serve as ground truth for future analyses.

Cohen’s kappa not only quantifies the degree to which raters agree but also adjusts for what could be expected by random chance. In case of Cohen’s kappa, the observed agreement Po is given by the ratio of the number of vertices on which both raters agree to the total number of vertices rated, where k is the number of categories and N is the total number of vertices. The expected agreement Pe of two raters is defined as the probability that rater one picks class i times the probability that rater two picks class i. In our data set, Cohen’s kappa values for the corresponding rater pairs are 0.9225 for rater (0,1), 0.8388 rater (0,2) and rater 0.8561 (1,2). This suggests “substantial” agreement based on Landis and Koch’s scale9. However, Cohen’s kappa is best suited for pairwise comparisons and may not fully capture the dynamics in scenarios with more than two raters, even when averaging the values10. Therefore the significance of Fleiss’ kappa11 becomes evident. Designed as a statistical measure for assessing the reliability of agreement between a fixed number of raters when assigning categorical ratings, Fleiss’ kappa extends the utility of Cohen’s kappa to multi-rater scenarios10. It is defined as11

where \(\bar{P}\) and \(\overline{{P}_{e}}\) is the average observed and expected agreement among all raters respectively. Here njk is the number of raters who assigned the kth category to the jth subject with n being the number of raters, K the number of categories and N the number of subjects11. The calculated Fleiss’ kappa value is 0.8561, corroborating the finding of substantial agreement among the raters. While kappa statistics are widely used, they are not without limitations. They can be sensitive to trait prevalence and base rates, potentially leading to paradoxical or misleading results. To mitigate these limitations, we also employed Gwet’s AC112 which is defined by:

Po is the observed agreement among the raters and Pc is the agreement by chance12. Where N is the total number of meshes being rated and Xii is the number of raters that agree on class i for a given vertex. Xij is the number of raters that assigned the jth vertex to class i. With a value of 0.9272, it provides an additional layer of confirmation for the substantial agreement among raters. The convergence of these different metrics while being largely consistent lends robustness to the reliability of the data set. Similarly, the independent hypothesis tests from Table 3 ensure that the data set is not only internally consistent, but also a reliable tool for a variety of external applications, ranging from academic research, computational geometry research to machine learning models aimed at approximating human-level performance in mesh segmentation.

In addition, the Brennan-Prediger kappa13 (0.9203), Conger’s kappa14 (0.87218), and Krippendorff’s alpha15 (0.87215) were also evaluated, confirming the high agreement.

Geometric similarity

Understanding the geometric similarity between different mesh formats – in particular OFF and STL – is essential not only for computational analysis, but also for ensuring that geometric information is not lost during downsampling. This study uses the Hausdorff distance, HKS, and p-Wasserstein distance to effectively tackle shape representation and comparison. The Hausdorff distance offers a precise measure for maximum discrepancies, vital for exact shape matching. HKS provides deep insights into local geometric features, enhancing our ability to distinguish between similar shapes through isometric invariance. The p-Wasserstein distance complements these by quantifying distribution transformations based on spatial geometry, ensuring a comprehensive analysis. The combination of these three descriptors ensures that the similarity measurement is both thorough and efficient. The Hausdorff distance16 lends itself to quantifying the extent to which two subsets of a metric space are close defined by16:

Surprisingly, a high Hausdorff distance was found between the OFF and STL formats, with a mean average distance of 14.1604 mm. This is significant because the distance is measured in the same unit as the meshes themselves, which is millimeters. While this could be a warning signal in most contexts, it is important to remember that the Hausdorff distance is very sensitive to mesh tessellation. Considering that the OFF and STL formats have different tessellation granularity, the high Hausdorff distance is more of a warning sign than a judgment of geometric dissimilarity. This therefore highlights the need for a multimetric approach. To further investigate the surprisingly high Hausdorff distance, the optimal transport distance17 in the form of p-Wasserstein distance18 and heat kernel signature19 were measured as more nuanced shape descriptors. The first also known as the Wasserstein-Kantorovich-Rubinstein18 distance or in the context of computer vision referred to as Earth Mover’s18,20 distance provides a robust way to measure shape similarity by considering the cost of transforming one shape into another18:

On the other hand, HKS as a function of time t, offers a spectral analysis of the shape, encapsulating local and global geometric properties. The HKS19 is defined as the diagonal (x = y) of the heat kernel kt with ϕ being the eigenfunctions of the Laplace-Beltrami-Operator evaluated at x and y respectively and λi being the corresponding eigenvalues21:

Both the OFF and STL mesh formats exhibit a remarkable degree of geometric congruence, as substantiated by the HKS and OTD. The HKS values were computed on the normalized meshes, using 16 eigenvalues6 for computational efficiency, and further analyzed through dynamic time warping22. This analysis yielded a mean HKS value of 3.5677 × 10−8 and a maximum of 5.36 × 10−7, both extremely close to zero. These low HKS values strongly suggest that the diffusion processes on both mesh formats are nearly identical, affirming their intrinsic geometric similarity. Complementing this, the mean Earth Mover’s distance between the OFF and STL formats was found to be 9.8169, with a maximum distance of 148.1478. This metric, which quantifies the minimal “cost” of transforming one shape into another, further corroborates the geometric congruence indicated by the HKS values. Despite differences in tessellation granularity, these metrics collectively demonstrate that the OFF and STL formats are highly similar in their underlying geometry and can be considered interchangeable for applications where tessellation granularity is not a critical factor.

Feature similarity

The detailed evaluation of feature similarity across different raters constitutes a vital component of the technical validation process in this study. This is of particular importance given the study’s overarching aim of facilitating the automated assembly of electrical and electronic components in control cabinets. For this purpose, distinct features were extracted from the main triangle meshes using the labels provided by each rater. These isolated, disconnected submeshes were then subjected to an exhaustive geometric analysis, which included computations of their surface area, the volume encapsulated by their convex hulls, and various centroids. Despite slight variations in labelling metrics like accuracy, precision, recall F1-score, and the Jaccard index among the raters, these differences had a negligible impact on the geometric attributes of the segmented features. The surface area of the labelled classes exhibited only small percentage differences as shown in Table 4.

The percentage of the total surface area occupied by all features shows minimal variation when compared to the surface area of the triangle meshes as a whole. Notably, certain classes, such as 2 and 3, occupy a significant amount of surface area, although the vertex label frequencies in Table 2 suggest otherwise. This indicates that classes 2 and 3 may require fewer vertices for representation, indicating simpler geometry. Consequently, it would be prudent to consider either a surface-based segmentation approach or one based on shape descriptors to address the label imbalance.

Usage Notes

The Electrical and Electronic Components Dataset7 is freely available at the Harvard Dataverse at https://doi.org/10.7910/DVN/D3ODGT. Throughout the software development, only open-source software was used. Blender and associated Python packages were used for labelling and exporting the labels. All statistical results can be replicated using the code in https://github.com/bensch98/eec-analysis. The script stats.py calculates all statistics in pandas DataFrames and exports them to CSV files. The implementation of the HKS is based on Sharp et al.

Experimental application

The data set was already used in segmentation related tasks using MeshCNN and DiffusionNet23. In this proposed concept meaningful results were achieved across all segmentation classes based on the 234 models included in this data set. The results obtained enable the precise determination of feature vectors. This is particularly relevant for direction-dependent assembly and disassembly steps such as cable contacting. Due to the dispersed nature of information sources relevant to assembly of control cabinets, the generated directional vectors are of particular significance as a unified database.

Existing data sets

Other noteworthy data sets that can be used for similar deep learning-based approaches from a purely technological perspective include the ABC24, SHREC25 or MPI FAUST26 data set. While SHREC and MPI FAUST have no domain reference and therefore tend to be unsuitable for the intended use case of assembly and disassembly of control cabinets, our Electrical and Electronic Components data set is a useful addition to the widely used ABC data set. A combination of both data sets in the form of training a more general foundation model based on the ABC data set and a fine-tuning using the data set presented by us offers additional potential alongside the proof of concept already presented by Bründl et al.23.

Code availability

Figures 2, 3 were generated using the data provided in the data set using the open source library Open3D27. To facilitate the reproduction of these figures, a copy of the raw data is included in the repository’s corresponding directory. Data set preparation was done with a combination of libraries, including Open3D27, numpy28 and bpy. Blender version 3.3.0 was used to label the 234 triangle meshes. Further on, various functions were programmed to interact with the data set. These include functions for scaling labels up and down, cropping triangle meshes by labels, converting vertex labels to triangle or edge labels and calculating surface, volume and cluster centroids of the cropped out meshes like the labelled regions. Additionally, based on the technology, category, and subcategory, the components can be filtered. All the software used in the study are open source available at https://github.com/bensch98/eec-analysis.

References

Bründl, P., Stoidner, M., Nguyen, H. G., Baechler, A. & Franke, J. Challenges and Opportunities of Software-Based Production Planning and Control for Engineer-to-Order Manufacturing. In Advances in Production Management, edited by IFIP International Federation for Information Processing (2023).

Stoidner, M., Bründl, P., Nguyen, H. G., Baechler, A. & Franke, J. Towards the Digital Factory Twin in Engineer-to-Order Industries: A Focus on Control Cabinet Manufacturing. In Advances in Production Management Systems. Production Management Systems for Responsible, edited by IFIP International Federation for Information Processing (Springer Nature, Switzerland, 2023).

Huang, J., Su, H. & Guibas, L. Robust Watertight Manifold Surface Generation Method for ShapeNet Models, (2018).

Huang, J., Zhou, Y. & Guibas, L. ManifoldPlus: A Robust and Scalable Watertight Manifold Surface Generation Method for Triangle Soups, (2020).

Hanocka, R. et al. MeshCNN: A Network with an Edge https://doi.org/10.48550/arXiv.1809.05910 (2018).

Sharp, N., Attaiki, S., Crane, K. & Ovsjanikov, M. DiffusionNet: Discretization Agnostic Learning on Surfaces, (2020).

Scheffler, B. & Bründl, P. Electrical and Electronic Components Dataset. Harvard Dataverse https://doi.org/10.7910/DVN/D3ODGT (2023).

McHugh, M. L. Interrater reliability: the kappa statistic. Biochem Med, 276–282, https://doi.org/10.11613/BM.2012.031 (2012).

Landis, J. R. & Koch, G. G. The measurement of observer agreement for categorical data. Biometrics 33, 159–174 (1977).

Warrens, M. J. Inequalities between multi-rater kappas. Adv Data Anal Classif 4, 271–286, https://doi.org/10.1007/s11634-010-0073-4 (2010).

Fleiss, J. L. Measuring nominal scale agreement among many raters. Psychological Bulletin 76, 378–382, https://doi.org/10.1037/h0031619 (1971).

Gwet, K. L. Computing inter-rater reliability and its variance in the presence of high agreement. The British journal of mathematical and statistical psychology 61, 29–48, https://doi.org/10.1348/000711006X126600 (2008).

Brennan, R. L. & Prediger, D. J. Coefficient Kappa: Some Uses, Misuses, and Alternatives. Educational and Psychological Measurement 41, 687–699, https://doi.org/10.1177/001316448104100307 (1981).

Conger, A. J. Integration and generalization of kappas for multiple raters. Psychological Bulletin 88, 322–328, https://doi.org/10.1037/0033-2909.88.2.322 (1980).

Krippendorff, K. Measuring the Reliability of Qualitative Text Analysis Data. Qual Quant 38, 787–800, https://doi.org/10.1007/s11135-004-8107-7 (2004).

Taha, A. A. & Hanbury, A. An efficient algorithm for calculating the exact Hausdorff distance. IEEE transactions on pattern analysis and machine intelligence 37, 2153–2163, https://doi.org/10.1109/TPAMI.2015.2408351 (2015).

Peyré, G. & Cuturi, M. Computational Optimal Transport https://doi.org/10.48550/arXiv.1803.00567 (2018).

Mémoli, F. Gromov–Wasserstein Distances and the Metric Approach to Object Matching. Found Comput Math 11, 417–487, https://doi.org/10.1007/s10208-011-9093-5 (2011).

Sun, J., Ovsjanikov, M. & Guibas, L. A Concise and Provably Informative Multi-Scale Signature Based on Heat Diffusion. Computer Graphics Forum 28, 1383–1392, https://doi.org/10.1111/j.1467-8659.2009.01515.x (2009).

Rubner, Y. The Earth Mover’s Distance as a Metric for Image Retrieval. International Journal of Computer Vision 40, 99–121, https://doi.org/10.1023/A:1026543900054 (2000).

Sharp, N. & Crane, K. A Laplacian for Nonmanifold Triangle Meshes. Computer Graphics Forum 39, 69–80, https://doi.org/10.1111/cgf.14069 (2020).

Senin, P. Dynamic Time Warping Algorithm Review (2008).

Bründl, P. et al. Semantic part segmentation of spatial features via geometric deep learning for automated control cabinet assembly. J Intell Manuf https://doi.org/10.1007/s10845-023-02267-1 (2023).

Koch, S. et al. ABC: A Big CAD Model Dataset For Geometric Deep Learning. In The IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2019).

Lian, Z. et al. SHREC ‘11 Track: Shape Retrieval on Non-rigid 3D Watertight Meshes, (2011).

Bogo, F., Romero, J., Loper, M. & Black, M. J. FAUST: Dataset and evaluation for 3D mesh registration. In Proceedings IEEE Conf. on Computer Vision and Pattern Recognition (CVPR) (IEEE, Piscataway, NJ, USA, 2014).

Zhou, Q.-Y., Park, J. & Koltun, V. Open3D: A Modern Library for 3D Data Processing, (2018).

Harris et al. Array programming with NumPy. Nature 585, 357–362, https://doi.org/10.1038/s41586-020-2649-2 (2020).

Acknowledgements

This work was also financially supported by RITTAL GmbH & Co KG.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Author information

Authors and Affiliations

Contributions

B.S. aggregated the data and evaluated several different neural network architectures and did the statistical analysis. P.B. designed and supervised the study. H.N. conducted an extensive literature research. M.S. has provided input regarding the application-oriented problems. B.S. and P.B. wrote the manuscript. All authors reviewed the manuscript draft.

Corresponding authors

Ethics declarations

Competing interests

The authors declare that they have no known competing financial interests or personal relationships which have or could perceived to have influenced the work reported in this article.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Scheffler, B., Bründl, P., Nguyen, H.G. et al. A Dataset of Electrical Components for Mesh Segmentation and Computational Geometry Research. Sci Data 11, 309 (2024). https://doi.org/10.1038/s41597-024-03155-w

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41597-024-03155-w

- Springer Nature Limited