Abstract

Precision medicine has the potential to provide more accurate diagnosis, appropriate treatment and timely prevention strategies by considering patients’ biological makeup. However, this cannot be realized without integrating clinical and omics data in a data-sharing framework that achieves large sample sizes. Systems that integrate clinical and genetic data from multiple sources are scarce due to their distinct data types, interoperability, security and data ownership issues. Here we present a secure framework that allows immutable storage, querying and analysis of clinical and genetic data using blockchain technology. Our platform allows clinical and genetic data to be harmonized by combining them under a unified framework. It supports combined genotype–phenotype queries and analysis, gives institutions control of their data and provides immutable user access logs, improving transparency into how and when health information is used. We demonstrate the value of our framework for precision medicine by creating genotype–phenotype cohorts and examining relationships within them. We show that combining data across institutions using our secure platform increases statistical power for rare disease analysis. By offering an integrated, secure and decentralized framework, we aim to enhance reproducibility and encourage broader participation from communities and patients in data sharing.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Main

The goal of precision medicine is to individualize medical treatment for patients based on their characteristics, including genetics, physiology and environment. Given the potential to improve patient outcomes and reduce healthcare costs, it has become a national research agenda in the United States and elsewhere1. However, its potential cannot be realized without unifying clinical and genetic data to improve understanding of clinical observations within their genetic context2,3,4,5. Although some progress has been achieved, such as the All Of Us research project, the UK Biobank and the eMERGE research network, several key hurdles remain3,6. For example, integrated data systems that harmonize distinct clinical and omics data formats are lacking, leading to missed opportunities2,5. Furthermore, larger sample sizes and diverse populations are necessary when attempting to link genotypes to diseases, as biobanks tend to contain small numbers of patients from disease cohorts. Thus, achieving large sample sizes is only possible by aggregating data across institutions in a systematic way1. This requires a framework for uniformly processing and analyzing clinical and genetic data from multiple sources3. Importantly, a critical barrier to addressing these needs is a lack of robust tools to ensure data integration while maintaining security4. An ideal platform for storage and analysis of clinical and genetic data would enable unified data storage of multidomain data, protect from loss and manipulation, provide appropriate and controlled access to researchers and record usage logs.

Linking clinical information (for example, electronic health records (EHRs)) with genetic data across multiple sites presents several logistical challenges. First, they are stored under different file formats in separate databases. This increases technical requirements as users must learn distinct tools to parse different data types. There are also different data security requirements, as EHRs are considered protected health information and cannot be easily shared, even in anonymized form3,7. This often results in the creation of multiple databases for different domains, with a heavy analysis load required for linking them. Although initiatives to integrate clinical and genetic data are ongoing (for example, HL7 FHIR Genomics8), they do not address issues related to data security in multisite settings8,9. As such, concerns relating to data ownership, cost and dissemination procedures remain unresolved. Blockchain, a distributed ledger technology, can be an infrastructure solution that overcomes a number of these logistical challenges due to its properties of security, immutability and decentralization10. Furthermore, the inherent flexibility of blockchain technology makes it readily compatible with and complementary to efforts related to data standardization and harmonization.

Despite the fact that the implementation of blockchain technology in science is in its infancy, there are numerous blockchain-based solutions for clinical or genetic data sharing10,11,12. Blockchain has been used for next-generation sequencing data indexing and querying13, omics data access logging14,15, pharmacogenomics data querying16, patient-controlled data sharing17,18, coordinating EHR information19,20, infectious disease reporting21 and trustworthy machine learning22. However, most of these solutions do not enable the integration of genetic and clinical data. Moreover, most of them rely on storing the data outside the blockchain with only hashed references stored ‘on chain’22,23. When data are stored outside the blockchain, several limitations exist. First, the integrity of data stored outside the network is not guaranteed by blockchain’s immutability, posing a risk of tampering. Second, access cannot be as strictly controlled or audited when data can be accessed via methods outside of the network. Importantly, data contributors do not retain sovereignty with the ability to directly audit how their data are used. Finally, storing data ‘on chain’ offers the ability to perform computation directly on the network. This streamlines processing, as data are easily co-queryable, and also ensures stronger oversight on what computation is performed by researchers.

Here we present a decentralized data-sharing platform (PrecisionChain) using blockchain technology that unifies clinical and genetic data storage, retrieval and analysis. The platform works as a consortium network across multiple participating institutions, each with write and read access10,13. PrecisionChain was built using the free version of blockchain application programming interface (API) MultiChain, a well-maintained enterprise blockchain24. Although MultiChain provides data structures called ‘streams’ for data storage, the vanilla implementation does not allow for multimodal data harmonization and was shown to be still inefficient with performance overheads and high data storage cost13. Therefore, we created a data model and indexing schema that harmonizes clinical and genetic data storage, enables multimodal querying with low latency and contains an end-to-end analysis pipeline25. We included a proof-of-concept implementation with an accessible front end to showcase functionality for building cohorts and identifying genotype–phenotype relationships in a simulated network. We also showed that we can accurately reproduce existing association studies using UK Biobank data. We further assessed the utility of our framework within the context of a rare disease by analyzing genetic and clinical data of patients with amyotrophic lateral sclerosis (ALS) using data from 26 institutions in the New York Genome Center (NYGC) ALS Consortium.

Results

PrecisionChain enables efficient indexing of multimodal data

We envisage PrecisionChain to be used by a consortium of biomedical institutions that share genetic and clinical data for research purposes (Fig. 1a). We developed a data model and indexing schemes to enable simultaneous querying of clinical and genetic data. To overcome challenges related to blockchain technology, specifically transaction latency and lack of data structures for flexible querying, we developed an efficient data encoding mechanism and sparse indexing schema on top of MultiChain’s ‘data stream’ feature25.

a, Consortium network. Network is made up of biomedical institutions. All sites share data on a decentralized blockchain platform maintained by all nodes. New joining institutions are verified via cryptographic tokens. Once joined, they can upload new data and access existing data. b, High-level indexing. Indexing of data into three levels: EHR, Genetic and Audit. EHR and Genetic levels are further divided into Domain and Person views and Variant, Person, Gene, MAF counter and Analysis views, respectively. Each view is made up of multiple streams with streams organized by property. c, Indexing of Domain view is by OMOP clinical table. Within each clinical table, we index streams using the OMOP vocabulary hierarchy. d, Indexing of Person views is by person ID with all data for a patient under one stream. This is done for both clinical and genetic data. e, Indexing of Variant view is by chromosome and genomic coordinates. f, MAF counter is organized by MAF range. MAF calculation occurs at every insertion. g, Analysis view records metadata to harmonize sequencing data, assess relatedness among samples and conduct population stratification.

We indexed data into three levels: clinical (EHR), genetics and access logs (Fig. 1 and Extended Data Fig. 1a–d). Within each level, we organized the data into views. We further used additional nesting within each view to enable efficient and flexible access to the data. We then created a mapping stream that records how data have been indexed and how to retrieve information under all views. This speeds up query time and allows us to efficiently store multimodal data under one network.

At the EHR level, we used the standardized vocabularies of the Observational Medical Outcomes Partnership (OMOP) Common Data Model (CDM) format (see Methods for details), which supports integration of clinical data from multiple sources26,27. OMOP is made up of concepts that represent some unique clinical information (for example, a specific medication or diagnosis)27. Under the EHR level, we developed two separate indexing schemes, which we call Domain view and Person view. The Domain view is indexed by concept type (for example, diagnosis, medications, etc.). If one queries the network with a concept (for example, diabetes diagnosis), the network will return all the patients with that record. The Person view is organized by patient ID—that is, each data stream contains the entire medical record of a patient to allow quick querying of single patients’ medical records. In both views, each entry contains several keys that can be used for specifying queries, including patient ID, concept ID, concept type, date of record (that is, when the clinical event occurred) and concept value or information (for example, laboratory value, medication dose, etc.). Combining multiple keys in a query enables cohort creation.

At the genetic level, we indexed data from variant call format (VCF) files to store and query genetic variants. We developed five sub-indexing schemes called Variant view, Person view, Gene view, Minor Allele Frequency (MAF) counter and Analysis view. Within the Variant view, we log all genetic variants into streams binned and indexed by their genomic coordinate. Users can extract patients’ genotypes for any variant using its genomic location. Patient view inserts all variant data for a patient into one stream, enabling fast retrieval of a patient’s genome. Gene view records the variants associated with specific genes, biological information about the gene and clinical and other annotations from ClinVar28, variant effect predictor (VEP) score29 and combined annotation-dependent depletion (CADD) score30. In doing so, gene view acts as a comprehensive repository of a variant’s known biological function and clinical impact. MAF counter is the most dynamic view of this level. It records the MAF values of the variants and is automatically updated as more patients are added to the network. We overcame the issues with immutability of stored data by timestamping the MAF values. System uses the MAF value with the most recent timestamp. In Analysis view, we recorded information necessary to conduct analysis, including sequencing and genotype calling metadata, principal components (PCs) for population stratification and variants needed to calculate kinship among samples.

A key aspect of controlled access is secure storage and query of audit logs to check for potential misuse. At the access logs level, whenever a researcher subscribes to the network or performs any kind of query or analysis, the information is automatically logged with a timestamp and the user’s wallet address. This creates an immutable record of use. Notably, the record is highly granular and can be searched in multiple ways. First, it is possible to determine exactly which records were viewed by a specific user. Second, it can return all user queries that returned a specific data point. The former is key for Health Insurance Portability and Accountability Act (HIPAA) audits, and the latter allows contributors to determine exactly how their data have been used. One key benefit of PrecisionChain is that the audit logs (and any other data on the chain) are immutable. This makes unauthorized alterations virtually impossible and ensures that any attempt to tamper with records is easily detectable.

One of the unique aspects of PrecisionChain is the ability to perform multimodal queries on the data. Query modules take advantage of the nested indexing scheme and mapping streams to efficiently retrieve information used for building cohorts and examining relationships (Fig. 2a). We provide the following queries in real time: (1) domain queries, such as pulling all patient IDs for individuals diagnosed with a particular disease; (2) patient queries, such as pulling all laboratory results for a single patient; (3) clinical cohort creation, based on any combination of clinical concepts, concept values, demographics and date ranges; (4) genetic variant queries, such as pulling all patient IDs that have a specific disease-causing variant; (5) patient variant queries, such as pulling the genome of a patient; (6) MAF queries, such as pulling all patients who have rare or common variants (that is, querying variants with a MAF threshold); (7) gene queries, such as pulling the IDs of all patients who have a disease-causing variant associated with a specific gene of interest; (8) variant annotation queries, such as retrieving all patients with variants annotated as pathogenic; (9) genetic data harmonization queries, such as extracting all samples aligned and processed using the same analysis pipeline; (10) kinship assessment queries, such as determining relatedness between samples in a cohort; (11) genetic cohort creation based on any combination of genetic variants, MAFs and sequencing metadata; (12) combination EHR and genetic cohort creation using both clinical and genetic logic gates (for example, all the patients with variant X and disease Y; Fig. 2b); and (13) combination EHR and genetic queries to identify genotype–phenotype relationships within a cohort (for example, presence of rare variants in a particular gene for patients with disease X compared to controls; Fig. 2c). See Extended Data Fig. 1e, Extended Data Table 1, Table 1 and Methods for more details on indexing and querying. Note that, due to the immutable nature of blockchain technology, the entries cannot be altered. Therefore, our system was designed to return queries with the latest timestamp if multiple entries exist.

a, Mapping stream indexing. Based on the users’ query, search keys are directed to the appropriate stream. A mapping stream is created for every view. Entries in the mapping stream follow a Key:Value structure (Key is the user’s input; Value is the stream where the data are stored). b, Cohort creation. Users input desired clinical characteristics, genes of interest and a MAF filter into the search function. Using the EHR-level ‘Domain view’, patient IDs for those that meet clinical criteria are identified. Using the Genetic-level ‘Gene, MAF counter and Variant views’, the appropriate variants are identified, and patient IDs with those variants are extracted. A set intersection of the two cohorts is done to create a final cohort, which can be analyzed further. c, Genotype–phenotype relationships. Users input variants of interest into the search function. Using the Genetic-level ‘Variant view’, IDs for patients with that variant(s) are extracted. All diagnoses for each patient are retrieved using the EHR-level ‘Person view’. The strength of relationship between each SNP and condition can be examined. ‘Gene view’ can give further information on what genes are carrying the variants, linking the clinical information to detailed genetic information (chr, chromosome; pos, position).

PrecisionChain provides security while being scalable

To showcase the value of our framework for precise cohort building and analysis, we simulated a data-sharing network consisting of clinical and genetic data for 12,000 synthetic patients. For clinical data, we used the Synthea patient generator to create EHR data in OMOP CDM format (Methods). Synthea has been shown to produce comprehensive and realistic longitudinal healthcare records that accurately simulate real-world datasets31,32. For genetic data, we simulated samples using 1000 Genomes Project (1000GP) individuals as reference (Methods)33,34. To reflect the technical considerations of genetic data sharing, we also assigned sequencing metadata to each sample (for example, sequencing machine, sequencing coverage, alignment pipeline and variant calling pipeline). We used the simulated network to showcase network functionality and the utility of combining genetics and clinical data in one infrastructure. For example, it is possible to build a cohort of patients who have a diagnosis of diabetes, take metformin and have a rare variant in the SLC2A2 gene, which is known to influence metformin response35. In this example, the availability of genetic and clinical information allows for more targeted cohort creation. See Table 1 for a full list of available query types.

A major challenge with blockchain technology adoption is that end-users are not experts in distributed systems or cryptography. We developed a user-friendly front-end graphical user interface (GUI) for researchers to access the network, query data and run analysis via an interactive Jupyter Notebook (https://precisionchain.g2lab.org; username: test@test.com, password: test-ME). Researchers sign into the system with their username, and, in the back end, the blockchain recognizes the wallet addresses associated with the username and grants access. Users see drop-down menus and search windows in the front end, and all executions are performed over the blockchain network in the back end. For analysis, users can leverage one of the predefined functions in our query and analysis scripts or build new ones using the MultiChain command line interface (CLI) (Extended Data Fig. 2). We hope to increase adoption by abstracting away knowledge of blockchain algorithms while users are querying and analyzing data.

We then developed an ‘on chain’ analysis pipeline that can be used for a number of association studies for both common and truly rare genetic diseases, including genome-wide association studies (GWASs) and the classification of variants of uncertain significance (VUS) (see Methods for details). To illustrate the network’s analysis capabilities, we provide an example script to conduct a GWAS (can also be spinned as a Jupyter Notebook in the GUI), which uses built-in functionality to perform sample quality checks, harmonize genetic data from multiple sources, build analysis cohorts and extract relevant genetic, phenotypic and covariate information (Fig. 3). We provide security by enabling all analysis scripts to be run on the nodes of PrecisionChain to ensure that users cannot download patient data locally. Key to this analysis pipeline is the Analysis view, which facilitates genetic data harmonization, assessment of relatedness between samples and population stratification. For genetic data harmonization, we recorded technical metadata, such as sequencing machine, sequencing protocol, coverage, alignment pipeline and variant calling pipeline. Users can filter for samples based on any combination of metadata, enabling post hoc correction for batch effects. Although this approach mitigates much of the bias36,37, it does not completely eliminate all bias arising from analyzing data derived from different sources or variant calling pipelines. This issue is not unique to decentralized platforms but is a major challenge that large-scale genetics studies face. The research community suggested solutions, such as whole-genome sequencing (WGS) data processing standards that allow different groups to produce functionally equivalent results36 or iterative joint genotyping38. Owing to PrecisionChain’s modular build, these solutions can readily be adopted.

All relevant data are first inserted into the chain, including genetic and clinical data, sequencing metadata and population stratification PCs. Variant data are passed through a QC script before insertion. Filtering. Sequencing metadata are queried and filtered to extract patients who can be analyzed together. Patient relatedness is also assessed, and only unrelated samples are included in the final cohort. Extraction. Relevant phenotype, genotype and covariate information for the cohort is retrieved. Analysis. The data are analyzed and results are returned to the user.

We used the National Center for Biotechnology Information (NCBI)’s Genetic Relationship and Fingerprinting (GRAF) protocol used in the database of Genotypes and Phenotypes (dbGaP)’s processing pipeline for kinship assessment39. We stored the kinship single-nucleotide polymorphisms (SNPs) used by GRAF in the kinship stream. For a given query of person IDs, one can extract the patients’ kinship SNP genotypes and calculate the kinship coefficient. For population stratification, we projected every patient’s genotype onto the PC loadings from the 1000GP and stored the top 20 PC scores for each patient. Although we use 1000GPʼs PCs to showcase this functionality, any reference population panel can be used. Notably, we calculated the PCs using an unbiased estimator shown to limit shrinkage bias40. Note that, for the purpose of a GWAS, these population stratification covariates are nuisance parameters—that is, their exact values are not essential as long as they correct for the population stratification (see Extended Data Fig. 3 for an empirical comparison)41.

Blockchain technology has several inherent challenges, including large storage requirements, high latency and energy inefficiency. We addressed the storage requirements by organizing entries for both genetic and EHR data by clinical concept, genomic coordinate or person ID. This allows us to consolidate multiple related data points into a single entry and minimizes the storage overhead associated with making a unique entry. Figure 4a shows the total storage requirements (log[mb]) for the raw files and a single-node blockchain network with sample sizes between one and 12,000 patients. Total storage grows sublinearly for the blockchain network but remains higher than the raw files at all sample sizes, as expected. Figure 4b shows the growth rate in storage requirements, with values presented as a ratio to the storage costs of a network with a single patient. The growth of data storage within the blockchain network is slower compared to that of the raw files. Compared to a blockchain network with a single patient, a network with 12,000 patients requires only 245 times the storage. Next, we examined storage requirements as the number of nodes in the network increases. Extended Data Fig. 4 shows how storage costs of a network with 100 patients varies as the number of nodes increases from one to 16. We found that, as the number of nodes in the network increases, total data storage requirements at each node decrease. This is due to MultiChain’s inherent stream indexing property, which requires only the nodes pushing data onto the stream to hold full data copies and allows other nodes to store hashed references of the data. This process maintains data integrity on the blockchain without necessitating every node to store all the data42.

a, Total data storage. Total data storage requirements (log[mb]) for the raw files and blockchain network at 100, 1,000, 2,000, 4,000, 8,000 and 12,000 patients. b, Storage growth rate. Growth rate in network storage requirements. Values are expressed as a ratio to storage requirements of a single patient network (baseline). c, Query time by query type (in log[s]). Query times broken down by query type. d, Analysis time by analysis type (in log[s]). Analysis times are broken down by analysis type.

To overcome high latency, we implemented an efficient indexing structure. Under each view, we binned the data into fixed sizes to create separate hash tables (that is, streams) from each bin, which allows the upper bound of query times to be proportional to the size of these hash tables. We then used a mapping stream to direct each query to the appropriate stream (Fig. 2a). We showed that query times increase linearly with the size of the stream rather than the full network. In Fig. 4c,d, we show the query and analysis times by network size. We found that, for most queries, query times remain constant after 4,000 patients, which is the point at which streams reach their maximum size. We showed that our platform’s query time is around 61 s (6.7 s per query), and analysis time is around 79 s (26.3 s per analysis). Note that, as the size of the network increases, query times may still increase as there is additional latency independent of stream size, including number of streams, size of the mapping stream and blockchain consensus mechanism. However, our empirical evaluations show that latency is dominated by stream size (Fig. 4c).

We addressed energy inefficiency by using a proof-of-authority (PoA) consensus mechanism whereby any institution with write access can insert data into the blockchain without approval from other nodes. This differs from proof-of-work (PoW), as used in Bitcoin, where 51% consensus is needed10. PoA drastically reduces the computational work on the network by a factor of 108 when compared to a public PoW network43. PoA is only possible within networks consisting of trusted entities. In our design, the hospitals and institutional nodes, which possess ‘write’ permissions, are the trusted entities, whereas the researchers with ‘query’ permission may not fall into this category and do not need to be fully trusted. Strict verification and access controls can be enforced on researchers. Although this verification requirement exists for any data-sharing network, the benefit of PrecisionChain is that institutions retain data sovereignty, setting their own access criteria and tracking use via the audit trail, allowing easier tracing of malicious actors.

Another challenge is the availability of the data on a blockchain to all nodes, which may not be desirable due to privacy concerns. To mitigate this, we propose a selective data masking system, which is composed of encryption of selected data, creating streams that contain sensitive data open only to select users and restrictions on querying. In addition, limits on user access can be implemented to minimize risks from excessive data exposure. These are available features in the API that can be readily adopted into PrecisionChain. See the Methods for the proposed selective data masking and user access controls.

PrecisionChain can identify genotype–phenotype insights

We replicated an existing GWAS from the UK Biobank dataset44 by storing data and performing computations on PrecisionChain. This study sought to identify genetic variants associated with coronary artery disease (CAD) among patients with type II diabetes mellitus (T2DM). We used this study because of its use of complex phenotyping algorithms and the large sample size involved45. We showed that we closely match the cohort size of the original study (Extended Data Table 2) and are able to streamline the cohort creation process (that is, rule-based phenotyping) by leveraging our indexing structure. Notably, we successfully replicated all of the significant lead variants identified in the original study, including rs74617384 (original odds ratio (OR) = 1.38, P = 3.2 × 10−12 versus our OR = 1.37, P = 2.6 × 10−12) and rs10811652 (original OR = 1.19, P = 6 × 10−11 versus our OR = 1.20, P = 5.3 × 10−13). We next showed that PrecisionChain minimizes the need to query multiple databases with distinct file formats (Extended Data Table 3). We also compared β coefficients and P values obtained performing GWAS on PrecisionChain compared to using the standard software, PLINK46 (Fig. 5a,b). We showed that there is excellent agreement between the two methods (Pearson correlation > 0.98, P < 0.05). For more details, see Methods and Extended Data Fig. 5a,b.

a, Effect size coefficient agreement between UK Biobank (UKBB) GWAS results from PLINK and PrecisionChain. Two-sided t-statistics were used. No multiple hypothesis testing was involved. b, P value agreement between UKBB GWAS results from PLINK and PrecisionChain. Two-sided t-statistics were used. No multiple hypothesis testing was involved. c, Manhattan plot for variants with P < 5 × 10−2 in ALS GWAS. Variants are ordered by genomic coordinates. y axis is the −log(P value). Dashed lines represent the significance line (5 × 10−8) and the suggestive line (5 × 10−6). Two-sided t-statistics were used with standard GWAS Bonferroni correction for multiple hypothesis testing with a cutoff of P < 5 × 10−8. d, Statistical strength of signal by the number of sites included in the ALS GWAS. Plot showing the signal strength (−log(P value)) for variant as a function of the number of sites (and sample size in parentheses) participating in the network. This variant is located on the FAM230C gene. Variant is labeled by rsID. Two-sided t-statistics were used with standard GWAS Bonferoni correction for multiple hypothesis testing with a cutoff of P < 5 × 10−8.

We next assessed the utility of our data-sharing infrastructure to support discovery of genotype–phenotype relationships in a rare disease, ALS. We used data from the NYGC ALS Consortium that consists of 26 institutions and 4,734 patients. We conducted a GWAS to find significant associations between genotypes and site of onset (bulbar versus limb) and repeated this analysis using a replication cohort from the Genomic Translation for ALS Care (GTAC) dataset, which contains 1,340 patients collected from multiple sites47.

We identified one locus, 13q11 (lead SNP rs1207292988), associated with site of onset at a significance threshold of P < 5 × 10−8 that was successfully replicated on the GTAC dataset (Fig. 5c, Extended Data Fig. 5c and Extended Data Table 4). Extended Data Table 4 also contains details of the additional significant variants in 13q11 that were pruned for being in linkage disequilibrium (LD) (R2 > 0.5). The significant and replicated variant is located in the FAM230C gene. Although FAM230C, a long non-coding RNA (lncRNA), has not been previously implicated in ALS, there is growing evidence for the role lncRNAs in ALS48. For details on all significant and suggestive variants, categorized by replication status on the GTAC dataset, see Extended Data Table 4. We again compared results to a central GWAS conducted using PLINK and found an excellent agreement (Pearson correlation > 0.99) for both effect size coefficients and P values (Extended Data Fig. 6).

To assess the importance of a data-sharing network, we repeated ALS GWAS by varying the number of sites included in the network. We ordered sites by sample size contribution and iteratively added them to the analysis. This allowed us to determine the minimum number of sites required to meet our significance threshold. Note that we consolidated all sites with fewer than 50 patients into one bucket called ‘other’. We showed that a significant P value can be achieved only after data from all sites are included. We observed a linear relationship between the number of sites and −log10(P value), indicating that patients from all sites contribute to the results (Fig. 5d). This highlights the need for a formal data-sharing infrastructure in precision medicine, especially in the context of rare diseases.

Discussion

We present PrecisionChain, a data-sharing platform using a consortium blockchain infrastructure. PrecisionChain can harmonize genetic and clinical data, natively enable genotype–phenotype queries, record user access in granular auditable logs and support end-to-end robust association and phenotyping analysis. It achieves these while also storing all data on the blockchain (see Methods for the benefits of storing data ‘on chain’). We think that PrecisionChain can be used by precision medicine initiative networks to store and share data in a secure and decentralized manner. To achieve this framework, we developed three key innovations: a unified data model for clinical and genetic data, an efficient indexing system enabling low-latency multimodal queries and an end-to-end analysis pipeline specifically designed for research within a decentralized network.

Despite the adoption of blockchain technologies being in its infancy, we think that it can be a solution that overcomes many limitations of current data-sharing frameworks. A blockchain has inherent security safeguards and can cryptographically ensure tightly controlled access, tamper resistance and record usage10,11. These safeguards enable data provenance, increase transparency and enhance trust11,13,15. We enable this not only for storage of the data but also for performing computations ‘on chain’. This helps sovereignty and protection of the use of sensitive health data, particularly of marginalized groups49,50. We extend the inherent safeguards by introducing a granular audit system that can reliably track use. It provides a log of user activities, including records of when specific data were accessed with exact timestamps and which queries returned a specific data point. Access to this detailed and immutable log can support security audits necessary for regulatory compliance (for example, HIPAA compliance) as well as investigations into data misuse. The audit logs can be used to implement stricter access and verification controls on users and also allow greater supervision from patients and communities into how their data are stored and used for research purposes. This can help operationalize equitable data sharing, a key priority of any precision medicine research program49,51.

The flexible nature of the ledger technology allows a wide range of data types from different sources to be stored under one network. This reduces the processing tools needed by researchers to examine clinical and genetic data together. An added benefit of this flexibility is that data collection can be extended to capture patient-reported and clinical trial outcomes data, in effect making the network a research repository for any healthcare-related data52. By enabling conversion of the widely adopted file formats for clinical and genetic data, we hope to further promote semantic interoperability across health systems—a major barrier in biomedical informatics53. In addition, it opens the possibility of integrating the architecture directly into clinical practice, with all data collected on a patient available to any relevant institution and provider, irrespective of geographic location, in a more secure manner.

Data quality poses an inherent challenge in distributed networks. We proactively address this by integrating established pre-processing and data insertion safeguards with innovative blockchain-based solutions for data extraction. For clinical data, we employ Observational Health Data Sciences and Informatics (OHDSI)’s suite of tools to ensure that quality standards are met, which can be customized and automated at the point of insertion. For genetic data, we implemented a standard quality control (QC) process, address site-specific sequencing quality issues and embed advanced filtering techniques within the platform. Given the flexible nature of PrecisionChain, it is possible to integrate additional checks from external databases, such as the Genome Aggregation Database (gnomAD). Additionally, the immutable audit trail can help to identify and understand the sources of data quality issues, expose hidden patterns affecting data quality and facilitate targeted interventions.

Despite the many benefits of blockchain, it is still a nascent technology. Storing and querying large-scale data remains challenging due to inherent storage redundancy, transaction latency and a lack of data structures for flexible querying10,54. Although the former two ensure security guarantees, they also increase computational overhead. We think that decentralized control and security safeguards make this an acceptable tradeoff, especially given the increasing compute power available to institutions. In designing PrecisionChain, we balanced optimizing for both storage and query efficiency. We prioritized querying efficiency with a nested indexing system, as this has a greater impact on network functionality and user experience. Compared to a traditional system, our network offers more flexible multimodal queries but is less storage efficient. However, this deeper indexing can also slow down data insertion. We anticipate insertion to be planned on a monthly or quarterly basis once data have been transformed and quality checked. This update cadence is in line with standard institutional research data warehouse practices and can minimize QC concerns55,56. As our system has a slower increase in storage costs, we anticipate that the gap in costs should decline as the network grows. On-chain analysis also adds to the overhead. Thus, PrecisionChain strategically divides tasks between off-chain and on-chain processing. On-chain processing is reserved for tasks requiring data from multiple sites.

The data on a blockchain are available to all nodes, which may not be desirable due to privacy concerns. Although our platform was designed to be used in trusted consortium settings, we also propose a data masking system that can be readily adopted into our framework (see Methods, ‘Selective data masking’). In addition, limits on user access can be implemented to minimize risks from excessive data exposure (see Methods, ‘User access controls’). Moreover, through the platform’s control parameters, patient and research communities can exert direct control over data access and query rights for their community. For example, it is possible to manage data access via cryptographic tokens assigned to users’ wallets, revoking them after a certain time or after a specific use has been achieved.

Overall, PrecisionChain lays the groundwork for a decentralized multimodal data-sharing and analysis infrastructure. Although other decentralized platforms exist, they focus on either clinical or genetic data and not on a combination (see Methods for existing blockchain solutions)11,57,58,59,60. A unique advantage of PrecisionChain is the potential to implement trustless QC mechanisms and have a transparent analysis workflow. This allows for ‘methods-oriented’ research where workflows and findings can be easily verified36. In unifying multimodal data, we anticipate a growth in the discovery of more genotype–phenotype relationships that will translate into improved care. We hope that by enabling secure multimodal data sharing with decentralized control, we can encourage more institutions and communities to participate in collaborative biomedical research.

Methods

The authors confirm that research conducted in this study complies with all relevant ethical regulations. The NYGC ALS Consortium samples presented in this work were acquired through various institutional review board (IRB) protocols from member sites transferred to the NYGC in accordance with all applicable foreign, domestic, federal, state and local laws and regulations for processing, sequencing and analysis. The Biomedical Research Alliance of New York (BRANY) IRB serves as the central ethics oversight body for the NYGC ALS Consortium. Note that the BRANY IRB determined that the request for waiver of informed consent satisfies the waiver criteria set forth in 45 CFR 46.116(d).

We designed PrecisionChain using the blockchain API MultiChain, a framework previously used for biomedical applications24,61. MultiChain’s ‘data streams’ feature makes it possible for a blockchain to be used as a general purpose database as it enables high-level indexing of the data. The data published in every stream are stored by all nodes in the network. Each data stream consists of a list of items. Each item in the stream contains the following information as a JSON object: a publisher (string), Key:Value pairs (from one to 256 ASCII characters, excluding whitespace and single/double quotes) (string), data (hex string), a transaction ID (string), blocktime (integer) and confirmations (integer). When data need to be queried or streamed, they can be retrieved by searches using the Key:Value pairs. Publishing an item to a data stream constitutes a transaction (see below for a primer in MultiChain).

PrecisionChain is a permissioned blockchain where network access is limited to consortium members, and each joining node requires a token from validators (see below for a primer in blockchain). As it is a semi-private blockchain, we use a PoA consensus mechanism, whereby any trusted node can validate transactions (for example, data insertion and token access). Because all data insertion is made public to the consortium members, validating nodes are incentivized to maintain their reputation via accurate and timely data sharing. Once verified and joined, an institution can subscribe to and query the network as well as contribute data. Auditing is set up by querying the audit logs such that institutions receive a summary of their own usage and usage of their contributed data at regular intervals, but they are also able to query the usage in real time using audit logs level. We used the free version of MultiChain version 2.3.3 and Python version 3.6.13 in all implementations. VCF files are analyzed using BCFtools version version 1.9. Plaintext GWAS, including QC, was performed using PLINK version 1.90.

We developed three modules: buildChain, which creates a new chain; insertData, which inserts data into the streams and contains createStream, which creates the indexing structure for efficient data storage; and queryData, which enables multimodal queries.

Module 1: buildChain

buildChain initializes PrecisionChain, including runtime parameters and the access rights for all nodes joining from initialization (that is, read and write access). The default runtime parameters and node rights can be changed before initializing PrecisionChain.

Module 2: insertData

insertData creates the streams that are hierarchically indexed and inserts the data into the appropriate stream. For all views, we first created a mapping stream. The mapping stream records how data in the view have been indexed, including what is contained within each stream. insertData has nine submodules: createStream-Clinical, createStream-Variant, createStream-StructuralVariant, createStream-GTF, insertData-Clinical-Domain, insertData-Clinical-Person, insertData-Variant, insertData-Variant-Person and insertData-GTF. To ensure query efficiency, we fixed the maximum size of each stream. Once a stream exceeds this size, a second stream is created for additional data. Streams are then labeled with a bucket number to record the order of insertion.

Mapping streams

Extended Data Table 1 describes how we record indexing in the mapping stream for each view. The mapping stream has a Key:Value pair for each entry. The Key is the entity being queried (that is, variant or concept ID), and the Value is the name of the stream holding that information. This mapping is automatically assigned during insertion. Once a variant or concept is added to a certain stream, all instances of that entity will be added to the same stream.

EHR level

We inserted all standardized clinical data tables included in the OMOP CDM to the blockchain network. The OMOP CDM harmonizes disparate coding systems into a standardized vocabulary, increasing interoperability and supporting systematic analysis across many sites26,27,62. We took an approach that maximizes the efficiency of querying these data. We created two views: Domain and Person. Domain view contains data streams organized by concepts. Concept IDs are binned using the OMOP vocabulary hierarchy, with a group of related concepts assigned a separate stream. To achieve this, we selected a number of high-level OMOP concepts as ancestor concepts (for example, cardiovascular system, endocrinology system, etc.) and created a stream for each. Then, every remaining (child) concept is assigned to a unique ancestor concept (stream). This assignment is based on the first ancestor concept that subsumes the child concept in the OMOP vocabulary. To accommodate multiple inheritance, if a child concept belonged to multiple ancestor concepts, it was assigned the closest ancestor, and this assignment was recorded in the mapping stream. If one of the child’s other concept ancestors is queried, the mapping stream directs the query to the appropriate stream. This means that the assignment to an ancestor stream is purely for indexing purposes. As an illustrative example, imagine that ‘Hepatic failure’ (code 4245975) is queried. First, all of its descendants are extracted from the vocabulary, including ‘Hepatorenal syndrome’ (code 196455), which is stored under a different concept ancestor. The mapping stream is queried for ‘Hepatorenal syndrome’, and the concept ancestor ‘Disorder of kidney and/or ureter’ (code 404838287) is returned. The stream ‘Conditions_04838287’ is then searched to retrieve the relevant data for ‘Hepatorenal syndrome’. Using the vocabulary hierarchy ensures that related concepts are grouped together. On average, this would limit the number of streams searched in a single query, improving query times. In the patient view, we created a stream for a group of patients bucketed by patient ID ranges. Each stream includes the entire medical record of a patient in the stream, thereby enabling fast querying of all records from the same patient. As each stream contains data entries of different domains, it can be viewed similarly to a ‘noSQL’ database in its flexibility. Mapping stream keeps a record of which stream contains each patient.

Genetic level

We inserted genetic variants (SNPs, small insertions and deletions (indels) and structural variants (SVs)) and the information on the genes that overlap with these mutations. The genetic variant data are in VCF format, and the information on genes is in general transfer format (GTF). Before insertion, variant data are passed through a QC script to ensure that all inserted variants are suitable for analysis. We included five views: Variant, Person, Gene, Analysis and MAF counter. A mapping stream that records high-level indexing is also added. The mapping stream includes the stream that each variant, patient or gene is stored. Within the Variant view, we logged all genetic variants in the population into streams indexed by their genomic coordinate. That is, the genome is divided into discrete bins with each bin corresponding to a specific genomic coordinate range, and a stream is created for each bin. The exact genomic coordinate of the variant, alternative and reference allele and the genotype are included as keys for every entry, such that they are queryable. The data field for each entry includes the patients (person IDs) carrying the genotype in each entry. As such, each variant may have multiple entries, one for each genotype (genotype = 0, 1 and 2). Patient view creates a stream per patient and includes all heterozygous and homozygous alternative allele variants (genotype = 1 and 2) for the patient. To reduce storage requirements, homozygous reference alleles (genotype = 0) for a patient are not stored but are automatically recreated when queried because any variant in the network that was not logged for a specific patient is a homozygous reference for that patient (if the sequencing technology assayed that variant). Gene view stores the structural annotation of the variants, such as whether they overlap with different parts of a gene (for example, exons, introns and untranslated regions (UTRs)). Gene view also includes clinical and biological annotations from ClinVar, VEP and CADD, providing information on the pathogenicity and functional impact of the variants. Analysis view records information related to genetic analysis, including sequencing metadata, population stratification PCs and variants needed to calculate kinship among samples. The MAF counter records the MAF values of the variants and is automatically updated as more patients are added to the network. Because the blockchain is immutable, a new item with the updated MAF values is pushed with each insertion. During the insertion, our algorithm checks the timestamp of the existing entries to determine which MAF stream item is the most recent and accurate. It then extracts the relevant information (that is, sample size and allele frequency for each variant) and combines this with data from the current insertion to determine the new MAF. This is then inserted into the MAF counter stream.

Access logs

We created an access log view, a queryable stream that stores information on each subscription to the network and any query run on the network. An entry is inserted into the stream after an activity on the network. By inserting this into the blockchain, we created an immutable log on the blockchain that can be interrogated. We indexed and stored the type of query, the type of data (EHR or genetic), timestamp of the activity and user wallet ID. Each transaction (that is, query) is stored as a single item. The access log can be queried in real time, acting as an alert system for any potential misuse.

Submodule

createStream-Clinical

Creates streams for clinical data using the OMOP CDM format. For the domain view, each data table in the OMOP clinical tables database is a domain type for which streams are created. Each domain type has its own unique set of streams. Streams are created using a predefined stream structure based on ancestor concepts in the OMOP vocabulary. We selected a number of high-level concepts as ancestor concepts. These represent a broad clinical category (for example, cardiovascular system, endocrinology system, etc.). We assigned approximately 200 concepts as ancestor concepts, and, for every ancestor concept, we created a stream. Each stream follows the naming convention DOMAIN_ANCESTORCONCEPTID. For example, CONDITIONS_436670 represents all metabolic disease concepts, including diabetes (436670 is the concept ID for metabolic diseases). All known concepts are either covered under an ancestor concept ID or placed under the stream DOMAIN_0 (if no ancestor is found in the hierarchy). For the patient view, a stream is created for each group of patient IDs with the format CLINICAL_PERSON_IDBUCKET. For example, CLINICAL_PERSON_1 is the stream for ID bucket 1 (the mapping stream also contains a list of person IDs included in bucket 1). Using the full range of patient IDs (that is, minimum and maximum possible ID value), we create 20 uniformly sized buckets. Note that this range can be calculated automatically using the data or inputted by the user.

createStream-Variants

Creates streams based on genomic coordinates of genetic variants. This follows a predefined structure of CHROMOSOME_START_END. For example, 9_1_3000 includes all variants between positions 1 and 3,000 in chromosome 9. Person view streams are created in the same way as in createStream-Clinical.

createStream-GTF

Creates streams based on genomic coordinates of the genes. This follows a predefined structure of GTF_CHROMOSOME_START_END. For example, GTF_9_1_3000 includes all gene annotations between positions 1 and 3,000 on chromosome 9.

insertData-Clinical-Domain

Inserts tabular clinical data using the OMOP CDM format in the Domain view. Clinical data are added to the clinical domain streams (created via createStream-Clinical). Concept IDs are added to the mapping stream for all unique concept IDs. Each clinical table is a unique domain and has an exclusive set of streams to which data are added. To index concepts, we assigned every child concept (not an ancestor concept) to a unique ancestor concept (stream). This assignment is recorded in the mapping stream and is based on the OMOP vocabulary, so child concepts are assigned to ancestor concepts that subsume them in the hierarchy. Note that a predefined set of ancestor concepts is used to create the streams. Each entry in a stream represents a row of tabular data. This functionality allows querying with the following keys: Concept ID, Person ID, Year, Month-Year, Day-Month-Year and Value (if applicable).

insertData-Clinical-Person

Inserts tabular clinical data using the OMOP CDM format in the Person view. Clinical data are added to the clinical person streams, and patients (person IDs) are inserted into the mapping stream. Each person stream contains all medical record information for a patient irrespective of domain. This functionality allows querying with the following keys: Concept ID, Concept TYPE (the domain of the concept (for example, medication)), Person ID, Year, Month-Year, Day-Month-Year and Value (if applicable).

insertData-Variant

Inserts variant data using the VCF file format. Genetic variants are added to the variant streams, and the coordinates of these variants are added to the mapping stream. Each entry is a unique variant–genotype combination. That is, one genetic variant can have multiple entries, one for each genotype (for example, if, for a given variant, patients can have one of three genotypes (that is, 0, 1 or 2), then three separate entries are made). This functionality allows querying with the following keys: position, reference allele, alternative allele, genotype, sample size and MAF. Data for each entry then contain all patients (person IDs) with that particular variant–genotype combination. With each insertion, MAF is recalculated and inserted into the MAF counter stream.

insertData-Variant-Person

Inserts variant data using the VCF file format. Genetic variants are added to the Variant-Person streams, and patients (person IDs) carrying these mutations are added to the mapping stream. Each entry contains all genetic variants and associated information for a patient across a range of genomic coordinates (for example, Chr 1, Positions 1–300). Keys include person ID and genomic position start and end. Data for each entry are then a JSON entry with the genotype of the genetic variant. Note that only homozygous and heterozygous alternative alleles are stored to save space. With each insertion, MAF is re-calculated and inserted into the MAF counter stream.

insertData-GTF

Inserts details of genes overlapping with the genetic variants using GTF file format. Data are added to the GTF streams, and the gene IDs are added to the mapping stream. Each entry is a row from the GTF file and contains information for a particular gene. Keys include gene ID and gene feature (for example, intron, exon, transcript, etc.). Data are a JSON object and include gene start, gene end, gene type and strand.

insertData-Analysis

Inserts data necessary for genetic analysis. This includes sequencing and technical analysis metadata, SNPs used to calculate kinship using NCBI’s GRAF protocols and sample PCs for population stratification. Sequencing metadata entries are grouped by metadata such that all patients with a certain metadata are included in the same entry (for example, all patients sequenced by Oxford Nanopore PromethION are grouped under a single entry). Keys for the entry include the metadata type (for example, sequencing machine, variant calling pipeline, etc.) and the specific metadata itself (for example, Illumina NovaSeq 6000 and Genome Analysis Toolkit (GATK)). Data for kinship and population stratification are added per sample. This means that each patient will have a unique entry with their specific data. Data can then be extracted using sample IDs.

Module 3: queryData

We developed the queryData module to extract information for downstream analysis. To support efficient and multimodal querying, we leveraged our indexing schema and mapping streams for this module. By indexing all data types into distinct but related streams and using mapping streams to identify the appropriate streams, we reduced the query time significantly and enabled combination queries15. Our query module uses the Key:Value property of stream items to retrieve data from a chain based on a range of defined keys, including concept IDs, concept values, person IDs, dates, genomic locations, genotype, MAF, sequencing metadata and genes involved. When a user queries the chain, they first specify the query type (clinical, genetic or combined); our query module then finds the correct streams/bins based on the query information. This is achieved through querying the mapping streams, which contain a record of the data stored in each stream. Once the appropriate stream is identified, the module extracts the data, performs computation if necessary and returns the relevant information (see Extended Data Fig. 1e for more details). Table 1 describes all the available query functionalities. Overall, queries can be clinical (queryDomain, queryPerson), genetic (queryVariant, queryVariantPerson, queryVariantGene) or a combination of the two (queryClinicalVariant, queryVariantClinical). To increase flexibility and speed of the queries, we included multiple views with distinct indexes optimized for querying.

Three main query types are possible in the query module: clinical, genetic and combination. Multiple key searches are possible in each query using any of the keys included with an entry. For all queries, the mapping stream is first checked to determine the relevant streams to search. After each query, a call is made to the audit module to record the activity.

queryDomain

This functionality allows for a clinical query based on OMOP concept ID, date of concept ID occurrence and/or concept ID value. For each concept ID in the query, the mapping stream is searched to check for the appropriate stream, where data for that concept are stored. If the concept subsumes child concepts, these are also identified in the mapping stream and searched. Then, the relevant streams are searched with the queries, and the relevant patients (person IDs) are returned. It is possible to create cohorts by specifying multiple concept IDs, values and date ranges.

queryPerson

This functionality allows for a clinical query based on person ID, concept type (for example, diagnosis or test), concept ID, date of concept ID occurrence and/or concept ID value. The mapping stream is searched to determine which stream holds the patient’s data. All clinical data in that stream that meet the criteria (for example, person ID, concept type, date and value) can be returned. It is possible to specify a particular concept ID or concept type to return (known as the searchKey). If this is provided, then only data of that type or ID are returned.

queryVariant

This functionality allows for a genetic query based on the variant of interest’s genomic coordinate, genotype and MAF (optional). For each variant included in the query, the mapping stream is searched to determine the appropriate stream that holds data on that coordinate. The genomic coordinate and genotype are then searched in that stream, and patients (person IDs) with the variants of interest are returned. If a MAF range is inputted, variants outside that MAF range are filtered out.

queryVariantPerson

This functionality allows for a genetic query based on person ID, genetic coordinates (optional) and a MAF filter (optional). The person mapping stream is searched to identify which stream contains the person’s genetic data. Once found, that stream is then searched for the queried genetic coordinates, and the relevant variants are returned. If a MAF range is inputted, variants outside that MAF range are filtered out.

queryVariantGene

This functionality allows for a genetic query based on a gene of interest, genotype and MAF range (optional). For each gene (based on gene ID) included in the query, the mapping stream is searched to determine the appropriate stream to check. That stream is searched for variants that are associated with the gene. For each variant, patients (person IDs) with the queried genotype are returned. If a MAF range is inputted, variants outside that MAF range are filtered out.

queryAnalysis

This functionality enables cohort creation suitable for genetic analysis. This includes harmonizing samples across sites by accounting for differences in sequencing metadata, removing related samples from the cohort and extracting population stratification PCs. Metadata queries return all patient IDs that meet the search criteria. The criteria can include any number of metadata (for example, all patients with WGS on Illumina machines with at least 30–60× coverage depth and that were aligned using BWA63, and variants were called using GATK64). Kinship queries accept a list of patients and return a matrix with the pairwise kinship coefficients between all patients. To do this, all kinship SNPs for the cohort are extracted, and the kinship coefficient is then calculated similarly to GRAF39. GRAF is a tool developed by the NCBI specifically to support processing in dbGaP, a large database with phenotype and genotype data collected from multiple sources. It enables kinship calculation on any subset of samples given that they contain a limited number (10,000) of independent and highly informative SNPs39. Population stratification accepts a list of patient IDs and returns their PC scores. A user-defined value k is used to determine how many PC scores should be returned per patient.

queryClinicalVariant

This functionality allows for a ‘combination’ query based on clinical characteristics, genes of interest, genotype (optional) and MAF range (optional). It returns a cohort (person IDs) of patients with their clinical characteristics and relevant genetic variants. First, a clinical domain query is completed that returns a list of person IDs with the desired clinical characteristics (see ‘queryDomain’). Second, a genetic query is completed that returns person IDs for patients with relevant variants in the gene of interest (that is, variants within a MAF range or certain genotype (see ‘queryVariantGene’)). A set intersection of the two cohorts is done to create a final cohort with both clinical and genetic characteristics.

queryVariantClinical

This functionality allows for a ‘combination’ query based on variants and clinical characteristics of interest. First, patients (person IDs) with the queried genetic variants are returned (see ‘queryVariant’). These IDs are then searched using the ClinicalPerson module to extract relevant clinical characteristics for each person (see ‘queryClinicalPerson’). The characteristics are aggregated together as summary information.

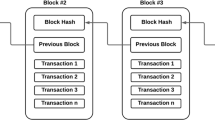

Blockchain

Blockchain was initially proposed in 2008 as the cryptocurrency Bitcoin but now has a range of uses65. This is because blockchain has several desirable properties, including decentralization, security and immutability. Blockchains are made up of append-only data blocks that are shared among nodes in a decentralized, distributed network. Transactions are appended to the blockchain as new blocks. Each block is cryptographically connected to the previous block via a header, creating a verifiable chain with a deterministic order. As such, once a block is added to the chain, it is immutable, as any attempt to change a block alters the header and so disrupts the chain. To ensure ‘trustless’ maintenance of the network, there is a consensus mechanism before data are added to the chain. Most widely used is PoW, where participating nodes compete to solve a computationally difficult problem with the winner gaining rights to append the next block. Other mechanisms are proof-of-stake (PoS) and PoA. Public blockchain networks typically make use of PoW or PoS, which are suitable for large networks with unknown and untrusted users. Conversely, private blockchain networks include only individuals who are known, and so PoA can be used. The specific benefits of blockchain for biomedical research applications include decentralized management, immutability of data, transparent data provenance, no single point of failure and security and privacy.

MultiChain

Several blockchain platforms are available to develop a clinical/genetic data-sharing platform. Kuo et al.61 produced a detailed review of the options and identified Ethereum, Hyperledger and MultiChain as the most appropriate. MultiChain is a popular blockchain API used for biomedical applications as it can create permissioned networks that can be used as consortium blockchains65. This is preferred as biomedical data are sensitive and should be shared only with a set of individuals or institutions. MultiChain is a fork of the Bitcoin Blockchain that provides features such as permission management and improved data indexing. The MultiChain ‘streams’ feature makes it particularly good at indexing. Streams are append-only, on-chain lists of data. They have Key:Value retrieval capability, which makes storage and query functionality easy. Furthermore, it is possible to have multiple keys, enabling complex logic gates for a given data query.

OMOP CDM

The OMOP CDM is an open community data standard that aims to standardize the structure of clinical data across different sources27. By standardizing different clinical databases, it is possible to combine and analyze them together. This is achieved by transforming clinical databases into a common format (that is, data model) with a common representation (that is, terminologies, vocabularies and coding schemes). One of the major elements in the data model are the clinical data tables. These contain the key data elements extracted from the EHR related to a patientʼs clinical characteristics, including diagnoses, measurements, observations, notes, visits, procedures and devices. A common representation is achieved using the OMOP vocabulary, which standardizes the medical terms used. It contains records, or concepts, uniquely identifying each fundamental unit of meaning that is used to express clinical information27. Each concept, or piece of clinical information, has a unique ID that is used as its identifier in the data table. Concepts can represent broad categories (such as ‘Cardiovascular disease’), detailed clinical elements (‘Myocardial infarction of the anterolateral wall’) or modifying characteristics and attributes that define concepts (severity of a disease, associated morphology, etc.). Concepts are derived from national or international vocabularies, such as Standard Nomenclature of Medicine (SNOMED), RxNorm and Logical Observation Identifiers Names and Codes (LOINC). Concepts are grouped into a hierarchy with detailed terms subsumed by broader terms. The OMOP CDM is used by many existing research networks, including OHDSI, which has over 2,000 collaborators from 74 countries62. The main aim of the conversion to OMOP is to support secondary analysis of observational clinical data. In places where the data cannot be shared, analytic code can be shared and executed at a local site’s OMOP instance, preserving privacy. It is worth noting that conversion to OMOP has been shown to facilitate faster, more efficient and accurate analysis across sites and it is particularly helpful for rare diseases66,67. It is increasingly being adopted by existing research networks, such as the UK Biobank and the All of Us Research Program68. The recording of EHR to OMOP CDM has already been done by over 200 health systems covering over 800 million unique patients. The typical process involves leveraging local data experts and resources made available by OHDSI, including conversion scripts and QC tools. A multidiscplinary team of data engineers, informaticians and clinicians at an institution works on mapping EHR data elements to standardized terminologies.

OMOP conversion

EHR data are converted to OMOP CDM using extract, transform, load (ETL) guidelines and data standards by OHDSI27. Although the full pipeline will be different for each institution, the general steps are well established and many open-source tools and scripts exist to automate the process. Generally, the process requires expertise from clinicians, who understand the source data; informaticians, who understand the OMOP CDM; and data engineers, who can implement the ETL logic. The steps can be broken down into the following. (1) Analyzing the dataset to understand the structure of the tables, fields and values. This can be done using the open-source software ‘White Rabbit’, which can automatically scan the data and generate reports69. (2) Defining the ETL logic from the source data to OMOP CDM. The open-source tool ‘Rabbit-in-a-hat’ from ‘White Rabbit’ can be used for connecting source data to CDM tables and columns, completing field-to-field mappings and identifying value transformations. (3) Creating a mapping from source to OMOP codes. This step is optional and only necessary if the source codes have not been previously mapped to OMOP. In most cases, this is not necessary. The ‘Usagi’ tool can be used to support this process70. (4) Implementing the ETL. The exact pipeline will differ by institution, and a wide variety of tools have been used successfully (for example, SQL builders, SAS, C#, Java and Kettle). Several validated ETLs are publicly available and can be used71,72. (5) Validating the ETL. This is an iterative and ongoing process that requires unit testing, manual review and replication of existing OHDSI studies. Full details can be found in The Book of OHDSI, chapter 6 (ref. 27). It is worth highlighting that OHDSI has many communities, resources and established conventions that adopters of the OMOP CDM can use for support.

Generating synthetic patient population

Synthea

Synthea is an open-source software project that generates synthetic patient data to model real-world populations33. The synthetic patients generated by Synthea have complete medical histories, including medications, procedures, physician visits and other healthcare interactions. The data are generated based on modules that simulate different diseases to create a comprehensive and realistic longitudinal healthcare record for each synthetic patient. Synthea aims to provide high-quality and realistic synthetic data for analysis. Previous studies showed that Synthea produces accurate and valid simulations of real-world datasets31,32. We use Synthea OMOP, which creates datasets using standard OHDSI guidelines.

1000GP dataset

The 1000GP is a detailed dataset of human genetic variation, containing sequencing data for 2,504 genomes34. We simulate genetic data using MAF values observed in 1000GP data as a baseline.

Creating the population

For the patient population in the publicly available demonstration network (accessed via https://precisionchain.g2lab.org/), we combined synthetically generated clinical data using Synthea and synthetically generated genetic data that are based on the 1000GP dataset. For every patient, we randomly assigned an ID that can be used across both their clinical and genetic data. Note that this population is essentially random, and any analysis and results are for demonstration purposes only.

Genetic data pre-processing

Before insertion, we implemented an automated QC step for all genetic samples. This ensures that genetic data shared on the network are suitable for downstream analysis. First, we filtered the data based on sample call rate (<5% genotypes missing) and genotype call rate (<5% samples missing genotype). We also tested for Hardy–Weinberg equilibrium (HWE) (<1 × 10−6) and LD (50-SNP window size, 5-SNP step size and 0.5 R2 threshold) in that cohort. Any removed SNPs are then recorded on the network such that, when researchers combine data from multiple cohorts, they can track which SNPs have been removed. Pre-processing steps before insertion are done using PLINK46. Additionally, every sample undergoes population stratification projection using the 1000GP as a reference. This is achieved by using the method outlined by Prive et al.40 to limit shrinkage bias. Importantly, we noted that, for the purpose of a GWAS, these population stratification covariates are nuisance parameters41. We empirically evaluated the projected scores (Extended Data Fig. 3).

GWAS on the blockchain

The analysis follows a similar workflow as a GWAS on traditional infrastructure. However, we replace many of the steps with functionality from the network. The full script can be viewed on GitHub. First, using the Analysis view, we harmonize genetic data using technical sequencing metadata. In the provided script, we select for patients with the same reference genome (HG38), sequencing machine (Illumina, NovaSeq 6000), alignment pipeline (BWA) and variant calling pipeline (GATK) used. Next, for all included samples, we extract data on genetic ancestry, filtering for white Europeans (>80%). We then assess relatedness between all samples and filter for unrelated samples. Next, we retrieve necessary covariates, such as population stratification PCs and sequencing sites. Using the ClinicalPerson view, we then extract the site of onset (phenotype) and gender (covariate) for all samples in the cohort. Note that the site of onset phenotype is based on the patient having the OMOP concept code for ALS (4317965) with attribute site (4022057) bulbar or limb. Patients with other sites recorded were excluded. More advanced logic can be implemented for complex phenotypes. The final cohort is constructed of patients who have all relevant data. Next, we extract genotype data per variant. At this stage, it is possible to conduct additional MAF filtering or HWE testing if necessary. Note that this was already done during insertion. We then run a logistic regression model to test for genetic associations with age of onset, adjusting for PCs, site of sequencing and gender. This final analysis step is conducted using a standard software package. To protect data security, the analysis is done on the blockchain node with users accessing only the results. This is similar to how analysis is run on All of Us73. In the future, it is possible to have the analysis itself run on the network as a smart contract such that no data ever leave the blockchain.

Rare genetic disease analysis

The platform is well suited to support research into truly rare diseases, including the reclassification of VUS. First, our platform facilitates the secure sharing of patient clinical and genetic data among many institutions, enabling the creation of larger and more comprehensive datasets of patients with rare genetic diseases. Moreover, the platform also contains a repository of all annotations associated with a variant, simplifying the tracking of VUS updates. A key strength of this platform is the multimodal queries, which allow researchers to simply build patient cohorts based on both clinical presentations and the presence of a specific VUS. This functionality, coupled with the integrated analysis pipeline, could support association analyses that uncover potential links between a VUS and a particular disease. Should new links be identified and verified, the annotation stream can be updated. Notably, a user-level alert system can be implemented via the audit stream, enabling physicians and researchers to subscribe to updates for specific variants and stay informed about any reclassifications. This workflow reduces technical barriers associated with rare disease research and ensures that the latest knowledge is rapidly disseminated. Our platform offers two key benefits for such analysis: (1) it allows institutions to contribute even a few samples while retaining control over their data, thereby increasing sample sizes; and (2) it serves as a self-contained repository that integrates genetic, clinical and variant annotation data, along with the cohort-building and analysis functionality needed to classify a VUS.

GWAS projection using the 1000GP

To validate the projection of samples onto the 1000GP PC loadings, we compared the top 10 PCs from the 1000GP projection to those from the NYGC ALS dataset (Extended Data Fig. 3). Note that we use samples from 1000GP with European ancestry. This is valid as our study only uses samples with European ancestry. We use Pearson correlation coefficients to assess their linear relationship and the Kolmogorov–Smirnov test to determine distributional similarity. Our analysis shows high correlation for PCs 1–4, with their distributions aligning significantly (P > 0.05). In contrast, PCs 5–10 do not show a strong relationship. This is likely due the top four PCs accounting for 79% of the explained variance from the top 10 PCs.

Blockchain GWAS implementation accuracy

Extended Data Fig. 5 shows the QQ plot for both (UK Biobank and ALS) GWASs that we performed. The QQ plot represents the deviation of the observed P values from the null hypothesis. Notably, we observe that the P values closely match the expected distribution up to approximately P = 1 × 10−6, after which they rise above the expected line, suggesting that we have detected a true association74.

To validate our distributed blockchain-based GWAS implementation, we directly compared our results against a centralized analysis conducted on PLINK. Extended Data Fig. 6 compares the coefficients and P values obtained from the two methods. We observe a very high agreement (Pearson correlation coefficients > 0.99). This high concordance confirms the reliability and accuracy of our blockchain-powered GWAS.

Findings on UK Biobank GWAS