Abstract

Neural networks (NNs) often assign high confidence to their predictions, even for points far out of distribution, making uncertainty quantification (UQ) a challenge. When they are employed to model interatomic potentials in materials systems, this problem leads to unphysical structures that disrupt simulations, or to biased statistics and dynamics that do not reflect the true physics. Differentiable UQ techniques can find new informative data and drive active learning loops for robust potentials. However, a variety of UQ techniques, including newly developed ones, exist for atomistic simulations and there are no clear guidelines for which are most effective or suitable for a given case. In this work, we examine multiple UQ schemes for improving the robustness of NN interatomic potentials (NNIPs) through active learning. In particular, we compare incumbent ensemble-based methods against strategies that use single, deterministic NNs: mean-variance estimation (MVE), deep evidential regression, and Gaussian mixture models (GMM). We explore three datasets ranging from in-domain interpolative learning to more extrapolative out-of-domain generalization challenges: rMD17, ammonia inversion, and bulk silica glass. Performance is measured across multiple metrics relating model error to uncertainty. Our experiments show that no single method consistently outperformed the others across the various metrics. Ensembling remained better at generalization and for NNIP robustness; MVE only proved effective for in-domain interpolation, while GMM was better out-of-domain; and evidential regression, despite its promise, was not the preferable alternative in any of the cases. More broadly, cost-effective, single deterministic models cannot yet consistently match or outperform ensembling for uncertainty quantification in NNIPs.


Introduction

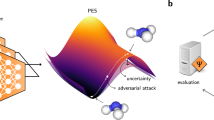

Over the last decade, neural networks (NN) have increasingly been deployed to study complex materials systems. In addition to modeling Quantitative Structure Property/Activity Relationship (QSPR/QSAR), NNs have been used extensively to model interatomic potentials in atomistic simulations of materials1,2,3,4,5. When employed to predict the potential energy surfaces (PESs) of materials systems, NN interatomic potentials (NNIPs) recover the accuracy of ab initio methods while being orders of magnitude faster, enabling simulations of larger time- and system size-scales6,7. Furthermore, NNIPs have displayed sufficient flexibility to learn across diverse chemical compositions and structures8,9,10. As a result, a wide range of NNIP architectures has been developed11,12,13,14,15,16 and used for applications in reactive processes17,18, protein design19,20, solids21,22, solid-liquid interfaces23, coarse-graining24,25, and more. Nevertheless, NNIPs remain susceptible to making poor predictions in extrapolative regimes. This often results in unphysical structures or inaccurate thermodynamic predictions during atomistic simulations that can compromise the scientific validity of the study26,27.

To maximize NNIP robustness and avoid distribution shift, the training data should contain representative samples from the same ensemble that the simulation will visit. However, since high-quality ab initio calculations are computationally expensive, acquiring new data points through exhaustive exploration of the chemical space is intractable. Quantifying model uncertainty and active learning are thus key to training robust NNIPs. A good uncertainty quantification (UQ) method should estimate both the uncertainty arising from measurement noise (i.e., aleatoric uncertainty), and the uncertainty in the prediction due to the model error (i.e., epistemic uncertainty)28. Epistemic uncertainty originates from data scarcity, model architecture limitations, and/or suboptimal model parameter optimization. Since ab initio-calculated training data is free from aleatoric error, NNIPs mostly suffer from epistemic uncertainties due to their strong interpolative but weak extrapolative capabilities. Hence, it is important to acquire new training data efficiently using a good UQ metric to facilitate active learning and mitigate epistemic uncertainty29,30.

Out of the many UQ schemes, Bayesian neural networks (BNNs), in which the weights and outputs are treated as probability distributions, offer an inherent UQ scheme31,32,33. However, BNNs suffer from scaling problems and are prohibitively expensive for use as interatomic potentials for bulk materials systems. Another architecture commonly used for UQ is the NN ensemble34. An NN ensemble offers a straightforward approach to estimating uncertainties since NN weight initialization, training, and even hyperparameter choices are stochastic. Although NN ensembles are straightforward and can yield good accuracy, the computational cost associated with training and inference for an ensemble of several independent NNs can be very high for large systems. Other uncertainty estimation methods seek to address these bottlenecks by estimating uncertainty with a single, deterministic NN. Such strategies are particularly interesting due to the lower computational cost of training and inference for a single NN model. Furthermore, the predictive uncertainty of single deterministic NNs can be estimated from a single evaluation of the data, eliminating the need for stochastic sampling to generate approximations of the underlying uncertainty functions.

UQ strategies using single deterministic NNs can be broadly grouped into antecedent and succedent methods35. In the succedent scheme, the NN has been trained and predictor uncertainty corresponding to the data set is estimated from the feature space36, latent space37, gradient matrices of the trained NNs38,39, or Gaussian Process-based kernel on latent space40. One instance of a succedent method is introduced by Janet et al.37 for non-NNIP application and employs Euclidean norm distance in latent features for uncertainty approximation. A more recent development building upon this work involves fitting Gaussian mixture models (GMMs) on the latent space of the NN41.

In antecedent methods, on the other hand, priors are placed on the input data42,43,44 or conditions are placed on the NN’s training objective45. Mean-variance estimation, a regression-prior scheme, is a commonly used antecedent method in which a Gaussian prior distribution is placed on the input data and the NN is trained to predict the mean and variance (the latter taken as the uncertainty)42. Another, more recently introduced antecedent method is deep evidential regression, in which a higher-order prior distribution is placed on top of the input data43.

To the best of our knowledge, there exists no uncertainty quantification method that significantly outperforms all others in the setting of NNIPs. In fact, there seems to be a lack of comparison between UQ methods for this task. Unlike the prediction of physical properties (QSAR/QSPR), NNIPs both necessitate and rely upon force-matching gradients in addition to fitting PESs, making UQ for NNIP applications more challenging and unique46,47. With the application of molecular dynamics (MD) simulations in mind, a UQ method not only has to be computationally cheap and confidence-aware, especially for out-of-domain (OOD) points, but should also minimize any accuracy reductions that might arise from adopting specific loss functions tailored for single model UQ. In this work, we study whether UQ methods using single, deterministic NNs can consistently outperform NN ensembles with respect to their capacity to produce a strong ranking of uncertainties while having lower computational costs. In particular, we use mean-variance estimation (MVE), deep evidential regression, and Gaussian mixture models (GMM). We evaluate their performance in ranking uncertainties using multiple metrics across three different data sets, and analyze the improvement of the stability of MD simulations via active learning. The purpose of this work is to provide a pragmatic comparison of ensembling and single-model UQ approaches within the realm of active learning. In general, we find that ensemble-based methods consistently perform well in terms of uncertainty rankings outside of the training data domain and provide the most robust NNIPs. MVE performs well mostly in identifying data points within the training domain that correspond to high errors. Deep evidential regression offers less accurate epistemic uncertainty prediction, while GMM is more accurate and lightweight than deep evidential regression and MVE.

Results

Revised MD17 data set (rMD17)

As a starting point, we performed UQ on the rMD17 data set, which is readily available online. We evaluated whether the predicted uncertainties are ranked correctly with respect to the true error for all molecules in the data set (Fig. 1a). An immediate observation is that the uncertainty-error distributions for the ensemble-based and mean-variance estimation (MVE) methods follow a positively-correlated relationship desirable for good UQ schemes. Similarly, the predicted uncertainty of the Gaussian mixture model (GMM) exhibits a relatively linear but slightly skewed distribution with respect to the true errors. Evidential regression, on the other hand, shows a two-tiered distribution that does not show good correlation with energy. It is also interesting to note that the uncertainties predicted by evidential regression span eight orders of magnitude, which is due to optimization of the ν, α − 1, and β parameters during training such that they approach 0 (see Supplementary Section V). In terms of computational resources used for each method (Fig. 1b), the training time and GPU memory usage for the ensemble-based method containing 5 independent neural networks (NNs) are roughly 5 times greater than those of the single deterministic NN methods.

Fig. 1. (a) Hexbin plots showing (predicted) uncertainties versus squared errors of atomic forces in all molecules of all 5-fold test sets from the rMD17 data set for each considered UQ method. Note that since the uncertainties (-NLL) for the GMM method contain negative values, all uncertainties are scaled by the minimum value to yield positive quantities for plotting the log scale figure (see Supplementary Section VI for the cause of non-positive NLL values). (b) Total average wall time used for training the NNs and total GPU memory usage incurred by the NNs. Error bars denote standard deviation across the 5-fold data sets. (c) Bar chart showing the prediction errors on the test set of the considered UQ models trained using their corresponding loss functions. Each bar presents the overall average of the metrics for all molecules in all 5-fold test sets of the rMD17 data set, and the error bars show the standard deviation. Prediction error is described by the mean absolute error (MAE) of the atomic forces in the unit kcal mol−1 Å−1. (d) Bar charts showing the evaluation metrics for the considered UQ methods, including Spearman’s rank correlation coefficient, ROC AUC score, miscalibration area and calibrated negative log-likelihood (NLL) score. For (c) and (d), refer to Supplementary Fig. 13 for breakdown of the metrics for each molecule.

Since UQ is often utilized in the context of NNIPs to improve robustness for atomistic simulations, it is critical to first assess the prediction accuracy of each model. From Fig. 1c, we can see that the ensemble-based method has a lower overall error at inference than the other methods, which all employ only a single NN. Given that the choice of model architecture, input representation, and training data for all UQ methods in the same splits are the same, we can assume that all models possess the same model uncertainty (also referred to as model bias), which is the component of epistemic uncertainty associated with model form insufficiencies and lack of data coverage. This suggests that the accuracies of models differ due to suboptimal parameter optimization mainly arising from the difference in loss functions used during training. It has been shown that ensemble learning removes parametric uncertainty (also referred to as model variance) associated with ambiguities in parameter optimization28,35,48, and thus improves generalization, which reduces the overall error29,49,50. MVE, on the other hand, shows the highest average test set error, possibly due to a harder-to-optimize NLL loss function, which has been reported in ref. 51. Since the robustness of NNIPs largely depends on the accuracy of the models, the overall error is an important criterion to consider while choosing UQ methods to be incorporated into the active learning workflows. In terms of quality of uncertainty estimates (Fig. 1d), no single method consistently outperforms the others (see Methods for details of UQ evaluation metrics). For the ensemble-based method, all the evaluated metrics are, by comparison, in good ranges. Interestingly, MVE demonstrates good performance in uncertainty ranking despite having low prediction accuracy. Evidential regression, in contrast, outperforms the others in all evaluated metrics except for the miscalibration area, possibly due to the bimodal distribution in Fig. 1a. 
It seems that evidential regression generates atom type-dependent parameters which lead to large variations in the UQ metric for different molecules (see Supplementary Section V for a more detailed analysis of the bimodal distribution). Lastly, GMM shows the worst performance in all metrics although within the error bar of the other approaches. We also found that the optimal number of GMM clusters is similar across latent feature dimensions D = 32 to D = 128 for most molecules in rMD17 (see Supplementary Fig. 14). Details on the UQ methods and evaluation metrics can be found in the Methods section.
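As an illustration of the ranking metric discussed above, the following is a minimal sketch of Spearman's rank correlation between predicted uncertainties and true errors. The function name `spearman_rho` and the toy arrays are hypothetical and not taken from this work; the sketch assumes no tied values, and the evaluation pipeline actually used here may differ.

```python
import numpy as np

def spearman_rho(uncertainty, error):
    """Spearman rank correlation between two 1-D arrays (assumes no ties)."""
    u = np.asarray(uncertainty, dtype=float)
    e = np.asarray(error, dtype=float)
    # argsort of argsort turns values into 0-based ranks
    rank_u = np.argsort(np.argsort(u))
    rank_e = np.argsort(np.argsort(e))
    # Spearman's rho is the Pearson correlation computed on the ranks
    return float(np.corrcoef(rank_u, rank_e)[0, 1])

# Hypothetical toy data: predicted uncertainties and squared force errors
sigma = np.array([0.1, 0.4, 0.2, 0.9, 0.6])
sq_err = np.array([0.01, 0.30, 0.05, 1.20, 0.20])
rho = spearman_rho(sigma, sq_err)
print(f"Spearman rho = {rho:.3f}")
```

A value close to 1 means that structures with larger predicted uncertainty also tend to have larger error, which is the property a UQ method needs in order to rank candidates for active learning, regardless of the absolute scale of the uncertainty.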

Ammonia

In this example, we tested the UQ methods by studying the process of nitrogen inversion in ammonia. In the first generation, all NNs are trained on the same initial training and validation data that contain only 78 geometries with energies ranging between 0 and 5 kcal mol−1 (0–1.25 kcal mol−1 atom−1) (Fig. 2c). When tested on 129 conformations with energies between 0 and 70 kcal mol−1 (0–17.5 kcal mol−1 atom−1) (2 conformations per 1 kcal mol−1 bin), it can be clearly observed that the ensemble-based and GMM models produce a good monotonic relationship between the predicted uncertainty and the true error (Fig. 2a). This is confirmed by the evaluation metrics, on all of which the ensemble and GMM outperform the other two methods. Intriguingly, the performance of GMM on this data set is quite different from the results for the rMD17 data set, where MVE and evidential regression discernibly outperform GMM (Fig. 1d). The most probable reason for this is the difference in distribution of train and test sets. More specifically, the energies of conformations in the train and test sets overlap in the rMD17 data set (Supplementary Fig. 17), whereas for ammonia, the train set contains only low energy conformations close to the minimum (0–5 kcal mol−1), while the test set includes conformations in both low and high energy ranges (0–70 kcal mol−1). This suggests that the ensemble-based and GMM methods are better at predicting epistemic uncertainties outside of the training data domain. Evidential regression is also good at ranking uncertainties with respect to the true errors, but the distribution again shows a peculiar triangular trend. By contrast, MVE underperforms compared to the other models in all evaluated metrics and produces a two-tiered distribution. Comparing the performance of MVE in this data set against the rMD17 data set, we can infer that MVE is likely worse at predicting uncertainties for data outside of the training domain.

Fig. 2. (a) Plots showing predicted uncertainties versus squared errors of atomic forces in the test data set for each considered UQ method trained only on the initial training data set (subfigure (c) below). The box at the top right corner of each subfigure shows the evaluation metrics of the methods, namely Spearman’s rank correlation coefficient (ρ), ROC AUC score (AUC), miscalibration area (Area), and calibrated negative log-likelihoods (cNLL). (b) Energy barrier of nitrogen inversion calculated with NEB using DFT and the considered UQ methods in generation 1. All NNs in the methods were trained on the same initial training data. (c) Histogram showing distribution of energy of geometries in the initial training data. (d) Fraction of stable MD trajectories generated using the NNs of the UQ methods in generation 1 as force field. (e) Energy barrier of nitrogen inversion calculated with NEB using DFT and the UQ methods in generation 3. The NNs are trained on new adversarial examples generated with their respective UQ method on top of the initial training data. (f) Distribution of energy in training set after two rounds of adversarial sampling is performed. The top and bottom horizontal marks denote the maximum and minimum values, respectively. (g) Fraction of stable MD trajectories generated using the NNs of the UQ methods in generation 3 as force field.

When it comes to predicting the nitrogen inversion energy barrier, all the methods seem to either under- or over-estimate the overall ground truth energy curve (Fig. 2b). This observation is reasonable since all models have only seen low energy conformations in the initial (first generation) training data (Fig. 2c). When the different models are used as interatomic potentials for MD simulations, NN-ensembles produced the highest fraction of stable MD trajectories at 50%, in which there are no atomic dissociations or collisions (Fig. 2d). Evidential regression produces 42% of stable trajectories, followed by GMM at 33% and lastly, MVE at 25%.

After performing adversarial sampling for two generations, 40 data points were added to the training of the NNIPs for each UQ method. It is important to note that the new data points were generated independently by each UQ method and differ from one method to the other. The energy distributions of the new training data for each method, including the initial training data that contains 78 geometries, are shown in Fig. 2f. Looking closely at the energy distributions, we can see that while the ensemble-based method is able to drive some conformations to higher energies, the majority of the new data points are still concentrated in the lower energy region around 0–30 kcal mol−1. The MVE method behaves similarly, but samples more new conformations with energies in the 20–60 kcal mol−1 range than the ensemble-based method. In contrast, evidential regression samples more new geometries with energies in the range of 40–85 kcal mol−1 and below 20 kcal mol−1. This may be due to the diverging trend shown in Fig. 2a, where the maximization of uncertainty during the adversarial sampling pushes geometries to low and high energy ranges in a tiered manner. Similar observations can be made for the geometries sampled by the GMM method, since new geometries are concentrated in the very high energy region (70–90 kcal mol−1), as the GMM UQ values are also the largest. When attempting to predict the nitrogen inversion barrier in ammonia, only the ensemble-based method improved after two rounds of adversarial sampling and produced accurate predictions (Fig. 2e). However, after two rounds of adversarial sampling, the fraction of stable MD trajectories produced by the ensemble-based model, MVE, and evidential regression improved by roughly 30–40 percentage points (Fig. 2g). GMM, on the other hand, achieved an improvement of 52 percentage points, indicating that the quality of its uncertainty estimates improved the robustness of the NNIP outside of the training domain more than the other methods did (see Supplementary Section VII for more discussion on bond geometry analysis of geometries from the NN-based MD simulations).

It is important to note that since the UQ functions for the methods are different, the magnitude and range of predicted uncertainties for the same structure can be different. Further, for most UQ methods the prediction of energies and forces and of the uncertainty are intertwined. Thus, by construction, the way these models make predictions is different, and naturally the ranges of energies and forces for newly selected structures are different. However, this is not necessarily a flaw, since we only need each uncertainty measure to be monotonic within its own method. For example, the purpose of performing adversarial sampling in this paper is to assess whether structures newly selected using a specific UQ method give us more information than those selected by other methods. By maximizing the uncertainty estimated using one UQ method, we thus obtain new structures whose energy and force ranges differ from those obtained with another UQ method. Details of the ammonia data set, adversarial sampling, and subsequent MD simulations can be found in the Methods section.

Silica glass

Lastly, we tested the UQ methods on silica glass, which has a long history of interatomic potential fitting52,53,54,55,56. This is motivated by the fact that silica glass is made up of large bulk structures and the computational cost of performing quantum chemistry calculations on these bulk systems is prohibitively high. NNs, which have increasingly been used for interatomic potential fitting for vitreous silica57,58, are unfortunately still unreliable outside of the training data domain. This calls for efficient sampling methods to generate small sets of additional data to improve high-error regions. These rely significantly on the quality of UQ methods, which should additionally be lightweight and fast. We trained individual NNs and an NN ensemble containing 4 networks on the same initial training data, and evaluated the errors and uncertainties from the respective UQ method on the test data set. Distributions of the initial training and testing data set are shown in Fig. 3a. The testing set contains some structures with very high energies and forces on which none of the NNs has been trained or validated, such that evaluation of the predicted uncertainties can be used as a generalizability measure outside of the training data domain. Evaluated true errors and uncertainties, presented in Fig. 3b, show that all UQ methods are capable of ranking uncertainties relatively well with respect to the true errors, even though the evaluated metrics deliver inconsistent results as to which model is the best. Arguably, evidential regression performs the worst in uncertainty ranking for this data set, considering that the evaluated metrics, the order of magnitude of predicted uncertainties, and the distribution of uncertainty-error are less favorable.

Fig. 3. (a) Distributions of energy and forces in training and testing sets. In the first generation (first row), all models are trained on the same initial training set. In subsequent generations, NN models are trained on both initial training data and new sampled data points using their corresponding UQ methods. All models are finally evaluated on the test set, where the energy and force magnitudes are considerably larger than the train sets. Note that the x-axis scale of the test set energy distribution (last row first column) is different from the other energy distributions. (b) Hexbin plots showing the predicted uncertainties against squared errors of atomic forces from NNs trained only on initial training data. (c) Average length of time in which no atomic dissociation or collision occurs in the MD trajectories. Average is taken from 10 trajectories. Item “ensemble (one network)” in legend describes MD trajectories performed with predictions only from a single NN in the ensemble. (d) Mean absolute error of predicted energy and forces by the NN models on the test set.

We then performed adversarial sampling using each of the UQ methods and obtained new data points, which were evaluated using DFT and incorporated into re-training of the NNs in subsequent generations. Note that for the NNs in each UQ method, the training data thus becomes different starting in generation 2 since the new data points are sampled using different UQ methods (Fig. 3a). In generation 2, energies of the adversarial samples for the methods have similar distributions with the exception of evidential regression, which interestingly did not drive the adversarial samples to very high energies but to energies lower than 0 kcal mol−1 (the reference energy). The reference energy is chosen as the minimum energy of the initial training data, which consists only of structures extracted from MD at 300 K or higher (refer to Supplementary Fig. 2), and evidential regression instead sampled structures with energies lower than those included in the training data. The ensemble-based method, on the other hand, sampled structures that are within the 0–5000 kcal mol−1 (0–7 kcal mol−1 atom−1) energy range, but contain high atomic forces. While this is arguably the constraint constructed for adversarial sampling (Equation (16)), the effect of this constraint is most apparent in the ensemble-based method, where these new data points significantly increased the robustness of the NNIP. In the third generation, GMM sampled new geometries with both energies and atomic forces much higher than the other methods.

After training of NNs in each generation, 10 MD simulations are performed using the NNs. The lengths of time where the simulations are stable are recorded in Supplementary Fig. 24. Averages are plotted in Fig. 3c. The figures show that the simulations for generation 1 behaved unphysically early on (training was conducted only on the initial data). This is potentially due to the prediction of false minima from the NN potentials that produce unphysical structures and large forces during the simulations27. Additionally, the length of time of stable MD trajectories differs despite the shared training data and model architecture. This is consistent with the results on the ammonia data set, where the robustness of NNIPs is directly influenced by the accuracy of the predictions, represented as the mean absolute error of the energy and atomic forces on the test set (Fig. 3d). It is worth noting that despite early instability in the simulations, the NNIPs generated structures with Si–Si and O–O atom pair distances closer to the experimental values than the training data. The NNIPs, having learned from DFT data, produced more accurate atom pair distances than what was supplied by the classical force-matching potential (see Supplementary Section VII and Supplementary Fig. 9 for more discussion). In generation 2, the length of stable MD time for the ensemble-based method increases greatly alongside the accuracy of prediction, potentially as a result of the inclusion of new adversarial data with higher forces. A similar trend can be observed for GMM, but at a smaller magnitude of improvement. However, in generation 3, there seems to be a reversal of improvement for both the ensemble-based and GMM methods even though more data has been included and prediction errors on the test set shown in Fig. 3d have decreased. This suggests that adding more data does not always improve robustness of NNs and that the quality of new data is more crucial59. 
MVE shows steady improvement, but none of its MD simulations exceeded 1 ps. In contrast, evidential regression did not show improvement with the inclusion of more structures that were obtained through adversarial sampling (see Supplementary Fig. 24). Since the gradients of the different UQ measures could be different, we set both the Boltzmann temperatures and learning rates in adversarial sampling to be the same for all methods, but increased the number of iterations such that the uncertainties could be maximized. For this data set, we can see that the ensemble-based method not only produces more stable simulations but also samples higher quality uncertain data in a way that cannot be quantified using the evaluation metrics.

Discussion

The performance of the uncertainty quantification (UQ) methods varies broadly between data sets and evaluation metrics. The ensemble-based UQ method performs consistently well across all data sets and metrics in this work. In addition, due to the averaging effect, ensemble predictions typically show lower prediction error, albeit at a higher cost. However, other work has shown that ensemble-based methods can only be used to address epistemic uncertainty stemming from parametric uncertainty (model variance), and are less reliable in identifying data points outside of the training domain28. Contrary to these findings, our study reveals that the ensemble-based method is capable of identifying data points outside of the training domain when performing adversarial sampling.

Mean-variance estimation (MVE), on the other hand, has shown inconsistent performance across the data sets. While MVE performs relatively well in ranking uncertainty with respect to the true error and is computationally cheaper, it achieves a lower prediction accuracy than other methods even with the same training parameters and conditions. Additionally, the predicted uncertainty optimized using the loss function during training (Supplementary Equation (3)) intrinsically describes only the aleatoric uncertainty. For training data generated via ab initio methods, the data set is relatively noise-less and technically does not have meaningful aleatoric uncertainty, meaning MVE has somehow co-opted epistemic uncertainty. We infer that MVE is less capable in systematically predicting high uncertainty for underrepresented regions, but is better at identifying regions within the training domain that correspond to high errors.
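To make the point about the MVE objective concrete, the following is a minimal sketch of a Gaussian negative log-likelihood of the kind commonly used to train mean-variance networks. It is an assumption that this matches the general form of Supplementary Equation (3), which is not reproduced here; the function name `gaussian_nll` and the variance floor `eps` are hypothetical.

```python
import numpy as np

def gaussian_nll(y_true, mean, var, eps=1e-8):
    """Mean Gaussian negative log-likelihood for predicted mean and variance."""
    var = np.maximum(np.asarray(var, dtype=float), eps)  # keep the variance positive
    resid = np.asarray(y_true, dtype=float) - np.asarray(mean, dtype=float)
    # 0.5 * [log(2*pi*var) + residual^2 / var], averaged over all entries
    return float(np.mean(0.5 * (np.log(2.0 * np.pi * var) + resid**2 / var)))

# Hypothetical example: a fixed residual of 2.0 scored with three variances
residual = 2.0
for var in (0.4, residual**2, 40.0):
    nll = gaussian_nll([residual], [0.0], [var])
    print(f"var = {var:5.1f}  NLL = {nll:.3f}")
```

For a fixed residual, this loss is smallest when the predicted variance equals the squared residual, so the variance head is trained to track the size of the errors seen on the training data. That is consistent with the observation above that MVE identifies high-error regions inside the training domain more reliably than regions it has never seen.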

In the case of deep evidential regression, the method performs remarkably well in terms of uncertainty estimation but achieves a slightly lower prediction accuracy than the ensemble-based method. Nevertheless, it is important to note that training of the evidential regression method often incurs numerical instability because optimization of the loss function (Supplementary Equation (6)) drives the parameters (ν, α − 1, and β) close to zero, which leads to extremely large error predictions (Equation (10)).
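The sensitivity to small parameters can be illustrated with the uncertainty expressions of the standard Normal-Inverse-Gamma formulation of deep evidential regression, in which the aleatoric term is β/(α − 1) and the epistemic term is β/(ν(α − 1)). This is a sketch under the assumption that Equation (10), which is not reproduced in this section, has the same general form; the function name and the numerical values are hypothetical.

```python
def evidential_uncertainties(nu, alpha, beta):
    """Aleatoric and epistemic variances of a Normal-Inverse-Gamma prior."""
    aleatoric = beta / (alpha - 1.0)          # expected data noise, E[sigma^2]
    epistemic = beta / (nu * (alpha - 1.0))   # variance of the predicted mean, Var[mu]
    return aleatoric, epistemic

# Well-conditioned parameters give moderate uncertainties
print(evidential_uncertainties(nu=1.0, alpha=2.0, beta=1.0))

# Hypothetical near-zero values of nu and (alpha - 1): the small denominators
# inflate the epistemic term by several orders of magnitude
print(evidential_uncertainties(nu=1e-4, alpha=1.0 + 1e-4, beta=1e-2))
```

Because ν and α − 1 both appear in the denominator, small changes in these parameters near zero translate into large changes in the predicted uncertainty, which is one plausible reading of the wide spread of evidential uncertainties reported for rMD17.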

The Gaussian mixture model (GMM) approach shows good performance in UQ, especially for out-of-domain frames, where its uncertainty estimate is by construction smooth, which is not true for the otherwise strong ensemble method. However, it does not do as well in the interpolative regime, where MVE seems to excel. Furthermore, a GMM is very versatile since it is applicable, post-training, to any model architecture, and thus does not compromise the achieved model accuracy.

Nevertheless, for applications in neural network interatomic potentials (NNIPs), generalizability plays a very important role in ensuring the robustness of the atomistic simulations. A model that seemingly estimates uncertainty well and achieves good prediction accuracy, but fails to achieve stable production simulations from scratch or through active learning, is less helpful. The unsolved challenge is thus identifying a model that ranks uncertainty well while also scaling practically, achieving high prediction accuracy, and producing stable atomistic simulations. UQ and robustness in NNIPs may pose a unique challenge because forces are a derivative function, which is easily accessible from the energy at training time, but the relationship between force variance and energy variance is not handled well by any of the methods explored.

Although cheaper than the ensemble-based method, single deterministic NNs showed lower generalizability and thus failed to exceed its performance, especially in the active learning loops, since ensemble learning lowers the parametric uncertainty. This is especially true for MVE, since it has been shown that joint optimization of the mean and variance estimators lowers the stability of prediction51. If a single NN architecture were to show higher generalizability than an NN ensemble, then the use of UQ methods such as deep evidential regression or GMM for the single NN would be justified.

More broadly, the lack of a one-size-fits-all solution, the high cost of the more robust ensemble-based method, and the disappointing performance of elsewhere-promising evidential approaches confirm that UQ in NNIPs is an ongoing challenge for method development in AI for science.

Methods

Given a data set \({{{\mathcal{D}}}}\) containing a collection of tuples (x, y), a neural network takes the input x and learns a hypothesis function to predict the corresponding output \(\hat{y}\). In the case of an NNIP, x contains the atomic numbers \({{{\bf{Z}}}}\in {{\mathbb{Z}}}_{+}^{n}\) and nuclear coordinates \({{{\bf{r}}}}\in {{\mathbb{R}}}^{n\times 3}\) of a material system with n atoms, while \(\hat{y}\) denotes its potential energy value, \(\hat{E}\in {\mathbb{R}}\), and/or the energy-conserving forces, \({\hat{{{{\bf{F}}}}}}_{i}\), acting on atom i. The energy-conserving forces are calculated as the negative gradients of the predicted potential energy with respect to the atomic coordinates, \({{{{\bf{r}}}}}_{i}\): \({\hat{{{{\bf{F}}}}}}_{i}=-\partial \hat{E}/\partial {{{{\bf{r}}}}}_{i}\) (Equation (1)).

The Polarizable atom interaction Neural Network (PaiNN), an equivariant message-passing NN, was used to learn the interatomic potentials12, given its balance between speed and accuracy. Details of the architecture, training, and loss functions are given in Supplementary Section I of the Supplementary Information (SI).

Uncertainty quantification (UQ)

Four methods of uncertainty quantification (UQ) were compared: ensemble-based uncertainty34, mean-variance estimation (MVE)42, deep evidential regression43, and Gaussian mixture models (GMM)41 (see Fig. 4). Since forces are a derivative of energy, they typically exhibit higher variability upon overfitting or outside the training domain and thus describe epistemic uncertainty better than energy-derived measures. Hence, the uncertainty estimates were evaluated as σF for all models29.

Fig. 4: x denotes the input to the neural networks (NNs), while y is the predicted property. In the case of NNIPs, x generally represents the positions and atomic numbers of the input structure, whereas y is the energy and/or forces of that structure. Red text indicates the variables used as the uncertainty estimates. a Multiple NNs trained in an ensemble predict the desired property of the same structure. The mean and variance of the property can then be calculated, where higher variation in the property implies higher uncertainty34,60. b In the mean-variance estimation (MVE) method, a Gaussian prior distribution is applied to the input data and the NN predicts the mean and variance describing the Gaussian distribution. A higher variance parameter indicates higher uncertainty42,60. c In the deep evidential regression method, an evidential prior distribution is applied to the input data and the NN predicts the desired property and the parameters that describe both the aleatoric and epistemic uncertainties43,44. d In the Gaussian mixture model (GMM) method, the input data are assumed to be drawn from multiple Gaussian distributions. The negative log-likelihood (NLL) function is calculated from a GMM fitted on the learned feature vectors, ξx, of the structures. A higher NLL value denotes higher uncertainty41.

Ensemble-based models

Ensemble-based models are among the most common and trusted approaches to UQ in deep learning. Because there are various sources of randomness in the training of NNs, from initializations to stochastic optimization or hyperparameter choices, a distribution of predicted output values can be obtained from an ensemble-based model that consists of M distinct NNs29,34,35,60. For an input system x, each neural network m in the ensemble predicts the total energy, \({\hat{E}}_{m}(x)\), and atomic forces, \({\hat{{{{\bf{F}}}}}}_{m}(x)\) (calculated using Equation (1)). The predictions of the ensemble are then taken to be the arithmetic mean of the predictions from each individual NN, \(\bar{E}(x)=\frac{1}{M}\mathop{\sum }\nolimits_{m = 1}^{M}{\hat{E}}_{m}(x)\) and \(\bar{{{{\bf{F}}}}}(x)=\frac{1}{M}\mathop{\sum }\nolimits_{m = 1}^{M}{\hat{{{{\bf{F}}}}}}_{m}(x)\).

Lakshminarayanan et al. have proposed that the variance of predictions from individual NNs in an ensemble can be used as the uncertainty estimate, since the spread of the predicted values may become larger outside of the training data domain34. In the application to NNIPs, the uncertainty estimates are provided by the variances of the predicted energy, \({\sigma }_{E}^{2}\), and atomic forces, \({\sigma }_{{F}_{i}}^{2}\), computed over the M members of the ensemble.
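Assuming the usual unbiased sample variance over the M ensemble members and a plain average over the n atoms (both normalizations are assumptions here, as is the overbar notation for ensemble means), these variances can be written as:

```latex
\sigma_{E}^{2}(x) = \frac{1}{M-1}\sum_{m=1}^{M}\left(\hat{E}_{m}(x)-\bar{E}(x)\right)^{2}, \qquad
\sigma_{F_{i}}^{2}(x) = \frac{1}{M-1}\sum_{m=1}^{M}\left\lVert \hat{\mathbf{F}}_{m,i}(x)-\bar{\mathbf{F}}_{i}(x)\right\rVert^{2}, \qquad
\sigma_{F}^{2}(x) = \frac{1}{n}\sum_{i=1}^{n}\sigma_{F_{i}}^{2}(x)
```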

Note that \({\sigma }_{{F}_{i}}^{2}\) is the force variance for atom i, whereas \({\sigma }_{F}^{2}\) is the variance of force for the structure. In our case, we have focused only on using the basic ensembling scheme of random weight initialization as a baseline comparison with the single NNs. See Supplementary Figs. 20 and 21 for a comparison of basic ensembling methods including random initialization, hyperparameter diversification, bagging, and a combination of them.
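As an illustration of how the ensemble mean and the energy and force variances could be evaluated in practice, the following minimal NumPy sketch operates on stacked predictions of M models. The function name, array layout, and the choice of an unbiased estimator (division by M − 1) with a per-structure average over atoms are assumptions made for this example, not the implementation used in this work.

```python
import numpy as np

def ensemble_uncertainty(energies, forces):
    """Ensemble statistics for one structure.

    energies: shape (M,), energy predicted by each of the M members.
    forces:   shape (M, n, 3), forces predicted by each member for n atoms.
    """
    energies = np.asarray(energies, dtype=float)
    forces = np.asarray(forces, dtype=float)
    mean_energy = energies.mean()
    mean_forces = forces.mean(axis=0)
    # Unbiased sample variance over members (ddof=1 is an assumption).
    var_energy = energies.var(ddof=1)
    # Squared norm of the deviation of each member's force on each atom.
    sq_dev = ((forces - mean_forces) ** 2).sum(axis=-1)      # (M, n)
    var_force_atom = sq_dev.sum(axis=0) / (len(forces) - 1)  # (n,)
    # Per-structure force variance taken as the average over atoms.
    var_force = var_force_atom.mean()
    return mean_energy, mean_forces, var_energy, var_force_atom, var_force
```

The per-atom values `var_force_atom` play the role of \({\sigma }_{{F}_{i}}^{2}\), and `var_force` that of \({\sigma }_{F}^{2}\).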

Mean-variance estimation (MVE)

As a function approximator, a standard NN does not have the intrinsic capability to estimate the trustworthiness of its prediction. By treating the training data as Gaussian random variables with variances, an NN can be constructed to predict the (mean, variance) pairs that parametrize the Gaussian distributions \({{{\mathcal{N}}}}(\mu ,{\sigma }^{2})\)42. In other words, the NN outputs a distribution, itself parameterized by a mean and a variance, rather than a fixed point. This method is called mean-variance estimation (MVE), in which the NN predicts the mean and variance for each datum using maximum likelihood estimation.

The final layer of the NN is modified to predict both the mean, μE (gradients are subsequently computed to obtain \({\mu }_{{F}_{i}}\)), and the variance, \({\sigma }_{{F}_{i}}^{2}\), for each datum. A softplus activation is applied to ensure that the variances are strictly positive; see Supplementary Equation (4) in the SI for the training loss function. The predicted variances are then taken as the uncertainty metric. As with the ensemble method, the uncertainty estimates are described only by the variances of the atomic forces.
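To make the maximum-likelihood idea concrete, the sketch below evaluates a generic Gaussian negative log-likelihood with a softplus-constrained variance. It is not the loss of Supplementary Equation (4), whose exact form is not reproduced here; the function names and the small `eps` floor are assumptions for illustration.

```python
import numpy as np

def softplus(z):
    # Numerically stable log(1 + exp(z)); positive in exact arithmetic,
    # but it can underflow to 0.0 for very negative z in floating point.
    return np.logaddexp(0.0, z)

def gaussian_nll(target, mean, raw_variance, eps=1e-6):
    """Mean Gaussian NLL of `target` given predicted means and raw variances.

    raw_variance is the unconstrained network output; softplus plus a small
    floor (eps, an assumption) turns it into a strictly positive variance.
    """
    variance = softplus(np.asarray(raw_variance, dtype=float)) + eps
    residual = np.asarray(target, dtype=float) - np.asarray(mean, dtype=float)
    return float(np.mean(0.5 * (np.log(2.0 * np.pi * variance)
                                + residual ** 2 / variance)))
```

Minimizing this quantity jointly over the mean and variance outputs rewards large predicted variances where residuals are large, which is what allows the variance head to act as an uncertainty estimate.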

Deep evidential regression

While MVE has been widely used for UQ, the predicted variance used in lieu of a proper uncertainty fails to provide estimates of epistemic uncertainties. This is due to the assumption underlying MVE, where the predicted variance of each data point only describes the Gaussian distribution over the data but not over the NN parameters. Thus, MVE does not rigorously inform about distributions outside of the training data regions61. To address this limitation, an emerging evidential regression algorithm has been developed to directly handle representations of epistemic uncertainty by parameterizing a probability distribution function over the likelihood of the learned NN representations43.

Instead of placing priors directly on the data set as in MVE, evidential regression places priors on the likelihood function of the data set. The data are assumed to be drawn independently and identically distributed (i.i.d.) from a Gaussian distribution (\({{{\mathcal{N}}}}(\cdot )\)) with unknown mean and variance (μ, σ2). Here we assume that the data is represented by the atomic forces, Fi. With this assumption, the unknown mean can then be described by a Gaussian distribution while the variance is drawn from an inverse-gamma distribution (Γ−1( ⋅ ))43.
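Following the formulation of ref. 43, and assuming it is applied per atom to the force targets (the per-atom indexing is a notational assumption), these priors can be written as:

```latex
\mathbf{F}_{i} \sim \mathcal{N}(\mu_{i}, \sigma_{i}^{2}), \qquad
\mu_{i} \sim \mathcal{N}(\gamma_{i}, \sigma_{i}^{2}\nu_{i}^{-1}), \qquad
\sigma_{i}^{2} \sim \Gamma^{-1}(\alpha_{i}, \beta_{i})
```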

where γi, νi, αi and βi for each atom i are predicted by the NN, and \({\gamma }_{i}\in {\mathbb{R}}\), νi > 0, αi > 1 and βi > 0. Consequently, the higher-order evidential distribution over the mean and variance is a normal-inverse-gamma (NIG) distribution.
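In the form given in ref. 43, written here per atom (again a notational assumption) and with Γ(α) denoting the gamma function, the normal-inverse-gamma density reads:

```latex
p(\mu_{i}, \sigma_{i}^{2} \mid \gamma_{i}, \nu_{i}, \alpha_{i}, \beta_{i})
= \frac{\beta_{i}^{\alpha_{i}}\sqrt{\nu_{i}}}{\Gamma(\alpha_{i})\sqrt{2\pi\sigma_{i}^{2}}}
\left(\frac{1}{\sigma_{i}^{2}}\right)^{\alpha_{i}+1}
\exp\left\{-\frac{2\beta_{i}+\nu_{i}(\gamma_{i}-\mu_{i})^{2}}{2\sigma_{i}^{2}}\right\}
```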

For a detailed derivation, we refer to refs. 43,61. In a similar fashion to MVE, the final layer of the NN is modified to predict γE (\({\gamma }_{{F}_{i}}\) is then calculated from the derivative shown in Equation (1)), νi, αi and βi. A softplus activation is applied to the three parameters νi, αi and βi to ensure that they are strictly positive (with a +1 for αi). Supplementary Equation (7) is the loss function used for training. Finally, the aleatoric uncertainty, \({\mathbb{E}}[{\sigma }_{{F}_{i}}^{2}]\), and the epistemic uncertainty, \({{{\rm{Var}}}}[{\mu }_{{F}_{i}}]\), can be calculated, respectively, as \({\mathbb{E}}[{\sigma }_{{F}_{i}}^{2}]={\beta }_{i}/({\alpha }_{i}-1)\) and \({{{\rm{Var}}}}[{\mu }_{{F}_{i}}]={\beta }_{i}/[{\nu }_{i}({\alpha }_{i}-1)]\) (Equation (10)).

Gaussian mixture model (GMM)

A GMM models the distribution of the data set as a weighted mixture of K multivariate Gaussians of dimension D62. Each D-variate Gaussian k in the mixture has a mean vector, \({{{{\boldsymbol{\mu }}}}}_{k}\in {{\mathbb{R}}}^{D\times 1}\), and a covariance matrix, \({\Sigma }_{k}\in {{\mathbb{R}}}^{D\times D}\). The density of a data point ξ can then be described as \(p(\xi )=\mathop{\sum }\nolimits_{k = 1}^{K}{\pi }_{k}\,{{{\mathcal{N}}}}(\xi | {{{{\boldsymbol{\mu }}}}}_{k},{\Sigma }_{k})\),

where πk are the mixture weights and satisfy the constraint \(\mathop{\sum }\nolimits_{k = 1}^{K}{\pi }_{k}=1\). To start, the latent features of each training set datum, \({\xi }_{{{{\rm{train}}}}}\in {{\mathbb{R}}}^{D\times 1}\), are extracted from the layer of a trained NN immediately before the per-atom energy prediction41. A GMM is constructed on ξtrain using the expectation-maximization (EM) algorithm with full-rank covariance matrices and mean vectors predetermined using k-means clustering. To determine the number of Gaussians needed, we investigated the trade-off between the Bayesian Information Criterion (BIC) and the silhouette score (see Supplementary Figs. 14 and 15). Using the fitted GMM, we can obtain the uncertainty of the test data by taking the latent features, \({\xi }_{{{{\rm{test}}}}}\in {{\mathbb{R}}}^{D\times 1}\), and evaluating the negative log-likelihood function NLL(ξtest∣ξtrain) for the test data. For all our models, the dimension of the latent features, D, was set to 128. A higher NLL(ξtest∣ξtrain) indicates that the data is “far” from the mean vectors of the Gaussians in the GMM and thus corresponds to high uncertainty.
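The NLL evaluation described above can be sketched in NumPy as follows, assuming the mixture weights, means, and full covariance matrices have already been fitted (for example by EM). The function and argument names are hypothetical, and the Cholesky and log-sum-exp steps are implementation choices made here for numerical stability rather than details taken from this work.

```python
import numpy as np

def gmm_nll(xi, weights, means, covariances):
    """Negative log-likelihood of latent features under a fitted GMM.

    xi: (N, D) feature vectors; weights: K mixture weights summing to 1;
    means: K vectors of length D; covariances: K full (D, D) matrices.
    Returns an array of N NLL values (higher means more uncertain).
    """
    xi = np.atleast_2d(np.asarray(xi, dtype=float))
    dim = xi.shape[1]
    log_terms = []
    for pi_k, mu_k, cov_k in zip(weights, means, covariances):
        diff = xi - np.asarray(mu_k, dtype=float)          # (N, D)
        chol = np.linalg.cholesky(np.asarray(cov_k))       # cov_k = L L^T
        z = np.linalg.solve(chol, diff.T)                  # (D, N)
        maha = (z ** 2).sum(axis=0)                        # squared Mahalanobis distance
        log_det = 2.0 * np.log(np.diag(chol)).sum()
        log_gauss = -0.5 * (dim * np.log(2.0 * np.pi) + log_det + maha)
        log_terms.append(np.log(pi_k) + log_gauss)
    log_terms = np.stack(log_terms)                        # (K, N)
    # Log-sum-exp over the K components.
    shift = log_terms.max(axis=0)
    log_p = shift + np.log(np.exp(log_terms - shift).sum(axis=0))
    return -log_p
```

Because the NLL is a smooth function of the latent features, it can in principle be differentiated with respect to the atomic coordinates when the feature extractor is differentiable.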

Data Sets

Revised MD17 (rMD17)

The rMD17 data set contains snapshots from long molecular dynamics trajectories of ten small organic molecules63,64, in which the energy and forces were calculated at the PBE/def2-SVP level of electronic structure theory. For each molecule, there are 5 splits and each split consists of 1000 training and 1000 testing data points. A new NN is trained for each molecule, split, and UQ model. The data set was obtained from https://doi.org/10.6084/m9.figshare.12672038.v3. Because the test set is drawn randomly from the same trajectory as the training data, rMD17 is more representative of an in-domain interpolative UQ challenge.

Ammonia

The ammonia training and validation data sets comprise a total of 78 geometries, for which the energies and forces were calculated at the BP86-D3/def2-SVP level of theory as implemented in ORCA. The geometries were generated using Hessian displacement in the direction of normal mode vectors on initial molecular conformers generated using RDKit with the MMFF94 force field29. The data set was obtained from https://doi.org/10.24435/materialscloud:2w-6h. The test set contains 129 geometries with energies ranging from 0 to 100 kcal mol−1 (or 25 kcal mol−1 atom−1), calculated at the same level of theory. The geometries were generated using adversarial sampling during trial runs of this work, and are completely independent of the models and data set analyzed in this work. As the test set comprises higher-energy geometries and the training set encompasses low-energy structures near the ground state, the ammonia dataset serves as a fundamental example of an out-of-domain, extrapolative UQ challenge. While energy is generally not a valid way of separating in-domain (ID) and out-of-domain (OOD) data, it does separate the configurations well here because ammonia is such a small molecule. For a more comprehensive analysis of the ID and OOD partition, see Supplementary Section IIA.

Silica glass

The silica glass (SiO2) data set consists of structures sampled from multiple long molecular dynamics trajectories subjected to different conditions including temperature equilibration, cooling, uniaxial tension, uniaxial compression, biaxial tension, biaxial compression, and biaxial shear at different rates using the force-matching potential described in ref. 55, and from adversarial sampling (these samples were obtained during preliminary tests for this study, separately from the results presented here65). Each silica glass structure consists of 699 atoms (233 Si and 466 O atoms). In total, 1591 silica structures were sampled from the molecular dynamics trajectories and 101 structures using adversarial sampling. The structures were then partitioned into a training set that contains only structures generated under low-temperature, low-deformation rate conditions, whereas the test set contains structures extracted from higher temperature and higher deformation rate trajectories. As can be seen in Supplementary Figs. 2–3 and Supplementary Section IIB, it is much harder to split the data for this much bigger system into clearly in-distribution and out-of-distribution parts. DFT calculations were performed on the structures using the Vienna Ab-initio Simulation Package (VASP)66,67,68,69. Details of the MD simulations70,71, DFT calculations, and adversarial sampling of the silica structures are discussed in Supplementary Section IIB. Because of its high configurational complexity (including high-energy fracture geometries) and low chemical complexity, our silica dataset represents a step-up in generalization of UQ in extreme condition regimes72.

Evaluation metrics

Spearman’s rank correlation coefficient

Since UQ should measure how much the model does not know about the data presented to it, we expect that a high true error should correlate with a high predicted uncertainty. In other words, we expect a monotonic relationship between the predicted uncertainty, U, and the true error, ϵ, for a good uncertainty estimator60,73. Since we are particularly interested in UQ in the context of active learning to improve NNIPs where they are most erroneous, we use Spearman’s rank correlation coefficient to assess the reliability of the uncertainty estimator such that the correlation between the rank variable of the predicted uncertainty, RU, and that of the true error, Rϵ, can be quantified. The correlation coefficient can then be defined as \(\rho ={{{\rm{cov}}}}({R}_{U},{R}_{\epsilon })/({\sigma }_{{R}_{U}}{\sigma }_{{R}_{\epsilon }})\),

where cov(RU, Rϵ) is the covariance of the rank variables while \({\sigma }_{{R}_{U}}\) and \({\sigma }_{{R}_{\epsilon }}\) are the standard deviations of the rank variables. In the case of a perfect monotone function, a Spearman correlation of +1 or −1 is expected, whereas a correlation of 0 indicates no association between the ranks of the two variables. However, since this metric only compares ranks of variables, it is still possible for an estimator that constantly predicts low uncertainties in the event of high errors to achieve a high Spearman coefficient. Hence, complementing this with another metric allows us to understand the performance better.
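A minimal NumPy sketch of this rank correlation is given below; the helper names are hypothetical and ties are handled by average ranks, which is an assumption of this example. It also illustrates the caveat above: rescaling the uncertainties by any positive constant leaves the coefficient unchanged.

```python
import numpy as np

def average_ranks(values):
    """Ranks starting at 1, with tied values assigned their average rank."""
    values = np.asarray(values, dtype=float)
    order = np.argsort(values, kind="mergesort")
    ranks = np.empty(len(values), dtype=float)
    ranks[order] = np.arange(1, len(values) + 1)
    for v in np.unique(values):
        tied = values == v
        ranks[tied] = ranks[tied].mean()
    return ranks

def spearman(uncertainty, error):
    """Covariance of the rank variables divided by their standard deviations."""
    r_u = average_ranks(uncertainty)
    r_e = average_ranks(error)
    cov = np.mean((r_u - r_u.mean()) * (r_e - r_e.mean()))
    return float(cov / (r_u.std() * r_e.std()))

errors = np.array([0.1, 0.5, 2.0, 8.0])
# Uncertainties far smaller than the errors still give a perfect rank correlation.
rho_underestimated = spearman(1e-3 * errors, errors)
```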

Area under the receiver operating characteristic curve (ROC-AUC)

Since uncertainty estimates are expected to be high at high true error points, a criterion to split uncertainties and errors into high and low values is established41,74. More precisely, an error threshold, ϵc, is specified such that data with true error higher than the error threshold, ϵ > ϵc, are classified as “high error” points while data with lower true error, ϵ ≤ ϵc, are classified as “low error” points. Similarly, an uncertainty threshold, Uc, is applied to the estimated uncertainty. For a structure to be considered a true positive (TP), both the true error and the estimated uncertainty have to be above their corresponding thresholds ((ϵ > ϵc) ∧ (U > Uc)). A false positive (FP) point is a structure with a true error below its threshold while the estimated uncertainty is above the threshold ((ϵ ≤ ϵc) ∧ (U > Uc)). Conversely, a true negative (TN) point occurs when (ϵ ≤ ϵc) ∧ (U ≤ Uc), and a false negative (FN) when (ϵ > ϵc) ∧ (U ≤ Uc).

However, setting the threshold values requires manual optimization for each uncertainty method, especially since the magnitude and distribution of uncertainties for each method compared in this work vary extensively. Instead of evaluating the true positive rate (TPR) and positive predictive value (PPV) as in previous work41,74, we compute the area under the curve (AUC) of the receiver operating characteristic (ROC) curve such that the quality of the uncertainty methods can be assessed independently of the discrimination threshold45. Note that the threshold for the “target” score, which in this case is the threshold for true error, ϵc, still has to be specified in order for the ROC curve to be plotted. For all models, we set ϵc to be at the 20th percentile of the error distribution because we find that varying the error percentile threshold does not affect the ROC-AUC score by a significant amount (see Supplementary Table 2). We also found that using system-dependent absolute cutoff values gives the same trend as using percentile cutoffs. The more accurate the method is, the higher the ROC-AUC score. An ROC-AUC score of 0.5 indicates that the method has no power to discriminate between high- and low-error samples. A score lower than 0.5 implies that the ranking is inverted, with the method tending to predict low uncertainty for high-error points.
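The threshold-free character of the ROC-AUC can be illustrated with the pairwise (Mann-Whitney) formulation: the score equals the probability that a randomly chosen high-error point receives a higher uncertainty than a randomly chosen low-error point. The sketch below is a hypothetical NumPy implementation under that formulation; it builds all pairs explicitly, so it is only intended for small data sets.

```python
import numpy as np

def roc_auc(uncertainty, error, percentile=20.0):
    """ROC-AUC of the uncertainty as a classifier of high-error points.

    Points with error above the given percentile of the error distribution
    are labeled "high error"; no uncertainty threshold is needed.
    """
    uncertainty = np.asarray(uncertainty, dtype=float)
    error = np.asarray(error, dtype=float)
    threshold = np.percentile(error, percentile)
    high = error > threshold
    pos, neg = uncertainty[high], uncertainty[~high]
    if len(pos) == 0 or len(neg) == 0:
        raise ValueError("need both high- and low-error points")
    # Fraction of (high, low) pairs ranked correctly; ties count as one half.
    diff = pos[:, None] - neg[None, :]
    return float(((diff > 0).sum() + 0.5 * (diff == 0).sum()) / diff.size)
```

A constant uncertainty yields 0.5 by construction, and an uncertainty that ranks errors in reverse order yields 0.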

Miscalibration area

Another way to evaluate the quality of the uncertainty estimates is to quantify the calibration error of the models, which is a numerical measure of the accuracy of the calibration curve. A calibration curve shows the relationship between the predicted and observed frequencies of data points within different ranges. Tran et al.75 proposed comparing the observed fraction of errors that fall within z standard deviations of the mean against the fraction expected for Gaussian random variables constructed with the uncertainty estimates (U(x)) as the variances. In other words, if the quality of the uncertainty estimate is good, we would expect around 68% of the errors to fall within one standard deviation of the mean of the Gaussian distributions constructed using the uncertainty estimates60,73,75,76. From this calibration curve, we can calculate the area between the curve and the line of perfect calibration to provide a single numerical summary of the calibration. This quantity is called the miscalibration area. A perfect UQ method will show exact agreement between the observed and expected fractions and thus yield a miscalibration area of 0.
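One way to compute such a miscalibration area is sketched below, assuming zero-mean Gaussian errors, symmetric intervals around the mean, strictly positive variances, and a uniform grid of expected fractions. These choices and the function name are assumptions of this example and may differ from the implementation used in refs. 75,76.

```python
import math
import numpy as np

def miscalibration_area(error, variance, n_levels=101):
    """Area between the calibration curve and the line of perfect calibration."""
    error = np.asarray(error, dtype=float)
    sigma = np.sqrt(np.asarray(variance, dtype=float))
    # Probability mass of N(0, sigma^2) inside the interval [-|error|, |error|].
    inside = np.array([math.erf(abs(e) / (s * math.sqrt(2.0)))
                       for e, s in zip(error, sigma)])
    expected = np.linspace(0.0, 1.0, n_levels)
    # Observed fraction of errors that fall inside the central interval
    # expected to contain a fraction p of them.
    observed = np.array([(inside <= p).mean() for p in expected])
    gap = np.abs(observed - expected)
    # Trapezoidal rule over the expected fractions.
    return float(np.sum(0.5 * (gap[1:] + gap[:-1]) * np.diff(expected)))
```

With this convention, an overconfident estimator (variances much smaller than the squared errors) produces an observed curve well below the diagonal and an area approaching 0.5.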

Calibrated negative log-likelihood function (cNLL)

For observed (true) errors, a negative log likelihood can be calculated as an evaluation metric assuming that the errors are normally distributed with a mean of 0 and variances given by the predicted uncertainties. However, given that some methods do not directly produce uncertainty estimates equivalent to variances, the uncertainty estimates can be calibrated such that they resemble variances more closely37,60. Specifically, the “variances” can be approximated as \({\hat{\sigma }}^{2}(x)=aU(x)+b\), where the scalars a and b can be estimated as the values that minimize the negative log-likelihood function of errors in the validation set, \({{{{\mathcal{D}}}}}_{val}\).
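Assuming zero-mean Gaussian errors ϵ(x) with variance aU(x) + b and an unnormalized sum over the validation set (the normalization is an assumption here), this minimization can be written as:

```latex
(a, b) = \underset{a', b'}{\arg\min} \sum_{x \in \mathcal{D}_{val}}
\left[ \frac{1}{2}\ln\left(2\pi\left(a'U(x)+b'\right)\right)
+ \frac{\epsilon(x)^{2}}{2\left(a'U(x)+b'\right)} \right]
```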

With the calibrated scalars a and b, the cNLL metric can then be computed on the test set, \({{{{\mathcal{D}}}}}_{test}\).
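Under the same zero-mean Gaussian assumption, and again taking an unnormalized sum over data points as an assumption, the metric takes the form:

```latex
\mathrm{cNLL} = -\sum_{x \in \mathcal{D}_{test}}
\ln\left[ \frac{1}{\sqrt{2\pi\left(aU(x)+b\right)}}
\exp\left(-\frac{\epsilon(x)^{2}}{2\left(aU(x)+b\right)}\right) \right]
```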

Adversarial sampling

In addition to evaluating the reliability of an uncertainty estimator using metrics like Spearman’s rank correlation coefficient and ROC-AUC, we would like to assess whether new structures sampled using the estimated uncertainties from each method help to increase robustness of the NNIPs in different ways. To this end, we employed the adversarial sampling method proposed by Schwalbe-Koda et al.29 to sample new conformations that maximize the estimated uncertainty such that these adversarial examples can be incorporated into the retraining of the NNs in an active learning (AL) loop. This tests the reliability, smoothness, and utility of the UQ methods because adversarial sampling requires the UQ method to systematically identify underrepresented regions about which the models are most uncertain. Effectively, improvement in robustness of NNIPs after active learning is a better metric for uncertainty estimation methods, since ideally a good uncertainty estimator is able to acquire new training examples that improve the model’s generalizability more than random sampling.

In the adversarial sampling strategy, a perturbation, δ, is applied to randomly chosen structures, x, from the initial training data. The perturbation is then iteratively updated using gradient ascent to maximize the uncertainty of the perturbed structures, U(xδ), weighted by their thermodynamic probability, p(xδ).
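Following the formulation of ref. 29, and with the symbol for the adversarial objective introduced here as a notational assumption, the optimization can be written as:

```latex
\max_{\delta}\ \mathcal{L}_{\mathrm{adv}}(x, \delta) = \max_{\delta}\ p(x_{\delta})\, U(x_{\delta})
```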

More precisely, U(xδ) is taken to be \({\sigma }_{F}^{2}({x}_{\delta })\) for the ensemble-based method (Equation (5)) and MVE, Var[μF(xδ)] for evidential regression (Equation (10)), and NLL(xδ∣xtrain) for GMM (Equation (12)). p(xδ) is the thermodynamic probability of the perturbed structures based on the distribution of data already available to the model.
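Consistent with the description of its numerator and denominator, and following ref. 29, this probability can be written as a Boltzmann weight normalized over the initial training set \({{{\mathcal{D}}}}\):

```latex
p(x_{\delta}) = \frac{\exp\left(-\hat{E}(x_{\delta})/k_{\mathrm{B}}T\right)}
{\sum_{x \in \mathcal{D}} \exp\left(-E(x)/k_{\mathrm{B}}T\right)}
```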

where kB is the Boltzmann constant, T the absolute temperature, and \(\hat{E}({x}_{\delta })\) in the numerator is the predicted energy of the perturbed structure, while E(x) in the denominator denotes ground truth energy of structures in the initial training data set. Refer to Supplementary Section III for parameters used in adversarial sampling.

Molecular dynamics (MD) simulations

NN-based MD simulations were performed in the NVT ensemble with the Nosé-Hoover thermostat. In the case of ammonia, 100 MD simulations, each 5 ps long, were run for four different temperatures (300 K, 500 K, 750 K, and 1000 K) and for each UQ method with a time step of 0.5 fs. The initial configurations for all simulations were picked randomly from the initial training data set. The trajectories were considered unstable if the distance between atoms was smaller than 0.75 Å or larger than 2.25 Å, or if the predicted energy was lower than the global minimum energy, which is set to a reference value of 0 kcal mol−1 in this work. For simulations of silica, ten 1 ns-long trajectories at a temperature of 2500 K were performed for each UQ method at all generations with a time step of 0.25 fs. The initial structures for all simulations were picked randomly from the structures sampled from the 2500 K equilibration simulation in the initial training data set. The trajectories were considered unstable if the kinetic energy became 0, a null value, or greater than 10,000 kcal mol−1 (14.3 kcal mol−1 atom−1) (due to destabilization of the predicted potential energy in a false minimum27), or if the distance between any two atoms was less than 1.0 Å. No upper bound was imposed on the atomic distances because the simulations tend to fail before any bond stretching occurs. All simulations were performed using the Atomic Simulation Environment (ASE) library in the Python language77.
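The ammonia stability criteria can be sketched as a check on a single MD frame. The function below is hypothetical and assumes that the distance bounds apply to every pair of atoms in the molecule, which the text does not state explicitly; it takes plain coordinate arrays rather than ASE objects so that it stays self-contained.

```python
import numpy as np

def is_unstable_ammonia(positions, energy, d_min=0.75, d_max=2.25, e_min=0.0):
    """Flag an ammonia frame as unstable.

    positions: (n_atoms, 3) Cartesian coordinates in Å.
    energy:    predicted energy in kcal/mol relative to the global minimum.
    Assumption: the distance bounds are applied to all atom pairs.
    """
    positions = np.asarray(positions, dtype=float)
    diff = positions[:, None, :] - positions[None, :, :]
    dist = np.linalg.norm(diff, axis=-1)
    # Keep each unique pair once (upper triangle without the diagonal).
    pair = dist[np.triu_indices(len(positions), k=1)]
    too_close = (pair < d_min).any()
    too_far = (pair > d_max).any()
    return bool(too_close or too_far or energy < e_min)
```

Applied to every frame of a trajectory, the first frame for which this returns `True` would mark the point at which the simulation is considered to have failed.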

Data availability

The molecular dynamics trajectories generated in the current study have been deposited in the Materials Cloud Archive under accession code https://doi.org/10.24435/materialscloud:mv-a3 (ref. 65).

Code availability

The code used to produce the results in this paper is available at https://github.com/learningmatter-mit/UQ_singleNN.git under the MIT license.

References

Butler, K. T., Davies, D. W., Cartwright, H., Isayev, O. & Walsh, A. Machine learning for molecular and materials science. Nature 559, 547–555 (2018).

Schwalbe-Koda, D. & Gómez-Bombarelli, R. in Machine Learning Meets Quantum Physics (Lecture Notes in Physics, Vol. 968) (eds Schütt, K. et al.) 445–467 (Springer, 2020).

Kocer, E., Ko, T. W. & Behler, J. Neural network potentials: a concise overview of methods. Annu. Rev. Phys. Chem. 73, 163–186 (2022).

Behler, J. Four generations of high-dimensional neural network potentials. Chem. Rev. 121, 10037–10072 (2021).

Yang, K. et al. Analyzing learned molecular representations for property prediction. J. Chem. Inf. Model. 59, 3370–3388 (2019).

Mueller, T., Hernandez, A. & Wang, C. Machine learning for interatomic potential models. J. Chem. Phys. 152, 050902 (2020).

Botu, V., Batra, R., Chapman, J. & Ramprasad, R. Machine learning force fields: Construction, validation, and outlook. J. Phys. Chem. C 121, 511–522 (2017).

Behler, J. & Parrinello, M. Generalized neural-network representation of high-dimensional potential-energy surfaces. Phys. Rev. Lett. 98, 146401 (2007).

Onat, B., Cubuk, E. D., Malone, B. D. & Kaxiras, E. Implanted neural network potentials: application to Li-Si alloys. Phys. Rev. B 97, 1–9 (2018).

Gokcan, H. & Isayev, O. Learning molecular potentials with neural networks. Wiley Interdiscip. Rev. Comput. Mol. Sci. 12, 1–22 (2022).

Schütt, K. T., Sauceda, H. E., Kindermans, P.-J. J., Tkatchenko, A. & Müller, K.-R. R. SchNet—a deep learning architecture for molecules and materials. J. Chem. Phys. 148, 241722 (2018).

Schütt, K. T., Unke, O. T. & Gastegger, M. Equivariant message passing for the prediction of tensorial properties and molecular spectra. In Proc. 38th Int. Conf. on Machine Learning Vol. 139 (eds. Meila, M. & Zhang, T.) 9377–9388 (PMLR, 2021).

Batzner, S. et al. E(3)-equivariant graph neural networks for data-efficient and accurate interatomic potentials. Nat. Commun. 13, 2453 (2022).

Zubatyuk, R., Smith, J. S., Nebgen, B. T., Tretiak, S. & Isayev, O. Teaching a neural network to attach and detach electrons from molecules. Nat. Commun. 12, 1–11 (2021).

Unke, O. T. & Meuwly, M. PhysNet: a neural network for predicting energies, forces, dipole moments, and partial charges. J. Chem. Theory Comput. 15, 3678–3693 (2019).

Gasteiger, J., Groß, J. & Günnemann, S. Directional message passing for molecular graphs. In International Conference on Learning Representations (ICLR, 2020).

Gastegger, M. & Marquetand, P. High-dimensional neural network potentials for organic reactions and an improved training algorithm. J. Chem. Theory Comput. 11, 2187–2198 (2015).

Ang, S. J., Wang, W., Schwalbe-Koda, D., Axelrod, S. & Gómez-Bombarelli, R. Active learning accelerates ab initio molecular dynamics on reactive energy surfaces. Chem 7, 1–32 (2021).

Wang, Z., Han, Y., Li, J. & He, X. Combining the fragmentation approach and neural network potential energy surfaces of fragments for accurate calculation of protein energy. J. Phys. Chem. 2020, 3035 (2020).

Wang, W., Axelrod, S. & Gómez-Bombarelli, R. Differentiable molecular simulations for control and learning. Preprint at http://arxiv.org/abs/2003.00868 (2020).

Marchand, D., Jain, A., Glensk, A. & Curtin, W. A. Machine learning for metallurgy I. A neural-network potential for Al-Cu. Phys. Rev. Mater. 4, 1–21 (2020).

Jakse, N. et al. Machine learning interatomic potentials for aluminium: application to solidification phenomena. J. Condens. Matter Phys. 35, 035402 (2022).

Natarajan, S. K. & Behler, J. Neural network molecular dynamics simulations of solid-liquid interfaces: water at low-index copper surfaces. Phys. Chem. Chem. Phys. 18, 28704–28725 (2016).

Morawietz, T., Singraber, A., Dellago, C. & Behler, J. How van der Waals interactions determine the unique properties of water. Proc. Natl Acad. Sci. USA 113, 8368–8373 (2016).

Ruza, J. et al. Temperature-transferable coarse-graining of ionic liquids with dual graph convolutional neural networks. J. Chem. Phys. 153, 164501 (2020).

Fu, X. et al. Forces are not enough: benchmark and critical evaluation for machine learning force fields with molecular simulations. Preprint at https://arxiv.org/abs/2210.07237 (2022).

Morrow, J. D., Gardner, J. L. A. & Deringer, V. L. How to validate machine-learned interatomic potentials. J. Chem. Phys. 158, 121501 (2023).

Heid, E., McGill, C. J., Vermeire, F. H. & Green, W. H. Characterizing uncertainty in machine learning for chemistry. J. Chem. Inf. Model. 63, 4012–4029 (2023).

Schwalbe-Koda, D., Tan, A. R. & Gómez-Bombarelli, R. Differentiable sampling of molecular geometries with uncertainty-based adversarial attacks. Nat. Commun. 12, 1–12 (2021).

Shuaibi, M., Sivakumar, S., Chen, R. Q. & Ulissi, Z. W. Enabling robust offline active learning for machine learning potentials using simple physics-based priors. Mach. Learn.: Sci. Technol. 2, 025007 (2021).

Vandermause, J. et al. On-the-fly active learning of interpretable Bayesian force fields for atomistic rare events. npj Comput. Mater. 6, 20 (2020).

Jinnouchi, R., Lahnsteiner, J., Karsai, F., Kresse, G. & Bokdam, M. Phase transitions of hybrid perovskites simulated by machine-learning force fields trained on the fly with Bayesian inference. Phys. Rev. Lett. 122, 225701 (2019).

Thaler, S., Doehner, G. & Zavadlav, J. Scalable Bayesian uncertainty quantification for neural network potentials: promise and pitfalls. J. Chem. Theory Comput. 19, 4520–4532 (2023).

Lakshminarayanan, B., Pritzel, A. & Blundell, C. Simple and scalable predictive uncertainty estimation using deep ensembles. Adv. Neural Inf. Process. Syst. 30, 6405–6416 (2017).

Gawlikowski, J. et al. in Artificial Intelligence Review (ed. Liu, D.) 56, 1513–1589 (Springer Netherlands, 2023).

Nandy, A., Duan, C., Janet, J. P., Gugler, S. & Kulik, H. J. Strategies and software for machine learning accelerated discovery in transition metal chemistry. Ind. Eng. Chem. Res. 57, 13973–13986 (2018).

Janet, J. P., Duan, C., Yang, T., Nandy, A. & Kulik, H. J. A quantitative uncertainty metric controls error in neural network-driven chemical discovery. Chem. Sci. 10, 7913–7922 (2019).

Oberdiek, P., Rottmann, M. & Gottschalk, H. in Artificial Neural Networks in Pattern Recognition. Lecture Notes in Computer Science vol. 11081 (eds. Pancioni, L., Schwenker, F., & Trentin, E.) 113–125 (LNAI, Springer International Publishing, 2018).

Lee, J. & Alregib, G. Gradients as a measure of uncertainty in neural networks. In: Proc. Int. Conf. on Image Processing, ICIP 2416–2420 (2020).

Wollschläger, T., Gao, N., Charpentier, B., Ketata, M. A. & Günnemann, S. Uncertainty estimation for molecules: Desiderata and methods. In: Proc. 40th Int. Conf. on Machine Learning. Vol 202. (eds. Krause, A., Brunskill, E., Cho, K., Engelhardt, B., Sabato, S., & Scarlett, J.) 37133-37156 (ICML, 2023).

Zhu, A., Batzner, S., Musaelian, A. & Kozinsky, B. Fast uncertainty estimates in deep learning interatomic potentials. J. Chem. Phys. 158, 164111 (2023).

Nix, D. A. & Weigend, A. S. Estimating the mean and variance of the target probability distribution. Proceedings of 1994 IEEE International Conference on Neural Networks 1, 55–60 (1994).

Amini, A., Schwarting, W., Soleimany, A. & Rus, D. Deep evidential regression. Adv. Neural Inf. Process. Syst. 33, 1–20 (2020).

Soleimany, A. P. et al. Evidential deep learning for guided molecular property prediction and discovery. ACS Cent. Sci. 7, 1356–1367 (2021).

van Amersfoort, J., Smith, L., Teh, Y. W. & Gal, Y. Uncertainty estimation using a single deep deterministic neural network. In: Proc. 37th Int. Conf. on Machine Learning (eds. Daume III, H. & Singh, A.) 9632–9642 (2020).

Behler, J. Perspective: machine learning potentials for atomistic simulations. J. Chem. Phys. 145, 170901 (2016).

Kulichenko, M. et al. The rise of neural networks for materials and chemical dynamics. J. Phys. Chem. Lett. 12, 6227–6243 (2021).

Gabriel, J. J. et al. Uncertainty quantification in atomistic modeling of metals and its effect on mesoscale and continuum modeling: a review. JOM 73, 149–163 (2021).

Tumer, K. & Ghosh, J. Error correlation and error reduction in ensemble classifiers. Conn. Sci. 8, 385–404 (1996).

Mendes-Moreira, J., Soares, C., Jorge, A. M. & De Sousa, J. F. Ensemble approaches for regression: a survey. ACM Comput. Surv. 45 (2012).

Seitzer, M., Tavakoli, A., Antic, D. & Martius, G. On the pitfalls of heteroscedastic uncertainty estimation with probabilistic neural networks. Preprint at http://arxiv.org/abs/2203.09168 (2022).

Van Beest, B. W. H., Kramer, G. J. & Van Santen, R. A. Force fields for silicas and aluminophosphates based on ab initio calculations. Phys. Rev. Lett. 64, 1955–1958 (1990).

Tangney, P. & Scandolo, S. An ab initio parametrized interatomic force field for silica. J. Chem. Phys. 117, 8898–8904 (2002).

Tsuneyuki, S., Tsukada, M., Aoki, H. & Matsui, Y. First-principles interatomic potential of silica applied to molecular dynamics. Phys. Rev. Lett. 61, 869–872 (1988).

Urata, S., Nakamura, N., Aiba, K., Tada, T. & Hosono, H. How fluorine minimizes density fluctuations of silica glass: Molecular dynamics study with machine-learning assisted force-matching potential. Mater. Des. 197, 109210 (2021).

Pedone, A., Bertani, M., Brugnoli, L. & Pallini, A. Interatomic potentials for oxide glasses: past, present, and future. J. Non-Cryst. Solids: X 15, 100115 (2022).

Urata, S., Nakamura, N., Tada, T. & Hosono, H. Molecular dynamics study on the co-doping effect of Al2O3 and fluorine to reduce Rayleigh scattering of silica glass. J. Am. Ceram. Soc. 104, 5001–5015 (2021).

Balyakin, I. A., Rempel, S. V., Ryltsev, R. E. & Rempel, A. A. Deep machine learning interatomic potential for liquid silica. Phys. Rev. E 102, 052125 (2020).

Koh, P. W. & Liang, P. Understanding black-box predictions via influence functions. In: Proc. 34th Int. Conf. on Machine Learning (eds. Precup, D. & Teh, Y.) PMLR 70 (2017).

Hirschfeld, L., Swanson, K., Yang, K., Barzilay, R. & Coley, C. W. Uncertainty quantification using neural networks for molecular property prediction. J. Chem. Inf. Model. 60, 3770–3780 (2020).

Gurevich, P. & Stuke, H. Gradient conjugate priors and multi-layer neural networks. Artif. Intell. 278 (2020).

Reynolds, D. in Encyclopedia of Biometrics (eds. Li, S. & Jain, A.) 659–663 (Springer US, 2009).

Christensen, A. S. & Anatole von Lilienfeld, O. On the role of gradients for machine learning of molecular energies and forces. Mach. Learn.: Sci. Technol. 1 (2020).

Chmiela, S. et al. Machine learning of accurate energy-conserving molecular force fields. Sci. Adv. 3, e1603015 (2017).

Tan, A. R., Urata, S., Goldman, S., Dietschreit, J. C. B. & Gómez-Bombarelli, R. Data from: Single-model uncertainty quantification in neural network potentials does not consistently outperform model ensembles. https://doi.org/10.24435/materialscloud:mv-a3 (2023).

Kresse, G. & Furthmüller, J. Efficiency of ab-initio total energy calculations for metals and semiconductors using a plane-wave basis set. Comput. Mater. Sci. 6, 15–50 (1996).

Kresse, G. & Furthmüller, J. Efficient iterative schemes for ab initio total-energy calculations using a plane-wave basis set. Phys. Rev. B 54, 11169–11186 (1996).

Kresse, G. & Joubert, D. From ultrasoft pseudopotentials to the projector augmented-wave method. Phys. Rev. B 59, 1758–1775 (1999).

Perdew, J. P., Burke, K. & Ernzerhof, M. Generalized gradient approximation made simple. Phys. Rev. Lett. 77, 3865–3868 (1996).

Plimpton, S. Fast parallel algorithms for short-range molecular dynamics. J. Comput. Phys. 117, 1–19 (1995).

Evans, D. J. Computer “experiment” for nonlinear thermodynamics of Couette flow. J. Chem. Phys. 78, 3297–3302 (1983).

Kovács, D. P. et al. Linear atomic cluster expansion force fields for organic molecules: Beyond RMSE. J. Chem. Theory Comput. 17, 7696–7711 (2021).

Varivoda, D., Dong, R., Omee, S. S. & Hu, J. Materials property prediction with uncertainty quantification: A benchmark study. Appl. Phys. Rev. 10, 021409 (2023).

Kahle, L. & Zipoli, F. Quality of uncertainty estimates from neural network potential ensembles. Phys. Rev. E 105, 1–11 (2022).

Tran, K. et al. Methods for comparing uncertainty quantifications for material property predictions. Mach. Learn.: Sci. Technol. 1, 025006 (2020).

Hu, Y., Musielewicz, J., Ulissi, Z. W. & Medford, A. J. Robust and scalable uncertainty estimation with conformal prediction for machine-learned interatomic potentials. Mach. Learn.: Sci. Technol. 3, 045028 (2022).

Hjorth Larsen, A. et al. The atomic simulation environment—a Python library for working with atoms. J. Phys.: Condens. Matter 29, 273002 (2017).

Acknowledgements

This work was supported by funding from AGC Inc.

Author information

Contributions

R.G.-B. and A.R.T. conceived the project. A.R.T. designed and conducted the experiments, performed data analysis and wrote the manuscript. S.U. performed molecular dynamics simulations and DFT calculations on the initial silica training data set. S.G. and J.C.B.D. provided fruitful discussions and contributed to manuscript writing. J.C.B.D. contributed to content organization and manuscript review. R.G.-B. supervised the research, secured the funding and contributed to manuscript writing.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Tan, A.R., Urata, S., Goldman, S. et al. Single-model uncertainty quantification in neural network potentials does not consistently outperform model ensembles. npj Comput Mater 9, 225 (2023). https://doi.org/10.1038/s41524-023-01180-8

DOI: https://doi.org/10.1038/s41524-023-01180-8
