Abstract

Structural search and feature extraction are central to modern materials design; their efficiency is currently limited but can potentially be boosted by machine learning (ML). Here, we develop an ML-based prediction-analysis framework, which includes a symmetry-based combinatorial crystal optimization program (SCCOP) and an additive feature attribution model, to significantly reduce computational costs and to extract property-related structural features. Our method is highly accurate and predictive, and it extracts structural features from the desired structures to guide materials design. We first test SCCOP on 35 typical compounds to demonstrate its generality. As a case study, we apply our approach to a two-dimensional B-C-N system, identifying 28 previously undiscovered stable structures across 82 compositions; our analysis further establishes the structural features that contribute most to the energy and bandgap. Compared with conventional approaches, SCCOP is about 10 times faster while maintaining comparable accuracy. Our framework is generally applicable for precise and efficient structural search and provides insights into the relationship between ML-extracted structural features and physical properties.


Introduction

Predicting the crystal structure for a given composition before experimental synthesis is central to computation-guided materials discovery. State-of-the-art approaches for crystal structure prediction (CSP) rely on efficient search algorithms such as simulated annealing (SA)1,2,3, genetic algorithm (GA)7,8,9, and particle-swarm optimization (PSO)4,5,6. To search for the global minima on the potential energy surface, SA focuses on overcoming energy barriers, GA uses self-improving methods, and PSO takes advantage of the collective intelligence of particles. Conventional methods require extensive energy and force evaluations by density functional theory (DFT)10,11 to explore the configuration space. As the numbers of atoms and species increase, the number of configurations grows exponentially, leading to prohibitive time and resource consumption. In this context, machine learning (ML) is particularly powerful in reducing the computational cost by adopting a surrogate model, e.g., the crystal graph convolutional neural network (CGCNN)12 and other graph-based prediction models13,14,15. For instance, CGCNN represents the crystal topology as undirected multigraphs, which efficiently integrate structural features and can be used to predict physical properties in place of DFT calculations. More recently, ML has been employed in CSP approaches16,17, demonstrating significant speedups in search algorithms by replacing DFT calculations with surrogate models. However, these methods typically require a substantial amount of training data to build a general potential, and their effectiveness for compositions absent from the database remains uncertain.

After extensive structural searches, extracting property-related structural features is essential for exploring materials. Numerous efforts have been made to explore and visualize the underlying relationship between global and local atomic structures and physical properties such as stability and conductivity18,19. For example, the transformation between folded and unfolded states in protein-folding dynamics has been unveiled by encoding the entire mapping from biomolecular coordinates to a Markov state model20; similarly, the transitions that contribute to Li-ion conduction have been clearly identified by using a graph dynamical network to learn local atomic environments instead of global dynamics21. These studies imply that local atomic-scale structural motifs play a critical role in physical properties. However, this relationship remains unclear in the field of structure generation because of the vast number of possible materials and their complex interatomic bonding, which are difficult to analyze by conventional methods. An ML-based framework for structural search and data analysis is thus critically needed to improve the efficiency of materials exploration.

Two-dimensional (2D) materials have attracted extensive research, especially after the successful synthesis of 2D materials such as carbon biphenylene22 and T-carbon nanowires23,24, because special structural features, e.g., nonhexagonal bonding and carbon tetrahedra, induce fascinating physical phenomena. Because boron, carbon, and nitrogen have similar atomic masses and electronegativities, they can be combined into abundant planar BxCyN1-x-y compounds25,26,27, which allows the stability and electronic structure to be modulated by tuning the alloy composition28. Nevertheless, systematic structural searches for the B-C-N alloy system remain rare29,30.

In this work, we construct a prediction-analysis framework that combines a symmetry-based combinatorial crystal optimization program (SCCOP) for the structural search of target compositions with an additive feature attribution model for data analysis. The generality of SCCOP is showcased by testing it on 35 typical compounds. A practical demonstration on a 2D B-C-N system illustrates high-throughput structural search and the framework's ability to extract structural features. We first convert the structures generated from the 17 plane space groups to crystal vectors using a graph neural network (GNN) and predict their energies. Bayesian optimization is then performed to locate structures near the minima of the potential energy surface. We optimize the desired structures with ML-accelerated SA, in conjunction with a limited number of DFT calculations, to obtain the lowest-energy configurations. We further demonstrate that the additive feature attribution model can efficiently capture the structural features that dominate the energy and bandgap. We identify five low-energy wide-bandgap materials among all the B-C-N compounds, whose bandgaps and mechanical performance are comparable to those of 2D hexagonal BN. Finally, we compare the performance of three methods, SCCOP, DFT-GA, and DFT-PSO, which shows that SCCOP is about 10 times faster while maintaining comparable accuracy.

Results and discussion

We first apply SCCOP to 35 representative 2D compounds to demonstrate its generality. We then employ SCCOP to explore 82 different compositions of the B-C-N system (see Supplementary Figs. 1–4); for each composition, we select the structures up to 0.5 eV atom−1 above the convex hull, yielding a total of 2623 structures. Next, we relate the average energy and bandgaps to structural features extracted by the additive feature attribution model. Using these approaches, we discover five N-rich wide-bandgap insulators. Lastly, we compare SCCOP with DFT-based methods, namely DFT-GA and DFT-PSO, which are employed in the mainstream USPEX9 and CALYPSO5 structural search codes, respectively.

Generality of SCCOP

We begin by demonstrating the generality of SCCOP in CSP; the workflow is shown in Fig. 1. We apply SCCOP to 2D materials and select 35 representative compounds to evaluate its performance. To ensure fairness, the data of these 35 compounds are excluded from all training, validation, and testing processes. Using transfer learning, we fine-tune the GNN model, which allows the pretrained model to be reused for different compounds. This approach significantly reduces the number of DFT calculations required, with only 180 single-point energy calculations performed per compound. Figure 2a compares the lowest-energy structure in the 2D material database with the structures discovered by SCCOP. SCCOP reaches the lowest database energy for 30 compounds, with an average computational time of 6.38 minutes. The remaining five compounds, Bi2Se3, GaS, MoS2, Ti2C, and WS2, have puckered lowest-energy structures. SCCOP uses the 17 plane space groups to search for 2D materials and introduces perturbations in the z direction to generate puckered structures. Note that although SCCOP covers all planar structures, it does not cover all puckered ones.

Step 1: Generating structures by symmetry. Step 2: Characterizing structures as crystal vectors and exploring the potential energy surface by Bayesian optimization. Step 3: Updating the energy prediction model. Step 4: Optimizing structures to obtain the lowest-energy configuration by ML and DFT. The whole program runs in a closed loop.

Additionally, Fig. 2b presents the three lowest-energy structures for eight compounds. The results show that seven of these structures have lower energies than those in the database. For example, for AgI, the lowest-energy structure in the database is a honeycomb structure (−2.308 eV atom−1), whereas SCCOP identifies a more energetically favorable puckered structure in space group P21/m (−2.37 eV atom−1). Furthermore, MgCl2 is recorded in the database with four-fold coordination (−3.509 eV atom−1), but SCCOP finds that six-fold coordination has a lower energy (−3.591 eV atom−1). These findings underscore the broad applicability of SCCOP to 2D materials. Additional results supporting the generality of SCCOP are provided in Supplementary Figs. 5–9.

Energy-related feature extraction

For a thorough understanding of the connection between stability and structural features, we first plot the ternary phase diagram of the B-C-N system in Fig. 3a. In addition to 11 previously reported structures (blue circles)31,32,33, 28 dynamically stable low-energy structures are discovered (red hollow triangles). Figure 3b lists examples, including more stable structures in previously explored compositions such as B1C1, B3C5, B2N3, and C4N1, as well as structures in previously unexplored compositions such as B1N2, C5N4, B2C1N2, and B2C3N1. The systematic search via SCCOP thus greatly extends the stable phases of the B-C-N system. We note that the low-energy structures lie on the line where the B:N stoichiometric ratio is 1:1, e.g., B1N1, B1C1N1, B1C2N1, and B1C4N1, because the valence electrons of boron and nitrogen can be fully paired, lowering the energy of the structure. By contrast, the average number of valence electrons per atom is below four in boron carbides and above four in carbon nitrides; both deviations hinder electron pairing, so their formation energies are relatively high. The phonon spectra of all searched stable structures are shown in Supplementary Figs. 10–12.
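The electron-counting argument above can be made concrete with a few lines of Python. This is a bookkeeping sketch, not part of SCCOP; it assumes the usual valence counts B = 3, C = 4, N = 5 and a hypothetical dictionary format for compositions.

```python
from fractions import Fraction

# Valence electrons per atom: B (group 13) = 3, C (group 14) = 4, N (group 15) = 5.
VALENCE = {"B": 3, "C": 4, "N": 5}

def average_valence(composition):
    """Average valence electrons per atom for a composition such as {"B": 1, "C": 2, "N": 1}."""
    n_atoms = sum(composition.values())
    n_electrons = sum(VALENCE[element] * count for element, count in composition.items())
    return Fraction(n_electrons, n_atoms)

for formula in ({"B": 1, "N": 1}, {"B": 1, "C": 2, "N": 1}, {"B": 1, "C": 1}, {"C": 4, "N": 1}):
    print(formula, average_valence(formula))
```

Any composition with B:N = 1:1 averages exactly four valence electrons per atom regardless of its carbon content, whereas boron carbides fall below four and carbon nitrides rise above it.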

a Ternary phase diagram of the B-C-N system. All calculations are carried out at 0 K. Borene, graphene, and nitrogen are chosen as the corners of the Gibbs triangle. Blue circles and red hollow triangles represent previously reported stable compounds and newly searched stable structures, respectively; the gray dashed line indicates the compositions with a B:N ratio of 1. b Typical stable structures of four compounds searched by SCCOP. c Distribution of the crystal vectors projected onto a 2D plane by TSNE dimensionality reduction. The energy contributions of the structural motifs in the four compounds are listed on the sides; each motif contains a center atom and its neighbor atoms.

Next, we cluster structures by their crystal vectors and extract stable structural features in Fig. 3c. The crystal vectors are strongly related to the atomic species of the compounds and fall clearly into four clusters: carbon nitrides (CxN1-x), boron carbides (BxC1-x), boron nitrides (BxN1-x), and boron carbon nitrides (BxCyN1-x-y). This suggests that compounds in the same cluster have similar electronic structures and therefore form structural features with similar energies, which makes it possible for the GNN to predict energy from these features. For all four compound classes, ML finds that sp2 hybridization with bond angles of 120° is a universal structural feature, as the number of valence electrons is close to four per atom; the honeycomb structure might thus be energetically favorable. In addition, B-centered structural features contribute less to lowering the energy than C- and N-centered ones, primarily because of boron's electron-deficient bonding nature34. Carbon and nitrogen atoms, in contrast, can form conjugated \(\pi\) bonds or fill empty p orbitals with lone pairs of electrons to enhance stability. In the carbon nitrides, the two most common types of nitrogen atoms are found, i.e., pyridinic-N (−0.68 eV) and graphitic-N (−0.61 eV)31. A pyridinic-N atom is coordinated to two carbons and has one orbital occupied by a lone pair of electrons, whereas a graphitic-N atom is sp2-hybridized and bonded to three carbon atoms. In the boron carbides and boron nitrides, boron atoms tend to bond with more than three atoms, implying that boron can stabilize the structure by forming coordination bonds or multicenter bonds29. Moreover, because of the good match of chemical valence, three-fold coordination dominates the structural features of boron carbon nitrides. These extracted structural features deepen the understanding of structural stability and may guide future searches for low-energy B-C-N materials.

Bandgap-related feature extraction

To determine how elemental composition and bandgap are related, we plot the bandgap distribution of the B-C-N system in Fig. 4a, which shows narrower bandgaps for the B-rich and C-rich compositions and wider bandgaps for the N-rich compositions. Interestingly, two metallic regimes lie on either side of the line with a B:N ratio of 1 (red dashed line in Fig. 4a); this is because the mismatch of valence electrons produces a band that crosses the Fermi level. Suitable compositions (e.g., B:N = 3:1 and 1:3) help to open the bandgap, while N-rich compounds are more likely to have larger bandgaps. We cluster the structural features by coordination number in Fig. 4b. Two-fold- and three-fold-coordinated carbon atoms play a key role in closing the bandgap because of their free p electrons. In contrast, four-fold-coordinated carbon, strongly electronegative nitrogen, and six-fold-coordinated boron contribute little to the electrical conductivity, because their electrons are either fully paired or not free. Overall, ML enables bandgap analysis from the perspective of coordination number, and the resulting conclusions are consistent with physical intuition.

a Bandgap distribution of the B-C-N system. For each composition, the bandgap of the lowest-energy structure is calculated. The red dashed line indicates the compositions with a B:N ratio of 1. b Contributions to the bandgap from different coordination numbers. Brown, pink, and blue denote carbon, nitrogen, and boron, respectively. c Structural features that open or close the bandgap in four typical structures; the spatial distribution of the valence-band-edge states, the band structure near the Fermi level, and the density of states (DOS) are also depicted. The bandgap contributions and structural features are obtained from the additive feature attribution model.

Furthermore, we consider the contribution of larger structural features comprising several atoms to the bandgap. The percentage contribution is defined as \(F=\sum_{i}\frac{G_{i}}{G_{\rm{tot}}}\times 100\%\), where the summation runs over the atoms \(i\) in the selected structural feature, \(G_{i}\) is the bandgap contribution of atom \(i\) given by the additive feature attribution model, and \(G_{\rm{tot}}\) is the total contribution to opening or closing the bandgap. A greater F therefore implies that the structural feature is more important to the bandgap. Figure 4c gives four example structures to show the main factors identified by ML for bandgap formation. In C4N1, the band-edge states are mainly projected on the N-C-C-N chain, and ML identifies that the chain provides 86% of the contribution to the band-edge states. The N-C-C-N chain introduces a localized low-energy impurity level near the Fermi level, which splits the electron cloud across the 5-, 6-, and 8-membered rings. In B1C1, C chains are identified as the central factor in closing the bandgap (100% contribution); they form a continuous electron cloud that spreads into the empty orbitals of adjacent boron atoms.

In B2C1N2 and B2N3, 6- and 8-membered rings of alternating B-N bonds contribute 100% and 75%, respectively, to the band-edge states and enlarge the bandgap. Both rings are formed by the same structural motif, in which nitrogen is coordinated with boron atoms and electrons are localized on nitrogen; the direct wide-bandgap insulator hexagonal BN (h-BN) is composed entirely of this motif. In general, ML can quantify the percentage contribution of a given structural feature to rationalize the formation of a bandgap. However, selecting multi-atom structural features still requires human assistance to verify their rationality; a general method for feature selection is still needed.
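The percentage contribution F can be computed directly from per-atom bandgap contributions. The minimal sketch below assumes that G_tot is the sum of all per-atom contributions with the same sign as the effect being analyzed (positive for opening, negative for closing the gap); the text does not spell this out, and the function name and example values are hypothetical.

```python
def percent_contribution(atom_contrib, feature_atoms, mode="open"):
    """F = sum over feature atoms of G_i / G_tot * 100.

    atom_contrib: per-atom contributions G_i (positive opens, negative closes the gap).
    feature_atoms: indices of the atoms forming the structural feature (e.g., an N-C-C-N chain).
    mode: "open" sums positive contributions into G_tot, "close" sums negative ones
          (an assumed reading of "total contribution to open or close the bandgap").
    """
    if mode == "open":
        g_tot = sum(g for g in atom_contrib if g > 0)
    elif mode == "close":
        g_tot = sum(g for g in atom_contrib if g < 0)
    else:
        raise ValueError("mode must be 'open' or 'close'")
    if g_tot == 0:
        raise ValueError("no contributions of the requested sign")
    g_feature = sum(atom_contrib[i] for i in feature_atoms)
    return 100.0 * g_feature / g_tot

contrib = [0.30, 0.50, -0.10, 0.20]  # hypothetical per-atom G_i (eV)
print(percent_contribution(contrib, feature_atoms=[0, 1], mode="open"))
```

Dividing by a same-sign total keeps F between 0 and 100% whenever the feature's atoms all act in the same direction, which matches how the percentages above are reported.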

Wide-bandgap insulators

Predicting N-rich materials is known to be challenging, because two nitrogen atoms can easily combine into a nitrogen molecule, producing unphysical structures during structural searches. SCCOP mitigates this problem by quickly screening a large number of structures, with which we identify five stable wide-bandgap materials in the N-rich region whose bandgaps, mechanical performance, and structural motifs are similar to those of h-BN (see Fig. 5, Supplementary Figs. 10 and 11, and Table 1). B1N2, B3N4, and B4N5 have direct gaps, while B3N5 and B1C1N3 have indirect gaps. Notably, B1N2 has a bandgap (5.32 eV) even larger than that of h-BN. This is because the fully occupied N-p dangling-bond states reduce the hybridization and bandwidth of the band-edge states and thus enlarge the bandgap. The Young’s modulus, Poisson’s ratio, and shear modulus of B3N4 are 180.24 N m−1, 0.19, and 75.90 N m−1, respectively. The abundant strong in-plane B-N bonds give B3N4 mechanical properties comparable to those of h-BN, which is essential for reliability in practical applications. Moreover, the thermal conductivity of B1N2 is 10.13 W m−1 K−1, about 70 times lower than that of B1N1 (708.07 W m−1 K−1).

The dramatic drop in thermal conductivity is mainly caused by the asymmetric distribution of boron, carbon, and nitrogen atoms, which enhances phonon anharmonicity and hence phonon-phonon scattering, hindering thermal transport. Overall, owing to their exotic optoelectronic properties, good mechanical robustness, and low thermal conductivity, the discovered materials have promising potential applications, e.g., in ultraviolet photodetectors35,36, thermal insulation materials37,38, and energy storage devices39,40.
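The text does not state how the elastic moduli were obtained, but the quoted numbers can be sanity-checked with standard 2D relations, assuming in-plane isotropy (as for a hexagonal lattice): Y = (C11² − C12²)/C11, ν = C12/C11, and G = Y/[2(1 + ν)]. Poisson's ratio is dimensionless, and the reported Young's modulus and Poisson's ratio of B3N4 imply G ≈ 75.73 N m−1, within about 0.2% of the reported 75.90 N m−1. The helper names are illustrative.

```python
def isotropic_shear_modulus(young, poisson):
    """G = Y / [2 (1 + nu)] for an in-plane isotropic sheet; Y and G in N/m, nu dimensionless."""
    return young / (2.0 * (1.0 + poisson))

def moduli_from_elastic_constants(c11, c12):
    """Y, nu, G (2D units, N/m) assuming C11 = C22 and C66 = (C11 - C12) / 2."""
    young = (c11 ** 2 - c12 ** 2) / c11
    poisson = c12 / c11
    shear = (c11 - c12) / 2.0
    return young, poisson, shear

# Values reported in the text for B3N4 (moduli) and B1N2/B1N1 (thermal conductivity)
g_estimate = isotropic_shear_modulus(180.24, 0.19)
print(f"G from Y and nu: {g_estimate:.2f} N/m (reported 75.90 N/m)")
print(f"thermal conductivity ratio B1N1/B1N2: {708.07 / 10.13:.1f}")
```

The close agreement suggests the reported moduli are mutually consistent with nearly isotropic in-plane elasticity, and the conductivity ratio rounds to the quoted factor of about 70.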

Method comparison

Finally, in Fig. 6 we compare the computational performance of SCCOP with other commonly used DFT-based search approaches, namely DFT-GA in USPEX and DFT-PSO in CALYPSO. All three methods are tested on the 82 compositions, with the parameter setup and computational resources kept as consistent as possible. SCCOP is the most time-efficient of the three methods and performs well in most cases. Table 2 summarizes the key results of the comparison. SCCOP finds the lowest-energy structures for 45 compositions with an average time of 5.7 minutes, about 10 times faster than DFT-GA and DFT-PSO, and its success rate is comparable to or higher than those of the other two. Therefore, SCCOP can greatly reduce the search time while maintaining accuracy comparable to state-of-the-art DFT-based search approaches. Because the GNN model is trained on DFT-calculated data, it cannot surpass the accuracy of DFT. However, owing to effective feature extraction and a relatively simple computational form, a GNN can predict energies 3–5 orders of magnitude faster than DFT15,16,41 while keeping comparable accuracy. The GNN-enhanced efficiency of SCCOP thus significantly reduces the time spent on initial structure screening and structural optimization, which is the main reason SCCOP outperforms DFT-based prediction methods in speed.

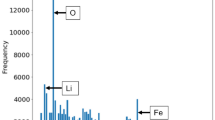

Comparison of computational time cost and the lowest energy found after 1 iteration in (a) carbon nitrides, (b) boron carbides, (c) boron nitrides, and (d) boron carbon nitrides by the SCCOP, DFT-GA, and DFT-PSO approaches. The left y-axis is the time cost on a log scale, and the right y-axis is the energy of the searched structures. Computational times were measured on 2 GTX 1080 GPUs and 12 Xeon Gold 6248 CPUs.

In summary, we have developed an ML-based framework for crystal structure prediction and analysis, which consists of five parts: i) generating abundant random structures in the asymmetric unit (AU) with symmetry and distance constraints, ii) Bayesian optimization with a crystal graph representation of the structures to search, iii) adapting the energy prediction model to the target composition by transfer learning, iv) GNN-accelerated SA for structural optimization, and v) an additive feature attribution model for extracting features from the search results. We checked the generality of SCCOP by testing it on 35 typical compounds. We demonstrated this framework by applying it to predict the crystal structures of 82 compositions in the B-C-N system. In addition to identifying previously unknown crystal structures, we were able to extract the key features for structural stabilization, to establish the relationship between bandgap and coordination number, and to discover the critical factors for bandgap formation in specific structures. Five stable wide-bandgap materials with good mechanical properties and low thermal conductivities were discovered. Compared with conventional DFT-based prediction approaches and domain-knowledge-based analysis methods, this integrated prediction-analysis framework, which takes full advantage of ML, can greatly shorten the discovery and design cycle of novel functional materials.

Methods

The prediction-analysis framework consists of five parts: i) random sampling, ii) structural search, iii) prediction model update, iv) structural optimization, and v) structural analysis. The workflow of SCCOP is illustrated in Fig. 1, where the GNN characterizes the crystal structures and links the parts into an iterative loop.

Random sampling

In the first step of SCCOP, to coarsely sample the potential energy surface, unbiased initial structures are randomly generated from the 17 plane space groups (PSGs), which cover all symmetry types of 2D materials, as shown by step 1 in Fig. 1. To determine a structure with a target composition, only the periodic lattice \({\boldsymbol{L}}=\left({{\boldsymbol{l}}}_{1},{{\boldsymbol{l}}}_{2},{{\boldsymbol{l}}}_{3}\right)\in {{\mathbb{R}}}^{3\times 3}\), the PSG, the atom types \({\boldsymbol{A}}=({a}_{1},\ldots ,{a}_{N})\), and the atomic positions \({\boldsymbol{X}}=\left({{\boldsymbol{x}}}_{1},\ldots ,{{\boldsymbol{x}}}_{N}\right)\in {{\mathbb{R}}}^{N\times 3}\) are needed. The \(n\) atoms of a structure are placed in an asymmetric unit (AU)42, the irreducible region from which the primitive cell is filled by applying the symmetry operations; this enables efficient configurational evolution. Space discretization and minimal-interatomic-distance techniques43 are employed to reduce the search space. This fast sampling method in the AU generates a set of reasonable crystal structures \({\mathcal{C}}\) with different space groups in a short time. All asymmetric units used in SCCOP are listed in Supplementary Tables 1–3.
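A minimal sketch of symmetry-constrained sampling in an asymmetric unit, using the plane group p2 (identity plus a twofold rotation) as a stand-in for the 17 PSGs. The grid resolution, the 1.3 Å distance cutoff, the square 5 Å cell, and all function names are assumptions for illustration. Only the in-plane 2×2 block of the lattice is used, and this is not SCCOP's implementation.

```python
import itertools
import numpy as np

# Plane group p2: identity and a twofold rotation about the origin (fractional coordinates).
P2_OPS = [np.eye(2), -np.eye(2)]
SHIFTS = np.array(list(itertools.product((-1, 0, 1), repeat=2)))

def min_image(d):
    """Wrap a fractional displacement into roughly [-0.5, 0.5]."""
    return d - np.round(d)

def expand_from_au(frac_au, ops=P2_OPS, tol=1e-6):
    """Fill the cell by applying every symmetry operation to the AU atoms; drop duplicates."""
    images = []
    for x in frac_au:
        for op in ops:
            p = np.mod(op @ x, 1.0)
            if all(np.linalg.norm(min_image(p - q)) > tol for q in images):
                images.append(p)
    return np.array(images)

def min_distance(frac, lattice):
    """Smallest interatomic distance (Angstrom) under periodic boundary conditions."""
    best = np.inf
    for i, j in itertools.combinations(range(len(frac)), 2):
        d = min_image(frac[i] - frac[j])
        best = min(best, min(np.linalg.norm((d + s) @ lattice) for s in SHIFTS))
    return best

def sample_p2(n_general, lattice, d_min, grid=12, max_tries=1000, seed=None):
    """Place n_general atoms on a discretized p2 AU (0 <= x < 1/2, 0 <= y < 1)."""
    rng = np.random.default_rng(seed)
    au_grid = np.array(list(itertools.product(np.arange(grid // 2) / grid,
                                              np.arange(grid) / grid)))
    for _ in range(max_tries):
        picks = au_grid[rng.choice(len(au_grid), size=n_general, replace=False)]
        frac = expand_from_au(picks)
        if len(frac) != 2 * n_general:  # an atom fell on a special position or orbits overlap
            continue
        if min_distance(frac, lattice) >= d_min:  # minimal interatomic distance constraint
            return frac
    return None

lattice = 5.0 * np.eye(2)  # hypothetical square cell; rows are lattice vectors (Angstrom)
frac = sample_p2(n_general=3, lattice=lattice, d_min=1.3, seed=0)
print(frac)
```

Rejecting draws whose orbit is shorter than expected keeps the atom count fixed; a fuller version would also place atoms deliberately on special Wyckoff positions to reach odd stoichiometries.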

Structural search

To further constrain the search space, Bayesian optimization is applied to redistribute the sampling probability toward energetically favorable structures, as illustrated in step 2 in Fig. 1. In this step, crystal structures are first converted to crystal vectors \({\boldsymbol{c}}\). A crystal graph \({\mathcal{G}}\) is built on the atoms in the AU to maximize the efficiency of the GNN (Supplementary Table 4), and the graph convolutional operator12 is defined as \({{\boldsymbol{v}}}_{i}^{(t+1)}={\rm{Conv}}\left({{\boldsymbol{v}}}_{i}^{\left(t\right)},{{\boldsymbol{v}}}_{j}^{\left(t\right)},{{\boldsymbol{u}}}_{\left(i,j\right)}\right)\), where \({{\boldsymbol{v}}}_{i}^{\left(t\right)}\) and \({{\boldsymbol{v}}}_{j}^{\left(t\right)}\) are the feature vectors of atom \(i\) and its neighbor \(j\) after \(t\) convolutions, and \({{\boldsymbol{u}}}_{\left(i,j\right)}\) is the feature vector of the bond between them. After \(K\) convolutions, the crystal vector \({\boldsymbol{c}}={{\boldsymbol{W}}}_{{\rm{m}}}^{T}{\boldsymbol{V}}\) is the weighted sum of the atom vectors \({\boldsymbol{V}}=\left({{\boldsymbol{v}}}_{1}^{\left(K\right)},\ldots ,{{\boldsymbol{v}}}_{n}^{\left(K\right)}\right)\in {{\mathbb{R}}}^{n\times 64}\), where \({{\boldsymbol{W}}}_{{\rm{m}}}=\left({w}_{1},\ldots ,{w}_{n}\right)\in {{\mathbb{R}}}^{n\times 1}\) contains the multiplicity weights, which depend on the symmetry of the atoms. Finally, two dense layers map the crystal vector \({\boldsymbol{c}}\) to \(\hat{E}\), so the GNN model provides a rough energy estimate and a structural clustering of the samples in \({\mathcal{C}}\). A few low-\(\hat{E}\) structures in each cluster are selected for more precise DFT energy calculations, which feed the Bayesian optimization.
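The sketch below illustrates the two operations in this paragraph: a gated graph convolution in the style of CGCNN (the reference cited for the operator; whether SCCOP uses exactly this gating is an assumption) and the multiplicity-weighted pooling of AU atom vectors into a crystal vector. Feature sizes (8 instead of 64), random weights, and the neighbor list are hypothetical. Because symmetry-equivalent atoms carry identical features, weighting each AU atom by its multiplicity gives the same vector as summing over every atom in the cell, which the last line checks.

```python
import numpy as np

def sigmoid(x):
    return 0.5 * (1.0 + np.tanh(0.5 * x))  # numerically stable logistic function

def softplus(x):
    return np.logaddexp(0.0, x)

def gated_conv(V, U, nbr_idx, W_f, b_f, W_s, b_s):
    """One CGCNN-style gated convolution on the AU crystal graph.

    V: (n, d) features of the n AU atoms; U: (n, M, e) features of the bonds to M neighbors;
    nbr_idx: (n, M) index of the AU atom that is symmetry-equivalent to each neighbor.
    """
    n, M = nbr_idx.shape
    # z_ij = v_i (+) v_j (+) u_ij for every atom/neighbor pair
    z = np.concatenate([np.repeat(V[:, None, :], M, axis=1), V[nbr_idx], U], axis=-1)
    messages = sigmoid(z @ W_f + b_f) * softplus(z @ W_s + b_s)  # gate * core, (n, M, d)
    return V + messages.sum(axis=1)  # residual update giving v_i^(t+1)

def crystal_vector(V, multiplicity):
    """Multiplicity-weighted sum of AU atom vectors, c = sum_i w_i v_i."""
    w = np.asarray(multiplicity, dtype=float).reshape(-1, 1)  # W_m, shape (n, 1)
    return (w.T @ V).ravel()

# Hypothetical toy graph: 3 AU atoms, 4 neighbors each, 8-d atom and 5-d bond features.
rng = np.random.default_rng(0)
n, M, d, e = 3, 4, 8, 5
V = rng.normal(size=(n, d))
U = rng.normal(size=(n, M, e))
nbr_idx = rng.integers(0, n, size=(n, M))
W_f, W_s = (0.1 * rng.normal(size=(2 * d + e, d)) for _ in range(2))
b_f, b_s = np.zeros(d), np.zeros(d)
for _ in range(3):  # K = 3; weights shared across layers only to keep the example short
    V = gated_conv(V, U, nbr_idx, W_f, b_f, W_s, b_s)
c = crystal_vector(V, multiplicity=[2, 2, 1])
full_cell = np.repeat(V, [2, 2, 1], axis=0)  # every atom of the cell, AU features copied
print(np.allclose(c, full_cell.sum(axis=0)))
```

Pooling over the AU instead of the full cell is what lets the graph stay small for high-symmetry structures.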

Approximating the function \(E=U({\boldsymbol{c}})\) between energy and structure is key to the Bayesian optimization. Here we characterize the structures by their crystal vectors and fit \(U\) to samples from precise DFT calculations with a Gaussian process model44. The probability of improvement45 is adopted as the acquisition function \({PI}\left({\boldsymbol{c}}\right)=1-\Phi [(\mu \left({\boldsymbol{c}}\right)-U\left({{\boldsymbol{c}}}^{* }\right)-\xi )/\sigma ({\boldsymbol{c}})]\), where \({{\boldsymbol{c}}}^{* }={{\rm{argmin}}}_{i}U({{\boldsymbol{c}}}_{i})\) is the lowest-energy sample so far; \(\mu ({\boldsymbol{c}})\) and \(\sigma ({\boldsymbol{c}})\) are the mean and standard deviation of the Gaussian-process posterior at \({\boldsymbol{c}}\), respectively; and \(\Phi\) is the cumulative distribution function of the standard normal distribution. The parameter \(\xi\) balances the trade-off between exploitation and exploration. We calculate \({PI}\) over \({\mathcal{C}}\) and choose structures with high acquisition values for further structural optimization. In this way, abundant initial structures are screened and clustered by the GNN, and the Bayesian optimization both locates low-energy structures and explores potential candidates.
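A compact numpy sketch of the Gaussian-process fit and the probability-of-improvement acquisition, written with the same sign convention as the formula above. The squared-exponential kernel, its fixed hyperparameters, the constant prior mean, and the toy 2D "crystal vectors" are assumptions; the GP settings used in SCCOP are not given here, and a production code would fit the hyperparameters. Note that the common textbook form for minimization places the threshold at U(c*) − ξ with ξ ≥ 0, so the sign chosen for ξ in this form determines whether it favors exploration or exploitation.

```python
import math
import numpy as np

def rbf_kernel(A, B, length=1.0, var=1.0):
    """Squared-exponential kernel between the rows of A and B (crystal vectors)."""
    d2 = ((A[:, None, :] - B[None, :, :]) ** 2).sum(axis=-1)
    return var * np.exp(-0.5 * d2 / length ** 2)

def gp_posterior(C_train, E_train, C_query, length=1.0, var=1.0, noise=1e-6):
    """Posterior mean and standard deviation of U(c) at the query crystal vectors."""
    E_mean = E_train.mean()  # constant prior mean
    K = rbf_kernel(C_train, C_train, length, var) + noise * np.eye(len(C_train))
    K_s = rbf_kernel(C_query, C_train, length, var)
    L = np.linalg.cholesky(K)
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, E_train - E_mean))
    mu = E_mean + K_s @ alpha
    v = np.linalg.solve(L, K_s.T)
    sigma = np.sqrt(np.clip(var - (v ** 2).sum(axis=0), 1e-12, None))
    return mu, sigma

_erf = np.vectorize(math.erf)

def probability_of_improvement(mu, sigma, e_best, xi=0.0):
    """PI(c) = 1 - Phi[(mu(c) - U(c*) - xi) / sigma(c)], with Phi the standard normal CDF."""
    z = (mu - e_best - xi) / sigma
    return 1.0 - 0.5 * (1.0 + _erf(z / math.sqrt(2.0)))

C_train = np.array([[0.0, 0.0], [3.0, 0.0], [0.0, 3.0]])  # toy crystal vectors
E_train = np.array([-1.0, 0.5, 0.2])                     # toy DFT energies (eV/atom)
C_query = np.array([[0.2, 0.0], [3.2, 0.0]])             # candidates from the sampled set
mu, sigma = gp_posterior(C_train, E_train, C_query)
pi = probability_of_improvement(mu, sigma, e_best=E_train.min())
print(mu, sigma, pi)
```

The candidate next to the lowest-energy sample receives a much higher acquisition value than the one next to a high-energy sample, which is the behavior used to pick structures for optimization.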

Prediction model update

For target compositions, the pretrained GNN prediction model is fine-tuned to reach better accuracy, as shown in step 3 in Fig. 1. The pretrained model is trained on the 2D material databases JARVIS-DFT46, C2DB47, and 2DMATPedia48, which together contain 10751 crystals covering 85 elements, 4 lattice systems, and 17 PSGs. The train:validation:test split is 60%:20%:20%; training uses batches of 128 structures and the Adam optimizer49, and the best-performing model on the validation set is chosen as the pretrained model. The lowest mean absolute error (MAE) on the validation set is 0.1468 eV atom−1, and the MAE on the test set is similar (0.1451 eV atom−1), indicating that the model generalizes well to unseen structures (Supplementary Fig. 13). Following transfer learning techniques50, when a small amount of DFT data is used during the search, the parameters of the graph convolutional layers are frozen and only the fully connected layers are optimized, which prevents overfitting to the DFT data and improves the model's ability to distinguish energy changes among the predicted structures. Hence, only a small number of single-point energy calculations are needed to fine-tune the pretrained GNN model, greatly reducing the size of the training dataset required for newly added or unknown compounds.
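With the graph convolutional layers frozen, the crystal vectors they produce act as fixed inputs, so fine-tuning reduces to fitting the two dense layers on the few single-point DFT energies. The numpy sketch below shows that reduced problem. The hidden width, tanh activation, plain gradient descent (rather than Adam), learning rate, and the synthetic data are all assumptions.

```python
import numpy as np

class DenseHead:
    """Two dense layers mapping a (frozen) crystal vector c to an energy estimate."""

    def __init__(self, d_in, d_hidden, seed=0):
        rng = np.random.default_rng(seed)
        self.W1 = rng.normal(0.0, d_in ** -0.5, (d_in, d_hidden))
        self.b1 = np.zeros(d_hidden)
        self.W2 = rng.normal(0.0, d_hidden ** -0.5, (d_hidden, 1))
        self.b2 = np.zeros(1)

    def predict(self, C):
        return (np.tanh(C @ self.W1 + self.b1) @ self.W2 + self.b2).ravel()

    def fine_tune(self, C, E, lr=0.02, epochs=1000):
        """Full-batch gradient descent on the MSE; the encoder that produced C is untouched."""
        m = len(E)
        for _ in range(epochs):
            H = np.tanh(C @ self.W1 + self.b1)            # hidden activations, (m, h)
            err = (H @ self.W2 + self.b2).ravel() - E     # prediction error, (m,)
            g_out = (2.0 / m) * err[:, None]              # dMSE/dE_hat, (m, 1)
            g_pre = (g_out @ self.W2.T) * (1.0 - H ** 2)  # back through tanh, (m, h)
            self.W2 -= lr * (H.T @ g_out)
            self.b2 -= lr * g_out.sum(axis=0)
            self.W1 -= lr * (C.T @ g_pre)
            self.b1 -= lr * g_pre.sum(axis=0)
        return self

rng = np.random.default_rng(1)
C_dft = rng.normal(size=(20, 64))     # crystal vectors from the frozen encoder (synthetic)
E_dft = rng.normal(-6.0, 0.3, 20)     # single-point DFT energies in eV/atom (synthetic)
head = DenseHead(64, 32)
mse_before = np.mean((head.predict(C_dft) - E_dft) ** 2)
head.fine_tune(C_dft, E_dft)
mse_after = np.mean((head.predict(C_dft) - E_dft) ** 2)
print(mse_before, mse_after)
```

Restricting the update to the head keeps the number of trainable parameters small relative to the handful of DFT points, which is the overfitting argument made in the text.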

Structural optimization

To obtain more accurate structural parameters and energies of the target structures, SCCOP optimizes them first with ML and then with DFT, as illustrated in step 4 in Fig. 1. At this stage, the candidate structures still lie in relatively high-energy regions of the potential energy surface. We first optimize them with ML-accelerated SA: atomic positions are displaced and lattice vectors are distorted, and each change is accepted according to the Metropolis criterion1, i.e., with probability \(\exp (-\Delta \hat{E}/{k}_{{\rm{B}}}T)\) (and always when \(\Delta \hat{E}\le 0\)), where \(\Delta \hat{E}\) is the energy difference given by the GNN prediction model. For the ML-optimized structures, \(t\)-distributed stochastic neighbor embedding (TSNE)51 is used to reduce the dimension of the crystal vectors, and the k-means method52 groups the vectors into clusters. The structure with the lowest energy in each cluster is then relaxed more rigorously by DFT to find the local minimum on the potential energy surface. The optimized lattice from this step is used as the initial lattice for sampling crystal structures in the next search iteration. This final DFT step ensures that the ML-searched structures satisfy physical constraints.
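A minimal sketch of surrogate-driven simulated annealing with the Metropolis criterion. `toy_energy` is a hypothetical stand-in for the GNN energy predictor, the step size and geometric cooling schedule are assumptions, and lattice distortions and the subsequent TSNE/k-means clustering are omitted for brevity.

```python
import math
import numpy as np

def metropolis_accept(delta_e, kT, rng):
    """Metropolis criterion: accept with probability min(1, exp(-delta_e / kT))."""
    return delta_e <= 0.0 or rng.random() < math.exp(-delta_e / kT)

def anneal(frac0, energy, step=0.05, kT0=0.1, cooling=0.97, n_steps=2000, seed=0):
    """Surrogate-driven SA over fractional atomic positions (lattice kept fixed here)."""
    rng = np.random.default_rng(seed)
    frac, e = frac0.copy(), energy(frac0)
    best_frac, best_e = frac.copy(), e
    kT = kT0
    for _ in range(n_steps):
        trial = np.mod(frac + rng.normal(0.0, step, frac.shape), 1.0)  # displace atoms
        e_trial = energy(trial)
        if metropolis_accept(e_trial - e, kT, rng):
            frac, e = trial, e_trial
            if e < best_e:
                best_frac, best_e = frac.copy(), e
        kT = max(kT * cooling, 1e-6)  # geometric cooling schedule
    return best_frac, best_e

def toy_energy(frac):
    """Hypothetical stand-in for the GNN energy prediction E_hat."""
    return float(np.sum((frac - 0.5) ** 2))

frac0 = np.array([[0.1, 0.1], [0.9, 0.2]])
best, e_best = anneal(frac0, toy_energy)
print(toy_energy(frac0), e_best)
```

Because each move costs only one surrogate evaluation, thousands of Metropolis steps are affordable before any DFT relaxation is launched.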

Structural analysis

An additive feature attribution model18,53 is applied to extract property-related features from large amounts of data (Fig. 7). The average total energy per atom is predicted as the mean of contributions from the local chemical environments, i.e., \(\hat{E}=\mathop{\sum }\nolimits_{i}^{N}{\hat{E}}_{i}/N\), where \({\hat{E}}_{i}={{\boldsymbol{W}}}_{l}{{\boldsymbol{v}}}_{i}^{T}+{b}_{l}\) is built from the atom feature vector \({{\boldsymbol{v}}}_{i}^{T}\), the weight \({{\boldsymbol{W}}}_{l}\), and the bias \({b}_{l}\). To focus on an environment consisting of a center atom and its neighbors, we calculate its energy contribution \({\bar{E}}_{i}\) as the average of \({\hat{E}}_{i}\) over data clustered by coordinating atoms, bond lengths, and bond angles. In this way, the energy contribution of each structural motif can be assessed independently, and a lower \(\bar{E}\) means higher local structural stability. For solid-solution systems, the bandgap \(\hat{G}=\mathop{\sum }\nolimits_{i}^{N}{\hat{G}}_{i}/N\) is analyzed in the same way. \({\hat{G}}_{i}\) is likewise obtained by a linear transformation acting on \({{\boldsymbol{v}}}_{i}^{T}\), trained with a specifically designed loss function \({\mathcal{L}}={\hat{{\mathbb{E}}}}_{G > 0}\left[{\left(G-\hat{G}\right)}^{2}\right]+{\hat{{\mathbb{E}}}}_{G=0}\left[{\left(G-\max \left(\hat{G},0\right)\right)}^{2}\right]\), where the expectation \(\hat{{\mathbb{E}}}[\ldots ]\) denotes an average over a finite batch of samples and \(G\) is the bandgap computed by DFT. Because the second term penalizes only positive predictions for metals (\(G=0\)), structures with zero or negative \(\hat{G}\) are classified as metals, which makes \({\hat{G}}_{i}\) a physically meaningful term: a positive \({\hat{G}}_{i}\) opens the bandgap, and a negative one closes it. Both analysis models are trained on 80% of the data and validated on the remaining 20%; the best-performing model on the validation set is selected.
A comparison between additive feature attribution model and cluster expansion is provided in Supplementary Fig. 14.
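The additive decomposition, the two-part bandgap loss, and the per-motif averaging described above can be sketched in a few numpy functions. The motif labels (e.g., "N-C2") and all numbers are hypothetical, and the weights w and b would in practice come from training; in the text, motifs are defined by coordinating atoms, bond lengths, and bond angles.

```python
from collections import defaultdict
import numpy as np

def predicted_gap(atom_vectors, w, b):
    """G_hat = mean_i (w . v_i + b); also returns the per-atom contributions G_hat_i."""
    contrib = atom_vectors @ w + b
    return contrib.mean(), contrib

def bandgap_loss(G, G_hat):
    """Squared error on gapped samples plus a penalty on positive predictions for metals."""
    G, G_hat = np.asarray(G, dtype=float), np.asarray(G_hat, dtype=float)
    gapped, metal = G > 0, G == 0
    loss = 0.0
    if gapped.any():
        loss += np.mean((G[gapped] - G_hat[gapped]) ** 2)
    if metal.any():
        loss += np.mean((G[metal] - np.maximum(G_hat[metal], 0.0)) ** 2)
    return float(loss)

def motif_contributions(atom_contrib, motif_labels):
    """E_bar per motif: average per-atom contribution over all atoms sharing a motif label."""
    groups = defaultdict(list)
    for value, label in zip(atom_contrib, motif_labels):
        groups[label].append(value)
    return {label: float(np.mean(values)) for label, values in groups.items()}

# A metal (G = 0) predicted at -0.3 eV is not penalized; predicted at +0.4 eV it is.
print(bandgap_loss([2.0, 0.0, 1.0], [1.5, -0.3, 1.0]))
print(bandgap_loss([2.0, 0.0, 1.0], [1.5, 0.4, 1.0]))
print(motif_contributions([-0.6, -0.7, -0.2], ["N-C2", "N-C2", "B-N3"]))
```

The hinge on metallic samples is what allows negative per-atom contributions to be read as gap-closing rather than as fitting error.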

DFT calculations

The DFT relaxations and the energy and bandgap calculations for the searched structures are carried out using the Vienna Ab-initio Simulation Package (VASP)54,55,56. For structural relaxations and energy evaluations, the generalized gradient approximation (GGA) in the Perdew-Burke-Ernzerhof (PBE) form is used for the exchange-correlation functional57. The ion-electron interactions are treated with the projector-augmented-wave (PAW) method58,59. The plane-wave energy cutoff is set to 520 eV. The Brillouin zone of the primitive cell is sampled using a Monkhorst-Pack \(k\)-point mesh of \(4\times 4\times 1\). A vacuum space of 15 Å is applied to avoid artificial interactions between periodic images. All structures are relaxed until the energies and forces converge to 10−5 eV and 0.01 eV Å−1, respectively. The electronic band structures are calculated with the HSE06 hybrid functional60. The phonon thermal conductivity is predicted with the ShengBTE code61.
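For readers reproducing the calculations, the settings above map onto standard VASP input tags as in this hypothetical helper. The mapping of the 10−5 eV criterion to EDIFF, the NSW value, and leaving ISIF unset (so the code default applies) are assumptions not stated in the text; POSCAR and POTCAR handling is omitted.

```python
def render_incar(hse=False, nsw=200):
    """INCAR text for the settings described in the Methods (standard VASP tags)."""
    tags = {
        "ENCUT": 520,     # plane-wave cutoff (eV)
        "EDIFF": "1E-5",  # electronic convergence (eV), assumed mapping of the energy criterion
        "GGA": "PE",      # PBE exchange-correlation functional
    }
    if hse:
        # HSE06: screened hybrid with HFSCREEN = 0.2 (AEXX defaults to 0.25 when LHFCALC is set)
        tags.update({"LHFCALC": ".TRUE.", "HFSCREEN": 0.2})
    else:
        # Ionic relaxation; a negative EDIFFG stops when all forces are below 0.01 eV/Angstrom
        tags.update({"IBRION": 2, "NSW": nsw, "EDIFFG": -0.01})
    return "\n".join(f"{key} = {value}" for key, value in tags.items()) + "\n"

def render_kpoints(mesh=(4, 4, 1)):
    """Automatic Monkhorst-Pack KPOINTS file."""
    return "Automatic mesh\n0\nMonkhorst-Pack\n" + " ".join(str(k) for k in mesh) + "\n0 0 0\n"

print(render_incar())
print(render_incar(hse=True))
print(render_kpoints())
```

Keeping the relaxation and HSE06 settings in one helper makes it easy to confirm that both stages share the same cutoff and k-point mesh.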

Data availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Code availability

The SCCOP code is open source and available in the GitHub repository at https://github.com/TheCatOfHs/sccop.

References

Kirkpatrick, S., Gelatt, C. D. & Vecchi, M. P. Optimization by simulated annealing. Science 220, 671–680 (1983).

Wille, L. T. Searching potential energy surfaces by simulated annealing. Nature 325, 374 (1987).

Doll, K., Schön, J. C. & Jansen, M. Global exploration of the energy landscape of solids on the ab initio level. Phys. Chem. Chem. Phys. 9, 6128–6133 (2007).

Wang, Y., Lv, J., Zhu, L. & Ma, Y. Crystal structure prediction via particle-swarm optimization. Phys. Rev. B 82, 094116 (2010).

Wang, Y., Lv, J., Zhu, L. & Ma, Y. CALYPSO: A method for crystal structure prediction. Comput. Phys. Commun. 183, 2063–2070 (2012).

Wang, Y. et al. An effective structure prediction method for layered materials based on 2D particle swarm optimization algorithm. J. Chem. Phys. 137, 224108 (2012).

Deaven, D. M. & Ho, K. M. Molecular geometry optimization with a genetic algorithm. Phys. Rev. Lett. 75, 288–291 (1995).

Woodley, S. M., Battle, P. D., Gale, J. D. & Catlow, C. R. A. The prediction of inorganic crystal structures using a genetic algorithm and energy minimisation. Phys. Chem. Chem. Phys. 1, 2535–2542 (1999).

Lyakhov, A. O., Oganov, A. R., Stokes, H. T. & Zhu, Q. New developments in evolutionary structure prediction algorithm USPEX. Comput. Phys. Commun. 184, 1172–1182 (2013).

Hohenberg, P. & Kohn, W. Inhomogeneous electron gas. Phys. Rev. 136, B864–B871 (1964).

Kohn, W. & Sham, L. J. Self-consistent equations including exchange and correlation effects. Phys. Rev. 140, A1133–A1138 (1965).

Xie, T. & Grossman, J. C. Crystal graph convolutional neural networks for an accurate and interpretable prediction of material properties. Phys. Rev. Lett. 120, 145301 (2018).

Choudhary, K. & DeCost, B. Atomistic line graph neural network for improved materials property predictions. NPJ Comput. Mater. 7, 185 (2021).

Chen, C., Ye, W., Zuo, Y., Zheng, C. & Ong, S. P. Graph networks as a universal machine learning framework for molecules and crystals. Chem. Mater. 31, 3564–3572 (2019).

Gilmer, J., Schoenholz, S. S., Riley, P. F., Vinyals, O. & Dahl, G. E. Neural message passing for quantum chemistry. In Int. Conf. Mach. Learn. 1263–1272 (PMLR, 2017).

Cheng, G., Gong, X.-G. & Yin, W.-J. Crystal structure prediction by combining graph network and optimization algorithm. Nat. Commun. 13, 1492 (2022).

Wang, J. et al. MAGUS: machine learning and graph theory assisted universal structure searcher. Natl Sci. Rev. 10, nwad128 (2023).

Xie, T. & Grossman, J. C. Hierarchical visualization of materials space with graph convolutional neural networks. J. Chem. Phys. 149, 174111 (2018).

Hsu, T. et al. Efficient and interpretable graph network representation for angle-dependent properties applied to optical spectroscopy. NPJ Comput. Mater. 8, 151 (2022).

Mardt, A., Pasquali, L., Wu, H. & Noé, F. VAMPnets for deep learning of molecular kinetics. Nat. Commun. 9, 5 (2018).

Xie, T., France-Lanord, A., Wang, Y., Shao-Horn, Y. & Grossman, J. C. Graph dynamical networks for unsupervised learning of atomic scale dynamics in materials. Nat. Commun. 10, 2667 (2019).

Fan, Q. et al. Biphenylene network: A nonbenzenoid carbon allotrope. Science 372, 852–856 (2021).

Sheng, X.-L., Yan, Q.-B., Ye, F., Zheng, Q.-R. & Su, G. T-carbon: A novel carbon allotrope. Phys. Rev. Lett. 106, 155703 (2011).

Zhang, J. et al. Pseudo-topotactic conversion of carbon nanotubes to T-carbon nanowires under picosecond laser irradiation in methanol. Nat. Commun. 8, 683 (2017).

Hudspeth, M. A., Whitman, B. W., Barone, V. & Peralta, J. E. Electronic properties of the biphenylene sheet and its one-dimensional derivatives. ACS Nano 4, 4565 (2010).

Demirci, S., Çallıoğlu, Ş., Görkan, T., Aktürk, E. & Ciraci, S. Stability and electronic properties of monolayer and multilayer structures of group-IV elements and compounds of complementary groups in biphenylene network. Phys. Rev. B 105, 035408 (2022).

Liang, H., Zhong, H., Huang, S. & Duan, Y. 3-x structural model and common characteristics of anomalous thermal transport: The case of two-dimensional boron carbides. J. Phys. Chem. Lett. 14, 10975 (2021).

Bafekry, A., Shayesteh, S. F. & Peeters, F. M. Two-dimensional carbon nitride (2DCN) nanosheets: Tuning of novel electronic and magnetic properties by hydrogenation, atom substitution and defect engineering. J. Appl. Phys. 126, 215104 (2019).

Luo, X. et al. Predicting two-dimensional boron–carbon compounds by the global optimization method. J. Am. Chem. Soc. 133, 16285 (2011).

Zhou, X. et al. Two-dimensional boron-rich monolayer BxN as high capacity for lithium-ion batteries: A first-principles study. ACS Appl. Mater. Interfaces 13, 41169–41181 (2021).

Adekoya, D. et al. DFT-guided design and fabrication of carbon-nitride-based materials for energy storage devices: A review. Nano-Micro Lett. 13, 13 (2020).

Song, L. et al. Binary and ternary atomic layers built from carbon, boron, and nitrogen. Adv. Mater. 24, 4878–4895 (2012).

Angizi, S., Akbar, M. A., Darestani-Farahani, M. & Kruse, P. Review—two-dimensional boron carbon nitride: A comprehensive review. ECS J. Solid State Sci. Technol. 9, 083004 (2020).

Ogitsu, T., Schwegler, E. & Galli, G. β-rhombohedral boron: At the crossroads of the chemistry of boron and the physics of frustration. Chem. Rev. 113, 3425 (2013).

Long, M., Wang, P., Fang, H. & Hu, W. Progress, challenges, and opportunities for 2D material based photodetectors. Adv. Funct. Mater. 29, 1803807 (2019).

Qiu, Q. & Huang, Z. Photodetectors of 2D materials from ultraviolet to terahertz waves. Adv. Mater. 33, 2008126 (2021).

Wicklein, B. et al. Thermally insulating and fire-retardant lightweight anisotropic foams based on nanocellulose and graphene oxide. Nat. Nanotechnol. 10, 277–283 (2015).

Si, Y., Yu, J., Tang, X., Ge, J. & Ding, B. Ultralight nanofibre-assembled cellular aerogels with superelasticity and multifunctionality. Nat. Commun. 5, 5802 (2014).

Biener, J. et al. Advanced carbon aerogels for energy applications. Energy Environ. Sci. 4, 656–667 (2011).

Hamedi, M. et al. Nanocellulose aerogels functionalized by rapid layer-by-layer assembly for high charge storage and beyond. Angew. Chem. Int. Ed. 52, 12038–12042 (2013).

Zuo, Y. et al. Accelerating materials discovery with Bayesian optimization and graph deep learning. Mater. Today 51, 126–135 (2021).

Hahn, T., Shmueli, U. & Wilson, A. International Tables for Crystallography (D. Reidel Pub. Co., 1984).

Oganov, A. R. & Glass, C. W. Crystal structure prediction using ab initio evolutionary techniques: Principles and applications. J. Chem. Phys. 124, 244704 (2006).

Rasmussen, C. E. & Williams, C. K. I. Gaussian Processes for Machine Learning (MIT Press, 2006).

Shahriari, B., Swersky, K., Wang, Z., Adams, R. P. & de Freitas, N. Taking the human out of the loop: A review of Bayesian optimization. Proc. IEEE 104, 148–175 (2016).

Choudhary, K. et al. The joint automated repository for various integrated simulations (JARVIS) for data-driven materials design. NPJ Comput. Mater. 6, 173 (2020).

Haastrup, S. et al. The computational 2D materials database: high-throughput modeling and discovery of atomically thin crystals. 2D Mater. 5, 042002 (2018).

Zhou, J. et al. 2DMatPedia, an open computational database of two-dimensional materials from top-down and bottom-up approaches. Sci. Data 6, 86 (2019).

Kingma, D. P. & Ba, J. Adam: A method for stochastic optimization. In Int. Conf. Learn. Represent. (2015).

Weiss, K., Khoshgoftaar, T. M. & Wang, D. A survey of transfer learning. J. Big Data 3, 9 (2016).

van der Maaten, L. & Hinton, G. Visualizing data using t-SNE. J. Mach. Learn. Res. 9, 2579–2605 (2008).

Bahmani, B., Moseley, B., Vattani, A., Kumar, R. & Vassilvitskii, S. Scalable k-means++. Proc. VLDB Endow. 5 (2012).

Jiménez-Luna, J., Grisoni, F. & Schneider, G. Drug discovery with explainable artificial intelligence. Nat. Mach. Intell. 2, 573 (2020).

Kresse, G. & Hafner, J. Ab initio molecular dynamics for liquid metals. Phys. Rev. B 47, 558–561 (1993).

Kresse, G. & Hafner, J. Ab initio molecular-dynamics simulation of the liquid-metal–amorphous-semiconductor transition in germanium. Phys. Rev. B 49, 14251–14269 (1994).

Kresse, G. & Furthmüller, J. Efficient iterative schemes for ab initio total-energy calculations using a plane-wave basis set. Phys. Rev. B 54, 11169–11186 (1996).

Perdew, J. P., Burke, K. & Ernzerhof, M. Generalized gradient approximation made simple. Phys. Rev. Lett. 77, 3865–3868 (1996).

Blöchl, P. E. Projector augmented-wave method. Phys. Rev. B 50, 17953–17979 (1994).

Kresse, G. & Joubert, D. From ultrasoft pseudopotentials to the projector augmented-wave method. Phys. Rev. B 59, 1758–1775 (1999).

Heyd, J., Scuseria, G. E. & Ernzerhof, M. Hybrid functionals based on a screened Coulomb potential. J. Chem. Phys. 118, 8207–8215 (2003).

Li, W., Carrete, J., Katcho, N. A. & Mingo, N. ShengBTE: A solver of the Boltzmann transport equation for phonons. Comput. Phys. Commun. 185, 1747–1758 (2014).

Acknowledgements

This work was sponsored by the National Natural Science Foundation of China (Nos. 12074362, 12374017, 52172136, 11991060, 12088101, and U2230402). Science and Technology Innovation 2030–Quantum Communications and Quantum Computers (2021ZD0303303 & ZD0203080000) and the computing time of the Supercomputing Center of the University of Science and Technology of China are gratefully acknowledged.

Author information

Contributions

C.L. and H.L. contributed equally to developing the framework, preparing the figures, and writing the manuscript. X.Z. contributed to the discussion of the results. Z.L. and S.-H.W. supervised and guided the project. All authors reviewed and edited the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Li, CN., Liang, HP., Zhang, X. et al. Graph deep learning accelerated efficient crystal structure search and feature extraction. npj Comput Mater 9, 176 (2023). https://doi.org/10.1038/s41524-023-01122-4

DOI: https://doi.org/10.1038/s41524-023-01122-4

- Springer Nature Limited
