Abstract

The rise of automation and machine learning (ML) in electron microscopy has the potential to revolutionize materials research through autonomous data collection and processing. A significant challenge lies in developing ML models that rapidly generalize to large data sets under varying experimental conditions. We address this by employing a cycle generative adversarial network (CycleGAN) with a reciprocal space discriminator, which augments simulated data with realistic spatial frequency information. This allows the CycleGAN to generate images nearly indistinguishable from real data and provide labels for ML applications. We showcase our approach by training a fully convolutional network (FCN) to identify single atom defects in a 4.5 million atom data set, collected using automated acquisition in an aberration-corrected scanning transmission electron microscope (STEM). Our method produces adaptable FCNs that can adjust to dynamically changing experimental variables with minimal intervention, marking a crucial step towards fully autonomous harnessing of microscopy big data.

Introduction

Machine learning (ML) techniques have been widely applied in electron microscopy for applications such as atom localization1,2,3, defect identification4,5,6, image denoising7,8,9, determining crystal tilts and thickness10,11,12, classifying crystal structures13,14, optimizing convergence angles15, identifying Bragg disks16, visualizing material deformations17, automated microscope alignment18, and many others. Several recent reviews19,20,21 provide an overview of new and emerging opportunities at the interface of electron microscopy and ML.

One of the biggest challenges of ML in materials research is that it requires a large amount of high-quality training data, which must be paired with the ground truth for supervised learning methods. For example, training a defect identification network requires both a set of images and a known set of defect positions in each image. Because manual labeling is extremely time consuming and prone to human bias and error, the typical approach is to train ML models using simulated data, which simultaneously produces both images and labels. However, simulated scanning transmission electron microscopy (STEM) images deviate non-trivially from experimental images because it is extremely difficult to accurately reproduce complex experimental factors that impact the image, including detector noise22,23, sample drift and scan distortions24,25,26, time-dependent alignment errors27, radiation damage28, and surface contamination29,30. These factors make it challenging to train high-accuracy ML models with simulated data; such training often requires considerable manual optimization to generate a usable training set for a specific experimental data set. Most of these experimental imperfections can be simulated to a certain degree3,5, but doing so accurately requires significant parameter optimization for each set of experimental conditions. To maximize their accuracy and precision, ML models are typically trained using a small distribution of simulation parameters, which are carefully chosen to cover most of the experimental variations. Such models must be re-trained each time the effective resolution or contrast of the image changes, which may occur several times a day, even during a single experimental session. Far from realizing the promise of fully automated image processing, this lack of generalizability of ML models represents a significant barrier for microscopy data processing at the scale of big data.

Here, we construct a cycle generative adversarial network (CycleGAN)31 to minimize the difference between simulated and experimental STEM data, producing realistic training data while simultaneously preserving the ground truth for ML applications. CycleGANs are often used for image-to-image translation, such as style transfer tasks like converting a photograph into a Monet-like painting, or changing an image of a zebra into a horse31. We utilize CycleGANs to generate a high quality, CycleGAN-processed training set for defect identification tasks using a fully convolutional network (FCN)1,2,4,5. We find that FCNs trained on CycleGAN-processed images show comparable defect identification performance with the manually optimized training set, while requiring much less human intervention.

Results and discussions

Generating realistic images with CycleGANs

Figure 1 shows an array of simulated (Fig. 1a–c), CycleGAN-processed (Fig. 1d–f), and experimental (Fig. 1g–i) annular dark field (ADF) STEM images and their power spectra for three different materials: monolayer graphene, monolayer WSe2, and bulk SrTiO3 (see Methods for experimental details). The simulated images are calculated with the ‘incoSTEM’ package in Computem32,33 with minimal parameter optimization. These out-of-the-box simulated images are a poor qualitative and quantitative match for the experimental data for two main reasons. First, in contrast with quantitatively accurate multislice or Bloch wave approaches for STEM image simulation, incoherent approximations such as incoSTEM output images nearly instantaneously and are thus mainly used to generate initial, qualitative image simulations. Second, images simulated using any method lack key aspects of real experimental data, which contain detector noise, drift-induced distortions, probe jittering, lens aberrations, thickness variations, and surface contamination or damage.

Each row displays the real space image along with its power spectrum. The power spectrum of each image is calculated by the fast Fourier transform (FFT) and displayed in log scale for clarity. a–c Semi-quantitative, simulated ADF-STEM images are generated by the Computem program32,33. These simulated images are passed into pre-trained CycleGANs, resulting in the highly realistic images in d–f. Individual CycleGANs are trained for each material system using both simulated and experimental data as the training set. The CycleGANs transfer experimental imperfections, including noise, jittering, distortion, and surface contamination, to simulated images, making the simulated images appear realistic. g–i Experimental ADF-STEM images and their power spectra for comparison. Note that h has been selected to contain the same type of bright defect as in e so that images are more comparable. Scale bars are equal to 1 nm.

Next, we generate realistic STEM images (Fig. 1d–f) by feeding the simulated images (Fig. 1a–c) into the CycleGAN. We trained a separate CycleGAN for each material system, because CycleGANs are typically unable to perform image translation when large shape changes are needed34. The CycleGAN-processed images are similar to the experimental ones in both real and reciprocal space, including their atom size, noise level, image contrast, and contamination. These CycleGAN-processed images can be used as high-quality ML training data. The use of CycleGAN removes the need for manual parameter optimization in the simulation process, but unlike conventional GAN, preserves the original labels and associated ground truth. The CycleGANs are trained with both experimental and out-of-the-box simulated images (see Methods for training details) and do not require paired training data. This makes CycleGAN particularly useful for this ‘simulation-to-experiment’ style transfer task, because a large number of simulated images can be generated without manually matching all atom positions with the experimental data.

CycleGAN architecture and optimization

Figure 2 shows the ‘cycle’ structure of our CycleGAN. In our work, the CycleGAN converts the images between experiment domain X and simulation domain Y. The conversion between domains is commonly called a ‘mapping.’ The CycleGAN provides two mappings, G: X → Y, which makes experimental images look like simulations; and F: Y → X, which makes simulated images look experimental.

The CycleGAN translates images between two domains: (1) the experiment domain X and (2) the simulation domain Y, and has two main components: generators and discriminators. The generator G converts input experimental images x to simulation-like images G(x), and the generator F converts input simulated images y to experiment-like images F(y). The generated images (F(y) and G(x)) are then fed into four discriminators (Dx,img, Dx,FFT, Dy,img, Dy,FFT) along with the raw images (x and y) to evaluate the quality of generated images. Both the input images and their FFTs are examined by the discriminators to calculate the adversarial losses (Ladv), which are used to optimize both generators F and G respectively. By passing the raw images (x and y) with combinations of both generators, identity images (F(x) and G(y)) and cycled images (F(G(x)) and G(F(y))) are also generated. The corresponding identity loss Lid and cycle consistency loss Lcyc are added to ensure the identity and cycle consistency mapping of the generators.

To implement this pair of mappings, the CycleGAN is built on two separate GANs connected in a cyclic manner, where each GAN is responsible for converting images from one domain to the other domain. The main components of our CycleGAN are neural networks called the ‘generator’ and the ‘discriminator’. The goal of a generator is to generate high-quality ‘fake’ images from inputs of another domain, while the goal of a discriminator is to distinguish whether the output from the generator is real or fake. Hence, the training of a CycleGAN is essentially the competition between generators and discriminators. At the end of the training, the final products are two well-trained generators that act as the domain-to-domain mappings G and F. Here, we mainly use the CycleGAN to map simulated images into experiment-like images. However, as we show in Supplementary Fig. 1, the generator G can also be used to effectively remove imperfections from experimental data to produce simulation-like, high signal-to-noise ratio images that can be more easily interpreted through other data-processing methods.

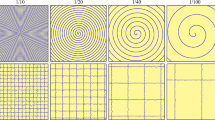

Our CycleGAN contains two generators (G and F), two discriminators in experiment domain X (Dx,img and Dx,FFT), and two discriminators in simulation domain Y (Dy,img and Dy,FFT) (Methods). While GANs typically operate in real space, we add Fourier space discriminators (Dx,FFT and Dy,FFT) because certain information, such as low-frequency contamination and high-frequency noise, is better encoded in Fourier space. The generators are then forced to generate images that can fool not only the real-space image discriminator, but also the Fourier space discriminator. The FFT discriminators are critical for ensuring that both the real- and k-space information of the generated STEM images looks realistic, especially for images with lower signal-to-noise ratio. For example, a CycleGAN trained without the FFT discriminator produces streak artifacts in graphene images and their FFTs, as shown in Supplementary Fig. 2.

The optimization of the 6 neural networks (G, F, and Ds) is done by minimizing a set of loss functions (Methods). CycleGANs contain additional machinery in the form of regularization terms to ensure the stability and reversibility of the two mappings F and G. The first is the cycle-consistency loss Lcyc(F, G), which regularizes the mappings so that a full cycle of forward and backward operation returns the input image back to its original domain and is similar to the initial input. Mathematically, Lcyc enforces F(G(x)) ≈ x, and G(F(y)) ≈ y to preserve major image information while transferring between domains. Additionally, the identity loss Lid aims to have F(x) ≈ x and G(y) ≈ y, so that images would be mapped to themselves if they are already in the target domain. Without these two constraints, it is likely that a trained mapping will map a simulated image into a realistic experiment-like image that will otherwise have no relation to the original input, i.e. it will not preserve important features like atomic defects.
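The two regularization terms can be illustrated with a minimal NumPy sketch. It assumes an L1 (mean absolute difference) image distance, as in the standard CycleGAN formulation, and uses toy functions in place of the trained generators; the names `l1`, `cycle_loss`, `identity_loss`, and `offset` are hypothetical and introduced only for illustration.

```python
import numpy as np

def l1(a, b):
    """Mean absolute difference between two images (assumed distance)."""
    return float(np.mean(np.abs(a - b)))

def cycle_loss(G, F, x, y):
    """L_cyc: F(G(x)) should return to x, and G(F(y)) should return to y."""
    return l1(F(G(x)), x) + l1(G(F(y)), y)

def identity_loss(G, F, x, y):
    """L_id: F(x) should stay close to x, and G(y) should stay close to y."""
    return l1(F(x), x) + l1(G(y), y)

# Toy stand-ins for the generators (hypothetical, not trained networks):
# F adds a constant "contamination" offset, G removes it.
offset = 0.2
F = lambda img: img + offset  # simulation -> experiment-like
G = lambda img: img - offset  # experiment -> simulation-like

rng = np.random.default_rng(0)
x = rng.random((8, 8))  # stand-in experimental patch
y = rng.random((8, 8))  # stand-in simulated patch

print(cycle_loss(G, F, x, y))     # close to 0: the toy mappings invert each other
print(identity_loss(G, F, x, y))  # close to 0.4: each mapping shifts its input by 0.2
```

In this toy case the cycle term is satisfied while the identity term is not, which shows that the two losses constrain different properties of the mappings.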

Evaluation metrics of CycleGAN images

While Fig. 1 shows that CycleGAN-processed images are a good qualitative match with the experimental images, we also examined whether these image types are quantitatively indistinguishable by calculating the Fréchet Inception Distance (FID)35 and Kullback–Leibler (KL) divergences36 between CycleGAN and experimental images (Fig. 3). The FID score is extensively used in the field of computer vision to evaluate the image quality and the performance of generative models37,38,39. The FID score is evaluated by first converting the images from different data sets into feature vectors with a convolutional network (Inception v3) trained on the ImageNet35, and then calculating the Fréchet distance40 between the feature vectors. Note that the feature vectors are extracted through a non-local and non-linear mapping using a convolutional network, specifically designed for computer vision tasks. This process captures high-level, emergent features of the STEM images and allows us to compare the similarity of two different image distributions. In general, a lower FID score indicates higher similarity and better performance of the generative model.
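The Fréchet distance step can be sketched as follows, assuming the feature vectors have already been extracted (the Inception v3 step is omitted) and that each set is summarized by a Gaussian with its sample mean and covariance, as in the usual FID definition. The function name `frechet_distance` and the random test features are hypothetical.

```python
import numpy as np

def frechet_distance(feats_a, feats_b):
    """Frechet distance between Gaussians fitted to two feature sets.

    feats_a, feats_b: arrays of shape (n_samples, n_features), n_features >= 2.
    """
    mu_a, mu_b = feats_a.mean(axis=0), feats_b.mean(axis=0)
    cov_a = np.cov(feats_a, rowvar=False)
    cov_b = np.cov(feats_b, rowvar=False)
    # tr((cov_a cov_b)^(1/2)) equals the sum of the square roots of the
    # eigenvalues of cov_a @ cov_b, which are real and non-negative in theory;
    # clipping guards against small negative values from round-off.
    eigvals = np.linalg.eigvals(cov_a @ cov_b)
    tr_sqrt = np.sqrt(np.clip(eigvals.real, 0.0, None)).sum()
    diff = mu_a - mu_b
    return float(diff @ diff + np.trace(cov_a) + np.trace(cov_b) - 2.0 * tr_sqrt)

rng = np.random.default_rng(0)
feats = rng.normal(size=(500, 4))            # stand-in feature vectors
print(frechet_distance(feats, feats))        # close to 0 for identical sets
print(frechet_distance(feats, feats + 3.0))  # close to 4 * 3**2 = 36 for a pure mean shift
```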

Images are generated from (a) experiments, (b) simulation without noise, (c) simulation with manually optimized noise, and (d) CycleGAN. The FID score measures the dissimilarity between image data sets, so a smaller FID score implies higher similarity between image data sets. The FID scores with respect to the experimental data set are labeled at the bottom right corner of each representative image, with the lowest non-zero value marked in yellow. e–h Histograms of normalized pixel intensity are calculated for each image data set. Each histogram is normalized so that the probability distribution sums to unity. The KL divergence DKL(P∣∣Q) of each data set with respect to the experimental histogram is labeled as DKL at the top right corner of each histogram, with the lowest non-zero value marked in red. Note that both the FID score and KL divergence of intensity histograms are calculated for the entire data set with respect to the experimental data set, where each data set contains roughly 1700 image patches with 256 × 256 pixels. The CycleGAN-generated image set exhibits the lowest FID score and lowest KL divergence, indicating it is the best match for experimental data.

We calculate the FID scores of images generated from simulation without noise (Fig. 3b), simulation with manually optimized noise (Fig. 3c), and CycleGAN (Fig. 3d). We choose experimental STEM images of WSe2 (Fig. 3a) as our reference for the FID score calculation because the ultimate goal is to have a generator that outputs realistic, experiment-like images. We find that the CycleGAN-processed images show the lowest (i.e. best) FID score (FID = 0.35) compared to simulations without noise (FID = 32) and simulations with manually added noise (FID = 0.73). Table 1 shows the FID scores of graphene, WSe2, and SrTiO3.

In addition to the FID score comparison, which involves an additional Inception model, we calculate the KL divergence directly from the pixel intensity histograms of different image data sets (Fig. 3e–h). The KL divergence (DKL) is a type of statistical distance that measures the difference between two probability distributions (P and Q). It is computed as \(D_{\mathrm{KL}}(P\,\|\,Q)=\sum P\log \left(\frac{P}{Q}\right)\), so DKL → 0 when P and Q are similar. In other words, DKL(P∣∣Q) is the amount of information lost when approximating P with Q, so it is widely used as a similarity metric for distributions. We find that the DKL of CycleGAN with respect to the experimental histogram is significantly lower than the others, indicating the pixel intensity distribution is a better match to the experimental data than the other data sets. We also analyze the power spectra of the images and find that CycleGAN-processed images have the closest power distribution to experimental images (Supplementary Fig. 3), again indicating the high similarity between CycleGAN-processed and experimental images.
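A minimal NumPy sketch of the histogram-based KL divergence is given below. The bin count, the [0, 1] intensity range, and the small `eps` added to avoid division by zero in empty bins are assumptions made for illustration; the function names are hypothetical.

```python
import numpy as np

def intensity_histogram(images, bins=64):
    """Histogram of pixel intensities in [0, 1], normalized to sum to 1."""
    counts, _ = np.histogram(np.asarray(images).ravel(), bins=bins, range=(0.0, 1.0))
    return counts / counts.sum()

def kl_divergence(p, q, eps=1e-12):
    """D_KL(P || Q) = sum P * log(P / Q) for two discrete distributions."""
    p = np.asarray(p, dtype=float) + eps  # eps avoids log(0) and division by zero
    q = np.asarray(q, dtype=float) + eps
    p, q = p / p.sum(), q / q.sum()       # renormalize after adding eps
    return float(np.sum(p * np.log(p / q)))

rng = np.random.default_rng(0)
reference = rng.beta(2.0, 5.0, size=(4, 64, 64))  # stand-in reference images
candidate = rng.beta(2.0, 4.0, size=(4, 64, 64))  # stand-in generated images

p = intensity_histogram(reference)
q = intensity_histogram(candidate)
print(kl_divergence(p, p))  # 0 for identical histograms
print(kl_divergence(p, q))  # positive for differing histograms
```

Note that the KL divergence is not symmetric, so the order of the reference and candidate histograms matters.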

Finally, as a qualitative way to understand how the noise transfer works for CycleGANs, we train a CycleGAN on one material system and apply it to another material system. This procedure allows us to separate the learned noise profile from the underlying crystal structure and visualize it (see Supplementary Fig. 4).

Defect identification with CycleGAN and FCN

We demonstrate the utility of the CycleGAN-processed, realistic STEM images for defect identification in a two-dimensional (2D) material, monolayer WSe2. Identifying atomic defects in atomic-resolution STEM data is critical to understanding the materials’ structure-property relations. 2D materials are particularly well suited for this approach because they are atomically thin, making it possible to image individual atoms across large areas5,41. Several studies42,43,44,45 have employed quantitative analysis of ADF-STEM image intensities, or so-called Z-contrast, to identify atomic defects in 2D materials, as the integrated intensity approximately scales with the atomic number as \(Z^{1.5\text{–}1.8}\). However, the integrated intensity is very sensitive to local contamination and microscope conditions, rendering this approach much less reliable for data sets with varying imaging conditions.

Figure 4 shows our defect identification workflow. We utilize an FCN for defect identification; this FCN is trained on the CycleGAN-processed, realistic STEM images. The workflow has 6 main steps: (1) Acquire experimental images using automated scripts, (2) simulate STEM images, (3) train the CycleGAN with both experimental and simulated images, (4) use the CycleGAN to post-process simulated images into realistic images, (5) train the FCN with both CycleGAN-processed images from (4) and the corresponding defect ground truth from (2), and (6) identify atomic defects in experimental images with the FCN. Once set up, the workflow is relatively straightforward with steps being automatically linked together. These steps, and quantitative measurements of the resulting defect identification performance, are described in detail below.

First, we acquire large data sets of experimental STEM images with a custom-built automated acquisition script. We then simulate STEM images using Computem with pre-determined defect types and positions, which constitute the ground truth of defect labels. The experimental images and the simulated images are then used to train a CycleGAN, whose main purpose is to train a generator that can produce realistic images from simulated input images without altering the defect ground truth. The CycleGAN learns the noise distribution from experimental images and adds it to the input simulated images, making them higher quality training data for further supervised learning applications. These CycleGAN-processed images, along with the defect ground truth, are used to train a defect identification FCN. Lastly, the FCN takes in the same experimental images with atomic defects, and returns the locations of those defects. The black arrows represent the input and output data flow, and the gray arrows indicate the input data for neural network training.

First, large-scale experimental STEM images are automatically acquired with our custom-built script46. We acquire 2 experimental data sets of monolayer WSe2 on 2 different days. These data are labeled as ‘Day A’ and ‘Day B’, which include 107 and 211 images, respectively, with representative images shown in Fig. 5 (Methods). These data sets are not only massive (they contain 1.5 and 3 million atoms, respectively), but they also demonstrate the typical variations of imaging conditions on different days. The irregular, bright haziness on the atomic-resolution images corresponds to carbon-based surface contamination, whose spatial distribution, thickness, and intensity vary drastically between different regions in a single specimen. In addition, microscope instabilities can cause degradation of image resolution as shown in Fig. 5d, h, where the resolution is degraded from 96 to 110 pm. In each of these data sets, we manually label single Se vacancy defects in 3 images to serve as the ground truth for FCN performance evaluation (Methods); this manual labeling is for testing purposes only and would not be needed in a typical workflow for data analysis. In total, 2 different test sets with 3 images each are constructed and labeled as ‘A’ and ‘B’. By combining the experimental data sets (Day A and Day B) and test sets (A and B) separately, we obtain the 3rd experimental data set (Day AB) and the 3rd test set (AB). Note that ‘Day AB’ contains 107 + 211 = 318 images, while the test set ‘AB’ has 3 + 3 = 6 images.

Experimental STEM images acquired on Day A (a–c) and B (e–g) showing variations within and between days due to sample contamination and microscope instabilities. d, h Power spectra obtained with FFT of a and e demonstrate the variation of image resolution, from 96 pm to 110 pm, determined by the highest transferred spatial frequency marked with white circles. The power spectra are displayed in log scale for visibility. Scale bars are equal to 1 nm.

We then generate incoSTEM-simulated STEM images with defect labels, which contain the same atomic defect species as the experimental STEM images. Note that CycleGANs do not require that defect positions in the experimental and simulated images be identical for training, because it is an unsupervised learning technique for style-transfer between unpaired images31. Here, the ‘style’ being transferred is the ‘local texture’, or noise, rather than the defects; the simulated images are generated with random defect distributions. Using both the experimental and simulated STEM images, we train the CycleGAN to generate realistic, high-quality simulated training data that matches the experimental conditions, while keeping the initial defect positions unchanged. Figure 6 shows the defect positions before and after the CycleGAN processing. The defect positions (circled) are preserved by the CycleGAN because only the ‘local texture’, or noise, is being transferred to the simulated images. This allows us to train an FCN with the combination of CycleGAN-processed STEM images and corresponding defect labels that are produced in the initial simulation. Once the CycleGAN is trained, it can generate an unlimited amount of experiment-like training data because the sole input is the labeled out-of-the-box simulated data, which can be generated almost instantaneously.

a Atomic structure of WSe2 with atomic defects. The model is rotated by 10° for better visualization of the overlapping Se atoms and atomic defects. b Simulated images are generated by Computem with pre-determined defect types and positions, so the ground truth of the defect labels is known. c By using the simulated images (b) as input, the CycleGAN-processed images show a realistic, experiment-like noise distribution while keeping the defect labels unaltered. Note that only 5 defects in the field of view are circled for better clarity. Scale bars are equal to 1 nm.

FCN performance with different training sets

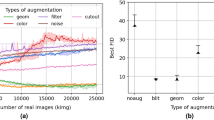

Lastly, we use the CycleGAN-generated images to train FCNs and evaluate their performance for defect identification on experimental images (Methods). Table 2 shows the FCN performance with different FCN training sets. To demonstrate the flexibility of our CycleGAN approach, we train 5 FCNs with 5 different training sets and evaluate their performance with 3 manually labeled experimental test sets (A, B, and AB) as described above.

The 5 different FCN training sets are: (1) simulation without noise or any processing ‘no noise’, (2) simulation with manually optimized noise ‘manual noise’, as described by our previous work5, (3) CycleGAN-A-processed images ‘CycleGAN-A’, (4) CycleGAN-B-processed images ‘CycleGAN-B’, and (5) CycleGAN-AB-processed images ‘CycleGAN-AB’. In this naming convention, CycleGAN-A-processed images means that the CycleGAN is trained with the experimental data set from day A. The FCN training sets are kept the same size, and other training details are described in the Methods. The FID scores of these training sets are described in Supplemental Table 1. Conventional classifier metrics including precision, recall, and F1 scores47 are chosen to evaluate the trained FCNs. Higher values indicate better FCN performance. While FCNs are typically tested with simulated data, FCNs that perform well on simulated data do not necessarily perform well on experimental data. For example, we show in Supplemental Table 2 that all of our FCNs exhibit high precision, recall, and F1 scores ( > 96%) when tested on simulated data. Here, we use a more challenging test for the FCNs: evaluating their performance directly on manually labeled experimental data.
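For reference, the three classifier metrics follow from the counts of true positives, false positives, and false negatives. The sketch below uses hypothetical counts chosen only to illustrate the arithmetic; they are not results from this work, and the function name is likewise hypothetical.

```python
def precision_recall_f1(tp, fp, fn):
    """Precision, recall, and F1 score from detection counts.

    tp: predicted defects that match a labeled defect
    fp: predicted defects with no matching label
    fn: labeled defects that were not predicted
    """
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0.0:
        return precision, recall, 0.0
    f1 = 2.0 * precision * recall / (precision + recall)
    return precision, recall, f1

# Hypothetical counts for one test image (illustration only).
precision, recall, f1 = precision_recall_f1(tp=90, fp=10, fn=30)
print(precision, recall, f1)  # 0.9, 0.75, and about 0.818
```

Because F1 is the harmonic mean of precision and recall, it is pulled towards the lower of the two values.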

When evaluated on experimental data, we observe excellent FCN performance for the CycleGAN processed data. As shown in Table 2, the FCNs trained with CycleGAN-processed images perform comparably well with the ones trained with manually optimized simulated images, while requiring significantly less human intervention. Note that precision and recall values above 90% are considered exceptional, and often only achieved while training and testing with simulated data.

We obtain the best results when we test the FCN on the same data set of images that the CycleGAN is trained on (gray cells in Table 2). This suggests the CycleGAN is adapting to the microscopy conditions of that day. To further validate this, we train the CycleGAN on one day (i.e. data set) and then test the FCN using images from a different day. Here we should expect poor results due to the differing microscopy conditions, and this is often what we find (the non-gray cells in Table 2), giving further evidence that the CycleGAN is learning local-in-time microscopy conditions. In comparison, the performance of the FCN trained with noise-free images (first row of Table 2) is significantly worse than the other 4 FCNs. This suggests that adding proper noise into the simulated images is necessary for the FCNs to correctly locate defects in experimental STEM images. This is not surprising because one would expect that FCNs perform the best when the input is well-described by the training set.

While we use 107 (Day A), 211 (Day B), and 318 (Day AB) experimental images for the CycleGAN training for the tests described above, we often achieve comparable FCN performance using as few as 6 experimental images, though these small training sets occasionally lead to poor FCN performance (Supplemental Table 3). Notably, this small number of experimental images required for CycleGAN training opens the door to dynamically evolving ML models. Although training a CycleGAN from scratch takes 6 hours in our case, updating a pre-trained CycleGAN with a small set of new experimental images may take significantly less time, which could enable nearly real-time adaptation as the microscope conditions or sample properties evolve. While we chose FCN and defect identification as a simple example to demonstrate the application of CycleGAN, it is also possible to combine CycleGAN with more advanced architectures such as ensemble learning48 to achieve even higher generalization capacity for real-time microscopy applications.

In conclusion, we developed a CycleGAN approach to generate high quality, realistic STEM images and benchmarked its performance against manually optimized simulated images. Using this approach, we show that an FCN trained on CycleGAN-generated data achieves high precision for identifying atomic defects in STEM images, enabling flexible, high throughput data processing for large, automatically acquired experimental data sets. Our CycleGAN approach could potentially be applied to most imaging techniques with a proper forward model for generating realistic images for ML applications in microscopy, such as autonomous collection and processing of atomic resolution materials data20,49,50.

Methods

STEM sample preparation

The monolayer graphene (Grolltex) was wet-transferred from a Cu foil to an in situ heating chip (Protochips). The monolayer WSe2 was first mechanically exfoliated from bulk crystals (2D Semiconductors) using the Au-assisted exfoliation method51, and was then transferred onto perforated TEM grids (Quantifoil) using a polymer-free transfer52 modified with subsequent KOH and deionized water baths after the grid contact. The SrTiO3 sample was fabricated using standard focused ion beam lift-out procedures in an FEI Helios 600i Dual Beam FIB-SEM system.

STEM image acquisition

ADF-STEM image acquisition was conducted in a Thermo Fisher Scientific Themis-Z aberration-corrected STEM operated at 80 kV. For atomic-resolution ADF-STEM imaging, the point resolution was about 1 Å with 25 mrad convergence semi-angle, 35 pA probe current, 63 to 200 mrad collection semi-angles for WSe2 and SrTiO3, 14–20 pm pixel size and a total dwell time of 20 μs per pixel using 10-frame averages. The STEM images for graphene were acquired with 100 pA, a 25 mrad inner collection angle, and were imaged after annealing at 1000 °C for a few minutes to reduce the contamination.

STEM image simulation

The STEM simulation is done with the ‘incoSTEM’ package in Computem32,33 with minimal parameter optimization. The ‘Simulation’, or ‘no noise’ images in Fig. 3b and Table 2 are simulated with the same experimental conditions described above, except that no counting noise, source size, or aberrations are included. The manually optimized parameters for the ‘manual noise’ data set in both Fig. 3c and Table 2 include surface contamination extracted from experimental images, Poisson and Gaussian noise, image shear, source size, aberrations up to 2nd order, and image brightness/contrast. All atom positions are randomly perturbed (std = 0.01 Å) before simulation to remove a periodic sampling artifact that can occur when atoms are not exactly located on a pixel, which would show up as stripes in their FFTs.

CycleGAN architecture

The generators follow a U-net architecture as described in the appendix of ref. 53. The discriminators are patch-level31, meaning that instead of the output being a single number indicating whether an image is real or fake, the discriminator outputs a 30 × 30 array, where each element denotes the realness of a 70 × 70 patch of the original image. One difference between the architecture here and that in ref. 53 is that we use instance normalization instead of batch normalization in each layer. The FFT discriminators are implemented using the log of the power spectrum of the image, or \(\log (|FT(I)|^{2})\), where I is the image, and FT is the Fourier transform. Therefore, only the amplitude information is considered in the FFT discriminators. It is possible to extend the approach to complex values for phase information16, but we do not expect a big difference in this case because the spatial frequencies of experimental imperfections (noise and contamination) do not necessarily hold specific phases in STEM images. Additionally, their spatial distributions are partially regularized by the image discriminators as well.
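The input to the FFT discriminators can be sketched in NumPy as follows. The small `eps` inside the logarithm and the `fftshift` centering are assumptions added for numerical safety and display convenience, and the function name is hypothetical.

```python
import numpy as np

def log_power_spectrum(image, eps=1e-8):
    """Return log(|FT(I)|^2) with the zero frequency shifted to the center."""
    ft = np.fft.fftshift(np.fft.fft2(image))
    # |FT|^2 keeps only the amplitude; the phase is discarded here.
    return np.log(np.abs(ft) ** 2 + eps)

# A constant image has all of its power at zero spatial frequency.
flat = np.ones((8, 8))
spectrum = log_power_spectrum(flat)
peak = np.unravel_index(np.argmax(spectrum), spectrum.shape)
print(spectrum.shape, peak)  # the peak sits at the central pixel (4, 4)
```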

CycleGAN training

The training set of the CycleGAN is composed of both experimental and simulated STEM images. To prepare the CycleGAN training set, for each data set, we normalize all the image intensities into [-1, 1] within their group by their minimum and maximum value after a saturation step (±3.5σ). We then cut them into 256 × 256 patches. The batch size is 42; as each patch is added to a batch, it is randomly augmented by a combination of rotation (multiples of 90 degrees) and flipping (vertically or horizontally). The out-of-the-box simulated images initially contain no noise, which is detrimental to a CycleGAN because a sufficient amount of variability is required to generate unique images. We add Gaussian noise (std = 0.1) to the out-of-the-box simulated images to ensure enough variability across the simulation data set. Note that this parameter does not require further manual optimization. All networks were trained for 297 epochs, where in the first 148 epochs, the learning rate was 0.032, and from epochs 148–297, the learning rate linearly decayed to zero. Supplementary Fig. 5 shows the training loss as a function of epochs. One key difference between traditional CycleGAN training and ours is that the FFT discriminator loss is also incorporated in the generator loss alongside the real-space discriminator loss. For the discriminators such as DX,img, and DY,img, the loss functions are
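The normalization and augmentation steps described above can be sketched as follows. This is a minimal illustration, assuming the ±3.5σ saturation is applied around the group mean and that each flip is applied with probability 0.5; the function names are hypothetical, and patch extraction is omitted.

```python
import numpy as np

def normalize_group(images, n_sigma=3.5):
    """Clip a group of images to mean +/- n_sigma * std, then rescale to [-1, 1]."""
    images = np.asarray(images, dtype=float)
    mu, sigma = images.mean(), images.std()
    clipped = np.clip(images, mu - n_sigma * sigma, mu + n_sigma * sigma)
    lo, hi = clipped.min(), clipped.max()  # assumes the group is not constant
    return 2.0 * (clipped - lo) / (hi - lo) - 1.0

def random_augment(patch, rng):
    """Random rotation by a multiple of 90 degrees, then optional flips."""
    patch = np.rot90(patch, k=int(rng.integers(0, 4)))
    if rng.random() < 0.5:
        patch = np.flipud(patch)  # vertical flip
    if rng.random() < 0.5:
        patch = np.fliplr(patch)  # horizontal flip
    return patch

rng = np.random.default_rng(0)
group = rng.normal(loc=100.0, scale=10.0, size=(3, 256, 256))  # stand-in images
normalized = normalize_group(group)
augmented = random_augment(normalized[0], rng)
print(normalized.min(), normalized.max(), augmented.shape)
```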

and similarly for DX,FFT, and DY,FFT. For the generators G : X → Y and F : Y → X, the loss functions are

Here, the adversarial loss \(\mathcal{L}_{\mathrm{adv}}\) that is commonly used in normal GANs54 is the term that aims to fool its respective discriminator(s):

The cycle-consistency loss is given by

and the identity loss is given by

For the cycle-consistency loss, λcyc was 10, and for the identity loss, λid was 5. Each CycleGAN in Table 2 was trained on a different number of 1K-resolution STEM images. CycleGAN-A has 107 images from Day A, CycleGAN-B has 211 images from Day B, and CycleGAN-AB has 318 images from Day AB. All models were built with TensorFlow and run on the Delta cluster at the National Center for Supercomputing Applications (NCSA). There, training a CycleGAN model from scratch takes around 6 hours on a single NVIDIA A40 GPU.
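For orientation, the loss terms named above can be written out in the standard CycleGAN form of ref. 31, extended with the FFT discriminators. The following is a sketch under stated assumptions, not necessarily the exact expressions used in this work: it assumes least-squares adversarial terms, L1 cycle-consistency and identity terms, and equal weighting of the image and FFT adversarial terms. Only the losses for \(D_{Y,\mathrm{img}}\) and G are shown; those for \(D_{X,\mathrm{img}}\) and F follow by exchanging X and Y (and G and F), and \(D_{Y,\mathrm{FFT}}\) is understood to act on the log power spectrum of its input.

```latex
\begin{align*}
\mathcal{L}_{D_{Y,\mathrm{img}}} &= \mathbb{E}_{y}\left[\left(D_{Y,\mathrm{img}}(y) - 1\right)^{2}\right]
  + \mathbb{E}_{x}\left[D_{Y,\mathrm{img}}(G(x))^{2}\right] \\
\mathcal{L}_{G} &= \mathcal{L}_{\mathrm{adv}}(G, D_{Y,\mathrm{img}})
  + \mathcal{L}_{\mathrm{adv}}(G, D_{Y,\mathrm{FFT}})
  + \lambda_{\mathrm{cyc}}\,\mathcal{L}_{\mathrm{cyc}}(G, F)
  + \lambda_{\mathrm{id}}\,\mathcal{L}_{\mathrm{id}}(G) \\
\mathcal{L}_{\mathrm{adv}}(G, D) &= \mathbb{E}_{x}\left[\left(D(G(x)) - 1\right)^{2}\right] \\
\mathcal{L}_{\mathrm{cyc}}(G, F) &= \mathbb{E}_{x}\left[\lVert F(G(x)) - x \rVert_{1}\right]
  + \mathbb{E}_{y}\left[\lVert G(F(y)) - y \rVert_{1}\right] \\
\mathcal{L}_{\mathrm{id}}(G) &= \mathbb{E}_{y}\left[\lVert G(y) - y \rVert_{1}\right]
\end{align*}
```

With λcyc = 10 and λid = 5, the cycle-consistency term is weighted twice as strongly as the identity term, and both outweigh each individual adversarial term in this form.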

FCN architecture and training

We employ the same FCN architecture as in our previous report5. We used the Adam optimizer with a categorical cross-entropy loss function. Each 1024 × 1024 (1K-resolution) STEM image used for FCN training is cut into sixteen 256 × 256 patches with stride (256, 256). Each patch is then rotated, flipped, and scale jittered to give 24 different augmentations. Hence, each 1K-resolution STEM image yields 16 × 24 = 384 patches, which are divided into a training set and a simulated test set in a 10:1 ratio. All FCN training uses 107 1K-resolution STEM images. During training, each epoch randomly draws 1000 patches of 256 × 256 pixels from the training set. We train for 500 epochs.
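The patch bookkeeping above can be checked with a short NumPy sketch. The tiling follows the stated stride of (256, 256). The text does not say how the 24 augmentations per patch are composed, so the code only enumerates the eight rotation and flip views; reaching 24 by combining them with three scale factors would be one possible, purely hypothetical, decomposition. The approximate split sizes assume the 10:1 ratio is applied to the pooled patches. Function names are hypothetical.

```python
import numpy as np


def cut_patches(image, size=256, stride=256):
    """Tile a 2D image into size x size patches with the given stride."""
    h, w = image.shape[:2]
    return np.stack([image[r:r + size, c:c + size]
                     for r in range(0, h - size + 1, stride)
                     for c in range(0, w - size + 1, stride)])


def dihedral_views(patch):
    """Return the 8 views generated by 90-degree rotations and a horizontal flip."""
    views = []
    for k in range(4):
        rotated = np.rot90(patch, k)
        views.append(rotated)
        views.append(np.fliplr(rotated))
    return views


image = np.arange(1024 * 1024, dtype=np.float32).reshape(1024, 1024)
patches = cut_patches(image)
views = dihedral_views(patches[0])

n_images = 107               # 1K-resolution images used for FCN training
n_augmentations = 24         # per patch, as stated in the text
patches_per_image = len(patches) * n_augmentations
pool = n_images * patches_per_image
n_test = pool // 11          # approximate 10:1 split of the pooled patches
n_train = pool - n_test

print(patches.shape, len(views), patches_per_image, pool, n_train, n_test)
```

Under these assumptions the pool holds 41,088 patches, of which roughly 37,353 are used for training. Drawing 1000 patches per epoch for 500 epochs therefore amounts to 500,000 patch draws in total.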

FCN test sets

The FCNs are tested with simulated (Supplementary Table 2) and experimental (Table 2) test sets. The simulated test sets are generated along with the FCN training sets but are set aside before FCN training. The experimental test sets are constructed by selecting three images each from the Day A (107 images) and Day B (211 images) experimental data sets. The images are selected to roughly cover the entire experimental time span, which is usually 4–8 hours. Single Se vacancy positions are determined from the local contrast, as a single vacancy in monolayer WSe2 produces only half of the ADF-STEM pixel intensity of a defect-free Se column. Each image is lightly Fourier filtered (high-pass filtering and masking of the Bragg reflections) to aid manual labeling, while the final labels are confirmed against the raw image. Test images are 21 × 21 nm2 in size with 1024 × 1024 pixels, and test sets A and B contain on average 117 and 177 single Se vacancies per image, respectively.

Data availability

The data sets are available on Zenodo: https://doi.org/10.5281/zenodo.7696721.

Code availability

The code is available on GitHub: https://github.com/ClarkResearchGroup/stem-learning.

References

Ziatdinov, M. et al. Deep learning of atomically resolved scanning transmission electron microscopy images: chemical identification and tracking local transformations. ACS Nano 11, 12742–12752 (2017).

Madsen, J. et al. A deep learning approach to identify local structures in atomic-resolution transmission electron microscopy images. Adv. Theory Simul. 1, 1800037 (2018).

Lin, R., Zhang, R., Wang, C., Yang, X.-Q. & Xin, H. L. TEMImageNet training library and AtomSegNet deep-learning models for high-precision atom segmentation, localization, denoising, and deblurring of atomic-resolution images. Sci. Rep. 11, 5386 (2021).

Maksov, A. et al. Deep learning analysis of defect and phase evolution during electron beam-induced transformations in WS2. npj Comput. Mater. 5, 12 (2019).

Lee, C.-H. et al. Deep learning enabled strain mapping of single-atom defects in two-dimensional transition metal dichalcogenides with sub-picometer precision. Nano Lett. 20, 3369–3377 (2020).

Guo, Y. et al. Defect detection in atomic-resolution images via unsupervised learning with translational invariance. npj Comput. Mater. 7, 180 (2021).

Quan, T. M. et al. Removing imaging artifacts in electron microscopy using an asymmetrically cyclic adversarial network without paired training data. In 2019 IEEE/CVF International Conference on Computer Vision Workshop (ICCVW), 3804–3813 (IEEE, 2019). https://ieeexplore.ieee.org/document/9022346/.

Ede, J. M. & Beanland, R. Improving electron micrograph signal-to-noise with an atrous convolutional encoder-decoder. Ultramicroscopy 202, 18–25 (2019).

Wang, F., Henninen, T. R., Keller, D. & Erni, R. Noise2Atom: unsupervised denoising for scanning transmission electron microscopy images. Appl. Microsc. 50, 23 (2020).

Xu, W. & LeBeau, J. M. A deep convolutional neural network to analyze position averaged convergent beam electron diffraction patterns. Ultramicroscopy 188, 59–69 (2018).

Zhang, C., Feng, J., DaCosta, L. R. & Voyles, P. M. Atomic resolution convergent beam electron diffraction analysis using convolutional neural networks. Ultramicroscopy 210, 112921 (2020).

Yuan, R., Zhang, J., He, L. & Zuo, J. M. Training artificial neural networks for precision orientation and strain mapping using 4D electron diffraction datasets. Ultramicroscopy 231, 113256 (2021).

Aguiar, J. A., Gong, M. L., Unocic, R. R., Tasdizen, T. & Miller, B. D. Decoding crystallography from high-resolution electron imaging and diffraction datasets with deep learning. Sci. Adv. 5, 1–10 (2019).

Kaufmann, K. et al. Crystal symmetry determination in electron diffraction using machine learning. Science 367, 564–568 (2020).

Schnitzer, N., Sung, S. H. & Hovden, R. Optimal STEM convergence angle selection using a convolutional neural network and the Strehl Ratio. Microsc. Microanal. 26, 921–928 (2020).

Munshi, J. et al. Disentangling multiple scattering with deep learning: application to strain mapping from electron diffraction patterns. npj Comput. Mater. 8, 254 (2022).

Shi, C. et al. Uncovering material deformations via machine learning combined with four-dimensional scanning transmission electron microscopy. npj Comput. Mater. 8, 114 (2022).

Xu, M., Kumar, A. & LeBeau, J. M. Towards augmented microscopy with reinforcement learning-enhanced workflows. Microsc. Microanal. 28, 1952–1960 (2022).

Ede, J. M. Deep learning in electron microscopy. Mach. Learn. Sci. Technol. 2, 011004 (2021).

Kalinin, S. V. et al. Machine learning in scanning transmission electron microscopy. Nat. Rev. Methods Prim. 2, 11 (2022).

Botifoll, M., Pinto-Huguet, I. & Arbiol, J. Machine learning in electron microscopy for advanced nanocharacterization: current developments, available tools and future outlook. Nanoscale Horiz. 7, 1427–1477 (2022).

Seki, T., Ikuhara, Y. & Shibata, N. Theoretical framework of statistical noise in scanning transmission electron microscopy. Ultramicroscopy 193, 118–125 (2018).

Jones, L. Quantitative ADF STEM: acquisition, analysis and interpretation. In IOP Conference series: materials science and engineering, vol. 109 (IOP Publishing Ltd, 2016).

Braidy, N., Le Bouar, Y., Lazar, S. & Ricolleau, C. Correcting scanning instabilities from images of periodic structures. Ultramicroscopy 118, 67–76 (2012).

Ophus, C., Ciston, J. & Nelson, C. T. Correcting nonlinear drift distortion of scanning probe and scanning transmission electron microscopies from image pairs with orthogonal scan directions. Ultramicroscopy 162, 1–9 (2016).

Savitzky, B. H. et al. Image registration of low signal-to-noise cryo-STEM data. Ultramicroscopy 191, 56–65 (2018).

Schramm, S. M., van der Molen, S. J. & Tromp, R. M. Intrinsic instability of aberration-corrected electron microscopes. Phys. Rev. Lett. 109, 163901 (2012).

Egerton, R. Control of radiation damage in the TEM. Ultramicroscopy 127, 100–108 (2013).

Hettler, S. et al. Carbon contamination in scanning transmission electron microscopy and its impact on phase-plate applications. Micron 96, 38–47 (2017).

Goh, Y. M. et al. Contamination of TEM holders quantified and mitigated with the open-hardware, high-vacuum bakeout system. Microsc. Microanal. 26, 906–912 (2020).

Zhu, J.-Y., Park, T., Isola, P. & Efros, A. A. Unpaired image-to-image translation using cycle-consistent adversarial networks. In 2017 IEEE international conference on computer vision (ICCV), vol. 2017-Octob, 2242–2251 (IEEE, 2017).

Kirkland, E. J. Computem, http://sourceforge.net/projects/computem (2013).

Kirkland, E. J. Advanced computing in electron microscopy 3 edn (Springer Cham, 2020) https://doi.org/10.1007/978-3-030-33260-0.

Gokaslan, A., Ramanujan, V., Ritchie, D., Kim, K. I. & Tompkin, J. Improving shape deformation in unsupervised image-to-image translation. In Proceedings of the European Conference on Computer Vision (ECCV), 649–665 (ECCV, 2018).

Heusel, M., Ramsauer, H., Unterthiner, T., Nessler, B. & Hochreiter, S. GANs trained by a two time-scale update rule converge to a local Nash equilibrium. In Advances in neural information processing systems, vol. 30 (NIPS, 2017).

Kullback, S. & Leibler, R. A. On Information and Sufficiency. Ann. Math. Stat. 22, 79–86 (1951).

Lucic, M., Kurach, K., Michalski, M., Gelly, S. & Bousquet, O. Are GANs created equal? A large-scale study. Advances in Neural Information Processing Systems vol. 31 (NIPS, 2018).

Borji, A. Pros and cons of GAN evaluation measures. Comput. Vis. Image Underst. 179, 41–65 (2019).

Ren, C., Ziemann, A. K., Theiler, J. & Durieux, A. Deep snow: synthesizing remote sensing imagery with generative adversarial nets. In Algorithms, Technologies, and Applications for Multispectral and Hyperspectral Imagery XXVI, (eds. Messinger, D. W. & Velez-Reyes, M.) 33 (SPIE, 2020).

Dowson, D. & Landau, B. The Fréchet distance between multivariate normal distributions. J. Multivar. Anal. 12, 450–455 (1982).

Huang, P. Y. et al. Imaging atomic rearrangements in two-dimensional silica glass: watching Silica’s dance. Science 342, 224–227 (2013).

Krivanek, O. L. et al. Atom-by-atom structural and chemical analysis by annular dark-field electron microscopy. Nature 464, 571–574 (2010).

Azizi, A. et al. Defect coupling and sub-angstrom structural distortions in W1-xMoxS2 monolayers. Nano Lett. 17, 2802–2808 (2017).

Zheng, Y. J. et al. Point defects and localized excitons in 2D WSe2. ACS Nano 13, 6050–6059 (2019).

Ding, S., Lin, F. & Jin, C. Quantify point defects in monolayer tungsten diselenide. Nanotechnology 32, 255701 (2021).

Lee, C.-H. et al. Automated acquisition and deep learning of 2D materials on the million-atom scale. Microsc. Microanal. 28, 3062–3063 (2022).

Murphy, K. P. Machine learning: a probabilistic perspective. (MIT Press, Cambridge, 2012).

Roccapriore, K. M. et al. Probing electron beam induced transformations on a single-defect level via automated scanning transmission electron microscopy. ACS Nano 16, 17116–17127 (2022).

Spurgeon, S. R. et al. Towards data-driven next-generation transmission electron microscopy. Nat. Mater. 20, 274–279 (2021).

Olszta, M. et al. An automated scanning transmission electron microscope guided by sparse data analytics. Microsc. Microanal. 28, 1–11 (2022).

Velický, M. et al. Mechanism of gold-assisted exfoliation of centimeter-sized transition-metal dichalcogenide monolayers. ACS Nano 12, 10463–10472 (2018).

Pacilé, D., Meyer, J. C., Girit, Ç. Ö. & Zettl, A. The two-dimensional phase of boron nitride: few-atomic-layer sheets and suspended membranes. Appl. Phys. Lett. 92, 133107 (2008).

Isola, P., Zhu, J.-Y., Zhou, T. & Efros, A. A. Image-to-image translation with conditional adversarial networks. In 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), vol. 2017-Janua, 5967–5976 (IEEE, 2017). http://ieeexplore.ieee.org/document/8100115/.

Goodfellow, I. J. et al. Generative adversarial networks. In Advances in neural information processing systems vol. 27 (NIPS, 2014).

Acknowledgements

This material is based upon work supported by the U.S. Department of Energy, Office of Science, Office of Basic Energy Sciences, Division of Materials Sciences and Engineering under award number DE-SC0020190, which supported the electron microscopy and related data analysis. We acknowledge Yue Zhang and Prof. Arend van der Zande for the WSe2 sample fabrication. This work was carried out in part in the Materials Research Laboratory Central Facilities at the University of Illinois Urbana–Champaign. This research is also part of the Delta research computing project, which is supported by the National Science Foundation (award OCI 2005572), and the State of Illinois. Delta is a joint effort of the University of Illinois Urbana–Champaign and its National Center for Supercomputing Applications.

Author information

Contributions

Under supervision by B.K.C., A.K. built and evaluated the CycleGAN and FCN models, and conducted the data set similarity analysis. Under supervision by P.Y.H., C.-H.L. acquired and analyzed the experimental and simulated STEM images, and conducted the data set similarity analysis. A.K. and C.-H.L. contributed equally to this work and are considered as co-first authors. All authors read and contributed to the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Khan, A., Lee, CH., Huang, P.Y. et al. Leveraging generative adversarial networks to create realistic scanning transmission electron microscopy images. npj Comput Mater 9, 85 (2023). https://doi.org/10.1038/s41524-023-01042-3

DOI: https://doi.org/10.1038/s41524-023-01042-3
