Abstract

High Entropy Alloys (HEAs) are composed of multiple principal elements and constitute a major paradigm in metals research. The HEA space is vast, and an exhaustive exploration is improbable. Therefore, a thorough estimation of the phases present in an HEA is of paramount importance for alloy design. Machine Learning offers a feasible and inexpensive way to predict possible new HEAs on the fly. A deep neural network (DNN) model for the elemental system Mn, Ni, Fe, Al, Cr, Nb, and Co is developed using a dataset generated by high-throughput computational thermodynamic calculations with Thermo-Calc. The feature list used for the neural network is built from the literature and freely available databases. A feature significance analysis reproduces the reported trends linking HEA phase constitution to elemental properties and extends them by identifying previously overlooked features. The final regressor has a coefficient of determination (r²) greater than 0.96 for the most recurrent phases, and its functionality is tested by running optimization tasks that simulate those required in alloy design. The developed DNN is an example of an emulator that can be used in fast, real-time materials discovery and design tasks.

INTRODUCTION

For much of human history, the development of metallurgy has been intrinsically tied to the development of civilization. Over the course of modern history, new alloys were made by selecting one principal element and adding other elements to this matrix to achieve the desired properties, forming a principal-element alloy family. However, the number of elements in the periodic table is limited, and so is the number of alloy families that can be developed conventionally. High Entropy Alloys (HEAs)1,2,3,4,5 are defined as alloys composed of five or more elements with concentrations between 5 at.% and 35 at.%6. Yeh et al. coined the term and stated that the configurational entropy (ΔScon) reaches its maximum when the elements are present in equal proportions and increases with the number of elements2. This high configurational entropy would contribute to the stability of solid solutions relative to competing phases (such as intermetallic phases). Although configurational entropy is now considered to play a minor role as a key alloying concept in HEAs, their unique properties and very large search space make them a rich and attractive research area for the metallurgy community.
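The entropy argument can be made concrete with the ideal configurational entropy of mixing, \(\Delta S_{conf} = -R\sum_i x_i \ln x_i\), which for an equimolar n-component alloy reduces to \(R\ln n\). The short sketch below is a minimal illustration of that textbook formula, not code from this work; the same quantity appears later as the ideal entropy of mixing Sid.

```python
import numpy as np

R = 8.314  # gas constant, J/(mol K)

def ideal_mixing_entropy(x):
    """Ideal configurational entropy of mixing, -R * sum(x_i * ln x_i), in J/(mol K)."""
    x = np.asarray(x, dtype=float)
    x = x / x.sum()          # normalise amounts to mole fractions
    x = x[x > 0]             # absent elements contribute 0 (limit of x ln x as x -> 0)
    return -R * np.sum(x * np.log(x))

# Equimolar alloys reach the maximum R ln n, which grows with the number of elements n
for n in (3, 5, 7):
    print(n, round(ideal_mixing_entropy(np.ones(n)) / R, 3))  # 1.099, 1.609, 1.946 (= ln n)
```

Any departure from equimolarity lowers the value, which is why entropy alone favors compositions near the center of the composition space.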

Despite their promise, the vast compositional space of HEAs makes exhaustive experimental study unfeasible. To address this problem, there has been considerable effort to develop Machine Learning (ML) techniques that make the exploration of the HEA space more efficient and effective. In fact, ML-guided research in the HEA field has covered substantial ground, particularly in identifying single-phase solid solutions amenable to further (experimental) development. Traditionally, the most widely used criteria for designing solid solutions are the empirical Hume-Rothery rules7,8. However, since the distinction between solute and solvent is nebulous in HEAs, the thermodynamic relations are modified accordingly9,10. Parameterizations of unary and binary properties have been used to draw empirical relationships for phase selection11,12. Most notably, the average formation enthalpy, the atomic size difference13, and the Valence Electron Concentration (VEC)14 have been proposed as discerning parameters for phase selection. Recently, the idea behind the Hume-Rothery rules has stimulated further interest in developing ML frameworks for alloy design12. The accessibility of open-source ML algorithms and a constantly growing database of experimentally synthesized HEAs have opened the way for recent ML-assisted exploration and phase stability predictions in the HEA chemical space11,12,15,16,17,18,19,20,21,22,23. Zhou et al.12 fed a featurization of 13 design parameters, heavily based on thermodynamic properties, into different ML models, which made it possible to classify alloys into four classes. Wu et al.16 developed an ANN model to predict HEAs with near-eutectic compositions; this ML-assisted design of compositionally complex HEAs yielded superior mechanical properties.
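The classic descriptors named above have simple closed forms in the HEA literature: the average VEC, \(\mathrm{VEC}=\sum_i c_i\,\mathrm{VEC}_i\); the atomic size difference, \(\delta = 100\sqrt{\sum_i c_i (1 - r_i/\bar r)^2}\) with \(\bar r=\sum_i c_i r_i\); and the regular-solution mixing enthalpy, \(\Delta H_{mix}=\sum_{i<j} 4\,\Delta H_{ij}\,c_i c_j\). The sketch below assumes the usual valence-electron counts for the seven elements studied here; atomic radii and binary enthalpies \(\Delta H_{ij}\) are left as user-supplied inputs, since their source tables are not specified in this section.

```python
import numpy as np

# Valence electron counts commonly used for VEC (s + d electrons)
VEC_TABLE = {"Al": 3, "Cr": 6, "Mn": 7, "Fe": 8, "Co": 9, "Ni": 10, "Nb": 5}

def normalise(comp):
    """Convert {element: amount} into {element: atomic fraction}."""
    total = sum(comp.values())
    return {el: v / total for el, v in comp.items()}

def vec_avg(comp):
    c = normalise(comp)
    return sum(ci * VEC_TABLE[el] for el, ci in c.items())

def size_difference(comp, radii):
    """Atomic size difference delta in %, with radii {element: radius} supplied by the user."""
    c = normalise(comp)
    r_bar = sum(ci * radii[el] for el, ci in c.items())
    return 100 * np.sqrt(sum(ci * (1 - radii[el] / r_bar) ** 2 for el, ci in c.items()))

def mixing_enthalpy(comp, h_pair):
    """Regular-solution estimate: sum over pairs i<j of 4 * dH_ij * c_i * c_j (units of dH_ij)."""
    c = normalise(comp)
    els = list(c)
    total = 0.0
    for a in range(len(els)):
        for b in range(a + 1, len(els)):
            key = frozenset((els[a], els[b]))
            total += 4 * h_pair.get(key, 0.0) * c[els[a]] * c[els[b]]
    return total

cantor = {"Co": 1, "Cr": 1, "Fe": 1, "Mn": 1, "Ni": 1}
print(round(vec_avg(cantor), 3))  # 8.0
```

Pairs missing from `h_pair` are treated as ideal (zero enthalpy), which is a simplification of this sketch.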

Most ML models for HEA stable phases reported in the literature address classification, yet HEA phase constitution is a continuous space that could be better described by regression. Deep Neural Networks (DNNs), for example, have proven useful in complex real-world applications24 as well as in materials science25,26, and should be able to quantitatively predict the phase constitution of a system (i.e., specific numerical phase fraction values), provided sufficient data are available. Moreover, using phase fraction values instead of alloy classes could improve the efficiency with which ML models map the potential energy landscape12,27,28,29,30,31 of competing phases in HEAs. The major drawback of this approach is the lack of sufficient data with accurate phase fraction values: multiphase HEAs are rarely characterized in this way, since most of the literature is biased toward single-phase solid-solution HEAs.

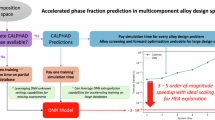

In this work, an inline ML model for predicting phase fractions is developed that can be used in conjunction with other available computational tools for alloy design. To this end, a deep neural network is designed and trained on a dataset generated by Thermo-Calc phase equilibria calculations for the elements Mn, Ni, Fe, Al, Cr, Nb, and Co. The featurization of the HEA space relies on composition-dependent transformations inspired by the literature on HEA phase classification11,12. This parametrization from elemental properties is useful because more information is available to build the model, and feature selection may unveil new empirical relationships. The trained model was used as a surrogate for computational phase equilibria calculations and for high-throughput searches for alloys with a targeted phase composition.

We note that the major motivation for this work is to demonstrate that it is possible to develop fast-acting surrogates for the computational thermodynamic engines that would otherwise be used in alloy design operations. To address this demanding objective, we propose a Deep Neural Network (DNN) trained on high-throughput data obtained via the CALPHAD method, which accelerates materials discovery by speeding up optimization techniques and advances the knowledge of feature importance in HEA design. The development of the proposed model also demonstrates the ability of neural networks to represent complex, high-dimensional, and non-linear phase constitution spaces. The reader is referred to the Methods section for the DNN specifications.

RESULTS

Pearson correlations between the proposed features and the phase classes are shown in Fig. 1. On visual inspection, the feature correlations of each phase are clearly distinct, creating a unique phase-feature signature. Although this signature alone is insufficient for discerning between phases given a composition vector and its feature transformation, it indicates that phases respond to different features even when these features are transformations of the same elemental property (avg, red, and dif).
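A feature-by-phase correlation matrix such as the one in Fig. 1 can be computed directly from z-scores. A minimal NumPy sketch, assuming `features` is an (alloys × features) array and `fractions` an (alloys × phases) array of phase fractions; the random data are placeholders only.

```python
import numpy as np

def pearson_matrix(features, fractions):
    """Pearson r between every feature column and every phase-fraction column."""
    X = (features - features.mean(axis=0)) / features.std(axis=0)
    Y = (fractions - fractions.mean(axis=0)) / fractions.std(axis=0)
    return X.T @ Y / len(X)          # shape (n_features, n_phases)

rng = np.random.default_rng(0)
features = rng.normal(size=(500, 12))             # placeholder feature matrix
fractions = rng.dirichlet(np.ones(5), size=500)   # placeholder 5-phase fractions
corr = pearson_matrix(features, fractions)
```

Constant feature columns would produce a division by zero and should be dropped beforehand.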

Averages (avg) and harmonic averages (red) have almost the same correlation values with the phases, whereas mean differences (dif) show a distinctive correlation. For instance, the average of a commonly referenced property such as the VEC is highly correlated with the FCC phase, and so is its harmonic average, but the correlation of its difference mean is slightly negative. Conversely, the BCC phase correlates negatively with the average and positively with the difference mean. This sign reversal between the difference mean and the arithmetic (avg) and harmonic (red) averages does not occur for all properties. Nevertheless, these alternative features reveal information that is not accessible through common averaging methods.
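The three transformations can be written for an elemental property \(p_i\) and atomic fractions \(c_i\). The arithmetic and weighted harmonic averages follow their standard definitions; the exact formula of the weighted difference mean is not given in this section, so the pairwise form \(\sum_i\sum_j c_i c_j |p_i - p_j|\) used below is an assumption consistent with the later description of a feature that tracks the difference between each atom pair.

```python
import numpy as np

def avg(c, p):
    """Arithmetic (stoichiometric) average: sum_i c_i p_i."""
    return float(np.dot(c, p))

def red(c, p):
    """Weighted harmonic average: 1 / sum_i (c_i / p_i); requires p_i > 0."""
    return float(1.0 / np.sum(np.asarray(c) / np.asarray(p)))

def dif(c, p):
    """Assumed pairwise difference mean: sum_i sum_j c_i c_j |p_i - p_j|."""
    c, p = np.asarray(c, float), np.asarray(p, float)
    return float(c @ np.abs(p[:, None] - p[None, :]) @ c)

# Equimolar CoCrFeMnNi with VEC values Co=9, Cr=6, Fe=8, Mn=7, Ni=10
c = np.full(5, 0.2)
vec = np.array([9, 6, 8, 7, 10])
print(avg(c, vec), red(c, vec), dif(c, vec))
```

Because avg and red both summarize the level of the property while dif measures its spread across atom pairs, it is plausible that they correlate differently with the phases.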

A previously studied feature, the atomic size difference (δ), has a strong negative correlation with FCC and, interestingly, a slightly positive correlation with BCC. A low δ is usually linked to single-phase solid solutions, yet Pei et al.19 have shown that single-phase BCC solutions have higher δ values than HCP and FCC ones, even overlapping with multiphase alloys.

To further explore how this feature construction maps the HEA space in question, a Principal Component Analysis (PCA) is performed on the whole feature space of the training dataset. Figure 2 shows the first two principal components, colored by the phase fraction of each phase/class: regions with a higher concentration of one class cluster together and are separated from other classes by diffuse borders. This indicates that the proposed feature space is linked to the phase fraction, provided there is enough information for each phase.
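A PCA of this kind takes a few lines with scikit-learn. Standardizing the features first is an assumption on our part (the features have very different units); the random matrices stand in for the real feature table and FCC fractions, which would be used only to color the scatter plot.

```python
import numpy as np
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(0)
X = rng.normal(size=(1000, 40))     # placeholder feature matrix (rows = alloys)
fcc = rng.uniform(size=1000)        # placeholder FCC fraction, used only for colouring

X_std = StandardScaler().fit_transform(X)   # zero mean, unit variance per feature
pca = PCA(n_components=2)
pcs = pca.fit_transform(X_std)              # (n_samples, 2) coordinates for the scatter plot
print(pca.explained_variance_ratio_)
```

PCA is unsupervised: the phase fractions play no role in finding the components, so any clustering by phase in the resulting plot reflects structure already present in the features.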

Even though some of these features have individually been shown to discern efficiently between different phases and/or phase mixtures, this specific set has not yet been shown to do so. Since PCA successfully clusters the phase fractions in an unsupervised setting, we proceed to apply a more computationally expensive model.

The DNN architecture consists of four hidden ReLU layers and a single softmax output layer. As shown in Fig. 3, the DNN was trained for 10,000 epochs, during which over-fitting on the training set never compromised the validation loss. The training loss is the mean squared error: for each data point, the errors in each class are squared and summed, and the result is averaged over all data points. This choice of loss favors small errors in all phases over a perfect match in some phases at the cost of more pronounced errors in others. ReLU activations were chosen for the hidden layers because they gave the best performance, and softmax for the output layer because of the compositional constraint (non-negative phase fractions that sum to one). We refer to the outputs as phase fractions instead of the probabilities commonly associated with softmax-based DNNs. The chosen layer sizes proved sufficient to capture the complexity of the input space and to map it efficiently onto five phase classes.
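The architecture and loss can be sketched in plain NumPy to make the output constraint explicit: four ReLU hidden layers, a softmax output whose rows are non-negative and sum to one, and a squared error summed over the five classes and averaged over data points. The layer widths and input size below are hypothetical placeholders (the actual values are given in Methods), and only the forward pass is shown, not training.

```python
import numpy as np

rng = np.random.default_rng(0)
N_FEATURES, N_PHASES = 40, 5          # N_FEATURES is a placeholder
HIDDEN = [256, 128, 64, 32]           # hypothetical widths of the four hidden layers

def init_params(sizes):
    params = []
    for n_in, n_out in zip(sizes[:-1], sizes[1:]):
        W = rng.normal(0, np.sqrt(2 / n_in), size=(n_in, n_out))  # He initialisation for ReLU
        params.append((W, np.zeros(n_out)))
    return params

def forward(params, X):
    h = X
    for W, b in params[:-1]:
        h = np.maximum(0.0, h @ W + b)            # ReLU hidden layers
    W, b = params[-1]
    z = h @ W + b
    z -= z.max(axis=1, keepdims=True)             # numerically stable softmax
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)       # rows are phase fractions summing to 1

def phase_fraction_loss(y_true, y_pred):
    """Squared error per class, summed over classes, averaged over data points."""
    return np.mean(np.sum((y_true - y_pred) ** 2, axis=1))

params = init_params([N_FEATURES, *HIDDEN, N_PHASES])
X = rng.normal(size=(8, N_FEATURES))
fractions = forward(params, X)
```

In Keras, an equivalent model would stack `Dense(..., activation="relu")` layers and a final `Dense(5, activation="softmax")`. Keras's built-in `"mse"` loss averages over the class axis rather than summing, which only rescales the loss by a constant factor of five.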

A K-medoids partitioning method was also tested, as illustrated in Supplementary Fig. 1, to check the model's invariance under a different splitting strategy that maximizes the compositional distance between training data points. The test showed a slight decrease in overall accuracy, yet the model still performs within reasonable error margins, as shown in Supplementary Table 3.
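K-medoids clusters compositions around actual data points (medoids) rather than centroids. The sketch below implements the simple alternating (Voronoi-iteration) variant with Euclidean distances and then holds out whole clusters, which is one hypothetical way to obtain a split in which test compositions lie far from the training data; the exact protocol used in this work is not reproduced here.

```python
import numpy as np

def k_medoids(X, k, n_iter=50, seed=0):
    """Alternating (Voronoi-iteration) k-medoids with Euclidean distances."""
    rng = np.random.default_rng(seed)
    D = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=-1)   # O(n^2) memory: small sets only
    medoids = rng.choice(len(X), size=k, replace=False)
    for _ in range(n_iter):
        labels = np.argmin(D[:, medoids], axis=1)                # assign to nearest medoid
        new = medoids.copy()
        for j in range(k):
            members = np.flatnonzero(labels == j)
            if members.size:
                costs = D[np.ix_(members, members)].sum(axis=1)  # total distance to cluster mates
                new[j] = members[np.argmin(costs)]
        if np.array_equal(new, medoids):
            break
        medoids = new
    return medoids, np.argmin(D[:, medoids], axis=1)

rng = np.random.default_rng(1)
comps = rng.dirichlet(np.ones(7), size=300)          # placeholder 7-element compositions
medoids, labels = k_medoids(comps, k=10)
held_out = rng.choice(10, size=2, replace=False)     # hypothetical: hold out whole clusters
test_mask = np.isin(labels, held_out)
```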

Subsets of data are chosen randomly from each complexity level of the subsystems (3-component, 4-component, ...) to ensure an equal partition across all levels of ideal entropy of the alloy space: 70% for training, 10% for validation, and 20% for testing. Similar accuracy values are expected across all subsets, assuming the data behave uniformly across the complexity levels of the system. Random partitioning within each subset of a given elemental complexity ensures that the test data fall within a reasonable composition space that reflects both the intended application of the regressor and the training data32. In addition to the classic training, validation, and test partition, a 10-fold cross-validation was applied to the combined training and validation data, which were split randomly ten times. For each split, a DNN model trained and validated on 90% of these data is tested on the remaining data. Additional information on each partition subsystem and the prediction results is provided in Supplementary Tables 1 and 2, respectively. Finally, the model is selected, and its predictions for each phase on the training, validation, and test datasets are shown in Fig. 4. The parity plots show how efficiently the 5-dimensional regressor predicts the phase fraction of each final class. Beyond this, the DNN model keeps the coefficient of determination above 0.95 for all classes and above 0.98 for the simple solid solution classes FCC and BCC. This makes the trained DNN well suited to quickly mapping the system in search of concentrations that stabilize simple solid solutions.
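The stratified partition and the cross-validation can be sketched as follows: indices are shuffled and split 70/10/20 within each complexity level, and scikit-learn's `KFold` is then applied to the pooled training and validation indices. The component counts are placeholders, and the rounding of split sizes is a detail of this sketch.

```python
import numpy as np
from sklearn.model_selection import KFold

def split_by_complexity(n_components, fractions=(0.7, 0.1, 0.2), seed=0):
    """70/10/20 random split drawn separately within each subsystem complexity level."""
    rng = np.random.default_rng(seed)
    train, val, test = [], [], []
    for level in np.unique(n_components):
        idx = rng.permutation(np.flatnonzero(n_components == level))
        n_tr = int(round(fractions[0] * len(idx)))
        n_va = int(round(fractions[1] * len(idx)))
        train.extend(idx[:n_tr])
        val.extend(idx[n_tr:n_tr + n_va])
        test.extend(idx[n_tr + n_va:])      # remainder (about 20%) goes to testing
    return np.array(train), np.array(val), np.array(test)

# Placeholder labels: number of components of each alloy in the dataset
n_components = np.repeat([3, 4, 5, 6, 7], [100, 100, 100, 200, 500])
train, val, test = split_by_complexity(n_components)

# 10-fold cross-validation on the pooled training + validation data (90% fit / 10% held out)
pool = np.concatenate([train, val])
kfold = KFold(n_splits=10, shuffle=True, random_state=0)
folds = [(pool[fit], pool[hold]) for fit, hold in kfold.split(pool)]
```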

Feature significance

Identifying the elemental features that explain the relationship between phase fraction and composition is of special interest for HEA design. This work takes advantage of the extensive featurization and the final surrogate model to compute the Shapley values of each feature. A random subset of 5000 training points from the highest-complexity subsystem (seven-element alloys) is analyzed to obtain the Shapley values33,34.

The Shapley analysis returns SHAP values for each of the output classes. SHAP summary plots for the highest-ranking features are shown in Fig. 5(a,c); a Deep Explainer was used to approximate the SHAP values, with a random subset of 5000 training samples. The features ranked highest by this SHAP analysis tend to be those historically used in HEA design (Sid, Hmix, Kstd, etc.). The composition-based features generated for the regressor rank second. In particular, electronegativities appear to be ubiquitous in the model: different analytic definitions of electronegativity appear multiple times among the top 30 features.
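To make the quantity behind Fig. 5 concrete, the sketch below computes exact Shapley values by enumerating all feature coalitions, with absent features replaced by a single baseline vector (for instance, the mean of a background set). This is not the Deep Explainer used here, which approximates SHAP values for deep networks (building on DeepLIFT) by averaging over a background dataset; exhaustive enumeration is only practical for a handful of features.

```python
import itertools
import math
import numpy as np

def exact_shapley(f, x, baseline):
    """Exact Shapley values of f at x; features outside a coalition take baseline values."""
    x, baseline = np.asarray(x, float), np.asarray(baseline, float)
    M = len(x)
    phi = np.zeros(M)
    for i in range(M):
        others = [j for j in range(M) if j != i]
        for size in range(M):
            weight = math.factorial(size) * math.factorial(M - size - 1) / math.factorial(M)
            for S in itertools.combinations(others, size):
                idx = np.array(S, dtype=int)
                z = baseline.copy()
                z[idx] = x[idx]                 # coalition S present, i absent
                without_i = f(z)
                z[i] = x[i]                     # add feature i to the coalition
                phi[i] += weight * (f(z) - without_i)
    return phi
```

The values satisfy the efficiency property: they sum to \(f(x) - f(\text{baseline})\), so each one is an additive share of the model's deviation from the background prediction.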

Two different background datasets were used to generate these two analyses: one with 5000 randomly chosen data points, and another with 5000 data points of high FCC concentration (>90% FCC) according to the DNN regressor output. Overall, both analyses select common features. Nevertheless, the high-FCC background changes the ranking of the features: because this background shifts the phase-fraction profile toward a single FCC phase, more weight is given to the features that are more likely to determine single FCC phase selection.

Features for further analysis were selected by comparing features commonly reported in the literature11,35 with less familiar feature vectors that appear in the Shapley analysis. Fig. 6 shows simplified results from random sampling in the seven-component system, to visualize the values of the chosen features and how the phase constitution results are arranged in a pseudo-ternary diagram. In this diagram, FCC and BCC remain single-phase classes, and the remaining intermetallic phases are summed into a third vertex representing the Rest (classes σ, C14 Laves, and IMs). The diagram consists of 200,000 random data points, with those lying on the sides of the triangle filtered out. The features chosen by the feature analysis are the atomic size difference (δ), the Valence Electron Concentration (VEC), the enthalpy of mixing (ΔHmix), the ideal entropy of mixing (Sid), the Pauling electronegativity (χPauling), and the polarizability (α).

Pseudo-ternary phase fraction plots of a random sample, with a color heat map corresponding to the values (black arrows point toward higher feature values) of a the polarizability average, b the atomic size difference (δ), c the ideal entropy of mixing, d the mixing enthalpy, e the valence electron concentration, f the difference mean of the valence electron concentration, g the difference mean of the Pauling electronegativity, and h the standard deviation of the electronegativity (not used in training).
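The pseudo-ternary representation reduces the five DNN outputs to [FCC, BCC, Rest] and places each point in a triangle using barycentric coordinates. In the sketch below, the output column order, the vertex placement, and the tolerance used to discard points on the triangle sides are all assumptions.

```python
import numpy as np

# Assumed column order of the 5-class regressor output
PHASES = ["FCC", "BCC", "sigma", "C14_Laves", "IM"]

def to_pseudo_ternary(fractions):
    """Collapse 5 phase fractions into [FCC, BCC, Rest]."""
    f = np.asarray(fractions, float)
    return np.column_stack([f[:, 0], f[:, 1], f[:, 2:].sum(axis=1)])

def ternary_xy(t):
    """Barycentric [FCC, BCC, Rest] -> 2-D point; vertices FCC=(0,0), BCC=(1,0), Rest=(0.5, sqrt(3)/2)."""
    return np.column_stack([t[:, 1] + 0.5 * t[:, 2], (np.sqrt(3) / 2) * t[:, 2]])

def drop_edges(t, tol=1e-3):
    """Remove points lying on a side of the triangle (any pseudo-component ~ 0)."""
    return t[(t > tol).all(axis=1)]
```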

The parameter δ qualitatively matches previous findings of a higher probability of finding solid solutions at lower values of δ13,35,36. The results match those of Pei et al.19: FCC-rich zones clearly show the lowest values of this feature, while BCC-rich zones show larger values that are difficult to tell apart from those of IM-rich zones. Furthermore, VECavg agrees with the available literature: the highest values of VECavg correspond to a high probability of FCC, and at values below 7, BCC formation begins37,38. The values near full BCC and in the Rest class are not far apart, but those for BCC are slightly higher, as shown by Yang et al.39. Again, a property-related feature, the weighted difference mean VECdif, offers higher specificity toward BCC, with higher values pointing toward the formation of this simple solid solution.

The chosen thermodynamic quantities return no surprising results. The entropy shows an almost uniform value throughout the sample space, with a slightly higher concentration of lower values near the full-FCC vertex. Therefore, while entropy gives this alloy class its name and most probably contributes to its stability, it is not the only parameter that drives phase stability. The enthalpy of mixing excludes higher values for high-concentration FCC alloys in this work, in agreement with previously reported works12,13,35. It also agrees with the previously reported range of solid solutions at enthalpies higher than −5 kJ/mol, with compound formation becoming more likely as the enthalpy becomes more negative37.

The electronegativity parameter chosen is the weighted difference mean (\(\chi_{Pauling}^{dif}\)). However, its trend does not match that of the standard deviation of electronegativity, lower values of which have been shown to favor solid solutions37,40. In our case, the stoichiometrically weighted mean difference goes from low values in intermetallic-rich regions to higher values in high-concentration FCC regions. This stark deviation from the expected behavior was analyzed by calculating the widely used standard deviation of electronegativity, which agrees with the previously reported trend that attributes single solid solution formation to smaller deviations that improve chemical compatibility. This contrast is attributed to the binary construction of the weighted difference formula: the difference weighted mean keeps track of the difference between each atom pair in the alloy, in contrast with the unary, averaged construction of the standard deviation. This result indicates that different featurizations of the same atomic property may improve the robustness of an ML model for HEAs by providing more information about the system. Similarly, the average polarizability (α), which is closely connected to electronegativity, also shows a clear trend in which lower α corresponds to higher concentrations of simple phases. This can be understood, in the same manner, as a consequence of chemical compatibility in the alloy: atoms less likely to polarize are consequently more stable in a solid solution.

Fig. 7 shows the correlation profiles of the significant features, i.e., those theorized to contain valuable information on the phase space of the alloy, from which we aim to draw guidelines for the quick design of new alloys. The figure shows a scatter heat map of the phase composition space in the same pseudo-ternary representation, where each point is colored with the RGB code obtained from its composition vector [FCC, BCC, Rest]. In the right-hand corner, the correlations are supported by Linear Support Vector Classification (l-SVC) decision borders, where the class of each data point is its highest-concentration class; this serves as a quick visualization tool and a first approach to alloy design. The precision values of the classification task for each class are also shown in the upper right-hand corner, as a quantitative reference for the visual inspection of the features. From top to bottom, the values correspond to FCC (red), BCC (green), and the Rest (blue).
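The l-SVC borders and per-class precision values can be reproduced in outline with scikit-learn: each point is labeled by its dominant pseudo-component, a linear SVM is fit on a pair of features, and precision is computed per class. The random arrays are placeholders for a feature pair such as (αavg, VECavg) and for the pseudo-ternary fractions; evaluating on the fitted data mirrors the visualization-only use described here.

```python
import numpy as np
from sklearn.metrics import precision_score
from sklearn.svm import LinearSVC

rng = np.random.default_rng(0)
feature_pair = rng.normal(size=(600, 2))           # placeholder, e.g. [alpha_avg, VEC_avg]
pseudo_ternary = rng.dirichlet(np.ones(3), 600)    # placeholder [FCC, BCC, Rest] fractions
labels = pseudo_ternary.argmax(axis=1)             # 0 = FCC, 1 = BCC, 2 = Rest

clf = LinearSVC(C=1.0, dual=False).fit(feature_pair, labels)
precision = precision_score(labels, clf.predict(feature_pair), average=None,
                            labels=[0, 1, 2], zero_division=0)
print(dict(zip(["FCC", "BCC", "Rest"], precision.round(2))))
```

With `zero_division=0`, a class that is never predicted reports a precision of zero, and a class predicted only for a few correct points reports one, which matches the degenerate BCC cases discussed below.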

Using only two features may accelerate and simplify first approaches to composition optimization in the HEA space. The features that appear to work best toward this end are the polarizability (α), the weighted difference mean of the VEC (VECdif), and the average VEC (VECavg); they create the clearest partitions in phase constitution in the randomly sampled dataset. That is, similar phase constitution profiles appear to cluster better when one of these features is used. Notably, the combination of αavg and VECavg returns the highest l-SVC precision values, followed by the pair αavg and δ. Polarizability has a higher concentration of simple solid solutions at lower values, and adding an extra feature allows FCC and BCC to be discerned. Therefore, alloys designed from only two easily calculated features based on atomic properties may yield single solid solutions. For example, an alloy can be optimized for chemical compatibility through αavg, while a second feature such as VECavg or the topology-related δ discerns between FCC and BCC.

Some of the precision values for the BCC phase are zero or one, meaning that the l-SVC model breaks down for these feature pairs and predicts either no BCC-rich alloys or only a few, respectively. That is, the l-SVC gives up on BCC prediction where the classes overlap heavily. Consequently, this tool is meant only for visualization; it is not designed as a robust model but as a source of soft borders for general HEA design inspired by the Hume-Rothery rules. In contrast, the optimization approaches that follow take advantage of the continuous output of the DNN regressor.

FCC phase prediction

The experimental dataset compiled by Ronald Machaka11 was filtered for alloys reported as single-phase FCC solid solutions annealed at temperatures higher than 800 °C. The DNN is applied to these alloys, and it is expected to return FCC fractions close to 1. At best, a regressor trained on values extracted from Thermo-Calc can emulate the phase fraction predictive capability of the CALPHAD method; it functions as a simpler, faster native surrogate model for phase selection in the selected system in high-demand workflows. FCC alloys in this comprehensive experimental database contain both elements of the chosen system and elements never seen by the DNN model. Fig. 8 shows the predictions for all experimental alloys that satisfy the criteria. Alloys within the elemental system used in training are generally closer to unity in FCC phase fraction, while alloys containing unused elements perform considerably worse. Notable additions such as Ti and C lower the predicted FCC phase fraction, limiting the predictive power of the model to the elements considered in training. Recognizing this shortcoming, the subsequent exploration of the materials space is restricted to the current system to unveil unique HEA compositions. The predictions, while erroneous, still fall within a reasonable difference from the Thermo-Calc predictions (shown as black markers). Yet, there is an overall greater mismatch for compositions outside the training space than inside it. For example, the addition of carbon seems to throw off the regressor estimation, and the addition of titanium seems to negatively affect the predictions of both the CALPHAD implementation and the DNN regressor alike.

DNN regressor predictions for the FCC phase in alloys experimentally characterized as single FCC phase at annealing temperatures of 800 °C or higher. Alloys whose elements all belong to the training system are colored green, and alloys containing other elements are colored red. Alloy labels give the stoichiometry, with the extra elements not considered in training in parentheses. Black dots represent the FCC phase fraction predicted by a Thermo-Calc single equilibrium calculation with the TCHEA5 database.
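The green/red split in Fig. 8 amounts to checking whether every element of an alloy belongs to the training system. A small sketch, assuming alloy labels written as formula strings such as `Al0.5CoCrFeNi` (the label format of the experimental database is an assumption here):

```python
import re

TRAINING_ELEMENTS = {"Mn", "Ni", "Fe", "Al", "Cr", "Nb", "Co"}
TOKEN = re.compile(r"([A-Z][a-z]?)(\d+(?:\.\d+)?)?")

def parse_alloy(formula):
    """'Al0.5CoCrFeNi' -> {element: mole fraction}; a missing coefficient counts as 1."""
    amounts = {}
    for element, coeff in TOKEN.findall(formula):
        amounts[element] = amounts.get(element, 0.0) + (float(coeff) if coeff else 1.0)
    total = sum(amounts.values())
    return {el: a / total for el, a in amounts.items()}

def in_training_space(formula):
    """True if every element of the alloy belongs to the seven-element training system."""
    return set(parse_alloy(formula)) <= TRAINING_ELEMENTS

print(in_training_space("CoCrFeMnNi"), in_training_space("Al0.3CoCrFeNiTi0.2"))  # True False
```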

Design and exploration of the HEA space

Given that the model is not restricted to a classification problem, it can be implemented as part of an optimization problem with a specific objective and loss function.
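One simple way to turn a target phase constitution into such a loss is the squared distance between the predicted and target phase-fraction vectors, which is zero only when the target is met exactly. The sketch below encodes the three targets of the Bayesian Optimization cases in this section; the output order [FCC, BCC, σ, C14 Laves, IM] and the choice of distance are assumptions, not the paper's stated objective.

```python
import numpy as np

# Assumed output order: [FCC, BCC, sigma, C14 Laves, IM]
TARGETS = {
    "max_FCC": np.array([1.0, 0.0, 0.0, 0.0, 0.0]),
    "max_BCC": np.array([0.0, 1.0, 0.0, 0.0, 0.0]),
    "FCC_BCC_50_50": np.array([0.5, 0.5, 0.0, 0.0, 0.0]),
}

def target_loss(predicted_fractions, target):
    """Squared Euclidean distance between predicted and target phase fractions (0 = perfect)."""
    return float(np.sum((np.asarray(predicted_fractions) - target) ** 2))

print(target_loss(TARGETS["FCC_BCC_50_50"], TARGETS["max_FCC"]))  # 0.5
```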

Bayesian optimization

Optimization in the composition space to maximize a chosen phase is presented as a suitable first attempt at alloy design. The short response time of the DNN makes the surrogate model an ideal objective function for optimization tasks. Bayesian Optimization is widely known for its efficiency, often approaching the global minimum within a few optimization loops41,42. Although this algorithm does not exploit the high-throughput capabilities of the model, the optimization shows how the surrogate model reproduces the continuous dependence of the alloy phase fractions on the constituent concentrations.

Three case scenarios were tested: a maximized FCC phase fraction, a maximized BCC phase fraction, and equal FCC and BCC phase fractions of 0.5; in all cases, the fractions of the other, more complex phases are set to zero. Each of these objectives corresponds to a specific interest of the alloy community; the last, intermediate case is relevant for retrieving promising lamellar eutectic compositions. The Bayesian Optimization algorithm fits a Gaussian Process (with a Radial Basis Function kernel) to the prior knowledge of the model, given by an initial set of random points and their phase fractions as predicted by the DNN. The Expected Improvement is then used as the acquisition function to obtain the next sampling point43. The optimization loop starts with 20 random points and runs for 500 iterations. The search space of the seven alloy components is mapped onto an unconstrained 6-dimensional space using the isometric log-ratio transformation. With no stopping criterion, the algorithm reaches the minimum on several occasions or returns values near the absolute minimum; Table 1 lists three different compositions for each case. Contrasting compositions were selected to showcase the versatility of the model within the seven-element space. For the intermediate composition, minima are harder to reach, so perfect solutions are scarcer; still, the optimization obtains three points that are close to this target phase fraction and considerably different from each other.
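The isometric log-ratio (ilr) transformation maps a D-part composition to \(D-1\) unconstrained coordinates via \(\mathrm{ilr}(x) = V\,\mathrm{clr}(x)\), where \(\mathrm{clr}(x)_i=\ln x_i - \frac{1}{D}\sum_k \ln x_k\) and the rows of V form an orthonormal basis orthogonal to the vector of ones. The choice of V is not specified here; the Helmert-type basis below is one standard option (any orthonormal choice gives an isometry). Because of the logarithms, compositions must be strictly positive, so an element can only approach zero concentration in the limit.

```python
import numpy as np

def ilr_basis(d):
    """Orthonormal Helmert-type contrast basis of shape (d-1, d); rows sum to zero."""
    V = np.zeros((d - 1, d))
    for i in range(1, d):
        V[i - 1, :i] = 1.0 / i
        V[i - 1, i] = -1.0
        V[i - 1] *= np.sqrt(i / (i + 1.0))   # normalise row to unit length
    return V

def ilr(x, V):
    """Composition(s) with strictly positive parts -> unconstrained (d-1)-dim coordinates."""
    logx = np.log(x)
    clr = logx - logx.mean(axis=-1, keepdims=True)
    return clr @ V.T

def ilr_inv(z, V):
    """Inverse map back to the simplex (closure via a normalised exponential)."""
    clr = z @ V
    e = np.exp(clr - clr.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)

V = ilr_basis(7)                      # seven elements -> six ilr coordinates
x = np.array([0.3, 0.2, 0.15, 0.15, 0.1, 0.05, 0.05])
z = ilr(x, V)
```

Any point in the six-dimensional ilr space maps back to a valid composition, which is what lets the optimizer search without composition constraints.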

Even though applying Bayesian Optimization may seem counter-intuitive for a relatively inexpensive model, it showcases the capability of the regressor as a driving agent for HEA design and validates the previous feature analysis.
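A compact version of this loop is sketched below with scikit-learn's `GaussianProcessRegressor`, an RBF kernel scaled by a constant kernel (the scaling is our assumption), and Expected Improvement for minimization. The acquisition function is maximized by scoring random candidates in a bounded ilr box, a simplification; `surrogate_phase_fractions` is a hypothetical placeholder for the trained DNN, and the iteration count is reduced from the 500 used in this work.

```python
import numpy as np
from scipy.stats import norm
from sklearn.gaussian_process import GaussianProcessRegressor
from sklearn.gaussian_process.kernels import RBF, ConstantKernel

D = 7                                   # Al, Co, Cr, Fe, Mn, Nb, Ni
rng = np.random.default_rng(0)

def ilr_basis(d):
    """Orthonormal Helmert-type contrast basis, shape (d-1, d)."""
    V = np.zeros((d - 1, d))
    for i in range(1, d):
        V[i - 1, :i] = 1.0 / i
        V[i - 1, i] = -1.0
        V[i - 1] *= np.sqrt(i / (i + 1.0))
    return V

V = ilr_basis(D)

def ilr_inv(z):
    clr = z @ V
    e = np.exp(clr - clr.max())
    return e / e.sum()

# Hypothetical stand-in for the trained DNN: composition -> 5 phase fractions
W_TOY = rng.normal(size=(D, 5))
def surrogate_phase_fractions(x):
    s = 4.0 * x @ W_TOY
    e = np.exp(s - s.max())
    return e / e.sum()

TARGET = np.array([1.0, 0.0, 0.0, 0.0, 0.0])     # "maximize FCC" case

def objective(z):
    """Squared distance between predicted and target phase fractions (to minimize)."""
    return float(np.sum((surrogate_phase_fractions(ilr_inv(z)) - TARGET) ** 2))

def expected_improvement(mu, sigma, best, xi=0.01):
    """Expected Improvement for minimization."""
    sigma = np.maximum(sigma, 1e-12)
    improvement = best - mu - xi
    Z = improvement / sigma
    return improvement * norm.cdf(Z) + sigma * norm.pdf(Z)

def bayes_opt(n_init=20, n_iter=50, n_candidates=2000, bound=3.0):
    Z = rng.uniform(-bound, bound, size=(n_init, D - 1))      # initial random design
    y = np.array([objective(z) for z in Z])
    kernel = ConstantKernel(1.0) * RBF(length_scale=1.0)
    for _ in range(n_iter):
        gp = GaussianProcessRegressor(kernel=kernel, normalize_y=True, alpha=1e-6).fit(Z, y)
        cand = rng.uniform(-bound, bound, size=(n_candidates, D - 1))
        mu, sd = gp.predict(cand, return_std=True)
        z_next = cand[np.argmax(expected_improvement(mu, sd, y.min()))]
        Z = np.vstack([Z, z_next])
        y = np.append(y, objective(z_next))
    best = int(np.argmin(y))
    return ilr_inv(Z[best]), y[best]
```

Refitting the Gaussian Process on every observation is what makes the cost grow with the number of iterations, the limitation revisited in the PSO discussion.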

Exhaustive search

Exhaustive search using single equilibrium calculators is not always feasible. Assuming the DNN model is as accurate in a randomly generated space as on the grid sampling on which it was trained, an accurate high-throughput prediction of FCC and BCC phase fractions is attainable in a matter of minutes. To simulate the robustness of the grid method, random sampling was carried out in each of the subsystems: 5000 points were sampled in each ternary, quaternary, and quinary, 50,000 in each senary, and 500,000 in the septenary. While not rigorous, the growth in complexity of the system is replicated by an increase in the number of data points sampled per subsystem. Then, candidate alloys with optimized FCC content (xFCC) were filtered from the most complex, septenary system: out of the 500,000 alloys, 1268 showed an FCC fraction higher than 97%.
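The sampling scheme can be written down as a plan over all 3- to 7-component subsystems of the seven elements. Reading the counts as per-subsystem numbers (our interpretation), the plan contains 99 subsystems and 1,305,000 compositions, in line with the roughly 1.3 million randomly sampled points mentioned below. Drawing compositions from a flat Dirichlet distribution, i.e., uniformly on each simplex, is an assumption; `fcc_fraction` in `select_fcc_rich` stands for the DNN's FCC output.

```python
import itertools
import numpy as np

ELEMENTS = ["Al", "Co", "Cr", "Fe", "Mn", "Nb", "Ni"]
POINTS_PER_SUBSYSTEM = {3: 5_000, 4: 5_000, 5: 5_000, 6: 50_000, 7: 500_000}

def sampling_plan():
    """(subsystem elements, number of points) for every 3- to 7-component subsystem."""
    return [(combo, POINTS_PER_SUBSYSTEM[k])
            for k in sorted(POINTS_PER_SUBSYSTEM)
            for combo in itertools.combinations(ELEMENTS, k)]

def sample_subsystem(elements, n, rng):
    """Uniform random compositions on the subsystem's simplex; other elements set to 0."""
    X = np.zeros((n, len(ELEMENTS)))
    cols = [ELEMENTS.index(e) for e in elements]
    X[:, cols] = rng.dirichlet(np.ones(len(elements)), size=n)
    return X

def select_fcc_rich(X, fcc_fraction, threshold=0.97):
    """Keep compositions whose predicted FCC fraction exceeds the threshold."""
    return X[fcc_fraction > threshold]

plan = sampling_plan()
print(len(plan), sum(n for _, n in plan))   # 99 1305000
```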

Results of the FCC selection task are shown in Fig. 9a, where the high-FCC-fraction alloys are plotted on top of a 2-D t-Distributed Stochastic Neighbor Embedding (t-SNE) representation of the randomly generated seven-dimensional composition space, to exemplify low- and high-entropy regions of the HEA space. Low-entropy regions are defined as those in which any single element exceeds 50 mol% of the alloy; t-SNE pushes these regions to the corners of the sampled space, leaving the higher-entropy regions at the center of the representation. The background t-SNE map consists of 100,000 random points in the seven-component system.

t-SNE representation of FCC-selected and FCC-optimized data points (in red) on top of a subset of 100,000 randomly selected compositions in the seven-component system Al-Co-Cr-Fe-Mn-Nb-Ni, shown for reference. a Filtering for an FCC phase fraction greater than 0.97 from a random sampling. b PSO optimization for a full FCC phase fraction. c PSO optimization for FCC phase fraction combined with entropy maximization.

As part of a high-throughput effort, this additional randomly sampled dataset is queried with the CALPHAD engine to validate the model on points outside the training grid (the validation and test sets in Fig. 4 are randomly chosen grid points as well). The results were favorable: the coefficients of determination stayed above 0.95 for all phases and above 0.97 for the simple solid solutions. In addition to this consistent accuracy, the efficiency of the model is worth mentioning: a model capable of mapping the almost 1,300,000 points of the randomly sampled dataset was trained on a dataset six times smaller. On top of that, the surrogate model is built with commonly used ML frameworks and is therefore easily transferable.
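Per-phase coefficients of determination like those quoted above can be computed column by column with scikit-learn's `r2_score`. In this sketch, the phase order and the synthetic CALPHAD/DNN arrays are placeholders.

```python
import numpy as np
from sklearn.metrics import r2_score

PHASES = ["FCC", "BCC", "sigma", "C14 Laves", "IM"]   # assumed output order

def per_phase_r2(y_calphad, y_dnn):
    """Coefficient of determination for each phase column separately."""
    scores = r2_score(y_calphad, y_dnn, multioutput="raw_values")
    return dict(zip(PHASES, scores))

rng = np.random.default_rng(0)
y_calphad = rng.dirichlet(np.ones(5), size=1000)                          # placeholder reference
y_dnn = np.clip(y_calphad + rng.normal(0, 0.01, y_calphad.shape), 0, 1)  # placeholder predictions
print(per_phase_r2(y_calphad, y_dnn))
```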

Particle Swarm Optimization (PSO)

Bayesian Optimization becomes increasingly expensive as the number of observations grows, mainly because of the computational cost of fitting the Gaussian Process surrogate models used to emulate the design space. While BO-type approaches tend to be preferable when querying the problem space is costly41,44, other optimization algorithms can be used when querying the space is not a bottleneck. Here, the trained DNN can explore millions of new options as the optimization algorithm requires. Particle Swarm Optimization (PSO) algorithms execute many queries of the problem space and can thus benefit from a fast-acting emulator45,46,47. By its nature, PSO clusters its solutions around a single minimum; since this space contains numerous local minima that are simultaneously global minima, the algorithm quickly finds a solution that depends strongly on its initial positions. Therefore, the initial positions of the PSO task are constantly refreshed: multiple optimizations are run to obtain scattered results instead of a single optimization with clustered solutions.

A high-throughput approach is then applied in which the FCC phase fraction is optimized toward a fully FCC constitution in 100 PSO optimizations; five optimized data points are taken from each run, totaling 500 different compositions. Each PSO run uses a swarm of 30 alloys and is run for 20 iterations, totaling up to 6000 function calls. As expected, PSO optimization is more efficient than plain random search. Yet, even with the precautions taken to avoid clustering, the results of multiple PSO runs appear to converge toward similar compositions: swarm velocities at the beginning of the optimization appear to bias the algorithm heavily toward high Ni concentrations. This optimization algorithm combined with the surrogate model can produce a large number of solutions in a high-throughput style, and multiple objectives can be included in the optimization task. As Fig. 9b shows, the optimized alloys cluster and tend to lie close to alloys with higher concentrations of Ni and Fe, which is not in line with the compositional definition of HEAs. To alleviate this, an entropy objective can be added so that the optimizer favors alloys with a higher ideal entropy of mixing, as shown in Fig. 9c. This not only lowers the chance of the PSO converging toward high-concentration areas but also reduces clustering, so that multiple regions with high FCC concentrations are found.
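A minimal sketch of the restart strategy: a standard global-best PSO is run repeatedly from fresh random positions, and the best few particles of each run are pooled. The composition encoding (softmax of unconstrained positions), the inertia and acceleration coefficients (`w`, `c1`, `c2`), the stand-in `fcc_fraction` surrogate, and the weighted-sum entropy term are all assumptions for illustration; the text does not specify how the FCC and entropy objectives were combined.

```python
import numpy as np

D = 7
rng = np.random.default_rng(0)

def to_composition(p):
    """Map unconstrained particle positions to compositions via a softmax (assumption)."""
    e = np.exp(p - p.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)

# Hypothetical stand-in for the DNN's FCC output; the weights are arbitrary
W_FCC = np.array([-2.0, 1.0, -1.0, 2.0, 0.5, -3.0, 3.0])

def fcc_fraction(x):
    return 1.0 / (1.0 + np.exp(-4.0 * (x @ W_FCC)))

def ideal_entropy(x):
    """Ideal entropy of mixing in units of R."""
    return -np.sum(x * np.log(np.clip(x, 1e-12, None)), axis=-1)

def objective(p, entropy_weight=0.0):
    """Value to minimise: -(FCC fraction + weight * entropy normalised by its maximum ln D)."""
    x = to_composition(p)
    return -(fcc_fraction(x) + entropy_weight * ideal_entropy(x) / np.log(D))

def pso(n_particles=30, n_iter=20, w=0.7, c1=1.5, c2=1.5, entropy_weight=0.0):
    """Global-best PSO; returns personal-best compositions sorted from best to worst."""
    pos = rng.normal(scale=2.0, size=(n_particles, D))     # fresh random start on every call
    vel = np.zeros_like(pos)
    pbest, pbest_val = pos.copy(), objective(pos, entropy_weight)
    for _ in range(n_iter):
        gbest = pbest[np.argmin(pbest_val)]
        r1, r2 = rng.uniform(size=(2, n_particles, D))
        vel = w * vel + c1 * r1 * (pbest - pos) + c2 * r2 * (gbest - pos)
        pos = pos + vel
        val = objective(pos, entropy_weight)
        better = val < pbest_val
        pbest[better], pbest_val[better] = pos[better], val[better]
    order = np.argsort(pbest_val)
    return to_composition(pbest[order])

def repeated_pso(n_runs=100, keep=5, **kwargs):
    """Restart the swarm n_runs times and keep the best `keep` particles of each run."""
    return np.vstack([pso(**kwargs)[:keep] for _ in range(n_runs)])

fcc_only = repeated_pso(n_runs=10)
fcc_and_entropy = repeated_pso(n_runs=10, entropy_weight=0.5)
```

Setting `entropy_weight` above zero trades some FCC fraction for compositions closer to equimolar, which mirrors the idea behind the entropy objective; the weight value here is arbitrary.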

Exhaustive search, Bayesian Optimization, and single- and multi-objective PSO are showcased here for specific phase profiles. Yet, more complex phase fraction profiles may need to be designed on other occasions. Therefore, the true value of a phase constitution regressor depends on the user's needs and time limitations. For all of these design methods, a CALPHAD prerun is still needed; the methodology shown here is therefore intended to work together with CALPHAD to exploit as much as possible the high-throughput capabilities of today's computational resources.

Time advantage on DNN-applied frameworks

As mentioned above, one use case for the trained DNN is as a fast-acting emulator of CALPHAD-based phase equilibria calculations, amenable to inline deployment in an alloy search/optimization workflow. We recognize that, ultimately, the ground truth derived from a rigorous computational thermodynamic analysis should be the gold standard for computer-aided alloy design. Yet, we can envision scenarios in which access to a rapid emulator is highly beneficial. Specifically, this ML-based extension of the CALPHAD method could greatly decrease the time needed to initially screen vast alloy spaces. This work shows that even a DNN trained on a relatively small grid-sampled dataset (170,000 points) supports an accurate high-throughput random exploration.

Even though there is an initial investment in generating the training data and training the neural network, sampling with a DNN regressor is much faster than using the CALPHAD method by itself. While the quality of the training data and the DNN's training parameters will vary from user to user, the comparison is straightforward: on the same system, the DNN surrogate model is approximately 436 times faster than Thermo-Calc's TC-Python implementation running a multi-threaded calculation. While this is considerably faster, it only shortens tasks whose sole requirement is the alloy's phase constitution. Moreover, the training data generation time is not negligible: generating the training data and then evaluating half a million data points with the DNN is only about two times faster than computing the same half a million data points directly with CALPHAD. Therefore, a DNN emulator is best suited to settings that require high-throughput output of data. In such settings, the acceleration could cut the rapid screening of large alloy spaces from weeks to a matter of hours on a conventional computer. It is worth mentioning that the DNN implementation, written in TensorFlow/Keras with Python, can also be easily scaled to different hardware specifications and to Graphics Processing Units (GPUs).
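The 436-fold per-query speed-up and the roughly two-fold end-to-end gain can be reconciled with a short amortization estimate. Assuming that every CALPHAD equilibrium costs the same time, that the whole 229,824-point grid counts as training-data cost, and that the DNN fitting time is negligible (it is not reported), the end-to-end speed-up for N queries is N / (N_train + N/436). The helper below is a hypothetical back-of-the-envelope calculation, not a benchmark.

```python
def amortized_speedup(n_queries, n_train, dnn_speedup=436.0, train_overhead=0.0):
    """Speed-up of 'CALPHAD training set + DNN queries' over 'CALPHAD for every query'.
    All times are in units of one CALPHAD equilibrium calculation; `train_overhead`
    is the DNN fitting time in those units (assumed 0 because it is not reported)."""
    direct = n_queries
    surrogate = n_train + train_overhead + n_queries / dnn_speedup
    return direct / surrogate

for n in (5e5, 5e6, 5e7):
    print(f"{n:.0e} queries -> {amortized_speedup(n, 229_824):.1f}x")
# the first line reports about 2.2x for half a million queries
```

The estimate approaches the 436x ceiling only when the number of queries grows far beyond the training-set size, which is why the emulator pays off mainly in high-throughput settings.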

Extrapolation from lower entropy subsystems

To study how well the chosen featurization extrapolates to higher-complexity subsystems within the same seven-component system, additional DNNs were trained on lower-order subsets of the dataset, containing systems up to ternaries, up to quaternaries, and up to quinaries. These data are randomly partitioned per complexity level into training, validation, and testing sets. The remaining data (senaries and septenaries) are held out as a final higher-order testing set, as shown in Supplementary Fig. 2. Validation results in Supplementary Table 4 show promising accuracy for both senaries and septenaries when training data from all of the lower-complexity (3, 4, 5) systems in the HEA are used.
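An order-based partition of this kind can be sketched as below. The order of each alloy is taken as its number of non-zero mole fractions; the 80/10/10 split fractions, the function name `split_by_order`, and the random placeholder compositions are assumptions, since the text does not report them.

```python
import numpy as np

def split_by_order(X, max_train_order=5, frac=(0.8, 0.1, 0.1), seed=0):
    """Shuffle and split each order level <= max_train_order into train/val/test,
    and hold out every higher-order alloy as a separate extrapolation test set."""
    rng = np.random.default_rng(seed)
    order = (X > 0).sum(axis=1)  # number of elements present in each alloy
    parts = {"train": [], "val": [], "test": []}
    for k in np.unique(order[order <= max_train_order]):
        idx = rng.permutation(np.flatnonzero(order == k))  # shuffle within one order level
        n_tr, n_va = int(frac[0] * len(idx)), int(frac[1] * len(idx))
        parts["train"].append(idx[:n_tr])
        parts["val"].append(idx[n_tr:n_tr + n_va])
        parts["test"].append(idx[n_tr + n_va:])
    out = {name: np.concatenate(chunks) for name, chunks in parts.items()}
    out["high_order_test"] = np.flatnonzero(order > max_train_order)
    return out

# Placeholder data: 2000 random alloys with 3 to 7 of the 7 elements present
rng = np.random.default_rng(0)
X = np.zeros((2000, 7))
for row in X:
    k = rng.integers(3, 8)
    cols = rng.choice(7, size=k, replace=False)
    row[cols] = rng.dirichlet(np.ones(k))
splits = split_by_order(X)
print({name: len(idx) for name, idx in splits.items()})
```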

Leave-one-element-out

Another partitioning method for studying extrapolation leaves out of the training set all data points in which a particular element is present and uses them as the testing set, as shown in Supplementary Fig. 3. The accuracy results for this test (Supplementary Table 5) are far from useful: the model cannot extrapolate to systems for which it has no previous knowledge of all the constituent elements. This confirms the previous observations on the experimental FCC prediction (Fig. 8).
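The leave-one-element-out split reduces to a boolean mask on one composition column. The helper name, the column order (taken from the element list of the case study), and the three example alloys are illustrative assumptions.

```python
import numpy as np

ELEMENTS = ["Mn", "Ni", "Fe", "Al", "Cr", "Nb", "Co"]  # assumed column order

def leave_one_element_out(X, held_out, elements=ELEMENTS):
    """Return (train_idx, test_idx): every alloy containing `held_out` goes to the test set."""
    present = X[:, elements.index(held_out)] > 0
    return np.flatnonzero(~present), np.flatnonzero(present)

X = np.array([
    [0.2, 0.2, 0.2, 0.0, 0.2, 0.0, 0.2],     # CoCrFeMnNi
    [0.0, 0.25, 0.25, 0.25, 0.0, 0.0, 0.25],  # AlCoFeNi
    [0.0, 0.3, 0.3, 0.0, 0.0, 0.4, 0.0],      # FeNbNi
])
for el in ELEMENTS:
    train_idx, test_idx = leave_one_element_out(X, el)
    print(el, train_idx.tolist(), test_idx.tolist())
```

Holding out Al, for example, leaves only CoCrFeMnNi and FeNbNi for training, so the model never sees an Al-containing alloy before testing.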

DISCUSSION

Although extrapolation is ruled out for the DNN developed in this work, the approach is still worth considering, and it is proposed to work alongside more computationally expensive first-principles and single-equilibrium thermodynamic calculators. In other words, while the DNN works for the current system, its application and the feature significance inferred from its output (and, consequently, from the database it originated from) cannot be confidently transferred to other systems. Yet, the accuracy and readability of the model suggest a new high-throughput ML-CALPHAD framework for predicting phase fractions and designing complex systems.

Although no empirical rules are formulated in this work, correlations for a few constructed features are shown to control the tendency towards a majority of simple phases (FCC and/or BCC). The high-throughput calculations and featurization match previously reported findings and show potential for a new set of design rules, including polarizability. The results also show how the mean difference48, which aggregates binary (pairwise) feature differences over the whole alloy, may add significant value to such rules, carrying more information than the commonly used averages.

The model was validated using high-throughput CALPHAD sampling as well as experimental data where available. In the latter case, verification was done in an HEA space whose elements matched those in the training data; whenever the model did not predict a full simple solution, it predicted a high FCC phase fraction, except for the outlier Al0.5CoCrFeMnNi.

While the thermodynamic database (TCHEA5) and the CALPHAD system are thoroughly tested and generated by expert assessment from the Thermo-Calc group, uncertainty may still propagate from data acquisition and from the method's solid solution modeling. Based on Fig. 8, the authors attribute the prediction errors to the underlying CALPHAD estimation, reinforcing the idea that the model cannot surpass the accuracy of the data it is trained on. Extrapolating the model to systems containing elements it has not seen returns poor predictions. By visual inspection, the more previously unseen elements a system has, the further the prediction is from a full FCC fraction. This indicates that the model progressively loses predictive power as we move across the whole HEA space. Extrapolation is one of the cornerstones of the CALPHAD method, but only to higher-order systems whose components are in the database. Even within those boundaries, extrapolation is not trivial, and accurate input data must be available for database generation. Therefore, to improve the predictability of higher-order subsystems, the main objective is the generation of new data for the assessment of ternary systems49,50,51.

New alloys in the training system are proposed as candidates for fully FCC and fully BCC HEAs. Although the model is not built to guarantee a single random solid solution, owing to its simplifications, it is a step toward stabilizing intermetallic-free alloys.

With multiple objective functions, PSO can effectively locate FCC-stabilized regions that are not explained purely by a high molar percentage of an FCC-forming element, but instead lie close to or within the commonly cited high-entropy composition range (5% < x < 35%). Accurate control of the phases present in HEAs given the elemental input is of paramount importance. Considering the large sample space of HEAs, marrying machine learning methods to the design of HEAs will be an important tool for the alloy-design community.

METHODS

CALPHAD sampling

For data retrieval, we use Thermo-Calc52 to query compositions for the case study [Mn, Ni, Fe, Al, Cr, Nb, Co]. Before training the neural network, the data were generated with Thermo-Calc's TC-Python module using grid sampling with steps of 5 mol% over all subsystems, starting with the ternaries (171 samples each) and scaling up to the final septenary system (27,132 samples). This step resulted in a total of 229,824 alloy samples, of which 229,156 were successfully retrieved from the CALPHAD engine.
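The sample counts quoted above follow from a stars-and-bars count: with 5 mol% steps there are 20 composition units, and a k-component subsystem in which every element is present has C(19, k−1) grid points, giving 171 per ternary and 27,132 for the septenary. The sketch below, a plain-Python illustration rather than the authors' TC-Python script, enumerates the same grid and reproduces the 229,824 total; the dictionary output format and function names are assumptions, and the equilibrium calls themselves are omitted.

```python
from itertools import combinations
from math import comb

ELEMENTS = ["Mn", "Ni", "Fe", "Al", "Cr", "Nb", "Co"]
UNITS = 20  # 100 mol% in steps of 5 mol%

def positive_compositions(total, parts):
    """Yield every tuple of `parts` positive integers summing to `total` (stars and bars)."""
    for cuts in combinations(range(1, total), parts - 1):
        bounds = (0,) + cuts + (total,)
        yield tuple(b - a for a, b in zip(bounds, bounds[1:]))

def composition_grid(elements=ELEMENTS, units=UNITS, min_order=3):
    """Yield {element: mole fraction} for every subsystem of order >= min_order,
    with all elements of the subsystem present (no zero fractions)."""
    for k in range(min_order, len(elements) + 1):
        for subset in combinations(elements, k):
            for parts in positive_compositions(units, k):
                yield {el: n / units for el, n in zip(subset, parts)}

# Closed-form count per order: C(7, k) subsystems x C(19, k - 1) grid points each
counts = {k: comb(len(ELEMENTS), k) * comb(UNITS - 1, k - 1) for k in range(3, 8)}
print(comb(UNITS - 1, 2), comb(UNITS - 1, 6))  # 171 27132
print(sum(counts.values()))                    # 229824
```

Each yielded dictionary would then be passed to a single equilibrium calculation at fixed temperature and pressure.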

A thorough representation of the seven-dimensional composition space is required to ensure the DNN covers the whole space. Temperature and pressure were held constant in all single equilibrium calculations, so the features depend only on composition. The fixed temperature of 800 °C mimics common annealing temperatures used in the literature, at which single-phase solid solutions have been reported to be stable.

Post-processing consisted of filtering out faulty runs and summing all equivalent phases: even though ordered phases may be present, they are assigned to their disordered parent lattice. While this simplification lowers the predictive power of the final regressor, it serves the objective of differentiating simple FCC and BCC solutions from more complex intermetallics. Lastly, we isolate the most frequently occurring phases in the data (FCC, BCC, SIGMA, and LAVES); the remaining phase fractions, which make up an average of 7.8% over the whole dataset, contain the other common intermetallics as well as HCP and its ordered substructures, and are grouped into a fifth class. The result is a less thermodynamically selective approach that simplifies the phase fractions to either simple solutions (FCC and BCC) or intermetallics.
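The phase-collapsing step can be illustrated with a small helper. The label-matching rules (stripping Thermo-Calc-style `#n` composition-set suffixes, matching name prefixes such as `FCC` or `BCC`, and detecting `LAVES` anywhere in the name) are assumptions about the phase naming, and the function names are hypothetical; the five output classes are those described above.

```python
import re

CLASSES = ("FCC", "BCC", "SIGMA", "LAVES", "OTHER")

def phase_class(name):
    """Map a CALPHAD phase label to one of the five classes (assumed naming rules)."""
    base = re.sub(r"#\d+$", "", name.upper())  # drop composition-set suffix such as '#2'
    if base.startswith("FCC"):
        return "FCC"    # ordered FCC variants are assigned to the disordered parent lattice
    if base.startswith("BCC"):
        return "BCC"
    if base.startswith("SIGMA"):
        return "SIGMA"
    if "LAVES" in base:
        return "LAVES"
    return "OTHER"      # HCP, its ordered forms, and the remaining intermetallics

def collapse_phases(fractions):
    """Sum equilibrium phase fractions {label: fraction} into the five classes."""
    totals = dict.fromkeys(CLASSES, 0.0)
    for name, f in fractions.items():
        totals[phase_class(name)] += f
    return totals

print(collapse_phases({"FCC_L12#1": 0.5, "FCC_L12#2": 0.1, "BCC_B2": 0.2,
                       "SIGMA": 0.1, "HCP_A3": 0.1}))
```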

Feature construction

The featurization strategy of ref. 53 is applied to each elemental property, resulting in three composition-unique features per property. These three transformations (the average, the harmonic average, and the average difference) were applied to 30 properties retrieved from the Oliynyk elemental database in the open-source software BestPractices54. Additionally, to further complement the feature set, six features commonly used in HEA machine learning, described in ref. 12, are added. This results in a total of 96 alloy-unique features (30 × 3 + 6); a summary of the transformations and formulae used to generate them is listed in Table 2.
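The three per-property transformations can be sketched as follows. Table 2 is not reproduced here, so the exact formulae are assumptions: the average is taken as Σ c_i p_i, the harmonic average as (Σ c_i / p_i)^−1, and the mean difference as the concentration-weighted sum of pairwise absolute differences Σ_{i<j} c_i c_j |p_i − p_j|. Pauling electronegativity serves only as an example property; it is not claimed to be one of the 30 Oliynyk properties used.

```python
import numpy as np

# Pauling electronegativities, used here only as an example elemental property
PAULING = {"Al": 1.61, "Co": 1.88, "Cr": 1.66, "Fe": 1.83, "Mn": 1.55, "Nb": 1.6, "Ni": 1.91}

def featurize(composition, prop):
    """Return (average, harmonic average, mean difference) of one elemental property.
    composition: {element: mole fraction} (normalized here); prop: {element: value}."""
    els = list(composition)
    c = np.array([composition[e] for e in els], dtype=float)
    c /= c.sum()
    p = np.array([prop[e] for e in els], dtype=float)
    average = c @ p
    harmonic = 1.0 / (c @ (1.0 / p))
    # Assumed form: sum over pairs i < j of c_i c_j |p_i - p_j| (full matrix counts each pair twice)
    diff = np.abs(p[:, None] - p[None, :])
    mean_difference = (np.outer(c, c) * diff).sum() / 2.0
    return average, harmonic, mean_difference

cantor = {"Co": 0.2, "Cr": 0.2, "Fe": 0.2, "Mn": 0.2, "Ni": 0.2}
print(featurize(cantor, PAULING))
```

Because the mean difference vanishes for a pure element and grows with the spread of the property across the constituents, it carries information that the two averages cannot.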

While some works have found that rectified (ReLU) neural networks may avoid the curse of dimensionality in specific cases55,56, generalizing this idea to the current work is not trivial, and the model here could benefit from an initial feature selection. Still, the relations between features and phase fraction data are one of the main interests of this work. Therefore, we keep as many features as possible from our initial training dataset to draw feature-phase relations. As a first step towards unveiling feature significance and phase-feature signatures, we study the correlations between each feature and each of the five classes (the four major phases and the sum of the rest of the alloy's phase fractions).
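A feature-phase correlation table of this kind can be computed directly with NumPy. The sketch below assumes Pearson correlation (the text does not state which coefficient was used) and runs on random placeholder arrays shaped like the real data (96 features, five phase classes); it checks itself against `np.corrcoef`.

```python
import numpy as np

def feature_phase_correlation(X, Y):
    """Pearson correlation of every feature column in X (n, n_features) with every
    phase column in Y (n, n_phases); returns an (n_features, n_phases) array."""
    Xz = (X - X.mean(axis=0)) / X.std(axis=0)  # standardize features
    Yz = (Y - Y.mean(axis=0)) / Y.std(axis=0)  # standardize phase fractions
    return Xz.T @ Yz / X.shape[0]

rng = np.random.default_rng(0)
X = rng.normal(size=(1000, 96))           # placeholder for the 96 alloy features
Y = rng.dirichlet(np.ones(5), size=1000)  # placeholder FCC, BCC, SIGMA, LAVES, other fractions
corr = feature_phase_correlation(X, Y)
reference = np.corrcoef(X, Y, rowvar=False)[:96, 96:]  # same block of NumPy's full matrix
print(corr.shape, np.allclose(corr, reference))
```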

Deep neural network

The generated alloy database has 96 input features and 5 output classes, so the input and output layers of the DNN regressor must have matching sizes. The hidden rectified linear layers handle the complexity of the problem. The proposed architecture takes into account the constraint on the output: all phase fractions must sum to 1. Therefore, the output layer uses a softmax activation, which constrains the output values to sum to 1; such values normally represent class probabilities, but here they are taken as phase fractions.

Before this last layer, the rest of the network has a classic dense architecture with Rectified Linear Unit (ReLU) activation functions. Each dense layer has $l_{n-1} \times l_n + l_n$ trainable parameters, where $l_n$ and $l_{n-1}$ are the numbers of nodes in the current and previous layers, respectively. The DNN architecture is composed of an input layer of size 96, four hidden ReLU layers of sizes 128, 64, 64, and 5, and a final softmax output layer of size 5. The loss is the mean squared error, so that all coefficients of determination are optimized with all classes given the same priority. Training was carried out with the AMSGrad variant of the Adam optimizer57, a learning rate of 0.02, and a batch size of 1024 data points for 10,000 epochs.
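The layer sizes and the parameter formula above can be checked with a small NumPy sketch. It mirrors the described stack (96 → 128 → 64 → 64 → 5 ReLU → 5 softmax) but is a dependency-light illustration, not the authors' TensorFlow/Keras code; the He-style weight initialization and the random inputs are assumptions, and no training is performed.

```python
import numpy as np

LAYER_SIZES = [96, 128, 64, 64, 5, 5]  # input, four ReLU hidden layers, softmax output

def dense_params(n_in, n_out):
    """Trainable parameters of one dense layer: l_{n-1} * l_n weights plus l_n biases."""
    return n_in * n_out + n_out

def total_params(sizes=LAYER_SIZES):
    return sum(dense_params(a, b) for a, b in zip(sizes, sizes[1:]))

def init_weights(sizes=LAYER_SIZES, seed=0):
    """He-style normal initialization (an assumption, chosen for ReLU layers)."""
    rng = np.random.default_rng(seed)
    return [(rng.normal(0.0, np.sqrt(2.0 / a), size=(a, b)), np.zeros(b))
            for a, b in zip(sizes, sizes[1:])]

def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # subtract row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def forward(x, weights):
    h = x
    for W, b in weights[:-1]:
        h = np.maximum(0.0, h @ W + b)    # ReLU hidden layers
    W, b = weights[-1]
    return softmax(h @ W + b)             # five phase fractions per alloy, summing to 1

weights = init_weights()
x = np.random.default_rng(1).normal(size=(4, 96))  # four placeholder feature vectors
fractions = forward(x, weights)
print(total_params())  # 25187
```

In Keras, the corresponding optimizer would be `tf.keras.optimizers.Adam(learning_rate=0.02, amsgrad=True)` compiled with `loss="mse"`; the sketch only shows that the softmax head returns non-negative rows summing to 1 and that the network has 25,187 trainable parameters.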

Data availability

To comply with Thermo-Calc's guidelines for sharing calculated data, a several-times-smaller dataset consisting only of phase fraction information is shared (inside the CodeOcean capsule) as a placeholder for the user's own data.

Code availability

A reduced version of the code used in this work is available for use in the CodeOcean capsule https://codeocean.com/capsule/6279423/tree.

References

Yeh, J.-W. et al. Formation of simple crystal structures in Cu-Co-Ni-Cr-Al-Fe-Ti-V alloys with multiprincipal metallic elements. Metall. Mater. Trans. A 35, 2533–2536 (2004).

Yeh, J.-W. et al. Nanostructured high-entropy alloys with multiple principal elements: novel alloy design concepts and outcomes. Adv. Eng. Mater. 6, 299–303 (2004).

Huang, P.-K., Yeh, J.-W., Shun, T.-T. & Chen, S.-K. Multi-principal-element alloys with improved oxidation and wear resistance for thermal spray coating. Adv. Eng. Mater. 6, 74–78 (2004).

Cantor, B., Chang, I., Knight, P. & Vincent, A. Microstructural development in equiatomic multicomponent alloys. Mater. Sci. Eng.: A 375, 213–218 (2004).

Zhang, Y. et al. Microstructures and properties of high-entropy alloys. Prog. Mater. Sci. 61, 1–93 (2014).

Tsai, M.-H. & Yeh, J.-W. High-entropy alloys: a critical review. Mater. Res. Lett. 2, 107–123 (2014).

Hume-Rothery, W. The structure of metals and alloys. Indian J. Phys. 11, 74–74 (1969).

Pickering, E. & Jones, N. High-entropy alloys: a critical assessment of their founding principles and future prospects. Int. Mater. Rev. 61, 183–202 (2016).

Takeuchi, A. & Inoue, A. Classification of bulk metallic glasses by atomic size difference, heat of mixing and period of constituent elements and its application to characterization of the main alloying element. Mater. Trans. 46, 2817–2829 (2005).

Boer, F. d., Mattens, W. C., Boom, R., Miedema, A. R. & Niessen, A. K. Cohesion in Metals. Transition Metal Alloys (North Holland, Netherlands, 1988).

Machaka, R. Machine learning-based prediction of phases in high-entropy alloys. Comput. Mater. Sci. 188, 110244 (2021).

Zhou, Z. et al. Machine learning guided appraisal and exploration of phase design for high entropy alloys. npj Comput. Mater. 5, 1–9 (2019).

Zhang, Y., Zhou, Y. J., Lin, J. P., Chen, G. L. & Liaw, P. K. Solid-solution phase formation rules for multi-component alloys. Adv. Eng. Mater. 10, 534–538 (2008).

Guo, S., Hu, Q., Ng, C. & Liu, C. More than entropy in high-entropy alloys: forming solid solutions or amorphous phase. Intermetallics 41, 96–103 (2013).

Agarwal, A. & Rao, A. P. Artificial intelligence predicts body-centered-cubic and face-centered-cubic phases in high-entropy alloys. JOM 71, 3424–3432 (2019).

Wu, Q. et al. Uncovering the eutectics design by machine learning in the Al–Co–Cr–Fe–Ni high entropy system. Acta Mater. 182, 278–286 (2020).

Islam, N., Huang, W. & Zhuang, H. L. Machine learning for phase selection in multi-principal element alloys. Comput. Mater. Sci. 150, 230–235 (2018).

Zhang, Y. et al. Phase prediction in high entropy alloys with a rational selection of materials descriptors and machine learning models. Acta Mater. 185, 528–539 (2020).

Pei, Z., Yin, J., Hawk, J. A., Alman, D. E. & Gao, M. C. Machine-learning informed prediction of high-entropy solid solution formation: beyond the Hume-Rothery rules. npj Comput. Mater. 6, 1–8 (2020).

Choudhury, A., Konnur, T., Chattopadhyay, P. & Pal, S. Structure prediction of multi-principal element alloys using ensemble learning. Eng. Comput. 37, 1003–1022 (2019).

Kaufmann, K. & Vecchio, K. S. Searching for high entropy alloys: a machine learning approach. Acta Mater. 198, 178–222 (2020).

Chang, Y.-J., Jui, C.-Y., Lee, W.-J. & Yeh, A.-C. Prediction of the composition and hardness of high-entropy alloys by machine learning. JOM 71, 3433–3442 (2019).

Roy, A., Babuska, T., Krick, B. & Balasubramanian, G. Machine learned feature identification for predicting phase and Young's modulus of low-, medium- and high-entropy alloys. Scr. Mater. 185, 152–158 (2020).

Nosratabadi, S. et al. Data science in economics: comprehensive review of advanced machine learning and deep learning methods. Mathematics 8, 1799 (2020).

Hong, Y., Hou, B., Jiang, H. & Zhang, J. Machine learning and artificial neural network accelerated computational discoveries in materials science. Wiley Interdiscip. Rev. Comput. Mol. Sci. 10, e1450 (2020).

Bhadeshia, H. Neural networks and information in materials science. Stat. Anal. Data Min. ASA Data Sci. J. 1, 296–305 (2009).

He, Q., Ye, Y. & Yang, Y. The configurational entropy of mixing of metastable random solid solution in complex multicomponent alloys. J. Appl. Phys. 120, 154902 (2016).

He, Q., Ding, Z., Ye, Y. & Yang, Y. Design of high-entropy alloy: a perspective from nonideal mixing. JOM 69, 2092–2098 (2017).

An, S. et al. Common mechanism for controlling polymorph selection during crystallization in supercooled metallic liquids. Acta Mater. 161, 367–373 (2018).

Debenedetti, P. G. & Stillinger, F. H. Supercooled liquids and the glass transition. Nature 410, 259–267 (2001).

Stillinger, F. H. A topographic view of supercooled liquids and glass formation. Science 267, 1935–1939 (1995).

Carbone, M. R. When not to use machine learning: a perspective on potential and limitations. MRS Bull. 47, 1–7 (2022).

Lundberg, S. M. & Lee, S.-I. A unified approach to interpreting model predictions. In Guyon, I. et al. (eds.) Advances in Neural Information Processing Systems 30, 4765–4774 (Curran Associates, Inc., 2017).

Lundberg, S. M. et al. From local explanations to global understanding with explainable AI for trees. Nat. Mach. Intell. 2, 56–67 (2020).

Zhang, Y. et al. Guidelines in predicting phase formation of high-entropy alloys. MRS Commun. 4, 57–62 (2014).

Huang, W., Martin, P. & Zhuang, H. L. Machine-learning phase prediction of high-entropy alloys. Acta Mater. 169, 225–236 (2019).

Guo, S., Ng, C., Lu, J. & Liu, C. Effect of valence electron concentration on stability of fcc or bcc phase in high entropy alloys. J. Appl. Phys. 109, 103505 (2011).

Chen, R. et al. Composition design of high entropy alloys using the valence electron concentration to balance strength and ductility. Acta Mater. 144, 129–137 (2018).

Yang, S., Lu, J., Xing, F., Zhang, L. & Zhong, Y. Revisit the VEC rule in high entropy alloys (HEAs) with high-throughput CALPHAD approach and its applications for material design-a case study with Al–Co–Cr–Fe–Ni system. Acta Mater. 192, 11–19 (2020).

Ji, X. Relative effect of electronegativity on formation of high entropy alloys. Int. J. Cast. Met. Res. 28, 229–233 (2015).

Shahriari, B., Swersky, K., Wang, Z., Adams, R. P. & De Freitas, N. Taking the human out of the loop: a review of Bayesian optimization. Proc. IEEE 104, 148–175 (2015).

Pelikan, M., Goldberg, D. E., Cantú-Paz, E. et al. BOA: the Bayesian optimization algorithm. In Proc. Genetic and Evolutionary Computation Conference GECCO-99, vol. 1, 525–532 (Citeseer, 1999).

GPy. GPy: a Gaussian process framework in Python. http://github.com/SheffieldML/GPy (since 2012).

Talapatra, A. et al. Experiment design frameworks for accelerated discovery of targeted materials across scales. Front. Mater. 6, 82 (2019).

Miranda, L. J. V. PySwarms, a research-toolkit for Particle Swarm Optimization in Python. J. Open Source Softw. 3. https://doi.org/10.21105/joss.00433 (2018).

Kennedy, J. & Eberhart, R. Particle swarm optimization. In Proceedings of ICNN’95-international conference on neural networks, vol. 4, 1942–1948 (IEEE, 1995).

Poli, R., Kennedy, J. & Blackwell, T. Particle swarm optimization. Swarm Intell. 1, 33–57 (2007).

Bartel, C. J. et al. Physical descriptor for the Gibbs energy of inorganic crystalline solids and temperature-dependent materials chemistry. Nat. Commun. 9, 4168 (2018).

Luo, Q., Zhai, C., Sun, D., Chen, W. & Li, Q. Interpolation and extrapolation with the CALPHAD method. J. Mater. Sci. Technol. 35, 2115–2120 (2019).

Chen, H.-L., Mao, H. & Chen, Q. Database development and CALPHAD calculations for high entropy alloys: challenges, strategies, and tips. Mater. Chem. Phys. 210, 279–290 (2018).

Mao, H., Chen, H.-L. & Chen, Q. TCHEA1: A thermodynamic database not limited for "high entropy" alloys. J. Phase Equilib. Diffus. 38, 353–368 (2017).

Andersson, J.-O., Helander, T., Höglund, L., Shi, P. & Sundman, B. Thermo-Calc & DICTRA, computational tools for materials science. Calphad 26, 273–312 (2002).

Bartel, C. J. et al. Physical descriptor for the Gibbs energy of inorganic crystalline solids and temperature-dependent materials chemistry. Nat. Commun. 9, 1–10 (2018).

Wang, A. Y.-T. et al. Machine learning for materials scientists: An introductory guide toward best practices. Chem. Mater. 32, 4954–4965 (2020).

Han, J., Jentzen, A. & Weinan, E. Solving high-dimensional partial differential equations using deep learning. P. Natl. Acad. Sci. 115, 8505–8510 (2018).

Poggio, T., Mhaskar, H., Rosasco, L., Miranda, B. & Liao, Q. Why and when can deep-but not shallow-networks avoid the curse of dimensionality: a review. Int. J. Autom. Comput. 14, 503–519 (2017).

Reddi, S. J., Kale, S. & Kumar, S. On the convergence of Adam and beyond. arXiv preprint arXiv:1904.09237 (2019).

Acknowledgements

R.A. and G.V. acknowledge the support of QNRF under Project No. NPRP11S-1203-170056. Support from NSF through Grants No. 1545403, 1905325, and 2119103 is acknowledged. High-throughput CALPHAD calculations were carried out in part at the Texas A&M High-Performance Research Computing (HPRC) Facility. R.G. acknowledges this material is based upon work supported by the National Science Foundation Graduate Research Fellowship under Grant No. (DGE:1746932). Any opinions, findings, conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation. S.C. was supported in part by the Advanced Research Projects Agency-Energy (ARPA-E), U.S. Department of Energy, under award number DE-AR0001356.

Author information

Contributions

G.V. contributed to the mapping of single equilibrium CALPHAD, and developed the ML framework. C.S. and R.G. assisted in scanning available literature and providing useful feedback for the project. R.A. supervised the project and contributed insightful ideas and feedback, including the main objective of the work.

Ethics declarations

Competing interests

The authors declare no competing financial interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Vazquez, G., Chakravarty, S., Gurrola, R. et al. A deep neural network regressor for phase constitution estimation in the high entropy alloy system Al-Co-Cr-Fe-Mn-Nb-Ni. npj Comput Mater 9, 68 (2023). https://doi.org/10.1038/s41524-023-01021-8

DOI: https://doi.org/10.1038/s41524-023-01021-8

- Springer Nature Limited
