Abstract

Given high costs of Oncotype DX (ODX) testing, widely used in recurrence risk assessment for early-stage breast cancer, studies have predicted ODX using quantitative clinicopathologic variables. However, such models have incorporated only small cohorts. Using a cohort of patients from the National Cancer Database (NCDB, n = 53,346), we trained machine learning models to predict low-risk (0-25) or high-risk (26-100) ODX using quantitative estrogen receptor (ER)/progesterone receptor (PR)/Ki-67 status, quantitative ER/PR status alone, and no quantitative features. Models were externally validated on a diverse cohort of 970 patients (median follow-up 55 months) for accuracy in ODX prediction and recurrence. Comparing the area under the receiver operating characteristic curve (AUROC) in a held-out set from NCDB, models incorporating quantitative ER/PR (AUROC 0.78, 95% CI 0.77–0.80) and ER/PR/Ki-67 (AUROC 0.81, 95% CI 0.80–0.83) outperformed the non-quantitative model (AUROC 0.70, 95% CI 0.68–0.72). These results were preserved in the validation cohort, where the ER/PR/Ki-67 model (AUROC 0.87, 95% CI 0.81–0.93, p = 0.009) and the ER/PR model (AUROC 0.86, 95% CI 0.80–0.92, p = 0.031) significantly outperformed the non-quantitative model (AUROC 0.80, 95% CI 0.73–0.87). Using a high-sensitivity rule-out threshold, the non-quantitative, quantitative ER/PR and ER/PR/Ki-67 models identified 35%, 30% and 43% of patients as low-risk in the validation cohort. Of these low-risk patients, fewer than 3% had a recurrence at 5 years. These models may help identify patients who can forgo genomic testing and initiate endocrine therapy alone. An online calculator is provided for further study.

Similar content being viewed by others

Explore related subjects

Find the latest articles, discoveries, and news in related topics.Introduction

Breast cancer is the most common form of cancer in the US, with nearly 300,000 new cases diagnosed in 20221. Hormone receptor positive (HR + ) breast cancer constitutes about 70% of newly diagnosed cases and is generally treated effectively with hormonal therapy, but a subset of more aggressive disease requires treatment with chemotherapy2. The Oncotype DX (ODX) test is a 21-gene expression assay that assigns patients a risk recurrence score from 0 to 100 to identify cases of HR + /HER2- breast cancer that would most likely benefit from adjuvant chemotherapy3. ODX has been extensively validated3,4,5,6 and is currently recommended by national guidelines to identify patients with HR + /HER2- breast cancer with up to 3 lymph nodes involved who require chemotherapy7,8. Though a valuable clinical tool, ODX costs ~$4000 per test in the US, which may reduce accessibility in low-resource settings—especially internationally where breast cancer accounts for another 2 million new cases each year9. In the US, racial disparities exist in ODX testing uptake, and several studies have demonstrated higher risks of recurrence among racial/ethnic minority groups, particularly Black and Hispanic, even in patients with low ODX scores10,11,12,13. Furthermore, genomic testing is time-consuming and can contribute to delays in administration of adjuvant treatment14. Testing is performed in over 50% of newly diagnosed HR + /HER2- breast cancer cases nationwide with this percentage rising, and as such more tumors with low-risk clinical features are undergoing ODX testing.

There have been several attempts to use routinely available clinical features to predict likelihood of a high ODX risk score. A set of equations derived from patient data at Magee-Women’s hospital established linear relationships between quantitative pathologic parameters and the numeric ODX score15, and these equations have been validated in various settings16. However, formulas that account for the changing practice patterns of ODX testing are needed. The National Cancer Database (NCDB) is a hospital-based registry that includes data from ~70% of new invasive cancer diagnoses in the US and is the ideal dataset for such models17. Using patient data captured by the NCDB, machine learning methods have been developed for the prediction of high-risk ODX status based on readily available clinicopathologic features such as patient age, tumor size, grade, HR status, and histologic type18,19,20. For example, a nomogram developed at the University of Tennessee Medical Center using data from the NCDB has been evaluated in various contexts21,22,23. However, until recently, the NCDB did not report quantitative histologic parameters for variables such as estrogen receptor (ER), progesterone receptor (PR), and Ki-67 expression in breast cancer patients, limiting the accuracy of such models. As such, we trained machine learning models in the NCDB incorporating these quantitative histologic variables. We then validated the models in a large and diverse patient cohort from the University of Chicago Medical Center (UCMC), assessing both their predictive accuracy for ODX and correlation with survival outcomes.

Results

Cohort description

We performed preliminary assessment of the univariate accuracy of potential features in the NCDB to identify a minimum feature set—HER2 copy number and HER2/CEP17 ratio were excluded as potential features due to low predictive accuracy and limited availability (Supplementary Fig. 1). From the NCDB cohort, we identified 53,346 patients with HR + /HER2- Stage I-III breast cancer with three or fewer lymph nodes involved and no missing data for candidate features. Patients were predominantly non-Hispanic White (80.0%) with a mean age of 60 years, and a median follow-up time of 28 months (Table 1). With this short follow-up, only 1% (n = 488) of patients had passed away. All included patients had ODX testing, 7% (n = 3815) with high-risk (score ≥ 26) results.

The UCMC cohort with similar inclusion criteria had 970 patients and was more diverse, with 30.8% non-Hispanic Black patients, although most were still non-Hispanic White (61.4%). Patients who met our inclusion criteria had a mean age of 58 years and a longer median follow-up time of 55 months. Of these patients, 305 had ODX testing, and 18% (n = 56) had high-risk results, and 29 recurrence events were documented (Supplementary Table 1). Patients without ODX testing were used to evaluate long-term outcomes of patients based on model predictions.

Model development and performance assessment

To allow applicability in settings where certain markers may be unavailable, we developed models that only incorporated routinely available clinical features without quantitative immunohistochemistry, then added quantitative ER/PR status, and finally quantitative ER/PR/Ki-67 status. A subset of 80% of the data from NCDB was used for hypermeter optimization and feature selection. A grid search was performed to select the optimal model and hyperparameters, and logistic regression was chosen as the base model. Sequential forward feature selection identified the most informative features to include in each model (Supplementary Fig. 2). All models incorporated grade, PR status or percent, and ductal histologic subtype. Grade, PR status, and Ki-67% (when available) had the greatest contributions to model performance. Furthermore, models were compared to a previously published deep learning model predicting ODX from digital histology24—but this was only performed in the validation cohort as digital histology was not available in the NCDB.

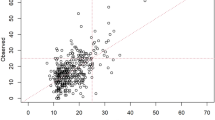

When comparing the area under the receiver operating characteristic curve (AUROC) in the held-out NCDB test cohort, the quantitative ER/PR model (AUROC 0.78, 95% CI 0.77–0.80) and the ER/PR/Ki-67 model (AUROC 0.81, 95% CI 0.80–0.83) both performed better than the non-quantitative model (AUROC 0.70, 95% CI 0.68–0.72, Fig. 1, Table 2). Quantitative models had greater correlation between model predictions and true ODX score in the held-out test set from NCDB with a Pearson correlation coefficient of 0.43 for the quantitative ER/PR/Ki-67 model, and the slope of calibration curves for quantitative models was >0.90 indicating good calibration (Supplementary Fig. 3). Although we designed models based on the availability (or lack thereof) of quantitative IHC results, we also evaluated performance with missing data for other input values using mean imputation to replace values in the testing cohort to simulate missing data (Supplementary Table 2). Missing data for most input variables resulted in decreased model performance, aside from age, tumor size, and quantitative ER status—but meaningful predictions (with AUROC exceeding random chance) could still be made with any single missing variable. We also evaluated performance in select patient subgroups to ensure consistent results in different populations in the held-out NCDB test set. Across models—performance was better in ductal and mixed ductal/lobular tumors, and worse in lobular or mucinous tumors—other histologic subtypes were not evaluated due to small number of cases (Supplementary Table 3). We found no meaningful difference in racial/ethnic subgroups and node-negative versus node-positive cases (Supplementary Tables 4 and 5). In all cases, regardless of subgroup, the ER/PR/Ki-67 model performed the best. When validating the trained models on the subset of the external UCMC dataset with ODX available (n = 305), overall AUROCs were largely preserved (Table 2), with a statistically significant improvement seen in the ER/PR/Ki-67 quantitative model (AUROC 0.87, 95% CI 0.81–0.93, p = 0.009) and the ER/PR model (AUROC 0.86, 95% CI 0.80–0.92, p = 0.031) over the non-quantitative model (AUROC 0.80, 95% CI 0.73–0.87). In the subset of patients who also had digital pathology available (n = 253), AUROC of the performance of the quantitative ER/PR/Ki-67 model (0.86, 95% CI 0.79–0.93) was similar to our previously published deep learning pathologic model (AUROC 0.85, 95% CI 0.78–0.92), suggesting that if quantitative immunohistochemistry is unavailable, this deep learning model may be a reasonable surrogate (Supplementary Fig. 4).

a Receiver operating characteristic curves for prediction of high Oncotype DX using the non-quantitative, quantitative ER/PR, and quantitative ER/PR/Ki-67 models in the National Cancer Database held-out test cohort (n = 10,670). b The same curves plotted for the external University of Chicago Medical Center validation cohort (n = 305).

To enhance the clinical utility of these models we selected cutoffs which achieved 90% and 95% sensitivity for high ODX in the NCDB training cohort to facilitate the use of these models as rule-out tests—i.e., identifying patients who can forgo ODX (Supplementary Table 6). Inclusion of additional quantitative features consistently increased model specificity at each threshold—with a specificity of 54% for the quantitative ER/PR/Ki-67 model seen in the validation cohort at the target 90% sensitivity threshold. To facilitate the study of the presented models, we developed an online calculator to compute and display model predictions with respect to these proposed thresholds for low / very low risk patients, available at rsncdb.cri.uchicago.edu (Supplementary Fig. 5).

Survival analysis

Follow-up was too short to appreciate meaningful differences in survival based on model predictions in the NCDB cohort (Supplementary Table 7). In the UCMC dataset, the quantitative ER/PR/Ki-67 model predictions had greater concordance with recurrence-free interval (c-index 0.71, adjusted hazard ratio [aHR] 1.43, 95% CI 1.11–1.85, p = 0.01) than the quantitative ER/PR model (c-index 0.69, aHR 1.44, 95% CI 1.09–1.89, p = 0.01) or the non-quantitative model (c-index 0.66, aHR 1.33, 95% CI 1.03–1.73, p = 0.03, Table 3). There was a trend towards association with recurrence-free survival for the ER/PR/Ki-67 model predictions (aHR 1.24, 95 % CI 0.98–1.57, p = 0.07). When applying the 90% sensitivity thresholds to the UCMC dataset, patients identified as high risk by all models had a shorter recurrence free interval, with the largest hazard ratio seen for the ER/PR/Ki-67 model (aHR 3.84, 95% CI 1.48–9.97, p = 0.01, Fig. 2). At the 95% sensitivity threshold, patients identified as high risk by the ER/PR/Ki-67 model had a significantly shorter recurrence-free interval (aHR 3.64, 95% CI 1.08–12.24, p = 0.04), with a trend towards shorter recurrence-free interval in high-risk patients per the quantitative ER/PR model (aHR 3.39, 95% CI 0.79–14.53, p = 0.10). Long-term recurrence rates were <3% for quantitative models at both cutoffs, although the ER/PR/Ki-67 model identified more patients as low risk; with 43% (n = 419 out of 964) identified as low risk at the 90% sensitivity threshold. Furthermore, in the subset of patients with digital pathology available (n = 670), both our previous deep learning model on digital histology and the quantitative models provided complementary information (Supplementary Fig. 6). Patients predicted to be low risk by either model had low rates of recurrence (<3%), and the deep learning pathology model identified a sizeable proportion of patients classified as high risk by the quantitative clinical models who could be reclassified as low risk (37% of high risk patients for the ER/PR model and 32% for the ER/PR/Ki-67 model). In an exploratory analysis, we evaluate how quantitative immunohistochemistry and Oncotype could be used together to improve prediction of recurrence. We first applied the 90% sensitivity threshold from above to further stratify risk after ODX testing (Supplementary Fig. 7). No patients with high ODX scores who were low-risk per the quantitative ER/PR or ER/PR/Ki-67 models experienced disease recurrence, and patients with low ODX but high-risk per the quantitative ER/PR/Ki-67 model had a trend towards worse recurrence-free interval (aHR 5.89, 95% CI 0.65–53.73, p = 0.12). We also assessed a model trained to directly predict recurrence in the University of Chicago cohort using Oncotype and features from the quantitative ER/PR/Ki-67 model (Supplementary Table 8). Incorporation of Oncotype with these features improved prediction of recurrence (c-index 0.85) over the quantitative ER/PR/Ki-67 model (c-index 0.71) or Oncotype alone (c-index 0.68), suggesting that more accurate prognostic models can be created through combination of Oncotype with quantitative IHC—although this combined model was fit and evaluated in the same cohort, so further validation is needed.

Kaplan–Meier curves are shown for the recurrence-free intervals of patients (n = 964) in the University of Chicago Medical Center cohort classified as low- and high-risk by the (a) non-quantitative model, (b) quantitative ER/PR model, and (c) quantitative ER/PR/Ki-67 model using a 95% sensitivity cutoff for high-risk disease to stratify patients. Survival analysis results are repeated at the 90% sensitivity cutoff for these same models, shown in (d–f) respectively.

Discussion

Considering the high cost of ODX testing, machine learning methods have emerged as a potential tool for cost-effective prediction of a patient’s recurrence risk using routinely available clinical features. Here we demonstrate that, when predicting high-risk ODX status in patients with breast cancer, logistic regression models trained on a large NCDB dataset incorporating quantitative features for ER/PR% and Ki-67% outperform models utilizing only categorical features for ER/PR status. Our models achieved strong AUROCs at a level of performance comparable or better than models presented in other studies18,19,20,22,25. These performance improvements were preserved when validating our models on a diverse cohort of patients at UCMC.

Correlations between standard histopathologic variables and ODX score were first demonstrated in 2008 by Flanagan et. al in the Magee equations, which were later updated by Klein et al. in 201315,26. Though the original equation utilized grade, HER2 status, ER/PR expression using the semi-quantitative IHC score (H-score), later versions included Ki-67 index. However, H-scores, which range from 0 to 300, are not universally reported, limiting their use. Quantitative components of grade are unavailable in NCDB, limiting direct comparison to the Magee equations.

Orucevic et al. previously developed the University of Tennessee nomogram for ODX using NCDB data incorporating tumor size, grade, PR status, and histologic subtype22. However, quantitative values for ER/PR expression were not available at the time of development of this nomogram. Yoo et al. and Kim et al. also developed logistic regression models incorporating nuclear grade, PR status, and Ki-67%19,25. In another study, Kim et al. applied decision jungles and neural networks for the prediction of high-risk ODX using ER/PR status, HER2 status, Ki-67 index, grade, and histologic subtype20. Moreover, when applying the University of Tennessee nomogram to a cohort of South Korean patients, Kim et al. demonstrated marked reductions in model performance27. These discrepancies may be suggestive of poor generalizability to Asian populations. Our model had equivalent performance in different racial/ethnic groups and maintained strong performance in a diverse validation cohort, which could contribute to the pursuit of health equity in clinical decision making.

Finally, our model was not only predictive of ODX score, but also long-term recurrence, which has rarely been evaluated in other studies28. Both quantitative models identified subsets of patients with very low risk of recurrence, with more low-risk patients identified when incorporating Ki-67. Nonetheless, our validated quantitative ER/PR model maintained higher accuracy for ODX than our non-quantitative model, and can identify a substantial proportion of patients as low-risk in settings where Ki-67 is not routinely obtained.

Despite strong evidence supporting the clinical utility of the ODX test, its high cost can be prohibitive in low-resource settings in the US as well as in other countries, and several studies have shown disparities in testing uptake associated with patient socioeconomic status29,30,31. Given the associated morbidity of adjuvant chemotherapy, there is particular interest in de-escalation of treatment in low-risk breast cancer patients for whom chemotherapy may not only fail to provide additional benefit but also introduce added toxicity and financial burden. The annual cost of ODX testing in the US is projected to increase to $231 million, and the use of highly sensitive cutoffs with computational models based on readily available clinicopathologic features could potentially reduce rates of ODX testing among patients unlikely to have positive results32. Furthermore, a number of recent studies have shown varying ability to predict ODX from digital histology24,33,34,35,36. We demonstrated here that our previously published deep learning model has similar performance to the quantitative clinical models detailed in this study and may have additive value to quantitative immunohistochemistry, as has been previously suggested34. Additionally, imaging-based radiomics approaches have also shown promise in augmenting predictions of a patient’s breast cancer recurrence risk, and may be incorporated in conjunction with clinical / pathologic features in future studies37,38,39,40,41. Finally, although models such as RSClin have been developed that may improve upon the prognostic accuracy of OncotypeDX by including additional clinical variables42,43, no such model incorporates standard quantitative immunohistochemistry. In our exploratory analyses we demonstrate that quantitative immunohistochemistry may have additive value to OncotypeDX testing, and larger well-annotated datasets are needed to confirm this finding and produce the next generation of risk-stratification tools.

There are several limitations to this study. The NCDB cohort captures the data of only patients for whom ODX testing was ordered, which may lead to bias related to practice patterns—such as underrepresentation of older patients who are not chemotherapy candidates. Quantitative values for percentage ER/PR expression have only been available through the NCDB in the past few years, limiting our ability to identify long-term differences in survival between low- and high-risk groups. Potential errors due to miscoding, lack of follow-up, and variability between sites may impact the quality of the NCDB data used to train the model. Furthermore, the training dataset was highly imbalanced with only 7% of cases classified as high-risk per OncotypeDX, (which is similar to other studies—for example, in RxPONDER trial, 10% of patients were excluded for high-risk OncotypeDX scores—and thus represents the national rate of high-risk disease3,6). Although under- or over-sampling is sometimes recommended for skewed data44, such approaches would impair our ability to accurately estimate risk as applicable in national cohorts, and despite skewed training data our models demonstrated strong calibration with true OncotypeDX scores across a spectrum of risk. Despite these limitations, our results were preserved in a single institution cohort with longer term follow-up, suggesting strong external validity and robustness.

Additionally, this study was tuned to predict a risk-of-recurrence assay in a nationally representative cohort from the United States, and although it is appealing to use such a tool in countries where genomic testing is unavailable, validation must be performed to ensure predictions remain robust due to differing demographics. Reassuringly, model accuracy remained robust across racial / ethnic groups despite the majority of training data coming from non-Hispanic white patients, which may indicate generalizability to populations worldwide. While less costly than genomic testing, even the quantitative immunohistochemistry necessary for these models is not uniformly available worldwide—surveys of facilities in sub-Saharan African report only 74–95% of centers utilize immunohistochemistry45,46, and Ki-67 may only be available in half of these centers. Nonetheless, the increase in automated tools for quantification of immunohistochemistry may facilitate the use of our risk prediction models47,48.

In conclusion, using training data from a large dataset of NCDB patients and validation data from a diverse cohort of UCMC patients, we have developed a machine learning model for the prediction of high-risk ODX score from clinicopathologic features which was prognostic for recurrence in an external dataset. This model may assist in the identification of low-risk patients who may safely refrain from adjuvant chemotherapy without further genomic testing.

Methods

Cohort selection

Data were extracted from the NCDB for new cases of invasive, HR-positive, HER2-negative, Stage I-III breast cancer in patients with diagnoses made between 2018 and 2020. Patients were also excluded if they had greater than three lymph nodes positive (reflective of current indications for ODX testing per guidelines) or missing ODX scores (Supplementary Fig. 8). An external validation cohort was identified from UCMC, including 970 invasive, HR-positive, HER2-negative, Stage I-III breast cancer patients diagnosed between 2006 through 2023, of which 305 patients had ODX scores. Association of baseline demographic variables with ODX result was assessed using a two-sided t-test for numeric variables and a chi-squared test for categorical variables.

Model training

NCDB data were used to train and evaluate the performance of a machine learning model that predicted high- or low-risk ODX scores, with high-risk defined as scores of 26 or greater49. A subset comprising 80% of patients was used for model training, while the remaining data were set aside for internal validation. An overview of methods, model training, and model assessment is presented in Supplementary Fig. 9. After inspection of the log-likelihood ratios (LLRs) of the features in the original dataset, features with the greatest LLR were selected as a minimal potential feature set, while the remaining features were excluded (Supplementary Fig. 1). Any patients with missing data in the minimal feature set were excluded from further analysis. We evaluated the performance of four machine learning models—logistic regression, multilayer perceptron, random forest, and AdaBoost to predict high vs low ODX score using 10-fold cross-validation across a limited set of hyperparameters50,51. An informative set of features was selected for each model with sequential forward feature selection, using Akaike information criterion (AIC) as the metric (Supplementary Fig. 2)52. No model out-performed logistic regression with an increase of AUROC of >0.01 on cross validation in the training dataset, so a logistic regression base was used for further analysis given the greater interpretability of logistic regression models (Supplementary Table 9). To assess the comparative value of quantitative clinical models and models trained on digital histology, we also evaluated our previously published model to predict ODX score trained on breast cancer samples from The Cancer Genome Atlas24.

Model assessment

We compared model performance between models with quantitative immunohistochemistry to a model without quantitative features. In the held-out test set from NCDB and the external validation cohort, confidence intervals and p-values for statistical significance of AUROC differences were computed using DeLong’s method; AUPRC confidence intervals were computed using bootstrapping with 1000 iterations53,54. Cutoffs with 95% and 90% sensitivity for high-risk ODX scores were computed in the NCDB training cohort, and model performance metrics were computed at each cutoff, including sensitivity, specificity, negative predictive value (NPV), and positive predictive value (PPV). Performance of the model was further evaluated by examining the correlation between model prediction and the patient’s known ODX score, and calibration plots were also generated based on the predicted and true probability of a high-risk ODX score in the NCDB test set. All statistical testing was performed at the 0.05 significance level, and all analysis was performed in Python 3.10.6 using sci-kit learn 1.2.1, lifelines 0.27.0, and SciPy 1.9.3.

Survival analysis

Long-term survival outcomes were examined with Kaplan–Meier analysis, and hazard ratios and the Harrell’s concordance index (c-index) were calculated and compared between quantitative and non-quantitative models using Cox proportional hazards regression55,56,57. Charlson-Deyo comorbidity index and actuarial life expectancy were included as covariates for the Cox models58. Associations of model predictions with overall survival were analyzed in the NCDB cohort, whereas associations with overall survival, recurrence-free interval, and recurrence-free survival were analyzed in the UCMC cohort. Kaplan–Meier curves were generated using both 95% and 90% sensitivity thresholds to evaluate the model’s utility as a rule-out test, and the survival analysis was also performed using raw model predictions (normalized by standard deviation).

Reporting summary

Further information on research design is available in the Nature Research Reporting Summary linked to this article.

Data availability

The NCDB dataset is available to investigators from Commission on Cancer accredited cancer programs who complete an application process https://ncdbapp.facs.org/puf/. Data from the validation cohort is available from the authors upon reasonable request.

Code availability

The code for data preprocessing, model training, and model assessment is provided as a Jupyter notebook. It was written in Python 3.10.6 and is publicly available as a repository on GitHub at github.com/fmhoward/NCDBRS.

References

Siegel, R. L., Miller, K. D., Fuchs, H. E. & Jemal, A. Cancer statistics, 2022. CA Cancer J. Clin. 72, 7–33 (2022).

Brenton, J. D., Carey, L. A., Ahmed, A. A. & Caldas, C. Molecular classification and molecular forecasting of breast cancer: ready for clinical application? J. Clin. Oncol. 23, 7350–7360 (2005).

Sparano, J. A. et al. Adjuvant chemotherapy guided by a 21-gene expression assay in breast cancer. N. Engl. J. Med. 379, 111–121 (2018).

Paik, S. et al. A multigene assay to predict recurrence of tamoxifen-treated, node-negative breast cancer. N. Engl. J. Med. 351, 2817–2826 (2004).

Kwa, M., Makris, A. & Esteva, F. J. Clinical utility of gene-expression signatures in early stage breast cancer. Nat. Rev. Clin. Oncol. 14, 595–610 (2017).

Kalinsky, K. et al. 21-gene assay to inform chemotherapy benefit in node-positive breast cancer. N. Engl. J. Med. 385, 2336–2347 (2021).

Andre, F. et al. Biomarkers for adjuvant endocrine and chemotherapy in early-stage breast cancer: ASCO guideline update. J. Clin. Oncol. 40, 1816–1837 (2022).

Gradishar, W. J. et al. Breast cancer, version 3.2022, NCCN clinical practice guidelines in oncology. J. Natl Compr. Cancer Netw. JNCCN 20, 691–722 (2022).

Arnold, M. et al. Current and future burden of breast cancer: global statistics for 2020 and 2040. Breast 66, 15–23 (2022).

Roberts, M. C. et al. Racial variation in the uptake of oncotype DX testing for early-stage breast cancer. J. Clin. Oncol. 34, 130–138 (2016).

Moore, J. et al. Oncotype DX risk recurrence score and total mortality for early-stage breast cancer by race/ethnicity. Cancer Epidemiol. Biomark. Prev. Publ. Am. Assoc. Cancer Res. Cosponsor. Am. Soc. Prev. Oncol. 31, 821–830 (2022).

Collin, L. J. et al. Oncotype DX recurrence score implications for disparities in chemotherapy and breast cancer mortality in Georgia. NPJ Breast Cancer 5, 32 (2019).

Ibraheem, A., Olopade, O. I. & Huo, D. Propensity score analysis of the prognostic value of genomic assays for breast cancer in diverse populations using the national cancer database. Cancer 126, 4013–4022 (2020).

Vandergrift, J. L. et al. Time to adjuvant chemotherapy for breast cancer in national comprehensive cancer network institutions. J. Natl Cancer Inst. 105, 104–112 (2013).

Klein, M. E. et al. Prediction of the Oncotype DX recurrence score: use of pathology-generated equations derived by linear regression analysis. Mod. Pathol. 26, 658–664 (2013).

Sughayer, M., Alaaraj, R. & Alsughayer, A. Applying new magee equations for predicting the oncotype Dx recurrence score. Breast Cancer 25, 597–604 (2018).

Bilimoria, K. Y., Stewart, A. K., Winchester, D. P. & Ko, C. Y. The national cancer data base: a powerful initiative to improve cancer care in the United States. Ann. Surg. Oncol. 15, 683–690 (2008).

Lee, S. B. et al. A nomogram for predicting the oncotype DX recurrence score in women with T1-3N0-1miM0 hormone receptor‒positive, human epidermal growth factor 2 (HER2)‒negative breast cancer. Cancer Res. Treat. 51, 1073–1085 (2019).

Kim, M. C., Kwon, S. Y., Choi, J. E., Kang, S. H. & Bae, Y. K. Prediction of oncotype DX recurrence score using clinicopathological variables in estrogen receptor-positive/human epidermal growth factor receptor 2-Negative breast cancer. J. Breast Cancer 26, 105–116 (2023).

Kim, I. et al. A predictive model for high/low risk group according to oncotype DX recurrence score using machine learning. Eur. J. Surg. Oncol. 45, 134–140 (2019).

Orucevic, A., Bell, J. L., McNabb, A. P. & Heidel, R. E. Oncotype DX breast cancer recurrence score can be predicted with a novel nomogram using clinicopathologic data. Breast Cancer Res. Treat. 163, 51–61 (2017).

Orucevic, A., Bell, J. L., King, M., McNabb, A. P. & Heidel, R. E. Nomogram update based on TAILORx clinical trial results - oncotype DX breast cancer recurrence score can be predicted using clinicopathologic data. Breast 46, 116–125 (2019).

Robertson, S. J. et al. Selecting patients for oncotype DX testing using standard clinicopathologic information. Clin. Breast Cancer 20, 61–67 (2020).

Howard, F. M. et al. Integration of clinical features and deep learning on pathology for the prediction of breast cancer recurrence assays and risk of recurrence. NPJ Breast Cancer 9, 25 (2023).

Yoo, S. H. et al. Development of a nomogram to predict the recurrence score of 21-gene prediction assay in hormone receptor-positive early breast cancer. Clin. Breast Cancer 20, 98–107.e1 (2020).

Flanagan, M. B., Dabbs, D. J., Brufsky, A. M., Beriwal, S. & Bhargava, R. Histopathologic variables predict oncotype DXTM recurrence score. Mod. Pathol. 21, 1255–1261 (2008).

Kim, J.-M. et al. Verification of a western nomogram for predicting oncotype DXTM recurrence scores in Korean patients with breast cancer. J. Breast Cancer 21, 222–226 (2018).

Bhargava, R., Clark, B. Z., Carter, G. J., Brufsky, A. M. & Dabbs, D. J. The healthcare value of the Magee decision algorithmTM: use of magee equationsTM and mitosis score to safely forgo molecular testing in breast cancer. Mod. Pathol. 33, 1563–1570 (2020).

Lund, M. J. et al. 21-Gene recurrence scores. Cancer 118, 788–796 (2012).

Guth, A. A., Fineberg, S., Fei, K., Franco, R. & Bickell, N. A. Utilization of oncotype DX in an inner city population: race or place? Int. J. Breast Cancer 2013, e653805 (2013).

Dinan, M. A. et al. Initial trends in the use of the 21-gene recurrence score assay for patients with breast cancer in the medicare population, 2005-2009. JAMA Oncol. 1, 158–166 (2015).

Mariotto, A. et al. Expected monetary impact of oncotype DX score-concordant systemic breast cancer therapy based on the TAILORx trial. JNCI J. Natl Cancer Inst. 112, 154–160 (2019).

Romo-Bucheli, D., Janowczyk, A., Gilmore, H., Romero, E. & Madabhushi, A. A deep learning based strategy for identifying and associating mitotic activity with gene expression derived risk categories in estrogen receptor positive breast cancers. Cytom. A 91, 566–573 (2017).

Li, H. et al. Deep learning-based pathology image analysis enhances magee feature correlation with oncotype DX breast recurrence score. Front. Med. 9, 886763 (2022).

Cho, S. Y. et al. Deep learning from HE slides predicts the clinical benefit from adjuvant chemotherapy in hormone receptor-positive breast cancer patients. Sci. Rep. 11, 17363 (2021).

Chen, Y. et al. Computational pathology improves risk stratification of a multi-gene assay for early stage ER+ breast cancer. Npj Breast Cancer 9, 1–10 (2023).

Fan, M. et al. Radiogenomic signatures of oncotype DX recurrence score enable prediction of survival in estrogen receptor-positive breast cancer: a multicohort study. Radiology 302, 516–524 (2022).

Romeo, V. et al. MRI radiomics and machine learning for the prediction of oncotype Dx recurrence score in invasive breast cancer. Cancers 15, 1840 (2023).

Mao, N. et al. Mammography-based radiomics for predicting the risk of breast cancer recurrence: a multicenter study. Br. J. Radiol. 94, 20210348 (2021).

Chiacchiaretta, P. et al. MRI-based radiomics approach predicts tumor recurrence in ER + /HER2 - early breast cancer patients. J. Digit. Imaging 36, 1071–1080 (2023).

Ha, R. et al. Convolutional neural network using a breast MRI tumor dataset can predict oncotype Dx recurrence score. J. Magn. Reson. Imaging JMRI 49, 518–524 (2019).

Sparano, J. A. et al. Development and validation of a tool integrating the 21-gene recurrence score and clinical-pathological features to individualize prognosis and prediction of chemotherapy benefit in early breast cancer. J. Clin. Oncol. 39, 557–564 (2021).

Vannier, A. G. L. et al. Validation of the RSClin risk calculator in the national cancer data base. Cancer 130, 1210–1220 (2024).

Barandela, R., Valdovinos, R. M., Sánchez, J. S. & Ferri, F. J. The imbalanced training sample problem: under or over sampling? in Structural, Syntactic, and Statistical Pattern Recognition ((eds.) Fred, A., Caelli, T. M., Duin, R. P. W., Campilho, A. C. & de Ridder, D.) 806–814 (Springer, Berlin, Heidelberg, 2004). https://doi.org/10.1007/978-3-540-27868-9_88.

Vanderpuye, V., Dadzie, M.-A., Huo, D. & Olopade, O. I. Assessment of breast cancer management in sub-saharan Africa. JCO Glob. Oncol. https://doi.org/10.1200/GO.21.00282 (2021).

Ziegenhorn, H.-V. et al. Breast cancer pathology services in sub-aharan Africa: a survey within population-based cancer registries. BMC Health Serv. Res. 20, 912 (2020).

Shafi, S. et al. Integrating and validating automated digital imaging analysis of estrogen receptor immunohistochemistry in a fully digital workflow for clinical use. J. Pathol. Inform. 13, 100122 (2022).

Lara, H. et al. Quantitative image analysis for tissue biomarker use: a white paper from the digital pathology association. Appl. Immunohistochem. Mol. Morphol. 29, 479–493 (2021).

Sparano, J. A. & Paik, S. Development of the 21-gene assay and its application in clinical practice and clinical trials. J. Clin. Oncol. 26, 721–728 (2008).

Freund, Y. & Schapire, R. E. A desicion-theoretic generalization of on-line learning and an application to boosting. In Computational Learning Theory (ed. Vitányi, P.) 23–37 (Springer, Berlin, Heidelberg, 1995).

Ho, T. K. Random decision forests. in Proceedings of 3rd International Conference on Document Analysis and Recognition Vol. 1, 278–282 (IEEE, 1995).

Akaike, H. A new look at the statistical model identification. IEEE Trans. Autom. Control 19, 716–723 (1974).

DeLong, E. R., DeLong, D. M. & Clarke-Pearson, D. L. Comparing the areas under two or more correlated receiver operating characteristic curves: a nonparametric approach. Biometrics 44, 837–845 (1988).

Sun, X. & Xu, W. Fast implementation of DeLong’s algorithm for comparing the areas under correlated receiver operating characteristic curves. IEEE Signal Process. Lett. 21, 1389–1393 (2014).

Harrell, F. E., Califf, R. M., Pryor, D. B., Lee, K. L. & Rosati, R. A. Evaluating the yield of medical tests. JAMA 247, 2543–2546 (1982).

Harrell, F. E., Lee, K. L., Califf, R. M., Pryor, D. B. & Rosati, R. A. Regression modelling strategies for improved prognostic prediction. Stat. Med. 3, 143–152 (1984).

Harrell, F. E., Lee, K. L. & Mark, D. B. Multivariable prognostic models: issues in developing models, evaluating assumptions and adequacy, and measuring and reducing errors. Stat. Med. 15, 361–387 (1996).

Deyo, R. A., Cherkin, D. C. & Ciol, M. A. Adapting a clinical comorbidity index for use with ICD-9-CM administrative databases. J. Clin. Epidemiol. 45, 613–619 (1992).

Acknowledgements

A.D. received support from the NIH NCI-SOAR grant 1R25CA240134-01. F.M.H. received support from the NIH/NCI (K08CA283261) and the Cancer Research Foundation. A.T.P received support from the NIH/NIDCR (K08-DE026500), the NCI (U01-CA243075), the Adenoid Cystic Carcinoma Research Foundation, the Cancer Research Foundation, and the American Cancer Society. A.T.P., D.H., and F.M.H. received support from the Department of Defense (BC211095P1). D.H. and O.I.O. received support from the NIH/NCI (1P20-CA233307). O.I.O. received support from Susan G. Komen (SAC 210203). J.Q.F received support from Susan G. Komen (TREND21675016). D.H. and O.I.O received support from Breast Cancer Research Foundation (BCRF-21-071).

Author information

Authors and Affiliations

Contributions

A.D. and F.M.H. were responsible for concept proposal, study design, and essential programming work. A.D., F.M.H., F.Z., J.Q.F., O.I.O., D.H. and A.T.P. contributed to data processing, analysis and statistical approaches. A.D. conducted initial drafting of the manuscript. A.D., A.V., F.Z., J.Q.F., P.S., M.S., K.Y., E.M.F., O.I.O., A.T.P., D.H., and F.M.H. contributed to interpretation of findings and review of the manuscript.

Corresponding authors

Ethics declarations

Competing interests

A.D., A.V., F.Z., J.Q.F, P.S., M.S., K.Y., E.M.F., and D.H. report no competing financial or non-financial conflicts of interest. O.I.O reports ownership interest in 54Gene, CancerIQ, and Tempus and financial interest in Color Genomics, Healthy Life for All Foundation, and Roche/Genetech. A.T.P reports consulting fees from Prelude Biotherapeutics, LLC, Ayala Pharmaceuticals, Elvar Therapeutics, Abbvie, and Privo, and contracted research with Kura Oncology and Abbvie. F.M.H. reports consulting fees from Novartis.

Ethics

All experiments were conducted in accordance with the Declaration of Helsinki and the study was approved by the University of Chicago Institutional Review Board, IRB 22-0707. For model training, anonymized patient data was obtained from the NCDB17, this data is collected passively through cancer registries and is exempt from informed consent. For validation, a cohort of 970 anonymized patients were identified at the University of Chicago Medical Center, with data collected from January 1st 2006 through April 30th 2023. Informed consent for the validation cohort was waived, as patients had previously consented to the secondary use of their clinical data.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Dhungana, A., Vannier, A., Zhao, F. et al. Development and validation of a clinical breast cancer tool for accurate prediction of recurrence. npj Breast Cancer 10, 46 (2024). https://doi.org/10.1038/s41523-024-00651-5

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41523-024-00651-5

- Springer Nature Limited