Abstract

Forecasting influenza activity in tropical and subtropical regions, such as Hong Kong, is challenging due to irregular seasonality and high variability. We develop a diverse set of statistical, machine learning, and deep learning approaches to forecast influenza activity in Hong Kong 0 to 8 weeks ahead, leveraging a unique multi-year surveillance record spanning 32 epidemics from 1998 to 2019. We consider a simple average ensemble (SAE) of the top two individual models, and develop an adaptive weight blending ensemble (AWBE) that dynamically updates model contribution. All models outperform the baseline constant incidence model, reducing the root mean square error (RMSE) by 23%–29% and weighted interval score (WIS) by 25%–31% for 8-week ahead forecasts. The SAE model performed similarly to individual models, while the AWBE model reduces RMSE by 52% and WIS by 53%, outperforming individual models for forecasts in different epidemic trends (growth, plateau, decline) and during both winter and summer seasons. Using the post-COVID data (2023–2024) as another test period, the AWBE model still reduces RMSE by 39% and WIS by 45%. Our framework contributes to comparing and benchmarking models in ensemble forecasts, enhancing evidence for synthesizing multiple models in disease forecasting for geographies with irregular influenza seasonality.

Similar content being viewed by others

Introduction

Influenza virus causes an estimated 3–5 million severe illnesses and 400,000 deaths annually on average1. Forecasting infectious disease activity could inform public health responses to outbreaks, such as preparing for the increase in hospitalization2,3. In regions with stable seasonality such as the continental U.S., forecasting of influenza could be reliable, with the establishment of forecasting hub4 to generate ensemble forecast based on the forecast from several groups based on several mechanistic, statistical, machine learning and deep learning approaches4,5,6,7,8,9,10. In region with regular influenza seasonality, the start and end week of influenza season are stable, and therefore the forecasting tasks are usually conducted in a pre-defined epidemic period, such as October to the May next year in United States.

In subtropical and tropical regions such as Hong Kong, influenza seasonality is irregular and causes difficulty. In these regions, the onset of influenza season is unpredictable, and there is potential of second influenza peak in summer seasons, so that accurate influenza forecast is challenging11,12,13, particularly in leveraging seasonality4,14. Due to this challenge, modification of forecasting approach is necessary, such as conducing forecast throughout the year, instead of a pre-defined epidemic period. Also, the prediction models are required to be sensitive to capture rapid changes in influenza incidence. In Hong Kong. one previous study attempted to forecast the peak time and magnitude13 but based on a single model. Ensemble approaches have proven superior across a range of disease systems and geographies4,9,15,16,17,18, therefore there is a great potential for ensemble forecast in Hong Kong.

To address this challenge, we aim to develop a systematic approach for 0–8 week ahead forecasting of influenza activity in the context of irregular seasonality. Using Hong Kong as an example, we examine >20 years of data (1998–2019) to develop and evaluate various statistical, machine learning, and deep learning approaches. Additionally, we explore several ensemble forecasting methods that have previously demonstrated superior performance in other diseases4,9,17,19 or geographies20,21. We propose dynamically updating model weights to better capture the rapid changes in influenza activity in Hong Kong. Our approach and ensemble framework contribute to the evidence base for forecasting infectious diseases in regions with irregular seasonality.

Results

Influenza epidemics in Hong Kong

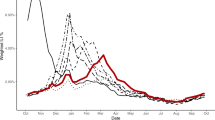

In Hong Kong, an indicator combining influenza-like-illness intensity (ILI+) and the proportion of respiratory specimens positive for influenza each week serves as the gold standard to measure influenza activity22. From 1998 to 2019, 32 epidemics occurred: 20 in winter (November to April) and 12 in summer (May to October). Winter epidemics consistently occurred, except in 2013 and 2016. The start week of epidemics varied (Fig. 1), with winter and summer epidemics ranging from week 5 (Nov, 29th)–21 (Mar, 21st), and week 27 (May, 7th)−47 (Sep, 18th), respectively.

A Influenza activity (ILI + ) in Hong Kong from 1998 to 2019, encompassing the influenza season, and the training and testing periods. Blue dotted vertical lines indicate the start of epidemics. B Influenza trends in a year Hong Kong. Epidemic week is defined as November of the preceding year to the October in the current year.

A 1-week delay exists in ILI+ reporting (i.e., at week t, ILI+ is only available up to week t−1). We mimic real-time analysis considering these delays to generate nowcasts and up to 8-week ahead forecasts. In 2009, the sentinel surveillance system was affected by the establishment of Special Designated Flu clinics (not part of the sentinel network) in response to the H1N1 influenza pandemic. In our previous study, we estimated that this establishment of special clinics inflated the ILI+ values by threefold in 200923. Thus, we exclude 2009 forecasts from all evaluations. This removal is due to the change in the data-generating process from sentinel surveillance to a combination of sentinel surveillance and Special Designated Flu Clinics in 2009. When changes in the data-generating process occur over time periods, our methods would not be applicable to those periods.

Overview of nowcasting and forecasting of ILI+

Given the irregular seasonality of influenza activity in Hong Kong and potential influenza activity in summer, we generate nowcasts and forecasts of ILI+ throughout the year. This approach contrasts with season-based methods, which only forecast influenza activity from December to May in regions with regular influenza seasonality in winter and no influenza activity in summer.

We first develop various statistical models (Appendix Section 1) to nowcast and forecast ILI+ up to 8 weeks ahead, incorporating epidemiological predictors such as past ILI+ values from the previous 14 weeks, week number, and month of the year to reflect seasonality, and meteorological data including weekly temperature, temperature range, absolute and relative humidity, rainfall, solar radiation, wind speed, and atmospheric pressure (Appendix Section 2). Predictions from these individual models are combined into a single ensemble forecast. Eight models are tested, including the Autoregressive Integrated Moving Average model (ARIMA), Generalized Autoregressive Conditional Heteroskedasticity model (GARCH), Random Forests model (RF)24, Extreme Gradient Boosting model (XGB)25, Long Short-Term Memory Network Model (LSTM)26, Gated Recurrent Units Network Model (GRU)27, a Transformer-based time series framework named TSTPlus (TSTPlus)28 and an ensemble of deep Convolutional Neural Network (CNN) models called InceptionTime Plus Model29.

We use January 1998–October 2007 as the training period and November 2007—July 2019 as the test period. To avoid collinearity among meteorological predictors and determine the optimal lag, we compute the Pearson correlation between ILI+ data and predictors with varying lags during the training period, selecting 5 out of 8 meteorological predictors (see methods). Epidemiological predictors are included in all models. Individual models are fitted on the selected predictors and observed ILI+ data, generating forecasts using a rolling method: At each week t during the test period (Nov 2007—Jul 2019), we use meteorological data up to week t and ILI+ data only up to week t−1 to make forecasts for the period t up to t + 8. Statistical models (ARIMA and GARCH) are retrained weekly with the most recent available data. Other models (machine learning and deep learning) are retrained only in the first week of November each year, as weekly updates require higher training costs with no performance improvement (Fig. S1).

Then, we generate ensemble forecasts based on individual models by using a simple averaging of the top two models and a model blending method that weights models based on past performance18,30,31. We also introduce a time adaptive decay weighting scheme, where performance with more recent data contributes more heavily to the estimation of the weight of each model. For comparison with the ensemble and individual models, we consider a baseline “constant” null model, in which the ILI+ from weeks t to t + 8 weeks remains the same as ILI+ at week t−1.

Prediction intervals

Most machine learning and deep learning approaches do not provide prediction intervals, and our trials using Monte Carlo Dropout (MCDropout) show that MCDropout may generate worse point forecasts compared to those without MCDropout (Fig. S2). To address this limitation, we extend the previous approach using a normal distribution with the point forecast as a mean and the standard deviation (SD) calculated using residuals from a rolling 20-week window32. Instead of fixing the 20-week window, we use the training data to determine the optimal week number for the rolling window for each prediction horizon and model (see method). To ensure fair comparisons, we used the same method to generate prediction intervals for baseline models, and statistical models.

Evaluation metrics

We evaluate and compare the performance of individual and ensemble models during the test period (Nov 2007—Jul 2019). Primarily, we use root mean square error (RMSE), symmetric mean absolute percentage error (SMAPE), and weighted interval score (WIS) to compare models, while also providing mean absolute error (MAE) and mean absolute percentage error (MAPE) (Appendix Section 3).

Performance of individual models in nowcasting and forecasting

Eight individual models are used to forecast ILI+ up to an 8-week time horizon. Most of these models broadly capture ILI+ dynamics (Figs. S3 and S4). Across all 9 weeks of the horizon (t = 0 to t + 8), all models outperform the baseline constant model, reducing RMSE by 23%–29%, SMAPE by 17%–22%, and WIS by 25%–31% (Fig. 2). As the prediction horizon lengthens, individual model improvements become more apparent. For instance, all models outperform the baseline, reducing RMSE by 22%–31% for 4 weeks ahead forecast and 33%–37% for 8 weeks ahead forecast (Table S1).

A–E referred to RMSE, MAE, WIS, SMAPE, and MAPE. Respectively. F showed the numerical value of the relative performance of metrics compared to the Baseline. Models: ARIMA Autoregressive Integrated Moving Average Model, GARCH Generalized AutoRegressive Conditional Heteroskedasticity Model, RF Random Forest, XGB Extreme Gradient Boosting, InTimePlus InceptionTime Plus Model, LSTM Long Short-Term Memory Network, GRU Gated Recurrent Neural Network, TSTPlus Transformer-based Framework for Multivariate Time Series Representation Learning Model, SAE Sample Average Ensemble model, NBE Normal Blending Ensemble model, AWAE Adaptive Weighted Average Ensemble model, AWBE Adaptive Weighted Blending Ensemble model.

We then assess model performance during distinct epidemic phases (Fig. 3). The best-performing model varies among phases, with LSTM, InTimePlus, and RF performing best in growth, plateau, and decline phases, respectively, reducing RMSE by 24%, 31%, and 26% compared to the baseline model. We evaluate model performance by season (winter or summer) (Figs. 3 and S5) and find that the best-performing model in one season may not perform as well in the other. The RF model performs best in winter but has the worst performance in summer among all individual models. Conversely, the InTimePlus model performs best in summer but has the worst performance in winter. Since the relative skills of different types of models depend on the epidemic phase and the season, an ensemble would likely be well-positioned to improve overall performance.

Performance of ensemble models in nowcasting and forecasting

We first examine two ensemble approaches: the simple averaging ensemble (SAE) and the normal blending ensemble (NBE). For the SAE model, we select the three best models at each week t based on RMSE calculated using data up to week t−1 and take the unweighted mean of these individual forecasts. Two models are selected based on the RMSE on ensemble models with different numbers of best models in training period (Figs. S6 and S7). For the NBE model, we fit a LASSO regression on observed ILI+ up to week t−1, and the corresponding out-sample prediction of ILI+ from all individual models, assigning weights to the nowcast and forecast periods using regression coefficients. Therefore, the weights of individual models are not constrained to sum up to one and are allowed to be negative. To further improve forecasts, we use exponential time decay to weight previous data, assigning the highest weight to the most recent week (t−1) when averaging or blending. These are named the Adaptive Weighted Average Ensemble (AWAE) and the Adaptive Weighted Blending Ensemble (AWBE) models, respectively. Based on the training data, we set the decay rate to be 0.384 in the AWAE and AWBE models, the weight decayed to 0.01 at 12 weeks before the week of conducting forecast (Figs. S8 and S9).

Compared to the baseline constant model, the SAE and NBE models reduced RMSE by 27%−29%, SMAPE by 19–21%, and WIS by 28–29%, comparable to the performance of the best individual models, without showing a significant advantage. The performance further improved when considering decaying weights based on recent performance; the AWAE and AWBE ensemble models reduced RMSE by 39% and 52%, SMAPE by 35% and 43%, and WIS by 42% and 53%, demonstrating that adaptive weighting could further improve forecasting performance. Further evaluating performance by different forecasting horizons, the AWAE and AWBE improvements were more apparent at longer prediction horizons (Table S1, 2, Figs. 2 and 4). Additionally, the AWAE and AWBE ensembles consistently improved forecast accuracy for different epidemic phases and both summer and winter, compared to the SAE and NBE models or all individual models (Fig. 3).

All metrics are relative to the Baseline model and include RMSE, SMAPE, MAE, WIS, and MAPE. A Model performance for distinct epidemic trends, with black dashed lines representing the best individual models. B Different stages of Hong Kong flu data distinguished by color, with the gray dashed line signifying outbreak threshold. C Model performance by winter and summer seasons.

Model performance in predicting occurrence of epidemic

Despite our framework is designed and optimized to generate 0–8 week ahead forecast, our framework can provide predictions on occurrence of epidemic, peak timing and magnitude (Appendix Section 3.3). We follow the evaluation criteria based on a previous study in Hong Kong13 (Details available in method section). The accuracy of predicting occurrence of outbreaks within the next eight weeks, as well as the sensitivity (TPR), specificity (SPC), precision (PPV), and recall (NPV) indicators, based on AWAE and AWBE are higher than the baseline and individual models, with values equal to 0.9 for these 5 indicators. When compared with previous predictions for Hong Kong (17), our AWAE and AWBE model demonstrates better performance in term of NPV, and comparable performance across all other indicators (Fig. S10). Regarding peak timing (Fig. S11), our individual models could perform better in 0-week ahead forecast under stricter criteria, and in 0–4-week ahead forecast under looser criteria, compared with the previous work (17). The accuracy is comparable for other horizons. It should be noted that the ensemble model may not always better than individual models. Regarding peak magnitude, AWBE model demonstrates better accuracy for 1–6 week ahead forecast under the stricter, compared to previous work (17), and similar performance for 0-week ahead forecast (Appendix Section 3.3).

Feature importance

The feature importance map illustrates the significance of different predictors for various models and time horizons, reflecting model forecasting accuracy results and intuitive model interpretation (Fig. 5). First, the ILI+ value in t−1 is highly important for all models, as expected, since it represents the most updated information about the current epidemic state. Second, different models utilize predictors differently. For statistical models (ARIMA and GARCH), except for the ILI+ value in t−1, meteorological predictors and ILI+ values at t−2 and earlier are equally and weakly important, with little change in predictors across time horizons. For machine learning models (RF and XGB), predictors become more important for longer prediction horizons. Deep learning models exhibit different feature importance maps from other approaches. For LSTM and GRU, epidemiological predictors are more important than meteorological predictors. For InTimePlus and TSTPlus, only past ILI+ values are important in nowcasts, but all other predictors become more important for longer prediction horizons. We examine the differences in feature importance between summer and winter seasons. The importance of most features remains consistent, except for ‘Minimum of temperature’. For the RF, XGB, InTimePlus, and TSTPlus models, this feature is slightly more important for long-term winter predictions than for summer predictions (Fig. S12). The differences in learning capabilities regarding features and lags of various models also support the use of ensemble models and their improvements compared to individual models.

Importance is measured by average regression coefficients in ARIMA and GARCH models, average feature importance in RF and XGB models, average saliency maps for LSTM and GRU models, and average permutation importance for TSTPlus and InTimePlus models. It should be noted that the numerical results of different comparison methods may not be directly comparable.

We conducted an ablation test to compare the prediction performances for models with or without meteorological predictors (Fig. S13). Overall, the inclusion of meteorological variables does not have a consistent impact on the performance. The impact depends on the models, evaluation metrics and evaluation period (Appendix Section 2.3).

Requirement of the amount of training data and associated computational cost

To determine the required amount of training data, we vary the training period duration from 1 to 9 years, retrain the models, generate forecasts, and compute the RMSE (Fig. S14). Decreasing trends in RMSE are observed when the training period increases, particularly for deep learning models. Overall, 7 years of data are needed for a performance comparable to models using all available data (relative RMSE < 20%). When there are only one year of training data, all individual models are worse than baseline (Fig. S15).

We also evaluate the computational cost of each model, measured by the training running time for predicting a single week’s ILI+. The running time of ensemble models is the sum of the running time of all individual models plus the ensemble’s running time. We find that RF and XGB have low training costs regardless of the amount of training data (<1 sec), while TSTPlus and InTimePlus have high training costs, increasing from around 5–6 sec for 1 year of training data to around 170–200 sec of rolling training approach. Overall, one run of training takes at most 196 sec for the complicated deep learning model when using the rolling method. For ensemble models, since running all individual models is required, it takes around 430 sec for 1 year. However, it should be noted that individual models could be run parallelly. If so, the ensemble models required only no more than 200 sec.

Model performance after COVID-19 pandemic

During 2020–2023, Hong Kong implemented substantial public health and social measures to suppress COVID-19 outbreaks, resulting in zero influenza activity with no epidemics in these 3 years33,34. Considering the potential impact on influenza transmission dynamics due to the presence of SARS-CoV-2 viruses, we test our approaches in the post-COVID-19 era, using March 2023 to January 2024 as another testing period.

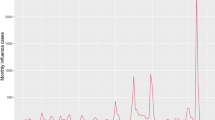

Overall, all models except for ARIMA and GRU perform better than the baseline model during 2023–2024 but slightly worse than the pre-COVID era (Table S2, Fig. S16). Only 6 out of 8 models improve predictions, reducing the RMSE by 5–17% compared to the baseline model (Table S3). Ensemble models further enhance predictions, with NBE, AWAE, and AWBE reducing the RMSE by 24%, 27%, and 39%, respectively, compared to the baseline (Table S3, Fig. 6), but not for SAE.

Red, yellow, blue and purple indicate the point forecasts of 0-week, 2-week, 4-week and 8-week ahead, accompanying the 90% prediction interval by shaded area of corresponding colors. A, B show the results of Adaptive Weighted Average Ensemble model (AWAE) and Adaptive Weighted Blending Ensemble (AWBE) respectively.

Discussion

In this study, we evaluate the performance of various individual models and ensemble approaches to forecast the influenza epidemics (ILI+) in Hong Kong. Based on a unique dataset covering 32 epidemics in a subtropical region from 1998 to 2019, and using the last 11 years as the test period, we examine traditional time series, advanced machine learning and deep learning approaches. We find that the RF and InTimePlus models can reduce forecasting error (RMSE) by 27%, compared with the baseline “constant incidence” model, when evaluating ILI+ forecasts from current week to 8-week ahead. We further develop an adaptively weighted ensemble model that combines forecasts from individual models and reduces the RMSE by 50% and 62% compared with the baseline model. Overall, more sophisticated ensemble methods provide a greater edge over baseline and individual models at longer time horizons.

Influenza seasonality in Hong Kong is irregular, with inconsistent outbreak onset times and potential for a second outbreak in summer, unlike in other regions such as the US and Europe. Consequently, we generate nowcasts and forecasts throughout the year, using various types of statistical, machine learning, and deep learning models. Compared to the baseline, the improvement in accuracy for these models in nowcasts is not readily apparent; however, it becomes more evident when the forecasting horizon is longer. We also find that different approaches are particularly well-skilled for different stages of an epidemic, or for summer/winter epidemics. We investigate the feature importance (Fig. 5) for individual models and the differences in learning capabilities concerning features. Most machine learning and deep learning approaches allow different types and orders of lagged variables to contribute to some extent, particularly for long-term forecasting, indicating the capability to capture the complex relationships between predictor factors and ILI+. These suggest that model structure and learning capability variations may result in performance differences across seasons or epidemic phases, motivating the need for ensemble approaches that can integrate skills from various models.

We examine the SAE that assigning same model weight to the top two models, and NBE that allows for different model weights, but these models could not improve the prediction performance compared to best individual model. This may suggest that using all historical data with equal weight in the estimation of model weights in ensemble may reduce the sensitivity to capture the rapid change of ILI+ dynamics. This motivates the use of adaptive weight ensemble, which assigns higher weights to the most recent data points in estimating model weights to better capture the dynamics. Employing this approach, the accuracy in the AWAE and AWBE could improve the accuracy substantially, reducing RMSE by 41% and 52% compared to the baseline. It should be noted that the decay rate parameters in AWAE and AWBE were selected by a simple grid search. There may be better ways to select this parameter, such as the expectation-maximization algorithm in Ray et al.35, which is worth exploration. Without retraining of using post-COVID data, AWAE and AWBE models could still reduce the RMSE by 27% and 39% compared to baseline in March 2023 to February 2024, after 3-year of COVID-19 control measures in Hong Kong so that influenza activity was zero from 2020 to 2022. The slightly reduced performance may be due to the loss of population immunity against influenza for 3 years34,36 and potential virus interference between influenza and SARS-CoV-237,38. Acquiring more post-COVID era data would likely enhance forecasting performance.

Contrary to the well-established influenza forecasting activity in regions with stable seasonality in winter like the US, there is limited forecasting research in subtropical regions like Hong Kong. To our knowledge, ours is the first study that attempted to forecast influenza activity at 0–8 week time horizons. Compared to a previous study that used a mechanistic model in Hong Kong13, our AWBE model has a comparable performance in predicting the peak timing and occurrence of epidemic, but a much higher accuracy in predicting peak magnitude (Figs. S10 and S11). It should be noted that our models are trained based on minimizing RMSE, and therefore, prediction targets related to values would perform better than timing, and also explained why there was no improvement in predicting the peak timing.

Our systematic evaluation of various forecasting approaches has implications for advancing the science of infectious disease forecast. Regarding the probabilistic forecast of machine learning and deep learning, we test a commonly used approach ‘Monte Carlo dropout’, but it worsens the accuracy of point forecast, in line with previous studies39,40. Motivated by a previous approach32, we use the training data to determine the optimal week number for the rolling window to compute the SD to generate the probabilistic forecast, and this approach could reduce the WIS by 42% and 53% for AWAE and AWBE ensemble model. Regarding the ensemble method, we use simply a grid search to find the optimal value of decay rate (lambda) based on the training data in the AWAE and AWBE ensemble model (Figs. S8 and S9). In the future, it may be possible that this parameter could be estimated, such as using the expectation-maximization algorithm in Ray et al.35. Regarding the number of models in the ensemble forecast, we find that this optimal number is two in our study. However, we propose that it may differ according to evaluation metrics, numbers and types of individual models, so the optimal number of models in an ensemble can vary for each forecasting task, despite previous analyses suggesting four as the most optimal value in the United States41.

Our adaptive weight ensemble framework aims to address the irregular seasonality of influenza in Hong Kong, enabling forecasting tasks to be conducted every week throughout the year, rather than during a pre-defined “epidemic period.” This approach enhances the model’s ability to capture rapid changes through adaptive weighting. While our framework is designed with influenza with irregular seasonality in mind, it can be applied to other diseases or regions with regular seasonality, provided there is sufficient data to train the model (at least seven years in our study). Some parameter tuning may be necessary to adapt to the specific properties of the disease and region, particularly the decay rate parameter for adaptive weight ensemble models. The individual models in our study can feasibly incorporate predictors that are informative for specific diseases. Furthermore, our ensemble forecast models can accommodate new models with strong performance, as there are no restrictions on the individual models. In the future, this approach could be extended to address other diseases or regions with regular seasonality, provided that adequate data is available for model training.

Our study has some limitations. Our retrospective study may tend to overestimate the performance of the forecasting models, compared to real-time forecasting. We do not consider issues such as the holiday-related delay of data release, or sporadic data revisions or corrections. Besides these limitations, we mimic real-time analysis by strictly utilizing data available at the week of forecast to avoid hindsight bias. Second, we use regression coefficient, feature importance or the saliency maps to determine the importance of predictors for various types of models. Therefore, results from different comparison methods may not be directly comparable. Third, due to high computational cost, we only retrain machine learning and deep learning model yearly. Although we find similar accuracy of weekly and yearly retraining, we cannot rule out other approaches that could enhance the performance of weekly retraining. Fourth, the reliability of our forecasting framework depends on the stability of the influenza surveillance system in Hong Kong (i.e., no change in the data-generating process of the prediction target). While there are no documented changes except for 2009 influenza pandemic outbreak, undocumented change may impact forecast accuracy. Finally, ensemble models may require higher computational costs compared to individual models; however, our methods can be executed in parallel, as they can be trained independently. On the other hand, the interactions of predictions from different individual models are not considered during their training phase but only in the construction of the ensemble model. This may result in a lack of diversity in the prediction performance of individual models, as evidenced by the fact that the simple average ensemble model could not improve the prediction performance compared to the best individual model. In the future, exploring methods to train these models concurrently while considering their interactions may enhance their performance.

In conclusion, we systematically evaluate and analyze individual and ensemble models, and develop an adaptive ensemble approach in Hong Kong, a region with irregular influenza seasonality. Our results demonstrate this approach can halve the forecast error compared to a baseline model, in 0–8 week ahead forecast. Our approach is feasible for comparisons and benchmarking of different prediction models, and integration of newly developed models in ensemble forecast, making them applicable to other infectious diseases in geographies with irregular seasonality.

Methods

Data on influenza virus activity in community

In Hong Kong, influenza activity in the general community is monitored through a sentinel surveillance network of outpatient clinics, which report the number of patients with influenza-like illness (ILI) weekly. ILI is defined as the presence of fever >37.8 °C, accompanied by cough or sore throat. Weekly outpatient consultation rates for ILI per 10,000 consultations are reported. The public health laboratory gathers data on the weekly proportion of specimens from sentinel outpatient clinics and local hospitals testing positive for influenza virus. We generated a proxy measure of influenza activity (ILI+), calculated as the weekly rates of outpatients with ILI multiplied by the weekly proportion of laboratory specimens positive for influenza virus, irrespective of subtype. We previously reported that this proxy offered a reliable indication of community-wide virus infection incidence based on hospital admissions42.

We focus on the period from 1998 to 2019, before the outbreak of COVID-19. In 2009, the sentinel surveillance system was affected by the establishment of special Designated Flu Clinics (not part of the sentinel network)23 (Fig. 1). Therefore, the ILI+ value in that period is inflated and we exclude the data from Apr, 2009 to Mar, 2010 in our analyses. A post-COVID period (March 2023–January 2024) is also included as another testing period.

Overview of the modeling approach

We develop a framework to forecast weekly ILI+ up to 8 weeks ahead. Due to a one-week delay in ILI+ data availability, the most recent data accessible in the current week t is ILI+ at week t−1. Consequently, we generate forecasts for all weeks from t to t + 8. We initially develop various statistical models to forecast ILI+ and subsequently combine these individual forecasts into a single ensemble forecast using different aggregation approaches. Our study is divided into a training period (January 1998 to October 2007) and a test period (November 2007 to July 2019). Models are fitted during the training period, and hyperparameters are tuned based on performance within the same period. We then conduct individual forecasting and model ensembles on a rolling basis throughout the test period and evaluate the performance of both individual and ensemble models.

Individual forecasting models

In this article, we have established a total of 8 individual models, including the ARIMA, GARCH, RF24, XGB25, LSTM26, GRU27, a TSTPlus28 and an ensemble of deep CNN models, which is called InceptionTime Plus Model29 (Full details in appendix). We added a persistence model as a null baseline, in which the values of 0–8-week ahead forecast of ILI+ in week t are the same as the ILI+ value of week t−19,32.

For each individual model, we include a set of predictors that may inform the trajectory of ILI+. We consider two types of predictors: epidemiological predictors, including the week number and month of the year, which may capture influenza seasonality, and prior ILI+ values from week t−1 to t−14; and 8 meteorological predictors, including the maximum and minimum values of weekly temperature, the range of weekly temperature, absolute and relative humidity, rainfall, solar radiation, wind speed and atmospheric pressure. Meteorological predictors are included since prior studies reported their association with influenza incidence43,44,45,46,47.

Before model fitting, we conduct a covariate selection for 8 meteorological predictors using Pearson’s correlation between each predictor and ILI+, selecting 5 meteorological predictors with the highest correlations: the maximum and minimum of temperature, the relative humidity, total rainfall, and solar radiation. At each week t, the best lag is automatically selected for each predictor and model based on the Pearson correlation analysis between ILI+ data and the predictors at varying lags. Considering the differences in learning capabilities among models, the ARIMA and GARCH models only have a single predictor \({X}_{t-k}\) with the optimal lag k for each selected predictor, while the RF and XGB models have the predictors from lag 1 to the optimal lags (\({X}_{t-1},\,{X}_{t-2},\,\cdots,\,{X}_{t-k}\)). For deep learning model, lag 1 to 14 is included for each select predictor. Additionally, influenza seasonality in Hong Kong, characterized by winter and summer peaks48, is accounted for by including week number and month of the year by default in all models.

Model evaluation

For each week t in the test period, several evaluation metrics are computed using observed data from t to t + 8. Model performance is primarily evaluated using RMSE49, widely employed in previous literature. Furthermore, we use the SMAPE, a variant of the MAPE with added symmetries to eliminate skewness and improve resistance to outliers, making it more suitable when ILI+ values are near zero50. We also provide MAE and MAPE for reference. Regarding probabilistic forecast, we employ weighted interval score (WIS) as the evaluation metric. WIS is a proper score combining a set of interval scores for probabilistic forecasts that provide quantiles of the predictive forecast distribution (Supplementary method 3.2). It measures how closely the entire distribution aligns with the observation51.

To evaluate the performance of interval prediction, we initially employed Monte Carlo Dropout (MCDropout), the most commonly used interval prediction method for deep learning39,40. However, we found that MCDropout may reduce the accuracy of point forecast (Fig. S2). Therefore, we adopted an interval prediction method in Aiken et al.32, assuming predictions follow normal distributions characterized by a mean of \(\hat{y}\) (the point forecast) and a standard deviation of \(\sigma\). The method for calculating \(\sigma\) is as follows: For a fixed calculation window length \(k\) and a given week \(t\), we first compute the out-of-sample prediction and prediction errors (\(\beta=\,\hat{y}-y\)) for the historical \(k\) weeks, denoted as \({\beta }_{t-k-1},{\beta }_{t-k},\cdots,{\beta }_{t-1}\), and then calculate the standard deviation \(\sigma\) based on them. Instead of setting k = 20 as in Aiken et al., we use the training data to determine the optimal k. We select the optimal k for each model and each prediction horizon by first testing \(k\) from 5–50 and then calculating the 90% coverage rate for each k. Based on this, we obtain the optimal window length for each prediction horizon and for each model, by selecting the k with the corresponding 90% coverage rate closest to 90%. We then apply these optimal window lengths to the testing period.

We also evaluate model performance by winter and summer seasons, and by epidemic trends. We define the trends to be growth (increasing for three consecutive weeks), decline (decreasing for three consecutive weeks) and plateau (the weeks between adjacent growth and decline phases) (Fig. 4).

Model ensemble

Ensemble models combine forecasts from individual models into a single forecast, by assigning weights to each individual forecast. Given a set of m models, denote \({\hat{Y}}_{t}^{i}\) as the forecast of model \(i\) at week \(t\) and \({w}_{i}^{t}\) the ensemble coefficient for model \(i\) at week \(t\), then the ensemble forecast \({\hat{Y}}_{t}\) is:

We test the following approaches to determine the weights: (1) SAE model: The model selects the top two models based on RMSE up to t−1, and then take the unweighted mean of the individual forecast of these 3 models9 (\({w}_{t}^{i}\) = 1/3 if model i is selected and 0 otherwise). 2) NBE model: This model fits a LASSO regression of observed ILI+ up to week t−1 on predicted ILI+ from different models to obtain coefficients to generate ensemble forecast for week t52. Therefore, the weights of individual models in NBE are not constrained to be sum to one and are allowed to be negative.

We introduce adaptive weighting on the past ILI+ value, with higher weights for observations closer to the date of the forecast. We use the exponential time decay function to weight past ILI+ values to generate an ensemble forecast. For week t, the weight of the ILI+ of week t–k is:

We use grid search to select the optimal value of lambda based on the RMSE in training period (Appendix Section 1.4). We apply these weights in the simple averaging ensemble when we compute the RMSE to select the best three models. This approach is called the AWAE model. We also use a similar approach for the blending ensemble, applying these weights when fitting the LASSO regression. This approach is called the AWBE model.

Implementation

The entire experiment was conducted on a Windows system equipped with a 12th-generation Intel i7 CPU processor and an Intel UHD Graphics 770 graphics card, ensuring a fair comparison of model running times. Statistical analyses were performed using Python version 3.9 and R version 4.0.5 (R Foundation for Statistical Computing, Vienna, Austria).

Model performance in prediction targets other than 0–8 weeks ahead forecast

We also test 3 more prediction targets, including occurrence of epidemic, peak magnitude and peak timing and adopt an evaluation approach in Yang et al.13. We evaluate the prediction on occurrence of epidemic in coming 0–8 week, according to the definition of epidemic in above. Since it is a classification problem, we use accuracy, TPR, SPC, PPV, and NPV indicators to evaluate performance.

Regarding peak timing and peak magnitude, given that our maximum prediction horizon is only 8 weeks, the peak of the predicted curve could be censored, i.e. predicting a strict increasing trend from 0 to 8 weeks, but the actual peak may be later than 8 weeks. Therefore, we only consider the model’s predictive performance 0–6 weeks ahead of the outbreak peak. We use the two evaluation criteria (strict and looser). Under the strict criterion, a prediction is considered accurate if the predicted peak week matches the observed result exactly, and if the predicted peak amplitude falls within ±20% of the observed ILI+ value. For the looser standard, predictions of the outbreak’s occurrence or peak within ±1 week of observation, and predictions of the outbreak’s peak amplitude within ±50% of observation are all considered accurate.

Feature importance

For interpretability and better understanding of the role of each predictor, we analyze the feature importance of individual models. Specifically, we obtain regression coefficients for each variable for regression-based model (ARIMA and GARCH) and feature importance for machine learning model (RF and XGB)53. For deep learning models, we use saliency maps for LSTM and GRU54 and permutation importance for transformer-based approach TSTPlus and InTimePlus55,56. As models are trained dynamically, the feature importance in each model may change from week to week. We normalize the importance data and take an average for the entire study period. Figure 5 compares the average feature importance of different predictors and models with different lag order for ILI+ nowcast, and 4 weeks and 8 weeks ahead forecast.

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.

Data availability

All the data used in the analysis is available at https://github.com/timktsang/hk_flu_adaptive_forecast.

Code availability

All codes are available at https://github.com/timktsang/hk_flu_adaptive_forecast.

References

Iuliano, A. D. et al. Estimates of global seasonal influenza-associated respiratory mortality: a modelling study. Lancet 391, 1285–1300 (2018).

Center for Disease Control and Prevention. https://www.cdc.gov/flu/weekly/flusight/how-flu-forecasting.htm#:~:text=Flu%20forecasts%20can%20be%20used,future%20flu%20pandemics%20is%20possible (2024).

Center for Health Protection. https://www.chp.gov.hk/en/index.html (2024).

Reich, N. G. et al. A collaborative multiyear, multimodel assessment of seasonal influenza forecasting in the United States. Proc. Natl Acad. Sci. USA 116, 3146–3154 (2019).

Oidtman, R. J. et al. Trade-offs between individual and ensemble forecasts of an emerging infectious disease. Nat. Commun. 12, 5379 (2021).

Osthus, D. & Moran, K. R. Multiscale influenza forecasting. Nat. Commun. 12, 2991 (2021).

Rodriguez, A. et al. DeepCOVID: an operational deep learning-driven framework for explainable real-time COVID-19 forecasting. In Proceedings of the AAAI Conference on Artificial Intelligence. 15393–15400 (2021).

Meakin, S. et al. Comparative assessment of methods for short-term forecasts of COVID-19 hospital admissions in England at the local level. BMC Med. 20, 1–15 (2022).

Paireau, J. et al. An ensemble model based on early predictors to forecast COVID-19 health care demand in France. Proc. Natl Acad. Sci. 119, e2103302119 (2022).

Amendolara, A. B., Sant, D., Rotstein, H. G. & Fortune, E. LSTM-based recurrent neural network provides effective short term flu forecasting. BMC Public Health 23, 1788 (2023).

Wong, C.-M., Chan, K.-P., Hedley, A. J. & Peiris, J. M. Influenza-associated mortality in Hong Kong. Clin. Infect. Dis. 39, 1611–1617 (2004).

Cowling, B. J., Wong, I. O., Ho, L.-M., Riley, S. & Leung, G. M. Methods for monitoring influenza surveillance data. Int. J. Epidemiol. 35, 1314–1321 (2006).

Yang, W., Cowling, B. J., Lau, E. H. & Shaman, J. Forecasting influenza epidemics in Hong Kong. PLoS Comput. Biol. 11, e1004383 (2015).

Bandara, K., Bergmeir, C. & Hewamalage, H. LSTM-MSNet: leveraging forecasts on sets of related time series with multiple seasonal patterns. IEEE Trans. neural Netw. Learn. Syst. 32, 1586–1599 (2020).

Jiang, Z., Sainju, A. M., Li, Y., Shekhar, S. & Knight, J. Spatial ensemble learning for heterogeneous geographic data with class ambiguity. ACM Trans. Intell. Syst. Technol. 10, 1–25 (2019).

McAndrew, T. & Reich, N. G. Adaptively stacking ensembles for influenza forecasting with incomplete data. arXiv https://www.arxiv.org/abs/1908.01675 (2019).

Cramer, E. Y. et al. Evaluation of individual and ensemble probabilistic forecasts of COVID-19 mortality in the United States. Proc. Natl. Acad. Sci. USA 119, e2113561119 (2022).

Yao, J., Zhang, X., Luo, W., Liu, C. & Ren, L. Applications of stacking/blending ensemble learning approaches for evaluating flash flood susceptibility. Int. J. Appl. Earth Obs. Geoinf. 112, 102932 (2022).

Zhu, B., Qian, C., vanden Broucke, S., Xiao, J. & Li, Y. A bagging-based selective ensemble model for churn prediction on imbalanced data. Expert Syst. Appl. 227, 120223 (2023).

Wang, J. & Song, G. A deep spatial-temporal ensemble model for air quality prediction. Neurocomputing 314, 198–206 (2018).

Huo, Z., Wang, L. & Huang, Y. Predicting carbonation depth of concrete using a hybrid ensemble model. J. Build. Eng. 76, 107320 (2023).

Wu, P. et al. Excess mortality associated with influenza A and B virus in Hong Kong, 1998–2009. J. Infect. Dis. 206, 1862–1871 (2012).

Tsang, T. K. et al. Interpreting seroepidemiologic studies of influenza in a context of nonbracketing sera. Epidemiology 27, 152–158 (2016).

Breiman, L. Random forests. Mach. Learn. 45, 5–32 (2001).

Chen, T. & Guestrin, C. XGBoost: a scalable tree boosting system. In: Proceedings of the 22nd acm sigkdd international conference on knowledge discovery and data mining, 785–794 (2016).

Hochreiter, S. & Schmidhuber, J. Long short-term memory. Neural Comput. 9, 1735–1780 (1997).

Chung, J., Gulcehre, C., Cho, K. & Bengio, Y. Empirical evaluation of gated recurrent neural networks on sequence modeling. arXiv https://arxiv.org/abs/1412.3555 (2014).

Zerveas, G., Jayaraman, S., Patel, D., Bhamidipaty, A. & Eickhoff, C. A Transformer-Based Framework for Multivariate Time Series Representation Learning. In: Proceedings of the 27th ACM SIGKDD conference on knowledge discovery & data mining. 2114–2124 (2020).

Ismail Fawaz, H. et al. Inceptiontime: finding alexnet for time series classification. Data Min. Knowl. Discov. 34, 1936–1962 (2020).

Hwang, Y., Clark, A. J., Lakshmanan, V. & Koch, S. E. Improved nowcasts by blending extrapolation and model forecasts. Weather Forecast. 30, 1201–1217 (2015).

Wu, T., Zhang, W., Jiao, X., Guo, W. & Alhaj Hamoud, Y. Evaluation of stacking and blending ensemble learning methods for estimating daily reference evapotranspiration. Comput. Electron. Agric. 184, 106039 (2021).

Aiken, E. L., Nguyen, A. T., Viboud, C. & Santillana, M. Toward the use of neural networks for influenza prediction at multiple spatial resolutions. Sci. Adv. 7, eabb1237 (2021).

Cowling, B. J. et al. Impact assessment of non-pharmaceutical interventions against coronavirus disease 2019 and influenza in Hong Kong: an observational study. Lancet Public Health 5, e279–e288 (2020).

Xiong, W., Cowling, B. J. & Tsang, T. K. Influenza resurgence after relaxation of public health and social measures, Hong Kong, 2023. Emerg. Infect. Dis. 29, 2556–2559 (2023).

Ray, E. L. & Reich, N. G. Prediction of infectious disease epidemics via weighted density ensembles. PLoS Comput. Biol. 14, e1005910 (2018).

Ali, S. T. et al. Prediction of upcoming global infection burden of influenza seasons after relaxation of public health and social measures during the COVID-19 pandemic: a modelling study. Lancet Glob. Health 10, e1612–e1622 (2022).

Jones, N. How COVID-19 is changing the cold and flu season. Nature 588, 388–390 (2020).

Piret, J. & Boivin, G. Viral interference between respiratory viruses. Emerg. Infect. Dis. 28, 273–281 (2022).

Gal, Y. & Ghahramani, Z. Dropout as a Bayesian approximation: representing model uncertainty in deep learning. In: Proceedings of The 33rd International Conference on Machine Learning 48, 1050–1059 (2016).

Zhang, J., Phoon, K. K., Zhang, D., Huang, H. & Tang, C. Deep learning-based evaluation of factor of safety with confidence interval for tunnel deformation in spatially variable soil. J. Rock. Mech. Geotech. Eng. 13, 1358–1367 (2021).

Fox, S. J., Kim, M., Meyers, L. A., Reich, N. G. & Ray, E. L. Optimizing the number of models included in outbreak forecasting ensembles. medRxiv, https://doi.org/10.1101/2024.01.05.24300909 (2024).

Wong, J. Y. et al. Infection fatality risk of the pandemic A(H1N1)2009 virus in Hong Kong. Am. J. Epidemiol. 177, 834–840 (2013).

Chan, P. K. et al. Seasonal influenza activity in Hong Kong and its association with meteorological variations. J. Med. Virol. 81, 1797–1806 (2009).

Tang, J. W., Lai, F. Y. L., Wong, F. & Hon, K. L. E. Incidence of common respiratory viral infections related to climate factors in hospitalized children in Hong Kong. Epidemiol. Infect. 138, 226–235 (2010).

Chong, K. C., Goggins, W., Zee, B. C. Y. & Wang, M. H. Identifying meteorological drivers for the seasonal variations of influenza infections in a subtropical city — Hong Kong. Int. J. Environ. Res. Public Health 12, 1560–1576 (2015).

Li, Y., Wang, X.-L. & Zheng, X. Impact of weather factors on influenza hospitalization across different age groups in subtropical Hong Kong. Int. J. Biometeorol. 62, 1615–1624 (2018).

Ali, S. T. et al. Influenza seasonality and its environmental driving factors in mainland China and Hong Kong. Sci. Total Environ. 818, 151724 (2022).

Yang, W., Lau, E. H. Y. & Cowling, B. J. Dynamic interactions of influenza viruses in Hong Kong during 1998–2018. PLoS Comput. Biol. 16, e1007989 (2020).

Kolassa, S. Why the “best” point forecast depends on the error or accuracy measure. Int. J. Forecast. 36, 208–211 (2020).

Bhardwaj, R. & Bangia, A. Data driven estimation of novel COVID-19 transmission risks through hybrid soft-computing techniques. Chaos Soliton. Fract. 140, 110152 (2020).

Bracher, J., Ray, E. L., Gneiting, T. & Reich, N. G. Evaluating epidemic forecasts in an interval format. PLoS Comput. Biol. 17, e1008618 (2021).

Diebold, F. X. & Shin, M. Machine learning for regularized survey forecast combination: Partially-egalitarian Lasso and its derivatives. Int. J. Forecast. 35, 1679–1691 (2019).

Gehrke, J. In: Encyclopedia of data warehousing and mining 141–143 (IGI global, 2005).

Simonyan, K., Vedaldi, A. & Zisserman, A. Deep inside convolutional networks: Visualising image classification models and saliency maps. arXiv https://arxiv.org/abs/1312.6034 (2013).

Altmann, A., Toloşi, L., Sander, O. & Lengauer, T. Permutation importance: a corrected feature importance measure. Bioinformatics 26, 1340–1347 (2010).

Kaneko, H. Cross‐validated permutation feature importance considering correlation between features. Anal. Sci. Adv. 3, 278–287 (2022).

Acknowledgements

The authors thank Yishen Liu for technical assistance. This project was supported by the Theme-based Research Scheme (Project No. T11-712/19-N) of the Research Grants Council of the Hong Kong SAR Government. TKT is supported by the Health and Medical Research Fund, Food and Health Bureau, Government of the Hong Kong Special Administrative Region (grant no. 21200292). BJC is supported by the AIR@innoHK program of the Innovation and Technology Commission of the Hong Kong SAR Government.

Author information

Authors and Affiliations

Contributions

T.K.T., B.J.C, and C.V. designed research; T.K.T. and Q.D. performed research; Q.D. and T.K.T. contributed new analytic tools; T.K.T., Q.D. analyzed data; and T.K.T., Q.D., B.J.C. and C.V. wrote the paper.

Corresponding author

Ethics declarations

Competing interests

B.J.C. reports honoraria from AstraZeneca, Fosun Pharma, GSK, Haleon, Moderna, Pfizer, Roche and Sanofi Pasteur. All other authors report no other potential conflicts of interest.

Peer review

Peer review information

Nature Communications thanks the anonymous reviewers for their contribution to the peer review of this work. A peer review file is available.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Tsang, T.K., Du, Q., Cowling, B.J. et al. An adaptive weight ensemble approach to forecast influenza activity in an irregular seasonality context. Nat Commun 15, 8625 (2024). https://doi.org/10.1038/s41467-024-52504-1

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41467-024-52504-1

- Springer Nature Limited