Abstract

Functional interactions between brain regions can be viewed as a network, enabling neuroscientists to investigate brain function through network science. Here, we systematically evaluate 768 data-processing pipelines for network reconstruction from resting-state functional MRI, evaluating the effect of brain parcellation, connectivity definition, and global signal regression. Our criteria seek pipelines that minimise motion confounds and spurious test-retest discrepancies of network topology, while being sensitive to both inter-subject differences and experimental effects of interest. We reveal vast and systematic variability across pipelines’ suitability for functional connectomics. Inappropriate choice of data-processing pipeline can produce results that are not only misleading, but systematically so, with the majority of pipelines failing at least one criterion. However, a set of optimal pipelines consistently satisfy all criteria across different datasets, spanning minutes, weeks, and months. We provide a full breakdown of each pipeline’s performance across criteria and datasets, to inform future best practices in functional connectomics.

Similar content being viewed by others

Introduction

The human brain is a remarkably complex system, comprising a large number of regions interacting over time. To address this challenge and obtain insights about distributed brain function and dysfunction, neuroscientists have turned to network science, whereby different parts of the brain can be viewed as nodes in a network, and the statistical relationships between them are used to represent connections between nodes1,2,3,4,5. This powerful approach uses graph theory to quantify key aspects of brain network organisation in vivo, illuminating the neurobiological underpinnings of healthy and pathological cognition, behaviour and individual differences6,7,8,9,10,11,12. In particular, resting-state functional MRI (rs-fMRI) is a very popular imaging tool, due to its excellent spatial resolution and wide applicability13: being task-free, it can be easily administered even to challenging populations, from in-utero foetuses14 to severely injured and even unconscious patients15,16,17. Indeed, aberrant functional connectivity patterns have been observed in many neurological and psychiatric conditions18,19,20,21,22.

However, recent studies have highlighted how different analysis workflows can lead to sometimes drastically different conclusions about the same neuroimaging dataset23, owing to a vast pool of possible methodological choices which effectively constitute a combinatorial explosion problem24. Crucially, such a combinatorial explosion also plagues network analyses of the human brain: even beyond substantial differences introduced by data preprocessing and denoising procedures25,26, a wide variety of approaches have been proposed to derive brain networks from preprocessed functional neuroimaging data27,28. The very definition of nodes in brain networks is controversial: although fMRI voxels have no intrinsic biological meaning, it is well-established based on both functional involvement and lesion studies but also cellular, molecular, and fibre architecture that the brain exhibits biologically meaningful regional organisation, such that voxels can be grouped together into anatomically distinct areas24,29,30,31. However, there is yet no consensus on the most appropriate parcellation of the human brain, or the number and spatial extent of brain regions, or whether they should be discrete or overlapping, spatially contiguous or discontiguous24,30,32,33,34. Similar difficulties arise for the definition of functional connections (edges) between nodes: how to quantify them, which ones to retain for analysis, and whether to emphasise the presence/absence of connections (binary network) or their relative strength (weighted network)21,27, highlighting the intricacies of this issue32. This challenge has practical consequences: even with high-quality data, a poor choice of network construction pipeline may produce misleading conclusions about neurobiology and functional organisation, and possibly misinform biomarker discovery and clinical practice. Thus, to ensure the value of graph-based estimates as clinical biomarkers, it is of paramount priority to establish what is the most appropriate way to construct a functional brain network from rs-fMRI data.

Reliability of network topology is of fundamental importance for any subsequent analysis of network properties35: any pipelines that recover vastly different topologies from two scans of the same individual taken within the same hour, are liable to produce misleading results when used to associate network properties with behavioural traits13 or clinical outcomes36. Thus, identification of reliable network construction pipelines represents a fundamental prerequisite for both network-based investigation of individual differences using functional neuroimaging37,38 and subsequent efforts aimed at clinical translation39. Existing scientific work comparing different network construction steps typically focused on specific global or local network properties (e.g., modularity, small-world character, global or local efficiency, down to individual edges35) and evaluated the different alternatives by maximising the intra-class correlation of the adopted global or local network properties30,35,40,41,42,43,44,45,46,47,48,49,50.

However, these approaches both have limitations. On the one hand, focusing on local aspects (individual edges, node-level properties) runs the risk of “missing the forest for the trees”46, because networks are more than just collections of edges: rather, the way that edges are organised gives rise to micro-, meso- and macro-scale structure, which is precisely what makes network-based approaches so powerful. On the other hand, focusing on specific high-level properties of the network will inevitably limit the generalisability of results, because a vast and ever-growing array of network properties can be defined and used to obtain insights about brain function51,52, but there is no guarantee that recommendations pertaining to one will also apply to others.

In the present study, we introduce a framework to explicitly address and tame the combinatorial explosion. First, we evaluate network construction pipelines end-to-end, rather than restricting our attention to individual steps in isolation, as most previous studies have done. Second, we base our evaluation on the network’s topology, that is, the network’s organisation as a whole. For this purpose, we take advantage of the recently introduced “Portrait divergence” (PDiv) measure of dissimilarity between networks53. This information-theoretic measure simultaneously takes into account all scales of organisation within a network, from local structure to motifs to large-scale connectivity patterns. Therefore, it incorporates all aspects of network topology, enabling us to go beyond the use of specific and arbitrarily-chosen graph-theoretical properties.

Third, test–retest reliability is a necessary but arguably not sufficient condition for a pipeline to be suitable for functional connectomics54. In particular, test–retest reliability would be of limited value if it were driven simply by constant but unimportant features. Rather, a suitable pipeline should also be able to detect meaningful experimental differences, when such exist. Therefore, we seek to identify network construction pipelines that minimise spurious (noise- or motion-induced) differences between brain networks of the same individual across repeated scan sessions, but that also satisfy additional criteria of biological relevance: sensitivity to individual differences, clinical contrasts of interest and experimental manipulations - here operationalised by pharmacological intervention with the general anaesthetic propofol. Fourth, to ensure the generalisability of our results36, each pipeline is evaluated across two independent test–retest datasets, spanning short (45 minutes), medium (2–4 weeks) and long-term delays (5-16 months). Our focus here is not on preprocessing/denoising approaches to fMRI data (where a vast literature exists55,56,57,58), but rather on the workflow that begins with preprocessed fMRI data and results in a brain network. However, to ensure that our recommendations can be further generalised to datasets acquired with different scanning parameters and preprocessed with different methods, we also require that optimal pipelines should meet all the above-mentioned criteria in an additional independent dataset (test–retest dataset from the Human Connectome Project), which was acquired with higher spatial (2 mm) and temporal resolution (TR = 0.72 s) than the other datasets; preprocessed using a surface-based rather than volume-based workflow; and denoised with a different method than the anatomical CompCor used for our main datasets (FIX-ICA, which is designed to affect artifacts specifically and avoid modifying the neural signal of interest)55,59,60.

Through this comprehensive, multi-criterion approach, we compare the topologies of functional brain networks obtained from systematic combinations of different options at each step in the network construction process. (i) First, given our interest in robustness and generalisability, we conduct all our analyses on two versions of the same data: with versus without the controversial preprocessing step of global signal regression (GSR)61. This allows us to make recommendations that are specific for GSR-processed data, or for non-GSR-processed data, as well as identifying network processing pipelines that are suitable for both. (ii) Definition of network nodes: from discrete parcellations of spatially circumscribed regions-of-interest based on anatomical landmarks, or functional characteristics (combination of local homogeneity and global gradients of connectivity, from combined resting-state and task-based fMRI), or multimodal structural and functional MRI features accounting for cortical myeloarchitecture, functional activation, connectivity and topography; or from continuous, spatially overlapping maps from spatial Independent Components Analysis24,29. (ii) Number of nodes: approximately 100, 200 or 300-400, for each type of parcellation. (iv) Two different ways to define network edges from BOLD time-series: Pearson correlation or mutual information. (v) Eight different approaches to filter the network’s edges: by imposing a pre-specified density (retaining 5%, 10%, or 20% of total edges, or matching the density of the structural connectome), or imposing a pre-specified minimum edge weight (0.3 or 0.5), or using data-driven methods (Efficiency Cost Optimisation, ECO and Orthogonal Minimum Spanning Trees, OMST, two different strategies to define and then optimise the balance between network efficiency and wiring cost)62,63,64. (vi) Use of either binary or weighted networks. Figure 1 illustrates the set of choices across the investigated network construction steps that influence the construction of a functional brain network, yielding a total set of 768 pipelines (2 × 4 × 3 × 2 × 8 × 2). We assess each of these pipelines across 18 distinct combinations of criteria and datasets, yielding a total of 13,824 unique evaluations, which we make available to the reader through an interactive Pipeline Selection Tool (Supplementary Data 2).

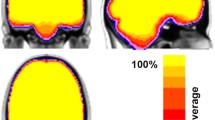

Starting from preprocessed and denoised data, the following steps are involved. (i) Use of data with vs without global signal regression (GSR), in addition to other denoising protocol (aCompCor for NYU-short, NYU-long and Cambridge datasets; FIX-ICA for HCP); (ii) Definition of nodes (based on anatomical features, local and global functional characteristics, or multimodal features; or Independent Components Analysis); (iii) Choice of number of nodes (approximately 100, 200, or 400); (iv) Definition of connectivity measure (from Pearson correlation or mutual information); (v) Choice of edges to retain (8 filtering schemes considered, based on a priori choices of network density, or minimum edge weight, or data-driven strategies to optimise the balance between network efficiency and wiring cost), (vi) Use of binary or weighted edges. In total, we consider 2 × 4 × 3 × 2 × 8 × 2 = 768 unique pipelines. For each pipeline, the resulting functional networks are compared for the same subject across different time-spans (minutes, weeks, or months) using the Portrait Divergence. A network portrait for a binary network is a matrix B whose rows each correspond to a histogram obtained by thresholding the matrix of shortest paths between the networks’s constituent nodes, at each path length l between 0 and the network’s diameter L, such that entry Bl,k encodes the number of nodes that have k nodes at distance l. For weighted networks, the histogram is obtained by binning (see Methods). Illustration of parcellations adapted from refs. 34 and 44; illustration of Portrait Divergence adapted from ref. 53.

Overall, a strength of our current study is our ability to make recommendations for the choice of pipelines end-to-end, not only on the basis of theoretical gold standard metrics (test–retest) but also on the basis of practical relevance: meaningful inference about changes in brain functional network topology and individual differences, and robustness and generalisability. To anticipate our main findings, we discovered large and systematic variability among pipelines’ ability to recover a reliable network topology, with the majority of pipelines failing to meet at least one criterion. Choice of an inappropriate network construction pipeline can lead to results that are not only misleading (statistically significant in the opposite direction as the true effect), but replicably so (being observed in two independent datasets). While our results show that an uninformed choice of pipeline will likely be suboptimal, we also identified a number of pipelines that satisfy all our criteria, in all four test–retest comparisons, making them suitable candidates for functional connectomics and biomarker discovery. Through this multi-dataset, multi-criteria, multi-scale and multi-step approach, we provide a comprehensive set of benchmarks for trustworthy functional connectomics.

Results

We used an information-theoretic measure of distance between network topologies across scales, termed Portrait divergence (PDiv), to systematically compare 768 alternative network construction pipelines in terms of their ability to recover similar brain network topologies from functional MRI scans of the same individual across minutes (NYU dataset, same-session scans), weeks (Cambridge dataset), or months (NYU dataset, between-sessions comparison) (see ”Methods” section and Figs. S1–2 for examples of network portraits and their divergence). Additionally, we considered an additional dataset (HCP test–retest) that was acquired with higher spatial (2 mm isotropic) and temporal resolution (0.72 s TR); with longer duration (1200 volumes); denoised using FIX-ICA instead of aCompCor; and parcellated on the surface rather than in volumetric space, as for the other datasets65,66,67. Our end-to-end approach allowed us to simultaneously assess the effects of parcellation type and number of nodes; connectivity quantification, thresholding and binarisation; and global signal regression; while ensuring robustness to aspects such as acquisition, time between test and retest and denoising method.

Being grounded in information theory, the Portrait divergence between two networks can be interpreted as measuring how much information is lost when using one network to represent another: it ranges from 0 (no information loss) to 1 (complete information loss)53.

To identify suitable pipelines, we required each of the following criteria to be met:

-

Criterion (I): Avoiding spurious differences (“PDiv ranking”). Since the two networks that we consider are derived from different scans of the same healthy individuals under conditions in which no experimentally meaningful changes in functional network topology are expected, we aim to identify pipelines that minimise test–retest PDiv. We consider pipelines as candidates for optimal if they are in the top 20% in terms of the average PDiv rank calculated across all test–retest intervals.

-

Criterion (II): Detecting true experimental differences (“propofol”). Suitable pipelines should detect a significant effect for propofol, in the right direction, in both propofol datasets, i.e., a pipeline is excluded if it fails to detect the expected effect (greater change between wakefulness and anaesthesia than between two awake scans) in either of the two propofol datasets.

-

Criterion (III): Detecting inter-individual differences (“within-between”). A pipeline fails this criterion if the resulting networks are more similar between than within subjects more than 50% of the times, for any of the four test–retest datasets.

-

Criterion (IV): Avoiding motion-induced differences (“motion”). A pipeline fails this criterion if its PDiv has a significant correlation with differences in head motion in any of the four test–retest datasets.

-

Criterion (V): Non-empty networks. As a final check, we also exclude any pipelines that remove all connections from a network, in any of the four test–retest datasets.

These criteria also incorporate the need for recommendations to be generalisable across datasets and acquisition/preprocessing choices, since we only consider a criterion to be met if it is met in all the relevant datasets.

A summary of all pipeline characteristics can be found in the interactive Pipeline Selection Tool (Supplementary Data 2). We provide an Excel spreadsheet with an interactive table, including filters that allow selection based on multiple criteria at once to identify pipelines that adhere to the specific criteria desired by the reader. We encourage readers to view the interactive table concurrently with the results described below, as this will allow a closer inspection of associations between a pipeline’s specific network processing choices and the desirable properties described in each subsection of the Results. A user guide for the interactive Pipeline Selection Tool (Supplementary Data 2) is also included in the Supplementary Material.

Portrait Divergence identifies drastic and systematic variability across pipelines’ capacity to avoid spurious differences

For each dataset, Fig. 2 illustrates the distributions of group-mean test–retest similarities of network topologies (portrait divergence) across the full set of 768 pipelines (See Fig. S3-30 for the distribution of PDiv across pipelines, broken down by network construction step, for each dataset). Clearly, two patterns can be observed. First, network construction pipelines differ widely in how well they are able to recover the same network topology across different scans of the same individual, on average - whether on a timescale of minutes, weeks, or months. The worst pipelines induce a greater than five-fold increase in topological dissimilarity (PDiv) between functional connectomes of the same individual, compared with the best-performing ones.

From top to bottom: Cambridge dataset (rescan within 2–4 weeks). NYU short-term dataset (rescan within 45 minutes). NYU long-term dataset (rescan within 16 months; average 11.4); HCP dataset (rescan 1–11 months). Right-side: highlighting the top 5 (lowest PDiv) and bottom 5 performers (highest PDiv). Each data-point represents one pipeline (n = 768). Red lines mark 2 standard deviations from the mean of the distribution. Box plot centre line, median; box limits, upper and lower quartiles; whiskers, 1.5x interquartile range. GSR Global Signal Regression, OMST Orthogonal Minimal Spanning Trees, PDiv Portrait Divergence. Source data are provided as a Source Data file.

Second, our results indicate high consistency across the four test–retest comparisons considered here, in terms of which data-processing steps feature prominently among the pipelines that are best (and worst) at minimising the average within-subject PDiv. Correlation between all pipelines’ ranks across time intervals revealed very high consistency between all datasets (Spearman’s ρ ranging from 0.73 to 0.97, all p = 2.2 × 10−16 (Fig. 3), indicating that pipelines’ suitability for network construction is not dataset-specific but rather can generalise to independent groups of individuals - spanning time intervals from hours to months. We view a small PDiv in these datasets as a desirable property: even though learning and plasticity could account for some amount of connectome reorganisation over weeks or months in healthy adults, such factors cannot plausibly be expected to be the cause of any network-wide reorganisation observed within the course of a single hour (in the absence of any intervention), which should instead be treated as unwanted noise.

PDiv portrait divergence, HCP Human Connectome Project data, NYU New York University dataset. All p = 2.2 × 10−16 (two-sided) from Spearman correlation. Each data-point represents one pipeline (n = 768). Shading indicates standard error of the fitted line to model the linear relationship between the two respective variables. Source data are provided as a Source Data file.

Sensitivity to experimental differences: Low-PDiv pipelines are more likely to detect pharmacologically-induced connectome reorganisation

We have shown that network construction pipelines vary drastically and systematically in their robustness to noise-induced changes in the functional connectomes of the same individuals scanned multiple times. However, this minimisation of noise-induced differences should not come at the expense of also minimising meaningful changes in network topology, such as control-patient contrasts (an example of this would be a pipeline that never detects any changes). Rather, a good pipeline should simultaneously minimise noise-induced differences, while remaining sensitive to true ones. In other words, test–retest reliability is not the only criterion that neuroscientists need to consider for their choice of network construction pipelines: ultimately, the resulting networks need to also demonstrate empirical usefulness by providing neurobiologically meaningful results43,44. An ideal pipeline would therefore strike a balance between sensitivity to experimental manipulations or contrasts of interest on the one hand, and low portrait divergence in test–retest over relatively short periods of time in healthy individuals and under the same test conditions on the other hand. Therefore, in addition to identifying pipelines that do not detect differences when we know that there should be none or only minor ones (best exemplified by test–retest scanning within the same hour), we should find pipelines that can also detect a difference, when we know that a difference must be present: we need to combine a low rate of false positives (low test–retest PDiv) with a low rate of false negatives.

Perhaps the most drastic possible difference that can be induced between two scans of the same individual, is that between consciousness and unconsciousness. General anaesthetics such as the intravenous agent propofol can rapidly and reversibly induce a state of unconsciousness, whereby the subject is behaviourally unresponsive and has no subjective experiences. There is arguably no short-term, reversible alteration of the mind that is so all-encompassing, and it cannot be expected to leave the functional connectome unaltered. Therefore, if a pipeline is unable to detect anaesthetic-induced differences in the topology of the functional connectome, we can reasonably conclude that it is not sensitive enough for use in network neuroscience.

Following this rationale, we compared the PDiv from the NYU-short dataset (two scans within the same hour) against the PDiv observed between an awake rs-fMRI scan, and a second scan of the same individuals while under propofol-induced general anaesthesia (also acquired within the same visit). We seek to identify pipelines that produce significantly greater PDiv between an awake and an anaesthetised scan of the same individual, than between two awake scans acquired at a comparable distance in time. To ensure the reliability of our approach, we repeat this analysis for two independent datasets of propofol anaesthesia to further bolster the reproducibility and generalisability of our findings.

Across both datasets, our results suggest that pipelines with lower PDiv also tend to have t-scores reflective of the expected effect of propofol (Fig. 4), as demonstrated by significant correlations between short-term test–retest PDiv (based on the NYU dataset) and t-scores both for the Western (ρ = 0.34, p = 2.2 × 10−16) and the Cambridge propofol datasets (ρ = 0.27, p = 4.4 × 10−14). As control test–retest PDiv becomes larger, t-scores also seem to become more variable. Reassuringly, we identified multiple pipelines that provide the expected effect in both datasets (Fig. 4, green dots). Intriguingly, however, we also identified a number of large-PDiv pipelines that detect a statistically significant difference between test–retest and anaesthesia, but in the opposite direction: that is, greater connectome reorganisation between two awake scans, than between an awake and an anaesthetised scan (Fig. 4, red triangles). In other words, these pipelines produce networks that are actively misleading about what we have strong reason to believe must be the ground truth (because there is a very substantial difference introduced by anaesthesia, reflected in the suspension of the brain’s input-processing abilities and cognitive function more broadly). These pipelines can be found in the interactive Pipeline Selection Tool (Supplementary Data 2; pipelines labelled “Opposite” in the columns Status Propofol West and Status Propofol Cam). Worryingly, we find that a non-negligible number of pipelines (38) produce the opposite of the expected effect for both propofol datasets - thereby returning results that are systematically misleading, and highlighting the dangers of an inappropriate choice of network construction workflow. Of note, all the consistently misleading pipelines use an absolute threshold; all but three use weighted edges; and 24/47 use mutual information to quantify connectivity. Overall, 85 pipelines show the expected effect for both propofol datasets, thereby satisfying this criterion, whereas 455 pipelines are neutral (failing to detect statistically significant differences in both propofol datasets).

Left: Cambridge anaesthesia dataset (Spearman’s ρ = 0.27, p = 4.4 × 10−14, two-sided). Right: western anaesthesia dataset (Spearman’s ρ = 0.34, p = 2.2 × 10−16, two-sided). The t-scores are obtained from permutation-based two-sample t-tests comparing PDiv from test–retest NYU short, against PDiv from awake vs anaesthesia. Horizontal red lines indicate t ± 1.96 from two-sample t-test (two-sided), corresponding to a statistically significant difference between the two groups’ mean, with negative t-scores corresponding to PDiv (anaesthesia) > PDiv (test–retest). Each data-point represents one pipeline (n = 768). Green dots indicate pipelines that produce the expected effect in both datasets. Red triangles indicate pipelines that produce a misleading effect in both datasets. PDiv Portrait Divergence. Shading indicates standard error of the fitted line to model the linear relationship between the two respective variables. Source data are provided as a Source Data file.

Sensitivity to inter-individual differences

Another means by which the adequacy of a pipeline may be assessed is by comparing PDiv within subjects (scan 1 vs. scan 2 for subject 1, scan 1 vs. scan 2 for subject 2, etc…) and PDiv between subjects (subject 1 vs. subject 2, etc…). The proportion of participants for whom the within-subjects (WS) PDiv is smaller than between-subjects (BS) PDiv may be used as an additional criterion of pipeline quality, with the rationale that even after accounting for bona fide changes due to plasticity and learning, an individual’s functional connectome should not differ from itself at another point in time, more than it differs from the connectomes of other individuals.

Our results suggest that pipelines with smaller PDiv are also better at producing networks that are sensitive to individual differences, such that the same subject’s brain network diverges less from the same subject’s network than from those of other people. This was the case for the NYU short test–retest data (ρ = −0.31, p = 2.2 × 10−16), the medium-term test–retest time interval for the Cambridge dataset (ρ = −0.44, p = 2.2 × 10−16), the NYU long test–retest data (ρ = −0.28, p = 2.2 × 10−16) and the HCP dataset (ρ = −0.51, p = 8.08 × 10−9; Fig. 5).

Pipeline PDiv (portrait divergence) in a given dataset is plotted against the proportion of participants in the same dataset for whom the within-subject PDiv (baseline vs follow up) is smaller than between-subject PDiv. Each data-point represents one pipeline (n = 768). Pipelines above the red line meet the within-between criterion such that portrait divergence is smaller for within-subject test–retest compared to between subject comparisons. Shading indicates standard error of the fitted line to model the linear relationship between the two respective variables. Spearman correlation coefficient (two-sided) is used to assess the strength of relationship and its statistical significance. Source data are provided as a Source Data file.

Passing and failing pipelines on the basis of this within-between criterion can be found in the interactive Pipeline Selection Tool (Supplementary Data 2; column “Criterion within-between all”). The Pipeline Selection Tool also lists the proportion of participants in a given pipeline for which within-subject PDiv is smaller than between-subjects PDiv in columns Within-between Cam (%), Within-between NYU short (%), Within-between NYU long (%) and Within-between HCP (%). In the Cambridge dataset, 72 pipelines were excluded based on this criterion. This was the case for 62 in the NYU short-term test–retest data and for 67 for the NYU long-term data as well as for 39 pipelines in the HCP data. In total, on the basis of the overall within-between criterion across datasets, 159 unique pipelines were excluded and 609 were retained.

Avoiding motion confound

As a further criterion, we sought to identify and exclude pipelines whose PDiv is significantly correlated with differences in subject motion (mean framewise displacement). For the Cambridge dataset, 45 pipelines showed a significant correlation between PDiv and motion (magnitude of the Spearman correlation coefficient ρ ranging between 0.60 and −0.67). For the NYU short-term dataset, 17 pipelines exhibited a significant correlation between PDiv and motion (magnitude of the correlation ranging between 0.48 and −0.57). For the NYU long-term dataset, 24 pipelines exhibited a significant correlation between PDiv and motion (magnitude of the correlation ranging between −0.53 and 0.56). Finally, for the HCP dataset we found that PDiv and motion were correlated significantly in 59 pipelines (ρ between −0.38 and 0.53).

It is argued in the literature that GSR can help to mitigate the noise induced by subject motion42,45. When contrasting all pipelines with GSR against those without GSR, no significant difference in the strength of the correlation (absolute ρ-statistic) between PDiv and motion based on this option was found in the Cambridge (t(752) = 0.03, p = 0.973, d ~ 0), the NYU short test–retest (t(700) = 1.42, p = 0.157, d = 0.11) or the NYU long-term test–retest (t(700) = 0.75, p = 0.453, d = 0.06). That is, whether GSR was or was not applied, this decision had no bearing on the degree to which test–retest portrait divergence was associated with motion, on average across all pipelines. However, in the HCP data, there was a small but significant effect of GSR on the magnitude of the correlation between motion and PDiv (t(684) = −3.89. p = 1.09 × 10−4, d = −0.29), showing a stronger association between PDiv and motion in pipelines without GSR than with GSR.

Avoiding empty networks

Pipelines employing an a priori threshold on the strength of edges, rather than on their density (i.e., removing all edges with weight below a pre-specified value, also known as an “absolute” threshold) run the risk of removing all edges in the network, if none surpass the threshold value. This would be unquestionably incorrect, but it is conceivable that such an occurrence might never materialise in practice. Indeed, we found that this never occurred when edge weights were defined in terms of Pearson correlation. However, empty networks were returned for at least one subject by a total of 68 unique pipelines employing mutual information for edge weight definition (50 occurrences in the NYU short test–retest, 52 occurrences in the NYU long test–retest, 68 in the HCP dataset, eight in the Cambridge dataset). As expected, all of these pipelines used absolute threshold values: mostly with the 0.5 threshold, but for 20 pipelines this was also the case for the more lenient 0.3 threshold (reported in the interactive Pipeline Selection Tool [Supplementary Data 2] under the column Criterion edge failure). Therefore, any pipeline which removes all edges in any one dataset is excluded from further consideration as a suitable candidate. However, note that pipelines that fail this check would also be eliminated from consideration based on the other four criteria: only one of those that failed the criterion of avoiding empty networks satisfied both the within-between and propofol criteria (Lausanne129 + No GSR + binarisation + Abs 0.3 + Mutual Info).

Overall recommendations for network construction pipelines

As a final step, we combined all the criteria identified above:

-

(I)

Avoiding spurious differences: we operationalise this as having low PDiv (pipelines with the average global rank in the top 20%, as calculated from the average of independent rankings within each dataset; 154 pipelines fulfilled this criterion);

-

(II)

Detecting true experimental differences: ability to correctly identify statistically greater PDiv in anaesthesia than test–retest, across both propofol datasets (85 pipelines passed);

-

(III)

Sensitivity to inter-individual differences: ability to detect smaller within- than between-subjects PDiv in at least 50% of subjects, in each of the four test–retest datasets (609 pipelines passed);

-

(IV)

Avoiding motion confounds: no significant correlation between PDiv and subject motion, in any of the four test–retest datasets (566 passed);

-

(V)

Non-empty networks: we rejected pipelines that produce empty networks for any subject in any of the four test–retest datasets (700 pipelines pass).

Out of the full set of 768 pipelines considered here, we found that only 9 (~1%) jointly satisfied all of our criteria in each of the test–retest datasets that we considered – meaning that the vast majority of pipelines (759 out of 768) may be less than optimal (Fig. 6 and Supplementary Data 1). However, 71 pipelines were excluded from the optimal ones because they each failed one single criterion in one single dataset, such that their failures were neither systematic nor pervasive. In particular, the set of optimal pipelines would expand to 35 (~5% of the total) if a less stringent criterion for the PDiv were adopted, such that all pipelines in the upper 50% were admissible (while still having to satisfy all other criteria in each of the relevant datasets).

Each data-point represents one pipeline (n = 768), with colour and shape reflecting which criteria are met. Criterion (I): Avoiding spurious differences (“PDiv ranking”). We consider pipelines as optimal if they are in the top 20% in terms of the global rank based on PDiv (Portrait Divergence) calculated as the average rank achieved in each dataset. We further show pipelines which fulfil all other criteria while being among the top 50% in terms of the average global rank. The maximum average PDiv among the top 50% pipelines is 0.169. Criterion (II): Detecting true experimental differences (“propofol”). Suitable pipelines should detect a significant effect for propofol, in the right direction, in both propofol datasets, i.e. a pipeline is excluded if it fails to detect the expected effect in either of the two propofol datasets. The Y axis reports the maximum between the two t-statistics obtained for the two propofol datasets, so pipelines satisfy the sensitivity criterion if they score < 1.96 on this axis (i.e., find a significant effect for propofol, in the right direction, in both propofol datasets). Criterion (III): Detecting inter-individual differences (“within-between”). A pipeline fails this criterion if the resulting networks are more similar between than within subjects more than 50% of the times, for any of the four test–retest datasets. Criterion (IV): avoiding motion-induced differences (“motion”). A pipeline fails this criterion if its PDiv has a significant correlation with differences in head motion in any of the four test–retest datasets. Criterion (V): non-empty networks. As a final check, we also exclude any pipelines that remove all connections from a network, in any of the four test–retest datasets. “Fail both” refers to pipelines failing in terms of motion and within-between criteria, while “Pass both” refers to pipelines which satisfy both of these criteria. Points circled in purple represent pipelines that produced empty networks. Overall, 9 pipelines satisfy all criteria in all datasets; this number grows to 35 if a more liberal PDiv criterion is adopted (top 50% global rank). Source data are provided as a Source Data file.

When considering the distribution of individual pipeline steps among the 9 optimal ones, three clear patterns emerge: all pipelines use weighted (rather than binary) edges, and all quantify connectivity in terms of Pearson correlation (rather than mutual information) (Fig. 7). Moreover, the preferred filtering method among the optimal pipelines is the OMST, a method to optimise the balance between network efficiency and wiring cost in a data-driven manner (selected in 5/9 cases). In other words, the single combination of Pearson correlation, weighted edges and OMST accounts for 5 out of 9 optimal pipelines, despite being only one out of 2 × 8 × 2 = 32 equally likely combinations of edge quantification, thresholding and binarisation. This is highly unlikely to occur just by chance: p = 4 × 10−5 for the probability of randomly choosing 9 pipelines out of 768 and having 5 or more of them belong to the same group (out of 32 possible groups), assessed with permutation testing. In contrast to the clear importance of edge definition, parcellation choice seems to have less bearing on a pipeline’s performance, but we do observe greater prevalence of pipelines using GSR than not (7 out of 9 optimal ones, and 22/35 near-optimal). We also find that while edges based on Pearson correlation still dominate under the less stringent criterion (28/35), there is now also a number of well-performing pipelines using proportional thresholds (either fixed or SDM) with binarised edges (18/35). Node type and number remain less clearly decisive: among the 35 near-optimal pipelines, every single combination of parcellation type and size is present at least once.

Pie charts demonstrate, for each network construction step, the proportion and absolute number of each option that is found among the optimal pipelines. FD fixed density, GSR global signal regression, OMST orthogonal minimal spanning tree, SDM structural density. See Fig. S31 for a version of this figure with a breakdown of the pipelines under the more liberal PDiv criterion. Illustration of parcellations adapted from refs. 34 and 44.

Overall, inspecting the whole list of optimal pipelines (Supplementary Data 1) clearly reveals that considering each pipeline step in isolation from the others does not provide the full picture. Specifically, we found that a few combinations of options account for most of the optimal pipelines (Fig. 8), with 5 out of 9 pipelines which meet all inclusion criteria using the combination of weighted edges, Pearson correlation and OMST filtering for edge definition and thresholding. These results suggest that a pipeline’s performance is not solely attributable to any specific step: rather, some combinations of steps seem to be especially favourable.

Pie chart displays the frequency of each combination of edge type definition, filtering, and binarisation among the 9 pipelines which fulfil all criteria for a suitable network construction pipeline. See Fig. S32 for a version of this figure with a breakdown of the pipelines under the more liberal global rank criterion, and Figs. S33–35 for a breakdown of the relationship between PDiv and commonly studied graph properties, in terms of edge quantification, binarisation, and filtering method. FD5%, fixed-density threshold at 5% density; FD10%, fixed-density threshold at 10% density. OMST Orthogonal Minimal Spanning Trees, SDM structural density matching, FD fixed-density.

Evaluating the full range of pipeline performance

Although we defined pass-fail criteria to be able to provide clear recommendations for the reader, it is important to appreciate that the performance of each pipeline is in fact continuous for all our evaluation metrics (except the presence of empty networks). Therefore, in addition to the optimal-nonoptimal distinction presented above, we can also evaluate the full performance of each pipeline, across all datasets and criteria. This approach allows us to identify patterns of similarity between pipelines, and their main drivers (Fig. 9).

Left: hierarchical clustering of pipelines, in terms of similarity (correlation) of their performance across datasets and criteria. The clustering solution highlights the difference between pipelines producing binary versus weighted networks. For the PDiv criterion, best performance refers to the smallest PDiv; for the propofol criterion, best performance is the greatest t-score in the correct direction; for the within-between criterion, best performance means the greatest proportion of participants for whom the within-subjects PDiv is smaller than between-subjects PDiv; for the motion correlation criterion, best performance is identified as the smallest magnitude of correlation with motion. The empty networks criterion is not included, since it is not continuous. Overall rank is the mean across all columns. Right: correlation between each pair of pipelines in terms of performance, arranged by the same hierarchical clustering. See Fig. S37 for the same figure, but sorted by overall rank.

In particular, hierarchical clustering reveals that the main distinction between pipelines, in terms of ranked performance across all datasets and criteria, is between pipelines producing binary versus weighted networks. We confirmed this statistically: the mean rank of weighted pipelines is 330.59 (SD = 73.76), whereas the mean rank for binary pipelines is 400.48 (SD = 59.33), t(698) = 13.81, p < 0.001, Hedge’s g = 1.04, from independent-samples t-test (Fig. S38). Among the other network construction steps, we also found significant differences for edge type (with Pearson correlation outperforming mutual information) (Fig. S39), filtering choice (with OMST and FD20% performing best, on average; Fig. S40), and number of nodes (with a small preference for more fine-grained parcellations; Fig. S41) In contrast, no significant difference in pipelines’ overall rank were found as a result of GSR use, or whether nodes are defined from an anatomical atlas, functional atlas, multimodal atlas, or Independent Components Analysis (Fig. S42-S43).

These results indicate that network construction steps vary in terms of their overall impact on pipeline performance, and are in line with our observation that optimal pipelines tend to share specific steps pertaining to edge type, filtering and binarization. However, not every combination of the individually best-performing steps is optimal, and therefore it is not sufficient to consider individual steps in isolation: only end-to-end evaluation of full pipelines provides the full picture.

Discussion

A tremendous amount of neuroimaging research with functional MRI is devoted to finding reliable functional connectomic biomarkers for brain function and its disorders – but this process involves a combinatorial explosion of arbitrary choices21,22,27.

Here, we tackled this challenge by systematically investigating 768 unique pipelines that a neuroscientist could adopt to obtain brain networks from resting-state fMRI data, arising from the combination of several key data-processing steps. To do so, we departed from most previous studies in a number of key respects. First, we explicitly addressed the combinatorial explosion, by considering pipelines end-to-end, rather than restricting our attention to specific steps. Second, rather than choosing any arbitrary local or global graph-theoretical property for our comparisons, we focused on the pipelines’ ability to recover the networks’ overall topology across all scales. Third, we did not focus exclusively on test–retest reliability, but rather we adopted an entire battery of criteria that any appropriate pipeline for functional connectomics should meet, in order to provide practically useful results: these include minimising both random (noise-induced) and systematic (motion-induced) topological distortions, while also being sensitive to differences between individuals and between experimental conditions. Finally, we required all criteria to be consistently met in each of several independent datasets, encompassing short (minutes), medium (weeks) and long timespans (up to 16 months), and using different spatial and temporal resolution, and different preprocessing/denoising approaches, to ensure the generalisability of our recommendations. Through this multi-dataset, multi-criteria, multi-scale and multi-step approach, our goal was to provide a comprehensive set of benchmarks for trustworthy functional connectomics.

Our first finding is that inappropriate network pipelines are ubiquitous and can produce systematically misleading results. The substantial majority of the pipelines that we considered failed to meet at least one of our criteria for consistent functional connectomics. We also observed drastic and systematic variability among pipelines’ performance: an inappropriate choice of pipeline can greatly impair one’s ability to recover a reliable network topology. Even for scans obtained less than 45 minutes apart, we observed up to a 5-fold increase in topological dissimilarity (PDiv) compared with the best-performing pipelines (Fig. 2), even across several months. Put differently, adoption of an inappropriate pipeline can distort the functional connectome more drastically than the passage of nearly a year – which may have far-reaching repercussions for longitudinal studies of brain network properties.

A recent review of statistical power in network neuroscience suggested that “many real effects may be missed by current studies”68. Our results are in line with this observation: we found that the vast majority (approximately 90%) of pipelines considered were unable to reliably detect the effect of general anaesthesia on the functional connectome. Thus, one potential implication of our work is that some true effects may have been missed due to a suboptimal choice of network construction pipelines for functional connectomics.

Even more worryingly, choice of the wrong pipeline can lead to results that are not only misleading (statistically significant in the opposite direction as the true effect), but replicably so (being observed in two independent propofol datasets): we found this to be the case for 38 pipelines. This means that adopting an inappropriate pipeline for network analysis can turn the replicability of results against researchers, boosting their confidence in results that are actively the opposite of the truth. Being consistently wrong rather than randomly so, these results would not be “washed out” by approaches such as meta-analytic aggregation: on the contrary, they would propagate to the meta-analysis itself. Clearly, such a scenario would have devastating consequences for the use of functional connectomics for biomarker identification; in the worst-case scenario, a treatment that actually makes the disease worse may be systematically mis-identified as making it better.

Finally, our results show that the above-mentioned concerns cannot be easily dismissed, because suboptimal pipelines are not a rare exception, but rather the rule: the vast majority of pipelines among those considered (759 out of 768) failed to meet at least one of our criteria (or 733 if the criterion of having low PDiv on average is relaxed). In other words, our results clearly demonstrate that even when combining steps for network construction that are individually sensible, it is overwhelmingly likely (over 98%, in our sample of pipelines) that the resulting overall pipeline will not be appropriate for functional connectomics – at least not optimal. Indeed, we find that no single step uniquely determines a pipeline’s ability (or inability) to accurately recover the network’s topology: pipelines differing by only one step are largely overlapping in terms of their portrait divergence distributions (Figs. S7–S30). These observations highlight the importance of focusing on entire pipelines as we do here, in contrast to most published approaches that typically consider only one or two steps in isolation. This approach enabled us to identify some pipelines (i.e., specific combinations of steps) that successfully pass all our criteria in every single one of our datasets.

Fortunately, we were able to identify a number of pipelines (9 out of 768) that consistently recover effects in the correct direction, and that additionally satisfy all our other criteria for trustworthy functional connectomics: low PDiv for test–retest scans, indicating that the pipeline minimises spurious differences; greater PDiv across subjects than within the same subject on average, indicating that the pipeline reflects the ground-truth difference between networks; no empty networks; and no correlation between PDiv and motion. We emphasise that each of these criteria had to be met in all our datasets, which included both differences in time-span, and also differences in data resolution and preprocessing/denoising.

Additionally, we found that pipelines’ performance on our criteria is far from random, nor does it vary idiosyncratically with each dataset, instead being highly correlated across different independent datasets spanning short, medium and long timespans (with Spearman’s ρ ranging between 0.73 and 0.97; Fig. 3). Ability to minimise test–retest differences is also correlated with a pipeline’s ability to detect true differences, when they do exist: both between different individuals (Fig. 5), and within the same individual (induced by potent pharmacological intervention; Fig. 4). In other words, there are systematic factors at play. Indeed, patterns of similarity clearly emerge among the pipelines that satisfy all our criteria. Specifically, 5 out of 9 optimal pipelines employ the same procedure for edge definition (out of 32 possible ones), consisting of Pearson correlation, weighted edges, and the OMST method of optimising the balance between network efficiency and wiring cost. This is a statistically unlikely occurrence, suggesting that there may be something about this combination that makes it especially appropriate. In fact, all 9 (or 28/35 under the less stringent PDiv criterion) employ Pearson correlation for edge definition. More combinations for edge construction become available if pipelines with PDiv rank in the top 50% are included, with fixed-density thresholds at 5% and 20% density also performing well in combination with weighted and binary edges, respectively.

The edge construction part of the pipeline therefore appears as the most crucial choice: once it is fixed, both GSR and NoGSR options are available among the optimal pipelines, and many combinations of parcellation type and size. This observation is corroborated by our assessment of pipelines’ overall ranked performance across all criteria and datasets (Fig. 9): significant predictors of better pipeline rank include edge type (Pearson correlation outperforming mutual information), filtering scheme (OMST and FD20% being the best) and especially the use of weighted networks instead of binary ones (Figs. S38–S40). In contrast, pipelines’ overall performance does not significantly differ as a function of use of GSR, or parcellation type, when these steps are considered in isolation (Figs. S42–S43). In other words, the relevance of these network construction steps for our criteria becomes apparent when they are considered as part of a full pipeline.

It is especially reassuring that our results about pipeline performance are shared across multiple independent datasets. Likewise, our results generalise across different popular methods for functional MRI denoising (aCompCor and FIX-ICA). The Cambridge and NYU datasets were acquired with parameters for spatial and temporal resolution that are widely used in functional neuroimaging studies. Therefore, we expect our results to generalise to other datasets with similar specifications, such as the publicly available and intensely studied Cam-CAN69, Philadelphia Neurodevelopmental Cohort70, CENTER-TBI71, Harvard Aging Brain Study72, Autism Brain Imaging Data Exchange (ABIDE)73, and UCLA Neurophenomics74 datasets among others, enabling the functional connectomics community to make the most of these valuable resources to study development, aging, and disease. Importantly though, our results about pipeline performance and choice of optimal pipelines also replicated in the high-quality HCP data, which have higher temporal and spatial resolution (suitable for surface-based analysis). Therefore, we expect that our recommendations should also be applicable to more recent datasets acquired with HCP-like specifications, such as UK Biobank75. However, our recommendations are intended to complement investigators’ domain-expertise, not replace it: each study has its own driving hypotheses and unique challenges. For this reason, we have made available our interactive Pipeline Selection Tool (Supplementary Data 2), which provides a full breakdown of each pipeline’s performance across each criterion and each dataset, totalling 13,824 unique assessments: to enable readers to engage with our results, and identify pipelines that fit their specific requirements.

Our optimal pipelines are those that pass all our tests across all datasets: they minimise noise-driven differences, but correctly detect genuine ones, in a way that is consistent across datasets. While this stringency undoubtedly contributed to the exclusion of many pipelines – 71 of which only due to a single failure in a single dataset – it should equally bolster our confidence about the recommended pipelines’ suitability to provide sensible results, including across different time-spans and different data acquisition and preprocessing choices. By recommending a select number of network construction pipelines that provide the most replicable and generalisable results, we hope that the present work will facilitate future meta-analyses of functional connectomics studies.

We next discuss consistent features among optimal network construction pipelines. Owing to its ease of application and interpretation, Pearson correlation is a cornerstone of functional connectomics, and remains the most widely used method to quantify connectivity between regions across thousands of published studies (accounting for over 75% of the studies reviewed by ref. 21). It is therefore reassuring for the field that our optimal pipelines overwhelmingly favour Pearson correlation to quantify functional connectivity. Consistent with our results, correlation was also shown in previous work to outperform mutual information and partial correlation in terms of test–retest reliability, but also in terms of fingerprinting accuracy76.

At the microscopic level of neurons and circuits, the brain is unquestionably a nonlinear system. However, the good performance of Pearson correlation that we observed in our results dovetails with previous evidence by Hlinka and colleagues, whose extensive modelling led them to conclude that “the practical relevance of nonlinear methods trying to improve over linear correlation might be limited by the fact that the data are indeed almost Gaussian”77. More broadly, although nonlinear aspects of fMRI timeseries can be identified, capturing variability related to patient-control differences78,79, multiple studies have provided quantifiable evidence that at the macroscale level observed by functional MRI, signals may be suitably accounted for as linear80,81. This has been shown in terms of obtaining limited or no additional benefit when using more complex nonlinear methods to relate structural and functional connectivity82,83, or to predict demographic variables from functional MRI84, or when comparing the ability of linear versus nonlinear models to fit high-resolution BOLD timeseries85.

Crucially, the observed predominance of linear dynamics in macroscale brain signals cannot be dismissed as a mere artifact of functional MRI85. Although fMRI’s low temporal resolution does contribute to linearising the signal due to both temporal averaging and the limited number of samples, linear models were also recently shown by Nozari and colleagues (2023) to outperform nonlinear ones in terms of their ability to fit intracranial EEG (iEEG) time-series85, which are electrodynamic rather than haemodynamic in origin, and have much higher temporal resolution. Thus, empirical results from diverse neuroimaging modalities converge with both simulations85 and theoretical analysis86, showing that the dynamics of nonlinear stochastic populations converge to linear dynamics at the macroscale, as a result of spatial averaging. In other words, observing good performance of linear methods at the macroscale should not be viewed as un-physiological, or a mere artifact of a specific imaging modality, or a denial of the brain’s microscale nonlinearity. Rather, linearisation is an inherent consequence of observing brain activity at the macroscale as afforded by modern neuroimaging, and this phenomenon contributes to explaining why Pearson correlation is suitable for quantifying functional connectivity – at least according to the criteria that we adopted. Nonetheless, we note that although none of the 9 optimal pipelines employ MI, 7 out of the 35 near-optimal ones do. In particular, some of the near-optimal pipelines include versions of the same pipeline that only differ in the use of Pearson correlation or MI: ICA-300 GSR-Top20%-binary, ICA-200 NoGSR-Top20%-binary, Lausanne-463 NoGSR-Top20%-binary, Lausanne-463 GSR-Top20%-binary.

Pertaining to edge filtering, the OMST (Orthogonal Minimum Spanning Trees algorithm), our main recommended approach, is a data-driven method that optimises the balance between efficiency and wiring cost of the network. OMST is unique among the filtering schemes considered here, for multiple reasons. First, because it guarantees that the resulting network is not fragmented into disconnected components (Fig. S35), which we know should not be the case in the brain. This feature makes OMST analogous to percolation-based filtering schemes, whereby the weakest edges are iteratively removed from the network, up to the point where further removal would make the network disconnected, which corresponds to the percolation threshold87,88,89. Thus, OMST and percolation thresholding both ensure that global connectivity is not impacted by removal of a few weak but topologically important edges. Unlike percolation, however, OMST is not restricted to preserving only the strongest edges. Rather, weaker edges can be preferred to stronger ones and be included in the OMST-filtered network, if they contribute to an optimal balance of efficiency and cost. Because of this ability to include weaker edges over stronger ones based on their role in the overall topology, OMST avoids a pitfall of percolation thresholding, whereby the presence of a single node whose edges are all relatively weak, can result in a network that is potentially very dense (because the percolation threshold is determined by the weakest edge whose removal would make the network disconnected, and if this edge is very weak, many other edges may survive the threshold).

In other words, the second feature that makes OMST unique among the filtering schemes considered here is that OMST takes into account not only the strength of connections, but also their more general topological role in the network. Therefore, connectomes obtained through OMST can include edges that both absolute and proportional thresholding methods would simply disregard as too weak, regardless of any further role they may play in network organisation. The key role of weak connections acting as shortcuts between segregated modules, often referred to as the “strength of weak ties”89,90,91, has been increasingly recognised across artificial and biological networks, including the human brain – a clear argument in favour of OMST’s ability to reconstruct biologically plausible networks, especially in combination with weighted (rather than binary) edges, which is consistent with our optimal pipelines.

It is essential to note that despite the similar name, OMST is very different both in theory and in practice from simple Minimum Spanning Tree (MST) filtering. Reducing the network to its minimum spanning tree will enforce every individual’s network to have the same number of edges: namely, the minimum number possible. In other words, with Minimum Spanning Tree filtering the density of the reconstructed network is known a priori, given its number of nodes. This is not the case for OMST, which is a data-driven method. Specifically, while the OMST does ensure the desirable property of not leaving any node disconnected from the rest of the brain, it determines the final number of edges in a data-driven manner by optimising the network’s balance of efficiency and wiring cost. This approach therefore produces plausibly sparse networks, but not maximally sparse, and without imposing the same a-priori level across all individuals (arguably a biologically implausible feature of fixed-density methods). This difference has important practical consequences for the physiological plausibility of the reconstructed networks: simple Minimum Spanning Tree filtering would lead to brain networks with the biologically implausible property of a null clustering coefficient64, whereas this is not the case for the OMST method that we use (as clearly demonstrated in Fig. S34). We further illustrate this distinction in Fig. S36, which shows that for all datasets and individuals, pipelines using OMST filtering produce plausible values of mean clustering coefficient. In contrast, as expected the Minimum Spanning Tree filtering always results in a mean clustering coefficient of zero, regardless of any other steps in the network construction pipeline. This clearly illustrates that despite similar names, OMST (which we used) and the Minimum Spanning Tree filtering (which we did not use) lead to very different results and must not be confused.

The good performance of OMST is arguably due to this method being data-driven based on each individual connectome, rather than a one-size-fits-all. Indeed, although OMST is a relatively recent method, its use has already been recommended by several studies on multiple grounds. OMST filtering was shown to minimise topological differences between pipelines62; it has outperformed alternative thresholding schemes for functional networks in terms of recognition accuracy and reliability37,63,92; and it has also been recommended for use with alternative neuroimaging modalities such as electro- and magneto-encephalography37,63,92, suggesting that its applicability may generalise beyond rs-fMRI. Finally, the use of OMST (as well as 20% fixed-density thresholding) was also recommended by another recent study44 that evaluated a large number of individual options (though without combining them, and using as criterion the ICC of specific network properties instead of our topological approach). Therefore, our results suggest a convergence of recommendations for brain network construction across different criteria and different studies – possibly heralding the emergence of consistent analytic practices in the field. This convergence may in part be helped by our choice to use the Portrait divergence (PDiv), which enabled us to take into account both local and global aspects of network organisation across scales53: by considering the network’s topology as a whole, our results are inherently more general than results based on any specific graph-theoretical metric.

In this study, we endeavoured to systematically sample and combine many of the most common options across each step in the process of constructing a functional brain network from rs-fMRI data – resulting in 768 unique pipelines. However, due to combinatorial explosion, it would be unfeasible to consider every single option that has been proposed in the literature, and this inevitable limitation should be borne in mind when interpreting our results.

Pertaining to node definition, we considered both atlas-based parcellations, and Independent Components Analysis. Atlas-based methods are the most widely used approach for defining nodes in functional connectomics21,33, with their prevalence enjoying an exponential growth in recent years33. This enduring popularity is due to multiple reasons. First, biological interpretability and dimensionality reduction. Additionally, parcellations enable integration between data from neuroimaging modalities whose different acquisition methods and resolution are intrinsically different93, such as fMRI and PET94, cortical morphometry95 or post-mortem transcriptomics96. In the words of Revell and colleagues: “The atlases defining anatomical structures (whether they are functionally, histologically, genetically, procedurally, multi-modally, or randomly defined) are the link between structural connectivity and functional connectivity measurements of the brain”33. Reflecting the key role of parcellations in today’s human neuroscience, there have been recent calls to adopt a “standard set of atlases”33 – which includes all the atlases considered here. Indeed, some open neuroimaging resources provide data only in the form of specific parcellations: often precisely those that we evaluated here97,98,99. Therefore, an evaluation of the role of such popular parcellations in the network reconstruction process is especially pressing for informing best practices in the field.

We considered some of the most widely used atlas-based parcellation schemes for defining nodes in the brain, which vary along some of the most relevant dimensions for network construction24,30. Our parcellations range from traditional anatomical atlases based on structural landmarks from one (AAL) or multiple individuals (Lausanne/Desikan-Killiany), to functional parcellations combining task-based and resting-state fMRI in over 1400 individuals (Schaefer), to multimodal parcellations accounting for cortical myeloarchitecture, functional activation, connectivity and topography (Glasser, Brainnetome). However, despite the diversity of approaches covered, encompassing the most common range of network sizes used in the field, we inevitably could not include all the possible atlases in existence24,30,31,33,100,101,102,103,104, and we chose to focus on some of the most widely adopted. In fact, the parcellations that we considered are all among those that an exhaustive multi-criterion evaluation of 55 different brain atlases, recently recommended as the “standard set of atlases” for inclusion in neuroimaging studies, due to their prevalence and coverage of the space of parcellation types and sizes33.

Dividing the brain into discrete, spatially circumscribed regions provides both dimensionality reduction and ease of interpretation. However, atlas-based parcellations also come with implicit assumptions about spatial localisation (e.g., by imposing the constraints that parcels should be spatially contiguous and non-overlapping) and about what should be regarded as the true functional units of the brain31. An alternative to atlas-based methods for node definition is the use of Independent Components Analysis (or analogous approaches such as functional modes105), which can provide spatially overlapping, “soft” parcels without hard boundaries, possibly exhibiting multiple spatially discontiguous peaks, which may be better able to reflect the complexity of brain organisation106,107,108,109,110. Simulations previously suggested that defining nodes based on ICA may outperform the use of regions-of-interest (e.g., atlas-based parcellations)108, and ICA also performed well at behavioural prediction11.

Here, therefore, we also included nodes defined as continuous, spatially overlapping independent components from ICA performed at different dimensionalities. However, we did not find significant differences across parcellation types in terms of overall performance (Fig. S42). Indeed, each type of node definition (anatomical atlas, functional atlas, multimodal atlas and ICA-based) features among the optimal pipelines – suggesting that parcellation type may be of limited relevance on its own (at least among the 768 pipelines that we evaluates), and what matters is more the combination of specific node definition choices as part of specific pipelines.

Although we endeavoured to provide a representative sampling of widely used node definition schemes (including all those in the “standard set of atlases” recently proposed33), many additional atlases exist in the literature, as well as parcellation-free methods24,30. The combinatorial explosion prevented us from extending our investigation to alternatives, but we briefly outline them below. Voxelwise/vertexwise networks with thousands of nodes43,111 provide maximal spatial resolution, but “volumetric pixels” have no more intrinsic biological meaning than the pixels of a 2D image – and the computational burden can be considerable. Additionally, by failing to aggregate biologically meaningful units (since cognitive functions are known to cover cortical areas larger than single voxels27), this approach can incur a loss of both physiological interpretability and statistical power, due to lower signal-to-noise ratio and higher rate of multiple comparisons. Recent gradient-based and eigenmode-based approaches provide alternative representations of the brain that are spatially extended, overlapping, and continuous rather than discrete (analogously to ICA), offering a complementary perspective on the constituent elements of the brain’s functional organisation32,112,113,114,115,116. It is also worth mentioning that, whatever the macroscopic units of brain function may be in terms of space, they need not be temporally invariant: future extensions of the present work may consider node definition schemes that allow for node boundaries to vary, and nodes themselves to merge or split dynamically in time, or as a function of task27,117,118,119,120. Finally, future approaches may enrich nodes with biological annotations such as microstructure, chemoarchitecture, and heterogeneities reflecting additional biological properties93,94,95,121,122, thereby providing a path towards a more integrative neuroscience.

Overall, there are a myriad ways to define nodes for functional connectomics, both using parcellations of different types and sizes, and using parcellation-free methods entirely. Selection criteria specific for this choice have also been proposed in the literature33. Future work may reveal whether some of these alternative approaches to node definition perform consistently better – or consistently worse – across our own criteria, than the parcellation-based node definitions adopted in the present work. However, we reiterate that our results point towards a more prominent role of edge definition than node definition, for determining the success of a pipeline – at least among the 768 pipelines considered here. Joining previous authors, we caution the reader that there may simply not be a “one size fits all” atlas33,119: our results suggest that the remaining steps in the pipeline provide essential context for determining whether a given atlas is suitable or not.

Pertaining to edge definition, future work may adopt more sophisticated methods of quantifying connectivity123: for instance, by adopting multivariate connectivity estimators124 or methods from information decomposition capable of recovering different kinds of information sharing between regions125,126,127,128 or the directionality of connections (transfer entropy, Granger causality, Dynamic Causal Modelling123,129,130), or disambiguating between direct and indirect connections (e.g. partial correlation, regularised partial correlation11,107). In particular, recent studies have suggested that although partial correlation tends to exhibit lower reliability than the commonly used Pearson correlation76,131, this shortcoming may be compensated by higher validity76 and discriminability132.

More broadly, many alternative thresholding methods also exist, whether based on statistical significance133, percolation87,88,89, or shrinkage methods107,134 – or avoiding thresholding entirely, by using analytic methods that can deal with fully connected and signed networks52. Additionally, it remains to be determined how our results will generalise to the case of frequency-specific or even multilayer networks obtained from EEG or MEG135 (although see Jiang et al.44 and Dimitriadis et al.136,137 for recent investigations of frequency bands for fMRI network construction); and time-varying (“dynamic”) networks, an increasingly popular approach in fMRI functional connectivity, whereby edges change over time138,139,140,141.

It is also known that different motion correction strategies can influence the validity of BOLD signals and subsequent network characteristics; however, no correction strategy offered perfect motion correction26. Here, we adopted a widely used denoising strategy (anatomical CompCor), and required our results to also replicate in a dataset denoised with FIX-ICA instead, which unlike aCompCor is designed to affect artifacts specifically and avoid modifying the neural signal of interest59,60. Additionally, we also considered two versions of each dataset, preprocessed with versus without the additional step of global signal regression, due to ongoing controversy about the effect of GSR on functional connectivity61,142. Finally, to further mitigate the potential impact of motion on our recommendations, we also explicitly included as one of our criteria that pipelines should not produce a PDiv distribution that is significantly correlated with the distribution of differences in subject motion, across any of the four test–retest datasets.

Notably, our final recommendations include pipelines both with and without GSR – although the latter is somewhat more prevalent among the very best-performing ones. In particular, we even found that the set of optimal pipelines includes versions of the same pipeline both with and without GSR: Brainnetome-246 for Pearson-OMST-weighted (with GSR and no-GSR versions both featuring among the 9 optimal pipelines); and in the expanded set, Schaefer454 Top20%-binary-Pearson, Lausanne-463 Top20%-binary-Pearson, Lausanne-463 Top20%-binary-MutualInfo, ICA-300 Top20%-binary-Pearson and ICA-200 Top20%-binary-MutualInfo. Therefore, our results suggest that investigators may have some discretion in the choice of using GSR, depending on their specific datasets and hypotheses. As an example, GSR may remove physiological and motion-induced noise56,58, and it may strengthen brain-behaviour associations142, but it can also remove signal of interest pertaining to some pharmacological and pathological conditions39,143, or distort group46 and individual differences144. Likewise, a recent study observed reduced generalisability of graph-theoretical properties across sites, sessions, and paradigms when GSR was used42, although Tozzi et al.48 delineated a more intricate picture, whereby GSR decreases reliability for networks and most edges, but increases it for some others, and GSR appeared overall beneficial in a recent evaluation of multiple denoising strategies for fMRI145. A comprehensive evaluation of the relative advantages and drawbacks of GSR is beyond the scope of this paper, and the reader is referred to Fox & Murphy61 and Liu et al.142 for extensive discussions. Finally, we did not explore potential differences between resting-state conditions (eyes-open vs eyes-closed vs naturalistic viewing)49,146, or the impact of scan duration and spontaneous fluctuations in arousal state – although we did include datasets with different scan duration, up to 1200 volumes21,147.

There are also additional considerations for functional connectomics that deserve to be mentioned. In addition to increasing the number of options and pipelines considered, future work may further expand on the present results in several ways: it remains to be determined to what extent our results apply to task-based rather than resting-state fMRI148,149. The generalisability of the proposed framework beyond healthy individuals is also worthy of future exploration. Compared to healthy controls, some clinical populations have demonstrated lower test–retest reliability150,151. Reliability across the lifespan should be also considered by comparing age groups, as early evidence untangled age-related differences in test–retest reliability of rs-fMRI152. The choice of the optimal pipeline for functional connectomics may, therefore, vary by clinical characteristics, which still remains to be ascertained and may benefit from topology-based approaches such as the one adopted here. This is an important next step following the present work. It is also possible that a different proportion of optimal pipelines would be found when alternative reconstruction methods are included, or different neuroimaging modalities. Additionally, although here we considered numerous criteria for trustworthy functional connectomics, other criteria could also be of relevance for specific research questions, such as fingerprinting accuracy76, discriminating cognitive states132, or structure-function correspondence33,99,153, among others.