Abstract

Quantum channels in free space, an essential prerequisite for fundamental tests of quantum mechanics and for quantum technologies in open space, have so far been based on direct line-of-sight because the predominant approaches for photon encoding, including polarization and spatial modes, are not compatible with randomly scattered photons. Here we demonstrate a novel approach to transfer and recover quantum coherence from scattered, non-line-of-sight photons analyzed in a multimode, imaging interferometer for time-bins, combined with photon detection based on an 8 × 8 single-photon-detector-array. The observed time-bin visibility for scattered photons remained at a high 95% over a wide scattering angle range of −45° to +45°, while the individual pixels in the detector array resolve or track an image in its field of view of ca. 0.5°. Using our method, we demonstrate the viability of two novel applications: first, using scattered photons as an indirect channel for quantum communication, thereby enabling non-line-of-sight quantum communication with background suppression; and second, using the combined arrival time and quantum coherence to enhance the contrast of low-light imaging and laser ranging under high background light. We believe our method will instigate new lines of research and development on applying photon coherence from scattered signals to quantum sensing, imaging, and communication in free-space environments.

Introduction

Quantum coherence is a key ingredient in many fundamental tests and applications of quantum mechanics, including quantum communication1, characterization of single-photon sources2, generation of non-classical states3, quantum metrology4, quantum teleportation5, quantum fingerprinting6, quantum cloning7, demonstrating quantum optical phenomena8, and quantum computing9. The ability to transfer quantum coherence via scattering surfaces and to recover it successfully from scattered photons enhances several applications of quantum technologies. For instance, quantum communication capable of operating over a scattering channel could accommodate free-space communication without line-of-sight between multiple users, such as indoors around corners, or short-range links with moving systems. Furthermore, the photon coherence recovered from scattered light could be utilized to improve noise performance in low-light and 3D imaging, non-line-of-sight imaging10,11, velocity measurement12, light detection and ranging (LIDAR), surface characterization, or biomedical sample identification.

Currently, the predominant photon encoding used on free-space quantum channels is polarization, because it is not impacted by turbulent atmosphere for clear line-of-sight transmission13. When photons are scattered, however, their polarization states are inherently disturbed, and the quantum encoding is degraded. A previous study14 showed that the observed polarization visibility depends on the scattering surface material, and even the best material (cinematic silver screen) showed a strong dependence on the photon scattering angle, with only a total angular range of <45° being suitable for quantum communications. Another approach to encode free-space channels is the use of higher-order spatial modes, recently utilized for intracity quantum key distribution15, yet these photon states are directly impacted by wavefront distortion and are expected to completely vanish upon random scattering from a surface16,17.

Interestingly, time-bin encoding1,18,19 – although widely used for single-mode optical fibers19,20,21,22,23 – has only recently been demonstrated for free-space channels24,25,26,27,28,29,30 due to the problem of atmospheric turbulence and scattering. In particular, refs. 28,29,30 solved the problem of atmospheric mode distortion by converting the distorted beam back into a single mode at the receiver, at the expense of additional loss and complexity. Utilization of adaptive optics to correct the wavefront could be another solution, but it is expensive and technically challenging31,32. Refs. 24,25,26 solved the problem by making use of field-widened interferometers at the analyzer setup. However, all these experiments used direct line-of-sight free-space quantum channels and, to the best of our knowledge, no experiment has been reported yet that demonstrates reliable transfer and recovery of time-bin states over non-line-of-sight (nLOS) free-space quantum channels.

Here we utilize quantum coherence encoded in time-bins which is robust upon scattering and suitable for our purpose. The multimode states of light have been utilized using field-widened interferometers, or imaging interferometers, thereby solving the wavefront distortions caused by turbulent media. Such interferometers have the additional benefit of preserving an image. We thus implemented an imaging time-bin interferometer equipped with a single-photon-detector-array (SPDA) sensor, with 8 × 8 pixels covering a field of ~0.5°. The photon detector achieves high temporal precision of ≈120 ps, combined with the ability to spatially resolve the field-of-view with excellent time-bin visibility across the whole sensor area. We demonstrate that our system allows imaging a target that was illuminated with photons prepared with specific phase-signatures, that are recovered from the scattered photons with excellent phase coherence by the illuminated pixels. We discuss the viability of our method in the context of two relevant applications.

Results

Experiment

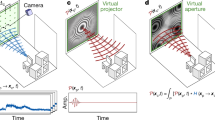

The experimental setup is shown in Fig. 1 (see Methods section for additional details). Each pulse from the laser passes through the Converter – an unbalanced Michelson interferometer (UMI) – that converts it into two coherent pulses separated by a time delay according to the path difference ∆C. These signals are sent towards the target sample covered with a diffusive material (regular white paper) acting as the scattering surface, which can be rotated to vary the angle of incidence. Some of the photons scattered from the surface are captured and guided through the Analyzer, a second UMI with path difference ∆A. Finally, the photons emerging from the Analyzer are focused onto a single-photon-detector-array (SPDA) containing 64 single-photon avalanche photodiodes – hereafter referred to as pixels – arranged in an 8 × 8 row-column configuration, which are free-running and individually time-tagged. Each detected photon could have traversed one of four possible paths: short-short (SS), short-long (SL), long-short (LS), and long-long (LL), with the first (second) letter denoting the path taken inside the Converter (Analyzer). The path differences of the two UMIs are tightly matched, i.e., ∆C ≈ ∆A, so that interference can be observed, as shown in Fig. 2. Piezoelectric actuators are placed in the short arm of each interferometer to vary their respective phase. To compensate for variable angle of incidence and mode distortion, a 118-mm-long glass cube with refractive index 1.4525 is placed in the long arm of both interferometers.

Optical pulses from a laser are sent through a phase Converter, which creates the initial time-bin states, while the multimode Analyzer measures the signals scattered off the target (regular white paper). A single-photon-detector-array is used as the detection device, with 8 × 8 individual pixels which are time-tagged separately. During the initial alignment, the incident angle = reflected angle = 25°

Top left: histograms of the arrival times of photons at pixel number (4,4) (row 4, column 4). The three separate peaks correspond to photons coming via SS (right), SL or LS (middle), and LL (left) paths (see text for more detail). Five different phase-instances are shown. The visibility at this pixel – calculated by curve-fitting – was V(4,4) ≈ 0.95. Top right: middle-peak intensity at the illuminated pixels at the corresponding phase-instances. Bottom: visibilities of the illuminated pixels calculated after fitting the curves. The visibilities range from 0.9 to 0.95. Both the color and the size of the square marker in each pixel area are indicators of the visibility. Pixel number (1,1) was used as a trigger. The temporal precision was ≈120 ps

A sinusoidal phase difference was introduced between the two interfering (SL and LS) pulses by applying a 0.1-Hz 10 V peak-to-peak ramp voltage at the Analyzer piezoelectric actuator (i.e., the length of the LS path was varied with respect to SL). The resultant outcomes are shown in Fig. 2. The top left columns show histograms of detection times – with respect to trigger signal – at one of the central pixels (4,4) (row 4, column 4) for five different phase-instances. The three peaks correspond to photons coming via SS (right), SL or LS (middle), and the LL (left) paths. The temporal precision was ≈120 ps. The measured visibility – calculated by fitting the curve – was V(4,4) ≈ 0.95. The right column shows the corresponding middle-peak intensity for other illuminated pixels. In this case, only the events detected during the 0.6-ns window – centered around the middle-peak – were post-selected. The data collection time was 1 s for the left and 0.1 s for the right columns. The visibilities of the illuminated pixels – calculated after fitting the curves – are shown at the bottom which range from 0.9 to 0.95.
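The timing structure described above can be illustrated with a minimal sketch. It assumes matched delays in both interferometers, measures arrival times relative to the SS path (the sign and origin of the time axis are a convention chosen here, not taken from the figure), and uses hypothetical function names; the 0.57-ns separation is the value quoted in the Methods and the 0.6-ns window is the one quoted in the text.

```python
# Illustrative sketch: arrival-time offsets of the four Converter/Analyzer
# paths and post-selection of the middle (SL/LS) peak.
# Function names and the toy event list are hypothetical.

DELAY_NS = 0.57   # time-bin separation quoted in the Methods
WINDOW_NS = 0.6   # post-selection window quoted in the text


def path_offsets(delay_converter=DELAY_NS, delay_analyzer=DELAY_NS):
    """Arrival-time offset of each path relative to the short-short path."""
    return {
        "SS": 0.0,
        "SL": delay_analyzer,                    # short in Converter, long in Analyzer
        "LS": delay_converter,                   # long in Converter, short in Analyzer
        "LL": delay_converter + delay_analyzer,
    }


def postselect_middle(arrival_times_ns, center_ns=DELAY_NS, window_ns=WINDOW_NS):
    """Keep only detections inside the window centred on the middle peak."""
    half = window_ns / 2.0
    return [t for t in arrival_times_ns if abs(t - center_ns) <= half]


offsets = path_offsets()

# Toy arrival times in ns: one event near SS, two near the middle peak, one near LL.
events = [0.02, 0.55, 0.60, 1.13]
kept = postselect_middle(events)

print(offsets)
print(kept)
```

When the two delays match, SL and LS share the same offset and are therefore indistinguishable in time, which is why only the middle peak shows interference; the window half-width of 0.3 ns is smaller than the peak separation, so the centres of the SS and LL peaks fall outside it.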

We note that while the interference visibility V has traditionally been considered as a signature of coherence, there have been cases where – under certain conditions – an enhancement of V was observed with increasing decoherence33,34, thus creating doubt on its efficacy as a true signature. Furthermore, newer measures of coherence, for example, based on the l1-norm of the off-diagonal elements of the density matrix of the quanton, have been developed35,36,37 and have replaced V in the generalized duality relations to capture the correct variation of coherence with decoherence38. However, scattering from a ‘smooth’ regular surface ensures that the number of possible interference paths is restricted to two, for which the l1-norm based coherence is identical to the interference visibility V38,39. Hence, for the rest of this paper, we use V as a measure of coherence. In free-space channels, decoherence mechanisms such as dispersion and atmospheric turbulence could lead to a degradation of V. However, in our case, turbulence has been taken care of by the imaging (field-widened) interferometers, while for our laser pulse-width of 300 ps, dispersion is negligible40,41.
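The two-path equivalence invoked above can be checked numerically. The sketch below is only an illustration under stated assumptions: it uses the normalised l1-norm coherence (sum of the absolute off-diagonal elements divided by n − 1), models decoherence as a simple dephasing factor on the off-diagonal terms, and assumes the two paths are recombined with equal weights; all function names are hypothetical.

```python
import cmath
import math


def two_path_density_matrix(p_sl, phase, dephasing):
    """2x2 density matrix for the SL/LS paths (toy model).

    p_sl      : population of the SL path (LS gets 1 - p_sl)
    phase     : relative phase between the two paths, in radians
    dephasing : factor in [0, 1] multiplying the off-diagonal terms
    """
    off = dephasing * math.sqrt(p_sl * (1.0 - p_sl)) * cmath.exp(1j * phase)
    return [[p_sl, off], [off.conjugate(), 1.0 - p_sl]]


def l1_coherence(rho):
    """Normalised l1-norm coherence: sum of |off-diagonals| / (n - 1)."""
    n = len(rho)
    total = sum(abs(rho[i][j]) for i in range(n) for j in range(n) if i != j)
    return total / (n - 1)


def visibility(rho, steps=360):
    """Fringe visibility when an extra relative phase is scanned over one cycle."""
    intensities = []
    for k in range(steps):
        scan = 2.0 * math.pi * k / steps
        # I(scan) = rho_11 + rho_22 + 2 Re(rho_12 * exp(i * scan))
        value = rho[0][0] + rho[1][1] + 2.0 * (rho[0][1] * cmath.exp(1j * scan)).real
        intensities.append(value)
    i_max, i_min = max(intensities), min(intensities)
    return (i_max - i_min) / (i_max + i_min)


rho = two_path_density_matrix(0.5, 0.3, 0.95)    # balanced paths, 5% dephasing
rho_unbalanced = two_path_density_matrix(0.3, 0.0, 1.0)

print(l1_coherence(rho), visibility(rho))
print(l1_coherence(rho_unbalanced), visibility(rho_unbalanced))
```

In both toy cases the two numbers agree up to the resolution of the phase scan, since for two paths both quantities reduce to twice the magnitude of the off-diagonal element.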

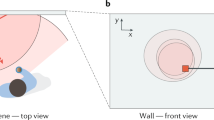

Robust and stable phase coherence under scattering angle variations

During the initial alignment, specular reflection was used by setting the incident angle = reflected angle = 25°. We refer to the incident angle as θ. Then, using a rotational mount, the scattering surface was rotated about P to vary θ while keeping the position of the camera fixed (see Fig. 3a). For different rotation angles φ, the corresponding average intensity and visibilities are shown in Fig. 3b for pixel number (4,4). For θ = 25° (φ = 0°), the majority of photons collected by the camera were due to specular reflection. As θ (φ) was varied, the intensity followed the typical scattering pattern consisting of specular and diffusive reflection42. At larger rotation angles φ, the collected photons were mainly due to scattering from the diffusive surface. For |φ| > 45°, the number of photons collected was too low. However, within the range φ ∈ [−45°, +45°], although the number of detected photons varied, the visibility remained fairly constant at ~95% with <10% variation. The same behavior was observed for other illuminated pixels.

a The incident angle θ was varied by rotating the scattering surface about point P by an angle φ from –45° to +45° while keeping the position of laser and camera constant. b Variation of visibility and intensity with rotation angle. Here, rotation angle φ = 0° corresponds to an incidence angle of θ = 25° as shown in Fig. 1. For rotation angles beyond ±45°, a sufficient number of photons could not be collected by the camera. The average intensity for each angle is shown on the right axis. The collection time for each data point is 20 s. c Variation of counts versus phases applied at the Converter for the illuminated pixels. Pixel number (1,1) was used as a trigger

Recovery of coherence while imaging

As we will demonstrate, this approach offers the unique ability for individual pixels in this imaging analyzer to fully detect the coherence, while at the same time resolving spatial modes of the scattered photons. To ensure that the camera received mostly scattered photons, the scattering surface was rotated to set φ = 20° (see Fig. 3a), at which the value of visibility was still close to 0.95. Similar to Fig. 2, only the pixels in the center of the sensor were illuminated. By applying a periodic voltage to the piezoelectric actuator in the Converter interferometer, a sinusoidal phase variation was induced among the outgoing time-bin photons. For each phase θc, the corresponding counts at the illuminated pixels were recorded. No voltage was applied at the Analyzer piezoelectric actuator. The fitted count-versus-phase curves for the illuminated pixels are shown in Fig. 3c. The result verified that although each pixel saw a different set of spatial modes of the incoming light, the phase θc was preserved by the modes even after going through diffuse scattering and was successfully recovered from the scattered photons by each individual pixel independently. The test was repeated by illuminating all pixels of the SPDA for different scattering angles φ, yielding similar results.

Quantum communication with scattered light

In this section, we demonstrate the viability of free-space non-line-of-sight (nLOS) quantum communication experiments with ‘scattered photons’. Here, nLOS refers to the fact that no direct path exists between sender and receiver; neither do they have access to any specular reflector, i.e., mirror surface, at any intermediate point. In particular, we show that a quantum state of the form \(|\psi_A\rangle = |e\rangle + e^{i\theta_A}|l\rangle\), with θA ∈ [0, 2π], where |e〉 (|l〉) are early (late) pulses produced by the Converter, can be transferred over the nLOS channel and measured in any arbitrary basis θB ∈ [0, 2π] with high visibility, independently and simultaneously by the illuminated pixels of our SPDA analyzer.
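For orientation, the expected dependence of the middle-peak counts on the two phases can be sketched as follows. This is a textbook-style outline rather than the authors' derivation: it assumes ideal 50:50 beamsplitters, writes the state with an explicit normalization factor, takes θB to be the relative phase imposed by the Analyzer between the two interfering paths (the sign is a convention), and introduces the visibility V phenomenologically.

```latex
% Middle-peak detection probability (sketch; the sign of \theta_B is a convention)
\begin{align}
|\psi_A\rangle &= \tfrac{1}{\sqrt{2}}\left(|e\rangle + e^{i\theta_A}|l\rangle\right), \\
a_{\mathrm{mid}} &\propto e^{i\theta_B} + e^{i\theta_A}
  \quad \text{(early--long and late--short amplitudes)}, \\
P_{\mathrm{mid}}(\theta_A,\theta_B) &\propto \left|a_{\mathrm{mid}}\right|^{2}
  \propto 1 + \cos(\theta_A - \theta_B), \\
P_{\mathrm{mid}}(\theta_A,\theta_B) &\propto 1 + V\cos(\theta_A - \theta_B),
  \qquad 0 \le V \le 1 \ \text{for imperfect overlap}.
\end{align}
```

Under these assumptions, scanning θA at fixed θB (or the reverse) traces out one sinusoidal fringe per 2π, and the fringe contrast gives V directly.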

For the demonstration, we use the experimental setup shown in Fig. 1, where the laser and Converter are assumed to be in the sender’s (Alice’s) lab while the Analyzer and the camera are in the receiver’s (Bob’s) lab. The scattering surface is rotated to φ = 20° to ensure that photons detected by Bob are mostly due to scattering from the diffusive surface. By applying specific voltages at the Converter (Analyzer) piezoelectric actuator, specific phase values θA (θB) are set. Figure 3c shows the variation of received photon counts at the illuminated pixels for Bob’s measurement basis θB = 0° when θA is varied from 0 to 2π. Similar variations are observed when Bob’s basis is changed to other arbitrary values, or when we scan the values of θB from 0 to 2π for a constant value of θA (not shown). In all cases, the average visibility was in the range of 0.9−0.95 for all the illuminated pixels, which is comparable to the values reported in previous free-space quantum communication experiments involving time-bins25,26,32,43,44. The result verifies that our nLOS channel can be suitable for time-bin quantum communication experiments using scattered photons.

We emphasize that when used for quantum communication experiments, our SPDA sensor enhances the robustness of the QKD receiver, in addition to allowing nLOS operation. Firstly, the imaging information for each pixel can be used for tracking a transmitter beam that feeds into an active beam steering mechanism, or simply used to passively suppress background counts in processing, thus reducing complexity26,32. Secondly, the implementation of multiple photon detector pixels appears to be more robust against certain powerful quantum attacks. For example, the class of detector control attacks45,46 could be prevented by adopting the method presented in ref. 47. The efficiency mismatch type attacks48 – where an eavesdropper attacks by modifying the spatial modes of the incoming light – could be easily detected, as changing the spatial mode will change the spatial distribution of detection events.

We note in passing that the sender-to-receiver distance (loss) in our experiment was ~2 m (6 dB). However, for free-space line-of-sight quantum communication experiments, phase coherence has been shown to be robust for much longer distances and higher losses (e.g., 5000 km and 60 dB in ref. 26, and 31 dB in ref. 32). The distance is ultimately limited by the dispersion properties of the medium and possibly the structure of the object, as these would cause the pulses to broaden and overlap with each other. Thus, the focus of this section was to investigate the robustness of coherence against the scattering properties of the channel.

Low-light imaging in high photon background

It is important to note that the ability to reveal quantum coherence can be directly used to enhance the contrast of an image under a low-light and noisy environment. The main idea is as follows. An object is illuminated with photons prepared with a specific phase-signature that is maintained by the scattered photons. As the scattered photons are imaged by the SPDA analyzer, the detected count pattern should follow the applied phase-signature. Hence, correlating the phase-signature with the received count pattern, a scattered photon can be distinguished from a noise photon with enhanced signal-to-noise ratio. Here, we show a proof-of-principle demonstration of this concept. In order to bring coherence into the picture, a periodic ramp voltage (0.1 Hz, ±5 V peak-to-peak) was applied to the Converter piezoelectric actuator that encoded a periodic phase-signature pattern among the outgoing time-bin states. The count pattern at the SPDA pixels in response to this phase-signature was pre-characterized as shown in Fig. 4b. All the pixels followed roughly the same pattern and from now on, we shall refer to this as the reference pattern.

a The top and bottom rows show the illuminated object (size 4 mm × 3.6 mm) – with the scattering surface rotated at four different angles φ ∈ {−60°, −40°, 0°, +40°} – and the corresponding images observed in the camera. Only the events detected during the 0.6 ns window – centered around the middle-peak – were post-selected to form the image. b The variation of counts (normalized) as a function of the applied phase-signature. This is the expected count pattern – the reference pattern – for a high signal-to-noise ratio scenario. c The observed pattern in response to the phase-signature for two neighboring pixels (4,3) and (4,4) for rotation φ = 40°. d Image captured by the SPDA imager in the low-light and noisy environment. The observed patterns at the same two neighboring pixels (4,3) and (4,4) are also shown. e The image reconstructed by cross-correlating the observed pattern with the reference pattern (see text and Methods section for more details)

The experimental setup is similar to Fig. 1, but now an object is placed on the scattering surface and the laser focusing condition is adjusted such that a sharp image is observed at the SPDA camera. Figure 4a shows the conventional intensity-based images of the object for four different rotations of the scattering surface. From now on, we focus only on the case where the scattering surface was rotated to φ = 40°, ensuring that the specular reflection no longer reaches the camera, and the detected photons were only due to diffusive scattering. The coherent variation of intensity across the image in response to the applied phase is shown in Fig. 6 in the Methods section.

First, we focus on two neighboring pixels (4,4) and (4,3). Figure 4c shows the variation of counts in these two pixels in response to the applied phase-signature. These count patterns are hereafter referred to as the observed patterns. The observed patterns appear distorted due to low SNR; however, the correlation with the reference pattern is clearly visible. Since pixel (4,4) received more photons than (4,3) – due to the feature of the object – its pattern appears more correlated to the reference pattern. This particular feature has been exploited to enhance the contrast as described next.

In order to simulate a noisy low-light environment, the laser was attenuated to 0.8 photons per pulse and a lamp was placed in front of the camera to create a high level of background signals. The average number of noise photons was 50 per pixel per second, while the average number of signal photons varied from 124 to 388 per second depending on the position of the pixels, leading to a signal-to-noise ratio of 2.48−7.76. The scattering surface rotation was kept at φ = 40°. The image captured by the imaging array is shown in Fig. 4d. As expected, the presence of high background severely degraded the image contrast. The observed patterns at the same two pixels (4,3) and (4,4) are also included in Fig. 4d; they are shifted up due to the presence of high background photons, yet the correlation with the reference pattern is still visible. For each pixel, we calculated the correlation between the observed and reference patterns (see Methods section for more details). We have chosen a threshold of 2.4, and Fig. 4e shows only those pixels having a correlation higher than this threshold. The result is a reconstruction of the object image with much-enhanced contrast. We note that only photons from the SL and LS paths have been considered here. In other words, from the three peaks shown in Fig. 2, we only post-selected the detections from the middle-peak, which required the timing data for each pixel. As a result, our approach combines arrival-time information with coherence analysis, and the same timing data can also be utilized for LIDAR applications.
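The thresholding step can be sketched as below. The exact correlation measure is not spelled out in this section, so the sketch makes loud assumptions: patterns are normalised to a maximum of 1, both are shifted down by 0.5 (as mentioned in the Methods), and the score is the zero-lag sum of products. The function names, the toy count patterns, and the threshold of 1.0 are all hypothetical; in particular, the threshold is not comparable to the value of 2.4 used in the experiment, because the score scales with the number of phase steps and the normalisation.

```python
import math


def normalise(counts):
    """Scale a count pattern so that its maximum equals 1."""
    peak = max(counts)
    return [c / peak for c in counts]


def correlation_score(observed, reference, shift=0.5):
    """Zero-lag cross-correlation of two normalised patterns,
    after shifting both down by `shift` (assumed measure)."""
    obs = normalise(observed)
    ref = normalise(reference)
    return sum((o - shift) * (r - shift) for o, r in zip(obs, ref))


def reconstruct(pixel_patterns, reference, threshold):
    """Return the set of pixels whose score exceeds the threshold."""
    return {
        pixel
        for pixel, pattern in pixel_patterns.items()
        if correlation_score(pattern, reference) > threshold
    }


# Toy data: 20 phase steps over one cycle of the phase-signature.
phases = [2.0 * math.pi * k / 20 for k in range(20)]
reference = [1.0 + 0.95 * math.cos(p) for p in phases]

patterns = {
    # background of 50 counts plus a modulated signal of different strength
    (4, 4): [50 + 300 * (1.0 + 0.95 * math.cos(p)) for p in phases],
    (4, 3): [50 + 120 * (1.0 + 0.95 * math.cos(p)) for p in phases],
    # background-only pixel: many counts, but no phase-signature
    (1, 2): [400.0 for _ in phases],
}

scores = {pix: correlation_score(pat, reference) for pix, pat in patterns.items()}
bright = reconstruct(patterns, reference, threshold=1.0)

print(scores)
print(bright)
```

In this toy model the background-only pixel scores far below the two signal pixels even though its raw count level is high, which is the qualitative behaviour the reconstruction relies on.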

We would like to elaborate on how our coherence-based imaging could complement conventional intensity-based imaging. When intensity is used as the distinguishing parameter, the signal-to-noise ratio decreases linearly with an increase in noise or a decrease in signal intensity. On the other hand, as shown in Fig. 3b, visibility – the measure of coherence – stays fairly constant with decreasing signal intensity. This means that when the number of detected signal photons decreases, the relative amplitude of the count pattern decreases but the shape of the pattern stays nearly the same. As a result, correlation with the reference pattern decreases very slowly. In fact, the scheme could be generalized by utilizing both outputs of the Analyzer interferometer. In that case, the total intensity at the two output arms as a function of the applied phase θ, P±(θ), can be written as

\[P_\pm(\theta) = S_\pm(\theta) + \frac{N}{2},\]

where N is the total background noise, which is assumed to be equally distributed between the two output arms, while S±(θ) are the signal intensities at each output, which are inverse (π-phase-shifted) of each other. Consequently, the difference function \(P_+(\theta) - P_-(\theta)\) could theoretically cancel out any background noise and increase the signal-to-noise ratio manifold. In a preliminary study, we have already implemented this scheme and were able to distinguish a signal photon from the noise with a noise intensity 10 times higher than the signal intensity. However, finding a more sophisticated image-reconstruction method is outside the scope of this paper and will be presented elsewhere.
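A small numerical illustration of the two-output idea follows. It is a sketch under explicit assumptions, not the scheme used in the preliminary study: the background N is taken to split equally between the outputs, so that each output carries S±(θ) + N/2, and the signal is modelled as S±(θ) = (S/2)(1 ± V cos θ). The function names and the numbers are hypothetical.

```python
import math


def output_intensities(theta, signal, noise, visibility=0.95):
    """Mean intensities at the two Analyzer outputs for applied phase theta.

    Assumed model: P_pm = S_pm + N/2 with S_pm = (S/2) * (1 +/- V*cos(theta)).
    """
    s_plus = 0.5 * signal * (1.0 + visibility * math.cos(theta))
    s_minus = 0.5 * signal * (1.0 - visibility * math.cos(theta))
    return s_plus + 0.5 * noise, s_minus + 0.5 * noise


def difference_signal(theta, signal, noise, visibility=0.95):
    """P_plus - P_minus; the equal background contributions cancel."""
    p_plus, p_minus = output_intensities(theta, signal, noise, visibility)
    return p_plus - p_minus


quiet = difference_signal(0.0, signal=100.0, noise=0.0)
noisy = difference_signal(0.0, signal=100.0, noise=1000.0)   # noise 10x the signal

print(quiet, noisy)
```

In this model the difference equals S·V·cos θ regardless of N. Note that only the mean background cancels; statistical fluctuations of the background counts would still contribute noise to the difference in a real measurement.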

Discussion

This work has demonstrated a novel and robust approach to transfer and recover quantum coherence via scattered photons by realizing a multimode, imaging time-bin interferometer equipped with a single-photon-detector-array sensor. Our quantum receiver achieves excellent temporal precision of ≈120 ps as well as the ability to spatially resolve the field-of-view with excellent time-bin visibility across the whole sensor area. Each pixel independently received the coherence from the spatial modes of the scattered photons. The maximum observed visibility was 95%, which remained within a <10% variation over a wide scattering angle range of –45° to +45°, recovered from photons through a scattering, non-line-of-sight channel. All these features have the potential to open up new avenues in many applications, including quantum communication around-the-corner, low-light and 3D imaging, background noise rejection, around-the-corner imaging10, velocity measurement12, LIDAR, object detection and identification, etc.

We demonstrated the application potential of our method through two examples: non-line-of-sight quantum communication and contrast enhancement of single-photon images. A more detailed and quantitative analysis of these applications will be given elsewhere. We believe our results will instigate further research on the application of coherence in quantum sensing, imaging, and communication and lead to novel areas of application.

Materials and methods

Detailed experimental setup

The experimental setup (Fig. 5) consists of two unbalanced Michelson interferometers (UMIs). The first UMI – Converter – creates the coherence while the second one – Analyzer – measures the coherence. The laser (PicoQuant laser diode LDH 8-1596, PDL-800B driver) emits 697 nm, 300 ps multimode light pulses at a rate of 5 MHz. Light coming out of the multimode fiber is collimated, attenuated to 0.3 photons per pulse and sent through the Converter. The path difference ∆C between the long and short arms turns each incoming state into a coherent superposition of two time-bins separated by 0.57 ns. They are sent towards the scattering surface and the scattered photons are collected by the Analyzer. The path difference between the short and the long arm in the Analyzer is ∆A. A photon coming out of the Analyzer could have traversed one of four possible paths: short-short (SS), short-long (SL), long-short (LS), and long-long (LL). If the path differences in each UMI match, i.e., ∆C ≈ ∆A, the SL and LS paths become indistinguishable and result in interference. Piezoelectric crystals are placed in the short arm of each interferometer to adjust the path difference. The photons emerging from the Analyzer are focused onto an SPDA containing 64 single-photon avalanche photodiodes. The pixels of the SPDA are arranged in an 8 × 8 row-column configuration, with a pitch of 75 µm. A focusing lens (Canon EF-S 18-200 mm f/3.5-5.6) is used to illuminate the desired range of pixels with its focal length set to about 60 mm, thus yielding an angular resolution of 0.07° and a total angular field of 0.5°. The detection efficiency at each pixel was 12%.
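The quoted angular figures follow from the pixel pitch and the focal length. A quick check, assuming a simple thin-lens picture with the sensor in the focal plane (an idealisation) and using hypothetical variable names, also converts the 0.57-ns time-bin separation into the equivalent optical path difference in vacuum:

```python
import math

C_MM_PER_NS = 299.792458   # speed of light in vacuum, in mm per ns

pixel_pitch_mm = 0.075     # 75 µm pitch
focal_length_mm = 60.0     # "about 60 mm"
pixels_per_side = 8
time_bin_ns = 0.57

# Angle subtended by one pixel pitch and by the full 8-pixel width.
angle_per_pixel_deg = math.degrees(math.atan(pixel_pitch_mm / focal_length_mm))
field_of_view_deg = math.degrees(
    math.atan(pixels_per_side * pixel_pitch_mm / focal_length_mm)
)

# Vacuum-equivalent optical path difference for the time-bin delay
# (this is the total, i.e. round-trip, difference in a Michelson geometry).
optical_path_difference_mm = C_MM_PER_NS * time_bin_ns

print(round(angle_per_pixel_deg, 3))
print(round(field_of_view_deg, 3))
print(round(optical_path_difference_mm, 1))
```

This gives roughly 0.07° per pixel and a little under 0.6° across the array, in line with the rounded values in the text, and a path difference of about 171 mm.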

The Converter creates two pulses separated by 0.6 ns. The path difference between the two short-long (SL) and long-short (LS) pulses can be adjusted using the piezoelectric actuator placed in the short arm. A glass cube is present in the long arm of both interferometers to make the interferometers balanced in the spatial domain. The Analyzer recombines the pulses coming via SL and LS paths and the output is studied by the 8 × 8 SPDA. During the initial alignment, the incident angle was θ = 25° (figure not to scale)

Compensation for spatial-mode distortion

A time-bin encoded photon entering an Analyzer with a variable angle of incidence causes a lateral offset between the paths impinging at the exit beamsplitter24,49, causing degradation of interference visibility. Channel-induced spatial-mode distortions further lower the interference quality. The root cause of this is the inherent asymmetry of the unbalanced Michelson interferometers. A compensation technique is to use a glass material with appropriate length and refractive index to create a virtual mirror closer to the beamsplitter. In this way, the interferometer, although asymmetric in time, becomes symmetric in the spatial-mode domain. We placed a 118-mm-long glass cube with refractive index 1.4825 in the long arm to match the beamsplitter-to-virtual-mirror distance with the short arm (see ref. 49 for more details and the analytical formula). This compensation not only improves performance at higher angles of incidence, but is also necessary to enable high interference visibility with a multimode beam.

Imaging while observing photon interference

The intensity of the image at different instants of time is shown in Fig. 6. As the phase between time-bins is varied at the Converter, the intensity varies across the whole image and the variation across all illuminated pixels is coherent, i.e., they vary in unison, similar to Fig. 3c. The figure only shows events detected during the 0.6-ns window – centered around the middle-peak. The visibility was in the range 0.85−0.95.

Correlation of patterns

Here we explain the method by which Fig. 4e was plotted. Figure 7a shows the same image (right) along with the observed visibility (left) across all the SPDA pixels. The visibility was calculated as V = (Imax − Imin)/(Imax + Imin), where Imax (Imin) is the maximum (minimum) count in a phase-signature cycle. Pixel (1,1) was the trigger, and (1,2), (2,2), and (5,8) had very high dark counts. The normalized observed patterns at these three defective pixels are shown in Fig. 7b, where no correlation with the reference pattern was visible. Figure 7c shows the observed (normalized) patterns at the bottom six pixels of column 4. The resemblance with the reference pattern was quite pronounced. On the other hand, Fig. 7d shows the observed (normalized) patterns for the bottom three pixels of column 1, which detected relatively few photons and are much more distorted. They lie between the two previous extremes, and so does their correlation value with the reference pattern. Before calculating the cross-correlation, both the reference and observed patterns were shifted downward by 0.5 for ease of analysis.
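The visibility definition above translates directly into code. The sketch below implements the max/min formula from the text and, for comparison, a sinusoid-based estimate; the latter is an assumption about what a curve-fit could look like (it presumes evenly spaced phase steps covering exactly one cycle, in which case the least-squares sinusoid reduces to simple Fourier sums), and the function names are hypothetical.

```python
import math


def visibility_minmax(counts):
    """V = (Imax - Imin) / (Imax + Imin) over one phase-signature cycle."""
    i_max, i_min = max(counts), min(counts)
    return (i_max - i_min) / (i_max + i_min)


def visibility_fit(counts):
    """Visibility from a least-squares sinusoid.

    Assumes the counts are sampled at evenly spaced phases covering
    exactly one full cycle, so the fit reduces to Fourier projections.
    """
    n = len(counts)
    mean = sum(counts) / n
    a = 2.0 / n * sum(c * math.cos(2.0 * math.pi * k / n) for k, c in enumerate(counts))
    b = 2.0 / n * sum(c * math.sin(2.0 * math.pi * k / n) for k, c in enumerate(counts))
    return math.hypot(a, b) / mean


# Toy fringe: 24 phase steps, true visibility 0.95, arbitrary phase offset.
fringe = [1000.0 * (1.0 + 0.95 * math.cos(2.0 * math.pi * k / 24 + 0.4)) for k in range(24)]

v_minmax = visibility_minmax(fringe)
v_fit = visibility_fit(fringe)

print(v_minmax, v_fit)
```

On this noiseless toy fringe the fit recovers the visibility essentially exactly, while the max/min estimate is slightly lower because the sampled phases miss the true extrema. With noisy counts the max/min estimate can instead be biased upward, which is one reason a fit may be preferred.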

a Visibility across all the pixels (left) and the reconstructed image drawn from correlation (right). b Observed (normalized) pattern at the three defective pixels having high dark counts. The correlation with the reference pattern is lower than the threshold. c Observed (normalized) pattern at the bottom six pixels of column four. The correlation with the reference pattern is higher than the threshold. d Observed (normalized) pattern at the bottom three pixels of column one. The correlation with the reference pattern is between the two previous extremes but still lower than the chosen threshold. By plotting the pixels with correlation higher than the threshold, the image was reconstructed with enhanced contrast as shown in panel a (right)

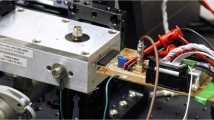

The single-photon-detector-array sensor

The SPDA camera is composed of 64 single-photon avalanche diodes arranged in a square pattern with 8 rows and 8 columns. Each row and column has eight 30 µm diameter detectors with X and Y pitch equal to 75 µm. Each photon arriving on each pixel can be individually detected with a precision of 100 ps, and over 2 billion photons per second can be detected across the array. This places stringent requirements on the time-tagging electronics which cannot be met by existing products. As a result, we have developed a new adapter board to interface the SPDA camera with our time-tagging device. The combination of the SPDA camera, time-tagging electronics and the developed adapter board with the corresponding software provided a unique quantum sensor with exquisite temporal and spatial resolution. The electrical output from the camera (Samtec Cable, SEAC-30-08-XX.X-TU-TU-2) contains 64 LVDS detection signals from the pixels along with other control signals. The developed adapter board translates the 64 LVDS camera outputs into four groups of 16 LVDS channels going into the time-tagger (Universal Quantum Devices) as shown in Fig. 8. As a result, time stamps from the camera can be read by the tagger at a rate of approximately 1 GTag/s through an external-PCI-express interface. The adapter board provides the option to sacrifice up to four pixels (1, 8, 58, and 64) to be used as external triggers. In this work only one trigger was used. When triggering is not required, the pixels can function as normal detectors. The average dark count rate was 35 cps and the deadtime was 150 ns.

Acknowledgements

We thank Duncan England, Bhashyam Balaji, Ramy Tannous, Youn Seok Lee and Alex Kirillova for discussions and technical support. This work was supported by the National Research Council Canada, Defence Research Development Canada, Industry Canada, Canada Fund for Innovation, Ontario MRI, Ontario Research Fund, and NSERC (programs Discovery, CryptoWorks21, Strategic Partnership Grant) and Canada First Research Excellence Fund (TQT).

Author information

Contributions

S.S. performed the analyses and experiments. T.J. conceptualized the idea and supervised the study. Both authors contributed to writing the manuscript.

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Sajeed, S., Jennewein, T. Observing quantum coherence from photons scattered in free-space. Light Sci Appl 10, 121 (2021). https://doi.org/10.1038/s41377-021-00565-y
