Abstract

Unwise use of irrigation water in water-scarce areas exacerbates water scarcity, reduces crop yield, and wastes resources. In the Tigray region of Ethiopia, where water is scarce and a decisive resource, no regional-level validation of on-farm irrigation scheduling had been conducted before this study. The objectives of this study were to (i) validate farmers’ irrigation scheduling practices and (ii) optimize water consumption to increase the irrigated area and the number of beneficiary farmers. Eight irrigation schemes were purposively selected from across the Tigray region. Primary data, acquired through field measurements, observations, and discussions, were supplemented with secondary data. Farmers' irrigation scheduling practices were predominantly of the "Fixed" type, characterized by constant irrigation intervals and amounts. None of these practices were optimal: 55% of the plots were over-irrigated and 45% were under-irrigated. Over-irrigation ranged from 1350 m³ in a garlic plot in the Mesima scheme to 1,327,067 m³ in a maize plot in the Serenta scheme. This excess water could irrigate an additional 2 to 148 hectares, benefiting 7 to 296 more farmers, respectively. Conversely, under-irrigation in the Fre Lekatit scheme caused yield reductions of 10,445 kg for potato and 138,499 kg for maize. Because most schemes in the Tigray region are semi-arid, water conservation is imperative. Renovating regional-level irrigation scheduling by integrating performance assessment, enhancing field-level water productivity, and establishing a remote-sensing-based 'Real-Time Irrigation Scheduling System' is deemed necessary to sustain dryland irrigated agriculture.



1 Introduction

Globally, the demand for fresh water is increasing as the population grows, and competition for water among multiple users is intensifying [1,2,3]. In fact, irrigated agriculture consumes the majority (70%) of the world's fresh water, with an anticipated 60% increase by 2025 [4,5,6]. However, fresh water remains a scarce and declining resource [1, 7], particularly in drylands, where it is a critical and determinant resource for improving agricultural productivity and, thereby, livelihoods [8]. The scarcity of this resource threatens the sustainability of development [9, 10], while its declining trend puts greater stress on irrigated agriculture.

Considering that farmer-led small-scale farms still contribute over 80% to global agricultural produce [11], it is imperative to advance irrigated agriculture through the use of appropriate soil water monitoring technologies and decision support tools. This advancement aims to improve water use efficiency (WUE), thereby increasing crop production and enhancing food security [3, 6, 7].

However, in developing countries like Ethiopia, much of the focus in irrigation schemes is primarily on the construction of hydraulic structures, with limited or no attention given to field irrigation water management [9, 12, 13]. This construction-focused approach, without proper field water management, is leading to water wastage, along with environmental problems such as waterlogging and secondary soil salinization [9, 14, 15]. Consequently, many irrigation schemes in Ethiopia are not performing as expected based on their design [3, 16].

Proper irrigation scheduling, the determination of when and how much water to apply to a field crop [15, 17, 18], is a crucial tool for monitoring and reducing water losses in the field [7]. It enables increased irrigated area and production [11], while also helping to prevent crop water stress, nutrient leaching, runoff, and other related environmental hazards [6].

In Ethiopia, farmer-led field irrigation management practices are widespread, with farmers making decisions on irrigation scheduling and selecting irrigation methods (field watering techniques) based solely on intuition, without considering soil, plant, and weather parameters [14]. These practices often involve the blanket application of water through traditional surface irrigation methods [9], resulting in low water use efficiency (35–40%) [10, 19]. Consequently, irrigated fields may suffer from either over-irrigation, which increases the cost of production and leads to nutrient leaching, or under-irrigation, resulting in reduced productivity due to water shortage during critical plant growth stages [20].

Poor irrigation scheduling practices are commonly recognized as a major challenge contributing to the under-performance of irrigation schemes and threatening the sustainability of increased production [14, 21]. Traditional irrigation scheduling practices have predominantly led to low production and water productivity, decreased irrigated area, and environmental problems [14].

In regions such as Tigray in Ethiopia, where water scarcity poses a significant challenge to sustaining dryland agriculture [22, 23], irrigation water must be used efficiently and effectively. This requires a clear and detailed understanding of field water requirements and supply. Unwise use of available water resources, particularly in drylands, exacerbates water scarcity [8] and leads to low and poor-quality yields for farmers who do not practice proper irrigation, wasting water, labor, and other related resources [9, 13].

Implementing suitable, precise, and water-saving irrigation strategies [5], along with proper field irrigation management, is essential in the Tigray region, one of the most drought-prone regions of Ethiopia [14].

There is a pressing need to scientifically evaluate the performance of irrigation scheme water management at wider scales, spanning from the water source to field levels. This comprehensive evaluation is essential for acquiring complete information, enabling holistic decision-making, and implementing corrective measures in water management. This is especially crucial in water-scarce areas, where there is a growing scarcity and demand for water by multiple users [9].

To this effect, validating field-level farmers’ irrigation scheduling is of paramount importance for water conservation [3]. However, there have been no recent research updates on region-level validation of farmers' field irrigation scheduling in the Tigray region. Most of the irrigation interventions in the region have primarily focused on the construction of major infrastructures, with little emphasis on on-farm irrigation scheduling practices in regional research efforts. Field-level irrigation studies conducted in the irrigation schemes of the region so far have been site-specific, including PhD, MSc, and literature-based research, with a majority of them concentrating on the socio-economic impacts of irrigation development.

To date, no region-wide, field-level validation of farmers’ irrigation scheduling had been conducted in the Tigray region. This study is therefore intended to provide essential field information on the current status of field irrigation practices, to inform decision-making and policy formulation on the efficient use and optimization of scarce water resources, and thereby to sustain irrigated agriculture in this semi-arid region. Furthermore, this study highlights irrigation technologies that could potentially evolve into Remote Sensing (RS)-based monitoring and scheduling of field irrigation.

Hence, this regional-level, field-based study on farmers’ irrigation practices can be regarded as a new initiative. The scope of this research was thus limited to the field-level validation of current farmers’ irrigation scheduling practices at different schemes in the Tigray region and the analysis of water-saving possibilities for improved water management in the region. The objectives of this research were to (i) validate farmers’ irrigation scheduling practices against model scheduling results and (ii) evaluate and optimize water consumption for increased irrigated area and beneficiaries, thus contributing to the improvement of regional irrigation water management and crop yield through decision- and policy-making.

2 Materials and methods

2.1 Description of selected irrigation schemes

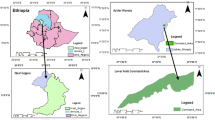

For this study, eight different irrigation schemes were selected from the Tigray region, which are distributed across the region as shown in Fig. 1.

Descriptions of each scheme, in terms of geographic coordinates, altitude, type, year of construction, capacity, command area size, and number of beneficiaries, are presented in Table 1.

2.2 Scheme selection criteria

The eight irrigation schemes were purposively selected because each lies within 10 km of, and is topographically similar to, a meteorological station with a complete data record. These stations provide all the climate data required by the FAO CROPWAT 8.0 model [24,25,26], an internationally recognized model for quantifying reference evapotranspiration (ETo), crop water requirement (ETc), irrigation requirement (IR), and irrigation scheduling [16, 27,28,29].

Accordingly, long-term daily maximum temperature (Tmax), minimum temperature (Tmin), relative humidity, wind speed, and sunshine hours were collected from the nearest stations and used as input for quantifying ETo. Additionally, rainfall data at each scheme were incorporated (Table 2) [24].
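For reference, CROPWAT 8.0 computes ETo from these inputs with the FAO Penman–Monteith method (FAO Irrigation and Drainage Paper 56). The standard daily form is shown below, followed by the crop-coefficient step that converts ETo to ETc. The symbols follow FAO-56 and are introduced here only for illustration: R_n is net radiation and G soil heat flux (MJ m⁻² d⁻¹), T mean daily air temperature (°C), u_2 wind speed at 2 m (m s⁻¹), e_s − e_a the vapour pressure deficit (kPa), Δ the slope of the saturation vapour pressure curve and γ the psychrometric constant (kPa °C⁻¹), and K_c the crop coefficient; ETo and ETc are in mm d⁻¹.

```latex
ET_o = \frac{0.408\,\Delta\,(R_n - G) + \gamma\,\dfrac{900}{T + 273}\,u_2\,(e_s - e_a)}{\Delta + \gamma\,(1 + 0.34\,u_2)},
\qquad
ET_c = K_c \, ET_o
```

Tmax and Tmin enter through T, Δ and e_s; relative humidity through e_a; sunshine hours through R_n; and wind speed through u_2.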

2.3 Data collection procedure

Most of the primary data were collected directly from each scheme while farmers were irrigating their fields. The collected data included plot size, type of crop, length of growth period (LGP), planting date, irrigation intervals, application time, furrow dimensions, soil texture, and discharge of irrigation water applied at each field. Discharge measurements were conducted using a portable Parshall flume, as depicted in Fig. 2 [43].

For the on-field farmers’ irrigation scheduling study and validation, a total of 22 plots were monitored through direct observation in the field. All necessary information was obtained from farmers and development agents at each respective plot, supplemented by additional secondary information gathered from Agricultural Offices, as shown in Table 3.

2.4 Processing farmers’ and model’s irrigation scheduling

To process farmers’ and model’s irrigation scheduling, the FAO CROPWAT 8.0 input data (Climate/ETo, Rain, Crop, and Soil) shown in Tables 2 and 3 were used to compute the crop water requirement for all 22 plots. Subsequently, farmers’ irrigation intervals and measured irrigation depths, as indicated in Table 4, were used to process the model’s irrigation scheduling with reference to the field capacity (FC) of the specific soil at each plot.

The farmers’ scheduling, characterized by fixed irrigation intervals and depths throughout the crop growth season, was evaluated through iterative model runs and optimization based on the principle of 'irrigating when Readily Available Water (RAW) is depleted'. This principle, which accounts for the specific soil texture, crop type, and growth stage, restores soil moisture to field capacity (FC) at each irrigation and thereby avoids yield reduction due to water stress [30]. The ultimate target is thus to maximize crop transpiration and yield [12].
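To make the RAW principle concrete, the sketch below uses the FAO-56 relations TAW = 1000 (θFC − θWP) Zr and RAW = p · TAW, then estimates how many days of crop evapotranspiration it takes to deplete RAW. The soil moisture contents, root depth Zr, depletion fraction p, and ETc rate are hypothetical values, and the function names are illustrative; CROPWAT performs this bookkeeping internally with stage-dependent root depth and ETc, which this simplified sketch does not attempt.

```python
import math


def total_available_water(theta_fc: float, theta_wp: float, root_depth_m: float) -> float:
    """TAW (mm) = 1000 * (theta_FC - theta_WP) * Zr, with volumetric contents in m3/m3."""
    return 1000.0 * (theta_fc - theta_wp) * root_depth_m


def readily_available_water(taw_mm: float, p: float) -> float:
    """RAW (mm) = p * TAW, where p is the fraction of TAW a crop can use without stress."""
    return p * taw_mm


def days_to_deplete_raw(raw_mm: float, etc_mm_per_day: float) -> int:
    """Whole days of crop evapotranspiration, with no rain, before depletion reaches RAW."""
    return math.floor(raw_mm / etc_mm_per_day)


# Hypothetical inputs (not study data): loam soil, maize root zone at mid-season
taw = total_available_water(theta_fc=0.30, theta_wp=0.15, root_depth_m=1.0)
raw = readily_available_water(taw, p=0.55)
interval = days_to_deplete_raw(raw, etc_mm_per_day=6.0)
print(f"TAW = {taw:.1f} mm, RAW = {raw:.1f} mm, irrigate every {interval} days")
```

With these assumed values the sketch suggests irrigating about every 13 days with a net depth of about 82.5 mm; a longer fixed interval at the same ETc would push depletion beyond RAW and into crop water stress.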

By comparing each farmer’s schedule with the corresponding model schedule, it was possible to determine, for each plot: whether the irrigation intervals were optimal or excessively long; whether the plot was over- or under-irrigated; the quantity of water lost relative to the model irrigation depth; the crop yield reduction caused by improper scheduling; and, from the over-irrigation losses, the additional area that could be irrigated and the additional number of beneficiaries [24].

3 Results

3.1 Farmers’ on-field irrigation practices

In all of the studied irrigation schemes in the Tigray region, no metering devices were installed to monitor canal flow and soil moisture in the field. Water was simply applied to the fields based on farmers' observations and guesses. Responsibility for field water control has been entirely left to the farmers in all of the schemes.

Farmers in the Tigray region prepare furrows using animal-drawn plows during land preparation and planting, and they use shovels (locally called 'zaba') to guide the water during irrigation. The width of the furrows is determined by the widths of these tools. Irrigation is carried out by the farmers themselves, who are sometimes teenagers. They decide on the application time for each furrow throughout the crop growth period based on the flow advance from the head to the tail end of the furrow: when the water reaches the tail end, the irrigator cuts off the flow and turns it to the next furrow, often without sufficient knowledge of, or data on, the quantity of water a plot requires (Fig. 3). Hussain et al. [30] similarly noted a lack of proper estimation of crop water requirements and of proper irrigation scheduling by farmers and water managers in their study area.

In other words, the application amount (depth) remains relatively constant throughout the crop growth period, determined by the consistent furrow width, depth and discharge. Similarly, the irrigation intervals remain fixed throughout the crop growth period, as shown in Table 4.

Given the constant irrigation depth and interval in farmers’ irrigation scheduling practices in Tigray, this type of scheduling is best described as a 'Fixed Application Practice'. Similarly, Dechmi et al. [24] observed fixed irrigation depth practices among farmers in northeastern Spain.

However, because crop water requirements vary across growth stages, fixed application does not guarantee enough water to meet peak requirements during the flowering and grain-setting stages of most crops [26]. Similarly, Burton et al. [15] noted that farmers' application amounts do not always match crop requirements at each growth stage.

Consequently, the Fixed Application Practice is susceptible to either unnecessary over-irrigation (leading to resource wastage) or under-irrigation (leading to crop-water stress and subsequent decrease in crop yield).

3.2 Farmers’ and model’s irrigation scheduling

The irrigation scheduling of both farmers and the model for each plot is presented in Fig. 4, revealing either over- or under-irrigation on every plot. Upward bars, observed in the majority (55%) of the plots (e.g., Serenta plot 2 maize, Serenta plot 3 tomato, May Nigus plot 3 maize, Mesima plot 1 garlic, Mesima plot 2 spinach, Mesima plot 3 lettuce, Mesima plot 4 cabbage, Fre Lekatit plot 4 potato, Semha plot 1 cabbage, Semha plot 2 cabbage, Hada Alga plot 1 melon, and Hada Alga plot 2 melon), indicate over-irrigation, that is, applications that raise soil moisture beyond the field capacity line into the saturation range. In contrast, downward bars crossing the 'Readily Available Water' line in the remaining 45% of plots (e.g., Serenta plot 1 onion, Meskebet plot 1 tomato, Meskebet plot 2 maize, May Nigus plot 1 maize, and May Nigus plot 2 tomato) signify under-irrigation.

3.3 Over- or under-irrigation scheduling practices on crop growth stage and seasonal basis

i. On a crop growth stage basis

Figure 5 illustrates farmers' over- and under-irrigation applications based on crop growth stages, with 77% and 23% of farmers practicing over- and under-irrigation at the initial stage, 64% and 36% at the crop development stage, 50% and 50% at the midseason stage, and 55% and 45% at the late-season stage, respectively.

The results shown in Fig. 5 confirm that most farmers (77% and 64%) over-irrigate during the first two crop growth stages (the initial and crop development stages, respectively). This suggests that these farmers disregard the lower crop water requirement during these stages and the potential for water to be lost below the still-shallow root zone. In contrast, the under-irrigation practiced by 50% of farmers at the midseason stage plays a decisive role in crop yield loss, owing to water shortage during this stage [45].

ii. On a seasonal basis

The cumulative irrigation application depths per season for both farmers and the model are illustrated in Fig. 6. The majority of plots (55%) were over-irrigated, while the remaining plots (45%) were under-irrigated. Here, farmers' irrigation depth bars above the model application depth line are classified as over-irrigation, whereas those below the line are classified as under-irrigation.
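The classification rule above can be expressed as a small sketch that compares each plot's cumulative seasonal depth with the model depth and reports the share of plots in each class. The `Plot` type, plot names, and depths are hypothetical placeholders, not the measurements behind Fig. 6.

```python
from dataclasses import dataclass


@dataclass
class Plot:
    name: str
    farmer_depth_mm: float  # cumulative seasonal depth applied by the farmer
    model_depth_mm: float   # cumulative seasonal depth from the model schedule


def classify(plot: Plot) -> str:
    """Label a plot relative to the model's seasonal depth line."""
    if plot.farmer_depth_mm > plot.model_depth_mm:
        return "over"
    if plot.farmer_depth_mm < plot.model_depth_mm:
        return "under"
    return "matched"


def shares(plots: list[Plot]) -> dict[str, float]:
    """Percentage of plots falling in each class."""
    counts: dict[str, int] = {}
    for plot in plots:
        label = classify(plot)
        counts[label] = counts.get(label, 0) + 1
    return {label: 100.0 * n / len(plots) for label, n in counts.items()}


# Hypothetical seasonal depths in mm (farmer, model)
plots = [
    Plot("A", 900.0, 650.0),
    Plot("B", 400.0, 520.0),
    Plot("C", 700.0, 610.0),
    Plot("D", 300.0, 480.0),
]
print(shares(plots))  # {'over': 50.0, 'under': 50.0}
```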

Considering the seasonal over-irrigation depth (Fig. 6) and the area (ha) under each specific crop (Table 3), the excess water resulting from over-irrigation in the eight schemes ranged from a minimum of 1350 m³ in the garlic plot (MEG) in the Mesima scheme to a maximum of 1,327,067 m³ in the maize plot (SM) in the Serenta scheme.
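The conversion from an excess depth to an excess volume rests on the identity that 1 mm of water spread over 1 ha equals 10 m³ (0.001 m × 10,000 m²). A minimal sketch, assuming the excess is the positive difference between the farmer's and the model's seasonal depths; the function name and the example inputs are hypothetical.

```python
def excess_volume_m3(farmer_depth_mm: float, model_depth_mm: float, area_ha: float) -> float:
    """Seasonal over-irrigation volume in m3; zero when the plot is under-irrigated."""
    excess_mm = max(0.0, farmer_depth_mm - model_depth_mm)
    # 1 mm over 1 ha = 0.001 m * 10,000 m2 = 10 m3
    return excess_mm * 10.0 * area_ha


# Hypothetical plot: 900 mm applied where the model requires 650 mm, on 2 ha
print(excess_volume_m3(900.0, 650.0, 2.0))  # 5000.0
```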

4 Discussion

4.1 Implications of farmers’ over-or under- irrigation scheduling practices

In various irrigation schemes across Ethiopia, applying the right amount of water at the right time has been a subject of concern [16]. Similarly, the results for the eight schemes in the Tigray region (Figs. 4–6) demonstrate that none of the farmers' irrigation practices was optimal. They were characterized by either over- or under-irrigation, with soil moisture raised into the saturation range or depleted beyond Readily Available Water (RAW), respectively.

The over-irrigation practices of the farmers in the study areas could be attributed to lengthy irrigation intervals. For instance, maize fields in the Fre Lekatit scheme had irrigation intervals of 30 days, while in the Ruba Feleg scheme, intervals for the same crop were as long as 40 days, although the model-validated optimum intervals were 15 and 21 days, respectively. Because of these lengthy intervals, most farmers feel compelled to over-irrigate their fields to the point of saturation. In some schemes, such as Ruba Feleg, farmers even quarreled while queuing to divert canal flow and saturate their plots after long irrigation intervals, which reached up to 40 days for barley plots.

Over-irrigation after extended irrigation intervals is not expected to cause immediate waterlogging or yield reduction where drainage is good and groundwater is deep. Instead, the excess applied water would typically be lost as gravitational water within a short period (1 to 3 days), especially in well-drained environments and soils with higher permeability [46]. However, when the groundwater table rises above the optimum depth range (1.5–2 m below the surface) [47], over-irrigation may lead to capillary rise and waterlogging, resulting in secondary soil salinization. Although there were no indications of waterlogging in any of the 22 monitored plots, including those where farmers practiced over-irrigation, the cumulative effect of continuous over-irrigation may raise groundwater levels and cause waterlogging in the future.
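The drainage argument can be illustrated with a simple "bucket" view of the root zone: a single irrigation can refill at most the current depletion (the deficit below field capacity), and anything beyond that is assumed to drain below the root zone as gravitational water. This sketch is a simplification that ignores runoff, evaporation during application, and the timing of drainage; the function name and inputs are hypothetical.

```python
def split_application(applied_mm: float, depletion_mm: float) -> tuple[float, float]:
    """Split one irrigation into water stored in the root zone and water drained below it.

    Only the current depletion can be stored before the soil returns to field capacity;
    the remainder is treated as gravitational (drainage) water.
    """
    stored_mm = min(applied_mm, max(depletion_mm, 0.0))
    drained_mm = applied_mm - stored_mm
    return stored_mm, drained_mm


# Hypothetical event: 120 mm applied to a root zone that is 82.5 mm below field capacity
stored, drained = split_application(120.0, 82.5)
print(stored, drained)  # 82.5 37.5
```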

In line with this, Hiekal [17] reported that surface irrigation delivery systems are generally characterized by excessively long intervals between successive deliveries, so irrigators often compensate by applying excessive water during their turns. Under such practices, deep-rooted crops and soils with sufficient permeability can perform better than shallow-rooted crops and/or soils with lower water-holding capacity.

Under-irrigation practices during the midseason stage, particularly during critical stages such as flowering and grain setting, often result in yield loss due to water shortage [31]. Sisay [16] similarly confirmed that over-irrigation practices during early stages and under-irrigation during the flowering stage of crop growth can significantly reduce crop productivity.

In irrigation schemes where farmers practice over-irrigation, there is a pressing need for improvements in their scheduling and application efficiencies [16]. The level of water management, and consequently the amount of water losses, inequitable water distribution, and crop yield reduction due to poor scheduling, can be influenced by various factors. These factors include poor training, low levels of motivation, inadequate data collection, skills, and knowledge, as well as organizational and managerial capacity [32, 33]. A lasting solution is therefore required to prevent both over- and under-irrigation practices by revitalizing irrigation management and establishing reliable irrigation intervals for optimum scheduling.

Moreover, the development of suitable irrigation scheduling techniques can help avoid both excessive and insufficient irrigation applications, thereby enhancing and maintaining crop yields [7, 34]. Efficient irrigation water management approaches have the potential to increase crop production [16]. Montoro et al. [33] also emphasized that irrigation scheduling can be enhanced through the provision of relevant and effective training, motivation, communication, and measurements, along with the availability of financial resources.

4.2 Optimizing over-irrigation water losses for additional irrigated area and beneficiaries

In irrigation schemes with poor on-farm water management, more water can be lost than is utilized. Inadequate irrigation scheduling, at both the main-system and field levels, is considered a major cause of such losses [35]. Although little attention is usually given to poor or inadequate water management at the scheme and field levels, it is evident that schemes may experience a 'loss or forgone productive potential over several years' when irrigation and drainage systems are not properly and promptly maintained [33].

If proper field water management practices are implemented in these schemes, the water lost to over-irrigation can be saved and used productively, for example to irrigate more fields and thereby benefit more farmers. Using the model seasonal irrigation depth for each crop (Table 4) and the plot area per head in each scheme, the over-irrigation volumes reported in Sect. 3.3 (from 1350 m³ in the garlic plot of the Mesima scheme to 1,327,067 m³ in the maize plot of the Serenta scheme) can be converted into additional irrigated area (from 2 ha in the Mesima scheme to 148 ha in the Serenta scheme), which in turn would benefit 7 to 296 more farmers, respectively. In agreement with this, Hussain et al. [30] pointed out that a considerable amount of water was saved through model-based irrigation scheduling compared with farmers' scheduling in their study area.
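The conversion described above can be sketched as dividing the saved volume by the model's seasonal requirement per hectare, then dividing the resulting area by the plot area per head. Rounding the number of farmers down to whole plots is an assumption made here, and the example inputs are hypothetical rather than the Serenta or Mesima values.

```python
import math


def additional_area_ha(excess_m3: float, model_seasonal_depth_mm: float) -> float:
    """Area (ha) the saved volume could irrigate at the model's seasonal depth."""
    m3_per_ha = model_seasonal_depth_mm * 10.0  # 1 mm over 1 ha = 10 m3
    return excess_m3 / m3_per_ha


def additional_farmers(area_ha: float, plot_area_per_head_ha: float) -> int:
    """Additional farmers served, rounding down to whole plots (an assumption)."""
    return math.floor(area_ha / plot_area_per_head_ha)


# Hypothetical scheme: 50,000 m3 saved, 500 mm seasonal model depth, 0.25 ha per farmer
area = additional_area_ha(50_000.0, 500.0)
print(area, additional_farmers(area, 0.25))  # 10.0 40
```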

Conversely, in the irrigation schemes practicing under-irrigation, the percentage of crop yield reduction due to water shortage was estimated using the FAO CROPWAT 8.0 model. The resulting yield reductions ranged from 10,445 kg of potato to 138,499 kg of maize in the Fre Lekatit scheme. In other words, farmers practicing under-irrigation obtain crop yields below those predicted by the model under optimum irrigation. Such losses could have been avoided through proper water management, benefiting more farmers and improving their livelihoods.
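CROPWAT 8.0 estimates yield reduction with the FAO yield response relation 1 − Ya/Ym = Ky (1 − ETa/ETm), where Ya and Ym are actual and maximum yield, ETa and ETm are actual and maximum crop evapotranspiration, and Ky is the yield response factor. The sketch below applies the single seasonal form with hypothetical inputs, including an assumed Ky of 1.25 and an assumed maximum yield. CROPWAT itself works with stage-specific Ky values, so this is a simplification for illustration, not a reproduction of the study's calculation.

```python
def relative_yield_reduction(ky: float, eta_mm: float, etm_mm: float) -> float:
    """1 - Ya/Ym = Ky * (1 - ETa/ETm), clipped to the range [0, 1]."""
    reduction = ky * (1.0 - eta_mm / etm_mm)
    return min(max(reduction, 0.0), 1.0)


def yield_loss_kg(ky: float, eta_mm: float, etm_mm: float,
                  max_yield_kg_per_ha: float, area_ha: float) -> float:
    """Absolute yield loss (kg) on a plot of the given area."""
    return relative_yield_reduction(ky, eta_mm, etm_mm) * max_yield_kg_per_ha * area_ha


# Hypothetical maize plot: ETa is 80% of ETm, assumed Ky = 1.25 and Ym = 6000 kg/ha on 2 ha
loss = yield_loss_kg(ky=1.25, eta_mm=400.0, etm_mm=500.0,
                     max_yield_kg_per_ha=6000.0, area_ha=2.0)
print(round(loss))  # 3000
```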

4.3 Renovating regional-level irrigation scheduling practices

Most (seven) of the studied schemes, across which 55% of farmers practice over-irrigation, are semi-arid: annual reference evapotranspiration exceeds rainfall by up to threefold (Table 2), giving an aridity index (AI) of 0.20 < AI < 0.50 [36]. This indicates that water conservation in such dryland areas should be a top priority requiring efficient and effective management [30]. However, despite the water-scarce environment, perceptions of water 'loss' or 'inefficient use' remain unresolved, and water loss is not given top priority [35]. Where under-irrigation occurs, crops are often stressed throughout the irrigation season because water applications fall below the net irrigation requirement [27].
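The aridity index referred to here is the ratio of annual precipitation to potential evapotranspiration, AI = P/PET, and the commonly used UNEP classification places semi-arid conditions at 0.20 ≤ AI < 0.50. The sketch below uses ETo as the PET estimate, which is an assumption, together with hypothetical annual totals.

```python
def aridity_index(annual_precip_mm: float, annual_pet_mm: float) -> float:
    """AI = P / PET (dimensionless)."""
    return annual_precip_mm / annual_pet_mm


def aridity_class(ai: float) -> str:
    """Aridity zone using the UNEP AI thresholds."""
    if ai < 0.05:
        return "hyper-arid"
    if ai < 0.20:
        return "arid"
    if ai < 0.50:
        return "semi-arid"
    if ai < 0.65:
        return "dry sub-humid"
    return "humid"


# Hypothetical scheme where annual ETo is three times the annual rainfall
ai = aridity_index(annual_precip_mm=500.0, annual_pet_mm=1500.0)
print(f"AI = {ai:.2f} -> {aridity_class(ai)}")  # AI = 0.33 -> semi-arid
```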

In relatively newer irrigation schemes in the Tigray region, where water from dams, diversions, and deep wells is ample, farmers tended to over-irrigate. Conversely, farmers in relatively older schemes, facing water shortages due to problems such as reservoir siltation, malfunctioning gates, and canal breakage, practiced under-irrigation. Furthermore, farmers practicing over-irrigation were able to grow diversified crops, including vegetables such as onion, tomato, potato, cabbage, lettuce, spinach, garlic, watermelon, and pepper. By contrast, farmers applying under-irrigation have shifted from diversified cropping to cereal-based cropping, mostly maize (in the Meskebet, May Nigus, and Fre Lekatit schemes) and barley (in the Ruba Feleg scheme).

Moreover, over time and in the absence of a regional focus on irrigation management practices, water supply becomes limited and under-irrigation becomes unavoidable [18]. A shift to cereal crops driven by water shortage and mismanagement of available water in irrigation schemes will lead to low yields and unsustainable irrigated agriculture. To avoid under-irrigation, farmers need a reliable supply of water via the main system, which is usually managed by a governmental agency. The quality of that management depends on the motivation, skills, and knowledge of its staff and on the effectiveness of their communication with farmers [21, 32].

Yet farmers located mostly in the lower reaches of schemes, such as the Fre Lekatit scheme, complain of long irrigation intervals and water shortages affecting their crops, despite sufficient water being available in the sources or storage structures of the schemes. This issue is attributed to improper scheme-level water management, as also noted by Cakir [31].

Given the current poor status of irrigation schemes and insights from the literature, the following three key conditions are proposed for renovating regional-level irrigation scheduling practices in the Tigray region. These recommendations are intended to inform policy and decision-making aimed at addressing water management at both the scheme and field levels. The overarching goal is to ensure that every irrigation investment contributes to meeting future food demands and fostering industrial development, particularly in developing countries like Ethiopia.

i. Performance assessment through continuous scheme- and plot-level analysis

By ensuring equitable water access for all farmers within a scheme, from upper to lower positions, and by implementing proper irrigation scheduling and controlled on-field water application, this key condition would allow more farmers to benefit from the water saved by curbing over-irrigation, while also mitigating potential yield reductions due to under-irrigation.

This underscores the need for regular feedback of information from the field into water management, which constitutes a vital component of effective irrigation management. This process serves as the foundation for reliable decision-making, significantly improving the performance of water delivery services. Moreover, it enables the analysis of spatial variations in land and water productivity, while ensuring accountability [37].

Analyzing the water balance between requirement and supply serves as a valuable field-level water-saving technique by assessing crop water shortages and excess irrigation water [31]. This approach facilitates the avoidance of unnecessary over- or under-irrigation, especially for farmers following fixed irrigation schedules. It enables a shift towards appropriate irrigation depth, tailored to meet the water requirements of each crop growth stage.
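A stage-wise water balance of this kind can be sketched by subtracting the net irrigation requirement from the water supplied in each growth stage. The stage totals below are hypothetical; they mimic a fixed application (equal supply in every stage) set against a requirement that peaks at mid-season, illustrating how a fixed practice can produce early-stage excess alongside a mid-season shortage.

```python
STAGES = ("initial", "development", "mid-season", "late-season")


def stage_balance(requirement_mm: dict[str, float],
                  supply_mm: dict[str, float]) -> dict[str, tuple[str, float]]:
    """Per-stage status ('excess', 'shortage' or 'balanced') and its magnitude in mm."""
    balance: dict[str, tuple[str, float]] = {}
    for stage in STAGES:
        diff = supply_mm[stage] - requirement_mm[stage]
        if diff > 0:
            balance[stage] = ("excess", diff)
        elif diff < 0:
            balance[stage] = ("shortage", -diff)
        else:
            balance[stage] = ("balanced", 0.0)
    return balance


# Hypothetical net requirement per stage versus a fixed 150 mm supplied per stage
requirement = {"initial": 60.0, "development": 120.0, "mid-season": 220.0, "late-season": 90.0}
fixed_supply = {stage: 150.0 for stage in STAGES}
for stage, (status, mm) in stage_balance(requirement, fixed_supply).items():
    print(f"{stage}: {status} {mm:.0f} mm")
```

Replacing the fixed supply with a supply that tracks each stage's requirement would drive every stage toward "balanced", which is the shift towards stage-specific irrigation depths described above.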

ii. Enhancing water productivity at the field level

Enhancing water productivity at the field level can be achieved through equipping and training irrigators, ensuring proper irrigation intervals, and controlling the application amount in each interval. A study reported an increase in maize yield of 10 to 12 ton ha−1 through improvements in irrigation uniformity, control of irrigation depth, and the implementation of proper irrigation scheduling [12]. Similarly, David et al. [38] emphasized that farmers in developing countries could enhance productivity by adopting proven agronomic and water management practices within an enabling policy and institutional environment. To ensure a more inclusive and appropriate water management approach, various agricultural practices, including crop cultivar selection, land cultivation methods, and irrigation strategies, should be specified and considered as water-saving techniques [39].

iii. Establishment of a regional “Real-Time Irrigation Scheduling System”

Drawing on insights from existing literature, this study advocates for the implementation of a 'Real-Time Irrigation Scheduling System' integrated with remote sensing technologies at the regional level to renovate irrigation scheduling and management practices. This proposed system would require a computer-modem-direct phone line connection to access real-time weather data, which would be integrated with irrigation scheduling models. By capturing and scientifically integrating plant, soil, and weather information, this system aims to forecast irrigation schedules on a plot-by-plot basis within specific schemes across the region [11, 40,41,42]. The overarching goal is to optimize regional and national water and land resources, ultimately leading to improved economic prosperity [19, 44].
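At its core, such a system runs a daily root-zone water balance for each plot, driven by forecast weather. A minimal sketch, assuming a single plot, a constant crop coefficient Kc, forecast ETo and rainfall supplied as lists, and the refill-to-field-capacity rule used in this study; data acquisition (the weather link and remote-sensing inputs) is out of scope, and all names and numbers are hypothetical.

```python
from typing import Optional


def update_depletion(depletion_mm: float, eto_mm: float, kc: float,
                     rain_mm: float, taw_mm: float) -> float:
    """One-day root-zone depletion update (runoff and capillary rise ignored).

    Depletion is bounded below by 0 (field capacity; rain beyond that is discarded)
    and above by TAW (wilting point).
    """
    depletion = depletion_mm + kc * eto_mm - rain_mm
    return min(max(depletion, 0.0), taw_mm)


def next_irrigation(depletion_mm: float, eto_forecast: list[float], rain_forecast: list[float],
                    kc: float, taw_mm: float, raw_mm: float) -> Optional[tuple[int, float]]:
    """Return (day, net depth to refill to field capacity) for the first day depletion reaches RAW."""
    depletion = depletion_mm
    for day, (eto, rain) in enumerate(zip(eto_forecast, rain_forecast), start=1):
        depletion = update_depletion(depletion, eto, kc, rain, taw_mm)
        if depletion >= raw_mm:
            return day, depletion
    return None  # no irrigation needed within the forecast window


# Hypothetical plot: 60 mm already depleted, Kc = 1.2, four dry days forecast at ETo = 5 mm/day
plan = next_irrigation(60.0, [5.0, 5.0, 5.0, 5.0], [0.0, 0.0, 0.0, 0.0],
                       kc=1.2, taw_mm=150.0, raw_mm=82.5)
print(plan)  # (4, 84.0)
```

Running the same update for every plot in a scheme, with soil, crop, and stage data per plot, would yield the plot-by-plot schedule forecasts the proposed system aims to provide.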

5 Conclusions

Poor irrigation scheduling practices pose a significant challenge to the performance and sustainability of irrigation schemes, particularly in dryland regions. These practices not only exacerbate water scarcity but also lead to low and poor-quality yields, while wasting labor and related resources. Remarkably, in the water-scarce Tigray region of Ethiopia, a lack of regional-level validation for on-farm irrigation scheduling practices has persisted until now.

The objectives of this study were, therefore, to: (i) validate farmers’ irrigation scheduling practices; and (ii) optimize water consumption to increase the irrigated area and benefit more farmers. Through this regional-level study, farmers’ on-field irrigation scheduling was validated using the FAO CROPWAT 8.0 model.

In conclusion:

i. None of the farmers’ irrigation scheduling practices was optimal: the majority (55%) over-irrigated, leading to water losses, while the remaining 45% under-irrigated, causing crop water stress and decreased crop yield;

ii. The amount of over-irrigation was quantified and optimized using the model. Over-irrigation ranged from 1350 m³ in the garlic plot of the Mesima scheme to 1,327,067 m³ in the maize plot of the Serenta scheme. This unnecessary excess application could have irrigated an additional 2 ha in the Mesima scheme and 148 ha in the Serenta scheme, benefiting 7 and 296 more farmers, respectively;

iii. Conversely, farmers practicing under-irrigation experienced yield reductions due to water shortage, ranging from 10,445 kg of potato to 138,499 kg of maize in the Fre Lekatit scheme;

-

iv.

These non-optimal irrigation scheduling practices, encompassing over- and under-irrigation, underscores the need for policy attention and decision-making to establish efficient and effective regional-level irrigation practices, and

-

v.

Considering the semi-arid nature of most (seven) of the studied schemes, water conservation should be treated as a top priority particularly in the Tigray region, deserving efficient and effective management. Renovating regional-level irrigation scheduling practices involves integrating performance assessment and enhancing water productivity at the field level. This can be achieved through the establishment of a 'Real-Time Irrigation Scheduling System' integrated with remote sensing technologies at regional level. Such efforts are crucial for meeting future food demands and promoting agriculture-based development, especially in developing countries like Ethiopia.
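Items ii and iii rest on two pieces of arithmetic: dividing an excess volume by a seasonal irrigation requirement per hectare (and that area by a typical landholding) to estimate additional area and farmers, and the FAO yield response relationship, 1 − Ya/Ym = Ky (1 − ETa/ETm), which CROPWAT uses to estimate yield reduction under water stress. The sketch below assumes hypothetical inputs throughout; the per-hectare requirement, landholding, potential yield, Ky, and ETa/ETm values are illustrative and are not the values used for the Mesima, Serenta, or Fre Lekatit schemes.

```python
import math


def additional_area_ha(excess_m3: float, requirement_m3_per_ha: float) -> float:
    """Area the excess volume could irrigate at a given seasonal requirement."""
    return excess_m3 / requirement_m3_per_ha


def additional_farmers(extra_area_ha: float, holding_ha: float) -> int:
    """Farmers served, rounded down (a partial holding adds no farmer)."""
    return math.floor(extra_area_ha / holding_ha)


def yield_loss_kg(ym_kg_per_ha: float, area_ha: float, ky: float,
                  eta_over_etm: float) -> float:
    """FAO yield response: 1 - Ya/Ym = Ky * (1 - ETa/ETm), clipped to [0, 1]."""
    relative_loss = min(max(ky * (1.0 - eta_over_etm), 0.0), 1.0)
    return ym_kg_per_ha * area_ha * relative_loss


# Hypothetical inputs for illustration only.
extra = additional_area_ha(45_000.0, 7_500.0)          # 6.0 ha
print(extra, additional_farmers(extra, 0.25))          # 6.0 24
print(round(yield_loss_kg(30_000.0, 2.0, 1.1, 0.8)))   # 13200
```

Rounding the farmer count down is a conservative choice; the study's own conversion between area and number of farmers may differ.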

Availability of data and materials

Data are contained within the article.

References

Okello C, Tomasello B, Greggio N, Wambiji N, Antonellini M. Impact of population growth and climate change on the freshwater resources of Lamu Island, Kenya. Water. 2015;7:1264–90. https://doi.org/10.3390/w7031264.

Musie W, Gonfa G. Fresh water resource, scarcity, water salinity challenges and possible remedies: a review. Heliyon. 2023;9: e18685.

Kibret EA, Abera A, Ayele WT, Alemie NA. Performance Evaluation of Surface Irrigation System in the Case of Dirma Small-Scale Irrigation Scheme at Kalu Woreda, Northern Ethiopia. Water Conserv Sci Eng. 2021;6:263–74. https://doi.org/10.1007/s41101-021-00119-8.

Mohamed HK, Michel LP, Ahmed C, Vincent S, Salah E, Lionel J, Lahcen O, Said K, Ghani C. Assessment of equity and adequacy of water delivery in irrigation systems using remote sensing-based indicators in semi-arid region, Morocco. Water Resour Manage. 2013;27:4697–714. https://doi.org/10.1007/s11269-013-0438-5.

Asmamaw DK, Janssens P, Dessie M, Tilahun SA, Adgo E, Nyssen J, KristineWalraevens DF, Cornelis WM. Soil and Irrigation Water Management: Farmer’s Practice, Insight, and Major Constraints in Upper Blue Nile Basin, Ethiopia. Agriculture. 2021;11:383. https://doi.org/10.3390/agriculture11050383.

Bondesan L, Ortiz BV, Morlin F, Morata G, Duzy L, van Santen E, Lena BP, Vellidis G. A comparison of precision and conventional irrigation in corn production in Southeast Alabama. Precision Agric. 2023;24:40–67. https://doi.org/10.1007/s11119-022-09930-2.

Gu Z, Qi Z, Burghate R, Yuan S, Jiao X, Xu J. Irrigation scheduling approaches and applications: a review. J Irrig Drain Eng. 2020;146(6):04020007. https://doi.org/10.1061/(ASCE)IR.1943-4774.0001464.

Haile GG, Tang Q, Sun S, Huang Z, Zhang X, Liu X. Droughts in East Africa: causes, impacts and resilience. Earth Sci Rev. 2019;193:146–61.

Haile GG, Gebremicael TG, Kifle M, Gebremedhin T. Effects of irrigation scheduling and different irrigation methods on onion and water productivity in Tigray, northern Ethiopia. bioRxiv. 2019. https://doi.org/10.1101/790105.

Ahmed Z, Gui D, Murtaza G, Yunfei L, Ali S. An overview of smart irrigation management for improving water productivity under climate change in drylands. Agronomy. 2023;13:2113. https://doi.org/10.3390/agronomy13082113.

Mndela Y, Ndou N, Nyamugama A. Irrigation scheduling for small-scale crops based on crop water content patterns derived from UAV multispectral imagery. Sustainability. 2023;15:12034. https://doi.org/10.3390/su151512034.

Playán E, Mateos L. Modernization and optimization of irrigation systems to increase water productivity. Agric Water Manag. 2006;80:100–16.

Kifle M, Gebretsadikan T. Yield and water use efficiency of furrow irrigated potato under regulated deficit irrigation, Atsibi-Wemberta, North Ethiopia. Agric Water Manag. 2016;170:133–9. https://doi.org/10.1016/j.agwat.2016.01.003.

Yohannes DF, Ritsema CJ, Yazew E, Habtu S, van Dam JC, Froebrich J, Ritzema HP, Meressa A. A participatory and practical irrigation scheduling in semiarid areas: the case of Gumselassa irrigation scheme in Northern Ethiopia. Agric Water Manag. 2019;218:102–14.

George BA, Shende SA, Raghuwanshi NS. Development and testing of an irrigation scheduling model. Agric Water Manag. 2000;46:121–36.

Sisay BA. Evaluating and enhancing irrigation water management in the upper Blue Nile basin, Ethiopia: the case of Koga large scale irrigation scheme. Agric Water Manag. 2016;170:26–35.

Hossam AMH. Improving performance of surface irrigation system by designing pipes for water conveyance and on-farm distribution. Switzerland: Springer; 2020.

Fereres E, Soriano MA. Deficit irrigation for reducing agricultural water use. J Exp Bot. 2007;58(2):147–59. https://doi.org/10.1093/jxb/erl165.

Haile AT, Alemayehu M, Rientjes T, Nakawuka P. Evaluating irrigation scheduling and application efficiency: baseline to revitalize Meki-Ziway irrigation scheme, Ethiopia. SN Appl Sci. 2020;2:1710. https://doi.org/10.1007/s42452-020-03226-8.

Asmamaw DK, Janssens P, Dessie M, Tilahun SA, Adgo E, Nyssen J, KristineWalraevens DF, Cornelis WM. Soil and Irrigation Water Management: Farmer’s Practice, Insight, and Major Constraints in Upper Blue Nile Basin, Ethiopia. Agriculture. 2021;11:383. https://doi.org/10.3390/agriculture11050383.

Eshete DG, Sinshaw BG, Legese KG. Critical review on improving irrigation water use efficiency: advances, challenges, and opportunities in the Ethiopia context. Water-Energy Nexus. 2020;3:143–54.

Alderfasi AA, Nielsen DC. Use of crop water stress index for monitoring water status and scheduling irrigation in wheat. Agric Water Manag. 2001;47:69–75.

Tong L, Kang S, Zhang L. Temporal and spatial variations of evapotranspiration for spring wheat in the Shiyang river basin in northwest China. Agric Water Manag. 2007;87:241–50.

Dechmi F, Playan E, Faci JM, Tejero M. Analysis of an irrigation district in northeastern Spain I. Characterization and water use assessment. Agric Water Manag. 2003;61:75–92.

Neira XX, Alvarez CJA, Cuesta TS, Cancela JJ. Evaluation of water-use in traditional irrigation: An application to the Lemos Valley irrigation district, northwest of Spain. Agric Water Manag. 2005;75:137–51.

Allen RG, Pereira LS, Raes D, Smith M. Crop evapotranspiration: guidelines for computing crop water requirements. FAO Irrigation and Drainage Paper 56. Rome: FAO; 1998.

Dechmi F, Playan E, Faci JM, Tejero M, Bercero A. Analysis of an irrigation district in northeastern Spain II. Irrigation evaluation, simulation and scheduling. Agric Water Manag. 2003;61:93–109.

Webber HA, Madramootoo CA, Bourgault M, Horst MG, Stulina G, Smith DL. Water use efficiency of common bean and green gram grown using alternate furrow and deficit irrigation. Agric Water Manag. 2006;86:259–68.

Chiew FHS, Kamaladasa NN, Malano HM, McMahon TA. Penman-Monteith, FAO-24 reference crop evapotranspiration and class-A pan data in Australia. Agric Water Manag. 1995;28(1):9–21.

Hussain F, Shahid MA, Majeed MD, Ali S, Zamir MSI. Estimation of the crop water requirements and crop coefficients of multiple crops in a semi-arid region by using lysimeters. Environ Sci Proc. 2023;25:101. https://doi.org/10.3390/ECWS-7-14226.

Cakir R. Effect of water stress at different development stages on vegetative and reproductive growth of corn. Field Crops Res. 2004;89:1–16.

Parry K, van Rooyen AF, Bjornlund H, Kissoly L, Moyo M, de Sousa W. The importance of learning processes in transitioning small-scale irrigation schemes. Int J Water Resour Dev. 2020. https://doi.org/10.1080/07900627.2020.1767542.

Montoro A, Lopez-Fuster P, Fereres E. Improving on-farm water management through an irrigation scheduling service. Irrig Sci. 2011;29:311–9. https://doi.org/10.1007/s00271-010-0235-3.

Cosgrove WJ, Loucks DP. Water management: Current and future challenges and research directions. Water Resour Res. 2015;51:4823–39. https://doi.org/10.1002/2014WR016869.

Bjornlund H, van Rooyen A, Stirzaker R. Profitability and productivity barriers and opportunities in small-scale irrigation schemes. Int J Water Resour Dev. 2017;33(5):690–704. https://doi.org/10.1080/07900627.2016.1263552.

Ward C, Torquebiau R, Xie H. Improved Agricultural Water Management for Africa’s Drylands. Washington: International Bank for Reconstruction and Development/The World Bank; 2016.

Bastiaanssen WGM, Molden DJ, Makin IW. Remote sensing for irrigated agriculture: examples from research and possible applications. Agric Water Manag. 2000;46:137–55.

Molden D, Oweis T, Steduto P, Bindraban P, Hanjra MA, Kijne J. Improving agricultural water productivity: between optimism and caution. Agric Water Manag. 2010;97:528–35.

Leenhardt D, Lemaire P. Estimating the spatial and temporal distribution of sowing dates for regional water management. Agric Water Manag. 2002;55:37–52.

García IF, Lecina S, Ruiz-Sánchez MC, Vera J, Conejero W, Conesa MR, Domínguez A, Pardo JJ, Léllis BC, Montesinos P. Trends and challenges in irrigation scheduling in the semi-arid area of Spain. Water. 2020;12:785. https://doi.org/10.3390/w12030785.

Flores CM, Cayuela RG, Perea EC, Poyato PM. An ICT-based decision support system for precision irrigation management in outdoor orange and greenhouse tomato crops. Agric Water Manag. 2022;269:107686.

Boltana SM, Bekele DW, Ukumo TY, Lohani TK. Evaluation of irrigation scheduling to maximize tomato production using comparative assessment of soil moisture and evapotranspiration in restricted irrigated regions. Cogent Food Agricult. 2023;9(1):2214428. https://doi.org/10.1080/23311932.2023.2214428.

Galioto F, Chatzinikolaou P, Raggi M, Viaggi D. The value of information for the management of water resources in agriculture: assessing the economic viability of new methods to schedule irrigation. Agric Water Manag. 2020;227:105848. https://doi.org/10.1016/j.agwat.2019.105848.

Tesfaye A. Effect of deficit irrigation on crop yield and water productivity of crop, a synthesis review. Irrigat Drainage Sys Eng. 2022;11:12.

Bhatt MK, Labanya R. Soil organic matter and its role in soil fertility. Res Trends Agricult Sci. 2018;6:71–92.

Kahlown MA, Ashraf M. Effect of shallow groundwater table on crop water requirements and crop yields. Agric Water Manag. 2005;76:24–35.

Acknowledgements

The financial support provided by the EAU4Food research project during field work is gratefully acknowledged. I would also like to express my appreciation to Mr. Gebreyohannes Zenebe and the experts at the zonal, district, and scheme levels of the study areas, as well as the farmers at each of the eight irrigation schemes, for their facilitation and assistance during the fieldwork.

Funding

The fieldwork for this research study was funded by the European Commission as part of the ‘European Union and African Union cooperative research project to increase Food Production in Irrigated Farming Systems in Africa (EAU4Food)’. However, no funding was received for the preparation of this manuscript.

Author information

Contributions

Solomon Habtu (the author) conceived and designed the study, conducted fieldwork with assistants during data collection, analyzed the data, and prepared the manuscript.

Ethics declarations

Competing interests

The author declares no competing interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Habtu, S. Validation of farmers’ on-farm irrigation scheduling for optimal water utilization in Tigray Region, Ethiopia. Discov Agric 2, 9 (2024). https://doi.org/10.1007/s44279-024-00021-6

DOI: https://doi.org/10.1007/s44279-024-00021-6