Abstract

The primary goal of visible-infrared person re-identification (VI-ReID) is to match pedestrian photos obtained during the day and night. The majority of existing methods simply generate auxiliary modalities to reduce the modality discrepancy for cross-modality matching. They capture modality-invariant representations but ignore the extraction of modality-specific representations that can aid in distinguishing among various identities of the same modality. To alleviate these issues, this work provides a novel specific and shared representations learning (SSRL) model for VI-ReID to learn modality-specific and modality-shared representations. We design a shared branch in SSRL to bridge the image-level gap and learn modality-shared representations, while a specific branch retains the discriminative information of visible images to learn modality-specific representations. In addition, we propose intra-class aggregation and inter-class separation learning strategies to optimize the distribution of feature embeddings at a fine-grained level. Extensive experimental results on two challenging benchmark datasets, SYSU-MM01 and RegDB, demonstrate the superior performance of SSRL over state-of-the-art methods.


1 Introduction

Single-modality person re-identification (Re-ID) is the problem of matching pedestrian images between query and gallery sets captured by separate cameras, and it has received much attention in computer vision [1–5]. Because visible cameras play a limited role in night-time monitoring and security work, visible-infrared person re-identification (VI-ReID) [6] was proposed to match images of people captured by visible and infrared cameras. This task must address not only the intra-modality discrepancies caused by different camera perspectives and human poses, as in single-modality visible person Re-ID, but also the inter-modality discrepancies caused by cameras that operate in different spectra.

Reducing the modality discrepancy and learning modality-invariant representations is a significant challenge. Cross-modality matching using grayscale images is a popular way to eliminate the color discrepancy [6, 7]. However, modality-specific representations are discarded along with the color information, even though they are a crucial cue for retrieval that helps to widen the inter-class discrepancy. Using generative adversarial networks (GANs) to synthesize images [8, 9] that eliminate the image-level gap reduces the problem, as far as possible, to a single-modality task, but inevitably introduces noise. Another approach is to build complex networks [10, 11] for learning modality-invariant representations, but because of the large modality discrepancy, heterogeneous modality features cannot be projected well into a unified space. In summary, extracting and decoupling specific and shared representations remains a challenge for current methods. Lu et al. [12] made a preliminary attempt, but their method could not completely decouple specific and shared representations.

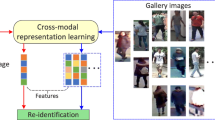

This paper proposes a specific and shared representations learning (SSRL) model for VI-ReID to mitigate the discrepancy between heterogeneous pedestrian images while better capturing modality-invariant and modality-specific representations. Specifically, we employ the traditional visible-infrared branch as our specific branch to learn modality-specific representations. The color information in red green blue (RGB) images is an important cue for retrieval, which may lead to large inter-class differences and slight intra-class differences. Therefore, it is necessary to decompose modality-specific representations. The specific branch takes visible and infrared images as inputs and uses feature extractors with different parameters to ensure that the extracted features are modality-specific representations. We also design a shared branch, as shown in Fig. 1(a). Grayscale images are obtained directly from visible images by channel conversion, which converts each three-channel visible image into a single-channel grayscale image and replicates it across three channels. The converted grayscale images, which reduce the modality discrepancy with respect to the infrared images, are fed into the feature extractor together with the infrared images. The feature extractor of the shared branch is parameter-shared and is used to learn modality-invariant representations.

Our dual-branch structure has two main benefits. First, the specific branch improves the extraction of modality-specific representations by preserving the color information of visible images. As illustrated in Fig. 1(b), when infrared images of different IDs are similar but visible images of different IDs are different, the specific representations in the visible images are needed to separate them. Second, the grayscale operation in the shared branch alleviates the modality discrepancy, which allows the parameter-shared feature extractor to capture modality-shared representations more effectively. As illustrated in Fig. 1(c), when visible images of different IDs are similar but infrared images of different IDs are different, shared representations are vital for bringing the features of the same ID from different modalities closer together.

In addition, we design effective intra-class aggregation and inter-class separation learning strategies to optimize the distribution of feature embeddings at a fine-grained level. They constrain the distances between class centers from both the same modality and different modalities, reducing the intra-class distance and enlarging the inter-class distance. In the early stages of training, substantial disparities exist between modality-specific features, which render them unsuitable for cross-modality tasks. We mitigate this issue with the intra-class aggregation learning (IAL) loss, which constrains the differences between modality-specific and modality-shared features. As training progresses, these differences gradually diminish, while both types of features continue to contribute to the accurate identification of individual identities. During training, we employ the cross-entropy loss and the inter-class separation learning (ISL) loss, and we enhance the representation capability of the final features by concatenating the modality-specific features with the modality-shared features. This approach increases the distinction between features of different IDs and, to some extent, improves the ability of the feature extraction network to extract ID-related information.

Fig. 1 (a) The conversion of visible light images to grayscale images. After the conversion, the large gap between visible and infrared images shrinks to a small gap between infrared and grayscale images. (b) Different IDs are different under visible images but similar under infrared images. The discriminative information of visible light images can help the network distinguish between different IDs. (c) Different IDs are similar under visible images but different under infrared images. The shared representations can help to find the same identity

Our main contributions are summarized below:

1) A novel specific and shared representations learning model termed SSRL is proposed for the VI-ReID task, which contains a specific branch and a shared branch to learn modality-specific and modality-shared representations.

2) Intra-class aggregation and inter-class separation learning strategies are further developed to optimize the distribution of feature embeddings at a fine-grained level.

3) Extensive experimental results on the SYSU-MM01 and RegDB datasets show that our proposed method achieves state-of-the-art performance.

2 Related work

2.1 Single-modality person re-identification

Single-modality person Re-ID aims to match person images captured by different cameras in the daytime, where all images come from the same visible modality. Existing works have shown desirable performance on widely used datasets with deep learning techniques [13–15]. Lin et al. [16] presented an attribute-person recognition (APR) network, a multi-task network that learns a Re-ID embedding and simultaneously predicts pedestrian attributes. Sun et al. [17] proposed the part-based convolutional baseline (PCB), which partitions the convolutional feature map uniformly to learn part-level features, together with an adaptive pooling method named refined part pooling (RPP) that improves the uniform partition. Li et al. [18] formulated a harmonious attention convolutional neural network (CNN) model for joint learning of pixel and regional attention to optimize Re-ID performance on misaligned images. Zhao et al. [19] introduced a general framework, namely JoT-GAN, to jointly train a GAN and the Re-ID model. Because of the tremendous discrepancy between visible and infrared images, single-modality solutions are not suitable for cross-modality person re-identification, which has created a demand for VI-ReID solutions.

2.2 Visible-infrared person re-identification

The main challenge in VI-ReID is the appearance discrepancy, including large intra-class and slight inter-class variations. Existing VI-ReID methods fall into three categories: representation learning, metric learning and image generation.

Representation learning based on feature extraction mainly explores how to construct a reasonable network architecture that extracts robust and discriminative features shared by the two modalities. Wu et al. [6] first defined the VI-ReID problem and contributed a new multi-modality Re-ID dataset, SYSU-MM01; they also introduced deep zero-padding for training a one-stream network so that domain-specific nodes evolve automatically in the network for cross-modality matching. Hao et al. [20] offered a modality confusion learning network (MCLNet) to confuse the two modalities, which ensured that the optimization was explicitly concentrated on the modality-irrelevant perspective. Ye et al. [21] presented a hierarchical cross-modality matching model that could simultaneously handle cross-modality discrepancy and cross-view variations, as well as intra-modality intra-person variations. Lu et al. [12] proposed a cross-modality shared-specific feature transfer algorithm that exploits both the modality-shared information and the modality-specific characteristics to boost re-identification performance. A dual-path network with a bi-directional dual-constrained top-ranking loss was developed in Ref. [22] to learn discriminative feature representations.

Metric learning learns the similarity of two images through the network, and the key is to design a reasonable metric or loss function. Zhu et al. [23] designed a loss function, called hetero-center loss, to constrain the distance between the two centers of heterogeneous modalities. Liu et al. [24] proposed the hetero-center triplet loss to constrain the distance of different class centers from the same modality and cross-modality. Hao et al. [25] used sphere softmax to learn a hypersphere manifold embedding and constrained the intra-modality variations and cross-modality variations on this hypersphere. Liu et al. [26] presented the dual-modality triplet loss to constrain both cross-modality and intra-modality distances and thereby address the cross-modality discrepancy and intra-modality variations. Zhao et al. [27] introduced a loss function called hard pentaplet loss, which could simultaneously handle cross-modality and intra-modality variations.

Image generation uses GANs or other methods to reduce discrepancies by transforming one modality into another. Wang et al. [9] proposed generating cross-modality paired images and performing global set-level and fine-grained instance-level alignments. Choi et al. [28] presented a hierarchical cross-modality disentanglement method that extracted pose-invariant and illumination-invariant features for cross-modality matching. Wang et al. [8] translated an infrared image into its visible counterpart and a visible image into its infrared version, which could unify the representations for images with different modalities. Wang et al. [29] presented an end-to-end alignment generative adversarial network (AlignGAN) for the visible-infrared Re-ID task. Li et al. [30] introduced an auxiliary X modality as an assistant and reformulated infrared-visible dual-mode cross-modality learning as an X-infrared-visible three-mode cross-modality learning problem.

3 Method

This section details the SSRL model proposed for visible-infrared person Re-ID, which is illustrated in Fig. 2. We first propose a dual-branch structure that contains a shared branch, which alleviates the modality discrepancy and learns modality-invariant representations, and a specific branch, which learns more accurate modality-specific representations. Then, we use the intra-class aggregation and inter-class separation learning strategies to further optimize the distribution of features and aggregate instances with the same identity.

Fig. 2 Framework of the proposed SSRL model. The cross-modality images are fed into a dual-branch structure, with one branch dedicated to learning specific representations and the other dedicated to learning shared representations; the specific and shared representations are then fused. For the fusion feature, the identity (ID) loss is leveraged to enhance the discriminative power of the embedding features and the hetero-center triplet (HCT) loss is leveraged to constrain the distance of different class centers from both the same modality and cross-modality. For the specific feature and shared feature, intra-class aggregation learning (IAL) loss and inter-class separation learning (ISL) loss are further developed to optimize the distribution of feature embeddings at a fine-grained level

3.1 Baseline

We adopt ResNet-50 [31] as the backbone, with a pre-trained model in each branch, and use max pooling to obtain fine-grained features. Inspired by the part-based convolutional baseline (PCB) [17, 32] for extracting discriminative features, we divide the feature map horizontally into parts and feed each part into a classifier to learn local cues; the number of parts is set to 6. Following state-of-the-art methods, we utilize the identity loss [33] \(\mathcal{L}_{\mathrm{ID}}\) and the hetero-center triplet loss [24] \(\mathcal{L}_{\mathrm{HCT}}\) to constrain the network. The baseline learning loss is denoted as \(\mathcal{L}_{\mathrm{base}}\).
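The horizontal partition used by the baseline can be sketched in plain Python. This is only an illustration under stated assumptions, not the authors' implementation: the function name `part_max_pool` and the toy single-channel map are hypothetical, and the map height is assumed to divide evenly by the number of parts. An 18 × 9 map is used because that is what a 288 × 144 input would give under an assumed 16× downsampling.

```python
def part_max_pool(feature_map, num_parts=6):
    """Split an H x W map (a list of rows) into horizontal stripes and max-pool each stripe."""
    height = len(feature_map)
    if height % num_parts != 0:
        raise ValueError("height must be divisible by num_parts")
    stripe = height // num_parts
    pooled = []
    for p in range(num_parts):
        rows = feature_map[p * stripe:(p + 1) * stripe]
        # One scalar per stripe: the maximum over all rows and columns of the stripe
        pooled.append(max(max(row) for row in rows))
    return pooled

# Toy single-channel map with 18 rows and 9 columns (hypothetical values)
fmap = [[float(r * 9 + c) for c in range(9)] for r in range(18)]
parts = part_max_pool(fmap)
print(len(parts))  # 6
```

In a real network the same pooling would be applied per channel, so that each of the 6 parts yields a feature vector that is passed to its own classifier.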

3.2 Dual-branched structure

3.2.1 Specific branch

The color information in the visible light image is crucial discriminative information, which can help the network expand the difference among classes as much as possible. The specific branch takes the original RGB and near-infrared (NIR) images as input and employs two parameter-independent feature extractors to ensure that the network learns specific characteristics more effectively. After the CNN, global max pooling (GMP) and batch normalization (BN) operations, the feature vectors are input to a fully connected layer for identity classification. The network pays more attention to learning discriminative color information since the input to the specific branch includes RGB images. For this branch, identity loss is formulated as follows:

\[\mathcal{L}_{\mathrm{ID}}^{\mathrm{specific}} = -\frac{1}{N}\sum_{n=1}^{N}\log \frac{\exp \left(\boldsymbol{W}_{y_{n}}^{\top}\boldsymbol{f}_{n}^{V}\right)}{\sum_{u=1}^{U}\exp \left(\boldsymbol{W}_{u}^{\top}\boldsymbol{f}_{n}^{V}\right)}\]

where N denotes the number of samples in a batch, \({y}_{n}\) and \({\boldsymbol{f}}_{n}^{V}\) are the identity and the feature vector of the n-th pedestrian image, U is the number of identities, and \(\boldsymbol{W}_{u}\) denotes the u-th column of the classifier weights. Under the supervision of the identity loss, the specific branch can extract identity-specific information for classification.
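The identity loss described above is a softmax cross-entropy over the U identities. The following minimal sketch evaluates it in plain Python for a toy batch; it assumes a bias-free linear classifier, and the function name `identity_loss` and the list-based data layout are illustrative choices that do not come from the paper.

```python
import math

def identity_loss(features, labels, weights):
    """Mean softmax cross-entropy; weights[u] is the classifier vector of identity u."""
    total = 0.0
    for f, y in zip(features, labels):
        # Logit of identity u is the inner product W_u . f
        logits = [sum(w_i * f_i for w_i, f_i in zip(w, f)) for w in weights]
        # Numerically stable log-sum-exp
        m = max(logits)
        log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
        total += log_sum_exp - logits[y]
    return total / len(features)

# Toy batch: N = 2 samples, U = 2 identities, 2-dimensional features
features = [[1.0, 0.0], [0.0, 1.0]]
labels = [0, 1]
uninformative = identity_loss(features, labels, [[0.0, 0.0], [0.0, 0.0]])
confident = identity_loss(features, labels, [[10.0, 0.0], [0.0, 10.0]])
print(uninformative > confident)  # True
```

With all-zero weights every identity is equally likely, so the loss equals log U; a classifier that separates the two toy identities drives the loss toward zero.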

3.2.2 Shared branch

The visible image has three channels, which contain the visible light color information of red, green and blue, while the infrared image has only one channel, which contains the intensity information of near-infrared light. From the perspective of imaging principles, the wavelength ranges of the two are also different. Different sharpness and lighting conditions can produce very different effects on the two types of images. The large modality discrepancy makes it very challenging to extract modality-shared features directly using feature extractors. Thus we introduce an additional shared branch. The shared branch first performs a grayscale transformation on the visible light image to reduce the modality discrepancy. Given a visible image \(x_{v}^{i}\) with three channels \(\mathcal{R}\), \(\mathcal{G}\) and \(\mathcal{B}\), we take the \(\mathcal{R}(x)\), \(\mathcal{G}(x)\) and \(\mathcal{B}(x)\) values of each pixel x of \(x_{v}^{i}\). The corresponding grayscale pixel value \(\mathrm{Gray}(x)\) can therefore be calculated as

\[\mathrm{Gray}(x) = \alpha _{1}\mathcal{R}(x) + \alpha _{2}\mathcal{G}(x) + \alpha _{3}\mathcal{B}(x)\]

where \(\alpha _{1}\), \(\alpha _{2}\) and \(\alpha _{3}\) are set to 0.299, 0.587 and 0.114, respectively. The grayscale image is then restored to a three-channel image and sent to the shared branch along with the infrared image. The two feature extractors of the shared branch share their parameters. The shared branch removes color information from the input, allowing the network to focus on learning modality-invariant representations such as texture and structural information. The identity loss of the shared branch \(\mathcal{L}_{\mathrm{ID}}^{\mathrm{shared}}\) is the same as that of the specific branch.
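The channel conversion can be illustrated with a few lines of plain Python. The sketch below applies the weights 0.299, 0.587 and 0.114 to each pixel and replicates the result across three channels; the function name `to_three_channel_gray` and the nested-list image layout are assumptions made for the example, not part of the paper.

```python
def to_three_channel_gray(rgb_image, alphas=(0.299, 0.587, 0.114)):
    """Convert an image given as rows of (R, G, B) tuples to a replicated grayscale image."""
    a1, a2, a3 = alphas
    gray_image = []
    for row in rgb_image:
        gray_row = []
        for r, g, b in row:
            y = a1 * r + a2 * g + a3 * b   # weighted sum of the three channels
            gray_row.append((y, y, y))     # copy the gray value into three channels
        gray_image.append(gray_row)
    return gray_image

# Toy 1 x 2 image: one white pixel and one pure-red pixel
result = to_three_channel_gray([[(255, 255, 255), (255, 0, 0)]])
print(result[0][1])
```

Because the three weights sum to 1, a white pixel stays at full intensity, while a pure-red pixel keeps only about 30% of it.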

3.2.3 Fusion features

We employ concatenation to fuse the specific and shared features that the two branches learn cooperatively from visible and infrared images. The discriminative information of the specific features in the fusion feature can assist the model in separating different identities. The invariant information of the shared features in the fusion feature can assist the model in identifying the same identity in different modalities. As a result, we implement an identity classification layer on the fusion features for synchronous classification learning. The identity loss of the fusion feature, \(\mathcal{L}_{\mathrm{ID}}^{\mathrm{fusion}}\), is computed in the same way as that of the specific branch. Adding the above identity losses gives the final identity loss function:

\[\mathcal{L}_{\mathrm{ID}} = \mathcal{L}_{\mathrm{ID}}^{\mathrm{specific}} + \mathcal{L}_{\mathrm{ID}}^{\mathrm{shared}} + \mathcal{L}_{\mathrm{ID}}^{\mathrm{fusion}}\]

The hetero-center triplet loss \(\mathcal{L}_{\mathrm{HCT}}\) is also used for metric learning of the fusion feature.
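The paper takes the hetero-center triplet loss from Ref. [24] without restating it. The sketch below shows one common reading of the idea, in which each modality center of an identity is pulled toward the other-modality center of the same identity and pushed away from the closest center of any other identity. The margin value, the function names and the dictionary layout are assumptions for illustration; they are not taken from this paper.

```python
import math

def center(vectors):
    """Element-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(v[d] for v in vectors) / n for d in range(len(vectors[0]))]

def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def hetero_center_triplet(vis_feats, ir_feats, margin=0.3):
    """vis_feats / ir_feats map an identity to its list of feature vectors."""
    vis_c = {p: center(f) for p, f in vis_feats.items()}
    ir_c = {p: center(f) for p, f in ir_feats.items()}
    loss = 0.0
    for p in vis_c:
        # Each modality center serves once as anchor, with the other as positive
        for anchor, positive in ((vis_c[p], ir_c[p]), (ir_c[p], vis_c[p])):
            negatives = [c for q, c in vis_c.items() if q != p]
            negatives += [c for q, c in ir_c.items() if q != p]
            hardest = min(euclidean(anchor, c) for c in negatives)
            loss += max(0.0, margin + euclidean(anchor, positive) - hardest)
    return loss

# Two well-separated toy identities give zero loss
separated = hetero_center_triplet(
    {0: [[0.0, 0.0]], 1: [[10.0, 0.0]]},
    {0: [[0.0, 1.0]], 1: [[10.0, 1.0]]},
)
print(separated)  # 0.0
```

Operating on centers rather than on individual samples keeps the number of triplets small and makes the constraint less sensitive to outlier images.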

3.3 Intra-class aggregation learning

Because of camera viewpoint, clothing, posture and other factors, the distance between sample pairs of the same class is frequently larger than the distance between sample pairs of different classes. We apply an intra-class aggregation learning strategy that limits the distances within each class to increase cross-modality intra-class similarity; in other words, it aggregates the different modalities of the same identity. It is challenging to restrict the distances among the per-class distributions of visible-specific, infrared-specific, visible-shared and infrared-shared features directly. Therefore, we take the centers of these feature distributions and penalize the distances between the centers. As shown in Fig. 3, we suppose that there are P × K images of P identities in a mini-batch, where each identity contains K images. The center of the visible-specific feature distribution of the p-th identity is calculated as follows:

\[\boldsymbol{c}_{\mathrm{vspe}}^{p} = \frac{1}{K}\sum_{k=1}^{K}(vspe)_{k}^{p}\]

where \((vspe)_{k}^{p}\) denotes the visible-specific feature vector output for the k-th image of the p-th identity. The centers of the infrared-specific, visible-shared and infrared-shared feature distributions are calculated in the same way and denoted as \(\boldsymbol{c}_{\mathrm{ispe}}^{p}\), \(\boldsymbol{c}_{\mathrm{vsha}}^{p}\) and \(\boldsymbol{c}_{\mathrm{isha}}^{p}\), respectively. We introduce an intra-class aggregation constraint loss to handle the distance among different modalities of the same identity, which can be interpreted as

As illustrated in Fig. 3, the visible-specific, infrared-specific, visible-shared and infrared-shared features, represented by the four colors, are pulled closer together by the intra-class aggregation learning strategy. Our goal is to reduce the distance between different modality centers of the same identity, thereby suppressing cross-modality variations.
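The center computation and the idea of penalizing center distances can be sketched as follows. The exact form of the IAL loss is not recoverable from the text here, so the sketch assumes one plausible variant: the sum of pairwise Euclidean distances among the four centers of each identity, averaged over identities. The function names and the dictionary keys are hypothetical.

```python
import math
from itertools import combinations

def center(vectors):
    """Mean of the K feature vectors of one identity in one feature group."""
    k = len(vectors)
    return [sum(v[d] for v in vectors) / k for d in range(len(vectors[0]))]

def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def intra_class_aggregation(groups):
    """groups maps identity -> {'vspe', 'ispe', 'vsha', 'isha'} -> list of K vectors.

    Assumed variant: pairwise distances among the four centers, averaged over identities.
    """
    loss = 0.0
    for feats in groups.values():
        centers = [center(feats[name]) for name in ("vspe", "ispe", "vsha", "isha")]
        loss += sum(euclidean(a, b) for a, b in combinations(centers, 2))
    return loss / len(groups)

# One toy identity with K = 2 images per feature group
toy = {0: {
    "vspe": [[0.0, 0.0], [0.0, 0.0]],
    "ispe": [[1.0, 0.0], [1.0, 0.0]],
    "vsha": [[0.0, 0.0], [0.0, 0.0]],
    "isha": [[2.0, 0.0], [0.0, 0.0]],
}}
print(intra_class_aggregation(toy))  # 4.0
```

The loss is zero only when all four centers of an identity coincide, which matches the stated goal of reducing the distance between modality centers of the same identity.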

3.4 Inter-class separation learning

The intra-class aggregation learning strategy can only ensure that cross-modality samples of the same identity are aggregated together, but the model also needs to ensure dissimilarity among different identities. The extracted modality-specific representations can help different IDs to achieve inter-class separation. With the inter-class separation learning strategy, we separate different identities. We first calculate the center of all samples for each identity. Then we constrain the distance between the feature distributions of different identities using the cosine distance. The inter-class separation loss is calculated as:

where \(\boldsymbol{c}^{p}\) and \(\boldsymbol{c}^{j}\) denote the centers of (\(\boldsymbol{c}_{\mathrm{vspe}}^{p}\), \(\boldsymbol{c}_{\mathrm{ispe}}^{p}\), \(\boldsymbol{c}_{\mathrm{vsha}}^{p}\), \(\boldsymbol{c}_{\mathrm{isha}}^{p}\)) and (\(\boldsymbol{c}_{\mathrm{vspe}}^{j}\), \(\boldsymbol{c}_{\mathrm{ispe}}^{j}\), \(\boldsymbol{c}_{\mathrm{vsha}}^{j}\), \(\boldsymbol{c}_{\mathrm{isha}}^{j}\)), respectively, cos(⋅, ⋅) represents the cosine distance between the centers of different identities, and m is a margin term. As illustrated in Fig. 4, with the inter-class separation learning strategy, the feature distributions of different identities are separated.
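A margin-based separation of identity centers can be sketched as follows. The precise ISL formula is not recoverable from the text here, so this example assumes a hinge on the cosine similarity: a pair of identity centers is penalized only when its similarity exceeds the margin m. The margin value 0.5 and all function names are hypothetical.

```python
import math
from itertools import combinations

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def identity_center(vspe, ispe, vsha, isha):
    """Average the four per-identity centers into one identity center."""
    return [sum(vals) / 4.0 for vals in zip(vspe, ispe, vsha, isha)]

def inter_class_separation(identity_centers, margin=0.5):
    """Assumed variant: hinge penalty on center pairs whose cosine similarity exceeds margin."""
    pairs = list(combinations(identity_centers.values(), 2))
    if not pairs:
        return 0.0
    penalty = sum(max(0.0, cosine_similarity(a, b) - margin) for a, b in pairs)
    return penalty / len(pairs)

# Orthogonal centers are already separated; parallel centers are penalized
orthogonal = inter_class_separation({0: [1.0, 0.0], 1: [0.0, 1.0]})
parallel = inter_class_separation({0: [1.0, 0.0], 1: [2.0, 0.0]})
print(orthogonal, parallel)  # 0.0 0.5
```

The margin controls how much angular separation is demanded before a pair of identities stops contributing to the loss.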

3.5 Objective function

As mentioned above, the goal of SSRL is to jointly learn the two branches, obtain modality-specific representations and modality-invariant representations, and make full use of them. Combined with the losses mentioned above, we finally define the total loss of the overall network as follows:

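\[\mathcal{L} = \mathcal{L}_{\mathrm{ID}} + \lambda _{1}\mathcal{L}_{\mathrm{HCT}} + \lambda _{2}\mathcal{L}_{\mathrm{IAL}} + \lambda _{3}\mathcal{L}_{\mathrm{ISL}} \tag{8}\]

where \(\lambda _{1}\), \(\lambda _{2}\) and \(\lambda _{3}\) are the weights of \(\mathcal{L}_{\mathrm{HCT}}\), \(\mathcal{L}_{\mathrm{IAL}}\) and \(\mathcal{L}_{\mathrm{ISL}}\), respectively.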
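As a small worked example, the weighted combination can be evaluated for hypothetical loss values. The sketch assumes that the identity loss enters with weight 1 and uses the weights 1.0, 1.0 and 2.0 reported in the implementation details; the function name `total_loss` and the numeric loss values are made up for illustration.

```python
def total_loss(l_id, l_hct, l_ial, l_isl, lambdas=(1.0, 1.0, 2.0)):
    """Identity loss plus the weighted HCT, IAL and ISL terms."""
    lam1, lam2, lam3 = lambdas
    return l_id + lam1 * l_hct + lam2 * l_ial + lam3 * l_isl

# Hypothetical per-batch loss values
value = total_loss(l_id=1.0, l_hct=0.5, l_ial=0.2, l_isl=0.1)
print(round(value, 6))  # 1.9
```

Doubling the weight of the ISL term means that, for equal raw values, inter-class separation contributes twice as much to the gradient as intra-class aggregation.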

4 Experiments

4.1 Experimental settings

Datasets. We evaluate our proposed method on two publicly available VI-ReID datasets (SYSU-MM01 [6] and RegDB [34]) and additionally report results on the LLCM dataset.

The SYSU-MM01 dataset contains 287,628 visible images and 15,729 infrared images captured by 4 RGB cameras and 2 thermal imaging cameras, with a total of 491 valid IDs, of which 296 identities are used for training, 99 for validation, and 96 for testing. During the testing phase, infrared images are used to search for visible images: samples from the visible cameras form the gallery set, and samples from the infrared cameras form the probe set. This dataset has two modes: all search mode and indoor search mode. For the all search mode, visible cameras 1, 2, 4 and 5 are used for the gallery set, and infrared cameras 3 and 6 are used for the probe set. For the indoor search mode, visible cameras 1 and 2 are used for the gallery set, and infrared cameras 3 and 6 are used for the probe set. A detailed description of the experimental settings can be found in Ref. [6].

The RegDB dataset contains 412 person identities, each with 10 visible light and 10 infrared images, for a total of 4120 visible images and 4120 infrared images. The 10 images of each individual vary in body pose, capture distance, and lighting conditions. However, across the 10 images of the same person, the weather conditions, viewing angles, and shooting angles of the camera are all the same, and the pose of the same identity varies little, so the task on the RegDB dataset is less complicated. Following the evaluation protocol proposed in Ref. [21], we randomly sample 206 identities for training, and the remaining 206 identities are used for testing. The training/test split is repeated 10 times.
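The repeated random split on RegDB can be sketched as follows. This is an illustration of the protocol as described above, not the official split files: the function name `regdb_splits`, the integer identity labels and the fixed seed are assumptions.

```python
import random

def regdb_splits(num_identities=412, num_train=206, trials=10, seed=0):
    """Return `trials` random (train_ids, test_ids) splits over the identity labels."""
    rng = random.Random(seed)
    ids = list(range(num_identities))
    splits = []
    for _ in range(trials):
        shuffled = ids[:]
        rng.shuffle(shuffled)
        # The first num_train identities train the model, the rest are held out
        splits.append((sorted(shuffled[:num_train]), sorted(shuffled[num_train:])))
    return splits

splits = regdb_splits()
print(len(splits), len(splits[0][0]), len(splits[0][1]))  # 10 206 206
```

Results would then typically be averaged over the 10 trials, so that the reported accuracy does not depend on one particular split.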

The LLCM dataset utilizes a 9-camera network deployed in low-light environments and contains 46,767 bounding boxes of 1064 identities. The training set contains 30,921 bounding boxes of 713 identities (16,946 bounding boxes from the visible (VIS) modality and 13,975 bounding boxes from the NIR modality), and the test set contains 13,909 bounding boxes of 351 identities (8680 bounding boxes from the VIS modality and 7166 bounding boxes from the NIR modality).

Evaluation metrics. For performance evaluation, we employed the widely known cumulative matching characteristic (CMC) [35] curve and mean average precision (mAP).
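Both metrics can be computed from the ranked gallery list of each query. The sketch below is a simplified illustration: it omits protocol details such as the camera-based filtering used on SYSU-MM01, and the function names and the toy rankings are hypothetical.

```python
def rank_k_and_ap(ranked_gallery_ids, query_id, k=1):
    """Return (rank-k hit, average precision) for one query."""
    hits = [gid == query_id for gid in ranked_gallery_ids]
    rank_k = 1.0 if any(hits[:k]) else 0.0
    num_hits, precision_sum = 0, 0.0
    for position, hit in enumerate(hits, start=1):
        if hit:
            num_hits += 1
            precision_sum += num_hits / position  # precision at each correct match
    ap = precision_sum / num_hits if num_hits else 0.0
    return rank_k, ap

def evaluate(rankings, k=1):
    """rankings is a list of (query_id, ranked_gallery_ids); returns (CMC rank-k, mAP)."""
    scores = [rank_k_and_ap(gallery, query, k) for query, gallery in rankings]
    cmc = sum(s[0] for s in scores) / len(scores)
    mean_ap = sum(s[1] for s in scores) / len(scores)
    return cmc, mean_ap

# Two toy queries: the first is matched at rank 1, the second at rank 2
cmc1, mean_ap = evaluate([(1, [1, 2, 1]), (2, [1, 2, 3])])
print(cmc1)  # 0.5
```

Rank-k only asks whether a correct match appears among the top k results, whereas mAP also rewards placing all correct matches early in the list.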

4.2 Implementation details

The proposed method is implemented in the PyTorch framework and runs on an NVIDIA Tesla V100 GPU. Following existing person Re-ID work, a pre-trained ResNet-50 model is used as the backbone for a fair comparison. Specifically, the stride of the last convolutional block is changed from 2 to 1 to obtain fine-grained feature maps. In the training phase, the batch size is set to 32, consisting of 16 visible and 16 infrared images from 8 identities: for each identity, 2 visible images and 2 infrared images are selected randomly. Each infrared image is fed into the network as three copied channels. The input images are resized to 288 × 144, padded by 10 pixels, randomly flipped horizontally, cropped to 288 × 144 and randomly erased [36] for data augmentation. We use the stochastic gradient descent (SGD) optimizer with the momentum parameter set to 0.9. The initial learning rate is set to 0.1 for both datasets, and a warmup learning rate strategy [37] is used to guide the network. The weights \(\lambda _{1}\), \(\lambda _{2}\) and \(\lambda _{3}\) in Eq. (8) are set to 1.0, 1.0 and 2.0, respectively.
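The identity-balanced batch described above (8 identities, 2 visible and 2 infrared images each) can be sketched with the standard library. The function name `sample_batch`, the dictionary-based index and the file names are hypothetical, and every identity is assumed to have at least 2 images in each modality.

```python
import random

def sample_batch(vis_index, ir_index, num_ids=8, per_modality=2, seed=None):
    """Draw num_ids identities, then per_modality images per identity and modality.

    vis_index / ir_index map an identity label to a list of image names
    (hypothetical structure for this example).
    """
    rng = random.Random(seed)
    shared_ids = sorted(set(vis_index) & set(ir_index))
    chosen = rng.sample(shared_ids, num_ids)
    vis_batch, ir_batch = [], []
    for pid in chosen:
        vis_batch += [(pid, name) for name in rng.sample(vis_index[pid], per_modality)]
        ir_batch += [(pid, name) for name in rng.sample(ir_index[pid], per_modality)]
    return vis_batch, ir_batch

# Toy index: 20 identities with 4 images per modality
vis_index = {p: [f"vis_{p}_{i}.jpg" for i in range(4)] for p in range(20)}
ir_index = {p: [f"ir_{p}_{i}.jpg" for i in range(4)] for p in range(20)}
vis_batch, ir_batch = sample_batch(vis_index, ir_index, seed=0)
print(len(vis_batch), len(ir_batch))  # 16 16
```

Sampling the same identities in both modalities guarantees that every identity in the batch has cross-modality positives, which the center-based losses rely on.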

4.3 Comparison with state-of-the-art methods

Results on SYSU-MM01 dataset. Table 1 reports the comparison results on the SYSU-MM01 dataset. The proposed method is competitive with state-of-the-art methods in both settings. Our model achieves 72.68% Rank-1 accuracy and 68.28% mAP in the all search setting and 77.58% Rank-1 accuracy and 79.50% mAP in the indoor search setting. Most of the indicators reach the second-highest accuracy.

Results on RegDB dataset. Table 1 also reports the comparison results on the RegDB dataset. Our SSRL achieves superior performance in both the visible-to-infrared and infrared-to-visible settings. Specifically, we achieve a Rank-1 accuracy of 93.64% and an mAP of 82.55% in the infrared-to-visible mode, and a Rank-1 accuracy of 93.52% and an mAP of 82.43% in the visible-to-infrared mode. Hence, our SSRL model is robust across different datasets and query settings.

Results on LLCM dataset. Table 2 reports the comparison results on the LLCM dataset. The images in the LLCM dataset are captured in complex low-light environments and contain severe illumination variations. Although our SSRL includes no measures designed specifically for low-light environments, it still achieves decent performance.

4.4 Ablation study

Effectiveness of each component. We evaluate each component of SSRL on the SYSU-MM01 dataset in the all search single-shot mode to verify its effectiveness. The results are reported in Table 3. The dual-branch structure improves the Rank-1 accuracy and mAP by 10.12% and 10.89%, respectively, compared with the baseline model. The improvement arises mainly because the dual-branch structure fully extracts both specific and shared features. After the intra-class aggregation and inter-class separation learning strategies are added, the Rank-1 accuracy improves by 3.87%, reaching 72.68%.

Effectiveness of the dual-branch structure. We evaluate the dual-branch structure to verify that it is better than a single-branch structure. The ablation experiment is conducted on the SYSU-MM01 dataset in the all search single-shot mode. For a fair comparison, we keep other structures the same and only use \(\mathcal{L}_{\mathrm{ID}}\) and \(\mathcal{L}_{\mathrm{HCT}}\) in the training phase. The results are reported in Table 4. The accuracy is inferior when only a shared branch or only a specific branch is used, whereas clear performance gains are achieved when the specific and shared features of the dual-branch structure are both utilized.

Comparison of different augmented modalities. To verify the superiority of the dual-branch structure augmentation, we compare it with other existing modality augmentation strategies, including the X modality [30] and grayscale modality [55]. The ablation experiment is conducted on SYSU-MM01 dataset in the all search single-shot mode. The results are shown in Table 5. Our dual-branch modality augmentation method outperforms the other augmentation methods.

4.5 Visualization

Retrieval result. To further highlight the advantages of the proposed SSRL model, we compare it with the baseline on the SYSU-MM01 dataset using the single-shot setting and the all search mode. The Rank-10 retrieval results are displayed in Fig. 5. In general, the ranking lists are greatly improved by the proposed SSRL method, with more correctly retrieved images placed in the top positions.

Fig. 5 Rank-10 retrieval results obtained by the baseline and the proposed SSRL model on the SYSU-MM01 dataset. For each retrieval case, the query images in the first column are NIR images, and the gallery images are VIS images. The retrieved VIS images with green bounding boxes have the same identities as the query images, and those with red bounding boxes have different identities from the query images

Attention to patterns. One of the key goals of the VI-ReID task is to improve the discriminability of features. We visualize the pixel-level pattern mapping learned by SSRL to further illustrate that it can learn modality-specific and modality-invariant features. We apply Grad-CAM [56] to visualize these areas by highlighting them on the image. Since our approach uses the PCB method, we separate the feature map into 6 sections, as presented in Fig. 6. Comparing each part of the segmentation in the RGB and NIR modalities, the baseline model focuses on only a few areas. In contrast, our SSRL model focuses on specific and shared information separately through the dual-branch structure.

Fig. 6 Visualization of feature response maps of the baseline and the proposed SSRL model. For each example, the first images show the RGB and NIR images, the second images are the corresponding feature response maps of the baseline, and the third and fourth images are the corresponding feature response maps of the specific branch and the shared branch

Feature distribution. To further analyze the effectiveness of the IAL loss and the ISL loss, we use t-SNE [57] to transform high-dimensional feature vectors into two-dimensional vectors. As shown in Fig. 7(b), compared with the visualization results in Fig. 7(a), the features extracted by SSRL with the IAL loss and the ISL loss are better clustered. The distances between the centers of different identities are larger and the boundaries among them are clearer, verifying that the learned features are more discriminative.

Fig. 7 Visualization of learned features, where each color represents an identity in the testing set. The circles and triangles indicate the features extracted from the visible and infrared modalities. A total of 10 persons are selected from the test set. The samples with the same color are from the same person. (a) Features extracted by our SSRL model excluding the IAL loss and ISL loss. (b) Features extracted by our SSRL model

4.6 Parameters analysis

The proposed SSRL involves two key parameters: the intra-class aggregation loss weight \(\lambda _{2}\) and the inter-class separation loss weight \(\lambda _{3}\). As seen in Fig. 8 and Fig. 9, the two parameters are investigated by setting them to various values. Setting \(\lambda _{2}\) to 1.0 and \(\lambda _{3}\) to 2.0 achieves the best performance for \(\mathcal{L}_{\mathrm{IAL}}\) and \(\mathcal{L}_{\mathrm{ISL}}\), respectively. We then analyze the performance of our SSRL model with different numbers of parts p, since our method adopts the PCB method; p is the number of horizontal stripes into which the feature map is sliced. The performance is optimal when p is 6, as illustrated in Fig. 10. Because the proposed SSRL network includes both a specific branch and a shared branch, we also compare it with the baseline in terms of floating-point operations (FLOPs) and number of parameters. As shown in Table 6, the computation and the number of parameters increase compared with the baseline, but Table 3 shows a substantial improvement in accuracy.

5 Conclusion

In this paper, we propose a novel SSRL model that consists of two branches: a shared branch, which learns modality-invariant representations by bridging the gap at the image level, and a specific branch, which learns modality-specific representations while retaining the discriminative information of visible images. Intra-class aggregation and inter-class separation learning, which reduce the intra-class distance and increase the inter-class distance, optimize the distribution of feature embeddings at a fine-grained level. Comprehensive experiments on two VI-ReID datasets, SYSU-MM01 and RegDB, demonstrate that the proposed method outperforms state-of-the-art methods.

Availability of data and materials

All data generated or analyzed during this study are included in this published article [and its supplementary information files].

Abbreviations

- BN: batch normalization
- CMC: cumulative matching characteristic
- FLOPs: floating-point operations
- GANs: generative adversarial networks
- GMP: global max pooling
- HCT: hetero-center triplet
- IAL: intra-class aggregation learning
- ID: identity
- ISL: inter-class separation learning
- mAP: mean average precision
- NIR: near-infrared
- RGB: red green blue
- Re-ID: re-identification
- SGD: stochastic gradient descent
- SSRL: specific and shared representations learning
- VI-ReID: visible-infrared person re-identification
- VIS: visible

References

Ye, M., Shen, J., Lin, G., Xiang, T., Shao, L., & Hoi, S. C. (2021). Deep learning for person re-identification: a survey and outlook. IEEE Transactions on Pattern Analysis and Machine Intelligence, 44(6), 2872–2893.

Zhai, Y., Lu, S., Ye, Q., Shan, X., Chen, J., Ji, R., et al. (2020). Ad-cluster: augmented discriminative clustering for domain adaptive person re-identification. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 9021–9030). Piscataway: IEEE.

Liu, F., & Zhang, L. (2019). View confusion feature learning for person re-identification. In Proceedings of the IEEE/CVF international conference on computer vision (pp. 6639–6648). Piscataway: IEEE.

Liu, M., Qu, L., Nie, L., Liu, M., Duan, L., & Chen, B. (2020). Iterative local-global collaboration learning towards one-shot video person re-identification. IEEE Transactions on Image Processing, 29, 9360–9372.

Zahra, A., Perwaiz, N., Shahzad, M., & Fraz, M. M. (2023). Person re-identification: a retrospective on domain specific open challenges and future trends. Pattern Recognition, 142, 109669.

Wu, A., Zheng, W.-S., Yu, H.-X., Gong, S., & Lai, J. (2017). RGB-infrared cross-modality person re-identification. In Proceedings of the IEEE international conference on computer vision (pp. 5380–5389). Piscataway: IEEE.

Liu, H., Xia, D., Jiang, W., & Xu, C. (2022). Towards homogeneous modality learning and multi-granularity information exploration for visible-infrared person re-identification. ArXiv preprint. arXiv:2204.04842.

Wang, Z., Wang, Z., Zheng, Y., Chuang, Y.-Y., & Satoh, S. (2019). Learning to reduce dual-level discrepancy for infrared-visible person re-identification. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 618–626). Piscataway: IEEE.

Wang, G.-A., Zhang, T., Yang, Y., Cheng, J., Chang, J., Liang, X., et al. (2020). Cross-modality paired-images generation for RGB-infrared person re-identification. In Proceedings of the 34th AAAI conference on artificial intelligence (pp. 12144–12151). Palo Alto: AAAI Press.

Ye, M., Shen, J., Crandall, D. J., Shao, L., & Luo, J. (2020). Dynamic dual-attentive aggregation learning for visible-infrared person re-identification. In A. Vedaldi, H. Bischof, T. Brox, et al. (Eds.), Proceedings of the 16th European conference on computer vision (pp. 229–247). Berlin: Springer.

Wu, A., Zheng, W.-S., Gong, S., & Lai, J. (2020). RGB-IR person re-identification by cross-modality similarity preservation. International Journal of Computer Vision, 128, 1765–1785.

Lu, Y., Wu, Y., Liu, B., Zhang, T., Li, B., Chu, Q., et al. (2020). Cross-modality person re-identification with shared-specific feature transfer. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 13379–13389). Piscataway: IEEE.

Jin, X., Lan, C., Zeng, W., Chen, Z., & Zhang, L. (2020). Style normalization and restitution for generalizable person re-identification. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 3143–3152). Piscataway: IEEE.

Wang, G., Yang, S., Liu, H., Wang, Z., Yang, Y., Wang, S., et al. (2020). High-order information matters: learning relation and topology for occluded person re-identification. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 6449–6458). Piscataway: IEEE.

Zhou, J., Su, B., & Wu, Y. (2020). Online joint multi-metric adaptation from frequent sharing-subset mining for person re-identification. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 2909–2918). Piscataway: IEEE.

Lin, Y., Zheng, L., Zheng, Z., Wu, Y., Hu, Z., Yan, C., et al. (2019). Improving person re-identification by attribute and identity learning. Pattern Recognition, 95, 151–161.

Sun, Y., Zheng, L., Yang, Y., Tian, Q., & Wang, S. (2018). Beyond part models: person retrieval with refined part pooling (and a strong convolutional baseline). In V. Ferrari, M. Hebert, C. Sminchisescu, et al. (Eds.), Proceedings of the 15th European conference on computer vision (pp. 480–496). Cham: Springer.

Li, W., Zhu, X., & Gong, S. (2018). Harmonious attention network for person re-identification. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 2285–2294). Piscataway: IEEE.

Zhao, Z., Song, R., Zhang, Q., Duan, P., & Zhang, Y. (2022). JoT-GAN: a framework for jointly training GAN and person re-identification model. ACM Transactions on Multimedia Computing Communications and Applications, 18(1), 1–18.

Hao, X., Zhao, S., Ye, M., & Shen, J. (2021). Cross-modality person re-identification via modality confusion and center aggregation. In Proceedings of the IEEE/CVF international conference on computer vision (pp. 16403–16412). Piscataway: IEEE.

Ye, M., Lan, X., Li, J., & Yuen, P. (2018). Hierarchical discriminative learning for visible thermal person re-identification. In S. A. McIlraith & K. Q. Weinberger (Eds.), Proceedings of the 32nd AAAI conference on artificial intelligence (pp. 7501–7508). Palo Alto: AAAI Press.

Ye, M., Wang, Z., Lan, X., & Yuen, P. C. (2018). Visible thermal person re-identification via dual-constrained top-ranking. In Proceedings of the 27th international joint conference on artificial intelligence (pp. 1092–1099). San Francisco: Morgan Kaufmann.

Zhu, Y., Yang, Z., Wang, L., Zhao, S., Hu, X., & Tao, D. (2020). Hetero-center loss for cross-modality person re-identification. Neurocomputing, 386, 97–109.

Liu, H., Tan, X., & Zhou, X. (2020). Parameter sharing exploration and hetero-center triplet loss for visible-thermal person re-identification. IEEE Transactions on Multimedia, 23, 4414–4425.

Hao, Y., Wang, N., Li, J., & Gao, X. (2019). HSME: hypersphere manifold embedding for visible thermal person re-identification. In Proceedings of the 33rd AAAI conference on artificial intelligence (pp. 8385–8392). Palo Alto: AAAI Press.

Liu, H., Cheng, J., Wang, W., Su, Y., & Bai, H. (2020). Enhancing the discriminative feature learning for visible-thermal cross-modality person re-identification. Neurocomputing, 398, 11–19.

Zhao, Y.-B., Lin, J.-W., Xuan, Q., & Xi, X. (2019). HPILIN: a feature learning framework for cross-modality person re-identification. IET Image Processing, 13(14), 2897–2904.

Choi, S., Lee, S., Kim, Y., Kim, T., & Kim, C. (2020). Hi-CMD: hierarchical cross-modality disentanglement for visible-infrared person re-identification. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 10257–10266). Piscataway: IEEE.

Wang, G., Zhang, T., Cheng, J., Liu, S., Yang, Y., & Hou, Z. (2019). RGB-infrared cross-modality person re-identification via joint pixel and feature alignment. In Proceedings of the IEEE/CVF international conference on computer vision (pp. 3623–3632). Piscataway: IEEE.

Li, D., Wei, X., Hong, X., & Gong, Y. (2020). Infrared-visible cross-modal person re-identification with an x modality. In Proceedings of the AAAI conference on artificial intelligence (pp. 4610–4617). Palo Alto: AAAI Press.

He, K., Zhang, X., Ren, S., & Sun, J. (2016). Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 770–778). Piscataway: IEEE.

Wang, G., Yuan, Y., Chen, X., Li, J., & Zhou, X. (2018). Learning discriminative features with multiple granularities for person re-identification. In Proceedings of the 26th ACM international conference on multimedia (pp. 274–282). New York: ACM.

Zheng, L., Yang, Y., & Hauptmann, A. G. (2016). Person re-identification: past, present and future. ArXiv preprint. arXiv:1610.02984.

Nguyen, D. T., Hong, H. G., Kim, K. W., & Park, K. R. (2017). Person recognition system based on a combination of body images from visible light and thermal cameras. Sensors, 17(3), 605.

Moon, H., & Phillips, P. J. (2001). Computational and performance aspects of PCA-based face-recognition algorithms. Perception, 30(3), 303–321.

Zhong, Z., Zheng, L., Kang, G., Li, S., & Yang, Y. (2020). Random erasing data augmentation. In Proceedings of the 34th AAAI conference on artificial intelligence (pp. 13001–13008). Palo Alto: AAAI Press.

Luo, H., Jiang, W., Gu, Y., Liu, F., Liao, X., Lai, S., et al. (2019). A strong baseline and batch normalization neck for deep person re-identification. IEEE Transactions on Multimedia, 22(10), 2597–2609.

Dai, P., Ji, R., Wang, H., Wu, Q., & Huang, Y. (2018). Cross-modality person re-identification with generative adversarial training. In Proceedings of the 27th international joint conference on artificial intelligence (pp. 677–683). San Francisco: Morgan Kaufmann.

Chen, Y., Wan, L., Li, Z., Jing, Q., & Sun, Z. (2021). Neural feature search for RGB-infrared person re-identification. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 587–597). Piscataway: IEEE.

Gao, Y., Liang, T., Jin, Y., Gu, X., Liu, W., Li, Y., et al. (2021). MSO: multi-feature space joint optimization network for RGB-infrared person re-identification. In Proceedings of the 29th ACM international conference on multimedia (pp. 5257–5265). New York: ACM.

Wei, Z., Yang, X., Wang, N., & Gao, X. (2021). Syncretic modality collaborative learning for visible infrared person re-identification. In Proceedings of the IEEE/CVF international conference on computer vision (pp. 225–234). Piscataway: IEEE.

Ye, M., Ruan, W., Du, B., & Zheng, M. Z. (2021). Channel augmented joint learning for visible-infrared recognition. In Proceedings of the IEEE/CVF international conference on computer vision (pp. 13567–13576). Piscataway: IEEE.

Wu, Q., Dai, P., Chen, J., Lin, C.-W., Wu, Y., Huang, F., et al. (2021). Discover cross-modality nuances for visible-infrared person re-identification. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 4330–4339). Piscataway: IEEE.

Zheng, X., Chen, X., & Lu, X. (2022). Visible-infrared person re-identification via partially interactive collaboration. IEEE Transactions on Image Processing, 31, 6951–6963.

Huang, Z., Liu, J., Li, L., Zheng, K., & Zha, Z.-J. (2022). Modality-adaptive mixup and invariant decomposition for RGB-infrared person re-identification. In Proceedings of the 36th AAAI conference on artificial intelligence (pp. 1034–1042). Palo Alto: AAAI Press.

Chen, C., Ye, M., Qi, M., Wu, J., Jiang, J., & Lin, C.-W. (2022). Structure-aware positional transformer for visible-infrared person re-identification. IEEE Transactions on Image Processing, 31, 2352–2364.

Zhang, Q., Lai, C., Liu, J., Huang, N., & Han, J. (2022). FMCNet: feature-level modality compensation for visible-infrared person re-identification. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 7349–7358). Piscataway: IEEE.

Yang, M., Huang, Z., Hu, P., Li, T., Lv, J., & Peng, X. (2022). Learning with twin noisy labels for visible-infrared person re-identification. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 14308–14317). Piscataway: IEEE.

Zhang, Y., Yan, Y., Lu, Y., & Wang, H. (2021). Towards a unified middle modality learning for visible-infrared person re-identification. In Proceedings of the 29th ACM international conference on multimedia (pp. 788–796). New York: ACM.

Sun, H., Liu, J., Zhang, Z., Wang, C., Qu, Y., Xie, Y., et al. (2022). Not all pixels are matched: dense contrastive learning for cross-modality person re-identification. In Proceedings of the 30th ACM international conference on multimedia (pp. 5333–5341). New York: ACM.

Li, X., Lu, Y., Liu, B., Liu, Y., Yin, G., Chu, Q., et al. (2022). Counterfactual intervention feature transfer for visible-infrared person re-identification. In Proceedings of the 17th European conference on computer vision (pp. 381–398). Berlin: Springer.

Liu, J., Sun, Y., Zhu, F., Pei, H., Yang, Y., & Li, W. (2022). Learning memory-augmented unidirectional metrics for cross-modality person re-identification. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 19366–19375). Piscataway: IEEE.

Zhang, Y., & Wang, H. (2023). Diverse embedding expansion network and low-light cross-modality benchmark for visible-infrared person re-identification. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 2153–2162). Piscataway: IEEE.

Park, H., Lee, S., Lee, J., & Ham, B. (2021). Learning by aligning: visible-infrared person re-identification using cross-modal correspondences. In Proceedings of the IEEE/CVF international conference on computer vision (pp. 12046–12055). Piscataway: IEEE.

Ye, M., Shen, J., & Shao, L. (2020). Visible-infrared person re-identification via homogeneous augmented tri-modal learning. IEEE Transactions on Information Forensics and Security, 16, 728–739.

Selvaraju, R. R., Cogswell, M., Das, A., Vedantam, R., Parikh, D., & Batra, D. (2017). Grad-CAM: visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE international conference on computer vision (pp. 618–626). Piscataway: IEEE.

van der Maaten, L., & Hinton, G. (2008). Visualizing data using t-SNE. Journal of Machine Learning Research, 9(11), 2579–2605.

Acknowledgements

I would like to express my gratitude to several individuals who have supported me throughout the process of completing this paper. First, I thank my academic supervisor, Aihua Zheng, for her invaluable guidance, feedback, and encouragement. I am also grateful to Anhui Provincial Key Laboratory of Multimodal Cognitive Computation for providing me with the necessary resources and facilities. Finally, I appreciate all the participants who generously gave their time and shared their experiences for the purpose of this study.

Funding

This work is supported by the National Key R&D Program of China (2022ZD0160605), the National Natural Science Foundation of China (61976002), the University Synergy Innovation Program of Anhui Province (GXXT-2022-036), the Natural Science Foundation of Anhui Province (No. 2208085J18), the National Natural Science Foundation of China under Grant (62106006), and the Natural Science Foundation of Anhui Higher Education Institution (No. 2022AH040014).

Author information

Contributions

All authors contributed to the study conception and design. Material preparation, data collection and analysis were performed by AZ, JL and ZW. The first draft of the manuscript was written by JL and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors have no relevant financial or non-financial interests to disclose.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zheng, A., Liu, J., Wang, Z. et al. Visible-infrared person re-identification via specific and shared representations learning. Vis. Intell. 1, 29 (2023). https://doi.org/10.1007/s44267-023-00032-9
