Abstract

Addressing the pressing demand for rapid and inexpensive coagulation testing in cardiovascular care, this study introduces a novel application of repurposed COVID-19 rapid antigen tests (RATs) as paper-based lateral flow assays (LFAs), combined with machine learning, for evaluating coagulation status. By further developing a mobile app prototype, we present a platform that could enable clinicians to make immediate and accurate anticoagulant dosing adjustments using existing post-pandemic resources. Our proof-of-concept employs a random forest machine learning classifier to interpret image feature variations on the RAT nitrocellulose (NC) membrane, correlating the wicked diffusion distance of red blood cells (RBCs) in recalcified citrated whole blood with changes in coagulative viscosity to give an easily interpreted readout. Supported by confocal imaging of the paper microfluidics, our approach provides insight into the mechanisms by which individual coagulation components act, and achieves high classification precision, recall and F1-scores. The dose-dependent increase in RBC wicked diffusion distance with enoxaparin concentration paves the way for machine learning to inform real-time dose adjustments tailored to individual patient profiles to optimise therapeutic outcomes. This study not only demonstrates the potential of leveraging surplus RATs for coagulation management but also exemplifies a cost-effective, rapid and smart strategy to enhance clinical decision-making in the post-pandemic era.


Highlights

• Repurposed COVID-19 RATs provide an ideal platform for observing differences in blood coagulability.

• Random Forest image classification algorithms can facilitate rapid coagulation status assessment on a paper-based LFA platform.



Introduction

The demand for prompt, precise and cost-efficient coagulability testing is particularly pronounced in intensive care units and cardiovascular surgery, where the judicious administration of anticoagulants is critical. Balancing the reduction of thrombotic events against the risk of bleeding is a complex and vital aspect of patient care. Traditional coagulation tests include the activated clotting time (ACT), the most commonly used point-of-care (POC) coagulation test, as well as the prothrombin time (PT) and the activated partial thromboplastin time (APTT), which assess the extrinsic and intrinsic coagulation pathways, respectively. These tests are often cumbersome and costly, and their use may be limited in urgent care because of time and resource constraints [1,2,3].

Cardiovascular diseases (CVDs) are the leading cause of death globally, responsible for 32% of all deaths according to World Health Organization (WHO) statistics [4], with an anticipated increase to 23 million deaths by 2030 [5]. Although ACT, PT and APTT provide essential data, they fall short of offering immediate guidance for precise anticoagulant dosing because they rely on analyses of platelet-poor plasma samples and require blood processing [6].

The optimization of antithrombotic medication is a critical component in preventing severe complications such as stroke, pulmonary embolism and myocardial infarction. However, without rapid diagnostic methods to evaluate complex thrombotic mechanisms, excessive anticoagulation often results in significant bleeding, contributing to 60% of such adverse outcomes [7]. The financial burden of coagulation status monitoring further challenges healthcare systems and patients, particularly in economically disadvantaged areas [8].

Coagulation testing devices such as the CoaguChek® XS Plus (Roche Diagnostics GmbH, Mannheim, Germany), which measures the international normalized ratio (INR) [9], offer accurate coagulation metrics but can be prohibitively expensive owing to their complex, often electrochemical, detection methods, and sometimes necessitate repeated testing because of complex user instructions and differences in handling. LFAs, with their simple design and affordable materials such as cellulose or nitrocellulose (NC), offer an inexpensive route to accessible diagnostics and self-monitoring, thereby reducing the healthcare burden [10, 11]. These devices, and microfluidic devices more broadly, are therefore well suited to POC testing (POCT) across various applications, including sample collection [12, 13], disease detection [14, 15] and drug delivery [16]; however, they often lack outputs that are easily interpretable and directly translatable into clinical action.

This study explores the integration of machine learning with paper-based LFAs for coagulation status determination. It aims to address these gaps by (1) providing automated classification of coagulation severity, thus offering insights into individual platelet function and fibrin production, and (2) enhancing the dosing precision of the antithrombotic medication enoxaparin. By simulating 'normal' coagulation diffusion distances, we seek to elevate the accessibility, affordability, and efficiency of coagulability testing, potentially leading to improved patient outcomes and supporting clinical decision-making processes.

Results

Calcium-dependent wicking diffusion on repurposed RAT NC membrane for coagulability assessment

To assess the coagulability of blood under varying pathological conditions, we utilized citrate-anticoagulated whole blood. This blood was subsequently recalcified with incremental CaCl2 doses ranging from 0 to 100 mM in 25 mM increments. Following recalcification, the blood was introduced to the TESTSEALABS® RAPID TEST KIT COVID-19 NC membrane, repurposed to evaluate blood coagulability through capillary action over a 10 min period (Fig. 1a). The extent of coagulation, influenced by the reactivation of the coagulation cascade and resultant changes in blood viscosity, was quantified by measuring the diffusion distances of the RBC front across different CaCl2 concentrations (n = 7).

Fig. 1 Recalcification of citrate-anticoagulated whole blood produces a dose-dependent wicking distance response. a Image of the TESTSEALABS® RAPID TEST KIT cassettes used for experiments and schematic of citrate-anticoagulated whole blood samples treated with 0 mM, 50 mM and 100 mM CaCl2 and pipetted onto a RAT cassette to measure diffusion distance. b Image and schematic of changes in wicked distance, categorised into coagulation severity bands from 1 (Normal) to 5 (Severe), for different recalcification doses following 10 min of diffusion. c Cropped images of the wicked distance of citrate-anticoagulated whole blood treated with 0 mM, 25 mM, 50 mM, 75 mM and 100 mM CaCl2 following 10 min of diffusion (scale bar: 1 mm); the wicked distance equals the distance travelled by the RBC front. d Wicked distance of citrate-anticoagulated whole blood at different Ca2+ concentrations, shown as a bar chart (mean ± SD; n = 7; ns = not significant; *p < 0.05; **p < 0.01; ***p < 0.001; ****p < 0.0001; one-way ANOVA). Wicked distance over time of the RBC (circles) and plasma (squares) fronts for e 0 mM and f 100 mM CaCl2 over the 10 min experiment, shown as line graphs (mean ± SD; n = 3).

Recalcification had a significant impact on coagulation dynamics in citrate-anticoagulated whole blood. Non-recalcified blood exhibited a significantly greater diffusion distance (1.304 cm) than the recalcified groups, which showed progressively shorter diffusion distances at 25 mM (0.997 cm), 50 mM (0.713 cm), 75 mM (0.531 cm) and 100 mM (0.426 cm) CaCl2 (Fig. 1b, c, d), revealing an inverse relationship between RBC wicked distance and CaCl2 concentration. Statistical analysis revealed marked differences between successive recalcified groups: 25 mM vs 50 mM (p < 0.0001), 50 mM vs 75 mM (p = 0.0004), and 75 mM vs 100 mM (p < 0.0488) (Fig. 1d).
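As a quick check on the inverse trend, the five group means reported above can be summarised with a Pearson correlation and a least-squares line. This is a minimal NumPy sketch that works on group means rather than the n = 7 individual measurements, so it does not replace the ANOVA; the shrinking step sizes also show that the relationship is monotonic but not strictly linear.

```python
import numpy as np

# Group means reported in the text (n = 7 per group); replicates are not used here.
ca_mM = np.array([0, 25, 50, 75, 100], dtype=float)
rbc_cm = np.array([1.304, 0.997, 0.713, 0.531, 0.426])

# Pearson correlation between CaCl2 dose and mean RBC wicked distance (r ≈ -0.98).
r = np.corrcoef(ca_mM, rbc_cm)[0, 1]

# Least-squares line: distance ≈ slope * dose + intercept.
slope, intercept = np.polyfit(ca_mM, rbc_cm, 1)

# Change in mean distance between successive 25 mM increments.
steps_cm = np.diff(rbc_cm)

print(f"r = {r:.3f}, slope = {slope:.5f} cm/mM")
print("successive changes (cm):", np.round(steps_cm, 3))
```

The negative slope summarises the trend, while the decreasing magnitude of successive changes suggests the response begins to level off at the highest doses.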

These findings underscore the utility of repurposed paper-based LFAs, such as the TESTSEALABS® RAPID TEST KIT COVID-19 and OnSite® COVID-19 Ag Self-Test (Supplementary Fig. 1a), in detecting variations in blood coagulability with high sensitivity. With this approach, we build upon the foundational work of Li et al. [17], demonstrating that distinct levels of coagulation can be discerned using minimal blood volumes, akin to those obtained from a finger-prick [10]. This adaptation showcases the versatility of LFAs beyond their original purpose and highlights the potential for rapid, on-site assessment of blood coagulability.

Additionally, as the blood sample wicked through the NC membrane by capillary action, it separated into its constituent components: RBCs and plasma, the latter carrying platelets, proteins and coagulation factors [18, 19]. These components account for approximately 45% and 55% of blood volume, respectively [20].

Fig. 1e and f show the wicked distances of the RBC and plasma fronts over time as they diffuse through the NC membrane at 0 mM and 100 mM CaCl2. Under both recalcification conditions, the plasma front travels faster and further than the RBC front, consistent with Li et al. [17]. The RBC wicked distances stabilise (saturate) after approximately 8 min and 3.5 min for 0 mM and 100 mM recalcification, respectively, with maximum diffusion rates of 2.11 mm/min and 0.684 mm/min. This stabilisation coincides with a decreasing gradient as the NC membrane becomes saturated. Plasma wicked diffusion rates substantially exceed those of the RBC front, peaking at 3.34 mm/min and 1.78 mm/min for 0 mM and 100 mM recalcification, respectively, and saturate at the same time as the RBC front. Notably, with 0 mM recalcification, the plasma front reaches the end of the NC membrane (~22 mm).
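The maximum diffusion rates and saturation times quoted above correspond to the peak of the front velocity and the point at which it levels off. A minimal NumPy sketch of how such quantities could be derived from tracked front positions is shown below; the 5% saturation criterion, the helper name `wicking_kinetics` and the synthetic curve are assumptions for illustration, not the authors' procedure or data.

```python
import numpy as np


def wicking_kinetics(t_min, d_mm, sat_fraction=0.05):
    """Return (peak front velocity in mm/min, saturation time in min).

    Saturation is defined here (an assumption) as the first time after the peak
    at which the velocity falls below `sat_fraction` of its maximum.
    """
    t = np.asarray(t_min, dtype=float)
    d = np.asarray(d_mm, dtype=float)
    v = np.gradient(d, t)  # central differences inside, one-sided at the ends
    i_peak = int(np.argmax(v))
    v_max = float(v[i_peak])
    below = np.nonzero(v[i_peak:] < sat_fraction * v_max)[0]
    t_sat = float(t[i_peak + below[0]]) if below.size else float("nan")
    return v_max, t_sat


# Synthetic saturating front position (not measured data): d = 13 * (1 - exp(-t / 2)).
t = np.linspace(0, 10, 101)
d = 13 * (1 - np.exp(-t / 2))
v_max, t_sat = wicking_kinetics(t, d)
print(f"peak velocity ≈ {v_max:.2f} mm/min, saturation at ≈ {t_sat:.1f} min")
```

Searching for saturation only after the velocity peak avoids mislabelling a slow initial spreading phase as saturation.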

The distance travelled and the rate of diffusion of both the RBC and plasma fronts are consistent with the relationships described by Washburn's equation [21, 22] and Darcy's law [23]. Increasing the CaCl2 concentration to 100 mM markedly accelerates coagulation, increasing the dynamic viscosity of both the RBC and plasma components. This results in shorter wicked distances and slower rates of diffusion as the blood transitions from a viscoelastic liquid to a solid gel, with significant changes in its rheological properties [24]. These changes arise from CaCl2-stimulated conversion of fibrinogen into fibrin [25], which increases RBC aggregation, a major determinant of blood viscosity [26, 27], particularly under low-shear conditions; consequently, larger changes are observed at the RBC front [24]. These findings suggest that changes in RBC wicked distance may serve as a more sensitive metric of coagulation and of changes in blood viscosity, a parameter that has been linked to many CVDs but has historically been under-utilised owing to the difficulty of measuring it dynamically.
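For reference, the Lucas–Washburn relation for capillary penetration into a porous medium is L(t) = sqrt(γ r cos θ t / (2μ)), where γ is surface tension, r an effective pore radius, θ the contact angle and μ the dynamic viscosity. The sketch below assumes this idealised form (Newtonian fluid, uniform cylindrical pores, unlimited reservoir), which a clotting 10 µL droplet does not satisfy, and the parameter values are hypothetical. It is meant only to show the scaling that links higher viscosity to shorter wicked distance, and larger or more wettable pores to longer distance, not to predict absolute distances.

```python
import math


def washburn_length(t_s, gamma_N_per_m, pore_radius_m, contact_angle_deg, viscosity_Pa_s):
    """Idealised Lucas-Washburn penetration length (m) after t_s seconds.

    L = sqrt(gamma * r * cos(theta) * t / (2 * mu)). Returns 0.0 for contact
    angles >= 90 degrees, where spontaneous capillary uptake does not occur.
    """
    if contact_angle_deg >= 90:
        return 0.0
    cos_theta = math.cos(math.radians(contact_angle_deg))
    return math.sqrt(gamma_N_per_m * pore_radius_m * cos_theta * t_s / (2.0 * viscosity_Pa_s))


# Hypothetical parameters: 600 s, 0.05 N/m, 10 um radius, 60 degree contact angle.
base_L = washburn_length(600, 0.05, 10e-6, 60, 1e-3)

# At fixed time, L scales as 1/sqrt(mu): a fourfold viscosity rise halves the distance.
ratio_viscosity = washburn_length(600, 0.05, 10e-6, 60, 4e-3) / base_L

# L scales as sqrt(r): a fourfold larger effective pore radius doubles the distance.
ratio_radius = washburn_length(600, 0.05, 40e-6, 60, 1e-3) / base_L

print(f"viscosity x4 -> distance x{ratio_viscosity:.2f}; radius x4 -> distance x{ratio_radius:.2f}")
```

Only the ratios are meaningful here; the idealised model ignores tortuosity, finite sample volume and the gelation that ultimately halts the RBC front.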

The stages of dynamic variation in blood viscosity may be better distinguished by tuning the sensitivity of paper-based LFAs through the porosity and hydrophobicity of the membrane [28]. Smaller pores generate greater capillary forces but slower flow, because the liquid must overcome greater resistance to move through narrow passages; conversely, larger pores offer less resistance and allow faster flow [29, 30], since pore size influences the effective diffusion coefficient [31]. In paper-based LFAs with very small pores (< 1 μm), blood cell suspensions cannot diffuse, which decreases the sensitivity of the assay (Supplementary Fig. 2). SEM imaging of the TESTSEALABS® RAPID TEST KIT NC membrane revealed pore sizes of approximately 23.1 μm (Supplementary Fig. 3). This may be near the ideal pore size: larger than native blood cells but smaller than RBC and platelet aggregates, which improves assay sensitivity. Finding the optimal porosity will help to improve assay sensitivity while controlling the time required to interpret results.

Differential segregation of blood components on repurposed RAT paper microfluidics under variable coagulation conditions

One-way ANOVA revealed significant differences in the mean fluorescence intensity of platelets labelled for the integrin receptor glycoprotein IIb (CD41) across the groups within the LFA framework (F(3,69) = 19.23, p < 0.0001), indicating distinct platelet activation dynamics (Fig. 2a, b). Interestingly, the difference between 0 and 100 mM CaCl2 was not significant (p = 0.2437), suggesting that although platelet activation, as indicated by the CD41 signal, may increase, it does not scale linearly with CaCl2 concentration. Notably, the twofold increase in CD41 signal, together with the sharper definition of the resulting images, points towards an increased tendency for platelet aggregation or “clustering” at higher CaCl2 levels (Fig. 2a, c).

Fig. 2 Wicked distance is viscosity dependent, based on platelet–fibrin interactions. a Maximum-projection confocal images of entire RAT test strips (scale bar = 2000 μm): blank (top left), antibody characterisation (ABC, top right), and 0 mM (bottom left) and 100 mM (bottom right) CaCl2-recalcified whole blood samples. Platelets (cyan, first row), fibrin (magenta, second row) and merged (last row). Bar charts of the mean fluorescence intensity of 8-bit images sampled at random ROIs across the entire RAT test strip for b platelets and c fibrin (n = 4; ns = not significant; *p < 0.05; **p < 0.01; ***p < 0.001; ****p < 0.0001; one-way ANOVA). d SEM images of a blank test strip (left) and the RBC region of 0 mM and 100 mM recalcified blood on test strips (scale bar = 20 μm). White arrows, paper fibres; magenta arrows, fibrin; cyan arrows, platelets.

In parallel, fibrin formation differed significantly between groups (F(3,72) = 41.72, p < 0.0001), with the 100 mM CaCl2 group showing the highest mean fluorescence intensity (203.6 arbitrary units, AU), in stark contrast to the 0 mM group (p < 0.0001). This suggests pronounced fibrin network formation under high CaCl2 conditions. Furthermore, heterogeneously thick fibrin-rich areas emerged after 100 mM CaCl2 treatment, producing larger deviations across regions of interest (ROIs): 109.5 ± 47.65 for 100 mM compared with 61.02 ± 7.1 for 0 mM (Fig. 2b, c). These findings underscore the pivotal role of fibrin in modulating droplet coagulation, reducing diffusion distance and enhancing overall coagulability.
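The ROI-based intensity summaries in Fig. 2b, c rest on sampling regions of an 8-bit image at random. The sketch below shows one way to do this with NumPy; the helper name `random_roi_intensity`, the ROI count, the ROI size and the uniform placement are hypothetical choices, since those parameters are not specified here. Per-group arrays of ROI means produced this way could then be compared with a one-way ANOVA, for example via `scipy.stats.f_oneway`.

```python
import numpy as np


def random_roi_intensity(img, n_rois=20, roi_size=50, seed=None):
    """Sample square ROIs uniformly at random from a 2D image.

    Returns (mean of ROI means, SD of ROI means, array of ROI means).
    """
    img = np.asarray(img)
    h, w = img.shape
    if roi_size > h or roi_size > w:
        raise ValueError("ROI is larger than the image")
    rng = np.random.default_rng(seed)
    # Top-left corners chosen so every ROI lies fully inside the image.
    ys = rng.integers(0, h - roi_size + 1, size=n_rois)
    xs = rng.integers(0, w - roi_size + 1, size=n_rois)
    roi_means = np.array(
        [img[y:y + roi_size, x:x + roi_size].mean() for y, x in zip(ys, xs)]
    )
    return float(roi_means.mean()), float(roi_means.std(ddof=1)), roi_means


# Example on a synthetic 8-bit image (hypothetical data).
example = np.random.default_rng(1).integers(0, 256, size=(400, 600), dtype=np.uint8)
m, s, per_roi = random_roi_intensity(example, n_rois=10, roi_size=50, seed=0)
print(f"{m:.1f} ± {s:.1f} AU over {per_roi.size} ROIs")
```

A heterogeneous fibrin network would show up as a larger SD of ROI means, which is the pattern described for the 100 mM group.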

SEM imaging of the RAT NC membrane further provided visual confirmation of these dynamics (Fig. 2d). The images illustrate the compaction effect within blood clots, which is particularly noticeable when whole blood recalcified with 0 mM and 100 mM CaCl2 is compared against a control test cassette (Fig. 2a). The microscopic analysis revealed a marked reduction in NC membrane pore size with increasing CaCl2, visually substantiating the biochemical data on calcium-induced coagulation through platelet–fibrin interactions [32].

These observations collectively highlight the nuanced and complex nature of blood coagulation as captured by the innovative repurposing of RAT paper microfluidics. The technology not only enables the distinct separation of blood components under varying coagulation states but also provides a detailed quantitative and qualitative insight into the coagulation process, underscoring the critical interplay between platelets and fibrin in coagulation dynamics.

Leveraging image-based machine learning for precise coagulation status classification

To advance the quantitative assessment of coagulation, we employed image-based machine learning to classify coagulation status automatically. This approach was based on analysing the wicked diffusion distance of the RBC front through binned pixel intensity histograms for each recalcification treatment group. The histograms span pixel intensities from 0 (black) to 255 (white) and cover conditions from non-recalcified to 100 mM recalcified blood (Fig. 3b, Supplementary Fig. 4a, b, c). There was a clear shift toward higher pixel intensities with increasing CaCl2 concentration, reflecting a reduction in the wicked diffusion distance of the RBC front. Specifically, the average pixel intensity increased from 125.3 in the non-recalcified group to 162.8 in the 100 mM CaCl2 group (Fig. 3b, c).
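The histogram shift described above can be reproduced in outline with NumPy: a shorter RBC front leaves more pale membrane, which moves the histogram and the mean intensity toward 255. The bin count, the helper name `intensity_summary` and the synthetic two-tone strips below are illustrative assumptions, not the binning or images used in the study.

```python
import numpy as np


def intensity_summary(grey, n_bins=32):
    """Binned histogram (counts, bin edges) and mean intensity of an 8-bit greyscale image."""
    grey = np.asarray(grey)
    counts, edges = np.histogram(grey, bins=n_bins, range=(0, 256))
    return counts, edges, float(grey.mean())


# Synthetic strips: dark (RBC-stained) region on the left, pale membrane on the right.
strip_long = np.full((40, 200), 220, dtype=np.uint8)
strip_long[:, :100] = 60   # longer RBC front, as with little or no recalcification

strip_short = np.full((40, 200), 220, dtype=np.uint8)
strip_short[:, :50] = 60   # shorter RBC front, as with heavier recalcification

counts, edges, mean_long = intensity_summary(strip_long)
_, _, mean_short = intensity_summary(strip_short)
print(f"mean intensity: long front {mean_long:.1f}, short front {mean_short:.1f}")
```

Using a fixed `range=(0, 256)` keeps bin edges identical across images, so histograms from different treatment groups can be compared bin by bin.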

Fig. 3 Machine learning classification of coagulability status. a Diagram showing the conversion of an image of a 0 mM COVID-19 test strip into a greyscale image and then into a binary mask, before first-order statistics, shape/region features and GLCM features were extracted. b, c Binned pixel histograms showing the number of pixels at each pixel intensity for images of the 0 mM and 100 mM CaCl2 treatment groups. ROC curves d within a single model (left) and e for different test sizes (middle), and f precision–recall curves for test sizes increasing from 0.2 to 0.5 to 0.7. Confusion matrix of the RF predictions of true versus predicted coagulation bands. g 3D Cartesian plot of the mean first-order statistics, eccentricity and entropy features extracted across the different coagulation bands.

To automate coagulation status classification, images of the test strips were standardised to greyscale (Fig. 3a), and a random forest model comprising 100 decision trees was applied (Fig. 7). The model was trained on 22 features extracted from the images across the treatment groups, spanning statistical metrics (mean, min–max), entropy, geometric properties (area, major axis length) and textural features (grey-level co-occurrence matrix, GLCM), to provide a comprehensive feature set for analysis.
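The full 22-feature set is not enumerated here, so the following NumPy-only sketch computes a hypothetical subset of the named feature families: first-order statistics, Shannon entropy, the area of a thresholded binary mask and a single GLCM contrast value for a one-pixel horizontal offset. The threshold, the assumption that RBC-stained pixels are darker than bare membrane, the eight-level quantisation and all function names are illustrative choices; a library such as scikit-image offers fuller region and GLCM descriptors.

```python
import numpy as np


def to_grey(rgb):
    """Luminance-weighted greyscale (ITU-R BT.601 weights), returned as uint8."""
    weights = np.array([0.299, 0.587, 0.114])
    return np.clip(np.rint(rgb[..., :3] @ weights), 0, 255).astype(np.uint8)


def shannon_entropy(grey):
    """Entropy (bits) of the 256-level intensity distribution."""
    counts = np.bincount(grey.ravel(), minlength=256)
    p = counts[counts > 0] / grey.size
    return float(-(p * np.log2(p)).sum())


def glcm_contrast(grey, levels=8):
    """Contrast of a normalised GLCM for horizontal neighbours (offset dx = 1)."""
    q = (grey.astype(np.int64) * levels) // 256  # quantise to `levels` grey levels
    left, right = q[:, :-1].ravel(), q[:, 1:].ravel()
    glcm = np.zeros((levels, levels))
    np.add.at(glcm, (left, right), 1)            # count co-occurring level pairs
    glcm /= glcm.sum()
    i, j = np.indices(glcm.shape)
    return float((glcm * (i - j) ** 2).sum())


def extract_features(grey, threshold=128):
    """Hypothetical feature subset for one greyscale strip image."""
    mask = grey < threshold  # assumption: RBC-stained pixels are darker than the strip
    return {
        "mean": float(grey.mean()),
        "min": int(grey.min()),
        "max": int(grey.max()),
        "entropy": shannon_entropy(grey),
        "mask_area_px": int(mask.sum()),
        "glcm_contrast": glcm_contrast(grey),
    }


# Example: half-dark, half-white synthetic strip.
demo = np.zeros((10, 10), dtype=np.uint8)
demo[:, 5:] = 255
print(extract_features(demo))
```

Each image then becomes one row of a feature matrix, which is the input a random forest classifier would be trained on.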

The model's predictive performance metrics support its efficacy. During validation, the random forest model showed excellent discrimination, with C-index values for intraclass analysis exceeding 0.95 (Fig. 3d). Multiclass ROC analysis further demonstrated the model's effectiveness across different test sizes (the fraction of images held out for testing), with C-index values of 0.83, 0.93 and 0.96 for test sizes of 0.7, 0.5 and 0.2, respectively (Fig. 3e), and precision–recall values increasing from 0.65 to 0.90 as the test size decreased (Fig. 3f). These results indicate high precision (accurate positive predictions) and high recall (capture of most actual positive instances).
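The test-size comparison above corresponds to holding out different fractions of the images for evaluation. A minimal scikit-learn sketch of that protocol, assuming a feature matrix `X` (one row per strip image) and integer severity bands `y`, is shown below: a 100-tree random forest is refit for each split and scored with macro one-vs-rest ROC AUC. The synthetic data are purely a placeholder, and the stratified split, fixed seeds and helper name `auc_by_test_size` are assumptions rather than the study's documented settings.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import train_test_split


def auc_by_test_size(X, y, test_sizes=(0.2, 0.5, 0.7), seed=0):
    """Macro one-vs-rest ROC AUC of a 100-tree random forest for each held-out fraction."""
    scores = {}
    for ts in test_sizes:
        X_tr, X_te, y_tr, y_te = train_test_split(
            X, y, test_size=ts, stratify=y, random_state=seed
        )
        clf = RandomForestClassifier(n_estimators=100, random_state=seed)
        clf.fit(X_tr, y_tr)
        proba = clf.predict_proba(X_te)  # column order follows clf.classes_
        scores[ts] = roc_auc_score(y_te, proba, multi_class="ovr", labels=clf.classes_)
    return scores


# Placeholder data: 5 severity bands x 20 images, 22 features with a band-dependent shift.
rng = np.random.default_rng(0)
y = np.repeat(np.arange(1, 6), 20)
X = rng.normal(size=(y.size, 22)) + 0.8 * y[:, None]

for ts, auc in auc_by_test_size(X, y).items():
    print(f"test_size={ts}: OvR AUC = {auc:.2f}")
```

Stratifying the split keeps every band represented in both training and test sets, which matters when large test fractions leave only a few training images per band.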

The confusion matrix (Fig. 3g) confirmed the model's class discrimination, with an average success rate of 83.3% in correctly identifying true positives and true negatives. This classification performance supports the method's reliability and efficiency in determining coagulation status and sets a promising benchmark for potential clinical application.
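An "average success rate" read off a confusion matrix can mean either overall accuracy (the diagonal share of all predictions) or the mean of per-class recalls; which summary underlies the 83.3% figure is not stated here. The NumPy sketch below computes both from integer band labels; the function names and toy labels are hypothetical.

```python
import numpy as np


def confusion_matrix(y_true, y_pred, n_classes):
    """Rows are true bands, columns are predicted bands (0-based indices)."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)
    return cm


def summarise(cm):
    """Overall accuracy, per-class recall and their mean (balanced accuracy).

    Assumes every class appears at least once among the true labels.
    """
    overall_accuracy = cm.trace() / cm.sum()
    per_class_recall = np.diag(cm) / cm.sum(axis=1)
    return float(overall_accuracy), per_class_recall, float(per_class_recall.mean())


# Toy labels (hypothetical) for three bands encoded as 0-2.
y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 2, 0]
cm = confusion_matrix(y_true, y_pred, n_classes=3)
acc, recall, balanced = summarise(cm)
print(cm)
print(f"overall accuracy = {acc:.3f}, balanced accuracy = {balanced:.3f}")
```

The two summaries coincide when classes are equally represented, as in this toy example, but diverge when some severity bands have fewer images.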

Integration of wicked diffusion distance in anticoagulant dosage optimization for enhanced clinical outcomes

Optimising antithrombotic medication dosage is pivotal in managing thrombotic and bleeding risks during medical interventions [6]. Achieving an optimal balance requires precise adjustment of anticoagulant levels. In this context, we explored the application of paper-based LFAs for refining antithrombotic dosing strategies. Specifically, we investigated the effect of a fixed 20 U dose of enoxaparin on whole blood samples preconditioned with varying concentrations of CaCl2 to simulate different coagulation states (Fig. 4a), comparing coagulation dynamics in the presence and absence of enoxaparin.

Fig. 4 Clinical relevance: antithrombotic medication dosing. a Schematic showing the testing conditions for CaCl2- and enoxaparin-dose-dependent wicked distance responses. b Images of COVID-19 test strips comparing the wicked distance of samples with and without 20 U enoxaparin at CaCl2 recalcification concentrations of 0 mM, 25 mM, 50 mM, 75 mM and 100 mM. c Images of COVID-19 test strips comparing the wicked distance of whole blood samples recalcified with 50 mM CaCl2 and treated with enoxaparin doses from 0 to 100 U in 25 U increments. Wicked distance for d increasing concentrations of CaCl2 with 20 U enoxaparin and e increasing doses of enoxaparin, shown as bar charts (mean ± SD; n = 4; ns = not significant; *p < 0.05; **p < 0.01; ***p < 0.001; ****p < 0.0001; one-way ANOVA).

Administering 20 U of enoxaparin significantly altered the coagulation profile, increasing wicked diffusion distances in samples treated with 25 mM and 50 mM CaCl2 relative to controls without enoxaparin (Fig. 4d). Specifically, enoxaparin increased the wicked diffusion distance from 0.981 cm to 1.14 cm in the 25 mM CaCl2 group and from 0.715 cm to 0.877 cm in the 50 mM group (Fig. 4b, d), indicating a notable anticoagulant effect at these concentrations (p < 0.05). Conversely, at higher CaCl2 concentrations (75 mM and 100 mM), 20 U of enoxaparin did not significantly extend the wicked diffusion distance, suggesting a plateau in the efficacy of this dose under conditions of more pronounced coagulation (Fig. 4d).

Further experiments evaluated the dose–response relationship of enoxaparin at 50 mM CaCl2, a concentration identified as a critical threshold for assessing the sensitivity and effectiveness of enoxaparin in the paper-based LFA system (Fig. 4c, e). Enoxaparin doses from 0 to 100 U were administered, revealing a positive correlation between enoxaparin dose and wicked diffusion distance: distances increased progressively to 0.897 cm, 0.962 cm, 1.023 cm and 1.125 cm for 20 U, 50 U, 75 U and 100 U of enoxaparin, respectively (Fig. 4e). All enoxaparin-treated groups showed significant increases in wicked distance compared with the control, reaching p < 0.05 for the 20 U group, and p < 0.001 and p < 0.0001 for the 50 U, 75 U and 100 U groups, respectively (Fig. 4e).
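To illustrate how such a dose–response could feed a dosing suggestion, the four mean distances reported above can be fitted with a straight line and inverted for a target wicked distance. This is a hypothetical NumPy sketch, not a validated or clinical dosing rule: it assumes linearity within 20 to 100 U at 50 mM CaCl2, uses group means only, and the helper `dose_for_target` refuses to extrapolate outside the tested range.

```python
import numpy as np

# Group means at 50 mM CaCl2 as reported in the text.
dose_U = np.array([20, 50, 75, 100], dtype=float)
dist_cm = np.array([0.897, 0.962, 1.023, 1.125])

# Least-squares line: distance ≈ slope * dose + intercept (slope roughly 0.0028 cm per U).
slope, intercept = np.polyfit(dose_U, dist_cm, 1)


def dose_for_target(target_cm):
    """Hypothetical helper: invert the linear fit for a desired wicked distance."""
    dose = (target_cm - intercept) / slope
    if not dose_U.min() <= dose <= dose_U.max():
        raise ValueError("target distance lies outside the calibrated 20-100 U range")
    return float(dose)


print(f"slope = {slope:.4f} cm/U; dose for 1.00 cm ≈ {dose_for_target(1.00):.0f} U")
```

Refusing to extrapolate is deliberate: the 75 and 100 mM results above show that the response can plateau outside the conditions used for calibration.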

These results illuminate the potential of utilizing paper-based LFAs as an innovative and practical tool for clinicians to fine-tune antithrombotic dosages. By providing a rapid, quantitative method to assess the anticoagulant effect of varying enoxaparin doses in real-time, this approach promises to contribute significantly to personalized patient care, optimizing therapeutic outcomes while minimizing bleeding risks.

Discussion

Repurposing widely available COVID-19 RATs for coagulability testing is a strategic adaptation to the post-pandemic abundance of resources and established infrastructure. These LFAs, originally designed to detect viral proteins, can potentially be modified to visualise changes in fluid viscosity, thereby providing a direct measure of coagulation status. This adaptation aligns with the urgent need for cost-saving measures in healthcare and puts surplus supplies to use in addressing a critical clinical demand. The clinical use of ACT, APTT and PT illustrates some of the challenges in accurately monitoring and managing anticoagulation therapy, compounded by variation arising from blood draw site preference or suitability and from the different anticoagulants used. Since its introduction in the late 1960s, ACT has been widely employed as a “gold standard” during cardiac surgery and requires 2–3 mL of blood; yet it shows significant output variability depending on patient haematocrit, with devices such as the Hemochron® showing positive biases of about 56 s during heparinisation [33, 34]. This variability is more pronounced because blood samples from different sites, such as arterial lines or central venous catheters, often show no consistent correlation with low-dose heparin administration; in ICU patients, for example, ACT correlates poorly with unfractionated heparin (UFH) dosage [35, 36]. This raises concerns about the reliability of coagulation measurement in CVD treatment.

Similarly, APTT is crucial for monitoring the intrinsic and common pathways of the coagulation cascade but shows significant inter-laboratory variability of 20–30 s owing to the different reagents used [3, 36]. It is also affected by lupus anticoagulants, which can falsely prolong results. With citrated plasma, APTT results can vary from 48 to 108 s depending on the assay used [3]. PT, used primarily to monitor warfarin therapy, also exhibits variability: differences in tissue factor among testing kits can lead to INR variations of about 0.5–1.0 units, highlighting the need for meticulous calibration and the lack of standardisation across testing platforms currently used in primary and secondary healthcare settings [3]. Alarmingly, Stettler et al. reported that 44.6% and 49.5% of the variance in PT/INR and APTT, respectively, was not explained by clotting factors [37]. Furthermore, these traditional methods often require separation of blood components; in mitigating the influence of hemolysis, they overlook the role of RBCs in the coagulation cascade, and they require extended periods to produce results. This becomes critical in time-sensitive situations such as cardiopulmonary bypass surgery [38], where rapid adjustments to anticoagulant dosing are imperative because thrombotic or hemorrhagic events can develop swiftly [39].

This study is a preliminary proof-of-concept demonstrating how integrating machine learning algorithms with paper-based LFAs can expedite the analysis of patient coagulation status, as an alternative POCT to ACT. We have shown that even with minimal blood volumes, on the scale obtainable by finger-prick collection [10], sensitive classification of coagulation severity is achievable without highly trained personnel. The system is portable, accessible and cost-effective, and it automates the classification of coagulation into predefined severity bands, from "Normal, 0 mM" to "Severe, 100 mM", based on the physics of fluid diffusion on NC membranes (Table 1). The significant differences in mean fluorescence intensity and clot formation across the CaCl2 treatment groups underscore the impact of platelet–fibrin interactions on coagulation severity (Fig. 2a (bottom right), c). Additionally, optimising porosity and using the dynamic wicked diffusion distances and rates of the RBC and plasma components provides information on dynamic changes in blood viscosity, which have been attributed to RBC aggregation, a major risk factor for atherosclerosis, hyperfibrinogenemia and hypertension [40].

The sensitivity of our paper-based LFAs in measuring coagulation changes through viscosity variations in recalcified, citrated whole blood represents a notable advance over previous methods that required higher CaCl2 concentrations and larger sample volumes [17]. Further optimisation may be achieved by modulating the material porosity and hydrophobicity, which govern the rate of capillary action [41] and hence the diffusion rates and sensitivity. Our work confirms an inverse relationship between the diffusion distance of the RBC front and CaCl2 concentration, supporting findings by Li et al. and Sweeney et al., complemented by Saidykhan et al.'s research on thrombin-modified LFAs for fibrinogen diffusion; such relationships are essential for establishing clinical reference ranges [17, 42, 43].

In response to the SARS-CoV-2 pandemic, telehealth and mHealth technologies [44] rapidly emerged to ease healthcare provider shortages, reduce healthcare costs and improve access to healthcare. This led to a significant focus on developing mHealth POCT technologies, with a projected market size of US$332.7 billion by 2025 [45], driven largely by machine learning [46]. Machine learning's continued evolution in healthcare is tackling challenges of data fragmentation and enabling the exploration of various disease pathologies. The performance of our random forest classifier, as indicated in Table 1, exemplifies the potential of machine learning to differentiate coagulation statuses with high sensitivity and specificity.

Although clinical adoption of machine learning algorithms is still nascent, studies by Peng et al. and Arumugam et al. show that machine learning classifiers can outperform traditional models in cardiovascular disease detection and in differentiating hypercoagulability in acute coronary syndromes and coronary artery disease, with notable accuracy [47, 48]. However, further research is needed. Optimising the porosity of the NC membrane could improve sensitivity and test times, enhancing the accuracy and precision of machine learning classifiers. Technical improvements, such as optimising lighting and resolution for machine learning classification and optimising blood sample delivery onto the RAT, could reduce handling errors and inaccuracies.

These findings, combined with our own, suggest that machine learning algorithms hold promise for assessing coagulation status via paper-based LFAs as a POCT. This could facilitate clinical adoption for anticoagulant management [49], support informed decision-making in pathology monitoring, which is particularly crucial in patients with sepsis [50] and cancer [51], and reduce financial burdens for patients. This is especially important in communities with limited access to coagulation testing machines, where our solution could serve as an effective and inexpensive alternative in lower socioeconomic regions.

Furthermore, the limited accessibility of conventional coagulation testing devices may be addressed through mHealth technologies. To this end, we propose a standalone mobile machine learning platform for the automated analysis of images collected on users' and clinicians' smartphones (Fig. 5b). The platform interprets image data from paper-based LFA experiments (Fig. 5a) in CVD to classify coagulation status and recommend anticoagulant doses, with the aim of improving patient outcomes (Fig. 5c, Supplementary Video 1).

Fig. 5 Mobile app prototype for coagulation status assessment using repurposed RATs. a Diagram of different commercially available paper-based LFAs for influenza (ImmunoCard STAT!®, Meridian Bioscience®) and HIV (DETERMINE™ HIV-1/2 AG/AB COMBO) detection, pregnancy testing (Pregnosis®) and SARS-CoV-2 diagnosis. b Mobile phone image data collection and coagulation status diagnosis using RF classification. c Interactive analysis of the TESTSEALABS® RAPID TEST KIT cassette showing coagulation status and recommended antithrombotic medication dosage (Supplementary Video 1).

Methods

Paper-based lateral flow assay (LFA) preparation

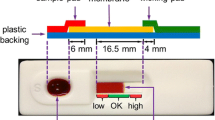

The LFA platform used in our experiments was built from TESTSEALABS® RAPID TEST KIT (Dublin, Ireland) and OnSite® COVID-19 Ag Self-Test cassettes (CTK Biotech, USA) (Fig. 6), which were chosen for their wide availability and low cost.

Prior to experimentation, the sample pad of each test cassette was removed to reduce the effect of manufacturing differences. To ensure consistent data collection, the strips were then placed back into the cassette, which provided a level platform and reduced variability. During experiments, the blood sample was dispensed onto the NC membrane and allowed to absorb and wick for 10 min, after which the strip was imaged for further analysis.

Blood collection

All procedures involving blood collection from healthy donors were approved by the University of Sydney (USYD) Human Research Ethics Committee (HREC, project 2023/582). With written informed consent, donor blood was drawn by trained on-site phlebotomists using a 19G butterfly needle into a syringe containing 3.8% sodium citrate (Sigma Aldrich, USA), as previously described [52, 53], at a 1:10 anticoagulant-to-blood ratio. Compared with vacutainers, this approach offers greater control over the anticoagulant ratio while limiting pre-activation of platelets and coagulation. Blood was used for experiments within 4 h of collection, as recommended [54].

Whole blood sample preparation

Whole blood (WB) was recalcified with CaCl2 solution at final concentrations ranging from 0 to 100 mM in 25 mM increments, gently mixed, and incubated for 2 min to initiate the coagulation cascade [17]. Each recalcified sample was used only once to ensure consistent coagulation. Immediately before each experiment, 10 µL of recalcified WB was transferred onto the NC membrane of the processed TESTSEALABS® RAPID TEST KIT or OnSite® COVID-19 Ag Self-Test cassette using a P20 pipette with cut tips to minimise shear (Fig. 1a). Videos were recorded with an iPhone 14 camera during wicking and images were taken after 10 min, and the wicked distances of the RBC and plasma fronts were then measured for analysis. For antithrombotic dosing experiments, 20 U of enoxaparin (Sanofi S.A., France) was added to the CaCl2 solution before recalcification. To compare the effect of higher enoxaparin doses on wicked distance with that of uncalcified WB, dose-dependent assays were performed using 0–100 U enoxaparin in 50 mM CaCl2 solution.
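The Methods do not state how the wicked distances were extracted from the smartphone images. As an illustration only, the sketch below estimates the RBC front from a greyscale crop by averaging each column along the flow axis and finding where the dark, RBC-stained run ends. It assumes (hypothetically) that flow runs left to right, that the droplet was deposited at column 0, that stained membrane is darker than unwetted membrane, and that the pixel scale `mm_per_px` comes from a calibration target. The helper name and the half-way threshold `frac` are illustrative choices, not the authors' procedure, and the plasma front would need its own contrast criterion.

```python
import numpy as np

def rbc_front_distance_mm(strip: np.ndarray, mm_per_px: float, frac: float = 0.5) -> float:
    """Length of the contiguous dark (RBC-stained) run measured from the deposition point.

    Hypothetical helper; assumes flow runs left to right along axis 1, the droplet was
    deposited at column 0, and stained membrane is darker than unwetted membrane.
    """
    profile = strip.astype(np.float64).mean(axis=0)  # mean grey value of each column
    stained, background = profile[0], profile[-1]
    if background <= stained:                        # no usable contrast between stain and background
        return 0.0
    threshold = stained + frac * (background - stained)
    dark = np.nonzero(profile < threshold)[0]        # indices of columns darker than the threshold
    if dark.size == 0 or dark[0] != 0:
        return 0.0
    # Keep only the run that starts at column 0, so isolated dark specks further along are ignored.
    breaks = np.nonzero(np.diff(dark) != 1)[0]
    last = dark[breaks[0]] if breaks.size else dark[-1]
    return float((last + 1) * mm_per_px)

# Synthetic demo: 40 dark columns on a light background, plus one stray dark column at 70.
strip = np.full((10, 100), 200.0)
strip[:, :40] = 50.0
strip[:, 70] = 50.0
print(rbc_front_distance_mm(strip, mm_per_px=0.1))  # 0.1 mm per pixel is a placeholder scale
```

Using a column-averaged profile rather than single pixels makes the estimate less sensitive to membrane texture; a real pipeline would also need to correct for uneven lighting across the strip.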

Immunofluorescence staining and analysis

Whole blood was stained for platelets with in-house conjugated anti-CD41 (2 µL mL−1, clone P2) (Beckman Coulter, USA) and for fibrin with anti-fibrin (2 µL mL−1, clone 59D8) (Antibody System, France) for 30 min at 37 °C. For diffusive antibody characterisation (ABC), the same antibody concentrations were dissolved in 10 µL PBS and the strips were run as described above. Fluorescence imaging was conducted on an Olympus IX-83 inverted microscope with the FV3000 laser confocal imaging package (Olympus Lifescience, Japan). Z-stacks of the inverted strip surfaces were imaged using a 10× air objective (NA 0.4) with the tile-scan function. The Z-range was set so that “fluorescent speckles” were visible from the strip surface down to its lowest part (furthest from the objective). Images were processed in Fiji (ImageJ). Stacks were maximum-intensity projected and converted from 16-bit to 8-bit depth for faster processing. Mean fluorescence intensity (MFI) was measured using a custom JavaScript ImageJ plugin that samples randomised regions of interest (ROIs).
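The projection, bit-depth conversion and randomised-ROI steps above can be sketched in NumPy. This is not the authors' ImageJ plugin: the linear min–max rescale to 8-bit, the square ROI shape, the ROI size and count, and the helper names `max_projection_8bit` and `random_roi_mfi` are all assumptions for illustration, and the input is a synthetic stack rather than confocal data.

```python
import numpy as np

def max_projection_8bit(stack: np.ndarray) -> np.ndarray:
    """Maximum-intensity projection of a (z, y, x) stack, linearly rescaled to 8-bit."""
    mip = stack.max(axis=0).astype(np.float64)       # brightest value along z for each pixel
    lo, hi = mip.min(), mip.max()
    if hi == lo:                                     # flat image: nothing to rescale
        return np.zeros(mip.shape, dtype=np.uint8)
    return np.round((mip - lo) / (hi - lo) * 255).astype(np.uint8)

def random_roi_mfi(img: np.ndarray, roi: int, n_rois: int, seed: int = 0):
    """Mean intensity over `n_rois` randomly placed square ROIs of side `roi` pixels."""
    h, w = img.shape
    if roi > h or roi > w:
        raise ValueError("ROI larger than image")
    rng = np.random.default_rng(seed)                # fixed seed keeps ROI placement reproducible
    ys = rng.integers(0, h - roi + 1, size=n_rois)   # top-left corners; upper bound is exclusive
    xs = rng.integers(0, w - roi + 1, size=n_rois)
    per_roi = np.array([img[y:y + roi, x:x + roi].mean() for y, x in zip(ys, xs)])
    return float(per_roi.mean()), per_roi

# Synthetic 16-bit stack standing in for a confocal tile scan.
stack = np.random.default_rng(1).integers(0, 4096, size=(5, 128, 128), dtype=np.uint16)
mip8 = max_projection_8bit(stack)
mfi, per_roi = random_roi_mfi(mip8, roi=16, n_rois=20)
print(f"MFI = {mfi:.1f} over {per_roi.size} ROIs")
```

Averaging many randomly placed ROIs, rather than hand-picking regions, reduces operator bias when comparing fluorescence between conditions.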

Scanning Electron Microscopy (SEM)

SEM was performed on dried samples without gold coating using a benchtop Phenom XL scanning electron microscope (Thermofisher Scientific, USA) at the USYD Research and Prototype Foundry. Images were taken at 7000× magnification under low vacuum (60 Pa) using the full backscatter detector (BSD) configuration, at a working distance of 4 mm and 5 keV, and the average pore size was measured in ImageJ.
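The Methods state only that pore size was measured in ImageJ. One common way to do this is to threshold the image and measure connected regions (as ImageJ's Analyze Particles does); whether the authors used that workflow is not stated. The sketch below does the equivalent in Python: pixels darker than an assumed `threshold` are treated as pore space, 4-connected pores are labelled by breadth-first search, and each pore's area is converted to an equivalent circular diameter. The threshold, the `um_per_px` scale (which would come from the SEM image metadata at 7000×) and the helper name are hypothetical.

```python
import math
from collections import deque
import numpy as np

def pore_diameters(img, threshold, um_per_px, min_area_px=1):
    """Equivalent circular diameters (µm) of 4-connected pores darker than `threshold`."""
    pores = np.asarray(img) < threshold              # assumption: pores appear darker than fibres
    h, w = pores.shape
    seen = np.zeros_like(pores, dtype=bool)
    diameters = []
    for y in range(h):
        for x in range(w):
            if not pores[y, x] or seen[y, x]:
                continue
            area, queue = 0, deque([(y, x)])         # breadth-first flood fill of one pore
            seen[y, x] = True
            while queue:
                cy, cx = queue.popleft()
                area += 1
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    if 0 <= ny < h and 0 <= nx < w and pores[ny, nx] and not seen[ny, nx]:
                        seen[ny, nx] = True
                        queue.append((ny, nx))
            if area >= min_area_px:                  # drop noise-sized regions
                area_um2 = area * um_per_px ** 2
                diameters.append(2 * math.sqrt(area_um2 / math.pi))
    return diameters

# Synthetic example: one 2×2-pixel pore and one 1-pixel pore on a bright background.
img = np.full((10, 10), 255, dtype=np.uint8)
img[1:3, 1:3] = 0
img[6, 6] = 0
d = pore_diameters(img, threshold=128, um_per_px=1.0)
print(f"mean pore diameter = {sum(d) / len(d):.3f} µm from {len(d)} pores")
```

Equivalent circular diameter is only one convention for "pore size"; Feret diameter or fitted-ellipse axes would give different numbers for elongated pores.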

Machine learning classifier

To develop a machine learning classifier that determines the level of coagulation on the paper-based LFA platform, cropped images of the test strips corresponding to the different levels of CaCl2 recalcification (Fig. 1c) were first converted to greyscale and then sorted into separate stored collections, one per concentration. Using a normalised area, the binned pixel intensities of 20 repeats of each concentration were plotted as an image histogram. Feature extraction was then performed by defining functions that compute first-order statistics, shape and region features, and grey-level co-occurrence matrix (GLCM) texture features. A “for” loop iterated through the stored images in each of the 5 collections, with data augmentation expanding the dataset to more than 100 images, and the extracted features were combined into a single variable by vertical stacking (“v-stacking”). A feature data frame was then produced using pandas, containing the extracted features for the 5 numerical bands representing coagulation severity (Fig. 1b), with the associated labels “Normal”, “Mild”, “Moderate”, “Significant” and “Severe” corresponding to the recalcification concentrations in ascending order (Table 2). The data frame was split into training and testing sets, with 30% of the data allocated to testing, and a random forest model (Fig. 7) was trained on the training data and associated labels. A separate verification dataset with blinded recalcification amounts was then used to test the model, and precision, recall and F1-score were reported in a classification report (Table 1). Receiver operating characteristic curves and precision-recall curves were plotted for each class (associated label) and across different test-set sizes (0.2, 0.5 and 0.7) to assess the quality of the classification model, and a confusion matrix outlining the proportions of correct and incorrect predictions was produced (Fig. 3d, e, f, g).
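The classifier workflow above can be sketched with NumPy and scikit-learn. This is not the authors' code: the feature set (first-order statistics, a 32-bin normalised histogram, a hand-rolled horizontal-offset GLCM, and a dark-pixel fraction standing in for the shape and region features), the flip-based augmentation, the forest size and the synthetic strip images are all assumptions for illustration. `RandomForestClassifier`, `train_test_split` and `classification_report` are standard scikit-learn APIs; `glcm`, `extract_features`, `augment` and `synthetic_strip` are hypothetical helpers. Wrapping `X` in a `pandas.DataFrame` with a label column would mirror the data-frame step described above; it is omitted to keep dependencies minimal.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import classification_report
from sklearn.model_selection import train_test_split

# Ascending recalcification levels (assumed 0, 25, 50, 75, 100 mM, one label each).
LABELS = ["Normal", "Mild", "Moderate", "Significant", "Severe"]

def glcm(img, levels=8, dx=1, dy=0):
    """Normalised, symmetric grey-level co-occurrence matrix for one pixel offset."""
    q = (img.astype(np.float64) / 256 * levels).astype(int)   # quantise 8-bit grey into `levels` bins
    a, b = q[: q.shape[0] - dy, : q.shape[1] - dx], q[dy:, dx:]  # pixel and its offset neighbour
    m = np.zeros((levels, levels))
    np.add.at(m, (a.ravel(), b.ravel()), 1)
    m = m + m.T
    return m / m.sum()

def extract_features(img):
    """First-order, histogram and GLCM texture features from an 8-bit greyscale crop."""
    px = img.astype(np.float64).ravel()
    hist = np.histogram(px, bins=32, range=(0, 256))[0] / px.size  # normalised-area histogram
    p = glcm(img)
    i, j = np.indices(p.shape)
    contrast = np.sum(p * (i - j) ** 2)
    homogeneity = np.sum(p / (1.0 + (i - j) ** 2))
    energy = np.sqrt(np.sum(p ** 2))
    dark_fraction = np.mean(px < 128)  # crude stand-in for shape/region features
    return np.concatenate([[px.mean(), px.std(), np.median(px), contrast, homogeneity, energy, dark_fraction], hist])

def augment(img):
    """Flip-based augmentation (assumed; the transforms actually used are not stated)."""
    return [img, np.fliplr(img), np.flipud(img), np.flipud(np.fliplr(img))]

def synthetic_strip(level, rng, shape=(32, 96)):
    """Invented stand-in for a cropped strip: higher `level` gives a shorter dark RBC run."""
    img = rng.normal(200, 10, shape)
    img[:, : shape[1] - 15 * (level + 1)] -= 120
    return np.clip(img, 0, 255).astype(np.uint8)

rng = np.random.default_rng(0)
X, y = [], []
for level, label in enumerate(LABELS):
    for _ in range(20):  # 20 repeats per concentration, as in the Methods
        for aug in augment(synthetic_strip(level, rng)):
            X.append(extract_features(aug))
            y.append(label)
X, y = np.vstack(X), np.array(y)  # "v-stacking" of per-image feature vectors

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, stratify=y, random_state=0)
clf = RandomForestClassifier(n_estimators=200, random_state=0).fit(X_train, y_train)
print(classification_report(y_test, clf.predict(X_test)))
```

Because augmented copies of one strip can land in both the training and test sets, this random split can overstate accuracy; splitting by original image before augmenting, or using a separate blinded verification set as the Methods describe, avoids that leakage.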

Statistical analysis

Data were analysed using an ordinary one-way ANOVA with Holm-Šídák's post hoc multiple comparisons test for the fluorescence analysis (*p < 0.05) and plotted using GraphPad Prism 9.
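For readers reproducing the analysis outside Prism, the sketch below shows the two ingredients in plain Python: the one-way ANOVA F statistic and the Holm-Šídák step-down adjustment of a set of raw pairwise p-values. It is a minimal illustration, not Prism's implementation. The F statistic can be converted to a p-value with the F distribution (for example `scipy.stats.f.sf(F, df_between, df_within)`), and the raw pairwise p-values passed to `holm_sidak` are assumed to come from post hoc comparisons that are not reproduced here. The group values in the demo are invented.

```python
def one_way_anova_f(groups):
    """F statistic and degrees of freedom for an ordinary one-way ANOVA."""
    values = [v for g in groups for v in g]
    grand_mean = sum(values) / len(values)
    means = [sum(g) / len(g) for g in groups]
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    ss_within = sum((v - m) ** 2 for g, m in zip(groups, means) for v in g)
    df_between, df_within = len(groups) - 1, len(values) - len(groups)
    return (ss_between / df_between) / (ss_within / df_within), df_between, df_within

def holm_sidak(pvalues):
    """Holm-Šídák step-down adjusted p-values, returned in the input order."""
    m = len(pvalues)
    order = sorted(range(m), key=lambda k: pvalues[k])  # smallest raw p-value first
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, k in enumerate(order):
        adj = 1.0 - (1.0 - pvalues[k]) ** (m - rank)     # Šídák correction for the remaining tests
        running_max = max(running_max, adj)              # adjusted p-values must not decrease
        adjusted[k] = min(running_max, 1.0)
    return adjusted

F, df_b, df_w = one_way_anova_f([[1, 2, 3], [4, 5, 6], [2, 3, 4]])
print(f"F({df_b}, {df_w}) = {F:.3f}")
print(holm_sidak([0.01, 0.04, 0.03]))
```

The step-down structure makes Holm-Šídák uniformly more powerful than a plain Šídák or Bonferroni correction while still controlling the family-wise error rate.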

References

Guy S, Kitchen S, Maclean R, Van Veen JJ. Limitation of the activated partial thromboplastin time as a monitoring method of the direct thrombin inhibitor argatroban. Int J Lab Hematol. 2015;37(6):834–43. https://doi.org/10.1111/ijlh.12414.

Avidan MS, Despotis GJ. Is the activated clotting time dangerous? Anesthesiology. 2005;103(4):904. https://doi.org/10.1097/00000542-200510000-00040.

Chlebowski MM, Baltagi S, Carlson M, Levy JH, Spinella PC. Clinical controversies in anticoagulation monitoring and antithrombin supplementation for ECMO. Crit Care. 2020;24(1):19. https://doi.org/10.1186/s13054-020-2726-9.

World Health Organization. Cardiovascular diseases (CVDs) [fact sheet]. https://www.who.int/news-room/fact-sheets/detail/cardiovascular-diseases-(cvds).

Amini M, Zayeri F, Salehi M. Trend analysis of cardiovascular disease mortality, incidence, and mortality-to-incidence ratio: results from global burden of disease study 2017. BMC Public Health. 2021;21(1):401. https://doi.org/10.1186/s12889-021-10429-0.

McRae HL, Militello L, Refaai MA. Updates in anticoagulation therapy monitoring. Biomedicines. 2021;9(3):262. https://doi.org/10.3390/biomedicines9030262.

Michaels AD, et al. Medication errors in acute cardiovascular and stroke patients: a scientific statement from the American Heart Association. Circulation. 2010;121(14):1664–82. https://doi.org/10.1161/CIR.0b013e3181d4b43e.

Leonard T, Hughes AE, Pruitt SL. Understanding how low-socioeconomic status households cope with health shocks: An analysis of multi-sector linked data. Ann Am Acad Pol Soc Sci. 2017;669(1):125–45. https://doi.org/10.1177/0002716216680989.

Nam M, et al. Performance evaluation of CoaguChek Pro II in comparison with CoaguChek XS Plus and STA-R Max using a STA-Neoplastine CI Plus. Int J Lab Hematol. 2021;43(5):1191–7. https://doi.org/10.1111/ijlh.13466.

Lee D, Ozkaya-Ahmadov T, Chu C-H, Boya M, Liu R, Sarioglu AF. Capillary flow control in lateral flow assays via delaminating timers. Sci Adv. 2021;7(40):eabf9833. https://doi.org/10.1126/sciadv.abf9833.

Nishat S, Jafry AT, Martinez AW, Awan FR. Paper-based microfluidics: Simplified fabrication and assay methods. Sens Actuators B Chem. 2021;336:129681. https://doi.org/10.1016/j.snb.2021.129681.

Burgos-Flórez F, Rodríguez A, Cervera E, De Ávila M, Sanjuán M, Villalba PJ. Microfluidic paper-based blood plasma separation device as a potential tool for timely detection of protein biomarkers. Micromachines. 2022;13(5):706. https://doi.org/10.3390/mi13050706.

Sonker M, Sahore V, Woolley AT. Recent advances in microfluidic sample preparation and separation techniques for molecular biomarker analysis: A critical review. Anal Chim Acta. 2017;986:1–11. https://doi.org/10.1016/j.aca.2017.07.043.

Mabbott S, et al. Detection of cardiovascular disease associated miR-29a using paper-based microfluidics and surface enhanced Raman scattering. Analyst. 2020;145(3):983–91. https://doi.org/10.1039/C9AN01748H.

Campbell J, Balhoff J, Landwehr G, Rahman S, Vaithiyanathan M, Melvin A. Microfluidic and paper-based devices for disease detection and diagnostic research. Int J Mol Sci. 2018;19(9):2731. https://doi.org/10.3390/ijms19092731.

Mao K, et al. Paper-based microfluidics for rapid diagnostics and drug delivery. J Controlled Release. 2020;322:187–99. https://doi.org/10.1016/j.jconrel.2020.03.010.

Li H, Han D, Pauletti GM, Steckl AJ. Blood coagulation screening using a paper-based microfluidic lateral flow device. Lab Chip. 2014;14(20):4035–41. https://doi.org/10.1039/C4LC00716F.

Basu D, Kulkarni R. Overview of blood components and their preparation. Indian J Anaesth. 2014;58(5):529–37. https://doi.org/10.4103/0019-5049.144647.

Winter WE, et al. Clotting factors: Clinical biochemistry and their roles as plasma enzymes. Adv Clin Chem. 2020;94:31–84. https://doi.org/10.1016/bs.acc.2019.07.008.

Mathew J, Sankar P, Varacallo M. Physiology, blood plasma. In: StatPearls. Treasure Island (FL): StatPearls Publishing; 2024. http://www.ncbi.nlm.nih.gov/books/NBK531504/. Accessed 6 May 2024.

Labajos-Broncano L, González-Martín ML, Bruque JM. Washburn’s equation facing Galileo’s transformation: some remarks. J Colloid Interface Sci. 2002;253(2):472–4. https://doi.org/10.1006/jcis.2002.8521.

Deutsch S. A preliminary study of the fluid mechanics of liquid penetrant testing. J Res Natl Bur Stand. 1979;84(4):287. https://doi.org/10.6028/jres.084.012.

Atangana A. Principle of groundwater flow. In: Fractional operators with constant and variable order with application to geo-hydrology. Elsevier; 2018. p. 15–47. https://doi.org/10.1016/B978-0-12-809670-3.00002-3.

Ranucci M, Laddomada T, Ranucci M, Baryshnikova E. Blood viscosity during coagulation at different shear rates. Physiol Rep. 2014;2(7):e12065. https://doi.org/10.14814/phy2.12065.

Brass E, Forman W, Edwards R, Lindan O. Fibrin formation: effect of calcium ions. Blood. 1978;52(4):654–8. https://doi.org/10.1182/blood.V52.4.654.654.

Alexy T, et al. Physical properties of blood and their relationship to clinical conditions. Front Physiol. 2022;13:906768. https://doi.org/10.3389/fphys.2022.906768.

Valério De Arruda M, Cruz Silva A, Fernandes Galduróz JC, Ferreira Galduróz R. Standardization for obtaining blood viscosity: a systematic review. Eur J Haematol. 2021;106(5):597–605.

Jensen B, Jacobsen NG, Christensen ED. Investigations on the porous media equations and resistance coefficients for coastal structures. Coast Eng. 2014;84:56–72. https://doi.org/10.1016/j.coastaleng.2013.11.004.

Guo J-T, et al. Measurement of pore diffusion factor of porous solid materials. Pet Sci. 2022;19(4):1897–904. https://doi.org/10.1016/j.petsci.2022.04.008.

Langford JF, Schure MR, Yao Y, Maloney SF, Lenhoff AM. Effects of pore structure and molecular size on diffusion in chromatographic adsorbents. J Chromatogr A. 2006;1126(1–2):95–106. https://doi.org/10.1016/j.chroma.2006.06.060.

Mehrdel P, Khosravi H, Karimi S, Martínez JAL, Casals-Terré J. Flow control in porous media: from numerical analysis to quantitative μPAD for Ionic strength measurements. Sensors. 2021;21(10):3328. https://doi.org/10.3390/s21103328.

Risman RA, Abdelhamid A, Weisel JW, Bannish BE, Tutwiler V. Effects of clot contraction on clot degradation: a mathematical and experimental approach. Biophys J. 2022;121(17):3271–85. https://doi.org/10.1016/j.bpj.2022.07.023.

Hussein HM, Georgiadis AL, Qureshi AI. Point-of-care testing for anticoagulation monitoring in neuroendovascular procedures. Am J Neuroradiol. 2012;33(7):1211–20. https://doi.org/10.3174/ajnr.A2621.

Hoffmann RF, Horsten S, Mariani MA, De Vries AJ. Clinical monitoring of activated clotting time during cardiothoracic surgery: comparing the Hemochron® Response and Hemochron® Signature Elite. Perfusion. 2023;38(2):285–91. https://doi.org/10.1177/02676591211049316.

Morris JH, Lee JA, McNitt S, Goldenberg I, Narins CR. Variability of activated clotting time by site of sample draw during percutaneous coronary intervention: a prospective single-center study. Angiology. 2021;72(7):673–8. https://doi.org/10.1177/0003319721992237.

Wehner JE, Boehne M, David S, Brand K, Tiede A, Bikker R. Activated Clotting Time (ACT) for monitoring of low-dose heparin: performance characteristics in healthy adults and critically ill patients. Clin Appl Thromb Hemost. 2020;26:1076029620975494. https://doi.org/10.1177/1076029620975494.

Stettler GR, et al. Variability in international normalized ratio and activated partial thromboplastin time after injury are not explained by coagulation factor deficits. J Trauma Acute Care Surg. 2019;87(3):582–9. https://doi.org/10.1097/TA.0000000000002385.

Melly L, Torregrossa G, Lee T, Jansens J-L, Puskas JD. Fifty years of coronary artery bypass grafting. J Thorac Dis. 2018;10(3):1960–7. https://doi.org/10.21037/jtd.2018.02.43.

Peng GE, Wilson SR, Weiner OD. A pharmacological cocktail for arresting actin dynamics in living cells. Mol Biol Cell. 2011;22(21):3986–94. https://doi.org/10.1091/mbc.E11-04-0379.

Michel J-B, Martin-Ventura JL. Red blood cells and hemoglobin in human atherosclerosis and related arterial diseases. Int J Mol Sci. 2020;21(18):6756. https://doi.org/10.3390/ijms21186756.

Kasetsirikul S, Shiddiky MJA, Nguyen N-T. Challenges and perspectives in the development of paper-based lateral flow assays. Microfluid Nanofluidics. 2020;24(2):17. https://doi.org/10.1007/s10404-020-2321-z.

Sweeney RE, Nguyen V, Alouidor B, Budiman E, Wong RK, Yoon J-Y. Flow rate and Raspberry Pi-based paper microfluidic blood coagulation assay device. IEEE Sens J. 2019;19(13):4743–51. https://doi.org/10.1109/jsen.2019.2902065.

Saidykhan J, Selevic L, Cinti S, May JE, Killard AJ. Paper-based lateral flow device for the sustainable measurement of human plasma fibrinogen in low-resource settings. Anal Chem. 2021;93(41):14007–13. https://doi.org/10.1021/acs.analchem.1c03665.

Labrique A, Agarwal S, Tamrat T, Mehl G. WHO digital health guidelines: a milestone for global health. NPJ Digit Med. 2020;3(1):120. https://doi.org/10.1038/s41746-020-00330-2.

Alanzi TM. Users’ satisfaction levels about mHealth applications in post-Covid-19 times in Saudi Arabia. PLoS ONE. 2022;17(5):e0267002. https://doi.org/10.1371/journal.pone.0267002.

Chiarito M, Luceri L, Oliva A, Stefanini G, Condorelli G. Artificial intelligence and cardiovascular risk prediction: all that glitters is not gold. Eur Cardiol Rev. 2022;e29. https://doi.org/10.15420/ecr.2022.11.

Peng S-Y, Chuang Y-C, Kang T-W, Tseng K-H. Random forest can predict 30-day mortality of spontaneous intracerebral hemorrhage with remarkable discrimination. Eur J Neurol. 2010;17(7):945–50. https://doi.org/10.1111/j.1468-1331.2010.02955.x.

Arumugam J, Bukkapatnam STS, Narayanan KR, Srinivasa AR. Random forests are able to identify differences in clotting dynamics from kinetic models of thrombin generation. PLoS ONE. 2016;11(5):e0153776. https://doi.org/10.1371/journal.pone.0153776.

Wool GD. Benefits and pitfalls of point-of-care coagulation testing for anticoagulation management. Am J Clin Pathol. 2019;151(1):1–17. https://doi.org/10.1093/ajcp/aqy087.

Galli E, Maggio E, Pomero F. Venous thromboembolism in sepsis: from bench to bedside. Biomedicines. 2022;10(7):1651. https://doi.org/10.3390/biomedicines10071651.

Jiang F, Zhang Y, Ju LA. A microfluidic approach for early prediction of thrombosis in patients with cancer. Cell Rep Methods. 2023;3(7):100536. https://doi.org/10.1016/j.crmeth.2023.100536.

Zhao YC, et al. Novel movable typing for personalized vein‐chips in large scale: recapitulate patient‐specific Virchow’s triad and its contribution to cerebral venous sinus thrombosis. Adv Funct Mater. 2023;2214179. https://doi.org/10.1002/adfm.202214179.

Zhang Y, et al. Microvasculature‐on‐a‐post chip that recapitulates prothrombotic vascular geometries and 3D flow disturbance. Adv Mater Interfaces. 2023;2300234. https://doi.org/10.1002/admi.202300234.

Ernstmeyer K, Christman E, editors. Nursing advanced skills. Eau Claire: Wisconsin Technical College System Pressbooks; 2023.

Acknowledgements

The authors thank Freda Passam, Timothy Ang, James McFadyen, Paul Monagle, Chantal Attard, Paul Davies, Yehuan Zhou and Westmead nursing staff for helpful discussion on clinical imaging and treatment/diagnosis related to thrombosis. The authors also thank Nurul Aisha Zainal Abidin, Savindi Ramasundara, Laura Moldovan and Yiyao Chen for support with the blood collection ethics and preparing the syringes and reagents used for experimentation at the J03 Engineering Precinct – Mechanobiology and Biomechanics Laboratory. We also thank Lawrence Farrar for assistance with SEM microscopy. This work was conducted (in part) using the Research Prototype Foundry core research facilities at the NSW node of the Australian National Fabrication Facility (ANFF-NSW), a company established under the National Collaborative Research Infrastructure Strategy to provide nano and micro-fabrication facilities for Australia’s researchers.

Funding

This work was supported by the National Health and Medical Research Council (NHMRC) of Australia (APP2003904 – L.A.J.); NSW Cardiovascular Capacity Building Program (Early-Mid Career Researcher Grant – L.A.J., P.Q. and Z.W.); MRFF Cardiovascular Health Mission Grants (MRF2016165 – L.A.J.; MRF2023977 – L.A.J.) and MRFF Early-to-Mid Career Researchers Grant (MRF2028865 – L.A.J.); NSW Government Boosting Business Innovation Program (BBIP) International Stream (L.A.J.); National Heart Foundation Vanguard Grant (106979 – L.A.J.); and University of Sydney External Research Collaboration Seed Fund (L.A.J. and Z.W.). Lining Arnold Ju is a Snow Medical Research Foundation Fellow (2022SF176) and a National Heart Foundation Future Leader Fellow Level 2 (105863). Y.C.Z. is an NHMRC PhD Scholar (GNT2022247 – Y.C.Z.) and a National Heart Foundation PhD Scholar (106879). National Health and Medical Research Council (Australia) Investigator Emerging Leadership 1 grant (GNT2018376); Heart Foundation Future Leader Fellowship with Paul Korner Award (106780); McCusker Charitable Foundation.

Author information

Contributions

A.S., C.C. and L.A.J. designed the experiments, which were performed and analysed by A.S. and A.N.; A.S., N.A.Z.A. and Y.C.Z. assisted in blood sample collection and preparation. A.S. and H.Z. developed the machine learning algorithm. Z.W., P.Q. and W.C. provided clinical and engineering design and integration expertise; L.A.J. was the senior author who supervised the study and led the team. All authors co-wrote the paper. Research activities related to this work complied with relevant ethical regulations.

Ethics declarations

Ethics approval and consent to participate

The research protocol was approved by the University of Sydney Human Research Ethics Committee (HREC, project 2023/582), and all experiments were performed in accordance with relevant guidelines. Informed consent was obtained from all human participants of this study. All data that support the findings of this study are available from the corresponding author upon reasonable request.

Competing interests

All authors state they have no conflicts to declare.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Supplementary Material 2.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Sun, A., Nasser, A., Chen, C. et al. Strategic reuse of rapid antigen tests for coagulation status assessment: an integrated machine learning approach. Med-X 2, 10 (2024). https://doi.org/10.1007/s44258-024-00025-3

DOI: https://doi.org/10.1007/s44258-024-00025-3